PointNet with KAN versus PointNet with MLP for 3D Classification and Segmentation of Point Sets

Abstract

Kolmogorov-Arnold Networks (KANs) have recently gained attention as an alternative to traditional Multilayer Perceptrons (MLPs) in deep learning frameworks. KANs have been integrated into various deep learning architectures, such as convolutional neural networks, graph neural networks, and transformers, and their performance has been evaluated. However, their effectiveness within point-cloud-based neural networks remains unexplored. To address this gap, we incorporate KANs into PointNet for the first time and evaluate their performance on 3D point cloud classification and segmentation tasks. Specifically, we introduce PointNet-KAN, built upon two key components. First, it employs KANs instead of traditional MLPs. Second, it retains the core principle of PointNet by using shared KAN layers and applying symmetric functions for global feature extraction, ensuring invariance to permutations of the input points. In traditional MLPs, the goal is to train the weights and biases with fixed activation functions; in KANs, the goal is to train the activation functions themselves. We use Jacobi polynomials to construct the KAN layers. We extensively and systematically evaluate PointNet-KAN across various polynomial degrees and special types such as the Legendre, Chebyshev, and Gegenbauer polynomials. Our results show that PointNet-KAN achieves competitive performance compared to PointNet with MLPs on benchmark datasets for 3D object classification and segmentation, despite employing a shallower and simpler network architecture. We hope this work serves as a foundation and provides guidance for integrating KANs, as an alternative to MLPs, into more advanced point cloud processing architectures.

1 Introduction

Kolmogorov-Arnold Networks (KANs), introduced by Liu et al. (2024), have recently emerged as an alternative modeling framework to traditional Multilayer Perceptrons (MLPs) (Cybenko, 1989; Hornik et al., 1989). KANs are based on the Kolmogorov-Arnold representation theorem (Kolmogorov, 1957; Arnold, 2009). Unlike MLPs, which rely on fixed activation functions while training weights and biases, the objective in KANs is to train the activation functions themselves (Liu et al., 2024).

The performance of KANs has been evaluated across various domains, including scientific machine learning tasks (Wang et al., 2024b; Shukla et al., 2024; Abueidda et al., 2024; Koenig et al., 2024), image classification (Azam & Akhtar, 2024; Cheon, 2024; Lobanov et al., 2024; Yu et al., 2024; Tran et al., 2024), image segmentation (Li et al., 2024; Tang et al., 2024), image detection (Wang et al., 2024a), audio classification (Yu et al., 2024), and other applications. Additionally, from a neural network architecture perspective, KANs have been integrated into convolutional neural networks (CNNs) (Azam & Akhtar, 2024; Bodner et al., 2024) and graph neural networks (Kiamari et al., 2024; Bresson et al., 2024; Zhang & Zhang, 2024; De Carlo et al., 2024).

However, the effectiveness of KANs for 3D point cloud data has not yet been explored. Point cloud data plays a critical role in various domains, including computer graphics, computer vision, robotics, and autonomous driving (Uy & Lee, 2018; Li et al., 2020; Guo et al., 2020; Zhang et al., 2023a; b). One of the most successful neural networks for deep learning on point cloud data is PointNet, introduced by Qi et al. (2017a). Following this, several modified and advanced versions of PointNet have been developed (Qi et al., 2017b; Shen et al., 2018; Thomas et al., 2019; Wang et al., 2019; Zhao et al., 2021). PointNet has also gained attention in the fields of genetics, molecular simulations, and computational mechanics (Lu et al., 2024; Shen et al., 2021; 2023; Kashefi et al., 2021; Kashefi & Mukerji, 2022; Kashefi et al., 2023; Kashefi & Mukerji, 2023; 2021). To the best of our knowledge, the only existing work embedding KANs into PointNet involves 2D supervised learning in the context of computational fluid dynamics (Kashefi, 2024). In this work, we integrate KANs into PointNet for 3D point cloud classification and segmentation for the first time and evaluate their performance on these tasks.

It is important to clarify that by embedding KANs into PointNet, we do not simply mean replacing every instance of MLPs with KANs. While such a direct substitution could be studied in its own right, our goal is to preserve and utilize the core principles upon which PointNet is built. First, we apply shared KANs, meaning that the same KANs are applied to all input points. Second, we utilize a symmetric function, such as the max function, to extract global features from the points. These two elements are fundamental to PointNet, and by maintaining them, we ensure that the network remains invariant to input permutations. Our objective is to propose a version of PointNet integrated with KANs that retains these two essential properties, which we refer to as PointNet-KAN throughout the rest of this article. Moreover, we focus on PointNet (Qi et al., 2017a) rather than more advanced versions (Qi et al., 2017b; Shen et al., 2018; Thomas et al., 2019; Wang et al., 2019; Zhao et al., 2021) to directly and explicitly investigate the effect of KANs on the network's performance. Using more complex versions of PointNet could introduce other factors that might obscure the direct influence of KANs, making it difficult to determine whether any performance changes are due to the KAN architecture or to other components of the network.

We use Jacobi polynomials to construct PointNet-KAN and investigate its performance across different polynomial degrees. Additionally, we examine the effect of special cases of Jacobi polynomials, including Legendre polynomials, Chebyshev polynomials of the first and second kinds, and Gegenbauer polynomials. The performance of PointNet-KAN is evaluated on classification and part segmentation tasks. Our key contributions are summarized as follows:

- We introduce PointNet with KANs (i.e., PointNet-KAN) for the first time and evaluate its performance against PointNet with MLPs.
- We embed KANs into a point-cloud-based neural network for the first time for computer vision tasks on unordered 3D point sets.
- We conduct an extensive evaluation of the hyperparameters of PointNet-KAN, specifically the degree and type of the polynomials used to construct the KANs.
- We assess the effectiveness of PointNet-KAN on benchmarks for 3D object classification and segmentation.
- We demonstrate that PointNet-KAN achieves performance competitive with PointNet, despite having a much shallower and simpler network architecture.
- We release our code to support reproducibility and future research.

2 Kolmogorov-Arnold Network (KAN) layers

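Inspired by the Kolmogorov-Arnold representation theorem (Kolmogorov, 1957; Arnold, 2009), the Kolmogorov-Arnold Network (KAN) was proposed as a novel neural network architecture by Liu et al. (2024). According to the theorem, any continuous multivariate function on a bounded domain can be expressed as a finite composition of continuous univariate functions and additions. To describe the structure of KAN straightforwardly, consider a single-layer KAN. The network's input is a vector $\boldsymbol{r}$ of size $d_{\text{input}}$, and its output is a vector $\boldsymbol{s}$ of size $d_{\text{output}}$. In this configuration, the single-layer KAN maps the input to the output as follows:

$$\boldsymbol{s} = \boldsymbol{A}\boldsymbol{r}, \qquad s_j = \sum_{i=1}^{d_{\text{input}}} \psi_{j,i}(r_i), \quad j = 1, \dots, d_{\text{output}}, \tag{1}$$

where the tensor $\boldsymbol{A}$ is expressed as:

$$\boldsymbol{A} = \begin{bmatrix} \psi_{1,1}(\cdot) & \psi_{1,2}(\cdot) & \cdots & \psi_{1,d_{\text{input}}}(\cdot) \\ \psi_{2,1}(\cdot) & \psi_{2,2}(\cdot) & \cdots & \psi_{2,d_{\text{input}}}(\cdot) \\ \vdots & \vdots & \ddots & \vdots \\ \psi_{d_{\text{output}},1}(\cdot) & \psi_{d_{\text{output}},2}(\cdot) & \cdots & \psi_{d_{\text{output}},d_{\text{input}}}(\cdot) \end{bmatrix}, \tag{2}$$

where each $\psi(r)$ (the subscript $j,i$ is removed to lighten notation) is defined as:

$$\psi(r) = \sum_{k=0}^{n} w_k \, P_k^{(\alpha,\beta)}(r), \tag{3}$$

where $P_k^{(\alpha,\beta)}(r)$ represents the Jacobi polynomial of degree $k$, $n$ is the polynomial order of $\psi(r)$, and $w_k$ are trainable parameters. Hence, the total number of trainable parameters embedded in $\boldsymbol{A}$ is $(n+1) \times d_{\text{input}} \times d_{\text{output}}$. We implement $P_k^{(\alpha,\beta)}(r)$ using a recursive relation (Szegő, 1939):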

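$$P_k^{(\alpha,\beta)}(r) = \left(a_k r + b_k\right) P_{k-1}^{(\alpha,\beta)}(r) - c_k \, P_{k-2}^{(\alpha,\beta)}(r), \tag{4}$$

where the coefficients $a_k$, $b_k$, and $c_k$ are given by:

$$a_k = \frac{(2k+\alpha+\beta-1)(2k+\alpha+\beta)}{2k(k+\alpha+\beta)}, \tag{5}$$

$$b_k = \frac{(2k+\alpha+\beta-1)(\alpha^2-\beta^2)}{2k(k+\alpha+\beta)(2k+\alpha+\beta-2)}, \tag{6}$$

$$c_k = \frac{(k+\alpha-1)(k+\beta-1)(2k+\alpha+\beta)}{k(k+\alpha+\beta)(2k+\alpha+\beta-2)}, \tag{7}$$

with the following initial conditions:

$$P_0^{(\alpha,\beta)}(r) = 1, \tag{8}$$

$$P_1^{(\alpha,\beta)}(r) = \frac{1}{2}\left[(\alpha-\beta) + (\alpha+\beta+2)\, r\right]. \tag{9}$$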

Since $P_k^{(\alpha,\beta)}$ is constructed recursively, the polynomials for $k = 2, \dots, n$ are computed sequentially. Additionally, because the input to the Jacobi polynomials must lie within the interval $[-1, 1]$, the input vector $\boldsymbol{r}$ must be scaled to this range before being passed to the KAN layer. To achieve this, we apply the hyperbolic tangent function. Finally, setting $\alpha = \beta = 0$ yields the Legendre polynomials (Abramowitz, 1974; Szegő, 1939), while the Chebyshev polynomials of the first and second kinds are obtained (up to normalization) with $\alpha = \beta = -1/2$ and $\alpha = \beta = 1/2$, respectively (Abramowitz, 1974; Szegő, 1939). Additionally, the Gegenbauer (or ultraspherical) polynomials arise when $\alpha = \beta$ (Szegő, 1939).
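The recurrence in Eqs. 4 to 9 and the layer in Eqs. 1 to 3 can be sketched in NumPy as follows. This is a minimal illustration under our own assumptions, not the released implementation: the names `jacobi_basis` and `JacobiKANLayer`, the coefficient layout `W[j, i, k]` (one vector $w_0, \dots, w_n$ per entry $\psi_{j,i}$ of $\boldsymbol{A}$), the random initialization scale, and the values $\alpha = \beta = 1$ in the usage lines are hypothetical choices.

```python
import numpy as np


def jacobi_basis(r, n, alpha, beta):
    """Evaluate P_0, ..., P_n^{(alpha, beta)} at r via the recurrence of Eqs. 4-9.

    Returns an array of shape r.shape + (n + 1,); r is expected to lie in [-1, 1].
    """
    r = np.asarray(r, dtype=float)
    P = [np.ones_like(r)]                                                # Eq. 8
    if n >= 1:
        P.append(0.5 * ((alpha - beta) + (alpha + beta + 2.0) * r))      # Eq. 9
    for k in range(2, n + 1):
        s = 2 * k + alpha + beta
        a_k = (s - 1) * s / (2 * k * (k + alpha + beta))                                 # Eq. 5
        b_k = (s - 1) * (alpha**2 - beta**2) / (2 * k * (k + alpha + beta) * (s - 2))    # Eq. 6
        c_k = (k + alpha - 1) * (k + beta - 1) * s / (k * (k + alpha + beta) * (s - 2))  # Eq. 7
        P.append((a_k * r + b_k) * P[k - 1] - c_k * P[k - 2])                            # Eq. 4
    return np.stack(P, axis=-1)


class JacobiKANLayer:
    """One KAN layer (Eqs. 1-3): s_j = sum_i sum_k W[j, i, k] * P_k(tanh(r_i))."""

    def __init__(self, d_input, d_output, n, alpha, beta, seed=0):
        self.n, self.alpha, self.beta = n, alpha, beta
        rng = np.random.default_rng(seed)
        # Hypothetical initialization: small Gaussian coefficients.
        self.W = rng.normal(0.0, 1.0 / (d_input * (n + 1)), size=(d_output, d_input, n + 1))

    def num_params(self):
        return self.W.size  # (n + 1) * d_input * d_output

    def __call__(self, r):
        # tanh squashes every feature into (-1, 1), the domain of the Jacobi polynomials.
        basis = jacobi_basis(np.tanh(r), self.n, self.alpha, self.beta)  # (..., d_input, n + 1)
        return np.einsum("...ik,jik->...j", basis, self.W)               # (..., d_output)


# Usage: apply one layer to every point of a cloud (illustrative alpha = beta = 1).
layer = JacobiKANLayer(d_input=3, d_output=8, n=2, alpha=1.0, beta=1.0)
points = np.random.default_rng(1).normal(size=(1024, 3))
features = layer(points)  # shape (1024, 8); the same W is used for every point
```

Setting `alpha = beta = 0.0` reproduces the Legendre basis (e.g., $P_2 = 1.5r^2 - 0.5$), and `alpha = beta = -0.5` gives Chebyshev polynomials of the first kind up to a constant factor.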

3 Overview of PointNet and its key principles

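Consider a point cloud as an unordered set of $N$ points, defined as $\mathcal{X} = \{\boldsymbol{x}_1, \boldsymbol{x}_2, \dots, \boldsymbol{x}_N\}$ with $\boldsymbol{x}_i \in \mathbb{R}^{d}$. The dimension (or number of features) of each $\boldsymbol{x}_i$ is denoted by $d$. According to Theorem 1 of Qi et al. (2017a), a continuous set function $f$ that maps this set of points to a vector can be approximated arbitrarily well by a function of the form:

$$f(\boldsymbol{x}_1, \dots, \boldsymbol{x}_N) \approx g\left(\text{MAX}\left(h(\boldsymbol{x}_1), \dots, h(\boldsymbol{x}_N)\right)\right), \tag{10}$$

where MAX is a vector-wise max operator that takes $N$ vectors as input and returns a new vector, computed as the element-wise maximum. In PointNet (Qi et al., 2017a), the continuous functions $g$ and $h$ are implemented as MLPs. In this work, we replace $g$ and $h$ with KANs, resulting in PointNet-KAN. Note that the right-hand side of Eq. 10 is invariant to permutations of the input points, because the same $h$ is applied to every point and MAX is symmetric. Details of this theorem and its proof can be found in Qi et al. (2017a).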
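A minimal numerical check of the permutation-invariance argument behind Eq. 10 is sketched below. Here `h` and `g` are hypothetical stand-ins (a random linear map followed by tanh) rather than trained KANs; the argument only requires that the same `h` is applied to every point and that MAX is symmetric.

```python
import numpy as np

rng = np.random.default_rng(0)
W_h = rng.normal(size=(3, 16))  # hypothetical parameters of the shared per-point map h
W_g = rng.normal(size=(16, 4))  # hypothetical parameters of g


def h(x):
    # Applied row-wise, i.e., with identical parameters for every point.
    return np.tanh(x @ W_h)


def g(v):
    return np.tanh(v @ W_g)


def f(points):
    # Eq. 10: g(MAX(h(x_1), ..., h(x_N))), with MAX the element-wise maximum over points.
    return g(h(points).max(axis=0))


points = rng.normal(size=(128, 3))
shuffled = points[rng.permutation(len(points))]
print(np.allclose(f(points), f(shuffled)))  # True: reordering the points does not change f
```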

4 Architecture of PointNet-KAN

Classification branch

The top panel of Fig. 1 shows the classification branch of PointNet-KAN, which is structured as follows. The PointNet-KAN model accepts input with dimensionality corresponding to 3D spatial coordinates (i.e., $d = 3$), optionally augmented with the 3D normal vector as part of the point set representation (i.e., $d = 6$). A shared KAN layer maps the input feature vector from its original space to an intermediate feature space of dimension 3072. Following the first shared KAN layer, batch normalization (Ioffe & Szegedy, 2015) is applied. After normalization, a max pooling operation extracts global features by computing the maximum value across all points in the point cloud. Next, the global feature is passed through a KAN layer, which reduces the dimensionality to the number of output channels (i.e., the number of classes $k$) for the classification task. A softmax function is applied to the output to convert the logits into class probabilities. The concept of shared KANs is analogous to the shared MLPs used in PointNet (Qi et al., 2017a): the same functional tensor $\boldsymbol{A}$ is applied uniformly to the input or intermediate features of every point in PointNet-KAN. The use of shared KAN layers and the symmetric max-pooling function ensures that PointNet-KAN is invariant to the order of the points in the point cloud.
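The data flow of the classification branch can be sketched in NumPy as below. This is a sketch under stated assumptions, not the released implementation: batch normalization is applied in training mode without learnable scale and shift, $n = 2$ and $\alpha = \beta = 1$ are illustrative values, the hidden width is shrunk from 3072 to 64 so that the example runs quickly, and the helper names (`kan`, `batch_norm`, `classify`) are hypothetical. The weights are random, so the probabilities carry no meaning; the sketch only shows the shapes and the order of operations.

```python
import numpy as np


def jacobi_basis(r, n, alpha, beta):
    # Recurrence of Eqs. 4-9; returns shape r.shape + (n + 1,)
    P = [np.ones_like(r), 0.5 * ((alpha - beta) + (alpha + beta + 2.0) * r)]
    for k in range(2, n + 1):
        s = 2 * k + alpha + beta
        a = (s - 1) * s / (2 * k * (k + alpha + beta))
        b = (s - 1) * (alpha**2 - beta**2) / (2 * k * (k + alpha + beta) * (s - 2))
        c = (k + alpha - 1) * (k + beta - 1) * s / (k * (k + alpha + beta) * (s - 2))
        P.append((a * r + b) * P[-1] - c * P[-2])
    return np.stack(P[: n + 1], axis=-1)


def kan(r, W, n, alpha, beta):
    # Shared KAN layer: one coefficient tensor W of shape (d_out, d_in, n + 1) for all points
    return np.einsum("...ik,jik->...j", jacobi_basis(np.tanh(r), n, alpha, beta), W)


def batch_norm(z, eps=1e-5):
    # Normalize each channel with statistics over the batch and point axes
    axes = tuple(range(z.ndim - 1))
    return (z - z.mean(axis=axes)) / np.sqrt(z.var(axis=axes) + eps)


def classify(x, W1, W2, n, alpha, beta):
    # x: (B, N, d) point clouds -> (B, k) class probabilities
    z = batch_norm(kan(x, W1, n, alpha, beta))      # (B, N, hidden), shared over points
    global_feat = z.max(axis=1)                     # symmetric max pooling -> (B, hidden)
    logits = kan(global_feat, W2, n, alpha, beta)   # (B, k)
    logits = logits - logits.max(axis=1, keepdims=True)  # numerically stable softmax
    p = np.exp(logits)
    return p / p.sum(axis=1, keepdims=True)


rng = np.random.default_rng(0)
n, alpha, beta = 2, 1.0, 1.0  # illustrative values only
d, hidden, k = 6, 64, 40      # d = 6: coordinates + normals; hidden shrunk from 3072
W1 = rng.normal(0.0, 0.1, size=(hidden, d, n + 1))
W2 = rng.normal(0.0, 0.1, size=(k, hidden, n + 1))
x = rng.normal(size=(2, 1024, d))
probs = classify(x, W1, W2, n, alpha, beta)  # shape (2, 40), each row sums to 1
```

Because the batch-normalization statistics are also symmetric over the point axis, shuffling the points of a cloud leaves `probs` unchanged up to floating-point rounding.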

Part segmentation branch

As shown in the bottom panel of Fig. 1, the part segmentation branch of PointNet-KAN works as follows. The input is first passed through a shared KAN layer, transforming it to an intermediate feature space of size 640, followed by batch normalization. These local features are then processed by a second shared KAN layer, mapping them to a higher-dimensional space of size 5120, and another batch normalization step is applied. A max pooling operation extracts a global feature representing the entire point cloud, which is then expanded to match the number of points. The one-hot encoded class label, representing the object category, is concatenated with the local features and the global feature. This combined feature, consisting of the local features of size 640, the global feature of size 5120, and the one-hot class label, is passed through a shared KAN layer that reduces the feature size to 640, followed by batch normalization. A final shared KAN layer generates the point-wise segmentation predictions, and a softmax function converts the logits into class probabilities.
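The tensor shapes in the segmentation branch, in particular the concatenation of the 640-dimensional local feature, the tiled 5120-dimensional global feature, and the tiled one-hot label, can be traced with the sketch below. To keep it short, each shared KAN layer is replaced by a hypothetical stand-in, `shared_layer`, that applies one random linear map (optionally followed by tanh) to every point; the 16 object categories and 50 part labels follow the ShapeNet part setting of Sect. 5.2. The sketch traces shapes only and is not the released implementation.

```python
import numpy as np

rng = np.random.default_rng(0)


def shared_layer(z, d_out, activate=True):
    # Hypothetical stand-in for a shared KAN layer: one random map applied to every point.
    W = rng.normal(0.0, 1.0 / np.sqrt(z.shape[-1]), size=(z.shape[-1], d_out))
    out = z @ W
    return np.tanh(out) if activate else out


def batch_norm(z, eps=1e-5):
    axes = tuple(range(z.ndim - 1))
    return (z - z.mean(axis=axes)) / np.sqrt(z.var(axis=axes) + eps)


def segment(x, labels, num_classes=16, num_parts=50, d_local=640, d_global=5120):
    B, N, _ = x.shape
    local = batch_norm(shared_layer(x, d_local))          # (B, N, 640)
    feat = batch_norm(shared_layer(local, d_global))      # (B, N, 5120)
    glob = feat.max(axis=1, keepdims=True)                # (B, 1, 5120), max pooling
    onehot = np.eye(num_classes)[labels][:, None, :]      # (B, 1, 16)
    combined = np.concatenate(
        [local,
         np.broadcast_to(glob, (B, N, d_global)),         # global feature copied to each point
         np.broadcast_to(onehot, (B, N, num_classes))],   # class label copied to each point
        axis=-1)                                          # (B, N, 640 + 5120 + 16)
    z = batch_norm(shared_layer(combined, d_local))       # (B, N, 640)
    logits = shared_layer(z, num_parts, activate=False)   # (B, N, 50)
    logits = logits - logits.max(axis=-1, keepdims=True)  # stable softmax over part labels
    p = np.exp(logits)
    return combined.shape[-1], p / p.sum(axis=-1, keepdims=True)


x = rng.normal(size=(2, 256, 3))  # two clouds of 256 points with d = 3
width, probs = segment(x, labels=np.array([0, 5]))
```

The combined per-point feature therefore has $640 + 5120 + 16 = 5776$ channels before it is reduced back to 640.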

Table 1: Classification results on ModelNet40 (Wu et al., 2015).

| Method | Normal vector | Number of points | Mean class accuracy (%) | Overall accuracy (%) | FLOPs/sample |
|---|---|---|---|---|---|
| PointNet++ (Qi et al., 2017b) | no | 2048 | - | 90.7 | - |
| PointNet++ (Qi et al., 2017b) | yes | 2048 | - | 91.9 | - |
| DGCNN (Wang et al., 2019) | no | 2048 | 90.7 | 93.5 | - |
| Point Transformer (Zhao et al., 2021) | yes | - | 90.6 | 93.7 | - |
| PointMLP (Ma et al., 2022) | no | 1000 | 91.4 | 94.5 | - |
| ShapeLLM (Qi et al., 2024) | no | 1000 | 94.8 | 95.0 | - |
| PointNet (baseline) (Qi et al., 2017a) | no | 1024 | 72.6 | 77.4 | 148M |
| PointNet (Qi et al., 2017a) | no | 1024 | 86.2 | 89.2 | 440M |
| PointNet-KAN | no | 1024 | 82.7 | 87.5 | 60M |
| PointNet-KAN | yes | 1024 | 87.2 | 90.5 | 110M |

5 Experiment and discussion

5.1 3D object classification

We evaluate PointNet-KAN on the ModelNet40 (Wu et al., 2015) shape classification benchmark, which contains 12,311 models across 40 categories, with 9,843 models allocated for training and 2,468 for testing. Following Qi et al. (2017a), we uniformly sample 1,024 points from the mesh faces and normalize them into a unit sphere. We also conduct an experiment with normal vectors included as input features, computed using the trimesh library (Dawson-Haggerty et al.). Table 1 presents the classification results of PointNet-KAN with a polynomial degree of 4 (i.e., $n = 4$ in Eq. 3) and fixed values of the Jacobi parameters $\alpha$ and $\beta$. Training details are provided in Appendix A.1. The results can be interpreted from two perspectives.
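The preprocessing described above (area-weighted uniform sampling of points on mesh faces, centering and scaling into the unit sphere, and per-point normals) can be sketched in plain NumPy as follows. This is our assumption of a typical pipeline, not the authors' code: the normals here are the normals of the sampled faces, the sphere is centered at the centroid of the samples, and the function names are hypothetical. In trimesh, `trimesh.sample.sample_surface` returns sampled points together with their face indices, which can be combined with `mesh.face_normals` in the same way.

```python
import numpy as np


def sample_surface(vertices, faces, count, rng):
    """Sample `count` points uniformly by area on a triangle mesh; return points and face normals."""
    tri = vertices[faces]                                           # (F, 3, 3)
    cross = np.cross(tri[:, 1] - tri[:, 0], tri[:, 2] - tri[:, 0])
    area = 0.5 * np.linalg.norm(cross, axis=1)
    face = rng.choice(len(faces), size=count, p=area / area.sum())  # pick faces by area
    u, v = rng.random((2, count))
    outside = u + v > 1.0                                           # fold samples back into the triangle
    u[outside], v[outside] = 1.0 - u[outside], 1.0 - v[outside]
    t = tri[face]
    points = t[:, 0] + u[:, None] * (t[:, 1] - t[:, 0]) + v[:, None] * (t[:, 2] - t[:, 0])
    normals = cross[face] / np.linalg.norm(cross[face], axis=1, keepdims=True)
    return points, normals


def normalize_unit_sphere(points):
    """Center the points at their centroid and scale so that the farthest point has norm 1."""
    centered = points - points.mean(axis=0)
    return centered / np.linalg.norm(centered, axis=1).max()


# Unit cube as a toy mesh (8 vertices, 12 triangles).
vertices = np.array([[0, 0, 0], [1, 0, 0], [1, 1, 0], [0, 1, 0],
                     [0, 0, 1], [1, 0, 1], [1, 1, 1], [0, 1, 1]], dtype=float)
faces = np.array([[0, 2, 1], [0, 3, 2], [4, 5, 6], [4, 6, 7], [0, 1, 5], [0, 5, 4],
                  [1, 2, 6], [1, 6, 5], [2, 3, 7], [2, 7, 6], [3, 0, 4], [3, 4, 7]])
rng = np.random.default_rng(0)
pts, nrm = sample_surface(vertices, faces, 1024, rng)
features = np.hstack([normalize_unit_sphere(pts), nrm])  # (1024, 6): xyz + normal, i.e., d = 6
```

Dropping the last three columns gives the $d = 3$ input used in the experiments without normal vectors.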

First, comparing PointNet-KAN with PointNet (baseline) (Qi et al., 2017a) and PointNet (Qi et al., 2017a) shows that PointNet-KAN, with or without normal vectors, achieves higher accuracy than PointNet (baseline). Additionally, PointNet-KAN with normal vectors as input features outperforms PointNet. The numbers of trainable parameters for PointNet-KAN with $n = 4$, PointNet (baseline), and PointNet in the classification branch are approximately 1M, 0.8M, and 3.5M, respectively. It is worth noting that PointNet-KAN with $n = 2$ has only about 0.6M trainable parameters, making it lighter than PointNet (baseline) while still achieving an overall accuracy of 89.9% (see Table 3). Notably, despite its simpler architecture (it lacks the input and feature transforms found in PointNet, as shown in Fig. 2 of Qi et al. (2017a), and has only 3 hidden layers compared to the 8 hidden layers of PointNet), PointNet-KAN still delivers competitive results, with an overall accuracy of 90.5% versus 89.2%. In terms of computational cost, one forward pass of PointNet-KAN requires significantly fewer floating-point operations than PointNet (110M with normal vectors and 60M without, versus 440M per sample), as shown in Table 1.

From the second perspective, we observe that other advanced point-cloud-based deep learning frameworks, such as PointNet++ (Qi et al., 2017b), DGCNN (Wang et al., 2019), Point Transformer (Zhao et al., 2021), PointMLP (Ma et al., 2022), and ShapeLLM (Qi et al., 2024), outperform PointNet-KAN, as listed in Table 1, although these models employ more advanced and complex architectures built on MLPs. This raises the question of whether redesigning these networks with KANs instead of MLPs could improve their accuracy. While the current article focuses on evaluating KANs within the simplest point-cloud-based neural network, PointNet, we hope that the promising results of PointNet-KAN motivate future efforts to embed KANs into more advanced architectures.

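Table 2: Part segmentation results (IoU, %) on the ShapeNet part dataset (Yi et al., 2016).

| Method | Mean IoU | aero | bag | cap | car | chair | earphone | guitar | knife | lamp | laptop | motorbike | mug | pistol | rocket | skateboard | table |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| # shapes | | 2690 | 76 | 55 | 898 | 3758 | 69 | 787 | 392 | 1547 | 451 | 202 | 184 | 283 | 66 | 152 | 5271 |
| Wu et al. | - | 63.2 | - | - | - | 73.5 | - | - | - | 74.4 | - | - | - | - | - | - | 74.8 |
| 3DCNN | 79.4 | 75.1 | 72.8 | 73.3 | 70.0 | 87.2 | 63.5 | 88.4 | 79.6 | 74.4 | 93.9 | 58.7 | 91.8 | 76.4 | 51.2 | 65.3 | 77.1 |
| Yi et al. | 81.4 | 81.0 | 78.4 | 77.7 | 75.7 | 87.6 | 61.9 | 92.0 | 85.4 | 82.5 | 95.7 | 70.6 | 91.9 | 85.9 | 53.1 | 69.8 | 75.3 |
| DGCNN | 85.2 | 84.0 | 83.4 | 86.7 | 77.8 | 90.6 | 74.7 | 91.2 | 87.5 | 82.8 | 95.7 | 66.3 | 94.9 | 81.1 | 63.5 | 74.5 | 82.6 |
| KPConv | 86.4 | 84.6 | 86.3 | 87.2 | 81.1 | 91.1 | 77.8 | 92.6 | 88.4 | 82.7 | 96.2 | 78.1 | 95.8 | 85.4 | 69.0 | 82.0 | 83.6 |
| TAP | 86.9 | 84.8 | 86.1 | 89.5 | 82.5 | 92.1 | 75.9 | 92.3 | 88.7 | 85.6 | 96.5 | 79.8 | 96.0 | 85.9 | 66.2 | 78.1 | 83.2 |
| PointNet | 83.7 | 83.4 | 78.7 | 82.5 | 74.9 | 89.6 | 73.0 | 91.5 | 85.9 | 80.8 | 95.3 | 65.2 | 93.0 | 81.2 | 57.9 | 72.8 | 80.6 |
| PointNet-KAN | 83.3 | 81.0 | 76.8 | 79.8 | 74.6 | 88.7 | 65.4 | 90.9 | 85.3 | 79.9 | 95.0 | 65.3 | 93.0 | 83.0 | 54.3 | 71.9 | 81.6 |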

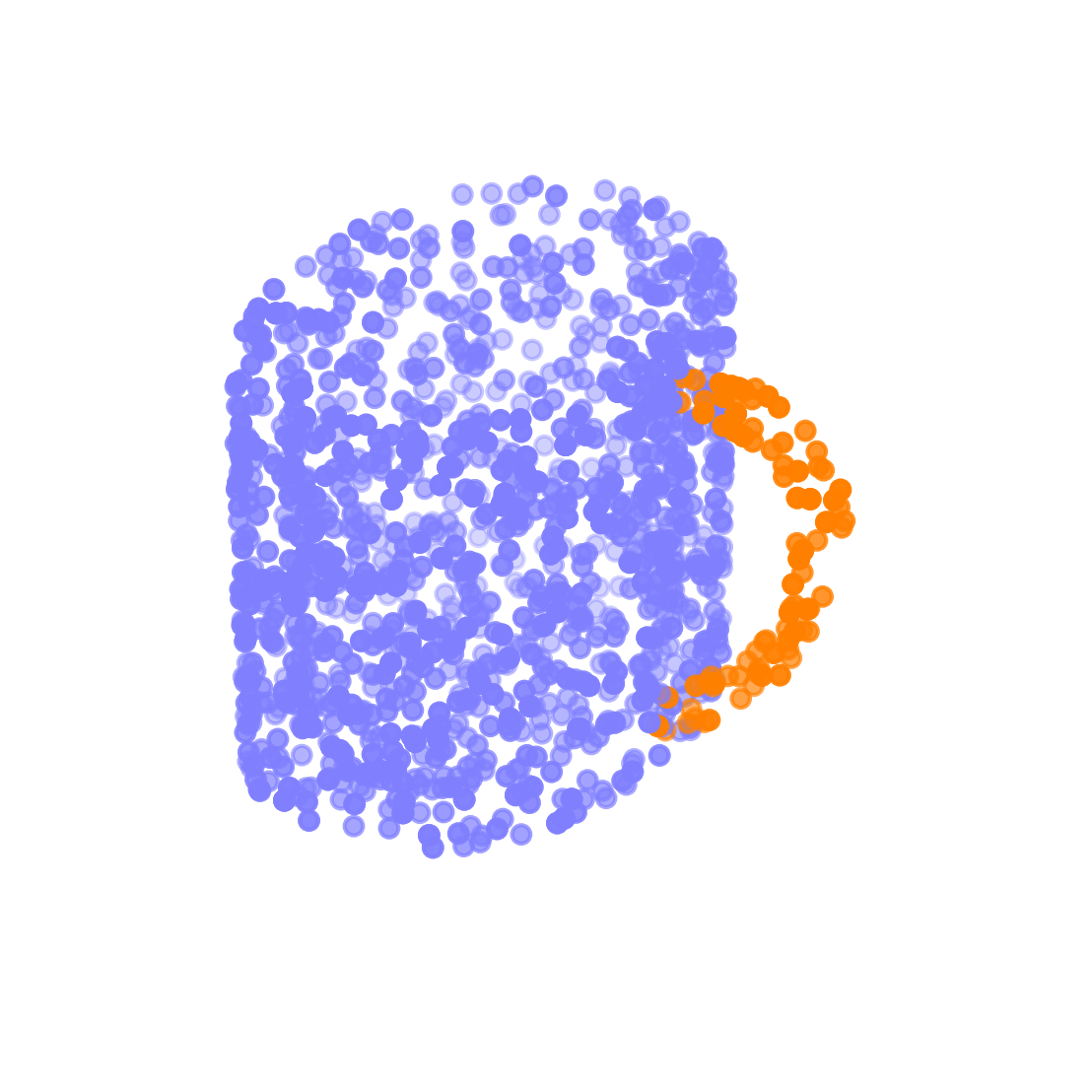

5.2 3D object part segmentation

For the part segmentation task, we assess PointNet-KAN on the ShapeNet part dataset (Yi et al., 2016), which includes 16,881 shapes across 16 categories, annotated with 50 distinct parts. The number of parts per category ranges from 2 to 6. We adhere to the official train, validation, and test splits used in the literature (Chang et al., 2015; Qi et al., 2017a; Wang et al., 2019). In our experiment, we uniformly sample 2,048 points from each shape within a unit ball. The input features for PointNet-KAN consist solely of spatial coordinates; normal vectors are not used (i.e., $d = 3$). The evaluation metric is the point-wise Intersection-over-Union (IoU), as described by Qi et al. (2017a). Training details are provided in Appendix A.1. Qualitative results for part segmentation are shown in Fig. 2. The performance of PointNet-KAN compared to PointNet (Qi et al., 2017a) is presented in Table 2. PointNet-KAN achieves results competitive with PointNet, with a mean IoU of 83.3% versus 83.7%. As shown in Table 2, for categories such as motorbike, pistol, and table, PointNet-KAN provides more accurate predictions than PointNet (Qi et al., 2017a). In our experiments, adding normal vectors as input features does not improve the performance of PointNet-KAN. A comparison between the segmentation branch of PointNet-KAN, shown in Fig. 1, and the part segmentation branch of PointNet, shown in Fig. 9 of Qi et al. (2017a), highlights the simplicity of the PointNet-KAN architecture, which consists of only 4 layers and uses a single local feature, whereas PointNet has 11 layers and uses 5 local features. Additionally, while PointNet includes input and feature transform networks, PointNet-KAN does not. Overall, PointNet-KAN outperforms earlier methods such as those of Wu et al. (2014), 3DCNN (Qi et al., 2017a), and Yi et al. (2016).
However, more recent architectures, including DGCNN (Wang et al., 2019), KPConv (Thomas et al., 2019), and TAP (Wang et al., 2023), surpass PointNet-KAN. As discussed in Sect. 5.1, incorporating KANs into the core of these networks as a replacement for MLPs could potentially enhance their performance.
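The point-wise IoU protocol of Qi et al. (2017a) can be sketched as follows: for one shape, the IoU is computed for each part type of its category (counted as 1 when a part is absent from both the prediction and the ground truth) and averaged over those part types; shape IoUs are then averaged. The function names are hypothetical, and averaging over all test shapes for the overall mean IoU is our assumption of the usual convention.

```python
import numpy as np


def shape_iou(pred, gt, part_ids):
    """Mean IoU over the part types of one shape's category."""
    ious = []
    for part in part_ids:
        p, g = pred == part, gt == part
        union = np.logical_or(p, g).sum()
        inter = np.logical_and(p, g).sum()
        ious.append(1.0 if union == 0 else inter / union)  # absent part counts as IoU 1
    return float(np.mean(ious))


def mean_iou(shapes):
    """Average shape IoU over (pred, gt, part_ids) triples, e.g., all test shapes."""
    return float(np.mean([shape_iou(p, g, ids) for p, g, ids in shapes]))


pred = np.array([0, 0, 1, 1])
gt = np.array([0, 1, 1, 1])
print(shape_iou(pred, gt, part_ids=[0, 1, 2]))  # (1/2 + 2/3 + 1) / 3 = 0.7222...
```

Restricting `mean_iou` to the shapes of one category gives the per-category columns of Table 2.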

Table 3: Effect of the Jacobi polynomial degree on classification accuracy (%) on ModelNet40.

| Jacobi polynomial degree | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|
| Number of trainable parameters | 620928 | 823680 | 1026432 | 1229184 | 1431936 |
| Mean class accuracy | 86.7 | 87.0 | 87.2 | 86.8 | 86.1 |
| Overall accuracy | 89.9 | 90.4 | 90.5 | 89.9 | 89.9 |

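Table 4: Classification accuracy (%) on ModelNet40 for different Jacobi polynomial types with a polynomial degree of 2.

| Polynomial type | | | | | | |
|---|---|---|---|---|---|---|
| Mean class accuracy | 85.6 | 86.0 | 86.7 | 86.7 | 85.4 | 86.2 |
| Overall accuracy | 89.5 | 89.9 | 90.1 | 89.9 | 89.4 | 89.8 |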

5.3 Ablation studies

Influence of polynomial type and polynomial degree

For the classification task discussed in Sect. 5.1, Table 3 shows the effect of varying the polynomial degree from 2 to 6 with $\alpha$ and $\beta$ held constant. Increasing the degree does not significantly affect accuracy, but it does increase the number of trainable parameters. Moreover, Table 4 reports the results of varying $\alpha$ and $\beta$ with a fixed polynomial degree of 2, showing that different Jacobi polynomial types do not significantly affect performance. For the segmentation task discussed in Sect. 5.2, we investigate the effect of the Jacobi polynomial degree and the roles of $\alpha$ and $\beta$ on performance. The results are listed in Tables 5 and 6. As in the classification task, no significant differences are observed. As shown in Table 5, increasing the degree of the Jacobi polynomial does not improve prediction accuracy. According to Table 6, the best performance is achieved with the Chebyshev polynomial of the first kind, i.e., $\alpha = \beta = -1/2$.
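The parameter counts in Table 3 grow by the same amount for every unit increase in the polynomial degree, which is what Eq. 3 predicts: each entry of every KAN tensor $\boldsymbol{A}$ carries $n + 1$ coefficients. The short check below recovers the per-degree increment and the degree-independent remainder directly from the Table 3 values; interpreting the remainder as parameters outside the KAN tensors (for example, batch normalization) is our assumption.

```python
degrees = [2, 3, 4, 5, 6]
params = [620928, 823680, 1026432, 1229184, 1431936]  # Table 3

steps = {b - a for a, b in zip(params, params[1:])}
assert len(steps) == 1          # constant increment per unit of polynomial degree
per_degree = steps.pop()        # under Eq. 3: sum of d_input * d_output over all KAN tensors
remainder = params[0] - (degrees[0] + 1) * per_degree
print(per_degree, remainder)    # 202752 12672

# The linear model (n + 1) * per_degree + remainder reproduces every entry of Table 3.
assert all((n + 1) * per_degree + remainder == p for n, p in zip(degrees, params))
```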

Influence of the size of tensors and global feature

We investigate the effect of the size of the tensor $\boldsymbol{A}$ (see Eq. 2) and, consequently, the size of the global feature on prediction accuracy. In the classification branch (see Fig. 1), choosing a shared KAN layer of size 1024 (i.e., $\boldsymbol{A}$ with $d_{\text{output}} = 1024$, giving a global feature of size 1024) or 2048 (i.e., $d_{\text{output}} = 2048$, giving a global feature of size 2048) results in overall accuracies of 89.7% and 90.3%, respectively, on the ModelNet40 (Wu et al., 2015) benchmark. In the segmentation branch (see Fig. 1), there are four shared KAN layers, each corresponding to a tensor; from left to right, we refer to them as $\boldsymbol{A}_1$, $\boldsymbol{A}_2$, $\boldsymbol{A}_3$, and $\boldsymbol{A}_4$. For example, two alternative choices for the sizes of $\boldsymbol{A}_1$, $\boldsymbol{A}_2$, $\boldsymbol{A}_3$, and $\boldsymbol{A}_4$ result in mean IoUs of 82.6% and 82.2%, respectively, on the ShapeNet part (Yi et al., 2016) benchmark. Note that the size of the global feature in the segmentation branch is determined by the number of rows ($d_{\text{output}}$) of tensor $\boldsymbol{A}_2$.

Robustness

Figure 3 shows the overall accuracy on the ModelNet40 (Wu et al., 2015) benchmark when input points from the test set are randomly dropped. PointNet-KAN (with $n = 4$ and the same $\alpha$ and $\beta$ as in Table 1) demonstrates relatively stable performance as the number of points decreases from 1024 to 128, with accuracy gradually dropping from 90.5% to 83.7% when using normal vectors ($d = 6$), and from 87.5% to 77.5% without normal vectors ($d = 3$). PointNet-KAN also shows greater stability than other models (Qi et al., 2017a; b; Wang et al., 2019), as indicated in Fig. 3.
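The random point-dropping protocol can be sketched as below. At inference time, when batch normalization uses fixed statistics, every point is processed independently by the shared layers, so dropping points simply removes rows from the per-point feature matrix before max pooling, and the pooled feature of a subset never exceeds that of the full set element-wise. This is offered as one possible intuition for the gradual degradation in Fig. 3, not as an analysis from the paper; the per-point features are random stand-ins and `drop_points` is a hypothetical helper.

```python
import numpy as np


def drop_points(points, keep, rng):
    """Keep a random subset of `keep` points, sampled without replacement."""
    idx = rng.choice(len(points), size=keep, replace=False)
    return points[idx]


rng = np.random.default_rng(0)
features = rng.normal(size=(1024, 32))  # stand-in for per-point features h(x_i)
full = features.max(axis=0)             # global feature from all 1024 points
for keep in (512, 256, 128):
    pooled = drop_points(features, keep, rng).max(axis=0)
    # Element-wise, the max over a subset is bounded by the max over the full set.
    assert np.all(pooled <= full)
    print(keep, np.mean(pooled == full))  # fraction of global-feature entries left unchanged
```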

Influence of input and feature transform networks and deeper architectures

In Sects. 5.1 and 5.2, we pointed out that PointNet-KAN is effective despite its simple and shallow architecture and the absence of input and feature transform networks. A question therefore arises: if such a simple structure performs well, could PointNet-KAN be improved further by deepening the network and adding input and feature transform networks? To answer this question, a straightforward approach is to replace all MLPs in the PointNet architecture (see Fig. 2 of Qi et al. (2017a) for the classification branch and Fig. 9 of Qi et al. (2017a) for the segmentation branch) with KANs to create an equivalent model. We conduct this experiment as follows. We use KAN layers with a Jacobi polynomial degree of 2 (i.e., $n = 2$) and fixed values of the Jacobi parameters $\alpha$ and $\beta$. The sizes of the sequential KAN layers are chosen to match the corresponding MLP sizes in PointNet, such as (64, 64), (64, 128, 1024), and so on, as illustrated in Qi et al. (2017a). To conserve space, we do not sketch the full network architecture again. Interestingly, the network's performance does not improve: the overall classification accuracy on ModelNet40 (Wu et al., 2015) is 88.9%, and the mean IoU on the ShapeNet part dataset (Yi et al., 2016) is 82.1%.
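A rough sense of the size of this deeper variant follows from Eq. 3: a KAN layer mapping $d_{\text{input}}$ to $d_{\text{output}}$ features holds $(n + 1)\, d_{\text{input}}\, d_{\text{output}}$ coefficients, where the $k = 0$ term already plays the role of a bias because $P_0^{(\alpha,\beta)} = 1$. The sketch below compares this count with a bias-equipped MLP for PointNet's shared per-point layers (64, 64) followed by (64, 128, 1024) with $d = 3$; it ignores the transform networks, batch normalization, and the classification head, so the numbers are illustrative only.

```python
def mlp_params(sizes):
    # Weights plus biases of a stack of fully connected layers
    return sum(a * b + b for a, b in zip(sizes, sizes[1:]))


def kan_params(sizes, n):
    # Eq. 3: n + 1 coefficients for each of the d_input * d_output entries of A
    return sum((n + 1) * a * b for a, b in zip(sizes, sizes[1:]))


shared = [3, 64, 64, 64, 128, 1024]  # d = 3, then (64, 64) and (64, 128, 1024)
print(mlp_params(shared))            # 148992
print(kan_params(shared, n=2))       # 442944
```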

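Table 5: Part segmentation IoU (%) on the ShapeNet part dataset for different Jacobi polynomial degrees.

| | Mean IoU | aero | bag | cap | car | chair | earphone | guitar | knife | lamp | laptop | motorbike | mug | pistol | rocket | skateboard | table |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| # shapes | | 2690 | 76 | 55 | 898 | 3758 | 69 | 787 | 392 | 1547 | 451 | 202 | 184 | 283 | 66 | 152 | 5271 |
| | 82.8 | 81.1 | 76.8 | 78.7 | 74.4 | 88.4 | 64.8 | 90.5 | 84.5 | 78.8 | 95.0 | 66.9 | 93.0 | 82.3 | 56.8 | 73.5 | 80.7 |
| | 81.8 | 80.0 | 76.3 | 79.6 | 72.1 | 88.0 | 69.4 | 89.0 | 83.0 | 79.4 | 95.0 | 61.5 | 91.3 | 81.0 | 55.3 | 70.0 | 79.0 |
| | 82.4 | 81.2 | 71.2 | 75.6 | 70.7 | 87.9 | 68.3 | 90.0 | 81.8 | 78.4 | 94.0 | 60.7 | 90.7 | 80.1 | 51.3 | 70.8 | 81.7 |
| | 80.7 | 78.2 | 72.0 | 79.0 | 67.8 | 87.5 | 68.9 | 87.6 | 81.3 | 76.6 | 94.5 | 60.8 | 88.0 | 81.0 | 47.3 | 69.3 | 79.3 |
| | 82.2 | 80.5 | 70.8 | 78.0 | 71.7 | 87.5 | 62.5 | 88.0 | 82.7 | 76.8 | 94.6 | 62.8 | 92.0 | 78.9 | 48.7 | 65.9 | 81.6 |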

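Table 6: Part segmentation IoU (%) on the ShapeNet part dataset for different Jacobi polynomial types.

| | Mean IoU | aero | bag | cap | car | chair | earphone | guitar | knife | lamp | laptop | motorbike | mug | pistol | rocket | skateboard | table |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| # shapes | | 2690 | 76 | 55 | 898 | 3758 | 69 | 787 | 392 | 1547 | 451 | 202 | 184 | 283 | 66 | 152 | 5271 |
| | 83.1 | 82.0 | 73.5 | 80.2 | 75.4 | 88.5 | 68.9 | 90.4 | 83.9 | 80.6 | 95.2 | 65.3 | 92.7 | 81.2 | 56.9 | 72.4 | 80.9 |
| | 83.3 | 81.0 | 76.8 | 79.8 | 74.6 | 88.7 | 65.4 | 90.9 | 85.3 | 79.9 | 95.0 | 65.3 | 93.0 | 83.0 | 54.3 | 71.9 | 81.6 |
| | 81.7 | 80.5 | 74.9 | 78.9 | 69.3 | 87.5 | 66.3 | 89.5 | 84.1 | 77.3 | 95.0 | 64.5 | 92.0 | 81.7 | 53.1 | 71.3 | 79.7 |
| | 82.8 | 81.1 | 76.8 | 78.7 | 74.4 | 88.4 | 64.8 | 90.5 | 84.5 | 78.8 | 95.0 | 66.9 | 93.0 | 82.3 | 56.8 | 73.5 | 80.7 |
| | 82.6 | 81.0 | 75.8 | 81.5 | 72.1 | 88.1 | 68.0 | 90.9 | 83.5 | 79.5 | 95.2 | 63.2 | 91.2 | 80.5 | 58.2 | 74.0 | 80.8 |
| | 82.5 | 81.0 | 73.3 | 82.4 | 71.6 | 88.3 | 68.5 | 90.7 | 84.3 | 79.3 | 95.4 | 64.2 | 91.3 | 81.9 | 54.6 | 70.4 | 80.5 |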

6 Related work

Relevant work on KANs can be discussed from two perspectives. The first concerns the use of KANs for classification and segmentation tasks in computer graphics and computer vision. For classification, researchers (Cheon, 2024; Bodner et al., 2024; Azam & Akhtar, 2024) have embedded KANs as a replacement for MLPs in various popular neural networks for two-dimensional image classification, such as VGG16 (Simonyan & Zisserman, 2014), MobileNetV2 (Sandler et al., 2018), EfficientNet (Tan, 2019), ConvNeXt (Liu et al., 2022), ResNet-101 (He et al., 2016), and Vision Transformer (Dosovitskiy, 2020), and evaluated the performance of these networks with KANs. For 3D image segmentation tasks, KANs have been embedded into U-Net (Ronneberger et al., 2015) as a replacement for MLPs (Tang et al., 2024; Wu et al., 2024). However, no prior work has explored the use of KANs in point-cloud-based neural networks for 3D classification and segmentation of unordered point sets or evaluated their performance on complex benchmark datasets such as ModelNet40 (Wu et al., 2015) and the ShapeNet part dataset (Yi et al., 2016). From the second perspective, KANs were originally constructed using B-splines as basis functions (Liu et al., 2024), and researchers have used this type of basis for image classification and segmentation (Cheon, 2024; Bodner et al., 2024; Azam & Akhtar, 2024). However, studies have shown that B-splines are computationally expensive and difficult to implement (Howard et al., 2024; Rigas et al., 2024). To address these issues, recent work in scientific machine learning has suggested Jacobi polynomials as an alternative basis in KANs (SS, 2024; Seydi, 2024). According to these studies, Jacobi polynomials are not only easier to implement but also computationally more efficient. However, no prior work has explored KANs with Jacobi polynomials in computer vision for classification and segmentation tasks.

7 Summary

In this work, we proposed, for the first time, PointNet with shared KANs (i.e., PointNet-KAN) and compared its performance to PointNet with shared MLPs. Our results demonstrated that PointNet-KAN achieved performance competitive with PointNet in both classification and segmentation tasks, while using a simpler and much shallower network than the deep PointNet with shared MLPs. In our implementation of shared KANs, we compared various members of the Jacobi polynomial family, including the Legendre, Chebyshev, and Gegenbauer polynomials, and observed no significant differences in performance among them. Additionally, we found that a polynomial degree of 2 was sufficient. We hope this work lays a foundation and offers insights for incorporating KANs, as an alternative to MLPs, into more advanced architectures for point cloud deep learning frameworks.

Acknowledgments

We express our gratitude to the Sherlock Computing Center at Stanford University for providing the computational resources that supported this research.

Reproducibility Statement

The Python code is available in the following GitHub repository: https://github.com/Ali-Stanford/PointNet_KAN_Graphic.

References

- Abramowitz (1974) Milton Abramowitz. Handbook of Mathematical Functions, With Formulas, Graphs, and Mathematical Tables. Dover Publications, Inc., USA, 1974. ISBN 0486612724.

- Abueidda et al. (2024) Diab W Abueidda, Panos Pantidis, and Mostafa E Mobasher. Deepokan: Deep operator network based on kolmogorov arnold networks for mechanics problems. arXiv preprint arXiv:2405.19143, 2024.

- Arnold (2009) Vladimir I Arnold. On functions of three variables. Collected Works: Representations of Functions, Celestial Mechanics and KAM Theory, 1957–1965, pp. 5–8, 2009.

- Azam & Akhtar (2024) Basim Azam and Naveed Akhtar. Suitability of kans for computer vision: A preliminary investigation. arXiv preprint arXiv:2406.09087, 2024.

- Bodner et al. (2024) Alexander Dylan Bodner, Antonio Santiago Tepsich, Jack Natan Spolski, and Santiago Pourteau. Convolutional kolmogorov-arnold networks. arXiv preprint arXiv:2406.13155, 2024.

- Bresson et al. (2024) Roman Bresson, Giannis Nikolentzos, George Panagopoulos, Michail Chatzianastasis, Jun Pang, and Michalis Vazirgiannis. Kagnns: Kolmogorov-arnold networks meet graph learning. arXiv preprint arXiv:2406.18380, 2024.

- Chang et al. (2015) Angel X Chang, Thomas Funkhouser, Leonidas Guibas, Pat Hanrahan, Qixing Huang, Zimo Li, Silvio Savarese, Manolis Savva, Shuran Song, Hao Su, et al. Shapenet: An information-rich 3d model repository. arXiv preprint arXiv:1512.03012, 2015.

- Cheon (2024) Minjong Cheon. Kolmogorov-arnold network for satellite image classification in remote sensing. arXiv preprint arXiv:2406.00600, 2024.

- Cybenko (1989) George Cybenko. Approximation by superpositions of a sigmoidal function. Mathematics of control, signals and systems, 2(4):303–314, 1989.

- Dawson-Haggerty et al. trimesh. URL https://trimesh.org/.

- De Carlo et al. (2024) Gianluca De Carlo, Andrea Mastropietro, and Aris Anagnostopoulos. Kolmogorov-arnold graph neural networks. arXiv preprint arXiv:2406.18354, 2024.

- Dosovitskiy (2020) Alexey Dosovitskiy. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929, 2020.

- Guo et al. (2020) Yulan Guo, Hanyun Wang, Qingyong Hu, Hao Liu, Li Liu, and Mohammed Bennamoun. Deep learning for 3d point clouds: A survey. IEEE transactions on pattern analysis and machine intelligence, 43(12):4338–4364, 2020.

- He et al. (2016) Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 770–778, 2016.

- Hornik et al. (1989) Kurt Hornik, Maxwell Stinchcombe, and Halbert White. Multilayer feedforward networks are universal approximators. Neural networks, 2(5):359–366, 1989.

- Howard et al. (2024) Amanda A Howard, Bruno Jacob, Sarah H Murphy, Alexander Heinlein, and Panos Stinis. Finite basis kolmogorov-arnold networks: domain decomposition for data-driven and physics-informed problems. arXiv preprint arXiv:2406.19662, 2024.

- Ioffe & Szegedy (2015) Sergey Ioffe and Christian Szegedy. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In International conference on machine learning, pp. 448–456. pmlr, 2015.

- Kashefi (2024) Ali Kashefi. Kolmogorov-Arnold PointNet: Deep learning for prediction of fluid fields on irregular geometries. arXiv preprint arXiv:2408.02950, 2024.

- Kashefi & Mukerji (2021) Ali Kashefi and Tapan Mukerji. Point-cloud deep learning of porous media for permeability prediction. Physics of Fluids, 33(9), 2021.

- Kashefi & Mukerji (2022) Ali Kashefi and Tapan Mukerji. Physics-informed pointnet: A deep learning solver for steady-state incompressible flows and thermal fields on multiple sets of irregular geometries. Journal of Computational Physics, 468:111510, 2022.

- Kashefi & Mukerji (2023) Ali Kashefi and Tapan Mukerji. Prediction of fluid flow in porous media by sparse observations and physics-informed pointnet. Neural Networks, 167:80–91, 2023.

- Kashefi et al. (2021) Ali Kashefi, Davis Rempe, and Leonidas J Guibas. A point-cloud deep learning framework for prediction of fluid flow fields on irregular geometries. Physics of Fluids, 33(2), 2021.

- Kashefi et al. (2023) Ali Kashefi, Leonidas J Guibas, and Tapan Mukerji. Physics-informed pointnet: On how many irregular geometries can it solve an inverse problem simultaneously? application to linear elasticity. Journal of Machine Learning for Modeling and Computing, 4(4), 2023.

- Kiamari et al. (2024) Mehrdad Kiamari, Mohammad Kiamari, and Bhaskar Krishnamachari. Gkan: Graph kolmogorov-arnold networks. arXiv preprint arXiv:2406.06470, 2024.

- Koenig et al. (2024) Benjamin C Koenig, Suyong Kim, and Sili Deng. Kan-odes: Kolmogorov-arnold network ordinary differential equations for learning dynamical systems and hidden physics. arXiv preprint arXiv:2407.04192, 2024.

- Kolmogorov (1957) A. K. Kolmogorov. On the representation of continuous functions of several variables by superposition of continuous functions of one variable and addition. Doklady Akademii Nauk SSSR, 114:369–373, 1957.

- Li et al. (2024) Chenxin Li, Xinyu Liu, Wuyang Li, Cheng Wang, Hengyu Liu, and Yixuan Yuan. U-KAN makes strong backbone for medical image segmentation and generation. arXiv preprint arXiv:2406.02918, 2024.

- Li et al. (2020) Ying Li, Lingfei Ma, Zilong Zhong, Fei Liu, Michael A Chapman, Dongpu Cao, and Jonathan Li. Deep learning for LiDAR point clouds in autonomous driving: A review. IEEE Transactions on Neural Networks and Learning Systems, 32(8):3412–3432, 2020.

- Liu et al. (2022) Zhuang Liu, Hanzi Mao, Chao-Yuan Wu, Christoph Feichtenhofer, Trevor Darrell, and Saining Xie. A ConvNet for the 2020s. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp. 11976–11986, 2022.

- Liu et al. (2024) Ziming Liu, Yixuan Wang, Sachin Vaidya, Fabian Ruehle, James Halverson, Marin Soljačić, Thomas Y Hou, and Max Tegmark. KAN: Kolmogorov-Arnold networks. arXiv preprint arXiv:2404.19756, 2024.

- Lobanov et al. (2024) Valeriy Lobanov, Nikita Firsov, Evgeny Myasnikov, Roman Khabibullin, and Artem Nikonorov. HyperKAN: Kolmogorov-Arnold networks make hyperspectral image classificators smarter. arXiv preprint arXiv:2407.05278, 2024.

- Lu et al. (2024) Hao Lu, Mostafa Rezapour, Haseebullah Baha, Muhammad Khalid Khan Niazi, Aarthi Narayanan, and Metin Nafi Gurcan. Gene PointNet for tumor classification. Neural Computing and Applications, pp. 1–15, 2024.

- Ma et al. (2022) Xu Ma, Can Qin, Haoxuan You, Haoxi Ran, and Yun Fu. Rethinking network design and local geometry in point cloud: A simple residual MLP framework. arXiv preprint arXiv:2202.07123, 2022.

- Qi et al. (2017a) Charles R Qi, Hao Su, Kaichun Mo, and Leonidas J Guibas. PointNet: Deep learning on point sets for 3D classification and segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 652–660, 2017a.

- Qi et al. (2017b) Charles Ruizhongtai Qi, Li Yi, Hao Su, and Leonidas J Guibas. PointNet++: Deep hierarchical feature learning on point sets in a metric space. Advances in neural information processing systems, 30, 2017b.

- Qi et al. (2024) Zekun Qi, Runpei Dong, Shaochen Zhang, Haoran Geng, Chunrui Han, Zheng Ge, Li Yi, and Kaisheng Ma. ShapeLLM: Universal 3D object understanding for embodied interaction. arXiv preprint arXiv:2402.17766, 2024.

- Rigas et al. (2024) Spyros Rigas, Michalis Papachristou, Theofilos Papadopoulos, Fotios Anagnostopoulos, and Georgios Alexandridis. Adaptive training of grid-dependent physics-informed Kolmogorov-Arnold networks. arXiv preprint arXiv:2407.17611, 2024.

- Ronneberger et al. (2015) Olaf Ronneberger, Philipp Fischer, and Thomas Brox. U-Net: Convolutional networks for biomedical image segmentation. In Medical image computing and computer-assisted intervention–MICCAI 2015: 18th international conference, Munich, Germany, October 5-9, 2015, proceedings, part III 18, pp. 234–241. Springer, 2015.

- Sandler et al. (2018) Mark Sandler, Andrew Howard, Menglong Zhu, Andrey Zhmoginov, and Liang-Chieh Chen. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 4510–4520, 2018.

- Seydi (2024) Seyd Teymoor Seydi. Exploring the potential of polynomial basis functions in Kolmogorov-Arnold networks: A comparative study of different groups of polynomials. arXiv preprint arXiv:2406.02583, 2024.

- Shen et al. (2023) Yang Shen, Wei Huang, Zhen-guo Wang, Da-fu Xu, and Chao-Yang Liu. A deep learning framework for aerodynamic pressure prediction on general three-dimensional configurations. Physics of Fluids, 35(10), 2023.

- Shen et al. (2018) Yiru Shen, Chen Feng, Yaoqing Yang, and Dong Tian. Mining point cloud local structures by kernel correlation and graph pooling. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 4548–4557, 2018.

- Shen et al. (2021) Zhengyuan Shen, Yangzesheng Sun, Timothy P Lodge, and J Ilja Siepmann. Development of a PointNet for detecting morphologies of self-assembled block oligomers in atomistic simulations. The Journal of Physical Chemistry B, 125(20):5275–5284, 2021.

- Shukla et al. (2024) Khemraj Shukla, Juan Diego Toscano, Zhicheng Wang, Zongren Zou, and George Em Karniadakis. A comprehensive and fair comparison between MLP and KAN representations for differential equations and operator networks. arXiv preprint arXiv:2406.02917, 2024.

- Simonyan & Zisserman (2014) Karen Simonyan and Andrew Zisserman. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556, 2014.

- SS (2024) Sidharth SS. Chebyshev polynomial-based Kolmogorov-Arnold networks: An efficient architecture for nonlinear function approximation. arXiv preprint arXiv:2405.07200, 2024.

- Szegő (1939) G. Szegő. Orthogonal polynomials. American Mathematical Society Colloquium Publications, 23, 1939. ISSN 0065-9258. URL http://dx.doi.org/10.1090/coll/023.

- Tan (2019) Mingxing Tan. EfficientNet: Rethinking model scaling for convolutional neural networks. arXiv preprint arXiv:1905.11946, 2019.

- Tang et al. (2024) Tianze Tang, Yanbing Chen, and Hai Shu. 3D U-KAN implementation for multi-modal MRI brain tumor segmentation. arXiv preprint arXiv:2408.00273, 2024.

- Thomas et al. (2019) Hugues Thomas, Charles R Qi, Jean-Emmanuel Deschaud, Beatriz Marcotegui, François Goulette, and Leonidas J Guibas. KPConv: Flexible and deformable convolution for point clouds. In Proceedings of the IEEE/CVF international conference on computer vision, pp. 6411–6420, 2019.

- Tran et al. (2024) Van Duy Tran, Tran Xuan Hieu Le, Thi Diem Tran, Hoai Luan Pham, Vu Trung Duong Le, Tuan Hai Vu, Van Tinh Nguyen, and Yasuhiko Nakashima. Exploring the limitations of Kolmogorov-Arnold networks in classification: Insights to software training and hardware implementation, 2024.

- Uy & Lee (2018) Mikaela Angelina Uy and Gim Hee Lee. PointNetVLAD: Deep point cloud based retrieval for large-scale place recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 4470–4479, 2018.

- Wang et al. (2024a) Yanheng Wang, Xiaohan Yu, Yongsheng Gao, Jianjun Sha, Jian Wang, Lianru Gao, Yonggang Zhang, and Xianhui Rong. SpectralKAN: Kolmogorov-Arnold network for hyperspectral images change detection. arXiv preprint arXiv:2407.00949, 2024a.

- Wang et al. (2024b) Yizheng Wang, Jia Sun, Jinshuai Bai, Cosmin Anitescu, Mohammad Sadegh Eshaghi, Xiaoying Zhuang, Timon Rabczuk, and Yinghua Liu. Kolmogorov Arnold informed neural network: A physics-informed deep learning framework for solving PDEs based on Kolmogorov Arnold networks. arXiv preprint arXiv:2406.11045, 2024b.

- Wang et al. (2019) Yue Wang, Yongbin Sun, Ziwei Liu, Sanjay E Sarma, Michael M Bronstein, and Justin M Solomon. Dynamic graph CNN for learning on point clouds. ACM Transactions on Graphics (TOG), 38(5):1–12, 2019.

- Wang et al. (2023) Ziyi Wang, Xumin Yu, Yongming Rao, Jie Zhou, and Jiwen Lu. Take-a-photo: 3D-to-2D generative pre-training of point cloud models. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 5640–5650, 2023.

- Wu et al. (2024) Yanlin Wu, Tao Li, Zhihong Wang, Hong Kang, and Along He. TransUKAN: Computing-efficient hybrid KAN-transformer for enhanced medical image segmentation. arXiv preprint arXiv:2409.14676, 2024.

- Wu et al. (2015) Zhirong Wu, Shuran Song, Aditya Khosla, Fisher Yu, Linguang Zhang, Xiaoou Tang, and Jianxiong Xiao. 3D ShapeNets: A deep representation for volumetric shapes. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 1912–1920, 2015.

- Wu et al. (2014) Zizhao Wu, Ruyang Shou, Yunhai Wang, and Xinguo Liu. Interactive shape co-segmentation via label propagation. Computers & Graphics, 38:248–254, 2014.

- Yi et al. (2016) Li Yi, Vladimir G Kim, Duygu Ceylan, I-Chao Shen, Mengyan Yan, Hao Su, Cewu Lu, Qixing Huang, Alla Sheffer, and Leonidas Guibas. A scalable active framework for region annotation in 3D shape collections. ACM Transactions on Graphics (TOG), 35(6):1–12, 2016.

- Yu et al. (2024) Runpeng Yu, Weihao Yu, and Xinchao Wang. KAN or MLP: A fairer comparison. arXiv preprint arXiv:2407.16674, 2024.

- Zhang & Zhang (2024) Fan Zhang and Xin Zhang. GraphKAN: Enhancing feature extraction with graph Kolmogorov Arnold networks. arXiv preprint arXiv:2406.13597, 2024.

- Zhang et al. (2023a) Huang Zhang, Changshuo Wang, Shengwei Tian, Baoli Lu, Liping Zhang, Xin Ning, and Xiao Bai. Deep learning-based 3D point cloud classification: A systematic survey and outlook. Displays, 79:102456, 2023a.

- Zhang et al. (2023b) Rui Zhang, Yichao Wu, Wei Jin, and Xiaoman Meng. Deep-learning-based point cloud semantic segmentation: A survey. Electronics, 12(17):3642, 2023b.

- Zhao et al. (2021) Hengshuang Zhao, Li Jiang, Jiaya Jia, Philip HS Torr, and Vladlen Koltun. Point transformer. In Proceedings of the IEEE/CVF international conference on computer vision, pp. 16259–16268, 2021.

Appendix A Supplementary materials

A.1 Training Details

The models for both classification and part segmentation are implemented in PyTorch. Classification uses a batch size of 64, and part segmentation uses a batch size of 32. Training uses the Adam optimizer, configured with , , and . The initial learning rate is 0.0005 for classification and 0.001 for part segmentation. To progressively decrease the learning rate during training, a scheduler reduces it by a factor of 0.5 every 20 epochs. The loss function is cross-entropy. All experiments are run on an NVIDIA A100 Tensor Core GPU with 80 GB of memory.
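As a rough illustration of the step-decay schedule described above, the following plain-Python sketch computes the learning rate for each epoch from the reported batch sizes and initial learning rates. It assumes 0-indexed epochs and one scheduler step per epoch. The names `TrainConfig`, `CONFIGS`, and `lr_at_epoch` are hypothetical and are not taken from the authors' code. In PyTorch, the same behavior is typically obtained with `torch.optim.lr_scheduler.StepLR(optimizer, step_size=20, gamma=0.5)`. The Adam hyperparameters are omitted because their values are not stated in this section.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hypothetical container for the hyperparameters reported in A.1."""
    batch_size: int
    initial_lr: float
    decay_factor: float = 0.5  # learning rate is multiplied by 0.5 ...
    decay_every: int = 20      # ... after every 20 epochs


# Batch sizes and initial learning rates as reported for each task.
CONFIGS = {
    "classification": TrainConfig(batch_size=64, initial_lr=0.0005),
    "part_segmentation": TrainConfig(batch_size=32, initial_lr=0.001),
}


def lr_at_epoch(cfg: TrainConfig, epoch: int) -> float:
    """Return the step-decayed learning rate for a 0-indexed epoch.

    Assumed to match StepLR(step_size=20, gamma=0.5) when scheduler.step()
    is called once at the end of every epoch.
    """
    if epoch < 0:
        raise ValueError("epoch must be non-negative")
    num_decays = epoch // cfg.decay_every
    return cfg.initial_lr * cfg.decay_factor ** num_decays


if __name__ == "__main__":
    for task, cfg in CONFIGS.items():
        schedule = [lr_at_epoch(cfg, e) for e in (0, 19, 20, 40)]
        print(task, cfg.batch_size, schedule)
```

With the classification settings, this sketch keeps the rate at 0.0005 for epochs 0 to 19, halves it to 0.00025 at epoch 20, and halves it again to 0.000125 at epoch 40.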