PointCG: Self-supervised Point Cloud Learning via Joint Completion and Generation

Abstract

The core of self-supervised point cloud learning lies in setting up appropriate pretext tasks, to construct a pre-training framework that enables the encoder to perceive 3D objects effectively. In this paper, we integrate two prevalent methods, masked point modeling (MPM) and 3D-to-2D generation, as pretext tasks within a pre-training framework. We leverage the spatial awareness and precise supervision offered by these two methods to address their respective limitations: ambiguous supervision signals and insensitivity to geometric information. Specifically, the proposed framework, abbreviated as PointCG, consists of a Hidden Point Completion (HPC) module and an Arbitrary-view Image Generation (AIG) module. We first capture visible points from arbitrary views as inputs by removing hidden points. Then, HPC extracts representations of the inputs with an encoder and completes the entire shape with a decoder, while AIG is used to generate rendered images based on the visible points’ representations. Extensive experiments demonstrate the superiority of the proposed method over the baselines in various downstream tasks. Our code will be made available upon acceptance.

Index Terms:

PointCG, self-supervised learning, hidden point completion, arbitrary-view image generation, point clouds1 Introduction

Self-supervised representation learning (SSRL) aims to fully exploit the statistical and structural knowledge inherent in unlabeled datasets, enabling the encoder of the pre-training model to extract informative and discriminative representations. The pre-trained encoder can be subsequently applied to various downstream tasks such as classification, segmentation, and object detection [1, 2]. The core of SSRL lies in the design of appropriate pretext tasks aimed at aiding the encoder in achieving a full perception and understanding of the inputs.

Based on the tasks employed, existing self-supervised pre-training methods can be broadly classified into two paradigms: contrastive learning and generative learning, both of which have attained great success in processing 2D images [4, 5, 6] and 3D point clouds [7, 3, 8, 9, 10, 11]. Compared to contrastive learning, generative learning is considered as a more data-efficient pre-training method, capable of capturing the patterns of the inputs with relatively limited data volume [12]. Therefore, it is highly favored in the context of data scarcity within the field of 3D vision, where masked point modeling [7, 3, 8, 13, 10] and 3D-to-2D generation [11, 14] stand out as two representative generative learning methods. Among them, masked point modeling drives the model to predict arbitrary missing parts based on the remaining points. Accomplishing this task requires a thorough understanding of the spatial properties and global-local context of point clouds. 3D-to-2D generation employs a cross-modal pretext task which translates a 3D object point cloud to its diverse forms of 2D rendered images (e.g., silhouette, depth, contour). Pre-training with pixel-wise precise supervision drives the backbone to perceive the fine-grained edge details of 3D objects.

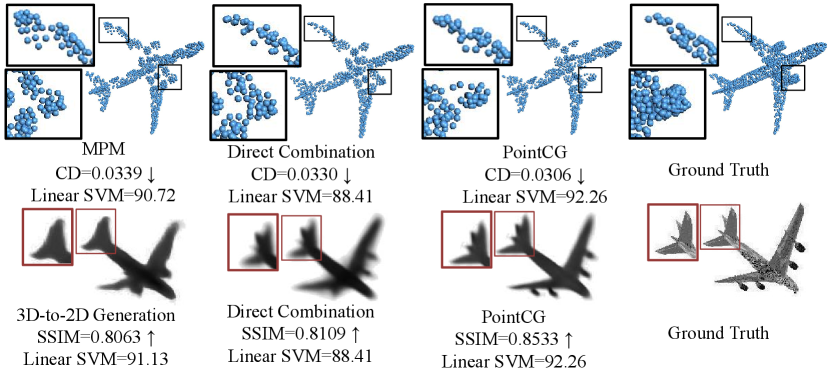

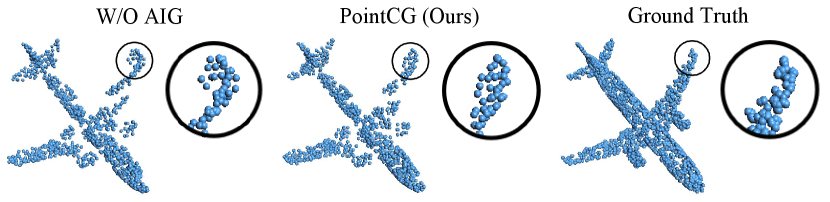

However, both of the above methods have their own limitations. As revealed in [15, 16, 17], due to the irregularity of point clouds, commonly used point set similarity metrics (e.g., Chamfer Distance and Earth Mover’s Distance) in masked point modeling cannot provide explicit point-to-point supervision between ground truth and generated point clouds. The lack of precise correspondence results in limited feature representation capability of the pre-trained backbone network. Conversely, 3D-to-2D generation [11, 14] alleviates the issue of insufficient supervision signals by utilizing regular 2D images as the generation objective, offering pixel-wise precise supervision. However, relying solely on images from limited views as ground truth may overlook the structural information from occluded point sets, diminishing the backbone’s perception of the spatial properties of point clouds. As shown in Fig. 1, masked point modeling exhibits subpar performance in reconstructing some challenging areas (e.g., edges) due to the lack of point-to-point supervision. Besides, 3D-to-2D generation yields images lacking three-dimensional structural information, attributed to the lack of explicit geometric guidance. These observations collectively indicate the models’ inadequate perception of the inputs, consequently reducing their performance on downstream tasks.

Based on the aforementioned analysis, an intuitive method is to combine these two pretext tasks to retain their individual merits while compensating for their respective limitations. However, as shown in Fig. 1, while the model directly combining both tasks outperforms those relying solely on MPM or 3D-to-2D generation in generating high-quality point clouds or images, its Linear-SVM accuracy is lower ( vs and ). We argue that the encoder’s involvement in both tasks can lead to confusion when generating content for two modalities concurrently. Furthermore, to accomplish both tasks, the model shifts its training focus toward the decoder, which is typically discarded after pre-training. This phenomenon diminishes the feature extraction capability of the encoder, ultimately reducing the Linear SVM accuracy.

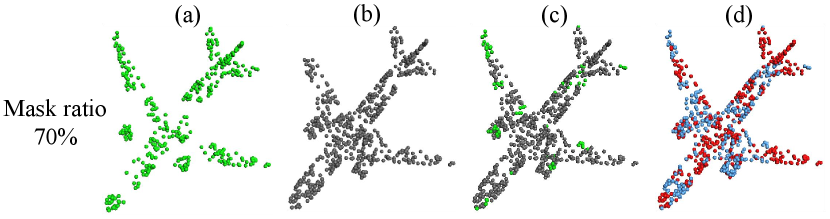

To address these issues, we propose PointCG, a framework that effectively integrates masked point modeling and 3D-to-2D generation tasks. This framework incorporates a Hidden Point Completion (HPC) module and an Arbitrary-view Image Generation (AIG) module. Existing MAE-based MPM methods often employ a random masking strategy based on Farthest Point Sampling (FPS) and K-Nearest Neighbor (KNN) techniques. However, the inputs of unmasked patches (Fig. 2 (a)) preserve the overall shape of an object and exhibit substantial overlap with the target points (highlighted in red in Fig. 2 (d)). The leakage of overall structure and point location information enables the model to reconstruct the object without a holistic comprehension of the entire structure, which limits the learning capacity of the encoder during pre-training. To overcome this limitation, we select the visible points from arbitrary views by removing hidden points as input and introduce the HPC module to complete the point clouds. For the 3D-to-2D generation task, we employ the arbitrary-view image generation as the pretext task, which generates the image from an arbitrary view based on the representations of visible points extracted by the encoder. Furthermore, the cross-modal feature alignment is introduced to align the feature spaces of point clouds and images, which enables simultaneous content generation across both modalities and refocuses the training on the encoder. Specifically, we extract features from both the input point clouds and their corresponding rendered 2D images, encouraging feature proximity for the same instance while maintaining feature separation for different instances.

Through the effective integration of HPC and AIG, the pre-trained encoder achieves a comprehensive understanding of 3D objects and can extract high-quality 3D representations. We evaluate our model and the proposed modules with a variety of downstream tasks and ablation studies. We further demonstrate that informative representations can be effectively learned from the restricted points, and such representations facilitate effortless masked point modeling and arbitrary-view image generation.

2 Related Work

2.1 Self-supervised Representation Learning

Self-supervised representation learning aims to derive robust and general representations from unlabeled datasets, which can be broadly classified into two categories based on the types of pretext tasks: contrastive learning and generative learning.

Contrastive learning-based methods (e.g., BYOL [18], SimSiam [6], DINO [19], STRL [9], CrossPoint [20]) define the augmented views of a sample as positive samples, while considering other instances as negative samples, thereby constructing discriminative tasks. Generative learning-based methods (e.g., GPT [21], Point-BERT [7], Point-MAE [3], OcCo [10], MaskFeat3D [22]) are based on the intuition that effective feature abstractions contain sufficient information to reconstruct the original geometric structures [11]. In the point cloud processing community, where 3D assets are relatively scarce, generative learning has garnered widespread attention due to its data efficiency [4, 3, 13]. Among them, MAE stands out as one of the representative paradigms. It involves masking a substantial portion of input data, followed by the use of an encoder to extract informative representations and a decoder to reconstruct explicit features (e.g., pixels or points) or implicit features (e.g., discrete tokens). Taking Point-BERT [7], MaskFeat3D [22], and IAE [23] as examples, each of these methods utilizes the visible groups as input after masking and reconstructs the positions of masked points, surface normals, and surface variations, as well as the implicit features of the masked points. However, after random masking [3] or partial occlusion [13], the visible groups often retain the overall structure of the object (Fig. 7), and there are substantial overlap regions between input and target patches (Fig. 2). The leakage of overall structure and point location information will reduce the difficulty in reconstructing masked patches, thus limiting the learning and inference capabilities of the encoder.

To avoid the leakage of the object’s overall shape and minimize overlap, we simulate scanners to capture visible points from arbitrary views as input. Our approach is conceptually aligned with OcCo [10], which employs the Z-Buffer algorithm [24] to select visible points from multiple views, subsequently completing the original point clouds with an encoder-decoder architecture. The Z-Buffer algorithm addressed within rendering relies on two assumptions: the points satisfy sampling criteria (e.g., Nyquist condition) and the points are associated with normals (or the normals can be estimated) [25]. However, our method seeks rigorous theoretical support for visibility computation without requiring normal estimation, point rendering, or surface reconstruction. Therefore, we employ the Hidden Point Removal (HPR) operator to compute visibility in a more robust manner.

2.2 Cross-modal Learning

Recently, cross-modal learning has been a popular research topic, aiming at extracting informative representations from multiple modalities such as images, audio, and point clouds. It has the potential to enhance the performance of various tasks, including visual recognition, speech recognition, and point cloud analysis.

In point cloud analysis, a variety of methods have been proposed for cross-modal learning, such as CrossPoint [20], PointMCD [26], TAP [14], and PointVST [11]. CrossPoint [20] establishes cross-modal contrastive learning between images and point clouds, demonstrating that the correspondence between images and points can enhance 3D object understanding. PointMCD [26] obtains a powerful point encoder by aligning the multi-view visual and geometric descriptors generated by a pretrained image encoder and a learnable point encoder. Both CrossPoint [20] and PointMCD [26] are based on the contrastive paradigm and rely heavily on extensive 3D-2D paired data. Generative methods, such as TAP [14] and PointVST [11], generate images from specific views based on the input point clouds. These methods use regular 2D images as generation objectives to provide precise supervision.

In this paper, we follow the generative learning paradigm and propose a unified pre-training framework with two complementary pretext tasks: hidden point completion and arbitrary-view image generation. The spatial awareness provided by 3D completion addresses the geometric insensitivity inherent in image supervision, as shown in the second line of column one in Fig. 1. Additionally, we demonstrate the mutual enhancement between the two pretext tasks through various experiments.

3 Methodology

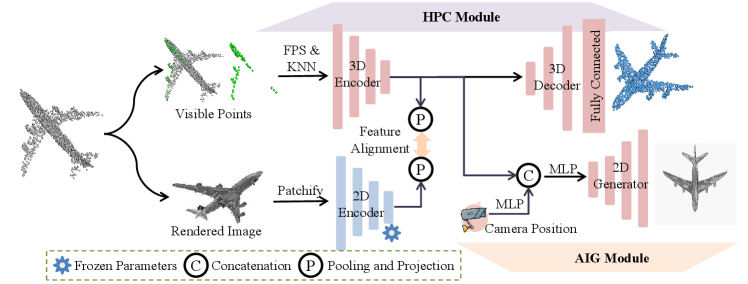

As illustrated in Fig. 3, PointCG mainly consists of a hidden point completion (HPC) module and an arbitrary-view image generation (AIG) module. Specifically, we begin by selecting the visible points from arbitrary views as the inputs (Sec. 3.1), and then introduce an asymmetric Transformer-based encoder-decoder architecture for extracting representations and completing hidden points (Sec. 3.2). Finally, we generate arbitrary-view images (Sec. 3.3) based on the aligned representations extracted by the encoder. In the following, we will delve into the details of these modules.

3.1 Data Organization

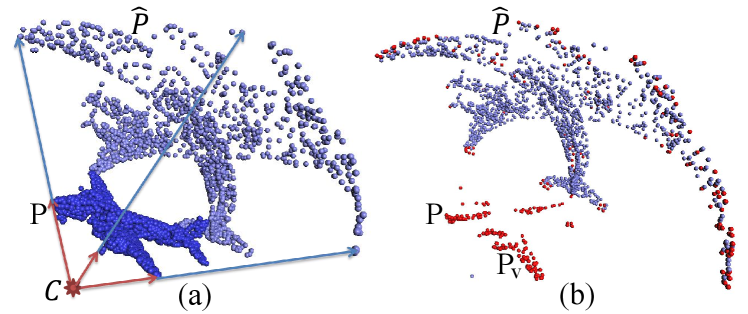

Given a complete point cloud , we randomly select the camera position , where is fixed at 1.0. and are randomly chosen within the range of . The HPR operator [25] is employed to determine whether is visible from . It mainly consists of two steps: inversion transformation and convex hull construction.

Inversion transformation. We employ spherical flip [27] to reflect each point to the spherical surface (denoted as ) along the ray from through to the spherical surface, as illustrated in Fig. 4 (a).

Convex hull construction. The visible points from inverted on the spherical surface are situated on the convex hull of . Therefore, we need to compute the collection of triangular planes, which make up the convex hull. Then we extract all vertices (magenta points in in Fig. 4 (b)) of the convex hull and project them back onto the original point cloud to obtain the visible points (magenta points in in Fig. 4 (b)). The remaining points of the original point cloud are hidden from , denoted as .

3.2 Occluded Point Completion

For each input, we employ the FPS and KNN to divide the visible points into patches with centers. Simultaneously, we extract central points from the hidden points and retrieve nearest neighbor points from the complete point cloud as the target patches . Then, the visible patches are projected into tokens with a lightweight PointNet [28], where is the dimension of features. Subsequently, a learnable Multi-Layer Perceptron (MLP) is adopted to embed the visible and hidden centers into positional tokens denoted as and , respectively. Finally, we extract representations by an encoder and capture the tokens with a decoder for completing the original point clouds:

| (1) |

| (2) |

where represents the hidden tokens, which is initialized by duplicating a learnable token of dimension . We concatenate the visible points’ features and , as well as the positional tokens and as the inputs of the decoder. Based on the outputs of the decoder, we will reconstruct the nearest neighbors of center points by a reconstruction head of a fully connected (FC) layer:

| (3) |

where denotes as the predicted hidden point patches.

Loss function. The Chamfer distance [29] is employed as the reconstruction loss:

| (4) |

where denotes the reconstruction targets.

3.3 Arbitrary-view Image Generation

3.3.1 Feature Alignment

To shift the pre-training focus towards enhancing the encoder for better 3D understanding, we employ the feature alignment module to build correspondence between images and point clouds in the feature space.

During pre-training, a pre-trained CLIP-visual [30] module is used to extract features from the rendered image . Then, the image features and the 3D features are projected into the invariant space with functions and , respectively, resulting in and .

Loss function. In the invariant space, we aim to maximize the similarity between and when they correspond to the same objects. The cross-modal instance discrimination loss can be formulated as:

| (5) |

where is the mini-batch size. is the temperature co-efficient, and denotes the cosine similarity function.

3.3.2 Image Generation

AIG generates rendered images from arbitrary views based on the visible points’ representations extracted by the encoder.

In pre-training, we randomly select a rendered image as the target and capture the corresponding view parameters as the input. comprises azimuth , elevation , and distance , described as . To enhance flexibility, we apply several learnable transformation layers for positional embedding tokens . Then, we concatenate with and encode the combined representation using MLP (): .

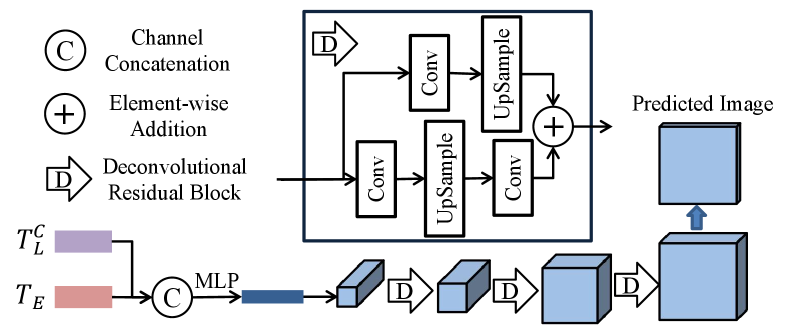

Finally, we design an image generator (Fig. 5) to generate rendered images based on .

Specifically, we start by reshaping from its original vectorized representation to a 2D feature map. This feature map is then passed through a series of deconvolutional residual blocks and three parallel convolutional blocks to generate the rendered image .

Loss function. We utilize the loss as the content loss for image generation:

| (6) |

where and represent the predicted and GT images, respectively, while denotes the number of sample points. Besides, we incorporate multi-scale frequency reconstruction (MSFR) loss [31] as the auxiliary loss alongside the content loss to reduce the differences in the frequency space. MSFR loss measures the distance between multi-scale GT and predicted images in the frequency domain:

| (7) |

where denotes the fast Fourier transform (FFT) that transfers the image signal to the frequency domain. MSFR can effectively maintain contrast in high-frequency regions, complementing the ability of to preserve colors and luminance [32]. The image generation loss is given by:

| (8) |

where the contribution of and can be adjusted by modifying the values of and .

Our loss function during pre-training is formulated as:

| (9) |

where enforces 3D completion, introduces 3D-2D correspondence, and ensures image generation.

4 Experiment

In this section, we present extensive experiments to demonstrate the effectiveness of our method. We begin by introducing the pre-training process on ShapeNet55 [33]. Then, we showcase its performance on 3D completion tasks in Sec. 4.1. Then, in Sec. 4.2, Sec. 4.3, and Sec. 4.4, we follow the previous works to conduct experiments of object classification, part segmentation, and semantic segmentation. Finally, we validate the effectiveness of our modules through various ablation studies in Sec. 4.6.

In the following tables, indicates whether the model is initialized with a pre-trained model, while signifies that the result is reproduced with the official code. Please note that we reproduce experiments of Point-MAE [3] and Point-M2AE [8] with their official codes, and all settings are consistent with our experimental configuration.

Pre-training. We pre-train the encoder on ShapeNet55 [33], which contains clean 3D models, covering common object categories. The input point number is , and the rendered images have a size of . The encoder and decoder include and standard Transformer blocks, respectively. Each Transformer block has hidden dimensions with heads. We employ the AdamW optimizer [34] and cosine learning rate decay [35]. The initial learning rate is set to , and the weight decay is .

4.1 3D Completion

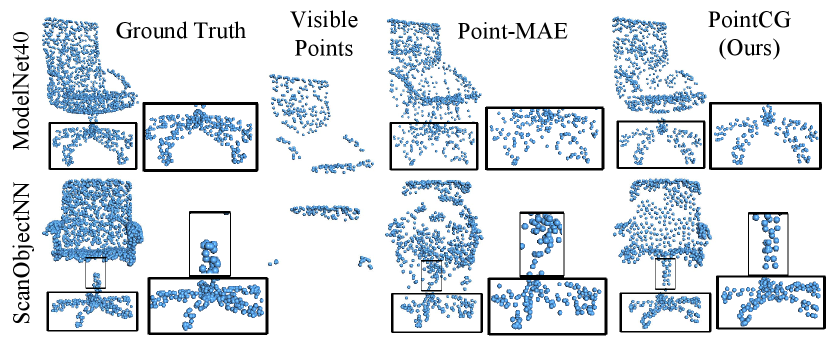

Completion based on visible points from random views. To assess the effectiveness of our self-supervised model initialized with pre-trained weights, we randomly select an instance from synthetic dataset ModelNet40 [36] and real-world dataset ScanObjectNN [37] separately and reconstruct the original point clouds. The visualization results of Point-MAE [3] and our model are shown in Fig. 6.

Compared to Point-MAE, our method not only completes the chair’s pivot axis and the five-pronged base with greater fidelity but also obtains smoother surface structures. Our method achieves remarkable performance in reconstructing both synthetic and real-world data with visible points from arbitrary views.

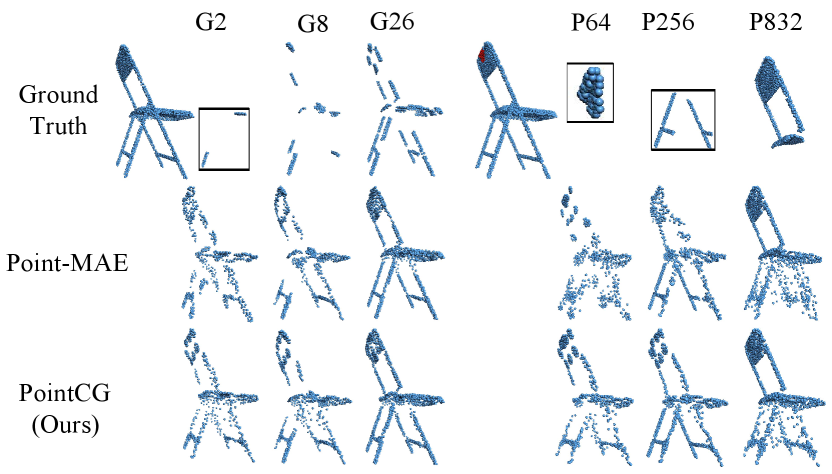

Completion based on grouped patches and partial points. To demonstrate the robustness and generalizability of our method in the 3D completion task, we devise two methods to obtain grouped patches and partial points as inputs. The reconstruction results are visualized in Fig. 7.

In the first method, we obtain , , and central points via FPS, and then acquire neighboring points with KNN to form groups [3, 8] denoted by ‘G2’, ‘G8’, and ‘G26’. In the second method, we randomly select one group, consisting of , , and points, denoted as ‘P64’, ‘P256’, and ‘P832’, respectively.

For ‘G2’ and ‘G8’, both Point-MAE and our method can complete the overall structure, but our method excels in recovering finer geometric details and sharper edges. The ‘G26’ column retains sufficient information, and the results are satisfactory for both methods. For ‘P64’ and ‘P256’, our method successfully completes entire structures and captures many local details. In contrast, the results of Point-MAE appear quite blurry. Although the ‘G26’ and ‘P832’ have the same number of input points, the reconstruction results of Point-MAE based on ‘P832’ are significantly lower than ‘G26’.

As shown, the inputs obtained by random masking retain objects’ structural information while exposing the coordinates of target points. These MAE methods employing random masking strategy, such as Point-MAE, exhibit poorer reconstruction performance when the inputs are partial and lack of complete structural integrity. Our method, however, excels in extracting informative representations and demonstrates strong inference capability, leading to superior reconstruction performance, even from highly partial point data.

4.2 Shape Classification

To assess the discrimination of the representations extracted by the pre-trained encoder, we validate the encoder on the shape classification task using the ModelNet40 [36] and ScanObjectNN [37] datasets.

Shape classification on synthetic data. ModelNet40 [36] contains clean 3D CAD models, covering object categories. We fine-tune the pre-trained encoder, and the results are presented in Tab. I. Our method achieves 94.03% with global fine-tuning, surpassing the reproduced version of Point-MAE (Rep.) (93.21%) by 0.82% and the publicly released accuracy by 0.23%. To validate the effectiveness of our architecture, we incorporate Point-M2AE as the backbone and evaluate its classification performance on ModelNet40. The classification results outperform the outcomes of the reproduced Point-M2AE model.

| Methods | Pre-T | booktitle/year. | Acc (Vote). |

|---|---|---|---|

| DGCNN [38] | - | ACM/2019 | 92.9 |

| RSCNN [39] | - | CVPR/2019 | 93.6 |

| PointTransformer [40] | - | ICCV/2021 | 93.7 |

| DGCNN+OcCo [10] | Y | ICCV/2021 | 93.0 |

| DGCNN+STRL [9] | Y | ICCV/2021 | 93.1 |

| DGCNN+MAE3D [13] | Y | TMM/2023 | 93.4 |

| Point-BERT [7] | Y | CVPR/2022 | 93.2 |

| MaskPoint [41] | Y | ECCV/2022 | 93.8 |

| Point-MAE [3] | Y | ECCV/2022 | 93.8 |

| Joint-MAE [42] | Y | CoRR/2023 | 94.0 |

| Point-MAE (Rep.) | Y | ECCV/2022 | 93.21 |

| PointCG (Point-MAE) | Y | - | 94.03 |

| Point-M2AE [8] | Y | NeurIPS/2022 | 94.0 |

| Point-M2AE (Rep.) | Y | NeurIPS/2022 | 93.59 |

| PointCG (Point-M2AE) | Y | - | 94.11 |

Besides, we also attempt to freeze the parameters of our pre-trained model, and validate it with a Linear-SVM classifier in Tab. II.

Our method outperforms Point-MAE [3] and Point-M2AE [8] by margins of +1.46% and +0.29%, respectively. The results highlight the superior quality of the 3D representation learned by our method.

| Methods | Pre-T | Acc. |

|---|---|---|

| FoldingNet [43] | - | 88.4 |

| DGCNN+Jiasaw [44] | Y | 90.6 |

| DGCNN+OcCo [10] | Y | 89.2 |

| DGCNN+CrossPoint [20] | Y | 91.2 |

| FoldingNet+PointMCD [26] | Y | 89.8 |

| Point-BERT [7] | Y | 87.4 |

| Joint-MAE [42] | Y | 92.4 |

| Point-MAE [3] | Y | 91.0 |

| PointCG (Point-MAE) | Y | 92.26 |

| Point-M2AE [8] | Y | 92.9 |

| Point-M2AE (Rep.) | Y | 92.63 |

| PointCG (Point-M2AE) | Y | 92.92 |

Shape classification on the real-world data. Evaluating a pre-trained model’s performance on real-world datasets is crucial, as real-world scenes tend to be more complex than synthetic ones. We follow the common practice to evaluate our model on three variants: ‘OBJ-BG’, ‘OBJ-ONLY’, and ‘PB-T50-RS’ of ScanObjectNN [37].

To further validate the effectiveness of our design, we follow PointVST [11] with DGCNN as the encoder to construct a pre-training network (DGCNN+PointCG) and evaluate it on the ‘PB-T50-RS’ variant. Additionally, we reproduce TAP [14] and PointVST [11] using their official pretrained models.

As presented in Tab. III, our method outperforms CrossPoint [20], as well as the reproduced TAP [14] and PointVST [11], all using DGCNN [38] as the encoder. When utilizing Point-MAE or Point-M2AE as the backbone, the classification results exhibit a significant improvement over the reproduced Point-MAE and Point-M2AE. These results underscore the discriminative power of the representations extracted by the encoder, even in complex real-world scenes.

| Methods | OBJ-BG | OBJ-ONLY | PB-T50-RS |

|---|---|---|---|

| DGCNN [38] | 82.8 | 86.2 | 78.1 |

| DGCNN+MAE3D [13] | 87.7 | 88.4 | 86.2 |

| DGCNN+CrossPoint [20] | - | - | 86.2 |

| DGCNN+TAP [14] | - | - | 86.6 |

| DGCNN+TAP [14](Rep.) | - | - | 86.54 |

| DGCNN+PointVST [11] | - | - | 89.3 |

| DGCNN+PointVST [11] (Rep.) | - | - | 87.6 |

| DGCNN+PointCG | - | - | 87.90 |

| Transformer+OcCo [10] | 84.85 | 85.54 | 78.79 |

| Transformer+TAP [14] | 90.36 | 89.50 | 85.67 |

| Point-BERT [7] | 87.43 | 88.12 | 83.07 |

| Joint-MAE [42] | 90.94 | 88.86 | 86.07 |

| Point-MAE [3] | 90.02 | 88.29 | 85.18 |

| PointCG (Point-MAE) | 91.16 | 88.99 | 86.47 |

| Point-M2AE [8] | 91.22 | 88.81 | 86.43 |

| Point-M2AE (Rep.) | 90.87 | 88.12 | 85.39 |

| PointCG (Point-M2AE) | 91.19 | 88.72 | 86.41 |

Few-shot Learning. Following previous works [45, 7, 10, 3], we conduct few-shot learning experiments using the pre-trained model on ModelNet40 [36]. We adopt -way, -shot setting, where denotes the number of classes randomly selected from the dataset, and represents the number of objects randomly sampled for each class. This yields objects for training. For evaluation, we randomly select unseen objects from each of classes.

The results with settings of and are presented in Tab. IV. As shown, our method consistently outperforms the baselines in nearly all few-shot settings, with minimal deviation. This highlights the robustness and generalization of the representations extracted by the PointCG encoder, even in data-limited scenarios.

| Methods | 5-way, 10-shot | 5-way, 20-shot | 10-way, 10-shot | 10-way, 20-shot |

|---|---|---|---|---|

| Transformer [46] | ||||

| Transformer+OcCo [10] | ||||

| Point-BERT [7] | ||||

| Point-MAE [3] | 95.0 3.0 | |||

| PointCG (Point-MAE) | 96.7 2.1 | 98.0 1.3 | 93.1 3.6 | 95.8 2.6 |

| Point-M2AE [8] | 96.8 1.8 | 98.3 1.4 | 92.3 4.5 | 95.0 3.0 |

| PointCG (Point-M2AE) | 97.0 1.9 | 98.4 1.6 | 92.8 3.8 | 95.5 2.9 |

4.3 Part Segmentation

The task of part segmentation aims to predict more fine-grained class labels for every instance. We conduct part segmentation on ShapeNetPart [47], which comprises samples shared by categories, annotated with parts in total. As illustrated in Tab. V, our method achieves competitive results and outperforms others in eleven categories.

| Methods | aero | bag | cap | car | chair | ear | guitar | knife | lamp | laptop | motor | mug | pistol | rocket | skate | table | ||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| plane | phone | bike | board | |||||||||||||||

| Transformer [7] | 83.42 | 85.1 | 82.9 | 85.4 | 87.7 | 78.8 | 90.5 | 80.8 | 91.1 | 87.7 | 85.3 | 95.6 | 73.9 | 94.9 | 83.5 | 61.2 | 74.9 | 80.6 |

| Point-BERT [7] | 84.11 | 85.6 | 84.3 | 84.8 | 88.0 | 79.8 | 91.0 | 81.7 | 91.6 | 87.9 | 85.2 | 95.6 | 75.6 | 94.7 | 84.3 | 63.4 | 76.3 | 81.5 |

| Point-MAE [3] | 84.19 | 86.1 | 84.3 | 85.0 | 88.3 | 80.5 | 91.3 | 78.5 | 92.1 | 87.4 | 86.1 | 96.1 | 75.2 | 94.6 | 84.7 | 63.5 | 77.1 | 82.4 |

| PointCG (Point-MAE) | 84.48 | 86.2 | 84.4 | 86.1 | 88.4 | 80.7 | 91.3 | 81.2 | 91.8 | 88.3 | 85.9 | 95.9 | 75.7 | 94.9 | 85.1 | 63.7 | 76.5 | 81.8 |

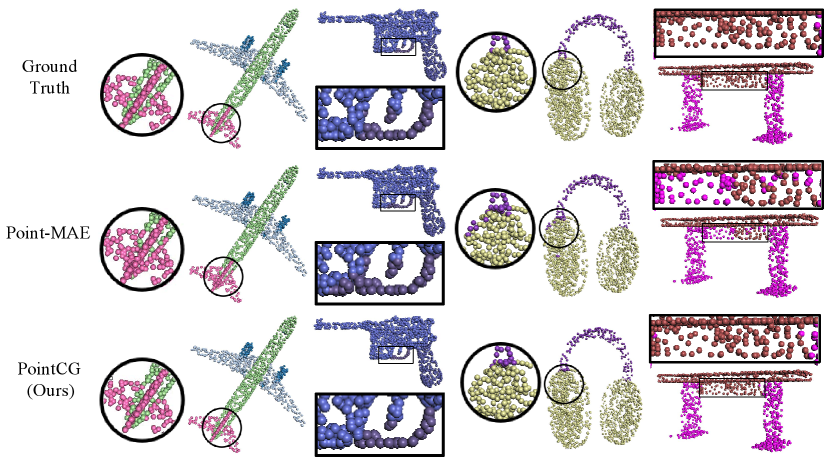

Visualization of part segmentation. Fine-grained part segmentation holds immense practical value. To highlight the clear advantage of our method in this task, we visualize the results and compare them with Point-MAE [3] in Fig. 8.

As depicted in the third line, our method accurately segments the fuselage and tail fin of the airplane, along with the earphone and headband. This reveals the capability of our method to capture discriminative features of points belonging to distinct sections within the same instance.

4.4 Semantic Segmentation

Large-scale indoor datasets introduce more complexities as they cover larger scenes in real-world environments with noise and outliers. We evaluate the performance of our pre-trained model on the 3D semantic segmentation task using the Stanford large-scale 3D Indoor Spaces (S3DIS) [48] dataset. S3DIS includes data from indoor areas, comprising a total of rooms. We fine-tune the pre-trained model with Area 1-5 and evaluate it with Area 6. The results for each category are shown in Tab. VI. Our method outperforms Point-MAE [3] across all categories except ‘beam’ and ‘board’. The results underscore our model’s capability to extract contextual and semantic information, which is crucial for producing fine-grained segmentation outcomes.

| Methods | ceiling | floor | wall | beam | column | window | door | table | chair | sofa | bookcase | board | clutter | |||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Point-MAE [3] | 90.29 | 82.18 | 73.14 | 94.4 | 96.8 | 77.6 | 82.0 | 68.5 | 78.4 | 79.0 | 73.3 | 82.9 | 45.4 | 52.6 | 55.5 | 64.6 |

| PointCG (Point-MAE) | 92.22 | 85.17 | 76.58 | 96.5 | 98.4 | 81.6 | 81.9 | 82.0 | 82.9 | 83.5 | 75.1 | 84.7 | 49.6 | 57.5 | 52.6 | 69.1 |

In reference to the semantic segmentation experiments of STRL [9], we fine-tune our pre-trained model on one area in Area 1-5, followed by evaluation on Area 6. We extend the experiments of Point-MAE [3] based on the pre-trained model released in the official code and present the mean across all class categories and the classification accuracy Acc (%) in Tab. VII. Our model exhibits a significant improvement in accuracy and compared to STRL [9] and Point-MAE [3]. These results demonstrate the capability of our model to extract contextual and semantic information, leading to fine-grained segmentation results.

| Fine-tuning Area | Method | Acc. | mIoU |

|---|---|---|---|

| Area 1 (3687 samples) | STRL [9] | 85.28 | 59.15 |

| Point-MAE [3] | 89.03 | 71.92 | |

| PointCG (Point-MAE) | 90.29 | 74.28 | |

| Area 2 (4440 samples) | STRL [9] | 72.37 | 39.21 |

| Point-MAE [3] | 76.72 | 47.13 | |

| PointCG (Point-MAE) | 78.31 | 48.95 | |

| Area 3 (1650 samples) | STRL [9] | 79.12 | 51.88 |

| Point-MAE [3] | 84.09 | 64.29 | |

| PointCG (Point-MAE) | 85.21 | 65.63 | |

| Area 4 (3662samples) | STRL [9] | 73.81 | 39.28 |

| Point-MAE [3] | 77.34 | 45.15 | |

| PointCG (Point-MAE) | 78.07 | 47.30 | |

| Area 5 (6852 samples) | STRL [9] | 77.28 | 49.53 |

| Point-MAE [3] | 80.56 | 51.46 | |

| PointCG (Point-MAE) | 81.79 | 54.04 |

4.5 Indoor 3D object detection

To validate the effectiveness of our method in scene-level prediction tasks, we conduct object detection experiments on ScanNetV2 [49], a 3D indoor scene dataset with rich annotations, including 1,513 scenes across 18 object classes. The dataset includes semantic labels, per-point instances, and both 2D and 3D bounding boxes. Following TAP [14], we adopt 3DETR [50] as a baseline, construct the PointCG network, and pre-train on the object-level dataset ShapeNet55 [33].

As shown in Tab. VIII, our method demonstrates superior performance compared to both the baseline 3DETR [50] and TAP [14] in both and metrics. This improvement indicates that the encoder trained by PointCG effectively captures discriminative information and generalizes well to complex scenes even when pre-trained with object-level datasets.

| Methods | Pre-T | ||

|---|---|---|---|

| VoteNet | - | 58.6 | 33.5 |

| 3DETR | - | 62.1 | 37.9 |

| 3DETR+TAP | ShapeNet | 63.0(+0.9) | 41.4(+3.5) |

| 3DETR+PointCG | ShapeNet | 63.21(+1.11) | 42.17(+4.27) |

4.6 Ablation Study

To investigate the architectural designs of our method, we conduct comprehensive ablation studies with Point-MAE as the backbone model and elucidate the individual contribution of each module.

Effectiveness of the components. As shown in Tab. IX, we validate the effectiveness of each module by enhancing and replacing modules on the baseline.We adopt Point-MAE [3] for comparison and utilize hidden point completion (HPC) as the baseline (a). In (b), we add the feature alignment module with pre-trained Vit-B/16 [30]. Based on (b), we incorporate the arbitrary-view image generation (AIG) module, constituting our PointCG, denoted as (c). As shown in Tab. IX, while the inclusion of the feature alignment module based on HPC does not substantially improve classification accuracy, the exclusion of this module from PointCG yields a diminished Linear-SVM accuracy of .

| Methods | Linear-SVM. | Acc. | Acc+Vote |

|---|---|---|---|

| Point-MAE (Rep.) [3] | - | 92.22 | 93.21 |

| (a) HPC | 91.15 | 92.76 | 93.47 |

| (b) + Feature Alignment | 91.23 | 92.99 | 93.51 |

| (c) + AIG | 92.26 | 93.52 | 94.03 |

| (d) PointCG (Vit-B/32) | 92.21 | 93.05 | 93.97 |

| (e) PointCG (ResNet50 [51]) | 91.01 | 92.75 | 93.39 |

| (f) PointCG (Grayscale image) | 91.75 | 93.19 | 93.52 |

| (g) PointCG (depth map) | 91.09 | 92.78 | 93.43 |

We further replace Vit-B/16 with Vit-B/32 (d) and ResNet50 [51] (e). While Vit-B/32 outperforms Vit-B/16 in the image domain, it does not improve the accuracy of classification. The model with ResNet50 [51] exhibits comparatively poorer performance.

To assess the impact of color in rendered images, we extract grayscale images from the rendered ones and pre-train with them in (f). This operation leads to a decrease in shape classification. Grayscale images may potentially lose structural or finer details inherent in the original images. In experiment (g), we extract depth maps from point clouds following PointCLIP [52] and pre-train with them instead of rendered images. The classification result shows poor performance.

Training and inference time. Tab. X presents pre-training and inference times for the classification task of each module. The results demonstrate that the pre-training time is notably longer than that of the baseline, whereas the inference time for classification remains comparable.

The pre-training of HPC requires approximately per epoch. Compared to the baseline (Point-MAE), the primary time consumption arises from the data organization module, which is responsible for obtaining visible points from arbitrary views as inputs. Among the components, the 3D completion module has the shortest pre-training duration, while the feature alignment and AIG modules demand considerably more time.

| Methods | Epoch time (s) | Inference time (s) |

|---|---|---|

| Point-MAE (Rep.) [3] | 54.73-57.59 | 36.85 |

| HPC | 395.18-399.26 | 36.65 |

| PointCG W/O AIG | 413.96-419.47 | 37.65 |

| PointCG W/O Feature Alignment | 405.52-411.22 | 35.67 |

| PointCG W/O 3D Completion | 520.97-524.73 | 36.79 |

| PointCG (Point-MAE) | 534.56-538.72 | 37.37 |

Visualization of 3D Completion. To validate the positive impact of AIG on the 3D completion task, we exclude AIG from PointCG (W/O AIG) and pre-train the network. The 3D completion results of this variant and PointCG are shown in Fig. 9. As depicted, the edges of the aircraft wings are sharpened with our method. Without AIG, the completion edges exhibit point groups, attributed to solely relying on 3D completion, while the completion targets are point clusters.

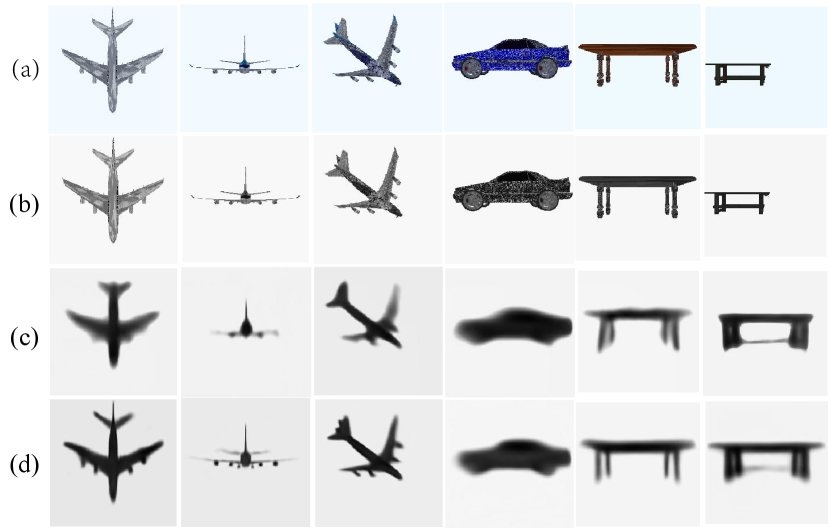

Visualization of the generated images. To validate the provision of geometric structural information by 3D completion, we showcase the results of image generation after excluding the completion module and compare them with PointCG’s results in Fig. 10. As depicted in line (c), outcomes exhibit significant artifacts and lack local structural information. Specifically, in the case of the aircraft tail wing, only the wing’s shape is generated, omitting volumetric structural details. However, our method in line (d) successfully predicts the accurate shapes of the objects.

Camera perspectives. To identify more suitable inputs and predicted images, we design experiments with different camera positions as inputs, and the results are reported in Tab. XI. (a) takes the left-view image as input and the front-view as target, and (b) takes the left-view image as input and an arbitrary-view image as target. In (c), we use images from three different views (front, left, and top views) as inputs and an arbitrary-view image as target. Both the input and target of (d) are from arbitrary views, yielding the optimal results. Therefore, we utilize two arbitrary-view images as the input and target, respectively.

| Input/Prediction | Linear-SVM. | Acc. | Acc+Vote |

|---|---|---|---|

| (a) Left view /front view | 91.13 | 92.66 | 93.31 |

| (b) Left view /arbitrary view | 91.65 | 92.83 | 93.68 |

| (c) Front, left, and top views/arbitrary view | 92.22 | 93.03 | 93.40 |

| (d) Arbitrary view/arbitrary view | 92.26 | 93.52 | 94.03 |

Pre-training with more complete inputs. We posit that if the inputs of 3D completion contain more structural information and more overlap areas with the targets, the completion task will be accomplished more easily. This leads to a reduced training intensity for the backbone, thereby diminishing the backbone’s perception of 3D objects. We design this study based on different inputs. The inputs in (a) are derived from a single arbitrary view. The inputs for (b) and (c) involve the addition of two and eight extra patches, respectively, to the input of (a). The points from two arbitrary views serve as the inputs for (d). Results are reported in Tab. XII.

| Input | Linear-SVM. | Acc. | Acc+Vote |

|---|---|---|---|

| (a) One arbitrary view | 92.26 | 93.52 | 94.03 |

| (b) One arbitrary view + 2 patches | 91.73 | 93.14 | 93.55 |

| (c) One arbitrary view + 8 patches | 91.41 | 92.85 | 93.25 |

| (d) Two arbitrary views | 91.02 | 92.75 | 93.27 |

In cases (b) and (c), the accuracy of the Linear-SVM during pre-training decreases as more patches are included in the inputs. The classification results via fine-tuning also show a decline. In case (d), inputs from two views offer more structural information about the input objects, which leads to a significant decrease in shape classification. This experiment reveals that as additional structural information is progressively included in the input, shape classification accuracy steadily decreases. This indicates that excessive exposure to object structure within the inputs hinders the model’s learning ability.

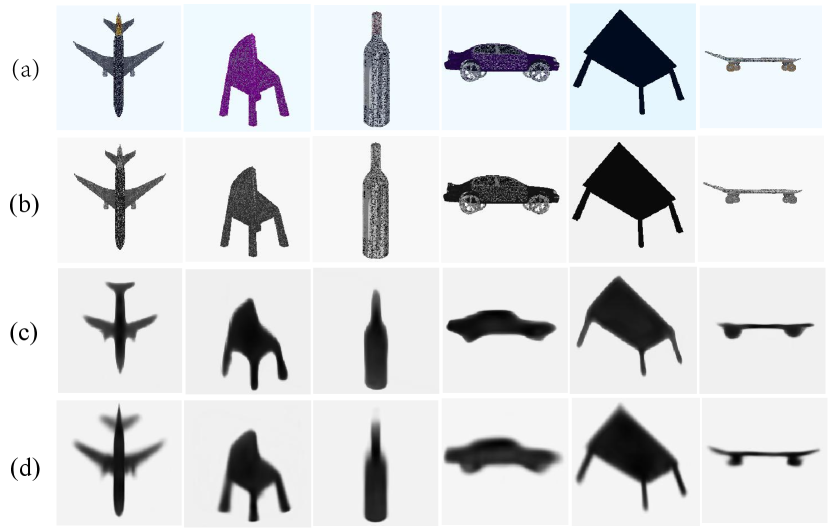

Image generation losses. AIG significantly impacts the backbone’s perception of 3D objects by precise supervision between the ground truth and the generated images. It always affects the quality of the generated images (as shown in Fig. 11). We conduct experiments to examine the performance of various loss functions for supervision, as outlined in Tab. XIII.

| Generation Loss | Linear-SVM | Acc. | Acc+Vote |

|---|---|---|---|

| 90.32 | 93.07 | 93.43 | |

| 91.13 | 92.97 | 93.40 | |

| 91.97 | 93.35 | 93.76 | |

| 91.73 | 93.23 | 93.61 | |

| 91.65 | 93.48 | 93.81 | |

| 92.26 | 93.52 | 94.03 |

The loss penalizes large errors more heavily and is more tolerant of small errors. In contrast, the loss does not excessively penalize large errors. The classification results of the model with the loss yield superior results compared to the loss.

The multi-scale structural similarity (MS_SSIM) index preserves the contrast in high-frequency regions. While is effective in preserving colors and luminance [32], but does not produce quite the same contrast as MS_SSIM. To leverage the benefits of both loss functions, we utilize the combination of them: . However, the classification quality is slightly lower than others. As shown in this table, the model with achieves the best classification results

Visualization of the generated images. To assess a more suitable image generation loss for our model, we visualize the generated images with different losses in Fig. 11. Despite minor artifacts near the edges or corners, our model consistently produces complete and structurally clear images in line (d). This indicates that our model possesses the capability to capture geometric structures and stereoscopic knowledge about 3D objects, as well as the ability to infer the occluded points from arbitrary views.

Qualitative results of 2D image generation. While Figs. 10 and 11 provide visual examples of the generated images, they lack rigorous qualitative results to substantiate any significant improvements over baseline methods in terms of preserving 3D structure in 2D images. To address this, we present Tab. XIV, which provides quantitative evaluations of the generated images under various architectures and image generation losses.

We employ Mean Squared Error (MSE), Structural Similarity index (SSIM), Peak Signal to Noise Ratio (PSNR), and Normalized Mutual Information (NMI) as our primary image evaluation metrics. Clearly, the configuration of PointCG with (d) achieves the best performance across all metrics. By contrast, PointCG without 3D Completion (a) exhibits the poorest results. This indicates that the 3D completion module significantly enhances the quality of the generated images.

| Methods/Generation Loss | MSE | PSNR | SSIM | NMI |

|---|---|---|---|---|

| (a) PointCG W/O 3D Completion | 0.054 | 30.099 | 0.795 | 0.485 |

| (b) | 0.038 | 31.599 | 0.831 | 0.515 |

| (c) | 0.042 | 31.519 | 0.827 | 0.509 |

| (d) | 0.034 | 32.106 | 0.856 | 0.558 |

5 Conclusion

In this paper, we propose PointCG, a unified framework with hidden points completion and arbitrary-view image generation for self-supervised point cloud learning. Completion and generation based on partial points prompt the encoder to extract high-quality representations with 3D structural intricacies and alleviate ambiguous supervision. Thereby, our method achieves notable enhancements over baseline methods and outperforms similar methods in classification and reconstruction tasks on real datasets. We expect our pre-trained models will benefit a wide range of 3D tasks, including 3D object detection, semantic segmentation, and visual grounding.

While performing well across multiple tasks, there is much room for improving PointCG. This includes expanding the modality of images to incorporate other formats such as language or audio. Furthermore, while we focus on instance-level tasks, scene-level understanding is crucial in real-world applications. Therefore, our future studies may involve delving into applications in scene understanding and investigating interactions among multiple modalities for 3D reasoning.

References

- [1] Y. Liu, X. Yan, Z. Li, Z. Chen, Z. Wei, and M. Wei, “Pointgame: Geometrically and adaptively masked autoencoder on point clouds,” IEEE Trans. Geosci. Remote. Sens., vol. 61, pp. 1–12, 2023.

- [2] L. Gu, X. Yan, P. Cui, L. Gong, H. Xie, F. L. Wang, J. Qin, and M. Wei, “PointSee: Image enhances point cloud,” IEEE Trans. Vis. Comput. Graph., vol. 0, no. 0, pp. 1–18, 2023.

- [3] Y. Pang, W. Wang, F. E. H. Tay, W. Liu, Y. Tian, and L. Yuan, “Masked autoencoders for point cloud self-supervised learning,” in Computer Vision - ECCV, 2022, pp. 604–621.

- [4] K. He, X. Chen, S. Xie, Y. Li, P. Dollár, and R. B. Girshick, “Masked autoencoders are scalable vision learners,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 15 979–15 988.

- [5] K. He, H. Fan, Y. Wu, S. Xie, and R. B. Girshick, “Momentum contrast for unsupervised visual representation learning,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 9726–9735.

- [6] X. Chen and K. He, “Exploring simple siamese representation learning,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 15 750–15 758.

- [7] X. Yu, L. Tang, Y. Rao, T. Huang, J. Zhou, and J. Lu, “Point-bert: Pre-training 3d point cloud transformers with masked point modeling,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 19 291–19 300.

- [8] R. Zhang, Z. Guo, P. Gao, R. Fang, B. Zhao, D. Wang, Y. Qiao, and H. Li, “Point-m2ae: Multi-scale masked autoencoders for hierarchical point cloud pre-training,” in Advances in Neural Information Processing Systems, 2022, pp. 27 061–27 074.

- [9] S. Huang, Y. Xie, S. Zhu, and Y. Zhu, “Spatio-temporal self-supervised representation learning for 3d point clouds,” in IEEE/CVF International Conference on Computer Vision, 2021, pp. 6515–6525.

- [10] H. Wang, Q. Liu, X. Yue, J. Lasenby, and M. J. Kusner, “Unsupervised point cloud pre-training via occlusion completion,” in IEEE/CVF International Conference on Computer Vision, 2021, pp. 9762–9772.

- [11] Q. Zhang and J. Hou, “Pointvst: Self-supervised pre-training for 3d point clouds via view-specific point-to-image translation,” IEEE Transactions on Visualization and Computer Graphics, 2023.

- [12] Z. Qi, R. Dong, G. Fan, Z. Ge, X. Zhang, K. Ma, and L. Yi, “Contrast with reconstruct: Contrastive 3d representation learning guided by generative pretraining,” in International Conference on Machine Learning, ICML, ser. Proceedings of Machine Learning Research, vol. 202, 2023, pp. 28 223–28 243.

- [13] J. Jiang, X. Lu, L. Zhao, R. Dazeley, and M. Wang, “Masked autoencoders in 3d point cloud representation learning,” CoRR, vol. abs/2207.01545, 2022.

- [14] Z. Wang, X. Yu, Y. Rao, J. Zhou, and J. Lu, “Take-a-photo: 3d-to-2d generative pre-training of point cloud models,” CoRR, vol. abs/2307.14971, 2023.

- [15] W. Feng, J. Zhang, H. Cai, H. Xu, J. Hou, and H. Bao, “Recurrent multi-view alignment network for unsupervised surface registration,” in IEEE Conference on Computer Vision and Pattern Recognition, CVPR, 2021, pp. 10 297–10 307.

- [16] J. Sauder and B. Sievers, “Self-supervised deep learning on point clouds by reconstructing space,” in NeurIPS, 2019, pp. 12 942–12 952.

- [17] Y. Zeng, Y. Qian, Z. Zhu, J. Hou, H. Yuan, and Y. He, “Corrnet3d: Unsupervised end-to-end learning of dense correspondence for 3d point clouds,” in IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2021, virtual, June 19-25, 2021, 2021, pp. 6052–6061.

- [18] J. Grill, F. Strub, F. Altché, C. Tallec, P. H. Richemond, E. Buchatskaya, C. Doersch, B. Á. Pires, Z. Guo, M. G. Azar, B. Piot, K. Kavukcuoglu, R. Munos, and M. Valko, “Bootstrap your own latent - A new approach to self-supervised learning,” in Advances in Neural Information Processing Systems, 2020, pp. 21 271–21 284.

- [19] M. Caron, H. Touvron, I. Misra, H. Jégou, J. Mairal, P. Bojanowski, and A. Joulin, “Emerging properties in self-supervised vision transformers,” in IEEE/CVF International Conference on Computer Vision, 2021, pp. 9630–9640.

- [20] M. Afham, I. Dissanayake, D. Dissanayake, A. Dharmasiri, K. Thilakarathna, and R. Rodrigo, “Crosspoint: Self-supervised cross-modal contrastive learning for 3d point cloud understanding,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 9892–9902.

- [21] A. Radford, J. Wu, R. Child, D. Luan, D. Amodei, I. Sutskever et al., “Language models are unsupervised multitask learners,” OpenAI blog, vol. 1, no. 8, p. 9, 2019.

- [22] S. Yan, Y. Yang, Y. Guo, H. Pan, P. Wang, X. Tong, Y. Liu, and Q. Huang, “3d feature prediction for masked-autoencoder-based point cloud pretraining,” in The Twelfth International Conference on Learning Representations, ICLR. OpenReview.net, 2024.

- [23] S. Yan, Z. Yang, H. Li, L. Guan, H. Kang, G. Hua, and Q. Huang, “Implicit autoencoder for point cloud self-supervised representation learning,” CoRR, vol. abs/2201.00785, 2022.

- [24] W. StraBer, “Schnelle kurven-und flachendarstellung auf graphischen sichtgeraten,” Ph.D. dissertation, PhD thesis, 1974.

- [25] S. Katz, A. Tal, and R. Basri, “Direct visibility of point sets,” ACM Trans. Graph., vol. 26, no. 3, p. 24, 2007.

- [26] Q. Zhang, J. Hou, and Y. Qian, “Pointmcd: Boosting deep point cloud encoders via multi-view cross-modal distillation for 3d shape recognition,” IEEE Transactions on Multimedia, 2023.

- [27] S. Katz, G. Leifman, and A. Tal, “Mesh segmentation using feature point and core extraction,” Vis. Comput., vol. 21, no. 8-10, pp. 649–658, 2005.

- [28] C. R. Qi, H. Su, K. Mo, and L. J. Guibas, “Pointnet: Deep learning on point sets for 3d classification and segmentation,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2017, pp. 77–85.

- [29] H. Fan, H. Su, and L. J. Guibas, “A point set generation network for 3d object reconstruction from a single image,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2017, pp. 2463–2471.

- [30] A. Radford, J. W. Kim, C. Hallacy, A. Ramesh, G. Goh, S. Agarwal, G. Sastry, A. Askell, P. Mishkin, J. Clark, G. Krueger, and I. Sutskever, “Learning transferable visual models from natural language supervision,” in International Conference on Machine Learning, vol. 139, 2021, pp. 8748–8763.

- [31] S. Cho, S. Ji, J. Hong, S. Jung, and S. Ko, “Rethinking coarse-to-fine approach in single image deblurring,” in IEEE/CVF International Conference on Computer Vision, 2021, pp. 4621–4630.

- [32] H. Zhao, O. Gallo, I. Frosio, and J. Kautz, “Loss functions for image restoration with neural networks,” IEEE Trans. Computational Imaging, vol. 3, no. 1, pp. 47–57, 2017.

- [33] A. X. Chang, T. A. Funkhouser, L. J. Guibas, P. Hanrahan, Q. Huang, Z. Li, S. Savarese, M. Savva, S. Song, H. Su, J. Xiao, L. Yi, and F. Yu, “Shapenet: An information-rich 3d model repository,” CoRR, vol. abs/1512.03012, 2015.

- [34] I. Loshchilov and F. Hutter, “Decoupled weight decay regularization,” in International Conference on Learning Representations, 2019, pp. 1–8.

- [35] ——, “SGDR: stochastic gradient descent with warm restarts,” in 5th International Conference on Learning Representations, ICLR, 2017.

- [36] Z. Wu, S. Song, A. Khosla, F. Yu, L. Zhang, X. Tang, and J. Xiao, “3d shapenets: A deep representation for volumetric shapes,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2015, pp. 1912–1920.

- [37] M. A. Uy, Q. Pham, B. Hua, D. T. Nguyen, and S. Yeung, “Revisiting point cloud classification: A new benchmark dataset and classification model on real-world data,” in IEEE/CVF International Conference on Computer Vision, 2019, pp. 1588–1597.

- [38] Y. Wang, Y. Sun, Z. Liu, S. E. Sarma, M. M. Bronstein, and J. M. Solomon, “Dynamic graph CNN for learning on point clouds,” ACM Trans. Graph., vol. 38, no. 5, pp. 146:1–146:12, 2019.

- [39] Y. Liu, B. Fan, S. Xiang, and C. Pan, “Relation-shape convolutional neural network for point cloud analysis,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 8895–8904.

- [40] H. Zhao, L. Jiang, J. Jia, P. H. S. Torr, and V. Koltun, “Point transformer,” in IEEE/CVF International Conference on Computer Vision, 2021, pp. 16 239–16 248.

- [41] H. Liu, M. Cai, and Y. J. Lee, “Masked discrimination for self-supervised learning on point clouds,” in Computer Vision - ECCV, vol. 13662, 2022, pp. 657–675.

- [42] Z. Guo, X. Li, and P. Heng, “Joint-mae: 2d-3d joint masked autoencoders for 3d point cloud pre-training,” CoRR, vol. abs/2302.14007, 2023.

- [43] Y. Yang, C. Feng, Y. Shen, and D. Tian, “Foldingnet: Point cloud auto-encoder via deep grid deformation,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018, pp. 206–215.

- [44] J. Sauder and B. Sievers, “Self-supervised deep learning on point clouds by reconstructing space,” in Advances in Neural Information Processing Systems, 2019, pp. 12 942–12 952.

- [45] C. Sharma and M. Kaul, “Self-supervised few-shot learning on point clouds,” in Advances in Neural Information Processing Systems, 2020, pp. 7212–7221.

- [46] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, L. Kaiser, and I. Polosukhin, “Attention is all you need,” in Advances in Neural Information Processing Systems, 2017, pp. 5998–6008.

- [47] L. Yi, V. G. Kim, D. Ceylan, I. Shen, M. Yan, H. Su, C. Lu, Q. Huang, A. Sheffer, and L. J. Guibas, “A scalable active framework for region annotation in 3d shape collections,” ACM Trans. Graph., vol. 35, no. 6, pp. 210:1–210:12, 2016.

- [48] I. Armeni, O. Sener, A. R. Zamir, H. Jiang, I. K. Brilakis, M. Fischer, and S. Savarese, “3d semantic parsing of large-scale indoor spaces,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2016, pp. 1534–1543.

- [49] A. Dai, A. X. Chang, M. Savva, M. Halber, T. A. Funkhouser, and M. Nießner, “Scannet: Richly-annotated 3d reconstructions of indoor scenes,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2017, pp. 2432–2443.

- [50] I. Misra, R. Girdhar, and A. Joulin, “An end-to-end transformer model for 3d object detection,” in 2021 IEEE/CVF International Conference on Computer Vision, ICCV. IEEE, 2021, pp. 2886–2897.

- [51] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2016, pp. 770–778.

- [52] R. Zhang, Z. Guo, W. Zhang, K. Li, X. Miao, B. Cui, Y. Qiao, P. Gao, and H. Li, “Pointclip: Point cloud understanding by CLIP,” in IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 8542–8552.