Point process models for sequence detection in high-dimensional neural spike trains

Abstract

Sparse sequences of neural spikes are posited to underlie aspects of working memory [1], motor production [2], and learning [3, 4]. Discovering these sequences in an unsupervised manner is a longstanding problem in statistical neuroscience [5, 6, 7]. Promising recent work [4, 8] utilized a convolutive nonnegative matrix factorization model [9] to tackle this challenge. However, this model requires spike times to be discretized, utilizes a sub-optimal least-squares criterion, and does not provide uncertainty estimates for model predictions or estimated parameters. We address each of these shortcomings by developing a point process model that characterizes fine-scale sequences at the level of individual spikes and represents sequence occurrences as a small number of marked events in continuous time. This ultra-sparse representation of sequence events opens new possibilities for spike train modeling. For example, we introduce learnable time warping parameters to model sequences of varying duration, which have been experimentally observed in neural circuits [10]. We demonstrate these advantages on experimental recordings from songbird higher vocal center and rodent hippocampus.

1 Introduction

Identifying interpretable patterns in multi-electrode recordings is a longstanding and increasingly pressing challenge in neuroscience. Depending on the brain area and behavioral task, the activity of large neural populations may be low-dimensional as quantified by principal components analysis (PCA) or other latent variable models [11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23]. However, many datasets do not conform to these modeling assumptions and instead exhibit high-dimensional behaviors [24]. Neural sequences are an important example of high-dimensional structure: if neurons fire sequentially with no overlap, the resulting dynamics are -dimensional and cannot be efficiently summarized by PCA or other linear dimensionality reduction methods [4]. Such sequences underlie current theories of working memory [1, 25], motor production [2], and memory replay [10]. More generally, neural sequences are seen as flexible building blocks for learning and executing complex neural computations [26, 27, 28].

Prior Work In practice, neural sequences are usually identified in a supervised manner by correlating neural firing with salient sensory cues or behavioral actions. For example, hippocampal place cells fire sequentially when rodents travel down a narrow hallway, and these sequences can be found by averaging spikes times over multiple traversals of the hallway. After identifying this sequence on a behavioral timescale lasting seconds, a template matching procedure can be used to show that these sequences reoccur on compressed timescales during wake [29] and sleep [30]. In other cases, sequences can be identified relative to salient features of the local field potential (LFP; [31, 32]).

Developing unsupervised alternatives that directly extract sequences from multineuronal spike trains would broaden the scope of this research and potentially uncover new sequences that are not linked to behaviors or sensations [33]. Several works have shown preliminary progress in this direction Maboudi et al. [34] proposed fitting a hidden Markov model (HMM) and then identifying sequences from the state transition matrix. Grossberger et al. [35] and van der Meij & Voytek [36] apply clustering to features computed over a sliding window. Others use statistics such as time-lagged correlations to detect sequences and other spatiotemporal patterns in a bottom-up fashion [6, 7].

In this paper, we develop a Bayesian point process modeling generalization of convolutive nonnegative matrix factorization (convNMF; [9]), which was recently used by [8] and [4] to model neural sequences. Briefly, the convNMF model discretizes each neuron’s spike train into a vector of time bins, for neuron , and approximates this collection of vectors as a sum of convolutions, . The model parameters ( and ) are optimized with respect to a least-squares criterion. Each component of the model, indexed by , consists of a neural factor, and a temporal factor, . The neural factor encodes a spatiotemporal pattern of neural activity over time bins (which is hoped to be sequence), while the temporal factor indicates the times at which this pattern of neural activity occurs. A total of motifs or sequence types, each corresponding to a different component, are extracted by this model.

There are several compelling features of this approach. Similar to classical nonnegative matrix factorization [37], and in contrast to clustering methods, convNMF captures sequences with overlapping groups of neurons by an intuitive “parts-based” representation. Indeed, convNMF uncovered overlapping sequences in experimental data from songbird higher vocal center (HVC) [4]. Further, if the same sequence is repeated with different peak firing rates, convNMF can capture this by varying the magnitude of the entries in , unlike many clustering methods. Finally, convNMF efficiently pools statistical power across large populations of neurons to identify sequences even when the correlations between temporally adjacent neurons are noisy—other methods, such as HMMs and bottom-up agglomerative clustering, require reliable pairwise correlations to string together a full sequence.

Our Contributions We propose a point process model for neural sequences (PP-Seq) which extends and generalizes convNMF to continuous time and uses a fully probabilistic Bayesian framework. This enables us to better quantify uncertainty in key parameters—e.g. the overall number of sequences the times at which they occur—and also characterize the data at finer timescales—e.g. whether individual spikes were evoked by a sequence. Most importantly, by achieving an extremely sparse representation of sequence event times, the PP-Seq model enables a variety of model extensions that are not easily incorported into convNMF or other common methods. We explore one such extension that introduces time warping factors to model sequences of varying duration, as is often observed in neural data [10].

Though we focus on applications in neuroscience, our approach could be adapted to other temporal point processes, which are a natural framework to describe data that are collected at irregular intervals (e.g. social media posts, consumer behaviors, and medical records) [38, 39]. We draw a novel connection between Neyman-Scott processes [40], which encompass PP-Seq and other temporal point process models as special cases, and mixture of finite mixture models [63]. Exploiting this insight, we develop innovative Markov chain Monte Carlo (MCMC) methods for PP-Seq.

2 Model

2.1 Point Process Models and Neyman-Scott Processes

Point processes are probabilistic models that generate discrete sets over some continuous space . Each member of the set is called an “event.” A Poisson process [58] is a point process which satisfies three properties. First, the number of events falling within any region is Poisson distributed. Second, there exists a function , called the intensity function, for which equals the expected number of events in . Third, the number of events in non-overlapping regions of are independent. A Poisson process is said to be homogeneous when for some constant . Finally, a marked point process extends the usual definition of a point process to incorporate additional information into each event. A marked point process on generates random tuples , where represents the random location and is the additional “mark” specifying metadata associated with event . See Supplement A for further background details and references.

Neyman-Scott Processes [40] use Poisson processes as building blocks to model clustered data. To simulate a Neyman-Scott process, first sample a set of latent events from an initial Poisson process with intensity function . Thus, the number of latent events is a Poisson-distributed random variable, . Given the latent events, the observed events are drawn from a second Poisson process with conditional intensity function . The nonnegative functions can be thought of as impulse responses—each latent event adds to the rate of observed events, and stochastically generates some number of observed offspring events. Finally, the scalar parameter specifies a “background” rate; thus, if , the observations follow a homogeneous Poisson process with intensity .

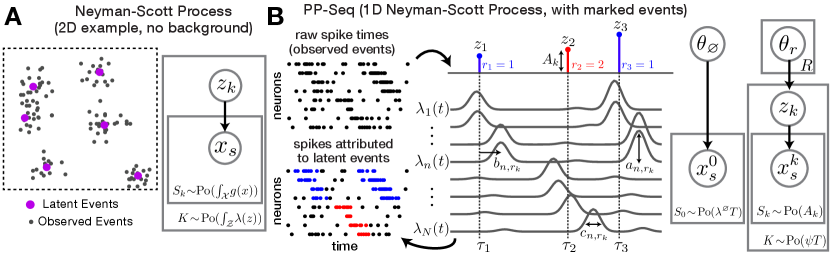

A simple example of a Neyman-Scott Process in is shown in fig. 1A. Latent events (purple dots) are first drawn from a homogenous Poisson process and specify the cluster centroids. Each latent event induces an isotropic Gaussian impulse response. Observed events (gray dots) are then sampled from a second Poisson process, whose intensity function is found by summing all impulse responses.

Importantly, we do not observe the number of latent events nor their locations. As described below, we use probabilistic inference to characterize this unobserved structure. A key idea is to attribute each observed event to a latent cause (either one of the latent events or the background process); these attributions are valid due to the additive nature of the latent intensity functions and the superposition principle of Poisson processes (see Supplement A). From this perspective, inferring the set of latent events is similar to inferring the number and location of clusters in a nonparametric mixture model.

2.2 Point Process Model of Neural Sequences (PP-Seq)

We model neural sequences as a Neyman-Scott process with marked events in a model we call PP-Seq. Consider a dataset with neurons, emitting a total of spikes over a time interval . This can be encoded as a set of marked spike times—the observed events are tuples specifying the time, , and neuron, , of each spike. Sequences correspond to latent events, which are also tuples specifying the time, , type, , and amplitude, of the sequence. The hyperparameter specifies the number of recurring sequence types, which is analogous to the number of components in convNMF.

To draw samples from the PP-Seq model, we first sample sequences (i.e., latent events) from a Poisson process with intensity . Here, sets the rate at which sequences occur within the data, sets the probability of the sequence types, and parameterize a gamma density which models the sequence amplitude, . Note that the number of sequence events is a Poisson-distributed random variable, , where the rate parameter is found by integrating over all sequence types, amplitudes, and times.

Conditioned on the set of sequence events, the firing rate of neuron is given by a sum of nonnegative impulse responses:

| (1) |

We assume these impulse responses vary across neurons and follow a Gaussian form:

| (2) |

where denotes a Gaussian density. The parameters , , and correspond to the weight, latency, and width, respectively, of neurons’ firing rates in sequences of type . Since the firing rate is a sum of non-negative impulse responses, the superposition principle of Poisson processes (see Supplement A) implies that we can view the data as a union of “background” spikes and “induced” spikes from each sequence, justifying the connection to clustering. The expected number of spikes induced by sequence is:

| (3) |

and thus we may view as the amplitude of sequence event .

Figure 1B schematizes a simple case containing sequence events and sequence types. A complete description of the model’s generative process is provided in Supplement B, but it can be summarized by the graphical model in fig. 1B, where we have global parameters with for each sequence type, and for the background process. We place weak priors on each parameter: the neural response weights follow a Dirichlet prior for each sequence type, and follows a normal-inverse-gamma prior for every neuron and sequence type. The background rate, , follows a gamma prior. We set the sequence event rate, , to be a fixed hyperparameter, though this assumption could be relaxed.

Time-warped sequences PP-Seq can be extended to model more diverse sequence patterns by using higher-dimensional marks on the latent sequences. For example, we can model variability in sequence duration by introducing a time warping factor, , to each sequence event and changing eq. 2 to,

| (4) |

This has the effect of linearly compressing or stretching each sequence in time (when or , respectively). Such time warping is commonly observed in neural data [43, 44], and indeed, hippocampal sequences unfold 15-20 times faster during replay than during lived experiences [10]. We characterize this model in Supplement E and demonstrate its utility below.

In principle, it is equally possible to incorporate time warping into discrete time convNMF. However, since convNMF involves a dense temporal factor matrix , the most straightforward extension would be to introduce a time warping factor for each component and each time bin . This results in new trainable parameters, which poses non-trivial challenges in terms of computational efficiency, overfitting, and human interpretability. In contrast, PP-Seq represents sequence events as a set of latent events in continuous time. This ultra-sparse representation of sequence events (since ) naturally lends itself to modeling additional sequence features since this introduces only new parameters.

3 Collapsed Gibbs Sampling for Neyman-Scott Processes

Developing efficient algorithms for parameter inference in Neyman-Scott process models is an area of active research [45, 46, 47, 48]. To address this challenge, we developed a collapsed Gibbs sampling routine for Neyman-Scott processes, which encompasses the PP-Seq model as a special case. The method resembles “Algorithm 3” of [62]—a well-known approach for sampling from a Dirichlet process mixture model—and the collapsed Gibbs sampling algorithm for “mixture of finite mixtures” models developed by [63]. The idea is to partition observed spikes into background spikes and spikes induced by latent sequences, integrating over the sequence times, types, and amplitudes. Starting from an initial partition, the sampler iterates over individual spikes and probabilistically re-assigns them to (a) the background, (b) one of the remaining sequences, or (c) to a new sequence. The number of sequences in the partition, , changes as spikes are removed and re-assigned; thus, the algorithm is able to explore the full trans-dimensional space of partitions.

The re-assignment probabilities are determined by the prior distribution of partitions under the Neyman-Scott process and by the likelihood of the induced spikes assigned to each sequence. We state the conditional probabilities below and provide a full derivation in Supplement D. Let denote the number of sequences in the current partition after spike has been removed from its current assignment. (Note that the number of latent sequences may exceed if some sequences produce zero spikes.) Likewise, let denote the sequence assignment of the -th spike, where indicates assignment to the background process and indicates assignment to one of the latent sequence events. Finally, let denote the spikes in the -th cluster, excluding , and let denote its size. The conditional probability of the partition under the possible assignments of spike are,

| (5) | ||||

| (6) | ||||

| (7) |

The sampling algorithm iterates over all spikes and updates their assignments holding the other spikes’ assignments fixed. The probability of assigning spike to an existing cluster marginalizes the time, type, and amplitude of the sequence, resulting in a collapsed Gibbs sampler [50, 62]. The exact form of the posterior probability and the parameters of the posterior predictive in eq. 6 are given in Supplement C.

After attributing each spike to a latent cause, it is straightforward to draw samples over the remaining model parameters—the latent sequences and global parameters . Given the spikes and assignments , we sample the sequences (i.e. their time, amplitude, types, etc.) from the closed-form conditional . Given the sequences and spikes, we sample the conditional distribution on global parameters . Under conjugate formulations, these updates are straightforward. With these steps, the Markov chain targets the posterior distribution on model parameters and partitions. Complete derivations are in Supplement D.

Improving MCMC mixing times The intensity of sequence amplitudes is proportional to the gamma density , and these hyperparameters affect the mixing time of the Gibbs sampler. Intuitively, if there is little probability of low-amplitude sequences, the sampler is unlikely to create new sequences and is therefore slow to explore different partitions of spikes.111This problem is common to other nonparametric Bayesian mixture models as well [63, e.g.]. If, on the other hand, the variance of is large relative to the mean, then the probability of forming new clusters is non-negligible and the sampler tends to mix more effectively. Unfortunately, this latter regime is also probably of lesser scientific interest, since neural sequences are typically large in amplitude—they can involve many thousands of cells, each potentially contributing a small number of spikes [28, 2].

To address this issue, we propose an annealing procedure to initialize the Markov chain. We fix the mean of and adjust and to slowly lower variance of amplitude distribution. Initially, the sampler produces many small clusters of spikes, and as we lower the variance of to its target value, the Markov chain typically combines these clusters into larger sequences. We further improve performance by interspersing “split-merge” Metropolis-Hastings updates [64, 65] between Gibbs sweeps (see Supplement D.6). Finally, though we have not found it necessary, one could use convNMF to initialize the MCMC algorithm.

Parallel MCMC Resampling the sequence assignments is the primary computational bottleneck for the Gibbs sampler. One pass over the data requires operations, which quickly becomes costly when the operations are serially executed. While this computational cost is manageable for many datasets, we can improve performance substantially by parallelizing the computation [53]. Given processors and a spike train lasting seconds, we divide the dataset into intervals lasting seconds, and allocate one interval per processor. The current global parameters, , are first broadcast to all processors. In parallel, the processors update the sequence assignments for their assigned spikes, and then send back sufficient statistics describing each sequence. After these sufficient statistics are collected on a single processor, the global parameters are re-sampled and then broadcast back to the processors to initiate another iteration. This algorithm introduces some error since clusters are not shared across processors. In essence, this introduces erroneous edge effects if a sequence of spikes is split across two processors. However, these errors are negligible when the sequence length is much less than , which we expect is the practical regime of interest.

4 Experiments

4.1 Cross-Validation and Demonstration of Computational Efficiency

We evaluate model performance by computing the log-likelihood assigned to held-out data. Partitioning the data into training and testing sets must be done somewhat carefully—we cannot withhold time intervals completely (as in fig. 2A, top) or else the model will not accurately predict latent sequences occurring in these intervals; likewise, we cannot withhold individual neurons completely (as in fig. 2A, middle) or else the model will not accurately predict the response parameters of those held out cells. Thus, we adopt a “speckled” holdout strategy [54] as diagrammed at the bottom of fig. 2A. We treat held-out spikes as missing data and sample them as part of the MCMC algorithm. (Their conditional distribution is given by the PP-Seq generative model.) This approach involving a speckled holdout pattern and multiple imputation of missing data may be viewed as a continuous time extension of the methods proposed by [27] for convNMF.

Panels B-E in fig. 2 show the results of this cross-validation scheme on a synthetic dataset with sequence types. The predictions of the model in held-out test regions closely match the ground truth—missing spikes are reliably imputed when they are part of a sequence (fig. 2C). Further, the likelihood of the train and test sets improves over the course of MCMC sampling (fig. 2D), and can be used as a metric for model comparison—in agreement with the ground truth, test performance plateaus for models containing greater than sequence types (fig. 2E).

Finally, to be of practical utility, the algorithm needs to run in a reasonable amount of time. Figure 2G shows that our Julia [55] implementation can fit a recording of 120 hippocamapal neurons with hundreds of thousands of spikes in a matter of minutes, on a 2017 MacBook Pro (3.1 GHz Intel Core i7, 4 cores, 16 GB RAM). Run-time grows linearly with the number of spikes, as expected, but even with a single thread it only takes six minutes to perform 1000 Gibbs sweeps on a 15-minute recording with spikes. With parallel MCMC, this laptop performs the same number of sweeps in under two minutes. Our open-source implementation is available at:

4.2 Zebra Finch Higher Vocal Center (HVC)

We first applied PP-Seq to a recording of HVC premotor neurons in a zebra finch,222These data are available at http://github.com/FeeLab/seqNMF; originally published in [4]. which generate sequences that are time-locked to syllables in the bird’s courtship song. Figure 3A qualitatively compares the performance of convNMF and PP-Seq. The raw data (top panel) shows no visible spike patterns; however, clear sequences are revealed by sorting the neurons lexographically by preferred sequence type and the temporal offset parameter inferred by PP-Seq. While both models extract similar sequences, PP-Seq provides a finer scale annotation of the final result, providing, for example, attributions at the level of individual spikes to sequences (bottom left of fig. 3A).

Further, PP-Seq can quantify uncertainty in key parameters by considering the full sequence of MCMC samples. Figure 3B summarizes uncertainty in the total number of sequence events, i.e. , over three independent MCMC chains with different random seeds—all chains converge to similar estimates; the uncertainty is largely due to the rapid sequences (in blue) shown in panel A. Figure 3C displays a symmetric matrix where element corresponds to the probability that spike and spike are attributed to same sequence. Finally, fig. 3D-E shows the amplitude and offset for each neuron’s sequence-evoked response with 95% posterior credible intervals. These results naturally fall out of the probabilistic construction of the PP-Seq model, but have no obvious analogue in convNMF.

4.3 Time Warping Extension and Robustness to Noise

Songbird HVC is a specialized circuit that generates unusually clean and easy-to-detect sequences. To compare the robustness of PP-Seq and convNMF under more challenging circumstances, we created a simple synthetic dataset with sequence type and neurons. We varied four parameters to manipulate the difficulty of sequence extraction: the rate of background spikes, (“additive noise,” fig. 4A), the expected value of sequence amplitudes, (“participation noise”, fig. 4B), the expected variance of the Gaussian impulse responses, (“jitter noise”, fig. 4C), and, finally, the maximal time warping coefficient (see eq. 4; fig. 4D). All simulated datasets involved sequences with low spike counts ( spikes). In this regime, the Poisson likelihood criterion used by PP-Seq is better matched to the statistics of the spike train. Since convNMF optimizes an alternative loss function (squared error instead of Poisson likelihood) we compared the models by their ability to extract the ground truth sequence event times. Using area under reciever operating characteriztic (ROC) curves as a performance metric (see Supplement F.1), we see favorable results for PP-Seq as noise levels are increased.

We demonstrate the abilities of time-warped PP-Seq further in fig. 4E-F. Here, we show a synthetic dataset containing sequences with 9-fold variability in their duration, which is similar to levels observed in some experimental systems [10]. While convNMF fails to reconstruct many of these warped sequences, the PP-Seq model identifies sequences that are closely matched to ground truth.

4.4 Rodent Hippocampal Sequences

Finally, we tested PP-Seq and its time warping variant on a hippocampal recording in a rat making repeated runs down a linear track.333These data are available at http://crcns.org/data-sets/hc/hc-11; originally published in [56]. This dataset is larger ( minutes, 137,482) and contains less stereotyped sequences than the songbird data. From prior work [56], we expect to see two sequences with overlapping populations of neurons, corresponding to the two running directions on the track. PP-Seq reveals these expected sequences in an unsupervised manner—i.e. without reference to the rat’s position—as shown in fig. 5A-C.

We performed a large cross-validation sweep over 2,000 random hyperparameter settings for this dataset (see Supplement F.2). This confirmed that models with sequence performed well in terms of heldout performance (fig. 5D). Interestingly, despite variability in running speeds, this same analysis did not show a consistent benefit to including larger time warping factors into the model (fig. 5E). Higher performing models were characterized by larger sequence amplitudes, i.e. larger values of , and smaller background rates, i.e. smaller values of . Other parameters had less pronounced effects on performance. Overall, these results demonstrate that PP-Seq can be fruitfully applied to large-scale and “messy” neural datasets, that hyperparameters can be tuned by cross-validation, and that the unsupervised learning of neural sequences conforms to existing scientific understanding gained via supervised methods.

5 Conclusion

We proposed a point process model (PP-Seq) inspired by convolutive NMF [9, 8, 4] to identify neural sequences. Both models approximate neural activity as the sum of nonnegative features, drawn from a fixed number of spatiotemporal motif types. Unlike convNMF, PP-Seq restricts each motif to be a true sequence—the impulse responses are Gaussian and hence unimodal. Further, PP-Seq is formulated in a probabilistic framework that better quantifies uncertainty (see fig. 3) and handles low firing rate regimes (see fig. 4). Finally, PP-Seq produces an extremely sparse representation of sequence events in continuous time, opening the door to a variety of model extensions including the introduction of time warping (see fig. 4F), as well as other possibilities like truncated sequences and “clusterless” observations [57], which could be explored in future work.

Despite these benefits, fitting PP-Seq involves a tackling a challenging trans-dimensional inference problem inherent to Neyman-Scott point processes. We took several important steps towards overcoming this challenge by connecting these models to a more general class of Bayesian mixture models [63], developing and parallelizing a collapsed Gibbs sampler, and devising an annealed sampling approach to promote fast mixing. These innovations are sufficient to fit PP-Seq on datasets containing hundreds of thousands of spikes in just a few minutes on a modern laptop.

Acknowledgements

A.H.W. received funding support from the National Institutes of Health BRAIN initiative (1F32MH122998-01), and the Wu Tsai Stanford Neurosciences Institute Interdisciplinary Scholar Program. S.W.L. was supported by grants from the Simons Collaboration on the Global Brain (SCGB 697092) and the NIH BRAIN Initiative (U19NS113201 and R01NS113119). We thank the Stanford Research Computing Center for providing computational resources and support that contributed to these research results.

Broader Impact

Understanding neural computations in biological systems and ultimately the human brain is a grand and long-term challenge with broad implications for human health and society. The field of neuroscience is still taking early and incremental steps towards this goal. Our work develops a general-purpose, unsupervised method for identifying an important structure—neural sequences—which have been observed in a variety of experimental datasets and have been studied extensively by theorists. This work will serve to advance this growing understanding by providing new analytical tools for neuroscientists. We foresee no immediate impacts, positive or negative, concerning the general public.

References

- [1] Mark S Goldman “Memory without feedback in a neural network” In Neuron 61.4, 2009, pp. 621–634

- [2] Richard H R Hahnloser, Alexay A Kozhevnikov and Michale S Fee “An ultra-sparse code underlies the generation of neural sequences in a songbird” In Nature 419.6902, 2002, pp. 65–70

- [3] Howard Eichenbaum “Time cells in the hippocampus: a new dimension for mapping memories” In Nat. Rev. Neurosci. 15.11 nature.com, 2014, pp. 732–744

- [4] Emily L Mackevicius, Andrew H Bahle, Alex H Williams, Shijie Gu, Natalia I Denisenko, Mark S Goldman and Michale S Fee “Unsupervised discovery of temporal sequences in high-dimensional datasets, with applications to neuroscience” In Elife 8, 2019

- [5] Moshe Abeles and Itay Gat “Detecting precise firing sequences in experimental data” In J. Neurosci. Methods 107.1-2 Elsevier, 2001, pp. 141–154

- [6] Eleonora Russo and Daniel Durstewitz “Cell assemblies at multiple time scales with arbitrary lag constellations” In Elife 6 elifesciences.org, 2017

- [7] Pietro Quaglio, Vahid Rostami, Emiliano Torre and Sonja Grün “Methods for identification of spike patterns in massively parallel spike trains” In Biol. Cybern. 112.1-2, 2018, pp. 57–80

- [8] Sven Peter, Elke Kirschbaum, Martin Both, Lee Campbell, Brandon Harvey, Conor Heins, Daniel Durstewitz, Ferran Diego and Fred A Hamprecht “Sparse convolutional coding for neuronal assembly detection” In Advances in Neural Information Processing Systems 30 Curran Associates, Inc., 2017, pp. 3675–3685

- [9] Paris Smaragdis “Convolutive speech bases and their application to supervised speech separation” In IEEE Trans. Audio Speech Lang. Processing ieeexplore.ieee.org, 2006

- [10] Thomas J Davidson, Fabian Kloosterman and Matthew A Wilson “Hippocampal replay of extended experience” In Neuron 63.4, 2009, pp. 497–507

- [11] Anne C Smith and Emery N Brown “Estimating a state-space model from point process observations” In Neural Computation 15.5 MIT Press, 2003, pp. 965–991

- [12] K L Briggman, H D I Abarbanel and W B Kristan “Optical imaging of neuronal populations during decision-making” In Science 307.5711 science.sciencemag.org, 2005, pp. 896–901

- [13] Byron M Yu, John P Cunningham, Gopal Santhanam, Stephen I Ryu, Krishna V Shenoy and Maneesh Sahani “Gaussian-process factor analysis for low-dimensional single-trial analysis of neural population activity” In J. Neurophysiol. 102.1, 2009, pp. 614–635

- [14] Liam Paninski, Yashar Ahmadian, Daniel Gil Ferreira, Shinsuke Koyama, Kamiar Rahnama Rad, Michael Vidne, Joshua Vogelstein and Wei Wu “A new look at state-space models for neural data” In Journal of Computational Neuroscience 29.1-2 Springer, 2010, pp. 107–126

- [15] Jakob H Macke, Lars Buesing, John P Cunningham, M Yu Byron, Krishna V Shenoy and Maneesh Sahani “Empirical models of spiking in neural populations” In Advances in Neural Information Processing Systems, 2011, pp. 1350–1358

- [16] David Pfau, Eftychios A Pnevmatikakis and Liam Paninski “Robust learning of low-dimensional dynamics from large neural ensembles” In Advances in Neural Information Processing Systems, 2013, pp. 2391–2399

- [17] Peiran Gao and Surya Ganguli “On simplicity and complexity in the brave new world of large-scale neuroscience” In Curr. Opin. Neurobiol. 32, 2015, pp. 148–155

- [18] Yuanjun Gao, Evan Archer, Liam Paninski and John P Cunningham “Linear dynamical neural population models through nonlinear embeddings”, 2016 arXiv:1605.08454 [q-bio.NC]

- [19] Yuan Zhao and Il Memming Park “Variational Latent Gaussian Process for Recovering Single-Trial Dynamics from Population Spike Trains” In Neural Comput. 29.5 MIT Press, 2017, pp. 1293–1316

- [20] Anqi Wu, Stan Pashkovski, Sandeep R Datta and Jonathan W Pillow “Learning a latent manifold of odor representations from neural responses in piriform cortex” In Advances in Neural Information Processing Systems 31 Curran Associates, Inc., 2018, pp. 5378–5388

- [21] Alex H Williams, Tony Hyun Kim, Forea Wang, Saurabh Vyas, Stephen I Ryu, Krishna V Shenoy, Mark Schnitzer, Tamara G Kolda and Surya Ganguli “Unsupervised discovery of demixed, low-dimensional neural dynamics across multiple timescales through tensor component analysis” In Neuron 98.6, 2018, pp. 1099–1115.e8

- [22] Scott Linderman, Annika Nichols, David Blei, Manuel Zimmer and Liam Paninski “Hierarchical recurrent state space models reveal discrete and continuous dynamics of neural activity in C. elegans” In bioRxiv, 2019, pp. 621540

- [23] Lea Duncker, Gergo Bohner, Julien Boussard and Maneesh Sahani “Learning interpretable continuous-time models of latent stochastic dynamical systems” In Proceedings of the 36th International Conference on Machine Learning 97, Proceedings of Machine Learning Research Long Beach, California, USA: PMLR, 2019, pp. 1726–1734

- [24] Carsen Stringer, Marius Pachitariu, Nicholas Steinmetz, Matteo Carandini and Kenneth D Harris “High-dimensional geometry of population responses in visual cortex” In Nature 571.7765, 2019, pp. 361–365

- [25] Christopher D Harvey, Philip Coen and David W Tank “Choice-specific sequences in parietal cortex during a virtual-navigation decision task” In Nature 484.7392, 2012, pp. 62–68

- [26] Mikhail I Rabinovich, Ramón Huerta, Pablo Varona and Valentin S Afraimovich “Generation and reshaping of sequences in neural systems” In Biol. Cybern. 95.6 Springer, 2006, pp. 519–536

- [27] Emily Lambert Mackevicius and Michale Sean Fee “Building a state space for song learning” In Curr. Opin. Neurobiol. 49 Elsevier, 2018, pp. 59–68

- [28] György Buzsáki and David Tingley “Space and time: the hippocampus as a sequence generator” In Trends Cogn. Sci. 22.10, 2018, pp. 853–869

- [29] Eva Pastalkova, Vladimir Itskov, Asohan Amarasingham and György Buzsáki “Internally generated cell assembly sequences in the rat hippocampus” In Science 321.5894, 2008, pp. 1322–1327

- [30] Daoyun Ji and Matthew A Wilson “Coordinated memory replay in the visual cortex and hippocampus during sleep” In Nat. Neurosci. 10.1 nature.com, 2007, pp. 100–107

- [31] Hannah R Joo and Loren M Frank “The hippocampal sharp wave-ripple in memory retrieval for immediate use and consolidation” In Nat. Rev. Neurosci. 19.12, 2018, pp. 744–757

- [32] Wei Xu, Felipe Carvalho and Andrew Jackson “Sequential neural activity in primary motor cortex during sleep” In J. Neurosci. 39.19, 2019, pp. 3698–3712

- [33] David Tingley and Adrien Peyrache “On the methods for reactivation and replay analysis” In Philos. Trans. R. Soc. Lond. B Biol. Sci. 375.1799 royalsocietypublishing.org, 2020, pp. 20190231

- [34] Kourosh Maboudi, Etienne Ackermann, Laurel Watkins Jong, Brad E Pfeiffer, David Foster, Kamran Diba and Caleb Kemere “Uncovering temporal structure in hippocampal output patterns” In Elife 7, 2018

- [35] Lukas Grossberger, Francesco P Battaglia and Martin Vinck “Unsupervised clustering of temporal patterns in high-dimensional neuronal ensembles using a novel dissimilarity measure” In PLoS Comput. Biol. 14.7, 2018, pp. e1006283

- [36] Roemer Meij and Bradley Voytek “Uncovering neuronal networks defined by consistent between-neuron spike timing from neuronal spike recordings” In eNeuro 5.3 ncbi.nlm.nih.gov, 2018

- [37] Daniel D Lee and H Sebastian Seung “Learning the parts of objects by non-negative matrix factorization” In Nature 401.6755, 1999, pp. 788–791

- [38] Jesper Moller and Rasmus Plenge Waagepetersen “Statistical Inference and Simulation for Spatial Point Processes” Taylor & Francis, 2003

- [39] Isabel Valera Manuel Gomez Rodriguez “Learning with Temporal Point Processes”, Tutorial at ICML, 2018

- [40] Jerzy Neyman and Elizabeth L Scott “Statistical approach to problems of cosmology” In J. R. Stat. Soc. Series B Stat. Methodol. 20.1, 1958, pp. 1–29

- [41] Jeffrey W Miller and Matthew T Harrison “Mixture models with a prior on the number of components” In J. Am. Stat. Assoc. 113.521, 2018, pp. 340–356

- [42] John Frank Charles Kingman “Poisson processes” Clarendon Press, 2002

- [43] Lea Duncker and Maneesh Sahani “Temporal alignment and latent Gaussian process factor inference in population spike trains” In Advances in Neural Information Processing Systems 31 Curran Associates, Inc., 2018, pp. 10445–10455

- [44] Alex H Williams, Ben Poole, Niru Maheswaranathan, Ashesh K Dhawale, Tucker Fisher, Christopher D Wilson, David H Brann, Eric M Trautmann, Stephen Ryu, Roman Shusterman, Dmitry Rinberg, Bence P Ölveczky, Krishna V Shenoy and Surya Ganguli “Discovering precise temporal patterns in large-scale neural recordings through robust and interpretable time warping” In Neuron 105.2, 2020, pp. 246–259.e8

- [45] Ushio Tanaka, Yosihiko Ogata and Dietrich Stoyan “Parameter estimation and model selection for Neyman-Scott point processes” In Biometrical Journal: Journal of Mathematical Methods in Biosciences 50.1 Wiley Online Library, 2008, pp. 43–57

- [46] Ushio Tanaka and Yosihiko Ogata “Identification and estimation of superposed Neyman–Scott spatial cluster processes” In Ann. Inst. Stat. Math. 66.4, 2014, pp. 687–702

- [47] Jiří Kopecký and Tomáš Mrkvička “On the Bayesian estimation for the stationary Neyman-Scott point processes” In Appl. Math. 61.4, 2016, pp. 503–514

- [48] Yosihiko Ogata “Cluster analysis of spatial point patterns: posterior distribution of parents inferred from offspring” In Japanese Journal of Statistics and Data Science, 2019

- [49] Radford M Neal “Markov chain sampling methods for Dirichlet process mixture models” In J. Comput. Graph. Stat. 9.2 Taylor & Francis, 2000, pp. 249–265

- [50] Jun S Liu, Wing Hung Wong and Augustine Kong “Covariance structure of the Gibbs sampler with applications to the comparisons of estimators and augmentation schemes” In Biometrika 81.1 Oxford Academic, 1994, pp. 27–40

- [51] Sonia Jain and Radford M Neal “A split-merge Markov chain Monte Carlo procedure for the Dirichlet process mixture model” In J. Comput. Graph. Stat. 13.1 Taylor & Francis, 2004, pp. 158–182

- [52] Sonia Jain and Radford M Neal “Splitting and merging components of a nonconjugate Dirichlet process mixture model” In Bayesian Anal. 2.3 International Society for Bayesian Analysis, 2007, pp. 445–472

- [53] Elaine Angelino, Matthew James Johnson and Ryan P Adams “Patterns of scalable Bayesian inference” In Foundations and Trends® in Machine Learning 9.2-3 Now Publishers, 2016, pp. 119–247

- [54] Svante Wold “Cross-validatory estimation of the number of components in factor and principal components models” In Technometrics 20.4 [Taylor & Francis, Ltd., American Statistical Association, American Society for Quality], 1978, pp. 397–405

- [55] Jeff Bezanson, Alan Edelman, Stefan Karpinski and Viral B Shah “Julia: A fresh approach to numerical computing” In SIAM review 59.1 SIAM, 2017, pp. 65–98

- [56] Andres D Grosmark and György Buzsáki “Diversity in neural firing dynamics supports both rigid and learned hippocampal sequences” In Science 351.6280, 2016, pp. 1440–1443

- [57] Xinyi Deng, Daniel F Liu, Kenneth Kay, Loren M Frank and Uri T Eden “Clusterless decoding of position from multiunit activity using a marked point process filter” In Neural computation 27.7 MIT Press, 2015, pp. 1438–1460

References

- [58] John Frank Charles Kingman “Poisson processes” Clarendon Press, 2002

- [59] D J Daley and D Vere-Jones “An Introduction to the Theory of Point Processes: Volume I: Elementary Theory and Methods” Springer, New York, NY, 2003

- [60] Andrew Gelman, John B Carlin, Hal S Stern, David B Dunson, Aki Vehtari and Donald B Rubin “Bayesian data analysis” CRC press, 2013

- [61] Kevin P Murphy “Machine learning: a probabilistic perspective” MIT press, 2012

- [62] Radford M Neal “Markov chain sampling methods for Dirichlet process mixture models” In J. Comput. Graph. Stat. 9.2 Taylor & Francis, 2000, pp. 249–265

- [63] Jeffrey W Miller and Matthew T Harrison “Mixture models with a prior on the number of components” In J. Am. Stat. Assoc. 113.521, 2018, pp. 340–356

- [64] Sonia Jain and Radford M Neal “A split-merge Markov chain Monte Carlo procedure for the Dirichlet process mixture model” In J. Comput. Graph. Stat. 13.1 Taylor & Francis, 2004, pp. 158–182

- [65] Sonia Jain and Radford M Neal “Splitting and merging components of a nonconjugate Dirichlet process mixture model” In Bayesian Anal. 2.3 International Society for Bayesian Analysis, 2007, pp. 445–472

[]

Supplementary Material: Point process models for sequence detection in high-dimensional spike trains

Appendix A Background

This section describes some basic properties and characteristics of Poisson processes that are relevant to understand the PP-Seq model. See [58] for a targeted introduction to Poisson processes and [59] for a broader introduction to point processes.

Definition of a Poisson Process — A point process model satisfying the following three properties is called a Poisson process.

-

(i)

The number of events falling within any region of the sample space is a random variable following a Poisson distribution. For a region we denote the number of events occuring in as .

-

(ii)

There exists a function , called the intensity function, which satisfies for every region .

-

(iii)

For any two non-overlapping regions and , and are independent random variables.

We write to denote that a set of events is distributed according to a Poisson process with intensity function .

Marked Poisson Processes — A marked Poisson process extends the above definition to sets of events marked with additional information. For example, we write to denote a marked Poisson process where is the “mark” associated with each event. Note that the domain of the intensity function is extended to . To pick a concrete example of interest, the events could represent spike times, in which case would be an interval of the real line, e.g. , representing the recorded period. Further, would be a discrete set of neuron labels, e.g. for a recording of cells.

Likelihood function — Given a set of events in the likelihood of this set, with respect to Poisson process is:

| (8) |

Sampling — Given an intensity function and a sample space , we can simulate data from a Poisson process by first sampling the number of events:

| (9) |

and then sampling the location of each event according to the normalized intensity function. That is, for , we independently sample the events according to:

| (10) |

Superposition Principle — The union of independent Poisson processes with nonnegative intensity functions is a Poisson process with intensity function .

As a consequence, we can sample from a complicated Poisson process by first breaking it down into a series of simpler Poisson processes, drawing independent samples from these simpler processes, and taking the union of all events to achieve a sample from the original Poisson process. We exploit this property in section B.1 to draw samples from the PP-Seq model.

Appendix B The PP-Seq Model

B.1 Generative Process

Recall the generative model described in the main text. Let denote the -th latent sequence, which consists of a time , type , and amplitude . Extensions of the model introduce further properties like time warping factors for each latent sequence. The latent events (i.e. instantiations of a sequence) are sampled from a Poisson process,

| (11) | ||||

| (12) |

Note that this is a homogeneous Poisson process since the intensity function does not depend on time. Conditioned on the latent events, the observed spikes are then sampled from a second, inhomogeneous, Poisson process,

| (13) | ||||

| (14) | ||||

| (15) |

In the main text we abbreviate , , and .

Observe that the firing rates are sums of non-negative intensity functions. We can use the superposition principle of Poisson processes (see appendix A) to write the observed spikes as the union of spikes generated by the background and by each of the latent events.

| (16) | ||||||

| for | (17) | |||||

| (18) | ||||||

where denotes the number of spikes generated by the -th component, with denoting the background spikes.

Next, note that each of the constituent Poisson processes intensity functions are simple, in that the normalized intensities correspond to simple densities. In the case of the background, the normalized intensity is categorical in the neuron indices and uniform in time. The impulse responses, once normalized, are categorical in the neuron indices and conditionally Gaussian in time. We use these facts to sample the Poisson processes as described in Appendix A.

The final sampling procedure for PP-Seq is as follows. First sample the global parameters,

| (19) | |||||

| (20) | |||||

| (21) | |||||

| for | (22) | ||||

| for | (23) | ||||

| (24) | |||||

Then sample the latent events,

| (25) | |||||

| for | (26) | ||||

| for | (27) | ||||

| (28) | |||||

Next sample the background spikes,

| (29) | |||||

| for | (30) | ||||

| for | (31) | ||||

Note that we have decomposed each neuron’s background firing rate as the product: . Finally, sample the induced spikes in each sequence,

| for | (32) | ||||

| for ; for | (33) | ||||

| for ; for | (34) |

The hyperparameters of the model are , and the global parameters consist of , where denotes parameters related to the background spiking process and denotes parameters associated with each sequence type for . The resulting dataset is the union of background and induced spikes, .

B.2 Expected Sequence Sizes

As described in the main text, each sequence type induces a different pattern of activation across the neural population. In particular, each neuron’s response is modeled by a scaled normal distribution described by three variables—the amplitude (), the mean (), and the variance ().

We can interpret as the temporal offset or delay for neuron , while sets the width of the response. They are drawn from a normal-inverse-chi-squared distribution, which is a standard choice for the conjugate prior when inferring the mean and variance of a univariate normal distribution. Refer to [60] (section 3.3) and [61] (section 4.6.3.7) for appropriate background material.

We can interpret as the expected number of spikes emitted by neuron for a sequence of type with unit amplitude (i.e., when ). By sampling from a symmetric Dirichlet prior, we introduce the constraint that , which avoids a degeneracy in the amplitude parameters over neurons () and sequences (). As a consequence, we can interpret as the expected number of spikes evoked by latent event over the full neural population:

| (35) |

This approximation ignores boundary effects, but it is justified when and ; i.e. when the sequences are short relative to the interval . This condition will generally be satisfied.

B.3 Log Likelihood

Given a set of latent events and sequence type parameters , we can evaluate the likelihood of the dataset as:

| (36) |

where terms and respectively correspond to the logarithms of and appearing in Equation 8.

Appendix C Complete Gibbs Sampling Algorithm

In this section we describe the collapsed Gibbs sampling routine for PP-Seq in detail. It adapts “Algorithm 3” of [62] and the extension by [63] to draw approximate samples from the model posterior. To do this, we introduce auxiliary parent variables for every spike. These variables denote assignments of each spike to of the latent sequence events, for some , or to the background process, . Let denote the set of spikes assigned to sequence under parent assignments . Similar assignment variables are used in standard Gibbs sampling routines for finite mixture models (see, e.g., section 24.2.4 in [61]) and for Dirichlet Process Mixture (DPM) models (see [62]; section 25.2.4 in [61]). In these contexts, each may be thought of as a sequence assignment or indicator variable. In the present context of the PP-Seq model, the attributions of spikes to latent events follows from the superposition principle of Poisson processes (see appendix A), which allows us to decompose each neuron’s firing rate function into a sum of simple functions (in this case, univariate Gaussians) and thus derive efficient Gibbs sampler updates.

The Gibbs sampling routine is summarized in Algorithm 1. While the approach closely mirrors existing work by [62, 63] there are a couple notable differences. First, the background spikes in the PP-Seq model do not have an obvious counterpart in these prior algorithms, and this feature of the model renders the partition of datapoints only partially exchangeable (intuitively, the set of datapoints defined by is treated differently from all other groups). Second, the factors and in the collapsed Gibbs updates are specific to the special form of the PP-Seq model as a Neyman-Scott process; for example, in the analogous Gibbs sampling routine for DPMs, is replaced with , and is replaced with the concentration parameter of the Dirichlet process.

Appendix D Derivations

D.1 Prior Distribution Over Partitions

First we derive the prior distribution on the partition of spikes under a Neyman-Scott process. Technically, we derive the prior distribution on spike indices, , not yet including the spike times and neurons. A partition of indices is a set of disjoint, non-empty sets whose union is . We represent the partition as , where contains the indices of spikes assigned to sequence , with denoting the background spikes. Here, denotes the number of non-empty sequences in the partition, and denotes the number of spikes assigned to the -th sequence. Likewise, denotes the number of spikes assigned to the background.

Proposition 1.

The prior probability of the partition, integrating over the latent event parameters, is,

| (37) |

where and are the hyperparameters of the gamma intensity on amplitudes, is the expected number of background events, and

| (38) |

Proof.

Our derivation roughly follows that of [63] for mixture of finite mixture models, but we adapt it to Neyman-Scott processes. The major difference is that the distribution above is over partitions of random size. First, return to the generative process described in Section B.1 and integrate over the latent event amplitudes to obtain the marginal distribution on sequence sizes given the total number of sequences . We have,

| (39) | ||||

| (40) | ||||

| (41) | ||||

| (42) |

Let denote a set of sequence assignments. There are parent assignments consistent with the sequence sizes , and they are all equally likely under the prior, so the conditional probability of the parent assignments is,

| (43) | ||||

| (44) |

The parent assignments above induce a partition, but technically they assume a particular ordering of the latent events. Moreover, if some of the latent events fail to produce any observed events, they will not be included in the partition. In performing a change of variables from parent assignments to partitions, we need to sum over latent event assignments that produce the same partition. There are such assignments if there are latent events but only partitions. Thus,

| (45) |

Clearly, must be at least in order to produce the partition.

Finally, we sum over the number of latent events to obtain the marginal probability of the partition,

| (46) | ||||

| (47) |

where

| (48) |

∎

D.2 Marginal Likelihood of Spikes in a Partition

Now we combine the prior on partitions of spike indices with a likelihood of spikes under that partition. To match the notation in the main text and Appendix C, let denote a partition of the spikes where denote the set of spikes in sequence . In this section we derive the marginal probability of spikes in a sequence, integrating out the sequence time and type. For the background spikes, there are no parameters to integrate and the marginal probability is simply,

| (49) | ||||

| (50) |

For the spikes attributed to a latent event, however, we have to marginalize over the type and time of the event. We have,

| (51) | ||||

| (52) | ||||

| (53) |

Let , and . Then,

| (54) | ||||

| (55) | ||||

| (56) |

where

| (57) |

is the normalizing constant of a Gaussian density in information form with precision and linear coefficient . For a sequence with a single spike, this reduces to,

| (58) |

The approximations above are due to the truncated integral over rather than the whole real line. In practice, this truncation is negligible for sequences far from the boundaries of the interval.

D.3 Collapsed Gibbs Sampling the Partitions

The conditional distribution on partitions given data and model parameters is given by,

| (59) | ||||

| (60) |

Let denote the partition with spike removed. Though it is slightly inconsistent with the proof in Section D.1, let denote the assignment of spike , as in the main text. Moreover, let denote the size of the -th sequence in the partition, not including spike . We now consider the relative probability of the full partition when is added to each one of the possible parts: the background, an existing sequence, or a new sequence.

For the background assignment,

| (61) | ||||

| (62) | ||||

| (63) | ||||

| (64) |

By a similar process, we arrive at the conditional probabilities of adding a spike to an existing sequence,

| (65) | ||||

| (66) |

The predictive likelihood can be obtained via the ratio of marginal likelihoods derived above, or by explicitly calculating the categorical posterior distribution on types and then the categorical and Gaussian posterior predictive distributions of and , respectively, given the type and the other spikes. The latter approach is what is presented in the main text.

Finally, the conditional probability of assigning a spike to a new sequence simplifies as,

| (67) | ||||

| (68) | ||||

| (69) | ||||

| (70) | ||||

| (71) |

D.4 Gibbs Sampling the Latent Event Parameters

Given the partition and global parameters, it is straightforward to sample the latent events. In fact, most of the calculations were derived in Section D.2. The conditional distribution of the event type is,

| (72) | ||||

| (73) |

where, again, the approximation comes from truncating the integral to the range , and is negligible in our cases.

Given the type, the latent event time is conditionally Gaussian,

| (74) | ||||

| (75) | ||||

| (76) |

where

| (77) | ||||

| (78) |

Finally, the amplitude is independent of the type and time, and its conditional distribution is given by,

| (79) | ||||

| (80) |

D.5 Gibbs Sampling the Global Parameters

Finally, the Gibbs updates of the global parameters are simple due to their conjugate priors.

Background rates

The conditional distribution of the background rates is,

| (81) | ||||

| (82) |

Sequence type probabilities

The conditional distribution of the sequence type probability vector is,

| (83) | ||||

| (84) |

Neuron weights for each sequence type

The conditional distribution of the neuron weight vector is,

| (85) | ||||

| (86) | ||||

| (87) |

Neuron widths and delays

The conditional distribution of the neuron delays and widths is,

| (88) | ||||

| (89) |

where

| (90) | ||||

| (91) | ||||

| (92) | ||||

| (93) | ||||

| (94) |

D.6 Split-Merge Sampling Moves

As discussed in the main text, when sequences containing of a small number of spikes are unlikely under the prior, Gibbs sampling can be slow to mix over the posterior of spike partitions since the probability of forming new sequences (i.e. singleton clusters) is very low. In addition to the annealed sampling approach outlined in the main text, we adapted the split-merge sampling method proposed by [64] for Dirichlet process mixture models to PP-Seq.444See also [65] for the case of non-conjugate priors. For simplicity, we implemented randomized split-merge proposals—i.e. without the additional restricted Gibbs sweeps described proposed in [64]. In practice, we found that these random proposals were sufficient for the sampler to accept both split and merge moves at a satisfactorily high rate.

Briefly, our method starts by randomly choosing two spikes and that are not assigned to the background partition and satisfy for some user-specified time window . One could set , in which case all pairs of spikes not assigned to the background are considerd; however, we will see that this will generally result in proposals that are extremely likely to be rejected, so it is advisable to set to be an upper bound on the expected sequence length to encourage faster mixing. After identifying the pair of spikes, we propose to either merge their sequences (if ), or, if they belong to the same sequence () we propose to form two new sequences, each containing one of the two spikes, the remaining spikes are randomly assigned with equal probability.

Given the proposed split or merge move, , the Metropolis-Hastings acceptance probability is:

| (95) |

where is the proposal density, and is given in eqs. 59 and 60. The sampling method then directly follows [64]. The ratio of proposal probabilities for split moves is:

| (96) |

And the ratio for merge moves is:

| (97) |

Appendix E Time-Warped Sequences

To account for sequences that unfold at different speeds, we incorporate a time-warping component into the generative model. Each sequence is endowed with a warp value, which is drawn from a discrete distribution,

| (98) |

where and are the probabilities of the corresponding warp values . In this paper, we set

| (99) | ||||

| (100) |

The hyperparameters include the number of warp values , the maximum warp value , and the variance of the warp probabilities . We use an odd number of warp values so that .

The warp value changes the distribution of spike times in the corresponding sequence by scaling the mean and standard deviation,

| (101) |

Note that one could also choose to linearly scale the variance rather than the standard deviation, as in a warped random walk. Ultimately, this is a subjective modeling choice, and scaling the standard deviation leads to slightly easier Gibbs updates later on.

We can equivalently endow each sequence with a latent warp index where and,

| (102) |

Since there are only a finite number of warp values, we can treat the warp index and the sequence type as a single discrete latent variable . This formulation allows us to re-use all of the collapsed Gibbs updates derived above, replacing our inferences over with inferences of . Computationally, this increases the run-time by a factor of .

Given the sequence types, times, and warp values, the conditional distribution of the neuron delays and widths is,

| (103) | ||||

| (104) | ||||

| (105) |

where

| (106) | ||||

| (107) | ||||

| (108) | ||||

| (109) | ||||

| (110) | ||||

| (111) |

If instead you choose to linearly scale the variances, as mentioned above, the conditional distribution of the delays and widths is no longer inverse . However, it is straightforward to sample the delays and widths one at a time from their univariate conditional distributions.

Appendix F Supplemental Details on Experiments

F.1 ROC curve comparison of convNMF and PP-Seq

Simulated datasets consisted of neurons, time units, sequence type, sequence event rate , a default average background rate of , default settings of and . Further, we set and so that neuron offsets had unit standard deviation and small jitter by default. For the case of “jitter noise” we modified but continued to set to preserve the expected distribution of neuron offsets.

For each synthetic dataset, we fit convNMF models with component and a time bin size of 0.2 time units. We optimized using alternating projected gradient descent with a backtracking line search. Each convNMF model produced a temporal factor (where is the total number of time bins). We encoded the ground truth sequence times in a binary vector of length and computed ROC curves by thresholing over a fine grid of values over the range . We consider each timebin, indexed by , a false positive if exceeds the threshold, but no ground truth sequence was present in the timebin. Likewise, a timebin is a true positive when exceeds the threshold and a sequence event did occur in this timebin.

We repeated a similar analysis with 100 (approximate) samples from the PP-Seq posterior distribution. Specifically, we discretized the sampled sequence event times, i.e. for every sample, into the same time bins used by convNMF. For every timebin we computed the empirical probability that it contained a sampled event from the model, averaging over MCMC samples. This resulted in a discretized, nonnegative temporal factor that is directly analogous to the factor produced convNMF. We compute an ROC curve by the same method outlined above.

Unfortunately, both convNMF and PP-Seq parameters are only weakly identifiable. For example, in PP-Seq, one can add a constant to all latent event times, , and subtract the same constant from all neuron offsets . This manipulation does not modify the likelihood of the data (it does, however, affect the probability of the parameters under the prior, and so we say the model is “weakly identifiable”). The result of this is that both PP-Seq and convNMF may consistently misestimate the “true” sequence times by a small constant of time bins. To discount this nuisance source of model error we repeated the above analysis on shifted copies of the temporal factor (up to 20 time bins in each direction); we then selected the optimal ROC curve for each model and computed the area under the curve to quantify accuracy.

F.2 Hyperparameter sweep on hippocampal data

We performed MCMC inference on 2000 randomly sampled hyperparameter sets, masking out a random 7.5% of the data as a validation set on every run. We fixed the following hyperparameter values: , , for every neuron and sequence type, for every neuron and sequence type. We also fixed the following hyperparameters, pertaining to time warping: , . We randomized the number of sequence types uniformly. We randomized the maximum warp value uniformly on (1, 1.5). We randomized the mean sequence amplitude, , log-uniformly over the interval , and set the variance of the sequence amplitude equal to the mean. We randomized the expected total background amplitude, , log-uniformly over the interval and set the variance of this parameter equal to its mean. We randomized the sequence rate log-uniformly over . Finally, we randomized the neuron response widths log-uniformly over .

We randomly initialized all spike assignments either with convNMF or the annealed MCMC procedure outlined in the main text (we observed successful outcomes in both cases). In annealed runs, we set an initial temperature of 500 and exponentially relaxed the temperature to one over 20 stages, each containing 100 Gibbs sweeps. (Recall that this temperature parameter scales the variance of while preserving the mean of this prior distribution.) After annealing was completed we performed 100 final Gibbs sweeps over the data, the final half of which were used as approximate samples from the posterior. In this final stage of sampling, we also performed 1000 randomized split-merge Metropolis Hastings moves after every Gibbs sweep.

All MCMC runs were performed using our Julia implementation of PP-Seq, run in parallel on the Sherlock cluster at Stanford University. Each job was allocated 1 CPU and 8 GB of RAM.