Point Cloud Recognition with Position-to-Structure Attention Transformers

Abstract

In this paper, we present Position-to-Structure Attention Transformers (PS-Former), a Transformer-based algorithm for 3D point cloud recognition. PS-Former deals with the challenge in 3D point cloud representation where points are not positioned in a fixed grid structure and have limited feature description (only 3D coordinates () for scattered points). Existing Transformer-based architectures in this domain often require a pre-specified feature engineering step to extract point features. Here, we introduce two new aspects in PS-Former: 1) a learnable condensation layer that performs point downsampling and feature extraction; and 2) a Position-to-Structure Attention mechanism that recursively enriches the structural information with the position attention branch. Compared with the competing methods, while being generic with less heuristics feature designs, PS-Former demonstrates competitive experimental results on three 3D point cloud tasks including classification, part segmentation, and scene segmentation.

1 Introduction

3D point cloud recognition is an active research area in computer vision that has gained steady progress in the past years [32, 24, 34, 46, 13]. The availability of large-scale 3D datasets [44] and real-world applications [15] in autonomous driving [4, 30], computer graphics [31], and 3D scene understanding [16] make the task of 3D point cloud recognition increasingly important.

|

Amongst recent 3D shape representations for deep-learning based recognition tasks, including meshes [11], voxels (or volumetric grid) [42], and implicit functions [5], the point cloud representation [32] remains a viable choice to represent 3D shapes due to its flexibility and effectiveness in computation and modeling. However, adopting the point cloud representation in downstream tasks poses some special challenges: 1) unlike the voxel-based volumetric representation that has a fixed grid-structure where 3D convolutions [42] can be readily applied, point clouds are basically collections of scattered points that hold no order; 2) each sample point carries only the 3D coordinate information () without rich explicit feature descriptions. A good understanding about the overall shape, as well as the object parts represented by point clouds depends on extracting the “correct” and “informative” features of the individual points through their relations with the neighboring points (context).

The main challenge: a chicken-and-egg problem. Point clouds come in as scattered points without known connections and relations. Point cloud recognition is a chicken-and-egg problem: a rich feature description benefits from the robust extraction of the point structure relations (local graphs), whereas creating a reliable local graph also depends on an informative feature description for the points. In a nutshell, the local graph building and feature extraction processes are tightly coupled in 3D point cloud recognition, which is the central issue we are combating here.

Transformers [39] are emerging machine learning models that have undergone exploding development in natural language processing [8] and computer vision [3, 9]. Unlike Convolutional Neural Networks [22] that operate in the fixed image lattice space, the attention mechanism in the Transformers [39] include the positional embeddings in the individual tokens that themselves are already orderless. This makes Transformers a viable representation and computation framework for 3D point cloud recognition. Using the vanilla Transformers on point clouds [39, 9] under the standard self-attention mechanism, however, leads to a sub-optimal solution (see ablation study in section 5.1). The weighted sum mechanism learns an average of the tokens which are not ideal for structure extraction. Existing works like PCT[13] have a special non-learnable feature engineering step that pre-extracts features for each sampled point.

Given these challenges, we propose in this paper a new point cloud recognition method, Position-to-Structure Attention TransFormer (PS-Former), that consists of two interesting properties:

-

1.

A learnable Condensation Layer that performs point cloud downsampling and feature extraction automatically. Unlike the Transformer-based PCT approach [13], where a fixed strategy using farthest point sampling and a feature engineering process using KNN grouping are adopted, we extract structural features by utilizing the internal self-attention matrix for computing the point relations.

-

2.

A Position-to-Structure Attention mechanism that recursively enriches the structure information using the position attention branch. This is different from the standard cross-attention mechanism where two working branches cross attend each other in a symmetric way. An illustration can be found in Figure 4.

We conduct experiments on three main point cloud recognition tasks including ModelNet40 classification[44], ShapeNet part segmentation[51], and 3SDIS scene segmentation[1], to evaluate the effectiveness of our proposed model. ModelNet40 classification requires the recognition of the entire input point cloud while the latter two focus on single point labeling. PS-Former is shown to be able to achieve competitive results when compared with state-of-the-art methods, and improve over the PCT method [13] that computes the features before the attention layer by grouping them based on the original 3D space distance.

2 Related Work

3D Point Cloud Recognition. Due to the point cloud’s non-grid data structure, a number of works have been proposed in the past by first converting the points to a grid data structure e.g. 2D images or 3D voxels[37, 12]. The grid data after this pre-processing can be directly learned by CNN-like structure [22]. However, this conversion process from point clouds to volumetric data may lead to information loss e.g. occlusion in the 2D images and resolution bottleneck in the 3D voxels.

The seminal work of PointNet [32] chose to perform learning directly on the original point cloud data in which max pooling operation is adopted to retain invariance in the point sets. Since the work of PointNet [32], there has been a wealthy body of methods proposed along this direction. There are methods [38, 43, 47] attempting to simulate the convolution process in 2D images whereas other approaches [40, 48] adopt graph convolutional neural networks (GCN) to build connections for the neighboring points for feature extraction.

Transformer Architecture. A notable recent development in natural language processing is the invention and widespread adoption of the Transformer architectures [39, 7]. At the core, Transformers [39] model the relations among tokens with two attention mechanisms, namely self-attention and cross-attention. Another important component of Transformers is the positional encoding that embeds the position information into the tokens, relaxing the requirement to maintain the order for the input data. Recently, Transformers have also been successfully adopted in image classification [9] and object detection [3]. Typically, vision transformers adopt similar absolute/relative positional encoding strategies used in language transformers [39, 36] to encode grid structures from 2D images. In 3D point cloud tasks, the input point cloud data only contains the 3D coordinates without any texture information, which makes the computation from position to structure feature extraction a challenging task. In point cloud recognition, Transformers were adopted in [46]; PCT [13] applies sampling and grouping operations introduced by PointNet++[34] to capture the structure information.

Compared to existing 3D point cloud recognition methods [28, 24, 41, 45], our proposed PS-Former model consists of learnable components that are generic and easy to adapt. Versus the competing Transformer based approaches such as PCT [13], PS-Former (1) removes the non-learnable feature engineering stage with a Condensation Layer and (2) incorporates newly designed Position-to-Structure Attention that learns informative features from point positions and their neighboring structures.

3 Method

In this section, we present our proposed model, Position-to-Structure Transformers (PS-Former). We first give an overview of our model, followed by a description of the two key components of our model: Position-Structure Attention Layer and Condensation Layer.

|

3.1 Overview

Our proposed PS-Former model is a Transformer-based architecture, as seen in Figure 2. The overall goal of the PS-Former is to learn an effective representation for downstream tasks such as classification and segmentation. The input is coordinate data which will first go through an input embedding module to project the data from the low-dimension space to the high-dimension space. Two self-attention layers are adopted to make points interact with each other to obtain more developed features before condensation. Following are our two key designs: Condensation Layer and Position-to-Structure Attention Layer. The features are concatenated together for further recognition tasks.

3.2 Attention Mechanism

The vanilla attention mechanism [39] is as follows:

| (1) |

where and are query and key respectively, is the feature dimensions and is the final attention matrix. However, in the 3D space of points, a more meaningful way to consider the correlation between points is the euclidean distance instead of the dot product. Thus, we introduce a new attention mechanism based on euclidean distance in our model. We also keep this characteristic in the high dimensional space as well to facilitate an easier learning process. Moreover, we use a similar normalization method as PCT[13] and we’ll see later in the Condensation Layer (section 3.3) it will be a key design for our algorithm. Our final attention equation is as following:

| (2) | |||

| (3) |

3.3 Condensation Layer

A common challenge in point cloud recognition is exploiting rich local and global structures. Methods such as PointNet++[34] and PCT[13] have adopted a combination of sampling—Farthest Point Sampling (FPS)—and grouping methods to tackle this difficulty. Our approach extracts the local structures through a two-step process based entirely on attention: sampling and feature construction. The generation of these features through attention create a much richer and more focused local neighborhood context as compared to the more handcrafted approach of PCT and PointNet++. Furthermore, the proposed method proves to be a natural integration into the Transformer network. The Condensation Layer also compresses information for less computational complexity. The schema of the Condensation Layer can be viewed in Figure 3.

|

We select points from the point cloud to serve as center points of local neighborhoods. The traditional Farthest Point Sampling strategy does not identify the most important neighborhoods because its main objective, to maximize inter-point distance, does not motivate meaningful center points. Since attention is a learned measure of affinity and an integral part to the Transformer, we sample the center points based on the row sum of the attention matrix. We apply the softmax operation in columns at first, which can be understood as each point’s contribution to each other. The row sum can be seen as how much information each point will take. We make the choice of the center points based on this computed row sum. Note that we don’t simply choose the top-k points with the largest sum since it will likely cause points to crowd together. The detailed algorithm is shown in Algorithm 1.

After we get the center points, we use the strategy which will be described in section 3.4 to obtain the structure features for each point from the relative position features. Moreover, the position features are updated using the vanilla attention mechanism.

Input: Masked Attention: , : the number of points, : the number of selected points, : input points

Output: : sampled points

3.4 Position-to-Structure Attention Layer

|

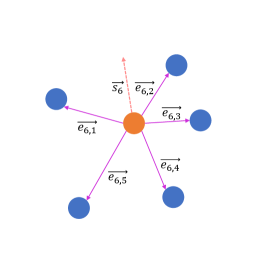

Our key design is to decouple the position and structure information and allow the position feature to gradually enrich the structure feature. To achieve this, we design the Position-to-Structure Attention Layer as presented in Figure 4. The structure information is acquired using the relative position features which can be seen as the neighborhood structure features around one specific point. In our Position-to-Structure Attention Layer, there are three main processes to update the features which can be seen in Figure 5. Each point’s position feature is updated through the weighted sum along with its structure feature, while the relative position feature is also used to enrich the structure feature.

|

|

|

| (a) position to position | (b) position to structure | (c) structure to structure |

In particular, the attention matrix is computed by both the position features and the structure features as following:

| (4) | ||||

| (5) |

Both the position features and structure features are updated through attention while structure features are also influenced by top-k related points according to the attention matrix.

| (6) | ||||

| (7) |

where are mapping functions: , is the concatenate operation, is the position features for each point, is the structure features for each point and is the features gathered using the relative position features.

4 Experiments

In this section, we evaluate our proposed model in three point cloud recognition tasks: point cloud classification on ModelNet40[44], point cloud part segmentation on ShapeNet[51] and indoor scene segmentation on Stanford 3D Indoor Space (S3DIS)[1].

4.1 Classification on ModelNet40

Dataset and implementation. A primary assay for point cloud classification, ModelNet40[44] is a 3D point cloud dataset composed of 12,311 CAD models from 40 different object classes. To maintain consistency with other studies, the same train-test split is used as the given dataset: 9,843 training samples and 2,468 testing samples. In processing the data, we randomly select 1,024 points, as many other methods do. It is important to note that we discard information regarding surface normals and solely use the 3D coordinates. Following PointNet[32] convention, we augment the training data with random translation, scaling and dropout. While testing, augmentation is omitted and the test set is evaluated without any voting strategy. The model is trained with the Adam optimizer [18] with its learning rate and weight decay both set at 1e-4.

| Model | Input | # Points | Accuracy (%) |

|---|---|---|---|

| VoxNet [28] | v | - | 85.9 |

| Subvolume [33] | v | - | 89.2 |

| PointNet [32] | pc | 1k | 89.2 |

| PAT [50] | pc | 1k | 91.7 |

| Kd-Net [19] | pc | 32k | 91.8 |

| PointNet++ [34] | pc | 5k | 91.9 |

| PointCNN [24] | pc | 1k | 92.5 |

| DGCNN [41] | pc | 1k | 92.9 |

| InterpCNN [27] | pc | 1k | 93.0 |

| GeoCNN [20] | pc | 1k | 93.4 |

| PAConv [47] | pc | 1k | 93.6 |

| RPNet [35] | pc | 1k | 94.1 |

| CurveNet [45] | pc | 1k | 93.8 |

| A-SCN [46] | pc | 1k | 89.8 |

| Point Transformer [10] | pc | 1k | 92.8 |

| Cloud Transformers [29] | pc | 1k | 93.1 |

| PCT [13] | pc | 1k | 93.2 |

| PointTransformer [54] | pc | 1k | 93.7 |

| PS-Former (Ours) | pc | 1k | 93.9 |

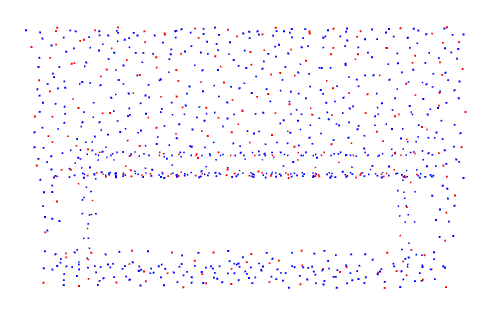

Results and analysis. We achieved a competitive result on the ModelNet40 dataset, as seen in Table 1. The best overall accuracy achieved by the PS-Former without the assistance of normals or voting ensembles was 93.9%. For the experiment, we investigated the results of our novel Condensation Layer (shown in Figure 6). We observe that our Condensation Layer identifies points of interests much more commonly in central areas, while the Farthest Point Sampling (FPS) strategy identifies points in an even distribution in the point cloud. We hypothesize that our Condensation Layer favors more information rich centers that hold high attention values with respect to its neighboring points; the Farthest Point Sampling technique likely produces an even collection of points due to it’s objective to maximize point-to-point distance. With intuitively chosen attention-based centers, our model is able to outperform FPS-based methods such as PointNet++ and PCT. Also, with the novel Position-to-Structure Attention and Condensation Layer, our PS-Former outperforms other Transformer-based methods.

|

|

|

|

|

|

|

|

4.2 Part Segmentation on ShapeNet

Dataset and implementation. We further evaluate our model on ShapeNet[51] for part segmentation. It contains 16,881 shapes with 14,007 as training samples and the remaining 2,874 as testing samples. The dataset contains 50 object parts in total with each object containing no more than 6 object parts. 2048 points are sampled for each point cloud and all the points are assigned with a part label. To classify each point into the correct label, our Condensation Layer’s output token number is set to the input token number which in this case is 2048. After the Position-to-Structure Attention layers, we concatenate the node feature with the whole data feature which is obtained using max pooling as in ModelNet40 classification task. To reduce the computation, in this experiment, we only use the structure feature since this task is more focused on point-wise classification and structure information is more important in this case. We use SGD as our optimizer with the initial learning rate set to 0.1 and the weight decay set to 1e-4. Cosine Annealing Scheduler is used to adjust the learning rate with the minimal learning rate set to 1e-3 [26].

| Method | mIOU | aero | bag | cap | car | chair | ear | guitar | knife | lamp | lap | motor | mug | pistol | rocket | skate | table |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| PointNet [32] | 83.7 | 83.4 | 78.7 | 82.5 | 74.9 | 89.6 | 73.0 | 91.5 | 85.9 | 80.8 | 95.3 | 65.2 | 93.0 | 81.2 | 57.9 | 72.8 | 80.6 |

| SO-Net [23] | 84.9 | 82.8 | 77.8 | 88.0 | 77.3 | 90.6 | 73.5 | 90.7 | 83.9 | 82.8 | 94.8 | 69.1 | 94.2 | 80.9 | 53.1 | 72.9 | 83.0 |

| PointNet++ [34] | 85.1 | 82.4 | 79.0 | 87.7 | 77.3 | 90.8 | 71.8 | 91.0 | 85.9 | 83.7 | 95.3 | 71.6 | 94.1 | 81.3 | 58.7 | 76.4 | 82.6 |

| SynSpecCNN [52] | 84.7 | 81.6 | 81.7 | 81.9 | 75.2 | 90.2 | 74.9 | 93.0 | 86.1 | 84.7 | 95.6 | 66.7 | 92.7 | 81.6 | 60.6 | 82.9 | 82.1 |

| PCNN [2] | 85.1 | 82.4 | 80.1 | 85.5 | 79.5 | 90.8 | 73.2 | 91.3 | 86.0 | 85.0 | 95.7 | 73.2 | 94.8 | 83.3 | 51.0 | 75.0 | 81.8 |

| SpiderCNN [49] | 85.3 | 83.5 | 81.0 | 87.2 | 77.5 | 90.7 | 76.8 | 91.1 | 87.3 | 83.3 | 95.8 | 70.2 | 93.5 | 82.7 | 59.7 | 75.8 | 82.8 |

| PointCNN [24] | 86.1 | 84.1 | 86.5 | 86.0 | 80.8 | 90.6 | 79.7 | 92.3 | 88.4 | 85.3 | 96.1 | 77.2 | 95.3 | 84.2 | 64.2 | 80.0 | 83.0 |

| Point2Seq [25] | 85.2 | 82.6 | 81.8 | 87.5 | 77.3 | 90.8 | 77.1 | 91.1 | 86.9 | 83.9 | 95.7 | 70.8 | 94.6 | 79.3 | 58.1 | 75.2 | 82.8 |

| RS-CNN [14] | 86.2 | 83.5 | 84.8 | 88.8 | 79.6 | 91.2 | 81.1 | 91.6 | 88.4 | 86.0 | 96.0 | 73.7 | 94.1 | 83.4 | 60.5 | 77.7 | 83.6 |

| KPConv [38] | 86.4 | 84.6 | 86.3 | 87.2 | 81.1 | 91.1 | 77.8 | 92.6 | 88.4 | 82.7 | 96.2 | 78.1 | 95.8 | 85.4 | 69.0 | 82.0 | 83.6 |

| 3D-GCN [6] | 85.1 | 83.1 | 84.0 | 86.6 | 77.5 | 90.3 | 74.1 | 90.0 | 86.4 | 83.8 | 95.6 | 66.8 | 94.8 | 81.3 | 59.6 | 75.7 | 82.8 |

| DGCNN [41] | 85.2 | 84.0 | 83.4 | 86.7 | 77.8 | 90.6 | 74.7 | 91.2 | 87.5 | 82.8 | 95.7 | 66.3 | 94.9 | 81.1 | 63.5 | 74.5 | 82.6 |

| PAConv [47] | 86.1 | 84.3 | 85.0 | 90.4 | 79.7 | 90.6 | 80.8 | 92.0 | 88.7 | 82.2 | 95.9 | 73.9 | 94.7 | 84.7 | 65.9 | 81.4 | 84.0 |

| PCT [13] | 86.4 | 85.0 | 82.4 | 89.0 | 81.2 | 91.9 | 71.5 | 91.3 | 88.1 | 86.3 | 95.8 | 64.6 | 95.8 | 83.6 | 62.2 | 77.6 | 83.7 |

| PS-Former (ours) | 86.4 | 85.2 | 81.3 | 86.1 | 81.4 | 91.6 | 72.0 | 92.3 | 88.4 | 85.5 | 96.2 | 73.5 | 95.3 | 83.7 | 61.7 | 76.9 | 82.9 |

4.3 Scene Segmentation on S3DIS

|

|

|

|

|

|

|

|

Dataset and implementation. We also use S3DIS[1] to evaluate our model on scene segmentation. The dataset contains 6 areas and 271 rooms in total. Each data sample contains 4,096 points with each point assigned with a label from 13 classes. Different from ModelNet40 classification and ShapeNet part segmentation, the input of each point contains 9-dimensional features including coordinate, RGB and normal vector information. Due to this change, our model removes subtraction in the gather operation in the Condensation Layer since we want to keep the color and norm information in each point’s structure information while keeping the position information to continue gradually enriching the structure information through the Position-to-Structure Attention Layer. In our training-testing procedure, the training dataset is all the areas except area 5 which leaves area 5 to be the testing dataset. The same optimizer and learning schedule are used as part segmentation which is SGD with the initial learning to 0.1 and Cosine Annealing Scheduler[26].

Results and analysis. The mAcc and mIou of the test dataset are presented in Table 3. Note that since our model is trying to decouple the position and structure features and gradually enrich the structure features through the Position-to-Structure Attention Layer, this task which includes RGB and normal vector input as well as position input is not a direct fit for our task. However, it can still benefit from it given by our results. Furthermore, we present several visualization results on Area 5 in Figure 8.

| Model | mAcc | mIoU |

|---|---|---|

| PointNet [32] | 48.98 | 41.09 |

| PCNN [2] | 67.01 | 58.27 |

| PointCNN [24] | 63.86 | 57.26 |

| SPG [21] | 66.50 | 58.04 |

| HPEIN [17] | 68.30 | 61.85 |

| DGCNN [41] | 84.10 | 56.10 |

| KPConv [38] | 72.8 | 67.1 |

| PointWeb [53] | 66.64 | 60.28 |

| PointTransformer [54] | 76.5 | 70.4 |

| PCT [13] | 67.65 | 61.33 |

| PS-Former (ours) | 68.57 | 59.36 |

5 Ablation Studies

In this section we explore the effects and impact of the proposed components of the PS-Former—Position-to-Structure Attention, Condensation Layer—and performances of other model variations through ablations evaluated on ModelNet40 classification. Training parameters and testing measures are kept consistent with the description in Section 4.1.

5.1 Position-to-Structure Attention

We analyze the importance of PS-Former’s awareness and distinction between position information and structure information by altering its use and access to such features, as reported in Table 4. We conduct two ablations: 1) removing structure features completely, 2) combining structure features with position features. For the first ablation, the position-only features are fed through vanilla attention. Input dimension is doubled to keep attention dimensions and modeling capability constant. We observe that our model with the proposed Position-to-Structure Attention exhibits a +1.8% improvement over a position-only attention model. The decrease in performance for the first ablation likely comes from the loss of information on local structures. In the second ablation, the structure features are computed once and concatenated with position features to be passed through classical attention. Compared to the ablation, our proposed method performs +1.1% better. The results of the two ablations not only suggest the importance of structure features but also of an awareness of the distinction between structure and position.

5.2 Condensation Layer

In an effort to demonstrate the Condensation Layer’s performance improvements, we conduct ablations on the number of points sampled. As shown in Table 5, our proposed model with the Condensation Layer configured to 512 points performed better than 1024 points, suggesting the efficacy of our attention-based point sampling in creating information rich local structures. This observation coincides with our hypothesis made in Section 4.1. Furthermore, the down-sampling of points provides great relief in terms of computational efficiency because attention is an operation with complexity. Through the stated ablations, we verify the utility and desired properties of our proposed Condensation Layer.

5.3 Multi-Scale Approach

Previous research has demonstrated a boost in performance gained from multi-scale features that incorporate both global and local structure[34]. To investigate its effects regarding our PS-Former, we trained a multi-scale PS-Former and applied it to ModelNet40 for classification. The model is comprised of two branches: a PS-Former that samples 256 points and one that samples 1024 points. The features generated by the two are concatenated together after max pooling and fed through the same classification network used for Section 4.1. The model attained a best overall accuracy of 93.6%. We hypothesize that the multi-scale approach does not provide complementary information, as our Condensation Layer and Position-to-Structure Attention layers already serve as effective extractors of structural feature representation.

5.4 Cross Attention

We also conduct ablation studies on using the cross-attention operation to mediate position and structure feature interaction. After the Condensation Layer each point will have two features: position and structure. We use cross-attention to enrich the structure features from the position features. Besides this, we also conduct two experiments using cross-attention from structure to position and two-way interaction. The results are presented in Table 6. It can be seen clearly that with cross-attention from position features to structures, the result is still decent compared to PCT[13]. However, the application of cross-attention on structure to position, causes the results to go down. This further demonstrates that the weighted sum mechanism is not suited for capturing the structure features from the position features while the decoupling still works. The decrease in performance with structure enriching position validates our design of position to structure.

| Method | Accuracy (%) |

|---|---|

| (Vanilla cross attention) | |

| pos str | 93.3 |

| str pos | 92.5 |

| pos str & str pos | 92.8 |

| Position-to-Structure Attn (ours) | 93.9 |

6 Conclusion

In this paper, we have presented Position-to-Structure Transformer (PS-Former) for 3D points by designing a learnable Condensation Layer and Position-to-Structure Attention mechanism for effective and efficient point cloud feature extraction. Compared to the existing Transformer-based approaches in this domain, PS-Former is more general with less artificial feature engineering, as well as improved performance.

Acknowledgement This work is supported by NSF Award IIS-2127544.

References

- [1] Iro Armeni, Ozan Sener, Amir R Zamir, Helen Jiang, Ioannis Brilakis, Martin Fischer, and Silvio Savarese. 3d semantic parsing of large-scale indoor spaces. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 1534–1543, 2016.

- [2] Matan Atzmon, Haggai Maron, and Yaron Lipman. Point convolutional neural networks by extension operators. arXiv preprint arXiv:1803.10091, 2018.

- [3] Nicolas Carion, Francisco Massa, Gabriel Synnaeve, Nicolas Usunier, Alexander Kirillov, and Sergey Zagoruyko. End-to-end object detection with transformers. In ECCV, pages 213–229, 2020.

- [4] Xiaozhi Chen, Huimin Ma, Ji Wan, Bo Li, and Tian Xia. Multi-view 3d object detection network for autonomous driving. In CVPR, pages 1907–1915, 2017.

- [5] Zhiqin Chen and Hao Zhang. Learning implicit fields for generative shape modeling. In CVPR, 2019.

- [6] Hyeoncheol Cho and Insung S Choi. Three-dimensionally embedded graph convolutional network (3dgcn) for molecule interpretation. arXiv preprint arXiv:1811.09794, 2018.

- [7] Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805, 2018.

- [8] Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. Bert: Pre-training of deep bidirectional transformers for language understanding. In NAACL-HLT, 2019.

- [9] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, et al. An image is worth 16x16 words: Transformers for image recognition at scale. In ICLR, 2021.

- [10] Nico Engel, Vasileios Belagiannis, and Klaus Dietmayer. Point transformer. IEEE Access, 9:134826–134840, 2021.

- [11] Georgia Gkioxari, Jitendra Malik, and Justin Johnson. Mesh r-cnn. In CVPR, 2019.

- [12] Ankit Goyal, Hei Law, Bowei Liu, Alejandro Newell, and Jia Deng. Revisiting point cloud shape classification with a simple and effective baseline. arXiv preprint arXiv:2106.05304, 2021.

- [13] Meng-Hao Guo, Jun-Xiong Cai, Zheng-Ning Liu, Tai-Jiang Mu, Ralph R Martin, and Shi-Min Hu. Pct: Point cloud transformer. arXiv preprint arXiv:2012.09688, 2020.

- [14] Linshu Hu, Mengjiao Qin, Feng Zhang, Zhenhong Du, and Renyi Liu. Rscnn: A cnn-based method to enhance low-light remote-sensing images. Remote Sensing, 13(1):62, 2021.

- [15] Qingyong Hu, Bo Yang, Linhai Xie, Stefano Rosa, Yulan Guo, Zhihua Wang, Niki Trigoni, and Andrew Markham. Randla-net: Efficient semantic segmentation of large-scale point clouds. In CVPR, pages 11108–11117, 2020.

- [16] Maximilian Jaritz, Jiayuan Gu, and Hao Su. Multi-view pointnet for 3d scene understanding. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, 2019.

- [17] Li Jiang, Hengshuang Zhao, Shu Liu, Xiaoyong Shen, Chi-Wing Fu, and Jiaya Jia. Hierarchical point-edge interaction network for point cloud semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 10433–10441, 2019.

- [18] Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014.

- [19] Roman Klokov and Victor Lempitsky. Escape from cells: Deep kd-networks for the recognition of 3d point cloud models. In Proceedings of the IEEE International Conference on Computer Vision, pages 863–872, 2017.

- [20] Shiyi Lan, Ruichi Yu, Gang Yu, and Larry S Davis. Modeling local geometric structure of 3d point clouds using geo-cnn. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 998–1008, 2019.

- [21] Loic Landrieu and Martin Simonovsky. Large-scale point cloud semantic segmentation with superpoint graphs. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 4558–4567, 2018.

- [22] Y. LeCun, B. Boser, J. S. Denker, D. Henderson, R.E. Howard, W. Hubbard, and L.D. Jackel. Backpropagation applied to handwritten zip code recognition. Neural Computation, 1989.

- [23] Jiaxin Li, Ben M Chen, and Gim Hee Lee. So-net: Self-organizing network for point cloud analysis. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 9397–9406, 2018.

- [24] Yangyan Li, Rui Bu, Mingchao Sun, Wei Wu, Xinhan Di, and Baoquan Chen. Pointcnn: Convolution on x-transformed points. Advances in neural information processing systems, 31:820–830, 2018.

- [25] Xinhai Liu, Zhizhong Han, Yu-Shen Liu, and Matthias Zwicker. Point2sequence: Learning the shape representation of 3d point clouds with an attention-based sequence to sequence network. In AAAI, pages 8778–8785, 2019.

- [26] Ilya Loshchilov and Frank Hutter. Sgdr: Stochastic gradient descent with warm restarts. arXiv preprint arXiv:1608.03983, 2016.

- [27] Jiageng Mao, Xiaogang Wang, and Hongsheng Li. Interpolated convolutional networks for 3d point cloud understanding. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 1578–1587, 2019.

- [28] Daniel Maturana and Sebastian Scherer. Voxnet: A 3d convolutional neural network for real-time object recognition. In 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages 922–928. IEEE, 2015.

- [29] Kirill Mazur and Victor Lempitsky. Cloud transformers: A universal approach to point cloud processing tasks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 10715–10724, 2021.

- [30] Gregory P Meyer, Ankit Laddha, Eric Kee, Carlos Vallespi-Gonzalez, and Carl K Wellington. Lasernet: An efficient probabilistic 3d object detector for autonomous driving. In CVPR, pages 12677–12686, 2019.

- [31] Kaichun Mo, Paul Guerrero, Li Yi, Hao Su, Peter Wonka, Niloy J Mitra, and Leonidas J Guibas. Structurenet: hierarchical graph networks for 3d shape generation. ACM Transactions on Graphics, 38(6):1–19, 2019.

- [32] Charles R Qi, Hao Su, Kaichun Mo, and Leonidas J Guibas. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 652–660, 2017.

- [33] Charles R Qi, Hao Su, Matthias Nießner, Angela Dai, Mengyuan Yan, and Leonidas J Guibas. Volumetric and multi-view cnns for object classification on 3d data. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 5648–5656, 2016.

- [34] Charles R Qi, Li Yi, Hao Su, and Leonidas J Guibas. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. arXiv preprint arXiv:1706.02413, 2017.

- [35] Haoxi Ran, Wei Zhuo, Jun Liu, and Li Lu. Learning inner-group relations on point clouds. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 15477–15487, 2021.

- [36] Peter Shaw, Jakob Uszkoreit, and Ashish Vaswani. Self-attention with relative position representations. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 2 (Short Papers), 2018.

- [37] Hang Su, Subhransu Maji, Evangelos Kalogerakis, and Erik Learned-Miller. Multi-view convolutional neural networks for 3d shape recognition. In Proceedings of the IEEE international conference on computer vision, pages 945–953, 2015.

- [38] Hugues Thomas, Charles R Qi, Jean-Emmanuel Deschaud, Beatriz Marcotegui, François Goulette, and Leonidas J Guibas. Kpconv: Flexible and deformable convolution for point clouds. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 6411–6420, 2019.

- [39] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. Attention is all you need. In Advances in neural information processing systems, pages 5998–6008, 2017.

- [40] Chu Wang, Babak Samari, and Kaleem Siddiqi. Local spectral graph convolution for point set feature learning. In Proceedings of the European conference on computer vision (ECCV), pages 52–66, 2018.

- [41] Yue Wang, Yongbin Sun, Ziwei Liu, Sanjay E Sarma, Michael M Bronstein, and Justin M Solomon. Dynamic graph cnn for learning on point clouds. Acm Transactions On Graphics (tog), 38(5):1–12, 2019.

- [42] Jiajun Wu, Yifan Wang, Tianfan Xue, Xingyuan Sun, Bill Freeman, and Josh Tenenbaum. Marrnet: 3d shape reconstruction via 2.5 d sketches. In Advances in Neural Information Processing Systems, 2017.

- [43] Wenxuan Wu, Zhongang Qi, and Li Fuxin. Pointconv: Deep convolutional networks on 3d point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 9621–9630, 2019.

- [44] Zhirong Wu, Shuran Song, Aditya Khosla, Fisher Yu, Linguang Zhang, Xiaoou Tang, and Jianxiong Xiao. 3d shapenets: A deep representation for volumetric shapes. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 1912–1920, 2015.

- [45] Tiange Xiang, Chaoyi Zhang, Yang Song, Jianhui Yu, and Weidong Cai. Walk in the cloud: Learning curves for point clouds shape analysis. ICCV, 2021.

- [46] Saining Xie, Sainan Liu, Zeyu Chen, and Zhuowen Tu. Attentional shapecontextnet for point cloud recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 4606–4615, 2018.

- [47] Mutian Xu, Runyu Ding, Hengshuang Zhao, and Xiaojuan Qi. Paconv: Position adaptive convolution with dynamic kernel assembling on point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 3173–3182, 2021.

- [48] Qiangeng Xu, Xudong Sun, Cho-Ying Wu, Panqu Wang, and Ulrich Neumann. Grid-gcn for fast and scalable point cloud learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 5661–5670, 2020.

- [49] Yifan Xu, Tianqi Fan, Mingye Xu, Long Zeng, and Yu Qiao. Spidercnn: Deep learning on point sets with parameterized convolutional filters. In Proceedings of the European Conference on Computer Vision (ECCV), pages 87–102, 2018.

- [50] Jiancheng Yang, Qiang Zhang, Bingbing Ni, Linguo Li, Jinxian Liu, Mengdie Zhou, and Qi Tian. Modeling point clouds with self-attention and gumbel subset sampling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 3323–3332, 2019.

- [51] Li Yi, Vladimir G Kim, Duygu Ceylan, I-Chao Shen, Mengyan Yan, Hao Su, Cewu Lu, Qixing Huang, Alla Sheffer, and Leonidas Guibas. A scalable active framework for region annotation in 3d shape collections. ACM Transactions on Graphics (ToG), 35(6):1–12, 2016.

- [52] Li Yi, Hao Su, Xingwen Guo, and Leonidas J Guibas. Syncspeccnn: Synchronized spectral cnn for 3d shape segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 2282–2290, 2017.

- [53] Hengshuang Zhao, Li Jiang, Chi-Wing Fu, and Jiaya Jia. PointWeb: Enhancing local neighborhood features for point cloud processing. In CVPR, 2019.

- [54] Hengshuang Zhao, Li Jiang, Jiaya Jia, Philip HS Torr, and Vladlen Koltun. Point transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 16259–16268, 2021.