Plant Performance in Precision Horticulture:

Optimal climate control under stochastic dynamics

Abstract

This paper presents a risk mitigating, time-varying feedback control algorithm for crop production when state dynamics are subject to uncertainty. The model based case study concerns a 40 day production round of lettuce in a greenhouse where control input consists of daily and nightly temperature set points. The control problem was formulated in terms of a stochastic Markov decision process with the objective to maximize the expected net revenue at harvest time.

The importance of time-varying feedback and of risk mitigation was investigated by making a comparison with a controller that takes uncertainty into account but is static and a controller which is dynamic but ignores the uncertainty in the state dynamics. For the case of heat limited crop growth, and strict requirements on harvest weight precision, the dynamic stochastic controller outperformed the static controller in terms of both maximal expected net revenue (by 19 %) and state precision at harvest time (with 50 % less standard deviation). It also outperformed the deterministic controller for both criteria (15 % in maximal expected net revenue and 8 % less standard deviation). A detailed sensitivity analysis showed that such improvements in performance levels are robust, since they hold over large ranges of uncertainty in state dynamics, required harvest precision levels, starting days, and initial weights.

The results provide insights in potential of dynamic feedback and risk mitigation strategies for high precision requirements under uncertainty. Although the results should be interpreted with caution, they illustrate the considerable potential benefit for stochastic greenhouse climate control under uncertainty when high precision is required.

Keywords

Markov decision process, greenhouse, lettuce

1 Introduction

In precision horticulture, there are various causes that lead to uncertainty in the net revenues. First off, there exists quite some unexplained variation in crop development, which is corroborated by the observation that crop models display a broad range in yield prediction accuracy, from to [1, 2]. Uncertainty may also increase because of spatiotemporal variability in climatic variables [3] that is unforeseen or not modelled. Second, due to sensor noise it might be hard to obtain accurate estimates of the state of the crop and the climate, that form the starting point of any predictions about future development. Filter algorithms have been shown to be able to mitigate the effect of sensing errors using model based predictions [4, 5]. However, even well tuned filters may not meet the accuracy standards that are required in modern greenhouses [6]. Third, forecast errors of processes like the weather, energy prices and crop market can substantially increase prediction uncertainty and thereby hinder control performance [7, 8, 9]. Uncertainty in crop response and indoor climate dynamics is often modelled with parametric uncertainty [10, 11, 12], or stochastic noise [4]. State estimation methods such as Kalman filters are based on modeling the stochastic processes for noise in the state and the measured output [7, 13].

In this paper we focus on the control problem of optimizing the expected costs of a dynamic system with stochastic uncertainty. Our problem is in the class of Markov decision processes (MDP) [14] and can be solved by stochastic dynamic programming techniques [15]. Research that concerns MDP in precision farming spans a wide range of applications such as precision field irrigation [16], pig breeding [17], crop disease control [18, 19], and planting, fertilization and harvesting [20]. Greenhouse horticulture research for optimal control under stochastic disturbances includes optimal indoor climate management using a stochastic error forecast model [21, 22], optimal energy management under random renewable energy availability [23], temperature control under stochastic energy prices [24], and tracking and predicting crop states such as dry weight and leaf area in lettuce using a dynamic Bayesian network [25].

To deal with the high dimensional decision space and the corresponding computational challenges that characterize Markov decision process optimization, reinforcement learning for greenhouse climate control is an active topic of investigation [26, 27, 28]. Stochastic dynamics add a layer of complexity to the control problem, which increases computational demand and might hinder transparency of software and result in outcomes that are harder to explain. The question that arises is: what is the added performance of a complex stochastic controller compared to more straightforward control methods? So far, not much has been reported about the effectiveness of stochastic control in a greenhouse setting. A study which found that a stochastic controller outperformed expert growers in a tomato greenhouse regarding net profit (93%) and crop yield (by 10%) [29], suggests substantial benefits, but the question remains to what extent the complexity of the controller contributes to the performance.

This paper introduces a dynamic stochastic controller that is obtained by optimizing an MDP in terms of expected net revenue by means of dynamic programming. The case study concerns a 40 day production round of lettuce in a greenhouse where control input consists of daily and nightly temperature set points. The control problem was formulated with the objective to maximize the expected net revenue at harvest time and we obtain a control strategy with an excellent performance, a clear interpretation and limited complexity.

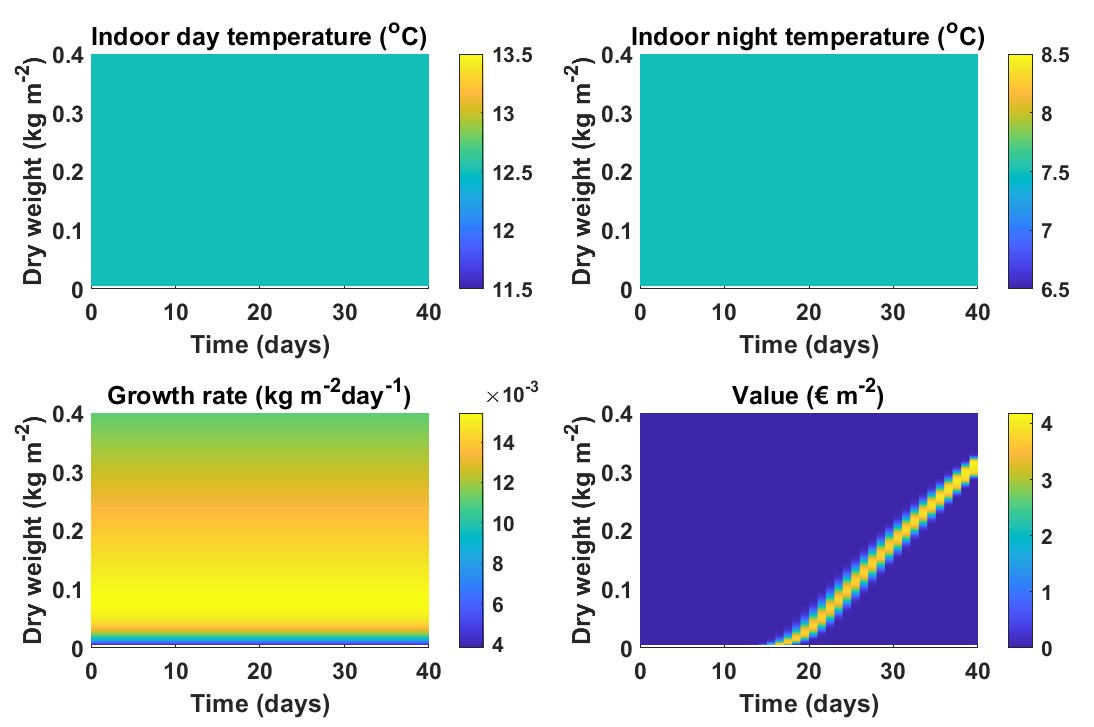

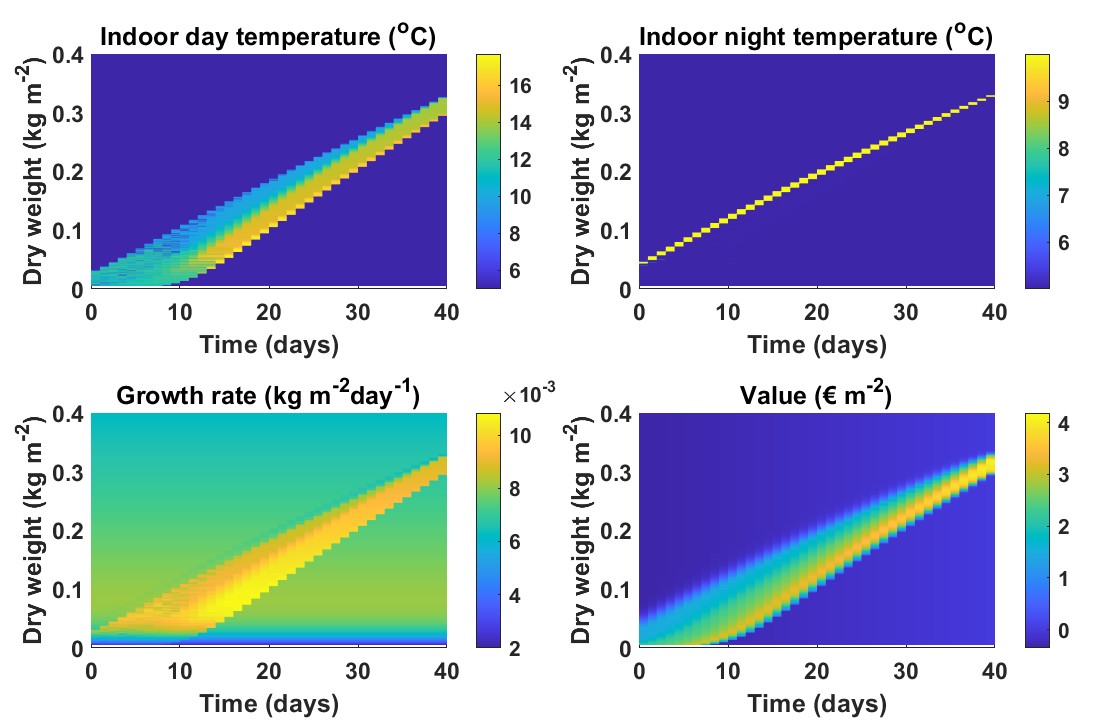

To study the properties of the optimal control strategy, the control policy, and corresponding dynamics and performance are visualized in order to gain insight into the rationale behind the control policy. The results are visualized in four maps that show on the discrete grid of time and state the controller’s set points for day and night temperatures, the associated crop growth rates, and the associated value functions in terms of expected net revenue.

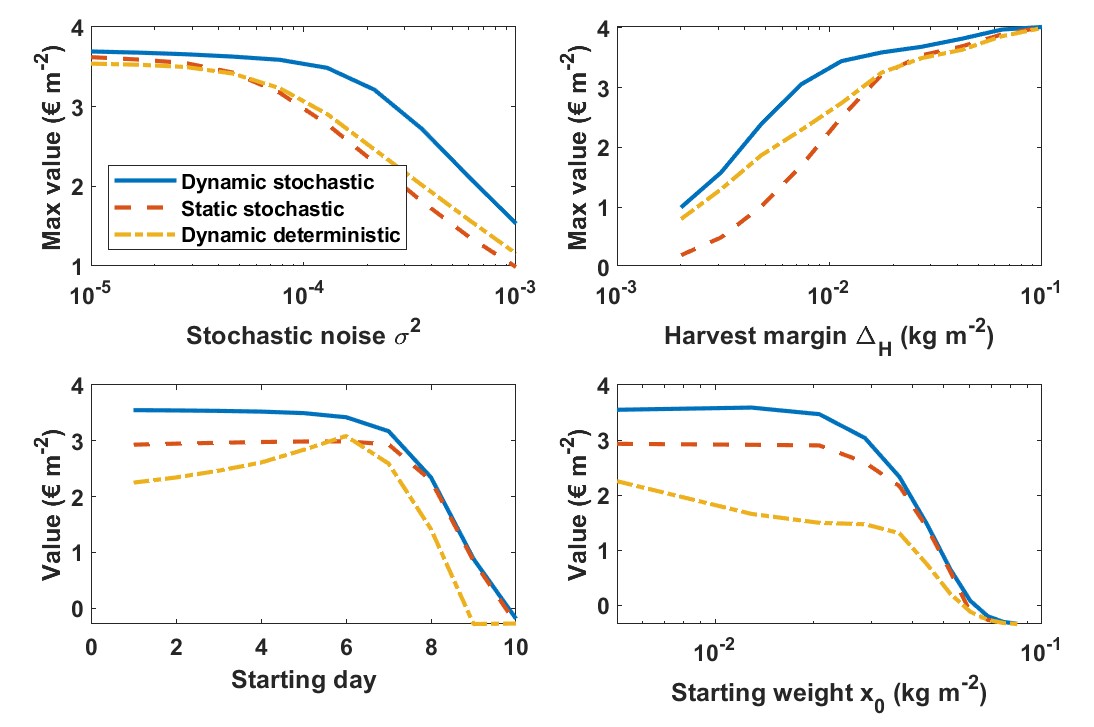

Subsequently, the optimized dynamic stochastic time-varying feedback controller is compared against a static stochastic controller and a dynamic but deterministic time-varying feedback controller. Performance was assessed in terms of maximum expected net revenue at the start of a production round, the crop state uncertainty at harvesting time, and robustness against deviations from the optimal starting time.

2 Materials and methods

2.1 System dynamics

For times we define the stochastic scalar dynamic system

| (1) | ||||

| (2) |

Here is the state variable, with time index ; time is discretized as for a given . The function describes the dynamic interactions between state and input. The vectors are the control inputs, which are determined by a state feedback control policy , which consists of a sequence of input functions for all time steps . The stochastic variables are independent and identically normally distributed. We use the notation for the states that are generated if a policy is applied for a given initial state .

2.2 Control problem

The goal is to determine a policy that maximizes value, defined by the expected revenues at the final time , minus the sum over all running costs . The associated control problem is to find a policy that solves the optimization problem

| (3) |

over all control laws such that is in an admissible set which we denote by . We use to denote the collection of admissible sets

2.3 Dynamic programming

Optimal solutions can be obtained through dynamic programming. The value of the goal function after time when starting from state value under policy is represented by the value functions for all and

| (4) |

The control problem can thus be formulated as finding the control policy resulting in the maximum value

| (5) |

for all and for all states that can be reached on day .

The dynamic programming principle implies that the sequence of functions can be found using the backward recursion

| (6) | |||||

| (7) |

with and the policy is optimal [30].

The conditional expectations depend on the conditional probability of state transitions described by the system model (1) [31], whereas the running costs at time are known once the policy has been decided. This results in

| (8) | ||||

| (9) |

and the conditional probabilities of state transitions are described by

| (10) |

2.4 Discretization

2.5 Crop and Greenhouse Model

We consider the case of lettuce crop (Lactuca sativa L.) production inside a greenhouse that is temperature controlled. Here is the crop state and the control input at time (in days) consists of the indoor temperature during the day and during the night, in degrees Celsius. The unit for the scalar plant mass is kg per square meter and the time step represents one day.

2.5.1 Growth Dynamics

The crop growth model is based on the single state crop model of [32], which describes the state dynamics of the dry weight of 16 crops per in terms of a function that is given by:

| (17) |

with the crop dry weight on day , a scale factor for the number of seconds in a day, and with [-], [-]. The photosynthesis as described by the function depends, among others, on the sequence of average radiation levels in the Netherlands for each hour of day which equals

since it is assumed that the measured outdoor radiation is partly absorbed or reflected by the cover. Here is the transmissivity of the cover. Empirical observations of values for are available and used as external input. The function characterizes respiration. Photosynthesis and respiration are described separately in the following two sub-paragraphs.

2.5.2 Photosynthesis

The photosynthesis rate on any given day is the weighted average over daily and nightly photosynthesis rates which are themselves the average photosynthesis over hours during the day and night respectively111The criterion to decide whether hour of day is day or night is defined as follows: it is night whenever radiation during that hour is lower than the critical value . This leads to the specification222Note that the unit is , and that the growth rate is converted to by in (17).

The values are based on the average daytime temperature , the average nighttime temperature and average daytime and nighttime radiation levels and that are defined below; we use these averages instead of hourly values to speed up computations. Temperature averages depend on the control variables for indoor temperatures but also on the outside temperature for hour of day , . It is assumed that on average the indoor temperature is in steady state. Since there is no active cooling (e.g. by pad fan or humidifiers), and since indoor temperature is usually kept equal to, or higher than outside temperature to prevent problems with high relative humidity, it is required that the indoor temperature is equal to, or higher than, the outside temperature. This gives the following averages:

Here denotes the maximum between and . The photosynthesis function itself is

with extinction coefficient [-], shoot leaf area ratio [m2 kg-1], root to total crop dry weight ratio [-]. It depends on

The carbon dioxide compensation point in this expression equals

We have [kg m-3], [-], [] and [], with an average density of in air of [kg m-3] (corresponding to 400 ppm), and photosynthetically active radiation ratio [-].

The effect of photorespiration on light use efficiency is

with light use efficiency coefficient [kg J-1]. The leaf conductance to carbon dioxide transport function satisfies

with [s-1] and [s-1]. Further,

with , and .

2.5.3 Respiration Dynamics

The respiration rate is averaged over the hours in a day, as was the case for photosynthesis,

with

| (18) |

with , and with [-]. Maintenance respiration for shoot is [s-1], maintenance respiration for root is [s-1] while as before [-] and .

2.6 Cost and revenue functions

2.6.1 Running costs

The running costs on day depend on the amount of heating required. This is a function of the incoming outside radiation for hour of day and the average daytime and nighttime temperatures based on the control set points and . Running costs also depend on the outside air temperature , sky temperature and wind speed , in a way that is specified below.

Heating is needed during hour of day when . Furthermore, Here is the fraction of radiation that is converted into sensible heat inside the greenhouse, and is the specific heat loss through ventilation and transmission heat per Kelvin temperature difference [].

It is assumed that both and remain constant over time. Heating costs are calculated separately for the heating demand in daily and nightly hours since at night it is assumed that the thermal screens are closed, to reduce heat loss.

The heating cost can then be expressed as:

in [€ ], with . The number represents the conversion from seconds to hours, and from joule to gigajoule (). Furthermore, is the estimated price in € per GJ gross energy333 This is based on a gross natural gas price of 0.04 €/kWh = 11€/GJ. , and is the water sided efficiency of the heater.

The energy flux is calculated as follows:

Here is specific heat of greenhouse air which is approximately 1206 [] at 20 oC air temperature, is the specific ventilation rate , the specific greenhouse cladding area, the energy screen factor, [] the average ventilation rate when the greenhouse is heated, and the average greenhouse height. The heat conductivity is a sum of effects due to radiation and convection so with [] and []. The constant is the emission constant for the greenhouse cover material for thermal infrared, and is the Stefan Boltzmann constant. Furthermore,

2.6.2 Revenues

The revenues associated with crop yield are expressed by the following goal function

| (19) |

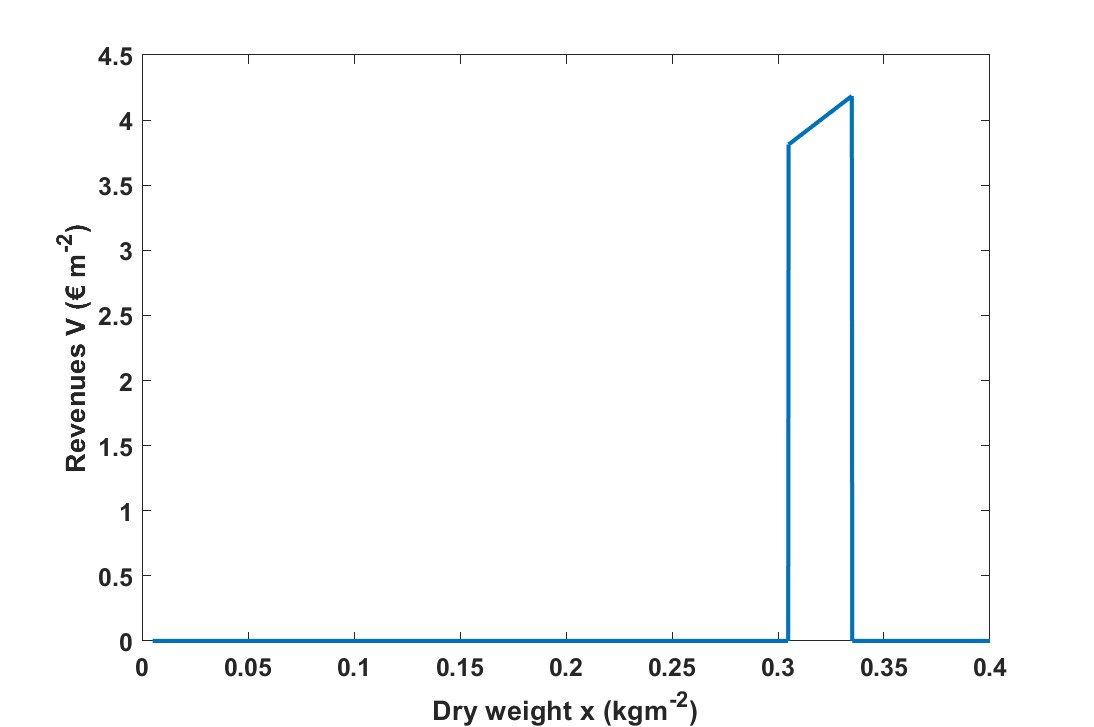

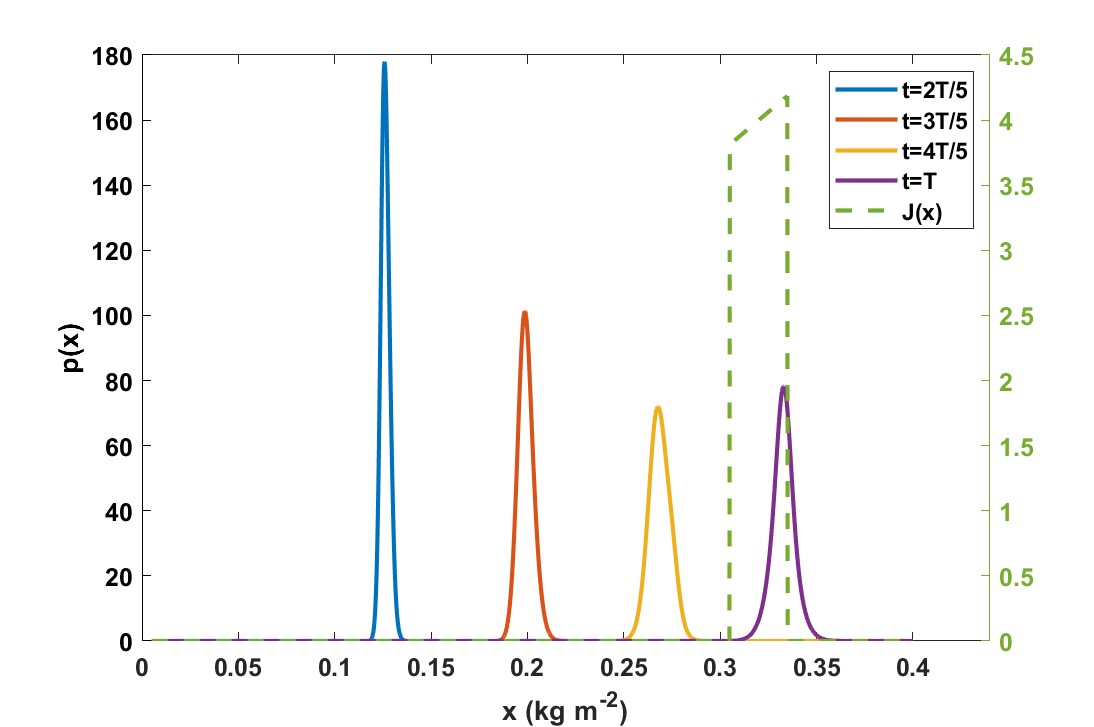

Here determines the allowed margin of harvested weight, e.g. due to delivery contracts based on consumer demand for product uniformity. The nominal value is . Further, at a dry matter content of 5% and [ €. The two constants represent the conversion from dry to fresh weight, and the revenues associated with fresh weight, respectively. Assuming a target fresh weight of 400 grams per crop head [35], and 16 plants per , this comes to a dry weight of . The revenue function is displayed in Figure 1.

2.7 Visualization

The outcomes of the dynamic programming algorithm to find the optimal policy form a 4-tuple for every day and every possible value of the state . Here is defined as the growth according to the optimal policy given the current state, so .

In the following section we show plots of these four characteristics of the optimal day and night temperatures, optimal plant growth and optimal revenues to create some intuition for the rationale behind the optimized policies.

2.8 Computational settings

-

•

All numerical computations were carried out within a Matlab environment. The computational grid used was . That is, the state was discretized into parts, and the time horizon was days, and 1 day.

-

•

The state space range is , and the initial state in the dynamic simulations was unless stated otherwise.

-

•

The optimization algorithm to solve equation (15) was carried out with the ’fmincon’ routine. The set of admissible control values is , in . This means that the night temperature set point is within the range , and the day set point is within the range .

-

•

The weather data was retrieved for 2014 from Bleiswijk, The Netherlands (52°02N 4°32E), as was done in a previous study [36].

-

•

For each selected day, the following period of 40 days is assumed to have the same daily weather pattern as the selected day. This simplification allows us to examine the control strategy based on solely crop state and time before harvest, without changes in weather. The uncertainty as a result of weather forecast errors is assumed to be accounted for by the introduction of uncertainty in the state process.

-

•

The maximum initial value was defined as , the maximum expected net revenue at the start of production cycle, with initial value .

2.8.1 Dynamic stochastic controller

The following settings were used to investigate the system dynamics, control policy, and performance.

-

•

The nominal weather pattern has relatively much light, and low temperatures (on day 79 in 2014, mean temperature was 4.6 , and the mean light intensity was ), which creates a heat limitation in the greenhouse. That is, extra heating during the day will result in increased crop growth. The control laws and corresponding performance have been computed for two other weather patterns: for day 5 of the year (with mean temperature -4 , and mean light intensity ), and for day 187 (with mean temperature 18 , and mean light intensity ).

-

•

The nominal level of the parameter which determines the uncertainty in the state dynamics was .

2.8.2 Comparison to other controllers

The following settings and methods were used to compare the dynamic stochastic controller to two other controllers.

-

•

The static stochastic controller input , as a function of starting time was determined by carrying out the optimization in equation (3), using the ’fmincon’ algorithm in Matlab for varying starting times, and for fixed initial weight .

-

•

A dynamic deterministic optimal controller was found by applying the dynamic stochastic control algorithm at nominal settings, with a variance that was reduced with a factor 50 (). Strictly speaking this is still a stochastic controller, but it approaches a deterministic one in the sense that it is designed under the assumption that uncertainty is almost absent (as can be seen in figure 6) and therefore deemed negligible.

-

•

The controllers were compared to the dynamic stochastic controller by evaluating the expected net revenues at the optimal starting time.

-

•

A separate sensitivity analysis was carried out for each of four relevant parameters. The performance of the controllers was compared for different levels of uncertainty , harvest margin , starting day, and initial weight . The performance was represented by the maximal initial expected revenue for variations in uncertainty and harvest margin. For variations in starting day and starting weight, the performance was represented by the value ( for corresponding to the selected starting day and starting weight ). For each simulation the deterministic controller was designed assuming an extremely small value of (as described above), and the performance was calculated using the actual value. For each selected parameter value, and for each controller, the optimal control policy for that specific parameter value was applied.

3 Results

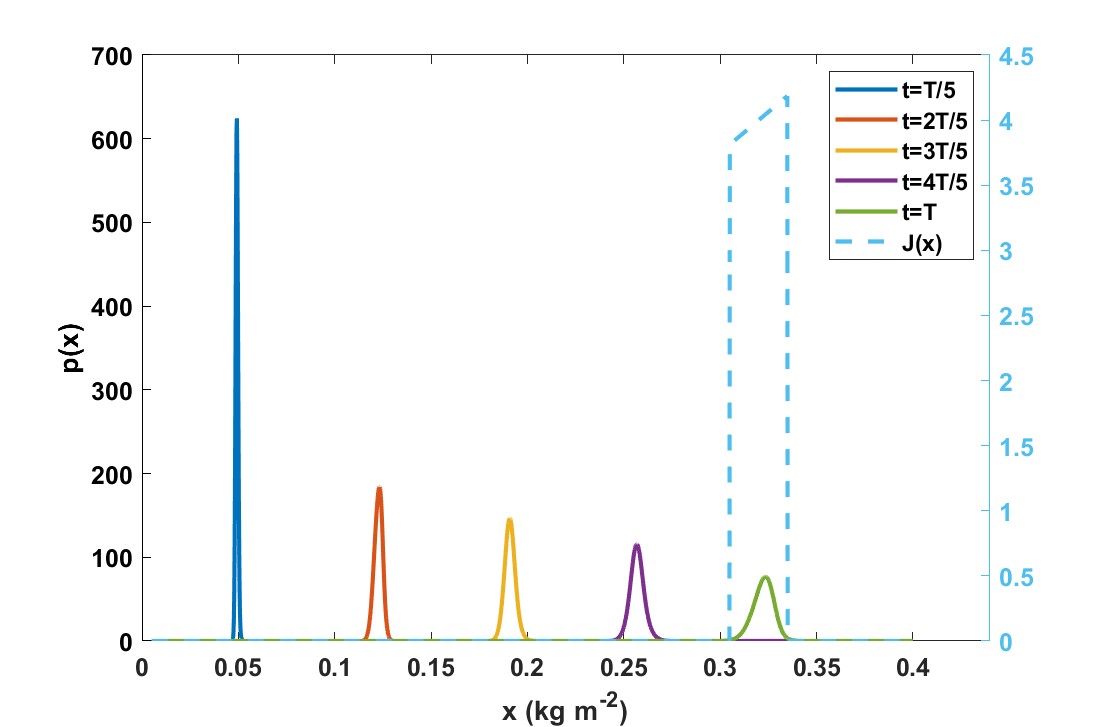

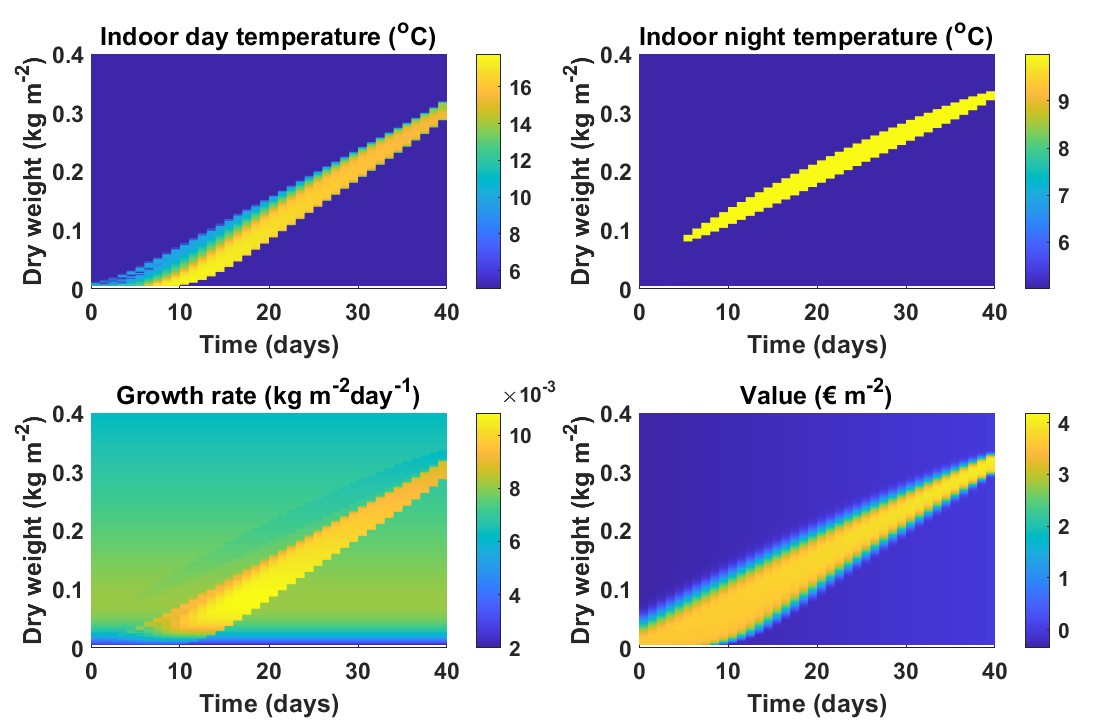

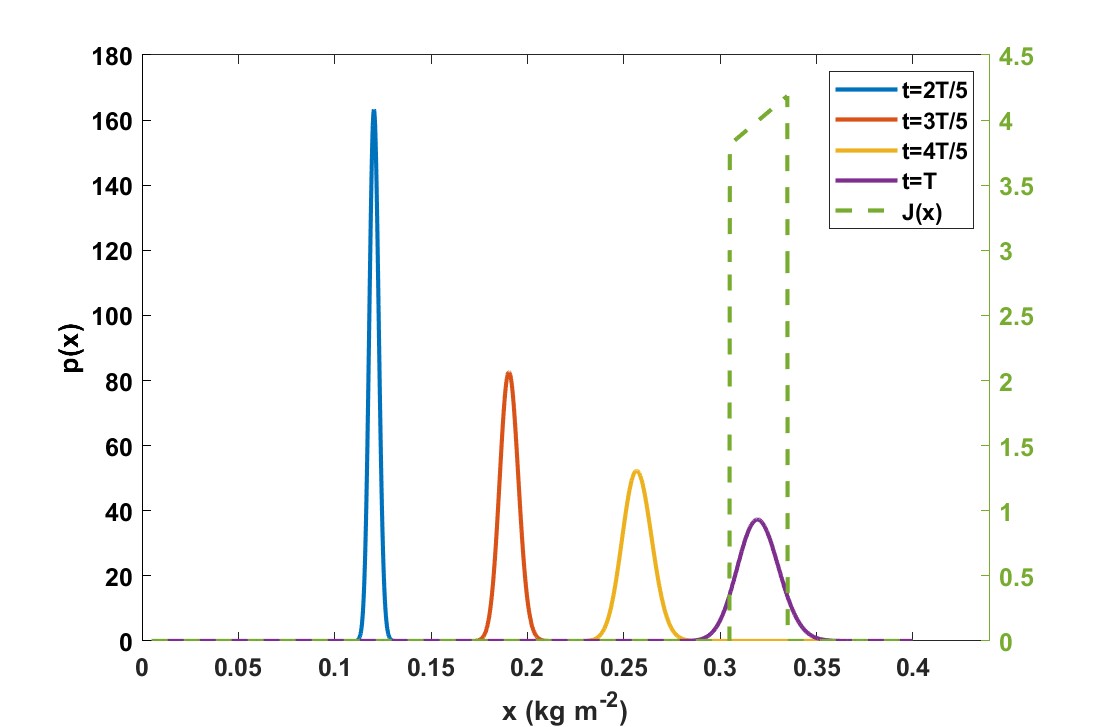

The controlled dynamics of the probability density of crop weight for different times after the starting time can be found in Figure 2, together with the goal function. Figure 3 shows 1) the control strategy (daily and nightly temperature set-points), 2) resulting growth rates, and 3) the associated value function (expected net revenue) for all and in four heat maps with color scales.

3.1 Controlled dynamics

Figure 2 shows how the probability density of crop weight disperses over time. The final dispersion is relatively small compared to the allowed harvest margin, so there is not much risk that the harvest weight will end up outside the allowed margin (dotted line).

The controller balances optimality with robustness towards the effects of uncertainty. An optimal harvest weight would be 345 since this maximizes but a distribution centered around 320 since this minimizes the risk that crop weight will fall outside the required weight range. The mean of the state at harvest time that is realized by the control action is . This is slightly higher than the target weight of , and the risk of a zero payoff is very small.

3.2 Control action

The optimal indoor night and day temperatures show clearly that the decision to heat or not depends on time and state. This suggests that a controller whose actions do not depend on time or state could be suboptimal, and this will indeed be confirmed in our later discussion of Figures 5 and 6.

The regions in the state space with the most control action form convex yellow bands in figure 3. In these regions growth rate and consequently expected net revenues are influenced the most. There is little to no control input outside these quite narrow regions, since there is only a single input (heating) that can be exploited. Including more actuators such as lamps and blackout screens might result in larger controlled regions. Energy cost considerations do not seem to influence the sizes of the controlled regions much. In a study not shown here, it was found that decreasing the energy price by a factor 2 barely altered the controlled regions.

3.3 Controlled growth

The controlled regions translate into a single band in the growth rate plot where the values are relatively high. For these states it is likely that the crop weight at harvest time will be inside the desired range of harvest weight defined in (19), i.e. close to the target weight of 320 . Outside the band the value is zero or close to zero, since the harvest weight is expected to fall outside that range. The indoor day temperature is used to elevate the growth rate: under high light intensities and low temperatures, growth is heat limited due to inhibition of metabolic processes that convert carbohydrates produced by photosynthesis, to dry matter. The indoor night temperatures is used to lower the growth rate (in absence of photosynthesis in the night, a higher temperature will increase the maintenance respiration rate (equation (18)) and thereby decrease growth rate (equation (17)). The regions with elevated day and night temperatures coincide largely with the yellow band in the value plot.

3.4 Revenues

The dispersion of the state densities translates into dispersion of the value function, which narrows and brightens as it approaches the time of harvest. An early start of the crop production cycle leads to more dispersion of the bands in the value plot since increasing the length of a production cycle will increase the uncertainty in the outcomes. On average, the value function increases with time, since the remaining cumulative running costs decrease over time. The values just outside the controlled regions can become slightly negative, due to the constraint that the minimal indoor temperature equals the outdoor temperature. Especially at night, the heat loss due to radiation may require additional heating input, which may result in a negative net revenue.

The sensitivity of the optimal expected net revenue with respect to the starting day of the growth cycle is quite limited. This value varies only 1.5 % over the first 5 days. This shows that the application of a stochastic dynamic controller can compensate for the effects of choosing a different starting day in the production cycle.

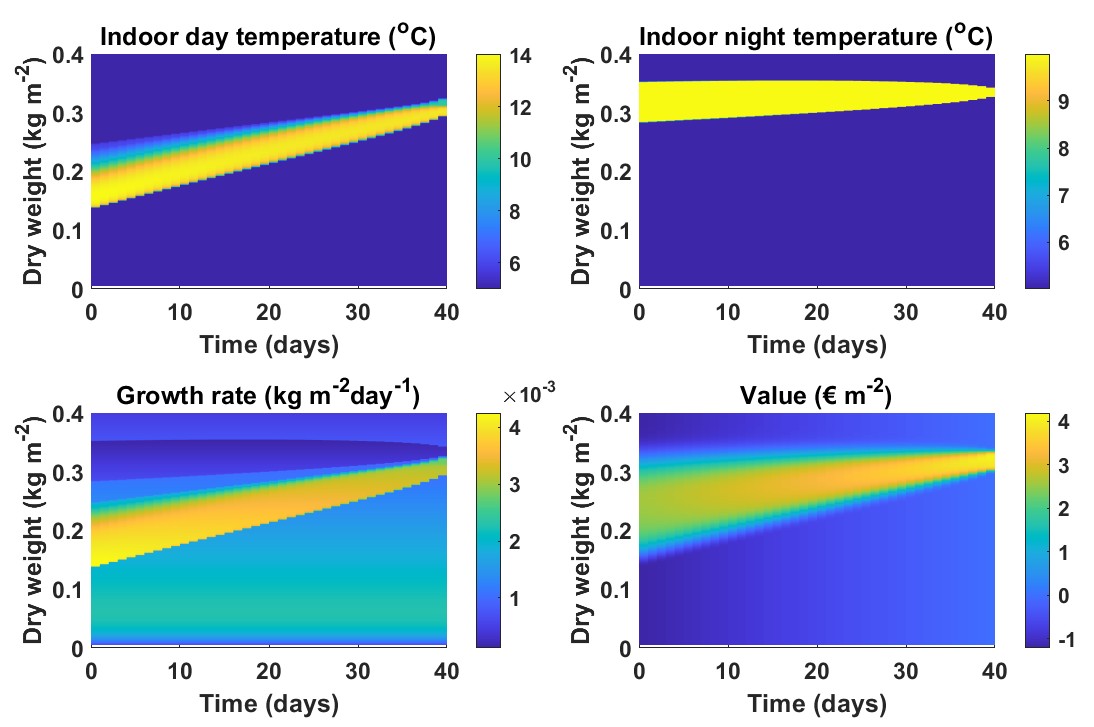

3.5 Influence of weather patterns

To assess the influence of the weather, optimal control design has been repeated for two other starting days, day 5 and day 187 in 2014. These days were selected since they show weather patterns that differ markedly from that on day 79. Figure 4 shows how the control policy depends on the weather.

On day 5, there was a low temperature and a low light intensity: the mean temperature was -4 and the mean light intensity was .

The growth rate plot shows that only limited growth is possible. The maximal growth rate is around 4 , compared to 10 for day 79, which results in a more moderate growth curve. The value plot suggests an optimal initial weight of around 0.25 , and during the production cycle of 40 days only 0.07 of dry weight is gained. However, the costs of starting with such developed plants will be much higher than the costs of a start with smaller plants, and this aspect is not incorporated in the cost function. For a full performance assessment for different weather types, one may need to adapt the duration of the production round to allow a full crop growth cycle for each weather type. Extra costs for running the greenhouse for a longer time, such as staff work hours and rent, would then also have to be incorporated.

At day 187 growth the mean temperature was 18 , and the mean light intensity was . This means that growth is more restricted due to the light then as a result of the temperature. There is therefore no role at all for heating as a means to control growth, and the daily and nightly set points assume the values of the outside temperatures during day and night, respectively. Despite it being light limited, the maximal growth rate is relatively high: around 14 , compared to 10 for day 79, due to the high mean light intensity. The value plot indicates an optimal state trajectory with a steep growth curve, and a production round that only lasts around 24 days. The thin yellow band in the value plot can be explained by low dispersion of the state due to a short production round, combined with the absence of feedback control to steer suboptimal outcomes towards better values.

4 Comparison with other controllers

The stochastic control policy is state and time dependent. Its performance is compared to a static control policy that does not depend on state or time, but is optimized for stochastic state dynamics. The second comparison is against a dynamic deterministic controller, that is optimized with respect to state and time, but does not deal with uncertainty in the state.

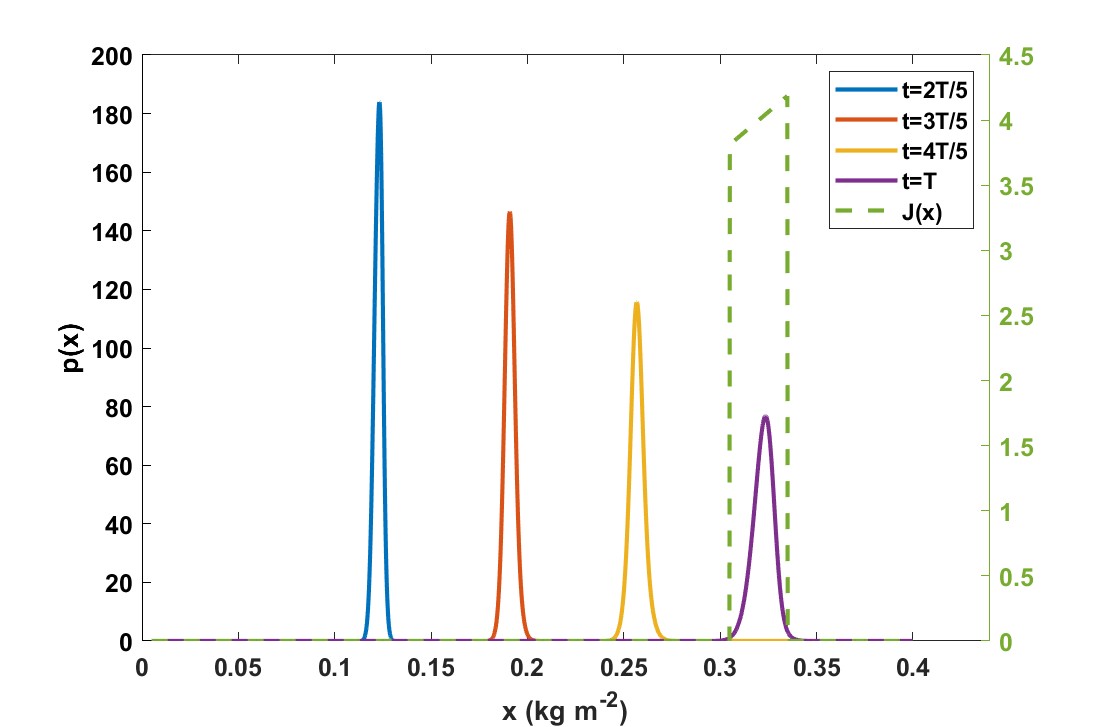

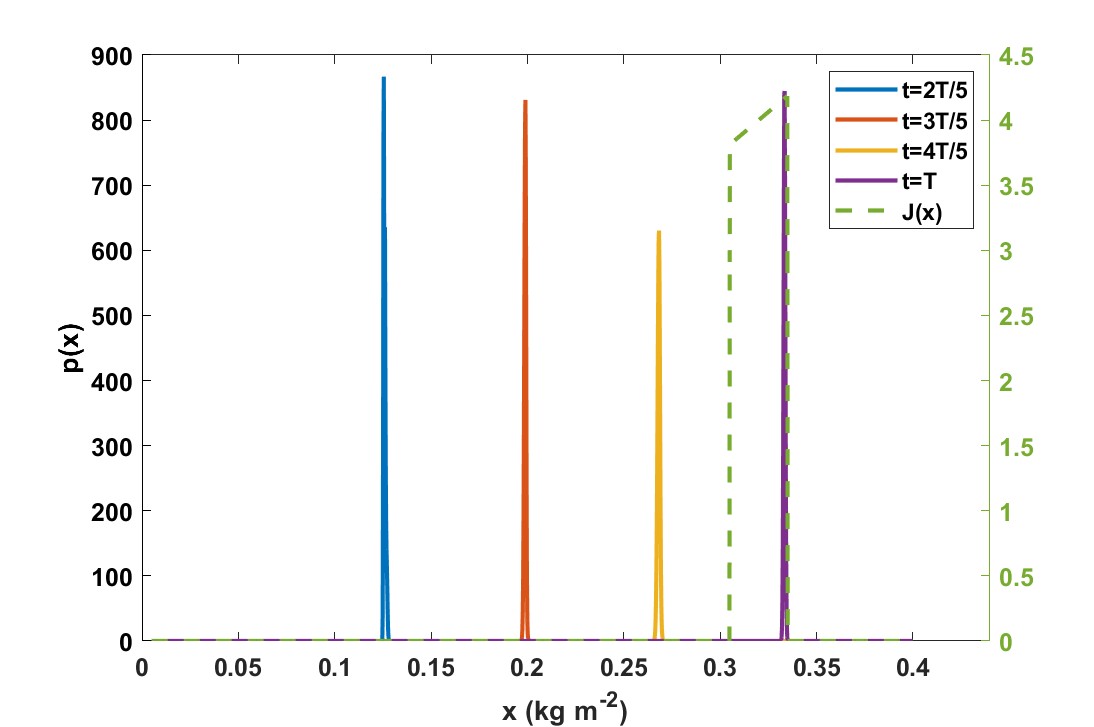

4.1 Comparison of controlled state dynamics

Figure 5 shows The dynamics of state probabilities with a static stochastic controller, compared to the dynamic stochastic controller for the nominal case, starting from day 1. It can be seen that the uncertainty is considerably larger when this static control is used. At harvest time the standard deviation of the state is almost twice as large compared to the dynamic stochastic controller ( versus ), because the latter actively steers the weight towards the optimal trajectory by adjusting the input whereas the static controller does not have such a compensation mechanism. Furthermore, static control results in a smaller maximal value at the starting time compared to the dynamic stochastic controller ( versus € ).

4.2 Comparison of controller action

Figure 6 shows the state dynamics when a dynamic but deterministic controller is used. When the controller is applied to the (almost) deterministic system that it is designed for, it steers the state precisely towards the value which maximizes revenues. However, when it is applied to the stochastic system, the standard deviation at harvest time is larger than the one generated by the dynamic stochastic controller: instead of . The state that maximizes is theoretically optimal but not very robust, because even a small positive deviation from this optimum will result in zero revenues from harvesting. Since the controller is not designed to deal with uncertainty, it does not balance optimality in expectation with robustness in the face of uncertainty.

Figure 7 shows the control policy and performance of the deterministic controller. Comparison with Figure 3 shows that for indoor day temperature, the size of the controlled region is now a bit larger. The magnitude of the control input is a subtle function of time and state, as can be seen by the nuanced color changes within the control band. This may be caused by the assumption that the dynamics are (almost) precisely known, which allows for more subtle input control.

Applying the deterministic controller to the stochastic system results in a considerable loss of initial value compared to the dynamic stochastic controller ( versus €). There is no sharp contrast anymore between regions with strong or weak control action: the values decline more gradually. The fact that the bandwidth with active control is much smaller than for the dynamic stochastic controller indicates a loss of robustness towards deviations of the state from its optimal trajectory.

4.3 Comparison of performance

Figure 8 shows the sensitivity of the performance, in terms of net revenue, for changes in the following configuration parameters: the level of uncertainty , the harvest margin , the starting day and the starting weight .

The dynamic stochastic controller outperforms the static stochastic and dynamic deterministic controllers for all parameter choices. This was to be expected since for the latter two state dynamics or uncertainty are discarded in the design process. For some cases, the added value of including dynamics or uncertainty turns out to be substantial, but the increase in value varies with the value of the configuration parameters.

The upper left plot reveals that the dynamic stochastic controller outperforms the other controllers by a large margin for a substantial range of levels of uncertainty. If uncertainty is small we observe similar performance under dynamic deterministic and dynamic stochastic control laws. For very small values of we also observe limited performance differences between the static stochastic and dynamic stochastic controllers, indicating that in this case the state dynamics do not play a large role. When uncertainty is large, the performance deteriorates for all controllers, since the dispersion in the state density causes the standard deviation at harvest time to be larger than the allowed harvest margin.

The upper right plot shows performance as a function of allowed harvest margins. For values above all controllers show more or less the same performance. As explained in 4, dynamic feedback and risk mitigation lead to the strongest improvements under more precise harvest requirements. A decrease in harvest margin to levels below results in a more or less linear decrease in performance for all controllers.

The lower left plot shows the sensitivity with respect to a change in the start of the production round. It shows that the deterministic controller is not very robust in the face of such changes. This confirms the earlier observation from Figure 7 that the bandwidth of high revenue values is very small.

The results in the lower right plot also show the severe limitations of the dynamic deterministic controller for different starting weights: losses may be as high as for some cases. This confirms what can be seen from the values along the vertical axis at the starting day in Figure 7.

5 Conclusions and suggestions for further research

The dynamic stochastic controller that we designed for a greenhouse model with uncertain dynamics outperformed the stochastic but static controller and the dynamic but deterministic controller considerably in terms of maximal expected net revenue (by 19 % and 15 % respectively). This testifies to the value of incorporating knowledge of time and state variables in greenhouse control systems, to allow time-varying feedback and the mitigation of uncertainty.

The dynamic stochastic controller also achieved better state precision at harvest time (50 % and 8% less standard deviation, respectively) than the static controller. This suggests that time-varying feedback may increase state precision dramatically, and that taking uncertainty into account may increase state precision considerably.

The sensitivity analysis results indicate that the added value is largest in the case of large uncertainty in state dynamics, high required precision in harvest weight, and possibly large deviations from the optimal starting day and the optimal starting weight. As discussed in the introduction, these cases are of particular relevance in the modern greenhouse industry, where prediction uncertainty is generally large.

5.1 Crop physiology

By visually linking together the optimal control policy, subsequent crop dynamics and performance in terms of expected net revenues as a function of time and state (figure 3), transparency is created in the sense that some mechanisms that underlie the outcomes of a rather complex optimization algorithm become intuitively clear. In particular, the comparison between control input, crop dynamics, and the value function reveals how temperature adjustments are used to steer the weight in case it deviates from its optimal trajectory towards a desirable harvest weight.

It is particularly interesting to see that an elevated indoor temperature is prescribed to accelerate the crop growth rate during daytime and to decelerate it during nighttime. Heating during the day to accelerate the growth makes sense physiologically, since increasing assimilate production and conversion to cell matter requires heat and light. Heating during the night to decelerate the growth makes sense as well, since without light there will be no dry matter production, so assimilate loss will increase due to increased maintenance respiration.

However, it is well known that an imbalance between heat and light input can result in thick yellow leaves due to starch buildup in case of heat limitation, or create long thin leaves in case of light limitation. One may want to avoid violations to the requirements regarding the heat-light balance through refinements in the definition of the control input domain . This may also help to define further model improvements in the form of model extensions, such as added state variables or control variables.

5.2 Computational demand

Model extensions come at a computational price as a result of the so-called curse of dimensionality. A possible way to mitigate higher computational costs is to employ a reinforcement learning strategy [26, 27, 28], where an optimal policy is constructed by exploring only a fraction of all possible states. As can be see in the control map (e.g. figure 3), a large fraction of the possible states is unlikely to be realized (large weights near the start, and small weights near the end of the cultivation round), hence an optimal policy does not necessarily need to cover those instances.

5.3 Refinement of the model for uncertainty

In our greenhouse specification, all uncertainty sources are indirectly modelled via the stochastic dynamics of the state. In order to disentangle the roles of the various sources of uncertainty, a promising approach could be to extend the framework with a state estimator such as the Kalman filter, to incorporate the fact that in practice it may be impossible to observe the state exactly. In this paper, the control policy is designed based on the assumption that the crop state can be measured online and without error. In practice, online crop weight measurement is still not standard, even in sophisticated greenhouse systems, since it requires costly weight sensors or the employment of state of the art computer vision via cameras to estimate weight in a smart way. Promising results have been achieved e.g. via point cloud estimation [37], or a neural network approach [38]. In these studies the measurement errors were quite small, resulting in high values (between and ) but they may still negatively effect control performance. Hence, it would be useful to investigate the effect of measurement accuracy on control performance in further research.

Another possible extension of the framework would be to incorporate uncertainty in the running costs. Energy prices vary over time in an unpredictable manner and one might want to incorporate the stochastic nature of energy costs in a more realistic model. In a similar vein, modelling uncertainty in the weather may help to design controllers which are even more robust to the changing circumstances in which they have to operate.

We believe that these and the other suggestions for further studies may offer valuable additional insights, but we expect that they will leave the conclusions of the present study intact: risk mitigation and a time-varying feedback control policy can lead to considerable improvements for greenhouse management in an uncertain environment.

Acknowledgements

We would like to thank Christian Hamster (Wageningen University) for useful discussions.

References

- [1] Hendrik Poorter, Niels PR Anten, and Leo FM Marcelis. Physiological mechanisms in plant growth models: do we need a supra-cellular systems biology approach? Plant, cell & environment, 36(9):1673–1690, 2013.

- [2] Isabella Righini, Bram Vanthoor, Michèl J Verheul, Muhammad Naseer, Henk Maessen, Tomas Persson, and Cecilia Stanghellini. A greenhouse climate-yield model focussing on additional light, heat harvesting and its validation. Biosystems Engineering, 194:1–15, 2020.

- [3] Jos Balendonck, EA Van Os, Rob van der Schoor, BAJ Van Tuijl, and LCP Keizer. Monitoring spatial and temporal distribution of temperature and relative humidity in greenhouses based on wireless sensor technology. In International Conference on Agricultural Engineering-AgEng, pages 443–452, 2010.

- [4] SL Speetjens, JD Stigter, and G Van Straten. Towards an adaptive model for greenhouse control. Computers and electronics in agriculture, 67(1-2):1–8, 2009.

- [5] Ibrahim A Hameed. Using the extended kalman filter to improve the efficiency of greenhouse climate control. International Journal of innovative Computing, information and control, 6(6):2671–2680, 2010.

- [6] S. van Mourik, van Beveren P. J. M., I. L. López-Cruz, and E. J. van Henten. Improving climate monitoring in greenhouse cultivation via model based filtering. Biosystems Engineering, vol. 181, pp. 40-51, 2019.

- [7] W. J. P. Kuijpers, D. Katzin, S. van Mourik, D. J. Antunes, S. Hemming, and M. J. G. van de Molengraft. Lighting systems and strategies compared in an optimally controlled greenhouse. Biosystems Engineering, vol. 202, pp. 195-216, 2021.

- [8] Yuanping Su, Lihong Xu, and Erik D. Goodman. Multi-layer hierarchical optimisation of greenhouse climate setpoints for energy conservation and improvement of crop yield. Biosystems Engineering, 205:212–233, 2021.

- [9] Henry J. Payne, Silke Hemming, Bram A.P. van Rens, Eldert J. van Henten, and Simon van Mourik. Quantifying the role of weather forecast error on the uncertainty of greenhouse energy prediction and power market trading. Biosystems Engineering, 224:1–15, 2022.

- [10] MA Vazquez-Cruz, R Guzman-Cruz, IL Lopez-Cruz, O Cornejo-Perez, I Torres-Pacheco, and RG Guevara-Gonzalez. Global sensitivity analysis by means of efast and sobol methods and calibration of reduced state-variable tomgro model using genetic algorithms. Computers and Electronics in Agriculture, 100:1–12, 2014.

- [11] IL López-Cruz, A Martínez-Ruiz, A Ruiz-García, and M Gallardo. Uncertainty analyses of the vegsyst model applied to greenhouse crops. In XXX International Horticultural Congress IHC2018: III International Symposium on Innovation and New Technologies in Protected 1271, pages 199–206, 2018.

- [12] Rosario Guzmán-Cruz, R Castañeda-Miranda, Juan José García-Escalante, IL López-Cruz, Alfredo Lara-Herrera, and JI De la Rosa. Calibration of a greenhouse climate model using evolutionary algorithms. Biosystems engineering, 104(1):135–142, 2009.

- [13] Frauke Oldewurtel, Colin Neil Jones, Alessandra Parisio, and Manfred Morari. Stochastic model predictive control for building climate control. IEEE Transactions on Control Systems Technology, 22(3):1198–1205, 2013.

- [14] Richard J Boucherie and Nico M Van Dijk. Markov decision processes in practice. Springer, 2017.

- [15] Sheldon M Ross. Introduction to stochastic dynamic programming. Academic press, 2014.

- [16] Truong Thu Huong, Nguyen Huu Thanh, Nguyen Thi Van, Nguyen Tien Dat, Nguyen Van Long, and Alan Marshall. Water and energy-efficient irrigation based on markov decision model for precision agriculture. In 2018 IEEE Seventh International Conference on Communications and Electronics (ICCE), pages 51–56. IEEE, 2018.

- [17] S Kovács, P Balogh, et al. Application of the hierarchic markovian decision processes in the decision making processes of pig keeping. Agrárinformatika Folyóirat, 3(2):37–49, 2012.

- [18] David W Onstad and Rudy Rabbinge. Dynamic programming and the computation of economic injury levels for crop disease control. Agricultural Systems, 18(4):207–226, 1985.

- [19] JE Sells. Optimising weed management using stochastic dynamic programming to take account of uncertain herbicide performance. Agricultural Systems, 48(3):271–296, 1995.

- [20] David Boussios, Paul V Preckel, Yigezu A Yigezu, Prakash N Dixit, Samia Akroush, Hatem Cheikh M’hamed, Mohamed Annabi, Aden Aw-Hassan, Yahya Shakatreh, Omar Abdel Hadi, et al. Modeling producer responses with dynamic programming: A case for adaptive crop management. Agricultural Economics, 50(1):101–111, 2019.

- [21] Wouter J.P. Kuijpers, Duarte J. Antunes, Simon van Mourik, Eldert J. van Henten, and Marinus J.G. van de Molengraft. Weather forecast error modelling and performance analysis of automatic greenhouse climate control. Biosystems Engineering, 214:207–229, 2022.

- [22] A Della Noce, M Carrier, and P-H Cournède. Optimal control of non-smooth greenhouse models. In International Symposium on Advanced Technologies and Management for Innovative Greenhouses: GreenSys2019 1296, pages 125–132, 2019.

- [23] Peng Zhuang, Hao Liang, and Mitchell Pomphrey. Stochastic multi-timescale energy management of greenhouses with renewable energy sources. IEEE Transactions on Sustainable Energy, 10(2):905–917, 2018.

- [24] Francisco García-Mañas, Francisco Rodríguez, Manuel Berenguel, and José María Maestre. Multi-scenario model predictive control for greenhouse crop production considering market price uncertainty. IEEE Transactions on Automation Science and Engineering, 2023.

- [25] Alexander Kocian, Daniele Massa, Samantha Cannazzaro, Luca Incrocci, Sara Di Lonardo, Paolo Milazzo, and Stefano Chessa. Dynamic bayesian network for crop growth prediction in greenhouses. Computers and Electronics in Agriculture, 169:105167, 2020.

- [26] Marc Tchamitchian, Constantin Kittas, Thomas Bartzanas, and Christos Lykas. Daily temperature optimisation in greenhouse by reinforcement learning. IFAC Proceedings Volumes, 38(1):131–136, 2005. 16th IFAC World Congress.

- [27] Wanpeng Zhang, Xiaoyan Cao, Yao Yao, Zhicheng An, Xi Xiao, and Dijun Luo. Robust model-based reinforcement learning for autonomous greenhouse control. In Vineeth N. Balasubramanian and Ivor Tsang, editors, Proceedings of The 13th Asian Conference on Machine Learning, volume 157 of Proceedings of Machine Learning Research, pages 1208–1223. PMLR, 17–19 Nov 2021.

- [28] Akshay Ajagekar and Fengqi You. Deep reinforcement learning based automatic control in semi-closed greenhouse systems. IFAC-PapersOnLine, 55(7):406–411, 2022. 13th IFAC Symposium on Dynamics and Control of Process Systems, including Biosystems DYCOPS 2022.

- [29] Xiaoyan Cao, Yao Yao, Lanqing Li, Wanpeng Zhang, Zhicheng An, Zhong Zhang, Li Xiao, Shihui Guo, Xiaoyu Cao, and Meihong Wu. igrow: A smart agriculture solution to autonomous greenhouse control. In Proceedings of the AAAI Conference on Artificial Intelligence, pages 11837–11845, 2022.

- [30] Dimitri Bertsekas. Dynamic programming and optimal control: Volume I, volume 1. Athena scientific, 2012.

- [31] Matteo Giuliani, Stefano Galelli, and Rodolfo Soncini-Sessa. A dimensionality reduction approach for many-objective markov decision processes: Application to a water reservoir operation problem. Environmental Modelling & Software, 57:101–114, 2014.

- [32] E. J. van Henten. Greenhouse climate management: an optimal control approach. PhD thesis, University Wageningen, 1994.

- [33] Gerardus PA Bot. Greenhouse climate: from physical processes to a dynamic model. Wageningen University and Research, 1983.

- [34] HF De Zwart. Analyzing energy-saving options in greenhouse cultivation using a simulation model. Wageningen University and Research, 1996.

- [35] M Raaphorst, J Benninga, and BA Eveleens. Quantitative information on dutc h greenhouse horticulture 2019. Report WPR-898. Wageningen University and Research, The Netherlands, 2019.

- [36] D. Katzin, S. van Mourik, F. L. K. Kempkes, and E. J. van Henten. Energy saving measures in optimally controlled greenhouse lettuce cultivation. International Symposium on Innovation and New Technologies in Protected Cultivation, 2020.

- [37] Anders Krogh Mortensen, Asher Bender, Brett Whelan, Margaret M Barbour, Salah Sukkarieh, Henrik Karstoft, and René Gislum. Segmentation of lettuce in coloured 3d point clouds for fresh weight estimation. Computers and Electronics in Agriculture, 154:373–381, 2018.

- [38] Lingxian Zhang, Zanyu Xu, Dan Xu, Juncheng Ma, Yingyi Chen, and Zetian Fu. Growth monitoring of greenhouse lettuce based on a convolutional neural network. Horticulture research, 7, 2020.