Pipeline Parallelism for Inference on Heterogeneous Edge Computing

Abstract

Deep neural networks with large model sizes achieve state-of-the-art results for tasks in computer vision (CV) and natural language processing (NLP). However, these large-scale models are too compute- or memory-intensive for resource-constrained edge devices. Prior works on parallel and distributed execution primarily focus on training—rather than inference—using homogeneous accelerators in data centers. We propose EdgePipe, a distributed framework for edge systems that uses pipeline parallelism to both speed up inference and enable running larger (and more accurate) models that otherwise cannot fit on single edge devices. EdgePipe achieves these results by using an optimal partition strategy that considers heterogeneity in compute, memory, and network bandwidth. Our empirical evaluation demonstrates that EdgePipe achieves and speedup using 16 edge devices for the ViT-Large and ViT-Huge models, respectively, with no accuracy loss. Similarly, EdgePipe improves ViT-Huge throughput by over a 4-node baseline using 16 edge devices, which independently cannot fit the model in memory. Finally, we show up to throughput improvement over the state-of-the-art PipeDream when using a heterogeneous set of devices.

1 Introduction

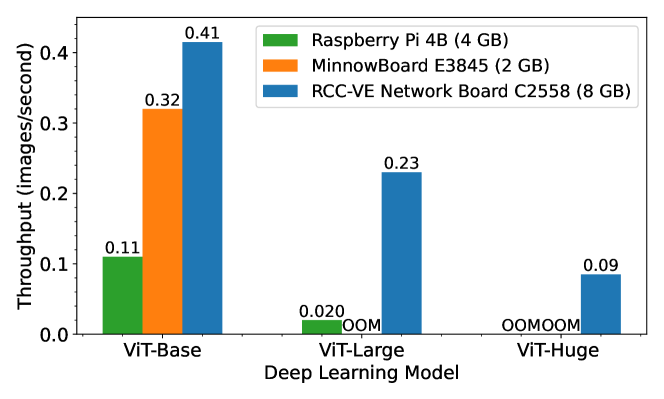

In recent years, deep neural network (DNN) model sizes have increased exponentially to provide better accuracy Krizhevsky and others (2012); Redmon and Farhadi (2017); Tao et al. (2018). In particular, large transformer-based models have achieved state-of-the-art accuracy in various computer vision (CV) Dosovitskiy et al. (2020); Yuan et al. (2021) and natural language processing (NLP) tasks Vaswani et al. (2017); Dosovitskiy et al. (2020); Carion et al. (2020). However, these large models cause significant challenges for training and inference in all environments, especially at the edge, which consists of resource-constrained devices in close proximity to a data source Satyanarayanan (2017). For example, the base vision transformer model (ViT-Base) Dosovitskiy et al. (2020) has 86.6M parameters and requires about 110B FLOPs to perform inference on one image Narayanan et al. (2021b), resulting in limited throughput on a MinnowBoard edge device, as shown in Figure 1. The ViT-Large and ViT-Huge models have considerably more parameters, which makes their deployment on resource-constrained edge devices more difficult, e.g., they do not even fit in memory on the MinnowBoard.

Various methods have been proposed to address large model inference challenges on edge devices, including model compression Hinton et al. (2015); Han et al. (2015); Yao et al. (2021); Kundu and Sundaresan (2021), adaptive inference Tambe et al. (2020), and neural architecture search Wang et al. (2020). These approaches reduce the number of required computation operations, but at the cost of reduced accuracy. Moreover, most of these approaches are limited to a single device, and do not take advantage of idle devices that may be available to assist and improve performance in distributed settings.

Pipeline parallelism partitions models into multiple stages, which can accelerate processing without accuracy loss by utilizing additional resources. Pipelining is complementary to compression methods, providing additional opportunities to mitigate model complexity. Research on pipeline parallelism has focused on data center scenarios with high interconnect bandwidth and homogeneous accelerators like graphics processing units (GPUs) and tensor processing units (TPUs) Yang et al. (2021); Huang et al. (2019); Li et al. (2021); He et al. (2021). In contrast, edge environments are more resource-constrained, with heterogeneous communication and computation characteristics.

Several other frameworks consider pipeline parallelism for limited heterogeneity in data centers, e.g., heterogeneous communication topologies with homogeneous GPUs Narayanan et al. (2019, 2021a), or heterogeneous GPU clusters with homogeneous networks Park et al. (2020a). Torchpipe Kim et al. (2020) provides an automatic balancing strategy for large models, but only for the single-node scenario and does not claim optimality. Finding an optimal partition strategy under fully heterogeneous conditions (heterogeneous devices and network), which is critical to edge scenarios, remains a largely open problem.

We address these challenges with EdgePipe, a distributed inference framework that exploits pipeline parallelism to improve inference performance on heterogeneous edge devices and networks. In particular, this paper makes the following contributions:

-

•

A distributed pipeline parallelism framework to accelerate large-scale model inference on heterogeneous edge computing without accuracy loss.

-

•

A dynamic programming (DP) algorithm to determine the optimal partition mapping of pipeline parallelism to heterogeneous devices and communication channels.

-

•

A detailed experimental evaluation on a real edge testbed, demonstrating throughput performance improvements up to speedup in a 16-device homogeneous cluster and speedup over the state-of-the-art PipeDream in a heterogeneous cluster111To support reproducibility, we will open-source our evaluation code upon acceptance of the paper..

2 Background and Motivation

2.1 Background

Pipeline Parallelism. Pipeline parallelism partitions a neural network model into multiple stages, where each stage consists of a consecutive set of layers in the original model Yang et al. (2021); Narayanan et al. (2019). Each stage in the pipeline is assigned to a worker to achieve the parallel execution of model training or inference. The input minibatch is split into multiple chunks of equal size, which are called microbatches Huang et al. (2019). The microbatch size affects the pipeline performance, with the optimal size depending on multiple factors including the characteristics of the model and the number of pipeline stages Narayanan et al. (2021b). A worker in a system that has pipeline parallelism need only send its output data to a single worker, which avoids the collective communication to synchronize results with all workers. Pipelining can also overlap computation and communication to improve the performance Narayanan et al. (2019).

Transformer-based Models. The transformer model was proposed to improve the effectiveness in learning dependencies between distant positions for sequence modeling tasks Vaswani et al. (2017). A transformer encoder includes multiple transformer layers with identical structures. Every transformer layer is composed of a multi-head self-attention layer, a multi-layer perceptron (MLP), two layer normalization operations, and residual connections. The multi-head self-attention layer calculates the attention score of the input sequence through dot product operations and generates the output representation with the same dimension. Outputs and (where ) are operationally independent, which provides the possibility of parallel execution for both training and inference Park et al. (2020b). Attention-based models have recently been extended to replace conventional convolutional neural networks (CNNs) Dosovitskiy et al. (2020); Kundu and Sundaresan (2021) in performing complex CV tasks. In particular, the ViT models enjoy superior representation ability d’Ascoli et al. (2021), and also suffer less from positional invariance issues which are prevalent in conventional CNNs Su et al. (2019).

2.2 Motivation

Large-scale model inference is a challenging task for resource-limited devices. Classical model compression techniques, including pruning Zhang et al. (2018); Kundu et al. (2021), quantization Yao et al. (2021), low-rank approximation Chin et al. (2020), and knowledge distillation Hinton et al. (2015) can shrink neural network model sizes to potentially accelerate deep neural networks. However, these techniques often require iterative retraining and a full-precision pre-trained model to avoid significant accuracy-drop. More importantly, these methods generally focus on a single compute node. Distributed edge computing scenarios, in contrast, often include a large number of resource-limited devices, e.g., in vehicular edge computing (VEC) for internet of vehicles Liu et al. (2021), wireless-connected AI-enabled sensors Sharma et al. (2021), and smart home systems Isyanto et al. (2020); Cheng and Kunz (2009).

Pipeline parallelism has proven to be effective for distributed training on accelerators in data centers where devices are relatively homogeneous and the network bandwidth is generally high Huang et al. (2019); Yang et al. (2021); He et al. (2021). However, edge computing has unique characteristics:

-

•

Small Memory Capacity. Compared with data-center servers, edge devices usually have smaller memory capacities, ranging from tens of MB to several GB.

-

•

Heterogeneous Devices. Edge devices have diverse computational performance and memory capacities.

-

•

Limited Bandwidth. Unlike communication within data centers, edge computing systems often rely on wireless communication with limited bandwidth.

-

•

Heterogeneous Network Link Capacities. The bandwidth between different pairs of devices depends on physical distance and channel interference, and could range from tens of Kbps to hundreds of Mbps.

Model partition methods for homogeneous clusters will therefore perform poorly in heterogeneous edge environments. A new pipeline parallelism framework is needed to overcome these challenges. In the next section, we introduce a framework for distributed edge clusters that uses heterogeneity-aware pipeline parallelism to improve inference performance and enable running larger—and more accurate—models.

3 Parallelism for Edge Devices

In this section, we present our system design and discuss the problem of partitioning a transformer model for a fully heterogeneous cluster. We introduce the DP-based optimal partition algorithm in Section 3.3.

3.1 EdgePipe System Design

Figure 2 presents the EdgePipe system design, which consists of three major components: the partitioning algorithm for heterogeneous clusters, the data loader, and the runtime framework that implements pipeline parallelism. First, the configuration of the original transformer model and partition constraints are sent to the partition algorithm to generate an optimal partition method. The partition constraints include available edge devices, computation and memory capabilities of these devices, and the bandwidth between devices. The specific partition problem will be introduced in Section 3.2. To fit into every edge device and improve the throughput, input data should be split into small chunks, called a microbatch. At runtime, selected devices are only responsible for the inference of one part of the original model. After finishing the inference of one microbatch, the edge device transmits intermediate outputs to the device in the next pipeline stage. The device in the final stage produces the final result, which could be transmitted to another host or stored locally.

To construct a pipeline with stages, the transformer model should be partitioned into parts . Part includes layers, and , where is the number of layers. Part is assigned to the -th device to construct the -th pipeline stage. For one microbatch input , the process of inference may be denoted as , where is the final output of the inference. The intermediate output of the -th device is sent to the -th device to continue the computation. The number of pipeline inference stages in EdgePipe is equal to the number of devices participating in inference.

3.2 Partition Problem Formulation

Fully heterogeneous clusters include heterogeneity in both the devices and the communication networks. It is common in edge computing for devices have different computation and memory capabilities. In addition, the network bandwidth between devices may be different. It is therefore challenging to decide the partition method for the cluster.

We define a transformer model with layers, inter-layer transmission data size for the -th layer, and a list of heterogeneous devices () with different memory, computation, and communication capabilities. In heterogeneous communication, the bandwidth between a pair of devices and may be different than the bandwidth between a different pair of devices and : . The optimal strategy partitions the model into parts and allocates them to the selected devices to achieve maximal throughput and conform to the memory limitations of the selected devices.

3.3 Target Optimization

We denote as the execution time for the set of layers on a device . is the time to communicate data between devices and , which is computed by Equation (1), where is the bandwidth between devices and .

| (1) |

| Symbol | Description |

|---|---|

| A transformer model with layers. The -th layer has parameters for transmission and requires runtime memory for execution. | |

| A list of available devices. Device has the memory capacity . | |

| The list of selected devices. Every device should participate in the inference. | |

| Bandwidth between devices. is the bandwidth between devices and | |

| The optimal mapping strategy. | |

| Computation time with the set of layers on the device . | |

| Communication time for transferring data from devices to . | |

| The maximum latency of executing pipeline stage on device with transferring data to device . | |

| The optimal time for the pipeline stage under given conditions. |

We assume the pipeline system supports asynchronous communication, and the computation time and communication time are perfectly overlapped. Thus, the maximum latency for the single device can be calculated as:

| (2) |

For the selected devices, achieving the maximal throughput is equivalent to minimizing the execution time , which is determined by the slowest stage under the given strategy and is equal to the largest . The problem of pipeline partitioning can itself be partitioned. The optimal solution for partitioning the whole pipeline on given set of devices can be constructed from the optimal partitioning result for the sub-problem, which could be solved by DP methods.

To tackle this partition problem for fully heterogeneous clusters, we design a three-dimensional DP algorithm recording the state of processed layers, used devices, and the device in the last pipeline stage. Let denote the minimum time to process the first layers with the set of used devices , and the device is the next device to be used. is the optimal solution of the subproblem for layers and devices. The final optimal solution of this partition problem is the minimum with .

The calculation of needs to use the optimal subproblem property: it is determined by the previous state , or the calculation time from -th layer to -th layer on the current device . We further analyze these two situations:

-

•

, the slowest pipeline stage for transformer layers is determined by the previous stage . Since device implements the current stage from the -th to the -th layer in the current pipeline, the used devices set for the next state should include the device , noted as . Parameters and will be enumerated to find the optimal solution of the subproblem.

-

•

, the device that constitutes the slowest stage of the current pipeline and limits the performance of the system. The is calculated from Equation 2. Similarly, device and first layers are enumerated to obtain the minimum value.

Thus, the state transition equation can be formulated as:

| (3) |

where the first term inside the max is the minimum time for the first layers with the set of devices and the next used devices ; the second term is communication time of transferring data from device to device ; the third term is the computation time for the last layers on the device . For initialization, is set to .

Equation 3 calculates the optimal pipeline execution time. However, we need to obtain the selected devices and their order in the pipeline for the optimal strategy. Algorithm 1 describes the memoization technique pseudo-code to find the optimal time and the corresponding pipelining strategy.

The computational complexity of the proposed algorithm is , where is the number of available devices and is the number of layers. The factor is due to the assumption that all devices are distinct. As a comparison, for the naive brute force solution, the search space is , which has a much higher complexity. Moreover, in most scenarios, there should exist identical devices with the same computation and communication capabilities. Therefore, the number of devices could be divided into categories, where the -th category has devices (). The search state of DP can be further reduced, hence the computation complexity could be reduced to . For instance, consider the case where there are three types of devices, , and each type has the same number of devices, i.e., . Then the actual computational complexity is . For example, given device types, where each type has devices, we measure the execution time for these three methods using the ViT-Base model on a 1.6 GHz Intel Core i5 CPU and present the results in Table 2.

| Algorithm | Time |

|---|---|

| Category dynamic programming | 0.01 sec |

| Naive dynamic programming | 18.6 sec |

| Brute force search | 71 min |

4 Experimental Setup

This section describes the experimental hardware, software dependencies, and evaluation baselines.

Testbed. We conduct experiments on the Dispersed Computing Program Testbed (DCompTB) for edge computing platforms Goodfellow et al. (2019). DCompTB exposes the two edge device types described in Table 3: a MinnowBoard and an RCC-VE Network Board. For evaluation, we first configure homogeneous edge clusters using both MinnowBoards and RCC-VE Network Boards, where each cluster is composed of identical devices and network bandwidth. For heterogeneous experiments, we mix device types and further increase heterogeneity by leveraging system software tools. We use the cpulimit tool to limit the CPU usage and the ulimit tool to limit the memory size on the RCC-VE boards. We then use the tc tool to vary network bandwidth between edge devices. In multiple edge computing scenarios, it is common to have tens of milliseconds latency Premsankar et al. (2018), thus we impose a fixed 20 ms latency when varying the bandwidth.

| Configuration | Value |

|---|---|

| Device name | MinnowBoard |

| Processors | Intel Atom E3845 @ 1.91 GHz |

| Memory size | 2 GB |

| Max bandwidth | 1 Gbps |

| Device name | RCC-VE Network Board |

| Processors | Intel Atom C2558 @ 2.4 GHz |

| Memory size | 8 GB |

| Max bandwidth | 1 Gbps |

Software. Each node runs Debian GNU/Linux 10 with Linux kernel 4.19.0-11-amd64. We use PyTorch 1.9.0 as the deep learning inference engine and the PyTorch RPC library with the TensorPipe backend as the distributed framework Paszke et al. (2019).

Deep Learning Models. The definition, configuration, and implementation of the ViT-Base, ViT-Large, and ViT-Huge models are taken from HuggingFace 4.10.0 Wolf et al. (2020). We evaluate ViT models for the image classification task. Input images are from ImageNet 2012 Deng et al. (2009) and resized after the embedding layer with a uniform input dimension to the transformer layers.

Baselines. To the best of our knowledge, this is the first work that evaluates pipeline parallelism for inference on heterogeneous edge devices, so we adopt baselines from pipelines developed for training in data centers. We re-implement GPipe Huang et al. (2019) with an even partitioning method for inference on the CPU as the baseline. Although PipeDream targets asynchronous parallel training, its partition method can be applied to inference by considering only the forward pass. PipeDream also provides open-source code for partitioning for inference Narayanan et al. (2019). PipeDream considers a hierarchical interconnect that represents a data center interconnect topology, but that does not model the ad hoc networks of edge systems. To apply PipeDream to the edge, we assume a one-level communication network and compare with its pipeline partitioning scheme. We choose the optimal microbatch size for GPipe and PipeDream and compare the system performance.

5 Evaluation

This section describes the EdgePipe experimental evaluation. We first show the runtime performance on homogeneous clusters using two DCompTB device types. We then demonstrate the effectiveness of our partitioning method on heterogeneous clusters. We also explore the effects of communication bandwidth and the relationship between microbatch size and throughput. Finally, we evaluate EdgePipe with compressed models.

5.1 Runtime Performance Analysis

We first evaluate EdgePipe’s performance on the 2 GB MinnowBoard and the 8 GB RCC-VE Network Board devices in homogeneous clusters. Figure 3 presents throughput on these clusters for up to 16 stages (devices).

For MinnowBoard devices, we achieve images per second throughput with devices using the ViT-Base model, which is faster compared to the single-device performance. The ViT-Large and ViT-Huge models cannot fit in memory on a single MinnowBoard device. Hence, we use 2-stage and 4-stage throughput as the speedup baselines for the ViT-Large and ViT-Huge models, respectively. With 16 MinnowBoard devices, EdgePipe achieves images per second throughput, which is a speedup over the 2-stage baseline (where the optimal speedup is 16/2=8). For the ViT-Huge model, EdgePipe achieves images per second throughput with MinnowBoard devices, which gives a speedup over the 4-stage baseline (where the optimal speedup is 16/4=4).

We achieve similar scalability on the RCC-VE Network Board devices. With the ViT-Base model, EdgePipe achieves images per second throughput with speedup with four devices. Compared with the single-device baseline, EdgePipe achieves and images per second throughput for the ViT-Large and ViT-Huge models with and speedup using devices, respectively.

The sub-linear performance for the ViT-Base model is primarily due to uneven execution times of different stages. We observe the performance difference for the slowest and fastest stages is about for the 2-stage pipeline. With stages, this time difference increases to and causes a more serious performance loss. Figure 4 illustrates the issue by quantifying each ViT-Base layer’s execution time on the MinnowBoard. The second dense layer in the -th transformer layer, which only includes the linear transformation with the matrix multiplication operation and thus cannot be further partitioned with pipeline parallelism, requires considerably more execution time than other layers. The variation in execution time is due to differences in the sparsity of weights. We observe the similar performance behavior on the RCC-VE Network Board devices. In contrast, each transformer layer in the ViT-Large and ViT-Huge models have similar inference times, so EdgePipe scales better on the ViT-Large and ViT-Huge models. In addition, we observe compressed models reduce stage imbalances and mitigate this challenge. We report on compressed models in subsection 5.5.

EdgePipe achieves nearly linear performance improvements with the ViT-Large and ViT-Huge models when pipelining with both edge device types. EdgePipe achieves speedup (over a 4-node baseline) on 16 MinnowBoard devices and speedup on 16 RCC-VE Network Board devices with the ViT-Huge model. These results demonstrate EdgePipe’s effectiveness for large-scale models, including ones that otherwise cannot fit on single devices.

5.2 Heterogeneous Clusters

Compared with the data center, edge devices are more heterogeneous in computation and communication capabilities. To better simulate heterogeneous resource-constrained devices, we throttle the CPU usage of the RCC-VE Network Boards to emulate diminished inference performance. We also vary the maximum bandwidth for both MinnowBoard and RCC-VE Network Board devices to emulate different network link capacities. We compare EdgePipe with GPipe and PipeDream on six clusters with increasing heterogeneity. Because GPipe and PipeDream do not specify the device mapping order, we test them with random device orders and measure average performance and variance. Device configuration details are presented in Table 4, and experimental results are shown in Figure 5.

| Case | Devices | CPU | Memory | Bandwidth |

|---|---|---|---|---|

| 1 | RCC-VE | 100% | 8 GB | 1 Gbps |

| MinnowBoard | 100% | 2 GB | 1 Gbps | |

| 2 | RCC-VE | 100% | 8 GB | 1 Gbps |

| RCC-VE | 75% | 4 GB | 1 Gbps | |

| RCC-VE | 25% | 4 GB | 1 Gbps | |

| MinnowBoard | 100% | 2 GB | 1 Gbps | |

| 3 | RCC-VE | 100% | 8 GB | 40 Mbps |

| MinnowBoard | 100% | 2 GB | 10 Mbps | |

| 4 | RCC-VE | 100% | 8 GB | 30 Mbps |

| RCC-VE | 100% | 8 GB | 20 Mbps | |

| MinnowBoard | 100% | 2 GB | 10 Mbps | |

| MinnowBoard | 100% | 2 GB | 5 Mbps | |

| 5 | RCC-VE | 100% | 8 GB | 50 Mbps |

| RCC-VE | 10% | 4 GB | 20 Mbps | |

| MinnowBoard | 100% | 2 GB | 30 Mbps | |

| 6 | RCC-VE | 100% | 8 GB | 100 Mbps |

| RCC-VE | 75% | 4 GB | 60 Mbps | |

| RCC-VE | 50% | 4 GB | 40 Mbps | |

| RCC-VE | 25% | 4 GB | 20 Mbps | |

| RCC-VE | 10% | 4 GB | 10 Mbps | |

| MinnowBoard | 100% | 2 GB | 80 Mbps |

By comparing Cases 1 and 2, we show the effect of introducing heterogeneous computing capabilities on system performance. For the ViT-Base model, EdgePipe achieves the best performance of images per second in both cases. GPipe and PipeDream show a significant variance with different device orders for the ViT-Base model. In Case 1, GPipe’s throughput ranges from to images per second, and PipeDream’s throughput ranges from to images per second. In Case 2, which introduces more heterogeneity, GPipe and PipeDream show a larger variance of to and to for the ViT-Base model. For the ViT-Large and ViT-Huge models, both GPipe and PipeDream adopt the even partitioning strategy and obtain the same performance. EdgePipe achieves and images per second for the ViT-Large model and and images per second for the ViT-Huge model in Cases 1 and 2, respectively. For the same two cases, GPipe and PipeDream only achieve and images per second for the ViT-Large model and and images per second for the ViT-Huge model. EdgePipe demonstrates both better performance and robustness when compute capabilities are more heterogeneous.

Case 3 has the same compute resources as Case 1, but with less communication bandwidth. We further vary the bandwidth in Case 4 with the same compute resources. For the ViT-Base model, EdgePipe achieves the best throughput of and images per second in both Cases 3 and 4. GPipe and PipeDream show a significant performance degradation due to the limited bandwidth. In Case 3 for the ViT-Base model, GPipe’s throughput ranges from to images per second, and PipeDream’s throughput ranges from to images per second. In Case 4 for the same model, GPipe’s throughput ranges from to images per second, and PipeDream’s throughput ranges from to images per second. For the ViT-Large and ViT-Huge models, EdgePipe achieves the best performance with fewer devices than GPipe and PipeDream for Cases 3 and 4. For the ViT-Large and ViT-Huge models on Case 3, EdgePipe selects devices with Mbps bandwidth and one device with Mbps bandwidth as the last stage and achieves throughput of and images per second, respectively. GPipe and PipeDream use devices and performance degrades with and images per second for the ViT-Large and ViT-Huge models in Case 3. In Case 4, EdgePipe selects devices for the ViT-Large model and devices for the ViT-Huge model to achieve throughput of and images per second, respectively. GPipe and PipeDream achieve and for the ViT-Large and ViT-Huge models. In Case 4, EdgePipe shows and speedup compared to PipeDream for the ViT-Large and ViT-Huge models, respectively. These two cases demonstrate the effectiveness of EdgePipe’s partitioning strategy for heterogeneous network.

In Cases 5 and 6, we mix the heterogeneity of devices and networks. In Case 5, we added extremely resource-constrained devices with CPUs at capacity and Mbps bandwidth. EdgePipe achieves the best throughput with , , and images per second using , , and devices for the ViT-Base, ViT-Large, and ViT-Huge models. For the ViT-Base model in Case 5, GPipe’s throughput ranges from to images per second, and PipeDream’s throughput ranges from to images per second. For the ViT-Large and ViT-Huge models in Case 5, GPipe and PipeDream achieve and images per second. In Case 5, EdgePipe shows , , speedup relative to PipeDream’s average throughput for the ViT-Base, ViT-Large, and ViT-Huge models, respectively. Case 6 shows a scenario with types of devices weighted toward devices with medium performance. In this case, EdgePipe uses , , and devices to achieve , , and images per second for these three ViT models. For the ViT-Base model in Case 6, GPipe’s throughput ranges from to , and PipeDream’s throughput ranges from to images per second. GPipe and PipeDream achieve and images per second for the ViT-Large and ViT-Huge models, respectively. EdgePipe achieves speedup of , , and for the ViT-Base, ViT-Large, and ViT-Huge models compared to PipeDream’s average throughput. Cases 5 and 6 demonstrate EdgePipe’s ability to schedule around low-performance devices and map the task reasonably to achieve the best throughput.

EdgePipe performs significantly better than the GPipe and PipeDream partition methods on all six heterogeneous clusters. Unlike GPipe and PipeDream, EdgePipe successfully avoids the lowest-performing devices by considering multiple factors in exploiting pipelining to improve performance.

5.3 Impact of Bandwidth

Edge computing often has more limited bandwidth between devices compared to the data center. To evaluate the relationship between system performance and bandwidth, we vary the bandwidth between all devices from 120 Mbps to 5 Mbps. We test with 4 pipeline stages using the ViT-Base model and 16 pipeline stages using the ViT-Large and ViT-Huge models. As shown in Figure 6, performance does not decrease significantly until bandwidth drops below 30 Mbps for all three ViT models, demonstrating the feasibility of our system for practical edge applications. Upon further reduction in bandwidth from 30 Mbps to 5 Mbps, although the performance shows a linear decline, the throughput still shows some improvements compared to the single-device baseline. For the ViT-Base model with Mbps bandwidth, MinnowBoards and RCC-VE Network Boards show about and speedup compared to the single-device baseline. For the ViT-Large and ViT-Huge models with 5 Mbps, EdgePipe still achieves and speedup compared to the single device baseline on RCC-VE Network Board devices. These results demonstrate that EdgePipe is still effective for large-scale models under limited network conditions.

5.4 Impact of Microbatch Size

As in other pipeline frameworks, performance is affected by microbatch size. We demonstrate the relationship between throughput and microbatch size in Figure 7. For a -stage pipeline, the maximum throughput of the even partitioning method in GPipe is around images per second with a microbatch size . The throughput shows a significant increase before microbatch size , which is mainly due to the continuous increase in CPU utilization as the microbatch size increases. Beyond this size, the throughput begins to slowly decline because larger microbatch sizes reduce the efficiency of the pipeline parallelism. There is a similar pattern for microbatch size and the system throughput for EdgePipe. However, it achieves a significant performance improvement with a maximum throughput of images per second with a microbatch size of . The fine-grained partitioning method in EdgePipe achieves more efficient CPU utilization than even partitioning. Other pipeline stages show similar behavior. To give a fair comparison of throughput, we choose the optimal microbatch and its related performance for all methods in the evaluations.

5.5 EdgePipe with Model Compression

Model compression techniques shrink the model size to reduce compute cost and potentially accelerate inference Han et al. (2015); Hinton et al. (2015) and training Kundu et al. (2020). These approaches can be considered as important complementary strategies to EdgePipe to improve the performance on resource-constrained platforms. For example, compared to the ViT-Base model, DeiT-Tiny and DeiT-Small use distillation to achieve similar ImageNet top-1 accuracy with compressed models of up to and , respectively Touvron et al. (2021). To demonstrate the efficacy of using compressed models with EdgePipe, we evaluate DeiT-Base, Small, and Tiny on up to RCC-VE Network Board devices. We also provide the throughput of the ViT-Base model on same devices for comparison. Figure 8 presents the throughput results.

DeiT-Base, which has identical model structure as ViT-Base, achieves images per second throughput on a single RCC-VE Network Board. With devices, the DeiT-Base model achieves images per second and outperforms ViT-Base’s images per second. DeiT-Small and DeiT-Tiny demonstrate more significant improvements. In particular, DeiT-Small and DeiT-Tiny can provide throughput of up to and images per second, respectively. Interestingly, we observe DeiT-Small and DeiT-Tiny models ease the uneven execution times seen with ViT-Base and demonstrate better scalability with EdgePipe. The model compression technique, as an orthogonal method, can potentially further improve the performance of EdgePipe, making this combined approach a promising solution for large scale model inference at the edge.

6 Related Work

Current techniques to enable the execution of large models on edge devices mainly fall into two categories: single device optimization and distributed inference on multiple devices or servers. Aggressive model compression is an example of single device optimization methods. EdgeBERT Tambe et al. (2020) combines network pruning, entropy-based early exit, and adaptive attention span to reduce the model size and the inference latency of Bidirectional Encoder Representations from Transformers (BERT) for NLP tasks. Lite Transformer Wu et al. (2019) adopts adaptive inference to reduce inference computation cost. Another promising solution includes neural architecture search (NAS), that trains a flexible supernet model to yield various subnets suitable for different targeted hardware platforms Wang et al. (2020). However, most of the above methods are either not suitable for distributed platform or need redesigning and retraining of a pre-trained models and can potentially incur non-negligible drop in accuracy.

Through the assistance of cloud servers or distributed edge devices, the latency and computations for each device can be reduced without sacrificing accuracy. In several works Chen et al. (2021); Kang et al. (2017); Eshratifar et al. (2021), the distributed inference of DNN models on edge devices is partitioned and offloaded to cloud servers to reduce the minimize the latency and computations. Considering the limited bandwidth and uncertain delay between the edge and the cloud, MoDNN Mao et al. (2017) employs a MapReduce-like distributed inference paradigm and only utilizes idle mobile devices to execute CNN models. DeepThings Zhao et al. (2018) proposes a fine-grain partition method for CNN models on edge clusters. In Zhou et al. (2019), the proposed adaptive parallel inference method for CNN models is extend to heterogeneous edge devices.

With the emergence of transformer-based models, the model size continues to increase making distributed execution more important. Megatron-LM Shoeybi et al. (2019) implements operation partitioning for transformer-based models. Pipeline parallelism is proposed to address the problem of communication overheads. GPipe Huang et al. (2019) presents effective pipeline parallelism for training large models on multiple TPU accelerators. PipeDream Narayanan et al. (2019) and its subsequent work Narayanan et al. (2021a) target heterogeneous platforms and adopt pipeline parallelism to accelerate training. PipeMare Yang et al. (2021) proposes a memory-efficient pipeline parallelism without sacrificing utilization. These work target data centers, and are difficult to directly apply to edge computing.

7 Conclusion

In this paper, we presented EdgePipe, a distributed inference acceleration system using pipeline parallelism. Unlike current pipeline parallelism frameworks for model training on cloud servers, EdgePipe focuses on heterogeneous resource-constrained devices. To address the workload balance problem for heterogeneous clusters, we design a dynamic programming-based partition method. We achieve and throughput speedup with 16 devices for the ViT-Large and ViT-Huge models, and demonstrate the ability to accelerate large-scale models on devices without sufficient memory. EdgePipe demonstrates effectiveness and robustness for multiple heterogeneous cases, e.g., we show up to throughput speedup compared to GPipe and PipeDream when using a heterogeneous set of devices. Finally, we demonstrate the efficacy of our proposed scheme on compressed models to show that the throughput benefits of our pipelining approach are complementary to the improvement from compression and that we still achieve speedup by leveraging multiple devices.

References

- Carion et al. [2020] Nicolas Carion, Francisco Massa, Gabriel Synnaeve, Nicolas Usunier, Alexander Kirillov, and Sergey Zagoruyko. End-to-end object detection with transformers. In European Conference on Computer Vision, pages 213–229. Springer, 2020.

- Chen et al. [2021] Xing Chen, Ming Li, Hao Zhong, Yun Ma, and Ching-Hsien Hsu. Dnnoff: Offloading dnn-based intelligent iot applications in mobile edge computing. IEEE Transactions on Industrial Informatics, 2021.

- Cheng and Kunz [2009] Jin Cheng and Thomas Kunz. A survey on smart home networking. Carleton University, Systems and Computer Engineering, Technical Report SCE-09-10, 2009.

- Chin et al. [2020] Ting-Wu Chin, Ruizhou Ding, Cha Zhang, and Diana Marculescu. Towards efficient model compression via learned global ranking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 1518–1528, 2020.

- d’Ascoli et al. [2021] Stéphane d’Ascoli, Hugo Touvron, Matthew Leavitt, Ari Morcos, Giulio Biroli, and Levent Sagun. Convit: Improving vision transformers with soft convolutional inductive biases. arXiv preprint arXiv:2103.10697, 2021.

- Deng et al. [2009] Jia Deng, Wei Dong, Richard Socher, Li-Jia Li, Kai Li, and Li Fei-Fei. Imagenet: A large-scale hierarchical image database. In 2009 IEEE conference on computer vision and pattern recognition, pages 248–255. Ieee, 2009.

- Dosovitskiy et al. [2020] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929, 2020.

- Eshratifar et al. [2021] Amir Erfan Eshratifar, Mohammad Saeed Abrishami, and Massoud Pedram. Jointdnn: An efficient training and inference engine for intelligent mobile cloud computing services. IEEE Transactions on Mobile Computing, 20(2):565–576, 2021.

- Goodfellow et al. [2019] Ryan Goodfellow, Stephen Schwab, Erik Kline, Lincoln Thurlow, and Geoff Lawler. The dcomp testbed. In 12th USENIX Workshop on Cyber Security Experimentation and Test (CSET 19), Santa Clara, CA, August 2019. USENIX Association.

- Han et al. [2015] Song Han, Huizi Mao, and William J Dally. Deep compression: Compressing deep neural networks with pruning, trained quantization and huffman coding. arXiv preprint arXiv:1510.00149, 2015.

- He et al. [2021] Chaoyang He, Shen Li, Mahdi Soltanolkotabi, and Salman Avestimehr. Pipetransformer: Automated elastic pipelining for distributed training of transformers. arXiv preprint arXiv:2102.03161, 2021.

- Hinton et al. [2015] Geoffrey Hinton, Oriol Vinyals, and Jeff Dean. Distilling the knowledge in a neural network. arXiv preprint arXiv:1503.02531, 2015.

- Huang et al. [2019] Yanping Huang, Youlong Cheng, Ankur Bapna, Orhan Firat, Dehao Chen, Mia Xu Chen, HyoukJoong Lee, Jiquan Ngiam, Quoc V Le, Yonghui Wu, et al. Gpipe: Efficient training of giant neural networks using pipeline parallelism. In NeurIPS, 2019.

- Isyanto et al. [2020] Haris Isyanto, Ajib Setyo Arifin, and Muhammad Suryanegara. Design and implementation of iot-based smart home voice commands for disabled people using google assistant. In 2020 International Conference on Smart Technology and Applications (ICoSTA), pages 1–6. IEEE, 2020.

- Kang et al. [2017] Yiping Kang, Johann Hauswald, Cao Gao, Austin Rovinski, Trevor Mudge, Jason Mars, and Lingjia Tang. Neurosurgeon: Collaborative intelligence between the cloud and mobile edge. SIGARCH Comput. Archit. News, 45(1):615–629, April 2017.

- Kim et al. [2020] Chiheon Kim, Heungsub Lee, Myungryong Jeong, Woonhyuk Baek, Boogeon Yoon, Ildoo Kim, Sungbin Lim, and Sungwoong Kim. torchgpipe: On-the-fly pipeline parallelism for training giant models. CoRR, abs/2004.09910, 2020.

- Krizhevsky and others [2012] Alex Krizhevsky et al. ImageNet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems, pages 1097–1105, 2012.

- Kundu and Sundaresan [2021] Souvik Kundu and Sairam Sundaresan. Attentionlite: Towards efficient self-attention models for vision. In ICASSP 2021-2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pages 2225–2229. IEEE, 2021.

- Kundu et al. [2020] Souvik Kundu, Mahdi Nazemi, Massoud Pedram, Keith M Chugg, and Peter A Beerel. Pre-defined sparsity for low-complexity convolutional neural networks. IEEE Transactions on Computers, 69(7):1045–1058, 2020.

- Kundu et al. [2021] Souvik Kundu, Mahdi Nazemi, Peter A Beerel, and Massoud Pedram. Dnr: A tunable robust pruning framework through dynamic network rewiring of dnns. In Proceedings of the 26th Asia and South Pacific Design Automation Conference, pages 344–350, 2021.

- Li et al. [2021] Zhuohan Li, Siyuan Zhuang, Shiyuan Guo, Danyang Zhuo, Hao Zhang, Dawn Song, and Ion Stoica. Terapipe: Token-level pipeline parallelism for training large-scale language models. arXiv preprint arXiv:2102.07988, 2021.

- Liu et al. [2021] Lei Liu, Chen Chen, Qingqi Pei, Sabita Maharjan, and Yan Zhang. Vehicular edge computing and networking: A survey. Mobile Networks and Applications, 26(3):1145–1168, 2021.

- Mao et al. [2017] Jiachen Mao, Xiang Chen, Kent W Nixon, Christopher Krieger, and Yiran Chen. Modnn: Local distributed mobile computing system for deep neural network. In Design, Automation & Test in Europe Conference & Exhibition (DATE), 2017, pages 1396–1401. IEEE, 2017.

- Narayanan et al. [2019] Deepak Narayanan, Aaron Harlap, Amar Phanishayee, Vivek Seshadri, Nikhil Devanur, Greg Granger, Phil Gibbons, and Matei Zaharia. Pipedream: Generalized pipeline parallelism for dnn training. In ACM Symposium on Operating Systems Principles (SOSP 2019), October 2019.

- Narayanan et al. [2021a] Deepak Narayanan, Amar Phanishayee, Kaiyu Shi, Xie Chen, and Matei Zaharia. Memory-efficient pipeline-parallel dnn training. In 2021 International Conference on Machine Learning (ICML 2021), July 2021.

- Narayanan et al. [2021b] Deepak Narayanan, Mohammad Shoeybi, Jared Casper, Patrick LeGresley, Mostofa Patwary, Vijay Anand Korthikanti, Dmitri Vainbrand, Prethvi Kashinkunti, Julie Bernauer, Bryan Catanzaro, Amar Phanishayee, and Matei Zaharia. Efficient large-scale language model training on gpu clusters, 2021.

- Park et al. [2020a] Jay H Park, Gyeongchan Yun, M Yi Chang, Nguyen T Nguyen, Seungmin Lee, Jaesik Choi, Sam H Noh, and Young-ri Choi. Hetpipe: Enabling large DNN training on (whimpy) heterogeneous GPU clusters through integration of pipelined model parallelism and data parallelism. In 2020 USENIX Annual Technical Conference (USENIXATC 20), pages 307–321, 2020.

- Park et al. [2020b] Junki Park, Hyunsung Yoon, Daehyun Ahn, Jungwook Choi, and Jae-Joon Kim. Optimus: Optimized matrix multiplication structure for transformer neural network accelerator. Proceedings of Machine Learning and Systems, 2:363–378, 2020.

- Paszke et al. [2019] Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, Alban Desmaison, Andreas Kopf, Edward Yang, Zachary DeVito, Martin Raison, Alykhan Tejani, Sasank Chilamkurthy, Benoit Steiner, Lu Fang, Junjie Bai, and Soumith Chintala. Pytorch: An imperative style, high-performance deep learning library. In Advances in Neural Information Processing Systems 32, pages 8024–8035. 2019.

- Premsankar et al. [2018] Gopika Premsankar, Mario Di Francesco, and Tarik Taleb. Edge computing for the internet of things: A case study. IEEE Internet of Things Journal, 5(2):1275–1284, 2018.

- Redmon and Farhadi [2017] Joseph Redmon and Ali Farhadi. Yolo9000: better, faster, stronger. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 7263–7271, 2017.

- Satyanarayanan [2017] Mahadev Satyanarayanan. The emergence of edge computing. Computer, 50(1):30–39, 2017.

- Sharma et al. [2021] Himanshu Sharma, Ahteshamul Haque, and Frede Blaabjerg. Machine learning in wireless sensor networks for smart cities: A survey. Electronics, 10(9), 2021.

- Shoeybi et al. [2019] Mohammad Shoeybi, Mostofa Patwary, Raul Puri, Patrick LeGresley, Jared Casper, and Bryan Catanzaro. Megatron-lm: Training multi-billion parameter language models using model parallelism. CoRR, abs/1909.08053, 2019.

- Su et al. [2019] Hang Su, Varun Jampani, Deqing Sun, Orazio Gallo, Erik Learned-Miller, and Jan Kautz. Pixel-adaptive convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 11166–11175, 2019.

- Tambe et al. [2020] Thierry Tambe, Coleman Hooper, Lillian Pentecost, Tianyu Jia, En-Yu Yang, Marco Donato, Victor Sanh, Paul Whatmough, Alexander M Rush, David Brooks, et al. Edgebert: Sentence-level energy optimizations for latency-aware multi-task nlp inference. arXiv preprint arXiv:2011.14203, 2020.

- Tao et al. [2018] Hu Tao, Weihua Li, Xianxiang Qin, and Dan Jia. Image semantic segmentation based on convolutional neural network and conditional random field. In 2018 Tenth International Conference on Advanced Computational Intelligence (ICACI), pages 568–572. IEEE, 2018.

- Touvron et al. [2021] Hugo Touvron, Matthieu Cord, Matthijs Douze, Francisco Massa, Alexandre Sablayrolles, and Hervé Jégou. Training data-efficient image transformers & distillation through attention. In International Conference on Machine Learning, pages 10347–10357. PMLR, 2021.

- Vaswani et al. [2017] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Lukasz Kaiser, and Illia Polosukhin. Attention is all you need. arXiv preprint arXiv:1706.03762, 2017.

- Wang et al. [2020] Hanrui Wang, Zhanghao Wu, Zhijian Liu, Han Cai, Ligeng Zhu, Chuang Gan, and Song Han. Hat: Hardware-aware transformers for efficient natural language processing. In Annual Conference of the Association for Computational Linguistics, 2020.

- Wolf et al. [2020] Thomas Wolf, Lysandre Debut, Victor Sanh, Julien Chaumond, Clement Delangue, Anthony Moi, Pierric Cistac, Tim Rault, Rémi Louf, Morgan Funtowicz, Joe Davison, Sam Shleifer, Patrick von Platen, Clara Ma, Yacine Jernite, Julien Plu, Canwen Xu, Teven Le Scao, Sylvain Gugger, Mariama Drame, Quentin Lhoest, and Alexander M. Rush. Transformers: State-of-the-art natural language processing. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, pages 38–45, Online, October 2020.

- Wu et al. [2019] Zhanghao Wu, Zhijian Liu, Ji Lin, Yujun Lin, and Song Han. Lite transformer with long-short range attention. In International Conference on Learning Representations, 2019.

- Yang et al. [2021] Bowen Yang, Jian Zhang, Jonathan Li, Christopher Re, Christopher Aberger, and Christopher De Sa. Pipemare: Asynchronous pipeline parallel dnn training. In A. Smola, A. Dimakis, and I. Stoica, editors, Proceedings of Machine Learning and Systems, volume 3, pages 269–296, 2021.

- Yao et al. [2021] Zhewei Yao, Zhen Dong, Zhangcheng Zheng, Amir Gholami, Jiali Yu, Eric Tan, Leyuan Wang, Qijing Huang, Yida Wang, Michael Mahoney, et al. Hawq-v3: Dyadic neural network quantization. In International Conference on Machine Learning, pages 11875–11886. PMLR, 2021.

- Yuan et al. [2021] Li Yuan, Yunpeng Chen, Tao Wang, Weihao Yu, Yujun Shi, Zihang Jiang, Francis EH Tay, Jiashi Feng, and Shuicheng Yan. Tokens-to-token vit: Training vision transformers from scratch on imagenet. arXiv preprint arXiv:2101.11986, 2021.

- Zhang et al. [2018] Tianyun Zhang, Shaokai Ye, Kaiqi Zhang, Jian Tang, Wujie Wen, Makan Fardad, and Yanzhi Wang. A systematic dnn weight pruning framework using alternating direction method of multipliers. In Proceedings of the European Conference on Computer Vision (ECCV), pages 184–199, 2018.

- Zhao et al. [2018] Zhuoran Zhao, Kamyar Mirzazad Barijough, and Andreas Gerstlauer. Deepthings: Distributed adaptive deep learning inference on resource-constrained iot edge clusters. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, 37(11):2348–2359, 2018.

- Zhou et al. [2019] Li Zhou, Mohammad Hossein Samavatian, Anys Bacha, Saikat Majumdar, and Radu Teodorescu. Adaptive parallel execution of deep neural networks on heterogeneous edge devices. New York, NY, USA, 2019. Association for Computing Machinery.