PillarGrid: Deep Learning-based Cooperative Perception for 3D Object Detection from Onboard-Roadside LiDAR

Abstract

3D object detection plays a fundamental role in enabling automated driving, which is regarded as the significant leap forward for contemporary transportation systems from the perspectives of safety, mobility, and sustainability. Most of the state-of-the-art object detection methods from point clouds are developed based on a single onboard LiDAR, whose performance will be inevitably limited by the range and occlusion, especially in dense traffic scenarios. In this paper, we propose PillarGrid, a novel cooperative perception method fusing information from multiple 3D LiDARs (both on-board and roadside), to enhance the situation awareness for connected and automated vehicles (CAVs). PillarGrid consists of four main components: 1) cooperative preprocessing of point clouds, 2) pillar-wise voxelization and feature extraction, 3) grid-wise deep fusion of features from multiple sensors, and 4) convolutional neural network (CNN)-based augmented 3D object detection. A novel cooperative perception platform is developed for model training and testing. Extensive experimentation shows that PillarGrid outperforms other single-LiDAR-based 3D object detection methods concerning both accuracy and range by a large margin.

Index Terms:

Cooperative Perception, 3D Object Detection, Onboard-Roadside LiDAR, Deep Fusion, Connected and Automated Vehicles.I Introduction

The rapid progress of intelligent transportation systems (ITS) has greatly promoted the efficiency of daily commuting and goods movement. However, drastically increased number of vehicles has resulted in several major problems in terms of safety, mobility, and sustainability. Taking advantage of advanced perception, wireless communication, and precise control, Cooperative Driving Automation (CDA) has the promise to be the revolutionary solution to the aforementioned challenges [1].

Deploying CDA in urban environments poses a series of technological challenges. Among others, object detection is arguably the most significant since it lays the perception foundation for other subsequent tasks in CDA [2]. To achieve this, high-fidelity sensors such as cameras and LiDARs are implemented to collect data from the surrounding environment. In particular, LiDAR is widely utilized in 3D object detection tasks due to its capability of returning the 3D distance and intensity information with high accuracy, which is crucial in CDA applications [3].

Traditionally, a LiDAR-based object detection pipeline is mainly developed for those 3D LiDARs installed on the top of the equipped vehicle and relies on an onboard computing system to generate the 3D object detection results in real-time [4]. Following the tremendous success in the application of deep learning methods for computer vision, a large body of literature has explored various algorithms to improve the 3D object detection performance based on point clouds from on-board LiDARs [5, 6, 7]. Meanwhile, many onboard sensor-based datasets have been collected and labeled to serve as the foundation for these data-driven methods [8, 9]. However, due to the spatial constraints in the mounting of onboard sensors, their performance is largely limited by the range and occlusion, especially in a dense traffic environment [10]. As a complement, infrastructure-based perception systems have the potential to achieve better object perception results due to fewer occlusion effects and more flexibility in terms of the mounting height and pose.

To further unlock the bottleneck of object detection caused by sensing range and occlusion, Cooperative Perception (CP) is regarded as a promising solution by fusing information from spatially separated sensors [11, 12]. In terms of the stage when the information is fused, CP can be divided into three categories: 1) early-fusion-based CP (E-CP) which directly runs the perception pipeline on the integrated raw data from multiple sensors; 2) deep-fusion-based CP (D-CP) whose fusion process happens within the perception pipeline, i.e., feature-level fusion; and 3) late-fusion-based CP (L-CP) which merges the perception results generated from different pipelines running on respective sensors. E-CP methods need to transmit the raw sensor data which is feasible for sensors with low data volume, e.g., loop detectors and radars. However, it is difficult to apply E-CP methods to LiDAR-based systems due to the limitation of communication bandwidth. Although L-CP methods require much fewer communication capacities, the late-fusion scheme is arguably limited for tapping the potential of cooperative perception to improve detection performance [11].

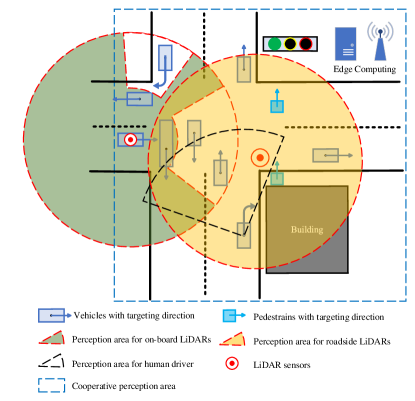

In this paper, we propose PillarGrid, a novel CP approach that can be applied to both connected and automated vehicles (CAVs) and the roadside infrastructures in terms of LiDAR sensors. Fig. 1 depicts the core idea of CP applied in this study, considering both onboard and roadside LiDARs. To be specific, a CAV equipped with an onboard LiDAR can perceive the surrounding environment by itself, covering the area shown in Fig 1. However, due to the occlusion by other vehicles (or buildings), some road users cannot be detected. For connected human-driven vehicles (CHVs), because the pedestrian is blocked by the building, the human driver may not be able to see him/her and take reactions in time, resulting in a risky situation. A roadside LiDAR is usually less impacted by the occlusion, but it still suffers from limited range and/or perception performance degradation due to the decreased resolution with the increase of sensing distance.

To the best of the authors’ knowledge, this paper is the first deep-fusion-based cooperative perception approach that combines point cloud data from an onboard LiDAR and a roadside LiDAR. In this paper, PillarGrid, a novel CP method is proposed to generate 3D object detection based on feature-level cooperation from both onboard and roadside LiDARs. Specifically, Grid-wise Feature Fusion (GFF), a novel deep-fusion method is proposed and a deep neural network is designed to generate the oriented 3D bounding boxes for vehicles and pedestrians. For model training and evaluation, a cooperative perception platform is developed for scenarios design and data acquisition.

II Related Work

II-A Object Detection in LiDAR Point Clouds

With the tremendous progress achieved by a convolutional neural network (CNN) in image-based computer vision tasks, CNN is also widely applied to object detection based on point clouds [13]. Since LiDAR-based object detection is an intrinsically three-dimensional task, early work [14, 15] tends to deploy 3D convolutional layers. Although 3D convolutional layers work well for extracting hidden features from 3D point clouds data, they require much higher computational power compared with 2D convolution, resulting in longer processing time. For instance, as a fast object detection model, Vote3Deep proposed by Engelcke et al. [14] utilized sparse convolutional layers to deal with 3D point clouds and required about to process a single frame of point clouds data, i.e., inference frequency. Comparatively, the fast 2D object detection models can run at a frequency over [16, 17].

To speed up the 3D object detection, one popular paradigm is to encode the 3D point clouds into a 2D birds-eye-view (BEV) pseudo-image which can be processed by a standard image detection architecture, such as ComplexYolo [18] and PointPillars [5]. By discretizing point clouds into 3D voxels and then encoding them to a pseudo-image format, these object detectors can run the inference pipeline at a similar frequency to camera-based 2D detectors [18, 5, 16, 17].

II-B Roadside-LiDAR-based Object Detection

Although general object detection has gone through a rapid development era, research focusing on roadside sensors is still an emerging topic and has the potential to break the ground for cooperative automated driving, especially in a mixed traffic environment [19]. Due to the lack of labeled datasets, most of the roadside-LiDAR-based object detectors are still following a traditional paradigm to deal with point clouds, which consists of 1) Background Filtering to remove the laser points reflected from the road surface or buildings by applying filtering methods, such as statistics-based background filtering [20]; 2) Clustering to generate clusters for the laser points by implementing clustering methods, such as Density-Based Spatial Clustering Applications with Noise (DBSCAN) [21]; and 3) Classification to generate different labels for different traffic objects, such as vehicles and pedestrians, based on neural networks [22]. This paradigm is straightforward and popular for implementation in the real world [23], but has a large performance gap compared with SOTA learning-based detectors in terms of accuracy and real-time performance [13]. Although some of the learning-based object detectors trained with on-board datasets can be implemented directly in roadside sensors without further training [24, 25], the system performance is still inevitably limited by the lack of training data.

II-C Cooperative Perception for Object Detection

Considering the existence of the physical occlusion, it is extremely difficult to further unlock the bottleneck for the detection performance of a single-sensor-based perception system. Perceiving the environment from spatially separated sensors is increasingly becoming a promising solution to address the issues mentioned above [10].

Sensor fusion plays the most significant role in a CP system. In terms of the real-world communication bandwidth, the early-fusion scheme requires high-volume data transmission which is more likely to be satisfied in an infrastructure-based CP system, while late fusion suffers from the detection accuracy [11]. Recently, the deep-fusion scheme is an emerging way to support cooperative object perception by sharing the internal hidden features, which requires less communication power but contains more information than the detection results [12].

III PillarGrid Network

III-A Cooperative Preprocessing

In this paper, the sensors are assumed to be equipped onboard and at the roadside, respectively. Since the two LiDAR sensors are spatially separated, preprocessing has to be done cooperatively in terms of the calibration matrix of these sensors.

III-A1 Cooperative Transformation

In this paper, the sensor data are collected from a customized CP platform which is introduced later in section IV-B. It is to be noted that the calibration matrices for both sensors are designed as the 3D location and rotation information, named Sensor Location and Pose (SLaP). Since the point clouds data (PCD) are collected based on a 3D Cartesian coordinate centered with the SLaP of the sensor, cooperative transformation is designed to unify the PCD from different sensors, which is demonstrated in Fig 2. The raw point cloud data can be described by:

| (1) |

The SLaP information is defined by a six-dimensional vector:

| (2) |

where , , , , , and represent the 3D location along axis, axis, axis and the pitch, yaw, and roll angles of the sensors in the global coordinate, respectively.

The process to transform the PCD from sensors’ coordinates to the global coordinate is designed by:

| (3) |

| (4) |

| (5) |

| (6) |

| (7) |

where , , , and represent the rotation matrix along axis, axis, axis, and the translation matrix, respectively. and represent the PCD with respect to the sensor’s coordinate and the global coordinate, respectively.

III-A2 Cooperative Geo-Fencing

After the cooperative transformation, geo-fencing process is applied to PCD. In this paper, the detected region for each of the LiDAR sensors is defined as a area centered at the location of the respective LiDAR. Specifically, is geo-fenced by:

| (8) |

where represents the 3D point cloud data after geo-fencing; and and are set as for both LiDARs. is set as for the roadside LiDAR and for the onboard LiDAR.

III-B Cooperative Feature Extraction

III-B1 Pillar-wise Voxelization

III-B2 Pillar Feature Extraction

To extract the feature simultaneously from two LiDAR sensors, we design a multi-stream neural network as shown in Fig. 2. For each stream, there are two steps to further solve the PCD. The first step is geometrical feature extraction, which generates a dimensional feature vector for every points in pillars, defined by:

| (9) |

where , and represent the distance of each point with respect to the arithmetic center of all points in the th pillar (the subscript) and the geometrical center of the pillar (the subscript), the number of points in the pillar and the number of pillars, respectively.

Then, to extract the hidden features of , a fully connected layer followed by batch normalization is applied. To be specific, the size of is and the size of the output tensor is , where is the hidden feature channel and set as in this paper. Due to the limited communication bandwidth, a data compression method is implemented by selecting the maximum number of features along every channel to synthesize a feature with the size of , i.e., to compress points into a -dimensional feature to be transmitted to the edge computer for sensor fusion.

III-C Grid-wise Feature Fusion

Grid-wise Feature Fusion (GFF) is one of the most important stages in PillarGrid, which fuses multi-sensor data based on a mechanism with high expandability. Although only two sensors are deployed in this paper to illustrate our method, GFF can fuse features generated from more than two sensors.

Fig. 3 depicts the core process in GFF. The whole process of GFF can be divided into three stages. First, map pillar features with the size of into a grid format with the size of according to the pillar index generated in the voxelization stage. Specifically, and are two dimensions of the grid, which are related to the size of voxels and PCD geo-fencing range .

After transforming the feature data into the grid format, each grid contains the hidden features representing the PCD at the specific spatial location. The grid feature from each sensor is then augmented by an additional dimension to enable grid-on-grid fusion by unsqueezing from the size of to . Then concatenation process is implemented to all the features in terms of the last dimension, i.e., generating the output size of where is the number of fused sensors, i.e., in this paper.

As demonstrated in Fig. 3, a max operation layer is applied to fuse features over different channels. A 3D max-pooling is implemented to generate the output with the size of , followed by a squeeze operation to transform the size to which is compatible with 2D CNN backbones.

III-D CNN Backbone

The backbone consists of two sub-networks: 1) one 2D convolutional (Conv2D) layer-based network that generates the extracted features with increasingly small spatial resolution; and 2) one deconvolutional (DeConv2D) layer-based network that generates output features by performing upsampling and concatenation. Each Conv2D block consists of one Conv2D layer with the kernel of , followed by several Conv2D layers with kernels of . Specifically, the numbers of Conv2D layers in each block are , , and , respectively.

III-E 3D Detection Head

An anchor-based 3D detection head [5] is applied to generate 3D bounding boxes. Intersection over Union (IoU) is implemented to match the prior boxes to the ground truth. In this paper, since only vehicles and pedestrians are detected, the number of classes is set to be .

IV Experimental Details

IV-A Loss Function

Ground truth boxes and anchors are defined by a 7-dimensional vector where , , , and represent the width, length, height, and yaw angle, respectively. The loss function is defined as:

| (10) |

| (11) |

| (12) |

where the superscript and represent the ground truth and anchor, respectively; and is defined by:

| (13) |

The total localization loss is:

| (14) |

The object classification loss is defined as:

| (15) |

where is the class probability of an anchor; and and are set to be and , respectively. Hence, the total loss is:

| (16) |

where is the number of positive anchors; and , and are set as , , and , respectively.

IV-B Dataset Acquisition

Since so far, no dataset is available for supporting cooperative perception (CP) between one onboard LiDAR and one roadside LiDAR, a CP platform is developed to collect sensor data and ground truth labels based on the CARLA simulator [26]. In addition, to ease the re-usability and accessibility, we organize the new dataset to be compatible with existing open-source training platforms (e.g., OpenMMLab [27]), by following the KITTI’s format [8]. However, the KITTI’s settings and CARLA have different coordinate systems, as shown in Fig. 5. Therefore, a transformation is designed to generate the ground truth labels in the KITTI coordinate.

This dataset (named “CARTI”) consists of data from two LiDAR sensors, one is equipped at the roadside (i.e., on a traffic signal pole of an intersection) with the height of , while the other LiDAR is mounted on the top a vehicle with the height of . The simulation is running in a synchronization mode at Hz. Data is collected at Hz, and totally frames of 3D point clouds are available, including for training, for evaluation, and for testing. Fig. 6 shows the visualized 3D point clouds and ground truth labels in one data frame.

V Results and Analysis

The training and testing platform is equipped with Intel Core™ i7-10700K [email protected]×16 and NVIDIA GPU@GeForce RTX 3090. The training pipeline is designed with 160 epochs with Batchsize of 10. Two single-sensor-based object detection models based on PointPillar [5], a SOTA object detection method, are applied to demonstrate the performance of our method.

V-A Quantitative Analysis

Two benchmarks are applied in terms of object detection performance: bird’s-eye view (BEV) and 3D perspective. Average precision (AP) and average recall (AR) are measured for each class under the IoU of 0.50 for cars and 0.25 for pedestrians. Mean average precision (mAP) is calculated across all the classes to illustrate the overall performance of the model.

As shown in Table I and Table II, PillarGrid outperforms all baselines in terms of mAP for both BEV and 3D benchmarks. Specifically, for BEV detection, PillarGrid outperforms 44.96% for onboard detection mAP and 23.72% for roadside detection mAP. For 3D detection, PillarGrid outperforms 90.52% for onboard detection mAP and 25.39% for roadside detection mAP.

For vehicle detection, in both benchmarks, PillarGrid surpasses all the baselines with a large margin in terms of both precision and recall. The results also validate the feasibility of the core idea of the cooperative perception shown in Fig. 1, which represents that PillarGrid can take advantage of both onboard and roadside point clouds. For pedestrian detection, PillarGrid outperforms the baselines in terms of the AP but has similar AR performance to roadside detection. According to the Pedestrian AR from onboard detection (lower than 1%), one hypothesis is that current voxel settings are too large () to generate effective pillar features for pedestrians (with the size of ), which may cause the slight degradation of the fused features.

| Method | Modality | mAP | Car | Pedestrian | ||

| AP50 | AR50 | AP25 | AR25 | |||

| PointPillar | Onboard Det. | 35.96 | 63.63 | 41.19 | 9.09 | 0.26 |

| PointPillar | Roadside Det. | 42.03 | 80.67 | 64.74 | 28.25 | 13.16 |

| PillarGrid | Cooperative Det. | 52.00 | 90.91 | 85.33 | 30.68 | 12.70 |

| Method | Modality | mAP | Car | Pedestrian | ||

| AP50 | AR50 | AP25 | AR25 | |||

| PointPillar | Onboard Det. | 27.11 | 63.61 | 40.97 | 9.09 | 0.25 |

| PointPillar | Roadside Det. | 41.19 | 80.63 | 64.48 | 26.89 | 12.42 |

| PillarGrid | Cooperative Det. | 51.65 | 90.91 | 85.19 | 30.01 | 12.23 |

V-B Qualitative Analysis

To further illustrate the performance of PillarGrid, we provide qualitative analysis results, as shown in Fig. 7. The left, middle and right subfigures depict the detection results for roadside baseline, onboard baseline, and PillarGrid, respectively, where wrongly detected results are highlighted.

From the perspective of precision, both roadside detection and onboard detection have one wrong detection result marked as False Positive (FP). In the right subfigure of Fig. 7, i.e., results from PillarGrid, the Grid-wise Feature Fusion process can successfully merge the feature-level information from two spatially separated LiDARs to improve the detection precision (e.g., detection marked as fused feature).

To depict the performance of PillarGrid in terms of recall, we focus on the roadside LiDAR point cloud data in the right subfigure of Fig. 7. Due to the physical occlusion and sensing range, two vehicles located at the lower-left corner cannot be detected by the roadside LiDAR (due to the building blockage) and another two vehicles on the top of the subfigure cannot be detected by the onboard LiDAR. However, PillarGrid can detect all of these occluded or out-of-range vehicles by fusing the features from both onboard and roadside sensors, which further demonstrates the capability of our deep fusion-based cooperative perception method.

VI Conclusions and Future Work

In this paper, we proposed PillarGrid, a feature-level cooperative 3D object detection method based on point cloud data (PCD). To the best of our knowledge, this is the first deep learning-based cooperative perception approach that can be applied to PCD from both onboard and roadside LiDARs. The cooperative data preprocess method is designed for point cloud data transformation and geo-fencing. A two-stream neural network is designed to extract and compress hidden features for data transmission. Grid-wise Feature Fusion (GFF), a novel deep-fusion method, is proposed to fuse deep features. To evaluate the proposed method, we develop a CARLA-based cooperative perception platform and generate a cooperative perception dataset named CARTI. The results demonstrate that the PillarGrid can improve the performance of 3D object detection by 90.52% and 25.39% compared with SOTA detection methods based on point clouds only from an onboard LiDAR or a roadside LiDAR, respectively.

To explore the horizon of PillarGrid in the future, early fusion and late fusion schemes can be integrated to form a hybrid fusion-based perception system to further improve the perception range. Different network configuration can be applied to improve the detection results for pedestrian and other active transportation modes. Furthermore, behavioral analysis can be conducted based on the object-level detection results to support subsequent CDA applications.

Acknowledgments

This research was funded by the Toyota Motor North America InfoTech Labs. The contents of this paper reflect the views of the authors, who are responsible for the facts and the accuracy of the data presented herein. The contents do not necessarily reflect the official views of Toyota Motor North America.

References

- [1] D. J. Fagnant and K. Kockelman, “Preparing a nation for autonomous vehicles: opportunities, barriers and policy recommendations,” Transportation Research Part A: Policy and Practice, vol. 77, pp. 167–181, 2015.

- [2] SAE, “Taxonomy and definitions for terms related to cooperative driving automation for on-road motor vehicles j3216_202005,” Available: https://www.sae.org/standards/content/j3216_202005/, 2021.

- [3] E. Arnold, O. Y. Al-Jarrah, M. Dianati, S. Fallah, D. Oxtoby, and A. Mouzakitis, “A survey on 3d object detection methods for autonomous driving applications,” IEEE Transactions on Intelligent Transportation Systems, vol. 20, no. 10, pp. 3782–3795, 2019.

- [4] G. Zamanakos, L. Tsochatzidis, A. Amanatiadis, and I. Pratikakis, “A comprehensive survey of lidar-based 3d object detection methods with deep learning for autonomous driving,” Computers & Graphics, vol. 99, pp. 153–181, 2021.

- [5] A. H. Lang, S. Vora, H. Caesar, L. Zhou, J. Yang, and O. Beijbom, “Pointpillars: Fast encoders for object detection from point clouds,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 12 697–12 705.

- [6] Y. Zhou and O. Tuzel, “Voxelnet: End-to-end learning for point cloud based 3d object detection,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 4490–4499.

- [7] T. Yin, X. Zhou, and P. Krahenbuhl, “Center-based 3d object detection and tracking,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2021, pp. 11 784–11 793.

- [8] A. Geiger, P. Lenz, and R. Urtasun, “Are we ready for autonomous driving? the kitti vision benchmark suite,” in Conference on Computer Vision and Pattern Recognition (CVPR), 2012.

- [9] H. Caesar, V. Bankiti, A. H. Lang, S. Vora, V. E. Liong, Q. Xu, A. Krishnan, Y. Pan, G. Baldan, and O. Beijbom, “nuscenes: A multimodal dataset for autonomous driving,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 11 621–11 631.

- [10] Z. Bai, G. Wu, X. Qi, Y. Liu, K. Oguchi, and M. J. Barth, “Infrastructure-based object detection and tracking for cooperative driving automation: A survey,” arXiv preprint arXiv:2201.11871, 2022.

- [11] E. Arnold, M. Dianati, R. de Temple, and S. Fallah, “Cooperative perception for 3d object detection in driving scenarios using infrastructure sensors,” IEEE Transactions on Intelligent Transportation Systems, 2020.

- [12] E. E. Marvasti, A. Raftari, A. E. Marvasti, Y. P. Fallah, R. Guo, and H. Lu, “Cooperative lidar object detection via feature sharing in deep networks,” in 2020 IEEE 92nd Vehicular Technology Conference (VTC2020-Fall). IEEE, 2020, pp. 1–7.

- [13] Z. Zou, Z. Shi, Y. Guo, and J. Ye, “Object detection in 20 years: A survey,” arXiv preprint arXiv:1905.05055, 2019.

- [14] M. Engelcke, D. Rao, D. Z. Wang, C. H. Tong, and I. Posner, “Vote3deep: Fast object detection in 3d point clouds using efficient convolutional neural networks,” in 2017 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2017, pp. 1355–1361.

- [15] B. Li, “3d fully convolutional network for vehicle detection in point cloud,” in 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2017, pp. 1513–1518.

- [16] J. Redmon, S. Divvala, R. Girshick, and A. Farhadi, “You only look once: Unified, real-time object detection,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 779–788.

- [17] W. Liu, D. Anguelov, D. Erhan, C. Szegedy, S. Reed, C.-Y. Fu, and A. C. Berg, “Ssd: Single shot multibox detector,” in European conference on computer vision. Springer, 2016, pp. 21–37.

- [18] M. Simony, S. Milzy, K. Amendey, and H.-M. Gross, “Complex-yolo: An euler-region-proposal for real-time 3d object detection on point clouds,” in Proceedings of the European Conference on Computer Vision (ECCV) Workshops, 2018, pp. 0–0.

- [19] A. Gupta, A. Anpalagan, L. Guan, and A. S. Khwaja, “Deep learning for object detection and scene perception in self-driving cars: Survey, challenges, and open issues,” Array, p. 100057, 2021.

- [20] J. Wu, H. Xu, and J. Zheng, “Automatic background filtering and lane identification with roadside lidar data,” in 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC). IEEE, 2017, pp. 1–6.

- [21] M. Ester, H.-P. Kriegel, J. Sander, X. Xu et al., “A density-based algorithm for discovering clusters in large spatial databases with noise.” in kdd, vol. 96, no. 34, 1996, pp. 226–231.

- [22] J. Li, J.-h. Cheng, J.-y. Shi, and F. Huang, “Brief introduction of back propagation (bp) neural network algorithm and its improvement,” in Advances in computer science and information engineering. Springer, 2012, pp. 553–558.

- [23] J. Zhao, H. Xu, H. Liu, J. Wu, Y. Zheng, and D. Wu, “Detection and tracking of pedestrians and vehicles using roadside lidar sensors,” Transportation research part C: emerging technologies, vol. 100, pp. 68–87, 2019.

- [24] Z. Bai, S. P. Nayak, X. Zhao, G. Wu, M. J. Barth, X. Qi, Y. Liu, and K. Oguchi, “Cyber mobility mirror: Deep learning-based real-time 3d object perception and reconstruction using roadside lidar,” arXiv preprint arXiv:2202.13505, 2022.

- [25] Z. Bai, G. Wu, X. Qi, K. Oguchi, and M. J. Barth, “Cyber mobility mirror for enabling cooperative driving automation: A co-simulation platform,” arXiv preprint arXiv:2201.09463, 2022.

- [26] A. Dosovitskiy, G. Ros, F. Codevilla, A. Lopez, and V. Koltun, “Carla: An open urban driving simulator,” in Conference on robot learning. PMLR, 2017, pp. 1–16.

- [27] M. Contributors, “MMDetection3D: OpenMMLab next-generation platform for general 3D object detection,” https://github.com/open-mmlab/mmdetection3d, 2020.