Phrase Decoupling Cross-Modal Hierarchical Matching and Progressive Position Correction for Visual Grounding

Abstract

Visual grounding has attracted wide attention thanks to its broad application in various visual language tasks. Although visual grounding has made significant research progress, existing methods ignore the promotion effect of the association between text and image features at different hierarchies on cross-modal matching. This paper proposes a Phrase Decoupling Cross-Modal Hierarchical Matching and Progressive Position Correction Visual Grounding method. It first generates a mask through decoupled sentence phrases, and a text and image hierarchical matching mechanism is constructed, highlighting the role of association between different hierarchies in cross-modal matching. In addition, a corresponding target object position progressive correction strategy is defined based on the hierarchical matching mechanism to achieve accurate positioning for the target object described in the text. This method can continuously optimize and adjust the bounding box position of the target object as the certainty of the text description of the target object improves. This design explores the association between features at different hierarchies and highlights the role of features related to the target object and its position in target positioning. The proposed method is validated on different datasets through experiments, and its superiority is verified by the performance comparison with the state-of-the-art methods. The source code of the proposed method is available at https://github.com/X7J92/VGNet.

Index Terms:

Visual Grounding, Cross-Modal Hierarchical Matching, Progressive Position Correction.I Introduction

Visual grounding [1, 2, 3, 4, 5, 6, 7] aims to locate the corresponding target object described in the text. Unlike traditional object detection [8, 9], it does not rely on predefined class labels and can detect objects solely based on the content of textual description. Visual grounding plays a guiding role in downstream computer vision tasks, such as image captioning [10, 11, 12], visual question answering [13, 14, 15], and image generation [16, 17, 18]. It involves cross-modal matching between textual description and local image features, which requires the grounding model to understand the high-level semantic features of text and images deeply, and to correlate the features associated with the two modalities implicitly. Due to the modality gap between text and image, it is challenging to achieve these goals. Current research in visual grounding primarily focuses on supervised settings, and its performance on the standard benchmark still far from expectations, which implies that the visual grounding remains challenging even in supervised settings.

This work is conducted in a supervised setting and primarily explores the construction of cross-modal matching mechanisms between textual description and local image region. Based on whether the region proposals of the objects are used to assist in target localization, existing supervised visual grounding methods can be categorized into two types: two-stage visual grounding [19, 20, 21, 22] and one-stage visual grounding [23, 24, 25, 26, 27]. The two-stage methods rely on pre-trained object detectors [8, 9] to obtain proposals for all objects in the image. They search for the object described in the text by measuring the similarity between the text features and the features of all proposals. These methods benefit from the assistance of object detectors, making cross-modal matching more convenient. However, this type of method is highly dependent on the performance of the object detector. The failure of the object detector to identify objects may result in false negatives during cross-modal matching. On the contrary, the one-stage methods can avoid this issue. Due to the lack of assistance from object detectors, one-stage methods need to address the problem arising from missing region proposals. The most popular way is to fuse visual features with text features and directly use the fused features for object localization [28, 29, 30, 24, 27]. These methods boost feature representation by fusing the textual and visual features, enabling visual grounding even without the assistance of region proposals. However, they only consider the correspondence between local or global text and image features while neglecting to explore the role of the association between text and image features at different hierarchies in target localization.

Fig. 1 illustrates the text “A pink umbrella over a woman with a tan shoulder bag” and its corresponding image. One can observe that the phrase “a pink umbrella” only refers to one pink umbrella. Still, in the image, there are two objects that match this phrase. However, when the description is extended to “a pink umbrella over a woman,” it implies a scene in which the umbrella is positioned above someone. The corresponding image content becomes increasingly specific as the description becomes more refined. Each hierarchical text phrase points to a shared target object. As the hierarchy increases, the position of the text-indicated target in the image is gradually highlighted, while non-specified objects remain stable or even suppressed. It means that when different hierarchical phrases interact with visual features from the previous hierarchy, the features corresponding to the described object will gradually receive more attention, as seen in Fig. 1 with the pink umbrella and the pedestrian’s shoulder bag. If this progressive mechanism can be leveraged to emphasize the role of features in the target object’s corresponding region, it would improve detection performance.

This paper proposes the Phrase Decoupling Cross-Modal Hierarchical Matching and Progressive Position Correction for visual grounding. The method belongs to the one-stage visual grounding approach but differs from most existing methods because it does not rely on feature fusion to achieve object localization. To achieve progressive hierarchies relationship exploration among features, a hierarchical mask generation mechanism is designed. This mechanism can separate features at different hierarchies, facilitating the relationship exploration between hierarchies. Then, a hierarchical matching scheme for text and image is constructed to emphasize the role of keywords in cross-modal matching. Furthermore, with the hierarchical matching mechanism, a corresponding progressive position correction method is proposed, which can progressively correct the target object’s position based on the matching results between hierarchies. This progressive strategy continuously refines and adjusts the location of the target object’s bounding box as the certainty of the target object described in the text improves. This design explores the association between features at different hierarchical levels and highlights the features corresponding to the target object at different hierarchies. The main contribution of this work can be summarized as follows.

-

•

We propose a progressive hierarchical association mining approach, which establishes a structured hierarchical association between text and image features, highlighting the effect of features aligned with the target object.

-

•

Based on the hierarchical matching association between text and image, we further design a progressive position correction scheme for target object detection, achieving precise localization of the target object specified in the text.

-

•

Experimental results on three referring expression datasets demonstrated this approach’s effectiveness and its superiority compared to the state-of-the-art methods. Additionally, it also exhibited a certain advantage in terms of computational efficiency.

II Related Work

II-A Two-stage Visual Grounding

Two-stage visual grounding first uses a pre-trained object detection model to generate region proposals and then selects the proposals that best match the textual description. In the first stage, detectors such as Fast R-CNN [31] or Mask R-CNN [32] are commonly used. In the second stage, proposals are matched with the provided textual description, and the proposal with the highest matching score is selected as the prediction output. To ensure accurate matching, a typical approach is to model the relationships between proposals and language descriptions by introducing a graph structure and transforming the visual grounding task into an optimal matching problem of graph nodes [33, 34, 35, 36]. However, such methods do not consider the impact of syntactic structures on text features, which limits the expressive power of text features. Parsing and understanding the semantics of sentences using syntactic tree structures and then matching them with proposals can alleviate this issue [20, 21]. Moreover, MattNet [19] employs a modular design strategy to describe the object in the text, and its position and relationship with the subject. To enhance the cross-modal feature matching capability, CM-Att-Erase [37] generates challenging samples by erasing words from the text or image content, emphasizing the remaining information’s role in cross-modal matching. Two-stage methods leverage object detection models to locate described objects, mitigating the challenges of blind matching between text and target regions. However, those methods require high precision of the object detection results. When the object detector fails or generates inaccurate region proposals, it may directly impact the subsequent matching of text and targe features.

II-B One-stage Visual Grounding

The one-stage visual grounding typically involves 1) fusing visual and textual features, 2) partitioning the fused features into grids, and 3) performing position regression for each grid. This approach eliminates the reliance on object detectors in cross-modal matching. For example, FAOSA-VG [38] concatenates visual and textual features and feeds them into YOLOv3 [39] to regress the bounding boxes of the target objects. ReSC [40] splits the textual description using attention mechanism and performs multiple rounds of inference between the image and text to gradually reduce referring ambiguity, addressing the limitations of previous one-stage methods when understanding complex queries. RCCF [41] treats visual grounding as a correlation filtering process, mapping text features from the language domain to the visual domain and using the mapping results as templates (kernels) to filter image features to generate the center positions and sizes of target boxes.

Recently, the Transformer architecture has been extensively studied. It was initially introduced in [42] for neural machine translation. Influenced by the successful application of the self-attention mechanism, researchers have further explored applying the Transformer framework to the vision tasks, such as image classification [43, 44],action recognition [45, 46, 47, 48], and visual grounding [27, 49, 50, 51, 23, 52], among other tasks. In visual grounding tasks, transformers are used to enhance the interaction between vision and text, thereby improving visual grounding performance. Specifically, TransVG [27] uses a series of simple transformer encoders for multimodal fusion and inference in visual grounding, avoiding the need for complex handcrafted fusion modules. Dynamic MDETR [51] decouples the whole grounding process into encoding and decoding stages and uses sparse priors on object locations to develop a dynamic multimodal transformer decoder to improve the efficiency of the visual grounding process. CLIP-VG [52] employs adaptive curriculum learning and a simple end-to-end network to achieve visual grounding based on CLIP [53]. Word2Pix [23] utilizes a one-stage visual grounding network with an encoder-decoder transformer structure to achieve attention learning from words to pixels. Although these methods are effective, they do not match the text with the described objects at different hierarchies, failing to highlight the association between text and image. This limits further improvement in matching performance. The proposed method establishes a connection between text and image features across hierarchies within a one-stage framework, in which a progressive refinement of the target regions can be achieved.

III The Proposed Method

III-A Overview

The visual grounding model proposed in this paper comprises four components: Global Feature Cross-Modal Alignment (GFCMA), Hierarchical Mask Generation (HMG), Cross-Modal Hierarchical Matching (CMHM), and Progressive Position Correction (PPC), as shown in Fig. 2. GFCMA primarily focuses on establishing global relationship between text and image, providing global information for the subsequent creation of hierarchical association. HMG parses phrases from the input text and uses them to create masks for CMHM. Driven by the hierarchical mask, CMHM achieves cross-modal feature hierarchical matching, allowing features to be associated across different hierarchies. PPC uses the hierarchical matching results to progressively correct the target object position. In the following sections, we will elaborate on each component.

III-B Global Feature Cross-Modal Alignmehnt

GFCMA mainly comprises a visual encoding branch and a textual encoding branch (Fig. 2). Assuming that the source image fed into the visual encoding branch is , its corresponding sentence is . is first input into ResNet101 to extract shallow features . , , and denote the number of channels, height, and width of , respectively. For each pixel position of , the feature map is vectorized to obtain the feature , composed of tokens , where . is input into the Transformer layer, resulting in , where . For textual branches, assume that the features extracted from text via the Transformer layer are , composed of tokens , where is the number of textual tokens.

As shown in Fig. 3, and pass through a cross-modal attention layer and a cross-modal global alignment layer to obtain aligned coarse-grained features. is utilized to highlight the corresponding features between and . Specifically, in the cross-modal attention layer, is transformed into the query vector via linear mapping, while is linearly mapped to both the key vector and the value vector . The semantic feature is then obtained through multi-head attention (MH Attn), with each token in incorporating global information from the text.

Furthermore, in the cross-modal global alignment layer, the visual feature and the semantic feature are linearly mapped into the same semantic space. The resulting mapped features are denoted as and , respectively. Then, we can establish a global relationship between the tokens in and the corresponding tokens in , highlighting the feature tokens of the targeted object described in the text, without the need for pairwise feature metrics. Specifically, the similarity between the corresponding tokens of and can be measured as:

| (1) |

By using the following formula, is normalized to , resulting in :

| (2) |

where is the inverse temperature of the softmax function. Each value indicates the degree of relevance between the visual token and the corresponding textual token. Leveraging , we obtain the globally aligned cross-modal features:

| (3) |

The cross-modal global alignment layer emphasizes the related features between images and text, suppressing the non-related ones. This provides support for subsequent hierarchical feature matching.

III-C Hierarchical Mask Generation

There is a hierarchical correspondence between the text phrase and the potential target area. That is, the more phrases describing the target object, the more accurate the target object position in the image. With this hierarchical association, we devise a feature cross-modal hierarchical matching scheme. In detail, we propose a hierarchical mask generation mechanism to achieve hierarchical matching of features. For texts that require phrase decoupling, we employ the phrase decoupling model from [54] to extract related noun phrases, verb phrases, and adjective phrases, etc. Take the text “white and black cat laying on orange cat” as an example. After model decoupling of this text, and we can obtain four phrases: “white and black cat” (noun phrase), “laying” (verb phrase), “on” (prepositional phrase), and “orange cat” (noun phrase). We assign a specific binary positional encoding to each phrase , where denotes the position encoding of the -th phrase, is a vector entirely constituted of 0, is a vector fully made of 1 and is the number of words that make up the current phrase. The mask at the -th hierarchy is:

| (4) |

III-D Cross-Modal Hierarchical Matching

In the paper, we present CMHM for cross-modal matching, which relies on the generated hierarchical mask, as shown in Fig. 4. CMHM consists of multiple CMHM layers, each including a Hierarchical Mask Attention (HM Attn) and a Multi-head Attention (MH Attn). HM Attn is used to extract features consistent with hierarchical masks, while MH Attn highlights cross-modal consistent features. The number of hierarchies formed by the textual phrases corresponds to the number of layers in CMHM. For the -th CMHM layer, the hierarchical mask can be represented as , which consists of . Assuming the input features to this layer are , and , where is the output of the -th CMHM. When , . By performing linear mapping, is transformed into the query vector , and is mapped to both the key vector and the value vector . Thus, the resulting output by HM Attn can be represented as:

| (5) |

where , indicates the dimensionality of , denotes the element-wise multiplication. is a modulating factor greater than . It is used to retain the visual information corresponding to the current hierarchical text query while appropriately weakening the visual information corresponding to the previous hierarchical text query. This ensures that the model does not overly rely on the previous hierarchical information during hierarchical cross-modal matching. Instead, it can fully utilize the new information of the current hierarchy in addition to referencing the previous hierarchical information.

To enhance the consistent information between and , they are summed to produce:

| (6) |

After different linear mapping, the results of serve as Query and Key, and the visual feature serves as Value in MH Attn, yielding an enhanced feature . Guided by the hierarchical text masks, we achieve a fine-grained interaction between textual and visual features. This interaction allows to incorporate hierarchical textual information consistently. This addresses the limitations of coarse-grained matching and leverages the association across different hierarchies to boost cross-modal matching. It is worth noting that the context between words is retained by segmenting the text at the phrase level, allowing the model to understand these relationships and further encourage its fine-grained matching more comprehensively.

III-E Progressive Position Correction

With the guidance of hierarchical matching, we develop a novel PPC module for target localization, which achieves precise localization by continuously refining the target’s position. As illustrated in Fig. 2, the PPC module consists of PPC layers. The PPC layer comprises Hierarchical Semantic Aggregation (HSA) and Hierarchical Position Correction (HPC), as depicted in Fig. 5. HSA is used to aggregate both textual and visual feature at the current hierarchy, consisting of HM Attn and MH Attn. HM Attn is used to extract features consistent with hierarchical masks, while MH Attn is used to highlight cross-modal consistent features. HPC is used to refine the position information of the target object from the previous layer by utilizing the target’s location data at the current layer, thereby strengthening the connections across layers.

Assume that the inputs of the -th PPC layer are the target query , textual features , hierarchical masks , visual features , and , and the output is . In the first PPC layer, a random vector is used as . In the -th PPC layer, the HSA’s output is denoted as . Since incorporates both textual and visual feature from the current layer, it carries more information about the target object compared to . These information are directly related to the positional information of the target object. Consequently, can be used to refine the predicted position information from the previous hierarchy. Let be the bounding box position predicted in the -th hierarchy, (when , is a position vector consisting of 0) be the corrected bounding box position, then the change from to can be predicted by:

| (7) |

where represents the sigmoid activation function, and MLP represents a multi-layer perceptron. In this case, the bounding box position predicted in the current stage can be expressed as:

| (8) |

In HPC, the current position information plays a crucial role in predicting the position at the next hierarchy. Therefore, we embed the position information predicted in the current stage into to assist the position predicting of the next hierarchy. Suppose the coordinate of is , where denotes the center point coordinate of the bounding box, and respectively denote the height and width of the box. Since the dimensions of and are not consistent, we adopt the method of [55] to encode the position of , then project it to the same dimension with , resulting in :

| (9) |

Models Backbone RefCOCO RefCOCOg ReferItGame Time val () testA () testB () val () testA () testB () val () test () test () () Two-stage CMN [56] Vgg16 - 71.03 65.77 - 54.32 47.76 - - - - VC [57] Vgg16 - 73.33 67.44 - 58.40 53.18 - - - - ParalAttn [58] Vgg16 - 75.31 65.52 - 61.34 50.58 - - - - DGA [34] Vgg16 - 78.42 65.53 - 69.07 51.99 - - 63.28 - RvG-Tree [20] ResNet-101 75.06 78.61 69.85 63.51 67.45 56.66 66.95 66.51 - - NMTree [21] ResNet-101 76.41 70.09 57.52 65.87 66.44 - - Ref-NMS [22] ResNet-101 78.82 82.71 73.94 66.95 71.29 58.40 68.89 68.67 - - CM-Att-Erase [37] ResNet-101 78.85 83.14 71.32 68.09 73.65 58.03 67.99 68.07 - - MAttNet [19] ResNet-101 76.65 81.14 69.99 65.33 71.62 56.02 66.58 67.27 29.04 385 One-stage RealGIN [5] DarkNet-53 77.25 78.70 72.10 62.78 67.17 54.21 - 62.75 62.33 - FAOA [38] DarkNet-53 71.15 74.88 66.32 56.86 61.89 49.46 59.44 58.90 59.30 - MCN [59] DarkNet-53 80.08 82.29 74.98 67.16 72.86 57.31 66.46 66.01 - - ReSC [40] DarkNet-53 77.63 80.45 72.30 63.59 68.36 56.81 67.30 67.20 64.60 - LBYL [60] DarkNet-53 79.67 82.91 74.15 68.64 73.38 59.49 - - 67.47 - SeqTR [61] DarkNet-53 81.23 85.00 76.08 68.82 75.37 58.78 71.35 71.58 69.66 - TRAR [62] DarkNet-53 - 81.40 78.60 - 69.10 56.10 68.90 68.30 - - CTMDI [63] DarkNet-53 82.59 85.13 79.82 69.52 75.53 63.48 73.43 73.05 - - PFOS [24] ResNet-101 78.44 81.94 73.61 65.86 72.43 55.26 67.89 67.63 67.90 - PLV-FPN [64] ResNet-101 81.93 84.99 76.25 71.20 77.40 61.08 70.45 71.08 - - Word2Pixel [23] ResNet-101 81.20 84.39 78.12 69.71 76.11 61.24 70.81 71.34 - 56 TransVG [27] ResNet-101 81.02 82.72 78.35 64.82 70.70 56.94 68.67 67.73 70.73 40 VLTVG* [3] ResNet-101 83.10 85.43 78.65 71.52 76.06 61.28 72.63 71.52 68.76 30 ResNet-101 D-MDETR [51] ResNet-50 82.80 84.99 77.74 69.05 74.20 60.17 70.73 70.68 69.48 - ResNet-50 LUNA [65] Swin-Base 86.25 88.31 82.73 76.01 80.72 68.46 76.91 76.65 73.89 - Swin-Base TransVG++ [1] ViT-Base 86.28 88.37 80.97 75.39 80.45 73.86 76.30 74.70 - JMRI [66] ViT-Base 82.97 87.30 74.62 71.17 79.82 57.01 71.96 72.04 68.23 - ViT-Base 65.89 CLIP-VG [52] CLIP 84.29 87.76 78.43 69.55 77.33 57.62 73.18 72.54 70.89 47 CLIP

where PE is the sine positional encoding function, represents concatenation along the channel dimension, and represents a fully connected layer. By adding to , we obtain the target query for the hierarchy:

| (10) |

We perform iterations on the PPC module. The output of the -th PPC-layer at -th iteration is used to predict the bounding box corresponding to the textual reference. can be generated as:

| (11) |

where MLP represents a multi-layer perceptron. We use the Generalized IoU loss () [67] and -loss to constrain in each iteration. The details are as follows:

| (12) |

where represents the ground truth, and are balancing weights and is the total number of iterations. Let be the position predicted by Eq.(8) at the -th hierarchy and -th iterations. Additionally, we use the to make and consistent:

| (13) |

The entire network is trained in an end-to-end manner, and model parameters are optimized using the total loss :

| (14) |

IV Experiments

IV-A Datasets and Evaluation Protocol

Datasets. The proposed method is evaluated on visual grounding datasets, including RefCOCO [68], RefCOCO+ [68], RefCOCOg [69] and ReferItGame [70]. Those datasets consists of images and their corresponding referring expressions.

RefCOCO/RefCOCO+/RefCOCOg The RefCOCO [68], RefCOCO+ [68] and RefCOCOg [69] datasets are created by selecting images and target objects from MSCOCO [71]. The RefCOCO dataset consists of images, which contains referring expressions for target objects. The RefCOCO+ dataset contains images with referring expressions for 49,856 objects. RefCOCOg includes images and expressions for objects. For the RefCOCO and RefCOCO+ datasets, they are divided into four sections: train, val, testA, and testB. Among them, testA focuses on images containing multiple persons, while testB involves images with a variety of non-human objects. The images in the RefCOCO and RefCOCO+ datasets contain multiple objects of the same category. Moreover, in RefCOCO+, the referring expressions exclude the words that denote absolute position (such as ’left’). The RefCOCOg dataset is divided into train, val, and test sections, with its images typically containing to objects of the same category. In addition, the average text length of RefCOCOg is words, which is significantly longer than that of the RefCOCO and RefCOCO+ datasets ( and words, respectively)

ReferItGame The ReferItGame dataset [70] is created by selecting images and target objects from SAIAPR12 [72]. It contains expressions for objects within natural scene images. The ReferItGame is divided into train, val, and test sets.

Evaluation Protocol. We follow the method used in the work of [27] to evaluate the detection performance. A prediction is deemed correct and denoted as [email protected] when the Intersection over Union (IoU) between the predicted bounding box and its Ground Truth surpasses the threshold of .

IV-B Implementation Details

In this paper, the maximum text length is set to words and the input images are resized to . For visual encoding branch, we use ResNet-50/101 [73] and transformer layers to extract visual features. For textual encoding branch, the basic BERT-base-uncased model [55] is used for initialization. We employ the phrase decoupling model from [54] for phrase decoupling. During training, we use the AdamW [74] optimizer with an initial learning rate of and batch size of for model training. We set and in the loss function described in Eq.(13). To ensure a fair comparison with more methods, we added ViT-Base[44] and Swin-Base[75] as visual backbones, while keeping the text encoder unchanged. Inspired by the large-scale pre-training visual language models, we use the visual-language model CLIP [53] as the backbone of the proposed method. To improve training stability, we fix the CLIP encoder and introduce an MLP layer for feature modulation. All other settings are consistent with the original configurations. The training is conducted on four RTX4090 GPUs for epochs.

IV-C Comparison with State-of-the-Art Methods

The experimental results of different methods on the ReferItGame, RefCOCO, RefCOCO+ and RefCOCOg datasets are compared in Table I. Those comparative methods are divided into two-stage and one-stage methods. The proposed method belongs to the one-stage methods. Compared to the one-stage methods using ResNet-50 or ResNet-101 as the backbone, the proposed method outperforms all the comparison methods. Specifically, when we use ResNet-50 as the visual backbone, the proposed method achieves a performance improvement on the RefCOCOg validation dataset compared to the D-MDETR[51]. Additionally, when using ResNet-101, the proposed method achieves a increase on the RefCOCO+ testB dataset compared to the VLTVG[3]. When we replace the ResNet-101 with ViT-base, the proposed method achieves a improvement compared to JMRI[66] on the RefCOCO+ testB dataset. Additionally, when we use Swin-Base as the visual backbone, the proposed method achieves a improvement compared to LUNA[65] on the RefCOCOg validation dataset. Furthermore, we compare the proposed method with CLIP-VG which is developed based on a large-scale visual language model. For a fair comparison, we replace the backbone in the proposed method with CLIP. As shown in Table I, the proposed method outperforms the CLIP-VG, demonstrating a significant enhancement of on the RefCOCO+ validation dataset. Additionally, it exhibits advancements of on the ReferItGame test dataset and on the RefCOCO+ testB dataset, respectively. It can be attributed to the effectiveness of the proposed hierarchical relationship mining and matching. Moreover, the proposed model using ResNet-50 as its backbone achieves the fastest speed, with an inference time of when evaluated under equivalent conditions.

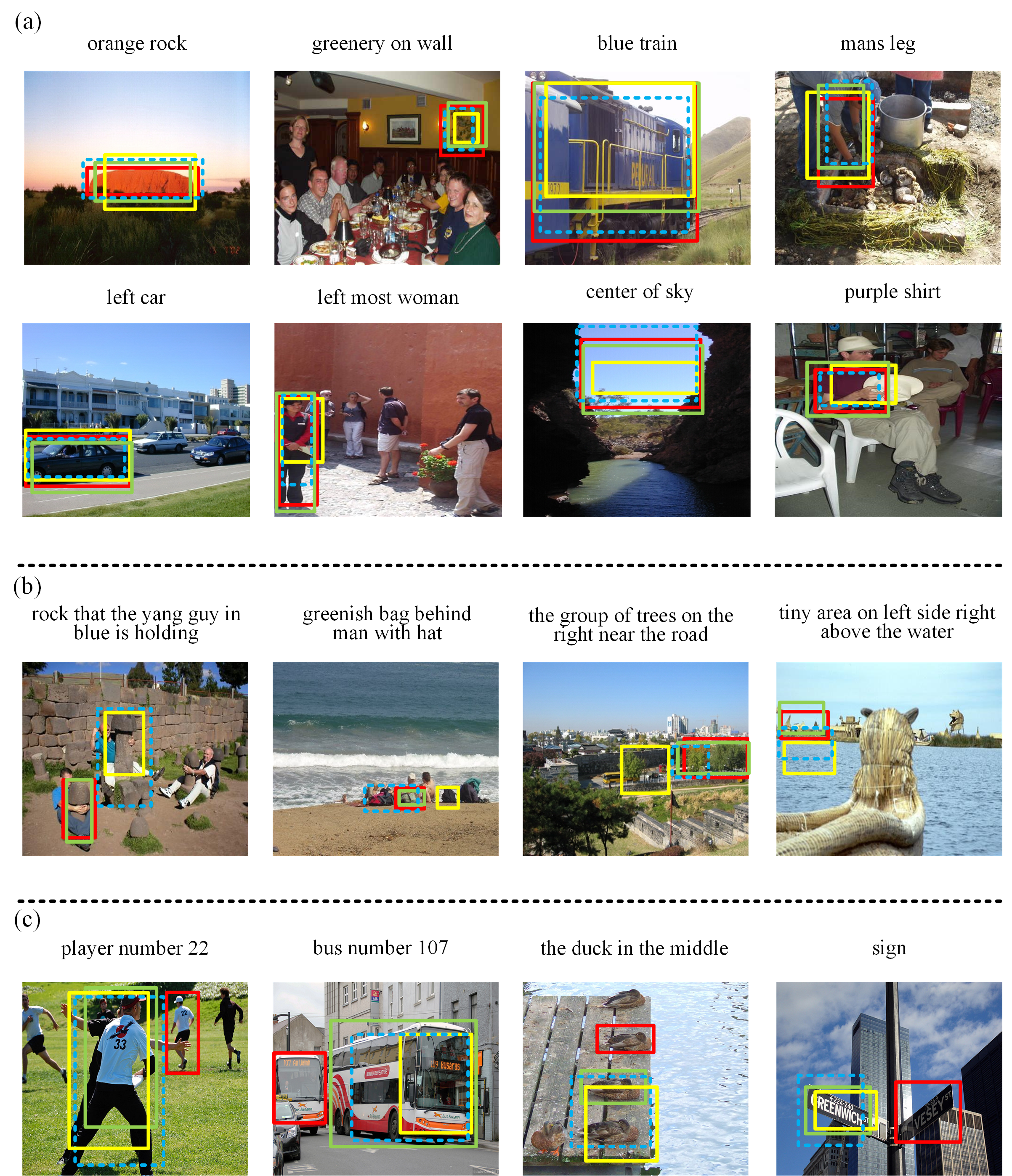

In Fig. 6, we compare the results predicted by the proposed method, CLIP-VG [52] and Baseline. The red box represents the ground truth. The green and yellow boxes denote the results predicted by the proposed method and Baseline, respectively. The blue dashed box denotes the prediction results of CLIP-VG. Fig. 6(a) shows the prediction results when the query images and simple referring expressions are fed to the models. It can be seen that all the methods can focus well on the target objects when the hierarchy of the referring expressions is less. However, as the hierarchy of the referring expressions increases, a significant discrepancy emerges between the labels and the prediction results of both the Baseline and CLIP-VG, as shown in Fig. 6(b). In contrast, the proposed method consistently sustains high localization precision. It is attributed to our method’s ability to mine the hierarchical relations of text, thereby facilitating precise localization of the target objects. Additionally, Fig. 6(c) shows some failure cases for our method, CLIP-VG and the Baseline. In the first two test cases of Fig. 6(c), we observe that our model and other methods struggle to accurately locate text queries containing numbers. This difficulty arises from the models’ challenges in recognizing numerical information. In the last two failed test cases of Fig. 6(c), the ambiguous text queries lead to our method, CLIP-VG and the Baseline predicting boxes that diverge from the ground truth annotations.

| Baseline | GFCMA | CMHM | PCC w/o HPC | PCC | Val(%) | Test(%) |

|---|---|---|---|---|---|---|

| ✓ | 65.23 | 63.89 | ||||

| ✓ | ✓ | 68.19 | 66.48 | |||

| ✓ | ✓ | ✓ | 71.33 | 68.54 | ||

| ✓ | ✓ | ✓ | ✓ | 72.94 | 70.02 | |

| ✓ | ✓ | ✓ | ✓ | 74.15 | 71.40 |

IV-D Ablation Study

The proposed network mainly includes GFCMA, CMHM and PPC modules. We conduct the ablation study on ReferItGame dataset to evaluation their contribution. We remove the cross-modal global alignment layer from the GFCMA module, and the residual part of the GFCMA module is used as the Baseline model. This network is trained with loss and generalized IoU loss. The results of ablation experiments are presented in Table II.

Effectiveness of GFCMA. As shown in Table II, when the GFCMA is added into the Baseline, the accuracy on [email protected] is improved by and on validation and test sets, respectively. The accuracy increases due to that the GFCMA focuses on global relationships between text and image, providing global information for subsequent fine-grained interactions.

Effectiveness of CMHM. When CMHM is added into Baseline+GFCMA, the performance of Baseline+GFCMA+CMHM is further improved on validation and test sets. It implies that the CMHM can mine correlation relationships between different hierarchies in cross-modal matching, and highlight the role of the shared features at different hierarchies.

Effectiveness of PCC. Compared to Baseline+GFCMA+CMHM, the inclusion of PCC results in an increase of () on the validation (test) set. It is because that the position of the detected bounding box can be gradually corrected by the PCC.

Effectiveness of HPC. To validate the contribution of the HPC in PPC module to the proposed model, the position correction in Eqs.(8)-(11) is removed and () aggregates textual and visual information layer by layer. The is used to regress and determine the final bounding box location. The model without hierarchical position correction is denoted as ‘PPC w/o HPC’. As observed in Table II, the addition of HPC results in an increase of and in [email protected] on the validation and test sets of ReferItGame, respectively. It is proved that the position correction information is useful for precise localization of target object.

IV-E Parameter Analysis

Analysis of iteration times . In Fig. 7(a), we evaluate influence of the iteration times of PPC. As shown in Fig. 7 (a), the proposed mehtod achieves the best performance when the iteration times reaches . Therefore, we set throughout the experiment.

Analysis of hyperparameter . In the proposed method, the hyperparameters used in Eq. (6) is a constant greater than . To determine the value of , Fig. 7 (b) shows the changes of model performance when takes different values. From those results, we can see that the index [email protected] reaches its maximum when .

Analysis of hyperparameter and . In this section, we analyze the impact of the hyperparameters and in Eq.(13) on detection performance on the test set of the RefCOCOg. Fig. 8 shows the changes of model performance when and take different values, respectively. It is observed from Fig. 8(a) that when , the [email protected] reaches its peak. Therefore, we set to . Moreover, the proposed mehtod achieves the best performance when , as shown in Fig. 8(b). Consequently, we set .

IV-F Further Discussion

In the proposed method, the number of decoupling phrases in the text corresponds to the number of hierarchies. On the test and validation sets of the ReferItGame and RefCOCOg datasets, we analyze the influence of the number of hierarchies on the prediction accuracy of the proposed method. Fig. 9 describes the effect of the number of hierarchies on model performance. As shown in Fig. 9, as the number of hierarchies increases, the prediction accuracy of the model gradually improves. It indicates that the information extracted from each hierarchical phrase has been successfully leveraged, thereby improving the model performance.

To further understand this phenomenon, we analyze the changes in the attention maps of the CMHM at each hierarchy, as shown in Fig. 10. We find that as the hierarchy of text description increases, the attention received by the target area increases, and the attention received by the non-target area gradually decreases. It is mainly because the features at different hierarchies involve the description of the target object. Therefore, the features referring to the target object between levels are highlighted by exploiting the correlation between different hierarchies, while those related to non-target objects are suppressed. Taking “person in middle above rock” as an example, at the first hierarchy “person”, the model pays attention to the areas where people is located. The text “person” in this hierarchy contains multiple target locations including the target that the complete text refers to. On the second hierarchy ”person in middle”, since both this hierarchy and the previous hierarchy include the target person, in exploiting hierarchical relationships, the “person” related to the target object is highlighted, and target-irrelevant “person” is suppressed. The third hierarchy is ”person in middle above rock”. Since three hierarchies involve the person and two levels involve “person in middle”, the features related to “person” and “middle” are highlighted by the proposed method, and the person corresponding to the middle and rock receives more attention from the network. Therefore, the proposed hierarchical cross-modal matching method can highlight the features of the target object and suppress the features of non-target objects.

To further validate the effectiveness of the hierarchical cross-modal matching method, we conduct visualizations of the coarse-grained and fine-grained tokens, as shown in Fig. 11. It is evident from these visualizations that coarse-grained tokens mainly focus on the rough visual regions corresponding to the text query. After processing through the hierarchical cross-modal matching, the fine-grained tokens are able to focus more precisely on the specific regions specified by text query. This further demonstrates the effectiveness of the proposed hierarchical cross-modal matching method in highlighting the features of the target object and suppressing the features of non-target objects.

V Conclusion

In this paper, we propose a phrase decoupling cross-modal hierarchical matching and progressive position correction method for visual grounding. The method can highlight the referred features of the target object in the text by exploiting the shared information at different hierarchies. Furthermore, to effectively use these features for target localization, we develop a text and image hierarchical matching mechanism, with which a corresponding target object position progressive correction strategy is designed. It allows the position of the target object bounding box to be continuously optimized and adjusted by predicting the corrected value of the target object position. Experimental results show that the proposed method can improve the performance in terms of precision for visual grounding. The ablation experiments demonstrate the effectiveness of different components of the proposed method.

References

- [1] J. Deng, Z. Yang, D. Liu, T. Chen, W. Zhou, Y. Zhang, H. Li, and W. Ouyang, “Transvg++: End-to-end visual grounding with language conditioned vision transformer,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023.

- [2] Y. Du, Z. Fu, Q. Liu, and Y. Wang, “Visual grounding with transformers,” in 2022 IEEE International Conference on Multimedia and Expo (ICME), 2022, pp. 1–6.

- [3] L. Yang, Y. Xu, C. Yuan, W. Liu, B. Li, and W. Hu, “Improving visual grounding with visual-linguistic verification and iterative reasoning,” in 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022, pp. 9489–9498.

- [4] Y. Jiao, Z. Jie, J. Chen, L. Ma, and Y.-G. Jiang, “Suspected objects matter: Rethinking model’s prediction for one-stage visual grounding,” in Proceedings of the 31st ACM International Conference on Multimedia, 2023, pp. 17–26.

- [5] Y. Zhou, R. Ji, G. Luo, X. Sun, J. Su, X. Ding, C.-W. Lin, and Q. Tian, “A real-time global inference network for one-stage referring expression comprehension,” IEEE Transactions on Neural Networks and Learning Systems, vol. 34, no. 1, pp. 134–143, 2023.

- [6] Z. Yang, K. Kafle, F. Dernoncourt, and V. Ordonez, “Improving visual grounding by encouraging consistent gradient-based explanations,” in 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023, pp. 19 165–19 174.

- [7] Y. Bu, L. Li, J. Xie, Q. Liu, Y. Cai, Q. Huang, and Q. Li, “Scene-text oriented referring expression comprehension,” IEEE Transactions on Multimedia, vol. 25, pp. 7208–7221, 2023.

- [8] W. Wen, C. Yang, Z. Jing, H. Fengxiang, Z. Zheng-Jun, W. Yonggang, and T. Dacheng, “Exploring sequence feature alignment for domain adaptive detection transformers,” in Proceedings of the 29th ACM International Conference on Multimedia, 2021, p. 1730–1738.

- [9] C. Liu, Y. Tao, J. Liang, K. Li, and Y. Chen, “Object detection based on yolo network,” in 2018 IEEE 4th Information Technology and Mechatronics Engineering Conference (ITOEC), 2018, pp. 799–803.

- [10] Y. Li, Y. Pan, T. Yao, and T. Mei, “Comprehending and ordering semantics for image captioning,” in 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022, pp. 17 969–17 978.

- [11] X. Hu, Z. Gan, J. Wang, Z. Yang, Z. Liu, Y. Lu, and L. Wang, “Scaling up vision-language pretraining for image captioning,” in 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022, pp. 17 959–17 968.

- [12] M. Barraco, M. Cornia, S. Cascianelli, L. Baraldi, and R. Cucchiara, “The unreasonable effectiveness of clip features for image captioning: An experimental analysis,” in 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 2022, pp. 4661–4669.

- [13] V. Gupta, Z. Li, A. Kortylewski, C. Zhang, Y. Li, and A. Yuille, “Swapmix: Diagnosing and regularizing the over-reliance on visual context in visual question answering,” in 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022, pp. 5068–5078.

- [14] Y. Ding, J. Yu, B. Liu, Y. Hu, M. Cui, and Q. Wu, “Mukea: Multimodal knowledge extraction and accumulation for knowledge-based visual question answering,” in 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022, pp. 5079–5088.

- [15] Z. Shao, Z. Yu, M. Wang, and J. Yu, “Prompting large language models with answer heuristics for knowledge-based visual question answering,” in 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023, pp. 14 974–14 983.

- [16] Y. Zhou, R. Zhang, C. Chen, C. Li, C. Tensmeyer, T. Yu, J. Gu, J. Xu, and T. Sun, “Towards language-free training for text-to-image generation,” in 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022, pp. 17 886–17 896.

- [17] Y. Li, H. Liu, Q. Wu, F. Mu, J. Yang, J. Gao, C. Li, and Y. J. Lee, “Gligen: Open-set grounded text-to-image generation,” in 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023, pp. 22 511–22 521.

- [18] Y. Kim, J. Lee, J.-H. Kim, J.-W. Ha, and J.-Y. Zhu, “Dense text-to-image generation with attention modulation,” in 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023, pp. 7701–7711.

- [19] L. Yu, Z. Lin, X. Shen, J. Yang, X. Lu, M. Bansal, and T. L. Berg, “Mattnet: Modular attention network for referring expression comprehension,” in 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018, pp. 1307–1315.

- [20] R. Hong, D. Liu, X. Mo, X. He, and H. Zhang, “Learning to compose and reason with language tree structures for visual grounding,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 44, no. 2, pp. 684–696, 2022.

- [21] D. Liu, H. Zhang, Z.-J. Zha, and F. Wu, “Learning to assemble neural module tree networks for visual grounding,” in 2019 IEEE/CVF International Conference on Computer Vision (ICCV), 2019, pp. 4672–4681.

- [22] L. Chen, W. Ma, J. Xiao, H. Zhang, and S.-F. Chang, “Ref-nms: Breaking proposal bottlenecks in two-stage referring expression grounding,” in Proceedings of the AAAI Conference on Artificial Intelligence, 2021, pp. 1036–1044.

- [23] H. Zhao, J. T. Zhou, and Y.-S. Ong, “Word2pix: Word to pixel cross-attention transformer in visual grounding,” IEEE Transactions on Neural Networks and Learning Systems, pp. 1–11, 2022.

- [24] M. Sun, W. Suo, P. Wang, Y. Zhang, and Q. Wu, “A proposal-free one-stage framework for referring expression comprehension and generation via dense cross-attention,” IEEE Transactions on Multimedia, vol. 25, pp. 2446–2458, 2023.

- [25] G. Hua, M. Liao, S. Tian, Y. Zhang, and W. Zou, “Multiple relational learning network for joint referring expression comprehension and segmentation,” IEEE Transactions on Multimedia, pp. 1–13, 2023.

- [26] W. Suo, M. Sun, P. Wang, Y. Zhang, and Q. Wu, “Rethinking and improving feature pyramids for one-stage referring expression comprehension,” IEEE Transactions on Image Processing, vol. 32, pp. 854–864, 2023.

- [27] J. Deng, Z. Yang, T. Chen, W. Zhou, and H. Li, “Transvg: End-to-end visual grounding with transformers,” in 2021 IEEE/CVF International Conference on Computer Vision (ICCV), 2021, pp. 1749–1759.

- [28] B. Huang, D. Lian, W. Luo, and S. Gao, “Look before you leap: Learning landmark features for one-stage visual grounding,” in 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2021, pp. 16 883–16 892.

- [29] H. Qiu, H. Li, Q. Wu, F. Meng, H. Shi, T. Zhao, and K. N. Ngan, “Language-aware fine-grained object representation for referring expression comprehension,” in Proceedings of the 28th ACM International Conference on Multimedia, 2020, pp. 4171–4180.

- [30] J. Ye, X. Lin, L. He, D. Li, and Q. Chen, “One-stage visual grounding via semantic-aware feature filter,” in Proceedings of the 29th ACM International Conference on Multimedia, 2021, pp. 1702–1711.

- [31] R. Girshick, “Fast r-cnn,” in 2015 IEEE International Conference on Computer Vision (ICCV), 2015, pp. 1440–1448.

- [32] K. He, G. Gkioxari, P. Dollár, and R. Girshick, “Mask r-cnn,” in 2017 IEEE International Conference on Computer Vision (ICCV), 2017, pp. 2980–2988.

- [33] C. Jing, Y. Wu, M. Pei, Y. Hu, Y. Jia, and Q. Wu, “Visual-semantic graph matching for visual grounding,” in Proceedings of the 28th ACM International Conference on Multimedia, 2020, pp. 4041–4050.

- [34] S. Yang, Y. Yu, and G. Li, “Dynamic graph attention for referring expression comprehension,” in 2019 IEEE/CVF International Conference on Computer Vision (ICCV), 2019, pp. 4643–4652.

- [35] S. Yang, G. Li, and Y. Yu, “Cross-modal relationship inference for grounding referring expressions,” in 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019, pp. 4140–4149.

- [36] S. Yang, Y. Yu, and G. Li, “Relationship-embedded representation learning for grounding referring expressions,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 43, no. 8, pp. 2765–2779, 2021.

- [37] X. Liu, Z. Wang, J. Shao, X. Wang, and H. Li, “Improving referring expression grounding with cross-modal attention-guided erasing,” in 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019, pp. 1950–1959.

- [38] Z. Yang, B. Gong, L. Wang, W. Huang, D. Yu, and J. Luo, “A fast and accurate one-stage approach to visual grounding,” in 2019 IEEE/CVF International Conference on Computer Vision (ICCV), 2019, pp. 4682–4692.

- [39] J. Redmon and A. Farhadi, “Yolov3: An incremental improvement,” arXiv preprint arXiv:1804.02767, 2018.

- [40] Z. Yang, T. Chen, L. Wang, and J. Luo, “Improving one-stage visual grounding by recursive sub-query construction,” in Computer Vision – ECCV 2020, 2020, pp. 387–404.

- [41] Y. Liao, S. Liu, G. Li, F. Wang, Y. Chen, C. Qian, and B. Li, “A real-time cross-modality correlation filtering method for referring expression comprehension,” in 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020, pp. 10 877–10 886.

- [42] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is all you need,” Advances in neural information processing systems, vol. 30, 2017.

- [43] C.-F. R. Chen, Q. Fan, and R. Panda, “Crossvit: Cross-attention multi-scale vision transformer for image classification,” in 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2021, pp. 357–366.

- [44] A. Dosovitskiy, L. Beyer, A. Kolesnikov, D. Weissenborn, X. Zhai, T. Unterthiner, M. Dehghani, M. Minderer, G. Heigold, S. Gelly et al., “An image is worth 16x16 words: Transformers for image recognition at scale,” arXiv preprint arXiv:2010.11929, 2020.

- [45] B. Xu, X. Shu, J. Zhang, G. Dai, and Y. Song, “Spatiotemporal decouple-and-squeeze contrastive learning for semisupervised skeleton-based action recognition,” IEEE Transactions on Neural Networks and Learning Systems, pp. 1–14, 2023.

- [46] B. Xu and X. Shu, “Pyramid self-attention polymerization learning for semi-supervised skeleton-based action recognition,” arXiv preprint arXiv:2302.02327, 2023.

- [47] X. Shu, B. Xu, L. Zhang, and J. Tang, “Multi-granularity anchor-contrastive representation learning for semi-supervised skeleton-based action recognition,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 45, no. 6, pp. 7559–7576, 2023.

- [48] B. Xu, X. Shu, and Y. Song, “X-invariant contrastive augmentation and representation learning for semi-supervised skeleton-based action recognition,” IEEE Transactions on Image Processing, vol. 31, pp. 3852–3867, 2022.

- [49] J. Ye, J. Tian, M. Yan, X. Yang, X. Wang, J. Zhang, L. He, and X. Lin, “Shifting more attention to visual backbone: Query-modulated refinement networks for end-to-end visual grounding,” in 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022, pp. 15 481–15 491.

- [50] Y. Zhan, Z. Xiong, and Y. Yuan, “Rsvg: Exploring data and models for visual grounding on remote sensing data,” IEEE Transactions on Geoscience and Remote Sensing, vol. 61, pp. 1–13, 2023.

- [51] F. Shi, R. Gao, W. Huang, and L. Wang, “Dynamic mdetr: A dynamic multimodal transformer decoder for visual grounding,” IEEE Transactions on Pattern Analysis and Machine Intelligence, pp. 1–18, 2023.

- [52] L. Xiao, X. Yang, F. Peng, M. Yan, Y. Wang, and C. Xu, “Clip-vg: Self-paced curriculum adapting of clip for visual grounding,” IEEE Transactions on Multimedia, pp. 1–14, 2023.

- [53] A. Radford, J. W. Kim, C. Hallacy, A. Ramesh, G. Goh, S. Agarwal, G. Sastry, A. Askell, P. Mishkin, J. Clark et al., “Learning transferable visual models from natural language supervision,” in Proceedings of the 38th International Conference on Machine Learning, 2021, pp. 8748–8763.

- [54] A. Akbik, D. Blythe, and R. Vollgraf, “Contextual string embeddings for sequence labeling,” in Proceedings of the 27th International Conference on Computational Linguistics, 2018, pp. 1638–1649.

- [55] J. D. M.-W. C. Kenton and L. K. Toutanova, “Bert: Pre-training of deep bidirectional transformers for language understanding,” in Proceedings of naacL-HLT, vol. 1, 2019, p. 2.

- [56] R. Hu, M. Rohrbach, J. Andreas, T. Darrell, and K. Saenko, “Modeling relationships in referential expressions with compositional modular networks,” in 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017, pp. 4418–4427.

- [57] Y. Niu, H. Zhang, Z. Lu, and S.-F. Chang, “Variational context: Exploiting visual and textual context for grounding referring expressions,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 43, no. 1, pp. 347–359, 2021.

- [58] B. Zhuang, Q. Wu, C. Shen, I. Reid, and A. v. d. Hengel, “Parallel attention: A unified framework for visual object discovery through dialogs and queries,” in 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018, pp. 4252–4261.

- [59] G. Luo, Y. Zhou, X. Sun, L. Cao, C. Wu, C. Deng, and R. Ji, “Multi-task collaborative network for joint referring expression comprehension and segmentation,” in 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020, pp. 10 031–10 040.

- [60] B. Huang, D. Lian, W. Luo, and S. Gao, “Look before you leap: Learning landmark features for one-stage visual grounding,” in 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2021, pp. 16 883–16 892.

- [61] C. Zhu, Y. Zhou, Y. Shen, G. Luo, X. Pan, M. Lin, C. Chen, L. Cao, X. Sun, and R. Ji, “Seqtr: A simple yet universal network for visual grounding,” in European Conference on Computer Vision, 2022, pp. 598–615.

- [62] Y. Zhou, T. Ren, C. Zhu, X. Sun, J. Liu, X. Ding, M. Xu, and R. Ji, “Trar: Routing the attention spans in transformer for visual question answering,” in 2021 IEEE/CVF International Conference on Computer Vision (ICCV), 2021, pp. 2054–2064.

- [63] J. Wu, C. Wu, F. Wang, L. Wang, and Y. Wei, “Improving visual grounding with multi-scale discrepancy information and centralized-transformer,” Expert Systems with Applications, vol. 247, p. 123223, 2024.

- [64] Y. Liao, A. Zhang, Z. Chen, T. Hui, and S. Liu, “Progressive language-customized visual feature learning for one-stage visual grounding,” IEEE Transactions on Image Processing, vol. 31, pp. 4266–4277, 2022.

- [65] Y. Liang, Z. Yang, Y. Tang, J. Fan, Z. Li, J. Wang, P. H. Torr, and S.-L. Huang, “Luna: Language as continuing anchors for referring expression comprehension,” in Proceedings of the 31th ACM International Conference on Multimedia, 2023, pp. 5174–5184.

- [66] H. Zhu, Q. Lu, L. Xue, M. Xue, G. Yuan, and B. Zhong, “Visual grounding with joint multimodal representation and interaction,” IEEE Transactions on Instrumentation and Measurement, vol. 72, pp. 1–11, 2023.

- [67] H. Rezatofighi, N. Tsoi, J. Gwak, A. Sadeghian, I. Reid, and S. Savarese, “Generalized intersection over union: A metric and a loss for bounding box regression,” in 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019, pp. 658–666.

- [68] L. Yu, P. Poirson, S. Yang, A. C. Berg, and T. L. Berg, “Modeling context in referring expressions,” in Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11-14, 2016, Proceedings, Part II 14, 2016, pp. 69–85.

- [69] J. Mao, J. Huang, A. Toshev, O. Camburu, A. Yuille, and K. Murphy, “Generation and comprehension of unambiguous object descriptions,” in 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016, pp. 11–20.

- [70] S. Kazemzadeh, V. Ordonez, M. Matten, and T. Berg, “Referitgame: Referring to objects in photographs of natural scenes,” in Proceedings of the 2014 conference on empirical methods in natural language processing (EMNLP), 2014, pp. 787–798.

- [71] O. Vinyals, A. Toshev, S. Bengio, and D. Erhan, “Show and tell: Lessons learned from the 2015 mscoco image captioning challenge,” IEEE transactions on pattern analysis and machine intelligence, vol. 39, no. 4, pp. 652–663, 2016.

- [72] H. J. Escalante, C. A. Hernández, J. A. Gonzalez, A. López-López, M. Montes, E. F. Morales, L. E. Sucar, L. Villasenor, and M. Grubinger, “The segmented and annotated iapr tc-12 benchmark,” Computer vision and image understanding, vol. 114, no. 4, pp. 419–428, 2010.

- [73] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778.

- [74] I. Loshchilov and F. Hutter, “Decoupled weight decay regularization,” in International Conference on Learning Representations, 2018.

- [75] Z. Liu, Y. Lin, Y. Cao, H. Hu, Y. Wei, Z. Zhang, S. Lin, and B. Guo, “Swin transformer: Hierarchical vision transformer using shifted windows,” in Proceedings of the IEEE/CVF international conference on computer vision, 2021, pp. 10 012–10 022.