Phase Transitions in Recovery of Structured Signals from Corrupted Measurements

Abstract

This paper is concerned with the problem of recovering a structured signal from a relatively small number of corrupted random measurements. Sharp phase transitions have been numerically observed in practice when different convex programming procedures are used to solve this problem. This paper presents theoretical explanations for these phenomena by employing some basic tools from Gaussian process theory. Specifically, we identify the precise locations of the phase transitions for both constrained and penalized recovery procedures. Our theoretical results show that these phase transitions are determined by some geometric measures of structure, e.g., the spherical Gaussian width of a tangent cone and the Gaussian (squared) distance to a scaled subdifferential. By utilizing the established phase transition theory, we further investigate the relationship between these two kinds of recovery procedures, which also reveals an optimal strategy (in the sense of Lagrange theory) for choosing the tradeoff parameter in the penalized recovery procedure. Numerical experiments are provided to verify our theoretical results.

Index Terms:

Phase transition, corrupted sensing, signal separation, signal demixing, compressed sensing, structured signals, corruption, Gaussian process.

I Introduction

This paper studies the problem of recovering a structured signal from a relatively small number of corrupted measurements

$$y = \Phi x^\star + v^\star + z, \qquad (1)$$

where $\Phi \in \mathbb{R}^{m \times n}$ is the sensing matrix, $x^\star \in \mathbb{R}^n$ denotes the structured signal to be estimated, $v^\star \in \mathbb{R}^m$ stands for the structured corruption, and $z \in \mathbb{R}^m$ represents the unstructured observation noise. The objective is to estimate $x^\star$ and $v^\star$ from knowledge of $y$ and $\Phi$. If $v^\star$ contains some useful information, then model (1) can be regarded as the signal separation (or demixing) problem. In particular, if there is no corruption ($v^\star = 0$), then model (1) reduces to the standard compressed sensing problem.

This problem arises in many practical applications of interest, such as face recognition [1], subspace clustering [2], sensor networks [3], latent variable modeling [4], principal component analysis [5], and source separation [6]. The theoretical aspects of this problem have also been studied under different scenarios in the literature; important examples include sparse signal recovery from sparse corruption [7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18], low-rank matrix recovery from sparse corruption [4, 5, 19, 20, 21, 22], and structured signal recovery from structured corruption [23, 24, 25, 26, 27, 28, 29].

Since this problem is ill-posed in general, tractable recovery is possible only when both the signal and the corruption are suitably structured. Typical examples of structured signals (or corruptions) include sparse vectors and low-rank matrices. Let $f$ and $g$ be suitable proper convex functions that promote the structures of the signal and the corruption, respectively. There are three popular convex optimization approaches to reconstructing the signal and the corruption, depending on which prior information is available. Specifically, when we have prior knowledge of either the signal or the corruption (namely, $f(x^\star)$ or $g(v^\star)$) and of the noise level $\delta \ge \|z\|_2$ (in terms of the $\ell_2$ norm), it is natural to consider the constrained convex recovery procedures

$$\min_{x, v}\ f(x) \quad \text{s.t.} \quad g(v) \le g(v^\star),\ \ \|y - \Phi x - v\|_2 \le \delta \qquad (2)$$

and

$$\min_{x, v}\ g(v) \quad \text{s.t.} \quad f(x) \le f(x^\star),\ \ \|y - \Phi x - v\|_2 \le \delta. \qquad (3)$$

When only the noise level is known, it is convenient to employ the partially penalized convex recovery procedure

$$\min_{x, v}\ f(x) + \lambda g(v) \quad \text{s.t.} \quad \|y - \Phi x - v\|_2 \le \delta, \qquad (4)$$

where $\lambda > 0$ is a tradeoff parameter. When no prior knowledge is available, it is practical to use the fully penalized convex recovery procedure

$$\min_{x, v}\ \tfrac{1}{2}\|y - \Phi x - v\|_2^2 + \tau_1 f(x) + \tau_2 g(v), \qquad (5)$$

where $\tau_1, \tau_2 > 0$ are tradeoff parameters.

A large number of numerical results in the literature suggest that phase transitions emerge in all three of the above recovery procedures (under random measurements); see, e.g., [10, 11, 13, 15, 16, 17, 23, 24, 25, 26, 27]. Concretely, for a specific recovery procedure, when the number of measurements exceeds a threshold, the procedure faithfully reconstructs both the signal and the corruption with high probability; when the number of measurements is below the threshold, the procedure fails with high probability. A fundamental question then is:

Q1: How to determine the locations of these phase transitions accurately?

In addition, the optimization problems in the partially and fully penalized recovery procedures depend on tradeoff parameters. Another important question is:

Q2: How to choose these tradeoff parameters to achieve the best possible performance?

I-A Model Assumptions and Contributions

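This paper tries to answer the above two questions in the absence of unstructured noise ($z = 0$). In this scenario, the observation model becomes

$$y = \Phi x^\star + \sqrt{m}\, v^\star. \qquad (6)$$

Here we assume that $\Phi \in \mathbb{R}^{m \times n}$ is a Gaussian sensing matrix with i.i.d. entries ($\Phi_{ij} \sim \mathcal{N}(0, 1)$), and the factor $\sqrt{m}$ in (6) makes the columns of $\Phi$ and $\sqrt{m}\, I_m$ have the same scale, which makes our theoretical results more interpretable. Accordingly, the constrained convex recovery procedures become

$$\min_{x, v}\ f(x) \quad \text{s.t.} \quad g(v) \le g(v^\star),\ \ y = \Phi x + \sqrt{m}\, v \qquad (7)$$

and

$$\min_{x, v}\ g(v) \quad \text{s.t.} \quad f(x) \le f(x^\star),\ \ y = \Phi x + \sqrt{m}\, v, \qquad (8)$$

and the partially and fully penalized recovery procedures reduce to

$$\min_{x, v}\ f(x) + \lambda g(v) \quad \text{s.t.} \quad y = \Phi x + \sqrt{m}\, v, \qquad (9)$$

where $\lambda > 0$ is a tradeoff parameter. For each recovery procedure, we declare that it succeeds when its unique solution $(\hat{x}, \hat{v})$ satisfies $\hat{x} = x^\star$ and $\hat{v} = v^\star$; otherwise, it fails.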
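To make the role of the $\sqrt{m}$ factor in (6) concrete, the following Python sketch (standard library only; the sizes and the sparse vectors are hypothetical choices for illustration) draws $\Phi$ with i.i.d. $\mathcal{N}(0,1)$ entries, forms $y = \Phi x^\star + \sqrt{m}\, v^\star$, and compares the average squared column norm of $\Phi$ with that of $\sqrt{m}\, I_m$, whose columns all have squared norm exactly $m$.

```python
import math
import random


def corrupted_measurements(phi, x_star, v_star):
    """Noiseless corrupted sensing model (6): y = Phi x* + sqrt(m) v*."""
    m = len(phi)
    scale = math.sqrt(m)
    return [sum(p * x for p, x in zip(row, x_star)) + scale * v
            for row, v in zip(phi, v_star)]


def mean_squared_column_norm(phi):
    """Average of ||Phi[:, j]||_2^2 over all columns j."""
    m, n = len(phi), len(phi[0])
    return sum(phi[i][j] ** 2 for i in range(m) for j in range(n)) / n


rng = random.Random(0)
m, n = 200, 50                      # hypothetical sizes
phi = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(m)]
x_star = [0.0] * n
x_star[3] = 1.5                     # a 1-sparse signal (illustrative)
v_star = [0.0] * m
v_star[7] = -2.0                    # a 1-sparse corruption (illustrative)
y = corrupted_measurements(phi, x_star, v_star)

# Each column of sqrt(m) * I_m has squared norm exactly m; columns of Phi match on average.
print(mean_squared_column_norm(phi), m)
```

With i.i.d. standard normal entries, each column of $\Phi$ has expected squared norm $m$, which is why no further normalization of $\Phi$ is needed in this sketch.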

Under the above model settings, the contribution of this paper is twofold:

• First, we develop a new analytical framework that allows us to establish the phase transition theory of both constrained and penalized recovery procedures in a unified way. Specifically, for the constrained recovery procedures (7) and (8), our analysis shows that their phase transitions are located at

$$m_c = \omega^2(T_f \cap \mathbb{S}^{n-1}) + \omega^2(T_g \cap \mathbb{S}^{m-1}),$$

where $T_f$ (or $T_g$) is the tangent cone induced by $f$ (or $g$) at the true signal $x^\star$ (or corruption $v^\star$), and $\omega(T_f \cap \mathbb{S}^{n-1})$ (or $\omega(T_g \cap \mathbb{S}^{m-1})$) is the spherical Gaussian width of this cone, defined in Section II. For the penalized recovery procedure (9), our results indicate that its critical point is located at

where (or ) is the subdifferential of (or ) at the true signal (or corruption ), and denote the Gaussian distance and the Gaussian squared distance to a set respectively, also defined in Section II, and and .

• Second, we investigate the relationship between these two kinds of recovery procedures by utilizing the established critical points, which also reveals an optimal selection strategy for the tradeoff parameter (in the sense of Lagrange theory):

More precisely, under mild conditions, if the penalized procedure (9) is likely to succeed, then the constrained procedures (7) and (8) succeed with high probability, namely, if , then we have . On the contrary, if the constrained procedures (7) and (8) are likely to succeed, then we can choose the tradeoff parameter as such that the penalized procedure (9) succeeds with high probability, namely, if , then we have .

I-B Related Works

During the past few decades, many works have investigated phase transition phenomena in random convex optimization problems. Most of these works fit into the framework of compressed sensing ($v^\star = 0$). In this paper, we focus on the scenario in which the random measurements are contaminated by some structured corruption. We review the works related to these two aspects in detail.

I-B1 Related Works in Compressed Sensing

The works in the context of compressed sensing can be roughly divided into four groups according to their analytical tools.

The early works study phase transitions in the context of sparse signal recovery via polytope angle calculations [30]. Under Gaussian measurements, Donoho [31] analyzes the $\ell_1$-minimization method in the asymptotic regime and establishes an empirically tight lower bound on the number of measurements required for successful recovery. In contrast to [31], Donoho and Tanner [32] prove the existence of sharp phase transitions in the asymptotic regime when $\ell_1$-minimization is used to reconstruct sparse signals from random projections. These results are later extended to other related $\ell_1$-minimization problems. For instance, Donoho and Tanner [33, 34] identify a precise phase transition for sparse signal recovery with an additional nonnegativity constraint; Khajehnejad et al. [35] introduce a nonuniform sparse model and analyze the performance of weighted $\ell_1$-minimization over that model; Xu and Hassibi [36] present sharp performance bounds on the number of measurements required for recovering approximately sparse signals from noisy measurements via $\ell_1$-minimization.

In [37, Fact 10.1], Amelunxen et al. explore the relationship between polytope angle theory and conical integral geometry in detail. Their results show that conical integral geometry can overcome several inherent limitations of polytope angle theory: for instance, it can handle the nuclear norm regularizer in low-rank matrix recovery problems, it yields non-asymptotic results, and it establishes the phase transition from absolute success to absolute failure. In summary, [37] provides the first comprehensive analysis that explains phase transition phenomena in some random convex optimization problems. Other authors further use conical integral geometry to analyze convex optimization problems with random data. For example, Amelunxen and Bürgisser [38, 39] apply conical integral geometry to study conic optimization problems; Goldstein et al. [40] show that the sequence of conic intrinsic volumes can be approximated by a suitable Gaussian distribution in the high-dimensional limit, which provides more precise probabilities for successful and failed recovery.

Whereas the above works rely on combinatorial geometry, other works use minimax decision theory to analyze phase transition problems. Several papers [41, 42, 43] have observed a close agreement between the asymptotic mean square error (MSE) and the location of the phase transition in linear inverse problems. Donoho et al. [44, 45] then showed that the minimax MSE for denoising empirically predicts the locations of phase transitions in both sparse and low-rank recovery problems. Recently, Oymak and Hassibi [46] proved that the minimax MSE risk in structured signal denoising problems is almost the same as the statistical dimension. Combined with the results in [37], their results provide a theoretical explanation for using the minimax risk to describe the location of phase transitions in regularized linear inverse problems.

The last line of works studies the compressed sensing problem by utilizing tools from Gaussian process theory. The key technique is a sharp comparison inequality for Gaussian processes due to Gordon [47]. Rudelson and Vershynin [48] first use Gordon's inequality to study the $\ell_1$-minimization problem. Stojnic [49] refines this method and obtains an empirically sharp condition for successful recovery under Gaussian measurements. Stojnic's calculation is then extended to more general settings. Oymak and Hassibi [50] use it to study the nuclear norm minimization problem. Chandrasekaran et al. [51] consider a more general case in which the regularizer can be any convex function. Stojnic [52, 53] has also investigated the error behavior of $\ell_1$-minimization and its variants in random optimization problems; these works have been extended in a series of papers [54, 55] by Oymak, Thrampoulidis, and Hassibi. Although these works provide detailed discussions of using Gaussian process theory to analyze random convex optimization problems, few of them consider the failure case of recovery. In a recent work [56], Oymak and Tropp demonstrate a universality property for randomized dimension reduction, which also establishes the phase transition in the recovery of structured signals from a large class of measurement models.

I-B2 Related Works in Corrupted Sensing

Several works in the literature study the phase transition theory of corrupted sensing problems. For instance, in [37], Amelunxen et al. study the demixing problem $z_0 = x_0 + Q y_0$, where $Q$ is a random orthogonal matrix. They establish sharp phase transition results when the constrained convex program is used to solve this demixing problem. In [56], Oymak and Tropp consider a more general demixing model in which both structured components are transformed by random matrices drawn from a wide class of distributions. They reconstruct the original signal pair by solving a convex program and establish the related phase transition theory. More closely related to this work, Foygel and Mackey [24] consider the corrupted sensing problem (1) and analyze both the constrained recovery procedures (2) and (3) and the partially penalized recovery procedure (4). In each case, they provide sufficient conditions for stable signal recovery from structured corruption with added unstructured noise under Gaussian measurements. Very recently, Chen and Liu [27] developed an extended matrix deviation inequality and used it to analyze all three kinds of convex procedures ((2), (3), (4), and (5)) in a unified way under sub-Gaussian measurements. As for the failure case of corrupted sensing, Zhang, Liu, and Lei [25] establish a sharp threshold below which the constrained convex procedures (7) and (8) fail to recover both the signal and the corruption under Gaussian measurements. Together with the work in [24], their results provide a theoretical explanation for the phase transition when the constrained procedures are used to solve corrupted sensing problems.

I-C Organization

The remainder of the paper is organized as follows. We start by reviewing some preliminaries that are necessary for our subsequent analysis in Section II. Section III presents the main theoretical results of this paper. In Section IV, we present a series of numerical experiments to verify our theoretical results. We conclude the paper in Section V. All proofs of our main results are included in the Appendices.

II Preliminaries

In this section, we introduce some notation and facts that underlie our analysis. Throughout the paper, $\mathbb{S}^{n-1}$ and $\mathbb{B}^{n}$ represent the unit sphere and the unit ball in $\mathbb{R}^n$ under the $\ell_2$ norm, respectively.

II-A Convex Geometry

II-A1 Subdifferential

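The subdifferential of a convex function $f: \mathbb{R}^n \to \mathbb{R} \cup \{+\infty\}$ at $x \in \mathbb{R}^n$ is the set of vectors

$$\partial f(x) = \big\{u \in \mathbb{R}^n : f(y) \ge f(x) + \langle u, y - x \rangle \ \text{for all } y \in \mathbb{R}^n\big\}.$$

If $f$ is convex and $x \in \operatorname{int}(\operatorname{dom} f)$, then $\partial f(x)$ is a nonempty, compact, convex set. For any number $t \ge 0$, we denote the scaled subdifferential as $t\,\partial f(x) = \{t u : u \in \partial f(x)\}$.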
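As a concrete instance of these definitions, the subdifferential of $f(x) = \|x\|_1$ at $x$ consists of the vectors $u$ with $u_i = \operatorname{sign}(x_i)$ on the support of $x$ and $|u_i| \le 1$ off the support; scaling by $t \ge 0$ multiplies each of these constraints by $t$. The Python sketch below (the function names are our own) tests membership in $t\,\partial\|x\|_1$ and spot-checks the defining subgradient inequality at random points.

```python
import math
import random


def in_scaled_l1_subdifferential(u, x, t=1.0, tol=1e-12):
    """Return True if u lies in t * (subdifferential of ||.||_1 at x)."""
    for ui, xi in zip(u, x):
        if xi != 0:
            # On the support the subdifferential is pinned to t * sign(x_i).
            if abs(ui - t * math.copysign(1.0, xi)) > tol:
                return False
        elif abs(ui) > t + tol:
            # Off the support any value in [-t, t] is allowed.
            return False
    return True


def subgradient_inequality_holds(u, x, trials=1000, seed=0):
    """Spot-check ||y||_1 >= ||x||_1 + <u, y - x> at random points y."""
    rng = random.Random(seed)
    fx = sum(abs(a) for a in x)
    for _ in range(trials):
        y = [rng.gauss(0.0, 3.0) for _ in x]
        rhs = fx + sum(ui * (yi - xi) for ui, yi, xi in zip(u, y, x))
        if sum(abs(a) for a in y) < rhs - 1e-9:
            return False
    return True


x = [2.0, 0.0, -1.0]
print(in_scaled_l1_subdifferential([1.0, 0.3, -1.0], x))        # True
print(in_scaled_l1_subdifferential([1.0, 1.5, -1.0], x))        # False: |u_2| > 1
print(in_scaled_l1_subdifferential([2.0, -1.9, -2.0], x, t=2))  # True
print(subgradient_inequality_holds([1.0, 0.3, -1.0], x))        # True
```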

II-A2 Cone and Polar Cone

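A subset $K \subseteq \mathbb{R}^n$ is called a cone if for every $x \in K$ and $\tau \ge 0$, we have $\tau x \in K$. For a cone $K$, the polar cone of $K$ is defined as

$$K^\circ = \big\{u \in \mathbb{R}^n : \langle u, x \rangle \le 0 \ \text{for all } x \in K\big\}.$$

The polar cone is always closed and convex. A subset $\Omega \subseteq \mathbb{S}^{n-1}$ is called spherically convex if $\Omega$ is the intersection of a convex cone with the unit sphere.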

II-A3 Tangent Cone and Normal Cone

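The tangent cone of a convex function $f$ at $x$ is defined as the set of descent directions of $f$ at $x$

$$T_f(x) = \big\{u \in \mathbb{R}^n : f(x + \tau u) \le f(x) \ \text{for some } \tau > 0\big\}.$$

The tangent cone of a proper convex function is always convex, but it may not be closed.

The normal cone of a convex function $f$ at $x$ is the polar of the tangent cone

$$N_f(x) = T_f(x)^\circ.$$

Suppose that $\partial f(x)$ is nonempty and $0 \notin \partial f(x)$; then the normal cone can also be written as the cone hull of the subdifferential [57, Theorem 23.7]

$$N_f(x) = \operatorname{cone}\big(\partial f(x)\big) = \{t u : t \ge 0,\ u \in \partial f(x)\}.$$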
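For the polyhedral function $f = \|\cdot\|_1$, membership in the tangent cone can be decided exactly: $f(x + \tau u) = f(x) + \tau f'(x; u)$ for all sufficiently small $\tau > 0$, where the directional derivative is $f'(x; u) = \sum_{i \in S} \operatorname{sign}(x_i) u_i + \sum_{i \notin S} |u_i|$ and $S$ is the support of $x$. Hence $u \in T_f(x)$ if and only if $f'(x; u) \le 0$. The Python sketch below (names are ours) implements this test and numerically checks the polarity relation $\langle w, u \rangle \le 0$ for $w \in t\,\partial f(x)$ and $u \in T_f(x)$, which is consistent with the normal cone being both the polar of the tangent cone and the cone hull of the subdifferential.

```python
import math
import random


def l1_directional_derivative(x, u):
    """f'(x; u) for f = ||.||_1."""
    return sum(math.copysign(1.0, xi) * ui if xi != 0 else abs(ui)
               for xi, ui in zip(x, u))


def in_l1_tangent_cone(x, u, tol=1e-12):
    """u is a descent direction of ||.||_1 at x iff f'(x; u) <= 0 (exact for polyhedral f)."""
    return l1_directional_derivative(x, u) <= tol


def random_scaled_subgradient(x, t, rng):
    """A random element of t * (subdifferential of ||.||_1 at x)."""
    return [t * math.copysign(1.0, xi) if xi != 0 else rng.uniform(-t, t) for xi in x]


def polarity_check(x, trials=2000, seed=0):
    """Check <w, u> <= 0 for random w in t*subdiff and random u in the tangent cone."""
    rng = random.Random(seed)
    checked = 0
    while checked < trials:
        u = [rng.gauss(0.0, 1.0) for _ in x]
        if not in_l1_tangent_cone(x, u):
            continue  # rejection sampling: keep only descent directions
        w = random_scaled_subgradient(x, rng.uniform(0.0, 3.0), rng)
        if sum(a * b for a, b in zip(w, u)) > 1e-9:
            return False
        checked += 1
    return True


x = [1.0, 0.0]
print(in_l1_tangent_cone(x, [-1.0, 0.5]))      # True: f'(x; u) = -0.5
print(in_l1_tangent_cone(x, [-1.0, 1.5]))      # False: f'(x; u) = 0.5
print(polarity_check([1.0, 0.0, -2.0, 0.0]))   # True
```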

II-B Geometric Measures

II-B1 Gaussian Width

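For any $\mathcal{T} \subseteq \mathbb{R}^n$, a popular way to quantify the “size” of $\mathcal{T}$ is through its Gaussian width

$$\omega(\mathcal{T}) = \mathbb{E} \sup_{x \in \mathcal{T}} \langle g, x \rangle, \qquad g \sim \mathcal{N}(0, I_n).$$

When $\mathcal{T} = K \cap \mathbb{S}^{n-1}$ for a cone $K$, we call $\omega(K \cap \mathbb{S}^{n-1})$ the spherical Gaussian width of $K$.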
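The Gaussian width can be estimated by Monte Carlo. As a simple sanity check (an example of our own), take the cone $L = \operatorname{span}(e_1, \dots, e_k) \subseteq \mathbb{R}^n$: the supremum of $\langle g, x \rangle$ over $x \in L \cap \mathbb{S}^{n-1}$ equals $\|(g_1, \dots, g_k)\|_2$, so $\omega(L \cap \mathbb{S}^{n-1}) = \sqrt{2}\,\Gamma(\frac{k+1}{2}) / \Gamma(\frac{k}{2})$ exactly. The Python sketch below compares the empirical average with this value. The squared width lies between $k - 1$ and $k$, consistent with the standard relation $\omega^2(C \cap \mathbb{S}^{n-1}) \le \delta(C) \le \omega^2(C \cap \mathbb{S}^{n-1}) + 1$ between the spherical Gaussian width and the statistical dimension $\delta$ of a convex cone, since a $k$-dimensional subspace has statistical dimension $k$.

```python
import math
import random


def spherical_width_of_coordinate_subspace(n, k, trials=10000, seed=1):
    """Monte Carlo estimate of w(L ∩ S^{n-1}) with L = span(e_1, ..., e_k) in R^n."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        g = [rng.gauss(0.0, 1.0) for _ in range(n)]
        # sup over unit x in L of <g, x> is attained at x = P_L g / ||P_L g||_2.
        total += math.sqrt(sum(gi * gi for gi in g[:k]))
    return total / trials


def exact_width(k):
    """E ||h||_2 for h ~ N(0, I_k), i.e. sqrt(2) * Gamma((k+1)/2) / Gamma(k/2)."""
    return math.sqrt(2.0) * math.exp(math.lgamma((k + 1) / 2.0) - math.lgamma(k / 2.0))


n, k = 30, 10                      # hypothetical sizes
estimate = spherical_width_of_coordinate_subspace(n, k)
print(estimate, exact_width(k))
```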

II-B2 Gaussian Distance and Gaussian Squared Distance

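Recall that the Euclidean distance from a point $x \in \mathbb{R}^n$ to a set $C \subseteq \mathbb{R}^n$ is defined as

$$\operatorname{dist}(x, C) = \inf_{u \in C} \|x - u\|_2.$$

We define the Gaussian distance to a set $C$ as

$$\eta(C) = \mathbb{E}\, \operatorname{dist}(g, C), \qquad g \sim \mathcal{N}(0, I_n).$$

Similarly, the Gaussian squared distance to $C$ is defined as

$$D(C) = \mathbb{E}\, \operatorname{dist}^2(g, C).$$

These two quantities are closely related:

$$D(C) - 1 \le \eta^2(C) \le D(C). \qquad (10)$$

The lower and upper bounds follow from Fact 4 (in Appendix E) and Jensen's inequality, respectively.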
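To illustrate the Gaussian distance $\eta(C) = \mathbb{E}\operatorname{dist}(g, C)$, the Gaussian squared distance $D(C) = \mathbb{E}\operatorname{dist}^2(g, C)$, and the sandwich $D(C) - 1 \le \eta^2(C) \le D(C)$, the Python sketch below estimates both quantities by Monte Carlo for $C = t\,\partial\|x\|_1$ with a sparse $x$ (the dimension, sparsity, and $t$ are hypothetical). On the support $S$ of $x$, the set $C$ pins coordinate $i$ to $t \operatorname{sign}(x_i)$, and off the support it allows $[-t, t]$, so $\operatorname{dist}^2(g, C) = \sum_{i \in S} (g_i - t \operatorname{sign}(x_i))^2 + \sum_{i \notin S} (|g_i| - t)_+^2$. The estimate of $D(C)$ is also compared with the closed form $s(1 + t^2) + (n - s) \cdot 2[(1 + t^2) Q(t) - t \varphi(t)]$, where $\varphi$ and $Q$ are the standard normal density and upper tail probability; this follows by integrating $(|Z| - t)_+^2$ against the Gaussian density.

```python
import math
import random


def dist_to_scaled_l1_subdiff(g, signs, t):
    """dist(g, t * subdiff ||x||_1), where signs[i] = sign(x_i) in {-1, 0, +1}."""
    sq = 0.0
    for gi, si in zip(g, signs):
        if si != 0:
            sq += (gi - t * si) ** 2          # set is the single point t * sign(x_i)
        else:
            sq += max(abs(gi) - t, 0.0) ** 2  # set is the interval [-t, t]
    return math.sqrt(sq)


def gaussian_distances(signs, t, trials=4000, seed=2):
    """Monte Carlo estimates of eta(C) = E dist(g, C) and D(C) = E dist^2(g, C)."""
    rng = random.Random(seed)
    s1 = s2 = 0.0
    for _ in range(trials):
        g = [rng.gauss(0.0, 1.0) for _ in signs]
        d = dist_to_scaled_l1_subdiff(g, signs, t)
        s1 += d
        s2 += d * d
    return s1 / trials, s2 / trials


def sq_dist_closed_form(s, n, t):
    """Exact D(t * subdiff ||x||_1) for an s-sparse x in R^n."""
    q = 0.5 * math.erfc(t / math.sqrt(2.0))                  # P(Z > t)
    phi = math.exp(-0.5 * t * t) / math.sqrt(2.0 * math.pi)  # standard normal density
    return s * (1.0 + t * t) + (n - s) * 2.0 * ((1.0 + t * t) * q - t * phi)


signs = [1, -1, 1] + [0] * 47       # a 3-sparse x in R^50 (hypothetical)
eta, D = gaussian_distances(signs, t=1.5)
print(eta ** 2 <= D, D - 1 <= eta ** 2)    # both sides of the sandwich
print(D, sq_dist_closed_form(3, 50, 1.5))  # Monte Carlo vs. closed form
```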

II-C Tools from Gaussian Analysis

Our analysis makes heavy use of two well-known results in Gaussian analysis. The first one is a comparison principle for Gaussian processes due to Gordon [47, Theorem 1.1]. This result provides a convenient way to bound the probability of an event from below by that of another one. It is worth noting that the original lemma can be naturally extended from discrete index sets to compact index sets, see e.g., [54, Lemma C.1].

Fact 1 (Gordon’s Lemma).

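[47, Theorem 1.1] Let $\{X_{ij}\}$ and $\{Y_{ij}\}$, $1 \le i \le p$, $1 \le j \le q$, be two centered Gaussian processes. If $X_{ij}$ and $Y_{ij}$ satisfy the following inequalities:

$$\mathbb{E} X_{ij}^2 = \mathbb{E} Y_{ij}^2 \ \text{for all } i, j; \qquad \mathbb{E} X_{ij} X_{ik} \ge \mathbb{E} Y_{ij} Y_{ik} \ \text{for all } i, j, k; \qquad \mathbb{E} X_{ij} X_{lk} \le \mathbb{E} Y_{ij} Y_{lk} \ \text{for all } i \ne l \text{ and all } j, k.$$

Then, we have

$$\mathbb{P}\Big( \bigcap_{i=1}^{p} \bigcup_{j=1}^{q} \{X_{ij} \ge \lambda_{ij}\} \Big) \le \mathbb{P}\Big( \bigcap_{i=1}^{p} \bigcup_{j=1}^{q} \{Y_{ij} \ge \lambda_{ij}\} \Big)$$

for all choices of $\lambda_{ij} \in \mathbb{R}$.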

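The second one is the Gaussian concentration inequality, which allows us to establish tail bounds for Lipschitz functions of Gaussian vectors. Recall that a function $F: \mathbb{R}^n \to \mathbb{R}$ is $L$-Lipschitz with respect to the Euclidean norm if

$$|F(x) - F(y)| \le L \|x - y\|_2 \quad \text{for all } x, y \in \mathbb{R}^n.$$

The Gaussian concentration inequality then reads as follows.

Fact 2 (Gaussian concentration inequality).

[58, Theorem 1.7.6] Let $g \sim \mathcal{N}(0, I_n)$, and let $F: \mathbb{R}^n \to \mathbb{R}$ be $L$-Lipschitz with respect to the Euclidean metric. Then for any $t \ge 0$, we have

$$\mathbb{P}\big\{F(g) \ge \mathbb{E} F(g) + t\big\} \le \exp\Big(-\frac{t^2}{2L^2}\Big)$$

and

$$\mathbb{P}\big\{F(g) \le \mathbb{E} F(g) - t\big\} \le \exp\Big(-\frac{t^2}{2L^2}\Big).$$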
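A quick numerical illustration of the Gaussian concentration inequality (our own example): $F(g) = \|g\|_2$ is 1-Lipschitz, so both tails of $F(g)$ around its mean should be bounded by $e^{-t^2/2}$. The Python sketch below uses the sample mean as a proxy for $\mathbb{E} F(g)$; the dimension, the number of trials, and the values of $t$ are arbitrary choices.

```python
import math
import random


def empirical_tails(n=50, trials=10000, ts=(0.5, 1.0, 1.5, 2.0), seed=3):
    """Empirical upper/lower tail probabilities of ||g||_2 around its sample mean."""
    rng = random.Random(seed)
    vals = [math.sqrt(sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(n)))
            for _ in range(trials)]
    mean = sum(vals) / trials  # Monte Carlo proxy for E F(g)
    upper = {t: sum(v >= mean + t for v in vals) / trials for t in ts}
    lower = {t: sum(v <= mean - t for v in vals) / trials for t in ts}
    return upper, lower


upper, lower = empirical_tails()
for t in upper:
    bound = math.exp(-t * t / 2.0)  # Lipschitz constant L = 1
    print(t, upper[t], lower[t], bound)
```

The empirical tails are typically far below the bound, which reflects that the inequality is dimension-free rather than tight for this particular $F$.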

III Main Results

In this section, we present our main results. Section III-A is devoted to analyzing the phase transition of the constrained recovery procedures (7) and (8). The phase transition of the penalized recovery procedure (9) is established in Section III-B. Section III-C explores the relationship between these two kinds of recovery procedures and illustrates how to choose the optimal tradeoff parameter. The proofs are included in the Appendices.

III-A Phase Transition of the Constrained Recovery Procedures

We start by analyzing the phase transition of the constrained recovery procedures (7) and (8). Recall that a recovery procedure succeeds if it has a unique optimal solution that coincides with the true value; otherwise, it fails. We first specify analytic conditions under which the constrained procedures (7) and (8) succeed or fail to recover the original signal and corruption. To this end, we have the following lemma.

Lemma 1 (Sufficient conditions for successful and failed recovery).

Armed with this lemma, our first theorem shows that the phase transition of the constrained recovery procedures (7) and (8) occurs around the sum of the squared spherical Gaussian widths of $T_f$ and $T_g$. This result ensures that the recovery is likely to succeed when the number of measurements exceeds the critical point. Conversely, the recovery is likely to fail when the number of measurements is smaller than the critical point.

Theorem 1 (Phase transition of constrained recovery procedures).

Consider the corrupted sensing model (6) with Gaussian measurements. Assume and are non-empty and closed. Define . If the number of measurements satisfies

| (14) |

then the constrained procedures (7) and (8) succeed with probability at least . If the number of measurements satisfies

| (15) |

then the constrained procedures (7) and (8) fail with probability at least .

Remark 1 (Relation to existing results).

In [24, Theorem 1], Foygel and Mackey have shown that when

the constrained procedures (7) and (8) succeed with probability at least . Here, the Gaussian squared complexity of a set is defined as with and . On the other hand, the third author and his coauthors [25, Theorem 1] have demonstrated that when

the constrained procedures (7) and (8) fail with probability at least . Since (or ) is very close to (or ), the above two results have essentially established the phase transition theory of the constrained procedures (7) and (8).

In this paper, however, we develop a new analytical framework that unifies the results in both the success and failure cases in terms of the Gaussian width, which makes the phase transition theory of the constrained recovery procedures more natural. More importantly, this framework can be easily applied to establish the phase transition theory of the penalized recovery procedure.

Remark 2 (Related works).

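In [37], Amelunxen et al. consider the following demixing problem

$$z_0 = x_0 + Q y_0,$$

where $x_0, y_0 \in \mathbb{R}^d$ are unknown structured signals and $Q \in \mathbb{R}^{d \times d}$ is a known orthogonal matrix. They have shown that the phase transition occurs around $\delta(T_f(x_0)) + \delta(T_g(y_0)) = d$ when the constrained recovery procedures are employed to solve this problem. Here, the statistical dimension of a closed convex cone $C \subseteq \mathbb{R}^d$ is defined as $\delta(C) = \mathbb{E}\|\Pi_C(g)\|_2^2$ with $g \sim \mathcal{N}(0, I_d)$, where $\Pi_C$ denotes the Euclidean projection onto $C$. Although the model assumptions of this demixing problem are different from ours, the results in the two cases are essentially consistent, since $\omega^2(C \cap \mathbb{S}^{d-1}) \le \delta(C) \le \omega^2(C \cap \mathbb{S}^{d-1}) + 1$ (by Fact 7 in Appendix E).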

Recently, Oymak and Tropp [56] consider a more general demixing model, in which two unknown structured signals are each transformed by a random matrix before being superimposed. They have demonstrated that the critical point of the constrained recovery procedures is nearly located at the sum of the statistical dimensions of the two tangent cones, for a large class of random matrices drawn from certain models. Their model assumptions are also different from ours, because the matrix $\sqrt{m}\, I_m$ acting on the corruption is deterministic in our case, which makes our analysis different from that of [56].

III-A1 How to evaluate the critical point?

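Theorem 1 has demonstrated that the phase transition of the constrained recovery procedures occurs around

$$m_c = \omega^2(T_f \cap \mathbb{S}^{n-1}) + \omega^2(T_g \cap \mathbb{S}^{m-1}).$$

A natural question then is how to determine the value of this critical point. To this end, it suffices to estimate $\omega(T_f \cap \mathbb{S}^{n-1})$ and $\omega(T_g \cap \mathbb{S}^{m-1})$, for which standard recipes are available; see, e.g., [51, 37, 24]. In fact, Facts 7 and 10 indicate that $m_c$ can be accurately approximated by

$$m_c \approx \inf_{t \ge 0} D\big(t\, \partial f(x^\star)\big) + \inf_{t \ge 0} D\big(t\, \partial g(v^\star)\big). \qquad (16)$$

To illustrate (16), we consider two typical examples: sparse signal recovery from sparse corruption and low-rank matrix recovery from sparse corruption. In the first example, we assume that $x^\star \in \mathbb{R}^n$ and $v^\star \in \mathbb{R}^m$ are $s$-sparse and $k$-sparse vectors, respectively, and take $f = \|\cdot\|_1$ and $g = \|\cdot\|_1$. Direct calculations (see Appendix D) lead to

$$\inf_{t \ge 0} D\big(t\, \partial \|x^\star\|_1\big) = \inf_{t \ge 0} \Big\{ s(1 + t^2) + (n - s)\sqrt{\tfrac{2}{\pi}} \int_t^\infty (u - t)^2 e^{-u^2/2}\, du \Big\}$$

and

$$\inf_{t \ge 0} D\big(t\, \partial \|v^\star\|_1\big) = \inf_{t \ge 0} \Big\{ k(1 + t^2) + (m - k)\sqrt{\tfrac{2}{\pi}} \int_t^\infty (u - t)^2 e^{-u^2/2}\, du \Big\}.$$

In the case of low-rank matrix recovery from sparse corruption, suppose $X^\star \in \mathbb{R}^{n_1 \times n_2}$ is a rank-$r$ matrix and $f = \|\cdot\|_*$ is the nuclear norm. The Gaussian squared distance of the signal in (16) is then given by

$$\inf_{t \ge 0} D\big(t\, \partial \|X^\star\|_*\big) = \inf_{t \ge 0} \Big\{ r(n_1 + n_2 - r + t^2) + \mathbb{E} \sum_{i=1}^{\min\{n_1, n_2\} - r} \big(\sigma_i(G) - t\big)_+^2 \Big\},$$

where $G$ is an $(n_1 - r) \times (n_2 - r)$ standard Gaussian matrix, and $\sigma_i(G)$ is the $i$-th largest singular value of $G$. The Gaussian squared distance of the corruption can be evaluated as in the first example. It is also possible to estimate the Gaussian widths in $m_c$ numerically by approximating the expectations in their definitions with empirical averages.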
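For the sparse example, the approximation $\inf_{t} D(t\,\partial\|x^\star\|_1) + \inf_{t} D(t\,\partial\|v^\star\|_1)$ of the constrained critical point can be evaluated in closed form, since $\sqrt{2/\pi}\int_t^\infty (u - t)^2 e^{-u^2/2}\,du = 2[(1 + t^2) Q(t) - t\varphi(t)]$, where $\varphi$ and $Q$ denote the standard normal density and upper tail probability. The Python sketch below minimizes over $t$ by ternary search, which is valid here because the objective is convex in $t$ (its second derivative is $2s + 4(d - s) Q(t) > 0$). Function names and the problem sizes in the usage lines are hypothetical.

```python
import math


def q(t):
    """Standard normal upper tail P(Z > t)."""
    return 0.5 * math.erfc(t / math.sqrt(2.0))


def pdf(t):
    """Standard normal density."""
    return math.exp(-0.5 * t * t) / math.sqrt(2.0 * math.pi)


def l1_sq_dist(t, s, d):
    """D(t * subdiff ||x||_1) for an s-sparse x in R^d."""
    tail = 2.0 * ((1.0 + t * t) * q(t) - t * pdf(t))  # E (|Z| - t)_+^2
    return s * (1.0 + t * t) + (d - s) * tail


def min_over_t(fun, lo=0.0, hi=10.0, iters=200):
    """Ternary search for the minimum value of a convex function on [lo, hi]."""
    for _ in range(iters):
        a, b = lo + (hi - lo) / 3.0, hi - (hi - lo) / 3.0
        if fun(a) < fun(b):
            hi = b
        else:
            lo = a
    return fun(0.5 * (lo + hi))


def predicted_critical_point(n, s, m, k):
    """Squared-distance approximation of m_c: x* s-sparse in R^n, v* k-sparse in R^m."""
    return (min_over_t(lambda t: l1_sq_dist(t, s, n))
            + min_over_t(lambda t: l1_sq_dist(t, k, m)))


print(min_over_t(lambda t: l1_sq_dist(t, 100, 1000)))   # roughly a third of 1000
print(predicted_critical_point(n=500, s=25, m=400, k=20))
```

Note that the corruption term lives in $\mathbb{R}^m$, so the predicted critical point itself depends on $m$; a phase diagram therefore compares this quantity with the sample size for each $(s, k)$ pair.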

III-B Phase Transition of the Penalized Recovery Procedure

We then study the phase transition theory of the penalized recovery procedure (9). As in the constrained case, we first establish sufficient conditions under which the penalized recovery procedure (9) succeeds or fails to recover the original signal and corruption.

Lemma 2 (Sufficient conditions for successful and failed recovery).

Our second theorem shows that the critical point of the penalized recovery procedure is determined by two Gaussian distances to scaled subdifferentials. This result asserts that the recovery succeeds with high probability when the number of measurements is larger than the critical point. On the other hand, the recovery fails with high probability when the number of measurements is below the critical point. In addition, the critical point depends on the tradeoff parameter.

Theorem 2 (Phase transition of penalized recovery procedure).

Consider the corrupted sensing model (6) with Gaussian measurements. Suppose that the subdifferential does not contain the origin. Let and . Define

If the number of measurements satisfies

| (20) |

then the penalized problem (9) succeeds with probability at least . If the number of measurements satisfies

| (21) |

then the penalized problem (9) fails with probability at least .

Remark 3 (Related works).

In [24], Foygel and Mackey have shown that, under Gaussian measurements, when

the penalized problem (9) succeeds with probability at least . Here . Very recently, Chen and Liu [27] have illustrated that, under sub-Gaussian measurements, when

the penalized problem (9) succeeds with high probability, where the condition involves an absolute constant and an upper bound on the sub-Gaussian norm of the rows of the sensing matrix. Their numerical experiments show that these two sufficient conditions for successful recovery are not sharp. To the best of our knowledge, Theorem 2 is the first result to establish the complete phase transition theory of the penalized recovery procedure (9), which resolves an important open problem in the literature; see, e.g., [23], [24], and [27].

III-B1 How to evaluate the critical point?

Theorem 2 has suggested that the phase transition of the penalized recovery procedure occurs around

The next question is how to calculate this critical point accurately. To this end, we have the following lemma.

Lemma 3.

The quantity can be bounded as

Proof.

Lemma 3 demonstrates that this critical point can be accurately estimated by

| (23) |

Thus it suffices to estimate the two quantities appearing in (23). There are also some standard methods to estimate these two quantities; see, e.g., [51, 37, 24]. To illustrate (23), we again consider two typical examples: sparse signal recovery from sparse corruption and low-rank matrix recovery from sparse corruption. In the first example, we assume that the signal and the corruption are $s$-sparse and $k$-sparse vectors, respectively; then we obtain (see Appendix D for details)

and

The parameter in (23) takes value from to . Consider the example of low-rank matrix recovery from sparse corruption, the signal is an -rank matrix, the first Gaussian squared distance in (23) can be calculated as

where $G$ is an $(n_1 - r) \times (n_2 - r)$ standard Gaussian matrix, with $n_1 \times n_2$ the size of the rank-$r$ signal, and $\sigma_i(G)$ is the $i$-th largest singular value of $G$. The calculations of the Gaussian width of the corruption and of the range of the parameter are similar to those in the first example. In addition, it is possible to estimate the Gaussian distance and the Gaussian width numerically by approximating the expectations in their definitions with empirical averages.

III-C Relationship between Constrained and Penalized Recovery Procedures and Optimal Choice of the Tradeoff Parameter

The theory of Lagrange multipliers [57, Section 28] asserts that solving the constrained recovery procedures is essentially equivalent to solving the penalized problem with the best choice of the tradeoff parameter. More precisely, this equivalence consists of the following two aspects [23, Appendix A]. On the one hand,

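(I). If $(x^\star, v^\star)$ is the unique optimal solution of the penalized problem (9) for some $\lambda > 0$, then it is also the unique optimal solution of the constrained problems (7) and (8).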

On the other hand, as a partial converse to (I), one has

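(II). If $(x^\star, v^\star)$ is the unique optimal solution of the constrained problems (7) and (8), then, under mild conditions, there exists some $\lambda > 0$ such that $(x^\star, v^\star)$ is also an optimal solution of the penalized problem (9).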

The above relations indicate that the performance of the constrained procedures can be interpreted as the best possible performance of the penalized problem. However, these results do not indicate how to select a tradeoff parameter that achieves this equivalence. Since we have identified the precise phase transitions of both constrained and penalized recovery procedures, we can explore the relationship between these two kinds of approaches quantitatively, which in turn yields an explicit strategy for choosing the optimal tradeoff parameter.

Theorem 3 (Relationship between constrained and penalized recovery procedures and optimal choice of the tradeoff parameter).

Assume that and are non-empty and closed, and that the subdifferentials and do not contain the origin. If , then we have

On the other hand, if , then we can choose the tradeoff parameter as

| (24) |

such that 222As shown in the proof of Theorem 3, the gap can be easily reduced by introducing an extra condition , namely, if we further let , then we have . It is worth noting that this condition is easy to satisfy in practical applications.

Combined with the phase transition results in Theorems 1 and 2, the first part of Theorem 3 implies that if the penalized procedure (9) is likely to succeed, then the constrained procedures (7) and (8) succeed with high probability. Similarly, the second part of Theorem 3 conveys that if the constrained procedures (7) and (8) are likely to succeed, then we can choose the tradeoff parameter as in (24) such that the penalized procedure (9) succeeds with high probability. Thus our results provide a quantitative characterization of the relations (I) and (II).

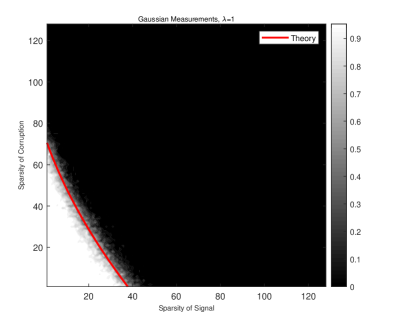

The results in Theorem 3 also admit a geometric interpretation in the phase transition diagram. The first part implies that the success region of the penalized recovery procedure is contained in that of the constrained procedures. The second part indicates that for any point in the success region of the constrained recovery procedures, we can find at least one tradeoff parameter such that this point also belongs to the success region of the corresponding penalized recovery procedure. In other words, the success region of the constrained procedures can be regarded as the union of the success regions of the penalized procedure over different tradeoff parameters. Fig. 1 illustrates this relationship in the case of sparse signal recovery from sparse corruption.

Moreover, Theorem 3 has suggested an explicit way to choose the best parameter predicted by the Lagrange theory, i.e.,

which is equivalent to

| (25) |

We provide some insights into this parameter selection strategy. Recall that Theorem 2 has demonstrated that the penalized procedure succeeds with high probability if the number of measurements exceeds its critical point. The strategy (24) then implies that we should pick the tradeoff parameter that makes the number of measurements required for successful recovery by the penalized procedure as small as possible. Another explanation comes from the relationship between these two kinds of recovery procedures. For a given corrupted sensing problem (with fixed $x^\star$ and $v^\star$), the first part of Theorem 3 indicates that the phase transition threshold of the penalized procedure is always bounded from below by that of the constrained ones. It is therefore natural to choose the tradeoff parameter that makes the gap between these two thresholds as small as possible, which also leads to the strategy (24).
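The Python sketch below illustrates this selection principle for the sparse example under an explicit assumption of ours: as a stand-in for the penalized threshold we use $\psi(\lambda) = \inf_{t \ge 0}[D(t\,\partial\|x^\star\|_1) + D(t\lambda\,\partial\|v^\star\|_1)]$, the usual squared-distance surrogate for the statistical dimension of the descent cone of $(x, v) \mapsto \|x\|_1 + \lambda\|v\|_1$. This surrogate need not coincide with the expression in (23). Because an infimum of a sum is at least the sum of the infima, $\psi(\lambda)$ never falls below the constrained surrogate $\inf_t D(t\,\partial\|x^\star\|_1) + \inf_t D(t\,\partial\|v^\star\|_1)$, and the gap closes when $\lambda$ equals the ratio $t_g / t_f$ of the two individual minimizers. The function names and problem sizes are hypothetical.

```python
import math


def q(t):
    return 0.5 * math.erfc(t / math.sqrt(2.0))  # P(Z > t)


def pdf(t):
    return math.exp(-0.5 * t * t) / math.sqrt(2.0 * math.pi)


def l1_sq_dist(t, s, d):
    """D(t * subdiff ||x||_1) for an s-sparse x in R^d."""
    return s * (1.0 + t * t) + (d - s) * 2.0 * ((1.0 + t * t) * q(t) - t * pdf(t))


def argmin_convex(fun, lo=0.0, hi=50.0, iters=200):
    """Ternary search; returns (minimum value, minimizer) of a convex function."""
    for _ in range(iters):
        a, b = lo + (hi - lo) / 3.0, hi - (hi - lo) / 3.0
        if fun(a) < fun(b):
            hi = b
        else:
            lo = a
    t = 0.5 * (lo + hi)
    return fun(t), t


def psi(lam, n, s, m, k):
    """Assumed surrogate for the penalized threshold (not necessarily the paper's (23))."""
    return argmin_convex(lambda t: l1_sq_dist(t, s, n) + l1_sq_dist(lam * t, k, m))[0]


n, s, m, k = 500, 25, 400, 20                   # hypothetical problem sizes
d_f, t_f = argmin_convex(lambda t: l1_sq_dist(t, s, n))
d_g, t_g = argmin_convex(lambda t: l1_sq_dist(t, k, m))
constrained = d_f + d_g                          # surrogate for the constrained threshold
lam_star = t_g / t_f                             # ratio of the individual minimizers
print(constrained, psi(lam_star, n, s, m, k))    # the two values agree
for lam in (0.25, 0.5, 1.0, 2.0, 4.0):
    print(lam, psi(lam, n, s, m, k))             # never below `constrained`
```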

Remark 4 (Related works).

In [24] and [27], the authors also provide an explicit way to select the tradeoff parameter . Specifically, their results have shown that measurements are sufficient to guarantee the success of the penalized procedure. In order to achieve the smallest number of measurements, it is natural to choose the as follows:

| (26) |

However, a visible mismatch between the penalized program with the strategy (26) and the constrained ones has been observed in their numerical experiments, which suggests that the choice (26) might not be optimal in the sense of the Lagrange theory. As shown in our simulations (Section IV), the empirical performance of the penalized procedure (9) with our optimal choice of the tradeoff parameter is nearly the same as that of the constrained convex procedures. Thus, our strategy (24) resolves another significant open problem raised in [23].

IV Numerical Simulations

In this section, we perform a series of numerical experiments to verify our theoretical results. We consider two typical structured signal recovery problems: sparse signal recovery from sparse corruption and low-rank matrix recovery from sparse corruption. In each case, we employ both constrained and penalized recovery procedures to reconstruct the original signal and corruption. Throughout these experiments, the convex optimization problems are solved using the CVX package for MATLAB [59, 60]. In addition to Gaussian measurements, we also consider sub-Gaussian measurements. (In fact, we have also tested other distributions of the sensing matrix entries, such as the sparse Rademacher distribution and Student's t-distribution; the results are quite similar, so we omit them here.)

IV-A Phase Transition of the Constrained Recovery Procedures

We first consider the empirical behavior of the constrained recovery procedures in the following two structured signal recovery problems.

IV-A1 Sparse Signal Recovery from Sparse Corruption

In this example, both the signal and the corruption are sparse, and we use the $\ell_1$-norm to promote their structures, i.e., $f(x) = \|x\|_1$ and $g(v) = \|v\|_1$. Suppose the $\ell_1$-norm of the true signal, $\|x^\star\|_1$, is known beforehand. We fix the sample size $m$ and the ambient signal dimension $n$. For each signal sparsity $s$ and each corruption sparsity $k$, we repeat the following experiment 20 times:

(1) Generate a signal vector with non-zero entries and set the other entries to 0. The locations of the non-zero entries are selected uniformly at random among all possible supports, and the non-zero entries are sampled independently from the normal distribution.

(2) Similarly, generate a corruption vector with non-zero entries and set the other entries to 0.

(3) For Gaussian measurements, draw the sensing matrix with i.i.d. standard normal entries. For sub-Gaussian measurements, draw the sensing matrix with i.i.d. symmetric Bernoulli entries.

(4) Solve the following constrained optimization problem (8):

(5) Set . Declare success if .
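The five steps above can be condensed into a short script. The following Python sketch is only an illustration under stated assumptions: it replaces CVX with SciPy's `linprog` (HiGHS backend) by writing the ℓ1 problem as a linear program; it assumes that (8) has the form minimize ‖v‖₁ subject to y = Φx + v and ‖x‖₁ ≤ ‖x⋆‖₁, which matches the assumption that the ℓ1-norm of the true signal is known; and it uses a hypothetical success test (relative error of the pair below `tol = 1e-3`), because the exact criterion in step (5) is not reproduced here. The helper names `sparse_vector`, `constrained_trial`, and `success_rate` are likewise illustrative.

```python
import numpy as np
from scipy.optimize import linprog


def sparse_vector(dim, nnz, rng):
    """Vector with nnz i.i.d. N(0, 1) entries on a uniformly random support."""
    z = np.zeros(dim)
    support = rng.choice(dim, size=nnz, replace=False)
    z[support] = rng.standard_normal(nnz)
    return z


def constrained_trial(m, n, s, k, rng, bernoulli=False, tol=1e-3):
    """Run steps (1)-(5) once; return True if the recovery is declared a success.

    Assumed form of (8): minimize ||v||_1 s.t. y = Phi x + v, ||x||_1 <= ||x_star||_1.
    """
    x_star = sparse_vector(n, s, rng)                 # step (1)
    v_star = sparse_vector(m, k, rng)                 # step (2)
    if bernoulli:                                     # step (3)
        Phi = rng.choice([-1.0, 1.0], size=(m, n))
    else:
        Phi = rng.standard_normal((m, n))
    y = Phi @ x_star + v_star

    # Step (4) as an LP in z = [x+, x-, v+, v-] >= 0, where x = x+ - x-, v = v+ - v-.
    c = np.concatenate([np.zeros(2 * n), np.ones(2 * m)])             # objective ||v||_1
    A_eq = np.hstack([Phi, -Phi, np.eye(m), -np.eye(m)])               # Phi x + v = y
    A_ub = np.concatenate([np.ones(2 * n), np.zeros(2 * m)])[None, :]  # ||x||_1 budget
    b_ub = [np.abs(x_star).sum()]
    res = linprog(c, A_ub=A_ub, b_ub=b_ub, A_eq=A_eq, b_eq=y,
                  bounds=(0, None), method="highs")
    if res.status != 0:
        return False
    x_hat = res.x[:n] - res.x[n:2 * n]
    v_hat = res.x[2 * n:2 * n + m] - res.x[2 * n + m:]

    # Step (5), hypothetical criterion: small relative error of the pair (x, v).
    err = np.sqrt(np.sum((x_hat - x_star) ** 2) + np.sum((v_hat - v_star) ** 2))
    scale = np.sqrt(np.sum(x_star ** 2) + np.sum(v_star ** 2))
    return bool(err <= tol * scale)


def success_rate(m, n, s, k, trials=20, seed=0, bernoulli=False):
    """Empirical probability of success over independent trials."""
    rng = np.random.default_rng(seed)
    return float(np.mean([constrained_trial(m, n, s, k, rng, bernoulli)
                          for _ in range(trials)]))
```

Sweeping `s` and `k` over a grid and recording `success_rate` yields an empirical phase diagram of the kind reported in Fig. 2, although the dimensions in a quick check such as `success_rate(80, 20, 2, 2)` are far smaller than those of the experiments.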

IV-A2 Low-rank Matrix Recovery from Sparse Corruption

In this case, the desired signal is an -rank matrix and the corruption is a -sparse vector. We use the nuclear norm to promote the structure of the signal. Suppose the nuclear norm of the true signal is known exactly. Let and consider signal matrices. Set the sample size . For each rank and each corruption sparsity , we repeat the following experiment 20 times:

(1) Generate an -rank matrix , where and are independent matrices with orthonormal columns.

(2) Generate a corruption vector with non-zero entries and set the other entries to 0.

(3) For Gaussian measurements, draw the sensing matrix with i.i.d. standard normal entries. For sub-Gaussian measurements, draw the sensing matrix with i.i.d. symmetric Bernoulli entries.

(4) Solve the following constrained problem (8):

(5) Set . Declare success if .
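Steps (1) to (3) of the low-rank experiment can be sketched in NumPy as follows. This assumes square d × d signal matrices observed through vec(X), a measurement model y = A vec(X) + v, and hypothetical function names; the nuclear-norm program of step (4) is omitted because it needs an SDP-capable solver (the paper uses CVX). One property of step (1) is worth noting: since U and V have orthonormal columns, every nonzero singular value of X = UVᵀ equals one, so the nuclear norm of X equals its rank.

```python
import numpy as np


def orthonormal_columns(d, r, rng):
    """d x r matrix with orthonormal columns, from the QR factorization of a Gaussian matrix."""
    q, _ = np.linalg.qr(rng.standard_normal((d, r)))
    return q


def lowrank_instance(d, r, m, k, rng, bernoulli=False):
    """Steps (1)-(3): rank-r X = U V^T, a k-sparse corruption v, and y = A vec(X) + v."""
    U = orthonormal_columns(d, r, rng)
    V = orthonormal_columns(d, r, rng)
    X = U @ V.T                                          # step (1)
    v = np.zeros(m)                                      # step (2)
    support = rng.choice(m, size=k, replace=False)
    v[support] = rng.standard_normal(k)
    shape = (m, d * d)                                   # step (3)
    if bernoulli:
        A = rng.choice([-1.0, 1.0], size=shape)
    else:
        A = rng.standard_normal(shape)
    y = A @ X.ravel() + v
    return X, v, A, y
```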

To compare the empirical behavior with our theoretical results, we overlay the phase transition curve predicted by Theorem 1:

| (27) |

Fig. 2 reports the empirical probability of success of the constrained procedures in these two structured signal recovery problems. Our theoretical predictions align closely with the empirical phase transitions under both Gaussian and Bernoulli measurements.

IV-B Phase Transition of the Penalized Recovery Procedure

We next consider the empirical phase transition of the penalized procedure in these two examples.

IV-B1 Sparse Signal Recovery from Sparse Corruption

The experimental settings are almost the same as in the constrained case, except that we require knowledge of neither nor , and in step (4) we solve the following penalized procedure instead of the constrained one:

Here we test two tradeoff parameters: and . To compare the empirical behavior with our theoretical results, we overlay the phase transition curve predicted by Theorem 2:

| (28) |
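For the sparse example, the penalized procedure can again be posed as a linear program. The sketch below assumes the standard form minimize ‖x‖₁ + λ‖v‖₁ subject to y = Φx + v, and it uses SciPy's `linprog` rather than CVX; the exact display and the two tested values of λ are not reproduced here, so λ = 1 in the check is only a placeholder. The function name `penalized_l1` is hypothetical. In contrast to the constrained procedure, neither ‖x⋆‖₁ nor ‖v⋆‖₁ enters the program.

```python
import numpy as np
from scipy.optimize import linprog


def penalized_l1(Phi, y, lam):
    """Solve the assumed penalized program
        minimize ||x||_1 + lam * ||v||_1  subject to  Phi x + v = y
    as an LP in z = [x+, x-, v+, v-] >= 0, with x = x+ - x- and v = v+ - v-.
    Returns the estimates (x_hat, v_hat).
    """
    m, n = Phi.shape
    c = np.concatenate([np.ones(2 * n), lam * np.ones(2 * m)])  # ||x||_1 + lam ||v||_1
    A_eq = np.hstack([Phi, -Phi, np.eye(m), -np.eye(m)])        # Phi x + v = y
    res = linprog(c, A_eq=A_eq, b_eq=y, bounds=(0, None), method="highs")
    if res.status != 0:
        raise RuntimeError(res.message)
    z = res.x
    return z[:n] - z[n:2 * n], z[2 * n:2 * n + m] - z[2 * n + m:]
```

To run step (4) for a given λ, generate (x⋆, v⋆, Φ) as in steps (1) to (3) and call `penalized_l1(Phi, Phi @ x_star + v_star, lam)`; the success test of step (5) is unchanged.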

IV-B2 Low-rank Matrix Recovery from Sparse Corruption

Similarly, the experimental settings are nearly the same as in the constrained case, except that in step (4) we recover the original signal and corruption via the following penalized procedure:

The tradeoff parameter is set to or . To compare our theory with the empirical results, we overlay the theoretical threshold (28). Fig. 4 shows the empirical probability of success of the penalized procedure for low-rank matrix recovery from sparse corruption. The theoretical threshold (28) predicts the empirical phase transition well for both tradeoff parameters.

IV-C Optimal Choice of the Tradeoff Parameter

In this subsection, we examine the empirical phase transition of the penalized procedure with the optimal tradeoff parameter . Again, we consider the same two examples.

IV-C1 Sparse Signal Recovery from Sparse Corruption

The experimental settings are the same as in the penalized case, except that in step (4) we solve the following penalized procedure:

with the tradeoff parameter selected by the optimal strategy (24).

IV-C2 Low-rank Matrix Recovery from Sparse Corruption

We carry out experiments similar to the penalized case, except that in step (4) we solve the following penalized procedure:

The tradeoff parameter is set to according to (24).

To compare our theory with the empirical results, we overlay the theoretical threshold (27) of the constrained procedures. Fig. 5 shows the empirical probability of success of the penalized procedure with the optimal parameter in both examples. The theoretical threshold of the constrained procedures accurately predicts the empirical phase transition of the penalized procedure under both Gaussian and Bernoulli measurements, which indicates that our strategy for choosing is optimal in the sense of Lagrange theory.

V Conclusion and Future Directions

This paper has developed a unified framework for establishing the phase transition theory of both the constrained and the penalized recovery procedures used to solve corrupted sensing problems in different scenarios. The analysis relies only on some well-known results from Gaussian process theory. Our theoretical results show that the phase transitions of these two recovery procedures are determined by geometric measures such as the spherical Gaussian width of a tangent cone and the Gaussian (squared) distance to a scaled subdifferential. We have also explored the relationship between the two procedures from a quantitative perspective, which in turn indicates how to pick the optimal tradeoff parameter in the penalized recovery procedure. The numerical experiments demonstrate close agreement between our theoretical results and the empirical phase transitions. For future work, we list two promising directions:

• Universality: Under Gaussian measurements, our results provide a thorough explanation of the phase transition phenomenon in corrupted sensing, and the Gaussian assumption is critical to establishing our main results. However, the numerical examples in Section IV suggest that the phase transition results for corrupted sensing are universal. An important question is therefore to establish the phase transition theory for corrupted sensing beyond Gaussian measurements.

• Noisy phase transition: Throughout the paper, we analyze the phase transition of corrupted sensing in the noiseless setting. It would be interesting to consider the noisy measurements , and to provide a precise error analysis for different convex recovery procedures. In [44], Donoho et al. considered the noisy compressed sensing problem with , and used penalized ℓ1-minimization to recover the original signal. They showed that the normalized MSE is bounded throughout an asymptotic region and unbounded throughout the complementary region, and that the boundary of this region is identical to the previously known phase transition for the noiseless problem. We may expect a non-asymptotic characterization of the normalized MSE for the noisy corrupted sensing problem, which would offer a new perspective on the phase transition results in the noiseless case.

Appendix A Proofs of Lemma 1 and Theorem 1

In this appendix, we present a detailed proof of the phase transition result for the constrained recovery procedures. For brevity, we denote and by and , respectively. The auxiliary lemma and facts used in the proofs are collected in Appendix E.

A-A Proof of Lemma 1

Proof.

It follows from the optimality condition for linear inverse problems [48, Section 4] or [51, Proposition 2.1] that is the unique optimal solution of (7) or (8) if and only if , which is equivalent to . Therefore, if , i.e.,

then the constrained procedures (7) and (8) succeed. If , i.e.,

| (29) |

then the constrained procedures (7) and (8) fail.

Obviously, (29) holds if . Since and are proper convex functions, and are convex, and hence is spherically convex. By assumption, and are nonempty and closed, so the desired sufficient condition (13) follows by directly applying the polarity principle (Fact 3).

∎

A-B Proof of Theorem 1

Proof.

Success case: Lemma 1 indicates that the constrained procedures (7) and (8) succeed if

Our goal then reduces to showing that if the number of measurements satisfies (14), then the above inequality holds with high probability. For clarity, we divide the proof into three steps.

Step 1: Problem reduction. We first apply Gordon’s Lemma to convert the probability of the targeted event to a surrogate which is convenient to handle. Observe that

| (30) |

For any and , define the following two Gaussian processes

and

where , , and are independent of each other. It is not hard to check that the above Gaussian processes satisfy the conditions of Gordon’s Lemma, i.e.,

where, in the last line, the equality holds when . It then follows from Gordon’s Lemma (Fact 1) that (by setting )

where the second inequality follows from the law of total probability and the third inequality holds by noting that when . Rearranging the above inequality leads to

| (31) |

Moreover, can be rewritten as

| (32) |

In the last line, we have let , .

Define

Let

If , then we have

If , then equation , which implies

Thus we have

| (33) |

Combining (30), (A-B), and (33) yields

| (34) |

Therefore, it is sufficient to establish the lower bound for .

Step 2: Establish the lower bound for . We then apply the Gaussian concentration inequality to establish the lower bound for .

Note that can be reformulated as

where, in the second line, we have let . It then follows from Lemma 4 that the function is a -Lipschitz function. To apply the Gaussian concentration inequality, it suffices to bound the expectation of . To this end,

| (35) |

The third line is due to the fact that (or ) and (or ) share the same distribution. The first inequality uses Fact 4, i.e., . The fifth line holds by Moreau’s decomposition theorem (Fact 5). The next two lines follow from Facts 6 and 7, respectively. The last two inequalities are due to the measurement condition (14).

Step 3: Complete the proof.

Combining the results in Steps 1 and 2 ((34) and (36)), we have

Therefore, we have established that when , the constrained procedures (7) and (8) succeed with probability at least .

Failure case: According to Lemma 1, the constrained procedures (7) and (8) fail if

where . So it suffices to show that if the number of measurements satisfies (15), then the above inequality holds with high probability. For clarity, the proof is similarly divided into three steps.

Step 1: Problem reduction. In this step, we also use Gordon’s Lemma to convert the probability of the targeted event to another one which is easy to handle.

Let and . Note first that for any , we have

The inequality is due to the max-min inequality. The last line has used the fact that when , otherwise it equals . Thus we obtain

| (37) |

We then use Gordon’s Lemma to bound the probability of the targeted event from below. To this end, for any and , define the following two Gaussian processes

and

where , , and are independent of each other. It can be easily checked that these two Gaussian processes satisfy the conditions in Gordon’s Lemma:

Here, in the last line, the equality holds when . It follows from Gordon’s Lemma (Fact 1) that (by setting )

which implies

| (38) |

Moreover, can be bounded as follows:

The inequality holds because of the max-min inequality. In the last line, we have let , and .

Define

Let⁴

⁴ The effective domain of an extended real-valued function is defined as .

Clearly, , since as . Then we have

| (39) |

Combining (37), (A-B), and (A-B), we obtain

| (40) |

Therefore, our goal reduces to establishing the lower bound for .

Step 2: Establish the lower bound for . In this step, we use the Gaussian concentration inequality to establish the lower bound for .

Similar to the success case, can be rewritten as

It then follows from Lemma 4 that is a -Lipschitz function. Moreover, its expectation can be bounded from below:

| (41) |

The second line holds because and have the same distribution. The first inequality is due to Jensen’s inequality. The fourth line uses Moreau’s decomposition theorem (Fact 5). The next two lines follow from Facts 6 and 7, respectively. The last two lines hold because of the measurement condition (15).

Thus we have established that when , the constrained procedures (7) and (8) fail with probability at least . This completes the proof.

∎

Appendix B Proofs of Lemma 2 and Theorem 2

In this appendix, we prove the phase transition result for the penalized recovery procedure. The auxiliary lemma and facts used in the proofs are collected in Appendix E.

B-A Proof of Lemma 2

Proof.

The penalized recovery procedure (9) can be reformulated as the following unconstrained form

Define . Clearly, is a proper convex function. It follows from [57, Theorems 23.8 and 23.9] that the subdifferential of at is given by

Moreover, attains its minimum at if and only if [57, Theorem 27.1]. Therefore, if

| (43) |

then the penalized problem (9) succeeds. If , i.e.,

then the penalized problem (9) fails.

Clearly, (43) holds if . Since and are proper convex functions, and are nonempty, closed convex sets, and hence is nonempty, closed, and spherically convex. A direct application of the polarity principle (Fact 3) yields the desired sufficient condition (19).

∎

B-B Proof of Theorem 2

Proof.

Success case: By Lemma 2, the penalized problem (9) succeeds if

where . So it is sufficient to show that if the number of measurements satisfies (20), then the above inequality holds with high probability. The proof is also divided into three steps.

Step 1: Problem reduction. In this step, we similarly use Gordon’s Lemma to convert the probability of the targeted event to another one which can be handled easily.

Let and . Note first that for any , we have

The inequality is due to the max-min inequality. The last step has used the fact that when , otherwise it equals . Thus we obtain

| (44) |

We then use Gordon’s Lemma to establish a lower bound for the probability of the targeted event. To this end, for any and , define the following two Gaussian processes

and

where , , and are independent of each other. Direct calculations show that these two defined Gaussian processes satisfy the conditions in Gordon’s Lemma:

In the last line, the equality holds when . It follows from Gordon’s Lemma (Fact 1) that (by setting )

which implies

| (45) |

Moreover, can be bounded from below as follows

The first inequality holds because of the max-min inequality. In the last line, recall that the joint cone is defined as , so we have let and for .

Since and are nonempty and closed, we choose such that

which leads to

In the last line, we have let , , and .

Define

and choose such that

Then we have

| (46) |

Combining (44), (B-B), and (46) yields

| (47) |

where the last line holds because , and hence . Thus it suffices to establish the lower bound for .

Step 2: Establish the lower bound for . In this step, we apply the Gaussian concentration inequality to establish the lower bound for .

It follows from Lemma 4 that the function is a -Lipschitz function. Its expectation can be bounded from below as follows:

The last line is due to the measurement condition (20).

Step 3: Complete the proof.

Combining (47) and (48), we have

This means that when , the penalized problem (9) succeeds with probability at least .

Failure case: According to Lemma 2, the penalized problem (9) fails if

So it is enough to show that if the number of measurements satisfies (21), then the above inequality holds with high probability. For clarity, the proof is similarly divided into three steps.

Step 1: Problem reduction. In this step, we employ Gordon’s Lemma to convert the probability of the targeted event to another one which is easy to handle.

Note that

| (49) |

We then use Gordon’s Lemma to establish a lower bound for the probability of the targeted event. To this end, for any and , define the following two Gaussian processes

and

where , , and are independent of each other. It is not hard to check that these two processes satisfy the conditions of Gordon’s Lemma:

In the last line, the equality holds when . It follows from Gordon’s Lemma (Fact 1) that (by setting )

which implies

| (50) |

Moreover, can be bounded from below as follows:

In the third line, we have chosen such that

In the last line, we have let , , and .

Define

and choose such that

Then we have

| (51) |

Combining (49), (B-B), and (51) yields

| (52) |

where we have used the fact that and hence . Thus it is enough to establish the lower bound for .

Step 2: Establish the lower bound for . In this step, we use the Gaussian concentration inequality to establish the lower bound for .

By Lemma 4, the function is a -Lipschitz function. Its expectation can be bounded:

| (53) |

The second line is due to the fact that and have the same distribution. The last inequality is due to the measurement condition (21).

Step 3: Complete the proof.

Putting (52) and (54) together, we have

Thus we have shown that when , the penalized problem (9) fails with probability at least . This completes the proof.

∎

Appendix C Proof of Theorem 3

In this appendix, we prove our last theorem, which establishes the relationship between the constrained and penalized recovery procedures and shows how to select the optimal parameter for the penalized method. The auxiliary lemma and facts used in the proof are collected in Appendix E.

Proof.

The core ingredient in the proof of Theorem 3 is the fact that the distance of a vector to the scaled subdifferential can always be bounded from below by that of this vector to the cone of the subdifferential (see Fig. 6). With this observation in mind, we have

| (55) |

Since, by assumption, and , we obtain

Substituting the above equalities into (55) and rearranging yields

| (56) |

We are now ready to establish the bounds in Theorem 3. First consider the case in which , then (56) implies that

The second inequality is due to the relation (10). The third line has used Fact 6 and the assumption that is closed (thus ). The next two lines follow from Facts 7 and 8, respectively. Thus we have shown that if , then .

We next consider the case where . It is easy to see that for , the two Gaussian distances in have the following lower bounds:

and

Then we can choose

such that the above two Gaussian distances attain their lower bounds simultaneously. The second lower bound is achievable because can take any positive number if . Thus attains its minimum at , i.e.,

Further, we have the following upper bound

| (57) | ||||

Here, the first inequality is due to Jensen’s inequality. The next two lines use Facts 6 and 7, respectively. The third equality holds because of Moreau’s decomposition theorem (Fact 5). The next inequality uses Fact 7 again. The last two inequalities follow from the condition . Rearranging completes the proof.

It is worth noting that if we impose an extra condition on (e.g., , which is easily satisfied in practical applications), then we obtain a sharper upper bound than (57):

The second inequality is due to the facts that for and for . The last two inequalities use the condition . Rearranging yields , which leads to a smaller gap than .

∎

Appendix D Evaluation of and for Typical Structured Signals and Corruptions

In Section III, we have shown that and can be accurately estimated by (16) and (23), respectively. It therefore suffices to evaluate two related functionals: the Gaussian squared distance to a scaled subdifferential and the spherical Gaussian width of a scaled subdifferential. Standard recipes for calculating these two quantities exist in the literature, see, e.g., [51, 37, 24]. For completeness, we calculate these two functionals for sparse vectors and low-rank matrices in this appendix.

D-A Calculation for Sparse Vectors

Let be an -sparse vector in , and let denote its support. We use the ℓ1-norm to promote the structure of sparse vectors. The scaled subdifferential of is given by

where represents the complement of . Then the Gaussian squared distance to a scaled subdifferential can be calculated as

Here is the soft thresholding operator defined as:

Let be a -sparse vector in , and let denote the support of . The scaled spherical part of the subdifferential is given by

Then the spherical Gaussian width of a scaled subdifferential is

The last line is due to the Cauchy-Schwarz inequality, i.e., . The equality holds when for .
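The Gaussian squared distance for the ℓ1-norm computed above admits a closed form. On the support, each coordinate contributes E(g − λ·sign)² = 1 + λ²; off the support, the standard Gaussian integral E[(|g| − λ)₊²] = 2[(1 + λ²)Q(λ) − λφ(λ)] applies, where Q is the standard normal tail function and φ the density. The standard-library Python sketch below evaluates this expression, checks it against direct Monte Carlo sampling of the soft-thresholding formula, and minimizes over λ on a grid; assuming Fact 10 takes the form of [37, Theorem 4.3], that minimum upper-bounds the statistical dimension of the ℓ1 descent cone. The function names and the grid are illustrative choices, not the paper's code.

```python
import math
import random


def gauss_sq_dist_l1(n, s, lam):
    """Closed form of E dist^2(g, lam * subdifferential of ||.||_1) at an s-sparse point.

    g ~ N(0, I_n). Support coordinates contribute 1 + lam^2 each; off-support
    coordinates contribute E soft(g_i, lam)^2 = 2[(1 + lam^2) Q(lam) - lam phi(lam)].
    """
    phi = math.exp(-lam ** 2 / 2) / math.sqrt(2 * math.pi)   # standard normal density
    tail = 0.5 * math.erfc(lam / math.sqrt(2))               # Q(lam) = P(g > lam)
    off = 2 * ((1 + lam ** 2) * tail - lam * phi)
    return s * (1 + lam ** 2) + (n - s) * off


def gauss_sq_dist_l1_mc(n, s, lam, trials=20000, seed=0):
    """Monte Carlo estimate using the soft-thresholding expression directly."""
    rnd = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        # By symmetry of g, the sign pattern on the support can be taken as all +1.
        on = sum((rnd.gauss(0, 1) - lam) ** 2 for _ in range(s))
        off = sum(max(abs(rnd.gauss(0, 1)) - lam, 0.0) ** 2 for _ in range(n - s))
        total += on + off
    return total / trials


def min_over_lambda(n, s, grid=None):
    """Grid minimization over lam >= 0; returns (minimum value, minimizer)."""
    grid = grid or [i / 1000 for i in range(5001)]
    return min((gauss_sq_dist_l1(n, s, t), t) for t in grid)
```

At λ = 0 the scaled subdifferential is {0}, so the closed form must return n; this is a quick consistency check on the formula.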

D-B Calculation for Low-rank Matrices

Let be an -rank matrix with . We use the nuclear norm , which is the sum of the singular values of , to promote the structure of low-rank matrices. Since the nuclear norm and the Gaussian distance are both unitarily invariant, we may assume without loss of generality that takes the form

where . The scaled subdifferential of is

Here is the spectral norm, which is equal to the maximum singular value of . Let

be a partition of Gaussian matrix with and . Then the Gaussian squared distance to a scaled subdifferential is

Here is the -th largest singular value. The expectation term involves the density of the singular values of the Gaussian matrix , and an exact formula for it seems difficult to obtain. However, asymptotic results exist in the literature, see, e.g., [37, 54]. In our simulations, we use the Monte Carlo method to calculate this expectation term.
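A NumPy sketch of such a Monte Carlo computation follows. It assumes the block form of the scaled subdifferential, λ∂‖X‖_* = {[λI_r, 0; 0, λW] : ‖W‖ ≤ 1} for a d₁ × d₂ matrix X in the canonical form above, under which the squared distance splits into ‖G₁₁ − λI‖²_F + ‖G₁₂‖²_F + ‖G₂₁‖²_F + Σᵢ(σᵢ(G₂₂) − λ)₊², the last term coming from clipping the singular values of G₂₂ at λ. The first three expectations equal r(d₁ + d₂ − r) + rλ² exactly, so only the singular-value term is sampled. Names, dimensions, and trial counts are illustrative.

```python
import numpy as np


def gauss_sq_dist_nuclear(d1, d2, r, lam, trials=500, seed=0):
    """Monte Carlo estimate of E dist^2(G, lam * subdifferential of ||.||_* at a rank-r point).

    Exact part: E||G11 - lam I||_F^2 + E||G12||_F^2 + E||G21||_F^2 = r(d1 + d2 - r) + r lam^2.
    Sampled part: E sum_i (sigma_i(G22) - lam)_+^2 with G22 of size (d1 - r) x (d2 - r).
    """
    rng = np.random.default_rng(seed)
    exact_part = r * (d1 + d2 - r) + r * lam ** 2
    acc = 0.0
    for _ in range(trials):
        G22 = rng.standard_normal((d1 - r, d2 - r))
        sv = np.linalg.svd(G22, compute_uv=False)        # singular values of G22
        acc += np.sum(np.maximum(sv - lam, 0.0) ** 2)    # soft-threshold the spectrum
    return exact_part + acc / trials
```

At λ = 0 the scaled subdifferential collapses to {0}, so the estimate should be close to E‖G‖²_F = d₁d₂, a convenient sanity check.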

Let be a -rank matrix. Since the Gaussian width is also unitarily invariant, we assume that takes the same form as . The scaled spherical part of the subdifferential is

Then the spherical Gaussian width of a scaled subdifferential is given by

The last line is due to the Cauchy-Schwarz inequality, i.e., . The notation denotes the expected length of an -dimensional vector with independent standard normal entries.
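For reference, the expected length has the closed form E‖g‖₂ = √2 Γ((n + 1)/2)/Γ(n/2), and it satisfies n/√(n + 1) ≤ E‖g‖₂ ≤ √n. The sketch below evaluates the closed form through `math.lgamma`, which avoids overflow for large n, and compares it with a Monte Carlo estimate; the function names are illustrative and this is not code from the paper.

```python
import math
import random


def expected_gaussian_length(n):
    """E||g||_2 for g ~ N(0, I_n), via sqrt(2) * Gamma((n+1)/2) / Gamma(n/2)."""
    return math.sqrt(2) * math.exp(math.lgamma((n + 1) / 2) - math.lgamma(n / 2))


def expected_gaussian_length_mc(n, trials=20000, seed=0):
    """Monte Carlo estimate of the same quantity."""
    rnd = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        total += math.sqrt(sum(rnd.gauss(0, 1) ** 2 for _ in range(n)))
    return total / trials
```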

Appendix E Auxiliary Lemma and Facts

In this appendix, we present the auxiliary lemma and facts used in the proofs of our main results.

Lemma 4.

Let and be two subsets and let be a constant. Then the functions

and

are -Lipschitz functions.

Proof.

Fact 3 (Polarity principle).

[56, Proposition 3.8] Let be a non-empty, closed, spherically convex subset of the unit sphere , and let be a linear map. If is not a subspace, then

where is the image of .

Fact 4 (Variance of Gaussian Lipschitz functions).

[58, Theorem 1.6.4] Consider a random vector and a Lipschitz function with Lipschitz norm (with respect to the Euclidean metric). Then,
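Fact 4 is the Gaussian Poincaré inequality specialized to Lipschitz functions; in the form assumed here it reads Var f(g) ≤ L², with no dependence on the dimension. The standard-library sketch below checks this empirically for two 1-Lipschitz functions, the Euclidean norm and the largest coordinate. It only illustrates the statement; the function names are hypothetical.

```python
import random
import statistics


def sample_variance(f, n, trials=20000, seed=0):
    """Sample variance of f(g) for g ~ N(0, I_n)."""
    rnd = random.Random(seed)
    values = [f([rnd.gauss(0, 1) for _ in range(n)]) for _ in range(trials)]
    return statistics.variance(values)


def euclidean_norm(x):
    """1-Lipschitz with respect to the Euclidean metric."""
    return sum(t * t for t in x) ** 0.5


def largest_coordinate(x):
    """1-Lipschitz: |max(x) - max(y)| <= max_i |x_i - y_i| <= ||x - y||_2."""
    return max(x)
```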

Fact 5 (Moreau’s decomposition theorem).

[61, Theorem 6.30] Let be a nonempty closed convex cone in and let . Then
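As a concrete instance of Fact 5, take K to be the nonnegative orthant; its polar cone is the nonpositive orthant, and both projections act coordinatewise. The sketch assumes the usual statement x = Π_K(x) + Π_{K°}(x) with ⟨Π_K(x), Π_{K°}(x)⟩ = 0, since the display itself is not reproduced here; the helper names are illustrative.

```python
import random


def proj_orthant(x):
    """Euclidean projection onto K = nonnegative orthant."""
    return [max(t, 0.0) for t in x]


def proj_polar_orthant(x):
    """Euclidean projection onto the polar cone K° = nonpositive orthant."""
    return [min(t, 0.0) for t in x]


def moreau_holds(x, tol=1e-12):
    """Check x = P_K(x) + P_K°(x) and <P_K(x), P_K°(x)> = 0."""
    p, q = proj_orthant(x), proj_polar_orthant(x)
    reconstructs = all(abs(a + b - t) <= tol for a, b, t in zip(p, q, x))
    orthogonal = abs(sum(a * b for a, b in zip(p, q))) <= tol
    return reconstructs and orthogonal
```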

Fact 6.

Fact 7.

[37, Proposition 10.2] Let be a convex cone. The Gaussian width and the statistical dimension are closely related:
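In the form given in [37, Proposition 10.2], Fact 7 states w(K ∩ S^{n−1})² ≤ δ(K) ≤ w(K ∩ S^{n−1})² + 1, where δ denotes the statistical dimension. For the nonnegative orthant K = ℝⁿ₊ one has δ(K) = n/2, and the supremum defining the width equals ‖g₊‖₂ whenever g has a positive entry. The Monte Carlo sketch below, assuming this standard form of the fact, estimates both quantities and checks the sandwich; the function name is illustrative.

```python
import random


def width_and_statdim_orthant(n, trials=20000, seed=0):
    """Monte Carlo estimates for K = R^n_+.

    w(K ∩ S^{n-1}) = E sup_{u in K, ||u||=1} <g, u>, which equals ||g_+||_2
    when g has a positive entry and max_i g_i otherwise.
    delta(K) = E ||Proj_K(g)||^2 = E ||g_+||^2, which is exactly n / 2.
    """
    rnd = random.Random(seed)
    w_sum = d_sum = 0.0
    for _ in range(trials):
        g = [rnd.gauss(0, 1) for _ in range(n)]
        sq = sum(t * t for t in g if t > 0)           # ||g_+||^2
        w_sum += sq ** 0.5 if sq > 0 else max(g)
        d_sum += sq
    return w_sum / trials, d_sum / trials
```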

Fact 8.

[51, Lemma 3.7] Let be a nonempty, closed convex cone. Then we have that

Fact 9 (Max–min inequality).

[57, Lemma 36.1] For any function and any , , we have
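For finite index sets, Fact 9 says that the largest row minimum of a table never exceeds the smallest column maximum. The standard-library sketch below checks this on random tables and shows a 2 × 2 example where the inequality is strict; the table layout (rows indexing the outer supremum, columns the inner infimum) and the function names are illustrative choices.

```python
import random


def max_min(table):
    """Supremum over rows of the infimum over columns."""
    return max(min(row) for row in table)


def min_max(table):
    """Infimum over columns of the supremum over rows."""
    return min(max(col) for col in zip(*table))


def random_check(rows=3, cols=4, trials=200, seed=0):
    """Return True if max_min <= min_max on every sampled random table."""
    rnd = random.Random(seed)
    for _ in range(trials):
        t = [[rnd.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]
        if max_min(t) > min_max(t):
            return False
    return True


print(max_min([[1, 0], [0, 1]]), min_max([[1, 0], [0, 1]]))  # prints: 0 1
```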

Fact 10.

[37, Theorem 4.3] Let be a norm on , and fix a non-zero point . Then

References

- [1] J. Wright, A. Y. Yang, A. Ganesh, S. S. Sastry, and Y. Ma, “Robust face recognition via sparse representation,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 31, no. 2, pp. 210–227, 2009.

- [2] E. Elhamifar and R. Vidal, “Sparse subspace clustering,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., Miami, FL, USA, 2009, pp. 2790–2797.

- [3] J. Haupt, W. U. Bajwa, M. Rabbat, and R. Nowak, “Compressed sensing for networked data,” IEEE Signal Process. Mag., vol. 25, no. 2, pp. 92–101, 2008.

- [4] V. Chandrasekaran, S. Sanghavi, P. A. Parrilo, and A. S. Willsky, “Rank-sparsity incoherence for matrix decomposition,” SIAM J. Optim., vol. 21, no. 2, pp. 572–596, 2011.

- [5] E. J. Candès, X. Li, Y. Ma, and J. Wright, “Robust principal component analysis?” J. ACM, vol. 58, no. 3, pp. 1–37, 2011.

- [6] M. Elad, J.-L. Starck, P. Querre, and D. L. Donoho, “Simultaneous cartoon and texture image inpainting using morphological component analysis (MCA),” Appl. Comp. Harmonic Anal., vol. 19, no. 3, pp. 340–358, 2005.

- [7] J. N. Laska, M. A. Davenport, and R. G. Baraniuk, “Exact signal recovery from sparsely corrupted measurements through the pursuit of justice,” in Proc. 43rd Asilomar Conf. Signals, Syst. Comput., Pacific Grove, CA, USA, 2009, pp. 1556–1560.

- [8] J. Wright and Y. Ma, “Dense error correction via ℓ1-minimization,” IEEE Trans. Inf. Theory, vol. 56, no. 7, pp. 3540–3560, 2010.

- [9] X. Li, “Compressed sensing and matrix completion with constant proportion of corruptions,” Construct. Approximation, vol. 37, no. 1, pp. 73–99, 2013.

- [10] N. H. Nguyen and T. D. Tran, “Exact recoverability from dense corrupted observations via ℓ1-minimization,” IEEE Trans. Inf. Theory, vol. 59, no. 4, pp. 2017–2035, 2013.

- [11] ——, “Robust lasso with missing and grossly corrupted observations,” IEEE Trans. Inf. Theory, vol. 59, no. 4, pp. 2036–2058, 2013.

- [12] P. Kuppinger, G. Durisi, and H. Bolcskei, “Uncertainty relations and sparse signal recovery for pairs of general signal sets,” IEEE Trans. Inf. Theory, vol. 58, no. 1, pp. 263–277, 2012.

- [13] C. Studer, P. Kuppinger, G. Pope, and H. Bolcskei, “Recovery of sparsely corrupted signals,” IEEE Trans. Inf. Theory, vol. 58, no. 5, pp. 3115–3130, 2012.

- [14] G. Pope, A. Bracher, and C. Studer, “Probabilistic recovery guarantees for sparsely corrupted signals,” IEEE Trans. Inf. Theory, vol. 59, no. 5, pp. 3104–3116, 2013.

- [15] C. Studer and R. G. Baraniuk, “Stable restoration and separation of approximately sparse signals,” Appl. Comp. Harmonic Anal., vol. 37, no. 1, pp. 12–35, 2014.

- [16] D. Su, “Data recovery from corrupted observations via l1 minimization,” 2016, [Online]. Available: https://arxiv.org/abs/1601.06011.

- [17] B. Adcock, A. Bao, J. D. Jakeman, and A. Narayan, “Compressed sensing with sparse corruptions: Fault-tolerant sparse collocation approximations,” SIAM/ASA J. Uncertain. Quantif., vol. 6, no. 4, pp. 1424–1453, 2018.

- [18] B. Adcock, A. Bao, and S. Brugiapaglia, “Correcting for unknown errors in sparse high-dimensional function approximation,” Numer. Math., vol. 142, no. 3, pp. 667–711, 2019.

- [19] H. Xu, C. Caramanis, and S. Sanghavi, “Robust PCA via outlier pursuit,” IEEE Trans. Inf. Theory, vol. 58, no. 5, pp. 3047–3064, 2012.

- [20] H. Xu, C. Caramanis, and S. Mannor, “Outlier-robust PCA: the high-dimensional case,” IEEE Trans. Inf. Theory, vol. 59, no. 1, pp. 546–572, 2013.

- [21] J. Wright, A. Ganesh, K. Min, and Y. Ma, “Compressive principal component pursuit,” Inf. Inference, J. IMA, vol. 2, no. 1, pp. 32–68, 2013.

- [22] Y. Chen, A. Jalali, S. Sanghavi, and C. Caramanis, “Low-rank matrix recovery from errors and erasures,” IEEE Trans. Inf. Theory, vol. 59, no. 7, pp. 4324–4337, 2013.

- [23] M. B. McCoy and J. A. Tropp, “Sharp recovery bounds for convex demixing, with applications,” Found. Comput. Math., vol. 14, no. 3, pp. 503–567, 2014.

- [24] R. Foygel and L. Mackey, “Corrupted sensing: Novel guarantees for separating structured signals,” IEEE Trans. Inf. Theory, vol. 60, no. 2, pp. 1223–1247, 2014.

- [25] H. Zhang, Y. Liu, and L. Hong, “On the phase transition of corrupted sensing,” in Proc. IEEE Int. Symp. Inf. Theory (ISIT), Aachen, Germany, 2017, pp. 521–525.

- [26] J. Chen and Y. Liu, “Corrupted sensing with sub-Gaussian measurements,” in Proc. IEEE Int. Symp. Inf. Theory (ISIT), Aachen, Germany, 2017, pp. 516–520.

- [27] J. Chen and Y. Liu, “Stable recovery of structured signals from corrupted sub-Gaussian measurements,” IEEE Trans. Inf. Theory, vol. 65, no. 5, pp. 2976–2994, 2019.

- [28] Z. Sun, W. Cui, and Y. Liu, “Recovery of structured signals from corrupted non-linear measurements,” in Proc. IEEE Int. Symp. Inf. Theory (ISIT), Paris, France, 2019, pp. 2084–2088.

- [29] ——, “Quantized corrupted sensing with random dithering,” in Proc. IEEE Int. Symp. Inf. Theory (ISIT), Los Angeles, USA, 2020, pp. 1397–1402.

- [30] D. L. Donoho, “Neighborly polytopes and sparse solutions of underdetermined linear equations,” Technical Report, Department of Statistics, Stanford University, 2005.

- [31] ——, “High-dimensional centrally symmetric polytopes with neighborliness proportional to dimension,” Discrete Comput. Geom., vol. 35, no. 4, pp. 617–652, 2006.

- [32] D. Donoho and J. Tanner, “Counting faces of randomly projected polytopes when the projection radically lowers dimension,” J. Amer. Math. Soc., vol. 22, no. 1, pp. 1–53, 2009.

- [33] D. L. Donoho and J. Tanner, “Neighborliness of randomly projected simplices in high dimensions,” Proc. Natl Acad. Sci. USA, vol. 102, no. 27, pp. 9452–9457, 2005.

- [34] ——, “Counting the faces of randomly-projected hypercubes and orthants, with applications,” Discrete Comput. Geom., vol. 43, no. 3, pp. 522–541, 2010.

- [35] M. A. Khajehnejad, W. Xu, A. S. Avestimehr, and B. Hassibi, “Analyzing weighted ℓ1 minimization for sparse recovery with nonuniform sparse models,” IEEE Trans. Signal Process., vol. 59, no. 5, pp. 1985–2001, 2011.

- [36] W. Xu and B. Hassibi, “Precise stability phase transitions for ℓ1 minimization: A unified geometric framework,” IEEE Trans. Inf. Theory, vol. 57, no. 10, pp. 6894–6919, 2011.

- [37] D. Amelunxen, M. Lotz, M. B. McCoy, and J. A. Tropp, “Living on the edge: Phase transitions in convex programs with random data,” Inf. Inference, J. IMA, vol. 3, no. 3, pp. 224–294, 2014.

- [38] D. Amelunxen and P. Bürgisser, “Intrinsic volumes of symmetric cones and applications in convex programming,” Math. Program., vol. 149, no. 1-2, pp. 105–130, 2015.

- [39] ——, “Probabilistic analysis of the Grassmann condition number,” Found. Comput. Math., vol. 15, no. 1, pp. 3–51, 2015.

- [40] L. Goldstein, I. Nourdin, and G. Peccati, “Gaussian phase transitions and conic intrinsic volumes: Steining the Steiner formula,” Ann. Appl. Probab., vol. 27, no. 1, pp. 1–47, 2017.

- [41] D. L. Donoho, A. Maleki, and A. Montanari, “Message-passing algorithms for compressed sensing,” Proc. Natl Acad. Sci. USA, vol. 106, no. 45, pp. 18914–18919, 2009.

- [42] M. Bayati and A. Montanari, “The dynamics of message passing on dense graphs, with applications to compressed sensing,” IEEE Trans. Inf. Theory, vol. 57, no. 2, pp. 764–785, 2011.

- [43] ——, “The LASSO risk for Gaussian matrices,” IEEE Trans. Inf. Theory, vol. 58, no. 4, pp. 1997–2017, 2012.

- [44] D. L. Donoho, A. Maleki, and A. Montanari, “The noise-sensitivity phase transition in compressed sensing,” IEEE Trans. Inf. Theory, vol. 57, no. 10, pp. 6920–6941, 2011.

- [45] D. L. Donoho, M. Gavish, and A. Montanari, “The phase transition of matrix recovery from Gaussian measurements matches the minimax MSE of matrix denoising,” Proc. Natl Acad. Sci. USA, vol. 110, no. 21, pp. 8405–8410, 2013.

- [46] S. Oymak and B. Hassibi, “Sharp MSE bounds for proximal denoising,” Found. Comput. Math., vol. 16, no. 4, pp. 965–1029, 2016.

- [47] Y. Gordon, “Some inequalities for Gaussian processes and applications,” Isr. J. Math., vol. 50, no. 4, pp. 265–289, 1985.

- [48] M. Rudelson and R. Vershynin, “On sparse reconstruction from Fourier and Gaussian measurements,” Comm. Pure Appl. Math., vol. 61, no. 8, pp. 1025–1045, 2008.

- [49] M. Stojnic, “Various thresholds for ℓ1-optimization in compressed sensing,” 2009, [Online]. Available: https://arxiv.org/abs/0907.3666.

- [50] S. Oymak and B. Hassibi, “New null space results and recovery thresholds for matrix rank minimization,” 2010, [Online]. Available: https://arxiv.org/abs/1011.6326.

- [51] V. Chandrasekaran, B. Recht, P. A. Parrilo, and A. S. Willsky, “The convex geometry of linear inverse problems,” Found. Comput. Math., vol. 12, no. 6, pp. 805–849, 2012.

- [52] M. Stojnic, “A framework to characterize performance of lasso algorithms,” 2013, [Online]. Available: https://arxiv.org/abs/1303.7291.

- [53] ——, “Regularly random duality,” 2013, [Online]. Available: https://arxiv.org/abs/1303.7295.

- [54] S. Oymak, C. Thrampoulidis, and B. Hassibi, “The squared-error of generalized LASSO: A precise analysis,” 2013, [Online]. Available: https://arxiv.org/abs/1311.0830.

- [55] C. Thrampoulidis, S. Oymak, and B. Hassibi, “The Gaussian min-max theorem in the presence of convexity,” 2014, [Online]. Available: https://arxiv.org/abs/1408.4837.

- [56] S. Oymak and J. A. Tropp, “Universality laws for randomized dimension reduction, with applications,” Inf. Inference, J. IMA, vol. 7, no. 3, pp. 337–446, 2018.

- [57] R. T. Rockafellar, Convex analysis. Princeton, NJ, USA: Princeton Univ. Press, 2015.

- [58] V. I. Bogachev, Gaussian measures. Providence, Rhode Island, USA: American Mathematical Soc., 1998, no. 62.

- [59] M. Grant and S. Boyd, “CVX: Matlab software for disciplined convex programming, version 2.1,” 2017, [Online]. Available: http://cvxr.com/cvx/.

- [60] ——, “Graph implementations for nonsmooth convex programs,” in Recent Advances in Learning and Control, ser. Lecture Notes in Control and Information Sciences, V. Blondel, S. Boyd, and H. Kimura, Eds. London, U.K.: Springer-Verlag, 2008, pp. 95–110.

- [61] H. H. Bauschke and P. L. Combettes, Convex analysis and monotone operator theory in Hilbert spaces. Cham, Switzerland: Springer, 2011, vol. 408.