Phase function methods for second order linear ordinary differential equations with turning points

Abstract

It is well known that second order linear ordinary differential equations with slowly varying coefficients admit slowly varying phase functions. This observation is the basis of the Liouville-Green method and many other techniques for the asymptotic approximation of the solutions of such equations. More recently, it was exploited by the author to develop a highly efficient solver for second order linear ordinary differential equations whose solutions are oscillatory. In many cases of interest, that algorithm achieves near optimal accuracy in time independent of the frequency of oscillation of the solutions. Here we show that, after minor modifications, it also allows for the efficient solution of second order differential equations which have turning points. That is, it is effective in the case of equations whose solutions are oscillatory in some regions and behave like linear combinations of increasing and decreasing exponential functions in others. We present the results of numerical experiments demonstrating the properties of our method, including some which show that it can used to evaluate many classical special functions in time independent of the parameters on which they depend.

Abstract

It is well known that second order linear ordinary differential equations with slowly varying coefficients admit slowly varying phase functions. This observation is the basis of the Liouville-Green method and many other techniques for the asymptotic approximation of the solutions of such equations. More recently, it was exploited by the author to develop a highly efficient solver for second order linear ordinary differential equations whose solutions are oscillatory. In many cases of interest, that algorithm achieves near optimal accuracy in time independent of the frequency of oscillation of the solutions. Here we show that, after minor modifications, it also allows for the efficient solution of second order differential equations which have turning points. That is, it is effective in the case of equations whose solutions are oscillatory in some regions and behave like linear combinations of increasing and decreasing exponential functions in others. We present the results of numerical experiments demonstrating the properties of our method, including some which show that it can used to evaluate many classical special functions in time independent of the parameters on which they depend. ordinary differential equations; fast algorithms; special functions.

keywords:

ordinary differential equations, fast algorithms, special functions1 Introduction

It has long been known that the solutions of second order linear ordinary differential equations with slowly varying coefficients can be approximated via slowly varying phase functions. This principle is the basis of many asymptotic techniques, including the venerable Liouville-Green method. If is smooth and strictly positive on the interval , then

| (1) |

where is defined via

| (2) |

are a pair of Liouville-Green approximates for the second order linear ordinary differential equation

| (3) |

When is slowly varying, so too is the function defined via (2), and this is the case regardless of the magnitude of . By contrast, the solutions of (3) become increasing oscillatory as grows in magnitude. For a careful discussion of the Liouville-Green method, including rigorous error bounds for the approximates (1), we refer the reader to Chapter 6 of [16].

If is a phase function for (3) — so that

| (4) |

are solutions of (3) — then satisfies Kummer’s differential equation

| (5) |

The Liouville-Green phase (2) is the (crude) approximation obtained by deleting the expression

| (6) |

from (5) and solving the resulting equation. In [18], an iterative scheme for refining the Liouville-Green phase is introduced. In order to avoid the complications which square roots bring, it constructs a sequence of asymptotic approximations to a solution of the differential equation

| (7) |

satisfied by functions of the form , where is the derivative of a phase function for (3). The formulas

| (8) |

defining the scheme of [18] make clear that when and its derivatives are slowly varying, the asymptotic approximations it produces will be as well. Error bounds which hold under various assumptions on the form of are given in [19, 17, 18]. In the important case in which

| (9) |

with smooth and positive, there exist solutions , of (3) such that if is the approximate phase function obtained from the iterate of the scheme (8), then

| (10) | ||||

The approximations generated by the scheme of [18] are closely connected to the (perhaps more familiar) classical ones obtained from the Riccati equation

| (11) |

satisfied by the logarithmic derivatives of the solutions of (3). We note that the solutions of (11) are related to those of (5) via the formula

| (12) |

In the event that takes the form (9), inserting the ansatz

| (13) |

into (11) and solving the equations which result from collecting like powers of leads to the formulas

| (14) | ||||

It is a classical result (a proof of which can be found in Chapter 7 of [15]) that there exist solutions , of (3) such that

| (15) | ||||

Since the formula defining depends on all of the previous iterates whereas the iterate depends only on the iterate , the approximations produced by the scheme (8) are typically much simpler than those which result from the classical scheme.

While asymptotic methods such as (8) and (13), (14) are extremely useful for generating symbolic expressions which represent the solutions of second order linear ordinary differential equations, they leave much to be desired as numerical methods. Such schemes suffer from at least two serious problems:

-

1.

Like all asymptotic methods, there is a limit to the accuracy which they can obtain, and this limit often falls short of the level of accuracy indicated by the condition number of the problem. Moreover, in the case of asymptotic methods for (3), the obtainable accuracy generally depends in a complicated way on behavior of the function and its derivatives, thus making it difficult to control numerical errors.

-

2.

The higher order approximations constructed by these techniques depend on higher order derivatives of , which cannot be calculated numerically without significant loss of accuracy.

In [7], an algorithm for constructing nonoscillatory phase functions which represent the solutions of equations of the form (3) when is smooth and strictly positive is described. It operates by solving Kummer’s equation (5) numerically. Most of the solutions of Kummer’s equation are oscillatory, and the principal challenge addressed by the algorithm of [7] is the identification of the values of the first two derivatives of a nonoscillatory phase function at a point on the interval . Once this has been done, (5) can be solved numerically using any method which applies to stiff ordinary differential equations. The algorithm of [7] does not require knowledge of the derivatives of and calculates the phase function to near machine precision regardless of the magnitude of .

A theorem which shows the existence of a nonoscillatory phase function for (3) in the case in which is of the form (9) appears in a companion paper [9] to [7]. It applies when the function , where is defined via

| (16) |

and is the inverse function of

| (17) |

has a rapidly decaying Fourier transform. More explicitly, the theorem asserts that if the Fourier transform of satisfies a bound of the form

| (18) |

then there exist functions and such that

| (19) |

| (20) |

and

| (21) |

is a phase function for

| (22) |

The definition of the function is ostensibly quite complicated; however, is, in fact, simply equal to twice the Schwarzian derivative of the inverse function of (17). This theorem ensures that, even for relatively modest values of , the phase function constructed by [7] is nonoscillatory. When is small, the algorithm of [7] is not guaranteed to produce a phase function which is nonoscillatory, but, in stark contrast to asymptotic methods, in all cases it results in one which approximates a solution of (5) with near machine precision accuracy.

Here, we describe a variant of the algorithm of [7] which allows for the numerical solution of second order linear ordinary differential equations with turning points. We focus on the case in which the coefficient in (3) is a smooth function on the interval with a single zero at the point , and further assume that

| (23) |

with a positive integer. Most equations with multiple turning points can be handled by the repeated use of the algorithm described here (and we consider two such examples in the numerical experiments discussed in this article). When is nonpositive on the entire interval , alternate methods are indicated (for example, the technique used in [8] to solve second order differential equations in the nonoscillatory regime).

Two problems arise when the algorithm of [7] is applied to second order differential equations of this type. First, Kummer’s equation (5) and the closely-related Riccati equation (11) encounter numerical difficulties in the nonoscillatory regime, where the values of are small. More explicitly, when these equations are solved numerically, the obtained values of only approximate it with absolute accuracy on the order of machine precision in the nonoscillatory region. Since is small there, this means that the relative accuracy of these approximations is extremely poor. That such difficulties are encountered is unsurprising given that appears in the denominator of two terms in Kummer’s equation, and considering the form (9) of the solutions of Riccati’s equation. We address this difficulty by solving Appell’s equation rather than Kummer’s equation. Appell’s equation is a certain third order linear ordinary differential equation satisfied by the product of any two solutions of (3), including the reciprocal of , which is the sum of the squares of the two functions appearing in (4). As the experiments discussed in this paper show, there is no difficulty in calculating with high relative accuracy in both the oscillatory and nonoscillatory regimes by solving Appell’s equation numerically.

The second difficulty addressed by the algorithm of this paper is that, in the case of turning points of even orders, there need not exist a slowly varying phase function which extends across the turning point. We include a simple analysis of the second order differential equation

| (24) |

in the case in which is an even integer to demonstrate this. To overcome this problem, we simply construct two nonoscillatory phase functions, each defined only on one side of the turning point. For turning points of odd order, a single phase function suffices.

The remainder of this article is structured as follows. Section 2 reviews certain basic facts regarding phase functions for second order linear ordinary differential equations. In Section 3, we analyze the second order differential equation (24) in order to show that we cannot expect nonoscillatory phase functions to extend across turning points of even order. Section 4 details our numerical algorithm. Numerical experiments conducted to demonstrate its properties are discussed in Section 5. We close with a few brief remarks in Section 6.

2 Phase functions for second order linear ordinary differential equations

In this section, we review several basic facts regarding phase functions for second order linear ordinary differential equations. We first discuss the case of a general second order linear ordinary differential equation in Subsection 2.1, and then we consider the effect of applying the standard transform which reduces such equations to their so-called “normal forms” in Subsection 2.2.

2.1 The general case

If satisfies the second order differential equation

| (25) |

then it can be easily seen that r(t) solves the Riccati equation

| (26) |

Letting yields the system of ordinary differential equations

| (27) |

The second of these can be rearranged as

| (28) |

and when this expression is inserted into the first equation in (27) we arrive at

| (29) |

It is clear that if does not vanish on and satisfies (29) there, then

| (30) |

where the implicit constant of integration and the choice of branch cut for the logarithm are irrelevant, is a solution of the Riccati equation (26) and

| (31) |

where

| (32) |

is a solution of the original ordinary differential equation (25). We note that Abel’s identity implies that the Wronskian of any pair of solutions of (25) is a constant multiple of (32).

Equation (29) is known as Kummer’s equation, after E. E. Kummer who considered it in [14] and we refer to its solutions as phase functions for the ordinary differential equation (25). When the coefficients and are real-valued, is also real-valued, and the functions

| (33) |

are linearly independent real-valued solutions of (25) which form a basis in its space of solutions.

If is a phase function and and are as in (33), then a straightforward computation shows that the modulus function

| (34) |

satisfies the differential equation

| (35) |

We refer to (35) as Appell’s equation in light of the article [1].

Given any pair of solutions of (25) whose Wronskian and the associated modulus function are nonzero on , it can be shown by a straightforward calculation that

| (36) |

satisfies (29) on that interval. It follows that any antiderivative of is a phase function for (25) on . Requiring that (33) holds determines up to an additive constant which is an integral multiple of , but further restrictions are required to determine it uniquely. We will nonetheless, by a slight abuse of terminology, refer to “the phase function generated by the pair of solutions ” with the understanding that the choice of constant is of no consequence.

2.2 Reduction to normal form

If solves (25), then

| (37) |

satisfies

| (38) |

where

| (39) |

Equation (38) is often called the normal form of (25). The effects of this transformation on the various formulas discussed above are easy to discern. In particular, Riccati’s equation becomes

| (40) |

Kummer’s equation now takes the form

| (41) |

Appell’s equation becomes

| (42) |

and it is the functions

| (43) |

which form a basis in the space of solutions of (38).

We observe that when (39) is inserted into (41) it simply becomes (29), so that Kummer’s equation is unchanged by this transformation. Similarly, (36) is invariant under (37), which implies that

| (44) |

So while the solution of Riccati’s equation and the basis functions are modified by the transformation (37), the phase function is invariant. It does not matter, then, whether the form (25) or its normal form (38) is considered. Either set of solutions (33) or (43) can be recovered once has been calculated.

3 Turning points of even order

We now consider the equation

| (45) |

in the case in which is an even integer. Since the solutions of (45) can be expressed as linear combinations of Bessel functions, we begin with a short discussions of Bessel’s differential equation in Subsection 3.1. We then exhibit a basis in the space of solutions of (45) in Subsection 3.2. Finally, in Subsection 3.3, we show that (45) does not admit a phase function which is nonoscillatory on all of the real line.

3.1 Bessel functions

Standard solutions of Bessel’s differential equation

| (46) |

include the Bessel functions

| (47) |

and

| (48) |

of the first and second kinds of order . In general, these functions are multi-valued, and the usual convention is to regard them as defined on the cut plane . We note, however, that in the case of integer values of , is entire.

We use to denote the phase function for Bessel’s differential equation generated by the pair . The following formula, which appears in [11], expresses the corresponding modulus function as the Laplace transform of a Legendre function:

| (49) |

Among other things, it implies that this modulus function is completely monotone on . A function is completely monotone on the interval provided

| (50) |

It is well known that is completely monotone on if and only if it is the Laplace transform of a nonnegative Borel measure (see, for instance, Chapter 4 of [20]). The derivation of (49) in [11] relies on a considerable amount of machinery. However, it can be verified simply by observing that the integral in (49) satisfies Appell’s differential equation and then matching the first few terms of the asymptotic expansions of the left- and right-hand sides of (49) at infinity.

Since the Wronskian of the pair is (this fact can be found in Chapter 7 of [3]),

| (51) |

In particular, Bessel’s differential equation admits a phase function which is slowly varying in the extremely strong sense that its derivative is the reciprocal of the product of and a completely monotone function.

3.2 A basis in the space of solutions

It follows from the expansions (47) and (48) that when the following formulas hold:

| (52) | ||||

The limits in (52) imply that functions and defined via

| (53) |

and

| (54) |

are continuously differentiable at . They are obviously smooth on the set and it follows from direct substitution that they satisfy (45) on . So they are, in fact, smooth on and they satisfy (45) there. It is also a consequence of the formulas appearing in (52) that the Wronskian of this pair of solutions is .

3.3 There is no phase function which is nonoscillatory on the whole real line

We now use the well-known asymptotic approximations

| (55) | ||||

for the Bessel functions (which can be found in Chapter 7 of [3], among many other sources) to show that there do not exist constants and such that the modulus function

| (56) |

for the pair of solutions

| (57) |

is nonoscillatory on both of the intervals and .

From (55) and the definitions of and given in the preceding subsection, we see that

| (58) |

In order to prevent oscillations on the interval , we must have . Without loss of generality we may assume that . But, it then follows from (53), (54) and (55) that

| (59) |

as . Clearly, the function appearing on the right-hand side of (59) oscillates on .

4 Numerical Algorithm

In this section, we describe our algorithm for solving the second order differential equation

| (60) |

in the case in which is smooth on with a single turning point . We will further assume that

| (61) |

with a positive integer and . Obvious modifications to the algorithm apply in the case in which is an odd integer and . When is even and , the solutions of (60) are nonoscillatory on the whole interval and other methods are indicated.

The algorithm takes as input the interval ; the location of the turning point ; an external subroutine for evaluating the coefficient and, optionally, its derivative ; a positive integer specifying the order of the piecewise Chebyshev expansions which are used to represent phase functions; and a double precision number which controls the accuracy of the obtained solution. In the case in which the derivative of the coefficient is not specified, is evaluated using spectral differentiation. This usually results in a small loss of precision; several experiments in which this effect is measured are discussed in Section 5.

The algorithm outputs one or more phase functions, which are represented via order piecewise Chebyshev expansions. By an order piecewise Chebyshev expansions on the interval , we mean a sum of the form

| (62) | ||||

where is a partition of , is the characteristic function on the interval and denotes the Chebyshev polynomial of degree . The phase functions define a basis in the space of solutions of (60), and using these basis functions it is straightforward to construct a solution of (60) satisfying any reasonable set of boundary conditions.

When the turning point is of odd order, our algorithm constructs a single phase function such that

| (63) |

form a basis in the space of solutions of (60). In this case, the algorithm returns as output three piecewise order Chebyshev expansions, one representing the phase function , one representing its derivatives and a third expansion representing . Using these expansions, the values of the functions and , as well the values of their first derivatives, can be easily evaluated. The phase function is not always given over the whole interval . The value of decays rapidly in the nonoscillatory regime, as decreases from to , and it can become smaller in magnitude than the smallest positive IEEE double precision number. In such cases, our algorithm constructs the phase function on a truncated domain , where is chosen so that the value of is close to the smallest representable IEEE double precision number at . As the experiments of Subsection 5.1 and 5.4 show, despite this potential limitation, phase functions can represent the solutions of (60) with high relative accuracy deep into the nonoscillatory region. Indeed, in our experience, the results are often better than standard solvers in this regime.

If the turning point is of even order, then two phase functions are constructed: the “left” phase function given on the interval and the “right” phase function given on . The output in this case comprises six piecewise order Chebyshev expansions: one for each of the functions , , , , and , as well as four real-valued coefficients such that the functions

| (64) |

and

| (65) |

form a basis in the space of solutions of (60).

We begin the description of our algorithm by detailing a fairly standard adaptive Chebyshev solver for ordinary differential equations which it utilizes in Subsection 4.1. We then describe the “windowing” procedure of [7], which is also a component of the scheme of this paper, in Subsection 4.2. The method proper is described in Subsection 4.3.

4.1 An adaptive solver for ordinary differential equations

We now describe a fairly standard adaptive Chebyshev solver which is repeatedly used by the algorithm of this paper. It solves the problem

| (66) |

where is smooth, and , It takes as input the interval , the point , the vector , and an external subroutine for evaluating the function . It uses the parameters and which are supplied as inputs to the algorithm of this paper.

It outputs piecewise order Chebyshev expansions, one for each of the components of the solution of (66). Throughout, the solver maintains a list of “accepted intervals” of . An interval is accepted if the solution is deemed to be adequately represented by an order Chebyshev expansion on that interval.

The solver operates in two phases. In the first, it constructs piecewise Chebyshev expansions representing the solution on . During this phase, a list of subintervals of to process is maintained. Assuming , this list initially contains . Otherwise, it is empty. The following steps are repeated as long as the list of subintervals of to process is not empty:

-

1.

Extract the subinterval from the list of intervals to process such that is as large as possible.

-

2.

Solve the terminal value problem

(67) If , then . Otherwise, the value of the solution at the point has already been approximated, and we use that estimate for .

If the problem is linear, a Chebyshev integral equation method (see, for instance, [10]) is used to solve (67). Otherwise, the trapezoidal method (see, for instance, [2]) is first used to produce an initial approximation of the solution and then Newton’s method is applied to refine it. The linearized problems are solved using a Chebyshev integral equation method.

In any event, the result is a set of order Chebyshev expansions

(68) approximating the components of the solution of (67).

-

3.

Compute the quantities

(69) where the are the coefficients in the expansions (68). If any of the resulting values is larger than , then we split the interval into two halves and and place them on the list of subintervals of to process. Otherwise, we place the interval on the list of accepted subintervals.

In the second phase, the solver constructs piecewise Chebyshev expansions representing the solution on . During this phase, a list of subintervals of to process is maintained. Assuming , this list initially contains . Otherwise, it is empty. The following steps are repeated as long as the list of subintervals of to process is not empty:

-

1.

Extract the subinterval from the list of intervals to process such that is as small as possible.

-

2.

Solve the initial value problem

(70) If , then . Otherwise, the value of the solution at the point has already been approximated, and we use that estimate for .

If the problem is linear, a straightforward Chebyshev integral equation method is used. Otherwise, the trapezoidal method is first used to produce an initial approximation of the solution and then Newton’s method is applied to refine it. The linearized problems are solved using a straightforward Chebyshev integral equation method.

In any event, the result is a set of order Chebyshev expansions

(71) approximating the components of the solution of (67).

-

3.

Compute the quantities

(72) where the are the coefficients in the expansions (71). If any of the resulting values is larger than , then we split the interval into two halves and and place them on the list of subintervals of to process. Otherwise, we place the interval on the list of accepted intervals.

At the conclusion of this procedure, we have order piecewise Chebyshev expansions representing each component of the solution.

4.2 The “windowing” procedure of [7]

We now describe a variant of the “windowing” procedure of [7], which is used as a step in the algorithm of this paper. It takes as input an interval on which the coefficient in (60) is nonnegative and makes use of the external subroutine for evaluating the coefficient which is specified as input to the algorithm of this paper. The derivative of is not needed by this procedure. It outputs the values of the first and second derivatives of a nonoscillatory phase function for (60) on the interval at the point . The algorithm can easily be modified to provide the values of these functions at instead.

We first construct a “windowed” version of which closely approximates on the leftmost quarter of the interval and is approximately equal to

| (73) |

on the rightmost quarter of the interval . More explicitly, we set

| (74) |

and define via the formula

| (75) |

where is given by

| (76) |

The constant in (76) is chosen so that

| (77) |

where denotes IEEE double precision machine zero. We next solve the terminal value problem

| (78) |

using the solver described in Subsection 4.1. Although it outputs order piecewise Chebyshev expansions representing and , it is only the values of these functions at the point which concern us. These are the outputs of the windowing procedure, and they closely approximate the values of and , where is the the desired nonoscillatory phase function for the original equation (an error estimate under mild conditions on is proven in [7]).

4.3 A phase function method for differential equations with a turning point

Our algorithm operates differently depending on the order of the turning point . If is of odd order (i.e., is an odd integer), then we first apply the windowing algorithm of Subsection 4.2 on the interval , where is nonnegative and the solutions of (60) oscillate. By doing so, we obtain the values of the first two derivatives of a nonoscillatory phase function for (60) at the point . We next compute the value of using Kummer’s equation:

| (79) |

The first three derivatives of the function

| (80) |

which satisfies Appell’s equation

| (81) |

at the point are given by

| (82) |

We now apply the algorithm of Subsection 4.1 find the solution of (81) which satisfies (82). Appell’s equation includes a term involving the derivative of the coefficient . If the values of are not specified, then they are computed using spectral differentiation. In particular, on each interval considered by the solver of Subsection 4.1, a Chebyshev expansion representing is formed and used to evaluate the derivatives of . The solution of Appell’s equation can become extremely large in the nonoscillatory interval . The adaptive solver used to solve Appell’s equation terminates its first phase early if the value of on the interval under consideration grows above . This has the effect of truncating the interval over which the phase function is given so that the value of is close to the smallest representable IEEE double precision number at the left endpoint. The output of the solver is three order Chebyshev expansions, one for each of the functions , and . At this stage, we construct order piecewise order Chebyshev expansions representing each of the functions

| (83) |

and

| (84) |

over an interval of the form with . An order piecewise Chebyshev expansion representing the phase function itself is constructed via spectral integration; the particular choice of antiderivative is determined by the requirement that .

In the case of a turning point of even order, we first apply the windowing algorithm of Subsection 4.2 to the interval in order to obtain the values of the first two derivatives of the phase function at the point . One this has been done, Appell’s equation is solved over the interval using the algorithm of Subsection 4.1 and order piecewise Chebyshev expansions of and are constructed using the obtained solution. An order piecewise Chebyshev expansion of is constructed via spectral integration; the value of at is taken to be . This procedure is repeated on the interval in order to construct order piecewise Chebyshev expansions of and its first two derivatives. The value of at is taken to be . It is easy to see that the coefficients and in (64) are given by the formulas

| (85) |

while the coefficients and in (65) are

| (86) |

5 Numerical Experiments

In this section, we present the results of numerical experiments which were conducted to illustrate the properties of the algorithm of this paper. The code for these experiments was written in Fortran and compiled with version 12.10 of the GNU Fortran compiler. The experiments were performed on a desktop computer equipped with an AMD Ryzen 3900X processor and 32GB of memory. No attempt was made to parallelize our code.

In the first four experiments described here, we considered problems with known solutions and so were able to measure the error in our obtained solutions by direct comparison with them. Moreover, in these experiments, we were able to compare the relative accuracy of our method with that predicted by the condition number of evaluation of the solution. By the relative accuracy predicted by the condition number of evaluation of a function , we mean the quantity , where

| (87) |

is the condition number of evaluation of and and is machine zero for IEEE double precision arithmetic. The product of machine zero and the condition number of a function is a reasonable heuristic for the relative accuracy which can be expected when evaluating using IEEE double precision arithmetic. A thorough discussion of the notion of “condition number of evaluation of a function” can be found, for instance, in [12].

Explicit formulas are not available for the solutions of the problems discussed in Subsections 5.5, 5.6 and 5.7. Consequently, we ran the conventional solver described in Subsection 4.1 using quadruple precision arithmetic (i.e., using Fortran REAL*16 numbers) to construct reference solutions in these experiments. We used extended precision arithmetic because we found in the course of conducting the experiments for this article that, in most cases, our method obtains higher accuracy than the conventional solver described in Subsection 4.1.

5.1 Airy functions

The experiments of this subsection concern the Airy functions Ai and Bi, which are standard solutions of the differential equation

| (88) |

Their definitions can be found in many sources (for instance, [16]). Obviously, (88) has a turning point of order at . The Airy functions oscillate on the interval , while Ai is decreasing on and Bi is increasing on .

We first used the algorithm of this section to construct two phase functions and for (88) using the algorithm of this paper. In the case of , the values of the derivative of the coefficient were supplied as input and in the case of , the derivative of was calculated via spectral differentiation. The phase functions were constructed on the interval , where . The right endpoint was chosen by our algorithm so that the value of the derivative of the phase function was extremely small there. In fact:

| (89) | ||||

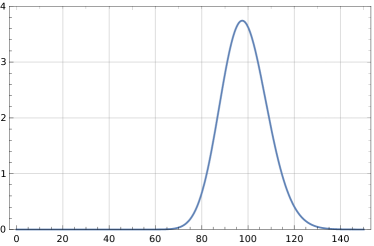

In a first experiment, we measured the relative error incurred when evaluating the function using each of the two phase functions for (88). We choose to consider rather than the Airy functions Ai and Bi individually because it does not vanish and its absolute value is nonoscillatory on the real axis (see Figure 1 for a plot of the absolute value of this function). It took approximately milliseconds to construct each of the phase functions.

In our first experiment, we used each of the two phase functions to evaluate at equispaced points on the interval , where the Airy functions are oscillatory. The plot on the left-hand side of the top row of Figure 6 gives the results. There, the relative errors in the values of calculated using each of the two phase functions are plotted, as are the relative errors predicted by the condition number of evaluation of this function.

In our next experiment, we used the each of the two phase functions to evaluate at equispaced points on the interval . The plot on the right-hand side of the top row of Figure 6 compares the relative errors which were incurred when doing so with those predicted by the condition number of evaluation of .

In a third experiment related to the Airy functions, we used the phase function for (88) to evaluate Ai at equispaced points in the interval using each of the two phase functions for (88). Since Ai is oscillatory on this interval and has many zeros there, it is not sensible to measure the relative errors in these calculations. Instead, we measured the absolute errors incurred when Ai was evaluated using and . The plot on the left-hand side of the second row of Figure 6 gives the results.

In a last experiment concerning the Airy functions, we used the two phase functions for (88) to evaluate Ai at equispaced points on the interval . The plot on the right-hand side of the second row of Figure 6 gives the results. There, the relative errors in the values of Ai calculated using each of the two phase functions are plotted, as are the relative errors predicted by the condition number of evaluation of Ai.

5.2 Bessel functions

In this set of experiments, we constructed phase functions for the normal form

| (90) |

of Bessel’s differential equation. When ,

| (91) |

is a turning point of order for (90). The coefficient in (90) is negative on the interval and positive on . Standard solutions include the Bessel functions of the first and second kinds and (definitions of which were given in Subsection 3.1).

In the first experiment, we sampled equispaced points in the interval and constructed phase functions and for each . In the case of , the derivative of the coefficient was supplied as input and in the case of , the derivative was calculated via spectral differentiation. We then used each of these phase functions to evaluate the function at equispaced points on the interval . We considered the function rather than the Bessel functions individually because its absolute value is nonoscillatory; indeed, it was shown in Section 3.1 that is completely monotone on . The plot on the left-hand side of Figure 7 gives the maximum observed relative errors as functions of , as well as the maximum relative error predicted by the condition number of evaluation of . The plot on the right-hand side of Figure 7 gives the time require to construct the phase function as a function of .

In a second set of experiments, for each , we measured the relative errors incurred when the phase functions and were used to evaluate at equispaced spaced points in the interval . Figure 8 gives the results. Each plot there corresponds to a different value of , and gives the relative errors incurred when the phase functions were used to evaluate , as well as the relative error predicted by its condition number of evaluation, as functions of .

5.3 Associated Legendre functions

The experiments described in this subsection concern the associated Legendre differential equation

| (92) |

Standard solutions of (92) defined on the interval include the Ferrer’s functions of the first and second kinds and (see, for instance, [4] or [16] for definitions). The Ferrer’s functions of negative orders are generally better behaved than those of positive orders — for instance, when is not an integer, is singular at whereas is not — and they coincide in the important case of integer values of and . When (this is a standard requirement), the normalizations

| (93) | ||||

are well-defined, and we prefer them to the standard Ferrer’s functions because the latter can take on extremely large and extremely small values, even when and are of modest sizes. We note that the norm of is when both and are integers.

We claim that the phase function generated by the pair

| (94) |

is nonoscillatory in a rather strong sense. To see this, we first observe that

| (95) | ||||

where is the modified Bessel function of the third kind of order . This formula appears in a slightly different form in Section 7.8 of [3], and it can be verified quite easily — for instance, by showing that the expression appearing on the right-hand side satisfies Appell’s differential equation and then verifying that the functions appearing on the left- and right-hand sides and their first two derivatives agree at . Next, we make the change of variables

| (96) |

to obtain

| (97) | ||||

We now observe that

| (98) | ||||

where

| (99) |

Since

| (100) |

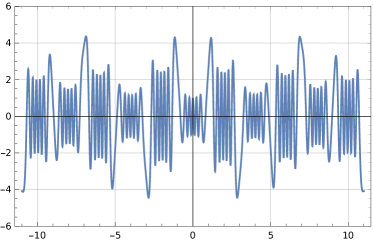

the function behaves similarly to a bump function centered at the point when is of large magnitude. It follows that the function defined in (99) resembles a bump function centered around the point , again assuming is of large magnitude. In particular, the function (98) is nonoscillatory in the sense that its Fourier transform resembles a rapidly decaying bump function centered at . Figure 2 contains plots of the functions and when and . The scaling by the reciprocal of was introduced because the magnitudes of these functions are quite large otherwise.

When performing numerical computations involving the associated Legendre functions, it is convenient to introduce a change of variables which eliminates the singular points of the equation (92) at . If solves (92), then

| (101) |

satisfies

| (102) |

We use to denote the phase function for (102) constructed via the algorithm of this paper with values of the derivative of the coefficient supplied, and to denote the phase function for (102) constructed via the algorithm of this paper with derivatives of the coefficient calculated using spectral differentiation.

In each experiment of this subsection, we first fixed a value of and sampled equispaced points in the interval . Then, for each , we constructed phase functions and using the algorithm of this paper. In the case of , the values of the derivatives of the coefficient were supplied to the algorithm, while spectral differentiation was used to evaluate in the case of . To be clear, the degree of the associated Legendre function was taken to be (it is necessary for ). The phase functions were given on intervals of the form with chosen by our algorithm so that the value of the derivative of there was close to the smallest representable IEEE double precision number. For each chosen value of , we used both of the corresponding phase functions to evaluate

| (103) |

at equispaced points on the interval . Figure 9 reports the results. Each row in that figure corresponds to one experiment. The plot on the left-hand side gives the maximum relative errors observed while evaluating (103) using each of the phase functions and , as well as the maximum relative error predicted by the condition number of evaluation of , as functions of . The plot on the right gives the time (in milliseconds) required to construct as a function of .

Remark 1.

Formula (97) implies that, after a suitable change of variables, the function is an element of one of the Hardy spaces of functions analytic in the upper half of the complex plane (see, for instance, [13] for a careful discussion of such spaces). Likewise, Formula (49) shows that is an element of one of these Hardy spaces as well. It is not a coincidence that these two functions give rise to slowly varying phase functions. A relatively straightforward generalization of the discussion in Subsection 5.3 shows that when a second order differential equation with slowly varying coefficients admits a solution in one of the classical Hardy spaces of functions analytic in the upper half of the complex plane (perhaps after a change of variables), it will have a slowly varying phase function. This argument applies to Bessel’s differential equation, the spheroidal wave equation, and many other second order linear ordinary differential equations.

5.4 Equations with turning points of higher orders

The experiments described in this subsection concerned the differential equation

| (104) |

with a positive integer greater than . In the event that is even, the functions and defined in (53) and (54) form a basis in the space of solutions of (104). It can be verified using a argument similar to that of Subsection 3.2 that

| (105) |

and

| (106) |

where is the modified Bessel function of the first kind,

| (107) |

form a basis in the space of solutions of (104) when is odd.

In each experiment, a value of was fixed and the algorithm of this paper was applied twice to evaluate

| (108) |

at equispaced points. The first time the algorithm was executed, the values of the derivative of the coefficient in (104) were supplied, and during the second application of the algorithm, the derivative of the coefficient was evaluated using spectral differentiation. The results appear in Figure 10. Each plot there corresponds to one value of and gives the relative errors in the calculated values of incurred by each variant of the algorithm, as well as the relative errors predicted by the condition number of evaluation of .

5.5 An equation whose coefficient has two bumps

In this experiment, we considered the boundary value problem

| (109) |

where

| (110) |

The function , a graph of which appears on the left-hand side of Figure 3, has a single zero at the point . A plot of the solution of (109) when appears on the right-hand side of Figure 3.

In this experiment, we sampled points in the interval . Then, for each we solved (109) using the algorithm of this paper with the values of specified. We also solved (109) by running the standard solver described in Subsection 4.1 using extended precision arithmetic. We then calculated the absolute difference in the obtained solutions at equispaced points in the interval . The plot on the left-hand side of Figure 11 gives the largest observed maximum absolute difference as a function of . The plot on the right-hand side gives the times required to solve (109) using the phase method as a function of .

5.6 An equation with three turning points

In this experiment, we considered the problem

| (111) |

where

| (112) |

The coefficient has three zeros on the interval :

| (113) | ||||

A graph of the function appears in Figure 4, as does a plot of the solution of (111) over the interval when . We truncated the plot of the solution because its magnitude grows quickly in the interval .

In this experiment, we first sampled points in the interval . Then, for each , we solved (111) using a phase function method. More explicitly, we constructed two phase functions, one defined on the interval and the second defined on the interval . The windowing algorithm was used on an interval around the point to determine the correct initial values for the phase function on and the windowing algorithm was applied on an interval around the point to determine the initial values for the phase function given on . The values of were specified. We next solved the problem (111) using the standard solver described in Subsection 4.1 running in extended precision arithmetic. Then, we evaluated the solution obtained with the phase method and the solution constructed by the standard solver at equispaced points on the interval and measured the quantity

| (114) |

Neither the absolute nor relative differences between the two solutions were appropriate for this problem because the solution of (111) oscillates on part of the interval and it is of large magnitude in another region. Figure 12 gives the results. On the left-hand side is a plot of as a function of , while the plot on the right-hand side gives the times required to solve (111) via the phase method as a function of .

5.7 An equation with many turning points

In this experiment, we considered the problem

| (115) |

where

| (116) |

The coefficient has zeroes in the interval , at the points

A graph of the function appears in Figure 5, as does a plot solution of (115) when .

We sampled points in the interval . Then, for each , we solved (111) using a phase function method and with the standard solver described in Subsection 4.1 running in extended precision arithmetic. To solve (115) using phase functions, we constructed eleven phase functions, one on each of the intervals

| (117) |

The values of were specified.

Figure 13 gives the results. The plot on the left-hand side gives the maximum absolute difference in the solutions obtained by the two solvers observed while evaluating them at equispaced points in the interval as a function of . The plot on the right-hand side gives the times required to solve (115) using the phase method as a function of .

6 Conclusions

We have described a variant of the algorithm of [7] for the numerical solution of a large class of second order linear ordinary differential equations, including many with turning points. Unlike standard solvers, its running time is largely independent of the magnitude of the coefficients appearing in the equation, and, unlike asymptotic methods, it consistently achieves accuracy on par with that indicated by the condition number of the problem.

One of the key observations of [7] is that it is often preferable to calculate phase functions numerically by simply solving the Riccati equation rather than constructing asymptotic approximations of them à la WKB methods. A principal observation of this paper is that, unlike standard asymptotic approximations of solutions of the Riccati equation which become singular near turning points, the phase functions themselves are perfectly well-behaved near turning points, and this can be exploited to avoid using different mechanisms to represent solutions in different regimes. It appears from numerical experiments that, in the vicinity of turning points, the cost of representing phase functions via Chebyshev expansions and the like is comparable to that of representing the solutions themselves. And, perhaps surprisingly, solutions can be represented to high relative accuracy deep into the nonoscillatory regime via phase functions.

We have also presented experiments showing that the algorithm of this paper can be used to evaluate many families of special functions in time independent of their parameters. It should be noted, however, that using precomputed piecewise polynomial expansions of phase functions for the second order linear ordinary differential equations satisfied by various families of special functions is much faster. Because these phase functions are slowly varying, the expansions are surprisingly compact. We refer the interested reader to [6], where such an approach is used to evaluate the associated Legendre functions.

Phase functions methods are highly useful for performing several other computations involving special functions. For instance, they can be used to calculate their zeros and various generalized Gaussian quadrature rules [5], and the author will soon report on a method for rapidly applying special function transforms using phase functions.

In a future work, the author will describe an extension of the algorithm of this paper which combines standard solvers for second order differential equations with phase function methods. The algorithm will operate by subdividing the solution domain into intervals and constructing a local basis of solutions on each interval. When the coefficients are of large magnitude, the local basis functions will be represented via phase functions, and the basis functions will be represented directly, via piecewise Chebyshev expansions, on intervals in which the coefficients are of small magnitude. One of the principal advantages of such an approach is that the solution interval can be subdivided in a more or less arbitrary fashion and each computation can be performed locally, thus allowing for large-scale parallelization.

7 Acknowledgments

The author would like to thank Alex Barnett and Fruzsina Agocs of the Flatiron Institute and Kirill Serkh of the University of Toronto for many thoughtful discussions and suggestions. The author was supported in part by NSERC Discovery grant RGPIN-2021-02613.

References

- [1] Appell, P. Sur la transformation des équations différentielles linéaires. Comptes Rendus 91 (1880), 211–214.

- [2] Ascher, U. M., and Petzold, L. R. Computer Methods for Ordinary Differential Equations and Differential-Algebraic Equations. Society for Industrial and Applied Mathematics, USA, 1998.

- [3] Bateman, H., and Erdélyi, A. Higher Transcendental Functions, vol. II. McGraw-Hill, 1953.

- [4] Bateman, H., and Erdélyi, A. Higher Transcendental Functions, vol. I. McGraw-Hill, 1953.

- [5] Bremer, J. On the numerical calculation of the roots of special functions satisfying second order ordinary differential equations. SIAM Journal on Scientific Computing 39 (2017), A55–A82.

- [6] Bremer, J. An algorithm for the numerical evaluation of the associated Legendre functions that runs in time independent of degree and order. Journal of Computational Physics 360 (2018), 15–28.

- [7] Bremer, J. On the numerical solution of second order differential equations in the high-frequency regime. Applied and Computational Harmonic Analysis 44 (2018), 312–349.

- [8] Bremer, J. A quasilinear complexity algorithm for the numerical simulation of scattering from a two-dimensional radilly symmetric potential. Journal of Computation Physics (2020).

- [9] Bremer, J., and Rokhlin, V. Improved estimates for nonoscillatory phase functions. Discrete and Continuous Dynamical Systems, Series A 36 (2016), 4101–4131.

- [10] Greengard, L. Spectral integration and two-point boundary value problems. SIAM Journal of Numerical Analysis 28 (1991), 1071–1080.

- [11] Hartman, P. On differential equations, Volterra equations and the function . American Journal of Mathemtics 95 (1973), 553–593.

- [12] Higham, N. J. Accuracy and Stability of Numerical Algorithms, second ed. SIAM, 2002.

- [13] Koosis, P. An introduction to spaces, second ed. Cambridge University Press, 2008.

- [14] Kummer, E. De generali quadam aequatione differentiali tertti ordinis. Progr. Evang. Köngil. Stadtgymnasium Liegnitz (1834).

- [15] Miller, P. D. Applied Asymptotic Analysis. American Mathematical Society, 2006.

- [16] Olver, F. W. Asymptotics and Special Functions. A.K. Peters, 1997.

- [17] Spigler, R. Asymptotic-numerical approximations for highly oscillatory second-order differential equations by the phase function method. Journal of Mathematical Analysis and Applications 463 (2018), 318–344.

- [18] Spigler, R., and Vianello, M. A numerical method for evaluating the zeros of solutions of second-order linear differential equations. Mathematics of Computation 55 (1990), 591–612.

- [19] Spigler, R., and Vianello, M. The phase function method to solve second-order asymptotically polynomial differential equations. Numerische Mathematik 121 (2012), 565–586.

- [20] Widder, D. The Laplace Transform. Princeton University Press, 1946.