Person Transfer in the Field: Examining Real World Sequential Human-Robot Interaction Between Two Robots

Abstract

With more robots being deployed in the world, users will likely interact with multiple robots sequentially when receiving services. In this paper, we describe an exploratory field study in which unsuspecting participants experienced a “person transfer,” a scenario in which they first interacted with a stationary robot before a mobile robot joined to complete the interaction. In our 7-hour study spanning 4 days, we recorded 18 person transfers involving at least 40 individuals. We also interviewed 11 participants after the interaction to further understand their experience. We used the recorded video and interview data to extract insights about in-the-field sequential human-robot interaction, such as mobile robot handovers, trust in person transfer, and the importance of the robots’ positions. Our findings expose pitfalls and present important factors to consider when designing sequential human-robot interaction.

I Introduction

As robots are tasked with increasingly complex human service scenarios, individual robots are unlikely to be designed to complete every aspect of a task due to functionality trade-offs or service requirements. Similar to existing human-human interactions in sandwich shops or hospitals, users would likely interact sequentially with multiple specialized robots to complete those tasks. In our prior work, we coined the term “person transfer” [1] to describe the act of transferring a user from one robot to another. We and others have explored various aspects of this atomic interaction, including robot-robot communication [2, 3, 4] and spatial formation [5].

Although this class of interaction is novel and fast approaching, little work has explored how these interactions will occur and be perceived outside of a laboratory setting. Prior work has suggested that laboratory environments may lower perceptions of risk [6] and heighten awareness of certain details of robot behaviors [7].

We conducted an exploratory field study to better understand how findings from controlled user studies might translate to the real world. We believe that real-world conditions, such as numerous people traversing the interaction environment and the absence of a scheduled appointment with the robots, can affect how people interact with robots and provide insights not available in a laboratory environment.

In this study, members of the unsuspecting public interacted with a stationary robot that summoned a mobile robot to deliver stickers to them. We recorded instances of these interactions and used the recordings to extract important themes such as trust and group membership.

II Related Work

Human-Robot Interaction (HRI) researchers have long deployed robots in the world and observed how the public interacts with them. Prior work has explored robots deployed on university campuses [8] and in supermarkets [9], hospitals [10], shopping malls [11], and museums [12, 13]. Rothenbücher et al. [14] created a Wizard-of-Oz-controlled autonomous vehicle and analyzed how pedestrians responded to the car’s actions. Sun et al. [15] developed a public robot art display and investigated how it attracted the public to interact with it. Edirisinghe et al. [16] tested how an autonomous robot could encourage purchases at a hat store. Tuyen et al. [17] explored the importance of robot gestures when presenting information in a food-ordering interaction in a cafe setting. Hauser et al. [18] showed that pedestrians found a quadruped robot that displayed canine-like body language to be more friendly and likable.

Besides deploying their own robots, researchers have also investigated the public’s reactions to and opinions of commercial robots. Reig et al. [19] interviewed the public about their attitudes towards autonomous vehicles deployed in their city. Han et al. [20] investigated the impact of sidewalk autonomous delivery robots on people with motor disabilities. Pelikan et al. [21] analyzed videos of autonomous delivery robots and explored the robots’ interactions with the “streetscape”: the people, objects, and interactions that happen on the street.

However, little work has explored how unsuspecting users interact with more than one robot. Shiomi et al. [22] described a field study in which two robots performed various tasks in a shopping mall. As part of a guidance task, the two robots coordinated their actions such that one robot would lead the guest to another robot that then welcomed the guest to a store. In a cross-cultural study, Fraune et al. [23] investigated how the number of sociable trash box robots affects the interaction and people’s perception of the robots in a cafeteria. They found that participants responded more positively to a single social robot and a group of functional robots than to a group of social robots and a single functional robot. Prior work has also deployed “Robot-Manzai,” a setup in which two robots act as a passive social medium, conversing with each other in front of bystanders to convey information, in science museums [13] and train stations [24]. Most prior field work focuses on other important aspects of human-robot group interaction and does not investigate how people react when sequentially interacting with multiple robots.

III Method

This study took advantage of an existing robot deployed on our university campus, the Roboceptionist. This social robot system was started in 2003 as a long-term robotic platform and has been involved in multiple prior HRI studies [8, 25, 26]. The Roboceptionist has undergone various changes throughout its deployment, most notably in its character and backstory. Its latest character is an agent named “Tank”.

To capture nuances missed in laboratory settings and to observe unique one-off situations arising from combinations of environmental factors, we used a qualitative observational approach. We recorded instances in which people interacted with our robots and reviewed them for notable interaction factors and pitfalls in our system. After some interactions, we approached the participants and interviewed them about their experiences. Because participants were likely to interact with Tank only once due to novelty, all participants experienced the same scenario, in which they received a sticker from the robots.

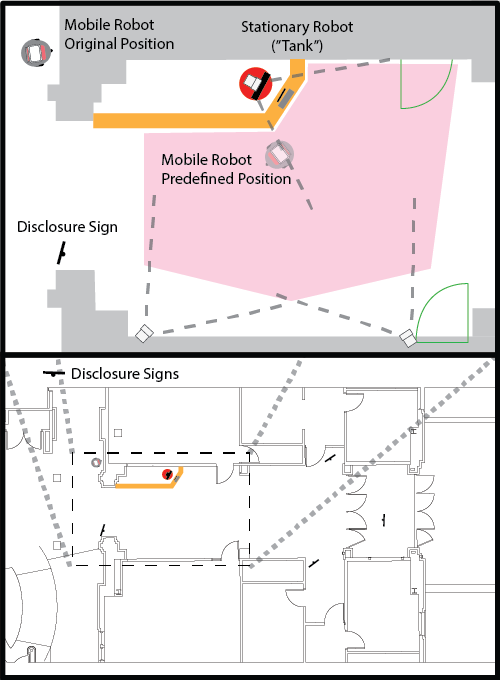

This study was conducted in the field, and passersby were recorded regardless of whether they interacted with the robot; thus, we took additional measures to protect participants’ privacy. We placed disclosure signs at the edge of the recording area to inform participants that they were being video and audio recorded. Passersby could also ask to have their data removed using an online form. While potential participants were informed that they were being recorded, we did not mention the involvement of the robots. This study was approved after a full board review by Carnegie Mellon University’s Institutional Review Board.

III-A Study Environment & System

This study took place in the entrance hallway of Newell-Simon Hall on Carnegie Mellon University’s Pittsburgh campus in the United States. A bird’s-eye view illustration of the layout is shown in Figure 2. The Roboceptionist system sits in a wooden booth in the entrance hallway. A partial wall in front of the booth creates a physical barrier between humans and the robot. A screen and keyboard for user input are placed on a wooden ledge directly in front of the robot. A small empty box, where stickers might plausibly have been kept, was placed near the keyboard. The Roboceptionist system is visible to visitors as soon as they enter the building from the main entrance. The second robot, a mobile robot, waited around the corner out of view and appeared only when summoned.

The Roboceptionist system consists of a stationary robot body (iRobot B21R) and a screen mounted on a pan-tilt unit that acts as the head. We leveraged the Roboceptionist’s ability to pan its head to convey gaze direction. The screen shows the animated face of “Tank”, a muscular character wearing a headset that it uses to simulate taking phone calls. We also added a microphone and speech-to-text capability to the system, which allowed users to talk to Tank. Speech-to-text was disabled while the keyboard was in use. We augmented the environment with three Azure Kinect cameras that provided information about people’s poses and locations.

The mobile robot was a custom robot built on a Pioneer P3DX base. An aluminum structure on top of the base held a small box containing the stickers. The mobile robot used a 2D lidar for navigation and had a front-mounted tablet that served as its face.

III-B System

The system uses a combination of ROS 1 [27] and Psi [28]. Psi handles video recording and pipes the data to the ROS 1 system, which controls the mobile robot and communicates with Tank’s original code base. We built upon Tank’s original code base [8, 25] and created an interface between its IPC framework and ROS 1. The mobile robot used the ROS Navigation stack [29] with a lattice local planner [30]. When the study was active, Tank’s system switched into a puppeteering mode, and the behaviors of both robots were controlled by a Behavior Machine (i.e., a custom hybrid of a hierarchical state machine and a behavior tree) [31]. We authored the following scenario using the Behavior Machine.

III-C Scenario

When the study was active, we enabled a special “sticker study” mode. In this mode, Tank told participants that it was giving out stickers as part of its reopening. If participants indicated that they wanted stickers, Tank informed them that it had run out and would summon another robot (“green mobile robot”) that had more stickers. The mobile robot then drove around the corner and joined the interaction. We piloted a group-joining algorithm, but it failed most of the time due to technical errors and the busyness of the hallway; in those cases, the mobile robot defaulted to a predefined position directly next to Tank, facing where the user was likely to stand.

After arriving and exchanging greetings with Tank, the mobile robot prompted the participants to take a sticker from a top-mounted tray. The experimenter, who was standing nearby and out of the way, could remotely command the robots to skip the prompt if participants had already taken the stickers. Afterward, the mobile robot told Tank that someone would be coming to refill Tank’s stickers. We added this brief conversation to observe how people reacted and moved while a group interaction with the two robots was in progress. After the conversation, the mobile robot told the participant that it had to leave, and it departed. Tank then looked at the participants and told them about the study, mentioning that they could approach the nearby experimenter if they had any questions.
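The scenario above can be read as a linear sequence of states with one optional branch (the experimenter’s skip command). The following is a minimal sketch in Python, assuming a simple polled state machine; the `State` names, the `step` and `run` functions, and the `sticker_taken` flag are hypothetical illustrations, not the Behavior Machine [31] API or the authors’ implementation.

```python
from enum import Enum, auto


class State(Enum):
    """Hypothetical states of the sticker scenario (illustrative only)."""
    OFFER = auto()            # Tank offers stickers
    OUT_OF_STICKERS = auto()  # Tank says it ran out and summons the mobile robot
    MOBILE_APPROACH = auto()  # mobile robot drives around the corner
    GREET = auto()            # the two robots exchange greetings
    PROMPT_TAKE = auto()      # mobile robot prompts the participant to take a sticker
    ROBOT_CHAT = auto()       # robots talk about refilling Tank's stickers
    MOBILE_LEAVE = auto()     # mobile robot says it has to leave and departs
    DEBRIEF = auto()          # Tank tells participants about the study
    DONE = auto()


def step(state: State, wants_sticker: bool = True,
         sticker_taken: bool = False) -> State:
    """Return the next state.

    `sticker_taken` stands in for the experimenter's remote skip command:
    if the participant already took a sticker, the prompt is skipped.
    """
    if state is State.OFFER:
        return State.OUT_OF_STICKERS if wants_sticker else State.DONE
    if state is State.OUT_OF_STICKERS:
        return State.MOBILE_APPROACH
    if state is State.MOBILE_APPROACH:
        return State.GREET
    if state is State.GREET:
        return State.ROBOT_CHAT if sticker_taken else State.PROMPT_TAKE
    if state is State.PROMPT_TAKE:
        return State.ROBOT_CHAT
    if state is State.ROBOT_CHAT:
        return State.MOBILE_LEAVE
    if state is State.MOBILE_LEAVE:
        return State.DEBRIEF
    return State.DONE


def run(wants_sticker: bool = True, sticker_taken: bool = False) -> list[State]:
    """Walk the scenario from OFFER to DONE and return the visited states."""
    state, trace = State.OFFER, [State.OFFER]
    while state is not State.DONE:
        state = step(state, wants_sticker, sticker_taken)
        trace.append(state)
    return trace
```

Calling `run(sticker_taken=True)` produces a trace without `PROMPT_TAKE`, mirroring the skip command; a real deployment would advance states on sensor and speech events rather than in a loop.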

IV Study Setup

IV-A Participants & Recruitment

Our study included the following types of participants (Groups AA and AB are subsets of Group A):

- Passersby: people who passed by our robots without interacting with them or observing any human-robot interactions.
- Observers: people who passed by and observed the robots interacting, e.g., by slowing down or stopping to watch someone else interact with them, but who did not directly interact with the robots.
- Participants Group A: people who took part in some or all of the multi-robot interaction. Some of them left the interaction partway through.
- Participants Group AA: people in Group A who also answered a few questions asked by the experimenter. This took less than 5 minutes, and these participants were not compensated.
- Participants Group AB: people in Group A who also participated in a 15-minute interview and completed a questionnaire after the interaction. These participants were compensated USD 10 for their time.

After participants in Group A completed the interaction, the experimenter approached them and asked if they had any questions before telling them about the interview. We were not able to intercept all participants; some left the building while we were supervising the mobile robot as it drove back to its starting point. Furthermore, not all participants agreed to be interviewed due to time commitments. We also did not interview participants who knew or recognized the experimenter. We offered approached participants two options: answering a few questions (AA) or completing a 15-minute interview (AB).

We recorded 7 hours and 2 minutes of complete study data (e.g., video data and robot state) over 4 weekdays. All sessions took place in November 2021 between 11:20 am and 6:30 pm. We enabled the microphone input only for some sessions. During pilot testing, we observed that the vast majority of people who walked through the hallway ignored Tank. Therefore, we added a sign in front of the Roboceptionist system advertising that it was giving away free stickers.

In our post-study analysis, we observed 18 person-transfer interactions involving at least 40 people. We obtained this number by counting the number of people in front of Tank when the mobile robot was summoned. We believe this is a lower bound: there were multiple instances in which people approached or interacted with the robots after the mobile robot arrived. We excluded instances where people did not successfully request the stickers or just stared at Tank. We interviewed 11 people (3 in group AA and 8 in group AB).

V Findings & Discussion

Consistent with the exploratory nature of this field study, we took an exploratory approach to our analysis. We watched the recordings of the 18 interactions and used the interviews to extract important themes.

V-A Overall Impressions

Overall, participants told us that they thought the interactions were “cool”, “fun”, and “really neat”. For most participants, the highlight of the interaction was the arrival of the mobile robot. This was also reflected in our observations in which participants expressed excitement when they saw the mobile robot approaching them.

V-B Trust in Person Transfers

One common theme raised by the interviewed participants was surprise that the mobile robot actually showed up with more stickers. Participants told us that they thought the promise of more stickers was a lie and that Tank was joking when it said another robot would come to give out stickers. Their rationales were that the joke fit Tank’s personality and that they had never seen another robot in the area before. Participants stated that they did not believe it until they saw the mobile robot turning the corner. This underscores how novel interacting with multiple robots currently is and the need for more research on this topic.

Participants’ behaviors confirmed this disbelief in the promise of another robot: in several interactions, after Tank informed a participant that it had run out of stickers, the participant(s) left the interaction. As the mobile robot moved towards Tank, the participants turned around and reengaged with the robots (Figure 3). Some participants intercepted the mobile robot and took the stickers from it as they left.

V-C Mobile Robot Handover

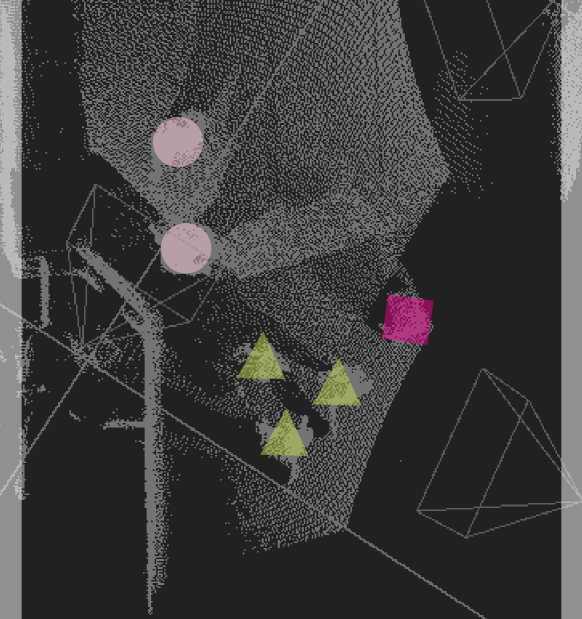

Picking up a sticker from our mobile robot can be viewed as a “handover” of an item from the mobile robot to participants. In out of person transfer instances, participants took the stickers while the mobile robot was still trying to reach its final position. In the remaining scenarios, participants took the stickers only after being prompted by the mobile robot. In out of scenarios, the mobile robot positioned itself farther away from the participants (an example of the distance is shown in Figure 5). In the one remaining scenario, the participants were recording the interaction and did not immediately pick up the stickers.

These results provide insight into how people perceive receiving items from a robot during handovers. Receiving an item requires the person to decide when to approach and take it. In most cases, participants interpreted the robot’s close approach as permission to take the items. When the robot stopped farther away, participants waited for its signal that it had completed its movement before taking the item.

V-D Change in Group Membership

Among the 18 sessions, we observed a few instances where someone joined an ongoing interaction. In one case, one participant (P4) joined two others who were already interacting with Tank. The first two participants requested the stickers and the mobile robot approached the group, moving to its predefined position. As the mobile robot got closer, P4 stepped back and moved away from the interaction. From the recording, we observed that P4 stood farther away and even stepped back as the two robots interacted with each other.

In the post-interaction interview, P4 mentioned that it was unclear to them whether they were part of the interaction because it was their friends who were initially interacting with the robot. As the mobile robot moved towards them, P4 was unsure whether the mobile robot knew they were part of the group, so they moved out of the way. This sequence shows that the joining behavior has the potential to influence people’s perception of group membership. A more socially appropriate position might have made P4 confident that they were part of the group and less likely to move away.

In one of the sessions, we observed a participant who stood to the side observing the interaction between the robot and another group of participants. Once the participant observed the group taking the stickers, they stepped in, took a sticker, and left. It was unclear if the person knew anyone who was initially interacting with the robots.

V-E Mobile Robot Joining Position

Although the mobile robot moved to the predefined position next to the stationary robot in most interactions, we still collected valuable feedback on this position choice. Participants generally found the chosen position appropriate. However, one participant who experienced the predefined position wished that the robot had been closer. They explained that although the robot stopped where they would expect a person to stand, it needed to come closer because it lacked the manipulation capabilities to hand over the object as a human would. Because they had to lean forward to take the object, they suggested that the robot should be only “one hand” (arm’s length) away rather than the “two hands” distance they experienced.
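The “one hand” versus “two hands” distinction suggests parameterizing the mobile robot’s stopping point by a standoff distance from the participant. Below is a minimal 2D geometric sketch, assuming a known participant position, the robot’s current position, and a hypothetical `standoff` parameter (for example, an estimated arm’s length); the `Pose2D` type and `handover_pose` function are illustrative and were not part of the study system. It ignores obstacles and group formation, which the surrounding findings show also matter.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float
    y: float
    theta: float  # heading in radians, world frame


def handover_pose(person_x: float, person_y: float,
                  robot_x: float, robot_y: float,
                  standoff: float) -> Pose2D:
    """Place the robot `standoff` meters from the person, on the line from
    the person toward the robot's current position, facing the person."""
    dx, dy = robot_x - person_x, robot_y - person_y
    dist = math.hypot(dx, dy)
    if dist == 0.0:
        raise ValueError("robot and person positions coincide")
    ux, uy = dx / dist, dy / dist        # unit vector from person toward robot
    gx = person_x + standoff * ux        # goal position on that line
    gy = person_y + standoff * uy
    theta = math.atan2(-uy, -ux)         # heading pointing back at the person
    return Pose2D(gx, gy, theta)
```

Approaching along the line from the robot’s current side keeps the trajectory short; a shorter `standoff` corresponds to the “one hand” distance the participant asked for, and reaching the goal pose could double as the readiness signal participants waited for.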

These findings, together with our observations of handovers and changes in groups, demonstrate the importance of a task-aware and human-aware joining strategy. A static position may cause the system to accidentally exclude group members, lead people to misinterpret the robot’s actions, and be poorly suited to certain tasks.

V-F Effects of Keyboard Inputs & Failures of Speech-to-Text

When we first designed this study, one concern was that needing to type on the keyboard would tie participants to their starting position at the keyboard. We ran sessions with keyboard input only and with both keyboard and microphone input.

Due to the combination of ambient sounds, hallway acoustics, and COVID-19 masking guidelines at the time of our study, our speech-to-text system was unreliable. We observed multiple participants who first attempted to use the speech system before stepping forward and interacting with the robot through the keyboard. In the interviews, participants corroborated our observations, describing the microphone as unreliable and explaining that they ended up using the keyboard.

We also found some evidence that participants would move back to interact with the first robot. We observed participants moving back to the keyboard to type responses such as “Thanks for the stickers”, “got the sticker”, and “thanks”. This supports our intuition that the keyboard anchors the human’s position during the interaction and changes its spatial dynamics. Future work should explore how such anchors influence user positions and movements during person transfers and multi-robot interaction. For example, a factory worker using a fixed tool may choose to stay with the first robot instead of moving.

V-G Robot-Robot Communication

After the participant picked up the sticker, the robots had a quick conversation. Participants had mixed reactions to the exchange. In a few cases, participants left after taking the stickers and did not wait for the conversation to finish. This is understandable, as the conversation added no value to the service. The majority of the participants waited for the robots to finish their conversation. When asked about the conversation, one participant mentioned that it was a good addition because it showed that the robots could communicate and were on the same team. Other participants described it as artificial and performative. One participant mentioned that they were surprised that the mobile robot could talk at all.

V-H Other People in the Scene

We were also interested in how bystanders and others in our study area interacted with our robots. In most sessions, when the mobile robot was close to Tank, we observed people walking around both the robots and the participants interacting with them. When there was a large gap between the participant and the mobile robot, we observed that most passersby simply walked through the gap, violating the human-robot group space (Figure 5). However, we did observe one instance in which a person deliberately walked around the mobile robot even though it was far away from the participant.

It was unclear how much the layout of the hallway affected whether bystanders decided to walk through the gap. In the scene shown in Figure 5, the mobile robot moved to the middle of the hallway and slightly blocked the default route through the hallway (as shown in Figure 6). While there was sufficient space behind the mobile robot for people to move through, it required large trajectory changes, and the space behind the robot was unlikely to fit a group of people. The function of the hallway as a means of moving between spaces may have also led bystanders to believe it was socially acceptable to violate the robots’ and participants’ O-space (the space between the participants in a group social interaction) [32]. However, without interviewing the bystanders, it is unclear if they perceived the robot as being in a group with the two participants.
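To make the O-space notion concrete, one simple heuristic (similar in spirit to vote-based F-formation detectors, and not the analysis used in this study, which relied on video review) approximates the O-space as a circle whose center is the average of points projected a fixed distance in front of each group member. The sketch below assumes 2D positions and headings for each member (human or robot) and treats a passerby’s walking path as a straight segment; `stride` and `radius` are hypothetical tuning parameters.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def o_space_center(members: Sequence[Tuple[float, float, float]],
                   stride: float) -> Point:
    """Estimate the O-space center: project each member (x, y, heading)
    `stride` meters along its heading and average the projected points."""
    if not members:
        raise ValueError("need at least one group member")
    px = [x + stride * math.cos(h) for x, _, h in members]
    py = [y + stride * math.sin(h) for _, y, h in members]
    return sum(px) / len(px), sum(py) / len(py)


def path_enters_o_space(start: Point, end: Point,
                        center: Point, radius: float) -> bool:
    """True if the straight walking segment start->end passes within
    `radius` of the estimated O-space center."""
    (sx, sy), (ex, ey), (cx, cy) = start, end, center
    dx, dy = ex - sx, ey - sy
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0.0:
        t = 0.0  # degenerate path: a single point
    else:
        # Parameter of the closest point on the segment, clamped to [0, 1].
        t = max(0.0, min(1.0, ((cx - sx) * dx + (cy - sy) * dy) / seg_len2))
    qx, qy = sx + t * dx, sy + t * dy
    return math.hypot(cx - qx, cy - qy) <= radius
```

For two members facing each other 2 m apart with `stride=1.0`, the center falls midway between them, and a path cutting straight through that midpoint is flagged, which is the kind of crossing passersby made through the gap in Figure 5. A robot could use such a check to prefer join positions that leave bystanders an easy route around the group.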

We also encountered situations in which other people in the scene purposely blocked the movement of the robot. As the hallway was filled with university students, we believe they were testing the capabilities and limitations of the robot. Such curiosity-driven obstruction is often observed when robots are first deployed [33].

VI Limitations & Future Work

As noted above, group membership was not static; it changed constantly as people joined and left the interaction. We observed cases where up to 7 people concurrently interacted with our robots and cases where people left and rejoined the group during the interaction. A simple join-position model, such as those presented in [5], is likely insufficient. Future work should explore how to model group membership and incorporate it into mobile robot position selection.

Our participants are not representative of the wider population; most were likely computer science students trying to get from one classroom to another. Furthermore, participants had seen Tank before, and we had to add extra signage to attract participants to interact with our robots. While our work is a first step in understanding person transfer in the field, future work should explore different tasks (e.g., guidance, food serving) and locations (e.g., hospitals, transportation hubs).

VII Study Implications

Combining our observational findings and interviews, we propose the following insights to consider when developing person transfers in the field:

1. In remote person-transfer scenarios (where the second robot is not co-located with the first robot) [1], the first robot should clearly communicate the existence of the second robot and its expected arrival time to instill confidence and trust.
2. Ensure that the second robot’s joining trajectory and position respect the existing group formation to avoid alienating group members.
3. For object handovers, the second robot should position itself about one arm’s length from the participant. The robot can also use its positioning to signal whether an object is ready to be taken.
4. While robot-robot communication that mimics social norms may be preferred [2], designers should account for situations in which participants skip the pleasantries after receiving their service.

VIII Conclusion

Our field study expanded our understanding of how person transfer works and is perceived in the field. Our findings demonstrate why a context-aware, socially appropriate mobile robot joining strategy is needed. A fixed-position strategy will likely be less suitable for some tasks, alienate certain group members, and fail to adapt to changes in group membership. Similarly, a poorly chosen position can invite interruptions from others who walk through the interaction space. We also found that it is important for the first robot to communicate clearly how and when a transfer will happen. For example, Tank’s dialog (“I ran out of stickers. Let me call green robot with more stickers”) likely did not instill confidence, as it did not convey when and how the second robot would arrive. This work exposed pitfalls and generated insights on how to design sequential human-robot interaction in the field. Our findings highlight the complexity of this space, and future work should continue to explore how autonomous robotic systems can address the challenges we raised.

Acknowledgment

We thank Reid Simmons and Greg Armstrong for helping us set up and use the Roboceptionist system. We also thank Prithu Pareek, Jodi Forlizzi, Selma Šabanović, the participants, and members of the Transportation, Bots, and Disability Lab at CMU.

References

- [1] X. Z. Tan, M. Luria, A. Steinfeld, and J. Forlizzi, “Charting sequential person transfers between devices, agents, and robots,” in Proceedings of the 2021 ACM/IEEE International Conference on Human-Robot Interaction, 2021, pp. 43–52.

- [2] X. Z. Tan, S. Reig, E. J. Carter, and A. Steinfeld, “From one to another: How robot-robot interaction affects users’ perceptions following a transition between robots,” in 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI). IEEE, 2019, pp. 114–122.

- [3] T. Williams, P. Briggs, and M. Scheutz, “Covert robot-robot communication: Human perceptions and implications for human-robot interaction,” Journal of Human-Robot Interaction, vol. 4, no. 2, pp. 24–49, 2015.

- [4] M. Söderlund, “The robot-to-robot service encounter: An examination of the impact of inter-robot warmth,” Journal of Services Marketing, vol. 35, no. 9, pp. 15–27, Dec. 2021.

- [5] X. Z. Tan, E. J. Carter, P. Pareek, and A. Steinfeld, “Group formation in multi-robot human interaction during service scenarios,” in Proceedings of the 2022 International Conference on Multimodal Interaction, 2022, pp. 159–169.

- [6] C. G. Morales, E. J. Carter, X. Z. Tan, and A. Steinfeld, “Interaction needs and opportunities for failing robots,” in Proceedings of the 2019 on Designing Interactive Systems Conference. ACM, 2019, pp. 659–670.

- [7] X. Z. Tan, M. Vázquez, E. J. Carter, C. G. Morales, and A. Steinfeld, “Inducing bystander interventions during robot abuse with social mechanisms,” in Proceedings of the 2018 ACM/IEEE International Conference on Human-Robot Interaction. ACM, 2018, pp. 169–177.

- [8] R. Gockley, A. Bruce, J. Forlizzi, M. Michalowski, A. Mundell, S. Rosenthal, B. Sellner, R. Simmons, K. Snipes, A. Schultz, and J. Wang, “Designing robots for long-term social interaction,” in 2005 IEEE/RSJ International Conference on Intelligent Robots and Systems, Aug. 2005, pp. 1338–1343.

- [9] B. Lewandowski, T. Wengefeld, S. Müller, M. Jenny, S. Glende, C. Schröter, A. Bley, and H.-M. Gross, “Socially compliant human-robot interaction for autonomous scanning tasks in supermarket environments,” in 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN). IEEE, 2020, pp. 363–370.

- [10] D. Hebesberger, T. Koertner, C. Gisinger, and J. Pripfl, “A long-term autonomous robot at a care hospital: A mixed methods study on social acceptance and experiences of staff and older adults,” International Journal of Social Robotics, vol. 9, no. 3, pp. 417–429, 2017.

- [11] T. Kanda, M. Shiomi, Z. Miyashita, H. Ishiguro, and N. Hagita, “An affective guide robot in a shopping mall,” in Proceedings of the 4th ACM/IEEE international conference on Human robot interaction, 2009, pp. 173–180.

- [12] I. R. Nourbakhsh, C. Kunz, and T. Willeke, “The mobot museum robot installations: A five year experiment,” in Proceedings 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2003)(Cat. No. 03CH37453), vol. 4. IEEE, 2003, pp. 3636–3641.

- [13] M. Shiomi, T. Kanda, H. Ishiguro, and N. Hagita, “Interactive humanoid robots for a science museum,” in Proceedings of the 1st ACM SIGCHI/SIGART conference on Human-robot interaction. ACM, 2006, pp. 305–312.

- [14] D. Rothenbücher, J. Li, D. Sirkin, B. Mok, and W. Ju, “Ghost driver: A field study investigating the interaction between pedestrians and driverless vehicles,” in 2016 25th IEEE international symposium on robot and human interactive communication (RO-MAN). IEEE, 2016, pp. 795–802.

- [15] Q. Sun, Y. Guo, Z. Yao, and H. Mi, “Yousu: A mythical character robot design for public scene interaction,” in 2023 32nd IEEE International Conference on Robot and Human Interactive Communication (RO-MAN). IEEE, 2023, pp. 134–140.

- [16] S. Edirisinghe, S. Satake, D. Brscic, Y. Liu, and T. Kanda, “Field trial of an autonomous shopworker robot that aims to provide friendly encouragement and exert social pressure,” in Proceedings of the 2024 ACM/IEEE International Conference on Human-Robot Interaction, 2024, pp. 194–202.

- [17] N. T. V. Tuyen, S. Okazaki, and O. Celiktutan, “A study on customer’s perception of robot nonverbal communication skills in a service environment,” in 2023 32nd IEEE International Conference on Robot and Human Interactive Communication (RO-MAN). IEEE, 2023, pp. 301–306.

- [18] E. Hauser, Y.-C. Chan, R. Bhalani, A. Kuchimanchi, H. Siddiqui, and J. Hart, “Influencing incidental human-robot encounters: Expressive movement improves pedestrians’ impressions of a quadruped service robot,” arXiv preprint arXiv:2311.04454, 2023.

- [19] S. Reig, S. Norman, C. G. Morales, S. Das, A. Steinfeld, and J. Forlizzi, “A field study of pedestrians and autonomous vehicles,” in Proceedings of the 10th international conference on automotive user interfaces and interactive vehicular applications, 2018, pp. 198–209.

- [20] H. Han, F. M. Li, N. Martelaro, D. Byrne, and S. E. Fox, “The robot in our path: Investigating the perceptions of people with motor disabilities on navigating public space alongside sidewalk robots,” in Proceedings of the 25th International ACM SIGACCESS Conference on Computers and Accessibility, 2023, pp. 1–6.

- [21] H. R. Pelikan, S. Reeves, and M. N. Cantarutti, “Encountering autonomous robots on public streets,” in Proceedings of the 2024 ACM/IEEE International Conference on Human-Robot Interaction, 2024, pp. 561–571.

- [22] M. Shiomi, T. Kanda, D. F. Glas, S. Satake, H. Ishiguro, and N. Hagita, “Field trial of networked social robots in a shopping mall,” in 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems. IEEE, 2009, pp. 2846–2853.

- [23] M. R. Fraune, S. Kawakami, S. Sabanovic, P. R. S. De Silva, and M. Okada, “Three’s company, or a crowd?: The effects of robot number and behavior on HRI in Japan and the USA,” in Robotics: Science and Systems, 2015.

- [24] K. Hayashi, D. Sakamoto, T. Kanda, M. Shiomi, S. Koizumi, H. Ishiguro, T. Ogasawara, and N. Hagita, “Humanoid robots as a passive-social medium-a field experiment at a train station,” in 2007 2nd ACM/IEEE International Conference on Human-Robot Interaction (HRI). IEEE, 2007, pp. 137–144.

- [25] M. Makatchev and R. Simmons, “Incorporating a user model to improve detection of unhelpful robot answers,” in RO-MAN 2009 - The 18th IEEE International Symposium on Robot and Human Interactive Communication, Sep. 2009, pp. 973–978.

- [26] S. Sabanovic, M. Michalowski, and R. Simmons, “Robots in the wild: Observing human-robot social interaction outside the lab,” in 9th IEEE International Workshop on Advanced Motion Control, Mar. 2006, pp. 596–601.

- [27] M. Quigley, K. Conley, B. Gerkey, J. Faust, T. Foote, J. Leibs, R. Wheeler, A. Y. Ng et al., “ROS: An open-source robot operating system,” in ICRA workshop on open source software, vol. 3, no. 3.2. Kobe, Japan, 2009, p. 5.

- [28] D. Bohus, S. Andrist, and M. Jalobeanu, “Rapid development of multimodal interactive systems: A demonstration of platform for situated intelligence,” in Proceedings of the 19th ACM International Conference on Multimodal Interaction, ser. ICMI ’17. New York, NY, USA: Association for Computing Machinery, 2017, pp. 493–494. [Online]. Available: https://doi.org/10.1145/3136755.3143021

- [29] E. Marder-Eppstein, E. Berger, T. Foote, B. Gerkey, and K. Konolige, “The office marathon: Robust navigation in an indoor office environment,” in Robotics and Automation (ICRA), 2010 IEEE International Conference on. IEEE, 2010, pp. 300–307.

- [30] M. Pivtoraiko and A. Kelly, “Generating near minimal spanning control sets for constrained motion planning in discrete state spaces,” in 2005 IEEE/RSJ International Conference on Intelligent Robots and Systems. IEEE, 2005, pp. 3231–3237.

- [31] X. Z. Tan, “Person transfers between multiple service robots,” Ph.D. dissertation, Carnegie Mellon University, Pittsburgh, PA, January 2022.

- [32] A. Kendon, Conducting interaction: Patterns of behavior in focused encounters. CUP Archive, 1990, vol. 7.

- [33] T. Nomura, T. Uratani, T. Kanda, K. Matsumoto, H. Kidokoro, Y. Suehiro, and S. Yamada, “Why do children abuse robots?” in Proceedings of the tenth annual ACM/IEEE international conference on human-robot interaction extended abstracts, 2015, pp. 63–64.