Pattern Formation for Fat Robots with Memory

Abstract

Given a set of autonomous, anonymous, indistinguishable, silent, and possibly disoriented mobile unit disk (i.e., fat) robots operating following Look-Compute-Move cycles in the Euclidean plane, we consider the Pattern Formation problem: from arbitrary starting positions, the robots must reposition themselves to form a given target pattern. This problem arises under obstructed visibility, where a robot cannot see another robot if there is a third robot on the straight line segment between the two robots. We assume that a robot’s movement cannot be interrupted by an adversary and that robots have a small -sized memory that they can use to store information, but that cannot be communicated to the other robots. To solve this problem, we present an algorithm that works in three steps. First it establishes mutual visibility, then it elects one robot to be the leader, and finally it forms the required pattern. The whole algorithm runs in rounds with probability at least . The algorithms are collision-free and do not require the knowledge of the number of robots.

Keywords Pattern formation Fat robots Obstructed visibility Collision avoidance.

1 Introduction

This paper considers a system of unit disk robots in the two-dimensional Euclidean plane and studies the problem of forming a pattern using these robots. Consider a set of mobile robots working under the classical oblivious robots model [21] which are autonomous (no external control), anonymous (no unique identifiers), indistinguishable (no external markers), with some history (limited memory of the past), silent (no means of direct communication), and possibly disoriented (no agreement on their coordination systems). All robots execute the same algorithm. They operate following Look-Compute-Move (LCM) cycles [15] and work toward achieving a common goal, i.e., when a robot becomes active, it uses its vision to get a snapshot of its surroundings (Look), computes a destination point based on the snapshot (Compute), and finally moves towards the computed destination (Move).

There are three types of schedulers for the activation of the robots in the plane: fully synchronous, semi-synchronous, and asynchronous. In the fully synchronous model, time is discrete and at each time instant all robots in the plane are activated and perform their LCM operations instantaneously, ending at time . The semi-synchronous is similar, except that a subset of robots is activated at each time. Therefore, we use round in fully synchronous and semi-synchronous instead of time . In the asynchronous setting, each robot acts independently from the others, and the duration of the LCM operations of each robot is finite but unpredictable, i.e., there is no common notion of time.

This classical robot model has a long history of research and has many applications including coverage, exploration, intruder detection, data delivery, and symmetry breaking [11]. Unfortunately, most of the previous work considered the robots in this model to be dimensionless point robots which do not occupy any space.

The classical model also makes an important assumption of unobstructed visibility: any three collinear (point) robots are mutually visible to each other. However, this assumption may not reflect reality, since robots are not dimensionless points and hence they may block the view of other collinear robots. Therefore, the capabilities of robots under obstructed visibility has been the subject of recent research [1, 5, 12, 13, 14, 16, 17, 18, 19, 20, 25, 30, 31, 32, 33]. Under obstructed visibility, robot can see robot if and only if there is no third robot on the straight line segment connecting and .

Recent research under obstructed visibility focuses on the Pattern Formation problem: Starting from arbitrary (distinct) positions in the plane, determine a schedule to reposition the robots such that they form a given target pattern without any two robots colliding during the execution of the algorithm [7, 9, 34]. We say that two robots collide if at any point in time they share the same position. The target pattern is allowed to be scaled, rotated, translated, and reflected in order to be successfully built by the robots.

As far as we are aware, there currently exist no algorithms to solve the Pattern Formation problem in the classical model for fat robots with limited memory. Our algorithm works in three phases. First it establishes mutual visibility, then it elects one robot to be the leader, and finally it forms the required pattern. During the execution, the robots do not need to agree on the orientation of any axes and the robots do not initially need to know how many robots there are. The algorithm requires in rounds with probability at least in the fully synchronous model under obstructed visibility and is collision-free.

1.1 Related Work

A variation of the classical model described above, called the luminous robots model (or the robots with lights model), has become the focus of significant interest [7, 9, 16, 17, 18, 29, 30, 31, 33, 34]. In this model, robots are equipped with an externally visible light which can assume different colors from a fixed set of colors. The lights are persistent, i.e., the color of the light is not erased at the end of the LCM cycle. Except for the assumption of the availability of lights, the robots work similarly to the classical model. This model corresponds to the classical oblivious robots model when the number of colors in the set is 1 [16, 21]. In this model, the objective is to solve the Pattern Formation problem while minimizing the size of the color set.

Most of the existing work considered dimensionless point robots to solve the Pattern Formation problem [6, 8, 23, 24, 26, 27, 28], and most of them considered the above lights model while solving the problem [9, 34]. However, the techniques used in those papers do not generalize to fat robots and cannot be used to solve the problem in the classical model. Most closely related to our work is the paper by Bose et al. [7], where they studied the Pattern Formation problem for fat robots under the asynchronous scheduler. In their solution, using the robots with lights model, all the robots need to agree on one axis. They solved the problem using ten colors. The required number of colors was recently improved by Alsaedi et al. [3], who gave algorithms that required 7 or 8 colors, depending on whether the target pattern was allowed to be scaled or not.

Other recent work [9, 34] solved the Pattern Formation problem in the robots with lights model assuming that the robots are dimensionless points. These papers provided techniques to overcome obstructed visibility, but did not take the physical extents of the robots into account. Unfortunately, the techniques developed for point robots cannot be applied directly to solve Pattern Formation for fat robots for this reason.

Kundu et al. [27] studied the Pattern Formation problem for fat robots with lights on an infinite grid with one axis agreement of robots. They solved the problem using nine colors. However, they did not bound the running time of their algorithm.

Other results for the Pattern Formation problem include those by Cicerone et al. [10], who considered point robots with chirality (robots have to agree on a notion of left and right), and those by Flocchini et al. [22], who studied the problem for point robots in the asynchronous setting, requiring that the robots agree on their environment and observe the positions of the other robots.

2 Preliminaries

Consider a set of anonymous robots operating in the Euclidean plane . The number of robots is not assumed to be known to the robots. We assume that each robot is a non-transparent disk with diameter 1. The center of the robot is denoted , and the position of is also said to be the position of . We denote by the Euclidean distance between the two robots to . For simplicity, we use to denote both the robot and the position of its center . Each robot has its own coordinate system, and it knows its position with respect to this coordinate system. Note that robots do not necessarily agree on the orientation of their coordinate systems. However, since all the robots are of unit size, they implicitly agree on the notion of unit length.

Each robot has a camera to take a snapshot of the plane and the distance that is visible to this camera is infinite, provided that there are no obstacles blocking its view (i.e., another robot) [1]. Following the fat robot model [1, 14], we assume that a robot can see another robot if there exists a point on the bounding circle of that is visible to some point on the bounding circle of . Similarly, we say that a point in the plane is visible to a robot if there is a point on the bounding circle of such that the straight line segment does not intersect any other robot.

Each robot is equipped with a small amount of memory. We assume that a single unit of memory suffices to store an integer of value at most . In other words, a single unit of memory can store bits. We also assume that the locations of the robots can be stored efficiently, using a constant number of units of memory, or a constant amount of memory for short. As all previous work assumes that the robots can perform computations based on the observed (locations of) robots, the only difference between this model and the existing model is that we allow a small number of things to be stored between rounds. Crucial is that the robots have no way to communicate with each other (i.e., they can send no messages and have no lights to indicate their internal state) and thus they cannot combine what is stored in their respective memories.

At any time , a robot is either active or inactive. When active, performs a sequence of Look-Compute-Move (LCM) operations:

-

•

Look: the robot takes a snapshot of the positions of the robots visible to it in its own coordinate system;

-

•

Compute: executes its algorithm using the snapshot which returns a destination point , potentially using the information stored in its memory and updating what is stored in its memory; and

-

•

Move: moves to the computed destination (if is different from its current position).

Each robot executes the same algorithm locally every time it is activated and a robot’s movement cannot be interrupted by an adversary. Two robots and are said to collide at time if the bounding circles of and share a common point at time . To avoid collisions among robots, we thus have to ensure that at all times for any robots and .

The Pattern Formation problem is now defined as follows: Given any initial positions of the robots, the robots must reposition themselves to form a given target pattern without having any collisions in the process, and then terminate in this configuration. The target pattern is given as input as a list of locations, one for each robot in the pattern and allowed to be scaled, rotated, translated, and reflected. An algorithm is said to solve the Pattern Formation problem if it always achieves the target pattern from any initial configuration. The pattern should be constructed if this is indeed possible. We measure the quality of the algorithm using the amount of memory required to execute it and the number of rounds needed to solve the Pattern Formation problem.

3 Mutual Visibility

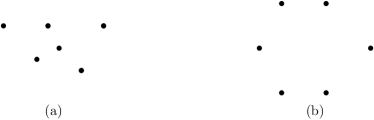

Our algorithm works in three phases. The first phase is the Mutual Visibility phase. The goal of this phase is to move the robots such that at the end of the phase every robot can see all other robots. The algorithm we present forms a convex hull with each robot positioned on a corner of the convex hull (see Figure 1 for an example). We will argue later that our approach runs in rounds and uses memory space.

3.1 Algorithm

On a high level, our Mutual Visibility algorithm is similar to the algorithm by Alsaedi et al. [2]. However, our algorithm works in the classical robots model (i.e., without the lights required by Alsaedi et al.). Their algorithm used two colors to distinguish (and communicate) which robots are corners of the convex hull and which robots are in the interior. Their algorithm proceeds to gradually expand the convex hull by moving the corner robots one unit away from the interior of the convex hull using the angle bisectors of consecutive robots on the hull. This ensures that the edges of the hull will become long enough to let all interior robots move through them to the outside of the convex hull to become new corners without collisions. Alsaedi et al. [2] showed that this process finishes in rounds.

We first observe that robots can easily determine whether they themselves are interior or corner robots. Corner robots are those robots on the vertices of the convex hull with interior angles less than . There could initially be robots with interior angle equal to , but since all the corner robots move in every round to expand the convex hull these robots will become interior robots almost immediately.

Let us look a bit more closely at how Alsaedi et al. [2] moved the interior robots. They define eligible edges for each robot as the set of convex hull edges of length at least for which no other robot is closer to the edge. If an interior robot has eligible edges, it picks the closest one and moves perpendicular to this edge through it to become a new corner of the convex hull. In every round, there are at most two interior robots that move through any single edge of the convex hull. By construction, the movements of the robots through different edges do not interfere each other. Therefore, there is no collision among interior robots. Since all the corner robots move in every round, the edges of the hull are long enough to move the interior robots through without any collisions. Therefore, there is no collision among all robots. Since a robot can determine whether it is interior or not, and all other computations for this process are based on the locations of the other robots it sees, this process does not require the colors and can be mimicked in our algorithm.

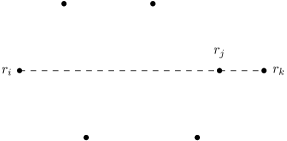

Hence, it remains to argue that the robots can correctly determine when this phase has ended. Since there are no lights to signal this (all robots having the corner robots light does this in the original algorithm) and the robots do not know , it is difficult for the robots to know whether the phase has ended even if the robots have a little memory. Specifically, there are cases where from a corner robot’s perspective all other visible robots are in convex position. For example, in Figure 2 robot cannot see robot since robot blocks its view to that robot. However, since all robots that are visible to are in convex position, it can now incorrectly conclude that it can see all robots.

Fortunately, the above problem can only occur for a single robot in the configuration. All other robots can see robot as the interior robot it is. In the next section we will prove this claim. Now, what we need to avoid is robot incorrectly and prematurely ending the Mutual Visibility phase for itself, as we cannot correct this easily due to the lack of communication between robots. Hence, when a corner robot believes the phase is over because it observed that all other visible robots are in convex position, it starts a counter with value , where is the number of visible corner robots. It stores both the value and the counter in its memory. Every round, it continues expanding the convex hull as normal (i.e., it continues to behave the same way as if not all robots are in convex position yet), but it also decrements the counter. This is to ensure that if at any point it realizes that it made a mistake, we do not have to deal with correcting the situation or computing where it should have moved due to a mistake a certain number of rounds ago. If it sees more than corner robots within these rounds, it stops its current counter and concludes that it was previously incorrect. Because the robot acted as normal, it is already in the exact same location as it would have been if it had not made this mistake and thus the algorithm can proceed as normal (and if it again observes all visible robots in convex position, it will start a new counter using the new higher value of ). In the next section, we will argue that this process ensures that every corner robot correctly concludes that the Mutual Visibility phase has ended and that they all conclude this in the same round. As a result, the Mutual Visibility problem has been solved. All robots store this in their memory.

3.2 Analysis

We proceed to prove that our algorithm solves the Mutual Visibility problem in a linear number of rounds using a constant amount of memory, while avoiding collisions between the robots.

Since our algorithm is similar to the algorithm by Alsaedi et al. [2], the main parts of the proof can be found there. We therefore focus on the differences between the two algorithms.

We start by recalling that contrary to the original algorithm, robots cannot always determine whether they are in convex position. Specifically, if multiple robots are collinear, some might observe all others to be in convex position, while globally this is not yet the case (see Figure 2). We first argue that there can be at most one robot that incorrectly concludes that all robots are in convex position.

Lemma 1.

If there exists an interior robot, then there exists at most one corner robot that believes all robots are in convex position.

Proof.

We observe that for a robot not to be visible to a corner robot, three robots have to be collinear. This implies that all robots except the two extreme ones on this line can conclude that they are not in convex position.

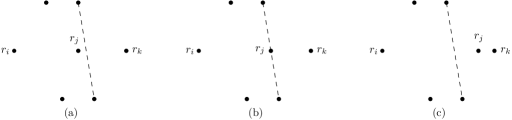

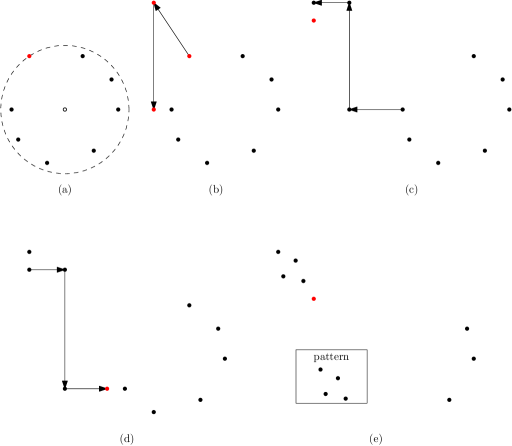

Now consider such an extreme robot. If there are one or more interior robots that block the view of one corner robot, this corner robot observes the position of at least one interior robot by using the line segments connecting each pair of corner robots (see Figure 3).

If an interior robot lies before a line segment between a pair of corner robots (see Figure 3a), the corner robot observes that they are not in convex position. If the interior robot lies on the line segment between a pair of corner robots (see Figure 3b), the corner robot observes that there are three collinear robots and thus the robots are not yet in convex position. If an interior robot lies behind the line segment between a pair of corner robots (see Figure 3c), corner robot may believe the robots are in convex position because the interior robot blocks the view to the corner robot that lies behind it. Hence, from ’s perspective, all robots are in convex position. However, note that from ’s perspective lies before this same line segment and thus correctly concludes that the robots are not in convex position yet. Hence, only one robot can incorrectly conclude that all robots are in convex position and the lemma follows. ∎

Next, we argue that when a robot starts a counter, within rounds either it sees more corner robots or it can correctly conclude that mutual visibility has been achieved.

Lemma 2.

If a robot starts a counter with value (where is the number of currently visible corner robots), then within that number of rounds either it sees more than corner robots or mutual visibility has been achieved, and terminates this phase.

Proof.

Lemma in [2] shows that it takes at most rounds to make enough space for the interior robots to move outside the current convex hull. In addition to that, it takes two more rounds to move at least one interior robot outside the current convex hull. Therefore, if there is an interior robot inside the hull, it takes at most rounds for a new corner robot to appear. Since all other corner robots remain visible, this implies the first part of the lemma. Otherwise, if there is no interior robot inside the hull, then mutual visibility has been achieved, and correctly terminates this phase. ∎

Now that we know that at some point the robots conclude that the phase has concluded, we proceed to show that all robots terminate in the same round.

Lemma 3.

All robots terminate the Mutual Visibility phase in the same round.

Proof.

Since by Lemma 1, only one corner robot can incorrectly believe that all robots are in convex position, only this robot can start a counter with value by Lemma 2. If there is an interior robot, by Lemma 2 it will conclude this within rounds.

Once there are no interior robots, every corner robot starts a counter with value when the last corner robot joins the convex hull, i.e., in the same round. Therefore, all robots terminate the Mutual Visibility phase in the same round. ∎

It remains to analyze the time complexity of the Mutual Visibility phase.

Lemma 4.

The Mutual Visibility phase takes rounds.

Proof.

Lemma 5.

The Mutual Visibility phase uses memory.

Proof.

In the Mutual Visibility phase, every robot needs to store the termination counter and the number of visible robots at that time. Both of these are upper bounded by which is at most and can thus be stored in bits, i.e., memory space. The additional bits needed to store the current phase do not affect this bound. ∎

Therefore, we conclude the following theorem.

Theorem 6.

The Mutual Visibility phase finishes in rounds without collisions in the fully synchronous setting using memory.

4 Leader Election

The second phase of our algorithm is the Leader Election phase. The goal of the Leader Election phase is for a single robot to be elected as a leader. It is known that this problem cannot be solved with a deterministic algorithm in an anonymous distributed system [4]. We present an algorithm which is an adaptation of the slotted-Aloha protocol [34].

4.1 Algorithm

After the Mutual Visibility phase, every robot sees all other robots and thus is known and can be stored by all robots. This implies that at this point all robots can determine whether it is possible to build the given pattern with the number of available robots: if the number of robots in the given pattern is at most , then the robots proceed and otherwise, they all terminate. Hence, in the remainder, we assume that the number of robots required to build the pattern is at most .

The next phase of our algorithm aims to elect a single robot to be the leader in the final Pattern Formation phase. The leader will store that it is the leader and when a leader is elected all robots will be aware of this fact. However, but since all robots are identical, once a leader is elected, the other (non-leader) robots will not be able to store which robot is the leader. All that each of these robots can remember is that it is not the leader.

In order to elect a leader all robots first compute the centroid of the convex hull formed by the robots. Next, they all determine the distance from the centroid to the robot farthest from the centroid. Since all robots are visible to each other, all robots will compute the same and . The leader is selected among all robots on the circle centered at having radius . In other words, all robots on the boundary of the circle are competing to become the leader and all robots in the interior of the circle are non-competing. By construction there are no robots outside the circle and there is at least one robot on the boundary of this circle, but there could be multiple and thus we need a mechanism to pick one of them.

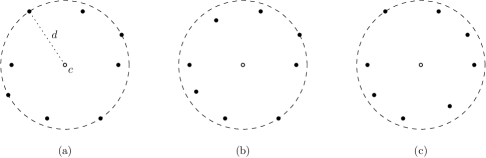

To facilitate this, the following process is repeated until a leader has been elected: All competing robots flip a coin where the probability of success is , where is the number of competing robots. If a robot is successful, then the robot remains in its current position on the boundary of the circle. Otherwise, it stores its current location on the circle and moves inside the circle some distance without crossing the straight line between its two neighbours, to ensure that the convex hull remains convex and thus all robots remain visible to each other (see Figure 4). If there are or more than robot on the boundary of the circle, the robots conclude that this iteration failed to elect a leader and the robots that were competing in the previous round return to their positions on the boundary of the circle using their stored locations to repeat the algorithm at the next iteration. When there is exactly one competing robot left on the boundary of the circle, this robot becomes the leader and it stores this information. Note that all robots can observe this event, as we keep the convex hull convex and thus the robots remain mutually visible throughout this process. Hence, all robots can determine whether a leader has been elected and whether they are the leader. Every robot stores this information, along with the fact that the Leader Election phase has ended and proceeds to the next phase of the algorithm.

4.2 Analysis

We start by arguing the correctness of the Leader Election phase.

Lemma 7.

Leader election can be solved in the memory model with robots.

Proof.

The analysis is identical to that in [34] with the lights replaced by being on the circle or not being on the circle. As this can be observed by all robots at all time, the lemma follows. ∎

Next, we consider the memory required by our algorithm.

Lemma 8.

The Leader Election phase uses memory.

Proof.

In the Leader Election phase, every robot needs to store the circle, i.e., the center and the radius. Since both are a linear combination of the locations of the robots, these can indeed be computed and stored in bits. In order to allow competing robots to move back to their original positions when an iteration is unsuccessful, we store their previous location, which takes bits. Finally, every robot needs a constant number of bits to store whether it is a leader and whether the phase has ended. In total, this requires bits of memory, i.e., memory space. ∎

Finally, since the expected running time of the Leader Election phase has already been analyzed in the asynchronous setting [34], and the fully synchronous setting takes at most the same number of rounds, we obtain the following result.

Theorem 9.

For any , the Leader Election phase finishes in rounds, with probability at least , without collisions in the fully synchronous setting using memory.

5 Pattern Formation

The final phase of our algorithm is the Pattern Formation phase. The goal of this phase is to move the robots to form the given (target) pattern and thus solve the original problem.

5.1 Algorithm

Since we now have a leader, this robot will facilitate the other robots moving and the other robots will not move unless instructed to do so by the leader. Our algorithm depends on the leader’s position on the convex hull at the end of the Leader Election phase. Since the leader is positioned on the convex hull of the robots, this means that at least one of its quadrants does not contain any robots. Once the leader has determined its empty quadrant, it proceeds to build the target pattern in that quadrant. Without loss of generality, we assume that the leader’s top left quadrant is empty, and thus we want to build the pattern there. We define an ordering on the robots (excluding the leader) and an ordering on the positions in the pattern (). Both orderings are left to right, with ties being broken top to bottom. The high-level idea is that the leader moves robot to pattern position repeatedly until the full pattern is built. If needed, the leader itself will fill position .

To avoid collisions during this process, the Pattern Formation phase requires the given pattern to be scaled, as allowed by the problem. This is to ensure that after leading a robot to its position in the pattern, the leader can move away to get the next robot without colliding with previously placed robots in the process. Since the pattern is given as part of the input, every robot knows it and thus also knows the original size of the target pattern. The leader computes the size of the pattern after scaling by multiplying the input original size of the pattern by the scaling factor (say ) to get the final target pattern after scaling. Note that the scaled pattern does not need to be stored, as the leader can simply recompute it when needed. The leader stores the scaling factor, as well as the first and last locations of the pattern after scaling in order to reconstruct the placement of the robots in the scaled pattern.

In order to precisely define the robots’ movements, we need to determine some coordinates. After the Leader Election phase, let the leader be positioned at . Let be the -coordinate of the topmost robot on the convex hull and let be the -coordinate of the leftmost robot on the convex hull. To ensure that there is no overlap between the convex hull and the scaled target pattern, the leader will build the pattern such that is at in the leader’s coordinate system.

Next, we describe how the leader moves the robots in more detail (see also Figure 5). Initially the leader lies on the circle of the Leader Election phase and in order to move away from that without colliding with any other robot, the leader first moves perpendicular to the circle until it reaches the -coordinate in one round. It then moves to , i.e., one position to the left of the leftmost topmost robot in the ordering. Robot observes that there is a robot (the leader) touching it and concludes that this robot must be the leader and that it needs to follow this robot. The problem now is that the leader cannot communicate with robot where it needs to go and since can essentially be arbitrarily far away, guiding the robot using repeated unit distance steps is not feasible.

To work around this issue, the leader ”instructs” the robot in two rounds. In the first, it places itself next to the robot in the direction it wants the robot to move in. Robot observes this and stores this direction, for example using the location of the leader. In the next round, the leader moves the intended distance in the direction of intended movement. Robot , having stored the direction, sees how far the closest robot in that direction is and stores this distance. Once the leader has moved out of the way, robot is now free to move to the indicated location. Whenever the leader wants to move a robot somewhere this process is executed a constant number of times, to facilitate multiple sequential moves.

Moving a robot to its position always starts with the leader moving one position to the left of robot , to . The leader uses the above approach to guide robot horizontally left to . The leader then moves to (one position above the robot) to let robot store the next direction and follows the leader until it reaches by moving vertically up. The leader is now at (again one position to the left of ) to guide to its final position at by moving horizontally left. In order to ensure that does not follow the leader any further, the leader moves two positions down to . Since the target pattern is scaled, this extra movement of the leader cannot cause collisions with other robots in the target pattern, as in the original pattern robots are at least a distance of apart and thus now they are at least a distance of apart, which is more than the required units of movement plus two times the radius of a robot. Once robot has reached , the leader moves horizontally right to , then vertically down to (the position to the left of robot ).

The above process is repeated until a robot is placed at or the final robot is positioned at when . To create a clean pattern, without the leader remaining in it, the leader moves horizontally right to -coordinate . If , the phase now ends. Otherwise and the leader moves vertically to , and finally to at .

5.2 Analysis

We now argue the correctness of the Pattern Formation phase.

Lemma 10.

In every round of the Pattern Formation phase, only one non-leader robot moves from the convex hull to be positioned in the target pattern avoiding collisions.

Proof.

In the Pattern Formation phase, the leader moves to the left of the leftmost topmost robot a distance one outside the convex hull. Robot observes this and follows the leader to its final position. Once the leader moves away from , stops at its final position. Since the leader moves only one robot at a time and a robot moves only once activated by the leader, only one non-leader robot moves in each round. Furthermore, due to the order in which the robots are moved (left to right) and the first move being horizontally to the left, no collisions can occur. ∎

Next, we analyze the time complexity of the Pattern Formation phase.

Lemma 11.

The Pattern Formation phase takes rounds.

Proof.

By Lemma 10, the leader activates and moves each robot from the convex hull to the target pattern one robot at a time. The leader moves next to a robot in a constant number of rounds and it moves a robot to its final position in three moves, so in six rounds. Therefore, one robot moves from the convex hull to the target pattern in a constant number of rounds. As a result, the total number of rounds required to move all robots from the convex hull to the target pattern is . ∎

Next, we prove the memory space that our algorithm needs in total for all phases.

Lemma 12.

The Pattern Formation phase uses memory.

Proof.

During the Pattern Formation phase, the leader stores the first and last locations of the pattern after scaling and the scaling factor. As these are simply locations in the plane, they can be stored using bits. Every other robot stores the direction and distance while moving to its final location in the target pattern. As these can be stored as locations of the leader, this also requires bits. Hence, memory suffices for all robots. ∎

Theorem 13.

The Pattern Formation phase finishes in rounds without collisions in the fully synchronous setting using memory.

Theorem 14.

Our algorithm solves the Pattern Formation problem for unit disk robots in rounds with probability at least without collisions in the fully synchronous model using memory space.

6 Conclusion

We studied the Pattern Formation problem for a system of autonomous fat robots of unit disk size in the classical model where all robots have a small persistent memory. We described an algorithm that solves the Pattern Formation problem and works under the fully synchronous model and under obstructed visibility. Our algorithm solves the Pattern Formation problem in rounds with probability at least . The Pattern Formation problem uses memory space. As a by-product, we also solve the Mutual Visibility problem in our model.

For future work, it is interesting to extend our algorithm to the semi-synchronous and asynchronous setting. Furthermore, it is interesting to extend our algorithm to solve the Pattern Formation problem in time complexity, which requires removing the Leader Election phase.

References

- [1] Chrysovalandis Agathangelou, Chryssis Georgiou, and Marios Mavronicolas. A distributed algorithm for gathering many fat mobile robots in the plane. In ACM Symposium on Principles of Distributed Computing (PODC), pages 250–259, 2013.

- [2] Rusul J. Alsaedi, Joachim Gudmundsson, and André van Renssen. The mutual visibility problem for fat robots. In Proceedings of the 18th Algorithms and Data Structures Symposium (WADS 2023), volume 14079 of Lecture Notes in Computer Science, pages 15–28, 2023.

- [3] Rusul J. Alsaedi, Joachim Gudmundsson, and André van Renssen. Pattern formation for fat robots with lights. Computational Geometry: Theory and Applications (CGTA), 128:102161, 2025.

- [4] Hagit Attiya and Jennifer Welch. Distributed computing: Fundamentals, simulations, and advanced topics. John Wiley & Sons, 2004.

- [5] Kálmán Bolla, Tamás Kovacs, and Gábor Fazekas. Gathering of fat robots with limited visibility and without global navigation. In International Conference on Swarm and Evolutionary Computation (SIDE), volume 7269 of Lecture Notes in Computer Science, pages 30–38, 2012.

- [6] Kaustav Bose, Ranendu Adhikary, Manash Kumar Kundu, Archak Das, and Buddhadeb Sau. Distributed pattern formation by autonomous robot swarm. In 23rd International Conference on Distributed Computing and Networking (ICDCN), pages 242–243, 2022.

- [7] Kaustav Bose, Ranendu Adhikary, Manash Kumar Kundu, and Buddhadeb Sau. Arbitrary pattern formation by opaque fat robots with lights. In Conference on Algorithms and Discrete Applied Mathematics (CALDAM), volume 12016 of Lecture Notes in Computer Science, pages 347–359, 2020.

- [8] Kaustav Bose, Archak Das, and Buddhadeb Sau. Pattern formation by robots with inaccurate movements. In 25th International Conference on Principles of Distributed Systems (OPODIS), volume 217 of Leibniz International Proceedings in Informatics (LIPIcs), pages 10:1–10:20, 2021.

- [9] Kaustav Bose, Manash Kumar Kundu, Ranendu Adhikary, and Buddhadeb Sau. Arbitrary pattern formation by asynchronous opaque robots with lights. Theoretical Computer Science, 849:138–158, 2021.

- [10] Serafino Cicerone, Gabriele Di Stefano, and Alfredo Navarra. Solving the pattern formation by mobile robots with chirality. IEEE Access, 9:88177–88204, 2021.

- [11] Mark Cieliebak, Paola Flocchini, Giuseppe Prencipe, and Nicola Santoro. Distributed computing by mobile robots: Gathering. SIAM Journal on Computing, 41(4):829–879, 2012.

- [12] Reuven Cohen and David Peleg. Local spreading algorithms for autonomous robot systems. Theoretical Computer Science, 399(1-2):71–82, 2008.

- [13] Andreas Cord-Landwehr, Bastian Degener, Matthias Fischer, Martina Hüllmann, Barbara Kempkes, Alexander Klaas, Peter Kling, Sven Kurras, Marcus Märtens, Friedhelm Meyer auf der Heide, Christoph Raupach, Kamil Swierkot, Daniel Warner, Christoph Weddemann, and Daniel Wonisch. Collisionless gathering of robots with an extent. In 37th Conference on Current Trends in Theory and Practice of Computer Science (SOFSEM), volume 6543 of Lecture Notes in Computer Science, pages 178–189, 2011.

- [14] Jurek Czyzowicz, Leszek Gasieniec, and Andrzej Pelc. Gathering few fat mobile robots in the plane. Theoretical Computer Science, 410(6-7):481–499, 2009.

- [15] Shantanu Das, Paola Flocchini, Giuseppe Prencipe, Nicola Santoro, and Masafumi Yamashita. Autonomous mobile robots with lights. Theoretical Computer Science, 609:171–184, 2016.

- [16] Giuseppe Antonio Di Luna, Paola Flocchini, S Gan Chaudhuri, Federico Poloni, Nicola Santoro, and Giovanni Viglietta. Mutual visibility by luminous robots without collisions. Information and Computation, 254:392–418, 2017.

- [17] Giuseppe Antonio Di Luna, Paola Flocchini, Sruti Gan Chaudhuri, Nicola Santoro, and Giovanni Viglietta. Robots with lights: Overcoming obstructed visibility without colliding. In 16th International Symposium on Stabilization, Safety, and Security of Distributed Systems (SSS), volume 8756 of Lecture Notes in Computer Science, pages 150–164, 2014.

- [18] Giuseppe Antonio Di Luna, Paola Flocchini, Federico Poloni, Nicola Santoro, and Giovanni Viglietta. The mutual visibility problem for oblivious robots. In 26th Canadian Conference on Computational Geometry (CCCG), 2014.

- [19] Ayan Dutta, Sruti Gan Chaudhuri, Suparno Datta, and Krishnendu Mukhopadhyaya. Circle formation by asynchronous fat robots with limited visibility. In Distributed Computing and Internet Technology (ICDCIT), volume 7154 of Lecture Notes in Computer Science, pages 83–93, 2012.

- [20] Paola Flocchini. Computations by luminous robots. In 14th International Conference on Ad Hoc Networks and Wireless (ADHOC-NOW), volume 9143 of Lecture Notes in Computer Science, pages 238–252, 2015.

- [21] Paola Flocchini, Giuseppe Prencipe, and Nicola Santoro. Distributed Computing by Oblivious Mobile Robots. Synthesis Lectures on Distributed Computing Theory (SLDCT). Springer Cham, 2012.

- [22] Paola Flocchini, Giuseppe Prencipe, Nicola Santoro, and Peter Widmayer. Arbitrary pattern formation by asynchronous, anonymous, oblivious robots. Theoretical Computer Science, 407(1-3):412–447, 2008.

- [23] Nao Fujinaga, Yukiko Yamauchi, Hirotaka Ono, Shuji Kijima, and Masafumi Yamashita. Pattern formation by oblivious asynchronous mobile robots. SIAM Journal on Computing, 44(3):740–785, 2015.

- [24] Sruti Gan Chaudhuri, Swapnil Ghike, Shrainik Jain, and Krishnendu Mukhopadhyaya. Pattern formation for asynchronous robots without agreement in chirality. arXiv preprint arXiv:1403.2625, 2014.

- [25] Sruti Gan Chaudhuri and Krishnendu Mukhopadhyaya. Leader election and gathering for asynchronous fat robots without common chirality. Journal of Discrete Algorithms, 33:171–192, 2015.

- [26] Satakshi Ghosh, Pritam Goswami, Avisek Sharma, and Buddhadeb Sau. Move and time optimal arbitrary pattern formation by asynchronous robots on infinite grid. International Journal of Parallel, Emergent and Distributed Systems, 38(1), 2023.

- [27] Manash Kumar Kundu, Pritam Goswami, Satakshi Ghosh, and Buddhadeb Sau. Arbitrary pattern formation by opaque fat robots on infinite grid. International Journal of Parallel, Emergent and Distributed Systems, 37(5):542–570, 2022.

- [28] Debasish Pattanayak, Klaus-Tycho Foerster, Partha Sarathi Mandal, and Stefan Schmid. Conic formation in presence of faulty robots. In 16th International Symposium on Algorithms and Experiments for Wireless Sensor Networks (ALGOSENSORS), volume 12503 of Lecture Notes in Computer Science, pages 170–185, 2020.

- [29] David Peleg. Distributed coordination algorithms for mobile robot swarms: New directions and challenges. In 7th International Workshop on Distributed Computing (IWDC), volume 3741 of Lecture Notes in Computer Science, pages 1–12, 2005.

- [30] Gokarna Sharma, Costas Busch, and Supratik Mukhopadhyay. Bounds on mutual visibility algorithms. In 27th Canadian Conference on Computational Geometry (CCCG), pages 268–274, 2015.

- [31] Gokarna Sharma, Costas Busch, and Supratik Mukhopadhyay. Mutual visibility with an optimal number of colors. In 11th International Symposium on Algorithms and Experiments for Wireless Sensor Networks (ALGOSENSORS), volume 9536 of Lecture Notes in Computer Science, pages 196–210, 2015.

- [32] Gokarna Sharma, Costas Busch, Supratik Mukhopadhyay, and Charles Malveaux. Tight analysis of a collisionless robot gathering algorithm. ACM Transactions on Autonomous and Adaptive Systems, 12(1):1–20, 2017.

- [33] Ramachandran Vaidyanathan, Costas Busch, Jerry L. Trahan, Gokarna Sharma, and Suresh Rai. Logarithmic-time complete visibility for robots with lights. In 29th IEEE International Parallel and Distributed Processing Symposium (IPDPS), pages 375–384, 2015.

- [34] Ramachandran Vaidyanathan, Gokarna Sharma, and Jerry Trahan. On fast pattern formation by autonomous robots. Information and Computation, 285:104699, 2022.