33email: zhangshaoting@pjlab.org.cn

Pathology-and-genomics Multimodal Transformer for Survival Outcome Prediction

Abstract

Survival outcome assessment is challenging and inherently associated with multiple clinical factors (e.g., imaging and genomics biomarkers) in cancer. Enabling multimodal analytics promises to reveal novel predictive patterns of patient outcomes. In this study, we propose a multimodal transformer (PathOmics) integrating pathology and genomics insights into colon-related cancer survival prediction. We emphasize the unsupervised pretraining to capture the intrinsic interaction between tissue microenvironments in gigapixel whole slide images (WSIs) and a wide range of genomics data (e.g., mRNA-sequence, copy number variant, and methylation). After the multimodal knowledge aggregation in pretraining, our task-specific model finetuning could expand the scope of data utility applicable to both multi- and single-modal data (e.g., image- or genomics-only). We evaluate our approach on both TCGA colon and rectum cancer cohorts, showing that the proposed approach is competitive and outperforms state-of-the-art studies. Finally, our approach is desirable to utilize the limited number of finetuned samples towards data-efficient analytics for survival outcome prediction. The code is available at https://github.com/Cassie07/PathOmics.

Keywords:

Histopathological image analysis Multimodal learning Cancer diagnosis Survival prediction1 Introduction

Cancers are a group of heterogeneous diseases reflecting deep interactions between pathological and genomics variants in tumor tissue environments [24]. Different cancer genotypes are translated into pathological phenotypes that could be assessed by pathologists [24]. High-resolution pathological images have proven their unique benefits for improving prognostic biomarkers prediction via exploring the tissue microenvironmental features [18, 10, 1, 12, 25, 13]. Meanwhile, genomics data (e.g., mRNA-sequence) display a high relevance to regulate cancer progression [29, 3]. For instance, genome-wide molecular portraits are crucial for cancer prognostic stratification and targeted therapy [16]. Despite their importance, seldom efforts jointly exploit the multimodal value between cancer image morphology and molecular biomarkers. In a broader context, assessing cancer prognosis is essentially a multimodal task in association with pathological and genomics findings. Therefore, synergizing multimodal data could deepen a cross-scale understanding towards improved patient prognostication.

The major goal of multimodal data learning is to extract complementary contextual information across modalities [4]. Supervised studies [5, 7, 6] have allowed multimodal data fusion among image and non-image biomarkers. For instance, the Kronecker product is able to capture the interactions between WSIs and genomic features for survival outcome prediction [5, 7]. Alternatively, the co-attention transformer [6] could capture the genotype-phenotype interactions for prognostic prediction. Yet these supervised approaches are limited by feature generalizability and have a high dependency on data labeling. To alleviate label requirement, unsupervised learning evaluates the intrinsic similarity among multimodal representations for data fusion. For example, integrating image, genomics, and clinical information can be achieved via a predefined unsupervised similarity evaluation [4]. To broaden the data utility, the study [28] leverages the pathology and genomic knowledge from the teacher model to guide the pathology-only student model for glioma grading. From these analyses, it is increasingly recognized that the lack of flexibility on model finetuning limits the data utility of multimodal learning. Meanwhile, the size of multimodal medical datasets is not as large as natural vision-language datasets, which necessitates the need for data-efficient analytics to address the training difficulty.

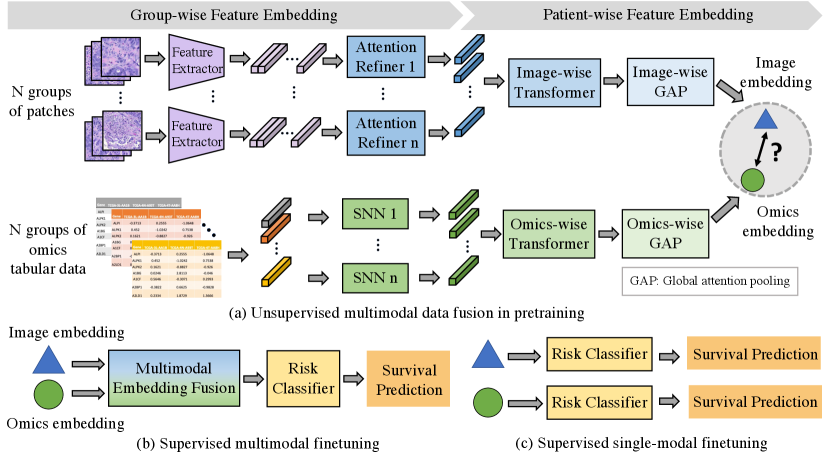

To tackle above challenges, we propose a pathology-and-genomics multimodal framework (i.e., PathOmics) for survival prediction (Fig 1). We summarized our contributions as follows. (1) Unsupervised multimodal data fusion. Our unsupervised pretraining exploits the intrinsic interaction between morphological and molecular biomarkers (Fig 1a). To overcome the gap of modality heterogeneity between images and genomics, we project the multimodal embeddings into the same latent space by evaluating the similarity among them. Particularly, the pretrained model offers a unique means by using similarity-guided modality fusion for extracting cross-modal patterns. (2) Flexible modality finetuning. A key contribution of our multimodal framework is that it combines benefits from both unsupervised pretraining and supervised finetuning data fusion (Fig 1b). As a result, the task-specific finetuning broadens the dataset usage (Fig 1b and c), which is not limited by data modality (e.g., both single- and multi-modal data). (3) Data efficiency with limited data size. Our approach could achieve comparable performance even with fewer finetuned data (e.g., only use 50% of the finetuned data) when compared with using the entire finetuning dataset.

2 Methodology

2.0.1 Overview.

Fig 1 illustrates our multimodal transformer framework. Our method includes an unsupervised multimodal data fusion pretraining and a supervised flexible-modal finetuning. From Fig 1a, in the pretraining, our unsupervised data fusion aims to capture the interaction pattern of image and genomics features. Overall, we formulate the objective of multimodal feature learning by converting image patches and tabular genomics data into group-wise embeddings, and then extracting multimodal patient-wise embeddings. More specifically, we construct group-wise representations for both image and genomics modalities. For image feature representation, we randomly divide image patches into groups; Meanwhile, for each type of genomics data, we construct groups of genes depending on their clinical relevance [22]. Next, as seen in Fig 1b and c, our approach enables three types of finetuning modal modes (i.e., multimodal, image-only, and genomics-only) towards prognostic prediction, expanding the downstream data utility from the pretrained model.

2.0.2 Group-wise Image and Genomics Embedding.

We define the group-wise genomics representation by referring to major functional groups obtained from [22]. Each group contains a list of well-defined molecular features related to cancer biology, including transcription factors, tumor suppression, cytokines and growth factors, cell differentiation markers, homeodomain proteins, translocated cancer genes, and protein kinases. The group-wise genomics representation is defined as , where , is the attribute dimension in each group which could be various. To better extract high-dimensional group-wise genomics representation, we use a Self-Normalizing Network (SNN) together with scaled exponential linear units (SeLU) and Alpha Dropout for feature extraction to generate the group-wise embedding for each group.

For group-wise WSIs representation, we first cropped all tissue-region image tiles from the entire WSI and extracted CNN-based (e.g., ResNet50) -dimensional features for each image tile k as , where , and K is the number of image patches. We construct the group-wise WSIs representation by randomly splitting image tile features into N groups (i.e., the same number as genomics categories). Therefore, group-wise image representation could be defined as , where and represents tile k in group n. Then we apply an attention-based refiner (ABR) [17], which is able to weight the feature embeddings in the group, together with a dimension deduction (e.g., fully-connected layers) to achieve the group-wise embedding. The ABR and the group-wise embedding are defined as:

| (1) |

where w,V1 and V2 are the learnable parameters.

| (2) |

2.0.3 Patient-wise Multimodal Feature Embedding.

To aggregate patient-wise multimodal feature embedding from the group-wise representations, as shown in Fig 1a, we propose a pathology-and-genomics multimodal model containing two model streams, including a pathological image and a genomics data stream. In each stream, we use the same architecture with different weights, which is updated separately in each modality stream. In the pathological image stream, the patient-wise image representation is aggregated by N group representations as , where and P is the number of patients. Similarly, the patient-wise genomics representation is aggregated as . After generating patient-wise representation, we utilize two transformer layers [27] to extract feature embeddings for each modality as follows:

| (3) |

where MSA denotes Multi-head Self-attention [27] (see Appendix 1), l denotes the layer index of the transformer, and could either be or . Then, we construct global attention poolings [17] as Eq.1 to adaptively compute a weighted sum of each modality feature embeddings to finally construct patient-wise embedding as and in each modality.

2.0.4 Multimodal Fusion in Pretraining and Finetuning.

Due to the domain gap between image and molecular feature heterogeneity, a proper design of multimodal fusion is crucial to advance integrative analysis. In the pretraining stage, we develop an unsupervised data fusion strategy by decreasing the mean square error (MSE) loss to map images and genomics embeddings into the same space. Ideally, the image and genomics embeddings belonging to the same patient should have a higher relevance between each other. MSE measures the average squared difference between multimodal embeddings. In this way, the pretrained model is trained to map the paired image and genomics embeddings to be closer in the latent space, leading to strengthen the interaction between different modalities.

| (4) |

In the single modality finetuning, even if we use image-only data, the model is able to produce genomic-related image feature embedding due to the multimodal knowledge aggregation already obtained from the model pretraining. As a result, our cross-modal information aggregation relaxes the modality requirement in the finetuning stage. As shown in Fig 1b, for multimodal finetuning, we deploy a concatenation layer to obtain the fused multimodal feature representation and implement a risk classifier (FC layer) to achieve the final survival stratification (see Appendix 2). As for single-modality finetuning mode in Fig 1c, we simply feed or into risk classifier for the final prognosis prediction. During the finetuning, we update the model parameters using a log-likelihood loss for the discrete-time survival model training [6](see Appendix 2).

3 Experiments and Results

3.0.1 Datasets.

All image and genomics data are publicly available. We collected WSIs from The Cancer Genome Atlas Colon Adenocarcinoma (TCGA-COAD) dataset (CC-BY-3.0) [21, 8] and Rectum Adenocarcinoma (TCGA-READ) dataset (CC-BY-3.0) [20, 8], which contain 440 and 153 patients. We cropped each WSI into 512 512 non-overlapped patches. We also collected the corresponding tabular genomics data (e.g., mRNA sequence, copy number alteration, and methylation) with overall survival (OS) times and censorship statuses from Cbioportal [2, 14]. We removed the samples without the corresponding genomics data or ground truth of survival outcomes. Finally, we included 426 patients of TCGA-COAD and 145 patients of TCGA-READ.

3.0.2 Experimental Settings and Implementations.

We implement two types of settings that involve internal and external datasets for model pretraining and finetuning. As shown in Fig 2a, we pretrain and finetune the model on the same dataset (i.e., internal setting). We split TCGA-COAD into training (80%) and holdout testing set (20%). Then, we implement four-fold cross-validation on the training set for pretraining, finetuning, and hyperparameter-tuning. The test set is only used for evaluating the best finetuned models from each cross-validation split. For the external setting, we implement pretraining and finetuning on the different datasets, as shown in Fig 2b; we use TCGA-COAD for pretraining; Then, we only use TCGA-READ for finetuning and final evaluation. We implement a five-fold cross-validation for pretraining, and the best pretrained models are used for finetuning. We split TCGA-READ into finetuning (60%), validation (20%), and evaluation set (20%). For all experiments, we calculate the average performance on the evaluation set across the best models.

The number of epochs for pretraining and finetuning are 25, the batch size is 1, the optimizer is Adam [19], and the learning rate is 1e-4 for pretraining and 5e-5 for finetuning. We used one 32GB Tesla V100 SXM2 GPU and Pytorch. The concordance index (C-index) is used to measure the survival prediction performance. We followed the previous studies [6, 5, 7] to partition the overall survival (OS) months into four non-overlapping intervals by using the quartiles of event times of uncensored patients for discretized-survival C-index calculation (see Appendix 2). For each experiment, we reported the average C-index among three-times repeated experiments. Conceptionally, our method shares a similar idea to multiple instance learning (MIL) [9, 23]. Therefore, we include two types of baseline models, including the MIL-based models (DeepSet [30], AB-MIL [17], and TransMIL [26]) and MIL multimodal-based models (MCAT [6], PORPOISE [7]). We follow the same data split and processing, as well as the identical training hyperparameters and supervised fusion as above. Notably, there is no need for supervised finetuning for the baselines when using TCGA-COAD (Table 1), because the supervised pretraining is already applied to the training set.

| Model | Pretrain data modality | TCGA-COAD | TCGA-READ | ||

| Finetune data modality | C-index (%) | Finetune data modality | C-index (%) | ||

| image+mRNA | - | image+mRNA | |||

| DeepSets [30] | image+CNA | - | image+CNA | ||

| image+Methy | - | image+Methy | |||

| image+mRNA | - | image+mRNA | |||

| AB-MIL [17] | image+CNA | - | image+CNA | ||

| image+Methy | - | image+Methy | |||

| image+mRNA | - | image+mRNA | |||

| TransMIL [26] | image+CNA | - | image+CNA | ||

| image+Methy | - | image+Methy | |||

| image+mRNA | - | image+mRNA | |||

| MCAT [6] | image+CNA | - | image+CNA | ||

| image+Methy | - | image+Methy | |||

| image+mRNA | - | image+mRNA | |||

| PORPOI -SE [7] | image+CNA | - | image+CNA | ||

| image+Methy | - | image+Methy | |||

| Ours | image+mRNA | image+mRNA | image+mRNA | ||

| image | image | ||||

| mRNA | mRNA | ||||

| image+CNA | image+CNA | image+CNA | |||

| image | image | ||||

| CNA | CNA | ||||

| image+Methy | image+Methy | image+Methy | |||

| image | image | ||||

| Methy | Methy | ||||

3.0.3 Results.

In Table 1, our approach shows improved survival prediction performance on both TCGA-COAD and TCGA-READ datasets. Compared with supervised baselines, our unsupervised data fusion is able to extract the phenotype-genotype interaction features, leading to achieving a flexible finetuning for different data settings. With the multimodal pretraining and finetuning, our method outperforms state-of-the-art models by about 2% on TCGA-COAD and 4% TCGA-READ. We recognize that the combination of image and mRNA sequencing data leads to reflecting distinguishing survival outcomes. Remarkably, our model achieved positive results even using a single-modal finetuning when compared with baselines (more results in Appendix 3.1). In the meantime, on the TCGA-READ, our single-modality finetuned model achieves a better performance than multimodal finetuned baseline models (e.g., with model pretraining via image and methylation data, we have only used the image data for finetuning and achieved a C-index of 74.85%, which is about 4% higher than the best baseline models). We show that with a single-modal finetuning strategy, the model could generate meaningful embedding to combine image- and genomic-related patterns. In addition, our model reflects its efficiency on the limited finetuning data (e.g., 75 patients are used for finetuning on TCGA-READ, which are only 22% of TCGA-COAD finetuning data). In Table 1, our method could yield better performance compared with baselines on the small dataset across the combination of images and multiple types of genomics data.

3.0.4 Ablation Analysis.

We verify the model efficiency by using fewer amounts of finetuning data in finetuning. For TCGA-COAD dataset, we include 50%, 25%, and 10% of the finetuning data. For the TCGA-READ dataset, as the number of uncensored patients is limited, we use 75%, 50%, and 25% of the finetuning data to allow at least one uncensored patient to be included for finetuning. As shown in Fig 3a, by using 50% of TCGA-COAD finetuning data, our approach achieves the C-index of 64.80%, which is higher than the average performance of baselines in several modalities. Similarly, in Fig 3b, our model retains a good performance by using 50% or 75% of TCGA-READ finetuning data compared with the average of C-index across baselines (e.g., 72.32% versus 64.23%). For evaluating the effect of cross-modality information extraction in the pretraining, we kept supervised model training (i.e., the finetuning stage) while removing the unsupervised pretraining. The performance is lower 2%-10% than ours on multi- and single-modality data. For evaluating the genomics data usage, we designed two settings: (1) combining all types of genomics data and categorizing them by groups; (2) removing category information while keeping using different types of genomics data separately. Our approach outperforms the above ablation studies by 3%-7% on TCGA-READ and performs similarly on TCGA-COAD. In addition, we replaced our unsupervised loss with cosine similarity loss; our approach outperforms the setting of using cosine similarity loss by 3%-6%.

4 Conclusion

Developing data-efficient multimodal learning is crucial to advance the survival assessment of cancer patients in a variety of clinical data scenarios. We demonstrated that the proposed PathOmics framework is useful for improving the survival prediction of colon and rectum cancer patients. Importantly, our approach opens up perspectives for exploring the key insights of intrinsic genotype-phenotype interactions in complex cancer data across modalities. Our finetuning approach broadens the scope of dataset inclusion, particularly for model finetuning and evaluation, while enhancing model efficiency on analyzing multimodal clinical data in real-world settings. In addition, the use of synthetic data and developing a foundation model training will be helpful to improve the robustness of multimodal data fusion [11, 15].

4.0.1 Acknowledgements.

The results of this study are based on the data collected from the public TCGA Research Network: https://www.cancer.gov/tcga.

References

- [1] Bilal, M., Raza, S.E.A., Azam, A., Graham, S., Ilyas, M., Cree, I.A., Snead, D., Minhas, F., Rajpoot, N.M.: Development and validation of a weakly supervised deep learning framework to predict the status of molecular pathways and key mutations in colorectal cancer from routine histology images: a retrospective study. The Lancet Digital Health 3(12), e763–e772 (2021)

- [2] Cerami, E., Gao, J., Dogrusoz, U., Gross, B.E., Sumer, S.O., Aksoy, B.A., Jacobsen, A., Byrne, C.J., Heuer, M.L., Larsson, E., et al.: The cbio cancer genomics portal: an open platform for exploring multidimensional cancer genomics data. Cancer discovery 2(5), 401–404 (2012)

- [3] Chaudhary, K., Poirion, O.B., Lu, L., Garmire, L.X.: Deep learning–based multi-omics integration robustly predicts survival in liver cancerusing deep learning to predict liver cancer prognosis. Clinical Cancer Research 24(6), 1248–1259 (2018)

- [4] Cheerla, A., Gevaert, O.: Deep learning with multimodal representation for pancancer prognosis prediction. Bioinformatics 35(14), i446–i454 (2019)

- [5] Chen, R.J., Lu, M.Y., Wang, J., Williamson, D.F., Rodig, S.J., Lindeman, N.I., Mahmood, F.: Pathomic fusion: an integrated framework for fusing histopathology and genomic features for cancer diagnosis and prognosis. IEEE Transactions on Medical Imaging 41(4), 757–770 (2020)

- [6] Chen, R.J., Lu, M.Y., Weng, W.H., Chen, T.Y., Williamson, D.F., Manz, T., Shady, M., Mahmood, F.: Multimodal co-attention transformer for survival prediction in gigapixel whole slide images. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 4015–4025 (2021)

- [7] Chen, R.J., Lu, M.Y., Williamson, D.F., Chen, T.Y., Lipkova, J., Noor, Z., Shaban, M., Shady, M., Williams, M., Joo, B., et al.: Pan-cancer integrative histology-genomic analysis via multimodal deep learning. Cancer Cell 40(8), 865–878 (2022)

- [8] Clark, K., Vendt, B., Smith, K., Freymann, J., Kirby, J., Koppel, P., Moore, S., Phillips, S., Maffitt, D., Pringle, M., et al.: The cancer imaging archive (tcia): maintaining and operating a public information repository. Journal of digital imaging 26, 1045–1057 (2013)

- [9] Dietterich, T.G., Lathrop, R.H., Lozano-Pérez, T.: Solving the multiple instance problem with axis-parallel rectangles. Artificial intelligence 89(1-2), 31–71 (1997)

- [10] Ding, K., Liu, Q., Lee, E., Zhou, M., Lu, A., Zhang, S.: Feature-enhanced graph networks for genetic mutational prediction using histopathological images in colon cancer. In: Medical Image Computing and Computer Assisted Intervention–MICCAI 2020: 23rd International Conference, Lima, Peru, October 4–8, 2020, Proceedings, Part II 23. pp. 294–304. Springer (2020)

- [11] Ding, K., Zhou, M., Wang, H., Gevaert, O., Metaxas, D., Zhang, S.: A large-scale synthetic pathological dataset for deep learning-enabled segmentation of breast cancer. Scientific Data 10(1), 231 (2023)

- [12] Ding, K., Zhou, M., Wang, H., Zhang, S., Metaxas, D.N.: Spatially aware graph neural networks and cross-level molecular profile prediction in colon cancer histopathology: a retrospective multi-cohort study. The Lancet Digital Health 4(11), e787–e795 (2022)

- [13] Ding, K., Zhou, M., Wang, Z., Liu, Q., Arnold, C.W., Zhang, S., Metaxas, D.N.: Graph convolutional networks for multi-modality medical imaging: Methods, architectures, and clinical applications. arXiv preprint arXiv:2202.08916 (2022)

- [14] Gao, J., Aksoy, B.A., Dogrusoz, U., Dresdner, G., Gross, B., Sumer, S.O., Sun, Y., Jacobsen, A., Sinha, R., Larsson, E., et al.: Integrative analysis of complex cancer genomics and clinical profiles using the cbioportal. Science signaling 6(269), pl1–pl1 (2013)

- [15] Gao, Y., Li, Z., Liu, D., Zhou, M., Zhang, S., Meta, D.N.: Training like a medical resident: Universal medical image segmentation via context prior learning. arXiv preprint arXiv:2306.02416 (2023)

- [16] Gentles, A.J., Newman, A.M., Liu, C.L., Bratman, S.V., Feng, W., Kim, D., Nair, V.S., Xu, Y., Khuong, A., Hoang, C.D., et al.: The prognostic landscape of genes and infiltrating immune cells across human cancers. Nature medicine 21(8), 938–945 (2015)

- [17] Ilse, M., Tomczak, J., Welling, M.: Attention-based deep multiple instance learning. In: International conference on machine learning. pp. 2127–2136. PMLR (2018)

- [18] Kather, J.N., Pearson, A.T., Halama, N., Jäger, D., Krause, J., Loosen, S.H., Marx, A., Boor, P., Tacke, F., Neumann, U.P., et al.: Deep learning can predict microsatellite instability directly from histology in gastrointestinal cancer. Nature medicine 25(7), 1054–1056 (2019)

- [19] Kingma, D.P., Ba, J.: Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014)

- [20] Kirk, S., Lee, Y., Sadow, C., Levine: The cancer genome atlas rectum adenocarcinoma collection (tcga-read) (version 3) [data set]. The Cancer Imaging Archive (2016)

- [21] Kirk, S., Lee, Y., Sadow, C., Levine, S., Roche, C., Bonaccio, E., Filiippini, J.: Radiology data from the cancer genome atlas colon adenocarcinoma [tcga-coad] collection. The Cancer Imaging Archive (2016)

- [22] Liberzon, A., Birger, C., Thorvaldsdóttir, H., Ghandi, M., Mesirov, J.P., Tamayo, P.: The molecular signatures database hallmark gene set collection. Cell systems 1(6), 417–425 (2015)

- [23] Maron, O., Lozano-Pérez, T.: A framework for multiple-instance learning. Advances in neural information processing systems 10 (1997)

- [24] Marusyk, A., Almendro, V., Polyak, K.: Intra-tumour heterogeneity: a looking glass for cancer? Nature reviews cancer 12(5), 323–334 (2012)

- [25] Qu, H., Zhou, M., Yan, Z., Wang, H., Rustgi, V.K., Zhang, S., Gevaert, O., Metaxas, D.N.: Genetic mutation and biological pathway prediction based on whole slide images in breast carcinoma using deep learning. NPJ precision oncology 5(1), 87 (2021)

- [26] Shao, Z., Bian, H., Chen, Y., Wang, Y., Zhang, J., Ji, X., et al.: Transmil: Transformer based correlated multiple instance learning for whole slide image classification. Advances in neural information processing systems 34, 2136–2147 (2021)

- [27] Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A.N., Kaiser, Ł., Polosukhin, I.: Attention is all you need. Advances in neural information processing systems 30 (2017)

- [28] Xing, X., Chen, Z., Zhu, M., Hou, Y., Gao, Z., Yuan, Y.: Discrepancy and gradient-guided multi-modal knowledge distillation for pathological glioma grading. In: Medical Image Computing and Computer Assisted Intervention–MICCAI 2022: 25th International Conference, Singapore, September 18–22, 2022, Proceedings, Part V. pp. 636–646. Springer (2022)

- [29] Yang, M., Yang, H., Ji, L., Hu, X., Tian, G., Wang, B., Yang, J.: A multi-omics machine learning framework in predicting the survival of colorectal cancer patients. Computers in Biology and Medicine 146, 105516 (2022)

- [30] Zaheer, M., Kottur, S., Ravanbakhsh, S., Poczos, B., Salakhutdinov, R.R., Smola, A.J.: Deep sets. Advances in neural information processing systems 30 (2017)

Appendix

Kexin DingMu ZhouDimitris N. MetaxasShaoting Zhang

5 Multi-head Self-attention

The Multihead self-attention (MSA) is the concatenation of k self-attention (SA) operations. The SA uses -dim patient embedding as the query Q, key K, and value V to learn the pairwise relationship between and :

| (5) |

| (6) |

6 Discrete-time Survival Prediction and Evaluation

We extend the definition and detailed proof of "discrete-time survival prediction" as follows. The continuous event time could be discretized as , which is equal to r, where and j is the index of four non-overlapped intervals. The discrete ground truth is . With patient-wise embedding , we define the hazard function as , which is used for calculating the survival function (i.e., C-index calculation) through (i.e., ). During the supervised finetuning, the log-likelihood loss for model parameter updation is defined as , where means patient passed away during and means patient lived after .

7 Appendix Results of Baseline Models

7.1 The Results of Baseline Models with Single-modal Data

In Table S2, We reported the results of the baselines with single modality data. Each column represents the C-index values on the testing set in single modality.

| TCGA-COAD | ||||||

| Model | Pretrain with image and mRNA | Pretrain with image and CNA | Pretrain with image and methylation | |||

| Image | mRNA | Image | CNA | Image | Methy | |

| Deep -Sets | ||||||

| AB -MIL | ||||||

| Trans -MIL | ||||||

| MCAT | ||||||

| PORP -OISE | ||||||

| TCGA-READ | ||||||

| Deep -Sets | ||||||

| AB -MIL | ||||||

| Trans -MIL | ||||||

| MCAT | ||||||

| PORP -OISE | ||||||

| Model | TCGA-COAD | TCGA-READ | ||||

| 50% | 25% | 10% | 75% | 50% | 25% | |

| Deep -Sets | ||||||

| AB -MIL | ||||||

| Trans -MIL | ||||||

| MCAT | ||||||

| PORP -OISE | ||||||

7.2 The Results of Baseline Models with Fewer Finetuning Data

In Table S3, for each baseline, we include three rows to show the C-index values. The three rows are shown as "image and mRNA", "image and CNA", and "image and Methylation" in order. The baseline models are trained by fewer finetuning multimodal data.