
Pasta: Proportional Amplitude Spectrum Training Augmentation for Syn-to-Real Domain Generalization

Abstract

Synthetic data offers the promise of cheap and bountiful training data for settings where labeled real-world data is scarce. However, models trained on synthetic data significantly underperform when evaluated on real-world data. In this paper, we propose Proportional Amplitude Spectrum Training Augmentation (Pasta), a simple and effective augmentation strategy to improve out-of-the-box synthetic-to-real (syn-to-real) generalization performance. Pasta perturbs the amplitude spectra of synthetic images in the Fourier domain to generate augmented views. Specifically, with Pasta we propose a structured perturbation strategy where high-frequency components are perturbed relatively more than the low-frequency ones. For the tasks of semantic segmentation (GTAV→Real), object detection (Sim10K→Real), and object recognition (VisDA-C Syn→Real), across a total of 5 syn-to-real shifts, we find that Pasta outperforms more complex state-of-the-art generalization methods while remaining complementary to them.

1 Introduction

For complex tasks, deep models often rely on training with substantial labeled data. Real-world data can be expensive to label, and an available labeled training set often captures only a limited set of real-world appearance diversity. Synthetic data offers an opportunity to cheaply generate diverse samples that can better cover the anticipated variance of real-world test data. However, models trained on synthetic data often struggle to generalize to real-world data – e.g., the mIoU of a vanilla DeepLabv3+ [14] (ResNet-50 backbone) semantic segmentation model trained on GTAV [61] drops sharply when the model is evaluated on Cityscapes [19] instead of GTAV for the same set of classes. Several approaches have been considered to tackle this problem.

In this paper, we propose a novel augmentation strategy, called Proportional Amplitude Spectrum Training Augmentation (Pasta), to address the synthetic-to-real generalization problem. Pasta, as an augmentation strategy for synthetic data, aims to satisfy three key criteria: (1) strong out-of-the-box generalization performance, (2) plug-and-play compatibility with existing methods, and (3) benefits across tasks, backbones, and shifts. Pasta achieves this by perturbing the amplitude spectra (obtained by applying 2D FFT to input images) of the source synthetic images in the Fourier domain. While prior work has explored augmenting images in the Fourier domain [75, 77, 32], they mostly rely on the observations that – (1) among the amplitude and phase spectra, phase tends to capture more high-level semantics [54, 53, 58, 26, 76] and (2) low-frequency (LF) bands of the amplitude spectrum tend to capture style information / low-level statistics (illumination, lighting, etc.) [77].

We further observe that synthetic images have less diversity in the high-frequency (HF) bands of their amplitude spectra compared to real images (see Sec. 3.2 for a detailed discussion). Motivated by these key observations, Pasta provides a structured way to perturb the amplitude spectra of source synthetic images to ensure that a model is exposed to more variations in high-frequency components during training. We empirically observe that by relying on such a simple set of motivating observations, Pasta leads to significant improvements in synthetic-to-real generalization performance – e.g., out-of-the-box GTAV [61]→Cityscapes [19] generalization performance of a vanilla DeepLabv3+ (ResNet-50 backbone) model improves from 28.95 mIoU to 44.12 mIoU (Table 1, rows 1 and 3), an absolute improvement of 15.17 mIoU points.

Pasta involves the following steps. Given an input image, we apply 2D Fast Fourier Transform (FFT) to obtain the corresponding amplitude and phase spectra in the Fourier domain. For every spatial frequency $(m, n)$ in the amplitude spectrum, we sample a multiplicative jitter value $\epsilon$ such that the perturbation strength increases monotonically with $(m, n)$, thereby ensuring that higher-frequency components in the amplitude spectrum are perturbed more than the lower-frequency ones. Finally, given the perturbed amplitude and the original phase spectra, we apply an inverse 2D Fast Fourier Transform (iFFT) to obtain the augmented image. This simple strategy of applying fine-grained structured perturbations to the amplitude spectra of synthetic images leads to strong out-of-the-box generalization without the need for specialized components, task-specific design, or changes to learning rules. Fig. 1 shows an example image augmented by Pasta.

To summarize, we make the following contributions:

- We introduce Proportional Amplitude Spectrum Training Augmentation (Pasta), a simple and effective augmentation strategy for synthetic-to-real generalization. Pasta perturbs the amplitude spectra of synthetic images so as to expose a model to more high-frequency variations.

- We show that applying Pasta sets the new state of the art for synthetic-to-real generalization for three tasks – Semantic Segmentation (GTAV [61]→{Cityscapes [19], Mapillary [50], BDD100K [80]}), Object Detection (Sim10K [34]→Cityscapes) and Object Recognition (VisDA-C [57] Syn→Real) – covering a total of five syn-to-real shifts with multiple backbones.

- Our experimental results demonstrate that Pasta (1) frequently enables a baseline model to outperform previous state-of-the-art approaches that rely on specialized architectural components, additional synthetic or real data, or alternate objectives; (2) is complementary to existing methods; (3) outperforms prior adaptive object detection methods; and (4) either outperforms or is competitive with current augmentation strategies.

2 Related work

Domain Generalization (DG). DG typically involves training models on single or multiple labeled data sources to generalize well to novel test time data sources (unseen during training). Several approaches have been proposed to tackle domain generalization [4, 49], such as decomposing a model into domain invariant and specific components and utilizing the former to make predictions [24, 36], learning domain specific masks for generalization [8], using meta-learning to train a robust model [42, 69, 3, 13, 22], manipulating feature statistics to augment training data [83, 44, 51], and using models crafted based on risk minimization formalisms [2]. More recently, properly tuned ERMs (Empirical Risk Minimization) have proven to be a competitive DG approach [25], with follow-up work adopting various optimization and regularization techniques [66, 7] on top.

Single Domain Generalization (SDG). Unlike DG which leverages diversity across multiple sources for better generalization, SDG considers generalizing from a single source. Notable approaches for SDG use meta-learning [59] by considering strongly augmented source images as meta-target data (by exposing the model to increasingly distinct augmented views of the source data [72, 43]) and learning feature normalization schemes with auxiliary objectives [23].

Synthetic-to-Real Generalization (Syn-to-Real). Prior work on syn-to-real generalization has mostly focused on a few specific families of methods, including learning feature normalization / whitening schemes [55, 18], using external data for style injection [37, 39], explicitly optimizing for robustness [16], leveraging strong augmentations / domain randomization [81, 39], consistency objectives [82] and using contrastive techniques to aid generalization [15]. Some approaches have also considered adapting from synthetic to real images, using techniques such as adversarial training [17], adversarial alignment losses [63], balancing transferability and discriminability [9] and feature alignment [68]. [48] recently proposed tailoring synthetic data for better generalization. Pasta is most similar to methods that adopt augmentations to improve out-of-the-box generalization. We consider three of the most commonly studied syn-to-real generalization settings – (1) Semantic Segmentation - GTAV [61]→Real, (2) Object Detection - Sim10K [34]→Real and (3) Object Recognition - VisDA-C [57] Syn→Real.

Fourier Generalization & Adaptation Methods. Prior work that explored augmenting images in the Fourier domain (as opposed to the pixel space) relies on a key empirical observation [54, 53, 58, 26] that the phase component of the Fourier spectrum tends to preserve high-level semantics, and therefore focuses mostly on perturbing the amplitude. Pasta is in line with this style of approach. Amplitude Jitter (AJ) [75] and Amplitude Mixup (AM) [75] are methods similar to Pasta that augment images by perturbing their amplitude spectra. While AM mixes the amplitude spectra of different images, AJ applies a uniform perturbation with a single jitter value $\epsilon$. FSDR [32], on the other hand, isolates domain variant and invariant frequency components using extra data and sets up a learning paradigm. Building on top of [75], [78] adds a significance mask when linearly interpolating amplitudes. [33] only perturbs image-frequency components that capture little semantic information. [70] uses an encoder-decoder to obtain high/low frequency features and augments images by adding noise to high-frequency phase and low-frequency amplitude. [79, 11] study how amplitude and phase perturbations impact robustness to natural corruptions [28]. In contrast to these works, Pasta’s simple strategy of perturbing amplitude spectra in a structured way leads to strong out-of-the-box generalization without the need for specialized components, extra data, task-specific design, or changes to learning rules.

3 Method

We investigate how well models trained on a single labeled synthetic source dataset generalize to real target data, without any access to target data during training.

3.1 Preliminaries: Fourier Transform

Pasta creates augmented views by applying perturbations to the Fourier amplitude spectrum. The amplitude and phase components of Fourier spectra of images have been widely used in image processing for several applications – for studying properties (e.g., periodic interferences), compact representations (e.g., JPEG compression), digital filtering, etc. – and more recently for generalizing and adapting deep networks by perturbing the amplitude spectra [75, 77]. We now cover preliminaries explaining how to obtain amplitude and phase spectra from an image.

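Consider a single-channel image $x \in \mathbb{R}^{H \times W}$. The Fourier transform $\mathcal{F}(x)$ of $x$ can be expressed as,

$$\mathcal{F}(x)[m,n] = \sum_{h=0}^{H-1}\sum_{w=0}^{W-1} x[h,w]\,\exp\left(-2\pi i\left(\frac{h}{H}m + \frac{w}{W}n\right)\right) \tag{1}$$

where $i^2 = -1$ and $m \in \{0, \dots, H-1\}$, $n \in \{0, \dots, W-1\}$ denote spatial frequencies.

The inverse Fourier transform, $\mathcal{F}^{-1}(\cdot)$, that maps signals from the frequency domain to the image domain can be defined accordingly. Note that the Fourier spectrum $\mathcal{F}(x) \in \mathbb{C}^{H \times W}$. If $\mathcal{R}(x)[\cdot]$ and $\mathcal{I}(x)[\cdot]$ denote the real and imaginary parts of the Fourier spectrum, the corresponding amplitude ($\mathcal{A}(x)[\cdot]$) and phase ($\mathcal{P}(x)[\cdot]$) spectra can be expressed as,

$$\mathcal{A}(x)[m,n] = \sqrt{\mathcal{R}(x)[m,n]^2 + \mathcal{I}(x)[m,n]^2} \tag{2}$$

$$\mathcal{P}(x)[m,n] = \arctan\left(\frac{\mathcal{I}(x)[m,n]}{\mathcal{R}(x)[m,n]}\right) \tag{3}$$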

Without loss of generality, we will assume for the rest of this section that the amplitude and phase spectra are zero-centered, i.e., the low-frequency components (low $m, n$) have been shifted to the center (the lowest-frequency component is at the center). The Fourier transform and its inverse can be calculated efficiently using the Fast Fourier Transform (FFT) [52] algorithm. For an RGB image, we can obtain the Fourier spectrum (and its amplitude and phase spectra) independently for each channel. For the following sections, although we illustrate Pasta using a single-channel image, it can be easily extended to multi-channel (RGB) images by treating each channel independently.
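As a minimal sketch of these preliminaries, the snippet below obtains zero-centered amplitude and phase spectra with NumPy and rebuilds the image from them. The helper names `amplitude_phase` and `reconstruct` are hypothetical, and `np.angle` uses a four-quadrant arctangent, which agrees with the arctangent of the imaginary-to-real ratio up to quadrant handling.

```python
import numpy as np

def amplitude_phase(x):
    """Return zero-centered amplitude and phase spectra of a 2D array."""
    # fftshift moves the lowest frequency (DC term) to the center.
    spectrum = np.fft.fftshift(np.fft.fft2(x))
    return np.abs(spectrum), np.angle(spectrum)

def reconstruct(amplitude, phase):
    """Rebuild the image from zero-centered amplitude and phase spectra."""
    spectrum = amplitude * np.exp(1j * phase)
    # Undo the centering before inverting; the imaginary part is numerical noise.
    return np.real(np.fft.ifft2(np.fft.ifftshift(spectrum)))

rng = np.random.default_rng(0)
image = rng.random((8, 8))            # stand-in for a single-channel image
amp, pha = amplitude_phase(image)
restored = reconstruct(amp, pha)      # matches `image` up to floating-point error
```

For a multi-channel array, the same calls can be applied per channel by passing `axes=(-2, -1)` to the shift functions so that the channel axis is not shifted.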

3.2 Amplitude Spectrum Characteristics

Prior Observations. We first note that for natural images, the amplitude spectra have a specific structure – amplitude values tend to follow an inverse power law w.r.t. the spatial frequency [5, 67], i.e., roughly, the amplitude at frequency $f$, $\mathcal{A}(f) \propto \frac{1}{f^{\gamma}}$, for some $\gamma$ determined empirically (see Fig. 2 [Left]). Moreover, as noted earlier, a considerable body of prior work [54, 53, 58, 26] has shown that the phase component of the Fourier spectrum tends to preserve the semantics of the input image (more accurately, small variations in the phase component can significantly alter the semantics of the image), and the low-frequency (LF) components of the amplitude spectra tend to capture low-level photometric properties (illumination, etc.) [77]. Based on these observations, several methods [77, 32, 75] generate augmented views by modifying only the amplitude spectra of input images, leaving the phase information “unchanged” – by either copying the amplitude spectra from an image from another domain [77] or by introducing naive uniform perturbations [75]. Pasta introduces a fine-grained perturbation scheme for the amplitude spectra based on an additional empirical observation when comparing synthetic and real images.

Our Observation. Across a set of synthetic source datasets, we make the important observation that synthetic images tend to have smaller variations in the high-frequency (HF) components of their amplitude spectrum than real images. Fig. 2 [Right] shows the standard deviation of amplitude values for different frequency bands per dataset; in the high band, the values for the three real datasets (Cityscapes [19], BDD-100K [80], Mapillary [50]) are significantly larger than that of the synthetic dataset (GTAV [65]). (To produce Fig. 2 [Right], for every image, upon obtaining the amplitude spectrum, we first take an element-wise logarithm. Then, for a particular pre-defined frequency band, we compute the standard deviation of amplitude values within that band, across all the channels. Finally, we average these standard deviations across images and report the result in the bar plots.) In appendix, we show how this phenomenon is consistent across (1) several syn-to-real shifts and (2) fine-grained frequency band discretizations. This phenomenon is likely a consequence of how synthetic images are rendered. For instance, in VisDA-C [57], the synthetic images are viewpoint images of 3D object models (under different lighting conditions), so it is unlikely for them to be diverse in high-frequency details. For images from GTAV [61], synthetic renderings can lead to contributing factors such as low texture variations – for instance, “roads” (one of the head classes in semantic segmentation) in synthetic images likely have less high-frequency variation compared to real roads. (When Pasta is applied, we find that performance on “road” increases by a significant margin; per-class generalization results are in appendix.) Consequently, to generalize well to real data, we would like to ensure that our augmentation strategy exposes the model to more variations in the high-frequency components of the amplitude spectrum during training. Exposing a model to such variations during training allows it to be invariant to this “nuisance” characteristic – which prevents overfitting to a specific syn-to-real shift.
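The band-wise variation statistic described for Fig. 2 [Right] can be sketched as follows. This is an illustrative reading of that description, not the authors' script: the function name `band_std`, the three equal-width radial bands in `edges`, and the small constant added before the logarithm are all assumptions.

```python
import numpy as np

def band_std(images, edges=(0.0, 1 / 3, 2 / 3, 1.0)):
    """Average per-image std of log-amplitude inside radial frequency bands.

    images: float array of shape (N, C, H, W).
    edges:  hypothetical band limits on the normalized radial frequency.
    """
    _, _, h, w = images.shape
    amp = np.abs(np.fft.fftshift(np.fft.fft2(images), axes=(-2, -1)))
    log_amp = np.log(amp + 1e-8)  # element-wise log; epsilon avoids log(0)
    # Zero-centered frequency indices and normalized radius in [0, 1].
    m = np.arange(h) - h // 2
    n = np.arange(w) - w // 2
    radius = 2.0 * np.sqrt((m[:, None] ** 2 + n[None, :] ** 2) / (h ** 2 + w ** 2))
    stats = []
    last = len(edges) - 2
    for i in range(len(edges) - 1):
        # The last band includes its upper edge so the corner frequency is counted.
        upper = radius <= edges[i + 1] if i == last else radius < edges[i + 1]
        mask = (radius >= edges[i]) & upper
        # Std within the band across all channels, then mean across images.
        stats.append(log_amp[:, :, mask].std(axis=(1, 2)).mean())
    return np.array(stats)
```

Calling `band_std` on a batch of synthetic images and on a batch of real images, and comparing the last entry of each result, corresponds to the high-band comparison discussed above.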

3.3 Proportional Amplitude Spectrum Training Augmentation (Pasta)

Following the observation that synthetic data has lower amplitude variation than real images, and that the variation difference increases at higher frequencies, we introduce a new augmentation scheme, Pasta, that injects variation into the amplitude spectra of synthetic images to help close the syn-to-real gap. Pasta perturbs the amplitude spectra of images in a manner that is proportional to the spatial frequencies, i.e., higher frequencies are perturbed more than lower frequencies. If $g_\Lambda(\cdot)$ denotes a perturbation function that returns a perturbed amplitude spectrum $\hat{\mathcal{A}}(x)$, i.e., $\hat{\mathcal{A}}(x) = g_\Lambda(\mathcal{A}(x))$, then $g_\Lambda(\cdot)$ for Pasta can be expressed as,

$$g_\Lambda(\mathcal{A}(x))[m,n] = \epsilon[m,n]\,\mathcal{A}(x)[m,n] \tag{4}$$

$$\epsilon[m,n] \sim \mathcal{N}\left(1, \sigma^2[m,n]\right) \tag{5}$$

For every spatial frequency $(m,n)$, $\epsilon[m,n]$ ensures a “multiplicative” jitter interaction and is drawn from a Gaussian whose variance depends on the spatial frequency. $\sigma[m,n]$ controls the strength of perturbation applied for every spatial frequency. Note that a naive uniform perturbation to the amplitude spectrum can be applied with a constant function, $\sigma[m,n] = \beta$ for all $(m,n)$. This results in equal perturbation of all spatial frequencies. To ensure that we perturb HF components more relative to the LF ones, we need to make the variance ($\sigma^2[m,n]$) depend on frequency ($(m,n)$).

For a given frequency $(m,n)$ we could consider a linear dependence function such as $\sigma[m,n] = 2\alpha\sqrt{\frac{m^2+n^2}{H^2+W^2}} + \beta$, where the square-root term computes the normalized spatial frequency. However, in our empirical observations we found that a linear dependence on frequency does not allow for significant enough growth of perturbation as frequencies increase. Instead, we propose the following polynomial function of frequency to allow for sufficient perturbation increases for the high-frequency components.

$$\sigma[m,n] = \left(2\alpha\sqrt{\frac{m^2+n^2}{H^2+W^2}}\right)^{k} + \beta \tag{6}$$

$\Lambda = \{\alpha, k, \beta\}$ are controllable hyper-parameters. Overall, $\beta$ ensures a baseline level of jitter applied to all frequencies and $\alpha$, $k$ govern how the perturbations grow with increasing frequencies. Note that setting either $\alpha = 0$ or $k = 0$ (removing the frequency dependence) results in a setting where $\sigma[m,n]$ is the same across all $(m,n)$. In appendix, we verify that Pasta augmentation increases the variance metric measured in Fig. 2 [Right] for synthetic images across fine-grained frequency band discretizations.
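As a quick sanity check, suppose Eqn. 6 takes the polynomial form $\sigma[m,n] = \left(2\alpha\sqrt{(m^2+n^2)/(H^2+W^2)}\right)^{k} + \beta$ with zero-centered indices $m \in [-H/2, H/2)$ and $n \in [-W/2, W/2)$ (this form, and the hyper-parameter names $\alpha$, $k$, $\beta$, are assumptions of this worked example). For $k > 0$, the two extremes of the spectrum then evaluate to:

$$\sigma[0,0] = (2\alpha \cdot 0)^{k} + \beta = \beta, \qquad \sigma\left[-\tfrac{H}{2}, -\tfrac{W}{2}\right] = \left(2\alpha\sqrt{\frac{H^2/4 + W^2/4}{H^2+W^2}}\right)^{k} + \beta = \alpha^{k} + \beta.$$

The lowest frequency therefore receives only the baseline jitter $\beta$, while the highest frequency receives $\alpha^{k} + \beta$. With purely illustrative values $\alpha = 3$, $k = 2$, $\beta = 0.25$, the standard deviation of the jitter would grow from $0.25$ at the center to $9.25$ at the corner of the spectrum.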

The steps involved in obtaining a Pasta augmented view are summarized in Alg. 1 and Fig. 3. Given an input image, we first obtain the Fourier, amplitude and phase spectra via 2D FFT and then perturb the amplitude spectrum (while ensuring stronger perturbations for HF components) according to Eqns. 4, 5 and 6. Finally, given the perturbed amplitude spectrum and the pristine phase spectrum, we retrieve the augmented image via inverse 2D FFT. In the next section we empirically validate our augmentation strategy.
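The steps above can be sketched in NumPy as follows. This is a minimal illustration rather than the authors' implementation: it assumes the frequency-dependent standard deviation has the polynomial form $\sigma[m,n] = \left(2\alpha\sqrt{(m^2+n^2)/(H^2+W^2)}\right)^{k} + \beta$, the function name `pasta` is hypothetical, and the default values of `alpha`, `k` and `beta` are placeholders, not tuned settings.

```python
import numpy as np

def pasta(image, alpha=3.0, k=2.0, beta=0.25, rng=None):
    """Sketch of a Pasta-style augmentation for an (H, W) or (C, H, W) float array."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = image.shape[-2:]
    # Step 1: per-channel 2D FFT, shifted so the lowest frequency is at the center.
    spectrum = np.fft.fftshift(np.fft.fft2(image), axes=(-2, -1))
    amplitude, phase = np.abs(spectrum), np.angle(spectrum)
    # Step 2: zero-centered indices and normalized radial frequency in [0, 1].
    m = np.arange(h) - h // 2
    n = np.arange(w) - w // 2
    freq = 2.0 * np.sqrt((m[:, None] ** 2 + n[None, :] ** 2) / (h ** 2 + w ** 2))
    # Assumed polynomial form: larger sigma at higher frequencies, beta everywhere.
    sigma = (alpha * freq) ** k + beta
    # Step 3: multiplicative jitter drawn from N(1, sigma^2), independently per entry.
    eps = rng.normal(loc=1.0, scale=sigma, size=amplitude.shape)
    perturbed = eps * amplitude
    # Step 4: recombine with the untouched phase and invert the transform.
    # Independent jitter breaks conjugate symmetry, so only the real part is kept.
    shifted = perturbed * np.exp(1j * phase)
    return np.real(np.fft.ifft2(np.fft.ifftshift(shifted, axes=(-2, -1))))
```

The output is not clipped, so a caller that needs pixel values in a fixed range would clamp or renormalize afterwards. Setting `alpha=0` reduces the sketch to uniform jitter with standard deviation `beta` at every frequency.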

4 Experimental Details

We conduct synthetic-to-real generalization experiments across three tasks – Semantic Segmentation (SemSeg), Object Detection (ObjDet) and Object Recognition (ObjRec).

4.1 Datasets and Shifts

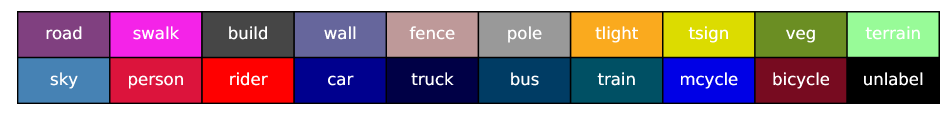

Semantic Segmentation. For SemSeg, we use GTAV [61] as our synthetic source dataset; its ground-view images are annotated with classes that are compatible with the classes in the real target datasets – Cityscapes [19], BDD100K [80] and Mapillary [50]. We train our models on the training split of the synthetic source, evaluate on the validation splits of the real targets and report performance using mIoU (mean intersection over union). We train SegFormer and HRDA (source-only) on the entirety of GTAV.
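For readers unfamiliar with the metric, mIoU can be computed from a class confusion matrix as in the sketch below. The function name `mean_iou` and the `ignore_index=255` convention for unlabeled pixels are assumptions for illustration; benchmark numbers are normally obtained by accumulating the confusion matrix over the whole validation split rather than averaging per-image scores.

```python
import numpy as np

def mean_iou(pred, target, num_classes, ignore_index=255):
    """Mean intersection-over-union between two integer label maps."""
    valid = target != ignore_index  # drop unlabeled pixels (assumed convention)
    idx = num_classes * target[valid].astype(np.int64) + pred[valid].astype(np.int64)
    # Confusion matrix: rows are ground-truth classes, columns are predictions.
    conf = np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)
    inter = np.diag(conf)
    union = conf.sum(axis=0) + conf.sum(axis=1) - inter
    present = union > 0  # skip classes absent from both prediction and target
    return (inter[present] / union[present]).mean()

pred = np.array([[0, 0], [1, 1]])
target = np.array([[0, 1], [1, 1]])
score = mean_iou(pred, target, num_classes=2)  # class IoUs are 1/2 and 2/3
```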

Object Detection. For ObjDet, we use Sim10K [34] as our synthetic source dataset with 10k street-view images (from GTAV [61]) and Cityscapes [19] as our (real) target dataset. Following prior work [35], we train on the entirety of Sim10K to detect instances of “car” and report performance on the val split of Cityscapes using mAP@50 (mean average precision at an IoU threshold of 0.5).

4.2 Models and Baselines

Models. We use DeepLabv3+ [14] (with backbones ResNet-50 [27], ResNet-101 [27]), SegFormer [73] and HRDA [30] (both with MiT-B5 backbones) architectures for SemSeg experiments. For ObjDet, we use the Faster-RCNN [60] architecture with ResNet-50 and ResNet-101 backbones. For ObjRec, we use ViT-B/16 [21] and ResNet-101 architectures, with both supervised and self-supervised (DINO [6]) initializations. We set the Pasta hyper-parameters once per task (SemSeg, ObjDet and ObjRec), keep them fixed across shifts and backbones, and apply Pasta in conjunction with consistent geometric and photometric augmentations per task. We provide more details in appendix.

Points of Comparison. In addition to prior work in syn-to-real generalization, we also compare Pasta with other augmentation strategies – (1) RandAugment (RandAug) [20] and (2) Photometric Distortion (PD) [12]. The sequence of operations PD uses to augment input images is – randomized brightness, randomized contrast, RGB→HSV conversion, randomized saturation & hue changes, HSV→RGB conversion, randomized contrast, and randomized channel swap.

5 Results and Findings

Table 1. SemSeg (DeepLabv3+), GTAV→Real mIoU on Cityscapes (G→C), BDD100K (G→B) and Mapillary (G→M).

| # | Method | G→C | G→B | G→M | Avg | Δ |
|---|---|---|---|---|---|---|
| | ResNet-50 | | | | | |
| 1 | Baseline (B) [18]∗ | 28.95 | 25.14 | 28.18 | 27.42 | |
| 2 | B + RandAug [20] | 31.89 | 38.28 | 34.54 | 34.54±0.57 | +7.12 |
| 3 | B + Pasta | 44.12 | 40.19 | 47.11 | 43.81±0.74 | +16.39 |
| | ResNet-101 | | | | | |
| 4 | Baseline (B) [18]∗ | 32.97 | 30.77 | 30.68 | 31.47 | |
| 5 | B + Pasta | 45.33 | 42.32 | 48.60 | 45.42±0.14 | +13.95 |

Table 2. ObjDet (Faster-RCNN), Sim10K→Cityscapes (S→C) mAP@50.

| # | Method | S→C mAP@50 | Δ |
|---|---|---|---|
| | ResNet-50 | | |
| 1 | Baseline | 39.4 | |
| 2 | Baseline + PD [12] | 51.5 | +12.1 |
| 3 | Baseline + RandAug [20] | 52.8 | +13.4 |
| 4 | Baseline + Pasta | 56.3 | +16.9 |
| 5 | Baseline + PD + Pasta | 58.0 | +18.6 |
| 6 | Baseline + RandAug + Pasta | 58.3 | +18.9 |
| | ResNet-101 | | |
| 7 | Baseline | 43.3 | |
| 8 | Baseline + PD [12] | 52.2 | +8.9 |
| 9 | Baseline + RandAug [20] | 57.2 | +13.9 |
| 10 | Baseline + Pasta | 55.2 | +11.9 |
| 11 | Baseline + PD + Pasta | 56.6 | +13.3 |
| 12 | Baseline + RandAug + Pasta | 59.9 | +16.6 |

Table 3. ObjRec, VisDA-C Syn→Real accuracy.

| # | Method | Arch. | Init. | Syn→Real Accuracy | Δ |
|---|---|---|---|---|---|
| 1 | Baseline | ResNet-101 | Sup. | 47.22 | |
| 2 | Baseline + Pasta | ResNet-101 | Sup. | 54.39 | +7.17 |
| 3 | Baseline | ViT-B/16 | Sup. | 56.06 | |
| 4 | Baseline + Pasta | ViT-B/16 | Sup. | 58.08 | +2.02 |
| 5 | Baseline | ViT-B/16 | DINO [6] | 60.93 | |
| 6 | Baseline + Pasta | ViT-B/16 | DINO [6] | 63.55 | +2.62 |

5.1 Synthetic-to-Real Generalization Results

Our syn-to-real generalization results for Semantic Segmentation (SemSeg), Object Detection (ObjDet) and Object Recognition (ObjRec) are summarized in Tables 1, 2, 3, 4, 5, 6, and 7. We discuss these results below.

Pasta considerably improves a vanilla baseline. Tables 1 and 2 show the improvements offered by Pasta for Semantic Segmentation (SemSeg) and Object Detection (ObjDet) respectively when applied to a vanilla baseline. For SemSeg (see Table 1), we find that Pasta improves a baseline DeepLabv3+ model by roughly 14 to 16 absolute mIoU points on average (see rows 1, 3, 4 and 5) across R-50 and R-101 backbones. Furthermore, these improvements are obtained consistently across all real target datasets. Similarly, for ObjDet (Table 2), Pasta offers absolute improvements of 11.9 to 16.9 mAP points for a Faster-RCNN baseline across R-50 and R-101 backbones (see rows 1, 4, 7 and 10). We further note that for SemSeg, Pasta outperforms RandAugment [20], a competing augmentation strategy. For ObjDet, Pasta either outperforms (R-50; rows 3, 4) or is competitive with RandAug (R-101; rows 9, 10). For ObjRec (see Table 3), we find that Pasta significantly improves a vanilla baseline across multiple architectures – for R-101 (rows 1, 2) and ViT-B/16 (rows 3, 4) – and initializations – for supervised (rows 3, 4) and DINO [6] (rows 5, 6) ViT-B/16 initializations.

Table 4. SemSeg, GTAV→Real mIoU: Baseline + Pasta compared with prior generalization methods.

| # | Method | G→C | G→B | G→M | Avg | Δ |
|---|---|---|---|---|---|---|
| | ResNet-50 | | | | | |
| 1 | IBN-Net [55]∗ | 33.85 | 32.30 | 37.75 | 34.63 | |
| 2 | ISW [18]∗ | 36.58 | 35.20 | 40.33 | 37.37 | |
| 3 | DRPC [81]∗ | 37.42 | 32.14 | 34.12 | 34.56 | |
| 4 | WEDGE [37]∗ | 38.36 | 37.00 | 44.82 | 40.06 | |
| 5 | ASG [16]∗ | 31.89 | N/A | N/A | N/A | |
| 6 | CSG [15]∗ | 35.27 | N/A | N/A | N/A | |
| 7 | WildNet [40]∗ | 44.62 | 38.42 | 46.09 | 43.04 | |
| 8 | DIRL [74]∗ | 41.04 | 39.15 | 41.60 | 40.60 | |
| 9 | SHADE [82]∗ | 44.65 | 39.28 | 43.34 | 42.42 | |
| 10 | B + Pasta | 44.12 | 40.19 | 47.11 | 43.81±0.74 | +0.77 |
| | ResNet-101 | | | | | |
| 11 | IBN-Net [55]∗ | 37.37 | 34.21 | 36.81 | 36.13 | |
| 12 | ISW [18]∗ | 37.20 | 33.36 | 35.57 | 35.58 | |
| 13 | DRPC [81]∗ | 42.53 | 38.72 | 38.05 | 39.77 | |
| 14 | WEDGE [37]∗ | 45.18 | 41.06 | 48.06 | 44.77 | |
| 15 | ASG [16]∗ | 32.79 | N/A | N/A | N/A | |
| 16 | CSG [15]∗ | 38.88 | N/A | N/A | N/A | |
| 17 | FSDR [32]∗ | 44.80 | 41.20 | 43.40 | 43.13 | |
| 18 | WildNet [40]∗ | 45.79 | 41.73 | 47.08 | 44.87 | |
| 19 | SHADE [82]∗ | 46.66 | 43.66 | 45.50 | 45.27 | |
| 20 | B + Pasta | 45.33 | 42.32 | 48.60 | 45.42±0.14 | +0.15 |

Table 5. ObjDet, Sim10K→Cityscapes (S→C) mAP@50: generalization with Pasta compared with (unsupervised) adaptation methods.

| # | Method | Real Data | S→C mAP@50 | Δ |
|---|---|---|---|---|
| | Generalization | | | |
| 1 | Baseline (B) | ✗ | 43.3 | |
| 2 | B + Pasta | ✗ | 55.2 | +11.9 |
| 3 | B + RandAug + Pasta | ✗ | 59.9 | +16.6 |
| | (Unsupervised) Adaptation | | | |
| 4 | EPM [31]∗ | ✓ | 51.2 | +7.9 |
| 5 | Faster-RCNN w/ rot [71]∗ | ✓ | 52.4 | +9.1 |
| 6 | ILLUME [35]∗ | ✓ | 53.1 | +9.8 |
| 7 | AWADA [47]∗ | ✓ | 53.2 | +9.9 |
| 8 | Faster-RCNN (Oracle) [71]∗ | ✓ | 70.4 | +27.1 |

Pasta outperforms state-of-the-art generalization methods. Table 4 shows how applying Pasta to a baseline outperforms existing generalization methods for SemSeg. For instance, Baseline + Pasta outperforms IBN-Net [55], ISW [18], DRPC [81], ASG [16], CSG [15], WEDGE [37], FSDR [32], WildNet [40], DIRL [74] & SHADE [82] in terms of average mIoU across real targets (for both R-50 and R-101). We would like to note that DRPC, ASG, CSG, WEDGE, FSDR, WildNet & SHADE (for R-101) use either more synthetic or real data (the entirety of GTAV [61] or additional datasets) or different base architectures, making these comparisons unfair to Pasta. Overall, when compared to prior work, Baseline + Pasta achieves state-of-the-art results across both backbones without the use of any specialized components, task-specific design, or changes to learning rules. For ObjDet, in Table 5, we find that combining RandAug + Pasta sets a new state of the art on Sim10K [34]→Cityscapes [19].

Pasta outperforms state-of-the-art adaptation methods. In Table 5, we find that both Baseline + Pasta and Baseline + RandAug + Pasta significantly outperform the state-of-the-art adaptive object detection method, AWADA [47] (rows 2, 3 and 7). Note that unlike the methods in rows 4-7, Pasta does not have access to real images during training!

Table 6. SemSeg, GTAV→Real mIoU: Pasta applied on top of existing generalization methods.

| # | Method | G→C | G→B | G→M | Avg | Δ |
|---|---|---|---|---|---|---|
| | ResNet-50 | | | | | |
| 1 | IBN-Net [55]∗ | 33.85 | 32.30 | 37.75 | 34.63 | |
| 2 | IBN-Net + Pasta | 41.90 | 41.46 | 45.88 | 43.08±0.37 | +8.45 |
| 3 | ISW [18]∗ | 36.58 | 35.20 | 40.33 | 37.37 | |
| 4 | ISW + Pasta | 42.13 | 40.95 | 45.67 | 42.91±0.27 | +5.54 |
| | ResNet-101 | | | | | |
| 5 | IBN-Net [55]∗ | 37.37 | 34.21 | 36.81 | 36.13 | |
| 6 | IBN-Net + Pasta | 43.64 | 42.46 | 47.51 | 44.54±0.89 | +8.41 |
| 7 | ISW [18]∗ | 37.20 | 33.36 | 35.57 | 35.58 | |
| 8 | ISW + Pasta | 44.46 | 43.02 | 47.35 | 44.95±0.21 | +9.37 |

Pasta is complementary to existing generalization methods. In addition to ensuring that a baseline model improves over existing methods, we find that Pasta is also complementary to existing generalization methods. For SemSeg, in Table 6, we find that applying Pasta significantly improves the generalization performance of IBN-Net [55] and ISW [18] across R-50 and R-101 (by roughly 5.5 to 9.4 absolute mIoU points on average). For ObjDet, we find that Pasta is complementary to existing augmentation methods (PD [12] and RandAug [20]; rows 5, 6, 11 and 12 in Table 2), with RandAug + Pasta setting the state of the art on Sim10K [34]→Cityscapes [19]. In appendix, we apply Pasta to CSG [15], a state-of-the-art generalization method for ObjRec on VisDA-C [57]. CSG already utilizes RandAugment, so we test Pasta in two settings: with and without RandAugment. In both scenarios, incorporating Pasta leads to improved performance.

5.2 Analyzing Pasta

Sensitivity of Pasta to $\alpha$, $k$ and $\beta$. While $\beta$ provides a baseline level of uniform jitter in the frequency domain, $\alpha$ and $k$ govern the degree of monotonicity applied to the perturbations. To assess the sensitivity of Pasta to $\alpha$, $k$ and $\beta$, in Fig. 4, we conduct experiments for ObjDet where we vary one hyper-parameter while freezing the other two. We find that performance is stable when $\alpha$ exceeds a certain threshold. For $k$, we find that while performance drops with increasing $k$, the worst generalization performance is still significantly above baseline. More importantly, we find that the generalization improvements offered by Pasta are sensitive to “extreme” values of $\beta$. Qualitatively, overly high values of $\beta$ lead to augmented views which have their semantic content significantly occluded, thereby resulting in poor generalization. As a rule of thumb, for a vanilla baseline that is not designed specifically for syn-to-real generalization, we find that keeping $\beta$ away from such extreme values leads to stable improvements.

Pasta vs other frequency-based augmentation strategies. Prior work has also considered augmenting images in the Fourier domain for syn-to-real generalization (FSDR [32]), multi-source domain generalization (FACT [75]), domain adaptation (FDA [77]) and robustness (APR [11]). Row 17 in Table 4 already shows how Pasta outperforms FSDR. In appendix, we show that for baseline DeepLabv3+ (R-50) SemSeg models on GTAV→Real, Pasta outperforms FACT, FDA and APR.

Does monotonicity matter in Pasta? As stated earlier, a key insight while designing Pasta was to perturb the high-frequency components in the amplitude spectrum more relative to the low-frequency ones. This monotonicity is governed by the choice of $\alpha$ and $k$ in Eqn. 6 (removing the frequency dependence applies a uniform level of jitter to all frequency components). To assess the importance of this monotonic setup, we compare the generalization improvements offered by Pasta under the uniform and the monotonically increasing settings. For SemSeg, for a baseline DeepLabv3+ (R-50) model, we find that the monotonically increasing setting improves over the uniform one in absolute mIoU (see Table 1 for experimental setting). Similarly, for ObjDet, for a baseline Faster-RCNN (R-50) model, we find that the monotonically increasing setting improves over the uniform one in absolute mAP (see Table 2 for experimental setting). Additionally, reversing the monotonic trend (LF perturbed more than HF) leads to significantly worse generalization performance for SemSeg, with the Avg. Real mIoU dropping even below vanilla baseline performance.

Table 7. SemSeg, GTAV→Cityscapes (G→C) mIoU across segmentation architectures.

| # | Method | G→C mIoU | Δ |
|---|---|---|---|
| | DeepLabv3+ (ResNet-101) | | |
| 1 | Baseline | 31.47 | |
| 2 | Baseline + Pasta | 45.42 | +13.95 |
| | SegFormer (MiT B5) | | |
| 3 | Baseline [29] | 45.60 | |
| 4 | Baseline + Pasta | 52.57 | +6.97 |
| | HRDA (MiT B5) | | |
| 5 | Baseline [30] | 53.01 | |
| 6 | Baseline + Pasta | 57.21 | +4.20 |

Does Pasta help for transformer based architectures? Our key syn-to-real generalization results across SemSeg and ObjDet are with CNN backbones. Following the recent surge of interest in introducing transformers to vision tasks [21], we also conduct experiments to assess if syn-to-real generalization improvements offered by Pasta generalize beyond CNNs. In Table 3, we show how applying Pasta improves syn-to-real generalization performance of ViT-B/16 baselines for supervised (rows 3, 4) and self-supervised DINO [6] initializations (rows 5, 6). In Table 7, we consider SegFormer [73] and HRDA [30] (source-only), recent transformer based segmentation frameworks, and check syn-to-real generalization performance when trained on GTAV [61] and evaluated on Cityscapes [19]. We find that applying Pasta improves performance of a vanilla baseline (by 6.97 and 4.20 mIoU points for SegFormer and HRDA respectively).

To summarize, from our experiments, we find that Pasta serves as a simple and effective plug-and-play augmentation strategy for training on synthetic data that

- provides strong out-of-the-box generalization performance – enables a baseline method to outperform existing generalization approaches

- is complementary to existing generalization methods – applying Pasta to existing methods or augmentation strategies leads to improvements

- is applicable across tasks, backbones and shifts – Pasta leads to improvements across SemSeg, ObjDet, ObjRec for multiple backbones for five syn-to-real shifts

6 Conclusion

We propose Proportional Amplitude Spectrum Training Augmentation (Pasta), a plug-and-play augmentation strategy for synthetic-to-real generalization. Pasta is motivated by the observation that the amplitude spectra are less diverse in synthetic than in real data, especially for high-frequency components. Thus, Pasta augments synthetic data by perturbing the amplitude spectra, with magnitudes increasing for higher frequencies. We show that Pasta offers strong out-of-the-box generalization performance on semantic segmentation, object detection, and object classification tasks. The strong performance of Pasta holds both alone (i.e., training with ERM using Pasta augmented images) and together with alternative generalization/augmentation algorithms. We would like to emphasize that the strength of Pasta lies in its simplicity (just modify your augmentation pipeline) and effectiveness, offering strong improvements despite not using extra modeling components, objectives, or data. We hope that future research endeavors in syn-to-real generalization take augmentation techniques like Pasta into account. Additionally, it might be of interest to the research community to explore how Pasta could be utilized for adaptation – when “little” real target data is available.

Acknowledgments. We thank Viraj Prabhu and George Stoica for fruitful discussions and valuable feedback. This work was supported in part by sponsorship from NSF Award #2144194, NASA ULI, ARL and Google.

Appendix A Appendix

This appendix is organized as follows. In Sec. A.1, we first expand on implementation and training details from the main paper. Then, in Sec. A.2, we provide per-class synthetic-to-real generalization results (see Sec. 5.1 of the main paper). Sec. A.3 includes additional discussions surrounding different aspects of Pasta. Sec. A.4 goes through an empirical analysis of the amplitude spectra for synthetic and real images. Next, Sec. A.5 contains more qualitative examples of Pasta augmentations and predictions for semantic segmentation. Finally, Sec. A.6 summarizes the licenses associated with different assets used in our experiments.

A.1 Implementation and Training Details

In this section, we outline our training and implementation details for each of the three tasks – Semantic Segmentation, Object Detection, and Object Recognition. We also summarize these details in Tables. 8(a), 8(b), and 8(c).

| Config | Value (Tables 1, 4 and 6) | Value (Table. 7; SegFormer) | Value (Table. 7; HRDA) |

| Source Data | GTAV (Train Split) | GTAV (Entirety) | GTAV (Entirety) |

| Target Data | Cityscapes (Val Split), BDD100K (Val Split), Mapillary (Val Split) | Cityscapes (Val Split) | Cityscapes (Val Split) |

| Segmentation Architecture | DeepLabv3+ [14] | SegFormer [73] | HRDA [30] |

| Backbones | ResNet-50 (R-50) [27], ResNet-101 (R-101) [27], MobileNetv2 (Mn-v2) [64] | MiT-B5 [73] | MiT-B5 [73] |

| Training Resolution | Original GTAV resolution | Original GTAV resolution | Original GTAV resolution |

| Optimizer | SGD | AdamW | AdamW |

| Initial Learning Rate | |||

| Learning Rate Schedule | Poly-LR | Poly-LR | Poly-LR |

| Initialization | Imagenet Pre-trained Weights [38] | Imagenet Pre-trained Weights [38] | Imagenet Pre-trained Weights [38] |

| Iterations | k | k | k |

| Batch Size | |||

| Augmentations w/ Pasta | Gaussian Blur, Color Jitter, Random Crop, Random Horizontal Flip, Random Scaling | Photometric Distortion, Random Crop, Random Flip | Photometric Distortion, Random Crop, Random Flip |

| Model Selection Criteria | Best in-domain validation performance | End of training | End of training |

| GPUs | (R-50/101) or (Mn-v2) |

| Config | Value (Tables 2 and 5) |

| Source Data | Sim10K |

| Target Data | Cityscapes (Val Split) |

| Detection Architecture | Faster-RCNN [60] |

| CNN Backbones | ResNet-50 (R-50) [27], ResNet-101 (R-101) [27] |

| Training Resolution | Original Sim10K resolution for R-50, R-101 |

| Optimizer | SGD (momentum = ) |

| Initial Learning Rate | |

| Learning Rate Schedule | Step-LR, Warmup iterations, Warmup Ratio |

| Steps k & k iterations | |

| Initialization | Imagenet Pre-trained Weights [38] |

| Iterations | k |

| Batch Size | |

| Augmentations w/ Pasta | Resize, Random Flip |

| Model Selection Criteria | End of training |

| GPUs |

| Config | Value (Table. 3) | Value (for Table. 10, following [15]) |

| Source Data | VisDA-C Synthetic | VisDA-C Synthetic |

| Target Data | VisDA-C Real | VisDA-C Real |

| Backbone | ResNet-101 (R-101) [27], ViT-B/16 [21] (Sup & DINO [6]) | ResNet-101 (R-101) [27] |

| Optimizer | SGD w/ momentum (0.9) | SGD w/ momentum (0.9) |

| Initial Learning Rate | ||

| Weight Decay | ||

| Initialization | Imagenet [38] | Imagenet [38] |

| Epochs | ||

| Batch Size | ||

| Augmentations w/ Pasta | RandomCrop, RandomHorizontalFlip | RandAugment [20] |

| Model Selection Criteria | Best in-domain val performance | Best in-domain val performance |

| GPUs | (CNN) or (ViT) |

Semantic Segmentation (see Table. 8(a)). For our primary semantic segmentation (SemSeg) experiments (in Tables 1, 4, and 6), we use the DeepLabv3+ [14] architecture with backbones – ResNet-50 (R-50) [27] and ResNet-101 (R-101) [27]. In Sec. A.2, we report additional results with DeepLabv3+ using the MobileNetv2 (Mn-v2) [64] backbone. We adopt the hyper-parameter (and distributed training) settings used by [18] for training. Similar to [18], we train ResNet-50, ResNet-101 and MobileNet-v2 models in a distributed manner across 4, 4 and 2 GPUs respectively. We use SGD (with momentum ) as the optimizer with an initial learning rate of and a polynomial learning rate schedule [46] with a power of . Our models are initialized with supervised ImageNet [38] pre-trained weights. We train all our models for k iterations with a batch size of for GTAV. Our segmentation models are trained on the train split of GTAV and evaluated on the validation splits of the target datasets (Cityscapes, BDD100K and Mapillary). For segmentation, Pasta is applied with a base set of positional and photometric augmentations (Pasta first and then the base augmentations) – GaussianBlur, ColorJitter, RandomCrop, RandomHorizontalFlip and RandomScaling. For RandAugment [20], we only consider the vocabulary of photometric augmentations for segmentation & detection. We conduct ablations (within computational constraints) for the best performing RandAugment setting using R-50 for syn-to-real generalization and find that best performance is achieved when (photometric) augmentations are sampled at the highest severity level () from the augmentation vocabulary for application. Whenever we train a prior generalization approach, say ISW [18] or IBN-Net [55], we follow the same set of hyper-parameter configurations as used in the respective papers. Table. 8(a) includes details for SegFormer and HRDA runs. All models except SegFormer and HRDA were trained across 3 random seeds.
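As a rough illustration of the polynomial learning rate schedule mentioned above, the decay rule can be sketched as follows. This is only a minimal sketch and not the training code used for the experiments: the function name `poly_lr` is hypothetical, and the values of `base_lr`, `max_iters` and `power` in the usage lines are placeholders rather than the settings adopted from [18].

```python
def poly_lr(base_lr: float, cur_iter: int, max_iters: int, power: float) -> float:
    """Polynomially decay base_lr towards 0 over max_iters iterations."""
    if not 0 <= cur_iter <= max_iters:
        raise ValueError("cur_iter must lie in [0, max_iters]")
    return base_lr * (1.0 - cur_iter / max_iters) ** power


if __name__ == "__main__":
    # Placeholder values, chosen only for illustration.
    for it in (0, 500, 1000):
        print(it, poly_lr(base_lr=0.1, cur_iter=it, max_iters=1000, power=0.9))
```

The learning rate starts at `base_lr` and reaches zero at the final iteration; a larger `power` makes the decay steeper early in training.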

Object Detection (see Table. 8(b)). For object detection (ObjDet), we use the Faster-RCNN [60] architecture with ResNet-50 and ResNet-101 backbones (see Tables 2, 5 in the main paper). Consistent with prior work [35], we train on the entirety of Sim10K [34] (source dataset) for k iterations and pick the last checkpoint for Cityscapes (target dataset) evaluation. We use SGD with momentum as our optimizer with an initial learning rate of (adjusted according to a step learning rate schedule) and a batch size of . Our models are initialized with supervised ImageNet [38] pre-trained weights. All models are trained on 4 GPUs in a distributed manner. For detection, we also compare Pasta against RandAugment [20] and Photometric Distortion (PD). The sequence of operations in PD to augment input images is – randomized brightness, randomized contrast, RGB→HSV conversion, randomized saturation & hue changes, HSV→RGB conversion, randomized contrast, and randomized channel swap.

Object Recognition (see Table. 8(c)). For our primary object recognition (ObjRec) experiments (see Table 3 in main paper), we train classifiers with ResNet-101 [27] and ViT-B/16 [21] backbones. For ResNet-101, we start from supervised ImageNet [38] pre-trained weights. For ViT-B/16 we start from both supervised and self-supervised (DINO [6]) ImageNet pre-trained weights. We train these classifiers for epochs with SGD (momentum , weight decay ) with an initial learning rate of with cosine annealing – the newly added classifier and bottleneck layers [10] were trained with more learning rate than the rest of the network. We train on of the (synthetic) VisDA-C training split (and use the remaining for model selection) with a batch size of 128 in a distributed manner across 4 GPUs. We use RandomCrop and RandomHorizontalFlip as additional augmentations with Pasta. In Sec. A.2, we provide additional results demonstrating how Pasta is complementary to CSG [15], a state-of-the-art generalization method on VisDA-C. For these experiments, to ensure fair comparisons, we train ResNet-101 based classifiers (with supervised ImageNet pre-trained weights) with the same configurations as [15]. This includes the use of an SGD (with momentum ) optimizer with a learning rate of , weight decay of and a batch size of . These models are trained for epochs. CSG [15] also uses RandAugment [20] as an augmentation – we check the effectiveness of Pasta when applied with and without RandAugment during training.

A.2 Synthetic-to-Real Generalization Results

| Method | Real mIoU (G→C) | Real mIoU (G→B) | Real mIoU (G→M) | Real mIoU (Avg) | Δ |

| 1 Baseline (B) [18]∗ | 25.92 | 25.73 | 26.45 | 26.03 | |

| 2 B + Pasta | 39.75 | 37.54 | 43.28 | 40.19 ± 0.45 | +14.16 |

| 3 IBN-Net [55]∗ | 30.14 | 27.66 | 27.07 | 28.29 | |

| 4 IBN-Net + Pasta | 37.57 | 36.97 | 40.91 | 38.48 ± 0.75 | +10.19 |

| 5 ISW [18]∗ | 30.86 | 30.05 | 30.67 | 30.53 | |

| 6 ISW + Pasta | 37.99 | 37.49 | 42.44 | 39.31 ± 1.26 | +8.79 |

MobileNet-v2 GTAV→Real Generalization Results. Our key generalization results for semantic segmentation (SemSeg) (in Tables. 1, 4 and 6) are with ResNet-50 and ResNet-101 backbones. In Table. 9, we also report results when Pasta is applied to DeepLabv3+ [14] models with MobileNetv2 – a lighter backbone tailored for resource constrained settings. We find that Pasta substantially improves a vanilla baseline (by absolute mIoU points; rows 1, 2) and is complementary to existing methods (rows 3-6).

Prior work using more data / different architectures. In Table. 4 of the main paper, we compare models trained using Pasta with prior syn-to-real generalization methods. As stated in Sec. 5.1 of the main paper, prior methods such as DRPC [81], ASG [16], CSG [15], WEDGE [37], FSDR [32] and WildNet [40] & SHADE [82] (for R-101) use either more data or different base architectures, making these comparisons unfair to Pasta. For instance, WEDGE [37] and CSG [15] use DeepLabv2, ASG [16] uses FCNs, DRPC [81] & SHADE [82] (for R-101) use the entirety of GTAV (not just the training split; more data compared to Pasta) and WEDGE uses 5k extra Flickr images in its overall pipeline. FSDR [32] uses FCNs and the entirety of GTAV for training. FSDR [32] and WildNet [40] also use extra ImageNet [38] images for stylization / randomization. For FSDR, the first step in the pipeline also requires access to SYNTHIA [62]. Unlike Pasta, FSDR and DRPC also select the checkpoints that perform best on target data [1, 81], which is unrealistic since assuming access to labeled (real) target data (for training or model selection) is not practical for syn-to-real generalization. We note that despite having access to less data, Pasta outperforms these methods on GTAV→Real.

| Method | Accuracy | Δ |

| 1 Oracle (IN-1k) [15]∗ | 53.30 | |

| 2 Baseline (Syn. Training) [15]∗ | 49.30 | |

| 3 CSG [15]∗ | 64.05 | |

| 4 CSG (RandAug) | 63.84 ± 0.29 | +14.54 |

| 5 CSG (Pasta) | 64.29 ± 0.56 | +14.99 |

| 6 CSG (RandAug + Pasta) | 65.86 ± 1.13 | +16.56 |

Pasta complementary to CSG [15]. To evaluate the efficacy of Pasta for object recognition (ObjRec), in Table. 10, we apply Pasta to CSG [15], a state-of-the-art generalization method on VisDA-C Syn→Real. Since CSG inherently uses RandAugment [20], we apply Pasta both with (row 6) and without (row 5) RandAugment and find that applying Pasta improves over vanilla CSG (row 4) in both conditions.

| Method | road | building | vegetation | car | sidewalk | sky | pole | person | terrain | fence | wall | bicycle | sign | bus | truck | rider | light | train | motorcycle | mIoU |

| 1 Baseline (B) [18]∗ | 45.1 | 56.8 | 80.9 | 61.0 | 23.1 | 38.9 | 23.9 | 58.2 | 24.3 | 16.3 | 16.6 | 13.4 | 7.3 | 20.0 | 17.4 | 1.2 | 30.0 | 7.2 | 8.5 | 29.0 |

| 2 B + RandAug | 58.5 | 56.3 | 77.3 | 83.7 | 30.3 | 45.1 | 27.3 | 57.8 | 20.6 | 20.9 | 11.4 | 16.9 | 9.7 | 20.3 | 15.0 | 2.4 | 28.1 | 14.0 | 10.3 | 31.9 |

| 3 B + Pasta | 84.1 | 80.5 | 85.8 | 85.9 | 40.1 | 81.8 | 31.9 | 66.0 | 31.4 | 28.1 | 29.0 | 21.8 | 28.5 | 24.5 | 28.7 | 7.0 | 32.9 | 23.4 | 27.2 | 44.1 |

| 4 IBN-Net [55]∗ | 51.3 | 59.7 | 85.0 | 76.7 | 24.1 | 67.8 | 23.0 | 60.6 | 40.6 | 25.9 | 14.1 | 15.7 | 10.1 | 23.7 | 16.3 | 0.8 | 30.9 | 4.9 | 11.9 | 33.9 |

| 5 IBN-Net + Pasta | 78.1 | 79.5 | 85.8 | 84.5 | 31.7 | 80.1 | 32.2 | 63.4 | 38.8 | 21.7 | 28.0 | 18.2 | 22.6 | 26.4 | 29.0 | 2.8 | 34.0 | 16.5 | 22.9 | 41.9 |

| 6 ISW [18]∗ | 60.5 | 65.4 | 85.4 | 82.7 | 25.5 | 70.3 | 25.8 | 61.9 | 38.5 | 23.7 | 21.6 | 15.5 | 12.2 | 25.4 | 21.1 | 0.0 | 33.3 | 9.3 | 16.8 | 36.6 |

| 7 ISW + Pasta | 76.6 | 78.4 | 85.6 | 83.7 | 32.5 | 83.1 | 33.1 | 63.4 | 40.4 | 23.6 | 27.3 | 17.4 | 22.3 | 25.7 | 30.1 | 3.3 | 35.9 | 18.2 | 19.9 | 42.1 |

| Method | road | sky | building | vegetation | car | sidewalk | fence | terrain | truck | pole | bus | wall | sign | person | light | bicycle | motorcycle | rider | train | mIoU |

| 1 Baseline (B) [18]∗ | 48.2 | 32.3 | 34.3 | 58.2 | 67.3 | 23.0 | 19.8 | 21.4 | 11.3 | 28.4 | 5.6 | 3.4 | 18.1 | 43.9 | 30.2 | 11.1 | 16.0 | 5.1 | 0.0 | 25.1 |

| 2 B + RandAug | 75.4 | 82.8 | 67.7 | 74.7 | 74.1 | 39.3 | 32.7 | 26.6 | 22.7 | 37.0 | 16.5 | 5.0 | 23.9 | 51.7 | 35.8 | 12.0 | 29.3 | 20.2 | 0.0 | 38.3 |

| 3 B + Pasta | 86.0 | 86.6 | 74.8 | 72.7 | 82.8 | 38.4 | 31.2 | 24.9 | 23.7 | 34.8 | 6.4 | 11.2 | 26.2 | 55.1 | 37.0 | 13.3 | 38.3 | 19.9 | 0.0 | 40.2 |

| 4 IBN-Net [55]∗ | 68.9 | 66.9 | 56.7 | 66.6 | 70.3 | 28.8 | 21.4 | 22.1 | 12.8 | 31.9 | 7.2 | 6.0 | 21.7 | 50.2 | 35.0 | 18.1 | 23.2 | 5.8 | 0.0 | 32.3 |

| 5 IBN-Net + Pasta | 86.1 | 87.6 | 74.9 | 72.3 | 82.3 | 36.6 | 30.6 | 26.2 | 25.3 | 37.1 | 10.8 | 13.2 | 25.5 | 56.0 | 36.8 | 21.4 | 38.9 | 26.0 | 0.0 | 41.5 |

| 6 ISW [18]∗ | 74.9 | 77.4 | 65.2 | 69.0 | 72.4 | 30.4 | 22.6 | 26.2 | 16.2 | 34.9 | 6.1 | 11.5 | 22.2 | 50.3 | 36.9 | 11.4 | 31.3 | 10.0 | 0.0 | 35.2 |

| 7 ISW + Pasta | 86.5 | 87.9 | 74.0 | 73.0 | 83.2 | 37.7 | 28.6 | 28.1 | 23.4 | 37.2 | 7.8 | 11.3 | 25.0 | 55.1 | 37.8 | 23.6 | 35.5 | 22.4 | 0.0 | 41.0 |

| Method | sky | road | vegetation | building | sidewalk | car | fence | pole | terrain | wall | sign | truck | person | bus | light | bicycle | rider | motorcycle | train | mIoU |

| 1 Baseline (B) [18]∗ | 42.2 | 46.8 | 64.9 | 33.5 | 24.9 | 72.3 | 14.4 | 27.7 | 23.8 | 6.7 | 8.5 | 23.7 | 53.7 | 7.0 | 35.8 | 18.4 | 4.9 | 15.5 | 10.8 | 28.2 |

| 2 B + RandAug [20] | 51.7 | 59.6 | 75.5 | 39.5 | 33.9 | 81.3 | 22.6 | 37.2 | 24.6 | 4.2 | 32.4 | 31.2 | 56.5 | 13.4 | 36.2 | 18.0 | 11.9 | 21.4 | 5.0 | 34.5 |

| 3 B + Pasta | 93.3 | 83.0 | 76.3 | 76.9 | 40.2 | 83.3 | 27.1 | 40.9 | 37.1 | 19.2 | 50.4 | 35.2 | 63.3 | 19.5 | 41.4 | 29.4 | 25.2 | 38.1 | 15.2 | 47.1 |

| 4 IBN-Net [55]∗ | 82.0 | 66.4 | 73.5 | 57.1 | 32.9 | 73.1 | 24.9 | 31.5 | 28.4 | 10.5 | 38.9 | 30.7 | 56.4 | 16.0 | 38.0 | 18.6 | 9.1 | 16.6 | 12.6 | 37.7 |

| 5 IBN-Net + Pasta | 94.4 | 81.7 | 76.1 | 76.9 | 40.4 | 80.8 | 27.1 | 40.3 | 38.7 | 19.0 | 43.2 | 38.0 | 62.0 | 20.5 | 39.3 | 25.7 | 23.6 | 31.6 | 12.4 | 45.9 |

| 6 ISW [18]∗ | 88.2 | 74.8 | 74.3 | 66.1 | 36.2 | 78.7 | 26.0 | 35.4 | 30.2 | 15.2 | 36.6 | 33.3 | 58.6 | 14.4 | 37.9 | 17.8 | 11.1 | 20.4 | 11.0 | 40.3 |

| 7 ISW + Pasta | 94.6 | 82.9 | 76.7 | 76.0 | 41.9 | 81.8 | 25.8 | 40.4 | 40.9 | 18.8 | 43.1 | 34.1 | 61.6 | 19.9 | 40.2 | 24.5 | 22.3 | 30.5 | 11.6 | 45.7 |

Per-class GTAV→Real Generalization Results. Tables 11, 12 and 13 include per-class synthetic-to-real generalization results when a DeepLabv3+ (R-50 backbone) model trained on GTAV is evaluated on Cityscapes, BDD100K and Mapillary respectively. For GTAV→Cityscapes (see Table. 11), we find that Baseline + Pasta consistently improves over Baseline and RandAugment. For IBN-Net and ISW in this setting, we observe consistent improvements (except for the classes terrain and fence). For GTAV→BDD100K (see Table. 12), we find that for the Baseline, while Pasta outperforms RandAugment on the majority of classes, both are fairly competitive and outperform the vanilla Baseline approach. For IBN-Net and ISW, Pasta almost always outperforms the vanilla approaches (except for the class wall). For GTAV→Mapillary (see Table. 13), for Baseline, we find that Pasta outperforms the vanilla approach and RandAugment. For IBN-Net and ISW, Pasta outperforms the vanilla approaches with the exception of the classes train and fence.

Pasta helps SYNTHIA→Real Generalization. We conducted additional syn-to-real experiments using SYNTHIA [62] as the source domain and Cityscapes, BDD100K and Mapillary as the target domains. For a baseline DeepLabv3+ model (R-101), we find that Pasta – (1) provides strong improvements over the vanilla baseline (% mIoU, % absolute improvement) and (2) is competitive with RandAugment (% mIoU). More generally, we find that syn-to-real generalization performance is worse when SYNTHIA is used as the source domain as opposed to GTAV – for instance, ISW [18] achieves an average mIoU of % (SYNTHIA) as opposed to % (GTAV). SYNTHIA has significantly fewer images compared to GTAV (k vs k), which likely contributes to relatively worse generalization performance.

| Method | Base Augmentations (Positional) | Base Augmentations (Photometric) | Real mIoU | Δ |

| 1 Baseline (B) | ✓ | ✓ | 26.99 | |

| 2 B + Pasta | ✗ | ✗ | 40.25 | +13.26 |

| 3 B + Pasta | ✓ | ✗ | 40.37 | +13.38 |

| 4 B + Pasta | ✓ | ✓ | 41.90 | +14.91 |

Pasta and Base Augmentations. Pasta is applied with some consistent color and positional augmentations (see Sec. A.1). To understand if Pasta alone leads to any improvements, in Table. 14, we conduct a controlled experiment where we train a baseline DeepLabv3+ model (R-50) on GTAV (by downsampling input images to a resolution of due to compute constraints) with different augmentations and evaluate on real data (Cityscapes, BDD100K and Mapillary). We find that applying Pasta alone leads to significant improvements ( absolute mIoU points; row 2) and including the positional (row 3) and photometric (row 4) augmentations leads to further improvements.

A.3 Pasta Analysis

In this section, we provide more discussions surrounding different aspects of Pasta.

| Method | Real Data | Real mIoU | |

| 1 Baseline | ✗ | 26.99 | |

| 2 Baseline + FDA [77] | ✓ | 33.04 | +6.05 |

| 3 Baseline + APR-P [11] | ✗ | 37.52 | +10.53 |

| 4 Baseline + AJ (FACT [75]) | ✗ | 30.70 | +3.71 |

| 5 Baseline + AM (FACT [75]) | ✗ | 39.70 | +12.71 |

| 6 Baseline + Pasta | ✗ | 41.90 | +14.91 |

Pasta vs other frequency domain augmentation methods. As noted in Sec 5.2 of the main paper, prior work has also considered augmenting images in the frequency domain as part of their training pipeline. Notably, FSDR [32] augments images in the Fourier domain. Table. 4 in the main paper shows how Pasta (when applied to a vanilla baseline) already outperforms FSDR (by absolute mIoU points). In Table. 15, we compare Pasta with other frequency domain augmentation strategies (summarized below) when applied to a baseline DeepLabv3+ (R-50) SemSeg model trained on GTAV (by downsampling input images to a resolution of due to compute constraints) and evaluated on real data (Cityscapes, BDD100K and Mapillary).

- FDA [77] – FDA is a recent approach for syn-to-real domain adaptation and naturally requires access to unlabeled target data. In FDA, to augment source images, low frequency bands of the amplitude spectra of source images are replaced with those of target – essentially mimicking a cheap style transfer operation. Since we do not assume access to target data in our experimental settings, a direct comparison is not possible. Instead, we consider a proxy task where we intend to generalize to real datasets (Cityscapes, BDD100K, Mapillary) by assuming additional access to 6 real world street view images under different weather conditions (for style transfer) – sunny day, rainy day, cloudy day, etc. – in addition to synthetic images from GTAV. We find that Pasta outperforms FDA (row 2 vs row 6 in Table. 15).

- APR-P [11] – Amplitude Phase Recombination is a recent method designed to improve robustness against natural corruptions. APR replaces the amplitude spectrum of an image with the amplitude spectrum from an augmented view (APR-S) or different images (APR-P). When applied to synthetic images from GTAV, we find that Pasta outperforms APR-P (row 3 vs row 6 in Table. 15).

- FACT [75] – FACT is a multi-source domain generalization method for object recognition that uses one of two frequency domain augmentation strategies – Amplitude Jitter (AJ) and Amplitude Mixup (AM) – in a broader training pipeline. AM involves perturbing the amplitude spectrum of the image of concern by performing a convex combination with the amplitude spectrum of another “mixup” image drawn from the same source data. AJ perturbs the amplitude spectrum with a single jitter value for all spatial frequencies and channels. We compare with both AM and AJ for SemSeg and find that Pasta outperforms both (rows 4, 5 vs row 6 in Table. 15). Additionally, for multi-source domain generalization on PACS [41], we find that FACT-Pasta (where we replace the augmentations in FACT with Pasta) outperforms FACT-Vanilla – vs average leave-one-out domain accuracy.
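To make the differences between these amplitude-space baselines concrete, the operations described above can be sketched in NumPy as follows. This is an illustrative sketch under stated assumptions, not the reference implementation of FDA or FACT: all function names are hypothetical, the jitter in `amplitude_jitter` is assumed to be multiplicative, and the low-frequency region in `low_freq_swap` is assumed to be a centred rectangular block whose half-size is a fraction `frac` of the image size.

```python
import numpy as np


def amp_phase(img):
    """Amplitude and phase of the per-channel 2D FFT of an HxWxC image."""
    spec = np.fft.fft2(img, axes=(0, 1))
    return np.abs(spec), np.angle(spec)


def recombine(amp, phase):
    """Invert the FFT from an amplitude/phase pair and keep the real part."""
    return np.real(np.fft.ifft2(amp * np.exp(1j * phase), axes=(0, 1)))


def amplitude_mixup(img, other, lam):
    """AM-style: convex combination of two amplitude spectra; phase of img is kept."""
    amp, phase = amp_phase(img)
    amp_other, _ = amp_phase(other)
    return recombine((1.0 - lam) * amp + lam * amp_other, phase)


def amplitude_jitter(img, scale):
    """AJ-style: a single (assumed multiplicative) jitter for all frequencies and channels."""
    amp, phase = amp_phase(img)
    return recombine(scale * amp, phase)


def low_freq_swap(img, target, frac):
    """FDA-style: replace a centred low-frequency block of the amplitude spectrum."""
    if not 0.0 < frac < 0.5:
        raise ValueError("frac must lie in (0, 0.5)")
    amp, phase = amp_phase(img)
    amp_t, _ = amp_phase(target)
    # Move the zero frequency to the centre so the low band is a contiguous block.
    amp = np.fft.fftshift(amp, axes=(0, 1))
    amp_t = np.fft.fftshift(amp_t, axes=(0, 1))
    h, w = img.shape[:2]
    bh, bw = max(1, int(h * frac)), max(1, int(w * frac))
    ch, cw = h // 2, w // 2
    rows, cols = slice(ch - bh, ch + bh + 1), slice(cw - bw, cw + bw + 1)
    amp[rows, cols] = amp_t[rows, cols]
    return recombine(np.fft.ifftshift(amp, axes=(0, 1)), phase)
```

In all three cases the phase of the source image is left untouched. AM and the FDA-style swap both need a second image, whereas AJ applies one uniform value, so none of them perturbs high frequencies more than low ones.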

A.4 Amplitude Analysis

Pasta relies on the empirical observation that synthetic images have less variance in their high frequency components compared to real images. In this section, we first show how this observation is widespread across a set of syn-to-real shifts over fine-grained frequency band discretizations and then demonstrate how Pasta helps counter this discrepancy.

Fine-grained Band Discretization. For Fig. 2 [Right] in the main paper, the low, mid and high frequency bands are chosen such that the first (lowest) band is the height of the image (includes all spatial frequencies until of the image height), second band is up to the height of the image excluding band 1 frequencies, and the third band considers all the remaining frequencies. To investigate similar trends across fine-grained frequency band discretizations, we split the amplitude spectrum into , , , and frequency bands in the manner described above, and analyze the diversity of these frequency bands across multiple datasets. Across domain shifts (see Fig. 5 and 6) – GTAV, SYNTHIA → Cityscapes, BDD100K, Mapillary, and VisDA-C Syn→Real, we find that (1) for every dataset (whether synthetic or real), diversity decreases as we head towards higher frequency bands and (2) synthetic images exhibit less diversity in high-frequency bands at all considered levels of granularity.
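A per-band diversity measurement of this kind can be sketched as follows. The sketch makes several assumptions that are not taken from the analysis itself: diversity is measured as the across-image standard deviation of the amplitude at each frequency, averaged within a band; band membership is decided by radial distance from the zero frequency; and the band boundaries `edges` are given as fractions of the maximum radius. The function name `band_diversity` is hypothetical.

```python
import numpy as np


def band_diversity(images, edges):
    """Mean across-image std of the amplitude spectrum within each radial band.

    images: array of shape (N, H, W) holding grayscale images.
    edges:  increasing fractions in (0, 1) of the maximum radius; they split
            the centred spectrum into len(edges) + 1 bands (assumed convention).
    """
    images = np.asarray(images, dtype=np.float64)
    _, h, w = images.shape
    spec = np.fft.fftshift(np.fft.fft2(images, axes=(1, 2)), axes=(1, 2))
    std_map = np.abs(spec).std(axis=0)  # per-frequency std across the N images

    # Radial distance of every frequency bin from the zero-frequency centre.
    yy, xx = np.meshgrid(np.arange(h) - h // 2, np.arange(w) - w // 2, indexing="ij")
    radius = np.sqrt(yy ** 2 + xx ** 2)
    bounds = np.concatenate(([0.0], np.asarray(edges) * radius.max(), [np.inf]))

    diversity = []
    for lo, hi in zip(bounds[:-1], bounds[1:]):
        mask = (radius >= lo) & (radius < hi)
        diversity.append(float(std_map[mask].mean()))
    return diversity
```

Calling `band_diversity(images, edges=(1/3, 2/3))` on a stack of images returns three values (low, mid, high); passing more entries in `edges` gives a finer discretization with the same code.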

Increase in amplitude variations post-Pasta. Next, we observe how Pasta affects the diversity of the amplitude spectra on GTAV and VisDA-C. Similar to above, we split the amplitude spectrum into , , , and frequency bands, and we analyze the diversity of these frequency bands before and after applying Pasta to images (see Fig. 7 and 8). For synthetic images from GTAV, when Pasta is applied, we observe that the standard deviation of the amplitude spectra increases from to , to and to for the low, mid and high frequency bands respectively. As expected, we observe the maximum increase for the high-frequency bands.
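The reason the high-frequency bands gain the most variation can be seen from a frequency-proportional perturbation of the following kind. This is a hedged sketch rather than the exact Pasta implementation: it assumes a multiplicative Gaussian jitter with mean 1 whose standard deviation grows as a power of the normalised radial frequency, and the names `pasta_like`, `alpha`, `k` and `beta` (as well as the values used in the usage line) are assumptions made for illustration.

```python
import numpy as np


def pasta_like(img, alpha, k, beta, rng):
    """Perturb the amplitude spectrum of an HxWxC image; the phase is untouched."""
    h, w = img.shape[:2]
    spec = np.fft.fftshift(np.fft.fft2(img, axes=(0, 1)), axes=(0, 1))
    amp, phase = np.abs(spec), np.angle(spec)

    # Normalised radial frequency: 0 at the centre, at most 1 at the corners.
    yy, xx = np.meshgrid(np.arange(h) - h // 2, np.arange(w) - w // 2, indexing="ij")
    radial = 2.0 * np.sqrt((yy ** 2 + xx ** 2) / (h ** 2 + w ** 2))

    # Jitter strength grows with frequency; beta sets a uniform floor.
    sigma = (alpha * radial) ** k + beta
    noise = rng.normal(loc=1.0, scale=sigma[..., None], size=amp.shape)

    spec_aug = np.fft.ifftshift(amp * noise * np.exp(1j * phase), axes=(0, 1))
    # The jittered spectrum is no longer conjugate-symmetric, so keep the real part.
    return np.real(np.fft.ifft2(spec_aug, axes=(0, 1)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.random((64, 64, 3))  # stand-in for a synthetic training image
    augmented = pasta_like(image, alpha=3.0, k=2.0, beta=0.25, rng=rng)
    print(augmented.shape)
```

Because `sigma` is smallest near the zero frequency and largest at the corners of the centred spectrum, repeated application across a dataset adds the most amplitude variance to the high-frequency bands, consistent with the trend reported above.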

A.5 Qualitative Examples

Pasta Augmentation Samples. Fig. 9 includes more examples of images from synthetic datasets (from GTAV and VisDA-C), when RandAugment and Pasta are applied.

Semantic Segmentation Predictions. We include qualitative examples of semantic segmentation predictions on Cityscapes made by Baseline, IBN-Net and ISW (DeepLabv3+, ResNet-50) trained on GTAV (corresponding to Tables 1, 4 and 6 in the main paper) in Fig. 10, 12 and 14 respectively when different augmentations are applied (RandAugment and Pasta). The Cityscapes images we show predictions on were selected randomly. We include RandAugment predictions only for the Baseline. To get a better sense of the kind of mistakes made by different approaches, we also include the difference between the predictions and ground truth segmentation masks in Fig. 11, 13 and 15 (ordered accordingly for easy reference). The difference images show the predicted classes only for pixels where the prediction differs from the ground truth.

A.6 Assets Licenses

The assets used in this work can be grouped into three categories – Datasets, Code Repositories and Dependencies. We include the licenses of each of these assets below.

Datasets. We used the following publicly available datasets in this work – GTAV [61], Cityscapes [19], BDD100K [80], Mapillary [50], Sim10K [34], and VisDA-C [57]. For GTAV, the codebase used to extract data from the original GTAV game is distributed under the MIT license (https://bitbucket.org/visinf/projects-2016-playing-for-data/src/master/). The license agreement for the Cityscapes dataset dictates that the dataset is made freely available to academic and non-academic entities for non-commercial purposes such as academic research, teaching, scientific publications, or personal experimentation and that permission to use the data is granted under certain conditions (https://www.cityscapes-dataset.com/license/). BDD100K is distributed under the BSD-3-Clause license (https://github.com/bdd100k/bdd100k/blob/master/LICENSE). Mapillary images are shared under a CC-BY-SA license, which in short means that anyone can look at and distribute the images, and even modify them a bit, as long as they give attribution (https://help.mapillary.com/hc/en-us/articles/115001770409-Licenses). Densely annotated images for Sim10K are available freely (https://fcav.engin.umich.edu/projects/driving-in-the-matrix) and can only be used for non-commercial applications. The VisDA-C development kit on GitHub does not have a license associated with it, but it does include a Terms of Use, which primarily states that the dataset must be used for non-commercial and educational purposes only (https://github.com/VisionLearningGroup/taskcv-2017-public/tree/master/classification).

Code Repositories. For our experiments, apart from code that we wrote ourselves, we build on top of three existing public repositories – RobustNet (https://github.com/shachoi/RobustNet), MMDetection (https://github.com/open-mmlab/mmdetection) and CSG (https://github.com/NVlabs/CSG). RobustNet is distributed under the BSD-3-Clause license. MMDetection is distributed under Apache License 2.0 (https://github.com/open-mmlab/mmdetection/blob/master/LICENSE). CSG, released by NVIDIA, is released under an NVIDIA-specific license (https://github.com/NVlabs/CSG/blob/main/LICENSE.md).

Dependencies. We use PyTorch [56] as the deep-learning framework for all our experiments. PyTorch, released by Facebook, is distributed under a Facebook-specific license (https://github.com/pytorch/pytorch/blob/master/LICENSE).

References

- [1] Largely lower results on bdd and maphillary · issue #2 · jxhuang0508/fsdr. https://github.com/jxhuang0508/FSDR/issues/2. (FSDR Official Code Repository).

- [2] Martin Arjovsky, Léon Bottou, Ishaan Gulrajani, and David Lopez-Paz. Invariant risk minimization. arXiv preprint arXiv:1907.02893, 2019.

- [3] Yogesh Balaji, Swami Sankaranarayanan, and Rama Chellappa. Metareg: Towards domain generalization using meta-regularization. In Advances in Neural Information Processing Systems, pages 998–1008, 2018.

- [4] Gilles Blanchard, Gyemin Lee, and Clayton Scott. Generalizing from several related classification tasks to a new unlabeled sample. Advances in neural information processing systems, 24, 2011.

- [5] Geoffrey J Burton and Ian R Moorhead. Color and spatial structure in natural scenes. Applied optics, 26(1):157–170, 1987.

- [6] Mathilde Caron, Hugo Touvron, Ishan Misra, Hervé Jégou, Julien Mairal, Piotr Bojanowski, and Armand Joulin. Emerging properties in self-supervised vision transformers. In Proceedings of the International Conference on Computer Vision (ICCV), 2021.

- [7] Junbum Cha, Sanghyuk Chun, Kyungjae Lee, Han-Cheol Cho, Seunghyun Park, Yunsung Lee, and Sungrae Park. Swad: Domain generalization by seeking flat minima. Advances in Neural Information Processing Systems, 34, 2021.

- [8] Prithvijit Chattopadhyay, Yogesh Balaji, and Judy Hoffman. Learning to balance specificity and invariance for in and out of domain generalization. In European Conference on Computer Vision, pages 301–318. Springer, 2020.

- [9] Chaoqi Chen, Zebiao Zheng, Xinghao Ding, Yue Huang, and Qi Dou. Harmonizing transferability and discriminability for adapting object detectors. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 8866–8875, 2020.

- [10] Dian Chen, Dequan Wang, Trevor Darrell, and Sayna Ebrahimi. Contrastive test-time adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 295–305, 2022.

- [11] Guangyao Chen, Peixi Peng, Li Ma, Jia Li, Lin Du, and Yonghong Tian. Amplitude-phase recombination: Rethinking robustness of convolutional neural networks in frequency domain. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 458–467, 2021.

- [12] Kai Chen, Jiaqi Wang, Jiangmiao Pang, Yuhang Cao, Yu Xiong, Xiaoxiao Li, Shuyang Sun, Wansen Feng, Ziwei Liu, Jiarui Xu, et al. Mmdetection: Open mmlab detection toolbox and benchmark. arXiv preprint arXiv:1906.07155, 2019.

- [13] Keyu Chen, Di Zhuang, and J Morris Chang. Discriminative adversarial domain generalization with meta-learning based cross-domain validation. Neurocomputing, 467:418–426, 2022.

- [14] Liang-Chieh Chen, Yukun Zhu, George Papandreou, Florian Schroff, and Hartwig Adam. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European conference on computer vision (ECCV), pages 801–818, 2018.

- [15] Wuyang Chen, Zhiding Yu, Shalini De Mello, Sifei Liu, Jose M. Alvarez, Zhangyang Wang, and Anima Anandkumar. Contrastive syn-to-real generalization. In International Conference on Learning Representations, 2021.

- [16] Wuyang Chen, Zhiding Yu, Zhangyang Wang, and Animashree Anandkumar. Automated synthetic-to-real generalization. In International Conference on Machine Learning, pages 1746–1756. PMLR, 2020.

- [17] Yuhua Chen, Wen Li, Christos Sakaridis, Dengxin Dai, and Luc Van Gool. Domain adaptive faster r-cnn for object detection in the wild. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 3339–3348, 2018.

- [18] Sungha Choi, Sanghun Jung, Huiwon Yun, Joanne T Kim, Seungryong Kim, and Jaegul Choo. Robustnet: Improving domain generalization in urban-scene segmentation via instance selective whitening. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 11580–11590, 2021.

- [19] Marius Cordts, Mohamed Omran, Sebastian Ramos, Timo Rehfeld, Markus Enzweiler, Rodrigo Benenson, Uwe Franke, Stefan Roth, and Bernt Schiele. The cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 3213–3223, 2016.

- [20] Ekin D Cubuk, Barret Zoph, Jonathon Shlens, and Quoc V Le. Randaugment: Practical automated data augmentation with a reduced search space. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, pages 702–703, 2020.

- [21] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, Jakob Uszkoreit, and Neil Houlsby. An image is worth 16x16 words: Transformers for image recognition at scale. In International Conference on Learning Representations, 2021.

- [22] Qi Dou, Daniel Coelho de Castro, Konstantinos Kamnitsas, and Ben Glocker. Domain generalization via model-agnostic learning of semantic features. In Advances in Neural Information Processing Systems, pages 6447–6458, 2019.

- [23] Xinjie Fan, Qifei Wang, Junjie Ke, Feng Yang, Boqing Gong, and Mingyuan Zhou. Adversarially adaptive normalization for single domain generalization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 8208–8217, 2021.

- [24] Muhammad Ghifary, W Bastiaan Kleijn, Mengjie Zhang, and David Balduzzi. Domain generalization for object recognition with multi-task autoencoders. In Proceedings of the IEEE international conference on computer vision, pages 2551–2559, 2015.

- [25] Ishaan Gulrajani and David Lopez-Paz. In search of lost domain generalization. In International Conference on Learning Representations, 2021.

- [26] Bruce C Hansen and Robert F Hess. Structural sparseness and spatial phase alignment in natural scenes. JOSA A, 24(7):1873–1885, 2007.

- [27] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- [28] Dan Hendrycks and Thomas Dietterich. Benchmarking neural network robustness to common corruptions and perturbations. In International Conference on Learning Representations, 2019.

- [29] Lukas Hoyer, Dengxin Dai, and Luc Van Gool. Daformer: Improving network architectures and training strategies for domain-adaptive semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 9924–9935, 2022.

- [30] Lukas Hoyer, Dengxin Dai, and Luc Van Gool. Hrda: Context-aware high-resolution domain-adaptive semantic segmentation. In Computer Vision–ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part XXX, pages 372–391. Springer, 2022.

- [31] Cheng-Chun Hsu, Yi-Hsuan Tsai, Yen-Yu Lin, and Ming-Hsuan Yang. Every pixel matters: Center-aware feature alignment for domain adaptive object detector. In European Conference on Computer Vision, pages 733–748. Springer, 2020.

- [32] Jiaxing Huang, Dayan Guan, Aoran Xiao, and Shijian Lu. Fsdr: Frequency space domain randomization for domain generalization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 6891–6902, 2021.

- [33] Jiaxing Huang, Dayan Guan, Aoran Xiao, and Shijian Lu. Rda: Robust domain adaptation via fourier adversarial attacking. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 8988–8999, 2021.

- [34] Matthew Johnson-Roberson, Charles Barto, Rounak Mehta, Sharath Nittur Sridhar, Karl Rosaen, and Ram Vasudevan. Driving in the matrix: Can virtual worlds replace human-generated annotations for real world tasks? arXiv preprint arXiv:1610.01983, 2016.

- [35] Vaishnavi Khindkar, Chetan Arora, Vineeth N Balasubramanian, Anbumani Subramanian, Rohit Saluja, and CV Jawahar. To miss-attend is to misalign! residual self-attentive feature alignment for adapting object detectors. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pages 3632–3642, 2022.

- [36] Aditya Khosla, Tinghui Zhou, Tomasz Malisiewicz, Alexei A Efros, and Antonio Torralba. Undoing the damage of dataset bias. In European Conference on Computer Vision, pages 158–171. Springer, 2012.

- [37] Namyup Kim, Taeyoung Son, Jaehyun Pahk, Cuiling Lan, Wenjun Zeng, and Suha Kwak. Wedge: Web-image assisted domain generalization for semantic segmentation. In 2023 International Conference on Robotics and Automation (ICRA), 2023.

- [38] Alex Krizhevsky, Ilya Sutskever, and Geoffrey E Hinton. Imagenet classification with deep convolutional neural networks. In Advances in neural information processing systems, pages 1097–1105, 2012.

- [39] Jogendra Nath Kundu, Akshay Kulkarni, Amit Singh, Varun Jampani, and R Venkatesh Babu. Generalize then adapt: Source-free domain adaptive semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 7046–7056, 2021.

- [40] Suhyeon Lee, Hongje Seong, Seongwon Lee, and Euntai Kim. Wildnet: Learning domain generalized semantic segmentation from the wild. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 9936–9946, 2022.

- [41] Da Li, Yongxin Yang, Yi-Zhe Song, and Timothy M Hospedales. Deeper, broader and artier domain generalization. In Proceedings of the IEEE International Conference on Computer Vision, pages 5542–5550, 2017.

- [42] Da Li, Yongxin Yang, Yi-Zhe Song, and Timothy M Hospedales. Learning to generalize: Meta-learning for domain generalization. In Thirty-Second AAAI Conference on Artificial Intelligence, 2018.

- [43] Lei Li, Ke Gao, Juan Cao, Ziyao Huang, Yepeng Weng, Xiaoyue Mi, Zhengze Yu, Xiaoya Li, and Boyang Xia. Progressive domain expansion network for single domain generalization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 224–233, 2021.

- [44] Xiaotong Li, Yongxing Dai, Yixiao Ge, Jun Liu, Ying Shan, and Ling-Yu Duan. Uncertainty modeling for out-of-distribution generalization. In International Conference on Learning Representations, 2022.

- [45] Tsung-Yi Lin, Michael Maire, Serge Belongie, James Hays, Pietro Perona, Deva Ramanan, Piotr Dollár, and C Lawrence Zitnick. Microsoft coco: Common objects in context. In Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part V 13, pages 740–755. Springer, 2014.

- [46] Wei Liu, Andrew Rabinovich, and Alexander C Berg. Parsenet: Looking wider to see better. arXiv preprint arXiv:1506.04579, 2015.

- [47] Maximilian Menke, Thomas Wenzel, and Andreas Schwung. Awada: Attention-weighted adversarial domain adaptation for object detection, 2022.

- [48] Samarth Mishra, Rameswar Panda, Cheng Perng Phoo, Chun-Fu Chen, Leonid Karlinsky, Kate Saenko, Venkatesh Saligrama, and Rogerio S Feris. Task2sim: Towards effective pre-training and transfer from synthetic data. arXiv preprint arXiv:2112.00054, 2021.

- [49] Krikamol Muandet, David Balduzzi, and Bernhard Schölkopf. Domain generalization via invariant feature representation. In International Conference on Machine Learning, pages 10–18, 2013.

- [50] Gerhard Neuhold, Tobias Ollmann, Samuel Rota Bulo, and Peter Kontschieder. The mapillary vistas dataset for semantic understanding of street scenes. In Proceedings of the IEEE international conference on computer vision, pages 4990–4999, 2017.

- [51] Oren Nuriel, Sagie Benaim, and Lior Wolf. Permuted adain: Reducing the bias towards global statistics in image classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2021.

- [52] Henri J Nussbaumer. The fast fourier transform. In Fast Fourier Transform and Convolution Algorithms, pages 80–111. Springer, 1981.

- [53] A.V. Oppenheim and J.S. Lim. The importance of phase in signals. Proceedings of the IEEE, 69(5):529–541, 1981.

- [54] A. Oppenheim, Jae Lim, G. Kopec, and S. Pohlig. Phase in speech and pictures. In ICASSP ’79. IEEE International Conference on Acoustics, Speech, and Signal Processing, volume 4, pages 632–637, 1979.

- [55] Xingang Pan, Ping Luo, Jianping Shi, and Xiaoou Tang. Two at once: Enhancing learning and generalization capacities via ibn-net. In Proceedings of the European Conference on Computer Vision (ECCV), pages 464–479, 2018.

- [56] Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, Alban Desmaison, Andreas Kopf, Edward Yang, Zachary DeVito, Martin Raison, Alykhan Tejani, Sasank Chilamkurthy, Benoit Steiner, Lu Fang, Junjie Bai, and Soumith Chintala. Pytorch: An imperative style, high-performance deep learning library. In H. Wallach, H. Larochelle, A. Beygelzimer, F. d'Alché-Buc, E. Fox, and R. Garnett, editors, Advances in Neural Information Processing Systems 32, pages 8024–8035. Curran Associates, Inc., 2019.

- [57] Xingchao Peng, Ben Usman, Neela Kaushik, Judy Hoffman, Dequan Wang, and Kate Saenko. Visda: The visual domain adaptation challenge. arXiv preprint arXiv:1710.06924, 2017.

- [58] Leon N Piotrowski and Fergus W Campbell. A demonstration of the visual importance and flexibility of spatial-frequency amplitude and phase. Perception, 11(3):337–346, 1982.

- [59] Fengchun Qiao, Long Zhao, and Xi Peng. Learning to learn single domain generalization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 12556–12565, 2020.

- [60] Shaoqing Ren, Kaiming He, Ross Girshick, and Jian Sun. Faster r-cnn: Towards real-time object detection with region proposal networks. Advances in neural information processing systems, 28, 2015.

- [61] Stephan R Richter, Vibhav Vineet, Stefan Roth, and Vladlen Koltun. Playing for data: Ground truth from computer games. In European conference on computer vision, pages 102–118. Springer, 2016.

- [62] German Ros, Laura Sellart, Joanna Materzynska, David Vazquez, and Antonio M Lopez. The synthia dataset: A large collection of synthetic images for semantic segmentation of urban scenes. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 3234–3243, 2016.

- [63] Kuniaki Saito, Yoshitaka Ushiku, Tatsuya Harada, and Kate Saenko. Adversarial dropout regularization. In International Conference on Learning Representations, 2018.

- [64] Mark Sandler, Andrew Howard, Menglong Zhu, Andrey Zhmoginov, and Liang-Chieh Chen. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 4510–4520, 2018.

- [65] Swami Sankaranarayanan, Yogesh Balaji, Carlos D Castillo, and Rama Chellappa. Generate to adapt: Aligning domains using generative adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018.

- [66] Yuge Shi, Jeffrey Seely, Philip Torr, Siddharth N, Awni Hannun, Nicolas Usunier, and Gabriel Synnaeve. Gradient matching for domain generalization. In International Conference on Learning Representations, 2022.

- [67] DJ Tolhurst, Yv Tadmor, and Tang Chao. Amplitude spectra of natural images. Ophthalmic and Physiological Optics, 12(2):229–232, 1992.

- [68] V. S. Vibashan, Vikram Gupta, Poojan Oza, Vishwanath A Sindagi, and Vishal M Patel. Mega-cda: Memory guided attention for category-aware unsupervised domain adaptive object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 4514–4524, 2021.

- [69] Bailin Wang, Mirella Lapata, and Ivan Titov. Meta-learning for domain generalization in semantic parsing. arXiv preprint arXiv:2010.11988, 2020.

- [70] Jingye Wang, Ruoyi Du, Dongliang Chang, and Zhanyu Ma. Domain generalization via frequency-based feature disentanglement and interaction. arXiv preprint arXiv:2201.08029, 2022.

- [71] Xin Wang, Thomas E Huang, Benlin Liu, Fisher Yu, Xiaolong Wang, Joseph E Gonzalez, and Trevor Darrell. Robust object detection via instance-level temporal cycle confusion. arXiv preprint arXiv:2104.08381, 2021.

- [72] Zijian Wang, Yadan Luo, Ruihong Qiu, Zi Huang, and Mahsa Baktashmotlagh. Learning to diversify for single domain generalization. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 834–843, 2021.

- [73] Enze Xie, Wenhai Wang, Zhiding Yu, Anima Anandkumar, Jose M Alvarez, and Ping Luo. Segformer: Simple and efficient design for semantic segmentation with transformers. Advances in Neural Information Processing Systems, 34:12077–12090, 2021.

- [74] Qi Xu, Liang Yao, Zhengkai Jiang, Guannan Jiang, Wenqing Chu, Wenhui Han, Wei Zhang, Chengjie Wang, and Ying Tai. Dirl: Domain-invariant representation learning for generalizable semantic segmentation. Proceedings of the AAAI Conference on Artificial Intelligence, 36(3):2884–2892, Jun. 2022.

- [75] Qinwei Xu, Ruipeng Zhang, Ya Zhang, Yanfeng Wang, and Qi Tian. A fourier-based framework for domain generalization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 14383–14392, 2021.

- [76] Yanchao Yang, Dong Lao, Ganesh Sundaramoorthi, and Stefano Soatto. Phase consistent ecological domain adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 9011–9020, 2020.

- [77] Yanchao Yang and Stefano Soatto. Fda: Fourier domain adaptation for semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 4085–4095, 2020.

- [78] Yijun Yang, Shujun Wang, Pheng-Ann Heng, and Lequan Yu. Hcdg: A hierarchical consistency framework for domain generalization on medical image segmentation. arXiv preprint arXiv:2109.05742, 2021.

- [79] Dong Yin, Raphael Gontijo Lopes, Jon Shlens, Ekin Dogus Cubuk, and Justin Gilmer. A fourier perspective on model robustness in computer vision. Advances in Neural Information Processing Systems, 32, 2019.

- [80] Fisher Yu, Haofeng Chen, Xin Wang, Wenqi Xian, Yingying Chen, Fangchen Liu, Vashisht Madhavan, and Trevor Darrell. Bdd100k: A diverse driving dataset for heterogeneous multitask learning. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 2636–2645, 2020.

- [81] Xiangyu Yue, Yang Zhang, Sicheng Zhao, Alberto Sangiovanni-Vincentelli, Kurt Keutzer, and Boqing Gong. Domain randomization and pyramid consistency: Simulation-to-real generalization without accessing target domain data. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 2100–2110, 2019.

- [82] Yuyang Zhao, Zhun Zhong, Na Zhao, Nicu Sebe, and Gim Hee Lee. Style-hallucinated dual consistency learning for domain generalized semantic segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), 2022.

- [83] Kaiyang Zhou, Yongxin Yang, Yu Qiao, and Tao Xiang. Domain generalization with mixstyle. In International Conference on Learning Representations, 2021.