Partial-View Object View Synthesis via Filtering Inversion

Abstract

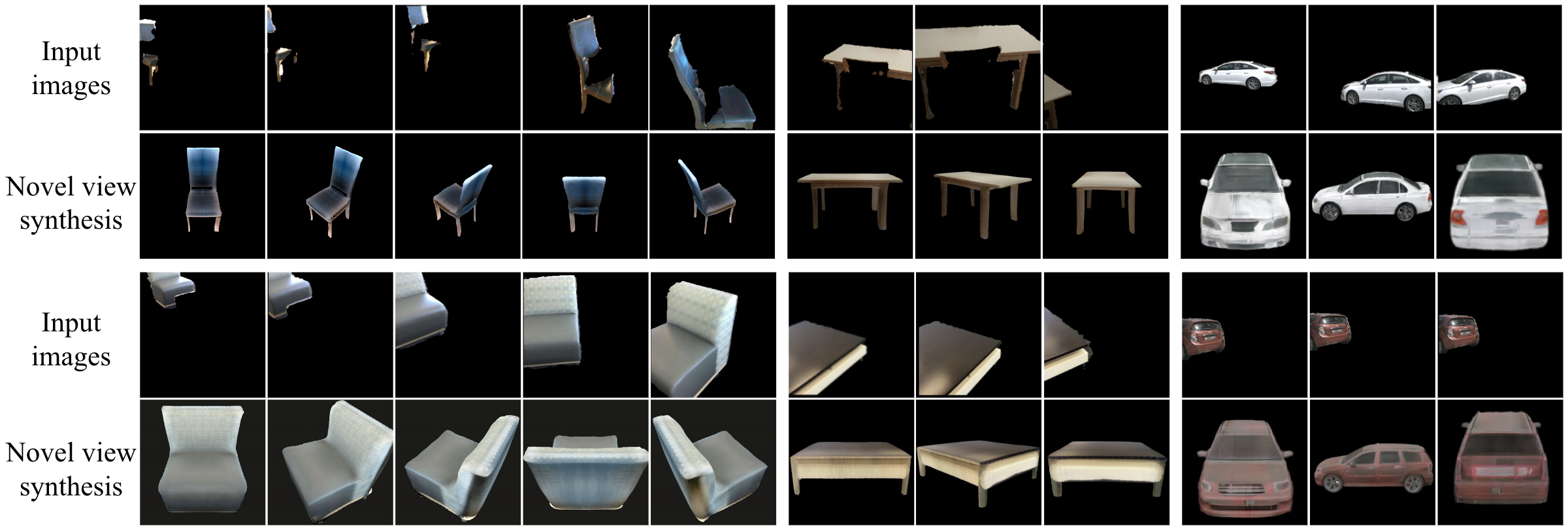

We propose Filtering Inversion (FINV), a learning framework and optimization process that predicts a renderable 3D object representation from one or few partial views. FINV addresses the challenge of synthesizing novel views of objects from partial observations, spanning cases where the object is not entirely in view, is partially occluded, or is only observed from similar views. To achieve this, FINV learns shape priors by training a 3D generative model. At inference, given one or more views of a novel real-world object, FINV first finds a set of latent codes for the object by inverting the generative model from multiple initial seeds. Maintaining the set of latent codes, FINV filters and resamples them after receiving each new observation, akin to particle filtering. The generator is then finetuned for each latent code on the available views in order to adapt to novel objects. We show that FINV successfully synthesizes novel views of real-world objects (e.g., chairs, tables, and cars), even if the generative prior is trained only on synthetic objects. The ability to address the sim-to-real problem allows FINV to be used for object categories without real-world datasets. FINV achieves state-of-the-art performance on multiple real-world datasets, recovers object shape and texture from partial and sparse views, is robust to occlusion, and is able to incrementally improves its representation with more observations.

1 Introduction

We study the problem of synthesizing novel views of an object from a sparse set of challenging partial views, in which some parts of the object might not be seen by any view (see Figure 1). Sparse-view novel view synthesis has seen recent advancements thanks to neural rendering and learning (object) priors [52, 25, 28, 45]. At a high level, these methods produce a neural scene description from few views (1–3) using prior knowledge, then use the scene description to render images from different perspectives. While these methods have shown impressive results, they fail with partial input views where the object is occluded or not fully visible, as it is often the case in practical applications such as robotics. Additionally, they do not address any domain gap between the training dataset and test-time observations.

To address these issues, we propose Filtering Inversion (FINV), a method that learns a category-level object shape and appearance prior from a large variety of instances. Inspired by pivotal tuning [35], at test time we perform a novel two-stage optimization process to first retrieve object latent representations from the generative model and then fine-tune the generator for each latent code. To combat the instability of GAN inversion, FINV incorporates a novel filtering and resampling process of latent codes, akin to particle filtering. As a result, we can handle situations where the object of interest is only partially visible, occluded, or viewed from a limited number of similar perspectives (see Fig. 2). Unlike previous methods, such as pixelNeRF [52] and AutoRF [28], our method generates a complete 3D mesh that can be used in classical rendering pipelines.

More specifically, FINV uses a 3D GAN model called GET3D [14] to learn the object prior. We train GET3D on a dataset of objects of the target category and then use it in the real world. We demonstrate that FINV can leverage the ever-growing collection of synthetic data [6] for learning object priors. At test time, our filtering inversion process can close the sim-to-real gap [41] and generalize to novel real-world object instances.

Our contributions can be summarized as follows:

- •

-

•

We introduce filtering inversion, a novel filtering process that facilitates automatic search in the latent space to overcome the instability in the inversion process.

-

•

We show state-of-the-art results on several real-world datasets (including tables, chairs, and cars), demonstrating that our two-phase method is able to synthesize new views of objects in the real world, without having to train on a real-world dataset. We conduct ablation studies showing that our filtering process contribute to the final performance.

2 Related Work

Sparse-View Novel View Synthesis.

Table 1 shows closely related work, focusing on features that are relevant to solving sparse-view novel view synthesis from partial data. Optimization methods like NeRS [53] and DS [17] do not learn priors and assume a full coverage of the object. These methods, like pixelNeRF [52], are not designed for partial-view scenarios. AutoRF [28] is the most closely related work to ours, but it does not provide the ability to process input data sequentially, does not address the sim-to-real gap, and only tests on one category (cars). Diffusion-based optimization from an image prior can also be used for object novel view synthesis [25] (at the time of this submission, the code was not yet available to conduct a fair comparison.). It has been observed that since diffusion-based methods use image instead of 3D priors, they sometimes produce 3D inconsistent views, e.g., Janus-faced animals with faces on both front and back sides [26, 25].

Inverse object estimation via generative object priors.

Recent 3D generative models leverage a hybrid framework that considers both explicit and implicit object representations [14, 4, 30, 13, 54, 40]. They have shown impressive performance in producing high-quality geometry and detailed texture information. Prior works have also shown that, through test-time optimization, the learned object/scene priors can facilitate inference of the camera pose, shape, and texture [31, 37, 20, 51, 28]. However, these works typically rely on labeled real-world data or have a time-consuming optimization process. The efficacy of many of these methods in real-world scenarios involving partial observations is unclear and will be discussed in this paper. For more discussion on related works, refer to section F in the supplementary materials.

| NeRF | NeRS | DS | pNeRF | AutoRF | FINV | |

|---|---|---|---|---|---|---|

| expected num. images | 100+ | 8–16 | 4–12 | 1–3 | 1 | 1–5 |

| handles single view | ✗ | ✗ | ✗ | ✓ | ✓ | ✓ |

| handles occlusion | ✗ | ✗ | ✗ | ✗ | ✓ | ✓ |

| handles not entirely in view | ✗ | ✗ | ✗ | ✗ | ✓ | ✓ |

| learns (object) prior | ✗ | ✗ | ✗ | ✓ | ✓ | ✓ |

| closes sim-to-real gap | – | – | – | ✓ | ✗ | ✓ |

| produces mesh output∗ | ✗ | ✓ | ✓ | ✗ | ✗ | ✓ |

3 Method

In this section, we define the problem and introduce our proposed Filtering Inversion (FINV) framework. The input to our system is a stream of masked RGB observations of an object in a scene, with camera poses. We denote by and the input image and its corresponding object mask at time , respectively. and denote the set of images and object masks observed up to time . In practice, masks and poses can be estimated by off-the-shelf segmentation [33] and 3D pose estimation and detection models [1]. More recently methods like [16] could be used to obtain scene information needed for our method. The task is to, at each time-step , synthesize views of the object that have not been observed up to time using RGB images and object masks and . In other words, we evaluate the model’s ability to do novel-view synthesis on the object at each time-step.

Our method adapts GAN priors to create a 3D representation of real-world objects. For each object category, we train a GAN that can generate 3D representations of objects in the category. More specifically, we leverage large-scale synthetic data [6] even though that leads to the training and testing data coming from two distinct domains [41].

Given a pre-trained generative object prior, FINV uses a two-phase procedure to reconstruct novel objects: a filtering inversion phase, followed by a refinement phase (see Fig. 3). The filtering inversion phase serves as a way to iteratively find a latent code that reconstructs the target observations at the given viewpoints. The refinement phase serves to fit the observations more accurately by fine-tuning the generator model itself while holding the latent code fixed. This two-step process is inspired by pivotal tuning inversion [35], which is proposed for latent-space image editing. This process ensures that, while adapting the model to reconstruct objects in the wild, the distortion of the learned latent space of the 3D GANs is minimized, helping to ameliorate the sim-to-real problem.

In the following, we first introduce the 3D GAN that we use as our backbone generative model. We then introduce FINV, our proposed two-phase method that allows us to reconstruct real-world objects efficiently and incrementally.

3.1 Pretraining Stage

FINV leverages a pre-trained 3D GAN generator ; we use GET3D [14] as the backbone GAN. GET3D is a 3D GAN that disentangles geometry and texture. The geometry branch of the GET3D generator differentiably outputs a surface mesh of arbitrary topology, and the texture branch produces a texture field that can be queried at the surface points to produce texture maps. In our ablation studies, we also use EG3D [5] as the backbone and discuss both backbones’ advantages and disadvantages.

At a high level, GET3D samples two input vectors from a Gaussian distribution and . Then, following StyleGAN [23, 21, 22], GET3D uses non-linear mapping networks and to map and , respectively, to intermediate latent vectors , . These intermediate latent vectors are further used to produce a textured mesh. We use to denote the rendered binary object mask, where represents the parameters of the geometry branch. Letting , we use to denote the rendered RGB image, where represents the parameters of the texture branch. The mask only depends on the geometry branch, and the final rendering depends on both the geometry and texture branches. Note that the image generation also depends on camera intrinsics and extrinsics, which we omit from the above notation for simplicity.

3.2 Filtering Inversion (Phase I)

Figure 4 shows a high-level workflow of this phase. Given a pre-trained generator , a set of RGB observations , and their corresponding object masks up to the current time , we aim to optimize a randomly initialized latent code (in practice, we use a set of latent codes) that best encodes the observed object. In greater detail, we keep the parameters of the generator fixed and we optimize with the following objective:

| (1) |

is the LPIPS metric [55], and is binary cross entropy in our experiments. We use to denote the latent code obtained after the inversion process.

However, the process of inversion can be unstable in practice due to the misalignment between the training and real-world distributions [38]. For example, in our experiments, we train the generator on synthetic object models, where this problem is particularly pronounced. To combat this issue, we propose filtering inversion, a process that combines inversion with a filtering process akin to particle filtering.

The filtering process is described as follows. At time , we randomly initialize a set of latent codes and invert them with Eq. (1) in parallel, taking image as the reference. At every following time step , we observe a new image and perform a filtering and optimization step. In the filtering step, we filter the latent codes, keeping only those that meet the following criterion:

| (2) |

where is the Percentile Ranking that gives a percentage score of the loss out of all the latent codes, and is a hyper-parameter which we set to in our experiments. In other words, we keep the top latent codes that can better reconstruct the object at all views, including the newly observed view . Next, we proceed to the optimization step, where we again apply Eq. (1) to update all the latent codes on all the observations up to and including the new observation .

We then proceed to time and repeat the filtering and optimization steps. This filtering and optimization process is executed repeatedly to discard latent codes stuck in local minima and keep improving our representation of the object with every new observation.

3.3 Refinement (Phase II)

This phase aims to refine the representations by fine-tuning the generator to reconstruct objects while maintaining the essential priors learned by the generative model. Since our goal is to reconstruct objects in the wild, we will inevitably run into instances with geometry or texture that have not been observed before in the training set. In such cases, simply having the filtered inversion phase (phase I) will be insufficient, as there may not exist a latent code in the learned latent space that enables the generator to produce the required texture and geometry. If we simply use a random or mean latent code and fine-tune the entire generator around it, the representation tends to overfit to reconstructing the observed views and produces highly-distorted novel views [35]. Instead, we fine-tune the generator while fixing , which can be used to generate a textured mesh that is similar to the observed object in real life. In this way, we expect to be able to minimize the “distortion” of the originally well-behaved latent space.

One key insight here is that geometry and texture are of different complexities for different object categories. The disentangled architecture of GET3D allows us to fine-tune the geometry and texture of the object separately and with different amounts. For example, cars have fewer geometric variations than texture variations, while wooden tables come in many different shapes but have more uniform textures. Thus, we can impose more regularization on the training of geometry to avoid overfitting to the imperfect and partial observations and distorting the learned latent space.

Let be a latent code obtained after phase I inversion at time . We fine-tune the generator using the following objectives:

| (3) | ||||

| (4) |

where is the MSE calculated on pixels and is a coefficient. Recall that and represent the parameters for the generator’s geometry and texture branch, respectively. By having the geometry and texture branch’s objectives disentangled, we can optimize and to different degrees. In our experiments, we observe that reconstructing geometry tends to be easier than texture and we simply use early-stopping as a regularization on Eq. (3) during the refinement phase.

4 Experiments

We conducted extensive experiments on novel view synthesis to evaluate our method. We first compare our method with recent competitive baselines and then conduct an ablation study to understand the effectiveness of our design choices. Additionally, we evaluate our model on 3D shape reconstruction. Finally, we conduct a deeper analysis to understand the relation between performance and the amount of rotation between the source and target view.

4.1 Setup

All methods are evaluated on real-world images. We assume a sequential observation of RGB images and instance segmentation masks from a camera moving in the scene relative to the object. We evaluate the model with either the first 1, 3, or 5 frames as input and report reconstruction quality metrics on frames 6 and onward. We report reconstruction quality metrics given the first 1, 3, and 5 frames of the object. This allows us to assess the ability of each method to incorporate information from multiple frames in an online setting. Note that we do not use depth information but assume known camera poses and intrinsic. In this paper, methods compared within the same table are provided with the same estimated poses and segmentations.

Metrics.

For novel view synthesis, we report PSNR, SSIM [46], and LPIPS [55] reconstruction quality metrics. PSNR measures reconstruction quality at the pixel level, while SSIM and LPIPS take into account semantic perceptual similarity. For the additional experiment on shape reconstruction, we use Chamfer distance and F1 score, following [15].

Datasets.

We evaluate all methods on three categories of objects: Chair [10], Table [10], and Car [3]. For all categories, we first pre-train each method on ShapeNet [6], a dataset of synthetic objects, to obtain a category-level prior. For Chair and Table, we evaluate models on ScanNet [10], a dataset of real-world indoor scene scans. For Car, we evaluate models on NuScenes [3], a driving dataset with 3D detection and tracking annotations. For more details on these datasets and the reasons behind their selection, please refer to Section B of the supplementary material.

| ScanNet Chairs | ScanNet Tables | |||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| PSNR | SSIM | LPIPS | PSNR | SSIM | LPIPS | |||||||||||||

| # views | 1 | 3 | 5 | 1 | 3 | 5 | 1 | 3 | 5 | 1 | 3 | 5 | 1 | 3 | 5 | 1 | 3 | 5 |

| Instant-NGP | 21.36 | 22.64 | 23.23 | 0.816 | 0.853 | 0.888 | 0.261 | 0.211 | 0.177 | 15.78 | 16.63 | 17.05 | 0.583 | 0.744 | 0.770 | 0.469 | 0.394 | 0.337 |

| pixelNeRF | 21.47 | 21.58 | 21.96 | 0.687 | 0.774 | 0.847 | 0.284 | 0.234 | 0.187 | 14.83 | 16.59 | 16.96 | 0.592 | 0.699 | 0.731 | 0.415 | 0.325 | 0.306 |

| IBRNet | 22.96 | 23.45 | 23.95 | 0.790 | 0.817 | 0.848 | 0.215 | 0.201 | 0.178 | 15.11 | 16.13 | 16.58 | 0.797 | 0.809 | 0.814 | 0.283 | 0.267 | 0.263 |

| IBRNet + test time opt. | 22.96 | 23.32 | 23.47 | 0.790 | 0.835 | 0.853 | 0.215 | 0.193 | 0.186 | 15.11 | 16.14 | 16.37 | 0.797 | 0.815 | 0.825 | 0.283 | 0.268 | 0.262 |

| EG3D + PTI | 20.89 | 22.97 | 24.49 | 0.738 | 0.843 | 0.858 | 0.199 | 0.123 | 0.098 | 17.92 | 19.44 | 19.86 | 0.769 | 0.855 | 0.852 | 0.240 | 0.156 | 0.149 |

| GET3D + PTI | 23.24 | 23.62 | 24.29 | 0.872 | 0.874 | 0.874 | 0.116 | 0.111 | 0.106 | 19.21 | 19.51 | 19.93 | 0.909 | 0.921 | 0.924 | 0.163 | 0.138 | 0.130 |

| AutoRF | 22.44 | 22.80 | 22.94 | 0.798 | 0.812 | 0.817 | 0.220 | 0.210 | 0.209 | 13.90 | 14.23 | 14.39 | 0.525 | 0.541 | 0.556 | 0.495 | 0.476 | 0.470 |

| FINV-GET3D (Ours) | 24.61 | 24.96 | 26.23 | 0.937 | 0.944 | 0.950 | 0.102 | 0.089 | 0.082 | 19.26 | 19.84 | 20.48 | 0.907 | 0.925 | 0.930 | 0.163 | 0.131 | 0.120 |

Baselines.

For partial-view novel view synthesis, we compare FINV against Instant-NGP [29], pixelNeRF [52], IBRNet [45], IBRNet fine-tuned during test time, AutoRF [28], and 3D GANs with Pivotal Tuning Inversion (EG3D+PTI and GET3D+PTI) [5, 14, 35]. We use open-source implementations of each method when available, or private implementations shared by the authors. Note that AutoRF is originally proposed for reconstruction from one single view, but it supports multiple input views as well, which we find to improve reconstruction quality. Of these, Instant-NGP requires no pretraining; PixelNeRF, IBRNet, AutoRF, the EG3D models used in the baseline EG3D+PTI, and the GET3D models used in our method are pretrained on the synthetic dataset mentioned above. uses the same architecture as AutoRF, but is pretrained on the NuScenes Car dataset with in-domain data in the same way as the original paper [28].

For shape reconstruction experiments, we compare our method against two state-of-the-art sparse-view reconstruction methods (i.e., NeuS [44] and NeRS [53]), additional to 3D GANs with Pivotal Tuning Inversion. Most systems in the above novel view synthesis experiments are either inadequate for the purpose of obtaining shapes/meshes or lack an implementation for extracting them.

Implementation Details.

We run our method on a Linux machine with NVIDIA A40 GPUs. In our experiments, we run 350 gradient update steps in the filtering inversion phase (Phase I). In the refinement phase, we run 500 gradient update steps. We ensure that our test-time optimized baselines (Instant-NGP and IBRNet) are optimized with at least the same compute time used for our model. Refer to section C for runtime analysis of our method.

4.2 Results

Table 2 shows the results on ScanNet [10] chairs and tables, showing the PSNR, SSIM [46], and LPIPS [55] metrics for 1, 3 and 5 input views. Our method outperforms all prior work across all metrics for all numbers of input views. For example, on ScanNet Chairs with five input views, we outperform the most competitive prior work in each case by 7.1%, 7.0%, and 16.3% relative improvement in PSNR, SSIM, and LPIPS, respectively. In the most challenging single-view setting, this improvement increases to 7.2%, 17.4%, and 48.7%, respectively, demonstrating the superior ability of our method to effectively use the learned prior to reconstruct objects from partial views. The results on ScanNet Table data repeat this finding.

Table 3 shows the results on NuScenes cars. As before, our method consistently outperforms all other methods that were pre-trained on synthetic ShapeNet data across all numbers of input views. Specifically, we show 2.9%, 6.4%, and 8.3% relative improvement in PSNR, SSIM, and LPIPS, respectively, compared to the most competitive baseline in each case in the single-view setting. With 5 views, we still outperform all baselines in SSIM and LPIPS; however, Instant-NGP reports higher PSNR. Since LPIPS is the metric that more accurately reflects human perception [55, 52], this points to the fact that despite not reconstructing cars the best on a pixel level, our method still gives semantically better reconstructions on target views. We explore this finding deeper in Section 4.3, showing that our model actually achieves a better overall reconstruction. Surprisingly, despite using only synthetic training data, we achieve comparable results to (bottom row), which was trained on in-domain real-world images taken from the same distribution as the testing images, in all metrics except multi-view LPIPS. This demonstrates the effectiveness of our method in bridging the substantial sim-to-real gap.

| PSNR | SSIM | LPIPS | |||||||

| # views | 1 | 3 | 5 | 1 | 3 | 5 | 1 | 3 | 5 |

| Instant-NGP | 14.56 | 15.57 | 17.19 | 0.580 | 0.623 | 0.647 | 0.546 | 0.519 | 0.489 |

| pre-trained on synthetic data | |||||||||

| pixelNeRF | 12.23 | 13.06 | 13.27 | 0.481 | 0.515 | 0.519 | 0.786 | 0.762 | 0.649 |

| IBRNet | 12.12 | 13.41 | 14.81 | 0.537 | 0.577 | 0.599 | 0.663 | 0.632 | 0.614 |

| IBRNet + test time opt. | 12.12 | 12.89 | 15.60 | 0.537 | 0.517 | 0.636 | 0.663 | 0.646 | 0.566 |

| EG3D + PTI | 14.33 | 15.43 | 16.50 | 0.606 | 0.647 | 0.670 | 0.564 | 0.517 | 0.487 |

| GET3D + PTI | 13.77 | 15.42 | 16.03 | 0.602 | 0.662 | 0.682 | 0.549 | 0.485 | 0.457 |

| AutoRF | 11.81 | 12.00 | 12.10 | 0.546 | 0.550 | 0.552 | 0.778 | 0.775 | 0.775 |

| FINV-EG3D (Ours) | 14.57 | 15.69 | 16.73 | 0.622 | 0.657 | 0.678 | 0.550 | 0.507 | 0.477 |

| FINV-GET3D (Ours) | 14.75 | 15.66 | 16.56 | 0.645 | 0.676 | 0.699 | 0.517 | 0.472 | 0.438 |

| pre-trained on real-world cars | |||||||||

| 15.11 | 15.76 | 16.32 | 0.663 | 0.677 | 0.698 | 0.656 | 0.642 | 0.698 | |

| Chairs+Tables | Chamfer L2 | F1 Score | ||||

|---|---|---|---|---|---|---|

| # views | 1 | 3 | 5 | 1 | 3 | 5 |

| NeuS | 0.138 | 0.091 | 0.100 | 0.323 | 0.290 | 0.376 |

| NeRS | 0.157 | 0.076 | 0.095 | 0.438 | 0.452 | 0.448 |

| EG3D + PTI | 0.068 | 0.130 | 0.015 | 0.241 | 0.237 | 0.278 |

| GET3D + PTI | 0.036 | 0.010 | 0.015 | 0.346 | 0.287 | 0.309 |

| FINV-EG3D | 0.065 | 0.122 | 0.132 | 0.456 | 0.654 | 0.666 |

| FINV-GET3D | 0.023 | 0.009 | 0.009 | 0.543 | 0.676 | 0.675 |

Figures 6 and 7 present qualitative results, highlighting the challenge of our setting: objects are often partially observed and not in full view. Although the baselines are able to generate reasonable reconstructions when the target viewpoint is close to the input view, they fail to render unseen parts of the object from viewpoints far from the input. We hypothesize that this is because pixelNeRF and IBRNet learn local priors since they are optimized to render from local pixel features. Although IBRNet uses a ray transformer so that density predictions on individual rays are coherent, it still learns local priors since the rays are organized in patches. In contrast, we limit our method to update within the latent space of a learned generative model. The learned structure of the latent space serves as regularizer and imposes a “global prior”. Intuitively, during the filtered inversion phase, we are asking the model to answer the question: “given these observations (constraints), what would the entire object look like?”. Subsequently, in the refinement phase, we ask the model to better adapt its answer to the above question to real-world observations. This two-stage process results in more globally coherent reconstructions. AutoRF also imposes a global prior, but it uses an encoder network instead of filtered inversion to compute the latent codes. The encoder overfits to its training distribution in the source domain, yielding simulated-looking renderings. Successful results with AutoRF are only achieved when trained on target-domain data (). Figure 5 shows additional qualitative results.

Shape Reconstruction Results.

We additionally evaluate FINV’s shape reconstruction quality by calculating the Chamfer L2 Distance and F1 Score between the output mesh with the ground truth object point clouds in ScanNet. From Table 4, we observe that FINV outperforms methods such as NeuS and NeRS due to the effective use of object priors. Due to the use of Filtered Inversion, FINV produces more precise surfaces when compared with EG3D+PTI which leverages similar object priors.

4.3 Ablations and Analyses

In addition to the main experiments shown above, we perform additional experiments to answer the following questions: Q1: Do FINV’s filtering process in the filtered inversion phase and the use of the refinement phase boost performance? Q2: How does the choice of a mesh-based backbone GAN (e.g., GET3D) compare with radiance field-based backbone GANs (e.g., EG3D)? Q3: How does FINV handle rotational deltas between input and target views compared to baselines?

| PSNR | ||||||

|---|---|---|---|---|---|---|

| # views | 1 | 3 | 5 | |||

| EG3D inv. | 17.15 0.56 | 17.37 0.48 | 18.13 0.35 | |||

| EG3D inv. w/ filt. | 17.15 0.56 | 20.57 0.32 | 21.19 0.30 | |||

| EG3D (refine. only) | 18.12 0.52 | 18.80 0.34 | 19.23 0.37 | |||

| EG3D inv. w/ filt. + refine. | 20.35 0.53 | 22.05 0.14 | 23.26 0.18 | |||

| GET3D inv. | 21.87 0.36 | 22.33 0.20 | 22.66 0.16 | |||

| GET3D inv. w/ filt. | 21.87 0.36 | 23.18 0.09 | 23.68 0.04 | |||

| GET3D (refine. only) | 19.40 0.50 | 20.07 0.44 | 20.43 0.49 | |||

| GET3D inv. w/ filt. + refine. | 22.71 0.27 | 23.19 0.08 | 24.07 0.06 | |||

| SSIM | LPIPS | |||||

| # views | 1 | 3 | 5 | 1 | 3 | 5 |

| EG3D inv. | 0.841 | 0.862 | 0.890 | 0.251 | 0.233 | 0.222 |

| EG3D inv. w/ filt. | 0.841 | 0.868 | 0.902 | 0.251 | 0.182 | 0.169 |

| EG3D (refine. only) | 0.889 | 0.900 | 0.905 | 0.187 | 0.169 | 0.161 |

| EG3D inv. w/ filt. + refine. | 0.793 | 0.889 | 0.885 | 0.193 | 0.119 | 0.104 |

| GET3D inv. | 0.922 | 0.932 | 0.937 | 0.141 | 0.130 | 0.124 |

| GET3D inv. w/ filt. | 0.922 | 0.941 | 0.944 | 0.141 | 0.115 | 0.108 |

| GET3D (refine. only) | 0.913 | 0.926 | 0.932 | 0.150 | 0.129 | 0.119 |

| GET3D inv. w/ filt. + refine. | 0.927 | 0.938 | 0.944 | 0.124 | 0.105 | 0.096 |

Comparison with Ablated FINV.

To answer Q1, we compare FINV (using both GET3D and EG3D as the backbone) with and without the filtering process, and with and without the refinement phase. The results in Table 5 show that the filtering process boosts overall performance. Qualitatively, we can see in Figure 8 that the filtered inversion phase fits the geometry and a rough texture from the source view. Then, in the refinement phase, the entire generator is fine-tuned to the input image to better fit the texture and also geometry.

GET3D versus EG3D.

FINV assumes GET3D as the backbone. To answer Q2, we adopt FINV to use EG3D as the backbone. The results in Table 5 show that using GET3D as the backbone achieves the highest performance across all metrics. From Figure 8, we also observe that using GET3D yields a better reconstruction of the object during the filtered inversion phase (Phase I) compared to using EG3D, likely because the geometry and texture are disentangled in GET3D. We also find that EG3D has a higher variance in performance across multiple runs when compared with GET3D. This suggests that conducting GAN inversion on GET3D is more stable than EG3D. Empirically, we observe that filtered inversion with the EG3D backbone has a higher chance of converging to a latent code that produces highly distorted reconstruction. Due to this instability in EG3D’s inversion process, it benefits from the filtering process more than GET3D.

Rotational Delta Between Input and Target Views.

To answer Q3, we plot LPIPS of various models against the rotational difference between the input and target views in Figure 9 (refer to section D in the supplementary material for plots of PSNR). We fit a line to the data points for each method. We find that Instant-NGP performs well on single-shot examples when the source and target view are extremely close to each other. However, as the target view gets further away from the source view, the performance of Instant-NGP’s reconstruction degrades significantly as it does not have a prior on the geometry and texture of the object. We can also observe that FINV renders the target views consistently better than Instant-NGP [29] and IBRNet [28] when the source and target views are farther away.

5 Conclusion

In this paper we proposed Filtering Inversion (FINV), a framework and optimization process that predicts a renderable 3D object representation from one or few partial views. Through our experiments, we have shown that our method can be successfully applied to a variety of settings. We addressed shortcomings of previous works that are unable, when provided with partial views of the object of interest, to “hallucinate” unseen parts. In contrast, our method produces complete novel views of the object. Our method generates a mesh without postprocessing, and it can process images sequentially, producing increasingly better results as more data becomes available.

References

- Ahmadyan et al. [2021] Adel Ahmadyan, Liangkai Zhang, Artsiom Ablavatski, Jianing Wei, and Matthias Grundmann. Objectron: A large scale dataset of object-centric videos in the wild with pose annotations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 7822–7831, 2021.

- Avetisyan et al. [2019] Armen Avetisyan, Manuel Dahnert, Angela Dai, Manolis Savva, Angel X Chang, and Matthias Nießner. Scan2cad: Learning cad model alignment in rgb-d scans. In Proceedings of the IEEE/CVF Conference on computer vision and pattern recognition, pages 2614–2623, 2019.

- Caesar et al. [2020] Holger Caesar, Varun Bankiti, Alex H Lang, Sourabh Vora, Venice Erin Liong, Qiang Xu, Anush Krishnan, Yu Pan, Giancarlo Baldan, and Oscar Beijbom. nuScenes: A multimodal dataset for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 11621–11631, 2020.

- Chan et al. [2022a] Eric R Chan, Connor Z Lin, Matthew A Chan, Koki Nagano, Boxiao Pan, Shalini De Mello, Orazio Gallo, Leonidas J Guibas, Jonathan Tremblay, Sameh Khamis, et al. Efficient geometry-aware 3D generative adversarial networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 16123–16133, 2022a.

- Chan et al. [2022b] Eric R. Chan, Connor Z. Lin, Matthew A. Chan, Koki Nagano, Boxiao Pan, Shalini De Mello, Orazio Gallo, Leonidas Guibas, Jonathan Tremblay, Sameh Khamis, Tero Karras, and Gordon Wetzstein. Efficient geometry-aware 3D generative adversarial networks. In CVPR, 2022b.

- Chang et al. [2015] Angel X Chang, Thomas Funkhouser, Leonidas Guibas, Pat Hanrahan, Qixing Huang, Zimo Li, Silvio Savarese, Manolis Savva, Shuran Song, Hao Su, et al. ShapeNet: An information-rich 3D model repository. arXiv preprint arXiv:1512.03012, 2015.

- Chen et al. [2021a] Anpei Chen, Zexiang Xu, Fuqiang Zhao, Xiaoshuai Zhang, Fanbo Xiang, Jingyi Yu, and Hao Su. MVSNeRF: Fast generalizable radiance field reconstruction from multi-view stereo. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 14124–14133, 2021a.

- Chen et al. [2021b] Wenzheng Chen, Joey Litalien, Jun Gao, Zian Wang, Clement Fuji Tsang, Sameh Khamis, Or Litany, and Sanja Fidler. DIB-R++: Learning to predict lighting and material with a hybrid differentiable renderer. Advances in Neural Information Processing Systems, 34:22834–22848, 2021b.

- Choy et al. [2016] Christopher B Choy, Danfei Xu, JunYoung Gwak, Kevin Chen, and Silvio Savarese. 3d-r2n2: A unified approach for single and multi-view 3d object reconstruction. In European Conference on Computer Vision, pages 628–644. Springer, 2016.

- Dai et al. [2017] Angela Dai, Angel X Chang, Manolis Savva, Maciej Halber, Thomas Funkhouser, and Matthias Nießner. ScanNet: Richly-annotated 3D reconstructions of indoor scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 5828–5839, 2017.

- Deitke et al. [2022] Matt Deitke, Dustin Schwenk, Jordi Salvador, Luca Weihs, Oscar Michel, Eli VanderBilt, Ludwig Schmidt, Kiana Ehsani, Aniruddha Kembhavi, and Ali Farhadi. Objaverse: A universe of annotated 3D objects. arXiv preprint arXiv:2212.08051, 2022.

- Fan et al. [2017] Haoqiang Fan, Hao Su, and Leonidas J Guibas. A point set generation network for 3D object reconstruction from a single image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 605–613, 2017.

- Fan et al. [2022] Zhiwen Fan, Yifan Jiang, Peihao Wang, Xinyu Gong, Dejia Xu, and Zhangyang Wang. Unified implicit neural stylization. In European Conference on Computer Vision, pages 636–654. Springer, 2022.

- Gao et al. [2022] Jun Gao, Tianchang Shen, Zian Wang, Wenzheng Chen, Kangxue Yin, Daiqing Li, Or Litany, Zan Gojcic, and Sanja Fidler. GET3D: A generative model of high quality 3D textured shapes learned from images. In Advances in Neural Information Processing Systems, 2022.

- Gkioxari et al. [2019] Georgia Gkioxari, Jitendra Malik, and Justin Johnson. Mesh R-CNN. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 9785–9795, 2019.

- Gkioxari et al. [2022] Georgia Gkioxari, Nikhila Ravi, and Justin Johnson. Learning 3D object shape and layout without 3D supervision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022.

- Goel et al. [2022] Shubham Goel, Georgia Gkioxari, and Jitendra Malik. Differentiable stereopsis: Meshes from multiple views using differentiable rendering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 8635–8644, 2022.

- Hasselgren et al. [2022] Jon Hasselgren, Nikolai Hofmann, and Jacob Munkberg. Shape, light, and material decomposition from images using Monte Carlo rendering and denoising. NeurIPS, 2022.

- Huang et al. [2020] Jingwei Huang, Justus Thies, Angela Dai, Abhijit Kundu, Chiyu Max Jiang, Leonidas Guibas, Matthias Nießner, and Thomas Funkhouser. Adversarial texture optimization from RGB-D scans. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020.

- Jang and Agapito [2021] Wonbong Jang and Lourdes Agapito. CodeNeRF: Disentangled neural radiance fields for object categories. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 12949–12958, 2021.

- Karras et al. [2019] Tero Karras, Samuli Laine, and Timo Aila. A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 4401–4410, 2019.

- Karras et al. [2020] Tero Karras, Samuli Laine, Miika Aittala, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. Analyzing and improving the image quality of StyleGAN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 8110–8119, 2020.

- Karras et al. [2021] Tero Karras, Miika Aittala, Samuli Laine, Erik Härkönen, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. Alias-free generative adversarial networks. Advances in Neural Information Processing Systems, 34:852–863, 2021.

- Lombardi et al. [2019] Stephen Lombardi, Tomas Simon, Jason Saragih, Gabriel Schwartz, Andreas Lehrmann, and Yaser Sheikh. Neural volumes: Learning dynamic renderable volumes from images. SIGGRAPH, 2019.

- Melas-Kyriazi et al. [2023] Luke Melas-Kyriazi, Christian Rupprecht, Iro Laina, and Andrea Vedaldi. RealFusion: 360° reconstruction of any object from a single image. In arXiv:2302.10663, 2023.

- Metzer et al. [2022] Gal Metzer, Elad Richardson, Or Patashnik, Raja Giryes, and Daniel Cohen-Or. Latent-NeRF for shape-guided generation of 3d shapes and textures. arXiv preprint arXiv:2211.07600, 2022.

- Mildenhall et al. [2020] Ben Mildenhall, Pratul P Srinivasan, Matthew Tancik, Jonathan T Barron, Ravi Ramamoorthi, and Ren Ng. NeRF: Representing scenes as neural radiance fields for view synthesis. In European Conference on Computer Vision, pages 405–421, 2020.

- Müller et al. [2022] Norman Müller, Andrea Simonelli, Lorenzo Porzi, Samuel Rota Bulò, Matthias Nießner, and Peter Kontschieder. AutoRF: Learning 3D object radiance fields from single view observations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022.

- Müller et al. [2022] Thomas Müller, Alex Evans, Christoph Schied, and Alexander Keller. Instant neural graphics primitives with a multiresolution hash encoding. SIGGRAPH, 2022.

- Or-El et al. [2022] Roy Or-El, Xuan Luo, Mengyi Shan, Eli Shechtman, Jeong Joon Park, and Ira Kemelmacher-Shlizerman. StyleSDF: High-resolution 3D-consistent image and geometry generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 13503–13513, 2022.

- Park et al. [2019] Jeong Joon Park, Peter Florence, Julian Straub, Richard Newcombe, and Steven Lovegrove. DeepSDF: Learning continuous signed distance functions for shape representation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 165–174, 2019.

- Peng et al. [2020] Songyou Peng, Michael Niemeyer, Lars Mescheder, Marc Pollefeys, and Andreas Geiger. Convolutional occupancy networks. In European Conference on Computer Vision, pages 523–540, 2020.

- Porzi et al. [2021] Lorenzo Porzi, Samuel Rota Bulo, and Peter Kontschieder. Improving panoptic segmentation at all scales. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 7302–7311, 2021.

- Reizenstein et al. [2021] Jeremy Reizenstein, Roman Shapovalov, Philipp Henzler, Luca Sbordone, Patrick Labatut, and David Novotny. Common objects in 3D: Large-scale learning and evaluation of real-life 3D category reconstruction. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 10901–10911, 2021.

- Roich et al. [2022] Daniel Roich, Ron Mokady, Amit H Bermano, and Daniel Cohen-Or. Pivotal tuning for latent-based editing of real images. ACM Transactions on Graphics (TOG), 42(1):1–13, 2022.

- Saito et al. [2019] Shunsuke Saito, Zeng Huang, Ryota Natsume, Shigeo Morishima, Angjoo Kanazawa, and Hao Li. PIFu: Pixel-aligned implicit function for high-resolution clothed human digitization. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 2304–2314, 2019.

- Sitzmann et al. [2019] Vincent Sitzmann, Michael Zollhöfer, and Gordon Wetzstein. Scene representation networks: Continuous 3D-structure-aware neural scene representations. Advances in Neural Information Processing Systems, 32, 2019.

- Song et al. [2022] Haorui Song, Yong Du, Tianyi Xiang, Junyu Dong, Jing Qin, and Shengfeng He. Editing out-of-domain gan inversion via differential activations. In European Conference on Computer Vision, pages 1–17, 2022.

- Sucar et al. [2020] Edgar Sucar, Kentaro Wada, and Andrew Davison. Nodeslam: Neural object descriptors for multi-view shape reconstruction. In 2020 International Conference on 3D Vision (3DV), pages 949–958. IEEE, 2020.

- Takikawa et al. [2022] Towaki Takikawa, Alex Evans, Jonathan Tremblay, Thomas Müller, Morgan McGuire, Alec Jacobson, and Sanja Fidler. Variable bitrate neural fields. In ACM SIGGRAPH 2022 Conference Proceedings, pages 1–9, 2022.

- Tremblay et al. [2018] Jonathan Tremblay, Aayush Prakash, David Acuna, Mark Brophy, Varun Jampani, Cem Anil, Thang To, Eric Cameracci, Shaad Boochoon, and Stan Birchfield. Training deep networks with synthetic data: Bridging the reality gap by domain randomization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pages 969–977, 2018.

- Trevithick and Yang [2021] Alex Trevithick and Bo Yang. GRF: Learning a general radiance field for 3D scene representation and rendering. In ICCV, 2021.

- Wang et al. [2018] Nanyang Wang, Yinda Zhang, Zhuwen Li, Yanwei Fu, Wei Liu, and Yu-Gang Jiang. Pixel2mesh: Generating 3D mesh models from single RGB images. In Proceedings of the European Conference on Computer Vision (ECCV), pages 52–67, 2018.

- Wang et al. [2021a] Peng Wang, Lingjie Liu, Yuan Liu, Christian Theobalt, Taku Komura, and Wenping Wang. NeuS: Learning neural implicit surfaces by volume rendering for multi-view reconstruction. arXiv preprint arXiv:2106.10689, 2021a.

- Wang et al. [2021b] Qianqian Wang, Zhicheng Wang, Kyle Genova, Pratul P Srinivasan, Howard Zhou, Jonathan T Barron, Ricardo Martin-Brualla, Noah Snavely, and Thomas Funkhouser. IBRNet: Learning multi-view image-based rendering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 4690–4699, 2021b.

- Wang et al. [2004] Zhou Wang, Alan C Bovik, Hamid R Sheikh, and Eero P Simoncelli. Image quality assessment: from error visibility to structural similarity. IEEE Transactions on Image Processing, 13(4):600–612, 2004.

- Wei et al. [2022] Yao Wei, George Vosselman, and Michael Ying Yang. Flow-based gan for 3d point cloud generation from a single image. arXiv preprint arXiv:2210.04072, 2022.

- Yang et al. [2021a] Bangbang Yang, Yinda Zhang, Yinghao Xu, Yijin Li, Han Zhou, Hujun Bao, Guofeng Zhang, and Zhaopeng Cui. Learning object-compositional neural radiance field for editable scene rendering. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 13779–13788, 2021a.

- Yang et al. [2021b] Bangbang Yang, Yinda Zhang, Yinghao Xu, Yijin Li, Han Zhou, Hujun Bao, Guofeng Zhang, and Zhaopeng Cui. Learning object-compositional neural radiance field for editable scene rendering. In International Conference on Computer Vision (ICCV), 2021b.

- Yariv et al. [2021] Lior Yariv, Jiatao Gu, Yoni Kasten, and Yaron Lipman. Volume rendering of neural implicit surfaces. In Thirty-Fifth Conference on Neural Information Processing Systems (NeurIPS), 2021.

- Yen-Chen et al. [2021] Lin Yen-Chen, Pete Florence, Jonathan T Barron, Alberto Rodriguez, Phillip Isola, and Tsung-Yi Lin. iNeRF: Inverting neural radiance fields for pose estimation. In 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages 1323–1330, 2021.

- Yu et al. [2021] Alex Yu, Vickie Ye, Matthew Tancik, and Angjoo Kanazawa. pixelNeRF: Neural radiance fields from one or few images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 4578–4587, 2021.

- Zhang et al. [2021] Jason Zhang, Gengshan Yang, Shubham Tulsiani, and Deva Ramanan. NeRS: neural reflectance surfaces for sparse-view 3D reconstruction in the wild. Advances in Neural Information Processing Systems, 34:29835–29847, 2021.

- Zhang et al. [2022] Jichao Zhang, Enver Sangineto, Hao Tang, Aliaksandr Siarohin, Zhun Zhong, Nicu Sebe, and Wei Wang. 3D-aware semantic-guided generative model for human synthesis. In European Conference on Computer Vision, pages 339–356. Springer, 2022.

- Zhang et al. [2018] Richard Zhang, Phillip Isola, Alexei A Efros, Eli Shechtman, and Oliver Wang. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 586–595, 2018.

References

- Ahmadyan et al. [2021] Adel Ahmadyan, Liangkai Zhang, Artsiom Ablavatski, Jianing Wei, and Matthias Grundmann. Objectron: A large scale dataset of object-centric videos in the wild with pose annotations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 7822–7831, 2021.

- Avetisyan et al. [2019] Armen Avetisyan, Manuel Dahnert, Angela Dai, Manolis Savva, Angel X Chang, and Matthias Nießner. Scan2cad: Learning cad model alignment in rgb-d scans. In Proceedings of the IEEE/CVF Conference on computer vision and pattern recognition, pages 2614–2623, 2019.

- Caesar et al. [2020] Holger Caesar, Varun Bankiti, Alex H Lang, Sourabh Vora, Venice Erin Liong, Qiang Xu, Anush Krishnan, Yu Pan, Giancarlo Baldan, and Oscar Beijbom. nuScenes: A multimodal dataset for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 11621–11631, 2020.

- Chan et al. [2022a] Eric R Chan, Connor Z Lin, Matthew A Chan, Koki Nagano, Boxiao Pan, Shalini De Mello, Orazio Gallo, Leonidas J Guibas, Jonathan Tremblay, Sameh Khamis, et al. Efficient geometry-aware 3D generative adversarial networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 16123–16133, 2022a.

- Chan et al. [2022b] Eric R. Chan, Connor Z. Lin, Matthew A. Chan, Koki Nagano, Boxiao Pan, Shalini De Mello, Orazio Gallo, Leonidas Guibas, Jonathan Tremblay, Sameh Khamis, Tero Karras, and Gordon Wetzstein. Efficient geometry-aware 3D generative adversarial networks. In CVPR, 2022b.

- Chang et al. [2015] Angel X Chang, Thomas Funkhouser, Leonidas Guibas, Pat Hanrahan, Qixing Huang, Zimo Li, Silvio Savarese, Manolis Savva, Shuran Song, Hao Su, et al. ShapeNet: An information-rich 3D model repository. arXiv preprint arXiv:1512.03012, 2015.

- Chen et al. [2021a] Anpei Chen, Zexiang Xu, Fuqiang Zhao, Xiaoshuai Zhang, Fanbo Xiang, Jingyi Yu, and Hao Su. MVSNeRF: Fast generalizable radiance field reconstruction from multi-view stereo. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 14124–14133, 2021a.

- Chen et al. [2021b] Wenzheng Chen, Joey Litalien, Jun Gao, Zian Wang, Clement Fuji Tsang, Sameh Khamis, Or Litany, and Sanja Fidler. DIB-R++: Learning to predict lighting and material with a hybrid differentiable renderer. Advances in Neural Information Processing Systems, 34:22834–22848, 2021b.

- Choy et al. [2016] Christopher B Choy, Danfei Xu, JunYoung Gwak, Kevin Chen, and Silvio Savarese. 3d-r2n2: A unified approach for single and multi-view 3d object reconstruction. In European Conference on Computer Vision, pages 628–644. Springer, 2016.

- Dai et al. [2017] Angela Dai, Angel X Chang, Manolis Savva, Maciej Halber, Thomas Funkhouser, and Matthias Nießner. ScanNet: Richly-annotated 3D reconstructions of indoor scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 5828–5839, 2017.

- Deitke et al. [2022] Matt Deitke, Dustin Schwenk, Jordi Salvador, Luca Weihs, Oscar Michel, Eli VanderBilt, Ludwig Schmidt, Kiana Ehsani, Aniruddha Kembhavi, and Ali Farhadi. Objaverse: A universe of annotated 3D objects. arXiv preprint arXiv:2212.08051, 2022.

- Fan et al. [2017] Haoqiang Fan, Hao Su, and Leonidas J Guibas. A point set generation network for 3D object reconstruction from a single image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 605–613, 2017.

- Fan et al. [2022] Zhiwen Fan, Yifan Jiang, Peihao Wang, Xinyu Gong, Dejia Xu, and Zhangyang Wang. Unified implicit neural stylization. In European Conference on Computer Vision, pages 636–654. Springer, 2022.

- Gao et al. [2022] Jun Gao, Tianchang Shen, Zian Wang, Wenzheng Chen, Kangxue Yin, Daiqing Li, Or Litany, Zan Gojcic, and Sanja Fidler. GET3D: A generative model of high quality 3D textured shapes learned from images. In Advances in Neural Information Processing Systems, 2022.

- Gkioxari et al. [2019] Georgia Gkioxari, Jitendra Malik, and Justin Johnson. Mesh R-CNN. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 9785–9795, 2019.

- Gkioxari et al. [2022] Georgia Gkioxari, Nikhila Ravi, and Justin Johnson. Learning 3D object shape and layout without 3D supervision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022.

- Goel et al. [2022] Shubham Goel, Georgia Gkioxari, and Jitendra Malik. Differentiable stereopsis: Meshes from multiple views using differentiable rendering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 8635–8644, 2022.

- Hasselgren et al. [2022] Jon Hasselgren, Nikolai Hofmann, and Jacob Munkberg. Shape, light, and material decomposition from images using Monte Carlo rendering and denoising. NeurIPS, 2022.

- Huang et al. [2020] Jingwei Huang, Justus Thies, Angela Dai, Abhijit Kundu, Chiyu Max Jiang, Leonidas Guibas, Matthias Nießner, and Thomas Funkhouser. Adversarial texture optimization from RGB-D scans. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020.

- Jang and Agapito [2021] Wonbong Jang and Lourdes Agapito. CodeNeRF: Disentangled neural radiance fields for object categories. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 12949–12958, 2021.

- Karras et al. [2019] Tero Karras, Samuli Laine, and Timo Aila. A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 4401–4410, 2019.

- Karras et al. [2020] Tero Karras, Samuli Laine, Miika Aittala, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. Analyzing and improving the image quality of StyleGAN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 8110–8119, 2020.

- Karras et al. [2021] Tero Karras, Miika Aittala, Samuli Laine, Erik Härkönen, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. Alias-free generative adversarial networks. Advances in Neural Information Processing Systems, 34:852–863, 2021.

- Lombardi et al. [2019] Stephen Lombardi, Tomas Simon, Jason Saragih, Gabriel Schwartz, Andreas Lehrmann, and Yaser Sheikh. Neural volumes: Learning dynamic renderable volumes from images. SIGGRAPH, 2019.

- Melas-Kyriazi et al. [2023] Luke Melas-Kyriazi, Christian Rupprecht, Iro Laina, and Andrea Vedaldi. RealFusion: 360° reconstruction of any object from a single image. In arXiv:2302.10663, 2023.

- Metzer et al. [2022] Gal Metzer, Elad Richardson, Or Patashnik, Raja Giryes, and Daniel Cohen-Or. Latent-NeRF for shape-guided generation of 3d shapes and textures. arXiv preprint arXiv:2211.07600, 2022.

- Mildenhall et al. [2020] Ben Mildenhall, Pratul P Srinivasan, Matthew Tancik, Jonathan T Barron, Ravi Ramamoorthi, and Ren Ng. NeRF: Representing scenes as neural radiance fields for view synthesis. In European Conference on Computer Vision, pages 405–421, 2020.

- Müller et al. [2022] Norman Müller, Andrea Simonelli, Lorenzo Porzi, Samuel Rota Bulò, Matthias Nießner, and Peter Kontschieder. AutoRF: Learning 3D object radiance fields from single view observations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022.

- Müller et al. [2022] Thomas Müller, Alex Evans, Christoph Schied, and Alexander Keller. Instant neural graphics primitives with a multiresolution hash encoding. SIGGRAPH, 2022.

- Or-El et al. [2022] Roy Or-El, Xuan Luo, Mengyi Shan, Eli Shechtman, Jeong Joon Park, and Ira Kemelmacher-Shlizerman. StyleSDF: High-resolution 3D-consistent image and geometry generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 13503–13513, 2022.

- Park et al. [2019] Jeong Joon Park, Peter Florence, Julian Straub, Richard Newcombe, and Steven Lovegrove. DeepSDF: Learning continuous signed distance functions for shape representation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 165–174, 2019.

- Peng et al. [2020] Songyou Peng, Michael Niemeyer, Lars Mescheder, Marc Pollefeys, and Andreas Geiger. Convolutional occupancy networks. In European Conference on Computer Vision, pages 523–540, 2020.

- Porzi et al. [2021] Lorenzo Porzi, Samuel Rota Bulo, and Peter Kontschieder. Improving panoptic segmentation at all scales. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 7302–7311, 2021.

- Reizenstein et al. [2021] Jeremy Reizenstein, Roman Shapovalov, Philipp Henzler, Luca Sbordone, Patrick Labatut, and David Novotny. Common objects in 3D: Large-scale learning and evaluation of real-life 3D category reconstruction. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 10901–10911, 2021.

- Roich et al. [2022] Daniel Roich, Ron Mokady, Amit H Bermano, and Daniel Cohen-Or. Pivotal tuning for latent-based editing of real images. ACM Transactions on Graphics (TOG), 42(1):1–13, 2022.

- Saito et al. [2019] Shunsuke Saito, Zeng Huang, Ryota Natsume, Shigeo Morishima, Angjoo Kanazawa, and Hao Li. PIFu: Pixel-aligned implicit function for high-resolution clothed human digitization. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 2304–2314, 2019.

- Sitzmann et al. [2019] Vincent Sitzmann, Michael Zollhöfer, and Gordon Wetzstein. Scene representation networks: Continuous 3D-structure-aware neural scene representations. Advances in Neural Information Processing Systems, 32, 2019.

- Song et al. [2022] Haorui Song, Yong Du, Tianyi Xiang, Junyu Dong, Jing Qin, and Shengfeng He. Editing out-of-domain gan inversion via differential activations. In European Conference on Computer Vision, pages 1–17, 2022.

- Sucar et al. [2020] Edgar Sucar, Kentaro Wada, and Andrew Davison. Nodeslam: Neural object descriptors for multi-view shape reconstruction. In 2020 International Conference on 3D Vision (3DV), pages 949–958. IEEE, 2020.

- Takikawa et al. [2022] Towaki Takikawa, Alex Evans, Jonathan Tremblay, Thomas Müller, Morgan McGuire, Alec Jacobson, and Sanja Fidler. Variable bitrate neural fields. In ACM SIGGRAPH 2022 Conference Proceedings, pages 1–9, 2022.

- Tremblay et al. [2018] Jonathan Tremblay, Aayush Prakash, David Acuna, Mark Brophy, Varun Jampani, Cem Anil, Thang To, Eric Cameracci, Shaad Boochoon, and Stan Birchfield. Training deep networks with synthetic data: Bridging the reality gap by domain randomization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pages 969–977, 2018.

- Trevithick and Yang [2021] Alex Trevithick and Bo Yang. GRF: Learning a general radiance field for 3D scene representation and rendering. In ICCV, 2021.

- Wang et al. [2018] Nanyang Wang, Yinda Zhang, Zhuwen Li, Yanwei Fu, Wei Liu, and Yu-Gang Jiang. Pixel2mesh: Generating 3D mesh models from single RGB images. In Proceedings of the European Conference on Computer Vision (ECCV), pages 52–67, 2018.

- Wang et al. [2021a] Peng Wang, Lingjie Liu, Yuan Liu, Christian Theobalt, Taku Komura, and Wenping Wang. NeuS: Learning neural implicit surfaces by volume rendering for multi-view reconstruction. arXiv preprint arXiv:2106.10689, 2021a.

- Wang et al. [2021b] Qianqian Wang, Zhicheng Wang, Kyle Genova, Pratul P Srinivasan, Howard Zhou, Jonathan T Barron, Ricardo Martin-Brualla, Noah Snavely, and Thomas Funkhouser. IBRNet: Learning multi-view image-based rendering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 4690–4699, 2021b.

- Wang et al. [2004] Zhou Wang, Alan C Bovik, Hamid R Sheikh, and Eero P Simoncelli. Image quality assessment: from error visibility to structural similarity. IEEE Transactions on Image Processing, 13(4):600–612, 2004.

- Wei et al. [2022] Yao Wei, George Vosselman, and Michael Ying Yang. Flow-based gan for 3d point cloud generation from a single image. arXiv preprint arXiv:2210.04072, 2022.

- Yang et al. [2021a] Bangbang Yang, Yinda Zhang, Yinghao Xu, Yijin Li, Han Zhou, Hujun Bao, Guofeng Zhang, and Zhaopeng Cui. Learning object-compositional neural radiance field for editable scene rendering. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 13779–13788, 2021a.

- Yang et al. [2021b] Bangbang Yang, Yinda Zhang, Yinghao Xu, Yijin Li, Han Zhou, Hujun Bao, Guofeng Zhang, and Zhaopeng Cui. Learning object-compositional neural radiance field for editable scene rendering. In International Conference on Computer Vision (ICCV), 2021b.

- Yariv et al. [2021] Lior Yariv, Jiatao Gu, Yoni Kasten, and Yaron Lipman. Volume rendering of neural implicit surfaces. In Thirty-Fifth Conference on Neural Information Processing Systems (NeurIPS), 2021.

- Yen-Chen et al. [2021] Lin Yen-Chen, Pete Florence, Jonathan T Barron, Alberto Rodriguez, Phillip Isola, and Tsung-Yi Lin. iNeRF: Inverting neural radiance fields for pose estimation. In 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages 1323–1330, 2021.

- Yu et al. [2021] Alex Yu, Vickie Ye, Matthew Tancik, and Angjoo Kanazawa. pixelNeRF: Neural radiance fields from one or few images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 4578–4587, 2021.

- Zhang et al. [2021] Jason Zhang, Gengshan Yang, Shubham Tulsiani, and Deva Ramanan. NeRS: neural reflectance surfaces for sparse-view 3D reconstruction in the wild. Advances in Neural Information Processing Systems, 34:29835–29847, 2021.

- Zhang et al. [2022] Jichao Zhang, Enver Sangineto, Hao Tang, Aliaksandr Siarohin, Zhun Zhong, Nicu Sebe, and Wei Wang. 3D-aware semantic-guided generative model for human synthesis. In European Conference on Computer Vision, pages 339–356. Springer, 2022.

- Zhang et al. [2018] Richard Zhang, Phillip Isola, Alexei A Efros, Eli Shechtman, and Oliver Wang. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 586–595, 2018.

Appendix A Leveraging Synthetic Data

Collecting 3D data in the real world is costly with current 3D scanning technology. Although our method is not limited to synthetic training data, we believe our ability to leverage priors from synthetic datasets to reconstruct real-world objects is an advantage. This allows us to use 3D model repositories from gaming, entertainment, and manufacturing industries. It may also enable users of our method to generate more diverse data via data augmentation to cover the long-tail scenarios that are rarely encountered in the real world. From the table below, we can see that Objaverse [11], a recently released dataset, consists of 818K synthetic 3D models over 21K object categories and is orders of magnitude larger than existing real-world 3D datasets such as Objectron [1] and CO3D [34]. In this paper, we pre-trained our GANs on ShapeNet [6].

In light of the growing availability of 3D synthetic data, We believe that sim-2-real is an important path forward to leverage this data and push the frontier of real-world 3D perception, alongside parallel research that focuses on real data.

| Objectron | CO3D | Shapenet | Objaverse | |

|---|---|---|---|---|

| # objects | 15K | 19K | 51K | 818K |

| # classes | 9 | 50 | 55 | 21K |

Appendix B Datasets

Dataset Choice. Contrary to many sparse-view reconstruction works that assume full 360-degree observational coverage of an object, our paper focuses on partial-view circumstances (Figure 2) where 360-degree observations are typically not feasible due to real-world constraints, such as objects being placed against walls. We chose to evaluate our model on ScanNet instead of CO3D because, in CO3D, the videos are captured by placing the object on a solid surface and recording a full circle around it, ensuring that the entire object remains in view without any occlusion. However, these videos do not resemble natural observations like those found in ScanNet.Since we are interested in reconstructing from real-world video observations, we prioritized evaluating our model on ScanNet instead of ShapeNet.

Dataset Detail. ShapeNet [6] contains 6778, 8443, and 7497 shapes for Chair, Table, and Car, respectively. Following the experimental setting in GET3D [14], we randomly choose 70% of all shapes for training. For each shape, we render 24 images in Blender111We use the following rendering script: https://github.com/nv-tlabs/GET3D/tree/master/render_shapenet_data.. Each image has a resolution of .

For Chair and Table, we evaluate models on ScanNet [10], a dataset of real-world indoor scene scans. We select the scenes commonly used in other papers [49] and use all the chairs and tables observed in these scenes for evaluation. We do not filter sequences with inaccurate object masks or imperfect observations, as we want to evaluate the robustness of these models under noisy and partial measurements, which often occur when algorithms are deployed in the real world. When we evaluate the renderings, we only use images with accurate object masks. As ScanNet does not provide object poses, we use the poses estimated by Scan2CAD [2]. We adopt the same ScanNet scenes used in [49]. Every ScanNet scene is a real-world scene that can contain many chair and table instances.

We evaluated all methods on all the chairs and tables that appear in 10 ScanNet scenes that are commonly chosen in related works such as Object-NeRF and NICE-SLAM. That adds up to 26 chairs and 18 tables. This is more than is often done, e.g., NodeSLAM evaluated 5 scenes and 10 objects for each of the three classes. Furthermore, we calculated the p-value statistical significance of our model’s results against the results of one of the most competitive baselines (AutoRF) in Table 2.222Typically, p 0.05 indicates strong evidence that the difference is statistically significant.

| p-value | PSNR | SSIM | LPIPS | ||||||

|---|---|---|---|---|---|---|---|---|---|

| # views | 1 | 3 | 5 | 1 | 3 | 5 | 1 | 3 | 5 |

| ScanNet Chairs | 0.131 | 0.106 | 0.012 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 |

| ScanNet Tables | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 |

For Car, we evaluate models on NuScenes [3], a driving dataset with 3D detection and tracking annotations. Following the experimental setting in [28], we filter for sequences in the daytime and run a pre-trained 2D panoptic segmentation model [33] as NuScenes does not provide 2D segmentation masks. We also filter for sequences with sufficient camera movements and sufficiently clear observations.333We filter for observations above the resolution of and sequences with a maximum rotational difference of more than 2 degrees. Across all categories, we obtained around 50 sequences in total. We acknowledge the possibility of incorporating additional instances. However, due to time and computational limitations of some baselines, those in particular that require test-time optimization consume a significant amount of time.

Appendix C Runtime Analysis

We run our method on a Linux machine with NVIDIA A40 GPUs. In our experiments, we run 350 gradient update steps in the filtering inversion phase (Phase I), which takes approximately 21 seconds with a single A40 GPU and one input image of size . The reported 21-second runtime per frame corresponds to the results in the paper at 350 iterations, but it is not the minimum. In the table below we show the results with only 50 iterations or 3 seconds. With a modest performance sacrifice, our method is suitable for near-real-time applications that process a new frame every few seconds, e.g., on a slow moving robot. Further acceleration is likely possible using optimization such as FP16 and fused operations.

| ScanNet Chairs | |||||||||

| PSNR | SSIM | LPIPS | |||||||

| # views | 1 | 3 | 5 | 1 | 3 | 5 | 1 | 3 | 5 |

| FINV-GET3D (3s) | 22.93 | 23.54 | 24.03 | 0.927 | 0.933 | 0.939 | 0.131 | 0.125 | 0.119 |

| FINV-GET3D (21s) | 24.61 | 24.96 | 26.23 | 0.937 | 0.944 | 0.950 | 0.102 | 0.089 | 0.082 |

Appendix D More Visualizations on Rotational Delta

Appendix E Additional Analyses

We provide additional reconstruction results of our model in Figure 14. We wondered how our system, having seen only synthetic objects, would handle uncommon objects. Combining learned object priors and the use of the Refinement phase in FINV, we find that it can reconstruct, from a single view, the avocado chair geometrically better than methods that do not learn object priors such as NeRS [53] and Differentiable Stereopsis (DS) [17].

While FINV makes a step towards partial-view object-centric reconstruction, it has some limitations. Chairs generated by a trained GET3D model tend to have consistent textures. That is, the model has the prior that, for instance, the back side of a chair tends to have the same texture as the front side of the chair. However, this prior can be lost or altered when we fine-tune the entire generator to fit the input observations (during the Refinement phase), resulting in unrealistic textures for the unobserved sides of the object. Figure 13 gives one such example.

Additionally, FINV does not model any optical phenomena such as specular highlights, reflections, and transparency. When generating textured objects, the texture then has baked-in light, one important step forward for generative objects from few views would be to include the physical proprieties of the object texture, e.g., roughness. This is still an open problem in the graphics community whereas differentiable renderers are used to optimize shape, texture, material, and environment light map [18]. A promising extension is to use DIB-R++ [8] to predict environmental map light and combine that with GET3D [14] to generate view-dependent lighting effects.

Appendix F More Related Works

Novel view synthesis, a long-standing research problem in the field of computer vision, entails constructing new views of a scene or an object from one or more views. Recent works demonstrate the effectiveness of learned implicit neural representations for rendering novel views [24, 27, 29, 37, 48]. However, these approaches require many input views and substantial optimization time per scene as they fit a single model to each scene or object. Recent work has explored 3D shape and/or texture generation [53, 50, 19], these methods assume views that fully cover the object, whereas our method works with a single input view. Additionally, locally-conditioned CNN features have been used to generalize neural implicit representations across scenes [36, 32, 42, 52, 45, 7]. These methods have shown excellent performance in novel-view synthesis in a range of testing scenarios. However, they mostly learn pixel-level priors and do not consider generative object-level priors. This limits their generalization capability, especially in cases with partial observations.

There is abundant work on learning to map image to 3D representations such as voxels, point cloud, and mesh. For example, [9] takes the image as input and generates a 3D object by voxel. [12] maps an image to a point cloud by learning a residual mapping in the latent space of an autoencoder. [47] uses a GAN architecture to generate 3D point cloud from a 2D image. In recent years, image to mesh methods are growing in popularity. [43] learns a mapping from the image to mesh by using a graph-based convolutional neural network. Orthogonal to the 3D representation is to incorporate multiple views. 3D-R2N2 [9] proposes to update its reconstruction given new views using an LSTM. In this work, we instead focus on implicit reconstruction, where the goal is to synthesize new views of a captured object.

Another related work to our paper is NodeSLAM [39], which considers generative object priors (i.e., class-conditional variational autoencoder) for object shape reconstruction based on RGBD inputs. However, NodeSLAM requires depth observation and only reconstructs geometry. A more recent method, AutoRF [28], also uses a pre-trained 3D generative prior to reconstruct objects in the wild (specifically cars in street scenes) from single RGB observation. However, AutoRF uses a separate ResNet encoder network to map the input images to a latent code, which may not generalize well to out-of-distribution data not seen during training. That is, it would be hard for AutoRF to leverage the large repository of synthetic data to reconstruct real-world objects. In contrast, FIN3D performs filtered inversion through a pre-trained 3D generator and utilizes a Refinement Phase to address the simulation-to-real gap.

Appendix G Future Work

We have made progress in generating photo-realistic views of objects that were not fully visible, important challenges remain. One important aspect of our proposed system is based on leveraging a generative method (GET3D). Generative methods can be quite challenging and time-consuming to train. We expect research in these areas to improve the accessibility of our proposed method. Also, our method currently focuses on single categories, exploring larger object diversity will potentially broaden the applicability of our method. This is somewhat of an open problem as most 3D GAN methods target single categories. The increasing availability of large datasets of 3D models [11], as well similar real-world data, will facilitate this type of research.