Parameter Sharing Exploration and Hetero-center Triplet Loss for Visible-Thermal Person Re-Identification

Abstract

This paper focuses on the visible-thermal cross-modality person re-identification (VT Re-ID) task, whose goal is to match person images between the daytime visible modality and the nighttime thermal modality. The two-stream network is usually adopted to address the cross-modality discrepancy, the most challenging problem for VT Re-ID, by learning the multi-modality person features. In this paper, we explore how many parameters a two-stream network should share, which is still not well investigated in the existing literature. By splitting the ResNet50 model to construct the modality-specific feature extraction network and modality-sharing feature embedding network, we experimentally demonstrate the effect of parameter sharing of two-stream network for VT Re-ID. Moreover, in the framework of part-level person feature learning, we propose the hetero-center triplet loss to relax the strict constraint of traditional triplet loss by replacing the comparison of the anchor to all the other samples by the anchor center to all the other centers. With extremely simple means, the proposed method can significantly improve the VT Re-ID performance. The experimental results on two datasets show that our proposed method distinctly outperforms the state-of-the-art methods by large margins, especially on the RegDB dataset achieving superior performance, rank1/mAP/mINP 91.05%/83.28%/68.84%. It can be a new baseline for VT Re-ID, with a simple but effective strategy.

Index Terms:

Visible-thermal person re-identification, cross-modality discrepancy, parameters sharing, hetero-center triplet loss.I Introduction

Person re-identification (Re-ID) can be regarded as a retrieval task, which aims at searching a person of interest from multi-disjoint cameras deployed at different locations. It has received increasing interest in the computer vision community due to its importance in intelligent video surveillance and criminal investigation applications. Visible-visible Re-ID (VV Re-ID), the most common single-modality Re-ID task, has progressed and achieved high performance in recent years [33].

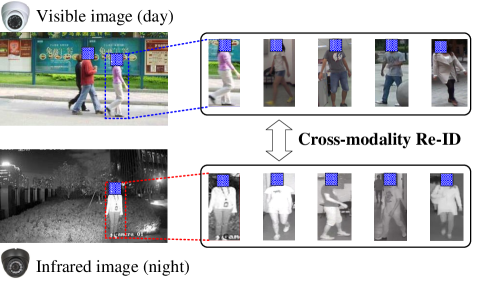

However, in practical scenarios, a 24-hour intelligent surveillance system, the visible-thermal cross-modality person re-identification (VT Re-ID) problem, is frequently encountered, as shown in Fig. 1. For example, criminals always collect information during the day and execute crimes at night, in which case, the query image may be obtained from the thermal camera (or the infrared camera) during the nighttime, while the gallery images may be captured by the visible cameras during the daytime.

In recent years, an increasing number of researchers have focused on the VT Re-ID task, achieving substantial progresses with novel and effective ideas. However, many works evaluated the effectiveness of their methods with a poor baseline, which seriously impedes the development of the VT Re-ID community. In the present study, our proposed method can be set as a strong and effective baseline for VT Re-ID with some extremely simple means.

The VT Re-ID task suffers from two major problems, the large cross-modality discrepancy arisen from the different reflective visible spectra and sensed emissivities of visible and thermal cameras, and the large intra-modality variations, similar to the VV Re-ID task, caused by viewpoint changing and different human poses, etc. To alleviate the extra cross-modality discrepancy in VT Re-ID, an intuitive and apparent method is to map the cross-modality persons into a common feature space to realize the similarity measure. Therefore, a two-stream framework is always adopted, including two modality-specific networks with independent parameters for feature extraction, and a parameter-sharing network for feature embedding to project the modality-specific features into a common feature space. Generally, the two modality-specific networks are not required to have the same architecture. The only criterion is that their outputs should have the same dimension shapes to be the input of the parameter-sharing network for feature embedding. In the literature, the ResNet50 [7] model is preferentially adopted as the backbone to construct the two-stream network, all the res-convolution blocks for feature extraction and some parameter-sharing fully connected layers for feature embedding. However, is this setting the best choice to construct the two-stream network? Those parameter-sharing fully-connected layers can only process the 1D-shaped vector, ignoring the spatial structure information of persons. To take advantage of the convolutional layers for processing the 3D-shaped tensor with spatial structure information, we can share some parameters of res-convolution blocks for feature embedding. In this situation, how many parameters of the two-stream network to share is the point of this study to investigate.

In addition, the network is always trained with identification loss and triplet loss to simultaneously enlarge the inter-class distance and minimize the intra-class distance. The triplet loss is performed on each anchor sample to all the other samples from both the same modality and cross modality. This may be a strong constraint for constraining the pairwise distance of those samples, especially when there exist some outliers (bad examples), which would form the adverse triplet to destroy other well-learned pairwise distances. It also leads to high complexity with a large number of triplets. The cross-modality and intra-modality training strategy is separately employed to enhance feature learning [35, 12]. In the authors’ opinion, the separate cross-modality and intra-modality training strategy may be unnecessary if those learned person features by the two-stream network are good enough in the common feature space, where the features can hardly be distinguished from which modality. Therefore, we propose the hetero-center triplet loss that directly performs in the unified common feature space. The hetero-center triplet loss is performed on each anchor center to all the other centers, which can also reduce the computational complexity.

The main contributions can be summarized as follows.

-

•

We achieve state-of-the-art performance on two datasets by large margins, which can be a strong VT Re-ID baseline to boost future research with high quality.

-

•

We explore the parameter-sharing problem in a two-stream network. To the best of our knowledge, it is the first attempt to analyze the impact of the number of parameter sharing for cross-modality feature learning.

-

•

We propose the hetero-center triplet loss to constrain the distance of different class centers from both the same modality and cross modality.

II Related work

This section briefly reviews those existing VT Re-ID approaches. Compared to the traditional VV Re-ID, except for the intra-modality variations, VT Re-ID should handle the extra cross-modality discrepancy. To alleviate this problem, researchers focus on projecting (or translating) the heterogeneous cross-modality person images into a common space for similarity measure, mainly including the following aspects: network designing, metric learning and image translation.

II-A Network designing

Feature learning is the fundamental step of Re-Identification before similarity measure. Most studies focus on the visible and thermal person feature learning through designing deep neural networks (DNN). Ye et.al [31, 35, 32] proposed adopting a two-stream network to separately extract the modality-specific features, and then performed the feature embedding to project those features into the common feature space with parameters sharing fully connected layers. Based on the two-stream network, Liu et al. [12] introduced mid-level features incorporation to enhance the modality-shared person features with more discriminability. To learn good modality-shared person features, Dai et al. [2] proposed the cross-modality generative adversarial network (cmGAN) under the adversarial learning framework, including a discriminator to distinguish whether the input features are from the visible modality or thermal modality. Zhang et al. [37] proposed a dual-path cross-modality feature learning framework, including a dual-path spatial-structure-preserving common space network and a contrastive correlation network, which preserves intrinsic spatial structures and attends to the difference of input cross-modality image pairs. To explore the potential of both the modality-shared information and the modality-specific characteristics to boost the re-identification performance, Lu et al. [13] proposed modeling the affinities of different modality samples according to the shared features and then transferring both shared and specific features among and across modalities.

Moreover, for handling the cross-modality discrepancy, some works concentrate on the input design of a single-stream network to simultaneously utilize visible and thermal information. Wu et al. [27] first proposed to study the VT Re-ID problem, built the SYSU-MM01 dataset, and developed the zero-padding method to extract the modality-shared person features with a single-stream network. Kang et al. [9] proposed combining the visible and thermal images as a single input with different image channels. Additionally, Wang et al. [24] also adopted a multispectral image as the input for feature learning, where the multispectral image consists of the visible image and corresponding generated thermal image, or the generated visible image and corresponding thermal image.

II-B Metric learning

Metric learning is the key step of Re-ID for similarity measure. In the deep learning framework, due to the advantage of DNN on feature learning, Re-ID could achieve good performance with only the Euclidean distance metric. Therefore, metric learning is inherent in the training loss function of DNN, guiding the training process to make the extracted features more discriminative and robust. Ye et al. [31] proposed a hierarchical cross-modality matching model by jointly optimizing the modality-specific and modality-shared metrics in a sequential manner. Then, they presented a bi-directional dual-constrained top-ranking loss to learn discriminative feature representations based on a two-stream network [35], based on which, the center-constraint was also introduced to improve performance [32]. Zhu et al. [39] proposed the hetero-center loss to reduce the intra-class cross-modality variations. Liu et al. [12] also proposed the dual-modality triplet loss to guide the training procedures by simultaneously considering the cross-modality discrepancy and intra-modality variations. Hao et al. [6] proposed an end-to-end two-stream hypersphere manifold embedding network with both classification and identification loss, constraining the intra-modality variations and cross-modality variations on this hypersphere. Zhao et al. [38] introduced the hard pentaplet loss to improve the performance of the cross-modality re-identification. Wu et al. [26] cast the learning shared knowledge for cross-modality matching as the problem of cross-modality similarity preservation, and proposed a focal modality-aware similarity-preserving loss to leverage the intra-modality similarity to guide the inter-modality similarity learning.

II-C Image translation

The aforementioned works handle the cross-modality discrepancy and intra-modality variations from the feature extraction level. Recently, image generation methods based on generative adversarial network (GAN) have drawn much attention in VT Re-ID, reducing the domain gap between visible and thermal modalities from image level. Kniaz et al. [10] first introduced GAN to translate a single visible image to a multimodal thermal image set, and then performed the Re-ID in the thermal domain. Wang et al. [20] proposed an end-to-end alignment generative adversarial network (AlignGAN) for VT Re-ID, to jointly bridge the cross-modality gap with feature alignment and pixel alignment. Wang et al. [24] proposed a dual-level discrepancy reduction learning framework based on a bi-directional cycleGAN to reduce the domain gap, from both the image and feature levels. Choi et al. [1] proposed a hierarchical cross-modality disentanglement (Hi-CMD) method, which automatically disentangles ID-discriminative factors and ID-excluded factors from visible-thermal images. Hi-CMD includes an ID-preserving person image generation network and a hierarchical feature learning module.

However, a person in the thermal modality can have different colors of clothes in the visible modality, leading to one thermal person image corresponding to multiple reasonable visible person images by image generation. It is hard to know which one is the correct target to be generated for Re-ID since when generating images, the model cannot access the gallery images that only appear in the inference phase. Image generation-based methods always have performance uncertainty, high complexity and high training trick demands.

III Our proposed method

In this section, we introduce the framework of our proposed feature learning model for VT Re-ID, as depicted in Fig. 2. The model mainly consists of three components: (1) the two-stream backbone network, exploring the parameter sharing, (2) the part-level feature extraction block and (3) the loss, our proposed hetero-center triplet loss and identity softmax loss.

III-A Two-stream backbone network

The two-stream network is a conventional way to extract features in visible-thermal cross-modality person re-identification, first introduced in [35]. It consists of two parts: feature extractor and feature embedding. The feature extractor aims at learning the modality-specific information from two heterogeneous modalities, while the feature embedding focuses on learning the multi-modality shared features for cross-modality re-identification by projecting those modality-specific features into a modality-shared common feature space.

In the existing literature, feature embedding is always computed by some shared fully connected layers, and the feature extractor is always a well-designed convolution neural network, such as ResNet50. In this situation, there may be two problems we should pay attention.

-

1.

The feature extractor consists of two branches with independent parameters. If each branch consists of the whole well-designed CNN architecture, the number of network parameters (model size) would increase by double.

-

2.

Feature embedding consists of some shared fully connected layers, which can only process the 1D-shaped feature vector without any person spatial structure information. However, the person spatial structure information is crucial to describe a person.

| Modality-specific | Modality-shared | |

| feature extractor | feature embedding | |

| ( and ) | () | |

| - | ||

| - |

To simultaneously address the aforementioned two problems, we propose to split the well-designed CNN model into two parts. The former part can be set as a two-stream feature extractor with independent parameters, while the latter part can be set as the feature embedding model. In this way, the whole model size will be reduced (corresponding to problem 1). The input of the feature embedding block is the output of the feature extractor, only the middle 3D feature maps of the well-designed CNN model, which is full of the person spatial structure information (corresponding to problem 2).

Therefore, the key point is how to split the well-designed CNN model. Namely, how many parameters of the two-stream network should be independent to learn the modality-specific information?

For simplicity in presentation, we denote the visible-stream feature extraction network as function , the thermal-stream feature extraction network as to learn the modality-specific information, and the feature embedding network as to project modality-specific person features into the shared common feature space. Given a visible image and a thermal image , the learned 3D person features and in common space can be represented as,

| (3) |

We adopt the ResNet50 model as the backbone, with the consideration of its competitive performance in some Re-ID systems as well as its relatively concise architecture. The ResNet50 model mainly consists of one shallow convolution block and four res-convolution blocks, , , and . To split the ResNet50 model into our modality-specific feature extractor and modality-shared feature embedding network, we can sequentially obtain the split scheme as shown in Table I, where means starts from the . and are two extreme cases. means that the two-stream backbone network shares all the ResNet50 model without the modality-specific feature extractor, while means that all parameters of the two streams for the visible and thermal modalities are totally independent as in [35]. Which is the best choice for a two-stream backbone network for cross-modality Re-ID? In the authors’ opinion, these two extreme cases and are not good, since they ignore some important information in the cross-modality Re-ID task. Experimental results in Sec. IV-B1 show that the modality-shared feature embedding network comprising some res-convolution blocks is a good choice, since the input of modality-shared feature embedding network is 3D shape feature maps, with the spatial structure information of persons.

III-B Part-level feature extraction block

In VV Re-ID, state-of-the-art results are always achieved with part-level deep features [28, 19]. A typical and simple approach is partitioning persons into horizontal strips to coarsely extract the part-level features, which can then be concatenated to describe the person’s body structure. Body structure is the inherent characteristic of a person, which is invariant information of the person’s body whatever modality the image is captured from. Namely, the body structure information is modality-invariant, which can be adopted as modality-shared information to represent a person. Therefore, according to the part-level feature extraction method in [18, 22], we also adopt the uniform partition strategy to obtain coarse body part features.

Given a person (visible or thermal) images, it will become the 3D feature map after undergoing all the layers inherited from the two-stream backbone network. Based on the 3D feature maps, as shown in Fig. 2, there are 3 steps to extract the part-level person features as follows.

-

1.

The 3D feature maps are uniformly partitioned into strips in the horizontal orientation to generate the coarse body part feature maps, as shown in Fig. 2, where .

-

2.

Instead of utilizing the widely used max-pooling or average pooling, we adopt a generalized-mean (GeM) [16] pooling layer to translate the 3D part feature maps into the 1D part feature vectors. Given a 3D feature patch , the GeM can be formulated as,

(4) where is the pooled result, denotes the element number, and p is the pooling hyperparameter, which can be preset or learned by back-propagation.

-

3.

Afterwards, a convolutional ( Conv) block is employed to reduce the dimension of part-level feature vectors. The block includes a convolutional layer whose output channel number is , following a batch normalization layer and a ReLU layer.

Moreover, each part-level feature vector is first adopted to perform metric learning with triplet loss (or our proposed hetero-center triplet loss ). Then, a fully connected layer with desired dimensions (corresponding to the number of identities in our model) is adopted to perform the identification with softmax . There are part-level features that need different classifiers without sharing parameters.

Finally, all the part-level features are concatenated () to form the final person features for the similarity measure during testing. Additionally, the final person features can also be supervised by the (or ).

III-C The hetero-center triplet loss

In this subsection, we introduce the designed hetero-center triplet loss to guide the network training for part-level feature learning. The learning objective is directly conducted in the common feature space to simultaneously deal with both cross-modality discrepancy and intra-modality variations. First, we revisit the general triplet loss.

III-C1 Triplet loss revisit

Triplet loss was first proposed in FaceNet [17], and then improved by mining the hard triplets [8]. The core idea is to form batches by randomly sampling identities, and then randomly sampling images of each identity, resulting in a mini-batch with images. For each sample in the mini-batch, we can select the hardest positive and hardest negative samples within the mini-batch to form the triplets for computing the batch hard triplet loss,

| (5) | ||||

which is defined for a mini-batch , where a data point denotes the image of the person in the batch, denotes the standard hinge loss, denotes the Euclidean distance of data point and , is the margin.

III-C2 Batch sampling method

Due to our two-stream structure respectively extracting features for visible and thermal images, we introduce the following online batch sampling strategy. Specifically, person identities are first randomly selected at each iteration, and then we randomly select visible images and thermal images of the selected identity to form the mini-batch, in which a total of images are obtained. This sampling strategy can fully utilize the relationship of all the samples within a mini-batch. In this manner, the sample size of each class is the same, which is important to avoid the perturbations caused by class imbalance. Moreover, due to the random sampling mechanism, the local constraint in the mini-batch can achieve the same effect as the global constraint in the entire set.

| positive | negative | |

|---|---|---|

III-C3 Hetero-center triplet loss

Eq. (5) shows that triplet loss computes the loss by comparison of the anchor to all the other samples. It is a strong constraint, perhaps too strict to constrain the pairwise distance if there exist some outliers (bad examples), which would form the adverse triplet to destroy other pairwise distances. Therefore, we consider adopting the center of each person as the identity agent. In this manner, we can relax the strict constraint by replacing the comparison of the anchor to all the other samples by the anchor center to all the other centers.

First, in a mini-batch, the center for the features of every identity from each modality is computed,

| (6) | |||

which is defined for a mini-batch, where denotes the visible image feature of the person in the mini-batch, while corresponds to the thermal image feature.

Therefore, based on our sampling method, in each mini-batch, there are visible image centers {} and thermal centers {}, as shown in Fig. 3. In the following, all the computations are only performed on the centers.

The goal of metric learning is to make those features from the same class close to each other (intra-class compactness), while those features from different classes are far away from each other (inter-class separation). Therefore, in our VT cross-domain Re-ID, based on the sampling strategy and calculated centers, we can define the hetero-center triplet loss as,

| (7) | ||||

which is defined on mini-batch centers including both visible centers {} and thermal centers {}. For each identity, concentrates on only one cross-modality positive pair and the mined hardest negative pair in both the intra- and inter-modality.

Comparing the general triplet loss (Eq. (5)) to our proposed center-based triplet loss (Eq. (7)), we replace the comparison of the anchor to all the other samples by the anchor center to all the other centers. This modification has two major advantages:

-

a)

It reduces the computational cost, as shown in Table II. For a mini-batch with images, requires computing pairwise distance for hardest positive sample mining and for hardest negative sample mining. In comparison, only needs to compute the pairwise distance for positive sample pairs (there are only cross-modality positive center pairs), and for hardest negative center sample mining. The computational cost is largely reduced.

-

b)

It relaxes the sample-based triplet constraint to the center-based triplet constraint, which also preserves the property of handling both the intra-class and inter-class variations simultaneously on visible and thermal modalities in the common feature space. For each identity, minimizing the only cross-modality positive center pairwise distance can ensure intra-class feature compactness. The hardest negative center mining can ensure the inter-class feature distinguishable property both in visible and thermal modalities.

|

|

| (a) Learned center loss | (b) Hetero-center triplet loss |

III-C4 Comparison to other center-based losses

There are two kinds of center-based losses: the learned centers [25, 32] and the computed centers [39]. The main difference lies in the method of obtaining the centers. One learns them by pre-setting a center parameter for each class, while the other computes the centers directly based on the learned deep features.

The learned centers. The learned center loss [25] is first introduced in face verification to learn a center for the features of each class and penalizes the distances between the deep features and their corresponding centers. For our cross-modality VT Re-ID task with the sampling strategy, the learned center loss can be extended in a bi-directional manner [12, 32, 35] as follows,

| (8) |

where denotes the visible image feature of the person in the mini-batch, while corresponds to the thermal image feature. is the class center for both visible and thermal modalities.

Comparing the learned center loss (Eq. (8)) to our proposed hetero-center triplet loss (Eq. (7)), there are the following differences. 1) is in a comparison of the anchor center to centers rather than ’s anchor sample to centers. 2) computes the centers for visible and thermal modalities, while unifies the class center for both visible and thermal modalities into one learned vector. 3) is formulated by triplet mining the properties of both the inter-class separability and intra-class compactness, while only focuses on the intra-class compactness, ignoring the inter-class separability.

As shown in Fig. 4, our proposed hetero-center triplet loss concentrates on both of the inter-class separability and intra-class compactness, while the learned center loss ignores the inter-class separability for both the intra- and inter modality. only performs well on intra-modality intra-class compactness, but poorly on cross-modality intra-class compactness. This may be due to the hard training of learned center loss combined with identification loss, which leads to the unsatisfactory performance.

The computed centers. The other method for obtaining the center of each class is to calculate it directly based on the learned deep features [39]. We also adopt this approach. Instead of pre-setting a center parameter to be learned as Eq. (8), the centers are directly calculated as Eq. (6). In [39], the hetero-center loss was proposed to improve the intra-class cross-modality similarity, penalizing the center distance between two modality distributions, which can be formulated as follows,

| (9) |

Comparing the hetero-center loss (Eq. (9)) to our proposed hetero-center triplet loss (Eq. (7)), the main difference is that only focuses on the intra-class cross-modality compactness (the red arrows in Fig. 3), while our additionally focuses on the inter-class separability for both the intra- and inter-modality (the grey arrows in Fig. 3) with triplet mining. In summary, is only a part of our proposed .

III-C5 The overall loss

Moreover, similar to some state-of-the-art VT Re-ID methods [6, 24, 35, 32, 39], for the sake of feasibility and effectiveness for classification, identification loss is also utilized to integrate the identity-specific information by treating each person as a class. The identification loss with label smooth operation is adopted to prevent overfitting the Re-ID model training. Given an image, we denote as the truth ID label and as the ID prediction logits of the class. The identification loss is calculated as follows,

| (10) | ||||

| (13) |

where is the number of identities in the total training set, and is a constant to encourage the model to be less confident on the training set. In this work, is set to 0.1.

We adopt both the identification loss and hetero-center triplet loss for each part-level feature, while only the hetero-center triplet loss is for the final concatenated global features. Therefore, the final loss is,

| (14) |

where is a predefined tradeoff parameters.

IV Experiments

In this section, we evaluate the effectiveness of our proposed methods for extracting the person features for VT Re-ID tasks on two public datasets, RegDB [15] and SYSU-MM01 [27]. The example images are shown in Fig. 5.

IV-A Experimental settings

IV-A1 Datasets and settings

SYSU-MM01 [27] is a large-scale dataset collected by 6 cameras, including 4 visible and 2 infrared cameras, captured in the SYSU campus. Some cameras are deployed in indoor environments and others are deployed in outdoor environments. The training set contains 395 persons, including 22,258 visible images and 11,909 infrared images. The testing set contains another 96 persons, including 3,803 infrared images for the query and 301 randomly selected visible images as the gallery set. In the all-search mode, the gallery set contains all the visible images captured from all four visible cameras. In indoor-search mode, the gallery set only contains the visible images captured by two indoor visible cameras. Generally, the all-search mode is more challenging than the indoor-search mode. We follow existing methods to perform 10 trials of gallery set selection in the single-shot setting [35, 32], and then report the average retrieval performance. A detailed description of the evaluation protocol can be found in [27].

RegDB [15] was constructed by dual-camera (one visible and one thermal camera) systems, and includes 412 persons. For each person, 10 visible images were captured by a visible camera, and 10 thermal images are obtained by a thermal camera. We follow the evaluation protocol in [31] and [35], where the dataset is randomly split into two halves, one for training and the other for testing. For testing, the images from one modality (default is thermal) were used as the gallery set while those from the other modality (default is visible) were used as the probe set. The procedure is repeated for 10 trials to achieve statistically stable results, recording the mean values.

IV-A2 Evaluation Metrics

Following existing works, cumulative matching characteristics (CMC), mean average precision (mAP) and the mean inverse negative penalty (mINP) are adopted as the evaluation metrics. CMC (rank-r accuracy) measures the probability of a correct cross-modality person image occurring in the top-r retrieved results. mAP measures the retrieval performance when multiple matching images occur in the gallery set. Moreover, mINP considers the hardest correct match that determines the workload of inspectors [33]. Note that all the person features are first normalized for testing.

IV-A3 Implementation details

The implementation111https://github.com/hijune6/Hetero-center-triplet-loss-for-VT-Re-ID of our method is with the Pytorch framework. Following the existing person Re-ID works, the ResNet50 model is adopted as the backbone network for a fair comparison, and the pre-trained ImageNet parameters are adopted for the network initialization. Specifically, the stride of the last convolutional block is changed from 2 to 1 to obtain fine-grained feature maps with large body size. In the training phase, the input images are resized to and padded with 10, then randomly left-right flipped and cropped to for data augmentation. We adopt the stochastic gradient descent (SGD) optimizer for optimization, and the momentum parameter is set to 0.9. We set the initial learning rate as 0.1 for both datasets. The warmup learning rate strategy is applied to bootstrap the network to enhance performance. The learning rate () at epoch is computed as follows,

| (19) |

We set the predefined margin for all triplet losses. For the sampling strategy, we set , for the RegDB dataset, and , for the SYSU-MM01 dataset. For the tradeoff parameter, we set for the RegDB dataset, and for the SYSU-MM01 dataset. The dimension of part-level feature is set to , and the number of part-level stripes is set to .

IV-B Ablation experiments

We evaluate the effectiveness of our proposed method, including three components, two-stream backbone network, part-level feature learning and hetero-center triplet loss.222Note that to simply show the effectiveness of different components, during the ablation experiments, we only reported one trial experimental results, rather than the mean results of 10 trials.

IV-B1 Two-stream backbone network setting

As analyzed in Sec. III-A, the key point of the two-stream backbone network setting is how to split the well-designed CNN model to construct a modality-specific feature extractor with independent parameters and modality-shared feature embedding with shared parameters. Based on the AGW baseline [33] which is designed on top of BagTricks [14], we optionally build the following baseline network with the ResNet50 model. As shown in Fig. 6, the 3D feature maps outputted from the two-stream backbone network are pooled by the generalized-mean pooling (GeM) layer to obtain the 2D feature vector. Then the batch normalization (BN) neck is adopted to train the network, where triplet loss (Eq. (5)) is first utilized on the 2D feature vector, and then the identification loss is sequentially utilized on the batch normalized feature vector.

| splits | r = 1 | r = 5 | r = 10 | r = 20 | mAP | mINP |

|---|---|---|---|---|---|---|

| RegDB | ||||||

| 77.52 | 86.50 | 90.49 | 93.50 | 69.79 | 54.58 | |

| 76.94 | 85.68 | 89.71 | 93.88 | 69.36 | 54.82 | |

| 77.14 | 87.33 | 91.94 | 95.19 | 69.82 | 54.62 | |

| 76.99 | 87.23 | 91.21 | 94.51 | 69.51 | 53.74 | |

| 64.95 | 78.30 | 85.00 | 90.49 | 60.98 | 48.62 | |

| 48.93 | 61.99 | 71.50 | 80.44 | 48.30 | 37.66 | |

| SYSU-MM01 | ||||||

| 54.38 | 80.78 | 88.96 | 95.06 | 52.18 | 38.57 | |

| 54.48 | 80.38 | 88.61 | 94.61 | 52.67 | 39.19 | |

| 57.09 | 81.78 | 88.80 | 94.61 | 54.99 | 41.26 | |

| 52.20 | 78.23 | 86.83 | 93.06 | 51.43 | 39.49 | |

| 45.23 | 71.50 | 79.65 | 88.51 | 45.43 | 33.25 | |

| 37.81 | 69.18 | 79.88 | 87.96 | 39.40 | 27.68 | |

The results of different backbone splits on RegDB and SYSU-MM01 datasets are listed in Table III, from which we can observe that.

-

a)

, without sharing any res-convolutional layers, obtains the worst performance on both the RegDB and SYSU-MM01 datasets, with large margins compared to other splits. only shares the last fully connected layer to process the 1D feature vector without any person spatial structure information. It demonstrates the effectiveness of the 3D feature maps with person spatial structure information to describe a person.

-

b)

, sharing all the backbone networks without the modality-specific feature extractor, obtains good performances on both the RegDB and SYSU-MM01 datasets. equally treats both visible and thermal person images, without focusing additionally on the color information of visible images, focusing on the spatial structure information of a person existing on both visible and thermal images. The results may demonstrate that the person spatial structure information is more important compared to the color information in VT cross-modality Re-ID.

-

c)

On the RegDB dataset, , , and achieve comparable performances. On the SYSU-MM01 dataset, obtains much better Rank1, mAP and mINP results compared to and . The different performances may be from the different settings of the two datasets. RegDB is collected by a dual-camera system, where the visible image and corresponding thermal image are well aligned. SYSU-MM01 is collected by 6 disjoint cameras deployed at different locations, where the visible image and corresponding infrared image have arbitrary poses and views. Therefore, SYSU-MM01 needs more modality-specific layers to extract the person spatial structure compared to RegDB.

-

d)

Overall, can achieve the best performance, which only sets and as the modality-specific feature extractor with acceptable independent parameters.

(a) RegDB

(b) SYSU-MM01

IV-B2 Part-level feature learning

To evaluate the effectiveness of part-level feature learning compared to global feature learning, we add the uniform partitioning strategy between the two-stream backbone network and the loss layer, as shown in Fig. 2. Based on the above experimental results, we adopt the split as the two-stream backbone network, still with the supervision of identification loss and triplet loss.

Three points are considered: 1) the number of partition strips , 2) the generalized-mean pooling (GeM) layer instead of the traditional average pooling layer or max-pooling layer, and 3) the dimension of each part-level feature corresponding to the output channel number of Conv.

The effect of partition strips. The number of partition strips determines the granularity of a person’s local feature. Fig. 7 shows the results of different partition strips on the RegDB and SYSU-MM01 datasets. We observe that is the best setting for partition strips to extract the local person feature.

| RegDB | SYSU-MM01 | |||||

|---|---|---|---|---|---|---|

| Methods | rank1 | mAP | mINP | rank1 | mAP | mINP |

| GeM | 85.10 | 81.40 | 72.13 | 57.90 | 55.10 | 40.29 |

| Mean | 76.75 | 76.08 | 68.36 | 52.09 | 49.92 | 35.88 |

| Max | 84.22 | 79.75 | 69.04 | 56.74 | 54.88 | 40.72 |

The effect of GeM. This subsection verifies the effectiveness of the generalized-mean pooling (GeM) method compared to the traditional average pooling (Mean) and max-pooling (Max) methods. Table IV lists the results of different pooling methods on the RegDB and SYSU-MM01 datasets. We observe that max-pooling performs better than average pooling, while the generalized-mean pooling method performs the best.

The effect of part-level feature dimension. This subsection shows the effect of part-level feature dimension , corresponding to the output channel number of Conv in Fig. 2. The final dimension of the person feature is the product of the part-level feature dimension and the number of partition strips . Table V lists the results of different dimensions of each part-level feature on the RegDB and SYSU-MM01 datasets. We find that on SYSU-MM01 dataset, performs the best, while on the RegDB dataset performs the best under the rank1 and mAP criteria, and achieves the best performance under the mINP criterion. considering both the performance and final person feature dimension, we set for both the RegDB and SYSU-MM01 datasets.

| RegDB | SYSU-MM01 | |||||

|---|---|---|---|---|---|---|

| rank1 | mAP | mINP | rank1 | mAP | mINP | |

| 128 | 82.72 | 79.66 | 69.96 | 55.25 | 53.49 | 39.36 |

| 256 | 85.10 | 81.40 | 72.13 | 57.90 | 55.10 | 40.29 |

| 512 | 86.99 | 82.02 | 71.66 | 57.17 | 53.89 | 38.80 |

IV-B3 Hetero-center based triplet loss

In this subsection, we verify the effectiveness of our proposed hetero-center triplet loss from two aspects. On the one hand, is compared to traditional triplet loss to demonstrate the effectiveness of anchor center to all the other centers compared to anchor to all the other samples. On the other hand, is compared to the learned center loss and hetero-center loss to demonstrate the effectiveness of constraining both the inter-class separability and intra-class compactness.

vs. . We conducted experiments under the framework shown in Fig. 2 with different triplet losses, and , fine-tuning the tradeoff parameter in Eq. (14). The results are listed in Table VI. From the table, we can observe that 1) outperforms on both RegDB and SYSU-MM01 datasets, demonstrating the effectiveness of anchor center to all the other centers compared to anchor to all the other samples. 2) With the final loss Eq. (14), those nonconvergent cases on the SYSU-MM01 dataset may show that the anchor to all the other samples of is truly a strict constraint, demonstrating the effectiveness of the anchor center to all the other centers relaxation operation.

| RegDB | SYSU-MM01 | ||||||

| Loss | rank1 | mAP | mINP | rank1 | mAP | mINP | |

| 0.1 | 80.68 | 75.35 | 64.50 | 55.30 | 54.21 | 41.09 | |

| 0.5 | 82.43 | 78.93 | 69.22 | - | - | - | |

| 1.0 | 85.10 | 81.40 | 72.13 | - | - | - | |

| 1.5 | 72.33 | 69.67 | 60.55 | - | - | - | |

| 0.1 | 80.73 | 75.08 | 64.14 | 57.53 | 54.68 | 40.22 | |

| 0.5 | 87.96 | 81.97 | 71.87 | 60.43 | 56.41 | 40.56 | |

| 1.0 | 85.24 | 81.76 | 72.33 | 61.95 | 57.25 | 40.44 | |

| 1.5 | 90.63 | 83.64 | 71.21 | 57.45 | 53.01 | 36.93 | |

| 2.0 | 92.48 | 84.41 | 71.53 | - | - | - | |

| 3.0 | 88.93 | 78.64 | 62.22 | - | - | - | |

| RegDB | SYSU-MM01 | ||||||

| Network | Loss | rank1 | mAP | mINP | rank1 | mAP | mINP |

| Global-level | 44.76 | 40.60 | 27.52 | 52.67 | 50.48 | 36.84 | |

| 52.96 | 44.52 | 27.05 | 51.30 | 48.12 | 33.73 | ||

| 79.22 | 68.35 | 48.87 | 57.61 | 53.03 | 37.22 | ||

| 67.38 | 64.30 | 54.83 | 46.02 | 47.55 | 36.70 | ||

| Part-level | 85.34 | 80.83 | 70.46 | 47.83 | 46.22 | 32.48 | |

| (ours) | 92.48 | 84.41 | 71.53 | 61.95 | 57.25 | 40.44 | |

vs. and . We conducted experiments with different center-based losses, including the learned center loss , hetero-center loss , and our proposed hetero-center triplet loss . The network is in two frameworks: the baseline network that extracts the global person features (Sec. IV-B1) and the part-level local feature learning network (Sec. IV-B2). The results are listed in Table VII. We can observe that in both networks, outperforms and with large margins. It demonstrates the effectiveness of our proposed concentrating on both the inter-class separability and intra-class compactness, compared to and which only focus on intra-class cross-modality compactness, ignoring the inter-class separability for both the intra- and inter-modality. It is also illustrated in Fig. 4 through visualizing the features extracted by the baseline model with different center-based losses.

IV-B4 Ablation summarization

Moreover, to show the effect of every component, we also summarize the corresponding ablation study in Table VIII, whose results are copied from Tables III, VI and VII. It shows that when the component works alone, the performance on the two datasets is different and is not always improved. However, the combination of three components can greatly boost the performance on both datasets.

| RegDB | SYSU-MM01 | |||||||

|---|---|---|---|---|---|---|---|---|

| rank1 | mAP | mINP | rank1 | mAP | mINP | |||

| 77.52 | 69.79 | 54.58 | 54.38 | 52.18 | 38.57 | |||

| 77.14 | 69.82 | 54.62 | 57.09 | 54.99 | 41.26 | |||

| 79.22 | 68.35 | 48.87 | 57.61 | 53.03 | 37.22 | |||

| 85.10 | 81.40 | 72.13 | 55.30 | 54.21 | 41.09 | |||

| 92.48 | 84.41 | 71.53 | 61.95 | 57.25 | 40.44 | |||

| Methods | Venue | r = 1 | r = 10 | r = 20 | mAP | mINP |

|---|---|---|---|---|---|---|

| Visible Thermal | ||||||

| Zero-Pad [27] | ICCV17 | 17.75 | 34.21 | 44.35 | 18.90 | - |

| HCML [31] | AAAI18 | 24.44 | 47.53 | 56.78 | 20.80 | - |

| HSME [6] | AAAI19 | 50.85 | 73.36 | 81.66 | 47.00 | - |

| D2RL [24] | CVPR19 | 43.40 | 66.10 | 76.30 | 44.10 | - |

| MAC [30] | MM19 | 36.43 | 62.36 | 71.63 | 37.03 | - |

| AliGAN [20] | ICCV19 | 57.90 | - | - | 53.60 | - |

| DFE [5] | MM19 | 70.13 | 86.32 | 91.96 | 69.14 | - |

| eBDTR [33] | TIFS20 | 34.62 | 58.96 | 68.72 | 33.46 | - |

| MSR [4] | TIP20 | 48.43 | 70.32 | 79.95 | 48.67 | - |

| JSIA [21] | AAAI20 | 48.50 | - | - | 48.90 | - |

| EDFL [12] | Neuro20 | 52.58 | 72.10 | 81.47 | 52.98 | - |

| XIV [11] | AAAI20 | 62.21 | 83.13 | 91.72 | 60.18 | - |

| CDP [3] | Arxiv20 | 65.00 | 83.50 | 89.60 | 62.70 | - |

| expAT [29] | Arxiv20 | 66.48 | - | - | 67.31 | - |

| CMSP [26] | IJCV20 | 65.07 | 83.71 | - | 64.50 | - |

| Hi-CMD [1] | CVPR20 | 70.93 | 86.39 | - | 66.04 | - |

| HAT [34] | TIFS20 | 71.83 | 87.16 | 92.16 | 67.56 | - |

| cmSSFT [13] | CVPR20 | 72.30 | - | - | 72.90 | - |

| MPMN [23] | TMM20 | 86.56 | 96.68 | 98.28 | 82.91 | - |

| AGW [33] | Arxiv20 | 70.05 | - | - | 66.37 | 50.19 |

| ours | - | 91.05 | 97.16 | 98.57 | 83.28 | 68.84 |

| Thermal Visible | ||||||

| Zero-Pad [27] | ICCV17 | 16.63 | 34.68 | 44.25 | 17.82 | - |

| HCML[31] | AAAI18 | 21.70 | 45.02 | 55.58 | 22.24 | - |

| eBDTR [33] | TIFS20 | 34.21 | 58.74 | 68.64 | 32.49 | - |

| MAC [30] | MM19 | 36.20 | 61.68 | 70.99 | 39.23 | - |

| HSME [6] | AAAI19 | 50.15 | 72.40 | 81.07 | 46.16 | - |

| EDFL [12] | Neuro20 | 51.89 | 72.09 | 81.04 | 52.13 | - |

| AliGAN [20] | ICCV19 | 56.30 | - | - | 53.40 | - |

| expAT [29] | Arxiv20 | 67.45 | - | - | 66.51 | - |

| MPMN [23] | TMM20 | 84.62 | 95.51 | 97.33 | 79.49 | - |

| ours | - | 89.30 | 96.41 | 98.16 | 81.46 | 64.81 |

| All search | Indoor search | ||||||||||

| Methods | Venue | r = 1 | r = 10 | r = 20 | mAP | mINP | r = 1 | r = 10 | r = 20 | mAP | mINP |

| Zero-Pad [27] | ICCV17 | 14.80 | 54.12 | 71.33 | 15.95 | - | 20.58 | 68.38 | 85.79 | 26.92 | - |

| cmGAN [2] | IJCAI18 | 26.97 | 67.51 | 80.56 | 27.80 | - | 31.63 | 77.23 | 89.18 | 42.19 | - |

| HCML [31] | AAAI18 | 14.32 | 53.16 | 69.17 | 16.16 | - | 24.52 | 73.25 | 86.73 | 30.08 | - |

| HSME [6] | AAAI19 | 20.68 | 62.74 | 77.95 | 23.12 | - | - | - | - | - | - |

| D2RL [24] | CVPR19 | 28.90 | 70.60 | 82.40 | 29.20 | - | - | - | - | - | - |

| MAC [30] | MM19 | 33.26 | 79.04 | 90.09 | 36.22 | - | 36.43 | 62.36 | 71.63 | 37.03 | - |

| AliGAN [20] | ICCV19 | 42.40 | 85.00 | 93.70 | 40.70 | - | 45.90 | 87.60 | 94.40 | 54.30 | - |

| HPILN [38] | TIP19 | 41.36 | 84.78 | 94.51 | 42.95 | - | 45.77 | 91.82 | 98.46 | 56.52 | - |

| DFE [5] | MM19 | 48.71 | 88.86 | 95.27 | 48.59 | - | 52.25 | 89.86 | 95.85 | 59.68 | - |

| Hi-CMD [1] | CVPR20 | 34.94 | 77.58 | - | 35.94 | - | - | - | - | - | - |

| EDFL [12] | Neuro20 | 36.94 | 85.42 | 93.22 | 40.77 | - | - | - | - | - | - |

| CDP [3] | Arxiv20 | 38.00 | 82.30 | 91.70 | 38.40 | - | - | - | - | - | - |

| expAT [29] | Arxiv20 | 38.57 | 76.64 | 86.39 | 38.61 | - | - | - | - | - | - |

| XIV [11] | AAAI20 | 49.92 | 89.79 | 95.96 | 50.73 | - | - | - | - | - | - |

| eBDTR [33] | TIFS20 | 27.82 | 67.34 | 81.34 | 28.42 | - | 32.46 | 77.42 | 89.62 | 42.46 | - |

| MSR [4] | TIP20 | 37.35 | 83.40 | 93.34 | 38.11 | - | 39.64 | 89.29 | 97.66 | 50.88 | - |

| JSIA [21] | AAAI20 | 38.10 | 80.70 | 89.90 | 36.90 | - | 43.80 | 86.20 | 94.20 | 52.90 | - |

| CMSP [26] | IJCV20 | 43.56 | 86.25 | - | 44.98 | - | 48.62 | 89.50 | - | 57.50 | - |

| Attri [36] | JEI20 | 47.14 | 87.93 | 94.45 | 47.08 | - | 48.03 | 88.13 | 95.14 | 56.84 | - |

| HAT [34] | TIFS20 | 55.29 | 92.14 | 97.36 | 53.89 | - | 62.10 | 95.75 | 99.20 | 69.37 | - |

| HC [39] | Neuro20 | 56.96 | 91.50 | 96.82 | 54.95 | - | 59.74 | 92.07 | 96.22 | 64.91 | - |

| AGW [33] | Arxiv20 | 47.50 | - | - | 47.65 | 35.30 | 54.17 | - | - | 62.97 | 59.23 |

| ours | - | 61.68 | 93.10 | 97.17 | 57.51 | 39.54 | 63.41 | 91.69 | 95.28 | 68.17 | 64.26 |

IV-C Comparison to the state-of-the-art

This section compares the state-of-the-art VT Re-ID methods. The results on the RegDB and SYSU-MM01 datasets are listed in Tables IX and X, respectively. 333Note that in this subsection, we reported the mean results of 10 trials following the standard dataset settings.

The experiments on the RegDB dataset (Table IX) demonstrate that our proposed method obtains the best performance in both query settings, always by large margins. We set a new baseline for this dataset, achieving superior performance rank1/mAP/mINP 91.05%/83.28%/68.84% for visible thermal query setting. The experiments suggest that our proposed method can learn better cross-modality sharing features by well designing the two-stream parameter-sharing network, learning the part-level local person features, and computing the triplet loss on heterogeneous centers from different modalities.

The experiments on the SYSU-MM01 dataset (Table X) show that our proposed method can achieve comparable performance compared to the current state-of-the-art results obtained by HAT [34], and outperforms all the other comparison methods. However, in the more challenging mode all-search, our method performs much better than HAT [34] in the two key criteria rank1/mAP, 61.68%/57.51% vs. 55.29%/53.89%.

Compared to AliGAN [20], D2RL [24]and Hi-CMD [1], our method achieves much better performance on both datasets, and does not need the sophisticated cross-modality image translation operation. Our method also does not require complicated adversarial learning with many tricks, which is always difficult for training.

V Conclusions

This paper aims to enhance the discriminative person feature learning through simple means for VT Re-ID. On the one hand, we explore the parameter-sharing settings in the two-stream network. The experimental results show that the modality-sharing feature embedding network with some convolution blocks is an effective strategy, that could process the 3D shape feature maps with the spatial structure of a person. On the other hand, we also propose the hetero-center triplet loss to improve the traditional triplet loss for VT Re-ID by replacing the comparison of the anchor to all the other samples with the anchor center to all the other centers. With part-level person feature learning, hetero-center triplet loss performs much better than traditional triplet loss. The experimental results with remarkable improvements on two VT Re-ID datasets demonstrate the effectiveness of our proposed method compared to the current state-of-the-art methods. Our method with a simple but effective strategy can be a strong VT Re-ID baseline to boost future research with high quality.

References

- [1] S. Choi, S. Lee, Y. Kim, T. Kim, and C. Kim, “Hi-cmd: Hierarchical cross-modality disentanglement for visible-infrared person re-identification,” in CVPR, 2020, pp. 10 257–10 266.

- [2] P. Dai, R. Ji, H. Wang, Q. Wu, and Y. Huang, “Cross-modality person re-identification with generative adversarial training.” in IJCAI, 2018, pp. 677–683.

- [3] X. Fan, H. Luo, C. Zhang, and W. Jiang, “Cross-spectrum dual-subspace pairing for rgb-infrared cross-modality person re-identification,” arXiv preprint arXiv:2003.00213, 2020.

- [4] Z. Feng, J. Lai, and X. Xie, “Learning modality-specific representations for visible-infrared person re-identification,” IEEE TIP, vol. 29, pp. 579–590, 2020.

- [5] Y. Hao, N. Wang, X. Gao, J. Li, and X. yu Wang, “Dual-alignment feature embedding for cross-modality person re-identification,” in ACM MM, 2019.

- [6] Y. Hao, N. Wang, J. Li, and X. Gao, “Hsme: Hypersphere manifold embedding for visible thermal person re-identification,” in AAAI, 2019, pp. 8385–8392.

- [7] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in CVPR, 2016, pp. 770–778.

- [8] A. Hermans, L. Beyer, and B. Leibe, “In defense of the triplet loss for person re-identification,” arXiv preprint arXiv:1703.07737, 2017.

- [9] J. K. Kang, T. M. Hoang, and K. R. Park, “Person re-identification between visible and thermal camera images based on deep residual cnn using single input,” IEEE Access, vol. 7, pp. 57 972–57 984, 2019.

- [10] V. V. Kniaz, V. A. Knyaz, J. Hladuvka, W. G. Kropatsch, and V. Mizginov, “Thermalgan: Multimodal color-to-thermal image translation for person re-identification in multispectral dataset,” in ECCV Workshops, 2018.

- [11] D.-G. Li, X. Wei, X. Hong, and Y. Gong, “Infrared-visible cross-modal person re-identification with an x modality,” in AAAI, 2020.

- [12] H. Liu, J. Cheng, W. Wang, Y. Su, and H. Bai, “Enhancing the discriminative feature learning for visible-thermal cross-modality person re-identification,” Neurocomputing, vol. 398, pp. 11–19, 2020.

- [13] Y. Lu, Y. Wu, B. Liu, T. Zhang, B. Li, Q. Chu, and N. Yu, “Cross-modality person re-identification with shared-specific feature transfer,” in CVPR, 2020, pp. 13 379–13 389.

- [14] H. Luo, W. Jiang, Y. Gu, F. Liu, X. Liao, S. Lai, and J. Gu, “A strong baseline and batch normalization neck for deep person re-identification,” IEEE TMM, pp. 1–1, 2019.

- [15] D. Nguyen, H. Hong, K. Kim, and K. Park, “Person recognition system based on a combination of body images from visible light and thermal cameras,” Sensors, vol. 17, no. 3, p. 605, 2017.

- [16] F. Radenović, G. Tolias, and O. Chum, “Fine-tuning cnn image retrieval with no human annotation,” IEEE TPAMI, vol. 41, no. 7, pp. 1655–1668, 2018.

- [17] F. Schroff, D. Kalenichenko, and J. Philbin, “Facenet: A unified embedding for face recognition and clustering,” in CVPR, 2015, pp. 815–823.

- [18] Y. Sun, L. Zheng, Y. Yang, Q. Tian, and S. Wang, “Beyond part models: Person retrieval with refined part pooling (and a strong convolutional baseline),” in ECCV, 2018, pp. 501–518.

- [19] C. Wan, Y. Wu, X. Tian, J. Huang, and X. Hua, “Concentrated local part discovery with fine-grained part representation for person re-identification,” IEEE TMM, vol. 22, no. 6, pp. 1605–1618, 2020.

- [20] G. Wang, T. Zhang, J. Cheng, S. Liu, Y. Yang, and Z. Hou, “Rgb-infrared cross-modality person re-identification via joint pixel and feature alignment,” in ICCV, 2019, pp. 3623–3632.

- [21] G. Wang, T. Zhang, Y. Yang, J. Cheng, J. Chang, X. Liang, and Z. Hou, “Cross-modality paired-images generation for rgb-infrared person re-identification,” in AAAI, 2020.

- [22] G. Wang, Y. Yuan, X. Chen, J. Li, and X. Zhou, “Learning discriminative features with multiple granularities for person re-identification,” in ACM MM, 2018, pp. 274–282.

- [23] P. Wang, Z. Zhao, F. Su, Y. Zhao, H. Wang, L. Yang, and Y. Li, “Deep multi-patch matching network for visible thermal person re-identification,” IEEE TMM, pp. 1–1, 2020.

- [24] Z. Wang, Z. Wang, Y. Zheng, Y.-Y. Chuang, and S. Satoh, “Learning to reduce dual-level discrepancy for infrared-visible person re-identification,” in CVPR, 2019, pp. 618–626.

- [25] Y. Wen, K. Zhang, Z. Li, and Y. Qiao, “A discriminative feature learning approach for deep face recognition,” in ECCV, 2016.

- [26] A. Wu, W.-S. Zheng, S. Gong, and J. Lai, “Rgb-ir person re-identification by cross-modality similarity preservation,” IJCV, vol. 128, pp. 1765–1785, 2020.

- [27] A. Wu, W.-S. Zheng, H.-X. Yu, S. Gong, and J. Lai, “Rgb-infrared cross-modality person re-identification,” in ICCV, 2017, pp. 5380–5389.

- [28] F. Yang, K. Yan, S. Lu, H. Jia, D. Xie, Z. Yu, X. Guo, F. Huang, and W. Gao, “Part-aware progressive unsupervised domain adaptation for person re-identification,” IEEE TMM, pp. 1–1, 2020.

- [29] H. Ye, H.-C. Liu, F. Meng, and X. Li, “Bi-directional exponential angular triplet loss for rgb-infrared person re-identification,” arXiv preprint arXiv:2006.00878, 2020.

- [30] M. Ye, X. Lan, and Q. Leng, “Modality-aware collaborative learning for visible thermal person re-identification,” in ACM MM, 2019.

- [31] M. Ye, X. Lan, J. Li, and P. C. Yuen, “Hierarchical discriminative learning for visible thermal person re-identification,” in AAAI, 2018.

- [32] M. Ye, X. Lan, Z. Wang, and P. C. Yuen, “Bi-directional center-constrained top-ranking for visible thermal person re-identification,” IEEE TIFS, vol. 15, pp. 407–419, 2020.

- [33] M. Ye, J. Shen, G. jie Lin, T. Xiang, L. Shao, and S. C. H. Hoi, “Deep learning for person re-identification: A survey and outlook,” arXiv preprint arXiv:2001.04193, 2020.

- [34] M. Ye, J. Shen, and L. Shao, “Visible-infrared person re-identification via homogeneous augmented tri-modal learning,” IEEE TIFS, 2020.

- [35] M. Ye, Z. Wang, X. Lan, and P. C. Yuen, “Visible thermal person re-identification via dual-constrained top-ranking.” in IJCAI, 2018, pp. 1092–1099.

- [36] S. Zhang, C. Chen, W. Song, and Z. Gan, “Deep feature learning with attributes for cross-modality person re-identification,” Journal of Electronic Imaging, vol. 29, no. 3, 2020.

- [37] S. Zhang, Y. Yang, P. Wang, X. Zhang, and Y. Zhang, “Attend to the difference: Cross-modality person re-identification via contrastive correlation,” arXiv preprint arXiv:1910.11656, 2019.

- [38] Y.-B. Zhao, J.-W. Lin, Q. Xuan, and X. Xi, “Hpiln: a feature learning framework for cross-modality person re-identification,” IET Image Processing, vol. 13, no. 14, pp. 2897–2904, 2019.

- [39] Y. Zhu, Z. Yang, L. Wang, S. Zhao, X. Hu, and D. Tao, “Hetero-center loss for cross-modality person re-identification,” Neurocomputing, vol. 386, pp. 97–109, 2019.