Parallel Longest Increasing Subsequence and

van Emde Boas Trees

Abstract.

This paper studies parallel algorithms for the longest increasing subsequence (LIS) problem. Let be the input size and be the LIS length of the input. Sequentially, LIS is a simple problem that can be solved using dynamic programming (DP) in work. However, parallelizing LIS is a long-standing challenge. We are unaware of any parallel LIS algorithm that has optimal work and non-trivial parallelism (i.e., or span).

This paper proposes a parallel LIS algorithm that costs work, span, and space, and is much simpler than the previous parallel LIS algorithms. We also generalize the algorithm to a weighted version of LIS, which maximizes the weighted sum for all objects in an increasing subsequence. To achieve a better work bound for the weighted LIS algorithm, we designed parallel algorithms for the van Emde Boas (vEB) tree, which has the same structure as the sequential vEB tree, and supports work-efficient parallel batch insertion, deletion, and range queries.

We also implemented our parallel LIS algorithms. Our implementation is light-weighted, efficient, and scalable. On input size , our LIS algorithm outperforms a highly-optimized sequential algorithm (with cost) on inputs with . Our algorithm is also much faster than the best existing parallel implementation by Shen et al. (2022) on all input instances.

1. Introduction

This paper studies parallel algorithms for classic and weighted longest increasing subsequence problems (LIS and WLIS, see definitions below). We propose a work-efficient parallel LIS algorithm with span, where is the LIS length of the input. Our WLIS algorithm is based on a new data structure that parallelizes the famous van Emde Boas (vEB) tree (van Emde Boas, 1977). Our new algorithms improve existing theoretical bounds on the parallel LIS and WLIS problem, as well as enable simpler and more efficient implementations. Our parallel vEB tree supports work-efficient batch insertion, deletion and range query with polylogarithmic span.

Given a sequence and a comparison function on the objects in , the LIS of is the longest subsequence (not necessarily contiguous) in that is strictly increasing (based on the comparison function). In this paper, we use LIS to refer to both the longest increasing subsequence of a sequence, and the problem of finding such an LIS. LIS is one of the most fundamental primitives and has extensive applications (e.g., (Delcher et al., 1999; Gusfield, 1997; Crochemore and Porat, 2010; Schensted, 1961; O’Donnell and Wright, [n. d.]; Zhang, 2003; Altschul et al., 1990; Deift, 2000)). In this paper, we use to denote the input size and to denote the LIS length of the input. LIS can be solved by dynamic programming (DP) using the following DP recurrence (more details in Sec. 2).

| (1) |

Sequentially, LIS is a straightforward textbook problem (Dasgupta et al., 2008; Goodrich and Tamassia, 2015). We can iteratively compute using a search structure to find , which gives work. However, in parallel, LIS becomes challenging both in theory and in practice. In theory, we are unaware of parallel LIS algorithms with work and non-trivial parallelism ( or span). In practice, we are unaware of parallel LIS implementations that outperform the sequential algorithm on general input distributions. We propose new LIS algorithms with improved work and span bounds in theory, which also lead to a more practical parallel LIS implementation.

Our work follows some recent research (Blelloch et al., 2012b, a; Fischer and Noever, 2018; Hasenplaugh et al., 2014; Pan et al., 2015; Shun et al., 2015; Blelloch et al., 2020c, 2018, d; Gu et al., 2022b; Shen et al., 2022; Blelloch et al., 2020b) that directly parallelizes sequential iterative algorithms. Such algorithms are usually simple and practical, given their connections to sequential algorithms. To achieve parallelism in a “sequential” algorithm, the key is to identify the dependences (Shun et al., 2015; Blelloch et al., 2020c, d; Shen et al., 2022) among the objects. In the DP recurrence of LIS, processing an object depends on all objects before it, but does not need to wait for objects before it with a larger or equal value.

An “ideal” parallel algorithm should process all objects in a proper order based on the dependencies—it should 1) process as many objects as possible in parallel (as long as they do not depend on each other), and 2) process an object only when it is ready (all objects it depends on are finished) to avoid redundant work. More formally, we say an algorithm is round-efficient (Shen et al., 2022) if its span is for a computation with the longest logical dependence length . In LIS, the logical dependence length given by the DP recurrence is the LIS length . We say an algorithm is work-efficient if its work is asymptotically the same as the best sequential algorithm. Work-efficiency is crucial in practice, since nowadays, the number of processors on one machine (tens to hundreds) is much smaller than the problem size. A parallel algorithm is less practical if it significantly blows up the work of a sequential algorithm.

Unfortunately, there exists no parallel LIS algorithm with both work-efficiency and round-efficiency. Most existing parallel LIS algorithms are not work-efficient (Galil and Park, 1994; Krusche and Tiskin, 2009; Semé, 2006; Thierry et al., 2001; Nakashima and Fujiwara, 2002, 2006; Krusche and Tiskin, 2010; Shen et al., 2022), or have span (Alam and Rahman, 2013). We review more related work in Sec. 7.

Our algorithm is based on the parallel LIS algorithm and the phase-parallel framework by Shen et al. (Shen et al., 2022). We refer to it as the SWGS algorithm, and review it in Sec. 2. The phase-parallel framework defines a rank for each input object as the length of LIS ending at it (the value in Eq. 1). Note that an object only depends on lower-rank objects. Hence, the phase-parallel LIS algorithm processes all objects based on the increasing order of ranks. However, the SWGS algorithm takes work whp, span, and space, and is quite complicated. In the experiments, the overhead in work and space limits the performance. On a 96-core machine and input size of , SWGS becomes slower than a sequential algorithm when the LIS length .

In this paper, we propose a parallel LIS algorithm that is work-efficient ( work), round-efficient ( span) and space-efficient ( space), and is much simpler than previous parallel LIS algorithms (Shen et al., 2022; Krusche and Tiskin, 2010). Our result is summarized in Thm. 1.1.

Theorem 1.1 (LIS).

Given a sequence of size and LIS length , the longest increasing subsequence (LIS) of can be computed in parallel with work, span, and space.

We also extend our algorithm to the weighted LIS (WLIS) problem, which has a similar DP recurrence as LIS but maximizes the weighted sum instead of the number of objects in an increasing subsequence.

| (2) |

where is the weight of the -th input object. We summarize our result in Thm. 1.2.

Theorem 1.2 (WLIS).

Given a sequence of size and LIS length , the weighted LIS of can be computed using work, span, and space.

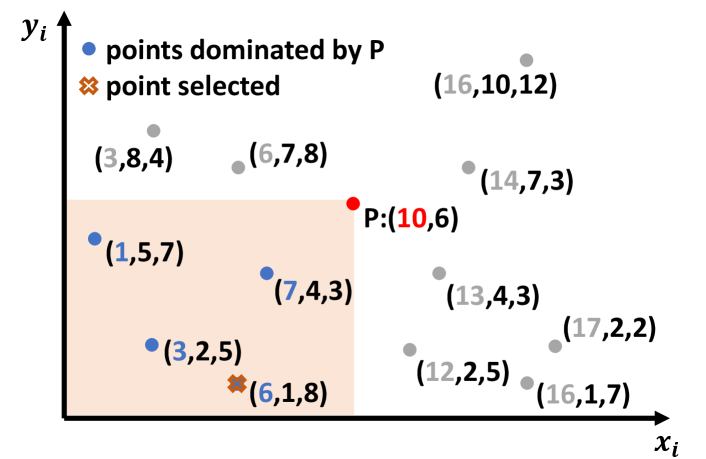

Our primary techniques to support both LIS and WLIS rely on better data structures for 1D or 2D prefix min/max queries in the phase-parallel framework. For the LIS problem, our algorithm efficiently identifies all objects with a certain rank using a parallel tournament tree that supports 1D dynamic prefix-min queries, i.e., given an array of values, find the minimum value for each prefix of the array. For WLIS, we design efficient data structures for 2D dynamic “prefix-max” queries, which we refer to as dominant-max queries (see more details in Sec. 4). Given a set of 2D points associated with values, which we refer to as their scores, a dominant-max query returns the largest score to the bottom-left of a query point. Using dominant-max queries, given an object in WLIS, we can find the maximum value among all objects that depends on. We propose two solutions focusing on theoretical and practical efficiency, respectively. In practice, we use a parallel range tree similar to that in SWGS, which results in work and span for WLIS. In theory, we parallelize the van Emde Boas (vEB) tree (van Emde Boas, 1977) and integrate it into range trees to achieve a better work bound for WLIS.

The van Emde Boas (vEB) tree (van Emde Boas, 1977) is a famous data structure for priority queues and ordered sets on integer keys, and is introduced in many textbooks (e.g., (Cormen et al., 2009)). To the best of our knowledge, our algorithm is the first parallel version of vEB trees. We believe our algorithm is of independent interest in addition to the application in WLIS. We note that it is highly non-trivial to redesign and parallelize vEB trees because the classic vEB tree interface and algorithms are inherently sequential. Our parallel vEB tree supports a general ordered set abstract data type on integer keys in with bounds stated below. We present more details in Sec. 5.

Theorem 1.3 (Parallel vEB Tree).

Let be a universe of all integers in range . Given a set of integer keys from , there exists a data structure that has the same organization as the sequential vEB tree, and supports:

-

•

single-point insertion, deletion, lookup, reporting the minimum (maximum) key, and reporting the predecessor and successor of an element, all in work, using the same algorithms for sequential vEB trees;

-

•

BatchInsert and BatchDelete that insert and delete a sorted batch in the vEB tree with work and span;

-

•

Range that reports all keys in range in work and span, where is the output size.

Our LIS algorithm and the WLIS algorithm based on range trees are simple to program, and we expect them to be the algorithms of choice in implementations in the parallel setting. We tested our algorithms on a 96-core machine. Our implementation is light-weighted, efficient and scalable. Our LIS algorithm outperforms SWGS in all tests, and is faster than highly-optimized sequential algorithms (Knuth, 1973) on reasonable LIS lengths (e.g., up to for ). To the best of our knowledge, this is the first parallel LIS implementation that can outperform the efficient sequential algorithm in a large input parameter space. On WLIS, our algorithm is up to 2.5 faster than SWGS and 7 faster than the sequential algorithm for small values. We believe the performance is enabled by the simplicity and theoretical-efficiency of our new algorithms.

We note that there exist parallel LIS algorithms (Krusche and Tiskin, 2010; Cao et al., 2023) with better worst-case span bounds than our results in theory. We highlight the simplicity, practicality, and work-efficiency of our algorithms. We also highlight our contributions on parallel vEB trees and the extension to the WLIS problem. We believe this paper has mixed contributions of both theory and practice, summarized as follows.

Theory: 1) Our LIS and WLIS algorithms improve the existing bounds. Our LIS algorithm is the first work- and space-efficient parallel algorithm with non-trivial parallelism ( span). 2) We design the first parallel version of vEB trees, which supports work-efficient batch-insertion, batch-deletion and range queries with polylogarithmic span.

Practice: Our LIS and WLIS algorithms are highly practical and simple to program. Our implementations outperform the state-of-the-art parallel implementation SWGS on all tests, due to better work and span bounds. We plan to release our code.

2. Preliminaries

Notation and Computational Model. We use with high probability (whp) (in ) to mean with probability at least for . means . We use as a short form for . For an array or sequence , we use and interchangeably as the -th object in , and use or to denote the -th to the -th objects .

We use the work-span model in the classic multithreaded model with binary-forking (Blumofe and Leiserson, 1998; Arora et al., 2001; Blelloch et al., 2020b). We assume a set of threads that share the memory. Each thread acts like a sequential RAM plus a fork instruction that forks two child threads running in parallel. When both child threads finish, the parent thread continues. A parallel-for is simulated by fork for a logarithmic number of steps. A computation can be viewed as a DAG (directed acyclic graph). The work of a parallel algorithm is the total number of operations in this DAG, and the span (depth) is the longest path in the DAG. An algorithm is work-efficient if its work is asymptotically the same as the best sequential algorithm. The randomized work-stealing scheduler can execute such a computation in time whp in on processor cores (Blumofe and Leiserson, 1998; Arora et al., 2001; Gu et al., 2022a). Our algorithms can also be analyzed on PRAM and have the same work and span bounds.

Longest Increasing Subsequence (LIS). Given a sequence of input objects and a comparison function on objects in , is a subsequence of if , where . The longest increasing subsequence (LIS) of is the longest subsequence of where . Throughout the paper, we use to denote the input size, and to denote the LIS length of the input.

LIS can be solved using dynamic programming (DP) with the DP recurrence in Eq. 1. Here (called the value of object ) is the LIS length of ending with .

The LIS problem generalizes to the weighted LIS (WLIS) problem with DP recurrence in Eq. 2. Sequentially, both LIS and weighted LIS can be solved in work. This is also the lower bound (Fredman, 1975) w.r.t. the number of comparisons. For (unweighted) LIS, there exists an sequential algorithm (Knuth, 1973). When the input sequence only contains integers in range , one can compute the LIS in work using a vEB tree. In our work, we assume general input and only use comparisons between input objects. Note that although we use vEB trees in WLIS, we will only use it to organize the indexes of the input sequence (see details in Sec. 5). Therefore, our algorithm is still comparison-based and work on any input type.

Dependence Graph (Shun et al., 2015; Blelloch et al., 2020c, d; Shen et al., 2022). In a sequential iterative algorithm, we can analyze the logical dependences between iterations (objects) to achieve parallelism. Such dependences can be represented in a DAG, called a dependence graph (DG). In a DG, each vertex is an object in the algorithm. An edge from to means that can be processed only when has been finished. We say depends on in this case. Fig. 2 illustrates the dependences in LIS. We say an object is ready when all its predecessors have finished. When executing a DG with depth , we say an algorithm is round-efficient if its span is . In LIS, the dependence depth given by the DP recurrence is the LIS length . We note that round-efficiency does not guarantee optimal span, since round-efficiency is with respect to a given DG. One can design a different algorithm with a shallower DG and get a better span.

Phase-Parallel Algorithms and SWGS Algorithm (Shen et al., 2022). The high-level idea of the phase-parallel algorithm is to assign each object a rank, denoted as , indicating the earliest phase when the object can be processed. In LIS, the rank of each object is the length of the LIS ending with it (the value computed by Eq. 1). We also define the rank of a sequence as the LIS length of . An object only depends on other objects with lower ranks. The phase-parallel LIS algorithm (Shen et al., 2022) processes all objects with rank (in parallel) in round . We call the objects processed in round the frontier of this round. An LIS example is given in Fig. 2.

The SWGS algorithm uses a wake-up scheme, where each object can be processed times whp. It also uses a range tree to find the frontiers both in LIS and WLIS. In total, this gives work whp, span, and space. Our algorithm is also based on the phase-parallel framework but avoids the wake-up scheme to achieve better bounds and performance.

3. Longest Increasing Subsequence

We start with the (unweighted) LIS problem. Our algorithm is also based on the phase-parallel framework (Shen et al., 2022) but uses a much simpler idea to make it work-efficient. The work overhead in the SWGS algorithm comes from two aspects: range queries on a range tree and the wake-up scheme. The space overhead comes from the range tree. Therefore, we want to 1) use a more efficient (and simpler) data structure than the range tree to reduce both work and space, and 2) wake up and process an object only when it is ready to avoid the wake-up scheme.

Our algorithm is based on a simple observation in Lemma 3.2 and the concept of prefix-min objects (Definition 3.1). Recall that the rank of an object is exactly its value, which is the length of LIS ending at .

Definition 3.0 (Prefix-min Objects).

Given a sequence , we say is a prefix-min object if for all , we have , i.e., is (one of) the smallest object among .

Lemma 3.0.

In a sequence , an object has rank iff. is a prefix-min object. An object has rank iff. is a prefix-min object after removing all objects with ranks smaller than .

We use Fig. 3 to illustrate the intuition of Lemma 3.2, and prove it in Sec. B.1. Based on Lemma 3.2, we can design an efficient yet simple phase-parallel algorithm for LIS (Alg. 1). For simplicity, we first focus on computing the values (ranks) of all input objects. We show how to output a specific LIS for the input sequence in Appendix A. The main loop of Alg. 1 is in Lines 1–1. In round , we identify the frontier as all the prefix-min objects and set their values to . We then remove the objects in and repeat. Fig. 3 illustrates Alg. 1 by showing the “prefix-min” value for each object, which is the smallest value up to each object. Note that this sequence is not maintained in our algorithm but is just used for illustration. In each round, we find and remove all objects with . Then we update the prefix-min values , and repeat. In round , all identified prefix-min objects have rank .

To achieve work-efficiency, we cannot re-compute the prefix-min values of the entire sequence after each round. Our approach is to design a parallel tournament tree to help identify the frontiers. Next, we briefly overview the tournament tree and then describe how to use it to find the prefix-min objects efficiently.

Tournament tree. A tournament tree on records is a complete binary tree with nodes (see Fig. 4). It can be represented implicitly as an array . The last elements are the leaves, where stores the -th record in the dataset. The first elements are internal nodes, each storing the minimum value of its two children. The left and right children of are and , respectively. We will use the following theorem about the tournament tree.

Theorem 3.3.

A tournament tree can be constructed by recursively constructing the left and right trees in parallel, and updating the root value.

Using Tournament Tree for LIS. We use a tournament tree to efficiently identify the frontier and dynamically remove objects (see Alg. 1). stores all input objects in the leaves. We always round up the number of leaves to a power of 2 to make it a full binary tree. Each internal node stores the minimum value in its subtree. When we traverse the tree at , if the smallest object to its left is smaller than , we can skip the entire subtree. Using the internal nodes, we can maintain the minimum value before any subtree and skip irrelevant subtrees to save work.

In particular, the function ProcessFrontier finds all prefix-min objects from by calling PrefixMin starting at the root. PrefixMin traverses the subtree at node , and finds all leaves in this subtree s.t. 1) is no more than any leaf before in this subtree, and 2) is no more than . The argument records the smallest value in before the subtree at . If the smallest value in subtree is larger than , we can skip the entire subtree (Alg. 1), because no object in this subtree can be a prefix-min object (they are all larger than ). Otherwise, there are two cases. The first case is when is a leaf (Lines 1–1). Since , it must be a prefix-min object. Therefore, we set its value as the current round number (Alg. 1) and remove it by setting its value as (Alg. 1). In second case, when is an internal node (Lines 1–1), we can recurse on both subtrees in parallel to find the desired objects (Alg. 1). For the left subtree, we directly use the current value. For the right subtree, we need to further consider the minimum value in the left subtree. Therefore, we take the minimum of the current and the smallest value in the left subtree (), and set it as the value of the right recursive call. After the recursive calls return, we update (Alg. 1) because some values in the subtree may have been removed (set to ). We present an example in Fig. 4, which illustrates finding the first frontier for the input in Fig. 3.

Theorem 3.4.

Alg. 1 computes the LIS of the input sequence in work and span, where is the length of the input sequence , and is the LIS length of .

Proof.

Constructing takes work and span. We then focus on the main loop (Lines 1–1) of the algorithm. The algorithm runs in rounds. In each round, ProcessFrontier recurses for steps. Hence, the algorithm has span.

Next we show that the work of ProcessFrontier in round is work, where is the number of prefix-min objects identified in this round. First, note that visiting a tournament tree node has a constant cost, so the work is asymptotically the number of nodes visited in the algorithm. We say a node is relevant if at least one object in its subtree is in the frontier. Based on Thm. 3.3, there are relevant nodes.

If Alg. 1 is executed (i.e., Alg. 1 does not return), the smallest object in this subtree is no more than and must be a prefix-min object, and this node is relevant. Other nodes are also visited but skipped by Alg. 1. Executing Alg. 1 for subtree means that ’s parent executed Alg. 1, so ’s parent is relevant. This indicates that a node is visited either because it is relevant, or its parent is relevant. Since every node has at most two children, the number of visited nodes is asymptotically the same as all relevant nodes. which is . Hence, the total number of visited nodes is:

The last step uses the concavity of the function . This proves the work bound of the algorithm. ∎

4. Weighted Longest Increasing Subsequence

A nice property of the unweighted LIS problem is that the value is the same as its rank. In round , we simply set the values of all objects in the frontier as . This is not true for the weighted LIS (WLIS) problem, and we need additional techniques to handle the weights. Inspired by SWGS, our WLIS algorithm is built on an efficient data structure supporting 2D dominant-max queries: for a set of 2D points each with a score , the dominant-max query asks for the maximum score among all points in its lower-left corner . We illustrate a dominant-max query in Fig. 9 in the appendix. We will use such a data structure to efficiently compute the values of all objects.

We present our WLIS algorithm in Alg. 2. We view each object as a 2D point with score , and use a data structure that supports dominant-max queries to maintain all such points. Initially . We call and as the x- and y-coordinate of the point, respectively. Given the input sequence, we first call Alg. 1 to compute the rank of each object and sort them by ranks to find each frontier . This can be done by any parallel sorting with work and span. We then process all the frontiers in order. When processing , we compute the values for all in parallel, using . This can be done by the dominant-max query on (Alg. 2), which reports the highest score ( value) among all objects in the lower-left corner of the object . Finally, we update the newly-computed values to (Alg. 2) as their scores.

The efficiency of this algorithm then relies on the data structure to support dominant-max. We will propose two approaches to achieve practical and theoretical efficiency, respectively. The first one is similar to SWGS and uses range trees, which leads to work and span for the WLIS problem. By plugging in an existing range-tree implementation (Sun et al., 2018), we obtain a simple parallel WLIS implementation that significantly outperforms the existing implementation from SWGS. The details of the algorithm are in Sec. 4.1, and the performance comparison is in Sec. 6. We also propose a new data structure, called the Range-vEB, to enable a better work bound ( work) for WLIS. Our idea is to re-design the inner tree in range trees as a parallel vEB tree. We elaborate our approach in Sec. 4.2 and 5.

4.1. Parallel WLIS based on Range Tree

We can use a parallel range tree (Bentley and Friedman, 1979; Sun and Blelloch, 2019) to answer dominant-max queries. A range tree (Bentley and Friedman, 1979) is a nested binary search tree (BST) where the outer tree is an index of the -coordinates of the points. Each tree node maintains an inner tree storing the same set of points in its subtree but keyed on the -coordinates (see Fig. 6). We can let each inner tree node store the maximum score in its subtree, which enables efficient dominant-max queries. In particular, for the outer tree, we can search on the -coordinates. This gives relevant subtrees in this range (called the in-range subtrees), and relevant nodes connecting them (called the connecting nodes). In Fig. 6, when , the in-range inner trees are the inner trees of points and , since their entire subtrees falls into range . The connecting nodes are and , as their x-coordinates are in the range, but only part of their subtrees are in the range. For each in-range subtree, we further search in the inner trees to get the maximum score in this range, and consider it as a candidate for the maximum score. For each connecting node, we check if its -coordinates are in range , and if so, consider it a candidate. Finally, we return the maximum score among the selected candidates (both from the in-range subtrees and connecting nodes). Using the range tree in (Sun et al., 2018; Sun and Blelloch, 2019; Blelloch et al., 2020b), we have the following result for WLIS.

Theorem 4.1.

Using a parallel range tree for the dominant-max queries, Alg. 2 computes the weighted LIS of an input sequence in work and span, where is the length of the input sequence , and is the LIS length of .

4.2. WLIS Using the Range-vEB Tree

We can achieve better bounds for WLIS using parallel van Emde Boas (vEB) trees. Unlike the solution based on parallel range trees, the vEB-tree-based solution is highly non-trivial. Given the sophistication, we describe our solution in two parts. This section shows how to solve parallel WLIS assuming we have a parallel vEB tree. Later in Sec. 5, we will show how to parallelize vEB trees.

We first outline our data structure at a high level. We refer to our data structure for the dominant-max query as the Range-vEB tree, which is inspired by the classic range tree as mentioned in Sec. 4.1. The main difference is that the inner trees are replaced by Mono-vEB trees (defined below). Recall that in Alg. 2, the RangeStruct implements two functions DominantMax and Update. We present the pseudocode of Range-vEB for these two functions in Alg. 3, assuming we have parallel functions on vEB trees.

Similar to range trees, our Range-vEB tree is a two-level nested structure, where the outer tree is indexed by -coordinates, and the inner trees are indexed by -coordinates. For an outer tree node , we will use to denote the set of points in ’s subtree and as the inner tree of . Like a range tree, the inner tree also corresponds to the set of points , but only the staircase of (defined below). Since the y-coordinates are the indexes of the input, which are integers within , we can maintain this staircase in a vEB tree. Recall that the inner tree stores the -coordinates as the key and uses the values as the scores. For two points and , we say covers if and . For a set of points , the staircase of is the maximal subset such that for any , is not covered by any points in . In other words, for two input objects and in WLIS, we say covers if comes before and has a larger or equal value. This also means that no objects will use the value at since is strictly better than . Therefore, we ignore such in the inner trees, and refer to such a vEB tree maintaining the staircase of a dataset as a Mono-vEB tree. In a Mono-vEB tree, with increasing key (), the score ( values) must also be increasing. We show an illustration of the staircase in Fig. 11 in the appendix.

Due to monotonicity, the maximum value in a Mono-vEB tree for all points with is exactly the score ( value) of ’s predecessor. Combining this idea with the dominant-max query in range trees, we have the dominant-max function in Alg. 3. We will first search the range in the outer tree for the -coordinates and find all in-range subtrees and connecting nodes. For each connecting node, we check if their coordinates are in the queried range, and if so, take their values into consideration. For each in-range inner tree , we call Pred query on the Mono-vEB tree and obtain the score ( value) of this predecessor (Alg. 3). As mentioned, the value of is the highest score from this inner tree among all points with an index smaller than . Finally, we take a max of all such results (all and those from connecting nodes), and the maximum among them is the result of the dominant-max query (Alg. 3). As the Pred function has cost , a single dominant-max query costs on a Range-vEB tree.

Querying dominant-max using a staircase is a known (sequential) algorithmic trick. However, the challenge is how to update (in parallel) the newly computed values in each round (the Update function) to a Range-vEB tree. We first show how to implement Update in Alg. 3 while assuming a parallel vEB tree. We later explain how to parallelize a vEB tree in Sec. 5.

Step 1. Collecting insertions for inner trees. Each point may need to be added to inner trees, so we first obtain a list of points to be inserted for each inner tree . This can be done by first marking all points in in the outer tree , and (in parallel) merging them bottom-up, so that each relevant inner tree collects the relevant points in . When merging the lists, we keep them sorted by the y-coordinates, the same as the inner trees.

Step 2. Refining the lists. Because of the “staircase” property, we have to first refine each list to remove points that are not on the staircase. A point in should be removed if it is covered by its previous point , or if any point in the Mono-vEB tree covers it. The latter case can be verified by finding the predecessor of , and check if has a larger or equal value than . If so, is covered by , so we ignore . After this step, all points in need to appear on the staircase in .

Step 3. Updating the inner trees. Finally, for all involved subtrees, we will update the list to in parallel. Note that some points in may cover (and thus replace) some existing points in . We will first use a function CoveredBy to find all points (denoted as set ) in that are covered by any point in . An illustration of CoveredBy function is presented in Fig. 11 in the appendix. We will then use vEB batch-deletion to remove all points in from . Finally, we call vEB batch-insertion to insert all points in to .

In Sec. 5, we present the algorithms CoveredBy, BatchDelete and BatchInsert needed by Alg. 2, and prove Thm. 1.3. Assuming Thm. 1.3, we give the proof of Thm. 1.2.

Proof of Thm. 1.2.

We first analyze the work. We first show that the DominantMax algorithm in Alg. 3 takes work. In Alg. 3, Alg. 3 finds connecting nodes and in-range inner trees, which takes work. Then for all in-range inner trees, we perform a Pred query in parallel, which costs . In total, this gives work for DominantMax. This means that the total work to compute the values in Alg. 2 in the entire Alg. 2 is .

We now analyze the total cost of Update. In one invocation of Update, we first find all keys for each inner tree that appears in . Using the bottom-up merge-based algorithm mentioned in Sec. 4.2, each merge costs linear work. Similarly, refining a list costs linear work. Since each key in appears in inner tree, the total work to find and refine all is for each batch, and is for the entire algorithm.

For each subtree, the cost of running CoveredBy is asymptotically bounded by BatchDelete. For BatchDelete and BatchInsert, note that the bounds in Thm. 1.3 show that the amortized work to insert or delete a key is . In each inner tree, a key can be inserted at most once and deleted at most once, which gives total work in the entire algorithm.

Finally, the span of each round is . In each round, we need to perform the three steps in Sec. 4.2. The first step requires to find the list of relevant subtrees for each element in the insertion batch . For each element , this is performed by first searching in the outer tree, and then merging them bottom-up, so that each node in the outer tree will collect all elements in that belong to its subtree. There are levels in the outer tree, and each merge requires span, so this first step requires span.

Step 2 will process all relevant lists in parallel (at most of them). For each list, it calls Pred for each element in each list, and a filter algorithm at the end. The total span is bounded by .

Step 3 requires calling batch insertion and deletion to update all relevant inner trees, and all inner trees can be processed in parallel. Based on the analysis above, the span for each batch insertion and deletion is , which is also bounded by .

Thus, the entire algorithm has span . Finally, as mentioned, we present the details of achieving the stated space bound in Appendix E by relabeling all points in each inner tree. ∎

Making Range-vEB Tree Space-efficient. A straightforward implementation of Range-vEB tree may require space, as a plain vEB tree requires space. There are many ways to make vEB trees space-efficient ( space when storing keys); we discuss how they can be integrated in Range-vEB tree to guarantee total space in Appendix E.

5. Parallel van Emde Boas Trees

- :

-

: A -bit integer from universe , where

- :

-

: high-bit of , equals to

- :

-

: low-bit of , equals to

- :

-

: The integer by concatenating high-bit and low-bit

- :

-

: A vEB (sub-)tree / the set of keys in this vEB tree

- ():

-

: The min (max) value in vEB tree

- :

-

: Find the predecessor of in vEB tree

- :

-

: Find the successor of in vEB tree

- :

-

: The set of high-bits in vEB tree

- :

-

: The subtree of with high-bit

- :

-

: The survival predecessor of in vEB tree (used in Alg. 5). .

- :

-

: The survival successor of in vEB tree (used in Alg. 5). .

The van Emde Boas (vEB) tree (van Emde Boas, 1977) is a famous data structure that implements the ADTs of priority queues and ordered sets and maps for integer keys and is introduced in textbooks (e.g., (Cormen et al., 2009)). For integer keys in range 0 to , single-point updates and queries cost , better than the cost for BSTs or binary heaps. We review vEB trees in Sec. 5.1.

However, unlike BSTs or binary heaps that have many parallel versions (Blelloch and Reid-Miller, 1998; Blelloch et al., 2016, 2022; Sun et al., 2018; Blelloch et al., 2020b; Wang et al., 2021; Dong et al., 2021; Lim, 2019; Williams et al., 2021; Akhremtsev and Sanders, 2016; Zhou et al., 2019), we are unaware of any parallel vEB trees. Even the sequential vEB tree is complicated (compared to most BSTs and heaps), and the invariants are maintained sophisticatedly to guarantee doubly-logarithmic cost. Such complication adds to the difficulty of parallelizing updates (insertions and deletions) on vEB trees. Meanwhile, for queries, we note that vEB trees do not directly support range-related queries—when using vEB trees for ordered sets and maps, many applications heavily rely on repeatedly calling successors and/or predecessors, which is inherently sequential. Hence, we need to carefully redesign the vEB tree to achieve parallelism. In this section, we first review the sequential vEB tree and then present our parallel vEB tree to support the functions needed in Alg. 3.

5.1. Review of the Sequential vEB Tree

A van Emde Boas (vEB) tree (van Emde Boas, 1977) is a search tree structure with keys from a universe , which are integers from 0 to . We usually assume the keys are -bit integers (i.e., ). A classic vEB tree supports insertion, deletion, lookup, reporting the min/max key in the tree, reporting the predecessor (Pred) and successor (Succ) of a key, all in work. Other queries can be implemented using these functions. For instance, reporting all keys in a range can be implemented by repeatedly calling Succ in work, where is the output size.

A vEB tree stores a key using its binary bits as its index. We use to denote a vEB tree, as well as the set of keys in this vEB tree. We present the notation for vEB trees in Tab. 1, and show an illustration of vEB trees in Fig. 6. We use 13 as an example to show how a key is decomposed and stored in the tree nodes. A vEB tree is a quadruple . and store the minimum and maximum keys in the tree. When is empty, we set and . For the rest of the keys (other than ), their high-bits (the first bits) are maintained recursively in a vEB tree, noted as . In Fig. 6, the high-bits are the first 4 bits, and there are two different unique high-bits (0 and 1). They are maintained recursively in a vEB (sub-)tree (the blue box). For each unique high-bit, the relevant low-bits (the last bits) are also organized as a vEB (sub-)tree recursively. In particular, the low-bits that belong to high-bit are stored in a vEB tree . In Fig. 6, five keys in have high-bit 0 (4, 8, 10, 13, and 15). They are maintained in a vEB (sub-)tree as (the green box and everything below). Each subtree ( and all ) has universe size (about bits). This guarantees traversal from the root to every leaf in hops. Note that the values of a vEB tree are not stored again in the summary or clusters. For example, in Fig. 6, at the root, , and thus 2 is not stored again in . Such design is crucial to guarantee doubly logarithmic work for Insert, Delete, Pred, and Succ.

Note that although we use “low/high-bits” in the descriptions, algorithms on vEB trees can use simple RAM operations to extract the corresponding bits, without any bit manipulation, as long as the universe size for each subtree is known. Due to the page limit, we refer the audience to the textbook (Cormen et al., 2009) for more details about sequential vEB tree algorithms.

5.2. Our New Results

We summarize our results on parallel vEB tree in Thm. 1.3. Both batch insertion/deletion and range reporting are work-efficient—the work is the same as performing them on a sequential vEB tree. In Alg. 2, the key range . Using the Range query, we can implement CoveredBy in Alg. 3 in work and polylogarithmic span, where is the number of objects returned.

Similar to the sequential vEB tree, batch-insertion is relatively straightforward among the parallel operations. We present the algorithm and analysis in Sec. 5.2.1. Batch-deletion is more challenging, as once or is deleted, we need to replace it with a proper key stored in the subtree of . However, when finding the replacement , we need to avoid the values in the deletion batch , and take extra care to handle the case when is the of a cluster. We propose a novel technique: Survivor Mapping (see Definition 5.2) to resolve this challenge. The batch-deletion algorithm is illustrated in Sec. 5.2.2,and analysis in Sec. B.3.For range queries, we need to avoid the iterative solution (repeatedly calling Succ) since it is inherently sequential. Our high-level idea is to divide-and-conquer in parallel, but uses delicate amortization techniques to bound the extra work. Due to the page limit, we summarize the high-level idea of Range and CoveredBy in Sec. 5.2.3 and provide the details in Appendices C and D.

5.2.1. Batch Insertion.

We show our batch-insertion algorithm in Alg. 4, which inserts a sorted batch into in parallel. Here we assume the keys in are not in ; otherwise, we can simply look up the keys in and filter out those in already. To achieve parallelism, we need to appropriately handle the high-bits and low-bits, both in parallel, as well as taking extra care to maintain the values.

We first set the values at (Alg. 4–4). If , we update by swapping it with (Alg. 4); similarly we update (Alg. 4). Since we need the batch sorted when adding and/or back to (Alg. 4), we need to insert them to the correct position, causing work. If is not empty, we will insert the keys in to and . We first find the new high-bits (not yet in ) from keys in , and denote them as (Alg. 4) This step can be done by a parallel filter. For each new high-bit , we select the smallest key with high-bit and put them in an array (Alg. 4). The new subtrees in are initialized by inserting the smallest low-bit in parallel (Alg. 4–4), after which all subtrees are certified to be non-empty. The remaining new low-bits are gathered by the corresponding high-bits (Alg. 4). Finally, new high-bits and each new low-bits are inserted into the tree in parallel recursively (Alg. 4–Alg. 4).

The correctness of the algorithm can be shown by checking that all / values for each node are set up correctly. Next, we analyze the cost bounds of Alg. 4 in Thm. 5.1.

Theorem 5.1.

Inserting a batch of sorted keys into a vEB tree can be finished in work and span, where is batch size and is the universe size.

Proof.

Let and be the work and span of BatchInsert on a batch of size and vEB tree with universe size . In each invocation of BatchInsert, we need to restore the values, find the high-bits in , initialize the clusters for the new high-bits, and gather the low-bits for each cluster.

All these operations cost work and span. Then the algorithm makes at most recursive calls, each dealing with a universe size . Hence, we have the following recurrence for work and span:

| (3) | ||||

| (4) |

Note that each key in falls into at most one of the recursions, and thus . Since is the number of distinct values to be inserted into the subtree with universe size , we also have in all recursive calls. By solving the recursions above, we can get the claimed bound in the theorem.We solve them in Sec. B.2.Note that we assume an even total bits for . If not, the number of subproblems and their size become and , respectively. One can check that the bounds still hold, the same as the sequential analysis. ∎

5.2.2. Batch Deletion.

The function BatchDelete deletes a batch of sorted keys from a vEB tree . Let be the batch size. For simplicity, we assume . If not, we can first look up all keys in and filter out those that are not in in work and span. We show our algorithm in Alg. 5. The main challenge to performing deletions in parallel is to properly set the and values for each subtree . When the value of a subtree is in , we need to replace it with another key in its subtree that 1) does not appear in , and 2) needs to be further deleted from the corresponding (recall that the values of a subtree should not be stored in its children). To resolve this challenge, we keep the survival predecessor and survival successor for all wrt. a vEB tree, defined as follows.

Definition 5.0 (Survivor Mapping).

Given a vEB tree and a batch , the survival predecessor for is the maximum key in that is smaller than . If no such key exists, . Similarly, the survival successor for is the minimum key in that is larger than , and is if no such key exists. are called the survival mappings.

and are used to efficiently identify the new keys to replace a deleted key. For instance, if (then it must be ), we can update the value of to directly.

Alg. 5 first initializes the survival mappings (Alg. 5) as follows. For each , we set (in parallel) as its predecessor in if this predecessor is not in , and set otherwise. Then we compute prefix-max of , and replace the values by the proper survival predecessor of in .

The initial values of can be computed similarly. We then use the BatchDeleteRecursive function to delete batch from a vEB (sub-)tree using the survival mappings, starting from the root. We use as the batch size of the current recursive call, and as the universe size of the current vEB subtree. The algorithm works in two steps: we first set the values of the tree properly, and then recursively deal with the and of .

Restoring values. We first discuss how to update and if they are deleted, in Alg. 5–5 of Alg. 5. We first duplicate and as and (Alg. 5), and then check whether (Alg. 5). If so, we replace it with its survival successor (denoted as on Alg. 5). If is in the clusters (), will be extracted from the corresponding cluster and become . To do so, we first delete sequentially (Alg. 5), and the cost is . Then we redirect the survival mapping for keys in using function SurvivorRedirect since their images may have changed (Alg. 5)—if any of them have survival predecessor/successor as , they should be redirected to some other key in (Alg. 5–5). In particular, if is , it should be redirected to ’s survival predecessor (Alg. 5). Similarly, if is , it should be redirected to ’s survival successor (Alg. 5). Regarding the cost of SurvivorRedirect, Alg. 5 (finding ’s predecessor and successor) costs , but we can charge this cost to the previous sequential deletion on Alg. 5. The rest of this part costs work and span. After that, we set the new value as . The symmetric case applies to when (Alg. 5). We then exclude and from on Alg. 5, since we have handled them properly. Finally, on Alg. 5, we deal with the particular case where only one key remains after deletion, in which case we have to store it twice in both and .

Recursively dealing with the low/high bits. After we update and (as shown above), we will recursively update (high-bits) and (low-bits), which requires the algorithm to construct the survival mappings for and each . We first consider the low-bits for and construct the survival mappings as and (Alg. 5). Given a key , where , the survival predecessor for its low-bits is the low-bits of its survival predecessor if and have same high-bits (Alg. 5). Otherwise would become the smallest key in after removing , therefore we map to (Alg. 5). We can construct similarly (Alg. 5–5). Note that we have to exclude and since they do not appear in the clusters. Then we can recursively call BatchDeleteRecursive on the using the survival mappings and (Alg. 5). In total, constructing all survival mappings for low-bits costs work and span.

We then construct the survival mapping and for high-bits (Alg. 5). Recall that the clusters of contain all keys in . Therefore if , then should be mapped to . Otherwise let be the maximum key except and with the same high-bits as , then we have by definition (Alg. 5). We can construct similarly (Alg. 5). The total cost of finding survival mappings for high-bits is also work and span.

Theorem 5.3.

Given a vEB tree , deleting a sorted batch costs work and span, where is the batch size and is the universe size.

Due to the page limit, we show the (informal) high-level ideas here and prove it in Sec. B.3. The span recurrence of the batch-deletion algorithm is similar to batch-insertion, which indicates the same span bound. The work-bound proof is more involved. The challenge lies in that a key in can be involved in both recursive calls on Alg. 5 and Alg. 5, which seemingly costs work-inefficiency. However, for each high-bit to be deleted on the recursive call on Alg. 5, it indicates that the corresponding will become empty after the deletion of low-bits. Therefore, the smallest low-bit among them must be exceptional and will be handled by the base cases on Lines 5–5. Therefore, for each key in , only one of the recursive calls will be “meaningful”. If the audience is familiar with (sequential) vEB trees, this is very similar to the sequential analysis—for the two recursive calls on the low- and high-bits, only one of them will be invoked in any single-point insertion/deletion, and the bound thus holds. In the parallel version, we need to further analyze the cost on Lines 5–5 to restore the values. We show a formal proof for Thm. 5.3 in Sec. B.3.

5.2.3. Range Query.

Sequentially, the range query on vEB trees is supported by repeatedly calling Succ from the start of the range until the end of the range is reached. However, such a solution is inherently sequential. Although we can find the start and end of the range directly in the tree, reporting all keys in the range to an array in parallel is difficult—vEB trees cannot maintain subtree sizes efficiently, so we cannot decide the size of the output array to be assigned to each subtree. In this paper, we design novel parallel algorithms for range queries on vEB trees and use them to implement CoveredBy in Alg. 3.

To achieve parallelism, our high-level idea is to use divide-and-conquer to split the range in the middle and search the two subranges in parallel. Even though the partition can be unbalanced, we can still bound the recursion depth while amortizing the work for partitioning to the operations in the sequential execution. We collect results first in a binary tree and then flatten the tree into a consecutive array. Putting all pieces together, our range query has optimal work (same as a sequential algorithm) and polylogarithmic span (see Thm. C.1). On top of that, we show how to implement CoveredBy, which also requires amortization techniques. The details are provided in Appendices C and D, which we believe are very interesting algorithmically.

6. Experiments

In addition to the new theoretical bounds, we also show the practicality of the proposed algorithms by implementing our LIS (Alg. 1) and WLIS algorithms (Alg. 2 using range trees). Our code is light-weight. We use the experimental results to show how theoretical efficiency enables better performance in practice over the existing results. We plan to release our code.

Experimental Setup. We run all experiments on a 96-core (192-hyperthread) machine equipped with four-way Intel Xeon Gold 6252 CPUs and 1.5 TiB of main memory. Our implementation is in C++ with ParlayLib (Blelloch et al., 2020a). All reported numbers are the averages of the last three runs among four repeated tests.

|

|

|

|

| (a). LIS. . | (b). LIS. . | (c). LIS. . | (d). Weighted LIS. . |

| Line pattern. | Line pattern. | Range pattern. | Line Pattern. |

Input Generator. We run experiments of input size and with varying ranks (LIS length ). We use two generators and refer to the results as the range pattern and the line pattern, respectively. The range pattern is a sequence consisting of integers randomly chosen from a range . The values of upper bounds the LIS length. When is large, and the largest possible rank of a sequence of size is expected to be (Johansson, 1998). To generate inputs with larger ranks, we use a line pattern generator that draws as for a sequence , where is an independent random variable chosen from a uniform distribution. We vary and to achieve different ranks. For the weighted LIS problem, we always use random weights from a uniform distribution.

Baseline Algorithms. We compare to standard sequential LIS algorithms and the existing parallel LIS implementation from SWGS (Shen et al., 2022). We also show the running time of our algorithm on one core to indicate the work of the algorithm. SWGS works on both LIS and WLIS problems with work and span, and we compare both of our algorithms (Alg. 1 and 2) with it.

|

|

| (a). LIS. . | (b). LIS. . |

For the LIS problem, we also use a highly-optimized sequential algorithm from (Knuth, 1973), and call it Seq-BS. Seq-BS maintains an array , where is the smallest value of with rank . Note that is monotonically increasing. Iterating from to , we binary search in , and if , we set as . By the definition of , we then update the value to if is smaller than the current value in . The size of is at most , and thus this algorithm has work . This algorithm only works on the unweighted LIS problem.

For WLIS, we implement a sequential algorithm and call it Seq-AVL. This algorithm maintains an augmented search tree, which stores all input objects ordered by their values, and supports range-max queries. Iterating from to , we simply query the maximum value in the tree among all objects with values less than , and update . We then insert (with ) into the tree and continue to the next object. This algorithm takes work, and we implement it with an AVL tree.

Due to better work and span bounds, our algorithms are always faster than the existing parallel implementation SWGS. Our algorithms also outperform highly-optimized sequential algorithms up to reasonably large ranks (e.g., up to for ). For our tests on and input sizes, our algorithm outperforms the sequential algorithm on ranks from 1 to larger than . We believe this is the first parallel LIS implementation that can outperform the efficient sequential algorithm in a large input parameter space.

Longest Increasing Subsequence (LIS). Fig. 7(a) shows the results on input size with ranks from to using the line generator. For our algorithm and Seq-BS, the running time first increases with getting larger because both algorithms have work . When is sufficiently large, the running time drops slightly—larger ranks bring up better cache locality, as each object is likely to extend its LIS from an object nearby. Our parallel algorithm is faster than the sequential algorithm for and gets slower afterward. The slowdown comes from the lack of parallelism ( span). Our algorithm running on one core is only 1.4–5.5 slower than Seq-BS due to work-efficiency. With sufficient parallelism (e.g., on low-rank inputs), our performance is better than Seq-BS by up to 16.8.

We only test SWGS on ranks up to because it costs too much time for larger ranks. In the existing results, our algorithm is always faster than SWGS (up to 188) because of better work and span. We believe the simplicity of code also contributes to the improvement.

We evaluate our algorithm on input size with varied ranks from to using line the generator (see Fig. 7(b)) and with varied ranks from to using the range generator (see Fig. 7(c)). We exclude SWGS in the comparison due to its space-inefficiency, since it ran out of memory to construct the range tree on elements. For , our algorithm is consistently faster than Seq-BS (up to 9.1). When the rank is large, the work in each round is not sufficient to get good parallelism, and the algorithm behaves as if it runs sequentially. Because of work-efficiency, even with large ranks, our parallel algorithm introduces limited overheads, and its performance is comparable to Seq-BS (at most 3.4 slower). We also evaluate the self-relative speedup of our algorithm on input size with rank and rank using both line and range generators. In all settings from Fig. 8, our algorithm scales well to 96 cores with hyperthreads, reaching the self-speedup of up to 25.6 for and up to 37.0 for . With the same rank, our algorithm has almost identical speedup for both patterns in all scales. Our algorithm outperforms Seq-BS (denoted as dash lines in Fig. 8) when using 8 or 16 cores.

Overall, our LIS algorithm performs well with reasonable ranks, achieving up to 41 self-speedup with and up to 70 self-speedup with . Due to work-efficiency, our algorithm is scalable and performs especially well on large data because larger input sizes result in more work to utilize parallelism better.

Weighted LIS. We compare our WLIS algorithm (Alg. 2) with SWGS and Seq-AVL on input size . We vary the rank from to , and show the results in Fig. 7(d). Our algorithm is always faster than SWGS (up to 2.5). Our improvement comes from better work bound (a factor of better, although in many cases SWGS’s work bound is not tight). Our algorithm also outperforms the sequential algorithm Seq-AVL with ranks up to . The running time of the sequential algorithm decreases with increasing ranks because of better locality. In contrast, our algorithm performs worse with increasing because of the larger span.

The results also imply the importance of work-efficiency in practice. To get better performance, we believe an interesting direction is to design a work-efficient parallel algorithm for WLIS.

7. Related Work

LIS is widely studied both sequentially and in parallel. Sequentially, various algorithms have been proposed (Yang et al., 2005; Bespamyatnikh and Segal, 2000; Fredman, 1975; Knuth, 1973; Schensted, 1961; Crochemore and Porat, 2010). and the classic solution uses work. This is also the lower bound (Fredman, 1975) w.r.t. the number of comparisons. In the parallel setting, LIS is studied both as general dynamic programming (Blelloch and Gu, 2020; Chowdhury and Ramachandran, 2008; Tang et al., 2015; Galil and Park, 1994) or on its own (Krusche and Tiskin, 2009; Semé, 2006; Thierry et al., 2001; Nakashima and Fujiwara, 2002, 2006; Alam and Rahman, 2013; Tiskin, 2015). However, we are unaware of any work-efficient LIS algorithm with non-trivial parallelism ( or span). Most existing parallel LIS algorithms introduced a polynomial overhead in work (Galil and Park, 1994; Krusche and Tiskin, 2009; Semé, 2006; Thierry et al., 2001; Nakashima and Fujiwara, 2002, 2006), and/or have span (Alam and Rahman, 2013; Blelloch and Gu, 2020; Chowdhury and Ramachandran, 2008; Tang et al., 2015) (many of them (Blelloch and Gu, 2020; Chowdhury and Ramachandran, 2008; Tang et al., 2015) focused on improving the I/O bounds). The algorithm in (Krusche and Tiskin, 2010) translates to work and span, but it relies on complicated techniques for Monge Matrices (Tiskin, 2015). Most of the parallel LIS algorithms are complicated and have no implementations. We are unaware of any parallel LIS implementation with competitive performance to the sequential or algorithm.

Many previous papers propose general frameworks to study dependencies in sequential iterative algorithms to achieve parallelism (Blelloch et al., 2020b, c, 2012b; Shen et al., 2022). Their common idea is to (implicitly or explicitly) traverse the DG. There are two major approaches, and both have led to many efficient algorithms. The first one is edge-centric (Blelloch et al., 2020c, 2018, b, d; Jones and Plassmann, 1993; Hasenplaugh et al., 2014; Blelloch et al., 2012a; Fischer and Noever, 2018; Shen et al., 2022), which identifies the ready objects by processing the successors of the newly-finished objects. The second approach is vertex-centric (Shun et al., 2015; Blelloch et al., 2012b; Pan et al., 2015; Tomkins et al., 2014; Shen et al., 2022), which checks all unfinished objects in each round to process the ready ones. However, none of these frameworks directly enables work-efficiency for parallel LIS. The edge-centric algorithms evaluate all edges in the dependence graph, giving worst-case work for LIS. The vertex-centric algorithms check the readiness of all remaining objects in each round and require rounds, meaning work for LIS. The SWGS algorithm (Shen et al., 2022) combines the ideas in edge-centric and vertex-centric algorithms. SWGS has work whp and is round-efficient ( span) using space. It is sub-optimal in work and space. Our algorithm improves the work and space bounds of SWGS in both LIS and WLIS. Our algorithm is also simpler and performs much better than SWGS in practice.

The vEB tree was proposed by van Emde Boas in 1977 (van Emde Boas, 1977), and has been widely used in sequential algorithms, such as dynamic programming (Eppstein et al., 1988; Galil and Park, 1992; Hunt and Szymanski, 1977; Chan et al., 2007; Inoue et al., 2018; Narisada et al., 2017), computational geometry (Snoeyink, 1992; Chiang and Tamassia, 1992; Claude et al., 2010; Afshani and Wei, 2017), data layout (Bender et al., 2000; Ha et al., 2014; Umar et al., 2013; van Emde Boas et al., 1976), and others (Lipski and Preparata, 1981; Gawrychowski et al., 2015; Koster et al., 2001; Lipski Jr, 1983; Akbarinia et al., 2011). However, to the best of our knowledge, there was no prior work on supporting parallelism on vEB trees.

8. Conclusion

In this paper, we present the first work-efficient parallel algorithm for the longest-increasing subsequence (LIS) problem that has non-trivial parallelism ( span for an input sequence with LIS length ). Theoretical efficiency also enables a practical implementation with good performance. We also present algorithms for parallel vEB trees and show how to use them to improve the bounds for the weight LIS problem. As a widely-used data structure, we believe our parallel vEB tree is of independent interest, and we plan to explore other applications as future work. Other interesting future directions include achieving work-efficiency and good performance for WLIS in parallel and designing a work-efficient parallel LIS algorithm with or even a polylogarithmic span.

Acknowledgement

This work is supported by NSF grants CCF-2103483, IIS-2227669, NSF CAREER award CCF-2238358, and UCR Regents Faculty Fellowships. We thank Huacheng Yu for some initial discussions on this topic, especially for the vEB tree part. We thank anonymous reviewers for the useful feedbacks.

References

- (1)

- Afshani and Wei (2017) Peyman Afshani and Zhewei Wei. 2017. Independent range sampling, revisited. In esa. Schloss Dagstuhl-Leibniz-Zentrum fuer Informatik.

- Akbarinia et al. (2011) Reza Akbarinia, Esther Pacitti, and Patrick Valduriez. 2011. Best position algorithms for efficient top-k query processing. Information Systems 36, 6 (2011), 973–989.

- Akhremtsev and Sanders (2016) Yaroslav Akhremtsev and Peter Sanders. 2016. Fast Parallel Operations on Search Trees. In IEEE International Conference on High Performance Computing (HiPC).

- Alam and Rahman (2013) Muhammad Rashed Alam and M Sohel Rahman. 2013. A divide and conquer approach and a work-optimal parallel algorithm for the LIS problem. Inform. Process. Lett. 113, 13 (2013), 470–476.

- Altschul et al. (1990) Stephen F Altschul, Warren Gish, Webb Miller, Eugene W Myers, and David J Lipman. 1990. Basic local alignment search tool. Journal of molecular biology 215, 3 (1990), 403–410.

- Arora et al. (2001) Nimar S Arora, Robert D Blumofe, and C Greg Plaxton. 2001. Thread scheduling for multiprogrammed multiprocessors. Theory of Computing Systems (TOCS) 34, 2 (2001), 115–144.

- Bender et al. (2000) Michael A Bender, Erik D Demaine, and Martin Farach-Colton. 2000. Cache-oblivious B-trees. In focs. IEEE, 399–409.

- Bentley and Friedman (1979) Jon Louis Bentley and Jerome H Friedman. 1979. Data structures for range searching. Comput. Surveys 11, 4 (1979), 397–409.

- Bespamyatnikh and Segal (2000) Sergei Bespamyatnikh and Michael Segal. 2000. Enumerating longest increasing subsequences and patience sorting. Inform. Process. Lett. 76, 1-2 (2000), 7–11.

- Blelloch et al. (2022) Guy Blelloch, Daniel Ferizovic, and Yihan Sun. 2022. Joinable Parallel Balanced Binary Trees. ACM Transactions on Parallel Computing (TOPC) 9, 2 (2022), 1–41.

- Blelloch et al. (2020a) Guy E. Blelloch, Daniel Anderson, and Laxman Dhulipala. 2020a. ParlayLib - a toolkit for parallel algorithms on shared-memory multicore machines. In ACM Symposium on Parallelism in Algorithms and Architectures (SPAA). 507–509.

- Blelloch et al. (2016) Guy E. Blelloch, Daniel Ferizovic, and Yihan Sun. 2016. Just Join for Parallel Ordered Sets. In ACM Symposium on Parallelism in Algorithms and Architectures (SPAA).

- Blelloch et al. (2012b) Guy E. Blelloch, Jeremy T. Fineman, Phillip B. Gibbons, and Julian Shun. 2012b. Internally deterministic parallel algorithms can be fast. In ACM Symposium on Principles and Practice of Parallel Programming (PPOPP).

- Blelloch et al. (2020b) Guy E. Blelloch, Jeremy T. Fineman, Yan Gu, and Yihan Sun. 2020b. Optimal parallel algorithms in the binary-forking model. In ACM Symposium on Parallelism in Algorithms and Architectures (SPAA).

- Blelloch et al. (2012a) Guy E. Blelloch, Jeremy T. Fineman, and Julian Shun. 2012a. Greedy sequential maximal independent set and matching are parallel on average. In ACM Symposium on Parallelism in Algorithms and Architectures (SPAA).

- Blelloch and Gu (2020) Guy E. Blelloch and Yan Gu. 2020. Improved Parallel Cache-Oblivious Algorithms for Dynamic Programming. In SIAM Symposium on Algorithmic Principles of Computer Systems (APOCS).

- Blelloch et al. (2018) Guy E. Blelloch, Yan Gu, Julian Shun, and Yihan Sun. 2018. Parallel Write-Efficient Algorithms and Data Structures for Computational Geometry. In ACM Symposium on Parallelism in Algorithms and Architectures (SPAA).

- Blelloch et al. (2020c) Guy E. Blelloch, Yan Gu, Julian Shun, and Yihan Sun. 2020c. Parallelism in Randomized Incremental Algorithms. J. ACM (2020).

- Blelloch et al. (2020d) Guy E. Blelloch, Yan Gu, Julian Shun, and Yihan Sun. 2020d. Randomized Incremental Convex Hull is Highly Parallel. In ACM Symposium on Parallelism in Algorithms and Architectures (SPAA).

- Blelloch and Reid-Miller (1998) Guy E. Blelloch and Margaret Reid-Miller. 1998. Fast Set Operations Using Treaps. In ACM Symposium on Parallelism in Algorithms and Architectures (SPAA).

- Blumofe and Leiserson (1998) Robert D. Blumofe and Charles E. Leiserson. 1998. Space-Efficient Scheduling of Multithreaded Computations. SIAM J. on Computing 27, 1 (1998).

- Cao et al. (2023) Nairen Cao, Shang-En Huang, and Hsin-Hao Su. 2023. Nearly optimal parallel algorithms for longest increasing subsequence. In ACM Symposium on Parallelism in Algorithms and Architectures (SPAA).

- Chan et al. (2007) Wun-Tat Chan, Yong Zhang, Stanley PY Fung, Deshi Ye, and Hong Zhu. 2007. Efficient algorithms for finding a longest common increasing subsequence. Journal of Combinatorial Optimization 13, 3 (2007), 277–288.

- Chiang and Tamassia (1992) Y-J Chiang and Roberto Tamassia. 1992. Dynamic algorithms in computational geometry. Proc. IEEE 80, 9 (1992), 1412–1434.

- Chowdhury and Ramachandran (2008) Rezaul A. Chowdhury and Vijaya Ramachandran. 2008. Cache-efficient dynamic programming algorithms for multicores. In ACM Symposium on Parallelism in Algorithms and Architectures (SPAA). ACM.

- Claude et al. (2010) Francisco Claude, J Ian Munro, and Patrick K Nicholson. 2010. Range queries over untangled chains. In International Symposium on String Processing and Information Retrieval. Springer, 82–93.

- Cormen et al. (2009) Thomas H. Cormen, Charles E. Leiserson, Ronald L. Rivest, and Clifford Stein. 2009. Introduction to Algorithms (3rd edition). MIT Press.

- Crochemore and Porat (2010) Maxime Crochemore and Ely Porat. 2010. Fast computation of a longest increasing subsequence and application. Information and Computation 208, 9 (2010), 1054–1059.

- Dasgupta et al. (2008) Sanjoy Dasgupta, Christos H Papadimitriou, and Umesh Virkumar Vazirani. 2008. Algorithms. McGraw-Hill Higher Education New York.

- Deift (2000) Percy Deift. 2000. Integrable systems and combinatorial theory. Notices AMS 47 (2000), 631–640.

- Delcher et al. (1999) Arthur L Delcher, Simon Kasif, Robert D Fleischmann, Jeremy Peterson, Owen White, and Steven L Salzberg. 1999. Alignment of whole genomes. Nucleic acids research 27, 11 (1999), 2369–2376.

- Dong et al. (2021) Xiaojun Dong, Yan Gu, Yihan Sun, and Yunming Zhang. 2021. Efficient Stepping Algorithms and Implementations for Parallel Shortest Paths. In ACM Symposium on Parallelism in Algorithms and Architectures (SPAA).

- Eppstein et al. (1988) David Eppstein, Zvi Galil, and Raffaele Giancarlo. 1988. Speeding up dynamic programming. In IEEE Symposium on Foundations of Computer Science (FOCS). 488–496.

- Fischer and Noever (2018) Manuela Fischer and Andreas Noever. 2018. Tight analysis of parallel randomized greedy MIS. In ACM-SIAM Symposium on Discrete Algorithms (SODA). 2152–2160.

- Fredman (1975) Michael L Fredman. 1975. On computing the length of longest increasing subsequences. Discrete Mathematics 11, 1 (1975), 29–35.

- Galil and Park (1992) Zvi Galil and Kunsoo Park. 1992. Dynamic programming with convexity, concavity and sparsity. Theoretical Computer Science (TCS) 92, 1 (1992).

- Galil and Park (1994) Zvi Galil and Kunsoo Park. 1994. Parallel algorithms for dynamic programming recurrences with more than O(1) dependency. J. Parallel Distrib. Comput. 21, 2 (1994).

- Gawrychowski et al. (2015) Paweł Gawrychowski, Shunsuke Inenaga, Dominik Köppl, Florin Manea, et al. 2015. Efficiently Finding All Maximal -gapped Repeats. arXiv preprint arXiv:1509.09237 (2015).

- Goodrich and Tamassia (2015) Michael T Goodrich and Roberto Tamassia. 2015. Algorithm design and applications. Wiley Hoboken.

- Gu et al. (2022a) Yan Gu, Zachary Napier, and Yihan Sun. 2022a. Analysis of Work-Stealing and Parallel Cache Complexity. In SIAM Symposium on Algorithmic Principles of Computer Systems (APOCS). SIAM, 46–60.

- Gu et al. (2022b) Yan Gu, Zachary Napier, Yihan Sun, and Letong Wang. 2022b. Parallel Cover Trees and their Applications. In ACM Symposium on Parallelism in Algorithms and Architectures (SPAA). 259–272.

- Gusfield (1997) Dan Gusfield. 1997. Algorithms on stings, trees, and sequences: Computer science and computational biology. Acm Sigact News 28, 4 (1997), 41–60.

- Ha et al. (2014) Hoai Phuong Ha, Ngoc Nha Vi Tran, Ibrahim Umar, Philippas Tsigas, Anders Gidenstam, Paul Renaud-Goud, Ivan Walulya, and Aras Atalar. 2014. Models for energy consumption of data structures and algorithms. (2014).

- Hasenplaugh et al. (2014) William Hasenplaugh, Tim Kaler, Tao B. Schardl, and Charles E. Leiserson. 2014. Ordering heuristics for parallel graph coloring. In ACM Symposium on Parallelism in Algorithms and Architectures (SPAA).

- Hunt and Szymanski (1977) James W Hunt and Thomas G Szymanski. 1977. A fast algorithm for computing longest common subsequences. Commun. ACM 20, 5 (1977), 350–353.

- Inoue et al. (2018) Takafumi Inoue, Shunsuke Inenaga, Heikki Hyyrö, Hideo Bannai, and Masayuki Takeda. 2018. Computing longest common square subsequences. In cpm. Schloss Dagstuhl-Leibniz-Zentrum für Informatik, Dagstuhl Publishing.

- JáJá (1992) Joseph JáJá. 1992. Introduction to Parallel Algorithms. Addison-Wesley Professional.

- Johansson (1998) Kurt Johansson. 1998. The longest increasing subsequence in a random permutation and a unitary random matrix model. Mathematical Research Letters 5, 1 (1998), 68–82.

- Jones and Plassmann (1993) Mark T. Jones and Paul E. Plassmann. 1993. A parallel graph coloring heuristic. 14, 3 (1993), 654–669.

- Knuth (1973) Donald E. Knuth. 1973. The Art of Computer Programming, Volume III: Sorting and Searching. Addison-Wesley.

- Koster et al. (2001) Arie MCA Koster, Hans L Bodlaender, and Stan PM Van Hoesel. 2001. Treewidth: computational experiments. Electronic Notes in Discrete Mathematics 8 (2001), 54–57.

- Krusche and Tiskin (2009) Peter Krusche and Alexander Tiskin. 2009. Parallel longest increasing subsequences in scalable time and memory. In International Conference on Parallel Processing and Applied Mathematics. Springer, 176–185.

- Krusche and Tiskin (2010) Peter Krusche and Alexander Tiskin. 2010. New algorithms for efficient parallel string comparison. In ACM Symposium on Parallelism in Algorithms and Architectures (SPAA). 209–216.

- Lim (2019) Wei Quan Lim. 2019. Optimal Multithreaded Batch-Parallel 2-3 Trees. arXiv preprint arXiv:1905.05254 (2019).

- Lipski and Preparata (1981) Witold Lipski and Franco P Preparata. 1981. Efficient algorithms for finding maximum matchings in convex bipartite graphs and related problems. Acta Informatica 15, 4 (1981), 329–346.

- Lipski Jr (1983) Witold Lipski Jr. 1983. Finding a Manhattan path and related problems. Networks 13, 3 (1983), 399–409.

- Nakashima and Fujiwara (2002) Takaaki Nakashima and Akihiro Fujiwara. 2002. Parallel algorithms for patience sorting and longest increasing subsequence. In International Conference in Networks, Parallel and Distributed Processing and Applications. 7–12.

- Nakashima and Fujiwara (2006) Takaaki Nakashima and Akihiro Fujiwara. 2006. A cost optimal parallel algorithm for patience sorting. Parallel processing letters 16, 01 (2006), 39–51.

- Narisada et al. (2017) Shintaro Narisada, Kazuyuki Narisawa, Shunsuke Inenaga, Ayumi Shinohara, et al. 2017. Computing longest single-arm-gapped palindromes in a string. In International Conference on Current Trends in Theory and Practice of Informatics. Springer, 375–386.

- O’Donnell and Wright ([n. d.]) Ryan O’Donnell and John Wright. [n. d.]. A primer on the statistics of longest increasing subsequences and quantum states. SIGACT News ([n. d.]).

- Pan et al. (2015) Xinghao Pan, Dimitris Papailiopoulos, Samet Oymak, Benjamin Recht, Kannan Ramchandran, and Michael I. Jordan. 2015. Parallel correlation clustering on big graphs. In Advances in Neural Information Processing Systems (NIPS). 82–90.

- Schensted (1961) Craige Schensted. 1961. Longest increasing and decreasing subsequences. Canadian Journal of Mathematics 13 (1961), 179–191.

- Semé (2006) David Semé. 2006. A CGM algorithm solving the longest increasing subsequence problem. In International Conference on Computational Science and Its Applications. Springer, 10–21.

- Shen et al. (2022) Zheqi Shen, Zijin Wan, Yan Gu, and Yihan Sun. 2022. Many Sequential Iterative Algorithms Can Be Parallel and (Nearly) Work-efficient. In ACM Symposium on Parallelism in Algorithms and Architectures (SPAA).

- Shun et al. (2015) Julian Shun, Yan Gu, Guy E. Blelloch, Jeremy T. Fineman, and Phillip B Gibbons. 2015. Sequential random permutation, list contraction and tree contraction are highly parallel. In ACM-SIAM Symposium on Discrete Algorithms (SODA). 431–448.

- Snoeyink (1992) Jack Snoeyink. 1992. Two-and Three-Dimensional Point Location in Rectangular Subdivisions. In swat, Vol. 621. Springer Verlag, 352.

- Sun and Blelloch (2019) Yihan Sun and Guy E Blelloch. 2019. Parallel Range, Segment and Rectangle Queries with Augmented Maps. In SIAM Symposium on Algorithm Engineering and Experiments (ALENEX). 159–173.

- Sun et al. (2018) Yihan Sun, Daniel Ferizovic, and Guy E Blelloch. 2018. PAM: Parallel Augmented Maps. In ACM Symposium on Principles and Practice of Parallel Programming (PPOPP).

- Tang et al. (2015) Yuan Tang, Ronghui You, Haibin Kan, Jesmin Jahan Tithi, Pramod Ganapathi, and Rezaul A Chowdhury. 2015. Cache-oblivious wavefront: improving parallelism of recursive dynamic programming algorithms without losing cache-efficiency. In ACM Symposium on Principles and Practice of Parallel Programming (PPOPP).

- Thierry et al. (2001) Garcia Thierry, Myoupo Jean-Frédéric, and Semé David. 2001. A work-optimal CGM algorithm for the LIS problem. In ACM Symposium on Parallelism in Algorithms and Architectures (SPAA). 330–331.

- Tiskin (2015) Alexander Tiskin. 2015. Fast distance multiplication of unit-Monge matrices. Algorithmica 71, 4 (2015), 859–888.

- Tomkins et al. (2014) Daniel Tomkins, Timmie Smith, Nancy M Amato, and Lawrence Rauchwerger. 2014. SCCMulti: an improved parallel strongly connected components algorithm. ACM Symposium on Principles and Practice of Parallel Programming (PPOPP) 49, 8 (2014), 393–394.

- Umar et al. (2013) Ibrahim Umar, Otto Anshus, and Phuong Ha. 2013. Deltatree: A practical locality-aware concurrent search tree. arXiv preprint arXiv:1312.2628 (2013).

- van Emde Boas (1977) Peter van Emde Boas. 1977. Preserving order in a forest in less than logarithmic time and linear space. Inform. Process. Lett. 6, 3 (1977), 80–82.

- van Emde Boas et al. (1976) Peter van Emde Boas, Robert Kaas, and Erik Zijlstra. 1976. Design and implementation of an efficient priority queue. Mathematical systems theory 10, 1 (1976), 99–127.

- Wang et al. (2021) Yiqiu Wang, Shangdi Yu, Yan Gu, and Julian Shun. 2021. A Parallel Batch-Dynamic Data Structure for the Closest Pair Problem. In ACM Symposium on Computational Geometry (SoCG).

- Williams et al. (2021) Marvin Williams, Peter Sanders, and Roman Dementiev. 2021. Engineering MultiQueues: Fast Relaxed Concurrent Priority Queues. In European Symposium on Algorithms (ESA).

- Yang et al. (2005) I-Hsuan Yang, Chien-Pin Huang, and Kun-Mao Chao. 2005. A fast algorithm for computing a longest common increasing subsequence. Inform. Process. Lett. 93, 5 (2005), 249–253.

- Zhang (2003) Hongyu Zhang. 2003. Alignment of BLAST high-scoring segment pairs based on the longest increasing subsequence algorithm. Bioinformatics 19, 11 (2003), 1391–1396.

- Zhou et al. (2019) Tingzhe Zhou, Maged Michael, and Michael Spear. 2019. A Practical, Scalable, Relaxed Priority Queue. In International Conference on Parallel Processing (ICPP). 1–10.

Appendix A Output the LIS from Algorithm 1

Following the terminology in DP algorithms, we call each a state. When we attempt to compute state from state , we say is a decision for state . We say is the best decision of the state if .

To report a specific LIS of the input sequence, we can slightly modify Alg. 1 to compute the best decision for the -th object. Namely, is ’s previous object in the LIS ending at . Then starting from an object with the largest rank, we can iteratively find an LIS in work and span. Our idea is based on a simple observation:

Lemma A.0.

For an object with rank , let be the smallest object with rank before , then is the best decision for state , i.e., .

Proof.

Since and , clearly . We just need to show that , such that it is a candidate of ’s previous object in the LIS. Assume to the contrary that . Note that is the smallest object with rank before . Therefore, for any where and , we have . Hence, cannot obtain a DP value of , which leads to a contradiction. ∎