Parallel Batch-Dynamic d-trees

Abstract

d-trees are widely used in parallel databases to support efficient neighborhood/similarity queries. Supporting parallel updates to d-trees is therefore an important operation. In this paper, we present BDL-tree, a parallel, batch-dynamic implementation of a d-tree that allows for efficient parallel -NN queries over dynamically changing point sets. BDL-trees consist of a log-structured set of d-trees which can be used to efficiently insert or delete batches of points in parallel with polylogarithmic depth. Specifically, given a BDL-tree with points, each batch of updates takes amortized work and depth (parallel time). We provide an optimized multicore implementation of BDL-trees. Our optimizations include parallel cache-oblivious d-tree construction and parallel bloom filter construction.

Our experiments on a 36-core machine with two-way hyper-threading using a variety of synthetic and real-world datasets show that our implementation of BDL-tree achieves a self-relative speedup of up to ( on average) for batch insertions, up to ( on average) for batch deletions, and up to ( on average) for -nearest neighbor queries. In addition, it achieves throughputs of up to 14.5 million updates/second for batch-parallel updates and 6.7 million queries/second for -NN queries. We compare to two baseline d-tree implementations and demonstrate that BDL-trees achieve a good tradeoff between the two baseline options for implementing batch updates.

I Introduction

Nearest neighbor search is used in a wide range of applications, such as in databases, machine learning, data compression, and cluster analysis. One popular data structure for supporting -nearest neighbor (-NN) search in low dimensional spatial data is the d-tree, originally developed by Bentley [1], as it efficiently builds a recursive spatial partition over point sets.

There has been a significant body of work (e.g., [1, 2, 3, 4, 5, 6, 7, 8, 9]) devoted to developing better d-tree variants, both in terms of parallelization and spatial heuristics. However, none of these approaches tackle the problem of parallelizing batched updates, which is important given that many real-world data sets are being frequently updated. In particular, in a scenario where the set of points is being updated in parallel, existing approaches either become imbalanced or require full rebuilds over the new point set. The upper tree in Figure 1 shows the baseline approach that simply rebuilds the d-tree on every insert and delete, maintaining perfect spatial balance but adding overhead to the update operations. On the other hand, the lower tree in Figure 1 shows the other baseline approach, which never rebuilds and instead only inserts points into the existing spatial partition and marks deleted points as tombstones. This gives fast updates at the cost of potentially skewed spatial partitions.

Procopiuc et al. [9] tackled the problem of a dynamically changing point set with their Bkd-Tree, a data structure for maintaining spatial balance in the face of batched updates. However, it was developed for the external memory case and is not parallel. Similarly, Agarwal et al. [4] developed a dynamic cache-oblivious d-tree, but it was not parallel and did not support batch-dynamic operations.

We adapt these approaches and develop BDL-tree, a new parallel, in-memory d-tree-based data structure that supports batch-dynamic operations (in particular, batch construction, insertion, and deletion) as well as exact -NN queries. BDL-trees consist of a set of exponentially growing d-trees and perform batched updates in parallel. This structure can be seen in Figure 2. Just as in the Bkd-tree and cache-oblivious tree, our tree structure consists of a small buffer region followed by exponentially growing static d-trees. Inserts are performed by rebuilding the minimum number of trees necessary to maintain fully constructed static trees. Deletes are performed on the underlying trees, and we rebuild the trees whenever they drop to below half of their original capacity. Our use of parallelism, batched updates, and our approach to maintaining balance after deletion are all distinct from the previous trees that we draw inspiration from. We show that given a BDL-tree with points, each batch of updates takes amortized work and depth (parallel time). As part of our work, we develop, to our knowledge, the first parallel algorithm for the construction of cache-oblivious d-trees. Our construction algorithm takes work and depth. In addition, we implement parallel bloom filters to improve the performance of batch updates in practice. We also present a cache-efficient method for performing -NN queries in BDL-tree. We show theoretically that BDL-trees have strong asymptotic bounds on the work and depth of its operations.

We experimentally evaluate BDL-trees by designing a set of benchmarks to compare its performance against the two baseline approaches described above, which we implemented using similar optimizations. First, we perform scalability tests for each of the four main operations, construction, batch insertion, batch deletion, and -NN in order to evaluate the scalability of our data structure on many cores. On a 36-core machine with two-way hyper-threading, we find that our data structure achieves self-relative speedups of up to ( on average) for construction, up to ( on average) for batch insertion, up to ( on average) for batch deletion, and up to ( on average) for full -NN. The largest dataset we test consists of 321 million 3-dimensional points. Then, we design a set of benchmarks that perform a mixed set of updates and queries in order to better understand the performance of BDL-trees in realistic scenarios. We find that, when faced with a mixed set of batch operations, BDL-trees consistently outperforms the two baselines and presents the best option for such a mixed dynamic setting. Our source code implementing BDL-trees is publicly available at https://github.com/rahulyesantharao/batch-dynamic-kdtree.

II Related Work

Numerous tree data structures for spatial search have been proposed in the literature, including the d-tree [1], quadtree [10], ball tree [11], cover tree [12], and the R-tree [13]. Among them, the d-tree is a well-known data structure proposed by Bentley [1] in 1975. It is simple and yet can handle many types of queries efficiently. Bentley showed that the d-tree incurs time for insertion and deletion of random nodes, and logarithmic average query time was observed in practice. The data structure has been extensively studied in the parallel setting. Shevtsov [14] et al. proposed fast construction algorithms for the d-tree for ray tracing, by using binning techniques to distribute the workload among parallel processors. Agarwal [5] et al. proposed parallel algorithms for a series of spatial trees, including the d-tree under the massively parallel communication (MPC) model. Wehr and Radkowski [7] proposed fast sorting-based algorithms for constructing d-trees on the GPU. Zellmann [8] proposed algorithms for CPUs and GPUs for d-trees used in graphics rendering.

The idea of decomposing a data structure into a logarithmic number of structures for the sake of dynamism has been proposed and used in many different scenarios. Bentley [2, 3] first proposed dynamic structures for decomposable search problems. Specifically, he proposed general methods for converting static data structures into dynamic ones with logarithmic multiplicative overhead in cost, using a set of static data structures with size being a power of two. Agarwal et al. [5] designed cache-oblivious data structures for orthogonal range searching using ideas from the log-structured tree. O’Neil et al. [15] proposed the log-structured merge (LSM) tree, an efficient file indexing data structure that supports efficient dynamic inserts and deletes in systems with multiple storage hierarchies. Specifically, batches of updates are cascaded over time, from faster storage media to slower ones. A parallel version of the LSM tree has been implemented [16] by dividing the key-space independently across cores, and processing it in a data-parallel fashion. Our work, on the other hand, proposes a parallel batch-dynamic d-tree, where each batch of updates can be processed efficiently in parallel, while supporting efficient -NN queries.

We have recently discovered that, concurrent with our work, Dobson and Blelloch [17] developed the zd-tree, a data structure that also supports parallel batch-dynamic updates and -NN queries. Their insight is to modify the basic d-tree structure by sorting points based on their Morton ordering and splitting at each level based on a bit in the Morton ordering. For -NN queries, they provide a root-based method, which is the traditional top-down -NN search algorithm and is what we implement, as well as a leaf-based method, which directly starts from the leaves and searches up for nearest neighbors. The leaf-based method works and is faster when the query points are points in the dataset, as it saves the downward traversal to search for the query points. They prove strong work and depth bounds for construction, batch updates, and -NN queries assuming data sets with bounded expansion constant and bounded ratio. Without these assumptions, however, our bounds would be at least as good as theirs.

In Dobson and Blelloch’s implementation, they optimize -NN queries by presorting the query points using the Morton ordering to improve cache locality. Their algorithm assumes that there is a maximum bounding box where all future data will fit into, while our algorithm does not make this assumption. Their implementation discretizes the double coordinates into 64-bit integers in order to perform Morton sort on them. As a result, they can only use at most bits for each of the dimensions. Directly extending the implementation to higher dimensions would either lead to larger leaf sizes or using larger-width coordinates for the Morton sort, either of which could add overheads. Their experiments consider only 2D and 3D datasets, while we test on higher dimensional datasets as well. For 2D and 3D datasets, the running times that they report seem to be in the same ballpark as our times (their -NN queries for constructing the -NN graph are much faster than ours due to the presorting described above), after adjusting for number of processors and dataset sizes, but it would be interesting future work to experimentally compare the codes on the same platform and datasets, including higher-dimensional ones. It would also be interesting to integrate some of their optimizations into our code, and to combine the approaches to build a log-structured version of the zd-tree.

III Preliminaries

III-A d-tree

The d-tree, first proposed by Bentley [1], is a binary tree data structure that arranges and holds spatial data to speed up spatial queries. Given a set of -dimensional points, the d-tree is a balanced binary tree where each node represents a bounding box of a subset of the input points. The root node represents all of the points (and thus the tightest bounding box that includes all the points in ). Each non-leaf node holds a splitting dimension and splitting value that splits its bounding box into two halves using an axis-aligned hyperplane in the splitting dimension. Each child node represents the points in one of the two halves. This recursive splitting stops when the nodes hold some small constant number of points—these nodes are the leaves and directly represent the points.

III-A1 -NN Search using the d-tree

Given a query coordinate , a -NN query finds the nearest neighbors of amongst elements of the d-tree by performing a pruned search on the tree [18]. The canonical approach is to traverse the tree while inserting points in the current node into a buffer that maintains only the nearest neighbors encountered so far. Then, entire subtrees can be pruned during the traversal based on the distance of the ’th nearest neighbor found so far.

III-A2 Dual-Tree -NN

Besides the canonical approach explained above, there has been a lot of prior work on “dual-tree” approaches [19, 6, 20, 21], which provide speedups on serial batched -NN queries. In particular, the dual-tree approach involves building a second d-tree over the set of query points, and then exploring the two trees simultaneously in order to exploit the spatial partitioning provided by the d-tree structure. We parallelized and tested this dual-tree approach in our work.

III-B Batch-Dynamic Data Structures

The concept of a parallel batch-dynamic data structure has become popular in recent years [22, 23] as an important paradigm due to the availability of large (dynamic) datasets undergoing rapid changes. The idea is to batch together operations of a single type and perform them as a single batched update, rather than one at a time. This approach offers two benefits. First, from a usability perspective, it is often the case (especially in applications with a lot of data) that operations on a data structure can be grouped into phases or batches of a single type, so this restriction in the usage of the data structure does not significantly limit the usefulness of the data structure. Secondly, from a performance perspective, batching together operations of a single type allows us to group together the involved work and derive significantly more parallelism than otherwise might have been possible (while also avoiding the concurrency issues that might arise with batching together operations of varying types).

III-C Baselines

In order to benchmark and test the performance of BDL-trees, we implement two parallel baseline d-trees that use opposite strategies for providing batch-dynamism. In particular, the first baseline d-tree simply rebuilds the tree after every batch insertion and batch deletion. With this approach, the tree is able to maintain a fully balanced structure in the face of a dynamically changing dataset, enabling consistently high performance for -NN queries. This comes at the cost of reduced performance for updates. The second baseline d-tree never rebuilds the tree and simply maintains the initial spatial partition, inserting points into and deleting points from the existing structure. This allows for extremely fast batch insertions and deletions, but could potentially lead to a skewed structure and cause reduced -NN performance. The difference between these two baselines is graphically demonstrated in Figure 1. We demonstrate experimentally that BDL-tree achieves a balanced tradeoff between these two baseline options for batch-dynamic parallel d-trees on which we are performing -NN queries. It outperforms both in the dynamic setting where -NN queries and batched updates are all being used.

III-D Logarithmic Method

The logarithmic method [2, 3] for converting static data structures into dynamic ones is a very general idea. At a high level, the idea is to partition the static data structure into multiple structures with exponentially growing sizes (powers of 2). Then, inserts are performed by only rebuilding the smallest structure necessary to account for the new points. In the specific case of the d-tree, a set of static d-trees is allocated, with capacities in the set , as well as an extra buffer tree with size . Then, when an insert is performed, the insert cascades up from the buffer tree, rebuilding into the first empty tree with all the points from the lower trees. If desired, the sizes of all of the trees can be multiplied by a buffer size , which is some constant that is tuned for performance. This structure is illustrated in Figure 2. In the figure, all of the trees shown are full; one can imagine that the tree with size is empty, so the next insert would cause the buffer and trees 0, 1, and 2 to cascade up to it.

III-E Computational Model

We use the standard work-depth model [24, 25] for multicore algorithms to analyze theoretical efficiency. The work of an algorithm is the total number of operations used and the depth is the length of the longest sequential dependence (i.e., the parallel running time). An algorithm with work and depth can be executed on processors in expected time [26]. Our goal is to come up with parallel algorithms that have low work and depth.

III-E1 Parallel Primitives

We use the following parallel primitives in our algorithms.

-

•

Prefix sum takes as input a sequence of values , an associative binary operator , and an identity , and returns the sequence as well as the overall sum of the elements. Prefix sum can be implemented in work and depth [25].

-

•

Partition takes an array , a predicate function , and a partition value and outputs a new array such that all the values appear before all the values . Partition can be implemented in work and depth [25].

-

•

Median partition takes elements and a comparator and partitions the elements based on the median value in work and depth [25].

IV BDL-tree

In this section, we introduce BDL-tree, a parallel batch-dynamic d-tree implemented using the logarithmic method [2, 3] (discussed in Section III-D). BDL-trees build on ideas from the Bkd-Tree by Procopiuc et al. [9] and the cache-oblivious d-tree by Agarwal et al. [4]. The structure is depicted in Figure 2.

We implement the underlying d-trees in an BDL-tree as nodes in a contiguous memory array, where the root node is the first element in the array. The d-trees are built using the van Emde Boas (vEB) [27, 28, 4] recursive layout. Agarwal [4] et al. show that this memory layout can be used with d-trees to make traversal cache-oblivious, although dynamic updates on a single tree become very complex. However, in the logarithmic method, the underlying d-trees themselves are static, and so we are able to sidestep the complexity of cache-oblivious updates on these trees and benefit from the improved cache performance of the vEB layout. For the buffer region of the BDL-tree, we use a regular d-tree, laid out like a binary-heap in memory (i.e., nodes are in a contiguous array, and the children of index are , ). We will discuss the key parallel algorithms that we used in our implementation: construction, deletion, and -NN on the underlying individual d-trees (Section IV-A) and construction, insertion, deletion, and -NN on BDL-tree (Section IV-B). Note that we do not need to support insertions on individual d-trees, because our BDL-tree simply rebuilds the necessary d-trees upon insertions. We use subscript S to denote algorithms on the underlying d-trees, and subscript L to denote algorithms on the full logarithmic data structure.

IV-A Single-Tree Parallel Algorithms

IV-A1 Parallel vEB Construction

Input: Point Set

Output: d-tree over , laid out with the vEB layout on a contiguous memory array of size .

The algorithm for parallel construction of the cache-oblivious d-tree is shown in Algorithm 1. The function itself is recursive, and so the top level BuildvEB function allocates space on line 2 and calls the recursive function BuildvEBRecursive. Refer to Figure 3 for a graphical representation of this construction.

The recursive function BuildvEBRecursive maintains state with 5 parameters: a point set , a node index , a splitting dimension , the number of levels to build , and whether it is building the top or bottom of the tree (indicated by ). On line 5, we check for the base case—if the number of levels to build is 1, then we have to construct a node. If this is the top of a tree, then this node will be an internal node, so we perform a parallel median partition in dimension and save it as an internal node. On the other hand, if this is the bottom of the tree, we construct a leaf node that holds all the points in . Lines 6–9 form the recursive step. In accordance with the exponential layout [4], we have to first construct the top “half” of the tree and then the bottom “half”. Therefore, on line 6, we compute the number of levels in the bottom portion as the hyperceiling111The hyperceiling of , denoted as is the smallest power of 2 that is greater than or equal to , i.e., . of and the remaining number of levels in the top portion of the tree as . On line 7, we recursively build the top half of the tree. Then, on line 8, we note that because the top half of the tree is a complete binary tree with levels, it will use nodes. Therefore, we compute , the node index where the bottom half of the tree should start because the trees are laid out consecutively in memory. Finally, on line 9, we construct each of the subtrees that fall under the top half of the tree, each with levels. Each of these trees falls into a distinct segment of memory in the array, and so we can perform this construction in parallel across all of the subtrees by precomputing the starting index for each of the subtrees.

We trace this process on an example in Figure 3, in which BuildvEB is called on a set of 8 points. This spawns a call to BuildvEBRecursive(, 0, 0, 4, bottom). On line 6, we will compute and , and on line 7, we spawn a recursive call to BuildvEBRecursive(, 0, 0, 2, top). This call is shown as the solid box around the top 3 nodes in Figure 3. In this call, we will hit one further level of recursion before laying out the 3 nodes in indices 0, 1, 2. Then, the original recursive call will proceed to line 8, where it will compute as the index to begin laying out the bottom subtrees. Finally, on line 9, we will precompute that the starting indices for the 4 bottom subtrees are . This results in 4 parallel recursive calls, shown in the 4 lower dashed boxes in Figure 3. Each of these recursive calls internally has one more level of recursion to lay out their 3 nodes.

Theorem 1.

The cache-oblivious d-tree with a vEB layout can be constructed over points in work and depth.

Proof.

The work bound is obtained by observing that there are levels in the fully-constructed tree, and the median partition at each level takes work, giving a total of work. For the depth bound, at each recursive step we first build an upper tree with size , and then construct the lower trees in parallel, each with size . Further, we use an -depth prefix sum to compute at every level except the base case and an -depth median partition in the base case. Overall, this results in depth. ∎

After the vEB-layout d-tree is constructed, it can be queried as a regular d-tree—the only difference is the physical layout of the nodes in memory. The correctness of this recursive algorithm can be seen through induction on the number of levels. In particular, we form two inductive hypotheses:

-

•

BuildvEBRecursive(, , , , top) creates a contiguous, fully-balanced binary tree with levels rooted at memory location . Furthermore, this binary tree consists of internal d-tree nodes that equally split the point set in half at each level.

-

•

BuildvEBRecursive(, , , , bottom) creates a contiguous d-tree with levels rooted at memory location .

The base cases, with , for these inductive hypotheses are explicitly given on line 5. Then, the inductive step follows easily by noting that the definition of hyperceiling implies that the recursive calls on line 9 are all sized such that .

IV-A2 Parallel Deletion

Input: Point Set

The algorithm for parallel deletion from a single d-tree is shown in Algorithm 2. The function itself is recursive, so the top level Erase calls the subroutine EraseRecursive on the root node on line 2.

The recursive function EraseRecursive acts on one node at a time, represented by the index . On line 4, it checks for the base case—if the current node is a leaf node, it simply performs a linear scan to mark any points in the leaf node that are also in as deleted. Then, it returns NULL if the entire leaf was emptied; otherwise, it returns the current node . Lines 5–7 represent the recursive case. First, on line 5, we perform a parallel partition of around the current node’s splitting hyperplane. We refer to the lower partition as and the upper partition as . On line 6, we recurse on the left and right subtrees in parallel, passing to the left subtree and to the right. Finally, line 7 updates the tree structure. We always ensure that every node has 2 children in order to flatten any unnecessary tree traversal. The return value of EraseRecursive indicates the node that should take the place of in the tree (potentially the same node)—a return value of NULL indicates that the entire subtree rooted at was removed. So, if both the left and right child are removed, then we can remove the current node as well by returning NULL. On the other hand, if neither the left or right child are removed, then the subtree is still intact, and we simply reset the left and right child pointers of the current node and return the current node , indicating that it was not removed. Finally, if exactly one of the children was removed, then we remove the current node as well and let the remaining child connect directly to its grandparent—in this way, we remove an unnecessary internal splitting node. We do this by simply returning the non-NULL child, signaling that it will take the place of the current node in the d-tree.

Theorem 2.

Deleting a batch of points from a single d-tree constructed over points can be done in work and depth in the worst case.

Proof.

We can see the work bound by noting that each of the points traverse down levels as part of the algorithm. For the depth, note that in the worst-case the parallel partition at each level operates over points at each level. Because parallel partition has logarithmic depth, this would result in a worst-case depth at each of the levels, giving the overall depth of . ∎

IV-A3 Data-Parallel -NN

We execute our -NN searches in a data-parallel fashion by parallelizing across all of the query points in a batch. The -NN search for each point is executed serially. We implement a “-NN buffer”, a data structure that maintains a list of the current -nearest neighbors and provide quick insert functionality to test and insert new points if they are closer than the existing set. The data structure maintains an internal buffer of size . To insert a point, it simply adds that point to the end of the buffer. If the buffer is filled up, then it uses a serial selection algorithm to partition the buffer around the -th nearest element and clears out the remaining elements. This achieves a serial amortized runtime (because the selection partition step is and is only performed for every insertions).

To implement batched -NN on the d-tree, we perform a -NN search for each individual point in parallel across all the points. We now describe the -NN method (kNN) for a single point . We first allocate a -NN buffer for the point. Then, we recursively descend through the d-tree searching for the leaf that falls into. When we find this leaf, we add all of the points in the leaf to the -NN buffer. Then, as the recursion unfolds, we check whether the -NN buffer has points. If it does not, we add all the points in the sibling of the current node to the -NN buffer to try to fill up the buffer with nearby points as quickly as possible to improve our estimate of the -th nearest neighbor. Otherwise, we use the current distance of the -th nearest neighbor to prune subtrees in the tree. In particular, if the bounding box of the current subtree is entirely contained within the distance of the -th nearest neighbor, we add all points in the subtree to the -NN buffer. If the bounding box is entirely disjoint, then we prune the subtree. Finally, if they intersect, we recurse on the subtree.

Theorem 3.

For a constant , -NN queries over a batch of points can be performed over a single d-tree containing points in worst-case work and worst-case depth.

Proof.

In the worst-case, we have to search the entire tree, of size , resulting in total work of (due to the amortized insert cost for -NN buffers) and depth of , as the queries are done in parallel over the batch, but each search is serial. ∎

IV-B Batch-Dynamic Parallel Algorithms

This section describes our algorithms for supporting batch-dynamic updates on BDL-trees.

IV-B1 Parallel Insertion

Input: Point Set

Insertions are performed in the style of the logarithmic method [2, 3], with the goal of maintaining the minimum number of full trees within BDL-tree. Thus, upon inserting a batch of points, we rebuild larger trees if it is possible using the existing points and the newly inserted batch. This is implemented as shown in Algorithm 3, and depicted in Figure 4.

First, on line 2, we build a bitmask of the current set of full static trees in the logarithmic structure. Then, on line 3, because the buffer d-tree has size , we can add to to compute a new bitmask of full trees that would result if we added points to the tree structure. As an implementation detail, note that we first add points to the buffer d-tree—if we fill up the buffer d-tree, then we gather the points from it and treat them as part of , effectively increasing the size of by . Then, on line 4, taking the bitwise difference between these two bitmasks gives the set of trees that should be consolidated into new larger trees—specifically, any tree that is set in but not in must be constructed from trees that are set in but not in . After determining which trees should be combined into new trees, on line 5 we construct all the new trees in parallel—in parallel for each new tree to be constructed, we deconstruct and gather all the points from trees that are being combined into it and then we construct the new tree over these points and any additional required points from using Algorithm 1.

Refer to Figure 4 for an example of this insertion method (suppose for this example that ). In Figure 4(a), the BDL-tree contains points, giving a bitmask of (because only the smallest tree is in use). If we insert points, then we put one node in the buffer tree and compute , and so we have to deconstruct static tree 0 and build static tree 1, as shown in Figure 4(b). Then, if we insert points again, then we again put one point in the buffer tree and compute , and so we simply construct tree 0 on the new points (leaving tree 1 intact), as seen in Figure 4(c). Finally, if we then insert points, we note that this would fill the buffer up, so we take 1 point from the buffer and insert points; then, , and so we deconstruct trees 0, 1 and construct tree 2, as seen in Figure 4(d).

IV-B2 Parallel Deletion

Input: Point Set

When deleting a batch of points, the goal is to maintain balance within the subtrees. Thus, if any subtree decreases to less than half of its full capacity, we move all the points down to a smaller subtree in order to maintain balance. As seen in Algorithm 4, this is implemented as a three-step process.

On line 2, we call a parallel bulk erase subroutine on each of the individual trees in parallel in order to actually erase the points from the trees. On line 3, we scan the trees in parallel and collect the points from all trees which have been depleted to less than half of their original capacity. Finally, on line 4, we use the Insert routine to reinsert these points into the structure.

Theorem 4.

Given an BDL-tree that was created using only batch insertions and deletions, each batch of updates takes amortized work and depth, where is the number of points in the tree before applying the updates.

Proof.

We first argue the work for only performing insertions starting from an empty BDL-tree. In the worst case, points are added to the structure one by one. Then, as similar to the analysis by Bentley [2], the total work incurred is given by noting that the number of times the ’th tree is rebuilt when inserting points one by one is . Then, summing the total work gives , where we use the work bound from Theorem 1. After inserting a batch of size , we have points in the BDL-tree, and so the amortized work for the batch is . Now, if deletions occurred prior to a batch insertion, and the current BDL-tree has points, there still must have been previous insertions (since we started with an empty data structure), and so the work of this batch can still be amortized against those insertions. We now argue the depth bound. When a batch inserted into the tree, the points from smaller trees can be gathered in worst-case depth (if all the points must be rebuilt) and the rebuilding process takes worst case depth, using the result from Theorem 1.

The initial step of deleting the batch of points from each of the underlying d-trees incurs work (there are d-trees, each taking work ) and depth . Then, collecting the points that need to be reinserted can be done in worst-case depth and the reinsertion takes depth, from before. Overall, the depth is . The amortized work for reinserting points in trees that are less than half full is , as every point we reinsert can be charged to a deletion of another point, either from this batch or from a previous batch. This is because for a tree that is half full, there must be at least as many deletions from the tree as the number of points remaining in the tree. ∎

IV-B3 Data-Parallel -NN

Input: Point Set

Output: An array of arrays of the nearest neighbors of each point in .

In the data-parallel -NN implementation, we parallelize over the set of points given to search for nearest neighbors. First, on line 2, we allocate a -NN buffer for each of the points in . Then, for each of the non-empty trees in BDL-tree, we call the data-parallel -NN subroutine on the individual tree, passing in the set of points and the set of -NN buffers. Because we reuse the same set of -NN buffers for each underlying -NN call, we eventually end up with the -nearest neighbors across all of the individual trees for each point in .

Theorem 5.

For a constant , -NN queries over a batch of points over the points in the BDL-tree can be performed in work and depth.

Proof.

These bounds follow directly from the bounds of the underlying individual -NN calls. The -NN routine on the ’th underlying tree, with size , has worst-case work and depth . Summing over all gives the bounds. ∎

V Implementation and Optimizations

In this section, we describe implementation details and optimizations that we developed to speed up the BDL-tree in practice.

V-A Parallel Bloom Filter

The bloom filter is a probabilistic data structure for testing set membership, which can give false positive matches but no false negative matches [29]. When erasing a batch of points from the BDL-tree, we need to potentially search for every point to be deleted within every individual underlying d-tree. To mitigate this overhead, we use a bloom filter to prefilter points to be erased from each individual d-tree that definitely are not contained within it. Specifically, we implemented a parallel bloom filter and added one to each individual d-tree within the BDL-tree to track the points in that individual d-tree. Before erasing an input batch from each individual d-tree, we filter the batch with that d-tree’s bloom filter. This optimization adds some overhead to the construction and insertion subroutines, but provides significant benefits for the delete subroutine. To mitigate some of this overhead, we construct the bloom filters only when they are needed, rather than during construction.

V-B Data-Parallel -NN Structure

We tested three different variants of the data-parallel -NN search in order to determine the best parallelization scheme. Each scheme consists of 2 nested for loops; one over the input points and one over the individual d-trees. In the first variant, the outer loop is over the points and the inner loop is over the d-trees. We only parallelize the outer loop, so we perform a -NN search over all the trees for each point in parallel. In the second variant, we switch the loop order, so the outer loop is over the d-trees and the inner loop is over points. We parallelize both the inner and outer loops, so we perform a -NN search over each of the trees in a data-parallel fashion (i.e., search for the nearest neighbors of each point in parallel), but we also parallelize over the trees. In this case, because our -NN buffer is not thread-safe, for each point we have to allocate a separate buffer for each tree and combine the results at the end, which adds some extra overhead. In the third variant, described in Section IV-B3, the outer loop is over the d-trees and the inner loop is over points, but in contrast to the second scheme, we only parallelize the inner loop over points. So, we perform a data-parallel -NN over each of the individual d-trees, one at a time. We found that the second scheme gives the most parallelism, but the third scheme has the fastest end-to-end running time, both serially and in parallel. This is due to better cache locality of the third method—each tree is completely processed before moving on to the next one. One other optimization we used in this third scheme was to process the underlying trees in order from largest to smallest. We experimentally found that the data-parallel -NN search scaled better on large trees, and so this gave better radius estimates earlier (as opposed to processing the trees from smallest to largest).

V-C Parallel Splitting Heuristic

We implemented two different splitting heuristics for our d-trees: splitting by object median and splitting by spatial median. Object median refers to a true median—at each split, we split around the median of the coordinates of the points in that dimension. On the other hand, for spatial median, we compute the average of the minimum and maximum of the coordinates of the points in the current dimension and use this as the splitting hyperplane. The spatial median is faster to compute, but leads to potentially less balanced trees as it is not strictly splitting the points in half at every level. We implement the object median with a parallel in-place sort [25, 30] and we implement the spatial median in two steps. First, we perform two parallel prefix sums, using , as the predicate functions, to compute the minimum and maximum of the values, respectively. Then, we compute the spatial median as the average of these values and perform a parallel partition around this value.

V-D Coarsening

We introduced a number of optimizations that were controlled by tunable parameters in our implementation. One key optimization was the coarsening of leaves, in which we let each leaf node represent up to 16 points rather than 1. This reduces the amount of space needed for node pointers and also improves cache locality when traversing the tree, as the points in a leaf node are stored contiguously. A second key optimization was the coarsening of the serial base cases of our algorithms, in which we switch from a parallel algorithm to a serial version for recursive cases involving subtrees with less than 1000 points in order to mitigate the overhead of spawning new threads. A third parameter was the buffer tree size, which we set to 1024.

VI Experiments

We designed a set of experiments to investigate the performance and scalability of BDL-tree and compare it to the two baselines described earlier.

-

1.

B1 is a baseline described in Section III-C, where the d-tree is rebuilt on each batch insertion and deletion in order to maintain balance. This allows for improved query performance (as the tree is always perfectly balanced) at the cost of slowing down dynamic operations.

-

2.

B2 is another baseline described in Section III-C. It inserts points directly into the existing tree structure without recalculating spatial splits. This results in very fast inserts and deletes at the cost of potentially skewed trees (which would slow down query performance).

-

3.

BDL is our BDL-tree described in Section IV. It represents a tradeoff between batch update performance and query performance. By maintaining a set of balanced trees, it is able to achieve good performance on updates without sacrificing the quality of the spatial partition.

We use the following set of experiments to measure the scalability of BDL-tree and compare its performance characteristics to the baselines.

-

1.

Construction (Section VI-A): construct the tree over a dataset.

-

2.

Insertion (Section VI-B1): insert fixed-size batches of points into an empty tree until the entire dataset is inserted.

-

3.

Deletion (Section VI-C1): delete fixed-size batches of points from a tree constructed over the entire dataset until the entire dataset is deleted.

-

4.

-NN (Section VI-D1): find the -nearest neighbors of all the points in the dataset (i.e., compute the -NN graph of the dataset).

We also designed the following microbenchmarks in order to better explore the design tradeoffs that BDL-tree makes when compared to the baselines.

- 1.

-

2.

Varying after Batched Inserts (Section VI-D2): we build a tree using a set of batched insertions and then measure the -NN search performance with a range of values to measure the impact of on throughput and the impact of dynamic updates on -NN performance.

-

3.

Mixed Insert, Delete, and -NN Searches (Section VI-D3): we perform a series of batch updates (insertions and deletions) interspersed with -NN queries to measure the performance of the data structures over time.

We run all of the experiments over BDL-tree and the two baselines. In addition, for the scalability and batch size experiments, we also compare the object median and spatial median splitting heuristics described in Section V-C.

The experiments are all run on an AWS c5.18xlarge instance with 2 Intel Xeon Platinum 8124M CPUs (3.00 GHz), for a total of 36 two-way hyper-threaded cores and 144 GB RAM. Our experiments use all hyper-threads unless specified otherwise. We compile our benchmarks with the g++ compiler (version 9.3.0) with the -O3 flag, and use ParlayLib [30] for parallelism. All reported running times are the medians of 3 runs, after one extra warm-up run for each experiment.

We run the experiments over 9 datasets, consisting of 6 synthetic datasets and 3 real-world datasets. We use two types of synthetic datasets. The first is Uniform (U), consisting of points distributed uniformly at random inside a bounding hyper-cube with side length , where is the number of points. The second is VisualVar (V), a clustered dataset with variable-density, produced by Gan and Tao’s generator [31]. The generator produces points by performing a random walk in a local region, but jumping to random locations with some probability. For each of these two types, we generate them in 2D, 5D, and 7D, and for 10,000,000 points. We also use 3 real-world datasets: 10D-H-1M [32, 33] is a 10-dimensional dataset consisting of 928,991 points of home sensor data; 16D-C-4M [34, 35] is a 16-dimensional dataset consisting of 4,208,261 points of chemical sensor data; and 3D-C-321M [36] is a 3-dimensional dataset consisting of 321,065,547 points of astronomy data. Due to time constraints, we only ran experiments on 3D-C-321M using BDL-tree in parallel to demonstrate that BDL-tree can scale to large datasets.

VI-A Construction

In this benchmark, we measure the time required to construct a tree over each of the datasets. The results using an object median splitting heuristic are shown in Table I(a) and the results using a spatial median splitting heuristic are shown in Table I(b). Figure 5(a) shows the scalability of the throughput on the 10M points 7D uniform dataset.

As we can see from the results, BDL achieves similar or better performance both serially and in parallel than both B1 and B2, and has similar or better scalability than both. With the object median splitting heuristic, it achieves up to speedup, with an average speedup of . We also note that the single-threaded runtimes are faster with the spatial-median splitting heuristic than with the object median splitting heuristic. This is expected, because spatial median only involves splitting points at each level compared with finding the median for object-median, hence it is less expensive to compute; however, we also note that the scalability for spatial median is lower because there is less work to distribute among parallel threads.

| 1 | 36h | |||||

|---|---|---|---|---|---|---|

| B1 | B2 | BDL | B1 | B2 | BDL | |

| 2D-U-10M | 20.6s | 16.3s | 14.4s | 0.5s (40.0x) | 3.7s (4.5x) | 0.4s (34.5x) |

| 2D-V-10M | 20.5s | 16.2s | 14.2s | 0.5s (40.3x) | 3.6s (4.5x) | 0.4s (34.8x) |

| 5D-U-10M | 23.3s | 20.8s | 16.3s | 0.7s (35.2x) | 4.8s (4.3x) | 0.5s (30.3x) |

| 5D-V-10M | 22.8s | 20.2s | 15.8s | 0.7s (34.9x) | 4.6s (4.4x) | 0.5s (29.2x) |

| 7D-U-10M | 27.0s | 24.0s | 17.1s | 0.8s (33.0x) | 5.4s (4.4x) | 0.6s (27.5x) |

| 7D-V-10M | 26.2s | 23.2s | 16.5s | 0.8s (33.5x) | 5.3s (4.4x) | 0.6s (26.6x) |

| 10D-H-1M | 1.5s | 1.5s | 2.8s | 0.1s (23.7x) | 0.5s (3.4x) | 0.1s (23.8x) |

| 16D-C-4M | 13.5s | 14.5s | 11.0s | 0.5s (25.2x) | 3.8s (3.8x) | 0.5s (20.4x) |

| 3D-C-321M | – | – | – | – | – | 20.4s |

| 1 | 36h | |||||

|---|---|---|---|---|---|---|

| B1 | B2 | BDL | B1 | B2 | BDL | |

| 2D-U-10M | 10.7s | 2.3s | 5.3s | 0.5s (23.8x) | 1.6s (1.4x) | 0.4s (13.5x) |

| 2D-V-10M | 10.9s | 2.5s | 5.5s | 0.5s (22.8x) | 1.7s (1.5x) | 0.4s (13.5x) |

| 5D-U-10M | 13.7s | 3.4s | 6.7s | 0.8s (18.0x) | 2.3s (1.5x) | 0.6s (10.7x) |

| 5D-V-10M | 14.4s | 4.0s | 7.1s | 0.8s (17.4x) | 2.6s (1.5x) | 0.7s (10.3x) |

| 7D-U-10M | 16.8s | 4.4s | 7.1s | 1.0s (17.2x) | 2.9s (1.5x) | 0.8s (9.3x) |

| 7D-V-10M | 17.3s | 5.2s | 8.3s | 1.0s (16.9x) | 3.3s (1.6x) | 0.9s (9.2x) |

| 10D-H-1M | 1.4s | 0.8s | 3.2s | 0.1s (11.0x) | 0.5s (1.6x) | 0.2s (15.9x) |

| 16D-C-4M | 10.0s | 5.3s | 7.3s | 0.7s (14.0x) | 3.0s (1.8x) | 0.7s (10.2x) |

| 3D-C-321M | – | – | – | – | – | 20.8s |

VI-B Insertion

In this benchmark, we measure the performance of our batch insertion implementation as compared to the baselines. We split this experiment into two separate benchmarks, one to measure the full scalability and performance of our implementation, and one to measure the impact of varying batch sizes.

VI-B1 Scalability

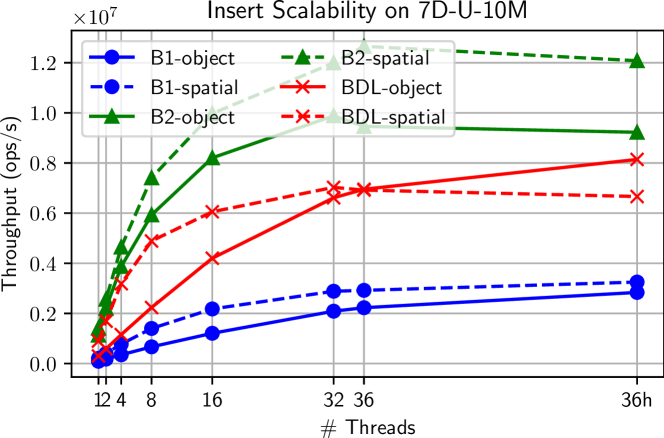

In this benchmark, we measure the time required to insert 10 batches each containing 10% of the points in the dataset into an initially empty tree for each of our two baselines as well as our BDL-tree. The results using object median and spatial median splitting heuristics are shown in Tables II(a), II(b), respectively. Figure 5(b) shows the scalability of the throughput on the 10M points 7D uniform dataset.

We see that B2 achieves the best performance on batched inserts—this is due to the fact that it does not perform any extra work to maintain balance and simply directly inserts points into the existing spatial structure. BDL achieves the second-best performance—this is due to the fact that it does not have to rebuild the entire tree on every insert, but amortizes the rebuilding work across the batches. Finally, B1 has the worst performance, as it must fully rebuild on every insertion. Similar to construction, we note that the spatial median heuristic performs better in the serial case but has lower scalability. With the object median splitting heuristic, BDL achieves parallel speedup of up to , with an average speedup of .

| 1 | 36h | |||||

|---|---|---|---|---|---|---|

| B1 | B2 | BDL | B1 | B2 | BDL | |

| 2D-U-10M | 86.1s | 5.9s | 24.8s | 2.2s (39.5x) | 0.6s (10.1x) | 0.7s (35.4x) |

| 2D-V-10M | 86.1s | 5.9s | 24.4s | 2.2s (39.6x) | 0.6s (10.2x) | 0.7s (35.5x) |

| 5D-U-10M | 97.9s | 7.6s | 29.2s | 2.9s (33.6x) | 0.9s (8.7x) | 1.0s (30.3x) |

| 5D-V-10M | 94.5s | 7.5s | 28.1s | 2.8s (33.2x) | 0.9s (8.8x) | 1.0s (29.3x) |

| 7D-U-10M | 109.7s | 8.8s | 33.0s | 3.5s (31.1x) | 1.1s (8.1x) | 1.2s (26.9x) |

| 7D-V-10M | 106.1s | 8.7s | 31.7s | 3.5s (30.7x) | 1.1s (8.2x) | 1.2s (25.6x) |

| 10D-H-1M | 7.9s | 0.7s | 1.7s | 0.4s (22.1x) | 0.1s (5.7x) | 0.1s (16.3x) |

| 16D-C-4M | 66.2s | 5.5s | 21.1s | 3.0s (22.2x) | 0.9s (6.4x) | 1.1s (18.3x) |

| 3D-C-321M | – | – | – | – | – | 20.7s |

| 1 | 36h | |||||

|---|---|---|---|---|---|---|

| B1 | B2 | BDL | B1 | B2 | BDL | |

| 2D-U-10M | 32.5s | 4.9s | 5.2s | 1.2s (26.1x) | 0.4s (11.9x) | 0.6s (9.2x) |

| 2D-V-10M | 33.9s | 5.0s | 5.8s | 1.4s (24.0x) | 0.5s (10.2x) | 0.6s (9.2x) |

| 5D-U-10M | 40.7s | 6.1s | 8.8s | 2.3s (17.5x) | 0.7s (9.4x) | 1.1s (8.1x) |

| 5D-V-10M | 44.5s | 6.7s | 10.0s | 2.8s (15.6x) | 0.8s (8.0x) | 1.2s (8.0x) |

| 7D-U-10M | 48.1s | 7.0s | 11.2s | 3.1s (15.6x) | 0.8s (8.5x) | 1.5s (7.4x) |

| 7D-V-10M | 52.6s | 7.6s | 12.5s | 3.7s (14.4x) | 1.0s (7.8x) | 1.6s (7.6x) |

| 10D-H-1M | 6.3s | 0.9s | 1.3s | 0.6s (10.2x) | 0.2s (3.8x) | 0.2s (6.6x) |

| 16D-C-4M | 42.9s | 6.0s | 12.9s | 3.6s (11.8x) | 1.0s (5.9x) | 1.5s (8.5x) |

| 3D-C-321M | – | – | – | – | – | 15.7s |

VI-B2 Batch Size

In this benchmark, we measure the performance of our batch insertion implementation as the size of the batch varies from 1M points to 5M points. We repeatedly perform batched inserts of the specified size until the entire dataset has been inserted. We provide plots of the results for the 2D VisualVar dataset in Figure 6(a) and for the 7D Uniform dataset in Figure 6(b). The first striking result is that the throughput decreases for B2 as the batch size increases, while the throughput increases for B1 and BDL. This is due to the fact that the work that B2 performs increases as the batch size grows—it has to do more work to compute spatial splits over larger batches, whereas for small batches it quickly computes the upper spatial splits on the first batch and never recomputes them. As a result, B2 has best throughput at smaller batch sizes, but the worst at large batch sizes. For B1 and BDL, note that the throughput increases as the batch size increases. This is due to the fact that each insert has an associated overhead of recomputing spatial partitions, and so the larger the batch size, the fewer times this overhead is paid. For larger batch sizes, BDL has the best throughput among the three implementations for object median. This is again due to the fact that it amortizes the work across inserts, rather than having to recompute spatial splits over the entire dataset at each insert. Finally, note that in most cases, the spatial median heuristic has a better throughput than its object median counterpart, as it takes less work to compute.

VI-C Deletion

In this benchmark, we measure the performance of our deletion implementation as compared to the baselines. Similar to the insertion experiment, we split this experiment into two separate benchmarks, one to measure the scalability and performance of our implementation, and one to measure the impact of varying batch size.

VI-C1 Scalability

In this benchmark, we measure the time required to delete 10 batches each containing 10% of the points in the dataset from an initially full tree for each of our two baselines as well as the BDL-tree. The results using an object median splitting heuristic are shown in Table III(a) and the results using a spatial median splitting heuristic are shown in Table III(b). Figure 5(c) shows the scalability of the throughput on the 10M point 7D uniform dataset.

We observe that B2 has vastly superior performance—it does almost no work other than tombstoning the deleted points so it is extremely efficient. Next, we see that BDL has the second-best performance, as it amortizes the rebuilding across the batches, rather than having to rebuild across the entire point set for every delete. Finally, B1 has the worst performance as it rebuilds on every delete. With the object median splitting heuristic, BDL achieves parallel speedup of up to , with an average speedup of .

| 1 | 36h | |||||

|---|---|---|---|---|---|---|

| B1 | B2 | BDL | B1 | B2 | BDL | |

| 2D-U-10M | 114.0s | 1.1s | 29.2s | 2.7s (43.0x) | 0.1s (10.4x) | 0.9s (33.0x) |

| 2D-V-10M | 114.2s | 1.1s | 29.0s | 2.7s (43.0x) | 0.1s (10.5x) | 0.9s (33.1x) |

| 5D-U-10M | 130.2s | 2.2s | 40.1s | 3.5s (37.2x) | 0.2s (9.1x) | 1.4s (28.1x) |

| 5D-V-10M | 127.0s | 2.2s | 39.3s | 3.5s (36.8x) | 0.2s (9.1x) | 1.4s (27.8x) |

| 7D-U-10M | 146.7s | 3.0s | 37.3s | 4.1s (36.0x) | 0.3s (8.9x) | 1.4s (27.0x) |

| 7D-V-10M | 144.4s | 3.0s | 36.9s | 4.1s (35.6x) | 0.3s (8.9x) | 1.4s (27.2x) |

| 10D-H-1M | 24.7s | 0.2s | 21.9s | 1.0s (24.7x) | 0.0s (5.6x) | 0.8s (28.5x) |

| 16D-C-4M | 74.8s | 2.9s | 33.1s | 2.7s (27.4x) | 0.3s (8.6x) | 1.4s (23.6x) |

| 3D-C-321M | – | – | – | – | – | 17.3s |

| 1 | 36h | |||||

|---|---|---|---|---|---|---|

| B1 | B2 | BDL | B1 | B2 | BDL | |

| 2D-U-10M | 70.2s | 1.3s | 30.2s | 1.8s (38.8x) | 0.1s (11.8x) | 1.1s (26.3x) |

| 2D-V-10M | 71.7s | 1.5s | 30.7s | 2.0s (36.0x) | 0.1s (10.7x) | 1.2s (25.4x) |

| 5D-U-10M | 82.9s | 2.3s | 29.2s | 2.9s (28.9x) | 0.2s (9.6x) | 1.2s (23.9x) |

| 5D-V-10M | 85.9s | 2.9s | 29.6s | 3.4s (25.4x) | 0.3s (9.0x) | 1.3s (22.2x) |

| 7D-U-10M | 97.1s | 3.2s | 38.2s | 3.6s (27.1x) | 0.3s (9.4x) | 1.8s (21.4x) |

| 7D-V-10M | 100.8s | 3.9s | 38.3s | 4.2s (24.0x) | 0.4s (8.8x) | 1.8s (20.9x) |

| 10D-H-1M | 27.6s | 0.4s | 22.1s | 1.1s (25.1x) | 0.1s (4.8x) | 0.8s (26.2x) |

| 16D-C-4M | 60.5s | 4.0s | 32.5s | 3.3s (18.3x) | 0.5s (8.8x) | 1.7s (19.5x) |

| 3D-C-321M | – | – | – | – | – | – |

VI-C2 Batch Size

In this benchmark, we measure the performance of our batch deletion implementation as the size of the batched update varies from 1M points to 5M points. We provide plots of the results for the 2D VisualVar and 7D Uniform datasets in Figures 6(c), 6(d), respectively. We note again that B2 consistently has the highest throughput, with BDL in second and B1 with the lowest throughput. Furthermore, the throughput of B1 and BDL increases as the batch size increases; this is true for the same reasons as with insertions. For B2, we observe consistent throughput across batch sizes.

VI-D Data-Parallel -NN

In this benchmark, we measure the performance and scalability of our -NN implementation as compared to the baselines. We split this into three separate experiments.

VI-D1 Scalability

In this experiment, we measure the scalability of the -NN operation after constructing each data structure over the entire dataset (in a single batch). The results using an object median splitting heuristic are shown in Table IV(a) and the results using a spatial median splitting heuristic are shown in Table IV(b). Figure 5(d) shows the scalability of the throughput on the 10M point 7D uniform dataset. With the object median heuristic, BDL achieves a parallel speedup of up to , with an average speedup of .

The results show that B1 and B2 have similar performance (B2 is slightly faster due to implementation differences). Furthermore, they are both faster than BDL-tree. This is to be expected, because the -NN operation is performed directly over the tree after it is constructed over the entire dataset in a single batch. Thus, both baselines will consist of fully balanced trees and will be able to perform very efficient -NN queries. On the other hand, BDL consists of a set of balanced trees, which adds overhead to the -NN operation, as it must be performed separately on each of these individual trees. However, as the next two benchmarks show, BDL provides superior performance in the case of a mixed set of dynamic batch inserts and deletes interspersed with -NN queries.

| 1 | 36h | |||||

|---|---|---|---|---|---|---|

| B1 | B2 | BDL | B1 | B2 | BDL | |

| 2D-U-10M | 34.9s | 11.9s | 64.5s | 0.6s (57.2x) | 0.3s (40.3x) | 1.5s (43.1x) |

| 2D-V-10M | 37.2s | 12.7s | 67.0s | 0.7s (57.0x) | 0.3s (40.7x) | 1.5s (43.7x) |

| 5D-U-10M | 302.3s | 178.0s | 339.4s | 6.3s (47.6x) | 3.5s (51.4x) | 8.6s (39.4x) |

| 5D-V-10M | 109.5s | 64.1s | 145.8s | 2.1s (51.1x) | 1.2s (52.7x) | 3.2s (46.1x) |

| 7D-U-10M | 1520.0s | 1239.4s | 1621.6s | 44.3s (34.3x) | 34.1s (36.4x) | 52.9s (30.7x) |

| 7D-V-10M | 133.6s | 86.3s | 173.1s | 2.8s (48.1x) | 1.7s (50.7x) | 4.0s (43.8x) |

| 10D-H-1M | 5.3s | 5.5s | 11.9s | 0.1s (54.5x) | 0.1s (50.5x) | 0.3s (45.8x) |

| 16D-C-4M | 464.4s | 612.2s | 468.3s | 16.5s (28.1x) | 16.1s (38.1x) | 17.3s (27.1x) |

| 3D-C-321M | – | – | – | – | – | 15.6s |

| 1 | 36h | |||||

|---|---|---|---|---|---|---|

| B1 | B2 | BDL | B1 | B2 | BDL | |

| 2D-U-10M | 33.6s | 13.0s | 69.9s | 0.6s (55.6x) | 0.3s (40.0x) | 1.6s (44.9x) |

| 2D-V-10M | 35.7s | 13.5s | 72.7s | 0.6s (56.8x) | 0.3s (39.5x) | 1.6s (44.9x) |

| 5D-U-10M | 348.8s | 216.7s | 503.1s | 6.7s (52.2x) | 4.0s (54.1x) | 9.6s (52.2x) |

| 5D-V-10M | 103.1s | 65.2s | 174.2s | 2.0s (52.8x) | 1.4s (45.4x) | 3.5s (49.5x) |

| 7D-U-10M | 2142.0s | 1494.0s | 2804.0s | 45.5s (47.1x) | 36.7s (40.7x) | 57.3s (48.9x) |

| 7D-V-10M | 116.9s | 81.3s | 203.1s | 2.3s (50.5x) | 1.7s (48.0x) | 4.1s (49.3x) |

| 10D-H-1M | 5.7s | 4.7s | 15.5s | 0.1s (54.6x) | 0.1s (45.2x) | 0.3s (47.3x) |

| 16D-C-4M | 606.7s | 557.0s | 623.4s | 19.9s (30.5x) | 11.9s (47.0x) | 20.4s (30.5x) |

| 3D-C-321M | – | – | – | – | – | 17.4s |

VI-D2 Effect of Varying

In this experiment, we benchmark the throughput of the -NN operation on 36 cores with hyper-threading as varies from 2 to 11. For all three trees, we perform the -NN operation after building the tree from a set of batch insertions, with a batch size of 5% of the dataset, until the entire dataset is inserted. The results are shown for the 2D VisualVar dataset in Figure 7(a) and for the 7D Uniform dataset in Figure 7(b). In both scenarios, we see that B1 has the best -NN performance, followed closely by BDL. B2 has significantly worse performance—this is because the construction of the tree was performed with a set of batch insertions, rather than a single construction over the entire dataset, the tree ends up imbalanced and the -NN query performance suffers.

VI-D3 Mixed Operations

In this final experiment, we measure the -NN and overall performance of the trees as a mixed set of batch insertions, batch deletions, and batch -NN queries are performed. In particular, we perform a set of 20 batch insertions, each consisting of 5% of the dataset, into the tree. After every 5 batch insertions, we perform a -NN query with (over the entire dataset) to measure the current query performance. Then, we perform a set of 15 batch deletes, each consisting of a random 5% of the dataset (with no repeats). After every 5 batch deletes, we again perform a -NN () query. Overall, there are 7 -NN queries. We thus split the experiment into 7 distinct sections, demarcated by the -NN queries. For each section (labeled as INS0, INS1, INS2, INS3, DEL0, DEL1, DEL2), we measure the -NN runtime as well as the time for the 5 batched insertion/deletion operations. The results are shown for the 2D VisualVar dataset in Figure 8(a) and for the 5d Uniform dataset in Figure 8(b) (we observed similar results for other datasets). The -axis shows the 7 sections, and the -axis shows the time for each of these sections. There are two lines for each tree—a dashed line indicating just the -NN times at each section, and a solid line indicating the total time for the batched update and -NN.

In the Uniform case, we see that B2 performs the worst overall, due almost entirely to its poor -NN performance after batched updates. Note that the batched updates themselves contribute minimally to the total runtime of this baseline—they are very fast but cause significant imbalance in the tree structure, leading to degraded query performance. B1 has the best raw -NN query time, but its overall runtime is the second worst, as the batched updates are quite expensive (in order to maintain the balance that results in fast query times). Finally, BDL represents the best tradeoff between dynamic batch updates and -NN performance. In particular, it has the best total runtime after every operation. The results are quite similar for the VisualVar case, except that we note that B1 has the overall worst runtime, due to very expensive updates.

VI-D4 Dual-Tree -NN

In addition to the data-parallel approach discussed above, we also implemented -NN based on the dual-tree traversal proposed by March et al. [21]. While we found our dual-tree -NN for BDL was faster on a single-thread due to more efficient pruning, it was not as scalable as other -NN approaches and thus did not perform as well in parallel. Therefore, we omit the experimental results for dual-tree -NN.

VII Conclusion

We have presented the BDL-tree, a parallel batch-dynamic d-tree that supports batched construction, insertions, deletions, and -NN queries. We show that our data structure has strong theoretical work and depth bounds. Furthermore, our experiments show that the BDL-tree achieves good parallel speedup and presents a useful tradeoff between two baseline implementations. In particular, it delivers the best performance in a dynamic setting involving batched updates to the underlying dataset interspersed with -NN queries.

VIII Acknowledgements

This research was supported by DOE Early Career Award #DE-SC0018947, NSF CAREER Award #CCF-1845763, Google Faculty Research Award, Google Research Scholar Award, DARPA SDH Award #HR0011-18-3-0007, and Applications Driving Architectures (ADA) Research Center, a JUMP Center co-sponsored by SRC and DARPA.

References

- [1] J. L. Bentley, “Multidimensional binary search trees used for associative searching,” Commun. ACM, vol. 18, no. 9, p. 509–517, Sep. 1975.

- [2] ——, “Decomposable searching problems,” Information Processing Letters, vol. 8, no. 5, pp. 244–251, 1979.

- [3] J. L. Bentley and J. B. Saxe, “Decomposable searching problems I. static-to-dynamic transformation,” Journal of Algorithms, vol. 1, no. 4, pp. 301–358, 1980.

- [4] P. K. Agarwal, L. Arge, A. Danner, and B. Holland-Minkley, “Cache-oblivious data structures for orthogonal range searching,” in Proceedings of the Nineteenth Annual Symposium on Computational Geometry, 2003, p. 237–245.

- [5] P. K. Agarwal, K. Fox, K. Munagala, and A. Nath, “Parallel algorithms for constructing range and nearest-neighbor searching data structures,” in Proceedings of the 35th ACM SIGMOD-SIGACT-SIGAI Symposium on Principles of Database Systems, 2016, p. 429–440.

- [6] R. R. Curtin, “Faster dual-tree traversal for nearest neighbor search,” in Proceedings of the 8th International Conference on Similarity Search and Applications, 2015, p. 77–89.

- [7] D. Wehr and R. Radkowski, “Parallel kd-tree construction on the GPU with an adaptive split and sort strategy,” Int. J. Parallel Program., vol. 46, no. 6, p. 1139–1156, Dec. 2018.

- [8] S. Zellmann, J. P. Schulze, and U. Lang, “Binned k-d tree construction for sparse volume data on multi-core and GPU systems,” IEEE Transactions on Visualization and Computer Graphics, vol. 27, no. 3, pp. 1904–1915, 2021.

- [9] O. Procopiuc, P. K. Agarwal, L. Arge, and J. S. Vitter, “Bkd-tree: A dynamic scalable kd-tree,” in International Symposium on Spatial and Temporal Databases. Springer, 2003, pp. 46–65.

- [10] R. A. Finkel and J. L. Bentley, “Quad trees a data structure for retrieval on composite keys,” Acta Informatica, vol. 4, no. 1, pp. 1–9, 1974.

- [11] S. M. Omohundro, Five balltree construction algorithms. International Computer Science Institute Berkeley, 1989.

- [12] A. Beygelzimer, S. Kakade, and J. Langford, “Cover trees for nearest neighbor,” in Proceedings of the 23rd international conference on Machine learning, 2006, pp. 97–104.

- [13] A. Guttman, “R-trees: A dynamic index structure for spatial searching,” in Proceedings of the ACM SIGMOD international conference on Management of data, 1984, pp. 47–57.

- [14] M. Shevtsov, A. Soupikov, and A. Kapustin, “Highly parallel fast KD-tree construction for interactive ray tracing of dynamic scenes,” Computer Graphics Forum, vol. 26, no. 3, pp. 395–404, 2007.

- [15] P. O’Neil, E. Cheng, D. Gawlick, and E. O’Neil, “The log-structured merge-tree (LSM-tree),” Acta Informatica, vol. 33, no. 4, pp. 351–385, 1996.

- [16] R. A. Vitillo, “Parallel log-structure merge tree for key-value stores,” https://github.com/vitillo/kvstore.

- [17] M. Dobson and G. E. Blelloch, “Parallel nearest neighbors in low dimensions with batch updates,” CoRR, vol. abs/2111.04182, 2021. [Online]. Available: https://arxiv.org/abs/2111.04182

- [18] J. H. Friedman, J. L. Bentley, and R. A. Finkel, “An algorithm for finding best matches in logarithmic expected time,” ACM Transactions on Mathematical Software (TOMS), vol. 3, no. 3, pp. 209–226, 1977.

- [19] R. R. Curtin, W. B. March, P. Ram, D. V. Anderson, A. G. Gray, and C. L. Isbell, “Tree-independent dual-tree algorithms,” in Proceedings of the 30th International Conference on International Conference on Machine Learning, 2013, p. 1435–1443.

- [20] A. G. Gray and A. W. Moore, “’N-body’ problems in statistical learning,” in Proceedings of the 13th International Conference on Neural Information Processing Systems, 2000, p. 500–506.

- [21] W. B. March, P. Ram, and A. G. Gray, “Fast Euclidean minimum spanning tree: Algorithm, analysis, and applications,” in Proceedings of the 16th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 2010, p. 603–612.

- [22] Y. Wang, S. Yu, Y. Gu, and J. Shun, “A parallel batch-dynamic data structure for the closest pair problem,” in 37th International Symposium on Computational Geometry, vol. 189, 2021, pp. 60:1–60:16.

- [23] L. Dhulipala, Q. C. Liu, J. Shun, and S. Yu, “Parallel batch-dynamic k-clique counting,” in Symposium on Algorithmic Principles of Computer Systems (APOCS), 2021, pp. 129–143.

- [24] T. H. Cormen, C. E. Leiserson, R. L. Rivest, and C. Stein, Introduction to algorithms. MIT press, 2009.

- [25] J. JaJa, Introduction to Parallel Algorithms. Addison-Wesley Professional, 1992.

- [26] R. D. Blumofe and C. E. Leiserson, “Scheduling multithreaded computations by work stealing,” J. ACM, vol. 46, no. 5, p. 720–748, sep 1999.

- [27] L. Arge, G. S. Brodal, and R. Fagerberg, “Cache-oblivious data structures,” in Handbook of Data Structures and Applications. Chapman and Hall/CRC, 2018, pp. 545–565.

- [28] E. D. Demaine, “Cache-oblivious algorithms and data structures,” Lecture Notes from the EEF Summer School on Massive Data Sets, vol. 8, no. 4, pp. 1–249, 2002.

- [29] B. H. Bloom, “Space/time trade-offs in hash coding with allowable errors,” Communications of the ACM, 1970.

- [30] G. E. Blelloch, D. Anderson, and L. Dhulipala, “ParlayLib - a toolkit for parallel algorithms on shared-memory multicore machines,” in Proceedings of the 32nd ACM Symposium on Parallelism in Algorithms and Architectures, 2020, p. 507–509.

- [31] J. Gan and Y. Tao, “DBSCAN revisited: Mis-claim, un-fixability, and approximation,” in Proceedings of the ACM SIGMOD International Conference on Management of Data, 2015, p. 519–530.

- [32] “Ht dataset,” https://archive.ics.uci.edu/ml/datasets/Gas+sensors+for+home+activity+monitoring.

- [33] R. Huerta, T. Mosqueiro, J. Fonollosa, N. F. Rulkov, and I. Rodriguez-Lujan, “Online decorrelation of humidity and temperature in chemical sensors for continuous monitoring,” Chemometrics and Intelligent Laboratory Systems, vol. 157, pp. 169–176, 2016.

- [34] “Chem dataset,” https://archive.ics.uci.edu/ml/datasets/Gas+sensor+array+under+dynamic+gas+mixtures.

- [35] J. Fonollosa, S. Sheik, R. Huerta, and S. Marco, “Reservoir computing compensates slow response of chemosensor arrays exposed to fast varying gas concentrations in continuous monitoring,” Sensors and Actuators B: Chemical, vol. 215, pp. 618–629, 2015.

- [36] Y. Kwon, D. Nunley, J. P. Gardner, M. Balazinska, B. Howe, and S. Loebman, “Scalable clustering algorithm for N-body simulations in a shared-nothing cluster,” in International Conference on Scientific and Statistical Database Management, 2010, pp. 132–150.