22email: [email protected]

Parallel Approaches to Accelerate Bayesian Decision Trees

Abstract

Markov Chain Monte Carlo (MCMC) is a well-established family of algorithms primarily used in Bayesian statistics to sample from a target distribution when direct sampling is challenging. Existing work on Bayesian decision trees uses MCMC. Unforunately, this can be slow, especially when considering large volumes of data. It is hard to parallelise the accept-reject component of the MCMC. None-the-less, we propose two methods for exploiting parallelism in the MCMC: in the first, we replace the MCMC with another numerical Bayesian approach, the Sequential Monte Carlo (SMC) sampler, which has the appealing property that it is an inherently parallel algorithm; in the second, we consider data partitioning. Both methods use multi-core processing with a High-Performance Computing (HPC) resource. We test the two methods in various study settings to determine which method is the most beneficial for each test case. Experiments show that data partitioning has limited utility in the settings we consider and that the use of the SMC sampler can improve run-time (compared to the sequential implementation) by up to a factor of 343.

Keywords:

Parallel algorithms Machine Learning Bayesian Decision Tree1 Introduction, Recent Work, and Our Contribution

Markov Chain Monte Carlo(MCMC) can characterise a distribution without knowing the analytic form of that distribution. MCMC achieves this by using samples to characterise the distribution. The sampling operation is Markovian such that the new sample depends only on the previous one (and not any other samples before that previous one). MCMC is an extremely effective tool for Bayesian inference because it can handle a wide variety of posterior distributions and specifically those distributions which would be difficult or impossible to work with via analytic examination. MCMC has been used to solve Bayesian inference problems spanning biology [15], forensics [14], education [7], and chemistry [9], among other areas.

As will be explained in more detail in section 3.1, MCMC operates by proposing samples and then accepting these samples with a probability that is calculated such that the MCMC generates samples from the distribution of interest. Since the next step of the MCMC depends on the current sample, it is non-trivial for a single MCMC chain to be processed contemporaneously by several processing elements. Byrd [2] proposed a method to parallelise a single MCMC chain, where backup samples are calculated in separate threads of execution in anticipation of the possibility that the current move is not accepted. This is wasteful since it will often be the case that the current move is accepted (such that the backup samples are not needed). However, when the acceptance rate is relatively low, there will be increased utility from considering such backup samples. Of course, there will be a trade-off between having many concurrent threads generating such backup samples and them all being used. More generally, single-chain parallelisation can quickly become problematic as the efficiency of the parallelisation is not guaranteed.

Another way of improving the run-time of MCMC is to reduce the convergence rate by requiring fewer iterations. Metropolis-Coupled MCMC[1], , runs multiple MCMC chains at the same time. One chain is treated as “cold” and targets the posterior of interest, while the other chains are treated as “hot”, and attempt to sample from tempered versions of the posterior such that proposed samples are more likely to be accepted. The idea is that the space will be explored more quickly by the “hot” chains than by the “cold” chains: the “hot” chains find it easier to make what appear myopically to be disadvantageous moves across the parameter space. Results indicate improvements in run-time when more chains (and cores) were considered.

Several recent studies [5, 10, 12, 18] have considered MCMC in the context of Bayesian decision trees and as a replacement for traditional tree-based algorithms when tackling machine learning tasks. We are also aware that there are occassions (eg see [11]) when researchers are eager to use MCMC Decision Trees, but it is not feasible or efficient for their research, forcing them to use alternative methods: the prohibitively expensive likelihood evaluations are frequently sufficiently time-consuming that these algorithms cannot be practically applied to complex models with large-scale datasets. This paper aims to investigate alternative mechanisms for achieving improved run-time in the context of Bayesian decision trees, and to explore how we can best apply such algorithms to large datasets with either small or big feature spaces. Our focus is on applications where run-time is critical.

Existing papers consider a range of problem sizes. For example, [10] uses a dataset of 200 data, while [12] implemented some benchmarks results using a synthetic dataset of 300 data. There are also some comparison studies using Bayesian Trees; for example, [18] used 303 data points to train their algorithm, while [5, 6] compared different algorithms, including Bayesian Additive Regression Trees (BART), with a dataset of 2000 data points.

In this paper, we describe two methods with the aim of achieving a faster execution time for Bayesian decision trees. Our contributions are then:

-

•

We describe Sampling Using Multinomial Distribution (SUMD), a novel algorithm for performing inference in the context of Bayesian decision trees that uses a Sequential Monte Carlo (SMC) sampler to generate samples.

-

•

We describe Data Partitioning (DP), which, for transparency, we see as pragmatic and do not perceive to be novel and which spreads the data among the available cores, evaluating each tree faster than a conventional implementation of MCMC.

-

•

We consider a diverse range of datasets to assess which of these approaches is the most suitable way of parallelising Bayesian decision trees, and identify the effective number of cores that should be used, given the type and the length of the dataset being processed.

The remainder of this paper is organised as follows: Section 2 presents the statistical model used in Bayesian Decision trees; Section 3 then describes the methods we compare; Section 4 presents the experimental results, and Section 5 concludes the paper.

2 Bayesian Decision Trees

A decision tree operates by descending a tree. The process of outputting a classification probability for a given datum starts at a root node. At each non-leaf node, a decision as to which child node to progress to is made based on the datum and the parameters of the node. This process continues until a leaf node is reached. At the leaf node, a node-specific and datum-independent classification output is generated.

Our model describes the conditional distribution of a value for given the corresponding values for , where is a vector of predictors and the corresponding values that we predict. We define the tree to be such that the function of the non-leaf nodes is to (implicitly) identify a region, , of values for for which can be approximated as independent of the specific value of , ie as . This model is called a probabilistic classification tree, according to the quantitative response .

For a given tree, , we define the depth of the tree to be , the set of leaf nodes to be and the set of non-leaf nodes to be . The tree, , is then parameterised by: the set of features for all non-leaf nodes, ; the vector of corresponding thresholds, ; the parameters, , of the conditional probabilities associated with the leaf nodes. This is such that the parameters of the tree are and are the parameters associated with the th node of the tree, where:

| (3) |

Given a dataset comprising data, and corresponding features, , and since decision trees are specified by and , a Bayesian analysis of the problem proceeds by specifying a prior probability distribution, and associated likelihood, . Because defines the parametric model for , it will usually be convenient to adopt the following structure for the joint probability distribution of data, , and the corresponding vectors of predictors, :

| (4) | ||||

| (5) |

which we note is proportional to the posterior, , and where we assume

| (6) | ||||

| (7) | ||||

| (8) | ||||

| (9) |

Equation 6 describes the product of the probabilities of every data point, , being classified correctly given the datum’s features, , the tree structure , and the features/thresholds, , associated with each node of the tree. At the th nod, equation 8 describes the product of possibilities of picking the th feature and corresponding threshold, , given the tree structure, . Equation 9 is used as the prior for the tree . This formula is recommended by [3] and three parameters specify this prior: the depth of the tree, ; the parameter, , which acts as a normalisation constant; the parameter, , which specifies how many leaf nodes are probable, with larger values of reducing the expected number of leaf nodes. is crucial as this is the penalizing feature of our probabilistic tree which prevents an algorithm that uses this prior from over-fitting and allows convergence to occur[13]. Changing allows us to change the prior probability associated with “bushy” trees, those whose leaf nodes do not vary too much in depth.

An exhaustive evaluation of equation 4 over all trees, , will not be feasible, except in trivially small problems, because of the sheer number of possible trees.

Despite this limitations, Bayesian algorithms can still be used to explore the posterior. Such algorithms simulate a chain sequence of trees, such as:

| (10) |

which converge in distribution to the posterior, which is itself proportional to the joint distribution, , specified in equation 4. We choose to have a simulated sequence that gravitates toward regions of higher posterior probability. Such a simulation can be used to search for high-posterior probability trees stochastically. We now describe the details of algorithms that achieve this and how they are can be implemented.

2.1 Stochastic Processes on Trees

To design algorithms that can search the space of trees stochastically, we first need to define a stochastic process for moving between trees. More specifically, we consider the following four kinds of move from one tree to another:

-

•

Grow(G) : we sample one of the leaf nodes, , and replace it with a new node with parameters, and a , which we sample uniformly from their parameter ranges.

-

•

Prune(P) : we sample the th node (uniformly) and make it a leaf.

-

•

Change(C) : we sample the th node (uniformly) from the non-leaf nodes, , and sample and a uniformly from their parameter ranges.

-

•

Swap(S) : we sample the th and th nodes uniformly, where , and swap with and with .

We note that there will be situations (eg when moving from a tree with a single node) when some moves cannot occur. We assume each ‘valid’ move is equally likely and this then makes it possible to compute the probability of transition from one tree, , to another, , which we denote .

3 Methods

3.1 Conventional MCMC

One approach is to use a conventional application of Markov Chain Monte Carlo to decision trees, as found in [8].

More specifically, we begin with a tree, and then at the th iteration, we propose a new tree by sampling . We then accept the proposed tree by drawing such that:

| (13) |

where we define the acceptance ratio, as:

| (14) |

.

This process proceeds for iterations. We take the opportunity to highlight that this process is inherently sequential in its nature.

3.2 Sampling Using Multinomial Distribution (SUMD)

We now consider using an Sequential Monte Carlo (SMC) sampler[4] to tackle the problem of sampling the trees from the posterior. We begin with a trees (which we assume to be sampled from some initial distribution over trees with a target that is that initial prior such that the inital weights are all ), one for each of the cores that we have available: we denote the th tree at the th iteration, . At each iteration and for each tree, we sample . This is such that the weights of the th tree is . We then normalise the weights (such that they sum to unity) and implement multinomial resampling to replace the trees with trees to which we assign equal weights (of ). We take the opportunity to emphasise that, just like MCMC, an SMC sampler configured in this way will generate samples from the posterior.

This method is summarised in algorithm 1. Note that we have replaced the widely-used and hard to parallelise accept-reject process in MCMC with multinomial sampling and that we can evaluate in parallel across the cores (we are also aware of techniques for implementing the multinomial sampling in parallel (eg see [16, 17]) but have not found this component of the algorithm to be a computational bottleneck for the application considered herein). Once the algorithm has converged, we keep the samples drawn from the multinomial distribution.

3.3 Data Partitioning (DP)

This method implements the same algorithm as is described in section 3.1. The only difference is that we split the dataset, , across the number of available cores. For example, if the number of available cores , we split the dataset into parts of equal size (in terms of the number of entries on each core). Each core processes the data in parallel and calculates the leaf probabilities for each leaf node with each core then calculating a contribution the (single) calculation of equation .

We note that the SUMC approach departs from the use of MCMC with the aim of increasing the number of trees considered simultaneously and thereby reducing the number of iterations needed. In contrast, this second DP approach spreads the data among the available cores to more rapidly evaluate the tree sampled at each iteration of the MCMC.

4 Experimental Setup and Results

4.1 Datasets

To demonstrate the run-time improvement we achieved through our proposed methods, we experiment on 6 publicly accessible111https://archive.ics.uci.edu/ml/index.php datasets listed in Table 1. We assert that this is a diverse range of datasets, and spans datasets with a small number of data points and small feature spaces, up to large datasets with large feature spaces. In particular, the mean number of records used is 300,480, with the lowest having 1,599 records and the largest 999,999. The mean number of features considered is 35, with the lowest being 9 features and the largest 91. For the sake of making comparisons more straightforward, we have categorised our datasets (see the class column in Table 1) into 6 classes based on whether the data is Small (SD), Medium (MD) or Big (BD) and whether the set of features considered in Small (SF) or big (BF). Henceforth, we refer to the datasets based on this classification.

| Dataset Name | Records | Features | Class |

|---|---|---|---|

| Abalone(AB) | 4,177 | 9 | SD/SF |

| Diabetes 012 Health(DH) | 253,680 | 22 | MD/BF |

| One Hundred Plant Species(OHPS) | 1,599 | 65 | SD/BF |

| OULAD | 28,084 | 9 | MD/SF |

| Poker Hand(PH) | 999,999 | 11 | BD/SF |

| Year Prediction MSD(YPM) | 515,344 | 91 | BD/BF |

This section presents the experimental results obtained using the proposed methods with a focus on run-time and convergence diagnostics. The following results were obtained using a local HPC platform comprising twin SP 6138 processors (20 cores each running at 2GHz), with 384GB memory RAM. For the large datasets, we have used high-memory nodes, each with 1TB of RAM. We note that the run-times presented in this paper include any communication overheads.

For testing purposes and a fair comparison and evaluation, we use the same hyperparameters and for every contesting algorithm. Moreover, for every method, we set the number of iterations to and the number of burn-in iterations to .

A very crucial aspect when designing parallel algorithms as alternatives to sequential alternatives is to ensure that the results output by the parallel algorithms offer commensurate (if not identical) results to the sequential algorithm. Table 2 shows that the sampled trees output from SUMD were indeed commensurate in classification accuracy to those output by the conventional MCMC approach. We do not compare the accuracy of DP with the conventional MCMC approach since the method is not altering the operation of the original algorithm, but simply altering its implementation.

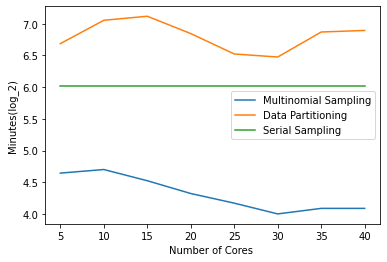

We tested each method with 10-Fold Cross Validation. Results indicate and validate our findings in section 1 that running an MCMC Decision Tree on large datasets in a sequential implementation is very challenging when time is heavily constrained. More specifically, the run-time for BD/SF took 165 hours (approximately 7 days), and 213 hours (approximately 9 days) for BD/BF (see figures 1, 2 and3). Our results indicate that SUMD is much faster than the sequential implementation and DP in all cases considered (see Figures 1, 2 and 3). In summary: SUMD is 132 times faster than the sequential implementation on SD/SF, 343 times faster on SD/MF, 4 times faster for MD/SF, 18 times faster for MD/BF, 19 times faster for BD/SF, and 10 times faster for BD/BF.

We do note that SUMD is memory hungry: for the SUMD method, we need to evaluate many more trees concurrently (subject to the number of cores we are using) and each tree needs to be evaluated against all the data.

We anticipated DP would have resulted in greater improvements in run-time relative to the sequential implementation of MCMC. The underlying reason for this is that while we save time by having more processors, each handling less data, the communication overhead is significant when transferring data between the cores: for each datum, the MCMC needs access the probability vector for the corresponding leaf node and then calculate the probability of each data point being classified correctly. For this particular method, we also tried to partition the data once, when we produced the probabilities for each leaf node, to reduce the communication overhead. However, the resulting run-time was very close to that for our initial implementation, where we partitioned the data twice. Our conclusion is that, given the time spent communicating between the cores and the time needed to transfer the data to each core, there is very limited benefit stemming from parallelising MCMC decision tress by partitioning of the data.

Large datasets utilising more than 30 cores can only run in high memory nodes (with 1TB of memory RAM) when running entirely in parallel. Of course, if the nodes have less memory, it is possible to run with partial parallelism, where a core may wait for another core to continue its work. While the presented methods can run on a personal computer, it is not recommended: greater improvements to run-times and can be achieved using HPC.

Figures 1, 2, 3 make it possible to identify the recommended number of cores we should use in the context of each test case. Specifically, when the feature space is small, it is more beneficial, in terms of run-time, to use the maximum number of available cores we have. Experiments have shown that when the feature space is big, it is wiser to use 20 to 30 cores, as having more cores degrades run-time.

| Dataset | Accuracy | |

|---|---|---|

| Sequential | SUMD | |

| SD/SF | 22.48±0.2 | 22.48±0.2 |

| SD/BF | 1.66±0.4 | 1.66±0.5 |

| MD/SF | 63.23±0.1 | 63.23±0.1 |

| MD/BF | 84.28±0.05 | 84.28±0.05 |

| BD/SF | 26.56±0.07 | 26.56±0.1 |

| BD/BF | 8.05±0.01 | 8.05±0.0.1 |

5 Conclusion

Our comparison study has shown that by taking advantage of multi-core architectures, we can improve the run-time of Bayesian Decision Trees by up to times. According to our experimental results, our novel approach based on Sequential Monte Carlo samplers, SUMD, outperforms an approach that exploits data parallelism, with SUMD improving the run-time by an average of 88 times relative to a sequential Markov chain Monte Carlo implementation. Moreover, we have shown that the results obtained are very similar to the sequential implementation. We perceive that, by taking advantage of the improvements in modern processor designs, our methods help make Bayesian decision tree-based solutions more productive and increasingly applicable to a broader range of applications.

This study, though, has some limitations. First, to run in parallel and produce the speedups we described, the proposed methods require access to HPC nodes with substantial memory resources. Secondly, at present, the implementations we have are not yet as easy-to-use as existing (single-processor) libraries: we plan to address this in future work.

References

- [1] Gautam Altekar, Sandhya Dwarkadas, John P Huelsenbeck, and Fredrik Ronquist. Parallel metropolis coupled markov chain monte carlo for bayesian phylogenetic inference. Bioinformatics, 2004.

- [2] Jonathan MR Byrd, Stephen A Jarvis, and Abhir H Bhalerao. Reducing the run-time of mcmc programs by multithreading on smp architectures. In 2008 IEEE International Symposium on Parallel and Distributed Processing. IEEE, 2008.

- [3] Hugh A Chipman, Edward I George, and Robert E McCulloch. Bart: Bayesian additive regression trees. The Annals of Applied Statistics, 2010.

- [4] Pierre Del Moral, Arnaud Doucet, and Ajay Jasra. Sequential monte carlo samplers. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 68(3):411–436, 2006.

- [5] Efthyvoulos Drousiotis, Panagiotis Pentaliotis, Lei Shi, and Alexandra I Cristea. Capturing fairness and uncertainty in student dropout prediction–a comparison study. In International Conference on Artificial Intelligence in Education, pages 139–144. Springer, 2021.

- [6] Efthyvoulos Drousiotis, Panagiotis Pentaliotis, Lei Shi, and Alexandra Cristea I. Balancing fined-tuned machine learning models between continuous and discrete variables-a comprehensive analysis using educational data. 2022.

- [7] Efthyvoulos Drousiotis, Lei Shi, and Simon Maskell. Early predictor for student success based on behavioural and demographical indicators. In International Conference on Intelligent Tutoring Systems. Springer, 2021.

- [8] Efthyvoulos Drousiotis and Paul Spirakis. Single mcmc chain parallelisation on decision trees. Learning and Intelligent Optimization Conference, 2022.

- [9] Joffrey Dumont Le Brazidec, Marc Bocquet, Olivier Saunier, and Yelva Roustan. Quantification of uncertainties in the assessment of an atmospheric release source applied to the autumn 2017. Atmospheric Chemistry and Physics, 2021.

- [10] Livia Jakaite, Vitaly Schetinin, Carsten Maple, and Joachim Schult. Bayesian decision trees for eeg assessment of newborn brain maturity. In 2010 UK Workshop on Computational Intelligence (UKCI), pages 1–6. IEEE, 2010.

- [11] Maarten Kruijver, Hannah Kelly, Kevin Cheng, Meng-Han Lin, Judi Morawitz, Laura Russell, John Buckleton, and Jo-Anne Bright. Estimating the number of contributors to a dna profile using decision trees. Forensic Science International: Genetics, 50:102407, 2021.

- [12] Reza Mohammadi, Matthew Pratola, and Maurits Kaptein. Continuous-time birth-death mcmc for bayesian regression tree models. arXiv preprint arXiv:1904.09339, 2019.

- [13] Veronika Ročková and Enakshi Saha. On theory for bart. In The 22nd International Conference on Artificial Intelligence and Statistics. PMLR, 2019.

- [14] Duncan Taylor, Jo-Anne Bright, and John Buckleton. Interpreting forensic dna profiling evidence without specifying the number of contributors. Forensic Science International: Genetics, 2014.

- [15] Gloria I Valderrama-Bahamóndez and Holger Fröhlich. Mcmc techniques for parameter estimation of ode based models in systems biology. Frontiers in Applied Mathematics and Statistics, 2019.

- [16] Alessandro Varsi, Simon Maskell, and Paul G Spirakis. An o (log2 n) fully-balanced resampling algorithm for particle filters on distributed memory architectures. Algorithms, 14(12):342, 2021.

- [17] Alessandro Varsi, Jack Taylor, Lykourgos Kekempanos, Edward Pyzer Knapp, and Simon Maskell. A fast parallel particle filter for shared memory systems. IEEE Signal Processing Letters, 27:1570–1574, 2020.

- [18] J Vijayarangam, S Kamalakkannan, and J Anita Smiles. A novel comparison of neural network and decision tree as classifiers using r. In 2021 Fifth International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud)(I-SMAC), pages 712–715. IEEE, 2021.