Oversampling Divide-and-conquer for Response-skewed Kernel Ridge Regression

Abstract

The divide-and-conquer method has been widely used for estimating large-scale kernel ridge regression estimates. Unfortunately, when the response variable is highly skewed, the divide-and-conquer kernel ridge regression (dacKRR) may overlook the underrepresented region and result in unacceptable results. We combine a novel response-adaptive partition strategy with the oversampling technique synergistically to overcome the limitation. Through the proposed novel algorithm, we allocate some carefully identified informative observations to multiple nodes (local processors). Although the oversampling technique has been widely used for addressing discrete label skewness, extending it to the dacKRR setting is nontrivial. We provide both theoretical and practical guidance on how to effectively over-sample the observations under the dacKRR setting. Furthermore, we show the proposed estimate has a smaller risk than that of the classical dacKRR estimate under mild conditions. Our theoretical findings are supported by both simulated and real-data analyses.

1 INTRODUCTION

We consider the problem of calculating large-scale kernel ridge regression (KRR) estimates in a nonparametric regression model. Although the theoretical properties of the KRR estimator are well-understood [8, 50, 38], in practice, the computation of KRR estimates may suffer from a large computational burden. In particular, for a sample of size , it requires computational time to calculate a KRR estimate using the standard approach, as will be detailed in Section 2. Such a computational cost is prohibitive when the sample size is considerable. The divide-and-conquer approach has been implemented pervasively to alleviate such computational burden [51, 52, 44, 45, 46]. Such an approach randomly partitions the full sample into subsamples of equal sizes, then calculates a local estimate on an independent local processor (also called a local node) for each subsample. The local estimates are then averaged to obtain the global estimate. The divide-and-conquer approach reduces the computational cost of calculating KRR estimates from to . Such savings may be substantial as grows.

Despite algorithmic benefits, the success of the divide-and-conquer approach highly depends on the assumption that the subsamples can well represent the observed full sample. Nevertheless, this assumption cannot be guaranteed in many real-world applications, where the response variable may have a highly skewed distribution. Specifically, the random variable has a highly skewed distribution if is nearly zero inside a large region . Problems of this type arise in high energy physics, Bayesian inference, financial research, biomedical research, environmental data, among others [28, 1, 12]. In these applications, are the responses that occur with reduced frequency. However, such responses are often of more interest as they tend to have a more widespread impact. For example, may represent a rare signal for seismic activity or stock market fluctuations. Overlooking such signals could resulting in a substantial negative impact on society either economically or in terms of human casualties.

|

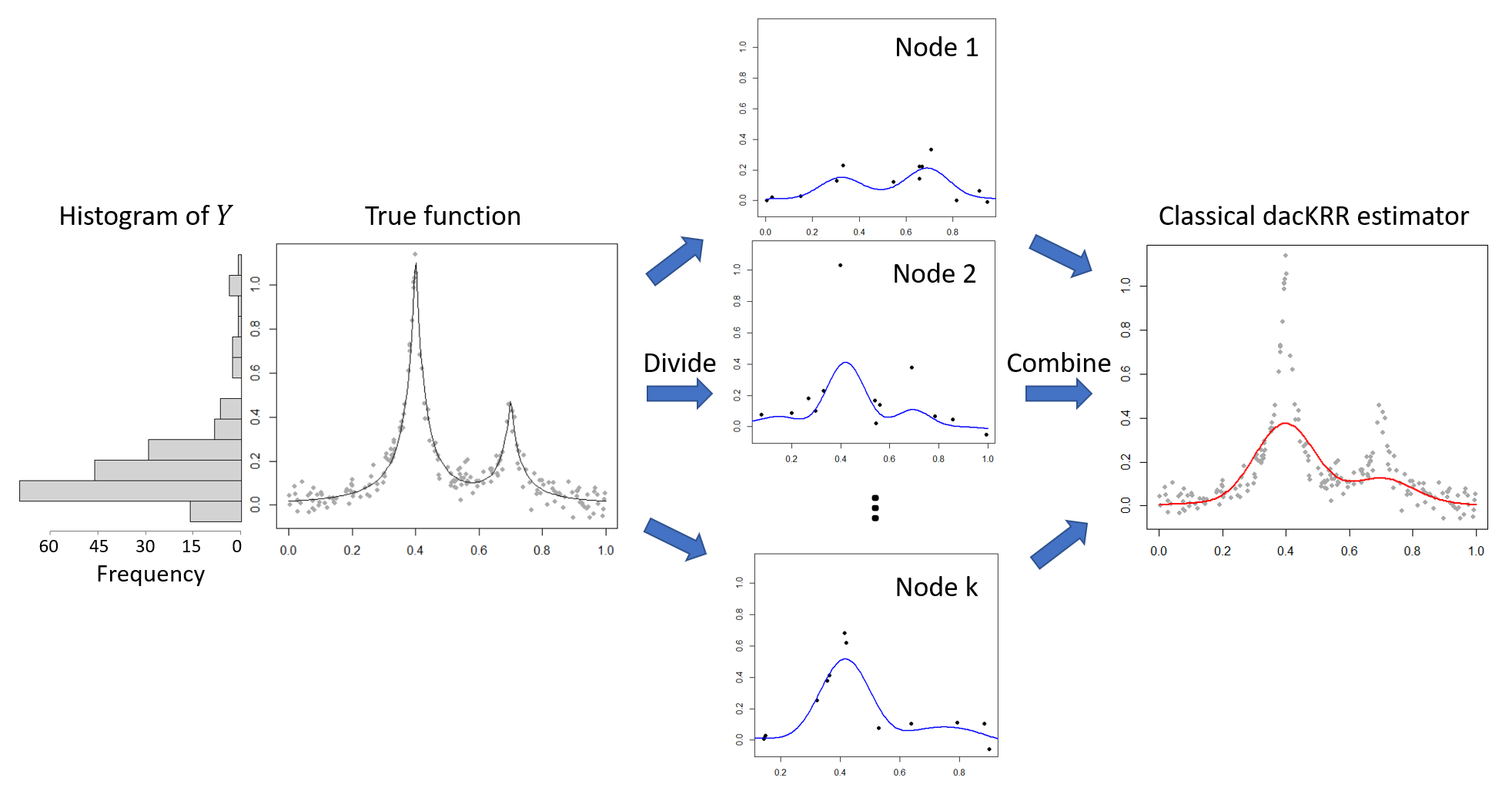

Recall that in the classical divide-and-conquer approach, a random subsample is processed on every local node. Under the aforementioned rare-event scenarios, such a subsample could fail to have observations selected from the potentially informative region . The local estimate based on such a subsample thus is very likely to overlook the region . Averaging these local estimators could lead to unreliable estimations and predictions over these informative regions. A synthetic example in Fig. 1 illustrates the scenario that the response is highly skewed. In this example, the one-dimensional sample (gray points) is uniformly generated from . The response variable has a heavy-tailed distribution, as illustrated by the histogram. The classical dacKRR method is used to estimate the true function (gray curve). We observe that almost all the local estimates (blue curves) Averaging these local estimates thus results in a global estimate (red curve) with unacceptable estimation performance. Such an observation is due to the fact that the subsample in each node overlooks the informative region over the distribution of (the peaks) and thus fails to provide a successful global estimate.

Our contributions. To combat the obstacles, we develop a novel response-adaptive partition approach with oversampling to obtain large-scale kernel ridge regression estimates, especially when the response is highly skewed. Although the oversampling technique is widely used for addressing discrete label imbalance, extending such a technique to the continuous dacKRR setting is nontrivial. We bridge the gap by providing both theoretical and practical guidance on how to effectively over-sample the observations. Different from the classical divide-and-conquer approach, such that each observation is allocated to only one local node, we propose to allocate some informative data points to multiple nodes. Theoretically, we show the proposed estimates have a smaller risk than the classical dacKRR estimates under mild conditions. Furthermore, we show the number of strata of the response vector and the number of nodes regulate the trade-off between the computational time and the risk of the proposed estimator. In particular, when is well-selected, a larger is associated with a longer computational time as well as a smaller risk. Such results are novel to the best of our knowledge. Our theoretical findings are supported by both simulated and real-data analyses. In addition, we show the proposed method is not specific to the dacKRR method and has the potential to improve the estimation of other nonparametric regression estimators under the divide-and-conquer setting.

2 PRELIMINARIES

Model setup. Let be a reproducing kernel Hilbert space (RKHS). Such a RKHS is induced by the reproducing kernel , which is a symmetric nonnegative definite function, and an inner product satisfying for any . The RKHS is equipped with the norm . The well-known Mercer’s theorem states that, under some regularity conditions, the kernel function can be written as , where is a sequence of decreasing eigenvalues and is a family of orthonormal basis functions. The smoothness of a function is characterized by the decaying rate of the eigenvalues . The major types of such decaying rates include the finite rank type, the exponentially decaying type, and the polynomially decaying type. The representative kernel function of these three major types are the polynomial kernel ( is an integer), the Gaussian kernel ( is the scale parameter and is the Euclidean norm), and the kernel , respectively. We refer to [14, 36] for more details.

Consider the nonparametric regression model

| (1) |

where is the response, is the predictors, is the unknown function to be estimated, and are i.i.d. normal random errors with zero mean and unknown variance . The kernel ridge regression estimator aims to find a projection of into the RKHS . Such an estimator can be written as

| (2) |

Here, the regularization parameter controls the trade-off between the goodness of fit of and the smoothness of it. A penalized least squares framework analogous to Equation (2) has been extensively studied in the literature of regression splines and smoothing splines [40, 13, 23, 16, 10, 33, 48, 37, 47, 24, 49, 29].

The well-known representer theorem [40] states that the minimizer of Equation (2) in the RKHS takes the form Let be the response vector, be the coefficient vector and be the kernel matrix such that the -th element equals . These coefficient vector can be estimated through solving the minimization problem as follows,

| (3) |

It is known that the solution of such a minimization problem has a closed form

| (4) |

where the regularization parameter can be selected based on the cross-validation technique, the Mallows’s Cp method [27], or the generalized cross-validation (GCV) criterion [41].

Although the solution of the minimization problem (3) has a closed-form, the computational cost for calculating the solution using Equation (4) is of the order , which is prohibitive when the sample size is considerable. In this paper, we focus on the divide-and-conquer approach for alleviating such a computational burden. In recent decades, there also exist a large number of studies that aim to develop low-rank matrix approximation methods to accelerate the calculation of kernel ridge regression estimates [31, 42, 32, 26, 30]. These approaches are beyond the scope of this paper. In practice, one can combine the divide-and-conquer approach with the aforementioned methods to further accelerate the calculation.

Background of the divide-and-conquer approach. The classical divide-and-conquer approach is easy to describe. Rather than solving the minimization problem (3) using the full sample, the divide-and-conquer approach randomly partitions the full sample into subsamples of equal sizes. Each subsample is then allocated to an independent local node. Next, minimization problems are solved independently on each local node based on the corresponding subsamples. The final estimate is simply the average of all the local estimates. The divide-and-conquer approach has proven to be effective in linear models [4, 21], partially linear models [53], nonparametric regression models [51, 52, 19, 35, 11], principal component analysis [43, 6], matrix factorization [25], among others.

Algorithm 1 summarizes the classical divide-and-conquer method under the kernel ridge regression setting. Such an algorithm reduces the computational cost for the estimation from to . The savings may be substantial as grows.

One difficulty in Algorithm 1 is how to choose the regularization parameter s. A natural way to determine the size of these regularization parameters is to utilize the standard approaches, e.g., the Mallows’s Cp criterion or the (GCV) criterion, based only on the local subsample . It is known that such a simple strategy may lead to a global estimate that suffers from suboptimal performance [52, 45]. To overcome the challenges, there has been a large number of studies dedicated to developing more effective methods of selecting the local regularization parameters in the recent decade. For example, [52] proposed to select the regularization parameter according to the order of the entire sample size instead of the subsample size . Recently, [45] proposed the distributed GCV method to select a global optimal regularization parameter for nonparametric regression under the classical divide-and-conquer setting.

3 MOTIVATION AND METHODOLOGY

Motivation. To motivate the development of the proposed method, we first re-examine the oversampling strategy. Such a strategy is well-known in imbalanced data analysis, where the labels can be viewed as skewed discrete response variables. [17, 15, 18]. Classical statistical and machine learning algorithms assume that the number of observations in each class is roughly at the same scale. In many real-world cases, however, such an assumption may not hold, resulting in imbalanced data. Imbalanced data pose a difficulty for classical learning algorithms, as they will be biassed towards the majority group. Despite the rareness, the minority class is usually more important from the data mining perspective, as it may carry useful and important information. An effective classification algorithm thus should take such unbalances into account. To tackle the imbalanced data, the oversampling strategy supplements the training set with multiple copies of the minority classes and keeps all the observations in the majority classes to make the whole dataset suitable for a standard learning algorithm. Such strategy has proven to be effective for achieving more robust results [17, 15, 18].

Intuitively, the data with a continuous skewed response can be considered as imbalanced data. In particular, if we divide the range of the skewed response into equally-spaced disjoint intervals, denoted by , then there will be a disproportionate ratio of observations in each interval. The intervals that contain more observations can be considered as the majority classes, and the ones that contain fewer observations can be considered as the minority classes. Recall that the classical divide-and-conquer approach utilizes a simple random subsample from the full sample to calculate the local estimate. Therefore, when the response variable is highly skewed, such a subsample could easily overlook the observations respecting the minority classes, resulting in unpleasant estimates.

Main algorithm. Inspired by the oversampling strategy, we propose to supplement the training data with multiple copies of the minority classes before applying the divide-and-conquer approach. In addition, analogous to the downsampling strategy, the subsample in each node should keep most of the observations from the minority classes. Let be the number of observations in the set . Let be the rounding function that rounds down the number to its nearest integer. The proposed method, called oversampling divide-and-conquer kernel ridge regression, is summarized in Algorithm 2.

|

We use a toy example to illustrate Algorithm 2 in Fig. 2. Suppose there are three nodes (), and the histogram of the response is divided into three slices (). The left panel of Fig. 2 illustrates the oversampling process. In particular, the observations are duplicated by certain times, such that the total number of observations respecting each slice is roughly equal to each other. The original observations and the duplicated observations are marked by tilted lines and horizontal lines, respectively. The right panel of Fig. 2 shows the next step. For each slice, the observations within such a slice are then randomly and evenly allocated to each of the nodes. The observations allocated to different nodes are marked by blue, red and purple, respectively. Finally, similar to the classical dacKRR approach, the local estimates are calculated and then are averaged to obtain the global estimate.

Implementation details and the computational cost. Analogous to Algorithm 1, a difficulty in Algorithm 2 is how to choose the regularization parameters ’s. Throughout this paper, we opt to use the same approach to select ’s as the one proposed in [52]. The number of slices can be set as a constant or be determined by Scott’s rule; see [34] for more details. Our empirical studies indicate that the performance of the proposed algorithm is robust to a wide range of choices of . In addition, to avoid the data dependency within a node raised by oversampling, we conduct a “de-duplicating” process before calculating the local estimates.

Consider the total number of observations in the training set after the oversampling and de-duplicating processes. Let denote such a number. When the response variable has a roughly uniform distribution, few observations would be copied, and thus one has . In such a case, the computational cost of Algorithm 2 is at the same order as that of the classical divide-and-conquer method. In the most extreme case that the most majority class contains almost all the observations, it can be shown that . Therefore, the computational cost of Algorithm 2 is at the order of . Consequently, when is a constant, again, the computational cost of Algorithm 2 is at the same order of the classical divide-and-conquer method. Otherwise, when and go to infinity, for example, when is determined by Scott’s rule [34] ( ), and , the computational cost of Algorithm 2 becomes . However, such a higher computational cost is associated with theoretical benefits, as will be detailed in the next section.

4 THEORETICAL RESULTS

Main theorem. In this section, we obtain the upper bounds on the risk of the proposed estimator and establish the main result in Theorem 4.1. We show our bounds contain a smaller asymptotic estimation variance term, compared to the bounds of the classical dacKRR estimator [52, 20]. The following assumptions are required to bound the terms in Theorem 4.1. Assumption 4 guarantees the spectral decomposition of the kernel via Mercer’s theorem. Assumption 4 is a regularity assumption on . These two assumptions are fairly standard. Assumption 4 requires that the basis functions are uniformly bounded. [52] showed that one could easily verify this assumption for a given kernel . For instance, this assumption holds for many classical kernel functions, e.g., the Gaussian kernel.

Assumption 1. The reproducing kernel is symmetric, positive definite, and square integrable.

Assumption 2. The underlying function .

Assumption 3. There exists some such that .

Let be the subset with size of allocated to the th node after de-duplicating, . We assume they are drawn i.i.d from an unknown probability measure . Let denote marginal distribution of . For brevity, we further assume the sample size for each node is the same. The bounds on the risk of the proposed estimator are given in Theorem 4.1, followed by the main corollary.

Theorem 4.1.

Corollary 4.2.

Assume the first two terms of the bounds in Theorem 4.1 are dominant, we have

| (5) |

With the properly chosen regularization parameter, most of the commonly used kernels, e.g., the kernels with polynomial eigendecay, can satisfy this assumption for Corollary 5 [9]. More discussions can be found in the Supplementary Material. The squared bias term for the classical dacKRR estimator is at the order of , which is the same as the one in Equation (5) [52]. However, different from the variance term in Equation (5), which equals , the variance term for the classical dacKRR estimator is at the order of . Our rate depends on the eigenvalues instead of the trace of kernel derived in [52]. This rate is tighter than that in [2] and is considered as the minimax rate.

Practical guidance on the selection of the parameter and . We now compare the risk of the classical dacKRR estimator with the proposed one under three different scenarios. Furthermore, we provide some practical guidance on the selection of the number of slides and the number of nodes . We denote the total sample size after oversampling as .

First, consider the scenario that as shown in Fig. 3(a). In this case, oversampling is unnecessary, thus the proposed estimate has the same risk as the classical dacKRR estimator.

Second, consider the scenario that the response variable has a slightly skewed distribution, i.e., one has as shown in Fig. 3(b). Under this scenario, the total sample size after de-duplicating is also in the same order of the original sample size, i.e. . Corollary 5 indicates the proposed estimate has the same risk as the classical dacKRR estimator.

Third, consider the scenario that the response variable has a highly skewed distribution. Under this scenario, the sample size in the “majorest” slide is in the same order of , and the sample size in the “minorest” slide, denoted as , is in the order of . In these cases, one has We then discuss under two cases, (1) when , especially, when is a constant; and (2) when . In case (1), the sample size after de-duplicating is in the same order of . The risk of then has the same order as the classical dacKRR estimator. In case (2), notice that , this indicates that should go to infinity as . In this scenario, we consider i.e., , then we have . Thus equation (5) becomes

| (6) |

For example, when is determined by Scott’s rule, one has , and we choose . Equation (6) indicates the proposed estimator has a smaller risk than the classical dacKRR estimator. Corollary 5 indicates that the parameters and regulate the trade-off between the computational time and the risk. Specifically, when is well-selected, a larger is associated with a longer computational time as well as a smaller risk.

|

5 SIMULATION RESULTS

To show the effectiveness of the proposed estimator, we compared it with the classical dacKRR estimator in terms of the mean squared error (MSE). We calculated the MSE for each of the estimators based on 100 replicates. In particular, , where is the true function and represents the estimator in the th replication, respectively. Through all the experiments in this section, we set the number of nodes . Gaussian kernel function was used for the kernel ridge regression. We followed the procedure in [52] to select the bandwidth for the kernel and the regularization parameters. For the proposed method, we divided the range of into slices according to Scott’s rule [34].

|

|

Recall that in Algorithm 2, each observation is copied for certain times, such that the total number of observations in each slice is roughly equal to each other. A natural question is how does the number of such copies affects the performance of the proposed method. In other words, if a fewer number of copies are supplemented to the training data, would the proposed method perform better or worse? To answer this question, we induced a constant to control the oversampling size. Specifically, for the th slice, , we duplicated the observations within such a slice for times, instead of times in Algorithm 2. We set . This procedure is equivalent to Algorithm 2 when .

Let be the -dimensional vector, such that all elements are equal to . We considered a function that has a highly skewed response, i.e.,

where , and represents the norm. We then simulated the data from Model (1) with , , and two different regression function ’s,

Uni-peak: , with ;

Double-peak: , with and .

Figure 4 shows the MSE versus different sample size under various settings. Each column represents a different , and each row represents a different . The classical dacKRR estimator and the full sample KRR estimator are labeled as blue lines and black lines, respectively. The proposed estimators are labeled as red lines, and the proposed method with , i.e., Algorithm 2, is labeled as solid red lines. The vertical bars represent the standard errors obtained from 100 replications. In Fig. 4, we first observed that the classical dacKRR estimator, as expected, does not perform well. We then observed that the proposed estimators perform consistently better than the classical estimator. Such an observation indicates that when the response is highly skewed, oversampling the observations respecting the minority values of the response helps improve the estimation accuracy. Finally, we observed that a larger value of is associated with a better performance. In particular, as the number of copies increases, the proposed estimator tends to have faster convergence rates. This observation supports Corollary 5, which states that a larger number of observations after the oversampling process is associated with a smaller estimation MSE.

Besides Scott’s rule (), we also considered other rules, e.g., the Sturge’s formula () [39] and the Freedman-Diaconis choice () [7]. Figure 5 compares the empirical performance of the proposed estimator w.r.t. different choices of ’s. For the setting of unskewed response (the first row), all methods share similar performance. For response-skewed settings (the second and the third row), all the three aforementioned rules yield better performance than fixed . Among all, Scott’s rule gives the best results. The simulation results are consistent with Corollary 4.2, which indicates that the proposed estimator shows advantages over the classical one.

Besides the impact of , we also studied the impact of . In addition, we have also applied the proposed strategy to other nonparametric regression estimators, say smoothing splines, under the divide-and-conquer setting. Such simulation results are provided in the Supplementary Material. These results indicated that the performance of the proposed method is robust the choice of and has the potential to improve the estimation of other nonparametric regression estimators under the divide-and-conquer setting. It is also possible to consider unequally-spaced slicing methods in the proposed method; however, such extensions are beyond the scope of this paper.

6 REAL DATA ANALYSIS

We applied the proposed method to a real-world dataset called Melbourne-housing-market 111Data source https://www.kaggle.com/anthonypino/ melbourne-housing-market.. The dataset includes housing clearance data in Melbourne from the year 2016 to 2017. Each observation represents a record for a sold house, including the total price and several predictors. Of interest is to predict the price-per-square-meter (range from 20 to 30000) for each sold house using the longitude and the latitude of the house, and the distance from the house to the central business district (CBD). Such a goal can be achieved by using kernel ridge regression.

|

We replicated the experiment one hundred times. In each replicate, we used stratified sampling to randomly pick of the observations as the testing set and the remaining as the training set. The Gaussian kernel function was used for the kernel ridge regression. We set the number of nodes . We followed the procedure in [52] to select the bandwidth for the kernel and the regularization parameters. The performance of different estimators was evaluated by the MSE, respecting the prediction on the testing set. The experiment was conducted in R using a Windows computer with 16GB of memory and a single-threaded 3.5Ghz CPU.

The left panel of Fig. 6 shows the MSE versus the different number of nodes , and the right panel of Fig. 6 shows the CPU time (in seconds) versus different . To the best of our knowledge, our work is the first approach that addresses the response-skewed issue under the dacKRR setting, while other approaches only considered discrete-response settings or cannot be easily extended to the divide-and-conquer scenario. Thus we only compare our method with the classical dacKRR approach. In Fig. 6, the blue lines and the red lines represent the classical dacKRR approach and the proposed approach, respectively. Vertical bars represent the standard errors, which are obtained from one hundred replicates. The black lines represent the results of the full sample KRR estimate, and the gray dashed lines represent its standard errors. We can see that the proposed estimate consistently outperforms the classical dacKRR estimate. In particular, we observe the MSE of the proposed estimate is comparable with the MSE of the full sample KRR estimate. The MSE for the classical dacKRR estimate, however, almost doubles the MSE for the full sample KRR estimate when the number of nodes is greater than one hundred. The bottom panel of Fig. 6 shows the CPU time of the proposed approach is comparable with the CPU time of the classical divide-and-conquer approach. All these observations are consistent with the findings in the previous section. Such observations indicate the proposed approach outperforms the classical dacKRR approach for response-skewed data without requiring too much extra computing time.

Appendix A SIMULATION STUDIES

A.1 Impact of The Number of Nodes

We considered the function as in the manuscript, i.e.,

where , and represents the norm. We then simulated the data from Model (1) with , , and two different regression function ’s,

Uni-peak: , with ;

Double-peak: , with and .

Figure 7 study the impact of the number of nodes . In Fig. 7, we fix and let varies. We observe that the proposed estimator consistently outperforms the classical one under all scenarios.

|

A.2 Application in Other Non-parametric Regression Methods

Besides the KRR estimator, we also applied the proposed response-adaptive partition strategy to other non-parametric regression methods. One example is the smoothing splines under the divide-and-conquer setting. In the simulation, we again study the impact of the over-sample size , the number of slices , and the number of nodes . We set the dimension , other settings are similar to the cases for KRR. The results are shown in Fig. 8. Each row of Fig. 8 shows the impact of and , respectively. The odd columns represent the estimation MSE, and the even columns represent the CPU time in seconds. The first two columns represent the results of the uni-peak setting, and the last two columns represent the results of the double-peak setting. We can see that the results show the similar pattern as in the KRR cases. These results indicate that the performance of the proposed method is robust for different nonparametric regressions.

|

Appendix B PROOFS

B.1 Proof of Theorem 4.1

The proof of Theorem 4.1 relies on the following bias-variance decomposition

where . The claim of Theorem 4.1 can be proved by combining the inequalities in Lemma B.1 and B.2. The minimax bound for risk is

With the properly chosen smoothing parameter, the first two terms in the upper bound are typically dominate for most of the commonly used kernels, e.g., the kernels for the Sobolev spaces, see [9]. For any kernel with -polynomial eigendecay, we obtain the optimal convergence rate for risk, i.e., , with for the finite number of nodes.

Lemma B.1.

Under Assumption 1-3, we have

Proof. The estimate minimizes the following empirical objective,

| (7) |

where (, ), are the data assigned to the first node, is a self-adjoint operator such that for , and . Note that the objective function (7) is Fréchet differentiable [22]. We can obtain

For notation simplicity, we define

where is the adjoint of . We then have that

which yields the estimate

where . Using the fact that , and plugging it into the equation above yields

| (8) |

Taking expectation on both sides of (8), we obtain

The difference between and can be written as

where . Thus, we have

| (9) |

where for . The equation above uses the fact that one can move between and inner products as follows

Let , be the orthonormal basis for and be the matrix based on the orthonormal basis. We then have , and the norm in (9) can be written as

where , , , and .

| (10) |

where the event for ,

and is the complement of . We use the fact that to obtain the inequality in (10). We now bound the first term on the right-hand side of (10).

The last inequality follows based on Definition 1 in [2]. For , we have

where the last inequality follows based on the spectral theorem. Detailed discussions can be found in [3] and [9, Chapter 9]. Applying the above inequality on the event when and , we obtain

for any .

For the second term in (10), we have that

where the last inequality follows based on Definition 1 in [2]. We complete the proof by applying Lemma 1 adapted from [5], which states that

Lemma B.2.

Under Assumption 1-3, we have

Proof. Note that

From (8), we thus have

| (11) |

Following similar techniques in Lemma 2.1, the norm of in (11) can be written as

By Von Neumann’s inequality and the assumption that for , we obtain

The rest of proof is similar to that in Lemma 2.2. Following the same technique in (10), we have

| (12) |

We now bound the first term on the right-hand side of (12). By Von Neumann inequality, we have

| (13) |

From Cauchy-Schwartz inequality, we have the following inequality for any

From Definition 1 in [2], we also have

and

Thus, we have

| (14) |

Plugging (14) into (13), we have

For the second term on the right-hand side of (12), we have

where the last inequality follows by Von Neumann’s inequality. From (14) and Definition 1 in [2], it is easy to verify that

Applying Von Neumann’s inequality again, we have

which is bounded by on the event . We complete the proof by setting and using the fact that

References

- [1] A. A. Afifi, J. B. Kotlerman, S. L. Ettner, and M. Cowan. Methods for improving regression analysis for skewed continuous or counted responses. Annu. Rev. Public Health, 28:95–111, 2007.

- [2] F. Bauer, S. Pereverzev, and L. Rosasco. On regularization algorithms in learning theory. Journal of Complexity, 23(1):52–72, 2007.

- [3] A. Caponnetto and E. De Vito. Optimal rates for the regularized least-squares algorithm. Foundations of Computational Mathematics, 7(3):331–368, 2007.

- [4] C. P. Chen and C.-Y. Zhang. Data-intensive applications, challenges, techniques and technologies: A survey on big data. Information Sciences, 275:314–347, 2014.

- [5] L. H. Dicker, D. P. Foster, and D. Hsu. Kernel ridge vs. principal component regression: Minimax bounds and the qualification of regularization operators. Electronic Journal of Statistics, 11(1):1022–1047, 2017.

- [6] J. Fan, D. Wang, K. Wang, and Z. Zhu. Distributed estimation of principal eigenspaces. Annals of statistics, 47(6):3009, 2019.

- [7] D. Freedman and P. Diaconis. On the histogram as a density estimator: L2 theory. Zeitschrift für Wahrscheinlichkeitstheorie und verwandte Gebiete, 57(4):453–476, 1981.

- [8] S. A. Geer and S. van de Geer. Empirical Processes in M-estimation. Cambridge University Press, 2000.

- [9] C. Gu. Smoothing Spline ANOVA Models. Springer Science & Business Media, 2013.

- [10] C. Gu and Y.-J. Kim. Penalized likelihood regression: General formulation and efficient approximation. Canadian Journal of Statistics, 30(4):619–628, 2002.

- [11] Z.-C. Guo, L. Shi, and Q. Wu. Learning theory of distributed regression with bias corrected regularization kernel network. The Journal of Machine Learning Research, 18(1):4237–4261, 2017.

- [12] G. Haixiang, L. Yijing, J. Shang, G. Mingyun, H. Yuanyue, and G. Bing. Learning from class-imbalanced data: Review of methods and applications. Expert Systems with Applications, 73:220–239, 2017.

- [13] T. Hastie. Pseudosplines. Journal of the Royal Statistical Society. Series B (Methodological), pages 379–396, 1996.

- [14] T. Hastie, R. Tibshirani, and J. Friedman. The elements of statistical learning: data mining, inference, and prediction. Springer Science & Business Media, 2009.

- [15] H. He and E. A. Garcia. Learning from imbalanced data. IEEE Transactions on knowledge and data engineering, 21(9):1263–1284, 2009.

- [16] X. He, L. Shen, and Z. Shen. A data-adaptive knot selection scheme for fitting splines. IEEE Signal Processing Letters, 8(5):137–139, 2001.

- [17] N. Japkowicz and S. Stephen. The class imbalance problem: A systematic study. Intelligent data analysis, 6(5):429–449, 2002.

- [18] B. Krawczyk. Learning from imbalanced data: open challenges and future directions. Progress in Artificial Intelligence, 5(4):221–232, 2016.

- [19] S.-B. Lin, X. Guo, and D.-X. Zhou. Distributed learning with regularized least squares. The Journal of Machine Learning Research, 18(1):3202–3232, 2017.

- [20] M. Liu, Z. Shang, and G. Cheng. How many machines can we use in parallel computing for kernel ridge regression? arXiv preprint arXiv:1805.09948, 2018.

- [21] J. Lu, G. Cheng, and H. Liu. Nonparametric heterogeneity testing for massive data. arXiv preprint arXiv:1601.06212, 2016.

- [22] D. G. Luenberger. Optimization by vector space methods. John Wiley & Sons, 1997.

- [23] Z. Luo and G. Wahba. Hybrid adaptive splines. Journal of the American Statistical Association, 92(437):107–116, 1997.

- [24] P. Ma, J. Z. Huang, and N. Zhang. Efficient computation of smoothing splines via adaptive basis sampling. Biometrika, 102(3):631–645, 2015.

- [25] L. Mackey, A. Talwalkar, and M. I. Jordan. Divide-and-conquer matrix factorization. Advances in neural information processing systems, 24, 2011.

- [26] M. W. Mahoney. Lecture notes on randomized linear algebra. arXiv preprint arXiv:1608.04481, 2016.

- [27] C. L. Mallows. Some comments on Cp. Technometrics, 42(1):87–94, 2000.

- [28] D. McGuinness, S. Bennett, and E. Riley. Statistical analysis of highly skewed immune response data. Journal of immunological methods, 201(1):99–114, 1997.

- [29] C. Meng, X. Zhang, J. Zhang, W. Zhong, and P. Ma. More efficient approximation of smoothing splines via space-filling basis selection. Biometrika, 107:723–735, 2020.

- [30] C. Musco and C. Musco. Recursive sampling for the Nyström method. In Advances in Neural Information Processing Systems, pages 3833–3845, 2017.

- [31] A. Rahimi and B. Recht. Random features for large-scale kernel machines. In Advances in neural information processing systems, pages 1177–1184, 2008.

- [32] A. Rudi, R. Camoriano, and L. Rosasco. Less is more: Nyström computational regularization. Advances in Neural Information Processing Systems, 28:1657–1665, 2015.

- [33] D. Ruppert. Selecting the number of knots for penalized splines. Journal of computational and graphical statistics, 11(4):735–757, 2002.

- [34] D. W. Scott. Multivariate Density Estimation: Theory, Practice, and Visualization. John Wiley & Sons, 2015.

- [35] Z. Shang and G. Cheng. Computational limits of a distributed algorithm for smoothing spline. The Journal of Machine Learning Research, 18(1):3809–3845, 2017.

- [36] J. Shawe-Taylor and N. Cristianini. Kernel methods for pattern analysis. Cambridge university press, 2004.

- [37] J. C. Sklar, J. Wu, W. Meiring, and Y. Wang. Nonparametric regression with basis selection from multiple libraries. Technometrics, 55(2):189–201, 2013.

- [38] I. Steinwart, D. R. Hush, C. Scovel, et al. Optimal rates for regularized least squares regression. In COLT, pages 79–93, 2009.

- [39] H. A. Sturges. The choice of a class interval. Journal of the american statistical association, 21(153):65–66, 1926.

- [40] G. Wahba. Spline Models for Observational Data. SIAM, 1990.

- [41] G. Wahba and P. Craven. Smoothing noisy data with spline functions: Estimating the correct degree of smoothing by the method of generalized cross-validation. Numerische Mathematik, 31:377–404, 1978.

- [42] S. Wang. A practical guide to randomized matrix computations with matlab implementations. arXiv preprint arXiv:1505.07570, 2015.

- [43] S. X. Wu, H.-T. Wai, L. Li, and A. Scaglione. A review of distributed algorithms for principal component analysis. Proceedings of the IEEE, 106(8):1321–1340, 2018.

- [44] C. Xu, Y. Zhang, R. Li, and X. Wu. On the feasibility of distributed kernel regression for big data. IEEE Transactions on Knowledge and Data Engineering, 28(11):3041–3052, 2016.

- [45] D. Xu and Y. Wang. Divide and recombine approaches for fitting smoothing spline models with large datasets. Journal of Computational and Graphical Statistics, 27(3):677–683, 2018.

- [46] G. Xu, Z. Shang, and G. Cheng. Distributed generalized cross-validation for divide-and-conquer kernel ridge regression and its asymptotic optimality. Journal of computational and graphical statistics, 28(4):891–908, 2019.

- [47] Y. Yuan, N. Chen, and S. Zhou. Adaptive B-spline knot selection using multi-resolution basis set. Iie Transactions, 45(12):1263–1277, 2013.

- [48] H. H. Zhang, G. Wahba, Y. Lin, M. Voelker, M. Ferris, R. Klein, and B. Klein. Variable selection and model building via likelihood basis pursuit. Journal of the American Statistical Association, 99(467):659–672, 2004.

- [49] J. Zhang, H. Jin, Y. Wang, X. Sun, P. Ma, and W. Zhong. Smoothing spline ANOVA models and their applications in complex and massive datasets. Topics in Splines and Applications, page 63, 2018.

- [50] T. Zhang. Learning bounds for kernel regression using effective data dimensionality. Neural Computation, 17(9):2077–2098, 2005.

- [51] Y. Zhang, J. Duchi, and M. Wainwright. Divide and conquer kernel ridge regression. In Conference on learning theory, pages 592–617, 2013.

- [52] Y. Zhang, J. Duchi, and M. Wainwright. Divide and conquer kernel ridge regression: A distributed algorithm with minimax optimal rates. The Journal of Machine Learning Research, 16(1):3299–3340, 2015.

- [53] T. Zhao, G. Cheng, and H. Liu. A partially linear framework for massive heterogeneous data. Annals of statistics, 44(4):1400, 2016.