Optimizing Traffic Lights with Multi-agent Deep Reinforcement Learning and V2X communication

Abstract

We consider a system to optimize duration of traffic signals using multi-agent deep reinforcement learning and Vehicle-to-Everything (V2X) communication. This system aims at analyzing independent and shared rewards for multi-agents to control duration of traffic lights. A learning agent traffic light gets information along its lanes within a circular V2X coverage. The duration cycles of traffic light are modeled as Markov decision Processes. We investigate four variations of reward functions. The first two are unshared-rewards: based on waiting number, and waiting time of vehicles between two cycles of traffic light. The third and fourth functions are: shared-rewards based on waiting cars, and waiting time for all agents. Each agent has a memory for optimization through target network and prioritized experience replay. We evaluate multi-agents through the Simulation of Urban MObility (SUMO) simulator. The results prove effectiveness of the proposed system to optimize traffic signals and reduce average waiting cars to 41.5 as compared to the traditional periodic traffic control system.

Keywords:

Deep reinforcement learning, V2X, deep learning, traffic light control.I Introduction

Existing traffic signal management is performed: either leveraging limited real-time traffic information or by fixed periodic traffic signal timings [1]. This information is widely obtained from underlying inductive loop detectors. However, this input is processed in a limited domain to estimate better duration of red/green signals. The advances in mobile communication networks and sensors technology have made possible to obtain real-time traffic information [2]. An artificial brain can be implemented with deep reinforcement learning (DRL). DRL is based on three main components: states in the environment, action space and the scalar reward from each action [3]. A popular success of DRL is AlphaGo [4], and its successor AlphaGo Zero [5]. The main goal is to maximize the reward by choosing the best actions.

In some research works, state is defined as the number of vehicles waiting at an intersection or the waiting queue length [6], [7]. However, it is investigated by [8] that real traffic environment cannot be fully captured leveraging the number of waiting vehicles or the waiting queue length. Thanks to the rapid development of deep learning, large state problems have been addressed with deep neural networks paradigm [9]. In [10], [11] authors have proposed to resolve traffic control problem with DRL. However two limitations exist in the current studies: 1) fixed time intervals of traffic lights, which is not efficient in some studies; 2) random sequences of traffic signals, which may cause safety and comfort issues. In [12] the authors have controlled duration in a cycle based on information extracted from vehicles and sensors networks which can reduce average waiting time to 20%.

In this letter we investigate for the first time multiple experienced traffic operators to control traffic in each step at multiple traffic lights. This idea assumes that control process can be modeled as a Markov Decision Process (MDP). The system experiences the control strategy based on the MDP by trial and error. Recently, a Q-learning based method is proposed by [18] showing better performance than fixed period policy. An linear function is proposed to achieve more effective traffic flow management with a high traffic flow [19]. But neither tabular Q learning nor linear function methods could support the increasing size of traffic state space and accurate estimation of Q value in a real scenario [20]. Gao et al. [21] has proposed a DRL method with a change in cumulative staying time as a reward. A CNN is employed to map states to rewards [21]. H. Jiang has analyzed nonzero-sum games with multi-players by using adaptive dynamic programming (ADP) [22]. Li et al. proposed a single intersection control method based on DQN to focus on a local optimum [23]. Pol et al. combined DQN with a max-plus method to achieve cooperation between traffic lights [25]. Xiaoyuan Liang et al. proposed a model incorporating multiple optimization elements for traffic lights and the simulation on SUMO has shown efficiency of the model [12]. However, most of the works focused on single-agent controlling signals for an intersection.

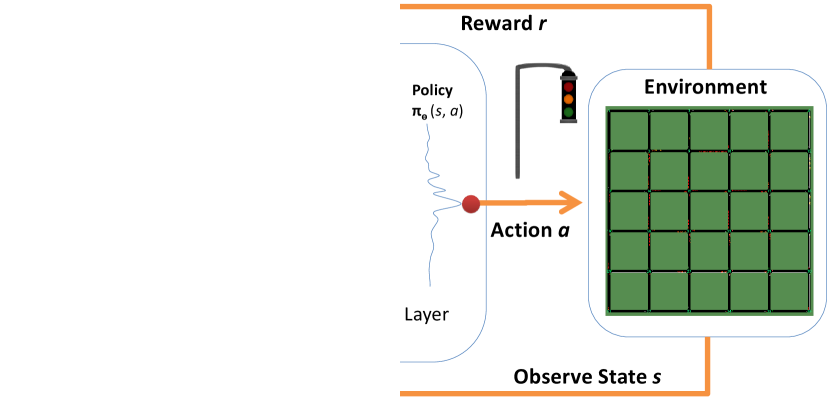

II System Model

The proposed system is shown in Fig. 1. The model for each agent is based on four items . Let is possible state space, and is a state (). is possible action space, and is an action () and is reward space. Let is the transition probability function space from one state to next state. A series of consequent actions is policy . Learning an optimal policy to maximize the cumulative expected reward is main goal. An agent at state takes an action to reach next state , gets the reward .

A four-tuple represents this situation as ,,,. The state transition occurs at a discrete step in . Let is cumulative reward function in future by executing an action at state . Let is reward at th step, and (1) gives for :

| (1) |

The parameter is a discount factor in , decides how much importance should be given to recent and future rewards. The optimal policy can be acquired through several episodes in the learning process. Calculation of optimal is based on the optimal values of the succeeding states represented by the Bellman optimality (2):

| (2) |

It can be solved by dynamic programming keeping finite states for less computational burden. However, the values can also be estimated by a function for larger number of states.

II-A Problem formulation

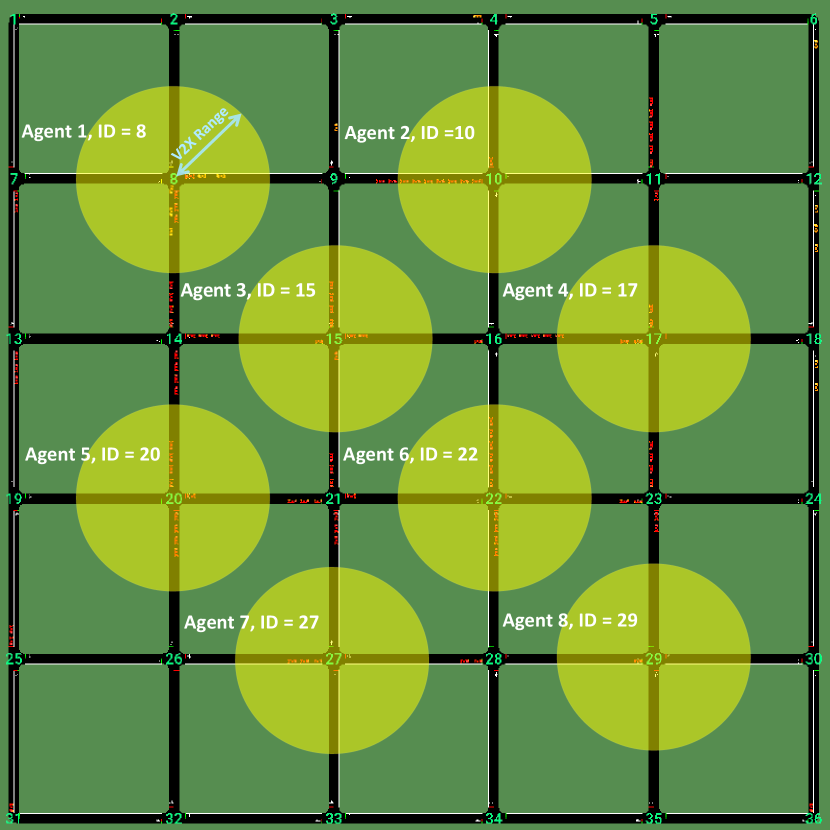

The environment (road traffic) is shared by the agents. Let is total number of controlled lanes of an agent . Where and . The number of waiting cars is where is a lane. The reward is considering a case and . Figure 2 shows the multi-gent traffic scenario under study. We aim to minimize average of in each and varying number of multi-agents as described in problem (P):

| (3) |

II-A1 States

The states are number of vehicles on road for each lane of the traffic light agents. The number of vehicles are acquired from V2X communication in DSRC mode [26]. This reduces number of states to the controlled lanes for a multi-lane traffic intersection reducing computational burden. The length of road is defined as . The state is a four-value vector such that each element represents number of vehicles respectively in lane 0, lane 1, lane 2 and lane3 (North, East, South, West).

II-A2 Actions

The actions space decides duration of each phase in the next cycle. The duration of two phase changes between two consecutive cycles is modeled as a high-dimension MDP. The phase remains constant during seconds. Let are durations of 4 phases. The duration of one phase in the next cycle will be incremented by if the same action is chosen by an agent. Repeating an action will increase duration of the same phase. To investigate feasibility of actions, we assume that probability of phase transition for action to action is . Let is the probability that a chosen action starting from will go to for the first time after steps. An agent may take too long time to choose an unvisited action if there are no bounded conditions. The probability that an action will be chosen after action in one action-step is as in (4):

| (4) |

The first passage probability after action-steps can be generalized as (5):

| (5) |

Let be that probability that an agent at action will eventually take at least once in transitions then it will be the sum of all first passage probabilities (6):

| (6) |

The feasible operation of the proposed system is possible if and only if is transient in nature which is shown by (7):

| (7) |

It can achieved by setting a threshold for the maximum waiting number of vehicles.

II-A3 Rewards

Our study aims at decreasing in each lane. This proportionally reduces cumulative waiting time. In contrast to previous research work of single agent we argue that real-life scenarios consist of multiple traffic lights and the learning process of one agent may not be effective in reducing traffic congestion in the neighborhood. The reason is that, once an agent performs good at an intersection, then the congestion at the connected roads of this agent will be reduced causing increase in traffic flow which will result in severe traffic jam in the neighboring intersections. To investigate this we consider four cases in .

II-A4 Agents with unshared-rewards

In first case the reward for an agent , is accumulative waiting number of vehicles in its vicinity. Let is total number of lanes of agent . The reward , for case 1 is given by (8):

| (8) |

The reward in the second case is accumulative waiting time of vehicles in an agent’s vicinity. Let is total waiting time of all vehicles in , (9) gives the reward for a step ,

| (9) |

II-A5 Agents with shared-rewards

The third case considers common aim for each agent. The actions of agents are selected to minimize the overall waiting number of cars of all agents. Therefore, reward for each agent is the accumulative waiting number of vehicles in its own as well as other agents vicinity. Let number of agents are selected for the common environment, is total number of agents except the agent , the reward is given by (10):

| (10) |

In fourth case we consider waiting time experienced by all agents as the shared reward for an agent. It means that is accumulative waiting time of vehicles in its own and other agents vicinity as expressed in (11):

| (11) |

Variable is for total waiting time of all vehicles in .

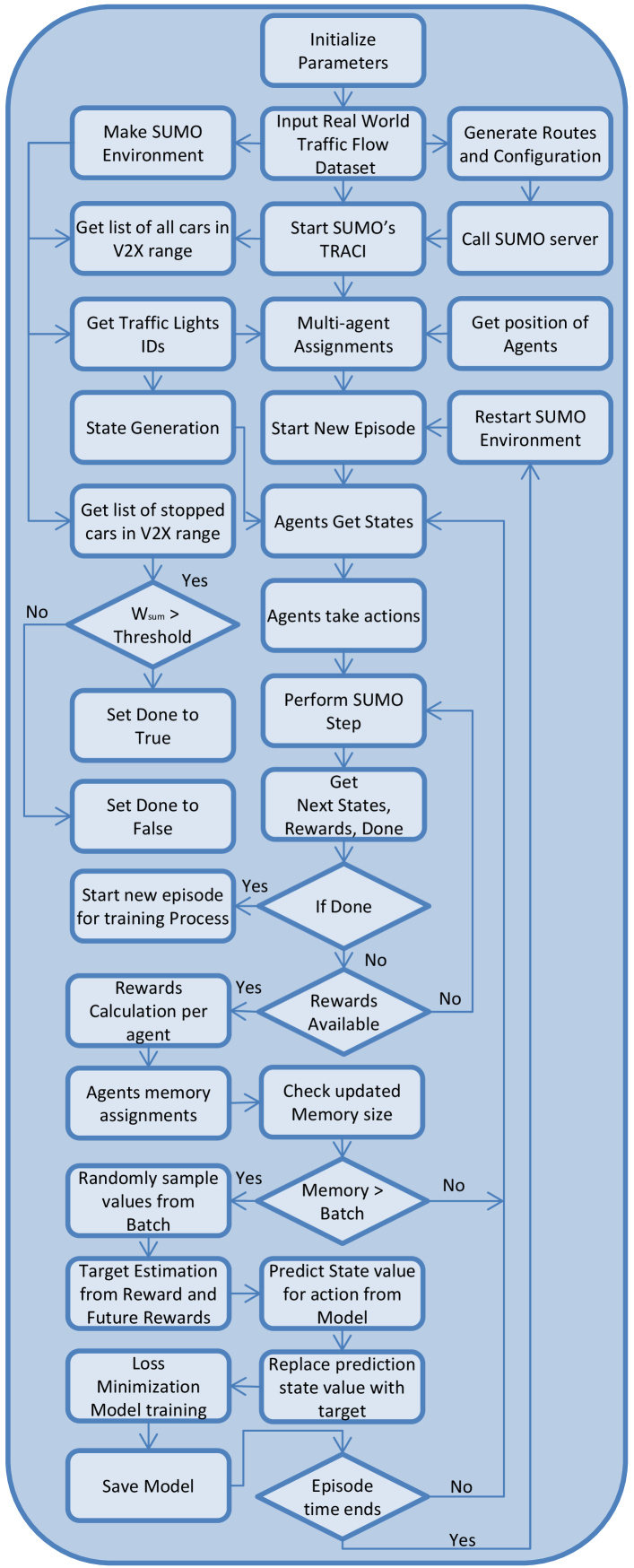

II-B Process flow of the proposed model

Figure 3 shows process flow of the proposed multi-agent model. The initialization parameters and their values are in Table I. The proposed algorithm reads the dataset of traffic flow per lane and formulate the vehicle flow rate from real world domain to SUMO domain in terms of steps.

We choose this formulation to assign vehicle flow rate or arrival probability to each vehicle according to configuration 1 of “Huawei 2019 Vehicle Scheduling Contest” [24]. The purpose of selecting this online dataset is to establish a standard comparison for the research. A total of 128 cars are used. The process of agent training starts by initializing the SUMO environment. The SUMO environment imitates the real world road network with traffic lights and vehicles. Each agent is responsible for its traffic light region. The V2X coverage is limited to meter radius of a circle around the traffic light intersection. The agent gets information of vehicles in each connected roads under the coverage area.

Each lane is given equal importance in the calculation of reward function. All agents take actions in a predefined cycle duration. The agents act upon their respective states either using experience replay or based on the random decision under exploration. The experience replay is used individually by each agent to minimize its loss. The loss is difference between target and prediction. A separate neural network acts as a function approximator known as Q-network with weights . The Q-network is trained by minimizing the sequence of loss functions which changes in each th iteration is shown in Equation (12):

| (12) |

where is the probability distribution over states and action sequences and for the th iteration is given by the Equation (13):

| (13) |

The weights of previous iteration are kept fixed during the optimization of loss function . The term is SUMO environment. The aim is to predict the Q value for a given state and action, get the target value and replace predicted state for that action with the target value. The targets are dependent on the Q network weights. Let is a temporary variable. The differentiation of the loss function with respect to the weights gives gradient in the form of Equation (14):

| (14) |

The proposed multi-agent DRL is a model-free approach. It solves the tasks by directly using the samples from SUMO environment and does not require the estimate of . Each agent learns its greedy strategy and follows a behaviour distribution ensuring adequate state space exploration. The learning behaviour distribution follows -greedy strategy, which means 1- is selected for the exploitation and random action is selected with the probability .

| Parameter | Value |

|---|---|

| Learning Rate | 0.001 |

| Memory Size | 10000 |

| Epsilon initial | .95 |

| Epsilon final | 0.01 |

| Epsilon Decay rate | 0.001 |

| Minibatch Size | 32 |

| Discount Factor | 0.95 |

| Target network activation ReLU | beta 0.01 |

| Loss metric | MSE |

| Optimizer | Adam |

| V2X circular coverage area | 45,216 |

| Episodes | 60 |

| Fully connected Hidden Layers | 3 |

| Nodes per each hidden layer | 24, 24, 24 |

III Evaluation

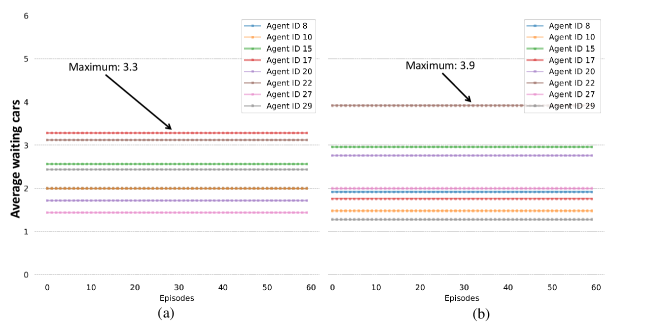

The scenario is a 66 intersections scenario with 2, 4, 8 multi-agents are selected as shown in Fig. 3. There are a total of 128 vehicles that randomly enter the scenario from various intersections. The parameters used for training the deep neural network are in Table I. Figure 4 shows the average waiting cars at 8 intersections for the scenario. In Fig. 4(a) we have kept 30 s duration for each phase. Similarly in Fig. 4(b) the duration is 40 s. It is noted that Agent IDs are reference intersection ID. The agents are not taking actions in the Fig. 5. It is observed that traffic at intersection marked with ID 22 has a higher (greater than 3) average number of waiting cars in both 30 s and 40 s cases. All intersections have more than 1 average waiting cars during all episodes.

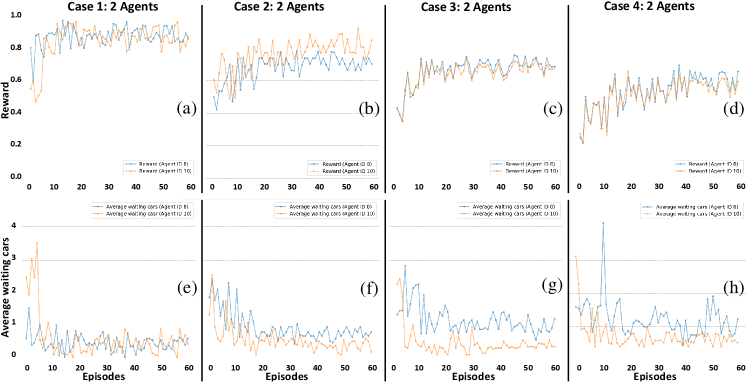

We divide the parameters for evaluation as: model parameters and traffic parameters. The model is trained iteratively in each episode for 500 s. The reward and are aggregated in each episode. The performance of agents are compared for , , and . The rewards in Fig. 5(a) are for agents with IDs 8 and 10 and evaluated with . Similarly Fig. 5(b) shows corresponding rewards evaluated by using . The shared-rewards and are respectively presented in Fig. 5(c) and Fig. 5(d). The effects of using different reward functions are shown below for each case in Fig. 5(e)-(h). It is observed that in case 1 both agents have performed better in maximizing their rewards and minimizing the number of waiting cars in their connected lanes. Agent 10 performed better than agent 8 for the cases 2, 3 and 4. The reward for the agent 10 is higher than agent 8 and the corresponding results of average waiting cars also show less average waiting cars for the agent 10 as compared to agent 8.

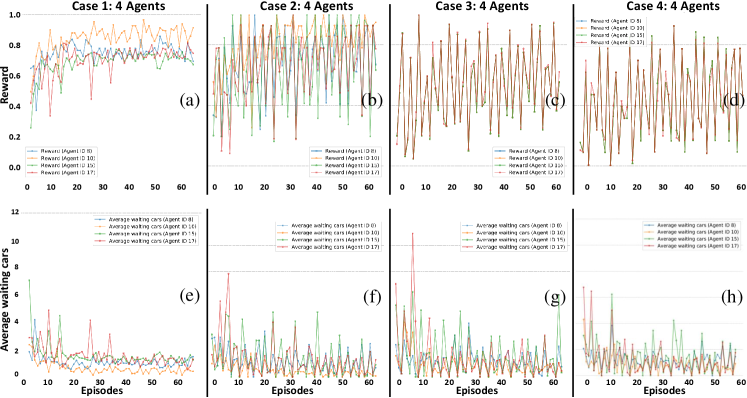

Figure 6 shows results of 4 agents with IDs 8, 10, 15, and 17 by experimenting the same four cases using the similar scenario. It is observed in Fig. 6(a) that agent 10 performs better than other agents as the average number of waiting cars under agent 10 are less than others. In Fig. 6(b) all agents try to perform better by fluctuating their rewards. These fluctuations are also reflected in the number of waiting cars as shown in Fig. 6(f). Interesting similar rewards are observed in the shared-rewards cases (3,4) in Fig 6(c) and Fig 6(d).

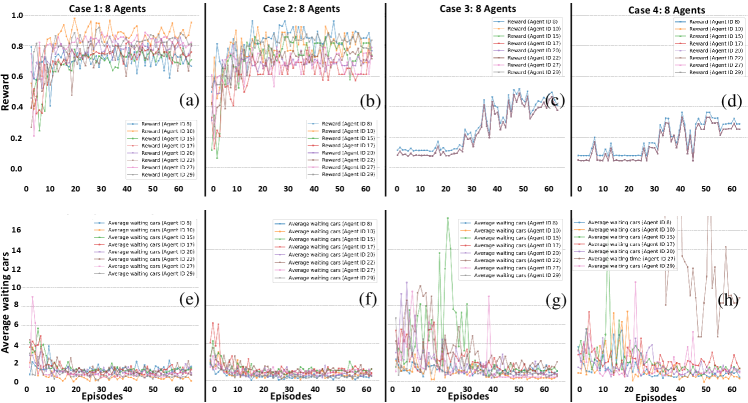

Average number of waiting cars are also similar as shown in Fig. 6(g) and Fig. 6(h). The results for the 8 agents are shown in Fig. 7 with IDs 8,10,15, 17, 20, 22, 27, and 29. The case 1 outperforms other cases in Fig 7(a). All agents tried to maximize their rewards showing better results in Fig. 7(a)-(b) and Fig. 7(e)-(f). Peak average waiting cars in Fig. 7 (f) is 2.1 as compared to 3.9 (see Fig. 4(b)) after 15 episodes, which means a decrease of 41.5 . On the other hand all rewards become same for the agents as expected. However, the shared-reward schemes performed poorly as compare to unshared-rewards. The reason behind this low performance is failure to achieve better actions that could maximize the shared reward. The actions of one agent produces the environment conditions that could negatively disturb the rewards for other agents. Agents 8, 10, 17, and 29 relatively performed better even with reduced shared rewards.

IV Conclusion

In this article we have presented the performance of deep reinforcement learning under multi-agent V2X driven traffic control system. We have observed that multi-agents with individual rewards considering waiting number of cars is a better choice as compared to the average waiting time. On the other hand shared-rewards based cases do not perform better. Shared-rewards make the situation more competitive. This competition should be further investigated using other techniques of deep reinforcement learning. We have also observed that for larger number of agents, the reward based on waiting time is the better choice.

References

- [1] X. Liang, T. Yan, J. Lee, and G. Wang, “A distributed intersection management protocol for safety, efficiency, and driver’s comfort,” IEEE Internet Things J., vol. 5, no. 3, pp. 1924-1935, Jun. 2018.

- [2] S. El-Tantawy, B. Abdulhai, and H. Abdelgawad, “Design of reinforcement learning parameters for seamless application of adaptive traffic signal control,” J. Intell. Transp. Syst., vol. 18, no. 3, pp. 227-245, Jul. 2014.

- [3] R. S. Sutton and A. G. Barto, “Reinforcement Learning: An Introduction”, vol. 1, no. 1. Cambridge, MA, USA: MIT Press, Mar. 1998.

- [4] D. Silver et al., “Mastering the game of go with deep neural networks and tree search,” Nature, vol. 529, no. 7587, pp. 484-489, Jan. 2016.

- [5] D. Silver et al., “Mastering the game of go without human knowledge,” Nature, vol. 550, no. 7676, pp. 354-359, Oct. 2017.

- [6] S. El-Tantawy, B. Abdulhai, and H. Abdelgawad, “Design of reinforce- ment learning parameters for seamless application of adaptive traffic signal control,” J. Intell. Transp. Syst., vol. 18, no. 3, pp. 227-245, Jul. 2014.

- [7] M. Abdoos, N. Mozayani, and A. L. Bazzan, “Holonic multi-agent system for traffic signals control,” Eng. Appl. Artif. Intell., vol. 26, no. 5, pp. 1575-1587, May/Jun. 2013.

- [8] W. Genders and S. Razavi, “Using a deep reinforcement learning agent for traffic signal control,’ PrePrint, 2016.

- [9] V. Mnih et al., “Human-level control through deep reinforcement learning,” Nature, vol. 518, no. 7540, pp. 529-533, Feb. 2015.

- [10] L.Li, Y.Lv, and F.-Y.Wang, “Traffic signal timing via deep reinforcement learning,” IEEE/CAA J. Automatica Sinica, vol. 3, no. 3, pp. 247-254, Jul. 2016.

- [11] E. van der Pol, “Deep reinforcement learning for coordination in traffic light control,” Master’s thesis, Dept. Artif. Intell., Univ. Amsterdam, Amsterdam, The Netherlands, Aug. 2016.

- [12] X. Liang , X. Du, G. Wang, and Z. Han, “A Deep Reinforcement Learning Network for Traffic Light Cycle Control,” IEEE Tans. on vehicular Tech. vol. 68, No. 2, Feb 2019.

- [13] S.Chiu and S.Chand, “Adaptive traffic signal control using fuzzy logic, ”in Proc. 1st IEEE Regional Conf. Aerosp. Control Syst. ,Apr.1993.

- [14] Kanungo A,Sharma A,Singla C. “Smart traffic light switchhing and traffic density calculations in video processing.” Proceeding of the Conference on Recent Advances in Engineering and Computational Sciences.Chandigarh, India, 2014.

- [15] Chao K,Chen P. “An intelligent traffic flow control system based on radio frequency identification and Wireless Sensor Networks.International Journal of Distributed Sensor Networks,” vol. 2014, Article ID 694545,10 pages, 2014.

- [16] F. V. Webster, “Traffic signal settings,” Dept. Sci. Ind. Res., Nat. Acad.Sci. Eng. Med., London, U.K., Rep. 39, 1958.

- [17] A. J. Miller, “Settings for fixed-cycle traffic signals,” J. Oper. Res. Soc.,vol. 14, no. 4, pp. 373 C386, 1963.

- [18] Abdulhai, Baher, Rob Pringle, and Grigoris J. Karakoulas. “Reinforcement learning for true adaptive traffic signal control”. Journal of Transportation Engineering, 2003.

- [19] Wiering, Marco. “Multi-agent reinforcement learning for traffic light control”. ICML. 2000.

- [20] S. El-Tantawy, B. Abdulhai, and H. Abdelgawad, ”Design of reinforcement learning parameters for seamless application of adaptive traffific signal control,” J. Intell. Transp. Syst., vol. 18, no. 3, pp. 227 C245, Jul.2014.

- [21] J. Gao, Y. Shen, J. Liu, M. Ito, and N. Shiratori, “Adaptive traffific signal control: Deep reinforcement learning algorithm with experience replay and target network,” unpublished paper, 2017.

- [22] H. Jiang, H. Zhang, Y. Luo, and J. Han, “Neural-network-based robust control schemes for nonlinear multiplayer systems with uncertainties via adaptive dynamic programming,” IEEE Trans. Syst., Man, Cybern., Syst., vol. 49, no. 3, pp. 579 C588, Mar. 2019.

- [23] Li, Li, Yisheng Lv, and Fei-Yue Wang. “Traffic signal timing via deep reinforcement learning”. IEEE/CAA Journal of Automatica Sinica, 2016.

- [24] Run the world: Code Craft 2019. Available online:https://codecraft.huawei.com

- [25] E. van der Pol, “Deep reinforcement learning for coordination in traffic light control,” Master’s thesis, Dept. Artif. Intell., Univ. Amsterdam, Amsterdam, The Netherlands, Aug. 2016.

- [26] J. B. Kenney, ”Dedicated Short-Range Communications (DSRC) Standards in the United States,” in Proceedings of the IEEE, vol. 99, no. 7, pp. 1162-1182, July 2011.