Optimizing for ROC Curves on Class-Imbalanced Data by Training over a Family of Loss Functions

Abstract

Although binary classification is a well-studied problem in computer vision, training reliable classifiers under severe class imbalance remains a challenging problem. Recent work has proposed techniques that mitigate the effects of training under imbalance by modifying the loss functions or optimization methods. While this work has led to significant improvements in the overall accuracy in the multi-class case, we observe that slight changes in hyperparameter values of these methods can result in highly variable performance in terms of Receiver Operating Characteristic (ROC) curves on binary problems with severe imbalance. To reduce the sensitivity to hyperparameter choices and train more general models, we propose training over a family of loss functions, instead of a single loss function. We develop a method for applying Loss Conditional Training (LCT) to an imbalanced classification problem. Extensive experiment results, on both CIFAR and Kaggle competition datasets, show that our method improves model performance and is more robust to hyperparameter choices. Code is available at https://github.com/klieberman/roc_lct.

1 Introduction

Consider a classifier which takes images of skin lesions and predicts whether the lesions are melanoma Rotemberg et al. (2020). Such a system could be especially valuable in underdeveloped countries where expert resources for diagnosis are scarce Cassidy et al. (2022). Classifying melanoma from images is a problem with class imbalance since benign lesions are far more common than melanomas. Furthermore, the accuracy on the melanoma (minority) class is much more important than the accuracy on the benign (majority) class because predicting a benign lesion as melanoma would result in the cost of a biopsy while predicting a melanoma lesion as benign could result in the melanoma spreading before the patient can receive appropriate treatment.

In this case, overall accuracy, even on a balanced test set, is clearly an inadequate metric, as it implies that the accuracies on both classes are equally important. Instead, Receiver Operating Characteristic (ROC) curves are better suited for such problems Egan (1975). These curves plot the tradeoff between the true positive rate (TPR) on the y-axis and the false positive rate (FPR) on the x-axis over a range of classification thresholds. Unlike scalar metrics (e.g., overall accuracy on a balanced test set or ), ROC curves show model performance over a wide range of classification thresholds. This allows practitioners to understand how the model’s performance changes based on different classification thresholds and choose the best tradeoff for their needs. ROC curves can also be summarized by their Area Under the Curve (AUC) Egan (1975). Furthermore, both ROC curves and AUC have several mathematical properties which make them preferred to alternative precision-recall curves Flach & Kull (2015)111We provide definitions and visualizations of commonly-used metrics for binary problems with imbalanced data in Appendix A.

Although binary problems, like melanoma classification, are often cited as the motivation for class imbalance problems and ROC curves are the de facto metric of choice for such problems, the class imbalance literature largely focuses on improving performance on longtailed multi-class datasets in terms of overall accuracy on a balanced test set. We instead focus on binary problems with severe imbalance and propose a method, which adapts existing techniques for handling class imbalance, to optimize for ROC curves in these binary scenarios.

In particular, we adapt Vector Scaling (VS) loss, which is a general loss function for imbalanced learning with strong theoretical backing Kini et al. (2021). VS loss is a modification of Cross-entropy loss that adjusts the logits via additive and multiplicative factors. There is theory supporting the use of both of these factors: multiplicative factors are essential for the terminal phase of training, but these have negative effects early during training, so additive factors are necessary speed up convergence. Although VS loss has shown strong performance in the multi-class setting, it does have hyperparameters which require tuning (e.g., the additive and multiplicative factors on the loss function).

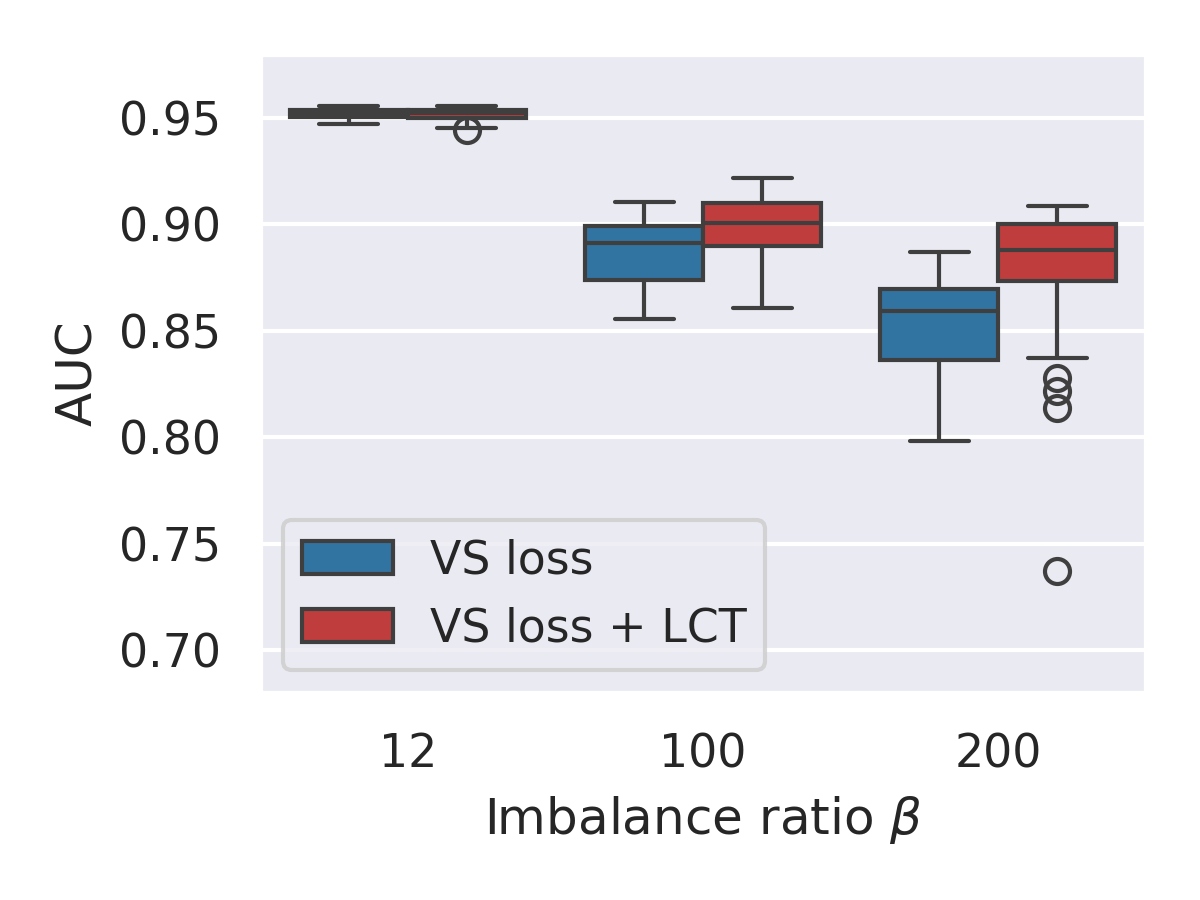

We find that, in the binary case, the effect of these hyperparameters is small and reasonable at moderate imbalance ratios (where is defined as the ratio of majority to minority samples); however, at large imbalance ratios, small differences in these hyperparameters lead to very wide variance in the results (Figure 1). This figure shows that increasing the imbalance ratio not only decreases the AUCs (as expected), but also drastically increases the variance in AUCs obtained by training models with slightly different hyperparameter values.

In this work, we highlight the practical effect of the theoretically-motivated VS loss on the ROC metric, especially for data problems with high imbalance ratios. We propose a method that adapts VS loss to align the training objective more closely with ROC curve optimization. Our method trains a single model on a wide range of hyperparameter values using Loss Conditional Training Dosovitskiy & Djolonga (2020). We find that this method not only reduces the variance in model performance caused by hyperparameter choices, but also improves performance over the best hyperparameter choices since it optimizes for many tradeoffs on the ROC curve. We provide extensive results– both on CIFAR datasets and datasets of real applications derived from Kaggle competitions– at multiple imbalance ratios and across a wide range of hyperparameter choices.

In summary, our contributions are as follows.

-

•

We identify that higher levels of imbalance are not only associated with worse model performance, but also more variance.

-

•

We recognize that training over a range of hyperparameter values can actually benefit classification models that are otherwise prone to overfitting to a single loss function.

-

•

We propose using Loss Conditional Training (LCT) to improve the training regimen for classification models trained under imbalance.

-

•

We show that this method consistently improves performance at high imbalance ratios.

2 Related work

Many solutions have been proposed to address class imbalance, including several specialized loss functions and optimization methods Cao et al. (2019); Rangwani et al. (2022); Buda et al. (2018); Kini et al. (2021); Shwartz-Ziv et al. (2023). Perhaps the simplest of these is to change the class weights in the loss function so that the minority and majority class have “balanced” class weights or class weights which are inversely proportional to the frequency of the class in the training set (e.g., weighted cross-entropy loss Xie & Manski (1989)). Another popular loss function is Focal loss, which down-weights “easy” samples (i.e., samples with high predictive confidence) Lin et al. (2017).

More recently, several loss functions have been proposed which add additive and multiplicative factors to the logits before they are input to the softmax function Cao et al. (2019); Ye et al. (2020); Menon et al. (2021). These aim to enforce larger margins on the minority class and/or calibrate the models. Kini et al. (2021) recognized that many of the previous loss functions for addressing class imbalance can be expressed by one general loss function: Vector Scaling (VS) loss, which gives strong performance on multi-class datasets after hyperparameter tuning Kini et al. (2021). Du et al. (2023) use a global and local mixture consistency loss, contrastive learning, and a dual head architecture.

Additionally, Rangwani et al. (2022) proposed using Sharpness Aware Minimization (SAM) as an alternative optimizer and found that this helped the model escape saddle points in multi-class problems with imbalance Foret et al. (2021). Similarly, Shwartz-Ziv et al. (2023) identify several tweaks that can be made to models—including batch size, data augmentation, specialized optimizers, and label smoothing—which can all improve training.

These methods are predominantly tested on multi-class datasets with overall accuracy on a balanced test set as the primary metric. We instead propose to optimize for the ROC curve in the binary case by training over a family of loss functions. To this end, we use Loss Conditional Training (LCT), which was proposed as a way to train one model to work over several rates in a variable-rate problem Dosovitskiy & Djolonga (2020). LCT was proposed for applications such as neural image compression and variational autoencoders and, to our knowledge, we are the first to use it to improve ROC curves of a classification problem.

3 Problem setup

3.1 Data

In this paper, we focus on binary classification problems with class imbalance. Specifically, let be the training set consisting of i.i.d. samples from a distribution on where and . Then let be the number of samples with label . Without loss of generality, we assume that (i.e., 0 is the majority class and 1 is the minority class) and measure the amount of imbalance in the dataset by .

3.2 Predictor and predictions

Let be a predictor with weights and be ’s output for input . We assume are logits (i.e., a vector of unnormalized scalars where is the logit corresponding to class ) and are the outputs of the softmax function (i.e., a vector of normalized scalars such that ). Then the model’s prediction is

| (1) |

where is a threshold and by default. To find the ROC curve of a classifier , we compute the predictions over a range of values.

3.3 Loss function

For all experiments, we use the Vector Scaling (VS) loss as defined by Kini et al. (2021). This loss is a modification of weighted Cross-entropy loss with two hyperparameters that specify an affine transformation for each logit:

| (2) |

We follow parameterization by Kini et al. (2021) as follows:

| (3) |

where and are hyperparameters set by the user. We add an additional hyperparameter to parameterize each class’ weight as follows

| (4) |

where . In the binary case, we can simplify the loss as follows (see Appendix E for details):

| (5) | ||||

| (6) |

Note that VS loss is equivalent to cross entropy loss when , and . It is also equal to weighted cross entropy loss when , and .

4 Analysis on the effects of hyperparameters

Metric

AUC

0.11

Acc.

0.60

TPR

0.95

4.1 Variance of results

As discussed in Section 3.3, our parameterization of VS loss has three hyperparameters: and . Each of these has theory associated with its effect on training under imbalance. controls the overall balance between the magnitude of the gradients from the minority and majority class. was originally proposed to compensate for the difference between the magnitude of the minority-class logits at training and testing time Ye et al. (2020); however, Kini et al. (2021) showed that it is essential for training beyond zero error and optimizing with is equivalent to a solving Cost-Sensitive Support Vector Machine problem. enforces a larger margin on minority-class samples and has many of its own theoretical properties Cao et al. (2019): in particular, it has been shown to counteract some of the negative effects of training with early in training Kini et al. (2021). Although there are intuitive and theoretical rationale for each of these hyperparameters Kini et al. (2021), it is not clear how sensitive a model’s performance is to their values, especially in terms of ROC curves on a binary dataset.

To understand this better, we trained several models on the same dataset over different combination of hyperparameter values. Specifically, we trained 512 models on the same dataset (CIFAR10 cat vs. dog with ) and varied only their values of and . 222We used all unique combinations of 0.5, 0.6, 0.7, 0.8, 0.9, 0.95, 0.99, 0.999, 0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0, 0.5, 1, 1.5, 2, 2.5, 3, 3.5. Figure 2 shows that there is a wide variance in performance of these models Specifically, these models have AUC values which range from 0.58 to 0.74 with a standard deviation of 0.03. Additionally, Figure 1 shows that this variance becomes more severe as the imbalance ratio increases. This implies that models trained with the VS loss under severe class imbalance are especially sensitive and variable in their results.

4.2 Correlation between hyperparameter values and scalar metrics

We next consider how much of this variance is explained by the hyperparameter values (i.e., is the randomness coming from the hyperparameters themselves or other randomness in training?). If the variance is mostly a result of different hyperparameter choices, then we can reasonably expect the practitioner to search for hyperparameters that generalize well. Otherwise, tuning hyperparameters may not be an effective way to improve performance.

To test this, we fit polynomials between the three hyperparameters () and two scalar metrics: AUC and overall accuracy on a balanced test set when . Although TPR, equivalently recall, is not a good indicator of a model’s overall performance by itself (because it is trivial to increase TPR at the expense of FPR), we also fit a polynomial to TPR to gain insights on the effect of the hyperparameters on an ROC curve. We show results, including the of these regressions, in the three plots in Figure 3. We also fit three (one for AUC, accuracy, and TPR) degree-2 polynomials using all three hyperparameters as features and report values of these polynomials in the table in Figure 3. We choose polynomials of degree two instead of linear regression because we expect that an optimum exists.

We see that no single hyperparameter is strongly correlated with AUC; however, 11% of the variance can be explained by the polynomial fit to all three hyperparameters. This suggests that some variance can be removed by choosing strong hyperparameters; however, much of the variance comes from other sources. Additionally, only 60% of the variance in overall accuracy can be explained by the polynomial with all three hyperparameters. While this is significantly better than AUC’s , it is still far from perfect. Thus, VS Loss hyperparameter values do not affect performance metrics in a very predictable way. It would be preferable to reduce the variance of VS Loss over performing extensive hyperparameter tuning.

4.3 Connection between hyperparameter values and softmax outputs

The third plot in Figure 3 shows that is strongly correlated with TPR when the threshold (). To understand this, recall that affects the additive term on the logits in Equations 2 and 3. Specifically, increasing enforces a larger margin on the minority class. In general, this will lead to increasing the softmax scores of the minority class at inference time, which is similar to post-hoc VS calibration Zhao et al. (2020). Thus, when is constant, increasing will likely cause an increase in TPR.

We can also visualize the effect of by considering the set of “break-even” logits, which are defined as the set of points of where the loss is equal whether the sample has label 0 or 1 (i.e., the points of such that ). With regular Cross-entropy, this set is . Assume instead that we keep , but have arbitrary values for , then the equation for the set of break-even points becomes . In other words, varying is equivalent to shifting the set of break-even points (and the rest of the loss landscape) by .333Varying also shifts the loss landscape in a similar way for any fixed and . See Appendix G for details.. Figure 4 visualizes this.

In addition, and also have well-understood effects on the logit outputs. Appendices G and H include a general equation for the set of break-even points and more loss contours. Just as we saw in the example with , different combinations of hyperparameters have different effects on how is scaled, which in turn affects their softmax scores. Thus, training with different values of VS Loss hyperparameters corresponds to optimizing for different tradeoffs of TPR and FPR.

5 Optimizing for ROC Curves via Loss Conditional Training

Recall that ROC curves show the performance of the model in terms of TPR for all possible FPRs and vice versa. We saw in Section 4.3 that training with different combinations of hyperparameters corresponds to optimizing for different tradeoffs of TPR and FPR. Therefore, training one model over a range of hyperparameter values is a proxy for optimizing over a range of the ROC curve. Using this intuition, we design a system which optimizes one model over a range of VS loss hyperparameter values via loss conditional training (LCT).

5.1 Loss Conditional Training (LCT)

Dosovitskiy & Djolonga (2020) observed the computational redundancy involved in training separate models with slightly different loss functions, such as neural image compression models with different compression rates. They proposed loss conditional training (LCT) as a method to train one model to work over a family of losses.

Let be a vector which parameterizes a loss function. For example, we can parameterize VS loss by . Then let be the family of loss functions parameterized by where is the length of . In our example, is the set of all possible VS loss functions obtained by different parameter values for .

Normally, training finds the weights on a model which minimize a single loss function (i.e., we optimize for a single combination of values in VS loss) as shown below.

| (7) |

LCT instead optimizes over a distribution of values as shown below.

| (8) |

LCT is implemented on Deep Neural Network (DNN) predictors by augmenting the network to take an additional input vector along with each data sample . During training, is sampled from with each sample and is used in two ways: 1) as an additional input to the network and 2) in the loss function for the sample. During inference, the model takes as input a along with the sample and outputs the appropriate prediction.

In order to condition the model on , the DNN is augmented with Feature-wise Linear Modulation (FiLM) layers Perez et al. (2018). These are small neural networks that take the conditioning parameter as input and output a and , used to modulate the activations channel-wise based on the value of . Specifically, suppose a layer in the DNN has an activation map of size . In LCT, we transform each activation by and as follows: where both and are vectors of size , and “” stands for element-wise multiplication.

5.2 Details about implementing LCT for classification

We define our family of loss functions using VS loss. We try several choices for , including and . In the first three cases, we set the hyperparameters excluded from to constants (as is done in regular VS loss). We find that is especially strong at improving the average performance and reducing the variance in performance (Figure 8), which is supported by our insights in Figure 3 and Section 4.3. Thus, for most of our experiments, we use .

For each mini-batch, we draw one . This is done by independently sampling each hyperparameter in from a distribution that has a linear density over range . In this distribution, the user specifies , and the height of the probability density function (pdf) at , . The height at , , is then found to ensure the area under the pdf is 1. Unlike the triangular distribution, this general linear distribution does not require the pdf to be 0 at either endpoint and, unlike the uniform function, it does not require the slope of the pdf to be 0.444Appendix D contains more details about the linear distribution. We use this general distribution as a way to experiment with different distributions.

Although the value of at inference time affects the TPR and FPR when , we observe that the ROC curves are almost identical for all values of in the range the model was trained on (see Appendix C). Thus, we evaluate LCT models at one and find their ROC curve from this output.

6 Experiments

6.1 Experimental setup

Methods. For each dataset and imbalance ratio , we train 48 models with the regular VS loss, varying their hyperparameter values. We also train 48 models with LCT applied to VS loss where (VS Loss + LCT). We use , for both methods (note that we set to constants when in LCT). We use for VS loss, and with for VS loss + LCT. We evaluate with . To apply LCT, we augment the networks with one FiLM block after the final convolutional layer and before the linear layer. This block is comprised of two linear layers with 128 hidden units. Specifically, the first layer takes one input and outputs 128 hidden values and the second layer outputs 64 values which are used to modulate the 64 channels of convolutional activations (total of parameters if is a scalar).

Datasets. We experiment on both toy datasets derived from CIFAR10/100 and more realistic datasets derived from Kaggle competitions. CIFAR10 cat/dog consists of the cat and dog classes from the CIFAR10 dataset. We also show results for all pairs of CIFAR10 classes in Section 6.3, but find the cat/dog pair is particularly well-suited for additional experiments since it is a challenging classification problem. CIFAR100 household electronics vs. furniture was proposed as a binary dataset with imbalance by Wang et al. (2016). Each class contains 5 classes from CIFAR100: electronics contains clock, computer keyboard, lamp, telephone and television, and furniture contains bed, chair, couch, table and wardrobe. For all CIFAR experiments, we split the data according to their train and test splits. Kaggle Dogs vs. Cats contains 25,000 images of dogs and cats from the Petfinder website Cukierski (2013). We split the data so that each class has 1,000 validation samples (a 92/8 train/validation split). Finally, SIIM-ISIC Melanoma is a classification dataset, which was designed by the Society for Imaging Informatics in Medicine (SIIM) and the International Skin Imaging Collaboration (ISIC) for a Kaggle competition Zawacki et al. (2020). This is a binary dataset where 8.8% of the samples belong to the positive (melanoma) class (i.e., ). We follow the procedure of Fang et al. (2023) and combine the 33,126 and 25,331 images from 2020 and 2019 respectively and split the data into an 80/20 train/validation split. Table 9 outlines the sizes of these. We subsample the minority class to obtain various imbalance ratios . Specifically, we test the CIFAR datasets at , the Kaggle datasets at .

Model architectures and training procedure. We use two model architectures and training procedures, following the literature for each type of dataset. For CIFAR10/100 data, we follow Kini et al. (2021) and use a ResNet-32 model architecture. We find that LCT takes longer to train, so we train for 500 epochs instead of 200 (note: training the baseline models longer does not significantly alter performance and we do this for consistency). We train these models from scratch using an initial learning rate of 0.1 and decreasing this to and at 400 and 450 epochs respectively. For the Kaggle datasets, we follow Shwartz-Ziv et al. (2023) and use ResNext50-32x4d with weights pre-trained on ImageNet. We train the Melanoma dataset for 10 epochs, following the learning rates and weight decays of Fang et al. (2023) and train the Kaggle Dogs vs. cats models for 30 epochs, following the learning rates and weight decays of other finetuning tasks in Fang et al. (2023). For all experiments we use a batch size of 128 and gradient clipping with maximum norm equal to 0.5. Additionally, with the exception of Figure 8, we use Stochastic Gradient Descent (SGD) optimization with momentum=0.9. We run all experiments on A5000 GPUs.

6.2 VS Loss with and without LCT

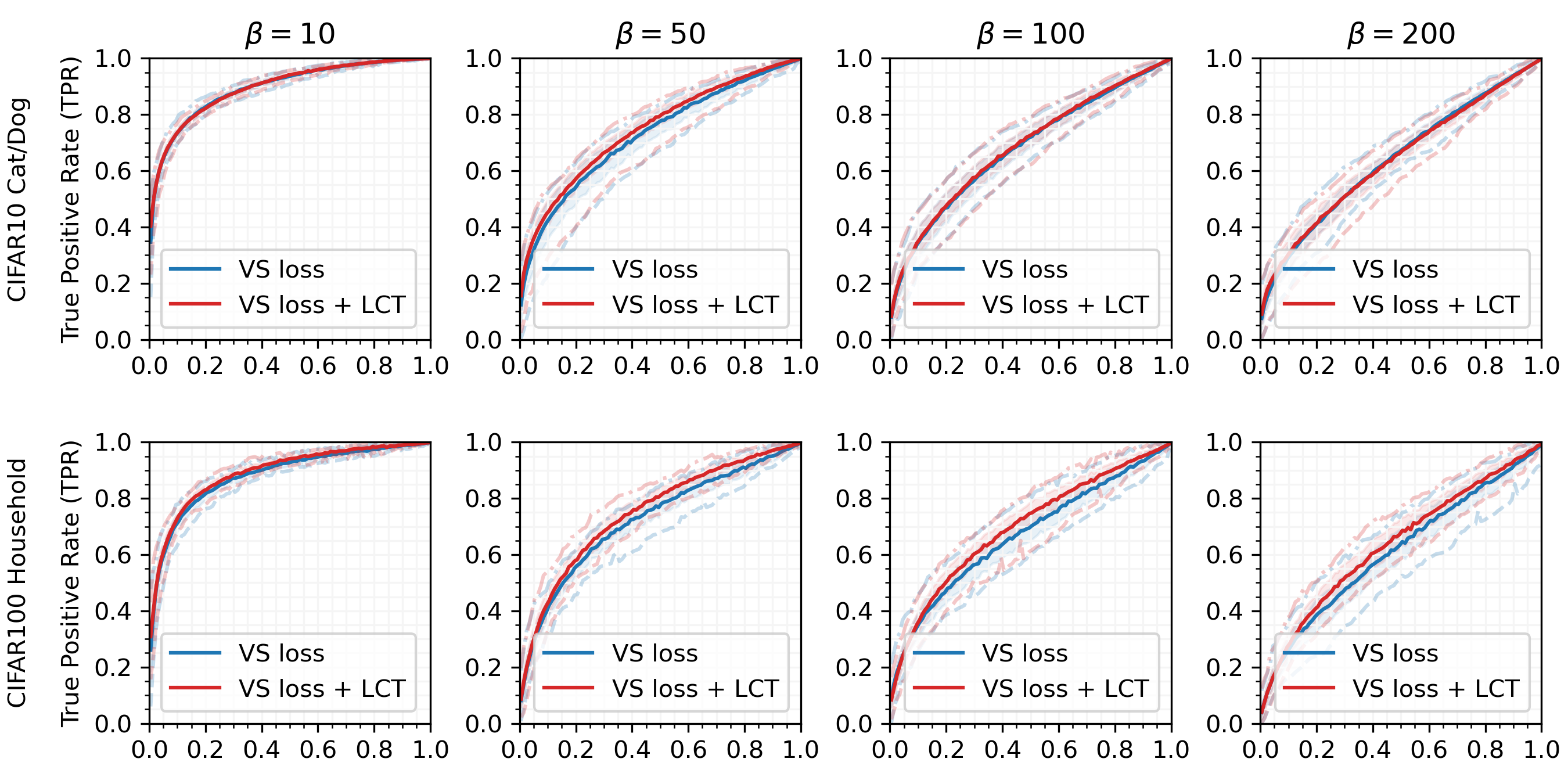

Figures 5 and 6 compare the performance of the VS loss and VS loss + LCT methods on all four datasets, each at multiple imbalance ratios. For each method, we find the mean, min, max, and standard deviation of the 48 models trained with that method. We calculate these values across false positive rates (FPRs). We also show the distribution of AUCs on the SSIM-ISIC Melanoma dataset in Figure 1.

Figure 5 shows that at moderate imbalance ratios (), there is very little variation in the ROC curves obtained by models trained with different hyperparameters. Here the two methods are essentially tied in their performance. However, at high imbalance ratios (Figures 5 and 6), the variance in ROC curves becomes much more drastic. Here LCT consistently outperforms the Baseline method. Specifically, LCT improves or ties the max and mean of the ROC curves in all cases, with a large gap in improvement for Melanoma at .

6.3 Evaluation over all CIFAR10 pairs

For a more comprehensive evaluation, we compare the results of VS loss with and without LCT on all 45 pairs of classes chosen from the 10 CIFAR10 classes. We train each method on each of these binary datasets using 48 different hyperparameter values. We then analyze the aggregated performance of these models by AUC. For each binary dataset, we find the mean, maximum, minimum, and standard deviation of the AUCs over the hyperparameter values. We then compare Baseline (VS loss) and LCT (VS loss + LCT) for each of these metrics. For example, let and be the maximum AUCs achieved on dataset by LCT and Baseline respectively. Then we obtain 45 of these values for each method (one for each dataset ). We then analyze these using a paired t-test and report results in Table 1. The table shows that LCT improves performance, increasing the min and mean AUC in nearly all cases, as well as the max AUC in most cases. It also reduces the standard deviation of AUC values. This shows that LCT significantly outperforms Baseline on a comprehensive set of datasets.

| # LCT | # Base. | Avg. diff | P-value | |

|---|---|---|---|---|

| Max | 37 | 8 | 0.004 | 9.1e-03 |

| Mean | 45 | 0 | 0.010 | 3.9e-17 |

| Min | 43 | 2 | 0.027 | 2.6e-13 |

| Std. dev. | 2 | 43 | -0.005 | 3.2e-12 |

6.4 LCT vs. SAM

Previous work has shown that Sharpness Aware Minimization (SAM) is effective at optimizing imbalanced datasets in the multi-class setting Foret et al. (2021); Rangwani et al. (2022). SAM searches for parameters that lie in neighborhoods of uniformly low loss and usees a hyperparameter to define the size of these neighborhoods. Rangwani et al. (2022) shows that SAM can effectively escape saddle points in imbalanced settings by using large values for (i.e., ). We, however, find that using SAM in this way does not translate to improved performance on a binary dataset. In Figure 8, we compare the performance of the models across several hyperparameter values . We find that, unlike the multi-class case, in the binary case the best is quite small (i.e., ). While training with SAM and can produce some very strong results, this also exacerbates the amount of variance in the performance. LCT with SGD gives models which are better on average and require less tuning. Note that larger values are absolutely detrimental to training in this setting and lead to models which are effectively naive classifiers. We also tried combining LCT and SAM with and found this to be less effective than training with LCT and SGD.

6.5 Choice of

In this section, we compare different options for which hyperparameters in VS loss to use as in LCT. We consider setting to each of the three hyperparameters (using constant values for the other two). We also consider setting . Figure 8 compares these options and shows that achieves a good compromise between improving the AUC of the models and reducing the variance of results. Furthermore, this choice of follows our insights from Section 4.

7 Conclusion

In conclusion, we analyze the theoretically well-studied Vector Scaling (VS) loss, and identify that, in practice, its performance exhibits significant variability for binary class imbalance problems with severe imbalance. We mitigate this problem by training over a family of loss functions. We find that this consistently both improves the ROC curves and reduces the model’s sensitivity to hyperparameter choices. This improvement comes from the fact that training over a family of loss functions is a proxy for optimizing along different TPR and FPR tradeoffs. Areas of future work include studying how to adapt this method to work on multi-class classification problems under imbalance and regression tasks.

References

- Buda et al. (2018) Mateusz Buda, Atsuto Maki, and Maciej A Mazurowski. A systematic study of the class imbalance problem in convolutional neural networks. Neural Netw., 106:249–259, October 2018.

- Cao et al. (2019) Kaidi Cao, Colin Wei, Adrien Gaidon, Nikos Arechiga, and Tengyu Ma. Learning imbalanced datasets with label-distribution-aware margin loss. In Advances in Neural Information Processing Systems, 2019.

- Cassidy et al. (2022) Bill Cassidy, Connah Kendrick, Andrzej Brodzicki, Joanna Jaworek-Korjakowska, and Moi Hoon Yap. Analysis of the ISIC image datasets: Usage, benchmarks and recommendations. Med. Image Anal., 75:102305, January 2022.

- Cukierski (2013) Will Cukierski. Dogs vs. cats, 2013. URL https://kaggle.com/competitions/dogs-vs-cats.

- Dosovitskiy & Djolonga (2020) Alexey Dosovitskiy and Josip Djolonga. You only train once: Loss-conditional training of deep networks. In International Conference on Learning Representations, 2020. URL https://openreview.net/forum?id=HyxY6JHKwr.

- Du et al. (2023) Fei Du, Peng Yang, Qi Jia, Fengtao Nan, Xiaoting Chen, and Yun Yang. Global and local mixture consistency cumulative learning for long-tailed visual recognitions. In Conference on Computer Vision and Pattern Recognition 2023, 2023. URL https://arxiv.org/abs/2305.08661.

- Egan (1975) James P. Egan. Signal Detection Theory and ROC Analysis. In Series in Cognition and Perception. Academic Press, New York. 01 1975.

- Fang et al. (2023) Alex Fang, Simon Kornblith, and Ludwig Schmidt. Does progress on ImageNet transfer to real-world datasets? January 2023.

- Flach & Kull (2015) Peter A Flach and Meelis Kull. Precision-Recall-Gain curves: PR analysis done right. Advances in Neural Information Processing Systems, 28:838–846, 2015.

- Foret et al. (2021) Pierre Foret, Ariel Kleiner, Hossein Mobahi, and Behnam Neyshabur. Sharpness-aware minimization for efficiently improving generalization. In International Conference on Learning Representations, 2021. URL https://openreview.net/forum?id=6Tm1mposlrM.

- Kini et al. (2021) Ganesh Ramachandra Kini, Orestis Paraskevas, Samet Oymak, and Christos Thrampoulidis. Label-imbalanced and group-sensitive classification under overparameterization. In A. Beygelzimer, Y. Dauphin, P. Liang, and J. Wortman Vaughan (eds.), Advances in Neural Information Processing Systems, 2021. URL https://openreview.net/forum?id=UZm2IQhgIyB.

- Lin et al. (2017) Tsung-Yi Lin, Priya Goyal, Ross Girshick, Kaiming He, and Piotr Dollár. Focal loss for dense object detection. August 2017.

- Menon et al. (2021) Aditya Krishna Menon, Sadeep Jayasumana, Ankit Singh Rawat, Himanshu Jain, Andreas Veit, and Sanjiv Kumar. Long-tail learning via logit adjustment. In International Conference on Learning Representations, 2021. URL https://openreview.net/forum?id=37nvvqkCo5.

- Perez et al. (2018) Ethan Perez, Florian Strub, Harm de Vries, Vincent Dumoulin, and Aaron Courville. Film: Visual reasoning with a general conditioning layer. Proceedings of the AAAI Conference on Artificial Intelligence, 32(1), Apr. 2018.

- Rangwani et al. (2022) Harsh Rangwani, Sumukh K Aithal, Mayank Mishra, and Venkatesh Babu Radhakrishnan. Escaping saddle points for effective generalization on class-imbalanced data. In Advances in Neural Information Processing Systems, 2022. URL https://openreview.net/forum?id=9DYKrsFSU2.

- Rotemberg et al. (2020) Veronica Rotemberg, Nicholas Kurtansky, Brigid Betz-Stablein, Liam Caffery, Emmanouil Chousakos, Noel Codella, Marc Combalia, Stephen Dusza, Pascale Guitera, David Gutman, Allan Halpern, Harald Kittler, Kivanc Kose, Steve Langer, Konstantinos Lioprys, Josep Malvehy, Shenara Musthaq, Jabpani Nanda, Ofer Reiter, George Shih, Alexander Stratigos, Philipp Tschandl, Jochen Weber, and H. Peter Soyer. A patient-centric dataset of images and metadata for identifying melanomas using clinical context, 2020.

- Shwartz-Ziv et al. (2023) Ravid Shwartz-Ziv, Micah Goldblum, Yucen Lily Li, C. Bayan Bruss, and Andrew Gordon Wilson. Simplifying neural network training under class imbalance. In Thirty-seventh Conference on Neural Information Processing Systems, 2023. URL https://openreview.net/forum?id=iGmDQn4CRj.

- Wang et al. (2016) Shoujin Wang, Wei Liu, Jia Wu, Longbing Cao, Qinxue Meng, and Paul J. Kennedy. Training deep neural networks on imbalanced data sets. In 2016 International Joint Conference on Neural Networks (IJCNN), pp. 4368–4374, 2016. doi: 10.1109/IJCNN.2016.7727770.

- Xie & Manski (1989) Yu Xie and Charles F Manski. The logit model and Response-Based samples. Sociol. Methods Res., 17(3):283–302, 1989.

- Ye et al. (2020) Han-Jia Ye, Hong-You Chen, De-Chuan Zhan, and Wei-Lun Chao. Identifying and compensating for feature deviation in imbalanced deep learning. January 2020.

- Zawacki et al. (2020) Anna Zawacki, Brian Helba, Jochen Weber George Shih, Julia Elliott, Marc Combalia, Nicholas Kurtansky, NoelCodella, Phil Culliton, and Veronica Rotemberg. Siim-isic melanoma classification, 2020. URL https://kaggle.com/competitions/siim-isic-melanoma-classification.

- Zhao et al. (2020) Yuan Zhao, Jiasi Chen, and Samet Oymak. On the role of dataset quality and heterogeneity in model confidence. arXiv preprint arXiv:2002.09831, 2020.

Appendix A Binary classification metrics

In the binary case, we assume that the minority class is the positive class (i.e., the class with label a label of one). For a given classifier, we can categorize the samples in terms of their actual labels and the classifier’s predictions as shown in Table 2. The remainder of this section defines several metrics used for binary classification.

| Predicted Positive | Predicted Negative | |

|---|---|---|

| Actually Positive | True Positives (TP) | False Negatives (FN) |

| Actually Negative | False Positives (FP) | True Negatives (TN) |

A.1 True Positive Rate (TPR) = Minority-class accuracy = Recall

The True Positive Rate (TPR) is defined as the proportion of actual positive samples which are predicted to be positive. Note that in the binary case, this is equivalent to both the minority-class accuracy and the recall.

| (9) |

| Predicted Positive | Predicted Negative | |

|---|---|---|

| Actually Positive | True Positives (TP) | False Negatives (FN) |

| Actually Negative | False Positives (FP) | True Negatives (TN) |

A.2 False Positive Rate (FPR) = 1 - Majority-class accuracy = 1 - TNR

The False Positive Rate (FPR) is defined as the proportion of actual negative samples which are predicted to be positive. Note that in the binary case, this is equivalent to 1 - the majority-class and 1 - the True Negative Rate (TNR).

| (10) |

| Predicted Positive | Predicted Negative | |

|---|---|---|

| Actually Positive | True Positives (TP) | False Negatives (FN) |

| Actually Negative | False Positives (FP) | True Negatives (TN) |

A.3 Precision

The precision is defined as the proportion of predicted positive samples which are actually positive.

| (11) |

| Predicted Positive | Predicted Negative | |

|---|---|---|

| Actually Positive | True Positives (TP) | False Negatives (FN) |

| Actually Negative | False Positives (FP) | True Negatives (TN) |

A.4 Overall Accuracy

Perhaps the simplest metric is the overall accuracy of the classifier. This is simply the proportion of samples which are correctly classified (regardless of their class). If the test set is imbalanced, a trivial classifier which predicts all samples as negative will achieve a high overall accuracy. Specifically, the overall accuracy of this classifier will be the proportion of negative samples or . In class imbalance literature, the overall accuracy is often reported on a balanced test set. In this case, the accuracy is an average accuracy on the positive and negative classes.

| (12) |

| Predicted Positive | Predicted Negative | |

|---|---|---|

| Actually Positive | True Positives (TP) | False Negatives (FN) |

| Actually Negative | False Positives (FP) | True Negatives (TN) |

A.5 and

In some problems, such as information retrieval, there is only one class of interest (the positive class) and the true negatives can vastly outnumber the other three categories. In this case, a method’s effectiveness is determined by 1) how many positive samples it correctly predicted as positive (i.e., the recall) and 2) how many samples are actually positive out of all the samples it predicted as positive (i.e., the precision). The metric measures how well a method can achieve both of these goals simultaneously. Specifically, the measure is the harmonic mean between the precision and recall and is defined as

| (13) |

The measure assumes that the precision and recall have equal weights; however, sometimes problems have different costs for recall and precision. These asymmetric costs can be addressed by the more general metric. Let be the ratio of importance between recall and precision, then is defined as 555Note that this differs from the which we defined in the main body.,

| (14) |

| Predicted Positive | Predicted Negative | |

|---|---|---|

| Actually Positive | True Positives (TP) | False Negatives (FN) |

| Actually Negative | False Positives (FP) | True Negatives (TN) |

A.6 G-mean

The Geometric mean (G-mean or GM) is the geometric mean of the TPR (i.e., sensitivity) and TNR (i.e., specificity) and is defined as follows,

| (15) | ||||

| (16) |

| Predicted Positive | Predicted Negative | |

|---|---|---|

| Actually Positive | True Positives (TP) | False Negatives (FN) |

| Actually Negative | False Positives (FP) | True Negatives (TN) |

A.7 ROC curves and AUROC

Of course, the number of true positives and true negatives are a trade-off and any method can be modified to give a different combination of these metrics. Specifically, the decision threshold can be modified to give any particular recall. Receiver Operating Characteristic (ROC) curves take this in consideration and show the trade-off of true positive rates and false positive rates over all possible decision thresholds. The area under the ROC curve (AUROC) is calculated using the trapezoid rule for integration over a sample of values and is a commonly used metric Buda et al. (2018).

A.8 Precision-Recall Curves and AUPRC

Similarly, the precision-recall curves show the tradeoff of the recall (on the x-axis) and the precision (on the y-axis) over all possible thresholds. The area under the Precision-Recall Curve (AUPRC) is also calculated using the trapezoid rule.

Appendix B Number of samples

| Train set | Test set | |||||

| Dataset | # maj. | # min. | # min. | # min. | # maj. | # min. |

| () | () | () | ||||

| CIFAR10 pair | 5,000 | 500 | 50 | 25 | 1,000 | 1,000 |

| Household | 2,500 | 250 | 25 | 13 | 500 | 500 |

| Dogs vs. Cats | 11,500 | 1,500 | 150 | 75 | 1,000 | 1,000 |

| SIIM-ISIC Melanoma | 41,051 | 4,071* | 410 | 205 | 12,300 | 1,035 |

Appendix C Effect of evaluation

Appendix D Linear probability density function

We use a linear probability distribution to sample from an interval . Unlike the triangular distribution, the probability distribution function (PDF) of this distribution can be nonzero at both a and b. Figure 10 shows several examples of this distribution when .

To implement this distribution, the user first selects the domain and the height of PDF at , . The function then calculates so that the area under the PDF equals one. To sample from this distribution, we draw from a uniform(0,1) distribution and use the inverse cumulative distribution function (CDF) of the linear distribution to find a value of .

Note that this is the uniform distribution on when . Additionally, this is a triangular distribution when or .

Appendix E Simplifying VS Loss

Recall the definition of VS loss.

| (17) |

Additionally, recall that and are parameterized by and as follows.

| (18) | ||||

| (19) |

Consider a binary problem with an imbalance ratio . Then ’s and ’s are defined as follows

| (20) | |||||

| (21) |

We can simplify as follows. First plug in the ’s and ’s:

| (23) |

Then rewrite as and cancel out from the numerator and denominator.

| (24) | ||||

| (25) | ||||

| (26) |

Then use the following two facts to a) simplify the term inside the log and b) rewrite the log term:

| (27) | |||

| (28) |

| (29) |

Finally, we move the term outside of the exponent

| (30) |

We follow a similar process for and get

| (31) |

Appendix F Partial derivatives of VS-Loss with respect to

Then the partial derivatives of the loss when with respect to and are as follows.

| (32) | |||

| (33) | |||

| (34) |

In particular,

| (35) |

and for balanced classes

Appendix G Full derivation for break-even points

With VS loss defined in Equations 5 and 6, denote , setting gives us

| (36) |

Consider the function . Since , it is clear that is a continuous and monotonically decreasing function. Also, as , and as . These imply that has exactly one unique solution, depended on . Let be the solution such that .

Notice that when , the unique solution = 0. We can also see that if , we have , and since is monotonically decreasing, we know that the solution should be strictly larger than 0. Otherwise, (i.e., ), we have .

Suppose we have found the such that , this means that the break-even point should have . Compared with the regular cross-entropy break-even point, where , we are adding biases here by the terms , , and , dependent on , respectively. These terms change the margin between and , which poses a bias for samples on the break-even point. Specifically, for a break-even point sample (a sample that the trained model is totally confused with), the model still prefers a positive output with if , , and/or , which translate to , , and/or , respectively.

G.1 Numerical Example

Since VS loss introduces a bias term to during training, the model will learn to consistently shrink its and/or increase its outputs to match this bias. Specifically, in the case , but arbitrary , the will re-calibrate to give output where is arbitrary to samples it is completely unsure about. Consequently, the softmax output associated with the break-even points will be larger. For example, when (regular cross-entropy), the softmax associated with the break-even points is 0.5. When , the softmax score associated with the break-even points is (e.g., 0.99 when and ).

Appendix H Contour plots of effect of on VS loss

In this section we consider the effect of hyperparameter values for on the values of the loss functions over a set of for a binary problem. Specifically, we consider the difference of the loss when the label is 1 and 0 (i.e., ). This gives insights into how the model prioritizes different classes over the domain of . We plot this for several combinations of hyperparameter values in Figure 11. In particular, we are interested in the set of “break-even” points or the points of where because this gives insights into the thresholds which a model with that loss function optimizes over.

H.1 Regular cross-entropy

First consider regular cross-entropy loss (i.e., ). Here when . This is shown on the top left column of Figure 11, where the line corresponding to is white, indicating that the losses are equal along this line. If (i.e., ), then the difference of the losses is negative (i.e., ). This makes sense since the model should receive a bigger loss for an incorrect prediction. Notice that this plot is symmetric along the line .

H.2 Varying (Weighted cross-entropy loss)

Next consider VS loss with arbitrary weights , but fixed . Note that this is equivalent to weighted cross-entropy with class 1 having weight and class 0 having weight . We include contour plots of this case in the top row of Figure 11 and simplify the equation of equal losses below. Unfortunately there is no analytic solution in this case, but we provide more insights about this case in Subsection H.5.

| (37) | ||||

| (38) | ||||

| (39) | ||||

| (40) | ||||

| (41) |

H.3 Varying

Next consider VS loss with with arbitrary values of , but fixed . Recall from the previous section that for a binary problem with an imbalance ratio , and . Then

| (42) | ||||

| (43) | ||||

| (44) |

Thus changing equates to rotating the line of break-even points as seen on the middle row of Figure 11.

H.4 Varying

Finally consider VS loss with with arbitrary values of , but fixed . Recall from the previous section that for a binary problem with an imbalance ratio , and . Then

| (46) | ||||

| (47) | ||||

| (48) | ||||

| (49) | ||||

| (50) |

Thus changing equates to shifting the line of break-even points as seen on the bottom row of Figure 11.

H.5 as a function of softmax scores

Recall that each model outputs a vector of logits and that . In the binary case, this is equivalent to computing the softmax score for and thresholding this score at 0.5 as shown below.

| (52) | ||||

| (53) |

Because 0-1 loss is not differentiable, models are instead optimized for a proxy function like cross-entropy. In traditional cross-entropy (i.e., ), the loss function is symmetric such that . Figure 12 shows how this threshold varies for different values of . As increases, the line becomes steeper while becomes less steep and the value of where the losses intersect increases. Note that the point of intersection is

| (54) |

Additionally, note that changing or does not change the point where the losses intersect.