
Optimization of End-to-End AoI in Edge-Enabled Vehicular Fog Systems: A Dueling-DQN Approach

Abstract

In real-time status update services for the Internet of Things (IoT), information must be disseminated promptly to remain relevant; otherwise, it becomes outdated. The age of information (AoI) is a metric that quantifies the freshness of information. Existing works on AoI optimization primarily focus on the transmission time from the information source to the monitor and neglect the transmission time from the monitor to the destination. This oversight significantly affects information freshness and, in turn, decision-making accuracy. To address this gap, we designed an edge-enabled vehicular fog system that lightens the computational burden on IoT devices, and we examined how information transmission and request-response times influence end-to-end AoI. As a solution, we proposed a dueling deep Q-network (dueling-DQN) algorithm based on deep reinforcement learning (DRL) and compared its performance with a DQN policy and with analytical results. Our simulation results demonstrate that the proposed dueling-DQN algorithm outperforms both DQN and the analytical methods, highlighting its effectiveness in improving information freshness in real-time systems. By considering the complete end-to-end transmission process, our optimization approach can improve decision-making performance and overall system efficiency.

Index Terms:

Information freshness, AoI, IoT, vehicular fog, edge computing, DQN.

I Introduction

The integration of wireless communications with IoT devices has been driven by the increasing demand for real-time status updates [1]. Real-time status updates have diverse applications, ranging from optimizing energy consumption in smart homes [2] to predicting and managing forest fires by monitoring temperature and humidity [3]. They also assist drivers in intelligent transportation systems by providing data on a vehicle’s speed and acceleration [4]. To measure the timeliness of this information, a metric called AoI has been introduced [5]. AoI measures the time elapsed since the generation of the most recently received update. It is particularly important in IoT applications that depend on timely information, e.g., monitoring the status of a system or estimating a Markov process [6]. Unlike conventional metrics such as throughput and latency, AoI accounts for both the generation time of the information and its transmission delay [7].

IoT systems are crucial in processing and transmitting real-time status updates [8, 9]. However, the constrained processing capabilities of IoT devices make it challenging to handle real-time status updates that require computationally intensive processing. This challenge can be mitigated by offloading real-time data to edge computing [10, 11] and vehicular fog computing (VFC) resources, ensuring rapid processing and preserving information freshness. Edge computing, rooted in the cloud virtualization concept of the European Telecommunications Standards Institute (ETSI), positions servers near cellular base stations to process data closer to users, ensuring faster response times [12]. VFC optimizes vehicular communication and computational resources by using vehicles as the underlying infrastructure [13]. Through an edge server, vehicles can become intelligent entities that interact with their surroundings via vehicle-to-infrastructure (V2I) communication [14]. VFC improves intelligent transportation systems (ITS) by processing real-time traffic information, providing accident alerts, facilitating path navigation, and more [15].

Several studies have optimized AoI based on the transmission time of packets from the source to the monitor [16, 17, 18]. However, as illustrated in Figure 1, the time taken to transmit processed packets from the monitor to the destination has been overlooked. This transmission time significantly influences the freshness of the information observed at the destination and thereby the system’s ability to make accurate decisions. When outdated information reaches its intended destination, it unavoidably degrades the quality and reliability of the decisions drawn from it, potentially compromising safety and security. Additionally, the limited processing capabilities of IoT devices for computationally intensive packets can lead to stale information being delivered to the destination.

Consider an example from intelligent transportation systems [4], where the aim is to keep drivers well-informed about the state of their environment, including updates on traffic congestion, accident alerts, emergency messages, and earthquake alerts. The information provided by these services changes frequently over time. Consequently, timely updates [19, 20] and efficient dissemination are crucial to ensure that this information is delivered while still fresh. Otherwise, outdated information loses its usefulness, particularly in dynamically changing environments.

We designed an edge-enabled vehicular fog system, as shown in Figure 1, to reduce the computational load of processing packets collected from IoT devices. The system offloads packets to an edge server and then, via wireless communication, to a vehicular fog node. For this system, we formulated an optimization problem for the average end-to-end AoI and proposed a DRL-based dueling-DQN algorithm [21] to solve it. The key technical contributions are summarized below:

1. We designed an edge-enabled vehicular fog environment and formulated and analyzed the impact of packet transmission time and request-response time on the average end-to-end AoI.

2. We proposed a DRL-based dueling-DQN algorithm to solve the resulting optimization problem.

3. We validated the analytical results by implementing the proposed approach and constructing neural networks in simulation experiments using TensorFlow [22].

4. We compared the performance of the proposed algorithm against both the standard DQN algorithm and the analytical results.

The rest of the paper is organized as follows. Section II provides a review of related works. Section III elucidates the system models and analyses of AoI. In Section IV, the paper details the problem formulations and introduces the proposed dueling-DQN solution. Section V delves into the simulation and results, while Section VI concludes this work.

II Related Works

This section reviews related works on AoI optimization. We categorize these works into three groups, which are described in the following subsections and summarized in Table I.

II-1 AoI Optimization using Queue Models

Yates et al. [23] examine a simple queue in which several sources send updates to a monitor. To optimize AoI, they analyze first-come-first-served and two types of last-come-first-served queueing disciplines using stochastic hybrid systems (SHS). Chao et al. [17] developed an IoT system incorporating multiple sensors to assess information freshness and optimized the peak AoI (PAoI). Elmagid et al. [24] considered a multi-source updating system with energy harvesting (EH) and used SHS to analyze several queueing disciplines for delivering status updates about multiple sources to a monitor.

II-2 AoI Optimization based on Edge-enabled Models

Qiaobin et al. [18] employed a zero-wait policy and used mobile edge computing (MEC) to examine the AoI of computation-intensive messages. Ying et al. [25] studied a wireless sensor node that harvests RF energy from an energy transmitter to power the offloading of computing tasks to a MEC server. Under the zero-wait policy, the sensor node submits its computing task to the MEC server once its capacitor is fully charged. Zipeng et al. [26] discussed an AoI-centric approach to real-time information dissemination over vehicular social networks (VSNs), using scale-free network theory to model social interactions among autonomous vehicles (AVs).

| Categories | Papers | Target Network | Source | Estimation of AoI | Objectives | Constraint | Approaches | ||

| Single | Multi | Source to Monitor | Monitor to Destinations | ||||||

| AoI Optimization based on Queueing Models | [8] | Simple Queueing Model | ✓ | Average AoI | Queue Capacity | Stochastic Hybrid System (SHS) | |||

| [17] | Tandem Queueing Model | Average PAoI | Min-max Optimization | ||||||

| [24] | General Queueing Model | Average AoI | Energy | SHS | |||||

| AoI Optimization based on Edge-enabled Models | [18] | Mobile Edge Computing | ✓ | Average AoI | Resources | Zero-wait policy | |||

| [25] | IoT Device | Average AoI | Energy | Generating set search-based algorithm | |||||

| [26] | Vehicular Network | ✓ | Average Peak Network AoI (NAoI) | Transmission Capacity | Parametric Optimization Schematic | ||||

| AoI Optimization using RL Approach | [7] | IoT Device | ✓ | Average weighted sum of AoI and energy | Energy | DRL | |||

| [27] | Vehicle to Everything | Average AoI | Resources | DRL(DDPG) | |||||

| [28] | IoT Device | ✓ | Average weighted sum of AoI and energy | Distributed RL | |||||

| [29] | Edge Computing | Average AoI | DQN | ||||||

| [30] | Vehicle Network | AoI-aware radio resource allocation | DRQN | ||||||

| Ours | Edge and Vehicular Fog | ✓ | ✓ | Average end-to-end AoI | RL(dueling-DQN) | ||||

II-3 AoI Optimization using RL Approach

Xie et al. [7] explored an IoT system comprising devices with computing capabilities. A status update can be computed either by the monitor or by the device itself before being transmitted to the destination. The authors aimed to minimize the average weighted sum of AoI and energy consumption and employed DRL to make offloading and scheduling decisions. Zoubeir et al. [27] employed a DRL algorithm based on the deep deterministic policy gradient (DDPG) to reduce the average end-to-end AoI in 5G vehicular networks. Sihua et al. [28] introduced a distributed RL method for optimizing the sampling policy to minimize the weighted sum of AoI and total energy consumption in an IoT system. Lingshan et al. [29] devised a DRL approach for an unmanned aerial vehicle (UAV)-assisted wireless network with radio frequency (RF) wireless power transfer (WPT). They formulated the problem as a Markov decision process with a large state space and introduced a DQN-based strategy to find a near-optimal solution. Xianfu et al. [30] analyzed radio resource management in a vehicle-to-vehicle network within a Manhattan grid, proposing an algorithm that combines DRL with long short-term memory (LSTM) to enable a proactive approach within the network.

The above-mentioned works optimize AoI with respect to the transmission of data packets from the source to the monitor but ignore the time needed to transmit the results from the monitor to the destination. This makes it difficult to meet the real-time packet-processing needs of intelligent applications and the corresponding system performance requirements.

III System Model and Analysis of AoI

In this section, we describe the edge-enabled vehicular fog system model and analyze its AoI. Table II lists the notation used in the AoI analysis and problem formulation.

III-A Edge-enabled Vehicular Fog Model

We consider real-time status updates in an edge-enabled vehicular fog system, as illustrated in Figure 1. The system consists of a set of IoT devices (sources), denoted as , an edge server (monitor), denoted as , and a vehicular fog (destination), denoted as . The packets from the source are generated according to a Poisson process with parameter and served according to independent and identically distributed (i.i.d.) service times with rates and , where and , representing the computational capacities of the edge server and vehicular fog, respectively. The server utilization offered by the th source is , and the total server utilization is . Thus, the updates of the th source compete with the aggregate of the other sources, with , where . The edge server receives updates from the IoT devices and keeps track of the system’s state. The vehicular fog connects to the edge server via a wireless communication channel to request the latest information, and the updated information from the edge server is offloaded to the vehicular fog over that channel. Using the AoI metric, we can assess how recent the status information is at the destination (target).
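The load quantities in this paragraph can be illustrated with a small sketch. It assumes the convention of the multi-source AoI literature [8]: the utilization offered by source i is its arrival rate divided by the service rate, the total utilization is the sum over all sources, and source i competes with the aggregate load of the other sources. The function and variable names are hypothetical, not the paper's notation.

```python
from typing import List, Tuple


def utilization_split(lam: List[float], mu: float) -> List[Tuple[float, float]]:
    """Return (rho_i, rho_minus_i) for every source.

    Assumes rho_i = lam_i / mu and that source i competes with the
    aggregate load of all other sources, rho_minus_i = rho - rho_i.
    """
    if mu <= 0:
        raise ValueError("service rate must be positive")
    rho_i = [l / mu for l in lam]
    rho = sum(rho_i)
    if rho >= 1:
        # An infinite-buffer FCFS server is only stable when rho < 1.
        raise ValueError(f"unstable system: total utilization {rho:.3f} >= 1")
    return [(r, rho - r) for r in rho_i]


# Example: three sources sharing a server that serves 10 packets/s.
for i, (r, r_other) in enumerate(utilization_split([1.0, 2.0, 3.0], mu=10.0)):
    print(f"source {i}: rho_i = {r:.2f}, rho_-i = {r_other:.2f}")
```

The stability check mirrors the requirement that an infinite-buffer server cannot sustain a total load at or above one.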

| Notations | Descriptions |
|---|---|
| | Edge server |
| | Vehicular fog |
| | Set of IoT devices |
| , | Distance from the th IoT device to the edge server and from the edge server to the vehicular fog |
| , | Computational capacities of the edge server and vehicular fog |
| , | Uplink and downlink transmission rates from the th IoT device to the edge server and from the edge server to the vehicular fog |
| | Packet arrival rate from the th IoT device to the edge server |
| | Size of the original packets from the IoT devices |
| | Size of the processed packets from the edge server |
| | Expected inter-arrival time of packets from the IoT devices |
| , | Expected transmission times from the IoT devices to the edge server and from the edge server to the vehicular fog |
| , | Expected waiting times in the transmission queue and the processing queue |
| | Expected processing time of the edge server |
| | Probability that the server is busy |
| | Average end-to-end AoI |

Figure 1 illustrates the structure of queuing systems for packet management policies. Packets are generated from the source device, pushed into an infinite buffer in the transmission system, and then moved to the processing system. Both the transmission and processing systems are formulated as queuing models with infinite buffer sizes. These buffers are termed the transmission queue and the processing queue. An arriving packet will be transmitted to the edge server if the transmission channel is available. However, when the transmission channel is busy, arriving packets wait in the transmission queue. We assume that the server can handle at most one packet at a time, with packets being serviced using a first-come-first-served (FCFS) queuing discipline. When the server is busy, any new arriving packets are placed in the processing queue until the current packet is finished.
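The FCFS transmission-then-processing pipeline described above can be simulated with a Lindley-type departure recursion, in which each packet starts service at the later of its arrival time and the previous packet's departure time. The sketch below assumes exponential service times at both stages (the paper allows general service), and the names `fcfs_departures` and `simulate_tandem` are hypothetical. The downlink stage to the vehicular fog could be appended with one more call to `fcfs_departures`.

```python
import numpy as np


def fcfs_departures(arrivals: np.ndarray, service: np.ndarray) -> np.ndarray:
    """Departure times of a single-server FCFS queue with an infinite buffer.

    Each packet starts service at the later of its arrival time and the
    previous packet's departure time: D_j = max(A_j, D_{j-1}) + S_j.
    """
    departures = np.empty_like(arrivals, dtype=float)
    last = 0.0
    for j in range(len(arrivals)):
        last = max(arrivals[j], last) + service[j]
        departures[j] = last
    return departures


def simulate_tandem(lam: float, mu_tx: float, mu_proc: float, n: int, seed: int = 0):
    """Generation and delivery times through transmission -> processing stages."""
    rng = np.random.default_rng(seed)
    gen = np.cumsum(rng.exponential(1.0 / lam, n))  # Poisson generation times
    after_tx = fcfs_departures(gen, rng.exponential(1.0 / mu_tx, n))
    after_proc = fcfs_departures(after_tx, rng.exponential(1.0 / mu_proc, n))
    return gen, after_proc


gen, recv = simulate_tandem(lam=1.0, mu_tx=2.0, mu_proc=3.0, n=200_000)
# Should be close to 1/(2-1) + 1/(3-1) = 1.5 for M/M/1 stages (Burke's theorem).
print(np.mean(recv - gen))
```

For exponential stages in tandem, Burke's theorem makes the departures of each stage Poisson, so the mean end-to-end system time should approach the sum of the two M/M/1 sojourn times; this gives a quick sanity check for the simulator.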

III-B AoI Analysis in Edge-enabled Vehicular Fog Systems

III-B1 Communication model

The transmission stage comprises two wireless links: from the IoT source to the edge server and from the edge server to the vehicular fog. The size of each packet, together with the achievable transmission rate of its link, determines how long the packet takes to transmit. The transmission rates can be formulated using Shannon’s formula [31] as:

| (1) |

| (2) |

where and are the uplink and downlink transmission rates for the communication from the IoT device to the edge server and from the edge server to the vehicular fog, respectively, is the channel bandwidth, is the channel gain, and are the transmit powers of the IoT device and edge server, respectively, is the noise power spectral density, and is the path loss exponent.
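As a numerical illustration of Equations (1) and (2), the sketch below evaluates a Shannon-capacity rate with distance-based path loss using the Table III values (bandwidth 100 kHz, transmit power 1000 mW, path loss exponent 3, noise density -174 dBm/Hz, distances 3 km and 10 km). The exact form of the paper's equations is not reproduced here; we assume received power = transmit power × channel gain × distance^(-α) with a unit channel gain, and the helper names are hypothetical.

```python
import math


def dbm_to_watt(dbm: float) -> float:
    """Convert a power level in dBm to watts."""
    return 10 ** (dbm / 10) / 1000


def shannon_rate(bandwidth_hz: float, tx_power_w: float, distance_m: float,
                 path_loss_exp: float, noise_psd_dbm_hz: float,
                 channel_gain: float = 1.0) -> float:
    """Achievable rate in bit/s: B * log2(1 + P * g * d^-alpha / (N0 * B)).

    Assumes a simple distance-based path-loss model; channel_gain = 1 is an
    illustrative default, not a value from the paper.
    """
    noise_w = dbm_to_watt(noise_psd_dbm_hz) * bandwidth_hz  # total noise power
    snr = tx_power_w * channel_gain * distance_m ** (-path_loss_exp) / noise_w
    return bandwidth_hz * math.log2(1 + snr)


# Table III values: B = 100 kHz, P = 1000 mW, alpha = 3, N0 = -174 dBm/Hz.
uplink = shannon_rate(100e3, 1.0, 3_000, 3, -174)     # IoT device -> edge server
downlink = shannon_rate(100e3, 1.0, 10_000, 3, -174)  # edge server -> vehicular fog
print(f"uplink ~ {uplink / 1e6:.2f} Mbit/s, downlink ~ {downlink / 1e6:.2f} Mbit/s")
```

The downlink rate comes out lower because the 10 km link incurs more path loss than the 3 km link.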

III-B2 Time-Average Age Analysis


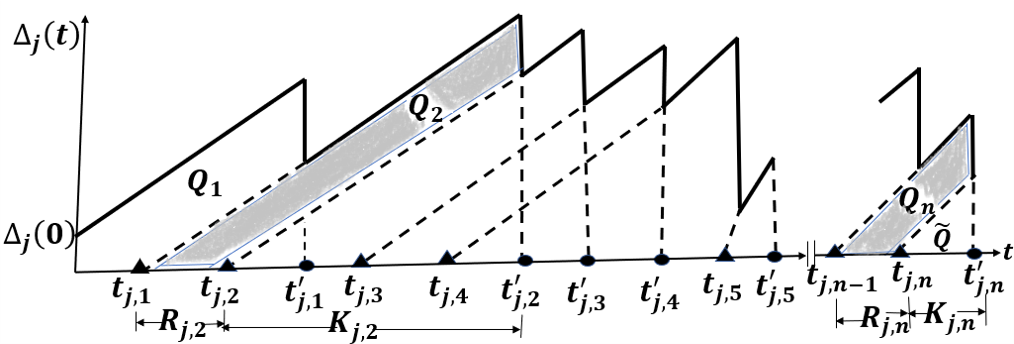

Figure 2 shows a sample variation in age as a function of time for the th source at the destination. Without loss of generality, we start observing when the queue is empty and the age is . The initial status update of the th source is time-stamped as and is followed by updates time-stamped , , , . The AoI of the packet generated by the IoT device is given by , where is the generation time of the most recently received packet from an IoT device until time instant .

At the destination, the age of source increases linearly without updates, and it resets to a smaller value when an update is received. The update of the th source, generated at time , finishes service and is received by the destination at time . At , the age at the destination is reset to the age of an update on the status. denotes the system time of packet for the th IoT device. Thus, the age function shows a saw-tooth pattern as seen in Figure 2. In the status update process, the time-average age is the area under the age graph normalized by the observation period , as shown in [8].

| (3) |

To simplify the analysis, the observation interval length is set to . In Equation (3), the area defined by the integral is decomposed into the area of the polygon , the sum of the trapezoidal areas for ( and , highlighted in Figure 2), and the triangular area of width over the time interval . Each trapezoidal area can be calculated as the difference between the area of the isosceles triangle whose base connects the points and and the area of the isosceles triangle whose base connects the points and , where the inter-arrival time of the update is .

| (4) |

With , where is the number of packets from the th source updated by time . After some rearrangement and using Equation (4), the time-average age is

| (5) |

where , and as the contribution of to the average AoI is negligible.
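The area decomposition behind Equations (3)-(5) can be checked numerically. The sketch below simulates a single-source M/M/1 FCFS queue, computes the time-average age directly from the saw-tooth area, also sums the trapezoids Q_j = Y_j T_j + Y_j²/2 (Y_j: inter-generation time, T_j: system time; these symbols are our assumption, following [8]), and compares both with the known closed form (1/μ)(1 + 1/ρ + ρ²/(1 − ρ)) for this queue. The single-queue setup is a simplification of the paper's multi-stage system, chosen because its closed form is known; all names are hypothetical.

```python
import numpy as np


def sawtooth_average_age(gen: np.ndarray, recv: np.ndarray) -> float:
    """Time-average age over [recv[0], recv[-1]] from the exact saw-tooth area.

    Between receptions j-1 and j the age grows linearly from
    recv[j-1] - gen[j-1] to recv[j] - gen[j-1]. Assumes FCFS delivery,
    so each received packet is fresher than the previous one.
    """
    start = recv[:-1] - gen[:-1]
    end = recv[1:] - gen[:-1]
    return float(np.sum(0.5 * (end ** 2 - start ** 2)) / (recv[-1] - recv[0]))


def trapezoid_average_age(gen: np.ndarray, recv: np.ndarray) -> float:
    """Same average using Q_j = Y_j * T_j + Y_j**2 / 2 (boundary terms dropped)."""
    y = np.diff(gen)          # inter-generation times Y_j
    t = (recv - gen)[1:]      # system times T_j
    return float(np.sum(y * t + 0.5 * y ** 2) / (recv[-1] - recv[0]))


def mm1_fcfs_age(lam: float, mu: float) -> float:
    """Closed-form average AoI of a single-source M/M/1 FCFS queue."""
    rho = lam / mu
    return (1 / mu) * (1 + 1 / rho + rho ** 2 / (1 - rho))


rng = np.random.default_rng(1)
lam, mu, n = 0.5, 1.0, 200_000
gen = np.cumsum(rng.exponential(1 / lam, n))  # Poisson generation times
service = rng.exponential(1 / mu, n)
recv = np.empty(n)
last = 0.0
for j in range(n):
    last = max(gen[j], last) + service[j]     # FCFS departure recursion
    recv[j] = last

print(sawtooth_average_age(gen, recv), trapezoid_average_age(gen, recv),
      mm1_fcfs_age(lam, mu))
```

Both simulated estimates should approach the closed-form value, which is 3.5 for λ = 0.5 and μ = 1; the two sums differ only by a boundary term that vanishes relative to the observation length.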

Definition 1.

If () is a stationary sequence with a marginal distribution equal to (), then for source there exists an ergodic and stationary status updating system, and as , , and with probability 1.

In a stationary ergodic status updating system, represents the inter-arrival time between updates from source , and is the system time of these updates. Little’s law does not explicitly provide conditions for the ergodicity of the age process, but these conditions can be verified in well-designed service systems. In systems with infinite buffers, update rates must be controlled to maintain a stationary and ergodic age process [8]. For such a system, the time average converges to the ensemble average, and the average AoI of the th source, obtained from Equation (5) as , is

| (6) |

The system time is determined by the waiting time in the processing system and in the transmission system . As such, we can write . So, the AoI for the th source in Equation (6) is formulated as

| (7) |

Referring to Equation (7), we focus on and . Since packets from the th IoT device arrive according to a Poisson process with parameter , the inter-arrival times are exponentially distributed, which implies and . The AoI can then be formulated as

| (8) |
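For reference, if Equation (6) takes the standard stationary form of [8] (here Y_i denotes the inter-arrival time and T_i the system time; these symbols are our assumption, since the paper's are not shown), the exponential-moment substitution and the split of the system time into transmission and processing parts give:

```latex
\Delta_i = \lambda_i\Big(\mathbb{E}[Y_i T_i] + \tfrac{1}{2}\,\mathbb{E}[Y_i^2]\Big),
\qquad \mathbb{E}[Y_i] = \frac{1}{\lambda_i},\quad \mathbb{E}[Y_i^2] = \frac{2}{\lambda_i^2}
\;\Longrightarrow\;
\Delta_i = \lambda_i\,\mathbb{E}[Y_i T_i] + \frac{1}{\lambda_i}.

% With T_i = T_i^{\mathrm{tx}} + T_i^{\mathrm{proc}} and linearity of expectation:
\Delta_i = \lambda_i\,\mathbb{E}\big[Y_i T_i^{\mathrm{tx}}\big]
         + \lambda_i\,\mathbb{E}\big[Y_i T_i^{\mathrm{proc}}\big] + \frac{1}{\lambda_i}.
```

Under FCFS, Y_i and T_i are generally correlated (a long gap before a packet tends to leave it a shorter wait), so E[Y_i T_i] cannot in general be factored into E[Y_i]E[T_i].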

Following Equation (8), the waiting time of a packet in the processing system is the sum of its waiting time in the processing queue () and the server processing time (). This can be expressed as , where . Similarly, the waiting time of a packet in the transmission system is the sum of its waiting time in the transmission queue () and the time spent in the transmission channels, which includes the transmission from the IoT device to the edge server () and from the edge server to the vehicular fog (). This can be expressed as . Equation (8) can then be reformulated as

| (9) |

We now analyze each term of Equation (9).

III-B3 Analysis of Packet transmission rate

To analyze the packet transmission rate, as formulated in Equations (1) and (2), we utilized Shannon’s formula [31]. Here the rate stands for the transmission channel capacity. Higher transmission rates allow faster data transmission, reducing the time it takes for information to travel from the source to the destination. Faster transmission rates lead to lower AoI, as updates can be delivered more quickly and frequently, keeping the information fresher.

Analysis of

The transmission rate in Equation (1) is a random variable because the channel follows a Rayleigh fading distribution [32]. The expected time to transmit packets from the th IoT device can then be calculated using the following theorem.

Theorem 1.

The time it takes to successfully transmit packets generated by th IoT device is calculated as

| (10) |

Proof.

The proof is shown in Appendix A ∎

Analysis of

Similarly, the transmission rate of processed packets from the edge server to the vehicular fog in Equation (2) is a random variable under Rayleigh fading [32]. The following theorem gives the expected time for a processed packet to travel from the edge server to the vehicular fog in the transmission subsystem.

Theorem 2.

The time it takes to successfully transmit a processed packet from the edge server to the vehicular fog is calculated as

| (11) |

Proof.

The proof is shown in Appendix B ∎
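Theorems 1 and 2 give the expected transmission times in closed form (Appendices A and B). As a rough numerical companion, the sketch below estimates the ergodic (average) rate of a Rayleigh-fading link by Monte Carlo and divides the packet size by it. This proxy is our assumption, not the paper's expression: the expectation of packet size divided by the instantaneous rate is itself unbounded under Rayleigh fading, because the rate can come arbitrarily close to zero in deep fades. The function names and the SNR value are hypothetical.

```python
import numpy as np


def ergodic_rate(bandwidth_hz: float, mean_snr: float,
                 n_samples: int = 1_000_000, seed: int = 0) -> float:
    """Monte Carlo estimate of E[B * log2(1 + mean_snr * |h|^2)] in bit/s.

    Under Rayleigh fading the power gain |h|^2 is exponential with unit mean.
    """
    rng = np.random.default_rng(seed)
    gain = rng.exponential(1.0, n_samples)
    return float(bandwidth_hz * np.mean(np.log2(1.0 + mean_snr * gain)))


def approx_tx_time(packet_bits: float, bandwidth_hz: float, mean_snr: float) -> float:
    """Proxy for the expected transmission time: packet size / ergodic rate."""
    return packet_bits / ergodic_rate(bandwidth_hz, mean_snr)


B, snr = 100e3, 1e3                  # illustrative bandwidth and mean SNR (30 dB)
fading = ergodic_rate(B, snr)
no_fading = B * np.log2(1.0 + snr)   # same mean SNR without fading
print(fading, no_fading, approx_tx_time(10, B, snr))
```

By Jensen's inequality, the ergodic rate under fading is always below the rate of an unfaded link with the same mean SNR, so fading lengthens the transmission stage even when the average channel quality is unchanged.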

III-B4 Analysis of

A newly arrived packet must wait in the processing queue until the packets currently on the server are completed. Let be the probability that the server is busy. Using Little’s law [33], we can compute as

| (12) |

Based on this analysis, we can formulate the expected waiting time of a packet in the processing queue using the following theorem.

Theorem 3.

In the processing queue for packets from th IoT device, the waiting time is formulated as

| (13) |

where is an indicator variable and is the load caused by data packets from the th IoT device.

Proof.

The proof is shown in Appendix C ∎
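The busy probability used in Equation (12) can be sanity-checked by simulation. Applying Little's law to the server alone, the mean number of packets in service equals the arrival rate times the mean service time, and since the server holds at most one packet, this mean is exactly the probability that it is busy. The sketch assumes exponential inter-arrival and service times for illustration; `busy_fraction` is a hypothetical helper.

```python
import numpy as np


def busy_fraction(lam: float, mu: float, n: int = 200_000, seed: int = 2) -> float:
    """Fraction of time a single FCFS server is busy (exponential assumptions)."""
    rng = np.random.default_rng(seed)
    arrivals = np.cumsum(rng.exponential(1 / lam, n))
    service = rng.exponential(1 / mu, n)
    last = 0.0
    for a, s in zip(arrivals, service):
        last = max(a, last) + s   # FCFS departure time of this packet
    # The server works for sum(service) time units during [0, last].
    return float(service.sum() / last)


lam, mu = 0.6, 1.0
print(busy_fraction(lam, mu), lam / mu)  # simulation vs. Little's-law value lam * E[S]
```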

III-B5 Analysis of

We consider M/G/1 systems [34], in which customers arrive from an infinite population with independent, exponentially distributed inter-arrival times, wait in a queue with infinite capacity, are served one at a time by a single server with a general service time distribution, and then return to the customer population [35]. The expected waiting time in the transmission queue is estimated using the principle of maximum entropy (PME) [34], from which we derive the following theorem.

Theorem 4.

In the transmission queue, the estimated time spent waiting for packets originally generated from the th IoT device is calculated as

| (14) |

Proof.

The proof is shown in Appendix D ∎
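Theorem 4 relies on the maximum-entropy approximation. As a swapped-in reference point, not the paper's method, the exact mean waiting time of an M/G/1 FCFS queue is given by the Pollaczek-Khinchine formula W_q = λE[S²] / (2(1 − ρ)) with ρ = λE[S]. The sketch below evaluates it for two service distributions with the same mean; the function name is hypothetical.

```python
def pk_waiting_time(lam: float, mean_s: float, second_moment_s: float) -> float:
    """Mean time in queue (excluding service) of an M/G/1 FCFS queue.

    Pollaczek-Khinchine: W_q = lam * E[S^2] / (2 * (1 - rho)), rho = lam * E[S].
    """
    rho = lam * mean_s
    if rho >= 1:
        raise ValueError("M/G/1 queue is unstable for rho >= 1")
    return lam * second_moment_s / (2 * (1 - rho))


lam, mu = 0.6, 1.0
# Exponential service (M/M/1): E[S] = 1/mu, E[S^2] = 2/mu^2.
w_exp = pk_waiting_time(lam, 1 / mu, 2 / mu ** 2)
# Deterministic service with the same mean (M/D/1): E[S^2] = 1/mu^2.
w_det = pk_waiting_time(lam, 1 / mu, 1 / mu ** 2)
print(w_exp, w_det)
```

The second moment of the service time distinguishes the two cases: halving E[S²] halves the waiting time, so service-time variability, and not only its mean, matters for the transmission-queue delay.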

IV Problem Formulation and Proposed Solution

IV-A AoI Optimization Problem Formulation

We consider an edge-enabled vehicular fog system consisting of multiple IoT devices, an edge server, and a vehicular fog. Our aim is to minimize the average end-to-end AoI of this system, which we formulate as

| (15) |

where denotes the long-term average end-to-end AoI of the th IoT device’s updates, given in Equation (9), is the update arrival rate, and is the total number of time slots. The corresponding problem is stated as

| (16) |

subject to

| (17a) | |||

| (17b) | |||

| (17c) | |||

| (17d) | |||

Constraint (17a) guarantees the stability of the processing system, whose server processes only one packet at a time. Constraints (17b) and (17c) likewise ensure that each transmission channel, from the IoT devices to the edge server and from the edge server to the vehicular fog, respectively, serves only one packet at a time. Constraint (17d) guarantees that each packet arrival rate is non-negative.

IV-B Proposed Solution

Our objective is to obtain the optimal policy, which specifies the action to take in each state of the system over time so as to achieve the minimum average end-to-end AoI at the destination. To solve this optimization problem, we used a modified DRL approach in which an agent interacts with the edge-enabled vehicular fog environment to find optimal solutions. The agent can be placed on the edge server to offload information to the vehicular fogs. It is trained using a replay memory buffer that stores transitions in the form of (state, action, reward, next state) tuples . The agent learns the optimal action to take in a given environment state, where the state represents the current condition of the edge-enabled vehicular fog system. The action taken by the DRL agent is the probability of offloading processed packets to the vehicular fog, and the optimal action minimizes the average end-to-end AoI.
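A replay memory of the kind described above can be sketched as a fixed-capacity FIFO buffer from which mini-batches are sampled uniformly at random. The class below is a hypothetical illustration, not the paper's implementation; in our assumed usage it would be created with the replay memory size and batch size of Table IV (100000 and 128).

```python
import random
from collections import deque
from typing import Deque, List, NamedTuple, Sequence


class Transition(NamedTuple):
    state: Sequence[float]
    action: int
    reward: float
    next_state: Sequence[float]


class ReplayMemory:
    """Fixed-capacity FIFO store of (state, action, reward, next_state) tuples."""

    def __init__(self, capacity: int, seed: int = 0) -> None:
        # deque(maxlen=...) silently discards the oldest transition when full.
        self.buffer: Deque[Transition] = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def push(self, state, action, reward, next_state) -> None:
        self.buffer.append(Transition(state, action, reward, next_state))

    def sample(self, batch_size: int) -> List[Transition]:
        # Uniform sampling without replacement decorrelates consecutive steps.
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self) -> int:
        return len(self.buffer)


memory = ReplayMemory(capacity=100_000)  # replay memory size from Table IV
```

Training would start drawing `memory.sample(128)` once `len(memory)` reaches the batch size.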

IV-B1 Edge-enabled Vehicular Fog Environment setup

Offloading in edge-enabled vehicular fog environments requires appropriate decisions at each moment in time to achieve the system’s objectives. We implemented DRL-based agents that interact with the environment and make quick decisions. We define the agent, states, actions, and rewards for AoI optimization in the designed environment as follows.

Agent

The agent is the decision-maker that interacts with the edge-enabled vehicular fog environment.

Environment

Edge-enabled vehicular fog systems topology ().

State ()

The state is a representation of the current environment that the agent can observe. It includes all information relevant to decision-making, such as the current topology (), packet arrivals (), computation resources of the edge server () and vehicular fog (), communication resources between the IoT devices and edge server () and between the edge server and vehicular fogs (), and the AoI (). The state arrays constructed from these quantities are used as inputs to the algorithms: , , , , where is the set of packet arrival rates to all edge servers at time , is an array of the computing capacities of the edge server and vehicular fogs, is the set of communication capacities between IoT devices, edge servers, and vehicular fogs, and is the set of AoI values of the system. , , , and are combined to form the environment state at time , which can be represented as .

Action

An action is a potential offloading decision that the agent makes and applies in the edge-enabled vehicular fog environment. Since the offloading decision is binary, . At time , the action can be expressed as . Let be the set of offloading decisions to be sent to the requested vehicular fogs, where .

Reward ()

The agent’s objective is to maximize the cumulative reward it receives over time, which corresponds to minimizing the end-to-end AoI. The reward function is therefore tied to the objective of our optimization problem and is given as

| (18) |

where is the episode at th time.
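To make the state, action, and reward definitions concrete, the sketch below assembles them into a toy environment whose `reset`/`step` methods mirror the return signature of the Gymnasium API (observation and info from `reset`; observation, reward, terminated, truncated, and info from `step`) without depending on that library. The dynamics are hypothetical placeholders: offloading (action 1) resets the AoI to a random delivery delay, not offloading lets it grow by one slot, the reward is assumed to be the negative AoI, and the topology component of the state is omitted.

```python
import numpy as np


class ToyVehicularFogEnv:
    """Hypothetical toy environment; the dynamics are illustrative only."""

    def __init__(self, arrival_rates, edge_capacity, fog_capacity,
                 uplink_rates, downlink_rate, max_slots=10, seed=0):
        self.arrivals = np.asarray(arrival_rates, dtype=float)          # packet arrivals
        self.compute = np.array([edge_capacity, fog_capacity], float)   # computing capacities
        self.comm = np.append(np.asarray(uplink_rates, dtype=float),
                              downlink_rate)                            # link capacities
        self.max_slots = max_slots
        self.rng = np.random.default_rng(seed)
        self.t, self.aoi = 0, 0.0

    def _obs(self) -> np.ndarray:
        # Flattened state: [arrivals, computing capacities, link capacities, AoI]
        return np.concatenate([self.arrivals, self.compute, self.comm, [self.aoi]])

    def reset(self):
        self.t, self.aoi = 0, 0.0
        return self._obs(), {}

    def step(self, action: int):
        if action == 1:
            # Placeholder: delivery delay ~ Exp(mean = 1 / downlink rate)
            self.aoi = float(self.rng.exponential(1.0 / self.comm[-1]))
        else:
            self.aoi += 1.0             # no fresh update: age grows by one slot
        self.t += 1
        reward = -self.aoi              # assumed reward: negative end-to-end AoI
        truncated = self.t >= self.max_slots
        return self._obs(), reward, False, truncated, {}


env = ToyVehicularFogEnv([1.0, 2.0], 5.0, 3.0, [2.0, 2.0], 4.0)
obs, info = env.reset()
```

One call to `reset()` followed by `step(action)` calls until `truncated` is true corresponds to one episode of up to the maximum number of time slots.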

IV-B2 Proposed Dueling-DQN Algorithm

The dueling-DQN algorithm is a model-free DRL approach: it learns to estimate the values of actions from interactions with the environment without learning the environment’s transition function [21]. Its primary objective is to determine the best policy. To achieve this, the neural network used in the dueling-DQN algorithm splits its last layer into two streams: one estimates the expected reward collected from a given state, called the state value function (), and the other measures the relative benefit of each action compared to the others, called the advantage function (). Finally, the two streams are merged into a single output that estimates the state-action value . It can be computed as

| (19) |
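The merging of the two streams can be sketched in a few lines of NumPy. We assume the mean-subtracted aggregation proposed by Wang et al. [21], Q(s, a) = V(s) + A(s, a) − mean over a' of A(s, a'), which makes the split between value and advantage identifiable; in a TensorFlow model the same expression would follow the two fully connected heads. The function name is hypothetical.

```python
import numpy as np


def dueling_q(value: np.ndarray, advantage: np.ndarray) -> np.ndarray:
    """Combine value and advantage streams into Q-values.

    value: shape (batch, 1); advantage: shape (batch, n_actions).
    Subtracting the mean advantage forces the advantages to average to zero,
    so the value stream alone carries the state's overall worth.
    """
    return value + advantage - advantage.mean(axis=1, keepdims=True)


v = np.array([[2.0], [-1.0]])               # V(s) for a batch of two states
a = np.array([[0.5, -0.5], [1.0, 3.0]])     # A(s, a) for two actions
q = dueling_q(v, a)
print(q)
```

Because the advantages are centered, averaging the resulting Q-values over actions recovers V(s) exactly.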

The expectation of the advantage function is zero, and the value function in Equation (19) is replaced using Equation (20), where is a -dimensional vector for a set of actions

| (20) |

where the policy is a distribution over actions given the state. Let be the value function with parameters , which is expressed as

| (21) |

where is the parameter of the convolutional layers, is the parameter of the fully connected state-value stream, and is the parameter of the fully connected advantage stream. The dueling-DQN agent observes and interacts with the environment. The target Q-value () of the target Q-network is given as

| (22) |

Then, the loss function in dueling-DQN is

| (23) |

where denotes the expected value. Finally, the action-value function is updated as

| (24) |
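Assuming Equations (22) and (23) take the standard DQN forms, the target is y = r + γ max over a' of Q_target(s', a'), computed with the separate target network and without bootstrapping at terminal states, and the loss is the mean squared error between y and Q(s, a) for the actions actually taken. The discount factor 0.99 matches Table IV; the function names and example numbers are hypothetical.

```python
import numpy as np


def td_targets(rewards, next_q_target, dones, gamma=0.99):
    """y = r + gamma * max_a' Q_target(s', a'); no bootstrap where done == 1."""
    return rewards + gamma * (1.0 - dones) * next_q_target.max(axis=1)


def td_loss(q_current, actions, targets):
    """Mean squared error between Q(s, a) for the taken actions and the targets."""
    q_taken = q_current[np.arange(len(actions)), actions]
    return float(np.mean((targets - q_taken) ** 2))


rewards = np.array([-1.0, -2.0])
next_q_target = np.array([[0.0, 1.0], [2.0, 0.5]])
dones = np.array([0.0, 1.0])                 # second transition ends the episode
y = td_targets(rewards, next_q_target, dones, gamma=0.9)
q_current = np.array([[0.0, -0.2], [-1.0, -3.0]])
actions = np.array([1, 0])
loss = td_loss(q_current, actions, y)
print(y, loss)
```

In a typical DQN implementation, the gradient of this loss with respect to the online network's parameters drives the parameter update, while the target network's parameters are periodically copied from the online network.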

To achieve optimal offloading of real-time status updates in the edge-enabled vehicular fog environment, we propose Algorithm 1, a modified dueling-DQN algorithm. Dynamic changes in the environment cause the agent’s actions and state spaces to vary at each step of the process. Owing to its improved network stability and faster convergence, the proposed algorithm can manage these dynamics. After several iterations, an end-to-end AoI optimization policy is obtained that delivers the freshest updates to the vehicular fog. Algorithm 1 works as follows:

Step 1

The algorithm takes the set of IoT devices, computing capacities, vehicular fog, and packet arrivals as the input state and returns an offloading decision as the output, as shown in lines 1 and 2. The current and target Q-function parameters, the replay memory, the maximum number of episodes, the policy update frequency, the maximum number of time slots, and the maximum epsilon for epsilon-greedy exploration are initialized in lines 3-6.

Step 2

During training, at each time slot the agent observes the environment state and, following the epsilon-greedy strategy, chooses a random action with probability epsilon and otherwise the action with the highest estimated Q-value. It then executes the action in the environment, calculates the reward, and observes the next state, as shown in lines 11-14. The transition is stored in the replay memory, and the current state is set to the next state in lines 15-16.

Step 3

Step 4

The gradient of the loss with respect to the network parameters, denoted as , is computed using Equation (23). The network parameter is then updated using the gradient , following the expression , and the action-value network is updated using Equation (24) in lines 23-28.
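The epsilon-greedy selection in Step 2 can be sketched as follows. The initial epsilon of 0.5 comes from Table IV; the multiplicative decay schedule and its parameters are hypothetical, included because the discussion of Figure 4 notes that reducing epsilon to zero after convergence removes the residual randomness.

```python
import numpy as np


def epsilon_greedy(q_values: np.ndarray, epsilon: float,
                   rng: np.random.Generator) -> int:
    """Explore with probability epsilon, otherwise act greedily on Q-values."""
    if rng.random() < epsilon:
        return int(rng.integers(len(q_values)))  # uniform random action
    return int(np.argmax(q_values))               # greedy action


def decay_epsilon(epsilon: float, decay: float = 0.995, floor: float = 0.0) -> float:
    """Hypothetical multiplicative schedule, clipped at `floor`."""
    return max(floor, epsilon * decay)


rng = np.random.default_rng(0)
eps = 0.5                            # initial epsilon from Table IV
q = np.array([-3.2, -1.7])           # illustrative Q-values for "keep" / "offload"
for episode in range(1000):
    action = epsilon_greedy(q, eps, rng)
    eps = decay_epsilon(eps)
print(eps)
```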

Figure 3 illustrates the exchange of information between the environment and the proposed dueling-DQN algorithm. It comprises three primary components. First, the edge-enabled vehicular fog environment provides information to the agent as a state () to determine the offloading decisions () at each time iteration. Second, the replay memory stores transition information, capturing the history of agent-environment interactions as tuples of state, action, reward, and next state . This transition information is crucial for updating the agent model’s parameters during training. Finally, the offloading agent uses the current network to update both the current Q-value and the target-network Q-value, based on the gradient of the loss.

V Performance Analysis

V-A Simulation Parameter Settings

We implemented the simulation environment of the edge-enabled vehicular fog system in Python, following OpenAI Gym’s standards [36]. To ensure that it meets all required DRL criteria, we tested it rigorously using the Stable-Baselines3 DRL implementation [37]. This evaluation allowed us to assess the system’s performance thoroughly and confirm its suitability for comparison with other DRL algorithms. The proposed solution was compared with the existing DQN algorithm and the analytical results. The parameter configurations are grouped into system parameters and agent parameters.

| Parameters descriptions | Value(s) |

|---|---|

| Number of Edge server () | 1 |

| Number of Vehicular fog () | 1 |

| Number of IoT Devices () | [] |

| Distance between th IoT device and Edge server () | 3 km |

| Distance between Edge server and Vehicular fog () | 10 km |

| System Bandwidth () | 100 kHz |

| Background noise () | -174 dBm/Hz (-124 dBm over 100 kHz) |

| Transmission and Reception power () | 1000 mW (30 dBm) |

| Path loss exponent () | 3 |

| Time slots () | [1-10] |

| The capacity of the Edge server () | |

| The capacity of Vehicular Fog () | |

| Arrival packets from IoT devices () | [10, 20, 40, 60, 80] Mbps |

| The original packet size arrived at the edge server () | |

| Processed packet size from the edge server to vehicular fog () | |

| Packet size | 10 bits |

V-A1 System parameter settings

Table III lists the system parameter settings; we examined the effects of the number of IoT devices and of different time slots. In the experiments, we considered five arrival traffic rate scenarios. The traffic generated by the IoT devices follows a Poisson distribution with the mean values given in Table III. The distances between the IoT devices and the edge server and between the edge server and the vehicular fog are randomly distributed as defined in Table III.

Table IV: Agent hyper-parameter settings

| Parameter | Value |
|---|---|
| Learning rate | 0.0001 |
| Batch size | 128 |
| Replay memory size | 100000 |
| Discount factor (γ) | 0.99 |
| Initial epsilon (ε) | 0.5 |
| Maximum episodes | |
| Hidden layers 1 and 2 (neurons) | 256 and 256 |

V-A2 Agent hyper-parameter settings

For both DRL algorithms (dueling-DQN and DQN), the artificial neural networks (ANNs) are implemented in TensorFlow [22]. The hyper-parameter configurations of the DRL agents are listed in Table IV.
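The dueling architecture [21] splits the network after a shared trunk into a state-value stream V(s) and an advantage stream A(s, a), and recombines them as Q(s, a) = V(s) + A(s, a) - mean over a' of A(s, a'). The NumPy forward pass below sketches this aggregation with the two 256-unit hidden layers from Table IV. It is not the authors' TensorFlow model and omits training; the weight initialization, state dimension, action count, and function names are assumptions.

```python
import numpy as np


def init_params(state_dim, n_actions, hidden=(256, 256), seed=0):
    """Random weights for a shared MLP trunk plus value and advantage heads (He-style init, assumed)."""
    rng = np.random.default_rng(seed)
    sizes = [state_dim, *hidden]
    trunk = [(rng.normal(0.0, np.sqrt(2.0 / d_in), (d_in, d_out)), np.zeros(d_out))
             for d_in, d_out in zip(sizes[:-1], sizes[1:])]
    h = hidden[-1]
    value = (rng.normal(0.0, np.sqrt(1.0 / h), (h, 1)), np.zeros(1))
    advantage = (rng.normal(0.0, np.sqrt(1.0 / h), (h, n_actions)), np.zeros(n_actions))
    return {"trunk": trunk, "value": value, "advantage": advantage}


def streams(params, states):
    """Return V(s) with shape (batch, 1) and A(s, a) with shape (batch, n_actions)."""
    x = np.asarray(states, dtype=float)
    for w, b in params["trunk"]:
        x = np.maximum(x @ w + b, 0.0)  # ReLU hidden layer
    w_v, b_v = params["value"]
    w_a, b_a = params["advantage"]
    return x @ w_v + b_v, x @ w_a + b_a


def dueling_q(params, states):
    """Aggregate the two streams: Q = V + (A - mean over actions of A)."""
    v, a = streams(params, states)
    return v + a - a.mean(axis=1, keepdims=True)


params = init_params(state_dim=4, n_actions=3)
batch = np.random.default_rng(1).normal(size=(5, 4))
q = dueling_q(params, batch)  # shape (5, 3)
```

Subtracting the mean advantage makes the decomposition identifiable: the mean of Q over actions equals V(s), while the greedy action is determined by the advantages alone.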

V-B DRL Agents Convergence Evaluations

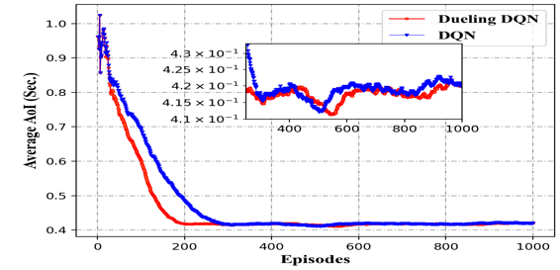

In Figure 4, we evaluate the proposed dueling-DQN-based DRL algorithm in terms of reward, loss, and the AoI values it achieves. For this evaluation, each algorithm is run for 10,000 episodes, and the results are averaged. The episode rewards of the dueling-DQN and DQN algorithms are shown in Figures 4a and 4b, respectively; compared with DQN, the proposed dueling-DQN algorithm learns faster and reaches high rewards in fewer episodes. The average reward convergence of the dueling-DQN and DQN agents is illustrated in Figure 4c. Between episodes 50 and 250, the dueling-DQN agent obtains higher rewards because it learns a better policy earlier. After convergence, however, both agents exhibit similar performance. This similarity arises because the epsilon-greedy strategy used by both algorithms keeps injecting random actions, which yields sub-optimal rewards. Consequently, the full reward of the learned policy can be obtained by reducing epsilon to zero once the algorithm has converged.

Figure 4d shows the convergence of the AoI achieved by the DRL agents; the dueling-DQN agent converges better and reaches lower AoI values. Because both algorithms retain this exploration randomness, their AoI performance also becomes similar after convergence. The losses of the dueling-DQN and DQN agents are shown in Figures 4e and 4f, respectively, and their averages are presented in Figure 4g. The dueling-DQN loss exhibits fewer outliers and is more stable, whereas DQN is vulnerable to Q-value overestimation, which degrades its training stability and produces more outliers.
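The text suggests recovering the full reward of the learned policy by driving epsilon to zero after convergence. A minimal sketch, assuming a linear decay from the initial epsilon of 0.5 in Table IV down to zero over a hypothetical 200 episodes (the paper does not give its schedule); once epsilon is zero, the action choice is purely greedy.

```python
import random


def epsilon_schedule(episode, eps_start=0.5, decay_episodes=200):
    """Linearly anneal epsilon from eps_start (Table IV) to 0 over decay_episodes (assumed)."""
    frac = min(episode / decay_episodes, 1.0)
    return eps_start * (1.0 - frac)


def epsilon_greedy(q_values, epsilon, rng=random):
    """Explore with probability epsilon, otherwise pick the greedy action."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # random exploratory action
    return max(range(len(q_values)), key=lambda a: q_values[a])  # greedy action
```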

Figure 5 evaluates the dueling-DQN algorithm for different numbers of IoT devices and time slots. In Figure 5a, we analyze the average end-to-end AoI as the number of IoT devices increases while the number of time slots is fixed. Conversely, in Figure 5b, we vary the number of time slots while keeping the number of IoT devices constant. Both figures show that the average end-to-end AoI rises as the number of IoT devices and the number of time slots increase. The average end-to-end AoI converges at episode 200, and as the number of episodes grows, it becomes more stable and decreases further. Based on these results, we conclude that the dueling-DQN agent is more transferable across system configurations and that its network trains more stably. This is due to the dueling-DQN algorithm's ability to learn the dynamics of the environment and make effective decisions.

V-C AoI Performance Analysis

Figure 6 compares the average end-to-end AoI of the algorithms as the number of IoT devices increases. For all algorithms, the average end-to-end AoI increases with the number of IoT devices. This is because, as more IoT devices share the system, the generation time and the waiting time in the transmission channel of each packet also increase, which raises the average end-to-end AoI. The dueling-DQN algorithm performs close to the analytical results and outperforms the DQN algorithm when the number of IoT devices ranges from 2 to 7, and it performs even better when the number of IoT devices exceeds 7. This improvement can be attributed to the dueling architecture, which learns the state-value function separately from the action advantages. The agent can therefore estimate how valuable a state is without learning the effect of every action in that state, which significantly improves network stability and convergence speed.
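To make the trend concrete, the sketch below computes the time-average AoI of a single source from the generation and reception times of its updates (the area under the AoI sawtooth divided by the observation span), and applies it to a hypothetical toy in which N devices share one channel round-robin and each update takes one slot. In that toy, a device's age rises from 1 to N + 1 slots between its deliveries, giving an average of (N + 2)/2 slots. This illustrates why sharing the channel raises AoI; it is not the paper's system model.

```python
def average_aoi(gen_times, recv_times):
    """Time-average AoI between the first and last reception.

    Between receptions i and i+1 the age grows linearly from
    recv_i - gen_i to recv_{i+1} - gen_i, so each piece is a trapezoid.
    Assumes updates are received in generation order (FIFO).
    """
    if len(gen_times) != len(recv_times) or len(recv_times) < 2:
        raise ValueError("need at least two matched (generation, reception) pairs")
    area = 0.0
    for i in range(len(recv_times) - 1):
        g, r0, r1 = gen_times[i], recv_times[i], recv_times[i + 1]
        area += ((r1 - g) ** 2 - (r0 - g) ** 2) / 2.0
    return area / (recv_times[-1] - recv_times[0])


def round_robin_aoi(n_devices, n_rounds=1000, delay=1.0):
    """AoI of one device when n_devices take turns on a slotted channel (toy model)."""
    gen = [k * n_devices for k in range(n_rounds)]  # this device's turns
    recv = [g + delay for g in gen]                 # delivered one slot later
    return average_aoi(gen, recv)


for n in (1, 2, 4, 8):
    print(n, round_robin_aoi(n))
```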

Figure 7 evaluates the average end-to-end AoI of the proposed dueling-DQN algorithm for different numbers of time slots and compares it with DQN and the analytical results. For all algorithms, the average end-to-end AoI grows monotonically as the number of time slots increases. This is because, with more time slots, each packet generated by the IoT devices is more likely to wait longer before being scheduled in the transmission channel, while less time remains for generating updates. As shown in Figure 7, all algorithms perform similarly for 2 to 6 time slots. Beyond 6 time slots, however, dueling-DQN outperforms the other algorithms because it remains more stable as the number of time slots increases. Both DRL algorithms (dueling-DQN and DQN) outperform the analytical results when the number of time slots exceeds 9. This is because the DRL algorithms fully account for the effect of the time slots on decision-making and use an experience replay buffer that is sampled uniformly. Uniform replay increases data efficiency and reduces variance, because the samples used in each update are less likely to be correlated.
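A minimal uniform experience replay buffer, using the replay memory size (100000) and batch size (128) from Table IV as defaults; the class name and the transition tuple layout are illustrative assumptions rather than the authors' implementation.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity experience replay with uniform sampling."""

    def __init__(self, capacity=100_000, seed=None):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are evicted first
        self.rng = random.Random(seed)

    def push(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size=128):
        # Uniform sampling without replacement; drawing transitions from across
        # the whole buffer breaks the correlation between consecutive steps.
        batch = self.rng.sample(list(self.buffer), batch_size)
        states, actions, rewards, next_states, dones = zip(*batch)
        return states, actions, rewards, next_states, dones

    def __len__(self):
        return len(self.buffer)
```

Converting the deque to a list costs O(n) per sample, which is acceptable for a sketch; a production buffer would typically preallocate arrays and sample indices instead.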

Figure 8 shows the performance of the algorithms as the packet arrival size varies. As the offloaded packet size increases, the average end-to-end AoI also increases, because each packet of every data stream needs more time to pass through the transmission channel, the packet processing queue, and the packet transmission queue. The dueling-DQN algorithm achieves a lower AoI than both the DQN algorithm and the analytical results. This is attributed to its ability to learn the state-value function separately from the action advantages, which allows it to offload fresher information and reduce the average end-to-end AoI.
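The effect of packet size on delay can be sketched with two FIFO queues in series, standing in for the edge processing queue and the edge-to-vehicular-fog transmission queue, using the departure-time recursion d_k = max(a_k, d_{k-1}) + s_k. Service times scale with packet size. The service rates, the factor `shrink` by which processing reduces the packet (Appendix B states only that processed packets are smaller), and the deterministic arrivals are hypothetical.

```python
def tandem_delays(arrivals, sizes, proc_rate, tx_rate, shrink=0.5):
    """End-to-end delay of each packet through two FIFO queues in series.

    Stage 1 (edge processing): service time = size / proc_rate.
    Stage 2 (edge -> vehicular fog): the processed packet is assumed to be
    `shrink` times the original size, so its service time is
    shrink * size / tx_rate.
    Each stage uses the departure recursion d_k = max(a_k, d_{k-1}) + s_k.
    """
    d1_prev = d2_prev = float("-inf")
    delays = []
    for a, size in zip(arrivals, sizes):
        d1 = max(a, d1_prev) + size / proc_rate          # leaves processing queue
        d2 = max(d1, d2_prev) + shrink * size / tx_rate  # leaves transmission queue
        delays.append(d2 - a)
        d1_prev, d2_prev = d1, d2
    return delays


arrivals = [float(k) for k in range(50)]  # one packet per time unit (hypothetical)
for size in (1, 2, 4, 8):
    d = tandem_delays(arrivals, [size] * len(arrivals), proc_rate=4.0, tx_rate=4.0)
    print(size, sum(d) / len(d))
```

Once the processing time per packet exceeds the inter-arrival time, waiting accumulates and the mean delay grows much faster than the service time itself.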

Figure 9 evaluates the system performance as the transmission power increases. For all algorithms, the average end-to-end AoI decreases as the transmission power increases. This is because a higher transmission power raises the signal-to-noise ratio and hence the achievable transmission rate, so update packets are offloaded faster, service times shorten, and the average end-to-end AoI drops. Our proposed dueling-DQN algorithm performs best, while the DQN algorithm performs worst. The gain of dueling-DQN stems from its improved network stability and convergence speed, which significantly reduce the average end-to-end AoI at higher transmission power. In addition, for transmission power above 60 dBm, the analytical results outperform the DQN algorithm, because DQN sometimes makes wrong decisions due to Q-value overestimation and network instability, resulting in higher AoI.
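The mechanism behind Figure 9 can be sketched with the Shannon rate [31], R = B log2(1 + SNR), using the bandwidth, noise density, and path loss exponent from Table III. This assumes SNR = P d^(-alpha) g / N with no fading (g = 1) and unit reference gain, which may differ from the paper's own rate equations; the packet size and function names are illustrative.

```python
import math


def shannon_rate_bps(p_tx_w, distance_m, bandwidth_hz=100e3,
                     noise_psd_dbm_hz=-174.0, path_loss_exp=3.0, gain=1.0):
    """Achievable rate B * log2(1 + SNR) with distance-based path loss d**(-alpha).

    `gain` stands in for a small-scale fading gain; 1.0 means no fading (assumed).
    """
    noise_w = 10 ** ((noise_psd_dbm_hz - 30.0) / 10.0) * bandwidth_hz  # dBm/Hz -> W/Hz, times B
    snr = p_tx_w * gain * distance_m ** (-path_loss_exp) / noise_w
    return bandwidth_hz * math.log2(1.0 + snr)


def transmission_time_s(packet_bits, p_tx_w, distance_m, **kwargs):
    """Time to push one packet through the link at the Shannon rate."""
    return packet_bits / shannon_rate_bps(p_tx_w, distance_m, **kwargs)


for p_w in (0.1, 0.5, 1.0, 2.0):  # transmit power in watts (1.0 W = 1000 mW)
    print(p_w, shannon_rate_bps(p_w, 3000.0), transmission_time_s(1e5, p_w, 3000.0))
```

Because the rate grows only logarithmically in power, each further increase in transmit power buys a smaller reduction in transmission time.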

VI Conclusion

This work considers an edge-enabled vehicular fog system and aims to minimize the average end-to-end AoI of the system. A time-step-based dynamic status update optimization problem is formulated and analyzed, and a modified dueling-DQN-based algorithm is used to solve it. The simulation results demonstrate that the proposed dueling-DQN algorithm converges faster than the DQN algorithm. Furthermore, it achieves a lower average end-to-end AoI than both the DQN algorithm and the analytical results. This improvement is attributed to the dueling-DQN algorithm's ability to learn the dynamics of the environment and make effective decisions.

Our future research will focus on leveraging the correlation among IoT device status updates to improve scheduling and offloading strategies. Although this paper assumes interoperability in vehicular fog environments, real-world deployment faces challenges due to resource and time limitations, which makes real-world validation a key direction for extending this work.

References

- [1] S. Chen, T. Zhang, Z. Chen, Y. Dong, M. Wang, Y. Jia, and T. Q. Quek, “Minimizing age-upon-decisions in bufferless system: Service scheduling and decision interval,” IEEE Transactions on Vehicular Technology, vol. 72, no. 1, pp. 1017–1031, 2022.

- [2] H. Menouar, I. Guvenc, K. Akkaya, A. S. Uluagac, A. Kadri, and A. Tuncer, “UAV-enabled intelligent transportation systems for the smart city: Applications and challenges,” IEEE Communications Magazine, vol. 55, no. 3, pp. 22–28, Mar. 2017.

- [3] M. Bisquert, E. Caselles, J. M. Sánchez, and V. Caselles, “Application of artificial neural networks and logistic regression to the prediction of forest fire danger in Galicia using MODIS data,” International Journal of Wildland Fire, vol. 21, no. 8, pp. 1025–1029, 2012.

- [4] Z. Xiong, H. Sheng, W. Rong, and D. E. Cooper, “Intelligent transportation systems for smart cities: a progress review,” Science China Information Sciences, vol. 55, pp. 2908–2914, 2012.

- [5] S. Kaul, R. Yates, and M. Gruteser, “Real-time status: How often should one update?” in Proc. IEEE INFOCOM, Orlando, FL, USA, 2012, pp. 2731–2735.

- [6] X. Chen, K. Gatsis, H. Hassani, and S. S. Bidokhti, “Age of information in random access channels,” IEEE Transactions on Information Theory, vol. 68, no. 10, pp. 6548–6568, 2022.

- [7] X. Xie, H. Wang, and M. Weng, “A reinforcement learning approach for optimizing the age-of-computing-enabled IoT,” IEEE Internet of Things Journal, vol. 9, no. 4, pp. 2778–2786, Feb. 2022.

- [8] R. D. Yates and S. K. Kaul, “The age of information: Real-time status updating by multiple sources,” IEEE Transactions on Information Theory, vol. 65, no. 3, pp. 1807–1827, 2019.

- [9] L. Hu, Z. Chen, Y. Dong, Y. Jia, L. Liang, and M. Wang, “Status update in IoT networks: Age-of-information violation probability and optimal update rate,” IEEE Internet of Things Journal, vol. 8, no. 14, pp. 11329–11344, 2021.

- [10] B. Kar, Y.-D. Lin, and Y.-C. Lai, “Cost optimization of omnidirectional offloading in two-tier cloud–edge federated systems,” Journal of Network and Computer Applications, vol. 215, p. 103630, 2023.

- [11] F. G. Wakgra, B. Kar, S. B. Tadele, S.-H. Shen, and A. U. Khan, “Multi-objective offloading optimization in MEC and vehicular-fog systems: A distributed-TD3 approach,” IEEE Transactions on Intelligent Transportation Systems, 2024.

- [12] Y. C. Hu, M. Patel, D. Sabella, N. Sprecher, and V. Young, “Mobile edge computing: A key technology towards 5G,” ETSI white paper, vol. 11, no. 11, pp. 1–16, 2015.

- [13] B. Kar, K.-M. Shieh, Y.-C. Lai, Y.-D. Lin, and H.-W. Ferng, “QoS violation probability minimization in federating vehicular-fogs with cloud and edge systems,” IEEE Transactions on Vehicular Technology, vol. 70, no. 12, pp. 13270–13280, 2021.

- [14] I. Sorkhoh, D. Ebrahimi, S. Sharafeddine, and C. Assi, “Minimizing the age of information in intelligent transportation systems,” in Proc. 2020 IEEE 9th International Conference on Cloud Networking (CloudNet), Piscataway, NJ, USA, 2020.

- [15] N. Keshari, D. Singh, and A. K. Maurya, “A survey on vehicular fog computing: Current state-of-the-art and future directions,” Vehicular Communications, vol. 38, p. 100512, 2022.

- [16] Z. Chen, Q. Zeng, S. Chen, M. Li, M. Wang, Y. Jia, and T. Q. Quek, “Improving the timeliness of two-source status update systems in Internet of Vehicles with source-dedicated buffer: Resource allocation,” IEEE Transactions on Vehicular Technology, 2023.

- [17] C. Xu, H. H. Yang, X. Wang, and T. Q. S. Quek, “Optimizing information freshness in computing-enabled IoT networks,” IEEE Internet of Things Journal, vol. 7, no. 2, pp. 971–985, 2020.

- [18] Q. Kuang, J. Gong, X. Chen, and X. Ma, “Analysis on computation-intensive status update in mobile edge computing,” IEEE Transactions on Vehicular Technology, vol. 69, no. 4, pp. 4353–4366, 2020.

- [19] Y. Sun, E. Uysal-Biyikoglu, R. D. Yates, C. E. Koksal, and N. B. Shroff, “Update or wait: How to keep your data fresh,” IEEE Transactions on Information Theory, vol. 63, no. 11, pp. 7492–7508, 2017.

- [20] D. Deng, Z. Chen, H. H. Yang, N. Pappas, L. Hu, M. Wang, Y. Jia, and T. Q. Quek, “Information freshness in a dual monitoring system,” in IEEE Global Communications Conference (GLOBECOM), 2022, pp. 4977–4982.

- [21] Z. Wang, T. Schaul, M. Hessel, H. van Hasselt, M. Lanctot, and N. de Freitas, “Dueling network architectures for deep reinforcement learning,” in International Conference on Machine Learning (ICML). PMLR, 2016, pp. 1995–2003.

- [22] M. Abadi, P. Barham, J. Chen, Z. Chen, A. Davis, J. Dean, M. Devin, S. Ghemawat, G. Irving, M. Isard et al., “TensorFlow: A system for large-scale machine learning,” in Proc. USENIX Symposium on Operating Systems Design and Implementation (OSDI), Savannah, GA, USA, 2016, pp. 265–283.

- [23] R. D. Yates and S. K. Kaul, “The age of information: Real-time status updating by multiple sources,” IEEE Transactions on Information Theory, vol. 65, no. 3, pp. 1807–1827, 2018.

- [24] M. A. Abd-Elmagid and H. S. Dhillon, “Age of information in multi-source updating systems powered by energy harvesting,” IEEE Journal on Selected Areas in Information Theory, vol. 3, no. 1, pp. 98–112, 2022.

- [25] Y. Liu, Z. Chang, G. Min, and S. Mao, “Average age of information in wireless powered mobile edge computing system,” IEEE Wireless Communications Letters, vol. 11, no. 8, pp. 1585–1589, 2022.

- [26] Z. Li, L. Xiang, and X. Ge, “Age of information modeling and optimization for fast information dissemination in vehicular social networks,” IEEE Transactions on Vehicular Technology, vol. 71, no. 5, pp. 5445–5459, 2022.

- [27] Z. Mlika and S. Cherkaoui, “Deep deterministic policy gradient to minimize the age of information in cellular V2X communications,” IEEE Transactions on Intelligent Transportation Systems, vol. 23, no. 12, pp. 23597–23612, 2022.

- [28] S. Wang, M. Chen, Z. Yang, C. Yin, W. Saad, S. Cui, and H. V. Poor, “Distributed reinforcement learning for age of information minimization in real-time IoT systems,” IEEE Journal of Selected Topics in Signal Processing, vol. 16, no. 3, pp. 501–515, 2022.

- [29] L. Liu, K. Xiong, J. Cao, Y. Lu, P. Fan, and K. B. Letaief, “Average AoI minimization in UAV-assisted data collection with RF wireless power transfer: A deep reinforcement learning scheme,” IEEE Internet of Things Journal, vol. 9, no. 7, pp. 5216–5228, 2022.

- [30] X. Chen, C. Wu, T. Chen, H. Zhang, Z. Liu, Y. Zhang, and M. Bennis, “Age of information aware radio resource management in vehicular networks: A proactive deep reinforcement learning perspective,” IEEE Transactions on Wireless Communications, vol. 19, no. 4, pp. 2268–2281, 2020.

- [31] C. E. Shannon, “A mathematical theory of communication,” The Bell System Technical Journal, vol. 27, no. 3, pp. 379–423, 1948.

- [32] G. V. Weinberg and V. G. Glenny, “Optimal Rayleigh approximation of the K-distribution via the Kullback–Leibler divergence,” IEEE Signal Processing Letters, vol. 23, no. 8, pp. 1067–1070, 2016.

- [33] M. Harchol-Balter, Performance modeling and design of computer systems: queueing theory in action. Cambridge University Press, 2013.

- [34] J. E. Shore, “Information theoretic approximations for M/G/1 and G/G/1 queuing systems,” Acta Informatica, vol. 17, pp. 43–61, 1982.

- [35] N. Prabhu, “A bibliography of books and survey papers on queueing systems: Theory and applications,” Queueing Systems, vol. 2, no. 4, pp. 393–398, 1987.

- [36] G. Brockman, V. Cheung, L. Pettersson, J. Schneider, J. Schulman, J. Tang, and W. Zaremba, “OpenAI Gym,” arXiv preprint arXiv:1606.01540, 2016.

- [37] A. Raffin, A. Hill, A. Gleave, A. Kanervisto, M. Ernestus, and N. Dormann, “Stable-Baselines3: Reliable reinforcement learning implementations,” The Journal of Machine Learning Research, vol. 22, no. 1, pp. 12348–12355, 2021.

Appendix A Proof of Theorem 1

According to Equation (1), the time required for a packet to be transmitted from the th IoT device to the edge server is given as

(25)

where , denotes the size of the original packets. Since is random due to the random channel gain and decreases monotonically with the channel gain , using Equation (25), can be calculated as

(26a)

(26b)

(26c)

Here, is the function that inversely maps from to , given in Equation (26c). Consequently, we obtain the probability density function, Equation (LABEL:eq:CM26), and the cumulative distribution function, Equation (27), of , as

(27)
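The inverse-mapping argument of Equations (26a) to (27) can be checked numerically under an assumed model: T = S / (B log2(1 + m g)) with Rayleigh fading, so that the power gain g is exponential with unit mean and m is the mean SNR. Because T decreases in g, T <= t exactly when g >= phi(t) = (2^(S/(B t)) - 1)/m, so F_T(t) = exp(-phi(t)) and f_T(t) = -phi'(t) exp(-phi(t)). The surviving text does not state the fading distribution or these symbols, so both are assumptions; the sketch is not a restatement of the paper's Theorem 1.

```python
import numpy as np


def tx_time_cdf(t, bits, bandwidth, mean_snr):
    """P(T <= t) for T = bits / (B log2(1 + mean_snr * g)) with g ~ Exp(1) (assumed).

    T decreases in g, so T <= t  <=>  g >= phi(t), where
    phi(t) = (2**(bits / (B t)) - 1) / mean_snr and P(g >= x) = exp(-x).
    """
    t = np.asarray(t, dtype=float)
    phi = (np.exp2(bits / (bandwidth * t)) - 1.0) / mean_snr
    return np.exp(-phi)


def tx_time_pdf(t, bits, bandwidth, mean_snr):
    """Derivative of the CDF: f_T(t) = -phi'(t) * exp(-phi(t))."""
    t = np.asarray(t, dtype=float)
    x = bits / (bandwidth * t)
    phi = (np.exp2(x) - 1.0) / mean_snr
    dphi_dt = -np.log(2.0) * np.exp2(x) * x / (t * mean_snr)  # since dx/dt = -x / t
    return -dphi_dt * np.exp(-phi)


# Monte Carlo check with hypothetical values S = B = 1e5 and mean SNR 10.
rng = np.random.default_rng(1)
g = rng.exponential(1.0, 200_000)
samples = 1e5 / (1e5 * np.log2(1.0 + 10.0 * g))
for t in (0.3, 0.5, 1.0):
    print(t, np.mean(samples <= t), tx_time_cdf(t, 1e5, 1e5, 10.0))
```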

Appendix B Proof of Theorem 2

The proof of Theorem 2 is nearly identical to that of Theorem 1; the only difference is the packet size, because the original packets shrink after processing at the edge server. According to the transmission rate given in Equation (2), the expected transmission time of a packet from the edge server to the vehicular fog is given as

(30)

where denotes the size of the processed packet. The rest of the proof follows that of Theorem 1, and the expression is given as

(31a)

(31b)

where is the function inversely mapping from to , given in Equation (31b). Thus, we obtain the cumulative distribution function, Equation (33), and the probability density function, Equation (32), for , respectively:

(32)

Appendix C Proof of Theorem 3

Let , denote the average number of update packets in the processing queue, and , the remaining processing time in service. Then, for , the expectation of , is given as

(35)

From Equation (LABEL:eq:CM33), we find

(36)

where , which represents the load on the processing subsystem caused by packets arriving from IoT device 1. Similarly, for , we have

(37)

By substituting Equation (36) into Equation (LABEL:eq:CM35), we have

(38)

Then, based on the above analysis, we can calculate as

(39)

where is the remaining processing time. By the renewal-reward theorem [33], the time averages of several quantities can be determined from their averages over a single renewal cycle. The time-average excess is computed as follows:

(40)
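The renewal-reward step can be illustrated with the classic excess (residual) service time. If services run back to back, each service of length s contributes a triangle of area s^2/2 to the integral of the remaining time, so the long-run time average is E[S^2]/(2E[S]). For an M/G/1 queue that is idle a fraction 1 - rho of the time, the time-average remaining service time becomes rho E[S^2]/(2E[S]) = lambda E[S^2]/2 [33]. The sketch below checks the first identity by simulation; the service distributions and function names are illustrative.

```python
import numpy as np


def time_average_excess(service_times):
    """Exact time average of the remaining service time along one sample path.

    With services back to back, each service of length s contributes a
    triangle of area s**2 / 2 to the integral of the remaining time, so the
    renewal-reward theorem gives E[S**2] / (2 E[S]) in the long run.
    """
    s = np.asarray(service_times, dtype=float)
    return float(np.sum(s ** 2) / (2.0 * np.sum(s)))


def inspected_excess(service_times, n_obs, rng):
    """Monte Carlo check: inspect the path at uniform random times."""
    s = np.asarray(service_times, dtype=float)
    ends = np.cumsum(s)
    obs = rng.uniform(0.0, ends[-1], n_obs)
    idx = np.minimum(np.searchsorted(ends, obs, side="right"), len(s) - 1)
    return float(np.mean(ends[idx] - obs))  # time until the current service ends


rng = np.random.default_rng(0)
exp_s = rng.exponential(1.0, 200_000)   # E[S] = 1, E[S^2] = 2   -> excess 1
uni_s = rng.uniform(0.0, 2.0, 200_000)  # E[S] = 1, E[S^2] = 4/3 -> excess 2/3
```

The excess exceeds E[S]/2 whenever service times vary, because a random inspection is more likely to land inside a long service (the inspection paradox).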

Appendix D Proof of Theorem 4

To prove Theorem 4, we adopt the principle of maximum entropy (PME) to derive an approximation of the expectation , . The average waiting time of a typical packet in the transmission queue can be calculated as

(41)

where is the probability that an IoT device sends a packet to the transmission queue, and is the estimate of its waiting time. From Theorem 1, a typical packet spends the following amount of time in the transmission subsystem:

(42)

where is the estimated time in Equation (10). Then, applying Little's law [33] together with Equation (41) to the transmission subsystem, we obtain as

(43)

where , the estimated total number of packets transmitted by the transmission system, is given by Equation (LABEL:eq:CM40). Since this quantity is a random integer, the PME can be used to represent its probability mass function; the PME is a uniquely correct and self-consistent method of estimating probability distributions [34]. Let be the probability that the number of customers served in a busy period , and let be the moments of . In general, can be expressed as a function of and the service time. Then, the maximum entropy distribution is

(44)

(45)

where is the Lagrange multiplier associated with the th moment of the random variable . Applying Little's law to the transmission queue and combining Equations (LABEL:eq:CM42) and (45), we obtain the following equations:

(46)

where is the probability that the server is busy. Using this analysis, we have

(47)

In addition to this packet waiting or being served in the processing queue, the packets preceding it are sent sequentially into the transmission queue. Finally, by substituting Equations (41) and (47) into Equation (43), we obtain Theorem 4, given in Equation (14).
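The PME step in Equations (44) and (45) can be sketched in its simplest form. With only a first-moment constraint, the maximum-entropy pmf on {1, 2, ...} has the Gibbs form p_n proportional to exp(-beta n), where beta is the Lagrange multiplier, i.e. a geometric distribution; the paper's version may also constrain higher moments. The sketch finds beta numerically on a truncated support and can be compared against the closed form q = 1 - 1/m for a target mean m. The truncation level and function name are assumptions.

```python
import numpy as np


def max_entropy_pmf(target_mean, n_min=1, n_max=5000, iters=200):
    """Maximum-entropy pmf on {n_min, ..., n_max} subject to a mean constraint.

    The solution has the Gibbs form p_n = exp(-beta * n) / Z, where beta is
    the Lagrange multiplier of the first-moment constraint. The mean decreases
    monotonically in beta, so beta is found by bisection.
    """
    if not n_min < target_mean < (n_min + n_max) / 2:
        raise ValueError("target mean outside the feasible range for beta > 0")
    n = np.arange(n_min, n_max + 1)

    def pmf(beta):
        w = np.exp(-beta * (n - n_min))  # shift by n_min for numerical stability
        return w / w.sum()

    lo, hi = 1e-12, 50.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if pmf(mid) @ n > target_mean:
            lo = mid  # mean too large -> increase beta
        else:
            hi = mid
    beta = 0.5 * (lo + hi)
    return beta, n, pmf(beta)


beta, n, p = max_entropy_pmf(3.0)  # closed form: q = 2/3, beta = -ln(2/3)
```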