Optimal Parameterizing Manifolds for Anticipating Tipping Points and Higher-order Critical Transitions

Abstract

A general, variational approach to derive low-order reduced models from possibly non-autonomous systems, is presented. The approach is based on the concept of optimal parameterizing manifold (OPM) that substitutes the more classical notions of invariant or slow manifolds when breakdown of “slaving” occurs, i.e. when the unresolved variables cannot be expressed as an exact functional of the resolved ones anymore. The OPM provides, within a given class of parameterizations of the unresolved variables, the manifold that averages out optimally these variables as conditioned on the resolved ones.

The class of parameterizations retained here is that of continuous deformations of parameterizations rigorously valid near the onset of instability. These deformations are produced through integration of auxiliary backward-forward (BF) systems built from the model’s equations and lead to analytic formulas for parameterizations. In this modus operandi, the backward integration time is the key parameter to select per scale/variable to parameterize in order to derive the relevant parameterizations which are doomed to be no longer exact away from instability onset, due to breakdown of slaving typically encountered e.g. for chaotic regimes. The selection criterion is then made through data-informed minimization of a least-square parameterization defect. It is thus shown, through optimization of the backward integration time per scale/variable to parameterize, that skilled OPM reduced systems can be derived for predicting with accuracy higher-order critical transitions or catastrophic tipping phenomena, while training our parameterization formulas for regimes prior to these transitions take place.

We introduce a framework for model reduction to produce analytic formulas to parameterize the neglected variables. These parameterizations are built from the model’s equations in which only a scalar is left to calibration per scale/variable to parameterize. This calibration is accomplished through a data-informed minimization of a least-square parameterization defect. By their analytic fabric the resulting parameterizations benefit physical interpretability. Furthermore, our hybrid framework—analytic and data-informed–enables us to bypass the so-called extrapolation problem, known to be an important issue for purely data-driven machine-learned parameterizations. Here, by training our parameterizations prior transitions take place, we are able to perform accurate predictions of these transitions via the corresponding reduced systems.

I Introduction

This article is concerned with the efficient reduction of forced-dissipative systems of the form

| (1) |

in which denotes a Hilbert state space, possibly of finite dimension. The forcing term is considered to be either constant in time or time-dependent, while denotes a linear operator not necessarily self-adjoint 111but diagonalizable over the set of complex numbers . that include dissipative effects, and denotes a nonlinear operator which saturate the possible unstable growth due to unstable modes and may account for a loss of regularity (such as for nonlinear advection) in the infinite-dimensional setting. Such systems arise in broad range of applications; see e.g. [2, 3, 4, 5, 6, 7, 8, 9, 10, 11].

The framework adopted is that of [12] which allows for deriving analytic parameterizations of the neglected scales that represent efficiently the nonlinear interactions with the retained, resolved variables. The originality of the approach of [12] is that the parameterizations are hybrid in their nature in the sense that they are both model-adaptive, based on the dynamical equations, and data-informed by high-resolution simulations.

For a given system, the optimization of these parameterizations benefits thus from their analytical origin resulting into only a few parameters to learn over short-in-time training intervals, mainly one scalar parameter per scale to parameterize. Their analytic fabric contributes then to their physical interpretability compared to e.g. parameterizations that would be machine learned. The approach is applicable to deterministic systems, finite- or infinite-dimensional, and is based on the concept of optimal parameterizing manifold (OPM) that substitutes the more classical notion of slow or invariant manifolds when takes place a breakdown of “slaving” relationships between the resolved and unresolved variables [12], i.e. when the latter cannot be expressed as an exact functional of the formers anymore.

By construction, the OPM takes its origin in a variational approach. The OPM is the manifold that averages out optimally the neglected variables as conditioned on the the current state of the resolved ones [12, Theorem 4]. The OPM allows for computing approximations of the conditional expectation term arising in the Mori-Zwanzig approach to stochastic modeling of neglected variables [13, 14, 15, 16, 17]; see also [12, Theorem 5] and [18, 19].

The approach introduced in [12] to determine OPMs in practice consists at first deriving analytic parametric formulas that match rigorous leading approximations of unstable/center manifolds or slow manifolds near e.g. the onset of instability [12, Sec. 2], and then to perform a data-driven optimization of the manifold formulas’ parameters to handle regimes further away from that instability onset [12, Sec. 4]. In other words, efficient parameterizations away from the onset are obtained as continuous deformations of parameterizations near the onset; deformations that are optimized by minimization of cost functionals tailored upon the dynamics and measuring the defect of parameterization.

There, the optimization stage allows for alleviating the small denominator problems rooted in small spectral gaps and for improving the parameterization of small-energy but dynamically important variables. Thereby, relevant parameterizations are derived in regimes where constraining spectral gap or timescale separation conditions are responsible for the well-known failure of standard invariant/inertial or slow manifolds [20, 21, 22, 23, 24, 25, 12]. As a result, the OPM approach provides (i) a natural remedy to the excessive backscatter transfer of energy to the low modes classically encountered in turbulence [12, Sec. 6], and (ii) allows for deriving optimal models of the slow motion for fast-slow systems not necessarily in presence of timescale separation [18, 19]. Due to their optimal nature, OPMs allow also for providing accurate parameterizations of dynamically important small-energy variables; a well-known issue encountered in the closure of chaotic dynamics and related to (i).

This work examine the ability of the theory-guided and data-informed parameterization approach of [12] in deriving reduced systems able to predict higher-order transitions or catastrophic tipping phenomena, when the original, full system is possibly subject to time-dependent perturbations. From a data-driven perspective, this problem is tied to the so-called extrapolation problem, known for instance to be an important issue in machine learning, requiring more advanced methods such as e.g. transfer learning [26]. While the past few decades have witnessed a surge and advances of many data-driven reduced-order modeling methodologies [27, 28], the prediction of non-trivial dynamics for parameter regimes not included in the training dataset, remains a challenging task. Here, the OPM approach by its hybrid framework—analytic and data-informed—allows us to bypass this extrapolation problem at a minimal cost in terms of learning efforts as illustrated in Secns. IV and V. As shown below, the training of OPMs at parameter values prior the transitions take place, is sufficient to perform accurate predictions of these transitions via OPM reduced systems.

The remainder of this paper is organized as follows. We first survey in Sec. II the OPM approach and provide the general variational parameterization formulas for model reduction in presence of a time-dependent forcing. We then expand in Sec. III on the backward-forward (BF) systems method [29, 12] to derive these formulas, clarifying fundamental relationships with homological equations arising in normal forms and invariant manifold theories [30, 31, 12, 32]. Section III.3 completes this analysis by analytic versions of these formulas in view of applications. The ability of predicting noise-induced transitions and catastrophic tipping phenomena [33, 34] through OPM reduced systems is illustrated in Sec. IV for a system arising in the study of thermohaline circulation. Successes in predicting higher-order transitions such as period-doubling and chaos are reported in Sec. V for a Rayleigh-Bénard problem, and contrasted by comparison with the difficulties encountered by standard manifold parameterization approaches in Appendix D. We then summarize the findings of this article with some concluding remarks in Sec. VI.

II Variational Parameterizations for Model Reduction

We summarize in this Section the notion of variational parameterizations introduced in [12]. The state space is decomposed as the sum of the subspace, , of resolved variables (“coarse-scale”), and the subspace, , of unresolved variables (“small-scale”). In practice is spanned by the first few eigenmodes of with dominant real parts (e.g. unstable), and by the rest of the modes, typically stable.

In many applications, one is interested in deriving reliable reduced systems of Eq. (1) for wavenumbers smaller than a cutoff scale corresponding to a reduced state space of dimension . To do so, we are after parameterizations for the unresolved variables (i.e., the vector component of the solution to Eq. (1) in ) of the form

| (2) |

in which the (possibly infinite) vector formed by the scalars is a free parameter. As it will be apparent below, the goal is to get a small-scale parameterization which is not necessarily exact such that is approximated by in a least-square sense, where is the coarse-scale component of in . The vector is aimed to be a homotopic deformation parameter. The purpose is to have, as is varied, parameterizations that cover situations for which slaving relationships between the large and small scales hold ( as well as situations in which they are broken (, e.g. far from criticality. With the suitable , the goal is to dispose of a reduced system resolving only the “coarse-scales,” able to e.g. predict higher-order transitions caused by nonlinear interactions with the neglected, “small-scales.”

As strikingly shown in [12], meaningful parameterizations away from the instability onset can still be obtained by relying on bifurcation and center manifold theories. To accomplish this feat, the parameterizations, rigorously valid near that onset, need though to be revisited as suitably designed continuous deformations. The modus operandi to provide such continuous deformations is detailed in Sec. III below, based on the method of backward-forward systems introduced in [29, Chap. 4], in a stochastic context. In the case where with a homogeneous polynomial of degree ), this approach gives analytical formulas of parameterizations given by (see Sec. III.1)

| (3) | ||||

Here, the denote the spectral elements of which are ordered such that . In Eq. (LABEL:Eq_app2), and denote the projector onto span and span, respectively, while . This formula provides an explicit expression for in (2) given here by the integral term over multiplying in (LABEL:Eq_app2). The only free parameter per mode to parameterize is the backward integration time .

The vector , made of the , is then optimized by using data from the full model. To allow for a better flexibility, the optimization is executed mode by mode one wishes to parameterize. Thus, given a solution of the full model Eq. (1) over an interval of length , each parameterization of the solution amplitude carried by mode is optimized by minimizing—in the -variable—the following parameterization defect

| (4) |

for each . Here denotes the time-mean over while and denote the projections of onto the high-mode and the reduced state space , respectively.

Geometrically, the function given by (LABEL:Eq_app2) provides a time-dependent manifold such that:

| (5) |

where denotes the distance of (lying e.g. on the system’s global attractor) to the manifold .

Thus minimizing each (in the -variable) is a natural idea to enforce closeness of in a least-squares sense to the manifold . Panel A in Fig. 1 illustrates (5) for the -component: The optimal parameterization, , minimizing (4) is shown for a case where the dynamics is transverse to it (e.g. in absence of slaving) while provides the best parameterization in a least-squares sense.

In practice, the normalized parameterization defect, , is often used to compare different parameterizations. It is defined as . For instance, the flat manifold corresponding to no parameterization () of the neglected variables (Galerkin approximations) comes with for all , while a manifold corresponding to a perfect slaving relationship between the and ’s, comes with . When for all , the underlying manifold will be referred to as a parameterizing manifold (PM). Once the parameters are optimized by minimization of (4), the resulting manifold will be referred to as the the optimal parameterizing manifold (OPM) 222 Here, we make the abuse of language that the OPM is sought within the class of parameterizations obtained through the backward-forward systems of Sec. III. In general, OPMs are more general objects related to the conditional expectation associated with the projector ; see [12, Sec. 3.2]..

We conclude this section by a few words of practical considerations. As documented in [12, Secns. 5 and 6], the amount of training data required in order to reach a robust estimation of the optimal backward integration time , is often comparable to the dynamics’ time horizon that is necessary to resolve the decay of correlations for the high-mode amplitude . For multiscale systems, one thus often needs to dispose of a training dataset sufficiently large to resolve the slowest decay of temporal correlations of the scales to parameterize. On the other hand, by benefiting from their dynamical origin, i.e. through the model’s equations, the parameterizations formulas employed in this study (see Sec. III) allow often for reaching out in practice satisfactory OPM approximations when optimized over training intervals shorter than these characteristic timescales.

When these conditions are met, the minimization of the parameterization defect (4) is performed by a simple gradient-descent algorithm [12, Appendix]. There, the first local minimum that one reaches, corresponds often to the OPM; see Secns. IV and V below, and [12, Secns. 5 and 6]. In the rare occasions where the parameterization defect exhibits more than one local minimum and the corresponding local minima are close to each others, criteria involving colinearity between the features to parameterize and the parameterization itself can be designed to further assist the OPM selection. Section V.4 below illustrates this point with the notion of parameterization correlation.

III Variational Parameterizations: Explicit Formulas

III.1 Homological Equation and Backward-Forward Systems: Time-dependent Forcing

In this section, we recall from [12] and generalize to the case of time-dependent forcing, the theoretical underpinnings of the parameterization formula (LABEL:Eq_app2). First, observe that when and for all , the parameterization (LABEL:Eq_app2) is reduced (formally) to

| (6) |

where , with denoting the canonical projectors onto . Eq. (6) is known in invariant manifold theory as a Lyapunov-Perron integral [7]. It provides the leading-order approximation of the underlying invariant manifold function if a suitable spectral gap condition is satisfied and solves the homological equation:

| (7) |

see [2, Lemma 6.2.4] and [12, Theorem 1]. The later equation is a simplification of the invariance equation providing the invariant manifold when it exists; see [5, Sec. VIIA1] and [12, Sec. 2.2]. Thus, Lyapunov-Perron integrals such as (6) are intimately related to the homological equation, and the study of the latter informs us on the former, and in turn on the closed form of the underlying invariant manifold.

Another key element was pointed out in [29, Chap. 4], in the quest of getting new insights about invariant manifolds in general and more specifically concerned with the approximation of stochastic invariant manifolds of stochastic PDEs [36], along with the rigorous low-dimensional approximation of their dynamics [37]. It consists of the method of Backward-Forward (BF) systems, thereafter revealed in [12] as a key tool to produce parameterizations (based on model’s equations) that are relevant beyond the domain of applicability of invariant manifold theory, i.e. away the onset of instability.

To better understand this latter feature, let us recall first that BF systems allow for providing to Lyapunov-Perron integrals such as (6), a flow interpretation. In the case of (6), this BF system takes the form [12, Eq. (2.29)]:

| (8a) | |||

| (8b) | |||

| (8c) | |||

The Lyapunov-Perron integral is indeed recovered from this BF system. The solution to Eq. (8b) at is given by

| (9) |

which by taking the limit formally in (9) as , leads to given by (6). Thus, stretching to infinity in the course of integration of the BF system (8) allows for recovering rigorous approximations (under some non-resonance conditions [12, Theorem 1]) of well-known objects such as the center manifold; see also [2, Lemma 6.2.4] and [11, Appendix A.1]. The intimate relationships between Lyapunov-Perron integral, , and the homological Eq. (7) are hence supplemented by their relationships with the BF system (8).

By breaking down, mode by mode, the backward integration of Eq. (8b) (in the case of diagonal), one allows for a backward integration time per mode’s amplitude to parameterize, leading in turn to the following class of parameterizations

| (10) |

as indexed by . Clearly Eq. (LABEL:Eq_app2) is a generalization of Eq. (10).

We make precise now the similarities and differences between Eq. (LABEL:Eq_app2) and Eq. (10), at a deeper level as informed by their BF system representation. In that respect, we consider for the case of time-dependent forcing, the following BF system

| (11a) | |||

| (11b) | |||

| (11c) | |||

Note that compared to the BF system (8), the backward-forward integration is operated here on to account for the non-autonomous character of the forcing, making this way the corresponding parameterization time-dependent as summarized in Lemma III.1 below. In the presence of an autonomous forcing, operating the backward-forward integration of the BF system (8) on does not change the parameterization, which remains time-independent.

Since the BF system (11) is one-way coupled (with forcing (11b) but not reciprocally), if one assumes to be diagonal over , one can break down the forward equation Eq. (11b) into the equations,

| (12) |

allowing for flexibility in the choice of per mode whose amplitude is aimed at being parameterized by , for each .

After backward integration of Eq. (11a) providing , one obtains by forward integration of Eq. (12) that

| (13) | ||||

with . By making the change of variable , one gets

| (14) | ||||

with . Now by summing up these parameterizations over the modes for , we arrive at Eq. (LABEL:Eq_app2). Thus, our general parameterization formula (LABEL:Eq_app2) is derived by solving the BF system made of backward Eq. (11a) and the forward Eqns. (12).

We want to gain into interpretability of such parameterizations in the context of forced-dissipative systems such as Eq. (1). For this purpose, we clarify the fundamental equation satisfied by our parameterizations; the goal being here to characterize the analogue of (7) in this non-autonomous context. To simplify, we restrict to the case . The next Lemma provides the sought equation.

Lemma III.1

The proof of this Lemma is found in Appendix A.

As it will become apparent below, the -dependence of the terms in (I) is meant to control the small denominators that arise in presence of small spectral gap between the spectrum of and that leads typically to over-parameterization when standard invariant/inertial manifold theory is applied in such situations; see Remark III.1 below and [12, Sec. 6].

The terms in (II) are responsible for the presence of exogenous memory terms in the solution to the homological equation Eq. (16), i.e. in the parameterization ; see Eq. (32) below.

In the case is bounded 333One could also express a constraint on the behavior of as . For instance one may require that (18) , and , Eq. (16) reduces, in the (formal) limit , to:

| (19) |

Such a system of linear PDEs is known as the homological equation and arises in the theory of time-dependent normal forms [39, 31]; see also [40]. In the case where is -periodic, one can seek an approximate solution in a Fourier expansion form [41, Sec. 5.2] leading to useful insights. For instance, solutions to (19) when is quadratic, exist if the following non-resonance condition is satisfied

| (20) | ||||

(with and ) in the case is discrete, without Jordan block. Actually one can even prove in this case that

| (21) |

(where ) on the space of functions

| (22) |

in which and represent the components of the vector in onto and , respectively.

Thus, in view of Lemma III.1 and what precedes, the small-scale parameterizations (15) obtained by solving the BF system (11) over finite time intervals, can be conceptualized as perturbed solutions to the homological equation Eq. (19) arising in the computation of invariant and normal forms of non-autonomous dynamical systems [31, Sec. 2.2]. The perturbative terms brought by the -dependence play an essential role to cover situations beyond the domain of application of normal form and invariant manifold theories. As explained in Sec. III.3 and illustrated in Secns. IV-V below, these terms can be optimized to ensure skillful parameterizations for predicting, by reduced systems, higher-order transitions escaping the domain of validity of these theories.

III.2 Homological Equation and Backward-Forward Systems: Constant Forcing

In this Section, we clarify the conditions under which solutions to Eq. (11) exist in the asymptotic limit . We consider the case where is constant in time, to simplify. For the existence of Lyapunov-Perron integrals under the presence of more general time-dependent forcings we refer to [40]. To this end, we introduce, for in ,

| (23) |

where is the solution to Eq. (11a), namely

| (24) |

We have then the following result that we cast for the case of finite-dimensional ODE system (of dimension ) to simply.

Theorem III.1

Assume that is constant in time and that is diagonal under its eigenbasis . Assume furthermore that , and , i.e. contains only stable eigenmodes.

Finally, assume that the following non-resonance condition holds:

| (25) | ||||

with , where denotes the inner product on , denotes the leading-order term in the Taylor expansion of , and denotes the eigenvectors of the conjugate transpose of .

Then, the Lyapunov-Perron integral given by (23) is well defined and is a solution to the following homological equation

| (26) |

and provides the leading-order approximation of the invariant manifold function in the sense that

Moreover, given by (23) is the limit, as goes to infinity, of the solution to the BF system (11), when is constant in time. That is

| (27) |

where is the solution to Eq. (11b).

Conditions similar to (LABEL:Eq_NR) arise in the smooth linearization of dynamical systems near an equilibrium [42]. Here, condition (LABEL:Eq_NR) implies that the eigenvalues of the stable part satisfy a Sternberg condition of order [42] with respect to the eigenvalues associated with the modes spanning the reduced state space .

This theorem is essentially a consequence of [12, Theorems 1 and 2], in which condition (LABEL:Eq_NR) is a stronger version of that used for [12, Theorem 2]; see also [12, Remark 1 (iv)]. This condition is necessary and sufficient here for to be well defined. The convergence of is straightforward since by assumption and is constant. The derivation of (26) follows the same lines as the derivation for [12, Eq. (4.6)].

One can also generalize Theorem III.1 to the case when is time-dependent provided that satisfies suitable conditions to ensure given by (23) to be well defined and that the non-resonance condition (LABEL:Eq_NR) is suitably augmented. We leave the precise statement of such a generalization to a future work. For the applications considered in later sections, the forcing term is either a constant or with a sublinear growth. For such cases, is always well defined under the assumptions of Theorem III.1. We turn now to present the explicit formulas of the parameterizations based on the BF system (11).

III.3 Explicit Formulas for Variational Parameterizations

We provide in this Section, closed form formulas of parameterizations for forced dissipative such as Eq. (1). We consider first the case where is constant in time, and then deal with time-dependent forcing case.

III.3.1 The constant forcing case

To favor flexibility in applications, we consider scale-awareness of our parameterizations via BF systems, i.e. we consider parameterizations that are built up, mode by mode, from the integration, for each , of the following BF systems

| (28a) | |||

| (28b) | |||

| (28c) | |||

Recall that is aimed at parameterizing the solution amplitude carried by mode , when in Eq. (28c), whose parameterization defect is minimized by optimization of the backward integration time in (4). To dispose of explicit formulas for qualifying the dependence on , facilitates greatly this minimization.

In the case the nonlinearity is quadratic, denoted by , such formulas are easily accessible as (28) is integrable. It is actually integrable in the presence of higher-order terms, but we provide the details here for the quadratic case, leaving the details to the interested reader for extension.

Recall that we denote by the conjugate modes from the adjoint and that these modes satisfy the bi-orthogonality condition. That is if , and zero otherwise, where denotes the inner product endowing . Denoting by the solution to Eq. (28b), we find after calculations that

| (29) | ||||

in which and denote the components of the vector in onto and , and

| (30) |

while otherwise. Here , with the referring to the eigenvalues of . We refer to [12] and Appendix B for the expression of which accounts for the nonlinear interactions between the forcing components in . Here, the dependence on the in (29) is made apparent, as this dependence plays a key role in the prediction of the higher-order transitions; see applications to the Rayleigh-Bénard system of Sec. V.

Remark III.1

OPM balances small spectral gaps. Theorem III.1 teaches us that when not only defined in (30) converges towards a well defined quantity as but also the coefficients involved in (see (85)-(86)-(87)), in case of existence of invariant manifold. For parameter regimes where the latter fails to exist, some of the or the involved in (86)-(87) can become small, leading to the well known small spectral gap issue [25] manifested typically by over-parameterization of the ’s mode amplitude when the Lyapunov-Perron parameterization (23) is employed; see [12, Sec. 6]. The presence of the through the exponential terms in (30) and (86)-(87) allows for balancing these small spectral gaps after optimization and improve notably the parameterization and closure skills; see Sec. V and Appendix D.

Remark III.2

Formulas such as introduced in [43] in approximate inertial manifold (AIM) theory, and used earlier in atmospheric dynamics [44] in the context of non-normal modes initialization [45, 46, 47], are also tied to leading-order approximations of invariant manifolds since given by (6) satisfies

| (31) |

and the term is the main parameterization used in [43]; see [29, Lemma 4.1] and [11, Theorem A.1.1]. Adding higher-order terms can potentially lead to more accurate parameterizations and push the validity of the approximation to a larger neighborhood [48, 49], but in presence of small spectral gaps, such an operation may become also less successful. When such formulas arising in AIM theory are inserted within the proper data-informed variational approach such as done in [12, Sec. 4.4], their optimized version can also handle regimes in which spectral gap becomes small as demonstrated in [12, Sec. 6].

III.3.2 The time-dependent forcing case

Here, we assume that the neglected modes are subject to time-dependent forcing according to . Then by solving the BF systems made of Eq. (11a) and Eqns. (12) with (to account for possibly non-zero mean), we arrive at the following time-dependent parameterization of the th mode’s amplitude:

| (32) |

where lies in .

This formula of gives the solution to the homological equation Eq. (16) of Lemma III.1 in which therein is replaced here by , the projector onto the mode whose amplitude is parameterized by . Clearly, the terms in (I) are produced by the time-dependent forcing. They are functionals of the past and convey thus exogenous memory effects.

The integral term in (I) of Eq. (32) is of the form

| (33) |

By taking derivates on both sides of (33), we observe that satisfies

| (34) |

As a practical consequence, the computation of boils down to solving the scalar ODE (34) which can be done with high-accuracy numerically, when . One bypasses thus the computation of many quadratures (as evolves) that we would have to perform when relying on Eq. (33). Instead only one quadrature is required corresponding to the initialization of the ODE (34) at .

This latter computational aspect is important not only for simulation purposes when the corresponding OPM reduced system is ran online but also for training purposes, in the search of the optimal during the offline minimization stage of the parameterization defect.

If time-dependent forcing terms are present in the reduced state space , then the BF system (28) can still be solved analytically albeit leading to more involved integral terms than in (I) in the corresponding parameterization. This aspect will be detailed elsewhere.

III.3.3 OPM reduced systems

Either in the constant forcing or time-dependent forcing case, our OPM reduced system takes the form

| (35) |

where

| (36) |

where either is given by (32) in the time-dependent case, or by (29), otherwise. Whatever the case, the vector is formed by the minimizers of given by (4), for each . Note that in the case of the time-dependent parameterization (32), the OPM reduced system is run online by augmenting (36) with equations (34), depending on the modes that are forced.

We emphasize finally that from a data-driven perspective, the OPM reduced system benefits from its dynamical origin. By construction, only a scalar parameter is indeed optimized per mode to parameterize. This parameter benefits furthermore from a dynamical interpretation since it plays a key role in balancing the small spectral gaps known as to be the main issue in applications of invariant or inertial manifold theory in practice [25]; see Remark III.1 above.

IV Predicting Tipping Points

IV.1 The Stommel-Cessi model and tipping points

A simple model for oceanic circulation showing bistability is Stommel’s box model [50], where the ocean is represented by two boxes, a low-latitude box with temperature and salinity , and a high-latitude box with temperature and salinity ; see [51, Fig. 1]. The Stommel model can be viewed as the simplest “thermodynamic” version of the Atlantic Meridional Overturning Circulation (AMOC) [52, Chap. 6], a major ocean current system transporting warm surface waters toward the northern Atlantic that constitutes an important tipping point element of the climate system; see [53, 54].

Cessi in [51] proposed a variation of this model, based on the box model of [55], consisting of an ODE system describing the evolution of the differences and ; see [51, Eq. (2.3)]. The Cessi model trades the absolute functions involved in the original Stommel model by polynomial relations more prone to analysis. The dynamics of and are coupled via the density difference , approximated by the relation which induces an exchange of mass between the boxes to be given as according to Cessi’s formulation, where denotes the the Poiseuille transport coefficient, the volume of a box, and the diffusion timescale. The coefficient is a coefficient inversely proportional to the practical salinity unit, i.e. unit based on the properties of sea water conductivity while is in ; see [51]. In this simple model, relaxes at a rate to a reference temperature (with and ) in absence of coupling between the boxes.

Using the dimensionless variables , , and rescaling time by the diffusion timescale , the Cessi model can be written as [56]

| (37) | ||||

in which is proportional to the freshwater flux, , and ; see [51, Eq. (2.6)] and [56].

The nonlinear exchange of mass between the boxes is reflected by the nonlinear coupling terms in Eq. (LABEL:Cessi_model). These nonlinear terms lead to multiple equilibria in certain parts of the parameter space, in particular when is varied over a certain range , while and are fixed. One can even prove that Eq. (LABEL:Cessi_model) experiences two saddle-node bifurcations [57] leading to a typical S-shaped bifurcation diagram; see Fig. 2A.

S-shaped bifurcation diagrams occur in oceanic models that go well beyond Eq. (LABEL:Cessi_model) such as based on the hydrostatic primitive equations or Boussinesq equations; see e.g. [58, 59, 60, 61]. More generally, S-shaped bifurcation diagrams and more complex multiplicity diagrams are known to occur in a broad class of nonlinear problems [62, 63, 64] that include energy balance climate models [65, 66, 67, 68], population dynamics models [69, 70], vegetation pattern models [71, 72], combustion models [73, 74, 75, 76], and many other fields [77].

The very presence of such S-shaped bifurcation diagrams provides the skeleton for tipping point phenomena to take place when such models are subject to the appropriate stochastic disturbances and parameter drift, causing the system to “tip” or move away from one branch of attractors to another; see [33, 34]. From an observational viewpoint, the study of tipping points has gained a considerable attention due to their role in climate change as a few components of the climate system (e.g. Amazon forest, the AMOC) have been identified as candidates for experiencing such critical transitions if forced beyond the point of no return [54, 78, 53].

Whatever the context, tipping phenomena are due to a subtle interplay between nonlinearity, slow parameter drift, and fast disturbances. To better understand how these interactions cooperate to produce a tipping phenomenon could help improve the design of early warning signals. Although, we will not focus on this latter point per se in this study, we show below, on the Cessi model, that the OPM framework provides useful insights in that perspective, by demonstrating its ability of deriving reduced models to predict accurately the crossing of a tipping point; see Sec. IV.3.

IV.2 OPM results for a fixed value: Noise-induced transitions

We report in this section on the OPM framework skills to derive accurate reduced models to reproduce noise-induced transitions experienced by the Cessi model (LABEL:Cessi_model), when subject to fast disturbances, for a fixed value of . The training of the OPM operated here serves as a basis for the harder tipping point prediction problem dealt with in Sec. IV.3 below, when is allowed to drift slowly through the critical value at which the lower branch of steady states experiences a saddle-node bifurcation manifested by a turning point; see Fig. 2A again. Recall that denotes here the -value corresponding to the turning point experienced by the upper branch.

Reformulation of the Cessi model (LABEL:Cessi_model). The 1D OPM reduced equation is obtained as follows. First, we fix an arbitrary value of in that is denoted by and marked by the green vertical dashed line in Fig. 2A. The system (LABEL:Cessi_model) is then rewritten for the fluctuation variables and , where denotes the steady state of Eq. (LABEL:Cessi_model) in the lower branch when .

The resulting equation for is then of the form

| (38) |

with , , given by:

| (39) |

| (40) |

Since is a locally stable steady state, we add noise to the first component of to trigger transitions from the lower branch to the top branch in order to learn an OPM that can operate not only locally near but also when the dynamics is visiting the upper branch. This leads to

| (41) |

where and denotes a standard one-dimensional two-sided Brownian motion.

The eigenvalues of have negative real parts since is locally stable. We assume that the matrix has two distinct real eigenvalues, which turns out to be the case for a broad range of parameter regimes. As in Sec. III, the spectral elements of the matrix (resp. ) are denoted by (resp. ), for . These eigenmodes are normalized to satisfy , and are bi-orthogonal otherwise.

Let us introduce and , for . We also introduce . In the eigenbasis, Eq. (41) can be written then as

| (42) |

with

| (43) |

where denotes the component of .

Derivation of the OPM reduced equation. We can now use the formulas of Sec. III.3 to obtain an explicit variational parameterization of the most stable direction carrying the variable here, in terms of the least stable one, carrying .

For Eq. (42), both of the forcing terms appearing in the - and -equations of the corresponding BF system (28) are stochastic (and thus time-dependent). To simplify, we omit the stochastic forcing in Eq. (28a) and work with the OPM formula given by (32) which, as shown below, is sufficient for deriving an efficient reduced system.

Once the optimal is obtained by minimizing the parameterization defect given by (4), we obtain the following OPM reduced equation for :

| (46) | ||||

The online obtention of by simulation of the OPM reduced equation Eq. (46) allows us to get the following approximation of the variables from the original Cessi model (LABEL:Cessi_model):

| (47) |

after going back to the original variables.

Numerical results. The OPM reduced equation Eq. (46) is able to reproduce the dynamics of for a wide range of parameter regimes. We show in Fig. 2 a typical example of skills for parameter values of , , and as listed in Table 1. Since lies in (see Table 1), the Cessi model (LABEL:Cessi_model) admits three steady states among which two are locally stable (lower and upper branches) and the other one is unstable (middle branch); see Fig. 2A again. For this choice of , the steady state corresponding to the lower branch is with , , and the eigenvalues of are , and .

| 0.1 | 6.2 | 0.8513 | 0.8821 | 0.855 |

|---|

The offline trajectory used as input for training to find the optimal , is taken as driven by an arbitrary Brownian path from Eq. (42) for in the time interval . The resulting offline skills of the OPM, , are shown as the blue curve in Fig. 2B, while the original targeted time series to parameterize is shown in black. The optimal that minimizes the normalized parameterization defect turns out to be for the considered regime, as shown in Fig. 2C. One observes that the OPM captures, in average, the fluctuations of ; compare blue curve with black curve.

The skills of the corresponding OPM reduced equation (46) are shown in Fig. 2D, after converting back to the original -variable using (47). The results are shown out of sample, i.e. for another noise path and over a time interval different from the one used for training. The 1D reduced OPM reduced equation (46) is able to capture the multiple noise-induced transitions occurring across the two equilibria (marked by the cyan lines), after transforming back to the original -variable; compare red with black curves in Fig. 2D. Both the Cessi model (41) and the reduced OPM equation are numerically integrated using the Euler-Maruyama scheme with time step .

Note that we chose here the numerical value of to be closer to than to for making more challenging the tipping point prediction experiment conducted in Sec. IV.3 below. There, we indeed train the OPM for while aiming at predicting the tipping phenomenon as drifts slowly through (located thus further away) as time evolves.

IV.3 Predicting the crossing of tipping points

OPM reduced equation (46) in the original coordinates. For better interpretability, we first rewrite the OPM reduced equation (46) under the original coordinates in which the Cessi model (LABEL:Cessi_model) is formulated. For that purpose, we exploit the components of the eigenvectors of given by (39) (for ) that we write as and . Then, from (47) the expression of rewrites as

| (48) | ||||

where the second line is obtained by using the expression of given by (44) and the notation . It turns out that

| (49) |

We can now express as a function of , i.e. with given by

| (51) | ||||

as obtained by replacing with in Eq. (50). Replacing by the dummy variable , one observes that provides a time-dependent manifold that parameterizes the variable in the original Cessi model as (a time-dependent) polynomial function of radical functions in due to the expression of ; see (49).

We are now in position to derive the equation satisfied by aimed at approximating in the original variables. For that purpose, we first differentiate with respect to time the expression of given in (49) to obtain

| (52) |

On the other hand, by taking into account the expressions of in (48) and in (50), we observe that the -equation (46) can be re-written as

| (53) |

with

| (54) |

given by the right-hand side (RHS) of the Cessi model (LABEL:Cessi_model) with .

Now by equating the RHS of Eq. (53) with that of Eq. (52), we obtain, after substitution of by , that satisfies the following equation

| (55) | ||||

where

| (56) |

and is given by (cf. (45))

| (57) |

Eq. (55) is the OPM reduced equation for the -variable of the Cessis model, as rewritten in the original system of coordinates. This equation in its analytic formulation, mixes information about the two components of the RHS to Eq. (LABEL:Cessi_model) through the inner product involved in Eq. (55), while parameterizing the -variable by the time-dependent parametarization given by (51). While designed for away from the lower tipping point, we show next that the OPM reduced system built up this way demonstrates a remarkable ability in predicting this tipping point when is allowed to drift away from in the course of time.

Prediction results. Thus, we aim at predicting by our OPM reduced model, the tipping phenomenon experienced by the full model when is subject to a slow drift in time via

| (58) |

where is a small parameter, and is some fixed value of such that , with denoting the parameter value of the turning point of the lower branch of equilibria; see Fig. 2A.

The original Cessi model is forced as follows

| (59) | ||||

Introducing, , and writing as , we consider the following forced version of the OPM reduced equation (55):

| (60) | ||||

In this equation, recall that the parameterization, has been trained for ; see above.

We set now , , and , while and are kept as in Table 1. Note that compared to the results shown in Fig. 2, the noise level is here substantially reduced to focus on the tipping phenomenon to occur in the vicinity of the turning point . The goal is thus to compare the behavior of solving the forced Cessi model (LABEL:Cessi_model_v3) with that of the solution of the (forced) OPM reduced equation (60), as crosses the critical value .

The results are shown in Fig. 3. The red curve corresponds to the solution of the OPM reduced equation (60), and the black curve to the -component of the forced Cessi model (LABEL:Cessi_model_v3). Our baseline is the slow manifold parameterization which consists of simply parameterizing as in Eq. (60) which provides, for sufficiently small due to the Tikhonov’s theorem [79, 56], good reduction skills from the “slow” reduced equation,

| (61) |

Here, the value lies beyond the domain of applicability of the Tikhonov theorem, and as a result the slow reduced Eq. (61) fails in predicting any tipping phenomenon; see yellow curve in Fig. 3. In contrast, the OPM reduced equation (60) demonstrates a striking success in predicting the tipping phenomenon to the upper branch, in spite of being trained away from the targeted turning point, for as marked by the (green) vertical dash line. The only caveat is the overshoot observed in the magnitude of the predicted random steady state in the upper branch.

The striking prediction result of Fig. 3 are shown for one noise realization. We explore now the accuracy in predicting such a tipping phenomenon by the OPM reduced equation (60) when the noise realization is varied.

To do so, we estimate the statistical distribution of the -value at which tipping takes place, denoted by , for both the Cessi model (LABEL:Cessi_model_v3) and Eq. (60). These distributions are estimated as follows. We denote by the -component of the steady state at which the saddle-node bifurcation occurs in the lower-branch for . As noise is turned on and evolves slowly through , the solution path to Eq. (LABEL:Cessi_model_v3) increases in average while fluctuating around the -value (due to small noise) before shooting off to the upper branch as one nears . During this process, there is a time instant, denoted by , such that for all , stay above . We denote the -value corresponding to as according to (58). Whatever the noise realization, the solution to the OPM reduced equation (60) experiences the same phenomenon leading thus to its own for a particular noise realization. As shown by the histograms in Fig. 4, the distribution of predicted by the OPM reduced equation (60) (blue curve) follows closely that computed from the full system (LABEL:Cessi_model_v3) (orange bars). Thus, not only the OPM reduced equation (60) is qualitatively able to reproduce the passage through a tipping point (as shown in Fig. 3), but is also able to accurately predicting the critical -value (or time-instant) at which the tipping phenomenon takes place with an overall success rate over 99%.

V Predicting Higher-Order Critical Transitions

V.1 Problem formulation

In this section, we aim at applying our variational parameterization framework to the the following Rayleigh-Bénard (RB) system from [80]:

| (62) | ||||

Here denotes the Prandtl number, and denotes the reduced Rayleigh number defined to be the ratio between the Rayleigh number and its critical value at which the convection sets in. The coefficients are given by

with corresponding to the critical horizontal wavenumber of the square convection cell. This system is obtained as a Fourier truncation of hydrodynamic equations describing Rayleigh-Bénard (RB) convection in a 3D box [80]. The Prandtl number is chosen to be in the experiments performed below, which is the same as used in [80]. The reduced Rayleigh number is varied according to these experiments; see Table 2.

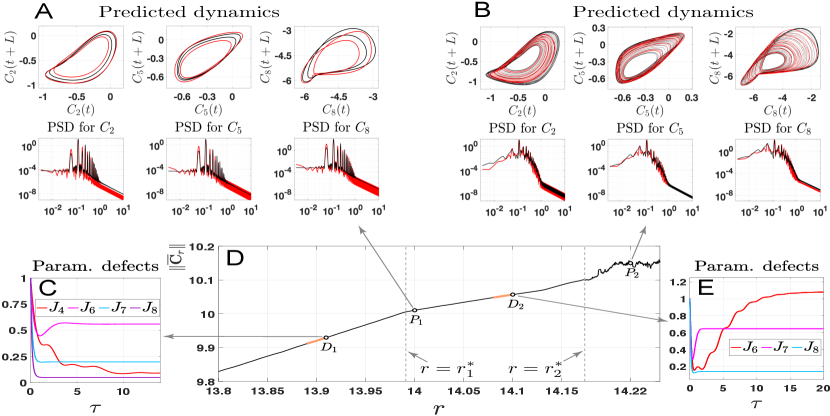

Our goal is to assess the ability of our variational parameterization framework for predicting higher-order transitions arising in (62) by training the OPM only with data prior to the transition we aim at predicting. The Rayleigh number is our control parameter. As it increases, Eq. (LABEL:Eq_RBC_eigenbasis) undergoes several critical transitions/bifurcations, leading to chaos via a period-doubling cascade [80]. We focus on the prediction of two transition scenarios beyond Hopf bifurcation: (I) the period-doubling bifurcation, and (II) the transition from a period-doubling regime to chaos. Noteworthy are the failures that standard invariant manifold theory or AIM encounter in the prediction of these transitions, here; see Appendix D.

To do so, we re-write Eq. (62) into the following compact form:

| (63) |

where , and is the matrix given by

The nonlinear term is defined as follows. For any and in , we have

| (64) |

We now re-write Eq. (62) in terms of fluctuations with respect to its mean state. In that respect, we subtract from its mean state , which is estimated, in practice, from simulation of Eq. (62) over a typical characteristic time of the dynamics that resolves e.g. decay of correlations (when the dynamics is chaotic) or a period (when the dynamics is periodic); see also [12]. The corresponding ODE system for the fluctuation variable, , is then given by:

| (65) |

with

| (66) |

Denote the spectral elements of the matrix by and those of by . Here the eigenmodes are normalized so that if , and otherwise. By taking the expansion of in terms of the eigenelements of , we get

| (67) |

Assuming that is diagonalizable in and by rewriting (65) in the variable , we obtain that

| (68) | ||||

where and

| (69) |

Like the , the eigenmodes also depend on the mean state , explaining the dependence of the interaction coefficients . From now on, we work with Eq. (LABEL:Eq_RBC_eigenbasis).

V.2 Predicting Higher-order Transitions via OPM: Method

Thus, we aim at predicting for Eq. (LABEL:Eq_RBC_eigenbasis) two types of transition: (I) the period-doubling bifurcation, and (II) the transition from period-doubling to chaos, referred to as Experiments I and II, respectively. For each of these experiments, we are given a reduced state space with as indicated in Table 2, depending on the parameter regime. The eignemodes are here ranked by the real part of the corresponding eigenvalues, corresponding to the eigenvalue with the largest real part. The goal is to derive an OPM reduced system (35) able to predict such transitions. The challenge lies in the optimization stage of the OPM as due to the prediction constraint, one is prevented to use data from the full model at the parameter which one desires to predict the dynamics. Only data prior to the critical value at which the concerned transition, either period-doubling or chaos, takes place, are here allowed.

| Experiment I (period-doubling) | 3 | |||

| Experiment II (chaos) | 5 |

Due to the dependence on of , as well as of the interaction coefficients and forcing terms , a particular attention to this dependence has to be paid. Indeed, recall that the parameterizations given by the explicit formula (29), depend heavily here on the spectral elements of linearized operator at , and thus does its optimization. Since the goal is to eventually dispose of an OPM reduced system able to predict the dynamical behavior at , one cannot rely on data from the full model at and thus one cannot exploit in particular the knowledge of the mean state for . We are thus forced to estimate the latter for our purpose.

To do so, we estimate the dependence on of on an interval such that (see Table 2), and use this estimation to extrapolate the value of at , that we denote by . For both experiments, it turned out that a linear extrapolation is sufficient. This extrapolated value allows us to compute the spectral elements of the operator given by (66) in which replaces the genuine mean state . Obviously, the better is the approximation of by , the better the approximation of by along with the corresponding eigenspace.

We turn now to the training of the OPM exploiting these surrogate spectral elements, that will be used for predicting the dynamical behavior at . To avoid any looking ahead whatsoever, we allow ourselves to only use the data of the full model (LABEL:Eq_RBC_eigenbasis) for and minimize the following parameterization defect

| (70) |

for each relevant , in which (resp. ) denotes the full model solution’s amplitude in (resp. ) at . The normalized defect is then given by . To compute these defects we proceed as follows. We first use the spectral elements at of the linearized matrix at the extrapolated mean state to form the coefficients , and involved in the expression (29) of . For each , the defect is then fed by the input data at (i.e. and ), and evaluated for that varies in some sufficiently large interval to capture global minima. The results are shown in Fig. 5C and Fig. 5E for Experiment I and II, respectively. Note that the evaluation of for a range of is used here only for visualization purpose as it is not needed to find a minimum. A simple gradient-descent algorithm can be indeed used for the latter; see [12, Appendix]. We denote by the resulting optimal value of per variable to parameterize.

After the are found for each , the OPM reduced system used to predict the dynamics at takes the form:

| (71) | ||||

with , , and

We can then summarize our approach for predicting higher-order transitions via OPM reduced systems as follows:

-

Step 1.

Extrapolation of at (the parameter at which one desires to predict the dynamics).

-

Step 2.

Computation of the spectral elements of the linearized operator at , by replacing by in (66).

-

Step 3.

Training of the OPM using the spectral elements of Step 2 and data of the full model for .

-

Step 4.

Run the OPM reduced system (71) to predict the dynamics at .

We mention that the minimization of certain parameterization defects may require a special care such as for in Experiment II. Due to the presence of nearby local minima (see red curve in Fig. 5E), the analysis of the optimal value of to select for calibrating an optimal parameterization of is more subtle and exploits actually a complementary metric known as the parameterization correlation; see Sec. V.4.

Obviously, the accuracy in approximating the genuine mean state by is a determining factor in the transition prediction procedure described in Steps 1-4 above. Here, the relative error in approximating () is for Experiment I, and for Experiment II. For the latter case, although the parameter dependence is rough beyond (see Panel D), there is no brutal local variations of relative large magnitude as identified for other nonlinear systems [81, 18]. Systems for which a linear response to parameter variation is a valid assumption [82, 83], thus constitute seemingly a favorable ground to apply the proposed transition prediction procedure.

V.3 Prediction of Higher-order Transitions by OPM reduced systems

As summarized in Figs. 5A and B, for each prediction experiment of Table 2, the OPM reduced system (71) not only successfully predicts the occurrence of the targeted transition at either and ( and in Fig. 5D), but also approximates with good accuracy the embedded (global) attractor as well as key statistics of the dynamics such as the power spectral density (PSD). Note that for Experiment I (period-doubling) we choose the reduced dimension to be and to be for Experiment II (transition to chaos). For Experiment I the global minimizers of given by (70) are retained to build up the corresponding OPM. In Experiment II, all the global minimizer are also retained except for from which the second minimizer is used for the construction of the OPM.

Once the OPM is built, an approximation of is obtained from the solution to the corresponding OPM reduced system (71) according to

| (72) | ||||

with given by (29) whose spectral entries are given by the spectral elements of given by (66) for in which is replaced by . The and denote the eigenvectors of this matrix and here.

As baseline, the skills of the OPM reduced system (71) are compared to those from reduced systems when parameterizations from invariant manifold theory such as (6) or from inertial manifold theory such as (31), are employed; see [12, Theorem 2] and Remark III.2. The details and results are discussed in Appendix D. The main message is that compared to the OPM reduced system (71), the reduced systems based on these traditional inertial/inertial manifold theories fail in predicting the correct dynamics. A main player in this failure lies in the inability of these standard parameterizations to accurately approximate small-energy variables that are dynamically important; see Appendix D. The OPM parameterization by its variational nature enables to fix this over-parameterization issue here.

V.4 Distinguishing between close local minima: Parameterization correlation analysis

Since the ’s coefficients depend nonlinearly on (see Eqns. (29)-(30) and (85)), the parameterization defects, , defined in (70) are also highly nonlinear, and may not be convex in the -variable as shown for certain variables in Panels C and E of Fig. 5. A minimization algorithm to reach most often its global minimum is nevertheless detailed in [12, Appendix A], and is not limited to the RB system analyzed here. In certain rare occasions, a local minimum may be an acceptable halting point with an online performance slightly improved compared to that of the global minimum. In such a case, one discriminates between a local minimum and the global one by typically inspecting another metric offline: the correlation angle that measures essentially the collinearity between the actual high-mode dynamics and its parameterization. Here, such a situation occurs for Experiment II; see in Fig. 5E.

Following [12, Sec. 3.1.2], we recall thus a simple criterion to assist the selection of an OPM when there are more than one local minimum displayed by the parameterization defect and the corresponding local minimal values are close to each other.

Given a parameterization that is not trivial (i.e. ), we define the parameterization correlation as,

| (73) |

It provides a measure of collinearity between the unresolved variable and its parameterization , as time evolves. It serves thus as a complimentary, more geometric way to measure the phase coherence between the resolved and unresolved variables than with the parameterization defect defined in (4). The closer to unity is for a given parameterization, the better the phase coherence between the resolved and unresolved variables is expected to hold.

We illustrate this point on shown in Fig. 5E. The defect exhibits two close minima corresponding to and , occurring respectively at and . Thus, the parameterization defect alone does not help provide a satisfactory discriminatory diagnosis between these two minimizers. To the contrary, the parameterization correlation shown in Fig. 6 allows for diagnosing more clearly that the OPM associated with the local minimizer has a neat advantage compared to that associated with the global minimizer.

As explained in [12, Sec. 3.1.2], this parameterization correlation criterion is also useful to assist the selection of the dimension of the reduced state space. Indeed, once an OPM has been determined, the dimension of the reduced system should be chosen so that the parameterization correlation is sufficiently close to unity as measured for instance in the -norm over the training time window. The basic idea is that one should not only parameterize properly the statistical effects of the neglected variables but also avoid to lose their phase relationships with the unresolved variables [84, 12]. For instance, for predicting the transition to chaos, we observe that an OPM comes with a parameterization correlation much closer to unity in the case than (not shown).

VI Concluding Remarks

In this article, we have described a general framework, based on the the OPM approach introduced in [12] for autonomous systems, to derive effective (OPM) reduced systems able to either predict higher-order transitions caused by parameter regime shift, or tipping phenomenas caused by model’s stochastic disturbances and slow parameter drift. In each case, the OPMs are sought as continuous deformations of classical invariant manifolds to handle parameter regimes away from bifurcation onsets while keeping the reduced state space relatively low-dimensional. The underlying OPM parameterizations are derived analytically from model’s equations, constructed as explicit solutions to auxiliary BF systems such as Eq. (11), whose backward integration time is optimized per unresolved mode. This optimization involves the minimization of natural cost functionals tailored upon the full dynamics at parameter values prior the transitions take place; see (4). In each case—either for prediction of higher-order transitions or tipping points—we presented compelling evidences of success of the OPM approach to address such extrapolation problems typically difficult to handle for data-driven reduction methods.

As reviewed in Sec. III, the BF systems such as Eq. (11) allow for drawing insightful relationships with the approximation theory of classical invariant/inertial manifolds (see [12, Sec. 2] and [31, 40, 85]) when the backward integration time in Eq. (11) is sent to ; see Theorem III.1. Once the invariant/inertial manifolds fail to provide legitimate parameterizations, the optimization of the backward integration time may lead to local minima at finite of the parameterization defect which if sufficiently small, give access to skillful parameterizations to predict e.g. higher-order transitions. This way, as illustrated in Sec. V (Experiment II), the OPM approach allows us to bypass the well-known stumbling blocks tied to the presence of small spectral gaps such as encountered in inertial manifold theory [25], and tied to the accurate parameterization of dynamically important small-energy variables; see also Remark III.1, Appendix D and [12, Sec. 6].

To understand the dynamical mechanisms at play behind a tipping phenomenon and to predict its occurrence are of uttermost importance, but this task is hindered by the often high dimensionality nature of the underlying physical system. Devising accurate, and analytic reduced models able to predict tipping phenomenon from complex system is thus of prime importance to serve understanding. The OPM results of Sec. IV indicate great promises for the OPM approach to tackle this important task for more complex systems. Other reduction methods to handle the prediction of tipping point dynamics have been proposed recently in the literature but mainly for mutualistic networks [86, 87, 88]. The OPM formulas presented here are not limited to this kind of networks and can be directly applied to a broad class of spatially extended systems governed by (stochastic) PDEs or to nonlinear time-delay systems by adopting the framework of [89] for the latter to devise center manifold parameterizations and their OPM generalization; see [90, 91].

The OPM approach could be also informative to design for such systems nearing a tipping point, early warning signal (EWS) indicators from multivariate time series. Extension of EWS techniques to multivariate data is an active field of research with methods ranging from empirical orthogonal functions reduction [92] to methods exploiting the system’s Jacobian matrix and relationships with the cross-correlation matrix [93] or exploiting the detection of spatial location of “hotspots” of stability loss [94, 95]. By its nonlinear modus operandi for reduction, the OPM parameterizations identifies subgroup of essential variables to characterize a tipping phenomenon which in turn could be very useful to construct the relevant observables of the system for the design of useful EWS indicators.

Thus, since the examples of Secns. IV and V are representative of more general problems of prime importance, the successes demonstrated by the OPM approach on these examples invite for further investigations for more complex systems.

Similarly, we would like to point out another important aspect in that perspective. For spatially extended systems, the modes involve typically wavenumbers that can help interpret certain primary physical patterns. Formulas such as (29) or (32) arising in OPM parameterizations involve a rebalancing of the interactions among such modes by means of the coefficients ; see Remark III.1. Thus, an OPM parameterization when skillful to derive efficient systems may provide useful insights to explain emergent patterns due to certain nonlinear interactions of wavenumbers, for regimes beyond the instability onset. For more complex systems than dealt with here, it is known already than near the instability onset of primary bifurcations, center manifold theory provides such physical insights; see e.g. [96, 97, 98, 99, 100, 101, 102].

By the approach proposed here relying on OPMs obtained as continuous deformations of invariant/center manifolds, one can thus track the interactions that survive or emerge between certain wavenumbers as one progresses through higher-order transitions. Such insights bring new elements to potentially explain the low-frequency variability of recurrent large-scale patterns typically observed e.g. in oceanic models [103, 104], offering at least new lines of thoughts to the dynamical system approach proposed in previous studies [105, 106, 107, 104]. In that perspective, the analytic expression (85) of terms such as in (29) bring also new elements to break down the nonlinear interactions between the forcing of certain modes compared to others. Formulas such as (85) extend to the case of time-dependent or stochastic forcing of the coarse-scale modes whose exact expression will be communicated elsewhere. As for the case of noise-induced transitions reported in Fig. 2D, these generalized formulas are expected to provide in particular new insights to regime shifts not involving crossing a bifurcation point but tied to other mechanisms such as slow-fast cyclic transitions or stochastic resonances [108].

Yet, another important practical aspect that deserve in-depth investigation is related to the robustness of the OPM approach subject to model errors. Such errors can arise from multiple sources, including e.g. imperfection of the originally utilized high-fidelity model in capturing the true physics and noise contamination of the solution data used to train the OPM. For the examples of Secns. IV and V, since we train the OPM in a parameter regime prior to the occurrence of the concerned transitions, the utilized training data contain, de facto, model errors. The reported results in these sections show that the OPM is robust in providing effective reduced models subject to such model errors. Nevertheless, it would provide useful insights if one can systematically quantify uncertainties of the OPM reduced models subject to various types of model errors. In that respect, several approaches could be beneficial for stochastic systems such as those based on the information-theoretic framework of [109] or the perturbation theory of ergodic Markov chains and linear response theory [110], as well as methods based on data-assimilation techniques [111].

Acknowledgements.

We are grateful to the reviewers for their constructive comments, which helped enrich the discussion and presentation. This work has been partially supported by the Office of Naval Research (ONR) Multidisciplinary University Research Initiative (MURI) grant N00014-20-1-2023, by the National Science Foundation grant DMS-2108856, and by the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation program (grant agreement no. 810370). We also acknowledge the computational resources provided by Advanced Research Computing at Virginia Tech for the results shown in Fig. 4.Data Availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Appendix A Proof of Lemma III.1

We introduce the notations

| (74) | ||||

Appendix B The low-order term in the OPM parameterization

In the special case where in (28) is time-independent, by introducing and , the -term in the parameterization (29) takes the form

| (85) | ||||

with

| (86) |

and

| (87) |

For the application to the Rayleigh-Bénard system considered in Sec. V, the forcing term is produced after rewriting the original unforced system in terms of the fluctuation variable with respect to the mean state. For this problem, the eigenvalues and the interaction coefficients both depend on the mean state.

Appendix C Numerical aspects

In the numerical experiments of Sec. V, the full RB system (62) as well as the OPM reduced system (71) are numerically integrated using a standard fourth-order Runge–Kutta (RK4) method with a time-step size taken to be . Note that since the eigenvalues of are typically complex-valued, some care is needed when integrating (71). Indeed, since complex eigenmodes of must appear in complex conjugate pairs, the corresponding components of in (71) form also complex conjugate pairs. To prevent round-off errors that may disrupt the underlying complex conjugacy, after each RK4 time step, we enforce complex conjugacy as follows. Assuming that and form a conjugate pairs and that after an RK4 step, and () where and are real-valued with and , we redefine and to be respectively given by and . For each component corresponding to a real eigenmode, after each RK4 time step, we simply redefine it to be its real part and ignore the small imaginary part that may also arise from round-off errors. The same numerical procedure is adopted to integrate the reduced systems obtained from invariant manifold reduction as well as the FMT parameterization used in Appendix D below.

Appendix D Failure from standard manifold parameterizations

In this section we briefly report on the reduction skills for the chaotic case of Sec. V.3 as achieved through application of the invariant manifold theory or standard formulas used in inertial manifold theory. Invariant manifold theory is usually applied near a steady state. To work within a favorable ground for these theories, we first chose the steady state that is closest to the mean state at the parameter value we want to approximate the chaotic dynamics via a reduced system. This way the chaotic dynamics is located near this steady state.

To derive the invariant manifold (IM) reduced system, we also re-write the RB system (62) in the fluctuation variable, but this time using the steady state , namely for the . The analogue of Eq. (65) is then projected onto the eigenmodes of linearized part

| (88) |

to obtain:

| (89) |

Here the denote the spectral elements of , while the interaction coefficients are given as with denoting the eigenvector of associated with . Note that unlike (LABEL:Eq_RBC_eigenbasis), there is no constant forcing term on the RHS of (89) since as is a steady state.

Here also, the reduced state space with as indicated in Table 2, for the chaotic regime. The local IM associated with has its components approximated by [12, Theorem 2]:

| (90) |

when for and .

The corresponding IM reduced system takes the form given by (71) in which the components, , of the OPM therein are replaced by the given by (90) for . Unlike the OPM reduced system (71), here we use the true eigenvalues ’s at the parameter at which the dynamics is chaotic ( in the notations of Fig. 5).

.

To the solution to this IM reduced system one then forms the approximation of given by:

| (91) |

Similarly, we also test the performance of the reduced system when the local IM (90) is replaced by the following FMT parameterization (see (31) in Remark III.2):

| (92) |

Formulas such as (92) have been used in earlier studies relying on inertial manifolds for predicting higher-order bifurcations [49].

The predicted orbits obtained from reduced systems built either on the IM parameterization (90) or the FMT one (92) are shown in the top row of Fig. 7 as blue and magenta curves, respectively. Both reduced systems lead to periodic dynamics and thus fail dramatically in predicting the chaotic dynamics. The small spectral gap issue mentioned in Remark III.1 plays a role in explaining this failure but not only. Another fundamental issue for the closure of chaotic dynamics lies in the difficulty to provide accurate parameterization of small-energy but dynamically important variables; see e.g. [12, Sec. 6]. This issue is encountered here, as some of variables to parameterize for Experiment II contain only from to of the total energy.

Replacing by the genuine mean state does not fix this issue as some of variables to parameterize contain still a small fraction of the total energy, from to . The IM parameterization (90) and the FMT one (92) whose coefficients are now determined from the spectral elements of in which replaces in (88), still fail in parameterizing accurately such small-energy variables; see bottom row of Fig. 7444Note that here the FMT parameterization accounts for the forcing term (see [12, Eq. (4.40)]) produced by fluctuations calculated with respect to . By comparison, the OPM succeeds in parameterizing accurately these small-energy variables. For Experiment I, inaccurate predictions are also observed from the reduced systems built either on the IM parameterization (90) or the FMT one (92) (not shown).

References

- Note [1] But diagonalizable over the set of complex numbers .

- Henry [1981] D. Henry, Geometric Theory of Semilinear Parabolic Equations, Lecture Notes in Mathematics, Vol. 840 (Springer-Verlag, Berlin, 1981).

- Ghil and Childress [1987] M. Ghil and S. Childress, Topics in Geophysical Fluid Dynamics: Atmospheric Dynamics, Dynamo Theory and Climate Dynamics (Springer-Verlag, New York/Berlin, 1987).

- Hale [1988] J. K. Hale, Asymptotic Behavior of Dissipative Systems, Mathematical Surveys and Monographs, Vol. 25 (American Mathematical Society, Providence, RI, 1988) pp. x+198 pp.

- Crawford [1991] J. D. Crawford, Reviews of Modern Physics 63, 991 (1991).

- Cross and Hohenberg [1993] M. C. Cross and P. C. Hohenberg, Rev. Mod. Phys. 65, 851 (1993).

- Sell and You [2002] G. Sell and Y. You, Dynamics of Evolutionary Equations, Applied Mathematical Sciences, Vol. 143 (Springer-Verlag, New York, 2002) pp. xiv+670.

- Ma and Wang [2005] T. Ma and S. Wang, Bifurcation Theory and Applications, World Scientific Series on Nonlinear Science. Series A: Monographs and Treatises, Vol. 53 (World Scientific Publishing Co. Pte. Ltd., Hackensack, NJ, 2005).

- Kloeden and Rasmussen [2011] P. E. Kloeden and M. Rasmussen, Nonautonomous Dynamical Systems, Mathematical Surveys and Monographs, Vol. 176 (American Mathematical Society, Providence, RI, 2011).

- Temam [2012] R. Temam, Infinite-dimensional Dynamical Systems in Mechanics and Physics, Vol. 68 (Springer Science & Business Media, 2012).

- Ma and Wang [2014] T. Ma and S. Wang, Phase Transition Dynamics (Springer, 2014).

- Chekroun et al. [2020a] M. D. Chekroun, H. Liu, and J. C. McWilliams, J. Stat. Phys. 179, 1073 (2020a).

- Chorin et al. [2002] A. J. Chorin, O. H. Hald, and R. Kupferman, Physica D 166, 239 (2002).

- Givon et al. [2004] D. Givon, R. Kupferman, and A. Stuart, Nonlinearity 17, R55 (2004).

- Gottwald [2010] G. Gottwald, Gazette of the Australian Mathematical Society 37, 319 (2010).

- Gottwald et al. [2017] G. A. Gottwald, D. T. Crommelin, and C. L. E. Franzke, “Stochastic climate theory,” (Cambridge University Press, 2017) pp. 209–240.

- Lucarini and Chekroun [2023] V. Lucarini and M. Chekroun, arXiv:2303.12009 (2023).

- Chekroun et al. [2017] M. D. Chekroun, H. Liu, and J. C. McWilliams, Computers & Fluids 151, 3 (2017).

- Chekroun et al. [2021] M. D. Chekroun, H. Liu, and J. C. McWilliams, Proc. Natl. Acad. Sci. 118, e2113650118 (2021).

- Constantin et al. [2012] P. Constantin, C. Foias, B. Nicolaenko, and R. Temam, Integral Manifolds and Inertial Manifolds for Dissipative Partial Differential Equations, Vol. 70 (Springer Science, 2012).

- Debussche and Temam [1991] A. Debussche and R. Temam, Differential and Integral Equations 4, 897 (1991).

- Debussche and Temam [1994] A. Debussche and R. Temam, Journal de Mathématiques Pures et Appliquées 73, 489 (1994).

- Temam and Wirosoetisno [2010] R. Temam and D. Wirosoetisno, SIAM Journal on Mathematical Analysis 42, 427 (2010).

- Temam and Wirosoetisno [2011] R. Temam and D. Wirosoetisno, J. Atmos. Sci. 68, 675 (2011).

- Zelik [2014] S. Zelik, Proc. R. Soc. Edinb. Sec. A: Mathematics 144, 1245 (2014).

- Subel et al. [2022] A. Subel, Y. Guan, A. Chattopadhyay, and P. Hassanzadeh, arXiv preprint arXiv:2206.03198 (2022).

- Ahmed et al. [2021] S. Ahmed, S. Pawar, O. San, A. Rasheed, T. Iliescu, and B. Noack, Physics of Fluids 33, 091301 (2021).

- Hesthaven et al. [2022] J. Hesthaven, C. Pagliantini, and G. Rozza, Acta Numerica 31, 265 (2022).

- Chekroun et al. [2015a] M. D. Chekroun, H. Liu, and S. Wang, Stochastic Parameterizing Manifolds and Non-Markovian Reduced Equations: Stochastic Manifolds for Nonlinear SPDEs II (Springer Briefs in Mathematics, Springer, 2015).

- Cabré et al. [2005] X. Cabré, E. Fontich, and R. De La Llave, Journal of Differential Equations 218, 444 (2005).

- Haro et al. [2016] À. Haro, M. Canadell, J.-L. Figueras, A. Luque, and J.-M. Mondelo, The Parameterization Method for Invariant Manifolds:From Rigorous Results to Effective Computations, Vol. 195 (Springer-Verlag, Berlin, 2016).

- Roberts [2018] A. Roberts, arXiv preprint arXiv:1804.06998 (2018).

- Kuehn [2011] C. Kuehn, Physica D 240, 1020 (2011).

- Ashwin et al. [2012] P. Ashwin, S. Wieczorek, R. Vitolo, and P. Cox, Phil. Trans. Roy. Soc. A 370, 1166 (2012).

- Note [2] Here, we make the abuse of language that the OPM is sought within the class of parameterizations obtained through the backward-forward systems of Sec. III. In general, OPMs are more general objects related to the conditional expectation associated with the projector ; see [12, Sec. 3.2].

- Chekroun et al. [2015b] M. D. Chekroun, H. Liu, and S. Wang, Approximation of Stochastic Invariant Manifolds: Stochastic Manifolds for Nonlinear SPDEs I (Springer Briefs in Mathematics, Springer, 2015).