Optimal Control of Multi-Agent Systems

with Processing Delays

Abstract

In this article, we consider a cooperative control problem involving a heterogeneous network of dynamically decoupled continuous-time linear plants. The (output-feedback) controllers for each plant may communicate with each other according to a fixed and known transitively closed directed graph. Each transmission incurs a fixed and known time delay. We provide an explicit closed-form expression for the optimal decentralized controller and its associated cost under these communication constraints and standard linear quadratic Gaussian (LQG) assumptions for the plants and cost function. We find the exact solution without discretizing or otherwise approximating the delays. We also present an implementation of each sub-controller that is efficiently computable, and is composed of standard finite-dimensional linear time-invariant (LTI) and finite impulse response (FIR) components, and has an intuitive observer-regulator architecture reminiscent of the classical separation principle.

1 Introduction

In multi-agent systems such as swarms of unmanned aerial vehicles, it may be desirable for agents to cooperate in a decentralized fashion without receiving instructions from a central coordinating entity. Each agent takes local measurements, performs computations, and may communicate its measurements with a given subset of the other agents, with a time delay. In this article, we investigate the problem of optimal control under the aforementioned communication constraints.

We model each agent as a continuous-time linear time-invariant (LTI) system. We make no assumption of homogeneity across agents; each agent may have different dynamics. We assume the aggregate dynamics of all agents are described by the state-space equations

| (1) |

where is the global state, is the regulated output, is the measured output, is the exogenous disturbance, and is the controlled input. The decoupled nature of the agents imposes a sparsity structure on the plant. Namely, if we partition , , , each into pieces corresponding to the agents, the conformally partitioned state space matrices , , , , are block-diagonal. The regulated output , however, couples all agents’ states and inputs, so in general and will be dense. The matrix transfer function is a standard four-block plant that takes the form111In a slight abuse of notation, the vectors , , , and now refer to the Laplace transforms of the corresponding time-domain signals in (1).

| (2) |

where and are block-diagonal.

We assume information sharing is mediated by a fixed and known directed graph. Specifically, if there is a (possibly multi-hop) directed path from Agent to Agent , then Agent can observe the local measurements of Agent with a delay . We further assume there are no self-delays, so agents can observe their local measurements instantaneously.

In practice, our setting corresponds to a network where the chief source of latency is due to processing and transmission delays [12, §1.4] (the encoding, decoding, and transmission of information). Therefore, we neglect propagation delays (proportional to distance traveled) and queuing delays (related to network traffic and hops required to reach the destination).

We assume is fixed and known and homogeneous across all communication paths, as it is determined by the physical capabilities (e.g., underlying hardware and software) of the individual agents rather than external factors. Thus, Agent ’s feedback policy (in the Laplace domain) is of the form222There is no loss of generality in assuming a linear control policy; see Section 1.1 for details.

| (3) |

where the sum is over all agents for which there is a directed path from to in the underlying communication graph.

Given the four-block plant (2), the directed communication graph, and the processing delay , we study the problem of finding a structured controller that is internally stabilizing and minimizes the norm of the closed-loop map .

In spite of the non-classical information structure present in this problem, it is known that there is a convex Youla-like parameterization of the set of stabilizing structured controllers, and the associated synthesis problem is a convex, albeit infinite-dimensional, optimization problem.

Main contribution. We provide a complete solution to this structured cooperative control problem that is computationally tractable and intuitively understandable. Specifically, the optimal controller can be implemented with a finite memory and transmission bandwidth that does not grow over time. Moreover, the controller implementations at the level of individual agents have separation structures between the observer and regulator reminiscent of classical synthesis theory.

In the remainder of this section, we give context to this problem and relate it to works in optimal control, delayed control, and decentralized control. In Section 2, we cover some mathematical preliminaries and give a formal statement of the problem. In Section 3, we give a convex parameterization of all structured suboptimal controllers, and present the -optimal controller for the non-delayed () and delayed () cases. In Section 4, we describe the optimal controller architecture at the level of the individual agents, and give intuitive interpretations of the controller architecture. In Section 5, we present case studies that highlight the trade-offs between processing delay, connectivity of the agents, and optimal control cost. Finally, we conclude in Section 6 and discuss future directions.

1.1 Literature review

If we remove the structural constraint (3) and allow each to have an arbitrary causal dependence on all with no delays, the optimal controller is linear and admits an observer–regulator separation structure [34]. This is the classical (LQG) synthesis problem, solved for example in [37].

The presence of structural constraints generally leads to an intractable problem [1]. For example, linear compensators can be strictly suboptimal, even under LQG assumptions [33]. Moreover, finding the best linear compensator also leads to a non-convex infinite-dimensional optimization problem.

However, not all structural constraints lead to intractable synthesis problems. For LQG problems with partially nested information, there is a linear optimal controller [4]. If the information constraint is quadratically invariant with respect to the plant, the problem of finding the optimal LTI controller can be convexified [27, 26]. The problem considered in this article is both partially nested and quadratically invariant, so there is no loss in assuming a linear policy as we do in (3).

Once the problem is convexified, the optimal controller can be computed exactly using approaches like vectorization [28, 32], or approximated to arbitrary accuracy using Galerkin-style numerical approaches [25, 29]. However, these approaches lead to realizations of the solution that are neither minimal nor easily interpreted. For example, a numerical solution will not reveal a separation structure in the optimal controller, nor will it provide an interpretation of controller states or the signals communicated between agents’ controllers. Indeed, the optimal controller may have a rich structure, reminiscent of the centralized separation principle. Such explicit solutions were found for broadcast [15], triangular [31, 18], and dynamically decoupled [9, 8, 6] cases.

The previously mentioned works do not consider time delays. In the presence of delays, we distinguish between discrete and continuous time. In discrete time, the delay transfer function is rational. Therefore, the problem may be reduced to the non-delayed case by absorbing each delay into the plant [14]. However, this reduction is not possible in continuous time because the continuous-time delay transfer function is irrational. A Padé approximation may be used for the delays [35], but this leads to approximation error and a larger state dimension.

Although the inclusion of continuous-time delays renders the state space representation infinite-dimensional, the optimal controller may still have a rich structure. For systems with a dead-time delay (the entire control loop is subject to the same delay), a loop-shifting approach using finite impulse response (FIR) blocks can transform the problem into an equivalent delay-free LQG problem with a finite-dimensional LTI plant [24, 20]. A similar idea was used in the discrete-time case to decompose the structure into dead-time and FIR components, which can be optimized separately [13].

The loop-shifting technique can be extended to the adobe delay case, where the feedback path contains both a delayed and a non-delayed path [21, 22, 23]. The loop-shifting technique was also extended to specific cases like bilateral teleoperation problems that involve two stable plants whose controllers communicate across a delayed channel [10, 2], and haptic interfaces that have two-way communication with a shared virtual environment [11]. Another example is the case of homogeneous agents coupled via a diagonal-plus-low-rank cost [19]. All three of these examples are special cases of the information structure (3).

In the present work, we solve a general structured synthesis problem with agents that communicate using a structure of the form (3). We present explicit solutions that show an intuitive observer-regulator structure at the level of each individual sub-controller. Preliminary versions of these results that only considered stable or non-delayed plants were reported in [6, 7]. In this article, we consider the general case of an unstable plant, we find an agent-level parameterization of all stabilizing controllers, and we obtain explicit closed-form expressions for the optimal cost.

2 Preliminaries

Transfer matrices.

Let and . A transfer matrix is said to be proper if there exists an such that . We call this set . Similarly, a transfer matrix is said to be strictly proper if this supremum vanishes as . The Hilbert space consists of analytic functions equipped with the inner product , where the inner product induced norm is bounded. A function is in if is analytic in , for almost every , and . This supremum is always achieved at when . The set is the orthogonal complement of in . The set refers to the subspace of strictly proper rational transfer functions with no poles in . Similarly, the set refers to the subspace of strictly proper rational transfer functions with all poles in . The set consists of matrix-valued functions for which . and are defined analogously to and .

The state-space notation for transfer functions is

| (6) |

A square matrix is Hurwitz if none of its eigenvalues belong to . If is Hurwitz in (6), then . If is Hurwitz and , then . The conjugate of is

The dynamics (1) and four-block plant from (2) satisfy

| (7) |

If we use the feedback policy , then we can eliminate and from (2) to obtain the closed-loop map , which is given by the lower linear fractional transformation (LFT) defined as . LFTs can be inverted: if and has a proper inverse, then , where is the upper linear fractional transformation: .

Block indexing.

Ordered lists of indices are denoted using . The total number of agents is and . The subsystem has state dimension , input dimension , and measurement dimension . The global state dimension is and similarly for and . The matrix is the identity of size and is the block-diagonal matrix formed by the blocks . The zeros used throughout are matrix or vector zeros and their sizes are dependent on the context.

We write to denote the descendants of node , i.e., the set of nodes such that there is a directed path from to for all . By convention, we list first, and then the remaining indices in increasing order. The directed path represents the direction of information transfer between the agents. Similarly, denotes the ancestors of node (again listing first). We also use and to denote the strict ancestors and descendants, respectively, which excludes . For example, in Fig. 1, we have and .

We also use this notation to index matrices. For example, if is a block matrix, then . We will use specific partitions of the identity matrix throughout: , and for each agent , we define (the block column of ). We have and , akin to the descendant and ancestor definitions above. The dimensions of and are determined by the context of use. We also use the notations and to indicate the block column and block rows respectively for a matrix . Similar notations is the matrix of ’s. Further notations are defined at their points of first use.

2.1 Delay

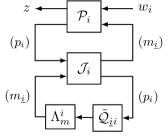

We follow the notation conventions set in [23]. The adobe delay matrix leaves block unchanged and imposes a delay of on all strict descendants of . We define that maps the plant in (7) and adobe delay matrix to a modified plant and FIR systems and . This loop-shifting transformation reported in [21, 23, 22] shown in Fig. 2 transforms a loop with adobe input delay into a modified system involving a rational plant . See Section A for details on the definition of .

In this decomposition, and is inner (if and ), so the closed-loop map satisfies . Thus, we can find the -optimal by first solving a standard problem with to obtain , and then transforming back using . This transformation, illustrated in the bottom left panel of Fig. 2, has the form of a modified Smith predictor, where the FIR blocks and compensate for the effect of the adobe delay in the original loop. See [22, §III.C] for further detail.

2.2 Problem statement

Consider a four-block plant (7) representing the aggregated dynamics of agents as described in Section 1, which we label using indices . Suppose , , and , partitioned conformally with the subsystems as and similarly for and .

Consider a directed graph on the nodes , and let be the set of compensators of the form (3). For example, for the directed graph of Fig. 1, every controller takes the form

where . So each agent may use its local measurements with no delay, and measurements from its ancestors with a delay of . An output-feedback policy (internally) stabilizes if

For further background on stabilization, we refer the reader to [37, 3]. We consider the problem of finding a structured controller that is stabilizing and minimizes the norm of the closed-loop map. Specifically, we seek to

| (8) | ||||

| subject to |

In the remainder of this section, we list our technical assumptions and define control and estimation gains that will appear in our solution. The assumptions we make ensure that relevant estimation and control subproblems are non-degenerate. We make no assumptions regarding the open-loop stability of .

Assumption 1 (System assumptions).

For the interacting agents, the Riccati assumptions defined in Definition 2 hold for and for for all .

Definition 2 (Riccati assumptions).

2.2.1 Riccati equations

The algebraic Riccati equations (AREs) corresponding to the centralized linear quadratic regulator (LQR) and Kalman filtering are

| (9a) | ||||

| (9b) | ||||

Consider controlling the descendants of Agent using only measurements . The associated four-block plant is

| (15) |

and we define the corresponding ARE solutions as

| (16a) | ||||

| (16b) | ||||

Note that the block-diagonal structure of the estimation subproblems implies and . Existence of the matrices defined in (9) and (16) follows from Assumption 1 and the fact that , , , , and are block-diagonal. If we apply the loop-shifting transformation described in Section 2.1 and Fig. 2, we obtain the modified plant

This modified plant has the same estimation ARE as in (16b), but a new control ARE, which we denote

| (17) |

Existence of the matrices defined in (17) also follows from Assumption 1 [23, Lem. 4 and Rem. 1].

3 Optimal Controller

We now present our solution to the structured optimal control problem described in Section 2.2. We begin with a convex parameterization of all structured stabilizing controllers.

3.1 Parameterization of stabilizing controllers

This parameterization is similar to the familiar state-space parameterization of all stabilizing controllers [37, 3], but with an additional constraint on the parameter to enforce the required controller structure.

Lemma 3.

Consider the structured optimal control problem described in Section 2.2with given by (7) and suppose Assumption 1 holds. Pick and block-diagonal such that and are Hurwitz. The following are equivalent:

-

(i)

and stabilizes .

-

(ii)

for some , where

(21)

Proof.

A similar approach was used in [16, Thm. 11] to parameterize the set of stabilizing controllers when (no delays). In the absence of the constraint , the set of stabilizing controllers is given by [37, Thm. 12.8]. It remains to show that if and only if . Expanding the definition of the lower LFT, we have

| (22) |

The matrices , , , , are block-diagonal, so is block-diagonal and therefore . The delays in our graph satisfy the triangle inequality, so is closed under multiplication (whenever the matrix partitions are compatible). Moreover, is quadratically invariant with respect to [26]. Therefore, if , then [26, 17], and we conclude from (22) that . Applying the inversion property of LFTs, we have . Now

so we can apply a similar argument to the above to conclude that and .

We refer to in Lemma 3 as the Youla parameter, due to its similar role as in the classical Youla parameterization [36].

Remark 4.

Although the problem we consider is quadratically invariant (QI), the existing approaches for convexifying a general QI problem [27] or even a QI problem involving sparsity and delays [26] require strong assumptions, such as being stable or strongly stabilizable. Due to the particular delay structure of our problem, the parameterization presented in Lemma 3 does not require any special assumptions and holds for arbitrary (possibly unstable) .

Remark 5.

In the special case where is Hurwitz (so is stable), we can substitute and in (21) to obtain a simpler parameterization of stabilizing controllers.

Using the parameterization of Lemma 3, we can rewrite the synthesis problem (8) in terms of the Youla parameter . After simplification, we obtain the convex optimization problem

| (23) | ||||

| subject to |

where

| (28) |

Remark 6.

Remark 7.

We use throughout the rest of the article. This choice of yields a with reduced state dimension and simplifies our exposition.

3.2 Optimal controller without delays

When there are no processing delays (), the optimal structured controller is rational. We now provide an explicit state-space formula for this optimal .

Theorem 8.

Consider the structured optimal control problem described in Section 2.2 and suppose Assumption 1 holds. Choose a block-diagonal such that is Hurwitz. A realization of the that solves (23) in the case is

| (31) |

and a corresponding that solves (8) is

| (34) |

In (31)–(34), we defined the new symbols

Matrices and are block-diagonal concatenations of zero-padded LQR and Kalman gains for each agent. Specifically, and for all , where and are defined in (16).

Proof.

See Section C.

Remark 9.

Remark 10.

Since agents can act as relays, any cycles in the communication graph can be collapsed and the associated nodes can be aggregated when there are no delays. For example, the graph of Fig. 1 would become the four-node diamond graph , and . So in the delay-free setting, there is no loss of generality in assuming the communication graph is acyclic.

3.3 Optimal controller with delays

In this section, we generalize Theorem 8 to include an arbitrary but fixed processing delay . To this end, we introduce a slight abuse of notation to aid in representing non-rational transfer functions. We generalize the notation of (6) to allow for that depend on . So we write:

Theorem 12.

Consider the setting of Theorem 8. The transfer function of that solves (23) for any is

| (35) |

and a corresponding that solves (8) is

| (36) |

where , , , , , , are defined in Theorem 8. The remainder of the symbols are defined as follows. We apply the loop-shifting transformation , where , , are defined in Section 2.2.1, and

Proof.

See Section D.

4 Agent-level controllers

The optimal controller presented in Theorem 8 is generally not minimal. For example, in (34) has a state dimension of , which means a copy of the global plant state for each agent. However, if we extract the part of associated with a particular agent, there is a dramatic reduction in state dimension. So in a distributed implementation of this controller, each agent would only need to store a small subset of the controller’s state. A similar reduction exists for the optimal controller for the delayed problem presented in Theorem 12.

Our next result presents reduced implementations for these agent-level controllers and characterizes the information each agent should store and communicate with their neighbors. We find that Agent simulates its descendants’ dynamics, and so has dimension , which is at least times smaller than the dimension of the aggregate optimal controller from Theorem 8.

Theorem 13.

Consider the setting of Theorem 8 with . The agent-level implementation of all structured stabilizing controllers, parameterized by , is shown in Fig. 3. Here, the optimal controller is achieved when . In this case, we obtain the simpler structure of Fig. 4. All symbols used are defined in Theorems 8 and 12.

Proof.

See Section E.

4.1 Interpretation of optimal controller

Fig. 3 shows that Agent transmits the same signal to each of its strict descendants. When an agent receives the signals from its strict ancestors , it selectively extracts and sums together certain components of the signals. To implement the optimal controller, each agent only needs to know the dynamics and topology of its descendants.

If the network has the additional property that there is at most one directed path connecting any two nodes333Also known as a multitree or a diamond-free poset., then the communication scheme can be further simplified. Since Agent ’s decision is a sum of terms from all ancestors, but each ancestor has exactly one path that leads to , the optimal controller can be implemented by transmitting all information to immediate descendants only and performing recursive summations. This scheme is illustrated for a four-node chain graph in Fig. 5.

Remark 14.

Remark 15.

5 Characterizing the cost

In this section, we characterize the cost of any structured stabilizing controller. The cost is defined as , where is feasible for (8) or equivalently, is feasible for (23) (see Lemma 3). We show how to interpret the cost in different ways, and how to compute it efficiently. We illustrate our result using an example with agents.

Theorem 17.

Consider the setting of Theorem 8. The optimal (minimal) costs for the cases: a fully connected graph with no delays, a decentralized graph with no delays, a fully connected graph with delays, and a decentralized graph with delays are:

| (37a) | ||||

| (37b) | ||||

| (37c) | ||||

| (37d) | ||||

respectively. If a feasible but sub-optimal is used in any of the above cases, write . The cost of this sub-optimal is found by adding to (37a)–(37d). The various symbols are defined as

| (38a) | ||||

| (38b) | ||||

, and are defined in Section 2.2.1. and are defined in Sections F.6 and F.7, respectively.

Proof.

See Section F.

In (37a) we recognize as the standard LQG cost (fully connected graph with no delays). Further, there are two intuitive interpretations for Theorem 17 that are represented in Fig. 7 for a 3-agents system. The intermediate graph topologies are different, but the starting and ending topologies are equal for both. Along the upper path, is the additional cost incurred due to decentralization alone, and is the further additional cost due to delays. Likewise, along the lower path, is the additional cost due to delays alone and is the further additional cost due to decentralization. Finally, is the additional cost incurred due to suboptimality. Theorem 17 unifies existing cost decomposition results for the centralized [37, §14.6], decentralized [18, Thm. 16], and delayed [23, Prop. 6] cases.

Remark 18.

Delay and decentralization do not contribute independently to the cost. Specifically, the marginal increase in cost due to adding processing delays depends on the graph topology. Likewise, the marginal increase in cost due to removing communication links depends on the processing delay. In other words, .

Remark 19.

There is a dual expression for the cost in (37a):

The corresponding dual expressions for (37b)–(37d) are unfortunately more complicated. See Section F.3 for details.

5.1 Synchronization example

We demonstrate Theorem 8 via a simple structured LQG example. We consider identical lightly damped oscillators. The oscillators begin with different initial conditions and the goal is to achieve synchronization. The oscillators have identical dynamics defined by the differential equations in Figs. 8 and 9. Fig. 8 shows the open-loop zero-input response for the four oscillators with given initial conditions. Due to the light damping, the states slowly converge to zero as .

Fig. 9 shows the closed-loop response using the optimal controller from Theorem 8 for a diamond-shaped communication network with no processing delay. The controller states are initialized to match the initial state of the plant. Since the observer is an unbiased estimator, the LQG controller replicates the behavior of full-state feedback LQR. Fig. 9 shows the four oscillators leveraging their shared information to achieve synchronization to a common oscillation pattern.

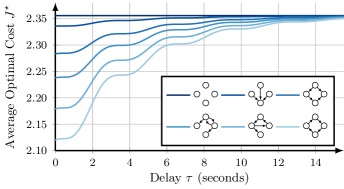

In Fig. 10, we use the same system as in Fig. 9, but we plot the total average cost as a function of time delay for various network topologies. The highest cost corresponds to a fully disconnected network, while the lowest cost corresponds to a fully connected network. In the limit as (infinite processing delay), the cost tends to that of the fully disconnected case.

6 Conclusion

We studied a structured optimal control problem where multiple dynamically decoupled agents communicate over a delay network. Specifically, we characterized the structure and efficient implementation of optimal controllers at the individual agent level. We now propose some possible future applications for our work.

First, our approach can be readily generalized to treat cases with a combination of processing delays and network latency, where the various delays are heterogeneous but known [5]. Next, the observer-regulator architecture elucidated in Fig. 6 could also be used to develop heuristics for solving cooperative control problems where the agents’ dynamics are nonlinear or the noise distributions are non-Gaussian. Examples could include decentralized versions of the Extended Kalman Filter or Unscented Kalman Filter. Finally, our closed-form expressions for the optimal cost can serve as lower bounds to the cost of practical implementation that have additional memory, power, or bandwidth limitations.

Appendices

A Definition of the function

The function takes in a four-block plant and adobe delay matrix and returns a transformed plant and FIR systems , . As in [23], we first consider the special case where . The completion operator acts on a rational LTI system delayed by and returns the unique FIR system supported on that provides a rational completion:

The input matrices and of are partitioned according to the blocks of adobe delay matrix . So, , where the two blocks correspond to inputs with delay and , respectively. is partitioned in a similar manner. Define the Hamiltonian matrix

where and , and define its matrix exponential as . Define the modified matrices

where the are partitioned the same way as the . The modified four-block plant output by is then

Finally, define the FIR systems

FIR outputs of are and .

In the general case , we can use a standard change of variables to transform back to the case . See [21, Rem. 2] for details.

B Gramian equations

Here we provide the set of Lyapunov equations that are uniquely associated with the multi-agent problem.

Lemma 20.

Proof.

We also have a dual analog to Lemma 20, provided below.

Lemma 21.

Proof.

Since and the matrix is Hurwitz, and is unique.

C Proof of Theorem 8

For the case , we can replace by in (23) because the closed-loop map must be strictly proper in order to have a finite norm. Since is strictly proper, this forces to be strictly proper as well, and hence . Further, if is rational, we have . The optimization problem (23) is a least squares problem with a subspace constraint, so the necessary and sufficient conditions for optimality are given by the normal equations with the constraint that .

We can check membership by checking if whenever there is a path . For example, consider the two-node graph . Then we have

So here, if and only if . We will show that the proposed in (31) is optimal by directly verifying the normal equations.

Substituting from (31) into with defined in (28), we obtain the closed-loop map

| (41) |

where and . Next, we show that the controllability Gramian for the closed loop map is block-diagonal.

Lemma 22.

Proof.

Lemma 22 has the following statistical interpretation. If the controlled system (41) is driven by standard Gaussian noise, its state in these coordinates will have a steady-state covariance , so each block component will be mutually independent.

C.1 Proof of optimality

Let . Substituting from (31) and using (41), we obtain

| (46) |

where , , and , , , are defined in (41). Apply the state transformation

to (46), where we defined and , and is defined in Lemma 22. The transformed is

where we have defined the symbols

A without subscript denotes an unimportant block. Simplifying using Riccati and Lyapunov equations from Section 2.2.1 and Section B respectively, we get ; the mode is uncontrollable. Removing it, we obtain

| (51) |

Now consider a block for which there is a path .

| (56) |

Let and denote the block column and let and denote the block row. Algebraic manipulation reveals that

-

(i)

If and , then .

-

(ii)

If or , then .

Consider the diagonal block of in (56), which is . This block is itself block-diagonal; it contains the block and smaller blocks for all . We have three cases.

-

1.

If , then for all , we have from Item (i) above, so the mode is unobservable.

-

2.

If , but instead , we have from Item (ii) above, so the modes are uncontrollable.

-

3.

If , then because by assumption. Then from Item (ii) above, all such modes are uncontrollable.

Consequently every block of is either uncontrollable or unobservable, leading us to the reduced realization

| (60) |

Therefore, whenever , as required.

D Proof of Theorem 12

Start with the convexified optimization problem (23). Based on the structured realization (28), we see that is block-diagonal. Therefore, the optimal cost can be split by columns:

Since , we can factor each block column of as , where has no structure or delay, and is the adobe delay matrix (defined in Section 2.1). We can therefore optimize for each block column separately. Thus, each subproblem is to

| (61) |

Define . Comparing to (23)–(28), we observe that (61) is a special case of the problem (23), subject to the transformations (defined in (15)) and , , and . If we define the associated for this subproblem (according to (21)), we view the subproblem as that of finding the -optimal controller for the plant subject to an adobe input delay, as illustrated in the left panel of Fig. 11. The key difference between this problem and (8) is that we no longer have a sparsity constraint.

The adobe delay can be shifted to the input channel, shown in the right panel of Fig. 11. This follows from leveraging state-space properties and the block structure of certain blocks of . Examples include and .

The remainder of the proof proceeds as follows: we define to be the shaded system in Fig. 11 (right panel). This is a standard adobe delayed problem, so we can apply the transformation illustrated in Fig. 2. Specifically, we define , and obtain Fig. 12.

By the properties of the loop-shifting transformation discussed in Section 2.1, the optimal is found by solving a standard non-delayed LQG problem in the (rational) plant , whose solution is

Inverting each transformation, , and we can recover the Youla parameter via , which leads to (62).

| (62) |

E Proof of Theorem 13

From Lemma 3, the set of sub-optimal controllers is parameterized as , where . Equivalently, write , where and is given in Theorem 12. The controller equation can be expanded using the LFT as with . If has state , the state-space equation for decouples as

Note that we replaced by from (16b). This leads to simpler algebra, but is in principle not required. Meanwhile, the equation is coupled: . Now consider Agent . Since we are interested in the agent-level implementation, we begin by extracting , which requires finding . Separate by columns as in Section D to obtain

| (63) |

where is given in (62), and is the delay-free component of . A possible distributed implementation is to have Agent simulate locally. Since is available locally, then so is . We further suppose Agent computes locally. Component is used locally, while component for is transmitted to descendant . Each agent then computes by summing its local with the delayed received from strict ancestors . The complete agent-level implementation is shown in Fig. 3.

F Proof of Theorem 17

All the estimation, control gains and Riccati solutions used here are defined in Section 2.2.1. The additional cost incurred due to suboptimality is [37, §14.6]. Using [37, Lem. 14.3], we have .

F.1 (37a)

F.2 (37b)

Consider that in (34) is a sub-optimal centralized controller for , subject to . Centralized theory [37] implies that , where and is the centralized Youla parameter. Here, , where

After simplifications, we obtain

We substitute into the expression for , using , where is the controllability Gramian given by Lyapunov equation . Based on the Lemma 20 and using the identity , we evaluate

We obtain (37b) by substituting into .

F.3 Alternative formulas for the cost

We obtained an alternative formula for in Section F.1. Similarly, in Section F.2 for , is also equal to , where is the observability Gramian given by the dual Lyapunov equation . Based on Lemma 21, we can evaluate . Similar alternative formulas exist for (37c), and (37d) as well.

F.4 (37c)

We can split the cost in (23) into a sum of separate terms because is block-diagonal. Using [23, Prop. 6] on each of these problems, we write as a combination of a non-delayed cost and a incurred by adding delays to that system: , where . Also, since . We obtain (37c) by substituting into . See Section F.6 below for explanation on .

F.5 (37d)

Derivation is analogous to that of . See Section F.7 below for explanation on .

F.6 Proofs for (38a)

We have in Lemma 20 for all . The properties of a positive semi-definite matrix give us , and hence .

Now we define and establish that . The Hamiltonian for the control Riccati equation (17) is

where , and , and define the corresponding symplectic matrix exponential as . The elements , of this modified are used to define the . For all , we define . By solving the associated Differential Riccati Equation (DRE) [23, Eq. 16], we show [23, §4.3]. This gives us .

F.7 Proofs for (38b)

Next we consider the case of a fully connected graph with delays. So Agent ’s feedback policy looks like . Since we solve for by solving for individual columns , we define the associated state transition matrix for each column as , where . We define the corresponding , , , , and in a similar manner. We also define a centralized for each -modified plant

Each individual column has its own as the associated adobe delay matrix is different. We have a corresponding control ARE We solve DREs for each as in [23, §V.C] to obtain for all , where is a reshuffling of to mirror the ordering of . This proves that for all .

Lemma 23 proves that for all .

Lemma 23.

and are the solutions of the DREs for delayed fully connected and decentralized graphs respectively. Then, , where , and corresponds to .

References

- [1] V. D. Blondel and J. N. Tsitsiklis. A survey of computational complexity results in systems and control. Automatica, 36(9):1249–1274, 2000.

- [2] J. H. Cho and M. Kristalny. On the decentralized controller synthesis for delayed bilateral teleoperation systems. IFAC Proceedings Volumes, 45(22):393–398, 2012.

- [3] G. E. Dullerud and F. Paganini. A course in robust control theory: a convex approach, volume 36. Springer Science & Business Media, 2013.

- [4] Y.-C. Ho and K.-C. Chu. Team decision theory and information structures in optimal control problems—Part I. IEEE Transactions on Automatic Control, 17(1):15–22, 1972.

- [5] M. Kashyap. Optimal Decentralized Control with Delays. PhD thesis, Northeastern University, 2023.

- [6] M. Kashyap and L. Lessard. Explicit agent-level optimal cooperative controllers for dynamically decoupled systems with output feedback. In IEEE Conference on Decision and Control, pages 8254–8259, 2019.

- [7] M. Kashyap and L. Lessard. Agent-level optimal LQG control of dynamically decoupled systems with processing delays. In IEEE Conference on Decision and Control, pages 5980–5985, 2020.

- [8] J.-H. Kim and S. Lall. Explicit solutions to separable problems in optimal cooperative control. IEEE Transactions on Automatic Control, 60(5):1304–1319, 2015.

- [9] J.-H. Kim, S. Lall, and C.-K. Ryoo. Optimal cooperative control of dynamically decoupled systems. In IEEE Conference on Decision and Control, pages 4852–4857, 2012.

- [10] M. Kristalny and J. H. Cho. On the decentralized optimal control of bilateral teleoperation systems with time delays. In IEEE Conference on Decision and Control, pages 6908–6914, 2012.

- [11] M. Kristalny and J. H. Cho. Decentralized optimal control of haptic interfaces for a shared virtual environment. In IEEE Conference on Decision and Control, pages 5204–5209, 2013.

- [12] J. F. Kurose and K. W. Ross. Computer Networking: A top-down approach. Pearson, 8 edition, 2021.

- [13] A. Lamperski and J. C. Doyle. The control problem for quadratically invariant systems with delays. IEEE Transactions on Automatic Control, 60(7):1945–1950, 2015.

- [14] A. Lamperski and L. Lessard. Optimal decentralized state-feedback control with sparsity and delays. Automatica, 58:143–151, 2015.

- [15] L. Lessard. Decentralized LQG control of systems with a broadcast architecture. In IEEE Conference on Decision and Control, pages 6241–6246, 2012.

- [16] L. Lessard, M. Kristalny, and A. Rantzer. On structured realizability and stabilizability of linear systems. In American Control Conference, pages 5784–5790, 2013.

- [17] L. Lessard and S. Lall. An algebraic approach to the control of decentralized systems. IEEE Transactions on Control of Network Systems, 1(4):308–317, 2014.

- [18] L. Lessard and S. Lall. Optimal control of two-player systems with output feedback. IEEE Transactions on Automatic Control, 60(8):2129–2144, 2015.

- [19] D. Madjidian and L. Mirkin. optimal cooperation of homogeneous agents subject to delyed information exchange. IFAC-PapersOnLine, 49(10):147–152, 2016.

- [20] L. Mirkin. On the extraction of dead-time controllers and estimators from delay-free parametrizations. IEEE Transactions on Automatic Control, 48(4):543–553, 2003.

- [21] L. Mirkin, Z. J. Palmor, and D. Shneiderman. Loop shifting for systems with adobe input delay. IFAC Proceedings Volumes, 42(6):307–312, 2009.

- [22] L. Mirkin, Z. J. Palmor, and D. Shneiderman. Dead-time compensation for systems with multiple I/O delays: A loop-shifting approach. IEEE Transactions on Automatic Control, 56(11):2542–2554, 2011.

- [23] L. Mirkin, Z. J. Palmor, and D. Shneiderman. optimization for systems with adobe input delays: A loop shifting approach. Automatica, 48(8):1722–1728, 2012.

- [24] L. Mirkin and N. Raskin. Every stabilizing dead-time controller has an observer–predictor-based structure. Automatica, 39(10):1747–1754, 2003.

- [25] X. Qi, M. V. Salapaka, P. G. Voulgaris, and M. Khammash. Structured optimal and robust control with multiple criteria: A convex solution. IEEE Transactions on Automatic Control, 49(10):1623–1640, 2004.

- [26] M. Rotkowitz, R. Cogill, and S. Lall. Convexity of optimal control over networks with delays and arbitrary topology. International Journal of Systems, Control and Communications, 2(1-3):30–54, 2010.

- [27] M. Rotkowitz and S. Lall. A characterization of convex problems in decentralized control. IEEE Transactions on Automatic Control, 50(12):1984–1996, 2005.

- [28] M. Rotkowitz and S. Lall. Convexification of optimal decentralized control without a stabilizing controller. In International Symposium on Mathematical Theory of Networks and Systems, pages 1496–1499, 2006.

- [29] C. W. Scherer. Structured finite-dimensional controller design by convex optimization. Linear Algebra and its Applications, 351–352:639–669, 2002.

- [30] P. Shah and P. A. Parrilo. -optimal decentralized control over posets: A state-space solution for state-feedback. IEEE Transactions on Automatic Control, 58(12):3084–3096, 2013.

- [31] T. Tanaka and P. A. Parrilo. Optimal output feedback architecture for triangular LQG problems. In American Control Conference, pages 5730–5735, 2014.

- [32] A. S. M. Vamsi and N. Elia. Optimal distributed controllers realizable over arbitrary networks. IEEE Transactions on Automatic Control, 61(1):129–144, 2016.

- [33] H. S. Witsenhausen. A counterexample in stochastic optimum control. SIAM Journal on Control, 6(1):131–147, 1968.

- [34] W. Wonham. On the separation theorem of stochastic control. SIAM Journal on Control, 6(2):312–326, 1968.

- [35] J. Yan and S. E. Salcudean. Teleoperation controller design using -optimization with application to motion-scaling. IEEE Transactions on Control Systems Technology, 4(3):244–258, 1996.

- [36] D. Youla, H. Jabr, and J. Bongiorno. Modern Wiener-Hopf design of optimal controllers–Part II: The multivariable case. IEEE Transactions on Automatic Control, 21(3):319–338, 1976.

- [37] K. Zhou, J. C. Doyle, and K. Glover. Robust and optimal control, volume 40. Prentice Hall, New Jersey, 1996.