Optimal Charging Profile Design for Solar-Powered Sustainable UAV Communication Networks

Accepted by IEEE ICC 2023: Green Communication Systems and Networks Symposium.

Abstract

This work studies optimal solar charging for solar-powered self-sustainable UAV communication networks, considering the day-scale time variability of both solar radiation and user service demand. The objective is to optimally trade off user coverage performance against the net energy loss of the network by proactively assigning UAVs to serve, charge, or land. Specifically, the studied problem is first formulated as a time-coupled mixed-integer non-convex optimization problem, which is then decoupled into two sub-problems for tractability. To address the challenge caused by time coupling, a deep reinforcement learning (DRL) algorithm is designed for each of the two sub-problems. In particular, a relaxation mechanism is put forward to overcome the "curse of dimensionality" incurred by the large discrete action space of the second sub-problem. Finally, simulation results demonstrate the efficacy of the designed DRL algorithms in trading off communication performance against net energy loss, as well as the impact of different parameters on the tradeoff performance.

I Introduction

In future mobile communication networks, UAVs equipped with wireless transceivers can be exploited as mobile base stations to provide on-demand services to ground users, forming UAV-based communication networks (UCNs) [1]. With the advantages of flexible 3D mobility, a higher chance of line-of-sight (LoS) channels, and lower deployment and operational costs, UCNs have received substantial research attention from various aspects [2]. However, existing works mostly focus on UAV control for a fixed set of UAVs, and few have investigated how the network should optimally respond when the UAV crew changes dynamically. On the one hand, UAVs are battery powered, so some UAVs will run out of battery during service and have to quit the network for charging. On the other hand, supplemental UAVs can easily be dispatched to join the existing crew and enhance network performance. Therefore, it is indispensable to design responsive regulation strategies that can optimally handle a dynamically changing UAV crew.

To this end, we proposed in [3, 4] a responsive UAV trajectory control strategy that maximizes the accumulated number of served users over a time horizon in which at least one UAV quits or joins the network. Nevertheless, however good such responsive strategies are, they are by nature passive: they can only accept and react to a crew change rather than proactively control it. Solar charging makes proactive control possible, because the user traffic demand in an area is usually time-varying. When demand is low, some UAVs can be deliberately dispatched to ascend to a high altitude for solar charging even if they are not urgently in need of charging. They can be called back later to replace other UAVs or to meet increased user demand. In this manner, the network takes charge of changes in the serving UAV crew, and a solar-powered sustainable (SPS) UAV network can be established.

UAV communications with solar charging have been studied by several pioneering works. For a single solar-powered UAV, [5] developed an optimal 3D trajectory control and resource allocation strategy, [6] studied energy outage at the UAV and service outage at users by modeling solar and wind energy harvesting, and [7] proposed a power cognition scheme that intelligently adjusts energy harvesting, information transmission, and trajectory to improve UAV communication performance. For multi-UAV networks, [8] studied joint dynamic UAV altitude control and multi-cell wireless channel access management to optimally balance solar charging against communication throughput, and [9] analytically characterized the user coverage performance of a UAV network based on a harvested-power model and 3D antenna radiation patterns. Although these works integrate solar charging to power UAV communications, most of them do not account for the time variability of solar radiation or user traffic demand, which is usually present in practice. To achieve day-scale sustainability, it is essential to consider these time variations when designing UAV control strategies.

Therefore, in this work, we investigate the optimal solar charging strategy design for a UCN, considering time-varying solar radiation and user data traffic demand. Over a time horizon, the strategy aims to optimally trade off maximizing the accumulated user coverage against minimizing the net energy loss, subject to UAV sustainability constraints and user service requirements, where the net energy loss is defined as the total energy consumption minus the total harvested energy. To the best of our knowledge, this work is the first to jointly consider time-varying solar radiation and user service demand at a day scale in a solar-powered self-sustainable UAV network. Specifically, our contributions are three-fold.

- The studied problem is first formulated as a time-coupled mixed-integer non-convex optimization problem. To make it tractable, the original problem is decoupled into two sub-problems: one obtains the mapping between the number of serving UAVs and the number of served users in each time slot, and the other handles the time variability of solar radiation and user service demand.

- To tackle the challenge caused by time coupling, DRL algorithms are developed to solve the two sub-problems. In particular, a relaxation mechanism is designed to relieve the curse of dimensionality caused by the large discrete action space of the second sub-problem.

- Simulations are conducted to demonstrate the efficacy of the proposed learning algorithms and the impact of different parameters on the tradeoff performance.

The remainder of the paper is organized as follows. Section II describes the system model. Section III presents the problem formulation and decomposition. Section IV details the proposed DRL algorithms and the relaxation of the discrete action space. Section V provides numerical results, and Section VI concludes the paper.

II System Model

II-A Network Model

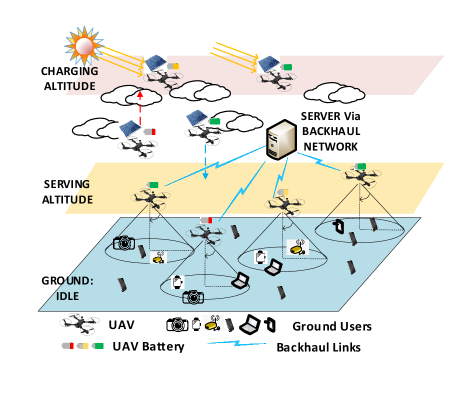

As shown in Fig. 1, we consider a set of solar-chargeable UAVs that provide communication services to (i.e., serve) a target area. All UAVs can communicate with a server via backhaul networks (e.g., satellite or cellular networks). Each UAV concentrates its transmission energy within an aperture underneath it, forming a ground coverage disk. UAVs mostly stay at one of three altitudes: the ground, the serving altitude, or the charging altitude. On the ground, UAVs consume only negligible power for messaging with the server. UAVs serve only at the fixed serving altitude and charge only at the fixed charging altitude. The serving altitude is low to maintain good UAV-user communication quality, while the charging altitude is right above the upper boundary of the clouds to minimize the attenuation of solar radiation by the clouds. Charging UAVs only at this altitude is justified as follows. According to [10], solar radiation is attenuated exponentially with the thickness of the clouds between the sun and the solar panel, so it is already severely attenuated after the first 300 meters of cloud. Since it takes a UAV only a minute or two to move vertically through 300 meters, it is reasonable to charge UAVs at a fixed altitude just above the clouds.

The time horizon is divided into equal-length time slots. In each time slot, a certain percentage of the users are randomly distributed around hotspot centers, while the rest are uniformly distributed over the area. The numbers and spatial distributions of users and hotspots are assumed unchanged within a time slot but may vary across slots. The dynamics of the user distribution are known to the server, which uses them to obtain an offline UAV charging strategy. The trained strategy is then executed through server-UAV communications over the backhaul links.
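The per-slot user model above can be sketched as a mixture of hotspot clusters and a uniform background. This is a minimal illustration only: the square area, the Gaussian scatter around each hotspot, and the names `sample_users`, `hotspot_frac`, and `spread` are assumptions, not the paper's exact generator.

```python
import numpy as np


def sample_users(n_users, hotspots, hotspot_frac, area_size, spread, rng=None):
    """Sample 2-D user positions (m) for one time slot.

    hotspots:     (H, 2) array of hotspot centers (hypothetical input).
    hotspot_frac: fraction of users placed around hotspots.
    spread:       std. dev. (m) of the Gaussian scatter around a hotspot (assumed).
    """
    rng = np.random.default_rng() if rng is None else rng
    hotspots = np.asarray(hotspots, dtype=float)
    n_hot = int(round(hotspot_frac * n_users))
    # Hotspot users: pick a center uniformly at random, then add Gaussian offsets.
    centers = hotspots[rng.integers(len(hotspots), size=n_hot)]
    near = centers + rng.normal(scale=spread, size=(n_hot, 2))
    # Remaining users are spread uniformly over the square area.
    uniform = rng.uniform(0.0, area_size, size=(n_users - n_hot, 2))
    # Clip so that no hotspot user falls outside the area.
    return np.clip(np.vstack([near, uniform]), 0.0, area_size)
```

Calling it once per slot with slot-specific hotspot centers and user counts mimics the slot-to-slot variation described above.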

II-B Spectrum Access

Users access the UAV spectrum following LTE orthogonal frequency division multiple access (OFDMA) [11], which assigns each user of a UAV at least one orthogonal resource block (RB) so that users of the same UAV do not interfere with each other. A heuristic two-stage user association policy is adopted. In each time slot, in stage I, each user sends a connection request to the serving UAV that provides the best SINR (which can be measured via reference signals), and each UAV admits the requesting users with the best SINR values subject to its bandwidth. In stage II, each rejected user sends a request to the UAV with the next-best SINR and is admitted if that UAV has available bandwidth. The stage II procedure is repeated for each unassociated user until it is admitted or has no UAV left to send requests to. Each user has a minimum throughput requirement $R_{\min}$. When UAV $u$ admits user $k$, the number of RBs assigned to the user, $n_{u,k}$, should satisfy

$$n_{u,k}\, B \log_2\!\left(1 + \frac{p\, g_{u,k}}{N_0 + \sum_{j \in \mathcal{U}_k \setminus \{u\}} p\, g_{j,k}}\right) \ge R_{\min}. \qquad (1)$$

In Eq. (1), $B$ is the bandwidth per RB, $p$ is the transmit power spectral density (psd) of the UAVs, $N_0$ is the noise psd, $\mathcal{U}_k$ denotes the set of UAVs that can cover user $k$, and $g_{u,k}$ is the UAV-to-user channel gain, which is a function of the center frequency $f_c$, the distance between UAV $u$ and user $k$, the speed of light $c$, and a line-of-sight (LoS) related parameter [12].
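The two-stage association and the RB requirement can be sketched as follows. This is a minimal illustration assuming that the SINR of a user served by one UAV treats the other UAVs covering that user as interferers, that all users share one throughput requirement, and that each UAV has a fixed RB budget. The names `sinr`, `min_rbs`, and `associate` are hypothetical, and letting users compete in rounds is one possible way to realize the repeated stage II procedure.

```python
import math

import numpy as np


def sinr(gains, k, u, covering, p, n0):
    """SINR of user k when served by UAV u (assumed form of Eq. (1)).

    gains[u, k] is the UAV-to-user channel gain; covering[k] lists the UAVs
    that can cover user k, all of which except u are treated as interferers.
    """
    interference = sum(p * gains[j, k] for j in covering[k] if j != u)
    return p * gains[u, k] / (n0 + interference)


def min_rbs(r_min, rb_bw, s):
    """Smallest integer number of RBs n with n * rb_bw * log2(1 + s) >= r_min."""
    if s <= 0:
        return math.inf  # no useful link: the user can never be admitted
    return math.ceil(r_min / (rb_bw * math.log2(1.0 + s)))


def associate(gains, covering, n_rbs, r_min, rb_bw, p, n0):
    """Two-stage association sketch; returns {user: (uav, rbs)} for admitted users."""
    gains = np.asarray(gains, dtype=float)
    n_users = gains.shape[1]
    free = list(n_rbs)  # remaining RBs of each UAV
    # Each user ranks its covering UAVs by SINR, best first.
    prefs = {k: sorted(covering[k], key=lambda u: -sinr(gains, k, u, covering, p, n0))
             for k in range(n_users)}
    tried = {k: 0 for k in range(n_users)}  # how many UAVs user k has asked so far
    assoc = {}
    pending = [k for k in range(n_users) if prefs[k]]
    while pending:
        # Stage I (first round) / stage II (later rounds): each pending user
        # requests its best UAV that it has not asked yet.
        requests = {}
        for k in pending:
            requests.setdefault(prefs[k][tried[k]], []).append(k)
            tried[k] += 1
        for u, users in requests.items():
            # A UAV admits its requesters in decreasing SINR order while RBs remain.
            users.sort(key=lambda k: -sinr(gains, k, u, covering, p, n0))
            for k in users:
                need = min_rbs(r_min, rb_bw, sinr(gains, k, u, covering, p, n0))
                if need <= free[u]:
                    free[u] -= need
                    assoc[k] = (u, need)
        # Rejected users retry with their next-best UAV, if any remain.
        pending = [k for k in pending if k not in assoc and tried[k] < len(prefs[k])]
    return assoc
```

With two UAVs holding one RB each, a user rejected by its best UAV in stage I retries its second choice in stage II and stays unserved if that UAV has run out of RBs.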

II-C Energy Model

We follow [5] to model the kinematics power consumption of the UAVs. For a UAV flying at level speed $v_{xy}$ and vertical speed $v_z$, the kinematics power consumption $P_{\mathrm{kin}}$ is modeled as in Eq. (2), where $P_{\mathrm{level}}$, $P_{\mathrm{vertical}}$, and $P_{\mathrm{drag}}$ denote the level-flight power, the vertical-flight power, and the blade drag profile power, respectively; $W$ is the UAV weight, $\rho$ is the air density, $A$ is the total area of the UAV rotor disks, $C_{D0}$ is the profile drag coefficient, $A_b$ is the total blade area, and $V_{\mathrm{tip}}$ is the blade tip speed. Note that $v_z$ is positive when the UAV climbs and negative when it lands.

| (2) |

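In addition to the kinematics power consumption $P_{\mathrm{kin}}$ of Eq. (2), UAVs spend power on communication and on on-board operations such as computing, denoted as $P_{\mathrm{com}}$ and $P_{\mathrm{op}}$, respectively. Thus, the total power consumption of a UAV is

$$P = P_{\mathrm{kin}} + P_{\mathrm{com}} + P_{\mathrm{op}}. \qquad (3)$$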

Note that the kinematics power consumption is usually several hundred watts. In contrast, the transmit power of a small BS covering hundreds of meters typically ranges from 0.25 W to a few watts [13], and the operational power consumption is likewise in the single-digit watts. Thus, the communication and operational power consumption are usually neglected in practice.
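A sketch of the power model is given below. The three terms of Eq. (2) are written in standard rotor momentum-theory form: induced power in level flight, the weight times the vertical speed for vertical flight, and one eighth of the profile drag coefficient times the air density, the total blade area, and the cube of the tip speed for blade drag. These expressions are assumptions that may differ in detail from [5], and the function names are hypothetical.

```python
import math


def kinematic_power(v_xy, v_z, W, rho, A, c_d0, A_b, v_tip):
    """Sketch of a rotary-wing kinematics power model (W): level + vertical + drag.

    Assumed forms, which may differ from Eq. (2):
      level:    momentum-theory induced power in forward flight at speed v_xy
      vertical: W * v_z (positive when climbing, negative when descending)
      drag:     blade profile power c_d0 * rho * A_b * v_tip**3 / 8
    W: weight (N), rho: air density (kg/m^3), A: total rotor disk area (m^2),
    c_d0: profile drag coefficient, A_b: total blade area (m^2), v_tip: tip speed (m/s).
    """
    p_level = W**2 / (math.sqrt(2.0) * rho * A) / math.sqrt(
        v_xy**2 + math.sqrt(v_xy**4 + (W / (rho * A))**2))
    p_vertical = W * v_z
    p_drag = c_d0 * rho * A_b * v_tip**3 / 8.0
    return p_level + p_vertical + p_drag


def total_power(p_kin, p_com=0.0, p_op=0.0):
    """Eq. (3): kinematics + communication + on-board operation power.

    p_com and p_op default to 0 because they are usually negligible.
    """
    return p_kin + p_com + p_op
```

Table I gives a weight of 5 × 9.8 N and an air density of 1.225 kg/m³; the total rotor disk area depends on the number of rotors, which is not stated, so it must be supplied separately. Because the vertical term is linear in the vertical speed, a fast enough descent makes the total negative; whether to clamp it at zero is a modeling choice.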

The solar radiation intensity above the clouds varies over the course of a day. Following the model in [6], the average intensity is characterized as

| (4) |

where $t$ represents the hour of the day and $I_{\max}$ denotes the maximum intensity during a day. The harvested solar power is then calculated as

| (5) |

where $S$ is the solar panel area, $\eta$ is the charging efficiency coefficient, and $I_{\mathrm{th}}$ is an intensity threshold.
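The harvested power can be sketched as below. We assume that no power is harvested when the intensity is below the threshold and that the harvest is the efficiency times the panel area times the intensity otherwise; the exact form of Eq. (5) may differ, and the function names are hypothetical. The hourly intensity values would come from the model of Eq. (4).

```python
def harvested_power(intensity, panel_area, efficiency, threshold):
    """Harvested solar power (W) for an intensity in W/m^2.

    Assumption: nothing is harvested below the intensity threshold, and the
    harvest is efficiency * area * intensity otherwise. Eq. (5) may differ.
    """
    if intensity < threshold:
        return 0.0
    return efficiency * panel_area * intensity


def daily_harvest_wh(hourly_intensity, panel_area, efficiency, threshold):
    """Energy (Wh) harvested by a UAV that stays at the charging altitude all day.

    Each entry of hourly_intensity is held constant for one hour.
    """
    return sum(harvested_power(i, panel_area, efficiency, threshold) * 1.0
               for i in hourly_intensity)
```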

III Problem Formulation and Decomposition

The objective is to achieve the optimal tradeoff among maximizing the total number of served users over the time horizon, maximizing the total harvested solar energy, and minimizing the total energy consumption of the UAV network, subject to network sustainability constraints and user traffic demand requirements. With these considerations, the problem is formulated as Problem P1.

In Problem P1, the decision variables are (i) whether each UAV should land, go serving, or go charging in each time slot $t$, i.e., its altitude decision, and (ii) the horizontal positions of the UAVs that go serving in each time slot. The first part of the objective is the energy harvested from solar charging by each UAV in time slot $t$. This part is determined by the UAV's altitude decisions in slots $t-1$ and $t$, since the UAV takes some time to move from its previous altitude to the current one; by the harvested solar power; and by the battery residue at the end of slot $t-1$, since the battery capacity may be reached during charging. The second part is the energy consumption of each UAV in slot $t$, which is likewise determined by its altitude decisions in slots $t-1$ and $t$, among other quantities. In the third part, the set of users admitted and served by all UAVs in slot $t$ is a function of the altitude decisions and the positions of the serving UAVs. A constant coefficient balances the weight of user coverage against the energy gains and losses.
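The coupling through the battery residue can be illustrated with a single-slot energy bookkeeping sketch for one UAV. It assumes that the UAV first moves vertically at constant speed and power, then stays at its new altitude for the rest of the slot, harvesting only at the charging altitude and losing any surplus once the battery is full. All names and the two-phase timing are hypothetical simplifications.

```python
def slot_energy(battery, capacity, prev_alt, new_alt, v_z, p_move, p_stay,
                p_harvest, slot_s=3600.0):
    """Energy bookkeeping of one UAV over one time slot (J).

    Hypothetical model: the UAV first moves vertically at speed v_z with power
    p_move, then spends the rest of the slot at new_alt consuming p_stay and
    harvesting p_harvest (0 unless new_alt is the charging altitude).
    Returns (harvested, consumed, battery_at_end).
    """
    t_move = abs(new_alt - prev_alt) / v_z
    t_stay = max(slot_s - t_move, 0.0)
    e_move = p_move * t_move
    consumed = e_move + p_stay * t_stay
    # Net gain while at the new altitude; any surplus beyond capacity is lost.
    battery_end = min(capacity, battery - e_move + (p_harvest - p_stay) * t_stay)
    # Effective harvest = energy stored in the battery plus energy used directly.
    harvested = battery_end - battery + consumed
    return harvested, consumed, battery_end
```

Feeding `battery_end` into the next slot is what couples the time slots of P1; when the battery saturates, the effective harvest falls below the harvest power times the stay time.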

| () | ||||

| s.t. | (C1.1) | |||

| (C1.2) | ||||

| (C1.3) |

Constraint C1.1 represents the network sustainability requirement: the battery residue of any UAV in any time slot should be no smaller than an altitude-dependent threshold. This ensures that, at the end of each time slot, each UAV has enough energy to ascend to the charging altitude for charging in future slots, so that it never has to leave the crew completely. Thus,

| (6) |

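where the threshold equals the energy needed to climb from the ground to the charging altitude when the UAV's altitude decision is 0 (ground), that energy minus the energy needed to climb from the ground to the serving altitude when the decision is 1 (serving), and 0 when the decision is 2 (charging). Constraints C1.2 and C1.3 represent the user data traffic demand requirements. C1.2 requires that, in every time slot, the percentage of served users among all users be no less than the minimum user service rate. C1.3 requires that the individual traffic demand of every served user be satisfied in every time slot.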
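To make the altitude-dependent threshold concrete, the sketch below assumes a constant climb power at a fixed vertical speed, so the threshold is simply the energy to climb from the current altitude to the charging altitude. `sustain_threshold`, `sustainable`, and the constant-power assumption are illustrative, not taken from the paper.

```python
def sustain_threshold(alt, h_charge, v_z, p_climb):
    """Energy (J) needed to climb from `alt` to the charging altitude.

    Assumes a constant climb power p_climb at vertical speed v_z, so the
    threshold is zero at the charging altitude itself.
    """
    return p_climb * max(h_charge - alt, 0.0) / v_z


def sustainable(batteries, alts, h_charge, v_z, p_climb):
    """Constraint C1.1: every UAV can still reach the charging altitude."""
    return all(b >= sustain_threshold(h, h_charge, v_z, p_climb)
               for b, h in zip(batteries, alts))
```

With constant climb power, the ground threshold minus the serving-altitude threshold equals the energy to climb from the ground to the serving altitude, which matches the difference structure described for Eq. (6).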

Problem P1 is a mixed-integer non-convex optimization problem with nonlinear constraints, and the terms of different time slots in its objective function are temporally coupled through the UAV battery residues. These facts make the sequential decision problem intractable. We therefore decouple P1 into two sub-problems, P2 and P3, each of which can be solved by DRL.

| () | ||||

| (C2.1) |

In the first sub-problem, P2, given the user distribution in each time slot and the number of UAVs in service, the total number of served users is maximized by determining the optimal UAV positions as a function of the number of UAVs in service and the user distribution.

| () | ||||

| s.t. | (C3.1) | |||

| (C3.2) | ||||

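$$M(t) = \sum_{u \in \mathcal{U}} \mathbb{1}\{a_u(t) = 1\}, \quad \forall t \qquad (7)$$

In the second sub-problem, P3, the mapping obtained from P2 between the maximum number of served users and the number of serving UAVs is exploited, and the same objective as in P1 is maximized by optimizing only the altitude decisions. The number of serving UAVs $M(t)$ is related to the altitude decisions $a_u(t)$ of the UAVs $u \in \mathcal{U}$ by Eq. (7), where $\mathbb{1}\{\cdot\}$ is a binary indicator that equals 1 if the condition inside is true and 0 otherwise.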
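Because P3 sees user coverage only through the P2 mapping, evaluating the coverage part of a candidate charging profile reduces to counting serving UAVs with Eq. (7) and looking up the mapping. In this minimal sketch, the lookup table `mapping` and the helper names are hypothetical, the 0/1/2 action encoding follows Section IV-B2, and the energy terms are omitted.

```python
def serving_count(actions):
    """Eq. (7): number of UAVs whose action is 1 (serve)."""
    return sum(1 for a in actions if a == 1)


def evaluate_profile(profile, mapping, n_users, min_rate):
    """Total served users of a charging profile and whether C3.2 holds every hour.

    profile[t]:    per-UAV actions in hour t (0 ground, 1 serve, 2 charge).
    mapping[t][m]: max users servable by m UAVs in hour t, as obtained from P2
                   (a hypothetical lookup table here).
    n_users[t]:    total number of users in hour t.
    """
    total, meets_c32 = 0, True
    for t, actions in enumerate(profile):
        served = mapping[t][serving_count(actions)]
        total += served
        meets_c32 = meets_c32 and served >= min_rate * n_users[t]
    return total, meets_c32
```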

IV Design of Deep Reinforcement Learning Algorithms

This section elaborates on the design of the DRL algorithms for solving P2 and P3. For P2, we reuse the algorithm designed in our previous work [4] to obtain the mapping between the number of UAVs in service and the total number of served users, given the user distribution in each hour. Based on these time-dependent mappings, the section focuses on the design of the DRL algorithm for P3.

IV-A DRL for Solving P2

Our previous work [4] considered a set of UAVs flying at a fixed height and providing communication services to ground users with minimum throughput requirements, where the UAV crew changes dynamically during training due to battery depletion or the joining of supplementary UAVs. A deep deterministic policy gradient (DDPG) algorithm was designed to maximize the user satisfaction score by obtaining the optimal UAV trajectories both in the steady period without crew change and in the transition period when the crew changes. With the following simplifications, that algorithm can be adapted to P2. First, crew change is not considered, so the state space can be reduced to the UAV positions only. Second, the action space remains unchanged, allowing each UAV to move in any direction up to a maximum distance per step. Third, the reward function changes from the step-wise user satisfaction score to the step-wise total number of served users. Finally, the closest-SINR-based user association policy is replaced with the one described in Subsection II-B.
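The adapted P2 environment can be pictured with the simplified step function below. It is a hypothetical sketch, not the DDPG implementation of [4]: it only shows how a continuous action (one displacement per UAV, capped at a maximum distance) changes the state (the UAV positions) and how a step reward is computed. For brevity, the reward counts users inside any UAV's coverage disk and ignores the RB-limited association of Subsection II-B.

```python
import numpy as np


def p2_step(uav_xy, action, users_xy, max_step, radius):
    """One step of a simplified P2 environment (illustrative, not the code of [4]).

    uav_xy:   (U, 2) current horizontal UAV positions (m).
    action:   (U, 2) desired displacements; each is shortened to at most max_step.
    users_xy: (K, 2) user positions.
    Reward: users inside at least one coverage disk of the given radius. This
    ignores the RB limits of Subsection II-B, which the actual reward accounts for.
    """
    action = np.asarray(action, dtype=float)
    users_xy = np.asarray(users_xy, dtype=float)
    length = np.linalg.norm(action, axis=1, keepdims=True)
    # Scale each move down to max_step if it is longer; leave shorter moves intact.
    scale = np.minimum(1.0, max_step / np.maximum(length, 1e-12))
    new_xy = np.asarray(uav_xy, dtype=float) + action * scale
    dist = np.linalg.norm(users_xy[:, None, :] - new_xy[None, :, :], axis=2)
    reward = int((dist <= radius).any(axis=1).sum())
    return new_xy, reward
```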

IV-B DRL for Solving P3

P3 exploits the mappings between the number of serving UAVs and the number of served users in different hours obtained via P2, and maximizes its objective function by optimizing the UAVs' charging profiles over the considered time horizon. In each hour, the DRL agent determines whether each UAV should go charging, go serving, or go to the ground to save energy, i.e., $a_u(t)$, based on the UAVs' current battery residues and altitudes, the solar radiation intensity, and the user traffic demand. In designing the DRL algorithm, the varying solar radiation and user traffic demand are treated as the dynamics of the underlying environment. The key components of the algorithm are designed as follows.

IV-B1 State Space

The battery residue of a UAV is a critical factor in determining its next move; thus, $E_u(t)$, the residual battery energy of UAV $u$ at the beginning of hour $t$, is included in the state space. The current UAV altitude $h_u(t)$ is another non-negligible factor, as altitude changes incur additional energy consumption that may accumulate and significantly affect the overall schedule. Minimizing unnecessary altitude changes while satisfying the constraints contributes positively to the optimization objective. Therefore, $h_u(t)$, which takes the value of the charging altitude, the serving altitude, or 0, is also included in the state space. Lastly, the hour index $t$ is included to capture the dynamics of the environment (e.g., solar radiation and user traffic demand), so that different actions can be taken in different hours even if the remaining states are the same. The complete state is given below; for $U$ UAVs it has $2U+1$ entries.

$$s(t) = \big(E_1(t), \dots, E_U(t),\ h_1(t), \dots, h_U(t),\ t\big) \qquad (8)$$
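A state vector following Eq. (8) can be assembled as below. This is a sketch assuming NumPy; the normalization by battery capacity, charging altitude, and horizon length is a common preprocessing choice that we assume here, and `build_state` is a hypothetical name.

```python
import numpy as np


def build_state(batteries, altitudes, hour, capacity, h_charge, horizon=24):
    """Observation of Eq. (8): U battery residues, U altitudes, and the hour index.

    Scaling each part to [0, 1] is an assumption for neural-network input,
    not something specified in the text.
    """
    b = np.asarray(batteries, dtype=float) / capacity
    h = np.asarray(altitudes, dtype=float) / h_charge
    return np.concatenate([b, h, [hour / horizon]])
```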

IV-B2 Action Space

The decision variables of sub-problem P3 are $a_u(t)$, denoting the altitude that each UAV $u$ will move to at the beginning of the current hour $t$. The action space is defined directly by these variables: $a_u(t)$ takes the value 0 if the UAV goes to the ground, 1 if it goes serving, and 2 if it goes charging. Hence, for $U$ UAVs, the cardinality of the joint action space is $3^U$.
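The exponential size of the joint action space is easy to see if each joint action is written as a base-3 number with one digit per UAV, which is how a DQN with one output per joint action would have to index its outputs. The encoding below is an illustrative assumption, not part of the paper.

```python
def decode_joint_action(index, n_uavs):
    """Map a flat DQN output index in [0, 3**n_uavs) to per-UAV actions in {0, 1, 2}."""
    actions = []
    for _ in range(n_uavs):
        index, a = divmod(index, 3)  # base-3 digit for the next UAV
        actions.append(a)
    return actions


def encode_joint_action(actions):
    """Inverse of decode_joint_action: per-UAV actions -> flat index."""
    index = 0
    for a in reversed(actions):
        index = index * 3 + a
    return index
```

A DQN over this encoding needs one output per index, i.e., 3**15 = 14,348,907 outputs for 15 UAVs.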

IV-B3 Reward Function Design

The reward function consists of three parts. The first part corresponds to the constraints of P3. When any UAV violates the sustainability constraint (C3.1), a constant penalty is applied. When constraint C3.2 is violated, i.e., the number of serving UAVs cannot achieve the minimum user service rate, a constant penalty is also applied. In addition, when the number of serving UAVs exceeds the minimum number needed to achieve a 100% user service rate, a reward of 0 is applied to prevent service over-provisioning.

The second part corresponds to maximizing the total number of served users over the entire time horizon; thus, it is set directly equal to the number of users served in the current hour. The third part corresponds to maximizing the difference between the total harvested energy and the total consumed energy. Because the solar radiation intensity varies over time, it is beneficial for a UAV that is not serving to land during some hours of the day (e.g., at night or around sunrise and sunset), while it is beneficial to go charging during other hours. In the former case, a positive reward is given to UAVs that go to the ground to promote energy saving, whereas in the latter case, a positive reward is given to UAVs that go charging to encourage energy harvesting. Therefore, the third part is designed as

| (9) |

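where $\lambda_1$ and $\lambda_2$ are reward coefficients that trade off the second part of the reward against the third part, replacing the coefficient of the P3 objective. The total instantaneous reward is the sum of the three parts.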
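The three-part reward can be sketched per hour as follows. Since Eq. (9) is not reproduced here, the landing/charging split is represented by a hypothetical boolean `low_sun_hour`, the penalty value is arbitrary, and returning only the penalties under over-provisioning is our reading of the zero-reward rule; all names are illustrative.

```python
def step_reward(actions, sustain_ok, served, n_users, min_rate, m_full,
                low_sun_hour, lam_ground, lam_charge, penalty=-1000.0):
    """Hypothetical per-hour reward following the three parts described above.

    actions: per-UAV actions (0 ground, 1 serve, 2 charge); m_full: fewest
    serving UAVs that already serve all users; low_sun_hour: True in hours where
    landing is preferred over charging. The exact form of Eq. (9) may differ.
    """
    m = sum(1 for a in actions if a == 1)
    # Part 1: constant penalties for violating C3.1 or C3.2.
    r1 = 0.0
    if not sustain_ok:
        r1 += penalty
    if served < min_rate * n_users:
        r1 += penalty
    # Over-provisioning: no positive reward (assumed to keep any penalties).
    if m > m_full:
        return r1
    # Part 2: number of users served in this hour.
    r2 = float(served)
    # Part 3: reward landing in low-sun hours, charging otherwise.
    if low_sun_hour:
        r3 = lam_ground * sum(1 for a in actions if a == 0)
    else:
        r3 = lam_charge * sum(1 for a in actions if a == 2)
    return r1 + r2 + r3
```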

IV-B4 Relaxation of the Discrete Action Space

Since the state space is mixed continuous-discrete and the action space is discrete, deep Q-learning (DQL) would typically be used. However, the cardinality of the action space, $3^U$, increases exponentially with the total number of UAVs $U$. For instance, with $U = 15$, the number of possible joint actions over all UAVs is $3^{15} \approx 1.4 \times 10^7$. As the number of outputs of the deep Q-network (DQN) equals the number of possible actions, the resulting DQN would be prohibitively complex, not to mention the number of hours in the considered time horizon. Therefore, DQL is technically feasible but practically impossible.

Inspired by [14], we relax the original discrete action space into a continuous one and obtain the UAV charging profile by means of DDPG. Each action is relaxed from the discrete values {0, 1, 2} to the continuous range (-0.5, 2.5); hence, for $U$ UAVs, the relaxed action space becomes $(-0.5, 2.5)^U$. With DDPG, the number of outputs of the actor network equals the dimension of the action space, i.e., $U$, which increases only linearly with $U$ rather than exponentially as in DQN. Each time an aggregate action is determined by the actor network and perturbed with exploration noise, each of its entries is discretized to the closest value in {0, 1, 2}. The discretized action is the one actually applied to the current state and stored in the experience replay buffer. In this manner, the complexity of exploration can be significantly reduced.
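The relaxation-and-discretization step can be sketched as below, assuming NumPy and Gaussian exploration noise (chosen here for simplicity; the noise process used in the paper may differ). The actor output has one entry per UAV; after noise is added, each entry is clipped to the relaxed range and rounded to the nearest feasible action. `relaxed_to_discrete` is a hypothetical helper name.

```python
import numpy as np

LOW, HIGH = -0.5, 2.5  # relaxed range of each per-UAV action


def relaxed_to_discrete(actor_output, noise_std, rng):
    """Turn a continuous DDPG actor output into a discrete charging decision.

    Gaussian exploration noise is an assumption; each noisy entry is clipped
    to [LOW, HIGH] and rounded to the nearest value in {0, 1, 2}.
    """
    a = np.asarray(actor_output, dtype=float)
    a = np.clip(a + rng.normal(0.0, noise_std, size=a.shape), LOW, HIGH)
    return np.clip(np.rint(a), 0, 2).astype(int)
```

The actor here has only one output per UAV (15 for 15 UAVs); the returned integer vector is what would be applied to the environment and stored in the replay buffer.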

V Numerical Results

V-A Simulation Setup

For sub-problem P2, we reuse the simulation setup and parameter configurations of our previous work [4] to obtain the hourly mapping between the number of UAVs and the maximum number of served users. For sub-problem P3, the environment parameters and the RL parameters are summarized in Tables I and II, respectively. A 24-hour time horizon is considered per episode, with each hour being one step. Training is conducted using the Reinforcement Learning Toolbox of MATLAB R2022a on a Windows 10 server with an Intel Core i9-10920X CPU @ 3.50 GHz, 64 GB RAM, and a Quadro RTX 6000 GPU.

Note that considering only 24 hours does not guarantee that the same set of UAVs can work on consecutive days; it only ensures that the involved UAVs have sufficient battery residue to go charging the next day. Once a given set of UAVs is verified to be sustainable for a whole day, two such sets can serve on alternate days to achieve full sustainability.

Table I: Environment parameters

| Parameters | Values |
|---|---|
| UAV levels (m) | (0, 300, 1400) |
| Max. solar radiation intensity above clouds | |
| Solar radiation intensity threshold | |
| UAV charging efficiency | 0.25 |
| UAV maximum speeds | (…, 4, 4) |
| UAV rotor disk radius (m) | 0.3 |
| UAV charging panel area | |
| UAV weight and air density | (5 × 9.8 N, 1.225 kg/m³) |
| UAV battery capacity | [15] |
| UAV static operational power | |
| UAV drag profile | - |
| Minimum user service rate | 0.85 |

Table II: RL parameters

| Parameters | Values |
|---|---|
| Actor and critic networks | 2 hidden layers, each with … hidden nodes |
| Neural network learning rates | … for critic and actor |
| Activation function | … (actor output), … (all remaining) |
| Regularization | L2 with … |
| Gradient threshold | 1 |
| Smoothing factor for target networks | 0.001 |
| Update frequency for target networks | 1 |
| Mini-batch size | 512 |
| Action noise (…, decay, …) | |
| Experience buffer capacity | |
| Discount factor | |
| Max. steps per episode | 24 |
| Max. episodes simulated | |

V-B Simulation Results

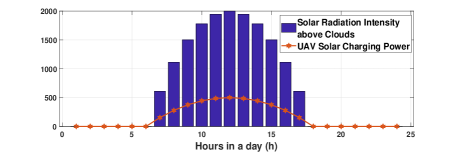

Fig. 2 first presents the major dynamics of the environment. Solar radiation is concentrated between 7 a.m. and 5 p.m., and the UAV charging rate above the clouds increases with the solar radiation intensity. As shown in Subfig. 2(b), more users request service in the late morning and afternoon, which is consistent with daily working hours. The red bars show the minimum number of serving UAVs needed in each hour to satisfy the minimum user service rate of 85%.

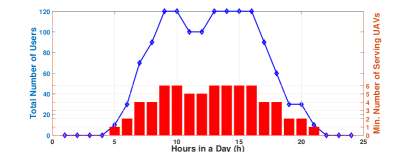

Given the above dynamics and the constraints of sustainability and user demand, Fig. 3 shows the convergence of the episodic rewards under the designed DRL algorithm. For the same number of UAVs, convergence is almost the same for different reward coefficients, whereas a larger UAV set leads to a longer convergence time due to the increased dimension of the state-action space.

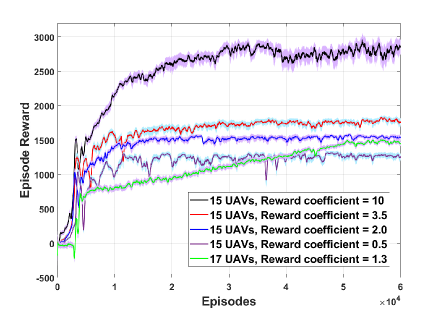

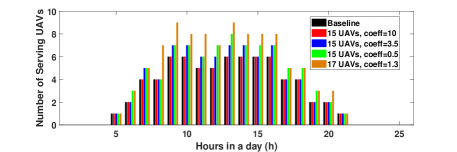

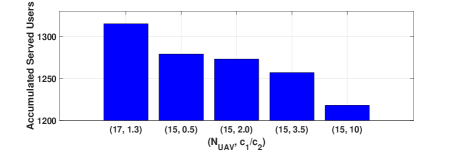

Fig. 4 details the achieved optimal charging profiles in terms of the hourly number of serving UAVs and the accumulated number of served users in one day. The baseline in Subfig. 4(a) gives the minimum number of serving UAVs required in each hour to satisfy the user service rate. It can be observed that with smaller reward coefficients, the number of serving UAVs in each hour tends to increase. The reason is that smaller reward coefficients give a smaller weight to the energy-related part of the objective, so the RL agent tends to dispatch more UAVs to serve more users and collect more reward rather than sending UAVs to charge or idle. When more UAVs are available (e.g., 17 UAVs), more UAVs can serve in each hour when the reward coefficients are relatively low. More serving UAVs per hour consequently bring more served users, as confirmed by Subfig. 4(b).

VI Conclusions

In this paper, the optimal solar charging problem has been studied for a sustainable UAV communication network, considering dynamic solar radiation and user service demand. The problem has been formulated as a time-coupled optimization problem and further decoupled into two sub-problems, for which DRL algorithms have been designed to make them tractable. Simulation results have demonstrated the efficacy of the designed algorithms in optimally trading off communication performance against net energy loss.

References

- [1] A. Fotouhi, H. Qiang, M. Ding, M. Hassan, L. G. Giordano, A. Garcia-Rodriguez, and J. Yuan, "Survey on UAV cellular communications: Practical aspects, standardization advancements, regulation, and security challenges," IEEE Communications Surveys & Tutorials, vol. 21, no. 4, pp. 3417–3442, 2019.

- [2] M. Mozaffari, W. Saad, M. Bennis, Y.-H. Nam, and M. Debbah, "A tutorial on UAVs for wireless networks: Applications, challenges, and open problems," IEEE Communications Surveys & Tutorials, vol. 21, no. 3, pp. 2334–2360, 2019.

- [3] R. Zhang, M. Wang, and L. X. Cai, "SREC: Proactive self-remedy of energy-constrained UAV-based networks via deep reinforcement learning," in 2020 IEEE Global Communications Conference (GLOBECOM). IEEE, 2020, pp. 1–6.

- [4] R. Zhang, M. Wang, L. X. Cai, and X. Shen, "Learning to be proactive: Self-regulation of UAV based networks with UAV and user dynamics," IEEE Transactions on Wireless Communications, vol. 20, no. 7, pp. 4406–4419, 2021.

- [5] Y. Sun, D. Xu, D. W. K. Ng, L. Dai, and R. Schober, “Optimal 3D-trajectory design and resource allocation for solar-powered UAV communication systems,” IEEE Transactions on Communications, vol. 67, no. 6, pp. 4281–4298, 2019.

- [6] S. Sekander, H. Tabassum, and E. Hossain, “Statistical performance modeling of solar and wind-powered UAV communications,” IEEE Transactions on Mobile Computing, vol. 20, no. 8, pp. 2686–2700, 2020.

- [7] J. Zhang, M. Lou, L. Xiang, and L. Hu, “Power cognition: Enabling intelligent energy harvesting and resource allocation for solar-powered UAVs,” Future Generation Computer Systems, vol. 110, pp. 658–664, 2020.

- [8] S. Khairy, P. Balaprakash, L. X. Cai, and Y. Cheng, “Constrained deep reinforcement learning for energy sustainable multi-UAV based random access IoT networks with NOMA,” IEEE Journal on Selected Areas in Communications, vol. 39, no. 4, pp. 1101–1115, 2020.

- [9] E. Turgut, M. C. Gursoy, and I. Guvenc, “Energy harvesting in unmanned aerial vehicle networks with 3D antenna radiation patterns,” IEEE Transactions on Green Communications and Networking, vol. 4, no. 4, pp. 1149–1164, 2020.

- [10] A. Kokhanovsky, “Optical properties of terrestrial clouds,” Earth-Science Reviews, vol. 64, no. 3-4, pp. 189–241, 2004.

- [11] R. Zhang, M. Wang, X. Shen, and L.-l. Xie, “Probabilistic analysis on QoS provisioning for Internet of Things in LTE-A heterogeneous networks with partial spectrum usage,” IEEE Internet of Things Journal, vol. 3, no. 3, pp. 354–365, 2015.

- [12] A. Al-Hourani, S. Kandeepan, and A. Jamalipour, "Modeling air-to-ground path loss for low altitude platforms in urban environments," in 2014 IEEE Global Communications Conference. IEEE, 2014, pp. 2898–2904.

- [13] “Small cells and health,” Available at https://www.gsma.com/publicpolicy/wp-content/uploads/2015/03/SmallCellForum_2015_small-cells_and_health_brochure.pdf, 2015.

- [14] G. Dulac-Arnold, R. Evans, H. van Hasselt, P. Sunehag, T. Lillicrap, J. Hunt, T. Mann, T. Weber, T. Degris, and B. Coppin, “Deep reinforcement learning in large discrete action spaces,” arXiv preprint arXiv:1512.07679, 2015.

- [15] “High power density light weight drone solid state lithium battery,” Available at https://unmannedrc.com/products/high-power-density-light-weight-drone-solid-state-lithium-battery.