Open Source Iris Recognition Hardware and Software with Presentation Attack Detection

Abstract

This paper proposes what is, to our knowledge, the first open source hardware and software iris recognition system with presentation attack detection (PAD), which can be easily assembled for about 75 USD using a Raspberry Pi board and a few peripherals. The primary goal of this work is to offer a low-cost baseline for spoof-resistant iris recognition, which may (a) stimulate research in iris PAD and allow for easy prototyping of secure iris recognition systems, (b) offer a low-cost secure iris recognition alternative to more sophisticated systems, and (c) serve as an educational platform. We propose a lightweight image complexity-guided convolutional network for fast and accurate iris segmentation, domain-specific human-inspired Binarized Statistical Image Features (BSIF) to build an iris template, and a combination of 2D (iris texture) and 3D (photometric stereo-based) features for PAD. The proposed iris recognition runs in about 3.2 seconds and the proposed PAD runs in about 4.5 seconds on Raspberry Pi 3B+. The hardware specifications and all source code of the entire pipeline are made available along with this paper.

1 Introduction

Iris recognition is without a doubt one of the main biometric modes, and the seminal work by Daugman [8] paved the way for many current commercial systems and inspired academia with a mathematically elegant, information theory-based approach to iris feature extraction and matching. ISO/IEC 19794-6 recommendations regarding the iris image properties are not demanding in terms of resolution (640×480 pixels with at least 120 pixels per iris diameter), and near-infrared (NIR) LEDs with a peak in the ISO-recommended range of 700-900 nm are easily available. However, very few works have been published on open source iris recognition [21, 24, 29], and all previous papers focused on the software domain. To the best of our knowledge, no prior work has attempted to offer an open source hardware design for iris recognition.

In this paper, we propose an open source iris recognition system (hardware and software) with presentation attack detection (PAD). The hardware platform is based on the Raspberry Pi 3B+ board, through which we perform illumination control, image acquisition, and the entire image processing involved in the pipeline. In addition to the main Pi board, the hardware involves an NIR-sensitive Pi camera, an NIR filter, two NIR LEDs, a breadboard, resistors, and jumper wires. We designed our hardware so that it is low-cost, easy to assemble, easy to use, and follows the ISO recommendations regarding iris image capture (thus the use of NIR, rather than visible light). The total cost of the hardware at the time of writing this paper was less than 75 USD, which is cheaper than most commercial iris systems. Our open-source software pipeline consists of three modules: segmentation, presentation attack detection, and recognition. We use an image complexity-guided network compression scheme, CC-Net [27], to design a lightweight iris segmentation network that operates at high speed on Raspberry Pi while producing high-quality masks. The presentation attack detection module is based on the best-performing methods among all available open-source iris PAD algorithms evaluated to date [12]. For iris recognition, we employ an algorithm that extracts features using domain-specific, human-inspired Binarized Statistical Image Features (BSIF) [7] and performs matching with the mean fractional Hamming distance.

To summarize, the main contributions of this paper are the following:

1. An open source design of a complete iris recognition system equipped with iris presentation attack detection (hardware design based on Raspberry Pi and the end-to-end pipeline from image acquisition to recognition decision written in Python).

2. A lightweight iris segmentation neural network that segments an ISO-compliant iris image in approx. 1.5 sec. on a Raspberry Pi 3B+, running faster than both the existing open-source iris segmentation (OSIRIS [29]) and the state-of-the-art deep learning-based iris segmentation (SegNet [36]) while preserving good segmentation accuracy.

The complete package (segmentation model, source codes, hardware specifications and assembly instructions) can be obtained at https://github.com/CVRL/RaspberryPiOpenSourceIris. Since the implementation is done in Python, all software components can be easily run outside the Raspberry Pi environment and adapted to other needs.

2 Related Work

Open Source Biometric Systems.

The idea of creating open-source biometric systems is not new, and it has been developing unevenly across modalities because appropriate sensors are not equally widespread. Among the three most popular biometric techniques (fingerprints, face, and iris), face recognition is the method that can be effectively implemented with commodity cameras, and thus several open-source software-only solutions exist [1, 9, 30]. Contactless fingerprint acquisition (with a camera installed in a mobile phone) has also been explored [20], which opens the door for low-cost/free fingerprint recognition systems. A contact-based open-source fingerprint recognition system has been recently proposed by Engelsma et al. [11]. The authors built RaspiReader, an easy-to-assemble, spoof-resistant, optical fingerprint reader. RaspiReader provides open source STL and software which allow replication of the entire system within one hour at the cost of US $175. Visible-light iris recognition has also been explored [31, 35], opening the possibilities for low-cost iris recognition solutions, although their recognition accuracy may be lower than that for near infrared-based systems, especially for dark eyes (i.e. rich in melanin pigment).

Open Source Iris Recognition Software.

There are several iris image processing and coding algorithms that have been open-sourced. Perhaps the first open-source re-implementation of Daugman’s coding [8] was published by Masek and Kovesi [25]. It uses a bank of log-Gabor wavelets for feature extraction and the fractional Hamming distance for template matching. Masek’s codes were later superseded by Open Source IRIS (OSIRIS) [29], written in C, which is arguably the most popular open source iris recognition software based on old concepts using circular approximations of iris boundaries and Gabor filters in iris encoding. University of Salzburg’s Iris Toolkit (USIT) [33] extends the iris segmentation and coding beyond Gabor-based algorithm, and is the most recent open-source iris recognition software.

Iris recognition typically involves two major steps: (a) image segmentation and (b) feature extraction and comparison. These steps are in principle independent, and thus we observe open-source solutions for either segmentation or iris coding. Most initial works on iris segmentation focused on circular approximations of the inner and outer iris boundaries [8]. Since then, iris segmentation solutions have come to deal with more complex shapes, enabled by the recent advent of deep learning. Convolutional neural networks (CNN) in particular have been used as effective end-to-end iris image segmenters, with model weights offered along with the papers [17, 18, 22, 36]. Kinnison et al. [19] adapted an existing learning-free method, designed earlier for neural microscopy volumes, to iris segmentation and achieved a considerable processing speed-up on low-resource platforms (such as Raspberry Pi) at a small cost of lower segmentation accuracy. Once the segmentation stage is secured, one may find open-sourced feature encoding methods based on standard [32] and human-driven Binarized Statistical Image Features [7], or off-the-shelf CNN embeddings [4, 28]. Ahmad and Fuller [2] propose a triplet CNN-based iris recognition system without a need for image segmentation and normalization of iris images.

Despite the richness of proposed iris recognition methods, some of which offer open-source code, to the best of our knowledge no open-source hardware with accompanying code suited for low-resource platforms has been proposed for iris biometrics to date.

Open Source Iris Presentation Attack Detection (PAD) Software.

For iris recognition to be effective and meaningful, presentation attacks (such as printed images, textured contact lenses, and even cadaver irises) to iris sensors must be detected and excluded from the matching process. The 2018 survey [5] provides a comprehensive study of iris PAD research, covering background, presentation attack instruments, detection methods, datasets, and competitions. We will focus on the works most closely related to the topic of this paper, i.e. only those iris PAD methods that have been open sourced. Hu et al. [15] use spatial pyramids and relational measures (convolutions on features) to extract higher-level regional features from local features extracted by Local Binary Patterns (LBP), Local Phase Quantization (LPQ), and intensity correlogram. Gragnaniello et al. [13] extract features from both iris and sclera using scale-invariant local descriptors (SID) and apply a linear Support Vector Machine (SVM) for PAD. In another work by Gragnaniello et al. [14], the authors incorporate domain-specific knowledge into the design of their CNN, namely the network architecture and loss function. The PAD methods we adopt in this paper are the most recent, open-source methods evaluated by Fang et al. [12] as best-performing in their experiments: OSPAD-2D [26], OSPAD-3D [6], and OSPAD-fusion [12]. OSPAD-2D is a multi-scale BSIF-based PAD method on textural features, OSPAD-3D is a photometric-stereo-based PAD method on shape features, and OSPAD-fusion is a combination of them. These methods will be discussed in further detail in Section 3.1.3.

3 Design

3.1 Software

3.1.1 Iris Segmentation

The most recent and most accurate iris segmentation methods deploy various convolutional neural network architectures. All of them, however, require more computing power and memory than a device like Raspberry Pi could offer. Therefore, we adapted the lightweight CC-Net architecture [27] to iris segmentation. CC-Net has a U-Net structure [34], but it leverages information about the image complexity of the training data to compress the network while preserving most of the segmentation accuracy. In the original paper, the authors showed that CC-Net is able to retain up to 95% accuracy using only 0.1% of the trainable parameters on biomedical image segmentation tasks. We applied the CC-Net network compression scheme to the domain of iris segmentation and ended up with a deep learning-based segmentation model that completes the prediction for an ISO-compliant iris image in about 1.5 seconds on Raspberry Pi 3B+. That is, it runs faster than both the existing open-source iris segmentation (OSIRIS [29]) and the state-of-the-art deep learning-based segmentation (SegNet [36]), the latter re-trained from an off-the-shelf SegNet model [3] on the iris segmentation task. SegNet obtains benchmark performance and produces masks with fine details. However, it has a deep encoder-decoder structure and many more parameters than CC-Net, making it a less suitable candidate for applications on mobile devices with limited memory and computing resources. In Section 4.3, we compare the running time of all three iris segmentation methods on the Raspberry Pi, and verify our choice of CC-Net as the segmentation tool. Our software implementation also includes both SegNet-based and OSIRIS-based code prepared for the Pi platform, to make the results presented in this paper reproducible.

3.1.2 Iris Recognition

We adopt the domain-specific human-inspired BSIF-based iris recognition algorithm [7]. In contrast to previous work that treated BSIF as a generic textural filter, Czajka et al. [7] perform domain adaptation to train BSIF filters specifically for iris recognition. Additionally, the filters are trained on iris regions that appear important to humans, to preserve the maximum amount of information possible. To further ensure the quality of images, only patches from genuine iris pairs that were correctly classified by humans are used in the filter training. According to [7], the human-in-the-loop design and domain adaptation result in a statistically significant improvement in iris recognition accuracy over other methods, including those based on standard BSIF filters and the OSIRIS implementation of Gabor-based encoding.
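The matching step, which uses the mean fractional Hamming distance, can be illustrated with a minimal sketch. It assumes that each BSIF filter yields one binary code per image, that a shared occlusion mask marks usable bits, and that "mean" refers to averaging over the per-filter codes; the function names are hypothetical, and rotation compensation is omitted. It is not the released implementation.

```python
def fractional_hamming(code_a, code_b, mask_a, mask_b):
    """Fraction of disagreeing bits among the bits valid in both masks."""
    valid = 0
    disagree = 0
    for a, b, ma, mb in zip(code_a, code_b, mask_a, mask_b):
        if ma and mb:  # compare only bits that are unoccluded in both images
            valid += 1
            disagree += int(a != b)
    if valid == 0:
        raise ValueError("no jointly valid bits to compare")
    return disagree / valid


def mean_fractional_hamming(codes_a, codes_b, mask_a, mask_b):
    """Average the fractional Hamming distance over per-filter binary codes.

    Assumption: codes_a[i] and codes_b[i] come from the same (i-th) filter.
    """
    distances = [
        fractional_hamming(ca, cb, mask_a, mask_b)
        for ca, cb in zip(codes_a, codes_b)
    ]
    return sum(distances) / len(distances)
```

A lower score indicates more similar irises; a decision threshold on this score would be chosen on a separate dataset.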

3.1.3 Iris Presentation Attack Detection

Although many iris presentation attack detection methods have been proposed to date [5], only a small number of them have been formally open-sourced. Fang et al. [12] offer a recent comparison of available open-source iris PAD methods, and based on their assessment we decided to adopt the best-performing methods (OSPAD-3D [6], OSPAD-2D [26], and the fusion of these two) and to prepare a faster implementation, suitable for low-resource platforms, through parallelization of processes and early stopping (for OSPAD-2D). Below, we provide a brief overview of each method and direct the readers to the original papers and our code implementation for more details.

OSPAD-3D

In OSPAD-3D, Czajka et al. [6] leverage the fact that when iris is illuminated from two different directions, differences in shadows observed in the images of authentic iris are small, while significant differences appear in the images of irises wearing a textured contact lens. From this observation, OSPAD-3D employs photometric stereo to reconstruct the iris surface, which is flatter for authentic irises and more irregular for textured contact lenses. Given a pair of iris images and their segmentation masks, OSPAD-3D first estimates the surface normal vectors of the iris surface. Then, the variance of the vectors’ distances to the mean normal vector is computed as the PAD score.
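The final scoring step described above can be sketched as follows. This is only an illustration: it assumes the surface normals have already been estimated by photometric stereo and restricted to the iris mask, and it assumes Euclidean distance and population variance, neither of which is specified in the text. The function name is hypothetical.

```python
import math


def pad_score_from_normals(normals):
    """Variance of the distances between each normal vector and the mean normal.

    `normals` is a sequence of (x, y, z) vectors for pixels inside the iris
    mask. Euclidean distance and population variance are assumptions.
    """
    n = len(normals)
    if n == 0:
        raise ValueError("at least one normal vector is required")
    mean_normal = tuple(sum(v[i] for v in normals) / n for i in range(3))
    distances = [math.dist(v, mean_normal) for v in normals]
    mean_distance = sum(distances) / n
    return sum((d - mean_distance) ** 2 for d in distances) / n
```

Under these assumptions, a flat surface (all normals equal) gives a score of zero, and a more irregular surface gives a larger score.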

OSPAD-2D

Extending [10], OSPAD-2D [26] proposes a PAD method using purely open source libraries. In this method, features are extracted through multi-scale standard BSIF, and an ensemble of Support Vector Machine, Multi-layer Perceptron, and Random Forests classifiers is used to make the final prediction. The method does not use iris segmentation and instead uses the best guess of iris location (center of the image) to obtain the salient iris region. Since OSPAD-2D integrates majority voting of the SVM decisions, we implement an early stopping strategy to further speed up the processing time: for each sample, once more than half of the models agree on a decision (live or fake), the decision is returned immediately and the rest of the models are not run.
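The early stopping strategy can be sketched as below. The models are represented as hypothetical callables returning "live" or "fake"; how a tie is resolved for an even number of models is not stated in the text, so rejecting the sample as "fake" is an assumption made here.

```python
def vote_with_early_stopping(models, features):
    """Majority vote that stops once one label holds more than half the votes.

    Returns (decision, number_of_models_actually_run).
    """
    needed = len(models) // 2 + 1  # strict majority
    counts = {"live": 0, "fake": 0}
    models_run = 0
    for model in models:
        decision = model(features)
        models_run += 1
        counts[decision] += 1
        if counts[decision] >= needed:
            # The remaining models cannot change the outcome, so skip them.
            return decision, models_run
    # Reached only on a tie (even number of models). Assumption: reject.
    return "fake", models_run
```

With five models, the loop returns after three evaluations whenever the first three agree, which is where the time savings come from.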

OSPAD-fusion

Since OSPAD-3D uses only 3D shape features and OSPAD-2D uses only 2D textural features, Fang et al. [12] propose OSPAD-fusion that fuses the previous two methods. In the paper, the authors observe that lenses with highly opaque patterns often fail to produce well-pronounced shadows, thus violating the assumptions of OSPAD-3D. It is also observed that OSPAD-2D tends to accept fakes when tested on unknown samples. Therefore, instead of a simple score-level fusion, OSPAD-fusion employs a cascaded fusion algorithm that is able to sidestep the flaws present in OSPAD-3D and combine the strengths of the two methods.

3.2 Hardware

Figure 1 (a-b) shows the setup of our hardware. The design is simple: an IR-sensitive camera (with the IR filter) is used to capture the iris images, and a circuit controlled by the Raspberry Pi output pins is used for illumination purposes. Since OSPAD-3D requires two iris images taken when the eye is illuminated from two different directions, two near-infrared LEDs (with a power peak around 850 nm) are placed equidistant to the lens and on both sides of the lens. At test time, the first picture is taken with the left LED turned on (and right LED turned off), and then the second picture is taken with the left LED turned off (and right LED turned on). Then, both LEDs will go off and the iris segmentation, recognition, and presentation attack detection steps are carried out.
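The acquisition sequence above can be summarized in a short, hardware-agnostic sketch. The callbacks `set_led(side, on)` and `capture()` are hypothetical stand-ins for whatever GPIO and camera calls the actual system uses; they are not taken from the released code.

```python
def capture_pair(set_led, capture):
    """Capture two images, lit from the left and then from the right.

    set_led(side, on): hypothetical callback switching the "left" or "right" LED.
    capture():         hypothetical callback returning one image.
    """
    try:
        # First image: left LED on, right LED off.
        set_led("right", False)
        set_led("left", True)
        first = capture()
        # Second image: left LED off, right LED on.
        set_led("left", False)
        set_led("right", True)
        second = capture()
    finally:
        # Both LEDs go off before processing starts, even if a capture fails.
        set_led("left", False)
        set_led("right", False)
    return first, second
```

Passing the hardware access in as callbacks keeps the sequence testable on a machine without a camera or LEDs attached.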

3.3 Assembly Details

| Component | Cost (USD) |
|---|---|
| Raspberry Pi 3B+ | |
| NIR-sensitive Pi-compatible camera | |
| NIR filter (cut at 760 nm) | |
| NIR LEDs (850 nm) | |
| Resistors (220 Ohm) | |
| Total | |

Table 1 lists the main components used to construct the system and their current prices. The Raspberry Pi model version is 3B+. The NIR LEDs have an emission wavelength of 850 nm, but any LEDs with a wavelength in the range between 700 nm and 900 nm, as recommended by ISO [16], will work. Four 220 Ohm resistors are used for circuit protection purposes. All software modules are implemented in Python. One may optionally use a protective metal sleeve, as shown in Fig. 1, or skip using the NIR filter at all, if the camera has an embedded IR-cut filter.

The complete package (segmentation model, source codes, hardware specifications, and assembly instructions) can be obtained at https://github.com/CVRL/RaspberryPiOpenSourceIris. Note that all software components can be easily run outside the Raspberry Pi environment, and thus serve as a rapid iris recognition implementation with presentation attack detection. The optional OSIRIS-based segmentation module (attached to the software release for comparisons and replication purposes) is written in C++.

4 Performance Evaluation

We evaluate the performance of iris recognition and presentation attack detection on the NDIris3D database [12]. This is the only database known to us which offers iris images taken from the same subjects with and without textured contact lenses, and captured under NIR illumination from two different directions (required for the photometric stereo-based presentation attack detection method).

4.1 Iris Recognition Performance

This evaluation has two goals. One is obviously an assessment of the overall iris recognition accuracy offered by algorithms working in low-resource setup. The second goal is to compare the adapted, light-weight CC-Net-based segmentation with three other open-sourced segmenters: U-Net-based [23], SegNet-based [36] and OSIRIS-based [29]. In order to make a fair comparison, the iris images in the test set are first segmented by each segmentation method separately. Then, the same human-driven BSIF-based iris recognition algorithm [7] is used to generate the genuine and imposter comparison scores using segmentation results of each segmentation method. It is noteworthy that all segmentation and iris coding methods used different, subject-disjoint datasets for training and hyperparameters setting, so this experimental protocol is the best available option.

| Method | EER (%) | FNMR (%) @FMR=1% | FNMR (%) @FMR=0.1% |
|---|---|---|---|
| CC-Net | 4.39 | 8.48 | 19.36 |
| OSIRIS | 4.14 | 7.01 | 14.16 |
| SegNet | 4.26 | 8.63 | 18.75 |
| U-Net | 39.51 | 87.33 | 97.80 |

The datasets used in training of both iris segmenters and the BSIF-based encoding method are subject-disjoint with the NDIris3D-LG corpus (samples collected by the LG 4000 iris sensor) used for testing in this work. Table 2 reports the separation between genuine and imposter distributions, Equal Error Rate (EER), and False Non-Match Rate (FNMR) at different levels of False Match Rate (FMR). As can be seen, CC-Net, OSIRIS, and SegNet all achieve good separation and low EER, which means that they give high-quality masks. Note that CC-Net achieves EER and FNMR similar to those of the state-of-the-art CNN-based segmentation method (SegNet). U-Net-based segmentation results in lackluster overall accuracy. This may be due to the fact that the CASIA-V1 dataset is small in size, and fine-tuning the U-Net weights with only 160 images (according to the original paper) may be insufficient to generalize to non-CASIA and ISO-compliant samples. Although it is possible to re-train it on a larger dataset, which would likely lead to better performance, we will show in Section 4.3 that this network is too large to be deployable on low-resource platforms, such as Raspberry Pi.
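For readers less familiar with these metrics, the sketch below shows one way EER and FNMR at a fixed FMR can be computed from comparison scores. It assumes distance-like scores (lower means more similar, as with Hamming distance) and uses a simple discrete approximation of the EER; it is not the evaluation code used to produce Table 2, and the function names are hypothetical.

```python
def error_rates(genuine, imposter, threshold):
    """Return (FMR, FNMR) when a comparison matches if score <= threshold."""
    fnmr = sum(s > threshold for s in genuine) / len(genuine)
    fmr = sum(s <= threshold for s in imposter) / len(imposter)
    return fmr, fnmr


def fnmr_at_fmr(genuine, imposter, target_fmr):
    """Lowest FNMR over thresholds whose FMR does not exceed target_fmr."""
    best = 1.0  # rejecting everything always satisfies the FMR constraint
    for t in sorted(set(genuine) | set(imposter)):
        fmr, fnmr = error_rates(genuine, imposter, t)
        if fmr <= target_fmr:
            best = min(best, fnmr)
    return best


def equal_error_rate(genuine, imposter):
    """Approximate EER at the threshold where |FMR - FNMR| is smallest."""
    thresholds = sorted(set(genuine) | set(imposter))
    fmr, fnmr = min(
        (error_rates(genuine, imposter, t) for t in thresholds),
        key=lambda rates: abs(rates[0] - rates[1]),
    )
    return (fmr + fnmr) / 2
```

Multiplying the returned fractions by 100 gives percentages comparable to those in the table.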

We also present selected qualitative segmentation results for the different methods. Figure 2 shows two examples from CASIA-V1 and NDIris3D-LG. It can be seen that U-Net [23] performs perfectly on CASIA-V1, but fails to generalize to NDIris3D-LG. OSIRIS misclassified part of the iris and sclera region for CASIA-V1 but achieves a robust performance overall. SegNet- and CC-Net-based methods generate masks that are close to the ground truth. Note that SegNet predicts finer details for the CASIA-V1 sample when compared to CC-Net segmentation, and we conjecture that this is because SegNet is a richer model, trained on a variety of data, and is therefore able to capture more low-level features. Both SegNet and CC-Net demonstrate very good segmentation results for iris images captured by the hardware proposed in this work. These qualitative observations correspond with our quantitative assessment.

4.2 Iris Presentation Attack Detection Performance

| Distribution pairs | |
|---|---|
| Genuine vs Imposter | |
| Genuine vs Real-Contact | |
| Real-Contact vs Imposter | |

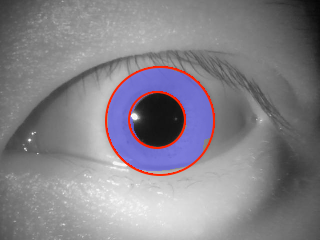

Placing textured contact lenses on the cornea to interfere with actual iris pattern, and thus conceal one’s identity, is a well-known presentation attack type. A close observation of images may reveal that textured contact lenses cover only part of the iris, leaving some regions of the iris observable, as shown in Figure 3. This fractional authentic iris area is, however, too small to obtain reliable recognition. Indeed, Table 3 and Figure 4 demonstrate that when the genuine irises are covered by textured contact lenses, the genuine score distribution (denoted as “Genuine vs Real-Contact”) is shifted towards imposter score distribution, significantly increasing the probability of false non-match. Such attempts should be detected, and thus presentation attack detection is necessary.

| Method | LG4000 Accuracy (%) | LG4000 APCER (%) | LG4000 BPCER (%) | AD100 Accuracy (%) | AD100 APCER (%) | AD100 BPCER (%) |
|---|---|---|---|---|---|---|
| OSPAD-fusion [12] | | | | | | |
| OSPAD-3D [6] | | | | | | |
| OSPAD-2D [26] | | | | | | |
| DACNN [14] | | | | | | |
| SIDPAD [13] | | | | | | |
| RegionalPAD [15] | | | | | | |

In this subsection, we compare the PAD methods implemented in our system (OSPAD-fusion, OSPAD-3D, and OSPAD-2D) to all currently available open-source baselines, obtained either by following download instructions offered in the original papers or, in some cases, by asking the corresponding authors for source code. We evaluated all methods under the same protocol: all algorithms are trained according to the training schedule in the original papers or the schedules suggested by the authors, with the NDCLD’15 dataset [10] as the training set, and the entire NDIris3D-LG as the testing set. Again, our goal was to use subject-disjoint training and test sets of images. Since OSPAD-3D generates one prediction for each pair of images, we use a validation subset from the training set NDCLD’15 to search for the best fusion strategy for the other methods. As a result, all methods generate one prediction for each pair of images, so their performance can be evaluated fairly. Results in Table 4 show that the adopted methods achieve state-of-the-art accuracy in iris presentation attack detection.
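The three metrics reported in Table 4 can be computed as in the following sketch. It uses the standard definitions, APCER as the share of attack presentations classified as bona fide and BPCER as the share of bona fide presentations classified as attacks, with a single attack type. The label strings and function name are hypothetical, and this is not the evaluation code behind the table.

```python
def pad_metrics(labels, predictions):
    """Return (accuracy, APCER, BPCER) in percent.

    Each label and prediction is either "attack" or "bonafide".
    """
    pairs = list(zip(labels, predictions))
    attack_preds = [p for truth, p in pairs if truth == "attack"]
    bonafide_preds = [p for truth, p in pairs if truth == "bonafide"]
    # APCER: attack presentations wrongly accepted as bona fide.
    apcer = sum(p == "bonafide" for p in attack_preds) / len(attack_preds)
    # BPCER: bona fide presentations wrongly rejected as attacks.
    bpcer = sum(p == "attack" for p in bonafide_preds) / len(bonafide_preds)
    accuracy = sum(truth == p for truth, p in pairs) / len(pairs)
    return accuracy * 100, apcer * 100, bpcer * 100
```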

4.3 Timings

For an open-source system to be deployable in real-world application scenarios, a fast running time is essential. A slow system is not desirable, even if it is cost-effective and built on open software and open hardware. Table 5 presents the average and standard deviation of the running times (in seconds) of each module in our system, on both NDCLD’15 and NDIris3D-LG sets. Note that all the times are for a pair of iris images, as required by the OSPAD-3D method, so processing times for a single iris image are approximately half of those reported in Table 5. All modules have fast running times because our implementation takes advantage of all four cores of the Raspberry Pi through multiprocessing. We can further parallelize OSPAD-2D with the other modules, since it does not depend on segmentation results. Note that while the running times for the other modules are roughly comparable for the two datasets, both the average and standard deviation of the OSPAD-2D running time are significantly higher on NDIris3D-LG than on NDCLD’15. This is because the training set of OSPAD-2D models partially overlaps with our subset of NDCLD’15. This makes OSPAD-2D decisions on NDCLD’15 more confident, thus triggering the early stopping more frequently on NDCLD’15 than on NDIris3D-LG.

| Operation | NDCLD’15 | NDIris3D |
|---|---|---|
| Segmentation | | |
| OSPAD-3D | | |
| OSPAD-2D | | |
| Iris Recognition | | |

We also compare the running time of CC-Net with those of the other three candidate segmentation tools: the OSIRIS segmentation module, and the SegNet-based and U-Net-based segmenters. Table 6 shows the running times of each method, along with the number of trainable parameters. Results show that CC-Net is the fastest segmentation method, faster than both OSIRIS and SegNet. The running time of U-Net is not reported because the model is too large to be run on Raspberry Pi. The large speed advantage of CC-Net is due to the fact that it has significantly fewer trainable parameters than SegNet and U-Net. Though OSIRIS does not need to tune any parameters, it requires a lot of computation to perform the Viterbi algorithm. Therefore, CC-Net is kept as the segmentation component in the final prototype.

| Method | Running time | # of trainable parameters |
|---|---|---|
| CC-Net | | |
| OSIRIS | | |
| SegNet | | |
| U-Net | | |

5 Summary

This paper proposes the first, known to us, complete open-source iris recognition system (hardware and software) that can be implemented on low-resource platforms supporting Python, such as Raspberry Pi. It features not only a fast and accurate CNN-based segmentation (which is usually the most time-consuming element of iris recognition pipeline) but also one of the most recent iris presentation attack detection methods, making it complete also in terms of security aspects. It may serve as a state-of-the-art open source platform for designing secure and low-cost iris recognition systems. All software components can be also easily run outside the Raspberry Pi, and even adapted to other hardware platforms supporting Python programming language and near-infrared imaging.

References

- [1] OpenBR – Open Source Biometric Recognition. http://openbiometrics.org/index.html.

- [2] S. Ahmad and B. Fuller. ThirdEye: Triplet Based Iris Recognition without Normalization. https://arxiv.org/abs/1907.06147, 2019.

- [3] V. Badrinarayanan, A. Kendall, and R. Cipolla. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 39(12):2481–2495, 2017.

- [4] A. Boyd, A. Czajka, and K. Bowyer. Deep Learning-Based Feature Extraction in Iris Recognition: Use Existing Models, Fine-tune or Train From Scratch? In 2019 IEEE 10th International Conference on Biometrics Theory, Applications and Systems (BTAS), pages 1–9, Tampa, FL, USA, 2019.

- [5] A. Czajka and K. W. Bowyer. Presentation attack detection for iris recognition: An assessment of the state-of-the-art. ACM Comput. Surv., 51(4):86:1–86:35, July 2018.

- [6] A. Czajka, Z. Fang, and K. Bowyer. Iris presentation attack detection based on photometric stereo features. In 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), pages 877–885, 2019.

- [7] A. Czajka, D. Moreira, K. Bowyer, and P. Flynn. Domain-specific human-inspired binarized statistical image features for iris recognition. In 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), pages 959–967, 2019.

- [8] J. G. Daugman. High confidence visual recognition of persons by a test of statistical independence. IEEE Transactions on Pattern Analysis and Machine Intelligence, 15(11):1148–1161, 1993.

- [9] J. Deng, J. Guo, N. Xue, and S. Zafeiriou. Arcface: Additive angular margin loss for deep face recognition. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 4685–4694, 2019.

- [10] J. S. Doyle and K. W. Bowyer. Robust detection of textured contact lenses in iris recognition using bsif. IEEE Access, 3:1672–1683, 2015.

- [11] J. J. Engelsma, K. Cao, and A. K. Jain. Raspireader: Open source fingerprint reader. IEEE Transactions on Pattern Analysis and Machine Intelligence, 41(10):2511–2524, 2019.

- [12] Z. Fang, A. Czajka, and K. W. Bowyer. Robust Iris Presentation Attack Detection Fusing 2D and 3D Information. https://arxiv.org/abs/2002.09137, 2020.

- [13] D. Gragnaniello, G. Poggi, C. Sansone, and L. Verdoliva. Using iris and sclera for detection and classification of contact lenses. Pattern Recognition Letters, 82(P2):251–257, Oct. 2016.

- [14] D. Gragnaniello, C. Sansone, G. Poggi, and L. Verdoliva. Biometric spoofing detection by a domain-aware convolutional neural network. In 2016 12th International Conference on Signal-Image Technology Internet-Based Systems (SITIS), pages 193–198, Nov 2016.

- [15] Y. Hu, K. Sirlantzis, and W. G. J. Howells. Iris liveness detection using regional features. Pattern Recognition Letters, 82:242–250, 2016.

- [16] ISO/IEC 19794-6:2011. Information technology – Biometric data interchange formats – Part 6: Iris image data, 2011.

- [17] E. Jalilian and A. Uhl. Iris Segmentation Using Fully Convolutional Encoder–Decoder Networks, pages 133–155. Aug. 2017.

- [18] D. Kerrigan, M. Trokielewicz, A. Czajka, and K. W. Bowyer. Iris recognition with image segmentation employing retrained off-the-shelf deep neural networks. In 2019 International Conference on Biometrics (ICB), pages 1–7, 2019.

- [19] J. Kinnison, M. Trokielewicz, C. Carballo, A. Czajka, and W. Scheirer. Learning-free iris segmentation revisited: A first step toward fast volumetric operation over video samples. In 2019 International Conference on Biometrics (ICB), pages 1–8, 2019.

- [20] A. Kumar. Contactless 3D Fingerprint Identification. Springer, 1 edition, 2018.

- [21] Y. Lee, R. J. Micheals, and P. J. Phillips. Improvements in video-based automated system for iris recognition (vasir). In 2009 Workshop on Motion and Video Computing (WMVC), pages 1–8, 2009.

- [22] J. Lozej, B. Meden, V. Struc, and P. Peer. End-to-end iris segmentation using u-net. In 2018 IEEE International Work Conference on Bioinspired Intelligence (IWOBI), pages 1–6, 2018.

- [23] J. Lozej, B. Meden, V. Struc, and P. Peer. End-to-end iris segmentation using u-net. In 2018 IEEE International Work Conference on Bioinspired Intelligence (IWOBI), pages 1–6, 2018.

- [24] L. Masek and P. Kovesi. Matlab source code for a biometric identification system based on iris patterns. 2003.

- [25] L. Masek and P. Kovesi. MATLAB Source Code for a Biometric Identification System Based on Iris Patterns. https://www.peterkovesi.com/studentprojects/libor/sourcecode.html, 2003. The School of Computer Science and Software Engineering – The University of Western Australia.

- [26] J. McGrath, K. W. Bowyer, and A. Czajka. Open Source Presentation Attack Detection Baseline for Iris Recognition. ArXiv, abs/1809.10172, 2018.

- [27] S. Mishra, P. Liang, A. Czajka, D. Z. Chen, and X. S. Hu. Cc-net: Image complexity guided network compression for biomedical image segmentation. In 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019), pages 57–60, 2019.

- [28] K. Nguyen, C. Fookes, A. Ross, and S. Sridharan. Iris recognition with off-the-shelf cnn features: A deep learning perspective. IEEE Access, 6:18848–18855, 2018.

- [29] N. Othman, B. Dorizzi, and S. Garcia-Salicetti. Osiris: An open source iris recognition software. Pattern Recognition Letters, 82:124 – 131, 2016. An insight on eye biometrics.

- [30] O. M. Parkhi, A. Vedaldi, and A. Zisserman. Deep face recognition. In British Machine Vision Conference, 2015.

- [31] H. Proença. Unconstrained Iris Recognition in Visible Wavelengths, pages 321–358. Springer London, London, 2016.

- [32] K. B. Raja, R. Raghavendra, and C. Busch. Binarized statistical features for improved iris and periocular recognition in visible spectrum. In 2nd International Workshop on Biometrics and Forensics, pages 1–6, 2014.

- [33] C. Rathgeb, A. Uhl, P. Wild, and H. Hofbauer. Design decisions for an iris recognition sdk. In K. Bowyer and M. J. Burge, editors, Handbook of Iris Recognition, Advances in Computer Vision and Pattern Recognition. Springer, second edition, 2016.

- [34] O. Ronneberger, P. Fischer, and T. Brox. U-net: Convolutional networks for biomedical image segmentation. In N. Navab, J. Hornegger, W. M. Wells, and A. F. Frangi, editors, Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015, pages 234–241, Cham, 2015. Springer International Publishing.

- [35] M. Trokielewicz and E. Bartuzi. Cross-spectral iris recognition for mobile applications using high-quality color images. Journal of Telecommunications and Information Technology, 3:91–97, 2016.

- [36] M. Trokielewicz and A. Czajka. Data-driven segmentation of post-mortem iris images. In 2018 International Workshop on Biometrics and Forensics (IWBF), pages 1–7, June 2018.