Online Estimation and Inference for Robust Policy Evaluation in Reinforcement Learning

Abstract

Recently, reinforcement learning has gained prominence in modern statistics, with policy evaluation being a key component. Unlike traditional machine learning literature on this topic, our work places emphasis on statistical inference for the parameter estimates computed using reinforcement learning algorithms. While most existing analyses assume random rewards to follow standard distributions, limiting their applicability, we embrace the concept of robust statistics in reinforcement learning by simultaneously addressing issues of outlier contamination and heavy-tailed rewards within a unified framework. In this paper, we develop an online robust policy evaluation procedure, and establish the limiting distribution of our estimator, based on its Bahadur representation. Furthermore, we develop a fully-online procedure to efficiently conduct statistical inference based on the asymptotic distribution. This paper bridges the gap between robust statistics and statistical inference in reinforcement learning, offering a more versatile and reliable approach to policy evaluation. Finally, we validate the efficacy of our algorithm through numerical experiments conducted in real-world reinforcement learning experiments.

Keywords: Statistical inference; dependent samples; policy evaluation; reinforcement learning; online learning.

1 Introduction

Reinforcement learning has offered immense success and remarkable breakthroughs in a variety of application domains, including autonomous driving, precision medicine, recommendation systems, and robotics (to name a few, e.g., Murphy, 2003; Kormushev et al., 2013; Mnih et al., 2015; Shi et al., 2018). From recommendation systems to mobile health (mHealth) intervention, reinforcement learning can be used to adaptively make personalized recommendations and optimize intervention strategies learned from retrospective behavioral and physiology data. While the achievements of reinforcement learning algorithms in applications are undisputed, the reproducibility of its results and reliability is still in many ways nascent.

Those recommendation and health applications enjoy great flexibility and affordability due to the development of reinforcement algorithms, despite calling for critical needs for a reliable and trustworthy uncertainty quantification for such implementation. The reliability of such implementations sometimes plays a life-threatening role in emerging applications. For example, in autonomous driving, it is critical to avoid deadly explorations based upon some uncertainty measures in the trial-and-error learning procedure. This substance also extends to other applications including precision medicine and autonomous robotics. From the statistical perspective, it is important to quantify the uncertainty of a point estimate with complementary hypothesis testing to reveal or justify the reliability of the learning procedure.

Policy evaluation plays a cornerstone role in typical reinforcement learning (RL) algorithms such as Temporal Difference (TD) learning. As one of the most commonly adopted algorithms for policy evaluation in RL, TD learning provides an estimator of the value function iteratively with regard to a given policy based on samples from a Markov chain. In large-scale RL tasks where the state space is infinitely expansive, a typical procedure to provide a scalable yet efficient estimation of the value function is via linear function approximation. This procedure can be formulated in a linear stochastic approximation problem (Sutton, 1988; Tsitsiklis and Van Roy, 1997; Sutton et al., 2009; Ramprasad et al., 2022), which is designed to sequentially solve a deterministic equation by a matrix-vector pair of a sequence of unbiased random observations of governed by an ergodic Markov chain.

The earliest and most prototypical stochastic approximation algorithm is the Robbins-Monro algorithm introduced by Robbins and Monro (1951) for solving a root-finding problem, where the function is represented as an expected value, e.g., . The algorithm has generated profound interest in the field of stochastic optimization and machine learning to minimize a loss function using random samples. When referring to an optimization problem, its first-order condition can be represented as , and the corresponding Robbins-Monro algorithm is often referred to as first-order methods, or more widely known as stochastic gradient descent (SGD) in machine learning literature. It is well established in the literature that its averaged version (Ruppert, 1988; Polyak and Juditsky, 1992), as an online first-order method, achieves optimal statistical efficiency when estimating the model parameters in statistical models, which apparently kills the interest in developing second-order methods that use additional information to help the convergence. That being said, it is often observed in practice that first-order algorithms entail significant accuracy loss on non-asymptotic convergence as well as severe instability in the choice of hyperparameters, specifically, the stepsizes (a.k.a. learning rate). In addition, the stepsize tuning further complicates the quantification of uncertainty associated with the algorithm output. Despite the known drawbacks above, first-order stochastic methods are historically favored in machine learning tasks due to their computational efficiency while they primarily focus on estimation. On the other hand, when the emphasis of the task lies on statistical inference, certain computation of the second-order information is generally inevitable during the inferential procedure, which shakes the supremacy of first-order methods over second-order algorithms.

In light of that, we propose a second-order online algorithm which utilizes second-order information to perform the policy evaluation sequentially. Meanwhile, our algorithm can be used for conducting statistical inference in an online fashion, allowing for the characterization of uncertainty in the estimation of the value function. Such a procedure generates no extra per-unit computation and storage cost beyond , which is at least the same as typical first-order stochastic methods featuring statistical inference (see, e.g., Chen et al., 2020 for SGD and Ramprasad et al., 2022 for TD). More importantly, we show theoretically that the proposed algorithm converges faster in terms of the remainder term compared with first-order stochastic approximation methods, and revealed significant discrepancies in numerical experiments. In addition, the proposed algorithm is free from tuning stepsizes, which has been well-established as a substantial criticism of first-order algorithms.

Another challenge to the reliability of reinforcement learning algorithms lies in the modeling assumptions. Most algorithms in RL have been in the “optimism in the face of uncertainty” paradigm where such procedures are vulnerable to manipulation (see some earlier exploration in e.g., Everitt et al., 2017; Wang et al., 2020). In practice, it is often unrealistic to believe that rewards on the entire trajectory follow exactly the same underlying model. Indeed, non-standard behavior of the rewards happens from time to time in practice. The model-misspecification and presence of outliers are indeed very common in an RL environment, especially that with a large time horizon . It is of substantial interest to design a robust policy evaluation procedure. In pursuit of this, our proposed algorithm uses a smoothed Huber loss to replace the least-squares loss function used in classical TD learning, which is tailored to handle both outliers and heavy-tailed rewards. To model outlier observations of rewards in reinforcement learning, we bring the static -contamination model (Huber, 1992) to an online setting with dependent samples. In a static offline robust estimation problem, one aims to learn the distribution of interest, where a sample of size is drawn i.i.d. from a mixture distribution , and denotes an arbitrary outlier distribution. We adopt robust estimation in an online environment, where the observations are no longer independent, and the occurrence time of outliers is unknown. In contrast to the offline setting, future observations cannot be used in earlier periods in an online setting. Therefore, in the earlier periods, there is very limited information to help determine whether an observation is an outlier. In addition to this discrepancy between the online decision process and offline estimation, we further allow the outlier reward models to be potentially different for different time instead of being from a fixed distribution, and such rewards may be arbitrary and even adversarially adaptive to historical information. Besides the outlier model, our model also incorporates rewards with heavy-tailed distributions. This substantially relaxes the boundness condition that is commonly assumed in policy evaluation literature.

We summarize the challenges and contributions of this paper in the following facets.

-

•

We propose an online policy evaluation method with dependent samples and simultaneously conduct statistical inference for the model parameters in a fully online fashion. Furthermore, we build a Bahadur representation of the proposed estimator, which includes the main term corresponding to the asymptotic normal distribution and a higher-order remainder term. To the best of our knowledge, there is no literature establishing the Bahadur representation for online policy evaluation. Moreover, it shows that our algorithm matches the offline oracle and converges strictly faster to the asymptotic distribution than that of a prototypical first-order stochastic method such as TD learning.

-

•

Compared to existing reinforcement learning literature, our proposed algorithm features an online generalization of the -contamination model where the rewards contain outliers or arbitrary corruptions. Our proposed algorithm is robust to adversarial corruptions which can be adaptive to the trajectory, as well as heavy-tailed distribution of the rewards. Due to the existence of outliers, we use a smooth Huber loss where the thresholding parameter is carefully specified to change over time to accommodate the online streaming data. From a theoretical standpoint, a robust policy evaluation procedure forces the update step from to to be a non-linear function of , which brings in additional technical challenges compared to the analysis of classical TD learning algorithms (see e.g., Ramprasad et al., 2022) based on linear stochastic approximation.

-

•

Our proposed algorithm is based on a dedicated averaged version of the second-order method, where in each iteration a surrogate Hessian is obtained and used in the update step. This second-order information enables the proposed algorithm to be free from stepsize tuning while still ensuring efficient implementation. Furthermore, our proposed algorithm stands out distinctly from conventional first-order stochastic approximation approaches which fall short of attaining the optimal offline remainder rate. On the other hand, while deterministic second-order methods do excel in offline scenarios, they lack the online adaptability crucial for real-time applications.

1.1 Related Works

Conducting statistical inference for model parameters in stochastic approximation has attracted great interest in the past decade, with a building foundation of the asymptotic distribution of the averaged version of stochastic approximation first established in Ruppert (1988); Polyak and Juditsky (1992). The established asymptotic distribution has been brought to conduct online statistical inference. For example, Fang et al. (2018) presented a perturbation-based resampling procedure for inference. Chen et al. (2020) proposed two online procedures to estimate the asymptotic covariance matrix to conduct inference. Other than those focused on the inference procedures, Shao and Zhang (2022) established the remainder term and Berry-Esseen bounds of the averaged SGD estimator. Givchi and Palhang (2015); Mou et al. (2021) analyzed averaged SGD with Markovian data. Second-order stochastic algorithms were analyzed in Ruppert (1985); Schraudolph et al. (2007); Byrd et al. (2016) and applied to TD learning in Givchi and Palhang (2015).

Under the online decision-making settings including bandit algorithms and reinforcement learning, a few existing works focused on statistical inference of the model parameters or uncertainty quantification of value functions. Deshpande et al. (2018); Chen et al. (2021a, b); Zhan et al. (2021); Zhang et al. (2021, 2022) studied statistical inference for linear models, -estimation, and the non-Markovian environment with data collected via a bandit algorithm, respectively. Thomas et al. (2015) proposed high-confidence off-policy evaluation based on Bernstein inequality. Hanna et al. (2017) presented two bootstrap methods to compute confidence bounds for off-policy value estimates. Dai et al. (2020); Feng et al. (2021) construct confidence intervals for value functions based on optimization formulations, and Jiang and Huang (2020) derived a minimax value interval, both with i.i.d. sample. Shi et al. (2021a) developed online inference procedures for high-dimensional problems. Shi et al. (2021b) proposed inference procedures for Q functions in RL via sieve approximations. Hao et al. (2021) studied multiplier bootstrap algorithms to offer uncertainty quantification for exploration in fitted Q-evaluation. Syrgkanis and Zhan (2023) studied a re-weighted Z-estimator on episodic RL data and conducted inference on the structural parameter.

The most relevant literature to ours is Ramprasad et al. (2022), who studied a bootstrap online statistical inference procedure under Markov noise using a quadratic SGD and demonstrated its application in the classical TD (and Gradient TD) algorithms in RL. Our proposed procedure and analysis differ in at least two aspects. First, our proposed estimator is a Newton-type second-order approach that enjoys a faster convergence and optimality in the remainder rate. In addition, we show both analytically and numerically that the computation cost of our procedure is typically lower than Ramprasad et al. (2022) for an inference task. Second, our proposed algorithm is a robust alternative to TD algorithms, featuring a non-quadratic loss function to handle the potential outliers and heavy-tailed rewards. There exists limited RL literature on either outliers or heavy-tailed rewards. In a recent work Li and Sun (2023), the author studied online linear stochastic bandits and linear Markov decision processes in the presence of heavy-tailed rewards. While both our work and theirs apply pseudo-Huber loss, our work focuses on policy evaluation with statistical inference guarantees.

1.2 Paper Organization and Notations

The remainder of this paper is organized as follows. In Sections 2 and 3, we present and discuss our proposed algorithm for robust policy evaluation in reinforcement learning. Theoretical results on convergence rates, asymptotic normality, and the Bahadur representation are presented in Section 3. In Section 4, we develop an estimator for the long-run covariance matrix to construct confidence intervals in an online fashion and provide its theoretical guarantee. Simulation experiments are provided in Section 5 to demonstrate the effectiveness of our method. Concluding remarks are given in Section 6. All proofs are deferred to the Appendix.

For every vector , denote , , and . For simplicity, we denote and as the unit sphere and unit ball in centered at . Moreover, we use as the support of the vector . For every matrix , define as the matrix operator norms, and as the largest and smallest singular values of respectively. The symbols () denote the greatest integer (the smallest integer) not larger than (not less than) . We denote . For two sequences , we say when and hold at the same time. We say if . For a sequence of random variables , we denote if there holds , and denote if there holds . Lastly, the generic constants are assumed to be independent of and .

2 Robust Policy Evaluation in Reinforcement Learning

We first review the Least-squares temporal difference methods in RL. Consider a -tuple . Here is the global finite state space, is the set of control (action), is the transition kernel, and is the reward function. One of the core steps in RL is to estimate the accumulative reward (which is also called the state value function) for a given policy:

where is a given discount factor and is any state. Here denote the environment states which are usually modeled by a Markov chain. In real-world RL applications, the state space is often very large such that one cannot directly compute value estimates for every state in the state space. A common approach in the modern RL is to approximate the value function , i.e., let

be a linear approximation of , where contains the model parameters, and is a set of feature vectors that corresponds to the state . Here we write , are linearly independent vectors in and . That is, we use a low dimensional linear approximation (with the basis vectors ) for . Let the matrix and then .

The state value function satisfies the Bellman equation

| (1) |

where is the next state transferred from . Let be the probability transition matrix and define the Bellman operator by . The state function is the unique fixed point of the Bellman operator . When is replaced by , the Bellman equation may not hold.

The classical temporal difference attempts to find such that well approximates the value function . Particularly, the TD algorithm updates

| (2) |

where is the step size which often requires careful tuning. We next illustrate the key point that (2) leads to a good estimator such that is close to . It can be shown that converges to an unknown population parameter that minimizes the expected squared difference of the Bellman equation,

| (3) |

It is easy to see that such exists and satisfies the following first-order condition,

| (4) |

Under the condition that is positive definite in the sense that for all ; see Tsitsiklis and Van Roy (1997), the solution of (4) writes

The estimation equation (2) is a first-order stochastic algorithm that converges to the stochastic root-finding problem (4) using a sequence of observations . In this paper, we refer to it as the Least-squares temporal difference estimator. See Kolter and Ng (2009) for details on the properties of the Least-squares TD estimator.

Least-squares-based methods are oftentimes criticized due to their sensitivity to outliers in data. When there may exist outliers in some observations of the reward , it is a natural call for interest to design a robust estimator of . Following the widely-known classical literature (Huber, 1964; Charbonnier et al., 1994, 1997; Hastie et al., 2009) , we replace the square loss by a smoothed (Pseudo) Huber loss , parametrized by a thresholding parameter . We define a similar fixed point equation with the smoothed Huber loss by

| (5) |

In this section, when we motivate the algorithm, we assume that the fixed point exists 111Here is used for the motivation only. Our algorithm and theory do not depend on the existence of . . As the thresholding parameter goes to infinity, the objective equation in (5) is close to the least-squares loss in (3), and should be close to . When tends to , the problem (5) becomes with a nonsmooth least absolute deviation (LAD) loss, which is out of the scope of this paper. In this paper, we carefully specify to balance the statistical efficiency and the effect of potential outliers in an online fashion (see Theorem 1).

By the first-order condition of (5), we obtain a similar estimation function as (4),

| (6) |

where the function is the differential of the smoothed Huber loss . Instead of using the first-order iteration with the estimation equation (6) as in TD (2), we propose a Newton-type iterative estimator, which avoids the tuning of the learning rate. Newton-type estimators are often referred to as second-order methods when discussed in convex optimization. Nonetheless, the equation (5) is just for illustration purposes, which cannot be directly optimized since appears in the objective function for minimization. On the contrary, our proposed method is a Newton-type method for solving the root-finding problem (6) using a sequence of the observations.

In this paper, we model two types of noise in observed rewards. The first is the classical Huber contamination model (Huber, 1992, 2004), where an -fraction of rewards comes from arbitrary distributions. The second is the heavy-tailed model, where the reward function may admit heavy-tailed distributions. In the following section, we show that our proposed estimator is robust to the aforementioned two noises.

3 Online Newton-type Method for Parameter Estimation

In the following, we introduce an online Newton-type method for estimating the parameter in the presence of outliers and heavy-tailed noise in the reward function . For ease of presentation, we denote the observations by , , and , where is the state at time . Here for each observation in the sample. Our objective is to propose an online estimator by the estimation function (6), which can be rewritten as

| (7) |

based on a sequence of dependent observations .

At iteration , our proposed Newton-type estimator updates by

| (8) |

Here is an empirical information matrix of the estimation equation (7), as

| (9) |

where is the thresholding parameter in the Huber loss. We let tend to infinity to eliminate the bias generated by the smoothed Huber loss.

It is noteworthy to mention that the matrix is not the Hessian matrix of the objective function on the right-hand side of (5). As discussed in the previous section, (5) cannot be directly optimized, nor do they lead to M-estimation problems. Indeed, our proposed update (8) is a Newton-type method to find the root of (7) using a sequence of observations . In the following section, we will show the use of the matrix helps for desirable convergence properties.

It should be noted that (8) can be implemented efficiently in a fully-online manner, i.e., without storage of the trajectory of historical information. Specifically, we write (8) as , with each of the item on the right-hand side being a running average. It is easy to see that the averaged estimator and the vector

| (10) |

can both be updated online. In addition, the inverse can be directly and efficiently computed online by the inverse recursion formulation. By the Sherman-Morrison formula, we have

| (11) | ||||

Here we note that both terms and inside the brackets on the right-hand side of (11) are scalars in , not matrices.

The complete algorithm is presented in Algorithm 1. We refer to it as the Robust Online Policy Evaluation (ROPE). Compared with existing stochastic approximation algorithms for TD learning (Durmus et al., 2021; Mou et al., 2021; Ramprasad et al., 2022), our ROPE algorithm does not need to tune the step size.

Since performing iterations of in (11) requires an initial invertible , we shall compute from the first samples that

| (12) |

which serves as the initial quantities of (10)–(11) in order to compute the forthcoming iterations. Here is a given initial parameter, and is a specified initial threshold level.

3.1 Convergence Rate of ROPE

Before illustrating how to conduct statistical inference on the parameter , we first provide theoretical results for our proposed ROPE method.

To characterize the weak dependence of the sequence of environment states, we use the concept of -mixing. More precisely, we assume is a sequence of -mixing dependent variables, which satisfy

| (13) |

for all and all , where . The -mixing dependence covers the irreducible and aperiodic Markov chain, which is typically used to model the states sampling in reinforcement learning (RL) (Rosenblatt, 1956; Rio, 2017, and others). The mixing rate identifies the strength of dependence of the sequence. In this paper, we assume the following condition on the mixing rate.

Condition (C1).

The sequence is a stationary -mixing sequence, where the mixing rate satisfies for some .

It is easy to see that (C1) holds when is an irreducible and aperiodic finite-state Markov chain. In addition, we also assume the following boundedness condition on that is commonly required by the RL literature (Sutton and Barto, 2018; Ramprasad et al., 2022).

Condition (C2).

There exists a constant such that .

Next, we assume the matrix has a bounded condition number.

Condition (C3).

There exists a constant such that , where and denote the largest and the smallest singular values, respectively.

When is an irreducible and aperiodic Markov chain, Tsitsiklis and Van Roy (1997) proved that is positive definite, i.e., for all . This indicates that is nonsingular and hence (C3) holds. Finally, we provide conditions on the temporal difference error .

Condition (C4).

The temporal-difference error comes from the distribution , where . The distribution is an arbitrary distribution, and satisfies that holds uniformly for some constants and .

We assume that the temporal-difference error comes from the Huber contamination model. The outlier distribution can be arbitrary and the true distribution has -th order of moment (), which does not necessarily indicate a variance exists when . Therefore, our assumption largely extends the boundedness condition of the reward function in RL literature (Sutton and Barto, 2018; Ramprasad et al., 2022). In Condition (C4), controls the ratio of outliers among samples. Particularly, means that there are outliers among samples . Given the above conditions, by selecting the thresholding parameter as defined in (8), we can obtain the following statistical rate for our proposed ROPE estimator.

Theorem 1.

The error bounds in (14) contains five terms. In the sequel, we will discuss each of these terms individually. The first term comes from the impact of outliers among the samples. Here the contamination ratio should tend to zero; otherwise, it is impossible to obtain a consistent estimator for . The second term is due to the bias from smoothed Huber’s loss for mean estimation. The third and the fourth term in (14) is the classical statistical rate due to the variance and boundedness incurred by thresholding, respectively. These two terms commonly appear in the Bernstein-type inequalities (see, e.g. Bennett, 1962). As we can see from the third term, the convergence rate has a phase transition between the regimes of finite variance and infinite variance (see also Corollary 3 below). This phenomenon has also been observed in different estimation problems with Huber loss; see Sun et al. (2020) and Fan et al. (2022). In the presence of a higher moment condition, specifically , we can eliminate the fourth term by applying a more delicate analysis (See Proposition 5 below and Proposition 8 in Appendix for more details). The last term represents how quickly the proposed iterative algorithm converges. Given an initial value with an error , the proposed second-order method enjoys a local quadratic convergence, i.e., the error evolves from to after iterations. This term decreases super-exponentially fast, a characteristic convergence rate often encountered in the realm of second-order methods (see, e.g., Nesterov, 2003). Specifically, when the sample size, which is equivalent to the number of iterations, is reasonably large, the last term is dominated by the statistical error in the other terms of (14). The assumption on the initial error for some is mild. A valid initial value can be obtained by the solution to the following estimation equation using a subsample with fixed size before running Algorithm 1 with a specified constant threshold . The above equation can be solved efficiently by classical first-order root-finding algorithms.

To highlight the relationship between the convergence rate and the outlier rewards, we present the following corollary which gives a clear statement on how the rate depends on the number of outliers .

Corollary 2.

Suppose the conditions in Theorem 1 hold with . Let the thresholding parameter with some and tend to infinity. We have

where is the number of outliers among the samples of size .

The corollary shows that, when there are outliers, our ROPE can still estimate consistently. Note that in is the exponent specified by the practitioner. A smaller allows more outliers among the samples. Furthermore, when there are outliers and , the ROPE estimator achieves the optimal rate , up to a logarithm term.

The next corollary indicates the impact of the tail of rewards on the convergence rate. For a clear presentation, we discuss the impact under the case without outliers, i.e., .

Corollary 3.

Suppose the conditions in Theorem 1 hold with the contamination rate and let tend to infinity.

-

•

When , we specify . Then

-

•

When , we specify . Then

Corollary 3 illustrates a sharp phase transition between the regimes of infinite variance and finite variance , and such transition is smooth and optimal. The rate of convergence established in Corollary 3 matches the offline oracle with independent samples in the estimation of linear regression model (Sun et al., 2020), ignoring the logarithm term. A similar corollary can be established for this phase transition of the convergence rate when the contamination rate .

3.2 Asymptotic Normality and Bahadur Representation

In the following theorem, we give the Bahadur representation for the proposed estimator . The Bahadur representation provides a more refined rate for the estimation error. Moreover, the asymptotic normality can be established by applying the central limit theorem to the main term in the representation.

Theorem 4.

Theorem 4 provides a Bahadur representation for the proposed estimator , which contains a main term corresponding to an asymptotic normal distribution in (16), and a higher-order remainder term. The asymptotic normality and its concrete form of the covariance matrix pave the way for further research on conducting statistical inference efficiently in an online fashion, demonstrated in Section 4 below. To achieve the asymptotic normality, additional conditions and are required on the specification of the thresholding parameters . These conditions easily hold when the practitioner specifies with and the number of outliers satisfies . We further note that to establish the Bahadur representation in the above theorem, we require the sixth moment to exist (i.e., ). This condition may be weakened and we believe a similar Bahadur representation holds for . We leave this theoretical question to future investigation.

It is worthwhile to note that even for the case where there is no contamination, i.e., , our result is still new. As far as we know, there is no literature establishing the Bahadur representation for the TD method. To highlight the rate of convergence concisely in the remainder term, we provide the following corollary under .

Proposition 5.

Suppose the conditions of Theorem 4 hold with and for and some , we have for any nonzero with ,

In this proposition, we specify to ensure that the remainder term possesses an order of . Notably, this result is not a direct corollary of Theorems 1 and 4, since the rate of in (14) of Theorem 1 is not sharp enough to guarantee the term in (15) converges as fast as in Proposition 5. To reach this fast rate of the remainder, we establish an improved rate of Theorem 1 under the stronger assumptions, achieved by eliminating the fourth term of (14). The detailed formal result of the improved rate of is relegated to Proposition 8 in Appendix.

Remark 1.

We provide some discussion on the remainder term in the Bahadur representation. Since we have not found any result on the Bahadur representation for TD algorithm in literature, we compare it with SGD instead, as the theoretical properties of TD are expected to be similar to SGD. For i.i.d. samples, Polyak and Juditsky (1992) shows that the average of SGD with learning rate is consistent when . Their proof indicates that the remainder term is at the order of (assuming that the dimension is a constant for simplicity). It is easy to see that SGD with any choice of leads to a strictly slower rate of the remainder than that of our proposed method, .

4 Estimation of Long-Run Covariance Matrix and Online Statistical Inference

In the above section, we provide an online Newton-type algorithm to estimate the parameter . As we can see in Theorem 4, the proposed estimator has an asymptotic variance with a sandwich structure . To conduct statistical inference simultaneously with ROPE method we proposed in Section 3, we propose an online plug-in estimator for this sandwich structure. Note that the online estimator of is proposed and utilized in (11) of the estimation algorithm. It remains to construct an online estimator for in (17).

Developing an online estimator of with dependent samples is intricate compared to the case of independent ones. As the samples are dependent, the covariance matrix is an infinite sum of the series of time-lag covariance matrices. To estimate the above long-run covariance matrix , we first rewrite the definition of in (17) into the following form

Next, we replace each term in (17) with its empirical counterpart using the samples. Meanwhile, to handle outliers, we replace by . In addition, the infinite sum in (17) is not feasible for direct estimation. Nonetheless, Condition (C1) on the mixing rate of allows us to approximate it by effectively estimating the first terms only, where is a pre-specified constant. In summary, we can construct the following estimator for the covariance matrix ,

| (18) | ||||

To perform a fully-online update for the estimator , we define

and the above equation (18) can be rewritten as,

In practice, at the -th step, we keep only the terms in memory, and the covariance matrix can be updated by

| (19) |

The proposed estimator complements the estimator in (11) to construct an estimator of the sandwich form that appears in the asymptotic distribution in Theorem 4.

Specifically, we can construct the confidence interval for the parameter using the asymptotic distribution in (16) in the following way. For any unit vector , a confidence interval with nominal level is

| (20) |

where and denotes the -th quantile of a standard normal distribution.

Remark 2.

We provide some insights into the computational complexity of our inference procedure. Both estimators and can be computed in an per-iteration computation cost, as discussed in (11). In addition, the computation requires three vector-matrix multiplication with the same computation complexity .

On the contrary, the online bootstrap method proposed in Ramprasad et al. (2022) has a per-iteration complexity of at least , where the resampling size in the bootstrap is usually much larger than in practice.

In the following theorem, we provide the theoretical guarantee of the above construction of the confidence intervals by showing that our online estimator of the covariance matrix, , is consistent.

Theorem 6.

Theorem 6 provides an upper bound on the estimation error . To achieve the consistency on , we specify the thresholding parameter for , such that

In this case, as long as the fraction of outliers satisfies , we obtain a consistent estimator of the matrix . In other words, our proposed robust algorithm allows outliers, ignoring the dimension. We summarize the result in the following corollary.

Corollary 7.

Suppose the conditions of Theorem 4 hold, and the fraction of outliers satisfies , where we specify and . Then given a vector and a pre-specified confidence level , we have

where and denotes the -th quantile of a standard normal distribution.

5 Numerical Experiments

In this section, we assess the performance of our ROPE algorithm by conducting experiments under various settings. We construct all confidence intervals with a nominal coverage of . Throughout the majority of experiments, we compare our method with the online bootstrap method with the linear stochastic approximation proposed in Ramprasad et al. (2022), which we refer to as LSA. The hyperparameters for LSA are selected based on the settings described in Sections 5.1 and 5.2 of Ramprasad et al. (2022).

5.1 Parameter Inference for Infinite-Horizon MDP

In the first experiment, we focus on an infinite-horizon Markov Decision Process (MDP) setting. Specifically, we create an environment with a state space of size and an action space of size . The dimension of the features is fixed at . The transition probability kernel of the MDP, the state features, and the policy under evaluation are all randomly generated. By employing the Bellman equation (1), we can compute the expected rewards at each state under the policy. To introduce variability, we add noise to the expected rewards, drawn from different distributions such as the standard normal distribution, distribution with a degree of freedom of , and standard Cauchy distribution. The main objective of this experiment is to construct a confidence interval for the first coordinate of the true parameter .

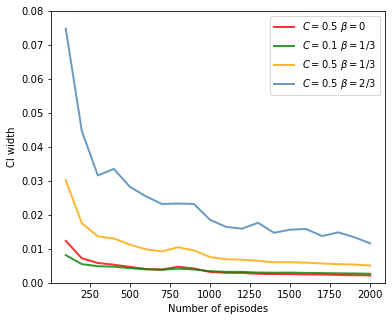

We set the thresholding level as where is a positive constant. We begin with an investigation of the influence of the parameters and on the coverage probability and width of the confidence interval in our ROPE algorithm. Figure 1 displays the results, revealing that the performance is relatively robust to variations in and , irrespective of the type of noise applied. Notably, although our theoretical guarantees are based on the noises with a -th order moment (See Theorem 4), the experiment indicates that our method exhibits a wider range of applicability.

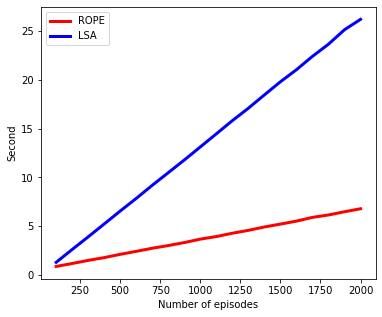

In subsequent experiments, we specify and for the ROPE algorithm and compare it with the LSA method. Alongside the coverage probability and width of the confidence interval, we also assess the average running time for both methods. The findings are depicted in Figure 2. It is evident that, under a light-tailed noise that admits normal distribution, the two methods yield comparable results, with the ROPE method consistently outperforming LSA in terms of the confidence interval width. When the noise exhibits heavy-tailed characteristics, the confidence interval width of LSA is notably larger than that of ROPE. Additionally, the runtime of ROPE is approximately four times shorter than that of LSA.

In the LSA method, the step size is determined by the expression , which relies on two positive hyperparameters and . In this experiment, we investigate the sensitivity of LSA method on these parameters. For comparison, we also present the result of our ROPE algorithm, which does not require any hyperparameter tuning. In this particular experiment, we specify the noise distribution to be standard normal, and directly apply the online Newton step on the square loss (as opposed to the pseudo-Huber loss). Notably, Figure 3 illustrates that the LSA method is sensitive to the parameter . Specifically, when takes on larger values, the LSA method generates significantly wider confidence intervals than that of LSA with well-tuned hyperparameters, which is undesirable in practical applications. Meanwhile, our ROPE method always generates confidence intervals with a valid width and comparable coverage rate.

5.2 Value Inference for FrozenLake RL Environment

In this section, we consider the Frozenlake environment provided by OpenAI gym, which involves a character navigating an grid world. The character’s objective is to start from the first tile of the grid and reach the end tile within each episode. If the character successfully reaches the target tile, a reward of is obtained; otherwise, the reward is . In our setup, we generate the state features randomly, with a dimensionality of . The policy is trained using -learning, and the true parameter can be explicitly computed using the transition probability matrix. Under a contaminated reward model, we introduce random perturbations by replacing the true reward with a value uniformly sampled from the range with probability . Our main goal is to infer the value at the initial state.

Following the approach in Section 5.1, we proceed to examine the sensitivity of ROPE to the thresholding parameters and . We present the results in Figure 4, and observe that the performance of ROPE is influenced by both the contamination rate and the chosen thresholding parameter. Specifically, when is small, a larger thresholding parameter tends to yield better outcomes. However, as increases, the use of a large thresholding parameter can negatively impact performance.

Subsequently, we set the values , when or , and when for ROPE algorithm, and compare its performance with that of the LSA method. Figure 5 displays the results, which clearly demonstrates that our ROPE method consistently outperforms LSA in terms of coverage rates, CI widths, and running time. The advantage of ROPE becomes more pronounced as the contamination rate increases.

6 Concluding Remarks

This paper introduces a robust online Newton-type algorithm designed for policy evaluation in reinforcement learning under the influence of heavy-tailed noise and outliers. We demonstrate the estimation consistency as well as the asymptotic normality of our estimator. We further establish the Bahadur representation and show that it converges strictly faster than that of a prototypical first-order method such as TD. To further conduct statistical inference, we propose an online approach for constructing confidence intervals. Our experimental results highlight the efficiency and robustness of our approach when compared to the existing online bootstrap method. In future research, it would be intriguing to explore online statistical inference for off-policy evaluation.

References

- Bennett (1962) Bennett, G. (1962), “Probability inequalities for the sum of independent random variables,” Journal of the American Statistical Association, 57, 33–45.

- Berkes and Philipp (1979) Berkes, I. and Philipp, W. (1979), “Approximation theorems for independent and weakly dependent random vectors,” The Annals of Probability, 7, 29–54.

- Billingsley (1968) Billingsley, P. (1968), Convergence of Probability Measures, Wiley Series in Probability and Mathematical Statistics, Wiley.

- Byrd et al. (2016) Byrd, R. H., Hansen, S. L., Nocedal, J., and Singer, Y. (2016), “A stochastic quasi-Newton method for large-scale optimization,” SIAM Journal on Optimization, 26, 1008–1031.

- Charbonnier et al. (1994) Charbonnier, P., Blanc-Feraud, L., Aubert, G., and Barlaud, M. (1994), “Two deterministic half-quadratic regularization algorithms for computed imaging,” in Proceedings of 1st International Conference on Image Processing.

- Charbonnier et al. (1997) — (1997), “Deterministic edge-preserving regularization in computed imaging,” IEEE Transactions on Image Processing, 6, 298–311.

- Chen et al. (2021a) Chen, H., Lu, W., and Song, R. (2021a), “Statistical inference for online decision making: In a contextual bandit setting,” Journal of the American Statistical Association, 116, 240–255.

- Chen et al. (2021b) — (2021b), “Statistical inference for online decision making via stochastic gradient descent,” Journal of the American Statistical Association, 116, 708–719.

- Chen et al. (2020) Chen, X., Lee, J. D., Tong, X. T., and Zhang, Y. (2020), “Statistical inference for model parameters in stochastic gradient descent,” The Annals of Statistics, 48, 251–273.

- Dai et al. (2020) Dai, B., Nachum, O., Chow, Y., Li, L., Szepesvári, C., and Schuurmans, D. (2020), “Coindice: Off-policy confidence interval estimation,” Advances in Neural Information Processing Systems.

- Deshpande et al. (2018) Deshpande, Y., Mackey, L., Syrgkanis, V., and Taddy, M. (2018), “Accurate inference for adaptive linear models,” in International Conference on Machine Learning, PMLR, pp. 1194–1203.

- Doukhan et al. (1994) Doukhan, P., Massart, P., and Rio, E. (1994), “The functional central limit theorem for strongly mixing processes,” in Annales de l’Institut Henri Poincaré, Probabilités et Statistiques, vol. 30, pp. 63–82.

- Durmus et al. (2021) Durmus, A., Moulines, E., Naumov, A., Samsonov, S., and Wai, H.-T. (2021), “On the stability of random matrix product with Markovian noise: Application to linear stochastic approximation and TD learning,” in Proceedings of Thirty Fourth Conference on Learning Theory.

- Everitt et al. (2017) Everitt, T., Krakovna, V., Orseau, L., and Legg, S. (2017), “Reinforcement learning with a corrupted reward channel,” in Proceedings of the 26th International Joint Conference on Artificial Intelligence.

- Fan et al. (2022) Fan, J., Guo, Y., and Jiang, B. (2022), “Adaptive Huber regression on Markov-dependent data,” Stochastic Processes and their Applications, 150, 802–818.

- Fang et al. (2018) Fang, Y., Xu, J., and Yang, L. (2018), “Online bootstrap confidence intervals for the stochastic gradient descent estimator,” Journal of Machine Learning Research, 19, 1–21.

- Feng et al. (2021) Feng, Y., Tang, Z., Zhang, N., and Liu, Q. (2021), “Non-asymptotic confidence intervals of off-policy evaluation: Primal and dual bounds,” in International Conference on Learning Representations.

- Freedman (1975) Freedman, D. A. (1975), “On tail probabilities for martingales,” The Annals of Probability, 3, 100–118.

- Givchi and Palhang (2015) Givchi, A. and Palhang, M. (2015), “Quasi newton temporal difference learning,” in Proceedings of the Sixth Asian Conference on Machine Learning.

- Hanna et al. (2017) Hanna, J., Stone, P., and Niekum, S. (2017), “Bootstrapping with models: Confidence intervals for off-policy evaluation,” in Proceedings of the AAAI Conference on Artificial Intelligence.

- Hao et al. (2021) Hao, B., Ji, X., Duan, Y., Lu, H., Szepesvari, C., and Wang, M. (2021), “Bootstrapping fitted q-evaluation for off-policy inference,” in International Conference on Machine Learning.

- Hastie et al. (2009) Hastie, T., Tibshirani, R., Friedman, J. H., and Friedman, J. H. (2009), The Elements of Statistical Learning: Data Mining, Inference, and Prediction, vol. 2, Springer.

- Huber (1964) Huber, P. J. (1964), “Robust estimation of a location parameter,” The Annals of Mathematical Statistics, 35, 73–101.

- Huber (1992) — (1992), “Robust estimation of a location parameter,” in Breakthroughs in Statistics, Springer.

- Huber (2004) — (2004), Robust Statistics, vol. 523, John Wiley & Sons.

- Jiang and Huang (2020) Jiang, N. and Huang, J. (2020), “Minimax value interval for off-policy evaluation and policy optimization,” Advances in Neural Information Processing Systems.

- Kolter and Ng (2009) Kolter, J. Z. and Ng, A. Y. (2009), “Regularization and feature selection in least-squares temporal difference learning,” in Proceedings of the 26th Annual International Conference on Machine Learning.

- Kormushev et al. (2013) Kormushev, P., Calinon, S., and Caldwell, D. G. (2013), “Reinforcement learning in robotics: Applications and real-world challenges,” Robotics, 2, 122–148.

- Li and Sun (2023) Li, X. and Sun, Q. (2023), “Variance-aware robust reinforcement learning with linear function approximation under heavy-tailed rewards,” arXiv e-prints, arXiv:2303.05606.

- Mnih et al. (2015) Mnih, V., Kavukcuoglu, K., Silver, D., Rusu, A. A., Veness, J., Bellemare, M. G., Graves, A., Riedmiller, M., Fidjeland, A. K., Ostrovski, G., et al. (2015), “Human-level control through deep reinforcement learning,” Nature, 518, 529–533.

- Mou et al. (2021) Mou, W., Pananjady, A., Wainwright, M. J., and Bartlett, P. L. (2021), “Optimal and instance-dependent guarantees for Markovian linear stochastic approximation,” arXiv e-prints, arXiv:2112.12770.

- Murphy (2003) Murphy, S. A. (2003), “Optimal dynamic treatment regimes,” Journal of the Royal Statistical Society: Series B: Statistical Methodology, 65, 331–355.

- Nesterov (2003) Nesterov, Y. (2003), Introductory Lectures on Convex Optimization: A Basic Course, vol. 87, Springer Science & Business Media.

- Polyak and Juditsky (1992) Polyak, B. T. and Juditsky, A. B. (1992), “Acceleration of stochastic approximation by averaging,” SIAM Journal on Control and Optimization, 30, 838–855.

- Ramprasad et al. (2022) Ramprasad, P., Li, Y., Yang, Z., Wang, Z., Sun, W. W., and Cheng, G. (2022), “Online bootstrap inference for policy evaluation in reinforcement learning,” Journal of the American Statistical Association, To appear.

- Rio (2017) Rio, E. (2017), Asymptotic Theory of Weakly Dependent Random Processes, vol. 80, Springer.

- Robbins and Monro (1951) Robbins, H. and Monro, S. (1951), “A stochastic approximation method,” The Annals of Mathematical Statistics, 22, 400–407.

- Rosenblatt (1956) Rosenblatt, M. (1956), “A central limit theorem and a strong mixing condition,” Proceedings of the National Academy of Sciences, 42, 43–47.

- Ruppert (1985) Ruppert, D. (1985), “A Newton-Raphson Version of the Multivariate Robbins-Monro Procedure,” The Annals of Statistics, 13, 236–245.

- Ruppert (1988) — (1988), “Efficient estimations from a slowly convergent Robbins-Monro process,” Tech. rep., Cornell University Operations Research and Industrial Engineering.

- Schraudolph et al. (2007) Schraudolph, N. N., Yu, J., and Günter, S. (2007), “A stochastic quasi-Newton method for online convex optimization,” in Proceedings of the Eleventh International Conference on Artificial Intelligence and Statistics.

- Shao and Zhang (2022) Shao, Q.-M. and Zhang, Z.-S. (2022), “Berry–Esseen bounds for multivariate nonlinear statistics with applications to M-estimators and stochastic gradient descent algorithms,” Bernoulli, 28, 1548–1576.

- Shi et al. (2018) Shi, C., Fan, A., Song, R., and Lu, W. (2018), “High-dimensional A-learning for optimal dynamic treatment regimes,” The Annals of Statistics, 46, 925–957.

- Shi et al. (2021a) Shi, C., Song, R., Lu, W., and Li, R. (2021a), “Statistical inference for high-dimensional models via recursive online-score estimation,” Journal of the American Statistical Association, 116, 1307–1318.

- Shi et al. (2021b) Shi, C., Zhang, S., Lu, W., and Song, R. (2021b), “Statistical inference of the value function for reinforcement learning in infinite-horizon settings,” Journal of the Royal Statistical Society. Series B: Statistical Methodology, 84, 765–793.

- Sun et al. (2020) Sun, Q., Zhou, W.-X., and Fan, J. (2020), “Adaptive Huber Regression,” Journal of the American Statistical Association, 115, 254–265.

- Sutton (1988) Sutton, R. (1988), “Learning to predict by the method of temporal differences,” Machine Learning, 3, 9–44.

- Sutton and Barto (2018) Sutton, R. and Barto, A. (2018), Reinforcement Learning, second edition: An Introduction, Adaptive Computation and Machine Learning series, MIT Press.

- Sutton et al. (2009) Sutton, R. S., Maei, H. R., Precup, D., Bhatnagar, S., Silver, D., Szepesvári, C., and Wiewiora, E. (2009), “Fast gradient-descent methods for temporal-difference learning with linear function approximation,” in Proceedings of the 26th Annual International Conference on Machine Learning.

- Syrgkanis and Zhan (2023) Syrgkanis, V. and Zhan, R. (2023), “Post-Episodic Reinforcement Learning Inference,” arXiv e-prints, arXiv:2302.08854.

- Thomas et al. (2015) Thomas, P., Theocharous, G., and Ghavamzadeh, M. (2015), “High confidence policy improvement,” in International Conference on Machine Learning, PMLR.

- Tsitsiklis and Van Roy (1997) Tsitsiklis, J. and Van Roy, B. (1997), “An analysis of temporal-difference learning with function approximation,” IEEE Transactions on Automatic Control, 42, 674–690.

- Vershynin (2010) Vershynin, R. (2010), “Introduction to the non-asymptotic analysis of random matrices,” arXiv preprint arXiv:1011.3027.

- Wang et al. (2020) Wang, J., Liu, Y., and Li, B. (2020), “Reinforcement learning with perturbed rewards,” in Proceedings of the AAAI conference on Artificial Intelligence.

- Zhan et al. (2021) Zhan, R., Hadad, V., Hirshberg, D. A., and Athey, S. (2021), “Off-policy evaluation via adaptive weighting with data from contextual bandits,” in Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, pp. 2125–2135.

- Zhang et al. (2021) Zhang, K., Janson, L., and Murphy, S. (2021), “Statistical inference with M-estimators on adaptively collected data,” Advances in Neural Information Processing Systems, 34, 7460–7471.

- Zhang et al. (2022) — (2022), “Statistical inference after adaptive sampling in non-Markovian environments,” arXiv preprint arXiv:2202.07098.

7 Appendix

7.1 Technical Lemmas

Lemma 1.

Let be -mixing sequence with . Assume that and . Then for any fixed and , we have some constant such that

Proof.

We divide the -tuple into different subsets , where . Here for , and . is a sufficiently large constant which will be specified later. Then we have . Without loss of generality, we assume that is an even integer.

Let , then we know and holds. For all and , there holds for all . By Theorem 2 of Berkes and Philipp (1979), there exists a sequence of independent variables , with having the same distribution as , and

For any , we can take so that

| (21) | ||||

where is some constant. Next, we bound the variance of . From equation (20.23) of Billingsley (1968) we know that for arbitrary , there is

Then we compute that

| (22) | ||||

Notice that ’s are all uniformly bounded by , we can apply Bernstein’s inequality (Bennett, 1962) and yield

We take for some large enough (note that ). Then we have that

| (23) |

Combining (21) and (23) we have that

| (24) |

Next we denote . We can similarly show that

| (25) |

Lemma 2.

Let be random vectors in . Let . Assume that

Then for every , there is

for some sufficiently large.

Proof.

Lemma 3.

Let be a random matrix with , , be the -field generated by . Then for any -field , there holds

where

Proof.

By elementary inequality of matrix, we know that

For every element of , by Lemma 4.4.1 of Berkes and Philipp (1979) we have that

Therefore, we have that

The proof is complete. ∎

Lemma 4.

(Approximation of pseudo-Huber gradient) The gradient of pseudo-Huber loss satisfies

| (26) | ||||

uniformly for . And

| (27) |

holds for all .

Proof.

It is easy to compute that

It is not hard to see that

This proves (27). Furthermore, when , we have

for all . Similarly,

for . The proof is complete. ∎

Lemma 5.

For any sequence where and , there hold

Here the constant only depends on the parameter .

7.2 Proof of Results in Section 2

Proof of Theorem 1.

For each , when , we denote

| (29) |

where . Given a sufficiently large constant , we define the event

| (30) | ||||

where the constant is a constant that does not depend on . We will show that , where is some constant and is some constant only depends on . Let us prove it by induction on . By the choice of initial parameter , we know (30) holds for . For , from (8) we have that

| (31) |

For the second term on the right-hand side of (31), we have that

where the second equality holds because of the fact that , the last equality uses the inductive hypothesis. Substitute it into (31), we have

| (32) |

where and .

Under event , by Lemma 5 there exists a constant such that

| (33) |

Similarly, by Lemma 5 there exists a constant such that

| (34) |

By Lemma 6, (34) and (42) we know that under event , for every there exist constants (which only depend on ), such that

By Lemma 8 we know that

| (35) |

In this part, we mainly consider the case where and can be arbitrary. A special case where and will be presented in Proposition 8 below. By Lemma 7 we have that when , there is

By substituting the above inequalities into (32), we have that

| (36) |

where can be taken to be sufficiently large, given fixed. Here the second inequality holds because can be sufficiently small for by taking sufficiently large. Meanwhile, as , the last two terms both have order of . Obviously, the rest two events of (30) are contained in (36). Therefore, the inductive hypothesis that , is proved.

For , there holds

which proves the theorem. ∎

Lemma 6.

(Bound of the pseudo-Huber Hessian matrix) Under the same assumptions as in Theorem 1, there exists uniform constants and , such that the Hessian matrix satisfies

Proof.

We note that

For the first term, there is

holds for all and . Therefore we have that

| (37) | ||||

For the second term, we first prove that

| (38) |

Let be the -net of the unit ball . By Lemma 5.2 of Vershynin (2010) we know that . Then we have that

Applying Lemma 1 to every we can obtain that

for some constant . Therefore

which proves (38). Next we prove that when are normal data, for every ,

Indeed, denote , we have that

where the third line uses Lemma 4, and . Then clearly we have that . Therefore, by the choice of and Lemma 5, we have

| (39) |

Under event , from (34) we know there is

| (40) | ||||

Therefore combining (38), (39) and (40) we have that

| . | (41) | |||

Combining (37) and (41), we have that

which proves the lemma.

As a corollary, we can show that under event ,

| (42) |

∎

Lemma 7.

Proof.

Proof of i). We prove the bound coordinate-wisely. When has no outlier, for the -th coordinate, we first prove that for every , there is

Indeed, , we have that

Therefore, by the choice of and Lemma 5, we have

| (43) |

Next, when all data are normal, we prove the rate of

We basically rehash the proof in Lemma 1.

Case 1: . We divide the -tuple into different subsets , where . Here for , and . Then we have . Without loss of generality, we assume that is an even integer.

Let and , then we know (since ) and holds. For all and , there holds for all . By Theorem 2 of Berkes and Philipp (1979), there exists a sequence of independent variables , with having the same distribution as , and

For any , we can take so that

| (44) | ||||

where is some constant. Next, we bound the variance of . From equation (20.23) of Billingsley (1968) we know that for arbitrary , there is

Then we compute that

Notice that ’s are all uniformly bounded by , we can apply Bernstein’s inequality (Bennett, 1962) and yield

We take for some large enough (note that ). Then we have that

| (45) |

Combining (44) and (45) we have that

| (46) |

Next we denote , then we can similarly show that

| (47) |

Combining (46) and (47) we can prove that

| (48) |

By combining it with (43) we have that

Proof of ii). When and , we define the thresholding level , and consider the truncated random variables . Here is a sufficiently large constant. Then we have that

| (49) |

Similarly as in (43), we have that

| (50) |

Similarly as in the proof of (48), for every , there exists such that

| (51) |

By the choice of , we know that

Combining (49), (50) and (51) we have that

When there are fraction of outliers, denote as the index set, we have

which proves the lemma. ∎

Lemma 8.

(Bound of the mixed term) Under the same assumptions as in Theorem 1, for every , there exist constants and , such that

Proof.

Firstly, from (39) and Lemma 5, under the event , we can bound the term

| (52) |

For ease of notation, we first consider the case when there is no outlier. Similar as in the proof of Lemma 1, for each , we evenly divide the tuple into subsets , where . Here for , and . is a sufficiently large constant which will be specified later. Then we have . We further denote . Without loss of generality, we assume that is an even integer. For each , suppose , we construct the following random variable

| (53) |

When , we take the sum for the terms in . For , we take . Then by Lemma 9 below we have that,

| (54) |

Moreover, from the proof of Lemma 9, we can obtain that under , there is

| (55) |

It left to bound the term . To be more precise, we define the -field , and construct the random variable

Then by (55) we know that

We further set

then there is

| (56) |

Notice that are martingale differences, and there is for . Therefore we have

By Lemma 3 there is

for some large enough. Then Markov’s inequality yields

| (57) |

It is direct to verify that

Then we can apply Lemma 2 and yield

Combining it with (57) and (56), we have that

A similar result holds for the even term. Note that , and together with (54) we have

When taking the outliers into consideration, we have that

under the event , which proves the lemma by combining it with (52). ∎

Lemma 9.

Let be defined in (53), for every , there exist constants and , such that

Proof.

Under event , using Lemma 5, we have

| (58) |

For the first term, by Lemma 6 and (42) we have that under event ,

On the other hand, there is

Therefore, we have that

| (59) |

Denote

It is direct to verify that

| (60) |

Therefore, it left bound

To this end, we further denote

We define the -field , by Lemma 3 we have that

Therefore, by Markov’s inequality

| (61) |

It is direct to verify that

Then we can apply Lemma 2 and yield

Combining it with (60) and (61), we have that

A similar result holds for the average of , where . Substitute it into (59), we have that

which proves the lemma. ∎

7.3 Proof of Results in Section 3.2

Proof of Theorem 4.

From the proof of Theorem 1, as tends to infinity, we can obtain that

| (62) |

where . Denote , we first bound the term

| (63) | ||||

where the last inequality uses the moment assumption on and similar argument as in (43). To bound the first term, we directly compute its variance and use Chebyshev’s inequality. More precisely, by equation (20.23) of Billingsley (1968), for arbitrary and each coordinate , there is

Therefore we have that

for some constant . Therefore, by Chebyshev’s inequality, we have that

| (64) |

Combining (62), (63) and (64), given a unit vector , we have

where the remainder becomes under some rate constraints. Here the main term is the average of strict stationary and strong mixing sequence . By Lemma 10, the conditions in Theorem 1 of Doukhan et al. (1994) is fulfilled. Then we can apply Theorem 1 of Doukhan et al. (1994) and yield

where

and is defined in (17). Therefore the theorem is proved. ∎

Lemma 10.

Let the random variable satisfies for some , and (where ). Then we have that

where is the inverse function of , i.e. , , and .

Proof.

By Markov’s inequality, we have that

Therefore, we have that

as long as , which proves the lemma. ∎

Proposition 8.

Proof.

7.4 Proof of Results in Section 4

Proof of Theorem 6.

For simplicity we denote , . Then by (17) we know

Under event (30), for , we have that

Therefore we have that

| (66) |

We first prove

| (67) |

for some . By equation (20.23) of Billingsley (1968), for each , there is

Therefore we can obtain that

for , which proves (67). Next we prove that for , there holds

| (68) | ||||

By the proof of Lemma 6, we know that

where is a -net of . For , Denote , then by (13) we know that

for all for all . We basically rehash the proof in Lemma 1 and obtain that

Here the only difference is that in (22), and is bounded by . Therefore (68) can be proved.

Last, we prove that

| (69) |

To see this, we compute that

For the second term,

for some constants . Therefore (69) is proved.