Online Deterministic Annealing for Classification and Clustering

Abstract

Inherent in virtually every iterative machine learning algorithm is the problem of hyper-parameter tuning which includes three major design parameters: (a) the complexity of the model, e.g., the number of neurons in a neural network, (b) the initial conditions, which heavily affect the behavior of the algorithm, and (c) the dissimilarity measure used to quantify its performance. We introduce an online prototype-based learning algorithm that can be viewed as a progressively growing competitive-learning neural network architecture for classification and clustering. The learning rule of the proposed approach is formulated as an online gradient-free stochastic approximation algorithm that solves a sequence of appropriately defined optimization problems, simulating an annealing process. The annealing nature of the algorithm contributes to avoiding poor local minima, offers robustness with respect to the initial conditions, and provides a means to progressively increase the complexity of the learning model, through an intuitive bifurcation phenomenon. The proposed approach is interpretable, requires minimal hyper-parameter tuning, and allows online control over the performance-complexity trade-off. Finally, we show that Bregman divergences appear naturally as a family of dissimilarity measures that play a central role in both the performance and the computational complexity of the learning algorithm.

Index Terms:

Machine learning algorithms, progressive learning, annealing optimization, classification, clustering, Bregman divergences.I Introduction

Learning from data samples has become an important component of artificial intelligence. While virtually all learning problems can be formulated as constrained stochastic optimization problems, the optimization methods can be intractable, typically dealing with mixed constraints and very large, or even infinite-dimensional spaces [1]. For this reason, feature extraction, model selection and design, and analysis of optimization methods, have been the cornerstone of machine learning algorithms from their genesis until today.

Deep learning methods, currently dominating the field of machine learning due to their performance in multiple applications, attempt to learn feature representations from data, using biologically-inspired models in artificial neural networks [2, 3]. However, they typically use overly complex models of a great many parameters, which comes in the expense of time, energy, data, memory, and computational resources [4, 5]. Moreover, they are, by design, hard to interpret and vulnerable to small perturbations and adversarial attacks [6, 7]. The latter, has led to an emerging hesitation in their implementation outside common benchmark datasets [8], and, especially, in security critical applications. On the other hand, it is understood that the trade-off between model complexity and performance is closely related to over-fitting, generalization, and robustness to input noise and attacks [9]. In this work, we introduce a learning model that progressively adjusts its complexity, offering online control over this trade-off. The need for such approaches is reinforced by recent studies revealing that existing flaws in the current benchmark datasets may have inflated the need for overly complex models [10], and that over-fitting to adversarial training examples may actually hurt generalization [11].

We focus on prototype-based models, mainly represented by vector quantization methods, [12, 13, 14]. In vector quantization, originally introduced as a signal processing method for compression, a set of codevectors (or prototypes) , is used to represent the data space in an optimal way according to an average distortion measure:

where the proximity measure defines the similarity between the random input and a codevector . The codevectors can be viewed as a set of neurons, the weights of which live in the data space itself, and constitute the model parameters. In this regard, vector quantization algorithms can be viewed as competitive-learning neural network architectures with a number of appealing properties: they are consistent, data-driven, interpretable, robust, topology-preserving [15], sparse in the sense of memory complexity, and fast to train and evaluate. In addition, they have recently shown impressive robustness against adversarial attacks, suggesting suitability in security critical applications [16], while their representation of the input in terms of memorized exemplars is an intuitive approach which parallels similar concepts from cognitive psychology and neuroscience. As iterative learning algorithms, however, their behavior heavily depends on three major design parameters: (a) the number of neurons/prototypes, which, defines the complexity of the model, (b) the initial conditions, that affect the transient and steady-state behavior of the algorithm, and (c) the proximity measure used to quantify the similarity between two vectors in the data space.

Inspired by the deterministic annealing approach [17], we propose a learning approach that resembles an annealing process, tending to avoid poor local minima, offering robustness with respect to the initial conditions, and providing a means to progressively increase the complexity of the learning model, allowing online control over the performance-complexity trade-off. We relax the original problem to a soft-clustering problem, introducing the association probabilities , and replacing the cost function by . This probabilistic framework (to be formally defined in Section III) allows us to define the Shannon entropy that characterizes the “purity” of the clusters induced by the codevectors. We then replace the original problem by a sequence of optimization problems:

parameterized by a temperature coefficient , which acts as a Lagrange multiplier controlling the trade-off between minimizing the distortion and maximizing the entropy . By successively solving the optimization problems for decreasing values of , the model undergoes a series of phase transitions that resemble an annealing process. Because of the nature of the entropy term, in high temperatures , the effect of the initial conditions is greatly mitigated, while, as decreases, the optimal codevectors of the last optimization problem are used as initial conditions to the next, which helps in avoiding poor local minima. Furthermore, as decreases, the cardinality of the set of codevectors increases, according to an intuitive bifurcation phenomenon.

Adopting the above optimization framework, we introduce an online training rule based on stochastic approximation [18]. While stochastic approximation offers an online, adaptive, and computationally inexpensive optimization algorithm, it is also strongly connected to dynamical systems. This enables the study of the convergence of the learning algorithm through mathematical tools from dynamical systems and control [18]. We take advantage of this property to prove the convergence of the proposed learning algorithm as a consistent density estimator (unsupervised learning), and a Bayes risk consistent classification rule (supervised learning). Finally, we show that the proposed stochastic approximation learning algorithm introduces inherent regularization mechanisms and is also gradient-free, provided that the proximity measure belongs to the family of Bregman divergences. Bregman divergences are information-theoretic dissimilarity measures that have been shown to play an important role in learning applications [19, 20], including measures such as the widely used Euclidean distance and the Kullback-Leibler divergence. We believe that these results can potentially lead to new developments in learning with progressively growing models, including, but not limited to, communication, control, and reinforcement learning applications [21, 22, 23].

II Prototype-based Learning

In this section, the mathematics and notation of prototype-based machine learning algorithms, which will be used as a base for our analysis, are briefly introduced. For more details see [19, 24, 20, 14].

II-A Vector Quantization for Clustering

Unsupervised analysis can provide valuable insights into the nature of the dataset at hand, and it plays an important role in the context of visualization. Central to unsupervised learning is the representation of data in a vector space by typical representatives, which is formally defined in the following optimization problem:

Problem 1.

Let be a random variable defined in a probability space , and be a divergence measure, where represents the relative interior of . Let be a partition of and a set of codevectors, such that , for all . A quantizer is defined as the random variable and the vector quantization problem is formulated as

Vector quantization is a hard-clustering algorithm, and, as such, assumes that the quantizer assigns an input vector to a unique codevector with probability one. As a result, Problem 1 becomes equivalent to

| (1) |

for V being a Voronoi partition, i.e., for

It is typically the case that the actual distribution of is unknown, and a set of independent realizations , for , are available. In case the observations are available a priori, the solution of the VQ problem is traditionally approached with variants of the LBG algorithm [25], a generalization of the Lloyd algorithm [26] which includes the widely used -means algorithm [27].

When the training data are not available a priori but are being observed online, or when the processing of the entire dataset in every optimization iteration is computationally infeasible, a stochastic vector quantization algorithm can be defined as a recursive asynchronous stochastic approximation algorithm based on gradient descent [14]:

Definition 1 (Stochastic Vector Quantization (sVQ) Algorithm).

Repeat:

for until convergence, where is given during initialization, and represents the number of times the component has been updated up until time .

II-B Learning Vector Quantization for Classification

The supervised counterpart of vector quantization is the particularly attractive and intuitive approach of the competitive-learning Learning Vector Quantization (LVQ) algorithm, initially proposed by Kohonen [12]. LVQ for binary classification is formulated in the following optimization problem (and generalized to any type of classification task, see, e.g. [28]):

Problem 2.

Let the pair of random variables defined in a probability space , with representing the class of and . Let , where represent codevectors, and define the set , such that represents the class of for all . The quantizer is defined such that . Then, the minimum-error classification problem is formulated as

where , and is defined as , .

LVQ algorithms that solve Problem 2 are similar in structure with the stochastic vector quantization algorithm of Def. 1, and make use of a modified distortion measure, which in the case of the original LVQ1 algorithm [12] takes the form:

Generalizations of this definition based on similar principles have also been proposed [29, 30].

II-C Bregman Divergences as Dissimilarity Measures

Prototype-based algorithms rely on measuring the proximity between different vector representations. In most cases the Euclidean distance or another convex metric is used, but this can be generalized to alternative dissimilarity measures inspired by information theory and statistical analysis, such as the Bregman divergences:

Definition 2 (Bregman Divergence).

Let , be a strictly convex function defined on a vector space such that is twice F-differentiable on . The Bregman divergence is defined as:

where , and the continuous linear map is the Fréchet derivative of at .

Notice that, as a divergence measure, Bregman divergence can be used to measure the dissimilarity of one probability distribution to another on a statistical manifold, and is a notion weaker than that of the distance. In particular, it does not need to be symmetric or satisfy the triangle inequality. In this work, we will concentrate on nonempty, compact convex sets so that the derivative of with respect to the second argument can be written as

where , represents differentiation with respect to the second argument of , and represents the Hessian matrix of at .

Example 1.

As a first example, , gives the squared Euclidean distance

for which .

Example 2.

A second interesting Bregman divergence that shows the connection to information theory, is the generalized I-divergence which results from such that

for which , where is the vector of ones, and is the diagonal matrix with diagonal elements the inverse elements of . It is easy to see that reduces to the Kullback-Leibler divergence if .

The family of Bregman divergences provides proximity measures that have been shown to enhance the performance of a learning algorithm [31]. In addition, the following theorem shows that the use of Bregman divergences is both necessary and sufficient for the optimizer of (1) to be analytically computed as the expected value of the data inside , which is implicitly used by many “centroid” algorithms, such as -means [27]:

Theorem 1.

Let be a random variable defined in the probability space such that , and let a distortion measure , where denotes the relative interior of . Then is the unique minimizer of in , if and only if is a Bregman divergence for any function that satisfies the definition.

Proof.

For necessity, identical arguments as in Appendix B of [19] are followed. For sufficiency,

with equality holding only when by the strict convexity of , which completes the proof. ∎

In Section III, we will show a similar result for the proposed algorithm that uses a soft-partition approach.

III Online Deterministic Annealing for Unsupervised and Supervised Learning

Online vector quantization algorithms, are proven to converge to locally optimal configurations [14]. However, as iterative machine learning algorithms, their convergence properties and final configuration depend heavily on two design parameters: the number of neurons/clusters , and their initial configuration. Inspired by the deterministic annealing framework [17], we relax the the original optimization problem (1) to a soft-clustering problem, and replace it by a sequence of deterministic optimization problems, parameterized by a temperature coefficient, that are progressively solved at successively reducing temperature levels. As will be shown, the annealing nature of this algorithm will contribute to avoiding poor local minima, provide robustness with respect to the initial conditions, and induce a progressive increase in the cardinality of the set of clusters needed to be used, via a intuitive bifurcation phenomenon.

III-A Soft-Clustering and Annealing Optimization

In the clustering problem (Problem 1), the distortion function is typically non convex and riddled with poor local minima. To partially deal with this phenomenon, soft-clustering approaches have been proposed as a probabilistic framework for clustering. In this case, an input vector is assigned, through the quantizer , to all codevectors with probabilities , where . In this regard, the quantizer becomes a discrete random variable, with the set being its image, and can be fully described by the values of and the probability functions . In contrast, hard clustering assumes that is a simple random variable that can be described fully by and , since (see Problem 1).

For the randomized partition we can rewrite the expected distortion as

where is the association probability relating the input vector with the codevector . We note that, at the limit, where each input vector is assigned to a unique codevector with probability one, this reduces to the hard clustering distortion. The main idea in deterministic annealing, is to seek the distribution that minimizes subject to a specified level of randomness, measured by the Shannon entropy

by appealing to Jaynes’ maximum entropy principle111Informally, Jaynes’ principle states: of all the probability distributions that satisfy a given set of constraints, choose the one that maximizes the entropy. [32]. This multi-objective optimization is conveniently formulated as the minimization of the Lagrangian

| (2) |

where is the temperature parameter that acts as a Lagrange multiplier. Clearly, for large values of we maximize the entropy, and, as is lowered, we trade entropy for reduction in distortion. Equation (2) also represents the scalarization method for trade-off analysis between two performance metrics [33]. As varies we essentially transition from one Pareto point to another, and the sequence of the solutions will correspond to a Pareto curve of the multi-objective optimization (2) that resembles annealing processes in chemical engineering. In this regard, the entropy , which is closely related to the “purity” of the clusters, acts as a regularization term which is given progressively less weight as decreases.

As in the case of vector quantization, we form a coordinate block optimization algorithm to minimize , by successively minimizing it with respect to the association probabilities and the codevector locations . Minimizing with respect to the association probabilities is straightforward and yields the Gibbs distribution

| (3) |

while, in order to minimize with respect to the codevector locations we set the gradients to zero

| (4) | ||||

In the following theorem, we show that we can have analytical solution to the last optimization step (4) in a convenient centroid form, if is a Bregman divergence. This is a similar result to Theorem 1 for vector quantization.

Theorem 2.

III-B Bifurcation Phenomena

This optimization procedure takes place for decreasing values of the temperature coefficient such that the solution maintains minimum free energy (thermal equilibrium) while gradually lowering the temperature. Adding to the physical analogy, it is significant that, as the temperature is lowered, the system undergoes a sequence of “phase transitions”, which consists of natural cluster splits where the cardinality of the codebook (number of clusters) increases. This is a bifurcation phenomenon and provides a useful tool for controlling the size of the clustering model relating it to the scale of the solution.

At very high temperature () the optimization yields uniform association probabilities

and, provided is a Bregman divergence, all the codevectors are located at the same point:

which is the expected value of (Theorem 1). This is true regardless of the number of codevectors available. We refer to the number of different codevectors resulting from the optimization process as effective codevectors. These define the cardinality of the codebook, which changes as we lower the temperature. The bifurcation occurs when the solution above a critical temperature is no longer the minimum of the free energy for . A set of coincident codevectors then splits into separate subsets. These critical temperatures can be traced when the Hessian of loses its positive definite property, and are, in some cases, computable (see Theorem 1 in [17]). In other words, an algorithmic implementation needs only as many codevectors as the number of effective codevectors, which depends only on the temperature parameter, i.e. the Lagrange multiplier of the multi-objective minimization problem in (2). As will be shown in Section III-E, we can detect the bifurcation points by maintaining and perturbing pairs of codevectors at each effective cluster so that they separate only when a critical temperature is reached.

III-C Online Deterministic Annealing for Clustering

The conditional expectation in eq. (5) can be approximated by the sample mean of the data points weighted by their association probabilities , i.e.,

This approach, however, defines an offline (batch) optimization algorithm and requires the entire dataset to be available a priori, subtly assuming that it is possible to store and also quickly access the entire dataset at each iteration. This is rarely the case in practical applications and results to computationally costly iterations that are slow to converge. We propose an Online Deterministic Annealing (ODA) algorithm, that dynamically updates its estimate of the effective codevectors with every observation. This results in a significant reduction in complexity, that comes in two levels. The first refers to huge reduction in memory complexity, since we bypass the need to store the entire dataset, as well as the association probabilities that map each data point in the dataset to each cluster. The second level refers to the nature of the optimization iterations. In the online approach the optimization iterations increase in number but become much faster, and practical convergence is often after a smaller number of observations.

To define an online training rule for the above optimization framework, we formulate a stochastic approximation algorithm to recursively estimate directly. Stochastic approximation, first introduced in [34], was originally conceived as a tool for statistical computation, and, since then, has become a central tool in a number of different disciplines, often times unbeknownst to the users, researchers and practitioners. It offers an online, adaptive, and computationally inexpensive optimization framework, properties that make it an ideal optimization method for machine learning algorithms. In addition to its connection with optimization and learning algorithms, however, stochastic approximation is strongly connected to dynamical systems, as well, a property that allows the study of its convergence through the analysis of an ordinary differential equation, as illustrated in the following theorem:

Theorem 3 ([18], Ch.2).

Almost surely, the sequence generated by the following stochastic approximation scheme:

| (7) |

with prescribed , converges to a (possibly sample path dependent) compact, connected, internally chain transitive, invariant set of the o.d.e:

| (8) |

where and , provided the following assumptions hold:

-

(A1)

The map is Lipschitz in , i.e., with such that ,

-

(A2)

The stepsizes satisfy , and ,

-

(A3)

is a martingale difference sequence with respect to the increasing family of -fields , , i.e., , for all , and, furthermore, are square-integrable with , where for some ,

-

(A4)

The iterates remain bounded a.s., i.e.,

As an immediate result, the following corollary also holds:

Corollary 3.1.

Now we are in place to prove the following theorem:

Theorem 4.

Let a vector space, , and be a random variable defined in a probability space . Let be a sequence of independent realizations of , and a sequence of stepsizes such that , and . Then the random variable , where are sequences defined by

| (9) | ||||

converges to almost surely, i.e. .

Proof.

We will use the facts that and . The recursive equations (9) are stochastic approximation algorithms of the form:

| (10) | ||||

It is obvious that both stochastic approximation algorithms satisfy the conditions of Theorem 3 and Corollary 3.1. As a result, they converge to the asymptotic solution of the differential equations

which can be trivially derived through standard ODE analysis to be . In other words, we have shown that

| (11) |

The convergence of follows from the fact that , and standard results on the convergence of the product of two random variables. ∎

As a direct consequence of this theorem, the following corollary provides an online learning rule that solves the optimization problem of the deterministic annealing algorithm.

Corollary 4.1.

Finally, the learning rule (12), (13) can be used to define a consistent (histogram) density estimator at the limit . This follows from the fact that as , the number of clusters goes to infinity, , and, as a result, , i.e., the consistency of Alg. 1 can be studied with similar arguments to the stochastic divergence-based vector quantization algorithm (1) (see [14, 13]).

III-D Online Deterministic Annealing for Classification

We can extend the proposed learning algorithm to be used for classification as well. In this case we can rewrite the expected distortion as

where . Because is not differentiable, using similar principles as in the case of LVQ, we can instead approximate the optimal solution by solving the minimization problem for the following distortion measure

| (14) |

This particular choice for the distortion measure will lead to some interesting regularization properties of the proposed online approach (see Section III-E).

It is easy to show that the coordinate block optimization steps (3) and (5), in this case become:

respectively. In the last step, we have assumed that the class of each centroid is given and cannot be changed dynamically by the algorithm, which results to the minimization with respect to only. In a similar fashion, it can be shown that the online learning rule that solves the optimization problem of the deterministic annealing algorithm for classification, based on the distortion measure (14), is given by:

| (15) | ||||

where

| (16) | ||||

At the limit , the quantization scheme described above equipped with a majority-vote classification rule is strongly Bayes risk consistent, i.e., converges to the optimal (Bayes) probability of error (see Ch. 21 in [13]). However, due to the choice of the distortion measure in (14) used in ODA for classification, the algorithm can be used to estimate consistent class-conditional density estimators, which define the natural classification rule:

| (17) |

where .

III-E The algorithm

The proposed Online Deterministic Annealing (ODA) algorithm (Algorithm 1), is based on (15), (16), and can be used for both clustering and classification alike, depending on whether the data belong to a single (clustering) or several classes (classification).

Temperature Schedule. The temperature schedule plays an important role in the behavior of the algorithm. Starting at high temperature ensures the correct operation of the algorithm. The value of depends on the domain of the data and should be large enough such that there is only one effective codevector at . When the range of the domain of the data is not known a priori, overestimation is recommended. The stopping temperature can be set a priori or be decided online depending on the performance of the model at each temperature level. The temperature step should be small enough such that no critical temperature is missed. On the other hand, the smaller the step , the more optimization problems need to be solved. It is common practice to use the geometric series .

Stochastic Approximation. Regarding the stochastic approximation stepsizes, simple time-based learning rates, e.g. of the form , have been sufficient for fast convergence in all our experiments so far. Convergence is checked with the condition for a given threshold that can depend on the domain of . Exploring adaptive learning rates would be an interesting research direction for the future.

Bifurcation and Perturbations. To every temperature level , corresponds a set of effective codevectors , which consist of the different solutions of the optimization problem (2) at . Bifurcation, at , is detected by maintaining a pair of perturbed codevectors for each effective codevector generated at , i.e. for . Using arguments from variational calculus [17], it is easy to see that, upon convegence, the perturbed codevectors will merge if a critical temperature has not been reached, and will get separated otherwise. In case of a merge, one of the perturbed codevectors is removed from the model. Therefore, the cardinality of the model is at most doubled at every temperature level. For classification, a perturbed codevector for each distinct class is generated.

Regularization. Merging is detected by the condition , where is a design parameter that acts as a regularization term for the model. Large values for (compared to the support of the data ) lead to fewer effective codevectors, while small values lead to a fast growth in the model size, which is connected to overfitting. It is observed that, for practical convergence, the perturbation noise is best to not exceed . An additional regularization mechanism that comes as a natural consequence of the stochastic approximation learning rule, is the detection of idle codevectors. To see that, notice that the sequence resembles an approximation of the probability . In the updates (12), (13), becomes negligible () if not updated by any nearby observed data, which is a natural criterion for removing the codevector . This happens if all observed data samples are largely dissimilar to . In classification, because of the choice of in (14), codevectors that are not assigned the same class as the data in their vicinity, will end up to be removed, as well. The threshold is a parameter that usually takes values near zero.

Complexity. The worst case complexity of Algorithm 1 behaves as where:

-

•

is an upper bound of the number of data samples observed, which should be large enough to overestimate the iterations needed for convergence;

-

•

is the dimension of the input vectors, i.e., ;

-

•

is the maximum number of codevectors allowed;

-

•

, where is the number of temperature values in our temperature schedule that lie between two critical temperatures and , with the understanding that at there are distinct effective codevectors present. Here we have assumed that is achievable within our temperature schedule.

Fine-Tuning. In practice, because the convergence to the Bayes decision surface comes at the limit , a fine-tuning mechanism should be designed to run on top of the proposed algorithm after . This can be either an LVQ algorithm (Section II-B) or some other local model.

IV Experimental Evaluation and Discussion

We illustrate the properties and evaluate the performance of the proposed algorithm in widely used artificial and real datasets for clustering and classification222Code and Reproducibility: The source code is publicly available online at https://github.com/MavridisChristos/OnlineDeterministicAnnealing..

IV-A Toy Examples

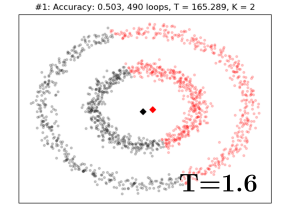

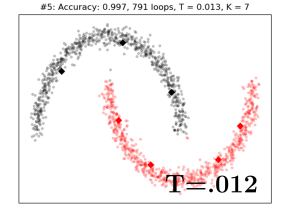

We first showcase how Alg. 1 works in three simple, but illustrative, classification problems in two dimensions (Fig. 1). The first two are binary classification problems with the underlying class distributions shaped as concentric circles (Fig. 1(a)), and half moons (Fig. 1(b)), respectively. The third is a multi-class classification problem with Gaussian mixture class distributions (Fig. 1(c)). All datasets consist of samples. Since the objective is to give a geometric illustration of how the algorithm works in the two-dimensional plane, the Euclidean distance is used. The algorithm starts at high temperature with a single codevector for each class. As the temperature coefficient gradually decreases (Fig. 1, from left to right), the number of codevectors progressively increases. The accuracy of the algorithm typically increases as well. As the temperature goes to zero, the complexity of the model, i.e. the number of codevectors, rapidly increases (Fig. 1, rightmost pictures). This may, or may not, translate to a corresponding performance boost. A single parameter –the temperature – offers online control on this complexity-accuracy trade-off. Finally, Fig. 1(d) showcases the robustness of the proposed algorithm with respect to the initial configuration. Here the codevectors are poorly initialized outside the support of the data, which is not assumed known a priori (e.g. online observations of unknown domain). In this example the LVQ algorithm has been shown to fail [35]. In contrast, the entropy term in the optimization objective of Alg. 1, allows for the online adaptation to the domain of the dataset and helps to prevent poor local minima.

IV-B Real Datasets

Clustering. For clustering, we consider the following datasets: (a) the dataset of Fig. 1(c) (Gaussians), (b) the WBCD dataset [36], (c) the PIMA dataset [37], and (d) the Adult dataset333 samples randomly selected. Non-numerical features removed.[36]. In Fig. 2, we compare Alg. 1 with the online sVQ algorithm (Def. 1), and two offline algorithms, namely -means [27], and the original deterministic annealing (DA) algorithm [17]. The algorithms are compared in terms of the minimum average distortion achieved, as a function of the number of samples they observed, and the number of clusters they used (floating numbers inside the figures). The Euclidean distance is used for fair comparison. Since there is no criterion to decide the number of clusters for -means and sVQ, we run them sequentially for the values estimated by DA, and add up the computational time. All algorithms are able to achieve comparable average distortion values given good initial conditions and appropriate size . Therefore, the progressive estimation of , as well as the robustness with respect to the initial conditions, are key features of both annealing algorithms, i.e., DA and ODA (Alg. 1). Compared to the offline algorithms, i.e., -means and DA, ODA and sVQ achieve practical convergence with significantly smaller number of observations, which corresponds to reduced computational time, as argued above. Notice the substantial difference in running time between the original DA algorithm and the proposed ODA algorithm in Fig. 4. Compared to the online sVQ (and LVQ), the probabilistic approach of ODA introduces additional computational cost: all neurons are now updated in every iteration, instead of only the winner neuron. However, the updates can still be computed fast when using Bregman divergences (Theorem 2), and the aforementioned benefits of the annealing nature of ODA, outweigh this additional cost in many real-life problems.

| Data set | ODA | SVM | NN | RF |

|---|---|---|---|---|

| Gaussian | 98.9 0.0 | 79.5 0.0 | 98.6 0.0 | 98.7 0.0 |

| WBCD | 90.7 0.0 | 85.6 0.0 | 92.7 0.0 | 94.6 0.0 |

| Credit (F1) | 95.6 0.0 | 69.1 0.2 | 58.9 0.1 | 62.8 0.1 |

| PIMA | 70.5 0.0 | 62.9 0.0 | 76.3 0.0 | 74.4 0.0 |

Classification. For classification, we consider the Gaussian (Fig. 1(c)), WBCD, PIMA, and Credit Card444 samples randomly selected.[38] datasets. We compare Alg. 1 against an SVM model with a linear kernel [39], a feed-forward fully-connected neural network with a single hidden layer of neurons (NN), and the Random Forests (RF) algorithm with estimators [40]. These algorithms have been selected to represent today’s standards in simple classification tasks, i.e., when no sophisticated feature extraction is required. The SVM classifier represents the class of linear classification models, the neural network represents the class of non-linear approximation models, and the random forests algorithm represents the class of partition-based methods with bootstrap aggregating. Table I shows the results of a -fold cross validation (), and Fig. 3 illustrates the performance of the algorithms during a random test. The evolution of the complexity of the ODA model is depicted as a function of the observed samples and the classification accuracy achieved. We use the generalized I divergence (Example 2) in the WBCD dataset and the Euclidean distance in the rest. ODA (Alg. 1) outperforms the linear SVM classifier, and can achieve comparable performance with the NN and the RF algorithms, which are today’s standards in classification tasks where no feature extraction is required. In the greatly unbalanced Credit Card dataset, all algorithms achieved accuracy close to , but the scores dropped significantly (Fig. 3(d)). Notably, this was not the case with the ODA algorithm. This may be due to the generative nature of the algorithm, and might also be an instance of the robustness expected by vector quantization algorithms [16]. Justifying and quantifying this robustness is beyond the scope of this paper.

Parameters. The parameters and were selected through extensive grid search. In contrast, the parameters of the ODA algorithm for all the experiments were set as follows: , , , , , , , , and , where is the number of dimensions of the input , and represents the length of the largest edge of the smallest -orthotope that contains . We stress that no parameter tuning has taken place for the proposed algorithm.

Limitations. Finally, we note that both NN and RF outperform Alg. 1 in some datasets (Table I). A fine-tuning mechanism, as discussed in Section III-E, could alleviate these differences, and is currently not used in our experiments. Regarding the running time of the ODA algorithm, Fig. 4 shows the execution time of the learning algorithms used in Fig. 2(a) and Fig. 3(a). All experiments were implemented in a personal computer. We note that, in contrast to the commercial, and, therefore, optimized versions of the -means, SVM, NN, and RF algorithms, the algorithmic implementation of the proposed algorithm is not yet optimized, and substantial speed-up is expected through appropriate software development.

V Conclusion

It is understood that the trade-off between model complexity and performance in machine learning algorithms is closely related to over-fitting, generalization, and robustness to input perturbations and adversarial attacks. We investigate the properties of learning with progressively growing models, and propose an online annealing optimization approach as a learning algorithm that progressively adjusts its complexity with respect to new observations, offering online control over the performance-complexity trade-off. The proposed algorithm can be viewed as a neural network with inherent regularization mechanisms, the learning rule of which is formulated as an online gradient-free stochastic approximation algorithm. As a prototype-based learning algorithm, it offers a progressively growing knowledge base that can be interpreted as a memory unit that parallels similar concepts form cognitive psychology and neuroscience. The annealing nature of the algorithm prevents poor local minima, offers robustness to initial conditions, and provides a means to progressively increase the complexity of the learning model as needed. To our knowledge, this is the first time such a progressive approach has been proposed for machine learning applications. We believe that these results can lead to new developments in learning with progressively growing models, especially in communication, control, and reinforcement learning applications.

References

- [1] K. P. Bennett and E. Parrado-Hernández, “The interplay of optimization and machine learning research,” The Journal of Machine Learning Research, vol. 7, pp. 1265–1281, 2006.

- [2] A. Krizhevsky, I. Sutskever, and G. E. Hinton, “Imagenet classification with deep convolutional neural networks,” in Advances in Neural Information Processing Systems, 2012, pp. 1097–1105.

- [3] H. Lee, R. Grosse, R. Ranganath, and A. Y. Ng, “Convolutional deep belief networks for scalable unsupervised learning of hierarchical representations,” in Proceedings of the 26th Annual International Conference on Machine Learning, 2009, pp. 609–616.

- [4] N. C. Thompson, K. Greenewald, K. Lee, and G. F. Manso, “The computational limits of deep learning,” arXiv:2007.05558, 2020.

- [5] E. Strubell, A. Ganesh, and A. McCallum, “Energy and policy considerations for deep learning in nlp,” arXiv:1906.02243, 2019.

- [6] C. Szegedy, W. Zaremba, I. Sutskever, J. Bruna, D. Erhan, I. Goodfellow, and R. Fergus, “Intriguing properties of neural networks,” arXiv preprint arXiv:1312.6199, 2013.

- [7] N. Carlini and D. Wagner, “Towards evaluating the robustness of neural networks,” in IEEE Symposium on Security and Privacy. IEEE, 2017.

- [8] V. Sehwag, A. N. Bhagoji, L. Song, C. Sitawarin, D. Cullina, M. Chiang, and P. Mittal, “Analyzing the robustness of open-world machine learning,” in Proceedings of the 12th ACM Workshop on Artificial Intelligence and Security, 2019, pp. 105–116.

- [9] H. Xu and S. Mannor, “Robustness and generalization,” Machine Learning, vol. 86, no. 3, pp. 391–423, 2012.

- [10] C. G. Northcutt, A. Athalye, and J. Mueller, “Pervasive label errors in test sets destabilize machine learning benchmarks,” arXiv preprint arXiv:2103.14749, 2021.

- [11] A. Raghunathan, S. M. Xie, F. Yang, J. C. Duchi, and P. Liang, “Adversarial training can hurt generalization,” arXiv:1906.06032, 2019.

- [12] T. Kohonen, Learning Vector Quantization. Berlin, Heidelberg: Springer Berlin Heidelberg, 1995, pp. 175–189.

- [13] L. Devroye, L. Györfi, and G. Lugosi, A probabilistic theory of pattern recognition. Springer Science & Business Media, 2013, vol. 31.

- [14] C. N. Mavridis and J. S. Baras, “Convergence of stochastic vector quantization and learning vector quantization with bregman divergences,” in 21rst IFAC World Congress. IFAC, 2020.

- [15] E. A. Uriarte and F. D. Martín, “Topology preservation in som,” International Journal of Applied Mathematics and Computer Sciences, vol. 1, no. 1, pp. 19–22, 2005.

- [16] S. Saralajew, L. Holdijk, M. Rees, and T. Villmann, “Robustness of generalized learning vector quantization models against adversarial attacks,” in International Workshop on Self-Organizing Maps. Springer, 2019, pp. 189–199.

- [17] K. Rose, “Deterministic annealing for clustering, compression, classification, regression, and related optimization problems,” Proceedings of the IEEE, vol. 86, no. 11, pp. 2210–2239, 1998.

- [18] V. S. Borkar, Stochastic approximation: a dynamical systems viewpoint. Springer, 2009, vol. 48.

- [19] A. Banerjee, S. Merugu, I. S. Dhillon, and J. Ghosh, “Clustering with bregman divergences,” Journal of Machine Learning Research, vol. 6, no. Oct, pp. 1705–1749, 2005.

- [20] T. Villmann, S. Haase, F.-M. Schleif, B. Hammer, and M. Biehl, “The mathematics of divergence based online learning in vector quantization,” in IAPR Workshop on Artificial Neural Networks in Pattern Recognition. Springer, 2010, pp. 108–119.

- [21] C. N. Mavridis and J. S. Baras, “Progressive graph partitioning based on information diffusion,” in Conference on Decision and Control (CDC). IEEE, 2021, pp. 37–42.

- [22] C. N. Mavridis, N. Suriyarachchi, and J. S. Baras, “Detection of dynamically changing leaders incomplex swarms from observed dynamic data,” in 2020 Conference on Decision and Game Theory for Security (GameSec), 2020.

- [23] ——, “Maximum-entropy progressive state aggregation for reinforcement learning,” in Conference on Decision and Control (CDC). IEEE, 2021, pp. 5144–5149.

- [24] M. Biehl, B. Hammer, and T. Villmann, “Prototype-based models in machine learning,” Wiley Interdisciplinary Reviews: Cognitive Science, vol. 7, no. 2, pp. 92–111, 2016.

- [25] Y. Linde, A. Buzo, and R. Gray, “An algorithm for vector quantizer design,” IEEE Transactions on Communications, vol. 28, 1980.

- [26] M. Sabin and R. Gray, “Global convergence and empirical consistency of the generalized lloyd algorithm,” IEEE Transactions on Information Theory, vol. 32, no. 2, pp. 148–155, 1986.

- [27] L. Bottou and Y. Bengio, “Convergence properties of the k-means algorithms,” in Advances in Neural Information Processing Systems, 1995, pp. 585–592.

- [28] R. O. Duda, P. E. Hart, and D. G. Stork, Pattern classification. John Wiley & Sons, 2012.

- [29] A. Sato and K. Yamada, “Generalized learning vector quantization,” in Advances in Neural Information Processing Systems, 1996, pp. 423–429.

- [30] B. Hammer and T. Villmann, “Generalized relevance learning vector quantization,” Neural Networks, vol. 15, no. 8-9, pp. 1059–1068, 2002.

- [31] H. K. B. Babiker and R. Goebel, “Using kl-divergence to focus deep visual explanation,” arXiv preprint arXiv:1711.06431, 2017.

- [32] E. T. Jaynes, “Information theory and statistical mechanics,” Physical Review, vol. 106, no. 4, p. 620, 1957.

- [33] K. Miettinen, Nonlinear multiobjective optimization. Springer Science & Business Media, 2012, vol. 12.

- [34] H. Robbins and S. Monro, “A stochastic approximation method,” The Annals of Mathematical Statistics, pp. 400–407, 1951.

- [35] J. S. Baras and A. LaVigna, “Convergence of a neural network classifier,” in Advances in Neural Information Processing Systems, 1991.

- [36] D. Dua and C. Graff, “UCI machine learning repository,” 2017. [Online]. Available: http://archive.ics.uci.edu/ml

- [37] J. W. Smith, J. Everhart, W. Dickson, W. Knowler, and R. Johannes, “Using the adap learning algorithm to forecast the onset of diabetes mellitus,” in Proceedings of the Annual Symposium on Computer Application in Medical Care. American Medical Informatics Association, 1988, p. 261.

- [38] F. Carcillo, Y.-A. Le Borgne, O. Caelen, Y. Kessaci, F. Oblé, and G. Bontempi, “Combining unsupervised and supervised learning in credit card fraud detection,” Information Sciences, 2019.

- [39] M. A. Hearst, S. T. Dumais, E. Osuna, J. Platt, and B. Scholkopf, “Support vector machines,” IEEE Intelligent Systems and their Applications, vol. 13, no. 4, pp. 18–28, 1998.

- [40] L. Breiman, “Random forests,” Machine Learning, vol. 45, 2001.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/98e7c52f-d63e-49df-b979-4cdc84d879d6/christos_mavridis.png) |

Christos N. Mavridis (M’20) received the Diploma degree in electrical and computer engineering from the National Technical University of Athens, Greece, in 2017, and the M.S. and Ph.D. degrees in electrical and computer engineering at the University of Maryland, College Park, MD, USA, in 2021. His research interests include learning theory, stochastic optimization, systems and control theory, multi-agent systems, and robotics. He has worked as a researcher at the Department of Electrical and Computer Engineering at the University of Maryland, College Park, and as a research intern for the Math and Algorithms Research Group at Nokia Bell Labs, NJ, USA, and the System Sciences Lab at Xerox Palo Alto Research Center (PARC), CA, USA. Dr. Mavridis is an IEEE member, and a member of the Institute for Systems Research (ISR) and the Autonomy, Robotics and Cognition (ARC) Lab. He received the Ann G. Wylie Dissertation Fellowship in 2021, and the A. James Clark School of Engineering Distinguished Graduate Fellowship, Outstanding Graduate Research Assistant Award, and Future Faculty Fellowship, in 2017, 2020, and 2021, respectively. He has been a finalist in the Qualcomm Innovation Fellowship US, San Diego, CA, 2018, and he has received the Best Student Paper Award (1st place) in the IEEE International Conference on Intelligent Transportation Systems (ITSC), 2021. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/98e7c52f-d63e-49df-b979-4cdc84d879d6/john_baras.png) |

John S. Baras (LF’13) received the Diploma degree in electrical and mechanical engineering from the National Technical University of Athens, Greece, in 1970, and the M.S. and Ph.D. degrees in applied mathematics from Harvard University, Cambridge, MA, USA, in 1971 and 1973, respectively. He is a Distinguished University Professor and holds the Lockheed Martin Chair in Systems Engineering, with the Department of Electrical and Computer Engineering and the Institute for Systems Research (ISR), at the University of Maryland College Park. From 1985 to 1991, he was the Founding Director of the ISR. Since 1992, he has been the Director of the Maryland Center for Hybrid Networks (HYNET), which he co-founded. His research interests include systems and control, optimization, communication networks, applied mathematics, machine learning, artificial intelligence, signal processing, robotics, computing systems, security, trust, systems biology, healthcare systems, model-based systems engineering. Dr. Baras is a Fellow of IEEE (Life), SIAM, AAAS, NAI, IFAC, AMS, AIAA, Member of the National Academy of Inventors and a Foreign Member of the Royal Swedish Academy of Engineering Sciences. Major honors include the 1980 George Axelby Award from the IEEE Control Systems Society, the 2006 Leonard Abraham Prize from the IEEE Communications Society, the 2017 IEEE Simon Ramo Medal, the 2017 AACC Richard E. Bellman Control Heritage Award, the 2018 AIAA Aerospace Communications Award. In 2016 he was inducted in the A. J. Clark School of Engineering Innovation Hall of Fame. In 2018 he was awarded a Doctorate Honoris Causa by his alma mater the National Technical University of Athens, Greece. |