capbtabboxtable[][\FBwidth]

One Explanation is Not Enough:

Structured Attention Graphs for Image Classification

Abstract

Saliency maps are popular tools for explaining the decisions of convolutional neural networks (CNNs) for image classification. Typically, for each image of interest, a single saliency map is produced, which assigns weights to pixels based on their importance to the classification. We argue that a single saliency map provides an incomplete understanding since there are often many other maps that can explain a classification equally well. In this paper, we propose to utilize a beam search algorithm to systematically search for multiple explanations for each image. Results show that there are indeed multiple relatively localized explanations for many images. However, naively showing multiple explanations to users can be overwhelming and does not reveal their common and distinct structures. We introduce structured attention graphs (SAGs), which compactly represent sets of attention maps for an image by visualizing how different combinations of image regions impact the confidence of a classifier. An approach to computing a compact and representative SAG for visualization is proposed via diverse sampling. We conduct a user study comparing the use of SAGs to traditional saliency maps for answering comparative counterfactual questions about image classifications. Our results show that user accuracy is increased significantly when presented with SAGs compared to standard saliency map baselines.

1 Introduction

With the emergence of convolutional neural networks (CNNs) as the most successful learning paradigm for image classification, the need for human understandable explanations of their decisions has gained prominence. Explanations lead to a deeper user understanding and trust of the neural network models, which is crucial for their deployment in safety-critical applications. They can also help identify potential causes of misclassification. An important goal of explanation is for the users to gain a mental model of the CNNs, so that the users can understand and predict the behavior of the classifier[18] in cases that have not been seen. A better mental model would lead to appropriate trust and better safeguards of the deep networks in the deployment process.

A popular line of research towards this goal has been to display attention maps, sometimes called saliency maps or heatmaps. Most approaches assign weights to image regions based on the importance of that region to the classification decision, which is then visualized to the user. This approach implicitly assumes that a single saliency map with region-specific weights is sufficient for the human to construct a reasonable mental model of the classification decision for the particular image.

We argue that this is not always the case. Fig. 1(d-f) show three localized attention maps highlighting different regions. Each of these images, if given as input to the CNN, results in a very confident prediction of the correct category. However, this information is not apparent from a single saliency map as produced by current methods (Fig. 1(b-c)). This raises several questions: How many images have small localized explanations (i.e., attention maps) that lead to high confidence predictions? Are there multiple distinct high confidence explanations for each image, and if so, how to find them? How can we efficiently visualize multiple explanations to users to yield deeper insights?

The first goal of this paper is to systematically evaluate the sizes and numbers of high-confidence local attention maps of CNN image classifications.

For this purpose, rather than adopting commonly used gradient-based optimization approaches, we employ discrete search algorithms to find multiple high-confidence attention maps that are distinct in their coverage.

Image

heatmap

heatmap

(98% confidence)

(97% confidence)

(93% confidence)

The existence of multiple attention maps shows that CNN decisions may be more comprehensively explained with a logical structure in the form of disjunctions of conjunctions of features represented by local regions instead of a singleton saliency map. However, a significant challenge in utilizing this as an explanation is to come up with a proper visualization to help users gain a more comprehensive mental model of the CNN.

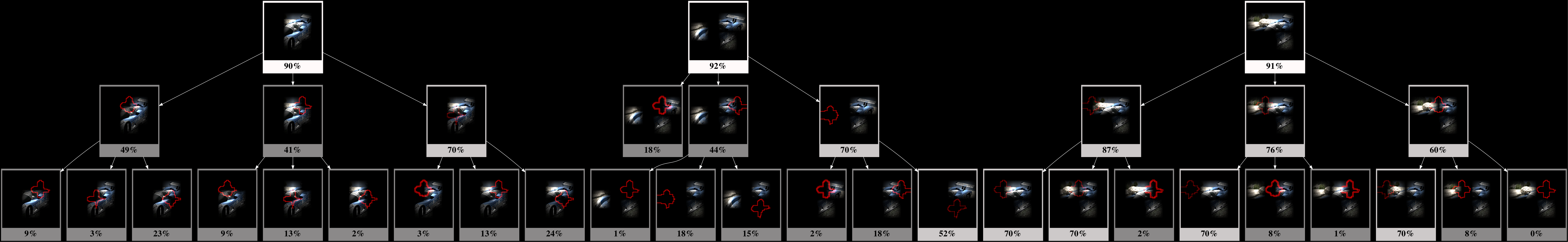

This leads us to our second contribution of the paper, Structured Attention Graphs (SAGs) 111Source code for generating SAGs: https://github.com/viv92/structured-attention-graphs , which are directed acyclic graphs over attention maps of different image regions. The maps are connected based on containment relationships between the regions, and each map is accompanied with the prediction confidence of the classification based on the map (see Fig. 2 for an example). We propose a diverse sampling approach to select a compact and diverse set of maps for SAG construction and visualization.

This new SAG visualization allows users to efficiently view information from a diverse set of maps, which serves as a novel type of explanation for CNN decisions.

In particular, SAGs provide insight by decomposing local maps into sub-regions and making the common and distinct structures across maps explicit. For example, observing that the removal of a particular patch leads to a huge drop in the confidence suggests that the patch might be important in that context.

Our visualization can also be viewed as representing a (probabilistic) Monotone Disjunctive Normal Form (MDNF) Boolean expression, where propositional symbols correspond to primitive image regions we call ‘patches’. Each MDNF expression is a disjunction of conjunctions, where any one of the conjunctions (e.g., one of the regions in Fig. 1) is sufficient for a high confident classification. Following [14], we call these minimal sufficient explanations (MSEs). Each conjunction is true only when all the patches that correspond to its symbols are present in the image.

We conducted a large-scale user study (100 participants total) to compare SAGs to two saliency map methods. We wondered if participants can answer challenging counterfactual questions with the help of explanations

, e.g., how a CNN model classifies an image if parts of the image are occluded . In our user study, participants were provided two different occluded versions of the image (i.e., different parts of the image are occluded ) and asked to choose one that they think would be classified more positively. Results show that when presented with SAG, participants correctly answer significantly more of these questions compared to the baselines, which suggests that SAGs help them build better mental models of the behavior of the classifier on different subimages.

In summary, our contributions are as follows:

-

•

With a beam search algorithm, we conducted a systematic study of the sizes and numbers of attention maps that yield high confidence classifications of a CNN (VGGNet [28]) on ImageNet [8]. We showed that the proposed beam search algorithm significantly outperforms Grad-CAM and I-GOS in its capability to locate small attention maps to explain CNN decisions.

-

•

We introduce Structured Attention Graphs (SAGs) as a novel representation to visualize image classifications by convolutional neural networks.

-

•

We conducted a user study demonstrating the effectiveness of SAGs in helping users gain a deeper understanding of CNN’s decision making.

2 Related Work

Much recent work on interpretability of CNNs is based on different ways to generate saliency maps depicting the importance of different regions to the classification decisions. These include gradient-based methods that compute the gradient of the outputs of different units with respect to pixel inputs [32, 27, 29, 26, 30, 3, 26, 33, 25], perturbation-based methods, which perturb parts of the input to see which ones are most important to preserve the final decision [6, 10], and concept-based methods, which analyze the alignment between individual hidden neurons and a set of semantic concepts [4, 15, 34]. Importantly, they all generate a single saliency map for the image and have been found to be brittle and unreliable [16, 11].

Another popular approach is LIME [22], which constructs simplified interpretable local classifiers consistent with the black-box classifier in the neighborhood of a single example. However, the local classifier learns a single linear function, which is sufficient to correctly classify the image but does not guarantee consistency with the classifier on its sub-images. More recently, Anchors [23] learns multiple if-then-rules that represent sufficient conditions for classifications. However, this work did not emphasize image classification and did not systematically study the prevalence of multiple explanations for the decisions of CNNs. The if-then-rules in Anchors can be thought of as represented by the root nodes in our SAG. SAGs differ from them by sampling a diverse set for visualization, as well as by additionally representing the relationships between different subregions in the image and their impact on the classification scores of the CNN. The ablation study of Section 5.3 shows that SAGs enable users to better understand the importance of different patches on the classification compared to Anchors-like rules represented by their root nodes.

Some prior work identifies explanations in terms of minimal necessary features [9] and minimal sufficient features [6]. Other work generates counterfactuals that are coherent with the underlying data distribution and provides feasible paths to the target counterfactual class based on density weighted metrics [20]. In contrast, our work yields multiple explanations in terms of minimal sufficient features and visualizes the score changes when some features are absent – simultaneously answering multiple counterfactual questions.

Network distillation methods that compile a neural network into a boolean circuit [5] or a decision tree [17] often yield uninterpretable structures due to their size or complexity. Our work balances the information gain from explanations with the interpretability of explanations by providing a small set of diverse explanations structured as a graph over attention maps.

3 Investigating Image Explanations

In this section, we provide a comprehensive study of the number of different explanations of the images as well as their sizes. As the number of explanations might be combinatorial, we limit the search space by subdividing each image into patches, which corresponds to the resolution utilized in Grad-CAM [25]. Instead of using a heatmap algorithm, we propose to utilize search algorithms to check the CNN (VGGNet [28]) predictions on many combinations of patches in order to determine whether they are able to explain the prediction of the CNN by being a minimum sufficient explanation, defined as having a high prediction confidence from a minimal combination of patches w.r.t. using the full image. The rationale is that if the CNN is capable of achieving the same confidence from a subimage, then the rest of the image may not add substantially to the classification decision. This corresponds to common metrics used in evaluating explanations [24, 19, 21], which usually score saliency maps based on whether they could use a small highlighted part of the image to achieve similar classification accuracy as using the full image. This experiment allows us to examine multiple interesting aspects, such as the minimal number of patches needed to explain each image, as well as the number of diverse explanations by exploring different combinations of patches. The ImageNet validation dataset of images is used for our analysis.

Formally, we assume a black-box classifier that maps , where is an instance space and is a set of classes. If is an instance, we use to denote the output class-conditional probability on class . The predicted class-conditional probability is referred as confidence of the classification in the rest of the paper. In this paper we assume is a set of images. Each image can be seen as a set of pixels and is divided into non-overlapping primitive regions called ‘patches,’ i.e., , where if . For any image , we let and call the target class of . We associate the part of the image in each patch with a propositional symbol or a literal. A conjunction of a set of literals is the image region that corresponds to their union. The confidence of a conjunction is the output of the classifier applied to it, denoted by . We determine this by running the classifier on a perturbed image where the pixels in are either set to zeros or to a highly blurred version of the original image. The latter method is widely used in saliency map visualization methods to remove information without creating additional spurious boundaries that can distort the classifier predictions [10, 19, 21]. We compare the effects of the two perturbation styles in the appendix.

A minimal sufficient explanation (MSE) of an image as class w.r.t. is defined as a minimal conjunction/region that achieves a high prediction confidence w.r.t. using the entire image, where we set as a- sufficiently high fraction in our experiments. That is, if we provide the classifier with only the region represented by the MSE , it will yield a confidence at that is at least of the confidence for the original (unoccluded) image as input. Often we will be most interested in MSEs for .

3.1 Finding MSEs via Search

A central claim of the paper we purport to prove is that the MSEs are not unique, and can be found by systematic search in the space of subregions of the image. The search objective is to find the minimal sufficient explanations that score higher than a threshold where no proper sub-regions exceed the threshold, i.e., find all such that:

| (1) |

for some high probability threshold .

But such a combinatorial search is too expensive to be feasible if we treat each image pixel as a patch. Hence we divide the image into a coarser set of non-overlapping patches. One could utilize a superpixel tessellation of an image to form the set of coarser patches. We adopt a simpler approach: we downsample the image into a low resolution image. Each pixel in the downsampled image corresponds to a coarser patch in the original image. Hence a search on the downsampled image is computationally less expensive. We set the hyperparameter in all our experiments. Further, to use an attention map as a heuristic for search on the downsampled image, we perform average pooling on w.r.t. each patch . This gives us an attention value for each patch, hence constituting a coarser attention map. Once the attention map is generated in low resolution, we use bilinear upsampling to upsample it to the original image resolution to be used as a mask. Bilinear upsampling creates a slightly rounded region for each patch which avoids sharp corners that could be erroneously picked up by CNNs as features.

[.47]

\capbtabbox[.47]

Overlap = 0

Method

Mean

Variance

Mode

CombS

1.56

0.69

1

BeamS-3

1.72

0.98

1

BeamS-15

1.87

1.01

2

Overlap = 1

Method

Mean

Variance

Mode

CombS

3.16

5.82

1

BeamS-3

4.18

7.24

2

BeamS-15

4.51

7.22

3

\capbtabbox[.47]

Overlap = 0

Method

Mean

Variance

Mode

CombS

1.56

0.69

1

BeamS-3

1.72

0.98

1

BeamS-15

1.87

1.01

2

Overlap = 1

Method

Mean

Variance

Mode

CombS

3.16

5.82

1

BeamS-3

4.18

7.24

2

BeamS-15

4.51

7.22

3

We analyze two different search methods for finding the MSEs:

Restricted Combinatorial Search: Combinatorial search constrains the size of the MSE to patches and finds the MSEs by searching for all combinations (conjunctions) of patches that satisfy the criterion in Equation 1. However, such a combinatorial search over the entire downsampled image will be of the order , which is computationally expensive. Hence, we first prune the search space by selecting the most relevant patches, where the relevance of each patch is given by an attention map as , and then carry out a combinatorial search. We set and vary as hyperparameters. These hyperparameter choices allow the combinatorial search to complete in reasonable time.

Beam Search: Beam search searches for a set of at most MSEs by maintaining a set of distinct conjunctions of patches as states at the iteration. It adds a patch to each conjunction to obtain a new set of distinct conjunctions as successor states for the next iteration, until they satisfy the criterion in equation 1 to yield the set . This is similar to the traditional beam search with beam width , but we leverage the attention map for generating the successor states. More concretely, the search is initialized by selecting the highest weighted patches from the attention map as the set of initial states . At any iteration , for each state , we generate candidate successor states by adding the highest weighted patches in the attention map that are not already in . By doing this for each of the states in , we generate a set of candidate successor states. We obtain the classification score for each candidate successor state and select the highest scoring states as the successor states . We chose as a hyperparameter. This choice of value for the hyperparameter allows the beam search to complete in reasonable time.

3.2 Size of Minimal Sufficient Explanations

Each search method yields a set of MSEs constituting multiple minimal regions of an image sufficient for the black-box classifier to correctly classify the image with a high confidence. We measure the size of these minimal regions in terms of the number of patches they are composed of.

Fig. 3 shows these plots for different search methods on the VGG network. Results on ResNet-50 are shown in the appendix. For each chosen size , we plot the cumulative number of images whose MSE has a size . We see that 80% images of the ImageNet validation dataset have at least one MSE comprising of 10 or less patches. This implies that 80% images of the dataset can be confidently classified by the CNN using a region of the image comprising of just 20% of the area of the original image, showing that in most cases CNNs are able to make decisions based on local information instead of looking at the entire image. The remaining 20% of the images in the dataset have MSEs that fall in the range of 11-49 patches (20% - 100% of the original image). Besides, one can see that many more images can be explained via the beam search approach w.r.t. conventional heatmap generation approaches, because the search algorithm evaluated combinations more comprehensively than these heatmap approaches and is less likely to include irrelevant regions. For example, at 10 patches, beam search with all beam sizes can explain about of ImageNet images, whereas Grad-CAM and I-GOS can only explain about . Although beam search as an saliency map method is limited to a low resolution whereas some other saliency map algorithms can generate heatmaps at a higher resolution, this result shows that the beam search algorithm is more effective than traditional saliency map approaches at a low resolution.

3.3 Number of Diverse MSEs

Given the set of MSEs obtained via different search methods, we also analyze the number of diverse MSEs that exist for an image. Two MSEs of the same image are considered to be diverse if they have less than two patches in common. Table 1 provides the statistics on the number of diverse MSEs obtained by allowing for different degrees of overlap across the employed search methods. We see that images tend to have multiple MSEs sufficient for confident classification, with explanations per image if we do not allow any overlap, and explanations per image if we allow a 1-patch overlap. Table 1 provides the percentage of images having a particular number of diverse MSEs. This result confirms our hypothesis that in many images CNNs have more than one way to classify each single image. In those cases, explanations based on a single saliency map pose an incomplete picture of the decision-making of the CNN classifier.

| Overlap = 0 | Overlap = 1 | |||||||||

| 1 | 2 | 3 | 4 | 5 | 1 | 2 | 3 | 4 | 5 | |

| CombS | 87% | 9% | 2% | 0% | 0% | 79% | 5% | 4% | 2% | 2% |

| BeamS-3 | 84% | 11% | 2% | 0% | 0% | 65% | 15% | 6% | 3% | 2% |

| BeamS-15 | 79% | 15% | 2% | 1% | 0% | 59% | 16% | 8% | 5% | 2% |

4 Structured Attention Graphs

From the previous section, we learned about the prevalence of multiple explanations. How can we then, effectively present them to human users so that they can better build mental models of the behavior of image classifiers?

This section introduces structured attention graphs (SAGs), a new way to compactly represent sets of attention maps for an image by visualizing how different combinations of image regions impact the confidence of a classifier. Fig. 2 shows an example. A SAG is a directed acyclic graph whose nodes correspond to sets of image patches and edges represent subset relationships between sets defined by the removal of a single patch.

The root nodes of SAG correspond to sets of patches that represent minimal sufficient explanations (MSEs) as defined in the previous section.

Typically, the score of the root node is higher than all its children . The size of the drop in the score may correspond to the importance of the removed patch .

Under the reasonable assumption that the function is monotonic with the set of pixels covered by the region, the explanation problem generalizes learning Monotone DNF (MDNF) boolean expressions from membership (yes/no) queries, where each disjunction corresponds to a root node of the SAG, which in turn represents a conjunction of primitive patches.

Information-theoretic bounds imply that the general class of MDNF expressions is not learnable with polynomial number of membership queries although some special cases are learnable [2].

The next two subsections describe how a SAG is constructed.

4.1 Finding Diverse MSEs

We first find multiple candidate MSEs

, for some through search. We observe that the obtained set often has a large number of similar MSEs that share a number of literals. To minimize the cognitive burden on the user and efficiently communicate relevant information with a small number of MSEs, we heuristically prune the above set to select a small diverse subset. Note that we prefer a diverse subset (based on dispersion metrics) over a representative subset (based on coverage metrics). This choice was based on the observation that even a redundant subset of candidates can achieve high coverage when the exhaustive set has high redundancy. But has lower information compared to a diverse subset of candidates obtained by optimizing a dispersion metric.

More concretely, we want to find an information-rich diverse solution set of a desired size such that is minimized for all where . We note that can be obtained by solving the following subset selection problem:

For any subset of the candidate set, is the cardinality of the largest pairwise intersection over all member sets of . is the subset with minimum value for among all the subsets of a fixed cardinality . Minimizing is equivalent to maximizing a dispersion function, for which a greedy algorithm obtains a solution up to a provable approximation factor [7]. The algorithm initializes to the empty set, and at each step adds a new set to it which minimizes . The constant is set to in order to show the users a sufficiently diverse and yet not overwhelming number of candidates in the SAG.

4.2 Patch Deletion to Build the SAG

After we have obtained the diverse set of candidates , it is straightforward to build the SAG. Each element of forms a root node for the SAG. Child nodes are recursively generated by deleting one patch at a time from a parent node (equivalent to obtaining leave-one-out subsets of a parent set). We calculate the confidence of each node by a forward pass of the image represented by the node through the deep network. Since nodes with low probability represent less useful sets of patches, we do not expand nodes with probability less than a threshold as a measure to avoid visual clutter in the SAG. is set to as a sufficiently low value.

A flowchart illustrating the steps involved to generate a SAG for a given image input is shown in Fig.5. All the SAGs presented in the paper explain the predictions of VGGNet [28] as the classifier. Results on ResNet-50,

as well as details regarding the computation costs for generating SAGs are provided in the appendix.

5 User Study

We conducted a user study to evaluate the effectiveness of our proposed SAG visualization.222The user study has been approved by IRB, followed the informed consent procedure and involved no more than minimal risk to the participants. User studies have been a popular method to evaluate explanations. For instance, Grad-CAM [25] conducted a user study to evaluate faithfulness and user trust on their saliency maps, and LIME [22] asked participants to predict generalizability of their method by showing their explanations to the participants. This section describes the design of our study and its results.

5.1 Study Design and Procedure

We measured human understanding of classifiers indirectly with predictive power, defined as the capability of predicting given a new set of patches that has not been shown. This can be thought of as answering

counterfactual questions – “how will the classification score change if parts of the image are occluded?” Since humans do not excel in predicting numerical values, we focus on answering comparative queries, which predict the TRUE/FALSE value of the query: , with being the indicator function. In other words, participants were provided with two new sets of patches that have not been shown in the SAG presented to them and were asked to predict which of the two options would receive a higher confidence score for the class predicted by the classifier on the original image.

Using this measure, we compared SAG with two state-of-the-art saliency map approaches I-GOS [21] and Grad-CAM [25].

We recruited 60 participants comprising of graduate and undergraduate students in engineering students at our university (37 males, 23 females, age: 18-30 years). Participants were randomly divided into three groups with each using one of the three saliency map approaches (i.e., between-subjects study design). They were first shown a tutorial informing them about the basics of image classification and saliency map explanations. Then they were directed to the task that involved answering 10 sets of questions. Each set involved an image from ImageNet. These 10 images are sampled from a subset of ImageNet comprising of 10 classes. Each question set composed of two sections. First, participants were shown a reference image with its classification but no explanation. Then they were asked to select one of the two different perturbed versions of the reference image with different regions of the image occluded , based on which they think would be more likely to be classified as the same class as the original image (shown in Fig. 6(a)). They were also asked to provide a confidence rating about how sure they were about their response. In the second section, the participants were shown the same reference image, but now with a saliency map or SAG additionally. They were asked the same question to choose one of the two options, but this time under the premise of an explanation. Along with a SAG representation, they can click on an option to highlight the corresponding SAG nodes that have overlapping patches with the selected option and also highlight their outgoing edges (as shown in Fig. 6(c)). Each participant was paid for their participation.

The metrics obtained from the user study include the number of correct responses among the 10 questions (i.e., score) for each participant, the confidence score for each of their response (i.e., 100 being completely confident; 0 being not at all), and the time taken to answer each response.

5.2 Results

Fig. 9 shows the results comparing the metrics across the three conditions. Fig. 9(a) indicates that participants got more answers correct when they were provided with SAG explanations (Mean8.6, SD1.698) than when they were provided with I-GOS (Mean5.4, SD1.188) or Grad-CAM (Mean5.3, SD1.031) explanations. The differences between SAG and each of the two other methods are statistically significant (0.0001 in Mann-Whitney U tests for both333To check the normality of each variable, we first ran Shapiro-Wilk tests, and the results indicated that some of the variables are not normally distributed. Thus we used the Mann-Whitney U tests which are often used for comparing the means of two variables that are not necessarily normally distributed [31].).

Fig. 9(b) shows the participants’ levels of confidence for correct and incorrect answers across all three conditions after being provided with the explanations.

The plots show that their confidence levels are almost the same for both correct and incorrect responses in the cases of I-GOS and Grad-CAM. However, for the case of SAG, participants have lower confidence for incorrect responses and higher confidence for correct responses. Interestingly, the variance in confidence for incorrect answers is very low for the participants working with SAG explanations. The increased confidence for correct responses and reduced confidence for incorrect responses implies that SAG explanations allow users to “know what they know” and when to trust their mental models. The indifference in confidence for correctness in I-GOS and Grad-CAM may imply that participants lack a realistic assessment of the correctness of their

mental models.

Fig. 9(c) shows that SAG explanations required more effort for participants to interpret explanations. This is expected because SAGs convey more information compared to other saliency maps. However, we believe that the benefits of gaining the right mental models and “appropriate trust” justify the longer time users need to digest the explanations.

5.3 Ablation Study

The two major components of the SAG condition used in the study are the graph-based attention map visualization and the user interaction for highlighting relevant parts in the visualization. As an ablation study, we include two ablated versions of SAGs: (1) SAG/I, which is a SAG without the click interaction, comprising only of the graph visualization and (2) SAG/G, which is a SAG without the graph visualization, comprising only of the root nodes and the interaction. These root nodes of the SAG are similar in spirit to the if-then rules of Anchors [23] and serve as an additional baseline.

To evaluate how participants would work with SAG/I and SAG/G, we additionally recruited 40 new participants (30 males, 10 females, age: 18-30 years) from the same recruitment effort as for earlier experiments and split them into two groups, with each group evaluating an ablated version of SAGs via the aforementioned study procedure. The results of the ablation study are shown in Fig. 8. The participants received significantly lower scores when the user interaction (SAG/I) or the graph structure (SAG/G) are removed (0.0001 in Mann-Whitney U tests for both; data distribution shown in Fig. 8(a)). This implies that both the interaction for highlighting and the graph structure are critical components of SAGs. The correlations of high confidence with correctness and low confidence with incorrectness are maintained across the ablated versions (as in Fig. 8(b)). Participants spent a longer time to interpret a SAG when they were not provided with the interaction feature, while interpreting just the root nodes took a shorter time (as in Fig. 8(c)). It is also worth noting that the differences between SAG without the interactive feature (SAG/I) and each of the two baseline methods (i.e., Grad-CAM and I-GOS) are also statistically significant (0.0004 and 0.0012, respectively), showing the effectiveness of presenting multiple explanations using the graph structure. More data for all the 100 participants involved in the studies is provided in the appendix.

6 Conclusions and Future Work

In this paper, we set out to examine the number of possible explanations for the decision-making of an image classifier.

Through search methods, especially beam search, we have located an average of explanations per image assuming no overlap and explanations per image assuming an overlap of at most 1 patch (about of the area of the image). Moreover, we have found that of the images in ImageNet has an explanation of at most of the area of the image, and it is shown that beam search is more efficient than other saliency map approaches such as GradCAM and I-GOS in locating compact explanations at a low resolution.

Based on these findings, we presented a new visual representation, SAG, that explicitly shows multiple explanations of an image. It effectively shows how different parts of an image contribute to the confidence of an image classifier’s decision.

We conducted a large-scale human-subject study (i.e., 100 participants), and

participants were able to answer counterfactual-style questions significantly more accurately with SAGs than with the baseline methods.

There are many interesting future research directions. One weakness of our approach is that it takes more time for people to digest SAGs than the existing methods. This could be mitigated via more advanced interfaces that allow users to interactively steer and probe the system to gain useful insights [13]. Another direction is to generalize our approach to multiple images and apply our methodology to other modalities such as language and videos.

Acknowledgements

This work was supported by DARPA #N66001-17-2-4030 and NSF #1941892. Any opinions, findings, conclusions, or recommendations expressed are the authors’ and do not reflect the views of the sponsors.

References

- [1]

- Abasi et al. [2014] Hasan Abasi, Nader H. Bshouty, and Hanna Mazzawi. 2014. On Exact Learning Monotone DNF from Membership Queries. In International Conference on Algorithmic Learning Theory. 111–124.

- Bach et al. [2015] Sebastian Bach, Alexander Binder, Grégoire Montavon, Frederick Klauschen, Klaus Robert Müller, and Wojciech Samek. 2015. On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PLoS One 10 (July 2015).

- Bau et al. [2017] David Bau, Bolei Zhou, Aditya Khosla, Aude Oliva, and Antonio Torralba. 2017. Network Dissection: Quantifying Interpretability of Deep Visual Representations. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 6541–6549.

- Choi et al. [2017] Arthur Choi, Weijia Shi, Andy Shih, and Adnan Darwiche. 2017. Compiling neural networks into tractable Boolean circuits. intelligence (2017).

- Dabkowski and Gal [2017] Piotr Dabkowski and Yarin Gal. 2017. Real Time Image Saliency for Black Box Classifiers. In Advances in Neural Information Processing Systems (NIPS), Vol. 30.

- Dasgupta et al. [2013] Anirban Dasgupta, Ravi Kumar, and Sujith Ravi. 2013. Summarization through submodularity and dispersion. In Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). 1014–1022.

- Deng et al. [2009] Jia Deng, Wei Dong, Richard Socher, Li-Jia Li, Kai Li, and Li Fei-Fei. 2009. Imagenet: A large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition. IEEE, 248–255.

- Dhurandhar et al. [2018] Amit Dhurandhar, Pin-Yu Chen, Ronny Luss, Chun-Chen Tu, Paishun Ting, Karthikeyan Shanmugam, and Payel Das. 2018. Explanations based on the missing: Towards contrastive explanations with pertinent negatives. In Advances in Neural Information Processing Systems (NIPS), Vol. 31. 592–603.

- Fong and Vedaldi [2017] R. C. Fong and A. Vedaldi. 2017. Interpretable Explanations of Black Boxes by Meaningful Perturbation. In 2017 IEEE International Conference on Computer Vision (ICCV). 3449–3457.

- Ghorbani et al. [2019] Amirata Ghorbani, Abubakar Abid, and James Zou. 2019. Interpretation of neural networks is fragile. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 33. 3681–3688.

- He et al. [2016] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2016. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition. 770–778.

- Hohman et al. [2019] Fred Hohman, Minsuk Kahng, Robert Pienta, and Duen Horng Chau. 2019. Visual analytics in deep learning: An interrogative survey for the next frontiers. IEEE Transactions on Visualization and Computer Graphics 25, 8 (2019), 2674–2693.

- Khan et al. [2009] Omar Zia Khan, Pascal Poupart, and James P. Black. 2009. Minimal Sufficient Explanations for Factored Markov Decision Processes. In Proceedings of the 19th International Conference on Automated Planning and Scheduling (ICAPS). AAAI.

- Kim et al. [2018] Been Kim, Martin Wattenberg, Justin Gilmer, Carrie Cai, James Wexler, Fernanda Viegas, and Rory Sayres. 2018. Interpretability beyond feature attribution: Quantitative testing with concept activation vectors (TCAV). In Proceedings of the 35th International Conference on Machine Learning (ICML). PMLR, 2668–2677.

- Kindermans et al. [2017] Pieter-Jan Kindermans, Sara Hooker, Julius Adebayo, Maximilian Alber, Kristof T Schütt, Sven Dähne, Dumitru Erhan, and Been Kim. 2017. The (un)reliability of saliency methods. arXiv preprint arXiv:1711.00867 (2017).

- Liu et al. [2018] Xuan Liu, Xiaoguang Wang, and Stan Matwin. 2018. Improving the interpretability of deep neural networks with knowledge distillation. In 2018 IEEE International Conference on Data Mining Workshops (ICDMW). IEEE, 905–912.

- Mohseni et al. [2021] Sina Mohseni, Niloofar Zarei, and Eric D Ragan. 2021. A multidisciplinary survey and framework for design and evaluation of explainable AI systems. ACM Transactions on Interactive Intelligent Systems (TiiS) 11, 3-4 (2021), 1–45.

- Petsiuk et al. [2018] Vitali Petsiuk, Abir Das, and Kate Saenko. 2018. RISE: Randomized Input Sampling for Explanation of Black-box Models. In Proceedings of the British Machine Vision Conference (BMVC).

- Poyiadzi et al. [2020] Rafael Poyiadzi, Kacper Sokol, Raul Santos-Rodriguez, Tijl De Bie, and Peter Flach. 2020. FACE: Feasible and actionable counterfactual explanations. In Proceedings of the AAAI/ACM Conference on AI, Ethics, and Society. 344–350.

- Qi et al. [2020] Zhongang Qi, Saeed Khorram, and Li Fuxin. 2020. Visualizing Deep Networks by Optimizing with Integrated Gradients.. In Proceedings of the AAAI Conference on Artificial Intelligence.

- Ribeiro et al. [2016] Marco Tulio Ribeiro, Sameer Singh, and Carlos Guestrin. 2016. Why should I trust you?: Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. ACM, 1135–1144.

- Ribeiro et al. [2018] Marco Túlio Ribeiro, Sameer Singh, and Carlos Guestrin. 2018. Anchors: High-Precision Model-Agnostic Explanations. In Proceedings of the 32nd AAAI Conference on Artificial Intelligence. AAAI Press, 1527–1535.

- Samek et al. [2016] Wojciech Samek, Alexander Binder, Grégoire Montavon, Sebastian Lapuschkin, and Klaus-Robert Müller. 2016. Evaluating the visualization of what a deep neural network has learned. IEEE transactions on neural networks and learning systems 28, 11 (2016), 2660–2673.

- Selvaraju et al. [2017] R. R. Selvaraju, M. Cogswell, A. Das, R. Vedantam, D. Parikh, and D. Batra. 2017. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In 2017 IEEE International Conference on Computer Vision (ICCV). 618–626.

- Shrikumar et al. [2016] Avanti Shrikumar, Peyton Greenside, Anna Shcherbina, and Anshul Kundaje. 2016. Not Just a Black Box: Learning Important Features Through Propagating Activation Differences. arXiv preprint arXiv:1605.01713 (2016).

- Simonyan et al. [2014] Karen Simonyan, Andrea Vedaldi, and Andrew Zisserman. 2014. Deep Inside Convolutional Networks: Visualising Image Classification Models and Saliency Maps. ICLR Workshop (2014).

- Simonyan and Zisserman [2014] Karen Simonyan and Andrew Zisserman. 2014. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014).

- Springenberg et al. [2015] J.T. Springenberg, A. Dosovitskiy, T. Brox, and M. Riedmiller. 2015. Striving for Simplicity: The All Convolutional Net. In ICLR Workshop.

- Sundararajan et al. [2017] Mukund Sundararajan, Ankur Taly, and Qiqi Yan. 2017. Axiomatic Attribution for Deep Networks. In Proceedings of the 34th International Conference on Machine Learning (ICML). PMLR, 3319–3328.

- Wobbrock and Kay [2016] Jacob O Wobbrock and Matthew Kay. 2016. Nonparametric statistics in human–computer interaction. In Modern Statistical Methods for HCI. Springer, 135–170.

- Zeiler and Fergus [2014] Matthew D. Zeiler and Rob Fergus. 2014. Visualizing and Understanding Convolutional Networks. In European Conference on Computer Vision (ECCV). Springer, 818–833.

- Zhang et al. [2016] Jianming Zhang, Zhe Lin, Jonathan Brandt, Xiaohui Shen, and Stan Sclaroff. 2016. Top-down Neural Attention by Excitation Backprop. In European Conference on Computer Vision (ECCV). Springer, 543–559.

- Zhou et al. [2018] Bolei Zhou, Yiyou Sun, David Bau, and Antonio Torralba. 2018. Interpretable Basis Decomposition for Visual Explanation. In Proceedings of the European Conference on Computer Vision (ECCV). 122–138.

7 Appendix

7.1 User Study Data

Here we provide the scores of all the 100 users that participated in our user study. We see that the scores are fairly random when participants are not provided with any explanation. Moreover, participants spending more time on the questions do not necessarily achieve higher scores. After providing the explanations, we see that high scores (8 and above) are exclusively obtained by participants working with SAG and its ablations. As discussed earlier, participants working with SAG and SAG/I tend to have a higher response time than participants working with other explanations.

7.2 Effect of Perturbation Style

In Section 3, we state that images perturbations can be implemented by either setting the perturbed pixels to zeros or to a highly blurred version of the original image. All the experiments and results in the paper involve image perturbations obtained using the former method. In this section of the appendix, we provide a snapshot of the effect of using blurred pixels as perturbations instead. We use ImageNet validation set as the dataset and VGGNet as the classifier for these experiments. Fig. 10 shows that we obtain a better coverage of the images explained for a given size of minimal sufficient explanations (MSE) on using blurred pixels as perturbations. We hypothesize that this behavior is due to spurious boundaries created on setting the perturbed pixels to zeros, which undermines the classifier’s prediction scores. Such boundaries are absent on using blurred version of the original image for perturbations.

7.3 Minimum Sufficient Explanations: Analysis for ResNet

All the experiments and results in the paper use VGGNet as the black-box classifier. In this section of the appendix, we provide a brief analysis of the nature of multiple minimal sufficient explanations (MSEs) for ResNet [12] as the black-box classifier. We use the same dataset i.e., the ImageNet validation set for these experiments.

| Overlap = 0 | Overlap = 1 | |||||

| Method | Mean | Variance | Mode | Mean | Variance | Mode |

| BeamS-3 (VGGNet) | 1.72 | 0.98 | 1 | 4.18 | 7.24 | 2 |

| BeamS-3 (ResNet) | 1.66 | 2.61 | 1 | 3.27 | 5.49 | 1 |

| BeamS-15 (VGGNet) | 1.87 | 1.01 | 2 | 4.51 | 7.22 | 3 |

| BeamS-15 (ResNet) | 1.73 | 2.62 | 1 | 3.41 | 5.94 | 1 |

From Fig. 11, we see that the beam search is slightly sub-optimal at finding minimal MSEs for ResNet than for VGGNet. Similarly, Table 2 shows that beam search finds a lower number of MSEs on average when the classifier being explained is ResNet. The difference between the modes of the distributions for the two classifiers becomes stark on increasing the beam width. We hypothesize that these differences in the two distributions for the number of MSEs are due to the different perturbation maps obtained for the two classifiers, which we use for guiding the beam search. Digging deeper into the nature of MSEs for various classifiers is one of the possible avenues for future research.

7.4 Computation costs

A representation of computation cost of all the methods and baselines used in our work is provided in table 3 in terms of the wallclock time taken by each method to find and build the explanation for a single image. These values were obtained over a random sample of 100 images from the IMAGENET validation set using a single NVIDIA Tesla V100 GPU.

| Method | Time taken to find the explanation (T1) | Time taken to build the explanation (T1 + time taken to create the visualization) | ||

| Mean | Variance | Mean | Variance | |

| GradCAM | 3.10 | 0.32 | 3.66 | 0.38 |

| IGOS | 4.96 | 0.05 | 5.44 | 0.06 |

| CombS | 9.73 | 4.07 | 12.40 | 10.79 |

| BeamS-3 | 11.34 | 49.16 | 19.97 | 477.41 |

| BeamS-5 | 14.64 | 126.78 | 21.44 | 225.62 |

| BeamS-10 | 20.65 | 299.95 | 28.39 | 555.72 |

| BeamS-15 | 27.81 | 625.85 | 38.12 | 1855.36 |

7.5 SAG Explanations for Wrong Predictions and Debugging

SAGs can be particularly useful to gain insights about the predictions of a neural network and facilitate debugging in case of wrong predictions. For example, Fig. 12 shows that the image with ground truth class as “seashore" is (wrongly) classified as a “shopping cart" by VGG-Net because the coast fence looks like a shopping cart. Interestingly, the classifier uses the reflection of the fence as further evidence for the class “shopping cart": with both the fence and the reflection the confidence is more than but with only the fence it was . The patch corresponding to the reflection is not deemed enough on its own for a classification of shopping cart(evident from the drop in probabilities shown in SAG).

We provide more examples of SAGs for explaining wrong predictions by VGG-Net. These SAG explanations provide interesting insights into the wrong decisions of the classifier. For contrast, we also show the corresponding Grad-CAM and I-GOS explanations for the wrong predictions.

7.5.1 Predicted class: Golf-cart; True class: RV

7.5.2 Predicted class: Prison; True class: Library

7.5.3 Predicted class: Space-shuttle; True class: Racecar

7.6 SAG Examples

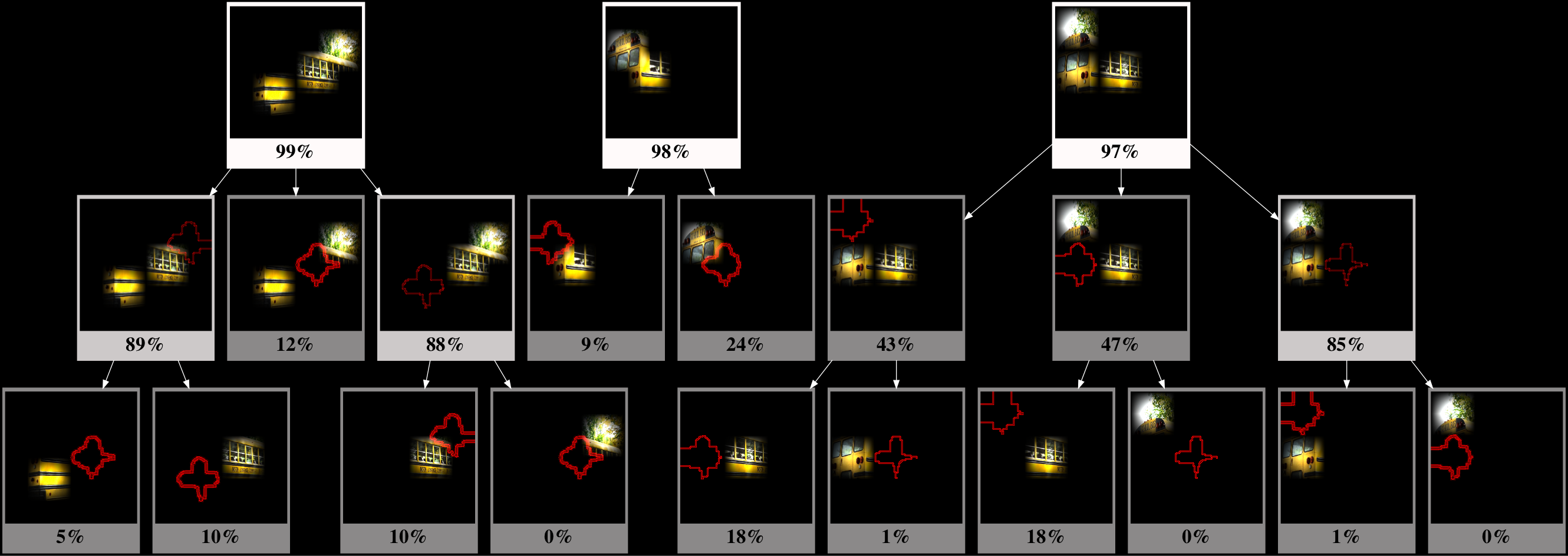

Here we provide more examples of SAGs for various images with their predicted (true) classes. In order to emphasize the advantage of our approach over traditional attention maps, we also provide the corresponding Grad-CAM and I-GOS saliency maps.

7.6.1 Class: Goldjay

7.6.2 Class: Schoolbus

7.6.3 Class: Peacock

7.6.4 Class: RV

7.6.5 Class: Barbell