On Three-Layer Data Markets

Abstract

We study a three-layer data market comprising users (data owners), platforms, and a data buyer. Each user benefits from platform services in exchange for data, incurring privacy loss when their data, albeit noisily, is shared with the buyer. The user chooses platforms to share data with, while platforms decide on data noise levels and pricing before selling to the buyer. The buyer selects platforms to purchase data from. We model these interactions via a multi-stage game, focusing on the subgame perfect equilibrium. We find that when the buyer places a high value on user data (and platforms can command high prices), all platforms offer services to the user, who joins and shares data with every platform. Conversely, when the buyer’s valuation of user data is low, only large platforms with low service costs can afford to serve users. In this scenario, users exclusively join and share data with these low-cost platforms. Interestingly, increased competition benefits the buyer, not the user: as the number of platforms increases, the user utility does not necessarily improve while the buyer utility improves. However, increasing the competition improves the overall utilitarian welfare. Building on our analysis, we then study regulations to improve the user utility. We discover that banning data sharing maximizes user utility only when all platforms are low-cost. In mixed markets of high- and low-cost platforms, users prefer a minimum noise mandate over a sharing ban. Imposing this mandate on high-cost platforms and banning data sharing for low-cost ones further enhances user utility.

1 Introduction

In the digital era, online platforms have become integral to our daily lives, offering a plethora of services that range from social networking to personalized shopping experiences. While these platforms provide convenience and connectivity, they also engage in extensive data collection practices. Users’ interactions with these platforms generate vast amounts of data, encompassing personal preferences, behaviors, and other sensitive information. This data, a valuable commodity, is often shared with third-party buyers.

With the advent of new functionalities by information technology companies such as Apple or Google that reduce or eliminate tracking via third-party cookies, there has been an increased reliance on first-party data, which is information gathered by a company from its own user base. For example, as elaborated in Cross [2023b], major retailers such as BestBuy and Walmart capitalize on this data through loyalty programs, customer purchase records, and subscriptions. They do this by selling the data to advertisers who aim to place targeted ads on the retailers’ websites or across various online platforms. In a similar vein, Cross [2023a] notes that Mastercard, a leading payment technology firm, trades cardholder transaction information via third-party online data marketplaces and its internal “Data and Services” division. This practice grants numerous entities access to extensive consumer data and insights. For instance, their Intelligent Targeting service allows businesses to leverage “Mastercard 360° data insights” to create and execute advertising campaigns that specifically target potential “high-value” customers.

In this complex digital ecosystem, users face the dilemma of benefiting from the services provided by these platforms while potentially compromising their privacy. Platforms, on the other hand, must balance the profitability derived from data sharing with the need to maintain user trust and satisfaction. This interplay between users’ privacy concerns and the economic incentives of platforms raises important questions about the sustainability of current data market practices. Notable regulatory actions have been taken to improve user experiences. For instance, the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) have been introduced to protect user privacy and to limit the amount of data shared with the platforms. Although, at first sight, these regulations help users, several studies point to their detrimental impact on both platforms and users. This is because these regulations have led to a more concentrated market structure by limiting competition in data markets: their restrictions on data sharing may slow down data-based innovations and services. For instance, studies have shown that GDPR has led to a significant reduction in the number of available apps in the market, with new app entries falling by half following the regulation’s implementation (Janssen et al. [2022]). This decline in market entries indicates a potential loss of innovation, as new and smaller players find it increasingly difficult to compete under these regulations. This effect is particularly detrimental to small companies that rely on these data synergies for innovation and market growth (see also Gal and Aviv [2020]).

In this paper, we aim to understand the user and platform strategies that arise when considering the collection and usage of user data. We also explore regulatory frameworks that could be implemented to enhance user utility while maintaining the benefits provided by online platforms.

1.1 Our model and main results

We adopt an analytical-modeling-based approach and develop a framework to examine the choices of both users and platforms, taking into account users’ privacy concerns. We also explore regulations and policies that could alleviate these concerns. In our model, multiple platforms interact with a user who owns data and seeks the services of these platforms, as well as with a third-party buyer interested in purchasing the user’s data from the platforms. Throughout our study, we use Mastercard as an example of such a platform. The user represents an individual in a specific population segment with particular preferences, and the buyer is conceptualized as a third-party advertiser aiming to understand and leverage these user preferences for its own benefit.

The utility of each platform is determined by three factors: the gains obtained from using user data to provide services, potential payments received from buyers purchasing this data, and the costs incurred in providing services to the user. Let us highlight the cost of services, as it plays an important role in our analysis. It is particularly important to distinguish between high-cost and low-cost platforms. The low-cost platforms are those that do not necessarily need to sell data to stay in the market, as their gains from the service already cover their costs of operation. In our leading examples, low-cost platforms are exemplified by payment-technology companies or big retailers, where either their operational costs per user are low, or their main source of revenue is not derived from selling data. Conversely, for high-cost platforms, the gains from service provision are not enough to offset their operational costs. Hence, they require a minimum level of data selling to be incentivized to enter the market (i.e., to provide services to the user). Examples of these platforms typically include smaller firms or companies that heavily rely on data collection for advertising to ensure their continued operation.

We model the interaction between the players as a multi-stage game in which, in the first stage, the platforms decide whether they want to provide services to the user and what level of privacy to guarantee when passing the user’s data to the data buyer (so that the user is incentivized to share). We model the privacy level that a platform provides as the level of noise added to the user data. Adding a suitable level of noise is common practice in, for example, the analysis of census data Abowd [2018] and is theoretically justified in the literature on differential privacy Dwork et al. [2014].

In the second stage of the game, the user decides which platforms to join. The user’s utility is the quality of the services they receive, which is proportional to the amount of user information revealed to the platforms, minus the user’s privacy loss. The privacy loss refers to the amount of information that all platforms together reveal to the data buyer. In the third and fourth stages, the platforms determine the price to charge the data buyer, and the buyer decides from which platforms to purchase. The data buyer’s utility is the user’s information leaked from all platforms minus the payments made to acquire this information. Again, the data buyer can be viewed as a third-party advertiser in our example mentioned above who pays the platforms to obtain user data. The advertiser then uses this data for potential gain, with its gain being proportional to the accuracy of the user data obtained.

Equilibrium characterization and insights:

We adopt subgame perfect equilibrium as our equilibrium concept and use backward induction to characterize it. Finding the equilibrium of this game is complex mainly because the platforms’ choice of noise level not only directly impacts the user’s utility but also changes the data buyer’s decision, which indirectly impacts the user’s utility. The competition among platforms further complicates the dynamics of the game. For instance, a platform may still be incentivized to sell data at a noise level higher than needed for the user to be willing to share their data. At first glance, this may seem unreasonable, as it reduces the quality of the data that the platform can sell, and hence, the data buyer would pay less for it. However, increasing the privacy level could persuade the user to forgo other platforms that offer less stringent privacy guarantees and use only this platform’s service. As a result, the platform can gain a monopoly over access to the user’s data, and hence, the data buyer would end up paying more to it.

We start our analysis of equilibrium by focusing on the case of two platforms. This setting allows us to infer insights about equilibrium properties that are applicable to more general cases. Here, we identify up to three primary regimes, depending on the data buyer’s valuation of user data acquisition, denoted by $\beta$ in our model. When $\beta$ falls below a certain threshold, neither of the two platforms enters the market due to insufficient payment to cover service costs. This regime ceases to exist when at least one platform is low-cost, meaning it can cover service costs solely through service provision without needing to sell data. Conversely, when $\beta$ is sufficiently high, both platforms participate in the market. Interestingly, in this case, when the user is privacy-conscious and requires a minimum non-zero level of noise for data sharing, the user utility is equal to zero at equilibrium. However, as the data buyer compensates each platform at the marginal value of its data, the data buyer’s utility remains positive. Finally, there exists an intermediate range of $\beta$ in which only the lower-cost platform enters the market. Here, both the user and the data buyer utilities are zero at equilibrium, indicating that having both platforms compete in the market benefits the data buyer but not the user.

We demonstrate that these findings extend to the general scenario with $n$ platforms. Specifically, we establish that when $\beta$ exceeds a certain threshold, signifying the data buyer’s willingness to pay more for the user data, all platforms enter the market and provide services to the user. Conversely, when $\beta$ is below this threshold, only low-cost platforms participate, while high-cost ones abstain. Although competition does not favor the user, it results in positive utility for the data buyer. Moreover, the overall utilitarian welfare increases proportionally with the number of participating platforms.

Regulation and policy design:

Building on our equilibrium characterization, we then study regulations that aim to improve user utility. Here, our analysis provides three main insights. Firstly, we consider a ban on platforms sharing user data with the data buyers. This regulation maximizes the user utility only if all the platforms are low-cost. Specifically, under this regulation, users obtain the best of both worlds: all platforms (that have a low cost for providing services) enter the market, and the user benefits from the services of all of them while incurring zero privacy loss. This observation suggests that if we only had large firms whose costs of providing service per user are small, then the draconian regulation that involves banning data sharing would have been optimal. However, this is no longer the case if the market includes both high- and low-cost platforms.

In this case, our second insight reveals that imposing a uniform minimum privacy mandate improves user utility, particularly when the value of the user data to the data buyer is significant enough for platforms to charge a high price for it. This means that all platforms need to perturb the user data by adding noise with a sufficiently high noise level. Here, our analysis identifies specific conditions under which GDPR-type policies, which limit user data usage, can benefit users. Finally, our third insight suggests that, despite potential implementation challenges, a non-uniform minimum privacy mandate (banning data sharing for low-cost platforms while imposing a minimum privacy standard for high-cost platforms) further enhances user utility. This regulation entails prohibiting large, low-cost platforms from sharing user data, while permitting smaller, high-cost platforms to do so, albeit in a limited manner through privacy mechanisms. This approach aligns with our earlier discussion that GDPR-type regulations disproportionately harm small businesses. Therefore, a non-uniform regulation favoring small businesses is preferred not only by these businesses but also by the users.

1.2 Related literature

Our paper broadly relates to the emerging literature on data markets and online platform behavior. Earlier works on this topic include Acquisti et al. [2016], Posner and Weyl [2018], Ali et al. [2019], Jones and Tonetti [2020], and Dosis and Sand-Zantman [2022], which study the consequences of protecting and disclosing personal information about individuals. Additionally, Acemoglu et al. [2022], Bergemann et al. [2020], Ichihashi [2020b], and Fainmesser et al. [2022] study the privacy consequences of data externality (whereby a user’s data reveals information about others). Acemoglu et al. [2023a] study user-optimal privacy-preserving mechanisms that platforms can adopt to balance privacy and learning, and Acemoglu et al. [2023b] develop an experimentation game to study the implications of the platform’s information advantage in product offering. Finally, Ichihashi [2021] studies data intermediaries, and Ichihashi [2020a] studies consumer privacy choices while online platforms adjust their privacy guarantees dynamically to incentivize more data sharing from consumers.

More closely related to ours are papers that study data sharing among users, platforms, and third-party data buyers and, in particular, the impacts of banning such data sharing. In this regard, Bimpikis et al. [2021] and Argenziano and Bonatti [2023] develop a two-round model of the interaction between a user and two competing platforms that can collect data on user preferences (and potentially use it for price discrimination). Bimpikis et al. [2021] show that banning data sharing can hurt the user when the platforms offer complementary products, and Argenziano and Bonatti [2023] show that banning data sharing can hurt the user if the benefits of the seller knowing user preferences and of personalized services are limited. The above papers consider the interactions between a user and a platform that itself uses the user data for price discrimination and, therefore, can potentially hurt the user. In contrast, we study a different problem that relates to how platforms sell user data to other third-party data buyers. Therefore, in addition to the differences in modeling choices and analysis, we depart from the above papers by considering a three-layer data market that includes the interaction between the platforms and the data buyer and by focusing on deriving insights on effective regulations.

Also related to our work are Ravichandran and Korula [2019], who empirically study the effect of the data-sharing ban on platforms’ revenues, and Madsen and Vellodi [2023], who study the effects of a complete ban on innovation (we refer to Pino [2022] for a comprehensive survey on the microeconomics of data). We depart from these papers by focusing on the impact of regulations (in particular, a data-sharing ban) on the user’s utility. Our paper also relates to the literature that studies various forms of monetizing user data by platforms, such as selling cookies Bergemann and Bonatti [2015], efficient pricing for large data sets Abolhassani et al. [2017], dynamic sales Immorlica et al. [2021], Drakopoulos and Makhdoumi [2023], monetization while ensuring the data is replicable Falconer et al. [2023], and the design and price of information where a data buyer faces a decision problem under uncertainty and can augment their initial private information with supplemental data from a data seller Bergemann et al. [2018].

Finally, our paper relates to the growing literature on data acquisition and the design of mechanisms for incentivizing data sharing, including Ghosh and Roth [2011], Ligett and Roth [2012], Nissim et al. [2014], Cummings et al. [2015], Chen et al. [2018], Chen and Zheng [2019], Fallah et al. [2023], Cummings et al. [2023], Fallah et al. [2022], and Karimireddy et al. [2022]. We depart from this line of work as we consider a three-layer market where the privacy cost occurs due to selling data to a third-party buyer.

The rest of the paper proceeds as follows. In Section 2, we describe our model and the interactions among the platforms, the data buyer, and the user. We also present some properties of the revealed information measure in our setting, such as its monotonicity and submodularity. In Section 3, we introduce our equilibrium concept and provide preliminary characterizations of it. In Section 4, we focus on a setting with two platforms and provide a full characterization of the equilibrium. The focus on two platforms allows us to describe some of the nuances of the interactions in our model. In Section 5, we establish that our main characterizations continue to hold in the general setting with any number of platforms. We also provide several insights and comparative statics, leading to our discussion of the regulations in Section 6. Finally, Section 7 concludes, and the appendix contains all proofs omitted from the text.

2 Model

We consider $n$ platforms that interact with a user and a data buyer. The user benefits from using the services of these platforms. In return, the platforms acquire data about the user’s preferences and behavior. The platforms may subsequently sell this data to a third party, such as an advertiser, which we refer to as a data buyer (or, interchangeably, a buyer). The data buyer is interested in learning about the user’s preferences, which can adversely impact the user’s utility. This negative impact can arise from price discrimination, unfairly targeted advertising, manipulation, or intrinsic privacy losses. In the Mastercard example described in the introduction, Mastercard is one of the platforms, and the data buyer is any other firm that purchases user data from Mastercard.

Formally, we represent the user’s data by $x\in\mathbb{R}^d$, which follows a Gaussian distribution with zero mean and identity covariance, that is, $x\sim\mathcal{N}(0, I_d)$. This vector can be interpreted as the user’s feature vector. Each platform $i\in[n] := \{1,\dots,n\}$ offers a service whose quality depends on how accurately the platform can learn the match between the user’s feature vector and the platform’s characteristics, i.e.,

$$\theta_i^\top x.$$

Here, $\theta_i\in\mathbb{R}^d$ is a known unit-norm vector symbolizing the characteristic vector of platform $i$.

The user decides whether to share their data with each of the platforms in exchange for their service. We let $a_i\in\{0,1\}$ denote whether the user has shared data with platform $i$ and $\mathbf{a} = (a_1,\dots,a_n)$ denote the user strategy profile. Specifically, if the user opts to share their data with platform $i$, meaning $a_i = 1$, then platform $i$ receives a signal

$$s_i = \theta_i^\top x + z_i, \qquad z_i\sim\mathcal{N}(0,1),$$

where the noise terms $z_i$ are independent of each other and of $x$.

The introduced noise $z_i$ ensures a degree of privacy concerning the user’s data.¹

¹ We set the noise variance to one to simplify the results. However, the analysis remains valid for any (potentially heterogeneous) level of noise.

The data buyer aims to estimate a linear function of the user’s data, $\theta_0^\top x$. Here, $\theta_0\in\mathbb{R}^d$ with $\|\theta_0\| = 1$ denotes the data buyer’s known characteristic vector. If platform $i$ possesses the user’s data (i.e., $a_i = 1$), it offers the data buyer the option to acquire a noisy version of this data at a price $p_i\ge 0$. Therefore, the data buyer strategy profile is $\mathbf{b} = (b_1,\dots,b_n)\in\{0,1\}^n$, with $b_i = 1$ denoting their acceptance of platform $i$’s offer. The data buyer then receives a signal

$$\hat{s}_i = s_i + w_i, \qquad w_i\sim\mathcal{N}(0,\sigma_i^2),$$

for the price $p_i$, where $\sigma_i^2$ represents the variance of the noise that platform $i$ adds to the data it shares with the data buyer.

Lastly, each platform $i$ decides on the noise level to add to the user data before offering it to the data buyer. We find it more convenient to work with the standard deviation $\sigma_i$ of this noise rather than its variance $\sigma_i^2$, and we assume, without loss of generality, that $\sigma_i > 0$. Each platform also decides whether to enter the market and provide services to the user, denoted by $e_i\in\{0,1\}$. Therefore, the platforms’ strategy profile is $(\boldsymbol{\sigma},\mathbf{e})$, where $\boldsymbol{\sigma} = (\sigma_1,\dots,\sigma_n)$ and $\mathbf{e} = (e_1,\dots,e_n)$.

We set $\sigma_i = \infty$ when the data buyer does not obtain any information from platform $i$. This can occur because the user has not shared their data with platform $i$, because the data buyer declines the offer from platform $i$, or because the platform has not provided the service to the user. In this case, $p_i$ is irrelevant, and we set it to zero.

Throughout the paper, we make the following assumption:

Assumption 1.

Let

$$\rho_i := \theta_i^\top\theta_0, \qquad i\in[n],$$

denote the correlation between the user’s data relevant to platform $i$, namely $\theta_i^\top x$, and the quantity the data buyer wants to estimate, $\theta_0^\top x$. Then, we assume

$$(\theta_i-\rho_i\theta_0)^\top(\theta_j-\rho_j\theta_0) = 0 \qquad \text{for all } i\neq j.$$

Note that under this assumption, the data offered by different platforms are viewed as substitutes for each other from the data buyer’s perspective. If this assumption does not hold, however, a complementary effect could also arise. To illustrate this, suppose we have $n = 2$ platforms, where $\theta_1$ is orthogonal to $\theta_0$, i.e., $\rho_1 = 0$, and $\theta_2$ combines $\theta_0$ and $\theta_1$ with nonzero weights on both (so that the assumption fails). In this scenario, the data from platform 1 alone has no value to the data buyer, as $\theta_1^\top x$ provides no information about $\theta_0^\top x$. However, if the data buyer decides to purchase data from the second platform, then the data from the first platform could also become valuable. To understand why, observe that the data from the second platform mixes two components: $\theta_0^\top x$ and $\theta_1^\top x$. Therefore, if the data buyer acquires the data from the second platform, learning $\theta_1^\top x$ helps to better distinguish these two components. In other words, the data from the first platform is valuable only if accompanied by the data from the second platform. This scenario exemplifies the complementary effect described above. Assumption 1 excludes this possibility, allowing us to focus on the substitution effect. This assumption also implies that the correlation among data for different platforms is given by

$$\theta_i^\top\theta_j = \rho_i\rho_j \qquad \text{for all } i\neq j.$$
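The complementarity in this example can be checked numerically. The Python sketch below is an illustration with hypothetical helper names (`solve`, `buyer_info`): it forms the covariance of the buyer’s signals $\hat s_i = \theta_i^\top x + z_i + w_i$ directly from the vectors $\theta_i$, with unit user noise, and evaluates the revealed information about $\theta_0^\top x$ as $\mathbf{c}^\top\Sigma^{-1}\mathbf{c}$ (the standard Gaussian conditioning formula). As an assumed concrete instance of the example, it takes $d = 2$, zero platform noise, and $\theta_2 = (\theta_0+\theta_1)/\sqrt{2}$.

```python
import math
from typing import Dict, Iterable, List, Sequence


def solve(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve A z = b by Gaussian elimination with partial pivoting (A nonsingular)."""
    n = len(b)
    M = [list(row) + [b[r]] for r, row in enumerate(A)]  # augmented copy of [A | b]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= f * M[col][k]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        z[r] = (M[r][n] - sum(M[r][k] * z[k] for k in range(r + 1, n))) / M[r][r]
    return z


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(ui * vi for ui, vi in zip(u, v))


def buyer_info(thetas: Dict[int, Sequence[float]], theta0: Sequence[float],
               sigma: Dict[int, float], S: Iterable[int]) -> float:
    """Revealed information about theta0^T x from the signals hat s_i, i in S."""
    idx = sorted(S)
    if not idx:
        return 0.0
    # Cov(hat s_i, hat s_j) = theta_i^T theta_j, plus 1 + sigma_i^2 on the diagonal
    cov_s = [[dot(thetas[i], thetas[j]) + ((1.0 + sigma[i] ** 2) if i == j else 0.0)
              for j in idx] for i in idx]
    cov_sy = [dot(thetas[i], theta0) for i in idx]  # Cov(hat s_i, theta0^T x) = rho_i
    return dot(cov_sy, solve(cov_s, cov_sy))


theta0 = (1.0, 0.0)
thetas = {1: (0.0, 1.0), 2: (1 / math.sqrt(2), 1 / math.sqrt(2))}
sigma = {1: 0.0, 2: 0.0}

gain_alone = buyer_info(thetas, theta0, sigma, {1}) - buyer_info(thetas, theta0, sigma, set())
gain_with_2 = buyer_info(thetas, theta0, sigma, {1, 2}) - buyer_info(thetas, theta0, sigma, {2})
print(gain_alone, gain_with_2)
```

Platform 1’s marginal value is zero on its own but positive once platform 2’s data is purchased, which is the complementarity that Assumption 1 rules out.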

2.1 Utility functions

To define the utility functions, we need to first quantify how much information is revealed by observing the signals. We do so by using the following definition, which captures the reduction in uncertainty following the observation of a set of signals.

Definition 1 (Revealed information).

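Consider a random variable $y$ drawn from the distribution $\mathcal{D}$. Let $\mathcal{D}_{\mathcal{S}}$ represent the posterior distribution of $y$ upon observing a set of signals $\mathcal{S}$. The revealed information about $y$ by observing $\mathcal{S}$ is quantified as the reduction in the mean-squared error of $y$, i.e.,

$$\mathcal{I}(y;\mathcal{S}) = \mathbb{E}\big[(y-\mathbb{E}[y])^2\big] - \mathbb{E}\big[(y-\mathbb{E}[y\mid\mathcal{S}])^2\big],$$

where the outer expectations are over the randomness in $y$ and the signals.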

Measuring the revealed information, or information gain, through the variance of the posterior distribution has been used widely in economics, statistics, and learning theory (see, for example, Breiman et al. [1984], Bergemann et al. [2020], and Acemoglu et al. [2022]).
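For jointly Gaussian, zero-mean $y$ and signals $\mathbf{s}$, the posterior variance does not depend on the realized signals, so Definition 1 reduces to $\mathbf{c}^\top\Sigma^{-1}\mathbf{c}$, where $\Sigma = \operatorname{Cov}(\mathbf{s})$ and $\mathbf{c} = \operatorname{Cov}(\mathbf{s}, y)$. The Python sketch below (hypothetical function names, standard library only) evaluates this expression. Applied to a platform’s own signal $s_i = \theta_i^\top x + z_i$ with unit-norm $\theta_i$ and unit noise variance, it uses $\operatorname{Var}(s_i) = 2$ and $\operatorname{Cov}(s_i,\theta_i^\top x) = 1$, giving a revealed information of $1/2$.

```python
from typing import List


def solve(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve A z = b by Gaussian elimination with partial pivoting (A nonsingular)."""
    n = len(b)
    M = [list(row) + [b[r]] for r, row in enumerate(A)]  # augmented copy of [A | b]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= f * M[col][k]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        z[r] = (M[r][n] - sum(M[r][k] * z[k] for k in range(r + 1, n))) / M[r][r]
    return z


def gaussian_revealed_information(cov_s: List[List[float]], cov_sy: List[float]) -> float:
    """Definition 1 for zero-mean jointly Gaussian (y, s): Var(y) - E[(y - E[y|s])^2].

    For Gaussians this equals c^T Sigma^{-1} c with Sigma = Cov(s) and c = Cov(s, y).
    """
    if not cov_sy:
        return 0.0  # observing nothing does not reduce the mean-squared error
    z = solve(cov_s, cov_sy)
    return sum(c * zi for c, zi in zip(cov_sy, z))


# Service term: s_i = theta_i^T x + z_i, so Var(s_i) = 2 and Cov(s_i, theta_i^T x) = 1.
service_info = gaussian_revealed_information([[2.0]], [1.0])
print(service_info)  # 0.5
```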

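Given this definition, the user’s utility function is given by

$$U(\mathbf{a};\boldsymbol{\sigma},\mathbf{e},\mathbf{p}) = \sum_{i=1}^{n} e_i a_i\,\mathcal{I}\big(\theta_i^\top x; s_i\big) - \alpha\,\mathcal{I}\big(\theta_0^\top x;\hat{\mathbf{s}}(\mathbf{a},\mathbf{b},\boldsymbol{\sigma},\mathbf{e})\big), \tag{1}$$

where the term $\mathcal{I}(\theta_i^\top x; s_i)$ is the user’s revealed information to platform $i$ if the user shares her information (i.e., when $a_i = 1$) and the platform is offering service to the user (i.e., when $e_i = 1$). The term $\mathcal{I}\big(\theta_0^\top x;\hat{\mathbf{s}}(\mathbf{a},\mathbf{b},\boldsymbol{\sigma},\mathbf{e})\big)$ is the user’s revealed information to the data buyer. Here, $\hat{\mathbf{s}}(\mathbf{a},\mathbf{b},\boldsymbol{\sigma},\mathbf{e})$ denotes the vector $(\hat{s}_i)_{i:\,e_i a_i b_i = 1}$ of signals revealed to the data buyer. Notice that the user’s utility does not directly depend on $\mathbf{p}$, but we retain this notation to highlight that the revealed information depends on $\mathbf{p}$ through the data buyer’s decision $\mathbf{b}$. Also, notice that in finding the revealed information to the data buyer, all that matters is the set $\{i: e_i a_i b_i = 1\}$ and the noise levels of these platforms. Therefore, we also find it convenient to define

$$\mathcal{I}(\mathcal{S},\boldsymbol{\sigma}_{\mathcal{S}}) := \mathcal{I}\big(\theta_0^\top x;(\hat{s}_i)_{i\in\mathcal{S}}\big), \qquad \mathcal{S}\subseteq[n], \tag{2}$$

where $\boldsymbol{\sigma}_{\mathcal{S}} = (\sigma_i)_{i\in\mathcal{S}}$, and we may drop the subindex $\mathcal{S}$ whenever the indices of the elements of $\boldsymbol{\sigma}$ are clear from the context.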

The first term in (1) captures the user’s gain from the service offered by each platform. Notice that this term is present only if the platform has entered the market (i.e., $e_i = 1$) and the user shares with the platform (i.e., $a_i = 1$). The second term represents the user’s privacy loss from the sharing of their data with the data buyer. Additionally, $\alpha$ indicates the relative importance the user places on these two opposing terms.

For each $i\in[n]$, platform $i$’s utility is given by

$$U_i(\boldsymbol{\sigma},\mathbf{e},\mathbf{a},\mathbf{p}) = e_i\Big(a_i\,\mathcal{I}\big(\theta_i^\top x; s_i\big) + b_i p_i - c_i\Big). \tag{3}$$

When the platform has not entered the market (i.e., $e_i = 0$), the platform’s utility is zero. When the platform has entered the market (i.e., $e_i = 1$), the second term $b_i p_i$ represents the platform’s revenue from selling the user’s data to the data buyer. The first term $a_i\,\mathcal{I}(\theta_i^\top x; s_i)$, similar to the user’s utility, reflects the quality of service the platform provides (if the user ends up utilizing its service). This term is included to capture the fact that a better service also enhances the platform’s utility; for example, it could increase ad revenue or expand the user base. Lastly, the cost $c_i$ reflects the per-user cost incurred by the platform for providing the service.

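Finally, the data buyer’s utility is given by

$$U_0(\mathbf{b};\boldsymbol{\sigma},\mathbf{e},\mathbf{a},\mathbf{p}) = \beta\,\mathcal{I}\big(\theta_0^\top x;\hat{\mathbf{s}}(\mathbf{a},\mathbf{b},\boldsymbol{\sigma},\mathbf{e})\big) - \sum_{i=1}^{n} b_i p_i, \tag{4}$$

which is the data buyer’s gain from learning the user’s information minus the amount they pay to all the platforms to purchase their data. Here, $\beta$ is a constant that captures the importance of the revealed information relative to the payments in the data buyer’s utility.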
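To make the three utilities concrete, the Python sketch below evaluates them for given strategy profiles. It is a sketch under stated assumptions: Assumption 1 holds, so the buyer’s revealed information is computed in closed form as $g/(1+g)$ with $g = \sum_i \rho_i^2/(2-\rho_i^2+\sigma_i^2)$ over the platforms whose data is sold (a Sherman-Morrison simplification of the covariance formula in Lemma 1); the service term equals $1/2$ (unit-norm $\theta_i$, unit user noise); and `alpha` (the user’s privacy weight), `beta` (the buyer’s weight on revealed information), and `cost` (per-user service costs) are hypothetical parameter names.

```python
from typing import Iterable, List, Sequence, Tuple

SERVICE_INFO = 0.5  # I(theta_i^T x; s_i) with unit-norm theta_i and unit user noise


def info(rho: Sequence[float], sigma: Sequence[float], S: Iterable[int]) -> float:
    """Buyer's revealed information I(S, sigma) in Sherman-Morrison form (Assumption 1)."""
    g = sum(rho[i] ** 2 / (2.0 - rho[i] ** 2 + sigma[i] ** 2) for i in S)
    return g / (1.0 + g)


def utilities(a: Sequence[int], e: Sequence[int], b: Sequence[int], p: Sequence[float],
              sigma: Sequence[float], rho: Sequence[float], alpha: float, beta: float,
              cost: Sequence[float]) -> Tuple[float, List[float], float]:
    """Return (user utility (1), platform utilities (3), buyer utility (4))."""
    n = len(rho)
    joined = [i for i in range(n) if e[i] and a[i]]  # entered platforms the user shares with
    sold = [i for i in joined if b[i]]                # platforms whose data the buyer purchases
    leaked = info(rho, sigma, sold)
    user = SERVICE_INFO * len(joined) - alpha * leaked
    platforms = [e[i] * (a[i] * SERVICE_INFO + b[i] * p[i] - cost[i]) for i in range(n)]
    buyer = beta * leaked - sum(b[i] * p[i] for i in range(n))
    return user, platforms, buyer


common = dict(a=[1, 1], e=[1, 1], b=[1, 1], sigma=[0.5, 1.0], rho=[0.8, 0.6],
              alpha=2.0, beta=3.0, cost=[0.2, 0.7])
u1, plat1, buy1 = utilities(p=[0.1, 0.2], **common)
u2, plat2, buy2 = utilities(p=[0.3, 0.0], **common)
# Payments are transfers between buyer and platforms, so total welfare ignores prices.
print(abs((u1 + sum(plat1) + buy1) - (u2 + sum(plat2) + buy2)) < 1e-12)  # True
```

Because payments only move money between the buyer and the platforms that entered, changing prices redistributes surplus without changing total welfare in this sketch.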

We refer to the above game among the platforms, user, and buyer of data as a three-layer data market with parameters $(n,\theta_0,\{\theta_i\}_{i=1}^n,\alpha,\beta,\{c_i\}_{i=1}^n)$. Here is the timing of the game (see Figure 1):

1. In the first stage, all platforms simultaneously choose $(\boldsymbol{\sigma},\mathbf{e})$, which specifies their noise levels and whether they want to enter the market to provide services to the user.
2. In the second stage, the user chooses $\mathbf{a}$, which specifies which platforms to join and share data with.
3. In the third stage, the platforms simultaneously choose $\mathbf{p} = (p_1,\dots,p_n)$, which determines their offered prices.
4. In the fourth stage, the data buyer chooses $\mathbf{b}$, which determines whether they accept or decline each offer made by the platforms.

2.2 Revealed Information Properties

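Before analyzing this game, we provide some properties of our notion of revealed information. In stating the properties, we recall that the leaked information to the data buyer depends on $\mathbf{a}$, $\mathbf{b}$, and $\mathbf{e}$ through the set of platforms that share user data with the data buyer and the noise level of each of them. Therefore, we make use of the shorthand notation $\mathcal{I}(\mathcal{S},\boldsymbol{\sigma})$ for the leaked information given in (2). In what follows, we also consider the lattice $(\mathcal{A},\le)$, where

$$\mathcal{A} = \{0,1\}^n, \qquad \mathbf{a}\le\mathbf{a}' \iff a_i\le a_i' \text{ for all } i\in[n], \tag{5}$$

so that the meet and join of two profiles are their componentwise minimum and maximum.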

The revealed information admits the following closed-form expression.

Lemma 1.

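For any $\boldsymbol{\sigma}$, we have $\mathcal{I}(\emptyset,\boldsymbol{\sigma}) = 0$. Moreover, for any nonempty $\mathcal{S}\subseteq[n]$ and $\boldsymbol{\sigma}$, we have

$$\mathcal{I}(\mathcal{S},\boldsymbol{\sigma}) = \boldsymbol{\rho}_{\mathcal{S}}^\top\,\Sigma_{\mathcal{S}}^{-1}\,\boldsymbol{\rho}_{\mathcal{S}},$$

where $\boldsymbol{\rho}_{\mathcal{S}} = (\rho_i)_{i\in\mathcal{S}}$, $[\Sigma_{\mathcal{S}}]_{ii} = 2+\sigma_i^2$, and $[\Sigma_{\mathcal{S}}]_{ij} = \rho_i\rho_j$ for all $i,j\in\mathcal{S}$ such that $i\neq j$.

Lemma 1 follows from the properties of the multivariate normal distribution: $\Sigma_{\mathcal{S}}$ is the covariance matrix of the signals $(\hat{s}_i)_{i\in\mathcal{S}}$, whose diagonal entries $\|\theta_i\|^2 + 1 + \sigma_i^2$ combine the variance of $\theta_i^\top x$, the user’s noise, and platform $i$’s noise. We should highlight that, for simplicity, we assumed that the user adds the same (unit) noise level to their data before sharing it with any platform, which results in the user’s contribution to $[\Sigma_{\mathcal{S}}]_{ii}$ being equal for all $i$. Our analysis continues to hold for any heterogeneous level of noise.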
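Lemma 1’s matrix formula also admits a scalar form. Writing $\Sigma_{\mathcal{S}} = D + \boldsymbol{\rho}_{\mathcal{S}}\boldsymbol{\rho}_{\mathcal{S}}^\top$ with $D = \operatorname{diag}(2-\rho_i^2+\sigma_i^2)_{i\in\mathcal{S}}$, the Sherman-Morrison formula gives $\mathcal{I}(\mathcal{S},\boldsymbol{\sigma}) = g/(1+g)$ with $g = \sum_{i\in\mathcal{S}}\rho_i^2/(2-\rho_i^2+\sigma_i^2)$. This simplification is our own derivation rather than part of the lemma’s statement. The Python sketch below (hypothetical function names) evaluates both forms and checks that they agree; it also shows that $\sigma_i = \infty$ removes platform $i$’s contribution, matching the convention for platforms whose data the buyer does not obtain.

```python
import math
from typing import List, Sequence


def solve(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve A z = b by Gaussian elimination with partial pivoting (A nonsingular)."""
    n = len(b)
    M = [list(row) + [b[r]] for r, row in enumerate(A)]  # augmented copy of [A | b]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= f * M[col][k]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        z[r] = (M[r][n] - sum(M[r][k] * z[k] for k in range(r + 1, n))) / M[r][r]
    return z


def info_matrix(rho: Sequence[float], sigma: Sequence[float]) -> float:
    """Lemma 1: rho^T Sigma^{-1} rho, Sigma_ii = 2 + sigma_i^2, Sigma_ij = rho_i rho_j."""
    n = len(rho)
    if n == 0:
        return 0.0
    cov = [[(2.0 + sigma[i] ** 2) if i == j else rho[i] * rho[j] for j in range(n)]
           for i in range(n)]
    z = solve(cov, list(rho))
    return sum(r * zi for r, zi in zip(rho, z))


def info_closed_form(rho: Sequence[float], sigma: Sequence[float]) -> float:
    """Sherman-Morrison form g / (1 + g); a platform with sigma_i = inf adds nothing to g."""
    g = sum(r ** 2 / (2.0 - r ** 2 + s ** 2) for r, s in zip(rho, sigma))
    return g / (1.0 + g)


rho, sigma = [0.9, 0.5, 0.3], [0.5, 1.0, 2.0]
print(info_matrix(rho, sigma), info_closed_form(rho, sigma))  # agree up to rounding
print(info_closed_form([0.9, 0.5], [0.5, math.inf]) == info_closed_form([0.9], [0.5]))  # True
```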

Importantly, Lemma 1 enables us to obtain some structural properties of the revealed information, as we now demonstrate.

Lemma 2 (monotonicity).

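The revealed information $\mathcal{I}(\mathcal{S},\boldsymbol{\sigma})$ is increasing in the set of platforms that share the user data with the data buyer and is decreasing in the noise levels, i.e., for any $\mathcal{S}\subseteq[n]$ and $\boldsymbol{\sigma}$, $\mathcal{I}(\mathcal{S},\boldsymbol{\sigma})$ is increasing in $\mathcal{S}$ (with respect to set inclusion) and decreasing in $\sigma_i$ for each $i\in\mathcal{S}$.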

Lemma 2 simply states that sharing more data with the data buyer increases the information revealed to it. Moreover, data with a lower noise level increases the revealed information to the data buyer.

Lemma 3 (submodularity in actions).

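For a given vector of noise levels $\boldsymbol{\sigma}$, the revealed information is submodular in the set of platforms that share the user data with the data buyer, i.e., for any $\mathcal{S}\subseteq\mathcal{S}'\subseteq[n]$ and $i\in[n]\setminus\mathcal{S}'$, we have

$$\mathcal{I}(\mathcal{S}\cup\{i\},\boldsymbol{\sigma}) - \mathcal{I}(\mathcal{S},\boldsymbol{\sigma}) \;\ge\; \mathcal{I}(\mathcal{S}'\cup\{i\},\boldsymbol{\sigma}) - \mathcal{I}(\mathcal{S}',\boldsymbol{\sigma}).$$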

Lemma 3 states the intuitively appealing result that as more data is shared with the data buyer, the marginal increase in the revealed information from one more unit of shared data becomes smaller.
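Lemmas 2 and 3 can be spot-checked by brute force. The Python sketch below (hypothetical function names) uses the scalar form $g/(1+g)$ of Lemma 1 that follows from the Sherman-Morrison formula under Assumption 1, enumerates all pairs $\mathcal{S}\subseteq\mathcal{S}'$ on a small instance, and tests monotonicity in the set, monotonicity in the noise levels, and diminishing marginal gains. A check on one instance illustrates the lemmas; it does not prove them.

```python
from itertools import combinations
from typing import FrozenSet, Iterable, List, Sequence


def info(rho: Sequence[float], sigma: Sequence[float], S: Iterable[int]) -> float:
    """I(S, sigma) in the Sherman-Morrison form of Lemma 1 (Assumption 1)."""
    g = sum(rho[i] ** 2 / (2.0 - rho[i] ** 2 + sigma[i] ** 2) for i in S)
    return g / (1.0 + g)


def all_subsets(n: int) -> List[FrozenSet[int]]:
    return [frozenset(c) for k in range(n + 1) for c in combinations(range(n), k)]


def check_lemmas_2_and_3(rho: Sequence[float], sigma: Sequence[float],
                         tol: float = 1e-12) -> bool:
    n = len(rho)
    subsets = all_subsets(n)
    for S in subsets:
        for T in subsets:
            if not S <= T:
                continue
            # Lemma 2: sharing with a superset reveals (weakly) more.
            if info(rho, sigma, S) > info(rho, sigma, T) + tol:
                return False
            # Lemma 3: the marginal gain of a new platform i shrinks as the set grows.
            for i in set(range(n)) - T:
                gain_S = info(rho, sigma, S | {i}) - info(rho, sigma, S)
                gain_T = info(rho, sigma, T | {i}) - info(rho, sigma, T)
                if gain_S < gain_T - tol:
                    return False
    # Lemma 2: raising every platform's noise level reveals (weakly) less.
    noisier = [s + 1.0 for s in sigma]
    return info(rho, noisier, range(n)) <= info(rho, sigma, range(n)) + tol


print(check_lemmas_2_and_3([0.9, 0.6, 0.4, 0.2], [0.3, 1.0, 0.5, 2.0]))  # True
```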

Lemma 4 (submodularity in noise variance).

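For any $\mathcal{S}\subseteq[n]$, $i\notin\mathcal{S}$, $j\in\mathcal{S}$, and $\boldsymbol{\sigma}$,

$$\mathcal{I}(\mathcal{S}\cup\{i\},\boldsymbol{\sigma}) - \mathcal{I}(\mathcal{S},\boldsymbol{\sigma})$$

is decreasing in $\sigma_i$ and increasing in $\sigma_j$.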

Lemma 4 states that the data of platform $i$ reveals relatively more information for smaller values of $\sigma_i$ and for larger values of $\sigma_j$. To understand the former, let us compare the cases $\sigma_i = 0$ and $\sigma_i = \infty$. When $\sigma_i = 0$, the data of platform $i$ reveals a lot, which helps the data buyer learn much better. However, when $\sigma_i = \infty$, the data of platform $i$ does not contain any information, and therefore, the marginal gain in the revealed information is zero. To understand the latter, let us compare the cases $\sigma_j = 0$ and $\sigma_j = \infty$. When $\sigma_j = 0$, the data of platform $j$ already reveals a lot; therefore, the marginal increase in the revealed information from platform $i$’s data is small. However, when $\sigma_j = \infty$, the data of platform $j$ does not contain any information, and therefore, the marginal increase in the revealed information from platform $i$’s data is much larger.
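The comparative statics in Lemma 4 can be traced on a grid of noise levels. In the Python sketch below (hypothetical function names, and the same Sherman-Morrison scalar form of Lemma 1 under Assumption 1), platform 0 plays the role of $i\notin\mathcal{S}$ and platform 1 the role of $j\in\mathcal{S} = \{1\}$; the correlations and grid values are illustrative choices.

```python
from typing import Iterable, Sequence


def info(rho: Sequence[float], sigma: Sequence[float], S: Iterable[int]) -> float:
    """I(S, sigma) in the Sherman-Morrison form of Lemma 1 (Assumption 1)."""
    g = sum(rho[k] ** 2 / (2.0 - rho[k] ** 2 + sigma[k] ** 2) for k in S)
    return g / (1.0 + g)


def marginal_gain(rho: Sequence[float], sigma: Sequence[float],
                  S: Iterable[int], i: int) -> float:
    """I(S with i added) - I(S) for a platform i not in S."""
    return info(rho, sigma, set(S) | {i}) - info(rho, sigma, S)


rho = [0.8, 0.7]
grid = [0.0, 0.5, 1.0, 2.0, 4.0, 8.0]
# Vary sigma_i (platform 0, joining S = {1}) with sigma_j fixed at 1.0.
own = [marginal_gain(rho, [s, 1.0], {1}, 0) for s in grid]
# Vary sigma_j (platform 1, already in S) with sigma_i fixed at 1.0.
other = [marginal_gain(rho, [1.0, s], {1}, 0) for s in grid]
print(all(x > y for x, y in zip(own, own[1:])))      # True: decreasing in sigma_i
print(all(x < y for x, y in zip(other, other[1:])))  # True: increasing in sigma_j
```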

3 Preliminary Equilibrium Characterization

We adopt subgame perfect equilibrium as our solution concept, which means that the strategy profile of all players is a Nash equilibrium of every subgame of the game. We next formally define and characterize the subgame perfect equilibrium in our setting, starting from the last stage.

Buyer equilibrium:

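For a given $(\boldsymbol{\sigma},\mathbf{e},\mathbf{a},\mathbf{p})$, a data buyer profile $\mathbf{b}^*$ is an equilibrium if it achieves the data buyer’s highest utility, i.e.,

$$\mathbf{b}^*\in\arg\max_{\mathbf{b}\in\{0,1\}^n} U_0(\mathbf{b};\boldsymbol{\sigma},\mathbf{e},\mathbf{a},\mathbf{p}).$$

Given that there are finitely many ($2^n$) possibilities, one of them achieves the maximum data buyer’s utility, and therefore, an equilibrium buyer profile always exists. We let $\mathcal{B}^*(\boldsymbol{\sigma},\mathbf{e},\mathbf{a},\mathbf{p})$ be the set of such buyer equilibria.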

Price equilibrium:

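For a given $(\boldsymbol{\sigma},\mathbf{e},\mathbf{a})$, a pricing strategy $\mathbf{p}^*$ by the platforms is an equilibrium if no platform has a profitable deviation (taking the data buyer’s updated decision into account):

$$U_i\big(\boldsymbol{\sigma},\mathbf{e},\mathbf{a},(p_i^*,\mathbf{p}_{-i}^*)\big) \ge U_i\big(\boldsymbol{\sigma},\mathbf{e},\mathbf{a},(p_i,\mathbf{p}_{-i}^*)\big) \qquad \text{for all } p_i\ge 0 \text{ and } i\in[n],$$

where, in each case, $\mathbf{b}$ is the data buyer’s equilibrium response to the corresponding prices.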

Before proceeding with the rest of the game, we establish that the data buyer and price equilibria admit a simple characterization, stated next.

Proposition 1.

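For a given $(\boldsymbol{\sigma},\mathbf{e},\mathbf{a})$, let $\mathcal{S} = \{i: e_i a_i = 1\}$. There exists a unique price equilibrium given by

$$p_i^* = \beta\big(\mathcal{I}(\mathcal{S},\boldsymbol{\sigma}) - \mathcal{I}(\mathcal{S}\setminus\{i\},\boldsymbol{\sigma})\big) \quad \text{for } i\in\mathcal{S}, \qquad p_i^* = 0 \quad \text{otherwise},$$

and the corresponding unique buyer equilibrium is $\mathbf{b}^* = \mathbf{1}$ for all $(\boldsymbol{\sigma},\mathbf{e},\mathbf{a})$, where $\mathbf{1}$ is the vector of all ones.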

The proof of Proposition 1, given in the appendix, directly follows from the submodularity of the revealed information established in Lemma 3 for the following reason. Consider platform $i$ and suppose, to the contrary, that in equilibrium the buyer is not purchasing from it. This platform has a profitable deviation by lowering its price so that the data buyer finds it optimal to purchase from it. Also, notice that the submodularity of the revealed information implies that the marginal increase in the revealed information from any platform $j$ is smallest when all other platforms’ data is also shared with the data buyer. Therefore, since the equilibrium price of platform $j$ does not exceed this smallest marginal value, if the data buyer was purchasing from platform $j$, they still find it optimal to purchase from that platform after platform $i$’s data is added.
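The Python sketch below illustrates Proposition 1 under stated assumptions: it sets each price to the buyer’s marginal value $\beta\,(\mathcal{I}(\mathcal{S},\boldsymbol{\sigma})-\mathcal{I}(\mathcal{S}\setminus\{i\},\boldsymbol{\sigma}))$, computes revealed information with the Sherman-Morrison scalar form of Lemma 1 under Assumption 1, and checks by brute force over all purchase sets that buying from every platform is a best response. `beta` and the helper names are hypothetical, and the instance is illustrative.

```python
from itertools import combinations
from typing import Dict, Iterable, Sequence


def info(rho: Sequence[float], sigma: Sequence[float], S: Iterable[int]) -> float:
    """I(S, sigma) in the Sherman-Morrison form of Lemma 1 (Assumption 1)."""
    g = sum(rho[i] ** 2 / (2.0 - rho[i] ** 2 + sigma[i] ** 2) for i in S)
    return g / (1.0 + g)


def marginal_value_prices(rho, sigma, S, beta: float) -> Dict[int, float]:
    """Each platform in S charges beta times its marginal contribution to I(S)."""
    full = info(rho, sigma, S)
    return {i: beta * (full - info(rho, sigma, set(S) - {i})) for i in S}


def buyer_value(rho, sigma, T, beta: float, prices: Dict[int, float]) -> float:
    """Buyer utility (4) when purchasing exactly the data of the platforms in T."""
    return beta * info(rho, sigma, T) - sum(prices[i] for i in T)


def best_buyer_value(rho, sigma, S, beta: float, prices: Dict[int, float]) -> float:
    """Brute-force maximum over all purchase sets T contained in S."""
    items = sorted(S)
    return max(buyer_value(rho, sigma, T, beta, prices)
               for k in range(len(items) + 1) for T in combinations(items, k))


rho, sigma, beta = [0.9, 0.7, 0.5], [0.5, 1.0, 1.5], 4.0
S = {0, 1, 2}
prices = marginal_value_prices(rho, sigma, S, beta)
buy_all = buyer_value(rho, sigma, S, beta, prices)
print(buy_all >= best_buyer_value(rho, sigma, S, beta, prices) - 1e-12)  # True
print(buy_all > 0)  # True: submodularity leaves the buyer a positive surplus here
```

With a single platform, the same prices extract the buyer’s entire surplus, which is consistent with the statement in the introduction that the data buyer’s utility is zero when only one platform is active.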

Proposition 1 characterizes the equilibrium of the third and fourth stages of the game. Therefore, it remains to characterize the user sharing profile and the platforms’ noise level and entry decisions in equilibrium, taking into account the equilibrium of the subsequent stages that we have already identified.

User equilibrium:

For a given , a user sharing profile is an equilibrium if it achieves the user’s highest utility, i.e.,

Again, since there are possibilities, one of them maximizes the user’s utility, and therefore an equilibrium sharing profile always exists. We let be the set of such user equilibria. This set has the following lattice structure, which we use throughout the rest of the analysis.

Lemma 5.

For a given , the set of user equilibria is a sublattice of the lattice (defined in (5)). Therefore, it has a maximum and a minimum.

If there are multiple user equilibria for a platform strategy profile , we select the one that is Pareto-optimal for the platforms. By Lemma 5, such a user equilibrium exists and is the one that shares with the highest number of platforms. [Footnote 2: We can view this as a Stackelberg Nash equilibrium in which, because the platforms move first, ties are broken in their favor. Similar concepts have appeared in the literature under different names, such as the sender-preferred equilibrium in Bayesian persuasion [Kamenica and Gentzkow, 2011].]
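The platform-preferred selection can be sketched as follows: compute all user-optimal sharing profiles and pick the one that shares with the most platforms, which, when the optimal set is a sublattice as in Lemma 5, is its maximum. This is a hypothetical illustration rather than the paper’s model: the user’s utility is assumed to be the sum of service gains from the platforms shared with minus `lam` times a Gaussian stand-in for the revealed information, and the buyer is assumed to purchase every shared dataset. The parameters `gains` and `lam` are made up to create a tie.

```python
import math
from itertools import product


def revealed_info(noise_vars):
    """Hypothetical revealed information: posterior-variance reduction of a
    unit-variance Gaussian type given independent noisy signals."""
    precision = sum(math.inf if s == 0 else 1.0 / s for s in noise_vars)
    return 1.0 - 1.0 / (1.0 + precision)


def user_equilibria(gains, noise_vars, lam, tol=1e-12):
    """Return all utility-maximizing sharing profiles and the platform-preferred one.

    Hypothetical user utility for sharing set S: sum of gains in S minus
    lam * I(S), assuming the buyer purchases every shared dataset.
    """
    n = len(gains)

    def utility(profile):
        shared = [i for i in range(n) if profile[i]]
        return sum(gains[i] for i in shared) - lam * revealed_info(
            [noise_vars[i] for i in shared])

    profiles = list(product((0, 1), repeat=n))
    best = max(utility(p) for p in profiles)
    optimal = [p for p in profiles if utility(p) >= best - tol]
    # Break ties in the platforms' favor: share with as many platforms as possible.
    chosen = max(optimal, key=sum)
    return optimal, chosen


# Gains of 1/3 make "share with nobody" and "share with both" tie at zero utility.
optimal, chosen = user_equilibria([1 / 3, 1 / 3], [1.0, 1.0], lam=1.0)
print(optimal, chosen)
```

The tolerance `tol` absorbs floating-point rounding so that exact ties in the model are recognized as ties in the computation.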

Platform equilibrium:

A noise level and entry strategy is an equilibrium if no platform has a profitable deviation, i.e.,

for all .

We refer to a platform’s noise level and entry decision as the platform’s decision and to the corresponding equilibrium as the platforms’ equilibrium. The following characterizes the set of possible platforms’ equilibria.

Proposition 2.

For any platforms’ equilibrium strategy , we must have

that is, the user must find it optimal to share with all platforms that have entered the market.

This proposition follows by noting that a platform that has entered the market can always increase its noise level so that the user finds it optimal to share with the platform.

Motivated by Proposition 2 and Lemma 5, we conclude that for any set of platforms that have entered the market (captured by ), the set of possible noise levels in equilibrium is , where we have

(6)

We are interested in characterizing for any and finding the equilibrium that belongs to one of these sets for an equilibrium choice of .

Throughout the paper, we focus on the case in which the user asks for some level of privacy in order to share their data. This means that if the noise levels are all zero, then the user does not share with the platforms. More formally, we make the following assumption:

Assumption 2.

The weight of privacy on the user utility, i.e., , is large enough such that, for any , the point belongs to the set .

We will further clarify this assumption’s role in the next section.

4 Equilibrium Characterization with Platforms

We begin our analysis by considering a setting with platforms. We then show that the main insights from this analysis extend to the general setting with platforms.

Depending on which platforms enter the market, we have four cases:

Case 1 where both platforms enter the market:

In this case, the user’s utility if they share their data with both platforms is given by [Footnote 3: With a slight abuse of notation, we drop the other dependencies of the user utility here.]

(7)

and the user’s utility if they share their data with platform only is equal to

(8)

Recall the definition of with in (6). To simplify the notation, we drop the and use . In particular,

Similarly, and denote the cases where and , respectively, have the highest value. Finally,

Notice that we operate under Assumption 2, which implies that is non-empty. In particular, for , this assumption translates to

(9)

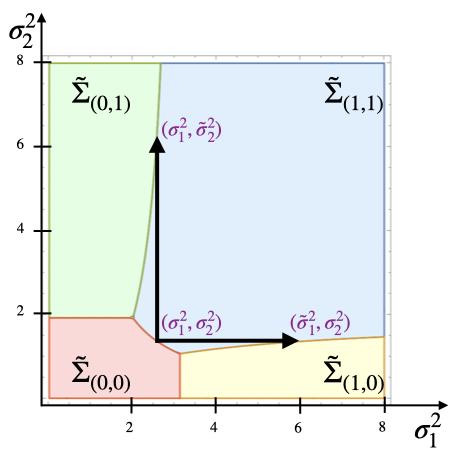

Figure 2(a) depicts the sets , , , and as a function of the noise variance that the two platforms add to the data, i.e., , for parameters and .

Using Proposition 2, the noise level equilibrium is in the set . In fact, we argue that it must belong to because, otherwise, one of the platforms can increase its utility by decreasing its noise level such that the user still shares with it.

Now, for any , the only candidate deviation for platform is to increase to , moving to the point , which lies in , exactly next to the boundary with (see Figure 2(a)). At first glance, this might never seem profitable, because it reduces the information revealed to the buyer. However, by this action platform pushes platform out of the market, because lies in the yellow region, in which the user does not share their data with the second platform. The first platform’s payment may therefore increase, as it is now the only platform with access to the data. This is the only deviation we need to consider: if it is not profitable, then a further increase of into the yellow region is not profitable either. It is also evident that increasing while staying in the region , or decreasing it into the region in which the user shares no data, are not profitable deviations. Considering these deviations yields the following result.

Lemma 6.

Lemma 6 assumes that both platforms enter the market. The final point to check is whether any platform has an incentive to enter the market.

Proposition 3.

Both parts of the result provide a lower bound on , with the difference that the first part establishes a necessary condition for an equilibrium to exist, while the second part presents a sufficient condition. Note that the ratio between these two lower bounds converges to one as grows. However, we next highlight a subtle difference between the two results. Note that, for any value greater than , we can choose sufficiently large and such that an equilibrium exists for these parameters and the ratio of over is less than or equal to . In other words, we have

This, in fact, is a direct consequence of the second part of Proposition 3 along with the fact that converges to as grows.

However, the infimum mentioned above does not imply that any and satisfying the condition (11) will necessarily result in an equilibrium. In fact, as our proof establishes, to have an equilibrium for , we must have . In other words, we have

Cases 2 and 3 where only one platform enters the market:

Suppose only platform enters the market in some equilibrium. In this case, the first platform wants to choose its noise level as small as possible, but large enough that the user shares their data. Therefore, the platform sets at the value that makes the user indifferent between sharing and not sharing (recall that we assumed the user breaks ties in favor of the platforms), i.e.,

(13)

which implies . Notice that this is equivalent to the corresponding to points and in Figure 2(b).
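The indifference condition (13) can also be solved numerically. The sketch below uses hypothetical functional forms, not the paper’s: the user’s payoff from sharing with the lone platform is taken to be `gain - lam * I(s)`, where `I(s) = 1 / (1 + s)` is the revealed information of a unit-variance Gaussian type observed with noise variance `s`. In this sketch, Assumption 2 corresponds to `gain < lam` (the user refuses to share noiseless data), and ties are resolved in the platform’s favor by accepting a payoff of exactly zero.

```python
import math


def revealed_info(noise_vars):
    """Hypothetical revealed information (Gaussian variance reduction, unit prior)."""
    precision = sum(math.inf if s == 0 else 1.0 / s for s in noise_vars)
    return 1.0 - 1.0 / (1.0 + precision)


def indifference_noise(gain, lam, upper=1e6, iters=200):
    """Smallest noise variance at which the user weakly prefers sharing.

    Hypothetical payoff from sharing: gain - lam * I(s); not sharing yields 0.
    Bisection relies on the payoff being increasing in the noise variance s.
    """
    def utility(s):
        return gain - lam * revealed_info([s])

    if utility(0.0) >= 0:
        return 0.0  # the user already shares noiseless data (Assumption 2 fails)
    if utility(upper) < 0:
        raise ValueError("no noise level up to `upper` makes the user share")
    lo, hi = 0.0, upper
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if utility(mid) >= 0:
            hi = mid
        else:
            lo = mid
    return hi


# Under this Gaussian form, indifference holds at s* = lam / gain - 1.
print(indifference_noise(gain=0.25, lam=1.0))
```

Bisection only needs the payoff to be monotone in the noise level; under this particular form the closed form `lam / gain - 1` is also available and serves as a check.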

Now, for this noise level to be an equilibrium, we need to rule out two potential deviations: (i) platform one has no incentive to abstain from participating, i.e., its utility must be nonnegative, and (ii) the second platform has no profitable deviation by entering the market. Using (13), the first condition simply implies

(14)

For the second deviation check, we argue that it suffices to ensure the second platform’s utility at point in Figure 2(b) is nonnegative.

Let us denote the noise levels corresponding to point as . To see why checking point suffices, note that, given platform one’s chosen noise level (equal to ), if the second platform enters the market and selects some noise variance, the result is a point on the line . The smallest noise level for the second platform that induces the user to share their data with it (and thus has the potential to be profitable) is . One might argue that the second platform could opt for a very large noise level so that the vector of noise levels falls into the green region , implying that only the second platform receives the data. However, this is not feasible, as the line stays within the blue region for any value of (in fact, it becomes tangent to the border of and as approaches infinity). To confirm this, we need to show that

holds for any . This is indeed the case, given that due to the submodularity of the revealed information, decreases with increasing and equals when .
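The monotonicity and limiting behavior invoked here can be visualized in a hypothetical Gaussian model (unit-variance type, independent signals with noise variances `s1` and `s2`); these forms are assumptions for illustration and are not the paper’s exact expressions. In this model, the extra information that platform 1’s data reveals on top of platform 2’s data grows as platform 2’s noise grows and approaches platform 1’s standalone information, the qualitative pattern that submodularity delivers.

```python
import math


def revealed_info(noise_vars):
    """Hypothetical revealed information (Gaussian variance reduction, unit prior)."""
    precision = sum(math.inf if s == 0 else 1.0 / s for s in noise_vars)
    return 1.0 - 1.0 / (1.0 + precision)


def marginal_info_of_first(s1, s2):
    """Extra information platform 1's data reveals when the buyer also holds
    platform 2's data (hypothetical Gaussian model)."""
    return revealed_info([s1, s2]) - revealed_info([s2])


s1 = 1.0
for s2 in [0.5, 1.0, 10.0, 1e6]:
    print(s2, marginal_info_of_first(s1, s2))
print("standalone:", revealed_info([s1]))
```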

Now, checking the second platform’s utility at point along with (14) yields the following result.

Case 4 where no platform enters the market:

Finally, we ask whether there is an equilibrium in which no platform enters the market. In such a scenario, we need to ensure that no platform has a profitable deviation by entering the market and choosing some noise level. For instance, if platform wants to deviate, the smallest noise level it would choose is , as this makes the user indifferent between sharing and not sharing their data with it. In this case, the platform’s utility, as derived in (14), is given by

which is nonpositive when . Applying the same argument to the second platform’s deviation, we obtain the following result.

4.1 Insights from the case with

We conclude this section by highlighting a few implications and insights of our results for the case , which, as we will see, also extend to the general case. Note that the term determines the value the buyer places on the user’s data. The higher is, the more money the platform can earn by selling the user’s data.

Our results indicate that there are at most three main regimes for , and the market equilibrium depends on which interval falls into. First, as established in Proposition 5, when is small, the platforms’ monetary gain from selling data is limited, so platforms may decide not to provide any service, as they cannot fully cover their service costs. This regime would not exist if at least one of the platforms operates at a low cost, meaning that its gain from the user’s data already covers its cost of service (this corresponds to the case for some ).

On the other hand, as shown in Proposition 3, when is large enough and platforms can charge a high price for the user’s data, both platforms enter the market. Finally, there is an intermediate regime for in which only the platform with the lower cost enters the market, and the platform with the higher service cost opts out.

Interestingly, the user’s utility is zero in all these cases. However, the buyer’s utility can be positive when both platforms enter the market. As we establish next, these observations continue to hold in the general case with platforms.

5 Equilibrium Characterization with Platforms

This section shows how our equilibrium characterization extends to a general setting with any number of platforms. In what follows, we make use of the following definition.

Definition 2 (High- and low-cost platforms).

We say a platform is of high cost if and is of low cost if .

The comparison of the cost to in the above definition stems from the fact that the information leaked to any platform is ; therefore, high-cost platforms enter the market only if the payment they receive from the buyer is large enough. Conversely, low-cost platforms enter the market irrespective of the payment they receive from the buyer. We can view low-cost platforms as large platforms with small operating costs that do not need extra payments from the data buyer to enter the market and provide services. High-cost platforms, by contrast, are small platforms with large operating costs that need extra payments from the buyer to be able to provide service.

Theorem 1.

Suppose Assumptions 1 and 2 hold. There exist , , and such that for , we have the following:

- If , an equilibrium of the game exists. Moreover, in any equilibrium, all platforms enter the market, the user utility is zero, and the buyer purchases the data of all platforms and has a strictly positive utility.

- If , an equilibrium of the game exists. Moreover, in any equilibrium, only low-cost platforms enter the market, the user utility is zero, and the buyer purchases the data of the low-cost platforms and has a strictly positive utility only if there is more than one low-cost platform.

We first explain the qualifiers of Theorem 1 and then its intuition. First, notice that, similar to the setting with platforms, a pure-strategy equilibrium may not exist for small enough or for intermediate values of . Therefore, we focus on large enough and avoid intermediate values of . The proof of Theorem 1 provides explicit expressions for , , and .

For intuition on the first part of Theorem 1, notice that for large enough the data buyer gains a lot when the user’s data is revealed to them and is therefore willing to pay a high price for it. This, in turn, means that even high-cost platforms find it optimal to enter the market. As established in Proposition 1 and Proposition 2, in equilibrium the user shares with all platforms, and the data buyer purchases from all platforms. To see that the user utility is zero in equilibrium, suppose, to the contrary, that it is strictly positive. Then the equilibrium noise level strategy lies in the interior of the set . Since the strategy is in the interior of , there exists such that, by decreasing its noise level, we remain in the set (i.e., the user still finds it optimal to share with all platforms), and this deviation increases the payment, and therefore the utility, of platform . Also, Lemma 3 guarantees that the user still finds it optimal to share with all platforms.

For intuition on the second part of Theorem 1, notice that for small enough , the data buyer’s gain from the user’s data is small, and therefore the buyer is willing to pay only a low price for it. Consequently, high-cost platforms never find it optimal to enter the market, whereas low-cost platforms always enter. That the user shares with all the low-cost platforms (which have entered), that the buyer purchases from them, and that the user utility is zero in equilibrium follow from an argument similar to the one above.

Theorem 1 leads to the following insights.

Competition among platforms helps the buyer and not the user:

Let us first characterize the equilibrium when we have only one platform.

Proposition 6.

Suppose Assumption 1 holds. When , we have the following:

- Suppose . If (which holds whenever ), then in equilibrium, the platform enters the market and chooses , the user shares with the platform, and the buyer purchases the data. In this case, the user utility is , and the buyer utility is zero. Otherwise, if , then the platform does not enter the market, and all utilities are zero.

- Suppose . If , then in equilibrium, the platform enters the market and chooses , the user shares with the platform, and the buyer purchases the data. In this case, both the user utility and the buyer utility are zero. Otherwise, if , then the platform does not enter the market, and all utilities are zero.

We are interested in the second regime, where is large enough that the user is not incentivized to share with the platform irrespective of the platform’s choice of noise level. In this case, as established in Proposition 6, both the user and the data buyer utilities are zero. Now, let us compare this setting to one with platforms that compete to obtain the user’s data and sell it to the data buyer, as characterized in Theorem 1. Interestingly, competition does not help the user (the user obtains zero utility for any ). However, increasing the number of platforms from to improves the data buyer’s utility. The user’s utility is always zero because, otherwise, the platforms could decrease their noise levels by a small margin so that the user still shares, thereby increasing their payments. Why, then, does competition help the data buyer? The reason is the data externality among the platforms’ data about the user, similar to that in Acemoglu et al. [2022] and Bergemann et al. [2020]. In particular, because the revealed information is submodular (Lemma 3), the data of one platform decreases the marginal value of another platform’s data; the platforms therefore end up competing with each other and selling their data at lower marginal prices.
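The data externality behind this observation can be illustrated with a stylized pricing rule. The sketch assumes, for illustration only, that every platform sells and charges the buyer’s marginal value of its data, `beta * [I(all) - I(all without i)]`, and it uses a hypothetical Gaussian revealed-information function with all noise variances fixed at 1 rather than at equilibrium levels. With one platform the buyer pays the full value of the data and keeps nothing; with more platforms, submodularity shrinks each marginal value, so the buyer retains a growing surplus.

```python
import math


def revealed_info(noise_vars):
    """Hypothetical revealed information (Gaussian variance reduction, unit prior)."""
    precision = sum(math.inf if s == 0 else 1.0 / s for s in noise_vars)
    return 1.0 - 1.0 / (1.0 + precision)


def buyer_surplus(noise_vars, beta):
    """Buyer utility when it buys from every platform and each platform charges,
    by assumption, the buyer's marginal value of its data."""
    noise_vars = list(noise_vars)
    total = beta * revealed_info(noise_vars)
    prices = [total - beta * revealed_info(noise_vars[:i] + noise_vars[i + 1:])
              for i in range(len(noise_vars))]
    return total - sum(prices)


for n in range(1, 5):
    print(n, buyer_surplus([1.0] * n, beta=1.0))
```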

More platforms imply higher utilitarian welfare:

Let us first find the overall utilitarian welfare in equilibrium, where utilitarian welfare is defined as the sum of the utilities of the platforms, the user, and the data buyer.

Corollary 1.

Consider , , and established in Theorem 1. For , we have the following:

- If , the utilitarian welfare in equilibrium becomes

- If , the utilitarian welfare in equilibrium becomes

Notably, Corollary 1 shows that, within the range of each case, the overall utilitarian welfare is increasing in and decreasing in . This is because payments are transfers that cancel each other out; as increases, the data buyer’s gain becomes larger, and as increases, the user’s loss becomes larger. Moreover, in the first case the utilitarian welfare is increasing in the number of platforms, and in the second case it is increasing in the number of low-cost platforms.
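The cancellation of payments can be made concrete with a small accounting sketch. All functional forms here are hypothetical: the user earns service gains minus `lam` times the revealed information, platform i earns its price minus its cost, and the buyer earns `beta` times the revealed information minus the prices paid, with every platform assumed to enter and be purchased from. Summing these, the prices drop out, leaving a welfare that rises with `beta` and falls with `lam`.

```python
import math


def revealed_info(noise_vars):
    """Hypothetical revealed information (Gaussian variance reduction, unit prior)."""
    precision = sum(math.inf if s == 0 else 1.0 / s for s in noise_vars)
    return 1.0 - 1.0 / (1.0 + precision)


def utilitarian_welfare(gains, costs, prices, noise_vars, beta, lam):
    """Sum of user, platform, and buyer utilities under hypothetical forms,
    assuming every platform enters and the buyer purchases all data."""
    info = revealed_info(noise_vars)
    user = sum(gains) - lam * info
    platforms = sum(p - c for p, c in zip(prices, costs))
    buyer = beta * info - sum(prices)
    return user + platforms + buyer


params = dict(gains=[0.4, 0.4], costs=[0.1, 0.2], noise_vars=[2.0, 2.0], beta=3.0, lam=1.0)
low = utilitarian_welfare(prices=[0.1, 0.1], **params)
high = utilitarian_welfare(prices=[0.5, 0.9], **params)
print(low, high)  # equal up to rounding, since prices are pure transfers
```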

6 Regulations

In this section, we consider regulations of the three-layer data market to improve the user utility. As we have established in Theorem 1, the user utility in equilibrium is zero. We now explore whether we can improve the user utility by imposing a regulation. As discussed in the introduction, one possible regulation is to impose a limit on the amount of information each platform can leak about the user to the data buyer. This means imposing a lower bound on the noise level. Let us formally define this regulation.

Definition 3 (Minimum privacy mandate).

A minimum privacy mandate, represented by , is a regulation that requires each platform to choose a noise level above . A special case of this regulation is the uniform minimum privacy mandate, in which for all . Another special case is banning the platforms from sharing with the buyer, i.e., for all .
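Operationally, a minimum privacy mandate acts as a per-platform floor on the noise level. The helpers below are a hypothetical sketch of Definition 3 (the function names are ours): `apply_mandate` raises each chosen noise level to its floor, and a sharing ban is represented by an infinite floor, which, under the Gaussian stand-in used here for the revealed information, makes the shared data uninformative.

```python
import math


def revealed_info(noise_vars):
    """Hypothetical revealed information (Gaussian variance reduction, unit prior)."""
    precision = sum(math.inf if s == 0 else 1.0 / s for s in noise_vars)
    return 1.0 - 1.0 / (1.0 + precision)


def apply_mandate(chosen_noise, floors):
    """Noise levels actually used when platform i must add at least floors[i]."""
    return [max(s, f) for s, f in zip(chosen_noise, floors)]


def uniform_mandate(n, level):
    """The same noise floor for all n platforms."""
    return [level] * n


def sharing_ban(n):
    """An infinite floor: the data passed to the buyer carries no information."""
    return [math.inf] * n


chosen = [0.5, 3.0]
print(apply_mandate(chosen, uniform_mandate(2, 1.0)))
print(revealed_info(apply_mandate(chosen, sharing_ban(2))))
```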

Uniform minimum privacy mandate versus ban:

One may conjecture that banning the platforms from sharing their data with the buyer is user-optimal among all uniform minimum privacy mandates, because it reduces the user’s privacy loss to zero. We next prove that this is not necessarily true. The main intuition is that if we ban data sharing, then only low-cost platforms enter the market. The user then forgoes the gain from the services provided by the high-cost platforms, and this loss in service gains can dominate the reduction in privacy loss. We next formalize this intuition.

Proposition 7.

Consider for established in Theorem 1. We have:

- If all platforms are low-cost, then irrespective of the minimum privacy mandate, all platforms enter the market in equilibrium. In this case, banning data sharing achieves a higher user utility than any (uniform or non-uniform) minimum privacy mandate.

- Otherwise, if there is at least one high-cost platform, then there exist , , and such that

  - If , then under uniform minimum privacy mandate all platforms enter the market, and the user utility is higher than under a ban on data sharing.

  - If , then irrespective of the minimum privacy mandate, only low-cost platforms enter the market. In this case, banning data sharing achieves a higher user utility than any uniform minimum privacy mandate.

The first part is rather straightforward: if all platforms are low-cost, then they all enter the market and provide services to the user. Banning data sharing is user-optimal because it reduces the user’s privacy loss to zero while the user still obtains the service gains from all platforms. The second part is more nuanced: here, if is large enough, then all platforms enter the market, and two opposing forces are at play. If we ban data sharing, only low-cost platforms enter the market, and the user utility then comprises only the service gains from low-cost platforms, while the privacy loss becomes zero. Banning data sharing therefore decreases both the service gains and the privacy losses. If we do not ban data sharing, the user utility comprises the service gains from all platforms minus the privacy loss incurred by all platforms sharing data with the buyer. Not banning data sharing therefore increases both the service gains and the privacy losses. For a large enough uniform minimum privacy mandate, and when there is at least one high-cost platform, the service gains dominate the privacy loss, so not banning data sharing becomes the user-optimal regulation. Finally, to understand the last part, observe that for small enough , only low-cost platforms enter the market, so the situation is effectively the same as in the first part of Proposition 7. The assumptions of large enough and large (or small) enough are adopted for the same reason as in Theorem 1, namely, to guarantee that an equilibrium exists.
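The trade-off in the second part of Proposition 7 can be illustrated with made-up numbers. The sketch does not solve for equilibrium entry; it imposes the entry pattern described above (under a ban only the low-cost platform enters; under a uniform mandate both enter and, by assumption, add exactly the mandated noise). The user’s utility uses hypothetical forms: the service gains of the platforms shared with minus `lam` times a Gaussian stand-in for the revealed information, maximized over sharing sets. All parameter values are illustrative.

```python
import math
from itertools import product


def revealed_info(noise_vars):
    """Hypothetical revealed information (Gaussian variance reduction, unit prior)."""
    precision = sum(math.inf if s == 0 else 1.0 / s for s in noise_vars)
    return 1.0 - 1.0 / (1.0 + precision)


def user_utility(gains, noise_vars, lam):
    """User's best utility over sharing sets among the platforms that entered.
    Hypothetical form: gains of shared platforms - lam * I(their noise levels)."""
    n = len(gains)
    best = 0.0  # sharing with nobody
    for profile in product((0, 1), repeat=n):
        shared = [i for i in range(n) if profile[i]]
        value = sum(gains[i] for i in shared) - lam * revealed_info(
            [noise_vars[i] for i in shared])
        best = max(best, value)
    return best


low_gain, high_gain, lam, level = 0.3, 0.3, 1.0, 10.0  # hypothetical values

# Ban: only the low-cost platform enters, and its shared data is uninformative.
ban = user_utility([low_gain], [math.inf], lam)
# Uniform mandate: both platforms enter and add exactly the mandated noise.
uniform = user_utility([low_gain, high_gain], [level, level], lam)
print(ban, uniform)
```

If instead both platforms were low-cost, both would enter under the ban as well, and the ban would keep every service gain while eliminating the privacy loss, matching the first part of the proposition.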

Non-uniform versus uniform minimum privacy mandate:

As noted above, when all platforms are low-cost or when is small enough so that high-cost platforms do not enter the market, then banning data sharing is optimal in the class of all (uniform or non-uniform) minimum privacy mandates. However, corresponding to the second part of Proposition 7, the user prefers a minimum privacy mandate to banning data sharing when there is at least one high-cost platform, and is large enough. This is because if we ban data sharing, the high-cost platforms do not enter, while with the “right” choice of uniform minimum privacy mandate, they enter the market. In this case, the user benefits from the services, while the privacy loss that they incur is small. However, with a uniform minimum privacy mandate, the low-cost platforms (that enter the market regardless of the minimum privacy mandate in place) also bring about privacy loss to the user. Therefore, a natural idea is to have a non-uniform minimum privacy mandate to help the user further. We next formalize this idea.

Proposition 8.

Suppose there is at least one high-cost platform and consider for established in Theorem 1. There exist and such that, for , banning the low-cost platforms from data sharing and imposing a minimum privacy mandate equal to for all high-cost platforms generates a higher user utility than any uniform minimum privacy mandate.

With the non-uniform minimum privacy mandate described above, the user obtains the service gains of the low-cost platforms and incurs zero privacy loss from them. Moreover, for large enough , the high-cost platforms all enter the market. Therefore, the user gains from the high-cost platforms’ services and, when is large enough, incurs only a small privacy loss. Notice that, as established in Proposition 7, under the optimal uniform minimum privacy mandate, both the low-cost and high-cost platforms enter the market. We prove that if we impose the same minimum privacy mandate only on the high-cost platforms and ban the low-cost platforms from data sharing, the user obtains the same service gains from all platforms but incurs a smaller privacy loss. Moreover, all platforms still find it optimal to enter the market.
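The comparison behind Proposition 8 can be illustrated numerically under hypothetical forms. As assumptions of this sketch, both platforms enter under the uniform and targeted regulations, only the low-cost platform enters under a full ban, and platforms add exactly the mandated noise; the user’s utility is the service gains of the platforms shared with minus `lam` times a Gaussian stand-in for the revealed information, maximized over sharing sets. The targeted regulation bans the low-cost platform (an infinite noise floor) and applies the mandate only to the high-cost one. The numbers are illustrative only.

```python
import math
from itertools import product


def revealed_info(noise_vars):
    """Hypothetical revealed information (Gaussian variance reduction, unit prior)."""
    precision = sum(math.inf if s == 0 else 1.0 / s for s in noise_vars)
    return 1.0 - 1.0 / (1.0 + precision)


def user_utility(gains, noise_vars, lam):
    """User's best utility over sharing sets among the platforms that entered.
    Hypothetical form: gains of shared platforms - lam * I(their noise levels)."""
    n = len(gains)
    best = 0.0  # sharing with nobody
    for profile in product((0, 1), repeat=n):
        shared = [i for i in range(n) if profile[i]]
        value = sum(gains[i] for i in shared) - lam * revealed_info(
            [noise_vars[i] for i in shared])
        best = max(best, value)
    return best


low_gain, high_gain, lam, level = 0.3, 0.3, 1.0, 10.0  # hypothetical values

ban = user_utility([low_gain], [math.inf], lam)                   # full ban
uniform = user_utility([low_gain, high_gain], [level, level], lam)  # uniform mandate
# Targeted: ban the low-cost platform, mandate `level` for the high-cost one.
targeted = user_utility([low_gain, high_gain], [math.inf, level], lam)
print(ban, uniform, targeted)
```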

Proposition 7 and Proposition 8 establish that banning the data market is not necessarily user-optimal, especially when we have high-cost platforms (i.e., smaller platforms whose cost of providing the service is high). Moreover, a uniform minimum privacy mandate improves the user utility because it also incentivizes the high-cost platforms to enter the market to provide service to the user. Finally, a non-uniform minimum privacy mandate that bans the low-cost (i.e., large) platforms from data sharing and imposes a minimum privacy mandate on high-cost (i.e., small) platforms further improves the user utility. This is because this regulation not only incentivizes the high-cost platforms to enter the market to provide service to the user but also zeros out the privacy loss of the low-cost platforms.

7 Conclusion

In this paper, we study the intricate interplay between user privacy concerns and the dual incentives of online platforms: to safeguard user privacy and to monetize user data through sales to third-party buyers. To this end, we have constructed a multi-stage game model that captures the interactions between users, platforms, and data buyers. Our model delineates various scenarios where the interplay of platform service costs, the valuation of data, and privacy concerns dictate equilibrium outcomes. Additionally, we examine potential regulations designed to bolster user privacy and enhance market outcomes for users.

There are several exciting avenues for future research. In our framework, we have modeled a user as a representative of a market segment. Extending our analysis to a setting with multiple heterogeneous users is a natural next step, because data externalities among different users may cause indirect information disclosure, which in turn could alter both user strategies and the regulations aimed at enhancing user experience. Similarly, our focus has been on a representative data buyer who aims to acquire user data and learn their preferences. Extending our findings beyond this setting is also valuable, because user data is inherently multi-dimensional and can be aggregated from diverse sources, potentially modifying user strategies and calling for tailored regulations. Lastly, we have investigated regulations that restrict the transfer of user data to buyers. Developing alternative regulatory frameworks that address the intricacies of the data market, and that are both adaptable and resilient enough to keep pace with rapid technological advances, remains an open and important area for exploration.

8 Acknowledgment

Alireza Fallah acknowledges support from the European Research Council Synergy Program, the National Science Foundation under grant number DMS-1928930, and the Alfred P. Sloan Foundation under grant G-2021-16778. The latter two grants correspond to his residency at the Simons Laufer Mathematical Sciences Institute (formerly known as MSRI) in Berkeley, California, during the Fall 2023 semester. Michael Jordan acknowledges support from the Mathematical Data Science program of the Office of Naval Research under grant number N00014-21-1-2840 and the European Research Council Synergy Program.

References

- Abolhassani et al. [2017] M. Abolhassani, H. Esfandiari, M. Hajiaghayi, B. Lucier, and H. Yami. Market pricing for data streams. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 31, 2017.

- Abowd [2018] J. M. Abowd. The US Census Bureau adopts differential privacy. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, pages 2867–2867, 2018.

- Acemoglu et al. [2022] D. Acemoglu, A. Makhdoumi, A. Malekian, and A. Ozdaglar. Too much data: Prices and inefficiencies in data markets. American Economic Journal: Microeconomics, 2022.

- Acemoglu et al. [2023a] D. Acemoglu, A. Fallah, A. Makhdoumi, A. Malekian, and A. Ozdaglar. How good are privacy guarantees? Platform architecture and violation of user privacy. Technical report, National Bureau of Economic Research, 2023a.

- Acemoglu et al. [2023b] D. Acemoglu, A. Makhdoumi, A. Malekian, and A. Ozdaglar. A model of behavioral manipulation. Technical report, National Bureau of Economic Research, 2023b.

- Acquisti et al. [2016] A. Acquisti, C. Taylor, and L. Wagman. The economics of privacy. Journal of Economic Literature, 54(2):442–492, 2016.

- Ali et al. [2019] S. N. Ali, G. Lewis, and S. Vasserman. Voluntary disclosure and personalized pricing. NBER Working Paper, (w26592), 2019.

- Argenziano and Bonatti [2023] R. Argenziano and A. Bonatti. Data markets with privacy-conscious consumers. AEA Papers and Proceedings, 113:191–96, May 2023. doi: 10.1257/pandp.20231083. URL https://www.aeaweb.org/articles?id=10.1257/pandp.20231083.

- Bergemann and Bonatti [2015] D. Bergemann and A. Bonatti. Selling cookies. American Economic Journal: Microeconomics, 7(3):259–294, 2015.

- Bergemann et al. [2018] D. Bergemann, A. Bonatti, and A. Smolin. The design and price of information. American Economic Review, 108(1):1–48, 2018.

- Bergemann et al. [2020] D. Bergemann, A. Bonatti, and T. Gan. The economics of social data. arXiv preprint arXiv:2004.03107, 2020.

- Bimpikis et al. [2021] K. Bimpikis, I. Morgenstern, and D. Saban. Data tracking under competition. Available at SSRN 3808228, 2021.

- Breiman et al. [1984] L. Breiman, J. Friedman, R. A. Olshen, and C. J. Stone. Classification and Regression Trees. Routledge, 1st edition, 1984. doi: 10.1201/9781315139470.

- Chen and Zheng [2019] Y. Chen and S. Zheng. Prior-free data acquisition for accurate statistical estimation. In Proceedings of the 2019 ACM Conference on Economics and Computation, pages 659–677, 2019.

- Chen et al. [2018] Y. Chen, N. Immorlica, B. Lucier, V. Syrgkanis, and J. Ziani. Optimal data acquisition for statistical estimation. In Proceedings of the 2018 ACM Conference on Economics and Computation, pages 27–44, 2018.

- Cross [2023a] R. Cross. How mastercard sells its ‘gold mine’ of transaction data, Sep 2023a. URL https://pirg.org/edfund/resources/how-mastercard-sells-data/.

- Cross [2023b] R. Cross. The new data brokers: retailers, rewards apps & streaming services are selling your data, June 2023b. URL https://pirg.org/articles/the-new-data-brokers-retailers-rewards-apps-streaming-services-are-selling-your-data/.

- Cummings et al. [2015] R. Cummings, K. Ligett, A. Roth, Z. S. Wu, and J. Ziani. Accuracy for sale: Aggregating data with a variance constraint. In Proceedings of the 2015 Conference on Innovations in Theoretical Computer Science, pages 317–324, 2015.

- Cummings et al. [2023] R. Cummings, H. Elzayn, E. Pountourakis, V. Gkatzelis, and J. Ziani. Optimal data acquisition with privacy-aware agents. In 2023 IEEE Conference on Secure and Trustworthy Machine Learning (SaTML), pages 210–224. IEEE, 2023.

- Dosis and Sand-Zantman [2022] A. Dosis and W. Sand-Zantman. The ownership of data. The Journal of Law, Economics, and Organization, 39(3):615–641, 02 2022.

- Drakopoulos and Makhdoumi [2023] K. Drakopoulos and A. Makhdoumi. Providing data samples for free. Management Science, 69(6):3536–3560, 2023.

- Dwork et al. [2014] C. Dwork, A. Roth, et al. The algorithmic foundations of differential privacy. Foundations and Trends® in Theoretical Computer Science, 9(3–4):211–407, 2014.

- Fainmesser et al. [2022] I. P. Fainmesser, A. Galeotti, and R. Momot. Digital privacy. Management Science, 2022.

- Falconer et al. [2023] T. Falconer, J. Kazempour, and P. Pinson. Towards replication-robust analytics markets, 2023.

- Fallah et al. [2022] A. Fallah, A. Makhdoumi, A. Malekian, and A. E. Ozdaglar. Bridging central and local differential privacy in data acquisition mechanisms. Available at SSRN 4311351, 2022.

- Fallah et al. [2023] A. Fallah, A. Makhdoumi, A. Malekian, and A. Ozdaglar. Optimal and differentially private data acquisition: Central and local mechanisms. Operations Research, 2023.

- Gal and Aviv [2020] M. S. Gal and O. Aviv. The competitive effects of the GDPR. Journal of Competition Law & Economics, 16(3):349–391, 2020.

- Ghosh and Roth [2011] A. Ghosh and A. Roth. Selling privacy at auction. In Proceedings of the 12th ACM conference on Electronic commerce, pages 199–208, 2011.

- Ichihashi [2020a] S. Ichihashi. Dynamic privacy choices. In Proceedings of the 21st ACM Conference on Economics and Computation, pages 539–540, 2020a.

- Ichihashi [2020b] S. Ichihashi. Online privacy and information disclosure by consumers. American Economic Review, 110(2):569–595, 2020b.

- Ichihashi [2021] S. Ichihashi. Competing data intermediaries. The RAND Journal of Economics, 52(3):515–537, 2021.

- Immorlica et al. [2021] N. Immorlica, I. A. Kash, and B. Lucier. Buying data over time: Approximately optimal strategies for dynamic data-driven decisions. In 12th Innovations in Theoretical Computer Science Conference (ITCS 2021). Schloss Dagstuhl-Leibniz-Zentrum für Informatik, 2021.

- Janssen et al. [2022] R. Janssen, R. Kesler, M. E. Kummer, and J. Waldfogel. GDPR and the lost generation of innovative apps. Technical report, National Bureau of Economic Research, 2022.

- Jones and Tonetti [2020] C. I. Jones and C. Tonetti. Nonrivalry and the economics of data. American Economic Review, 110(9):2819–2858, 2020.

- Kamenica and Gentzkow [2011] E. Kamenica and M. Gentzkow. Bayesian persuasion. American Economic Review, 101(6):2590–2615, 2011.

- Karimireddy et al. [2022] S. P. Karimireddy, W. Guo, and M. I. Jordan. Mechanisms that incentivize data sharing in federated learning. arXiv preprint arXiv:2207.04557, 2022.

- Ligett and Roth [2012] K. Ligett and A. Roth. Take it or leave it: Running a survey when privacy comes at a cost. In International Workshop on Internet and Network Economics, pages 378–391. Springer, 2012.

- Madsen and Vellodi [2023] E. Madsen and N. Vellodi. Insider imitation. Available at SSRN 3832712, 2023.

- Nissim et al. [2014] K. Nissim, S. Vadhan, and D. Xiao. Redrawing the boundaries on purchasing data from privacy-sensitive individuals. In Proceedings of the 5th Conference on Innovations in Theoretical Computer Science, pages 411–422, 2014.

- Pino [2022] F. Pino. The microeconomics of data–a survey. Journal of Industrial and Business Economics, 49(3):635–665, 2022.

- Posner and Weyl [2018] E. Posner and E. Weyl. Radical markets: Uprooting capitalism and democracy for a just society. Princeton University Press, 2018.

- Ravichandran and Korula [2019] D. Ravichandran and N. Korula. Effect of disabling third-party cookies on publisher revenue. Google Report, 2019.

Appendix A Proofs

This appendix includes the proofs omitted from the main text.

Proof of Lemma 1

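Without loss of generality, we prove the claim for . Let us recall a property of normal distributions: if $\mathbf{x} \sim \mathcal{N}(\boldsymbol{\mu}, \Sigma)$ is a $d$-dimensional normal random variable partitioned as

$$\mathbf{x} = \begin{bmatrix} \mathbf{x}_1 \\ \mathbf{x}_2 \end{bmatrix} \quad \text{with sizes} \quad \begin{bmatrix} q \times 1 \\ (d-q) \times 1 \end{bmatrix},$$

and accordingly $\boldsymbol{\mu}$ is partitioned as

$$\boldsymbol{\mu} = \begin{bmatrix} \boldsymbol{\mu}_1 \\ \boldsymbol{\mu}_2 \end{bmatrix} \quad \text{with sizes} \quad \begin{bmatrix} q \times 1 \\ (d-q) \times 1 \end{bmatrix},$$

and $\Sigma$ is partitioned as

$$\Sigma = \begin{bmatrix} \Sigma_{11} & \Sigma_{12} \\ \Sigma_{21} & \Sigma_{22} \end{bmatrix} \quad \text{with sizes} \quad \begin{bmatrix} q \times q & q \times (d-q) \\ (d-q) \times q & (d-q) \times (d-q) \end{bmatrix},$$

then the distribution of $\mathbf{x}_1$ conditional on $\mathbf{x}_2 = \mathbf{a}$ is normal with covariance matrix

$$\Sigma_{11} - \Sigma_{12} \Sigma_{22}^{-1} \Sigma_{21}.$$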

The proof of this lemma follows directly from the above fact.
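The conditional-covariance fact recalled above is the Schur complement $\Sigma_{11} - \Sigma_{12}\Sigma_{22}^{-1}\Sigma_{21}$ of the observed block. As a minimal sketch (not the paper's code), the following pure-Python snippet computes it with exact rational arithmetic; the helper names `mat_inv`, `mat_mul`, and `conditional_cov` and the example matrix are illustrative choices.

```python
from fractions import Fraction

def mat_inv(a):
    """Invert a square matrix (list of rows) by Gauss-Jordan elimination, exactly."""
    n = len(a)
    # Augment [A | I] and work with exact rationals to avoid rounding error.
    m = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if m[pivot][col] == 0:
            raise ValueError("matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        m[col] = [x / p for x in m[col]]
        for r in range(n):
            if r != col:
                f = m[r][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [row[n:] for row in m]

def mat_mul(a, b):
    """Product of two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def conditional_cov(sigma, first, second):
    """Covariance of x[first] given x[second]: Sigma11 - Sigma12 Sigma22^{-1} Sigma21."""
    s11 = [[sigma[i][j] for j in first] for i in first]
    s12 = [[sigma[i][j] for j in second] for i in first]
    s21 = [[sigma[i][j] for j in first] for i in second]
    s22 = [[sigma[i][j] for j in second] for i in second]
    correction = mat_mul(mat_mul(s12, mat_inv(s22)), s21)
    return [[Fraction(s11[i][j]) - correction[i][j] for j in range(len(first))]
            for i in range(len(first))]

# Example: Sigma = I + 1 1^T in three dimensions; condition x_0 on (x_1, x_2).
sigma = [[2, 1, 1], [1, 2, 1], [1, 1, 2]]
print(conditional_cov(sigma, [0], [1, 2]))  # [[Fraction(4, 3)]]
```

As a cross-check, the conditional variance of a single coordinate also equals the reciprocal of the matching diagonal entry of the precision matrix; here $\Sigma^{-1} = I - \mathbf{1}\mathbf{1}^\top/4$ has diagonal $3/4$, giving $4/3$ again.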

Proof of Lemma 2

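Both parts follow from the fact that the conditional expectation is the minimum mean squared error (MMSE) estimator. For the first part, notice that the buyer can always throw away data points, so additional data cannot increase the MMSE. For the second part, notice that the buyer can always add extra independent noise to the data points, so lower noise cannot increase the MMSE.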
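To make the two garbling arguments concrete, here is a sketch under an illustrative scalar model that we assume only for this example (it need not match the paper's exact setup): the user's data is $x \sim \mathcal{N}(0, v)$, and a platform with noise variance $\sigma_j^2$ sells $y_j = x + z_j$ with independent $z_j \sim \mathcal{N}(0, \sigma_j^2)$. The MMSE is then the posterior variance $\left(1/v + \sum_j 1/\sigma_j^2\right)^{-1}$, and we take the leaked information to be $v$ minus this MMSE. The helper `add_noise` is hypothetical and just encodes that adding independent noise of variance $t$ to $y_j$ raises $\sigma_j^2$ to $\sigma_j^2 + t$.

```python
from fractions import Fraction

def posterior_variance(v, noise_vars):
    """MMSE of estimating x from y_j = x + z_j (assumed model): precisions add."""
    precision = 1 / Fraction(v) + sum((1 / Fraction(s) for s in noise_vars), Fraction(0))
    return 1 / precision

def leaked_information(v, noise_vars):
    """Reduction in the MMSE relative to the prior variance v."""
    return Fraction(v) - posterior_variance(v, noise_vars)

def add_noise(noise_vars, j, t):
    """Garbling y_j -> y_j + w with independent w ~ N(0, t) adds t to platform j's noise variance."""
    return [s + t if k == j else s for k, s in enumerate(noise_vars)]

v, noise = 1, [1, 2]
# Part 1: the buyer could ignore y_2, so having it never lowers the leaked information.
print(leaked_information(v, noise[:1]), leaked_information(v, noise))  # 1/2 3/5
# Part 2: the buyer could add noise itself, so less noise never lowers the leaked information.
print(leaked_information(v, add_noise(noise, 0, 3)), leaked_information(v, noise))  # 3/7 3/5
```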

Proof of Lemma 3

Using Lemma 1, we first derive the leaked information to the buyer. In particular, if both platforms enter the market and the user’s data is shared with them and then sold to the buyer, then the leaked information is given by

On the other hand, if the user’s data is only shared with the buyer through platform , then the leaked information to the buyer is given by

To prove this lemma, without loss of generality, we need to establish that if the buyer has the extra data of platform , the gain in acquiring the data of platform is smaller. By invoking the fact mentioned in the proof of Lemma 1 for the normal variable conditional on the rest of the data points, the inequality stated in the lemma becomes equivalent to

where for all , and is the variance of conditional on the rest of the data points. The above inequality holds true as it is equivalent to

that holds as .
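Lemma 3 is a diminishing-returns (submodularity) property of the leaked information. As an illustration only, the sketch below checks it exhaustively on small instances of an assumed scalar model that may differ from the paper's: $x \sim \mathcal{N}(0, v)$ and platform $j$ sells $y_j = x + z_j$ with independent $z_j \sim \mathcal{N}(0, \sigma_j^2)$. In this model the leaked information of a set $S$ of platforms is $v - (1/v + s_S)^{-1}$ with $s_S = \sum_{j \in S} 1/\sigma_j^2$, a concave increasing function of an additive quantity, which is why submodularity holds. The function `is_submodular` is a hypothetical helper.

```python
from fractions import Fraction
from itertools import combinations

def leaked_information(v, noise_vars):
    """v - Var(x | y) under the assumed model x ~ N(0, v), y_j = x + z_j."""
    precision = 1 / Fraction(v) + sum((1 / Fraction(s) for s in noise_vars), Fraction(0))
    return Fraction(v) - 1 / precision

def is_submodular(v, noise_vars):
    """Check I(S + j) - I(S) >= I(T + j) - I(T) for every S subset of T and j not in T."""
    n = len(noise_vars)

    def value(subset):
        return leaked_information(v, [noise_vars[i] for i in subset])

    for size in range(n + 1):
        for t in combinations(range(n), size):
            for s_size in range(len(t) + 1):
                for s in combinations(t, s_size):
                    for j in set(range(n)) - set(t):
                        gain_s = value(s + (j,)) - value(s)  # marginal gain given less data
                        gain_t = value(t + (j,)) - value(t)  # marginal gain given more data
                        if gain_s < gain_t:
                            return False
    return True

print(is_submodular(1, [1, 2, 3]))  # True
```

For instance, with $v = 1$ and noise variances $(1, 2)$, platform 1's data adds $1/2$ on its own but only $4/15$ once the buyer already holds platform 2's data.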

Proof of Lemma 4

We prove part one; part two follows from an identical argument. Using an argument similar to the one in the proof of Lemma 3, we establish this inequality by changing the noise variances one at a time.

If and , then the inequality becomes equivalent to

The above inequality holds because the function

is increasing, which can be seen by evaluating its derivative.
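The derivative argument can be illustrated under an assumed scalar model (our assumption, not necessarily the paper's): $x \sim \mathcal{N}(0, v)$ and platform $j$ sells $y_j = x + z_j$ with $z_j \sim \mathcal{N}(0, s_j)$. Writing $P = 1/v + \sum_k 1/s_k$ for the posterior precision, the leaked information is $I = v - 1/P$, so $\partial I / \partial s_j = -1/(s_j^2 P^2) < 0$: raising any single noise variance lowers the leaked information. The sketch below encodes this closed-form derivative; a central finite difference can be used to sanity-check it.

```python
def leaked_information(v, noise_vars):
    """v - Var(x | y) under the assumed model; posterior precision is 1/v + sum_j 1/s_j."""
    precision = 1.0 / v + sum(1.0 / s for s in noise_vars)
    return v - 1.0 / precision

def d_leaked_d_noise(v, noise_vars, j):
    """Analytic derivative of the leaked information w.r.t. platform j's noise variance s_j.

    With P = 1/v + sum_k 1/s_k we have I = v - 1/P and dP/ds_j = -1/s_j**2,
    so dI/ds_j = (1/P**2) * dP/ds_j = -1 / (s_j**2 * P**2) < 0.
    """
    precision = 1.0 / v + sum(1.0 / s for s in noise_vars)
    return -1.0 / (noise_vars[j] ** 2 * precision ** 2)

# Raising platform 0's noise variance lowers the leaked information at every step.
v, others = 2.0, [0.5]
for s in [0.5, 1.0, 2.0, 4.0]:
    print(s, round(leaked_information(v, [s] + others), 4),
          round(d_leaked_d_noise(v, [s] + others, 0), 4))
```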

Proof of Proposition 1

We first argue that, for any , the equilibrium price of platform must be such that the buyer finds it optimal to purchase from this platform in the corresponding buyer equilibrium. To see this, notice that if the buyer does not purchase from platform , the platform’s utility from interacting with the buyer becomes . Now, if the platform deviates and selects the price

then the buyer always finds it optimal to purchase from this platform, and therefore, the platform’s utility weakly increases from . This is because for any , we have

where (a) follows from Lemma 3.
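The deviation in this proof prices a platform's data at its marginal contribution given everything else the buyer could buy; submodularity (Lemma 3) then guarantees that this price never exceeds the buyer's gain from adding the platform to any subset of the others. The sketch below checks that consequence under two assumptions of ours: the scalar model $x \sim \mathcal{N}(0, v)$, $y_j = x + z_j$ with $z_j \sim \mathcal{N}(0, \sigma_j^2)$, and a buyer whose value for data equals the leaked information (the paper's buyer utility may include a different valuation). The helpers `deviation_price` and `buyer_always_buys` are hypothetical.

```python
from fractions import Fraction
from itertools import combinations

def leaked_information(v, noise_vars):
    """v - Var(x | y) under the assumed model x ~ N(0, v), y_j = x + z_j."""
    precision = 1 / Fraction(v) + sum((1 / Fraction(s) for s in noise_vars), Fraction(0))
    return Fraction(v) - 1 / precision

def deviation_price(v, noise_vars, j):
    """Price equal to platform j's marginal leaked information given all other platforms."""
    others = [s for k, s in enumerate(noise_vars) if k != j]
    return leaked_information(v, noise_vars) - leaked_information(v, others)

def buyer_always_buys(v, noise_vars, j):
    """True if, for every subset S of the other platforms, adding j to S is worth at least the price."""
    price = deviation_price(v, noise_vars, j)
    others = [k for k in range(len(noise_vars)) if k != j]
    for size in range(len(others) + 1):
        for subset in combinations(others, size):
            base = [noise_vars[k] for k in subset]
            gain = leaked_information(v, base + [noise_vars[j]]) - leaked_information(v, base)
            if gain < price:
                return False
    return True

print(deviation_price(1, [1, 2], 0))  # 4/15
print(all(buyer_always_buys(1, [1, 2, 3], j) for j in range(3)))  # True
```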

Proof of Lemma 5

This lemma follows directly from the submodularity of the leaked information in the actions, established in Lemma 3.

Proof of Proposition 2

Suppose, to the contrary, that there exists a platform equilibrium strategy for which , and . We argue that platform has a profitable deviation. In particular, notice that the current utility of platform is , as the buyer will not pay any nonzero price for this platform’s data. Now, if platform chooses , and , then in any we must have , and therefore platform ’s utility increases to .

Proof of Lemma 6

Recall that we argued that the equilibrium, if it exists, belongs to . Furthermore, we stated that we only need to check the deviations shown in Figure 2(a). To make sure such a deviation does not happen for some equilibrium , we must have

(15)

Notice that is on the boundary of and which implies that

(16)

and thus, . Using this, we can rewrite the condition (15) as

(17)

Notice that we can similarly consider the deviation of the second platform by increasing its noise variance to in Figure 2(a). To ensure this deviation is also not profitable, we should have

(18)

We next establish the necessary and sufficient condition for (17) and (18) to hold for some .

Notice that if we move up on the boundary , and both would increase, and hence, and both would decrease. Therefore, if (17) holds for some , then it also holds for any point above it on the boundary. Similarly, if (18) holds for some , then it also holds for any point below it on the boundary. Hence, if a point satisfies both conditions, then for any other point on the boundary , at least one of the conditions holds.

Next, we show that, for the point given by (10), both conditions hold if and neither holds if . Given our discussion above, this shows that for , there is no point at which both conditions hold, which completes the proof.

Now, let us first see why the point given by (10) is actually on the boundary . Consider point in Figure 3. For this point, we have

Hence, the line

(19)

passes through point , and therefore, it intersects with the boundary . Let us call this intersection (see Figure 3) and denote it by . Using (16) along with the fact that is on the line (19), we can derive that

(20)

Now, let us consider the deviation to which is on the boundary of and , i.e.,

(21)

Let

(22)

Plugging (20) and (22) into (21) and simplifying the equation yields

(23)

Next we argue that we should pick the smaller (first) solution. Notice that since , we have . In addition, Assumption 2, or equivalently (9), implies . In this regime, we have . Thus, we should pick the smaller solution above.

Now, we check the condition (17). Notice that we need to find such that

(24)

We can verify that the right-hand side minus the left-hand side is equal to zero at and positive beyond it. Notice that , and therefore condition (24) is symmetric with respect to both platforms; consequently, considering the other deviation to leads to exactly the same condition on . This completes the proof of our claim that both conditions hold if and neither holds if .

Proof of Proposition 3

Suppose an equilibrium exists in which both platforms enter the market. As we argued in Section 4, in this case . Next, notice that for any , the platform ’s utility should be nonnegative. Therefore, we have

(25)

Notice that, since , we have . Also, the minimum of corresponds to point for and point for in Figure 2(b) and is equal to . This implies the lower bound on .

Now, suppose . We claim the point , defined in the proof of Lemma 6, can be an equilibrium. We just need to check when the platform’s utility is nonnegative. In particular, the first platform’s utility at is given by

(26)

which is nonnegative for . We can similarly write the second platform’s utility at the point .

Proof of Theorem 1

We make use of the following lemmas in this proof.

Lemma 7.

For any , the noise level equilibrium strategy, if it exists, belongs to the boundary of the set .

Proof of Lemma 7: Using Proposition 2, we know that the equilibrium noise variance belongs to . We next argue that it cannot belong to the interior of . To see this, suppose, to the contrary, that is an equilibrium. There exists such that by locally decreasing , we remain in the set . We claim that platform has an incentive to decrease its noise variance. First, note that by decreasing its noise variance, we remain in the set and the user still finds it optimal to share with platform . Moreover, the payment the platform receives from the buyer, which is given by

increases by Lemma 4. Hence, this deviation is profitable, which is a contradiction.

Lemma 8.

For any , the noise level equilibrium strategy, if it exists, belongs to .

Proof of Lemma 8: Using Lemma 7, we know that the equilibrium noise level, if it exists, belongs to the boundary of and for some . We argue that it cannot belong to when . Suppose, to the contrary, that is an equilibrium where and also . We claim that platform has an incentive to decrease its noise level. In particular, we argue that by decreasing its noise level, we go to and that platform ’s utility increases. Notice that it suffices to show that by decreasing , we remain in the set . This is because, by Lemma 4, the payment of platform increases, while the users keep sharing with platform . For , the indifference condition for the user implies

which can be written as

Now if , then by decreasing , the left-hand side does not change, and the right-hand side decreases (by Lemma 4). Therefore, by decreasing , we remain in the set .

For a given with , platform finds it optimal to enter the market if

Therefore, by letting

all platforms enter the market. We next argue that this term is finite. Note that, for any given value of , the fact that implies that the term in the denominator is equal to

As a result, we can rewrite as

Notice that, for any given , the denominator is positive because the amount of leaked information strictly decreases when one fewer user shares information. Finally, is finite because the outer maximum is taken over finitely many elements.

Similarly, for a given with , platform finds it optimal not to enter the market if

Therefore, by letting

all platforms enter the market.

We next prove that, given a set of platforms that have entered the market, an equilibrium exists for large enough . Without loss of generality, let us assume all platforms have entered the market. is an equilibrium noise level if no platform has a profitable deviation. Let us consider platform 1. For this platform, the possible deviations are to where and can be any vector in . We next argue that for which

is a noise level equilibrium for large enough . Similar to the analysis with platforms, we let

for any denote the leaked information about when the data of the user is shared with the buyer through platforms in the set and platform is using noise variance .

Before proceeding with the proof, we first find a closed-form expression for the leaked information.

Lemma 9.

- Suppose for all . The leaked information about when the data of all platforms is shared with the buyer becomes
- Suppose for all and . The leaked information about when the data of all platforms is shared with the buyer becomes

Proof of Lemma 9: Using Lemma 1, the leaked information is

where in (a), we used and in (b), we used the Sherman–Morrison formula for the inverse of a rank-one perturbation of a matrix. This completes the proof of the first part. The second part follows from a similar argument.
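To see how Sherman–Morrison yields a closed form, consider an assumed scalar model (for illustration only; the paper's exact expressions may differ): $x \sim \mathcal{N}(0, v)$ and platform $j$ sells $y_j = x + z_j$ with independent $z_j \sim \mathcal{N}(0, \sigma_j^2)$. The purchased data then has covariance $D + v\mathbf{1}\mathbf{1}^\top$ with $D = \mathrm{diag}(\sigma_j^2)$, a rank-one perturbation of a diagonal matrix, and the Schur complement gives leaked information $v^2\, \mathbf{1}^\top (D + v\mathbf{1}\mathbf{1}^\top)^{-1} \mathbf{1}$. Sherman–Morrison reduces $\mathbf{1}^\top (D + v\mathbf{1}\mathbf{1}^\top)^{-1} \mathbf{1}$ to $s/(1 + v s)$ with $s = \sum_j 1/\sigma_j^2$. The sketch compares a direct exact linear solve with this closed form; `solve`, `leaked_direct`, and `leaked_sherman_morrison` are hypothetical names.

```python
from fractions import Fraction

def solve(a, b):
    """Solve a x = b by Gauss-Jordan elimination in exact rational arithmetic."""
    n = len(a)
    m = [[Fraction(x) for x in row] + [Fraction(bi)] for row, bi in zip(a, b)]
    for col in range(n):
        # Any nonzero pivot works exactly; the covariance matrices here are positive definite.
        pivot = next(r for r in range(col, n) if m[r][col] != 0)
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        m[col] = [x / p for x in m[col]]
        for r in range(n):
            if r != col:
                f = m[r][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [row[n] for row in m]

def leaked_direct(v, noise_vars):
    """v^2 * 1^T (D + v 1 1^T)^{-1} 1, i.e. v - Var(x | y) via the Schur complement."""
    k = len(noise_vars)
    cov_y = [[Fraction(v) + (Fraction(noise_vars[i]) if i == j else 0) for j in range(k)]
             for i in range(k)]
    w = solve(cov_y, [Fraction(1)] * k)  # w = Cov(y)^{-1} 1
    return Fraction(v) ** 2 * sum(w)

def leaked_sherman_morrison(v, noise_vars):
    """Closed form: with s = sum_j 1/sigma_j^2, 1^T (D + v 1 1^T)^{-1} 1 = s / (1 + v s)."""
    s = sum((1 / Fraction(x) for x in noise_vars), Fraction(0))
    return Fraction(v) ** 2 * s / (1 + v * s)

print(leaked_direct(1, [1, 2]), leaked_sherman_morrison(1, [1, 2]))  # 3/5 3/5
```

With equal noise variances $\sigma^2$ across $k$ platforms, the same formula gives $v^2 k / (\sigma^2 + v k)$ in this assumed model.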

Let us consider the deviation of platform 1 such that the corresponding user equilibrium is with having ones and zeros. If such a deviation cannot be achieved by increasing , then it is not a profitable deviation for platform 1; we now verify that even if it can be achieved, it is not profitable for platform 1. Given that , the user is indifferent between sharing with all platforms and sharing with none of them. Therefore, we have

which gives us

Using Lemma 9, the above equality gives us

Given the above deviation to , the user indifference condition gives us

Substituting the closed-form expressions of Lemma 9 in the above equality gives us

where .

Now, for this deviation not to be profitable, we must have

Again, using the closed-form expressions of Lemma 9 and writing and in terms of , the above inequality becomes equivalent to

which we prove next. We can write

where (a) follows by noting that the last term is increasing in and (b) holds for

Notice that for any other set of platforms that have entered the market, the above bound on guarantees the existence of a platform equilibrium strategy as . This completes the proof.

Proof of Proposition 6

The user utility from sharing if the platform enters the market is

The above utility is always positive if . Therefore, the user always shares their data. This also implies that the platform chooses to maximize the payment received from the buyer. The platform’s utility then becomes

Therefore, the platform enters the market if and only if the above quantity is positive.