On the Problem of Reformulating Systems with Uncertain Dynamics as a Stochastic Differential Equation

Abstract

We identify an issue in recent approaches to learning-based control that reformulate systems with uncertain dynamics using a stochastic differential equation. Specifically, we discuss the approximation that replaces a model with fixed but uncertain parameters (a source of epistemic uncertainty) with a model subject to external disturbances modeled as a Brownian motion (corresponding to aleatoric uncertainty).

I Problem Formulation and Error in Literature

Consider a nonlinear system whose state at time is , control inputs are , such that

| (1) |

where , almost surely, i.e., is known exactly, and is twice continuously differentiable.

In many applications, is not known exactly, and prior knowledge is necessary to safely control (1). One such approach consists of assuming that lies in a known space of functions , and to impose a prior distribution in this space . For instance, by assuming that lies in a bounded reproducing kernel Hilbert space (RKHS), a common approach consists of imposing a Gaussian process prior on the uncertain dynamics , where is the mean function, and is a symmetric positive definite covariance kernel function which uniquely defines [1, 2]. An alternative consists of assuming that , where are known basis functions, and are unknown parameters. With this approach, one typically sets a prior distribution on , e.g., a Gaussian , and updates this belief as additional data about the system is gathered.

Given these model assumptions and prior knowledge about , safe learning-based control algorithms often consist of designing a control law satisfying different specifications, e.g., minimizing fuel consumption , or satisfying constraints , with a set encoding safety and physical constraints.

Next, we describe an issue with the mathematical formulation of the safe learning-based control problem that has appeared in recent research [3, 4, 5, 6], slightly changing notations and assuming a finite-dimensional combination of features for clarity of exposition but without loss of generality. As in [5], consider the problem of safely controlling the uncertain system

| (2) |

where , , , and is positive definite, with its Cholesky decomposition. Note that this formulation can be equivalently expressed in function space, where is drawn from a Gaussian process with mean function and kernel . Our representation can be seen as a weight-space treatment of the GP approaches used in [3] and [4].111For a squared exponential kernel, one needs for this equivalence, see [1] for more details. Nevertheless, the issue discussed in this paper remains valid for such kernels.

These works then proceed by introducing the Brownian motion , making the change of variable , and reformulating (2) as a stochastic differential equation (SDE)

| (3) |

with and . Unfortunately, (3) is not equivalent to (2). Indeed, the solution to (3) is a Markov process, whereas the solution to (2) is not. Intuitively, the increments of the Brownian motion in (3) are independent, whereas in (2), is randomized only once, and the uncertainty in its realization is propagated along the entire trajectory. By making this change of variables for , the temporal correlation between the trajectory and the uncertain parameters is neglected. In the next section, we provide a few examples to illustrate the distinction between these two cases. The first demonstrates the heart of the issue on a simple autonomous system, whereas the second shows that analyzing the SDE reformulation (3) is insufficient to deduce the closed-loop stability of the system in (2).

II Counter-Examples

II-A Uncontrolled system

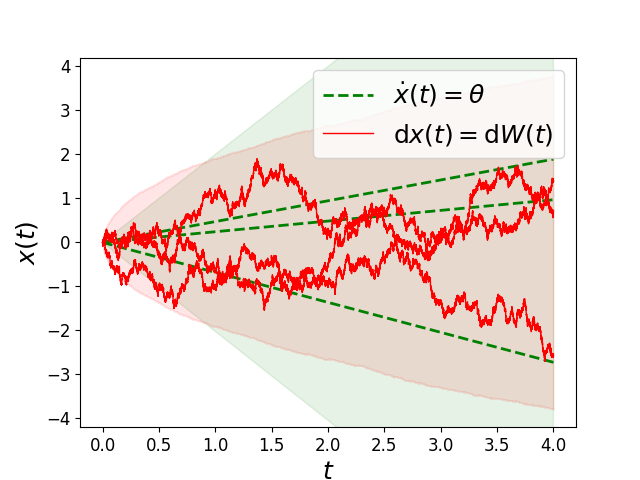

Consider the scalar continuous-time linear system

| (4) |

where and almost surely, i.e., is known exactly. The solution to (4) satisfies , i.e. each sample path is a linear (continuously differentiable) function of time . The marginal distribution of this stochastic process is Gaussian at any time , with . The increments of this process are not independent, since the increment depends on for any .

Using the change of variables described previously, one might consider substituting for , where is a standard Brownian motion, yielding the following SDE

| (5) |

The solution of this SDE is a standard Brownian motion started at . This stochastic process has different marginal distributions , has independent increments, and is not differentiable at any almost surely. We illustrate sample paths of these two different stochastic processes in Figure 1.

II-B System with linear feedback

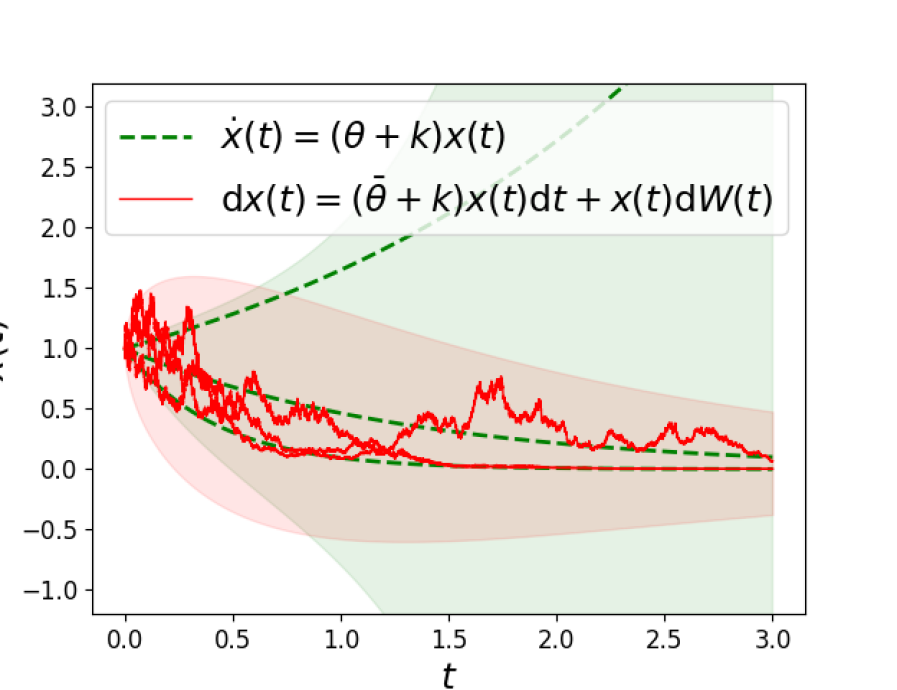

Starting from , consider the controlled linear system

| (6) |

where is a feedback gain and is the state-feedback control policy. Solutions to (6) take the form . Choosing the gain and simulating from , one obtains the sample paths shown in Figure 2. We observe that some sampled trajectories are unstable, corresponding to samples of such that .

Note that the substitution yields the SDE

| (7) |

The solution of this SDE is a geometric Brownian motion . Choosing the same control gain and plotting sample paths in Figure 2, we observe that the system (7) is stochastically stable.

II-C Discrete-time system

The observation we make in this note is well-known in the discrete-time problem setting. For example, starting from , the linear system with multiplicative uncertainty

| (8) |

is different from the system with additive disturbances

| (9) |

where the disturbances are independent and identically distributed. We refer to [7, Chapter 4.7] for further discussions about this topic. We also refer to [8] for a recent analysis of systems of the form of (8) where the parameters are resampled at each time .

III Implications and Possible Solutions

As (2) and (3) are generally not equivalent, the stability and constraint satisfaction guarantees derived for the SDE (3) in recent research [3, 4, 5, 6] do not necessarily hold for the system (2).222For instance, the generator of the Markov process solving the SDE (3) (see [9]) is used to prove stability in [3, 4, 5, 6]. Unfortunately, (2) does not yield a Markov process. Thus, it would be necessary to adapt the concept of generator to solutions of (2) before concluding the stability of the original system. This could yield undesired behaviors when applying such algorithms, developed on an SDE formulation of dynamics (3), to safety-critical systems where uncertainty is better modeled by (2), i.e., dynamical systems with uncertain parameters that are not changing over time.

Although (3) is not equivalent to (2), it is interesting to ask whether (3) is a conservative reformulation of (2) for the purpose of safe control. For instance, given a safe set , if one opts to encode safety constraints through joint chance constraints of the form

| (10) |

where is a tolerable probability of failure, there may be settings where a controller satisfying (10) for the SDE (3) may provably satisfy (10) for the uncertain model (2). Indeed, as solutions of (3) may have unbounded total variation (as in the example presented above), which is not the case for solutions of (2), we make the conjecture that for long horizons , a standard proportional-derivative-integral (PID) controller may better stabilize (2) than (3), and that similar properties hold for adaptive controllers.

Alternatively, approaches which bound the model error through the Bayesian posterior predictive variance [10] or confidence sets holding jointly over time [11, 12] exist. Given these probabilistic bounds, a policy can be synthesized yielding constraints satisfaction guarantees.

References

- [1] C. K. Williams and C. E. Rasmussen, Gaussian processes for machine learning. MIT press, 2006.

- [2] M. A. Álvarez, L. Rosasco, and N. D. Lawrence, “Kernels for vector-valued functions: A review,” Foundations and Trends in Machine Learning, vol. 4, no. 3, pp. 195–266, 2012.

- [3] G. Chowdhary, H. A. Kingravi, J. P. How, and P. A. Vela, “Bayesian nonparametric adaptive control using Gaussian processes,” IEEE Transactions on Neural Networks, vol. 26, no. 3, pp. 537–550, 2015.

- [4] D. D. Fan, J. Nguyen, R. Thakker, N. Alatur, A. Agha-mohammadi, and E. A. Theodorou, “Bayesian learning-based adaptive control for safety critical systems,” in Proc. IEEE Conf. on Robotics and Automation, 2020.

- [5] Y. K. Nakka, A. Liu, G. Shi, A. Anandkumar, Y. Yue, and S. J. Chung, “Chance-constrained trajectory optimization for safe exploration and learning of nonlinear systems uncertainties,” IEEE Robotics and Automation Letters, vol. 1, no. 1, pp. 1–9, 2020.

- [6] G. Joshi and G. Chowdhary, “Stochastic deep model reference adaptive control,” in Proc. IEEE Conf. on Decision and Control, 2021.

- [7] A. McHutchon, “Nonlinear modelling and control using gaussian processes,” Ph.D. dissertation, University of Cambridge, 2014.

- [8] R. R. Smith and B. Bamieh, “Median, mean, and variance stability of a process under temporally correlated stochastic feedback,” IEEE Control Systems Letters, vol. 5, no. 3, 2021.

- [9] J. F. Le Gall, Brownian Motion, Martingales, and Stochastic Calculus. Springer, 2016.

- [10] M. J. Khojasteh, V. Dhiman, M. Franceschetti, and N. Atanasov, “Probabilistic safety constraints for learned high relative degree system dynamics,” in 2nd Annual Conference on Learning for Dynamics & Control, 2020.

- [11] T. Koller, F. Berkenkamp, M. Turchetta, and A. Krause, “Learning-based model predictive control for safe exploration,” in Proc. IEEE Conf. on Decision and Control, 2018.

- [12] T. Lew, A. Sharma, J. Harrison, A. Bylard, and M. Pavone, “Safe active dynamics learning and control: A sequential exploration-exploitation framework,” 2021, available at https://arxiv.org/abs/2008.11700.