On the lower expected star discrepancy for jittered sampling than simple random sampling

Abstract.

We compare expected star discrepancy under jittered sampling with simple random sampling, and the strong partition principle for the star discrepancy is proved.

Key words and phrases:

Expected star discrepancy; Stratified sampling.2010 Mathematics Subject Classification:

65C10, 11K38, 65D30.1. Introduction

Classical jittered sampling (JS) acquires better expected star discrepancy bounds than using traditional Monte Carlo (MC) sampling, see [9]. This means jittered sampling is the refinement of the traditional Monte Carlo method, the problem now is that is there a direct comparison of star discrepancy itself, not a bound comparison. This involves the strong partition principle for the star discrepancy version, see an introduction in [13]. Among various techniques for measuring the irregularities of point distribution, star discrepancy is the most efficient, which is well established and has found applications in various areas such as computer graphics, machining learning, numerical integration, and financial engineering, see [15, 1, 7, 10].

Star discrepancy. The star discrepancy of a sampling set is defined by:

| (1.1) |

where denotes the -dimensional Lebesgue measure and denotes the characteristic function defined on the -dimensional rectangle .

The special deterministic point set constructions are called low discrepancy point sets, the best known asymptotic upper bounds for star discrepancy are of the form

where for fixed . Studies on such point sets, see [14, 6]. We introduce random factors in the present paper. Formers have conducted extensive research on the comparisons between expected discrepancy. In [13], it is proved that jittered sampling constitutes a set whose expected discrepancy is smaller than that of purely random points. Further, a theoretical conclusion that the jittered sampling does not have the minimal expected discrepancy is presented in [12]. We study expected star discrepancy under different partition models, which are the following,

| (1.2) |

where denotes a simple random sampling set, denotes a stratified sampling point set that is uniformly distributed in the grid-based stratified subsets. Since 2016 in [16], F. Pausinger and S. Steinerberger have given the upper and lower bounds for expected star discrepancy under jittered sampling, and they proved the strong partition principle for discrepancy, they mentioned whether this conclusion could be generalized to star discrepancy. Then, in 2021 [12], M. Kiderlen and F. Pausinger referred again to the proof of the strong partition principle for the star discrepancy.

The rest of this paper is organized as follows. Section 2 presents preliminaries on stratified sampling and covers. Section 3 presents our main results, which provide comparisons of the expected star discrepancy for simple random sampling and stratified sampling under grid-based equivolume partition. Section 4 includes the proofs of the main result. Finally, in section 5 we conclude the paper with a short summary.

2. Preliminaries on stratified sampling and covers

Before introducing the main result, we list the preliminaries used in this paper.

2.1. Jittered sampling

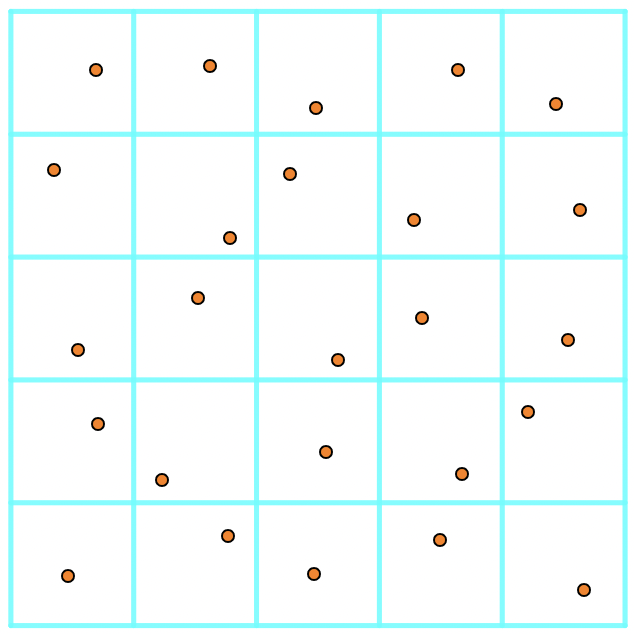

Jittered sampling is a type of grid-based equivolume partition. is divided into axis parallel boxes each with sides see illustration of Figure 1. Research on the jittered sampling are extensive, see [4, 9, 12, 13, 16].

For jittered sampling, we consider a rectangle (we shall call it the test set in the following) in anchored at . For the corresponding isometric grid partition of , if we put

and

which means the cardinality of the index set . Then for , easy to show that

| (2.1) |

2.2. covers

Secondly, to discretize the star discrepancy, we use the definition of covers as in [8], which is well known in empirical process theory, see, e.g., [17].

Definition 2.1.

For any , a finite set of points in is called a -cover of , if for every , there exist such that and . The number denotes the smallest cardinality of a -cover of .

From [8, 11], combining with Stirling’s formula, the following estimation for holds, that is, for any and ,

| (2.2) |

Let and be covers, then

where

| (2.3) |

Formula (2.3) provides convenience for estimating the star discrepancy.

3. Expected star discrepancy for stratified and simple random sampling

In this section, a comparison of expected star discrepancy for jittered sampling and simple random sampling is obtained, which means the strong partition principle for star discrepancy holds.

3.1. Comparison of expected star discrepancy under jittered sampling and simple random sampling

Theorem 3.1.

Let with . Let , is a simple random sampling set. Stratified random dimension point set is uniformly distributed in the grid-based stratified subsets of , then

| (3.1) |

Remark 3.2.

4. Proofs

In this section, we present the proof of Theorem 3.1. We first introduce Bernstein’s inequality, which is a standard result that can be found in textbooks on probability, see, e.g., [5] and we shall omit the proof here.

Lemma 4.1 (Bernstein’s inequality).

Let be independent random variables with expected values and variances for . Assume (C is a constant) for each and set , then for any ,

4.1. Proof of Theorem 3.1

For the arbitrary test set , we unify a label for the sampling point sets formed by different sampling models, and we consider the following discrepancy function,

| (4.1) |

For the grid-based equivolume partition , we divide the test set into two parts, one is the disjoint union of entirely contained by and another is the union of remaining pieces which are the intersections of some and , i.e.,

| (4.2) |

where are two index sets.

We set

Besides, for an equivolume partition of and the corresponding stratified sampling set , we have

| (4.3) | ||||

For sampling sets and , we have

| (4.4) |

and

| (4.5) |

Hence, we have

| (4.6) |

Now, we exclude the equality case in (4.6), which means the following formula holds,

| (4.7) |

for and for almost all .

Hence,

| (4.8) |

for almost all and all , which implies , which is not impossible for

For set , we have

| (4.9) |

The same analysis for set as , we have

| (4.10) |

For test set , we choose and such that and , then (,) constitute the covers. For and , we can divide them into two parts as we did for (4.2) respectively. Let

and

We have the same conclusion for and . In order to unify the two cases and (Because and are generated from two test sets with the same cardinality, and the cardinality is the covering numbers), we consider a set which can be divided into two parts

| (4.11) |

where are two index sets. Moreover, we set the cardinality of at most (the covering numbers, where we choose ), and we let

We define new random variables , as follows

Then,

| (4.12) | ||||

Since

we get

| (4.13) |

| (4.14) |

Let

Therefore, from Lemma 4.1, for every , we have,

Let then using covering numbers, we have

| (4.15) |

Combining with (4.14), we get

| (4.16) | ||||

For point sets and , if we let

Then (4.10) implies

Besides, as (4.16), we have

| (4.17) | ||||

and

respectively.

Suppose , and we choose

in (4.17), then we have

| (4.18) |

Hence, from (4.18), it can easily be verified

| (4.19) |

holds with probability at least .

From (2.3), combining with covering numbers(where ), we get,

| (4.20) | ||||

holds with probability at least , the last inequality in (4.20) holds because holds for all .

Same analysis with point set , we have

| (4.21) | ||||

holds with probability at least .

Now, we fix a probability value in (4.20), i.e., we suppose (4.20) holds with probability exactly , where . Choose this in (4.21), we have

holds with probability

Therefore from , we obtain,

| (4.22) |

holds with probability where .

We use the following fact to estimate the expected star discrepancy

| (4.23) |

where denotes the star discrepancy of point set .

Plugging into (4.20), we have

| (4.24) |

holds with probability . Then (4.24) is equivalent to

Now releasing and let

| (4.25) |

| (4.26) |

and

| (4.27) |

Then

| (4.28) |

Thus from (4.23) and , we have

| (4.29) | ||||

Furthermore, from (4.22), we have

From we obtain

Hence,

| (4.30) |

5. Conclusion

We study expected star discrepancy under jittered sampling. A strong partition principle for the star discrepancy is presented. The next most direct task is to find the expected star discrepancy better than jittered sampling, which may be closely related to the variance of the characteristic function defined on the convex test sets.

References

- [1] H. Avron, V. Sindhwani, J. Yang and M. W. Mahoney, Quasi-Monte Carlo feature maps for shift-invariant kernels, J. Mach. Learn. Res., 17(2016), 1–38.

- [2] J. Beck, Some upper bounds in the theory of irregularities of distribution, Acta Arith., 43(1984), 115-130.

- [3] J. Beck, Irregularities of distribution. I, Acta Math., 159(1987), 1-49.

- [4] K. Chiu, P. Shirley and C. Wang, Multi-jittered sampling, Graphics Gems, 4(1994), 370-374.

- [5] F. Cucker and D. X. Zhou, Learning theory: an approximation theory viewpoint, Cambridge University Press., 2007.

- [6] J. Dick and F. Pillichshammer, Digital Nets and Sequences. Discrepancy Theory and quasi-Monte Carlo Integration, Cambridge University Press., 2010.

- [7] J. Dick, F. Y. Kuo and I. H. Sloan, High-dimensional integration:the quasi-Monte Carlo way, Acta Numer., 22(2013), 133–288.

- [8] B. Doerr, M. Gnewuch and A. Srivastav, Bounds and constructions for the star-discrepancy via covers, J. Complexity, 21(2005), 691-709.

- [9] B. Doerr, A sharp discrepancy bound for jittered sampling, Math. Comp.(2022), http://doi.org/10.1090/mcom/3727.

- [10] P. Glasserman, Monte Carlo Methods in Financial Engineering. Springer-Verlag, New York, 2004.

- [11] M. Gnewuch, Bracketing numbers for axis-parallel boxes and applications to geometric discrepancy, J. Complexity, 24(2008), 154-172.

- [12] M. Kiderlen and F. Pausinger, On a partition with a lower expected -discrepancy than classical jittered sampling, J. Complexity (2021), https://doi.org/10.1016/j.jco.2021.101616.

- [13] M. Kiderlen and F. Pausinger, Discrepancy of stratified samples from partitions of the unit cube, Monatsh. Math., 195(2021), 267-306.

- [14] H. Niederreiter, Random number generation and Quasi-Monte Carlo methods, SIAM, Philadelphia, 1992.

- [15] A. B. Owen, Quasi-monte carlo sampling. In Monte Carlo Ray Tracing: ACM SIGGRAPH 2003 Course Notes 44(2003), 69–88.

- [16] F. Pausinger and S. Steinerberger, On the discrepancy of jittered sampling, J. Complexity, 33(2016), 199-216.

- [17] A. W. van der Vaart and J. A. Wellner, Weak convergence and empirical processes, Springer Series in Statistics, Springer-Verlag, New York, With applications to statistics, 1996.