On the effect of normalization layers on Differentially Private training of deep Neural networks

Abstract

Differentially private stochastic gradient descent (DPSGD) is a variation of stochastic gradient descent based on the Differential Privacy (DP) paradigm which can mitigate privacy threats that arise from the presence of sensitive information in training data. One major drawback of training deep neural networks with DPSGD is a reduction in the model’s accuracy. In this paper, we study the effect of normalization layers on the performance of DPSGD. We demonstrate that normalization layers have a large beneficial impact on the utility of deep neural networks with noisy parameters and should be considered essential ingredients of training with DPSGD. In particular, we propose a novel method for integrating batch normalization with DPSGD without incurring an additional privacy loss. With our method, we are able to train deeper networks and achieve a better utility-privacy trade-off.

1 Introduction

Training deep neural networks typically requires large and representative data collections to achieve high performance. However, depending on the application domain, some datasets may contain sensitive information such as medical records of patients or personal financial data. This has motivated the development of dedicated training methods in order to address privacy concerns (see e. g. [30]).

Differential Privacy (DP) [6] provides a concrete cryptography-inspired notion of privacy. In practice, DP algorithms are obtained from non-private algorithms by means of appropriate randomization [8]. Differential Privacy has been integrated into deep learning [25, 1] where privacy issues can arise when the trained model permits the reconstruction of sensitive information that exists in the training data. The proposed method in [1] is based on clipping gradients and adding random noise to them in each iteration of stochastic gradient descent (SGD). Combined with a moments accountant method for tracing the privacy loss, this differentially private SGD (DPSGD) technique has enabled deep neural networks to be trained under a modest privacy budget at the cost of a manageable reduction in the model’s test accuracy. However, for low privacy budgets (i. e. a small , see Section 3), which corresponds to a large privacy guarantee, this accuracy drops significantly under DPSGD.

The central role of input noise in the application of DPSGD deserves a more detailed analysis. While adding a small amount of noise during training can benefit the generalization capability of the model, too much noise leads to inferior performance because of the high sensitivity of the output to perturbation in the parameters. In spite of this, we show that neural networks augmented with batch/layer normalization layers are strongly robust against random noise injection in their weights.

In this work, we investigate the impact of batch normalization [11] and layer normalization [2] on the performance of training under privacy constraints. Normalization layers such as batch normalization [11] and layer normalization [2] are indispensable components of nearly all state-of-the-art deep neural networks. These techniques are very essential to robustly train deep neural networks without carefully custom non-linearities [13] or choosing a specific initialization scheme [26]. They also improve generalization by preventing overfitting when training very deep networks [31].

One important property of normalization methods is the invariance of the model to weight matrix re-scaling [2]. We argue that this invariance suggests robustness against noise injection and confirm this hypothesis empirically. In particular, we show that batch-normalization can be integrated with DPSGD without any additional loss in privacy during the training process. We compare our proposal with the current state-of-the-art methods by conducting a series of experiments on Computer Vision and Natural Language Processing tasks. In summary, our contributions are as follows:

-

•

We demonstrate that normalization layers have a substantial impact on the performance of models with noisy parameters and should be considered essential ingredients in robust differentially private training.

-

•

We propose an efficient method for using batch normalization layers without incurring an additional privacy loss in the training procedure. To the best of our knowledge, our work is the first to apply a DP mechanism in the presence of batch normalization.

-

•

We establish new accuracy records for differentially private trained deep networks under DPSGD on the MNIST and CIFAR10 datasets.

The rest of the paper is organized as follows. We study the effect of noise on the performance of models in Section 2. Section 3 introduces our approach for differentially private training deep networks with batch normalization. We compare our method with the existing approaches in Section 4. Finally, in section 5, we discuss the related works on differential privacy.

2 Noise and normalization

In this section, we investigate how random Gaussian noise affects a network’s performance in the presence of normalization layers. More specifically, we sample the weights from a Gaussian distribution with learnable mean parameters and constant variance . Backpropagation is performed by making use of the standard reparametrization trick [24]. This way of training is very similar to variational Bayesian learning of neural networks [3], where weights are represented by probability distributions rather than having a fixed value. Unlike the Bayesian approach though, where the goal is learning the true posterior distribution of the weights given the training data, here the noise is introduced via an ad-hoc distribution function.

Batch normalization [11] and layer normalization [2] are introduced to speed up deep neural network training by regularizing neuron dynamics via mean and variance statistics and reducing variance in the input to each node. Normalization techniques in combination with other architecture innovations like residual connections [10] make training of very deep networks feasible.

Both batch and layer normalization ensure zero mean and unit variance in the output of a layer but using different statistics. Batch normalization (BN) calculates the mean and variance statistics across samples in a mini-batch for each neuron independently, while layer normalization (LN) standardizes each summed input to a node utilizing the statistics over all hidden units.

More precisely, if we denote the weighted summed inputs to the -th layer by where is the activation in layer and is the weight matrix, then the normalization operators rescale and shift according to:

| (1) |

where and are learnable parameters which are set to one and zero respectively at the beginning of the training. The parameters and are estimated as follows for batch normalization:

| (2) |

and as follows for layer normalization:

| (3) |

where is the number of hidden units in the layer. The expectations are estimated using samples from the training mini-batches. In the case of batch normalization, at test time, these statistics are replaced with an exponential running average of corresponding mean and variance computed during the training phase.

In this work, we focus on the scale invariance property of normalization methods. It is well known that both batch and layer normalization are invariant under scaling of the weight matrix (matrices) with arbitrary [2]. In order to demonstrate the effect of this symmetry, consider a deep neural network which characterizes the relationship from input to output with trained parameters . The optimal weights are usually learnt by minimizing a non-convex objective function, , over the training dataset using a variant of stochastic gradient descent (SGD). The training procedure also includes tuning hyperparameters, like learning rate and number of epochs. This tuning is usually done by maximizing the performance of the network on a validation dataset. The training procedure always leaves some small uncertainty of order on the final values of weights. Consequently, the performance of the model with parameters and will be essentially identical. For example, we may change the number of iterations or the learning rate very slightly without harming the performance of the network.

We can make invariant with respect to scaling of the weight matrix by augmenting it with batch/layer normalization operators after each learnable layer, . The re-scaling invariance implies that if the weight uncertainty in the original network is of order then in the augmented network it can be of order without affecting the overall performance. This suggests that neural networks with batch/layer normalization layers should be robust against the noise in their weights. In the rest of this section, we empirically confirm this hypothesis by injecting noise into the weights of augmented networks during the training and testing procedure.

To test our hypothesis empirically, we train standard fully-connected as well as convolutional neural networks with noise injected into their weights on MNIST [16] and CIFAR-10 [14]. More specifically, we sample the weights from a Gaussian distribution with learnable mean parameters and constant variance . Backpropagation is performed by making use of the standard reparametrization trick [24].

In particular, we investigate thoroughly LeNet-300-100 and LeNet-5 [15] and variants of ResNet [10] and VGG [27] models. The structure of the models is outlined in Table 1. For each model, we construct a normalized augmented version by adding batch or layer normalization after each trainable layer. All models are implemented in PyTorch [21] and trained with the Adam optimizer [12].

| Network | LeNet-5 | ResNet-18 | VGG |

|---|---|---|---|

| Convolutions | 6, pool, 16, pool | 64, 2x[64, 64] 2x[128, 128] 2x[256, 256] 2x[512, 512] | 2x64 pool 2x128 pool, 4x256, pool |

| FC Layers | 120, 84, 10 | avg-pool, 10 | avg-pool, 512, 10 |

Table 2 shows the accuracy of the augmented models on the MNIST test dataset, averaged over ten runs against unnormalized baselines. The BN/LN prefixes in this table denote models that are obtained by adding batch normalization or layer normalization layers to the original architectures, respectively. It is evident from this experiment that all augmented models are tolerant to noise while the baselines are not. Indeed, their accuracy does not change at all for a large range of noise levels within the statistical error as promised by the scale invariance property of the networks. On the other hand, the baseline models are very sensitive to small weight perturbations. Notably, disturbing the weights of the baseline models by a small noise of order results in non-converging training.

| Model | Noise Level () | ||||

|---|---|---|---|---|---|

| 0 | 0.01 | 0.1 | 1 | 2 | |

| LeNet-300-100 | No-convergence | No-convergence | |||

| BN-LeNet-300-100 | |||||

| LN-LeNet-300-100 | |||||

| LeNet-5 | No-convergence | No-convergence | |||

| BN-LeNet-5 | |||||

| LN-LeNet-5 | |||||

| Model | Noise Level () | ||||

|---|---|---|---|---|---|

| 0 | 0.01 | 0.1 | 1 | 2 | |

| ResNet-18 | |||||

| ResNet-18-mod | |||||

| BN-ResNet-18 | |||||

| VGG-16 | No-convergence | ||||

| VGG-16-mod | No-convergence | ||||

| BN-VGG-16 | |||||

Experiments on CIFAR-10 with ResNet and VGG networks show similar trends (see Table 3). Unlike LeNet models, the original ResNet and VGG networks already contain BN layers after all except the last trainable layer. This leads to some degree of protection against noise as demonstrated in Table 3. For example, the accuracy of ResNet-18 with a noise level of reduces only to instead of to random prediction.

To illustrate the role of normalization layers further, we also present results on ResNet-18-mod and VGG-16-mod which are obtained by removing the last normalization layer from the original architectures. As shown by the performance degradation with increasing noise levels in Table 3, these models are more vulnerable to the added noise.

Next, we extended our experiments to a more complex task, viz. natural language text classification, where we observe a similar effect. For this, we trained a BiLSTM model with one linear/dense layer (DL) with/without layer normalization on the AG News Corpus111http://groups.di.unipi.it/~gulli/AG_corpus_of_news_articles.html, a popular text classification dataset with four categories of news: World, Sports, Business and Sci/Tech. Each class has 30,000 training examples and 1,900 test examples. In total, the dataset consists of 120,000 training examples and 7,600 test examples. We further split the training examples into training/validation set where we use 96,000 examples for training the models and 24,000 for validation (e.g early stopping and for tuning adaptive learning rate). We trained the models with different noise levels for 25 epochs. We find that the higher the noise level, the more epochs are needed to maintain the accuracy of the baseline model. Our results on the language data in Table 4 suggest that layer normalization makes the BiLSTM model robust to noise with minimal drops in accuracy (). Our findings make us conclude that the LSTM and CNN architectures are robust to noise when equipped with layer and batch normalization.

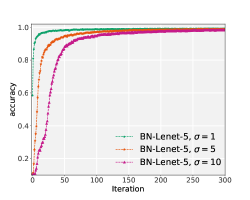

It is worth mentioning that achieving the same accuracy in the presence of noise does not come for free as it affects the training time: the larger the noise, the slower the training. Figure 1 illustrates the evolution of the accuracy of models on the validation set, for different levels of noise. As it is evident from these plots, models with different values of noise converge to the same accuracy, albeit with different rates. For example, increasing the noise level from 1 to 10 slows down training by a factor of order 7 for LeNet and ResNet models. More details on this can be found in Appendix 2.

| Model | Noise Level () | ||||

|---|---|---|---|---|---|

| 0 | 0.01 | 0.1 | 1 | 2 | |

| BiLSTM-DL | |||||

| LN-BiLSTM-DL | |||||

3 DPSGD and Normalization

Differential privacy (DP) is a systematic approach to quantifying a privacy guarantee while querying a dataset. quantifying the leakage of information due to query a dataset. DP protects privacy by bounding the influence of any sample on the outcome of queries. It provides a provable guarantee for individuals. We proceed by briefly recalling some preliminaries on differential privacy and then propose our approach for training deep neural networks in a differentially private way.

Let us denote the domain of data points by . We call two datasets neighboring if they differ are the same except one data point, i. e., , where is the Hamming distance.

Definition 3.1.

(Differential Privacy [7]). A randomized algorithm with domain and range is differential private if for all measurable sets and for all neighboring datasets and , it holds that

| (4) |

Intuitively a differential private mechanism guarantees that the absolute value of privacy leakage will be bounded by with probability at least for adjacent datasets. The higher the value of , the more the chance of data re-identification and so information leakage.

A standard approach for achieving differential privacy is to add some random noise to the output of queries, , and to tune the noise by the sensitivity of the query. sensitivity is defined as the maximum change in the outcome of a query for two neighboring datasets and measures the maximum influence that a single data point can have on the result of the query:

| (5) |

The special case where is calibrated with sensitivity and sampled according to the normal distribution is of special importance and is termed Gaussian mechanism:

| (6) |

Here is the normal distribution with mean zero and standard deviation . It can be shown that this mechanism satisfies differential privacy provided that [8].

Differential privacy has been integrated into deep learning in [25] and subsequently in [1] for the setting where an adversary has access to the network architecture and learned weights, . In particular, the method in [1] preserves privacy by adding noise to the SGD updates:

| (7) |

where is the averaged gradient, is the learning rate and is sampled from the Gaussian distribution . To control the influence of training samples on the parameters, the gradients are clipped by the -norm.

| (8) |

where is the gradient corresponding to the -th sample and is the clipping factor. It has been shown in [1] that each step of DPSGD is -differential private once we tune the noise as with .

It is known that batch normalization is not consistent with DP training. Indeed, in a non-private setting, one usually keeps track of the running averages of mean and variance statistics (Eq. 2) during the training procedure and reuses this collected information at test time to normalize the inputs to neurons. More specifically, the update rule for running averages at each iteration is as follows:

| (9) | |||

| (10) |

where (, ) are estimated mean and variance statistics, (, ) are new observed values on iteration of training according to Eq. 2 and is the momentum of moving averages.

Since these running averages are also a part of the model’s outputs, in a private training, we have to add noise also to these statistics at each iteration and distribute the privacy budgets among the weights and moving averages to make the overall procedure differentially private. Additionally, we need to truncate the summed inputs of neurons to bound the sensitivity of means and variances, which are given by [28]:

where is the clipping threshold for neurons activations and is the batch size. Empirically, we have found that if we tune the noise with the worst-case scenario according to the above sensitivities, the performance of the model drops drastically. Therefore we employ a more sophisticated approach to deal with batch normalization situation as shown in algorithm 1.

First of all, we do not track the running averages during the training phase and instead computed fresh statistics from the current batch are used to normalize the neurons. But to be able to deal with batch normalization at test time we concatenate a fixed amount of data points with size taken from a public dataset, disjoint from the training data, to the input of the network, both in the training and the test phase. These samples only contribute to the statistics and not to the cost function directly.

Therefore, in the training phase, the cost is computed via , where is a batch of size from the training data, and denotes the slice of the first elements. At test time, when iterating over the dataset, the same public data points are also concatenated to each test sample, , and the output of network is computed as . This leads to privacy preserving batch normalization and allows us to compute the normalization statistics over a batch of size without any reference to the training data.

In the next section, we present the impact of normalization layers on DP training using two image recognition benchmarks, i.e. MNIST and CIFAR-10, as well as text classification task in natural language processing using AG News Corpus.

4 Experiments

In this section, we report some results of applying our method and compare them with existing DP mechanisms. The purpose of these experiments is two-fold: we show that (1) similar to non-private training (section 2), normalization layers improve the performance of models trained with DPSGD; and (2) that training very deep networks is feasible with our private version of batch normalization.

All models, as well as DPSGD, have been implemented in PyTorch [20]. To track the privacy loss over the whole training procedure, we employ the Rényi-DP technique [17]. It provides a tighter bound on the privacy loss compared to the strong composition theorem [9]. We use the open-source implementation of the Rényi DP accountant from the TensorFlow Privacy package [29] 222https://github.com/tensorflow/privacy. The total privacy loss is computed as a function of the noise multiplier , size of dataset , size of lot , the number of iterations and .

Table 5 depicts the accuracy of LeNet-5 model on the MNIST test set for ranging from high to very low privacy budgets. We have trained LeNet-5 with and without normalization layers, using DPSGD. For training the model augmented with batch normalization, we employed 128 images of KMNIST [5] as the public dataset. The probability parameter is set to in all our experiments.

The results illustrate that the use of normalization layers consistently improves the performance of DPSGD for all finite values of privacy loss. Further, we observe that the effect of batch normalization is greater than that of layer normalization. Remarkably, we gain around and for very low privacy budgets of and with our private batch normalization technique.

We now turn our attention to the CIFAR-10 dataset. Table 6 summarizes the results of DPSGD on the TensorFlow tutorial model considered in [1]. We follow the same experimental setting as in [1], i.e. we fine tune the linear layers of a pretrained model trained on the CIFAR-100 dataset. For training the model with batch normalization we also use 128 images of CIFAR-100, which has completely different image examples and classes from those of CIFAR-10, as our public dataset.

As Table 6 illustrates, using batch normalization results in better accuracies than layer normalization as well as raw TensorFlow tutorial model. We also show the results of training a light weight VGG model in this table (See appendix A for the details of architecture). The non-private accuracy of this model is comparable with the TF-tutorial model but it leads to a much lower gap for finite privacy budgets. It should be mentioned that it is not feasible to train such models without making use of the batch normalization as the training is extremely unstable. Therefore our private friendly batch normalization technique allows training much more deeper and complex networks and establishing new scores for the performance of differentially private models.

Next, we extended our experiments to a more complex task, viz. natural language text classification, where we observe a similar effect. For this, we trained a BiLSTM model with one linear/dense layer (DL) with/without layer normalization (LN) on the AG News Corpus 333http://groups.di.unipi.it/~gulli/AG_corpus_of_news_articles.html, a popular text classification dataset with 4 categories of news: World, Sports, Business and Sci/Tech. Table 7 shows the result of our experiment on text classification. As before, layer normalization has a large impact on the performance of the model.

| DP Algorithm | privacy budget () | ||||||

|---|---|---|---|---|---|---|---|

| 7 | 3 | 1 | 0.5 | 0.1 | 0.05 | ||

| DPSGD (LeNet-5) | |||||||

| DPSGD (LN-LeNet-5) | |||||||

| DPSGD (BN-LeNet-5) | |||||||

| DP Algorithm | privacy budget () | |||

|---|---|---|---|---|

| DPSGD (TF-tutorial) [1] | ||||

| DPSGD (LN-TF-tutorial) | ||||

| DPSGD(BN-TF-tutorial) | ||||

| DPSGD (BN-VGG) | ||||

| DP Algorithm | privacy budget () | |||||

|---|---|---|---|---|---|---|

| 7 | 3 | 1 | 0.5 | 0.1 | ||

| DPSGD (BiLSTM-DL) | ||||||

| DPSGD (LN-BiLSTM-DL) | ||||||

5 Related Work

A number of different methods have been developed to preserve privacy in machine learning models. [25] proposed a distributed multiparty learning mechanism for a network without sharing input datasets, however, the obtained privacy guarantee was very loose. [1] developed an efficient differentially private SGD for training networks with a large number of parameters. Also, one may employ tighter bounds provided by the Rényi differential privacy [18] in conjunction with DPSGD. A method for adding less noise to the weights of neural networks is proposed in [23] by using adaptive clipping of the gradients. [19] shows that learning with DPSGD requires optimization of the model architectures and initializations. Perturbing the objective function as an alternative way to protect privacy has been suggested in [22]. A model based on the local differentially private mechanism is proposed in [4] to train deep convolutional networks in a way that a data owner can add a randomization layer before data leave data owners’ device. A comprehensive list of works in this area can be found in [32].

6 Conclusion

In this paper, we proposed a novel method for integrating batch normalization with differentially private stochastic gradient descent. Our method makes training very deep neural networks, such as ResNet and VGG, feasible under very strong privacy guarantees. We also have demonstrated that normalization layers are essential ingredients for a robust private training.

7 Acknowledgments

We would like to thank Marius Mosbach and Xiaoyu Shen for proof-reading and valuable comments. The presented research has been funded by the European Union’s Horizon 2020 research and innovation programme project COMPRISE (http://www.compriseh2020.eu/) under grant agreement No. 3081705.

References

- [1] M. Abadi, A. Chu, I. Goodfellow, H. B. McMahan, I. Mironov, K. Talwar, and L. Zhang. Deep learning with differential privacy. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, pages 308–318. ACM, 2016.

- [2] J. L. Ba, J. R. Kiros, and G. E. Hinton. Layer normalization. arXiv preprint arXiv:1607.06450, 2016.

- [3] C. Blundell, J. Cornebise, K. Kavukcuoglu, and D. Wierstra. Weight uncertainty in neural networks. arXiv preprint arXiv:1505.05424, 2015.

- [4] M. Chamikara, P. Bertok, I. Khalil, D. Liu, and S. Camtepe. Local differential privacy for deep learning. arXiv preprint arXiv:1908.02997, 2019.

- [5] T. Clanuwat, M. Bober-Irizar, A. Kitamoto, A. Lamb, K. Yamamoto, and D. Ha. Deep learning for classical japanese literature. CoRR, abs/1812.01718, 2018.

- [6] C. Dwork, K. Kenthapadi, F. McSherry, I. Mironov, and M. Naor. Our data, ourselves: Privacy via distributed noise generation. In Annual International Conference on the Theory and Applications of Cryptographic Techniques, pages 486–503. Springer, 2006.

- [7] C. Dwork, F. McSherry, K. Nissim, and A. Smith. Calibrating noise to sensitivity in private data analysis. In Theory of cryptography conference, pages 265–284. Springer, 2006.

- [8] C. Dwork, A. Roth, et al. The algorithmic foundations of differential privacy. Foundations and Trends® in Theoretical Computer Science, 9(3–4):211–407, 2014.

- [9] C. Dwork, G. N. Rothblum, and S. Vadhan. Boosting and differential privacy. In 2010 IEEE 51st Annual Symposium on Foundations of Computer Science, pages 51–60. IEEE, 2010.

- [10] K. He, X. Zhang, S. Ren, and J. Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- [11] S. Ioffe and C. Szegedy. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv preprint arXiv:1502.03167, 2015.

- [12] D. P. Kingma and J. Ba. Adam: A method for stochastic optimization. CoRR, abs/1412.6980, 2014.

- [13] G. Klambauer, T. Unterthiner, A. Mayr, and S. Hochreiter. Self-normalizing neural networks. In Advances in neural information processing systems, pages 971–980, 2017.

- [14] A. Krizhevsky. Learning multiple layers of features from tiny images. Technical report, CIFAR, 2009.

- [15] Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner. Gradient-based learning applied to document recognition. In Proceedings of the IEEE, pages 2278–2324, 1998.

- [16] Y. LeCun, L. Bottou, Y. Bengio, P. Haffner, et al. Gradient-based learning applied to document recognition. Proceedings of the IEEE, 86(11):2278–2324, 1998.

- [17] I. Mironov. Rényi differential privacy. In 2017 IEEE 30th Computer Security Foundations Symposium (CSF), pages 263–275. IEEE, 2017.

- [18] I. Mironov. Rényi differential privacy. In 2017 IEEE 30th Computer Security Foundations Symposium (CSF), pages 263–275, Aug 2017.

- [19] N. Papernot, S. Chien, S. Song, A. Thakurta, and U. Erlingsson. Making the shoe fit: Architectures, initializations, and tuning for learning with privacy, 2020.

- [20] A. Paszke, S. Gross, S. Chintala, G. Chanan, E. Yang, Z. DeVito, Z. Lin, A. Desmaison, L. Antiga, and A. Lerer. Automatic differentiation in pytorch. nips, 2017.

- [21] A. Paszke, S. Gross, F. Massa, A. Lerer, J. Bradbury, G. Chanan, T. Killeen, Z. Lin, N. Gimelshein, L. Antiga, A. Desmaison, A. Kopf, E. Yang, Z. DeVito, M. Raison, A. Tejani, S. Chilamkurthy, B. Steiner, L. Fang, J. Bai, and S. Chintala. Pytorch: An imperative style, high-performance deep learning library. In H. Wallach, H. Larochelle, A. Beygelzimer, F. d Alché-Buc, E. Fox, and R. Garnett, editors, Advances in Neural Information Processing Systems 32, pages 8024–8035. Curran Associates, Inc., 2019.

- [22] N. Phan, Y. Wang, X. Wu, and D. Dou. Differential privacy preservation for deep auto-encoders: an application of human behavior prediction. In Thirtieth AAAI Conference on Artificial Intelligence, 2016.

- [23] V. Pichapati, A. T. Suresh, F. X. Yu, S. J. Reddi, and S. Kumar. Adaclip: Adaptive clipping for private sgd, 2019.

- [24] J. Schulman, N. Heess, T. Weber, and P. Abbeel. Gradient estimation using stochastic computation graphs. In Advances in Neural Information Processing Systems, pages 3528–3536, 2015.

- [25] R. Shokri and V. Shmatikov. Privacy-preserving deep learning. In Proceedings of the 22nd ACM SIGSAC conference on computer and communications security, pages 1310–1321. ACM, 2015.

- [26] K. Simonyan and A. Zisserman. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556, 2014.

- [27] K. Simonyan and A. Zisserman. Very deep convolutional networks for large-scale image recognition, 2014.

- [28] M. Swanberg, I. Globus-Harris, I. Griffith, A. Ritz, A. Groce, and A. Bray. Improved differentially private analysis of variance. arXiv preprint arXiv:1903.00534, 2019.

- [29] https://github.com/tensorflow/privacy. Accessed: 2020-January.

- [30] Y. Yang, L. Wu, G. Yin, L. Li, and H. Zhao. A survey on security and privacy issues in internet-of-things. IEEE Internet of Things Journal, 4(5):1250–1258, 2017.

- [31] H. Zhang, Y. N. Dauphin, and T. Ma. Fixup initialization: Residual learning without normalization. arXiv preprint arXiv:1901.09321, 2019.

- [32] T. Zhu, G. Li, W. Zhou, and S. Y. Philip. Differential privacy and applications, volume 69. Springer, 2017.