On the Convergence Theory of Meta Reinforcement Learning with Personalized Policies

Abstract

Modern meta-reinforcement learning (Meta-RL) methods are mainly developed based on model-agnostic meta-learning, which performs policy gradient steps across tasks to maximize policy performance. However, the gradient conflict problem is still poorly understood in Meta-RL, which may lead to performance degradation when encountering distinct tasks. To tackle this challenge, this paper proposes a novel personalized Meta-RL (pMeta-RL) algorithm, which aggregates task-specific personalized policies to update a meta-policy used for all tasks, while maintaining personalized policies to maximize the average return of each task under the constraint of the meta-policy. We also provide a theoretical analysis under the tabular setting, which demonstrates the convergence of our pMeta-RL algorithm. Moreover, we extend the proposed pMeta-RL algorithm to a deep network version based on soft actor-critic, making it suitable for continuous control tasks. Experimental results show that the proposed algorithms outperform previous Meta-RL algorithms on the Gym and MuJoCo suites.

Index Terms:

Meta Reinforcement Learning, Personalized policies.

I Introduction

Reinforcement learning (RL) [1, 2] has long been an active research topic in artificial intelligence and has demonstrated extraordinary capabilities in many fields, such as playing games [3, 4] and robot control [5, 6]. Despite its successful applications, deep RL still suffers from data inefficiency when training agents in specific environments. Moreover, the learned policy may overfit and fail to generalize to unseen tasks.

Meta-learning, also known as learning to learn, has recently gained increasing attention for its success in improving sample efficiency in regression, classification and RL tasks. Unlike meta-supervised learning, meta reinforcement learning (meta-RL) needs to automatically extract prior knowledge from previous tasks and achieve fast adaptation [7]. Mainstream meta-RL methods are based on model-agnostic meta-learning algorithms, such as MAML [8] and E-MAML [9], which update model parameters from gradients across tasks by differentiating through the learning and adaptation process. However, these methods do not consider the problem of task diversity.

Some other Meta-RL algorithms attempt to learn an inference network from historical transition samples of different tasks during the meta-training process and build a mapping from observation data to task-relevant information [10, 11, 12]. Then the agent can distinguish the current task according to the inference network and perform action selection to alleviate the gradient conflict problem. However, these methods jointly learn diverse robot manipulation tasks with a shared policy network, which may hurt the final performance compared to independent training in each task [13]. A major reason is that it is unclear how tasks will interact with each other when jointly trained, and optimizing some tasks may negatively affect others [14].

To tackle this problem, we propose a personalized meta reinforcement learning (pMeta-RL) framework that does not share trajectories. More specifically, we let each task train its own policy and obtain a meta-policy for multiple tasks by sharing and aggregating policy model parameters. In order for each task to have its own personalized policy while contributing to the meta-policy, we introduce a personalization constraint in the optimization objective function to associate the meta-policy and the personalized policy. Furthermore, we extend the framework to the deep RL scenario and employ alternating optimization methods to solve the resulting problems. Under the constraint of the meta-policy, each task updates the personalized policy according to its corresponding transition samples, and maintains an auxiliary policy that can be used for meta-policy learning.

I-A Main Contributions

First, we propose a personalization framework to improve the performance of meta-RL on distinct tasks, which learns a meta-policy for all tasks and a personalized policy for each specific task. In particular, by learning multiple task-specific personalized policies, the performance on each task can be improved. Meanwhile, to aggregate these differentiated personalized policies into a meta-policy applicable to all tasks, our framework addresses the gradient conflict problem by adopting a personalization constraint in the objective function. Under the tabular setting, we constrain the meta Q-table and personalized Q-tables by formulating a differentiable function to encourage each task to pursue its personalized Q-table around the meta Q-table. Under the deep RL setting, we obtain the personalization performance by constraining the personalized and meta networks (e.g., actor, critic, and inference networks), so that the networks can refer to each other during training.

Second, we propose an alternating minimization algorithm, named pMeta-RL, to solve the personalized meta-RL problem. The personalized policies are updated based on value iteration, while the auxiliary policies used to aggregate the meta-policy are updated based on gradient descent. More importantly, theoretical analysis shows that the proposed algorithm converges and that the convergence rate is approximately linear in the number of iterations. We also give an upper bound on the difference between the personalized policies and the meta-policy, which is influenced by the regularization parameter and task diversity. Moreover, we extend the proposed pMeta-RL algorithm to a deep network version (named deep pMeta-RL) based on soft actor-critic (SAC), making it suitable for continuous control tasks.

Finally, we evaluate the performance of pMeta-RL and deep pMeta-RL on the Gridworld environment and the MuJoCo suites, respectively. The experimental results show that the proposed pMeta-RL can achieve better performance than the model averaging method, and verify the conclusion of the convergence analysis. Compared to other previous Meta-RL algorithms, our deep pMeta-RL algorithm can achieve the highest average return on the MuJoCo locomotion tasks.

II Related Works

II-A Meta Reinforcement Learning:

Existing meta-RL methods learn dynamics models and policies that can quickly adapt to unseen tasks, building on the meta-learning framework in the context of RL [7, 15, 8]. The work in [15] formulates the meta-learning problem as a second RL procedure: it represents a “fast” RL algorithm as a recurrent neural network and learns it from data, but updates the parameters at a “slow” pace. MAML [8] meta-learns model parameters by differentiating the learning process for fast adaptation on unseen tasks. E-MAML and E-RL$^2$ [9] explicitly optimize the per-task sampling distributions during adaptation with respect to the expected future returns, which is closely related to the MAML algorithm. ProMP [16] theoretically analyzes the MAML formulation and addresses the biased meta-gradient issue. To achieve sample efficiency, PEARL [10] performs structured exploration by reasoning about uncertainty over tasks and enables fast adaptation by accumulating experience online. VariBAD [11] learns a policy that conditions on a posterior belief over tasks, which can trade off exploration and exploitation under task uncertainty. Other meta-RL works, such as MAME [17] and MetaCURE [12], learn a separate exploration policy for general dense and sparse-reward tasks.

II-B Multi-task Reinforcement Learning:

Multi-task RL methods have demonstrated that learning with different tasks can benefit each other in robotics and other fields [18, 19, 20]. However, the gradient conflict problem still exists in multi-task RL, and various approaches based on compositional models [21, 22, 23, 24], gradient similarity [25, 26], or policy distillation [27, 14, 28] have been proposed to address it. In particular, multi-head multi-task SAC [29, 13] and experience sharing networks [22, 23] train diverse robot manipulation tasks jointly with a shared network backbone and multiple task-specific heads for actions. Hard routing [21] and soft modularization [24] learn a routing network to reconfigure different modules for different tasks automatically without explicitly specifying the policy structure, which can effectively avoid gradient conflicts. Other works, such as gradient surgery [25] and normalization [26], leverage the similarity between gradients from different tasks to enhance the learning process and mitigate interference. Existing algorithms have shown superior performance on multiple tasks; however, they need to share trajectories between tasks and are difficult to generalize to unseen tasks.

II-C Federated Reinforcement Learning:

Another related line of work is federated reinforcement learning (FRL). These methods encourage multiple agents to federatively build a better decision-making policy in a privacy-preserving manner, and have been applied in robot navigation and the Internet of Things [30, 31, 32]. The work in [31] analyzes the communication overhead in FRL and gives the convergence bound. The work in [32] studies adversarial attacks in FRL systems in detail and gives theoretical guarantees in the event of participant failure. However, existing FRL algorithms usually consider only identical tasks and lack the ability to handle multiple tasks.

III Problem Formulation

III-A Preliminaries

Reinforcement learning (RL) algorithms are designed to solve sequential decision problems [2, 1, 3]. Generally speaking, the agent makes action decisions at each discrete time step based on its observation of the environment . After performing an action, the environment generates a reward and transfers the agent to a new state. This can be formulated as a standard Markov decision process (MDP), consisting of a tuple , where is a finite set of states, is a finite set of actions, is the bounded reward function with , denotes the state transition function, and is the discount factor. The goal of RL is to find the optimal policy by maximizing the expected long-term return.

III-B Conventional Meta-RL methods

Most gradient-based meta-RL algorithms are model-agnostic meta-learners built upon MAML, performing vanilla policy gradient steps towards maximizing the performance of the policy for each task. These methods generally consider tasks drawn from a distribution , where each task is a different MDP. During the meta-training time, the goal of Meta-RL is to learn a policy , which can adapt to the multiple tasks. In particular, this policy can be optimized by maximizing the average expected return across the task distribution,

| (1) |

where is the initial state-distribution, and is the state-action value function.

III-C Towards Personalized Meta-RL

Existing meta-RL algorithms leverage the transitions from similar tasks to improve the sample efficiency and achieve good performance on future new tasks. However, most gradient-based methods suppose that the task distribution is “homogeneous”, which means that all tasks are from the same domain (e.g., a single dataset, multiple datasets with the same input feature space [33], or robot control with different transition functions but similar dynamics [8]). This limits the application of these algorithms to distinct or “heterogeneous” tasks, i.e., tasks whose environments or transition functions differ by a large margin [34]. Figure 1(a) shows several distinct tasks, such as navigating in two completely opposite directions with the Ant robot or controlling the speed of the Half-Cheetah to reach the two endpoints of a speed interval. In this setting, optimizing some tasks in meta-RL algorithms may negatively affect others.

Figure 1(b) illustrates this problem. For distinct tasks, gradient aggregation makes the values of different state-action pairs similar, i.e., a recommended action may not be given accurately. Other Meta-RL methods, such as PEARL [10], distinguish different tasks by learning a task-relevant context based on the history. These algorithms maintain an inference network , parameterized by , to encode salient information about tasks from context , which consists of the transitions of different tasks. Nevertheless, the gradient conflict problem still exists due to the shared policy network parameters. To solve this challenge, we aim to learn a meta-policy that adapts to multiple distinct tasks, while optimizing a personalized policy for each task. In this subsection, we first give the definition of personalized Meta-RL under the tabular and deep network settings, while the corresponding algorithm for solving personalized Meta-RL is proposed in the next section.

Definition 1 (Personalized Meta-RL)

Assume that there are tasks and the -th () task drawn from . Define as the meta Q-table, where and with and being the set of the -th task’s states and initial state distribution, respectively. The personalized Meta-RL aims to train a meta-policy by maximizing

| (2) |

where

with being a weight parameter that controls the level of personalization; being the personalized Q-table of the -th task; being the expected long-term return under the policy ; and being a differentiable constraint function, such as -norm.

Definition 1 describes a personalization approach based on Q-learning, where the personalized constraint enables each task to optimize its own policy around the meta Q-table. Since Q-learning is difficult to scale to large problems, we use a function approximator to represent the Q-values, and extend Definition 1 to deep personalized meta-RL based on SAC [29] and an inference network.

Definition 2 (Deep Personalized Meta-RL)

Assume that there are training tasks drawn from . Define as the meta-policy, where is the task-relevant vector. Deep personalized meta-RL aims to train a meta actor model , a meta Q function and a meta inference network by alternately minimizing

where

with , and being the personalized actor model, soft Q function model and inference model of the -th task respectively, and being the actor and critic objective function, which is given by

and

respectively, where indicates that gradients are not being computed through it.

Definition 2 considers the personalization approach to three models , and establishes the relationship between them to optimize the personalized policy based on the meta-policy. In the next section, we will propose the corresponding algorithms to solve these problems.

IV Proposed Algorithms

In this section, we first propose the pMeta-RL algorithm to solve personalized Meta-RL in Definition 1 and present the corresponding theoretical analysis. Then we extend this algorithm with deep neural networks to solve deep personalized Meta-RL in Definition 2.

IV-A Personalized Multi-task Value Iteration

To solve personalized Meta-RL, our pMeta-RL algorithm updates and by alternately minimizing the two subproblems of pMeta-RL. More specifically, we first update by solving

| (3) |

then we introduce auxiliary meta Q-values for each task, which can be updated by solving

| (4) |

After that, all auxiliary meta Q-values can be aggregated to update the meta Q-table.

We first focus on solving (3). Note that in the model-free RL, the standard Q-learning can be used to solve . For each state-action pair , is estimated by iteratively applying the Bellman optimal operator , i.e.,

where is the Q-table at the -th iteration. Then the exact or an approximate maximization scheme is used to recover the greedy policy. This inspires us to update by performing the following iterations

| (5) |

where

We solve (5) via one step of gradient descent, with the gradient given as follows

| (6) | ||||

where and is a learning rate. However, it is not straightforward to update based on (6) since the system transition function is usually unknown. In most model-free RL algorithms, we need to sample transition data to update the Q-values. We hence leverage several samples to obtain an approximated Q-table as follows,

| (7) |

The iterations in (6) and (7) continue until the maximum iteration number is reached. Then we have the following Theorem 1, which is proved in Appendix A-C. Theorem 1 indicates that the error generated by using the approximated Q-table becomes negligible after sufficient iterations. We hence set .
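The personalized update in (5)-(7) can be illustrated with a small tabular sketch. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes a known toy MDP (arrays `P` and `R`) instead of sampled transitions, a squared $\ell_2$ personalization constraint, and the hypothetical names `bellman_optimal`, `personalized_step`, `lam` and `alpha`. Each step moves the personalized Q-table toward its Bellman backup while a proximal term pulls it toward the meta Q-table.

```python
import numpy as np

def bellman_optimal(Q, P, R, gamma):
    """Apply the Bellman optimality operator to a tabular Q of shape (S, A).

    P has shape (S, A, S) and holds transition probabilities; R has shape (S, A).
    """
    V = Q.max(axis=1)                  # greedy state values, shape (S,)
    return R + gamma * (P @ V)         # (S, A, S) @ (S,) -> (S, A)

def personalized_step(Q_i, Q_meta, P, R, gamma, lam, alpha):
    """One gradient-style step on an ASSUMED regularized objective.

    Assumption: the step descends 0.5 * ||Q_i - T Q_i||^2 (backup held fixed)
    plus 0.5 * lam * ||Q_i - Q_meta||^2, with learning rate alpha.
    """
    target = bellman_optimal(Q_i, P, R, gamma)
    grad = (Q_i - target) + lam * (Q_i - Q_meta)
    return Q_i - alpha * grad

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    S, A = 3, 2
    P = rng.random((S, A, S))
    P /= P.sum(axis=2, keepdims=True)  # normalize rows into distributions
    R = rng.random((S, A))
    Q_meta = np.full((S, A), 5.0)      # hypothetical meta Q-table
    Q_i = np.zeros((S, A))
    for _ in range(200):
        Q_i = personalized_step(Q_i, Q_meta, P, R, 0.9, lam=1.0, alpha=0.5)
    print(Q_i)
```

With `lam = 0` and `alpha = 1` the step reduces to plain value iteration; a larger `lam` keeps the personalized table closer to the meta table, which matches the role the text assigns to the personalization weight.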

Theorem 1

For a large enough , there exists a small that satisfies

Once is available, we update the auxiliary Q-values in (4) as follows

| (8) | ||||

where is a learning rate.

We solve these two subproblems alternately until the maximum number of iterations is reached and then update the meta Q-values. Note that since , the states and actions in the meta Q-table may not necessarily be in a specific personalized Q-table. Hence all the auxiliary meta Q-tables are collected to perform the following aggregation

| (9) |

where is a weight parameter, is an indicator operator, and denotes the number of tasks that satisfy , i.e., . We repeat the above process until the meta-policy converges or reaches the maximum number of iterations .
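The auxiliary update and the aggregation step described above can be sketched as follows. The exact forms of (8) and (9) are assumptions here: the auxiliary step is written as a gradient step on a squared $\ell_2$ constraint, and the aggregation is written as a convex blend (weight `beta`) between the old meta Q-table and the per-entry average of the auxiliary tables over the tasks that actually contain that state-action pair. The names `auxiliary_step`, `aggregate`, `masks` and `beta` are hypothetical.

```python
import numpy as np

def auxiliary_step(Q_aux, Q_i, lam, eta):
    """Gradient step on 0.5 * lam * ||Q_i - Q_aux||^2 with respect to Q_aux."""
    return Q_aux - eta * lam * (Q_aux - Q_i)

def aggregate(Q_meta, Q_aux_list, masks, beta):
    """Blend the meta Q-table with the per-entry average of auxiliary tables.

    Q_meta and every table in Q_aux_list are float arrays of the same shape.
    masks[i] is a boolean array marking the (state, action) entries that exist
    in task i (the indicator in the text). Entries covered by no task keep
    their previous meta value.
    """
    m = np.stack(masks).astype(float)                 # (N, S, A)
    total = (m * np.stack(Q_aux_list)).sum(axis=0)    # masked sum over tasks
    counts = m.sum(axis=0)                            # tasks covering each entry
    mean = np.divide(total, counts, out=Q_meta.copy(), where=counts > 0)
    return (1.0 - beta) * Q_meta + beta * mean

if __name__ == "__main__":
    Q_meta = np.ones((2, 2))
    Q_aux = [np.full((2, 2), 2.0), np.full((2, 2), 4.0)]
    masks = [np.array([[True, True], [False, False]]),
             np.array([[True, False], [False, False]])]
    print(aggregate(Q_meta, Q_aux, masks, beta=0.5))
```

Dividing by the per-entry count rather than by the total number of tasks keeps states that appear in only a few tasks from being shrunk toward zero.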

IV-B Theoretical Analysis

In this subsection, we present the convergence analysis of our pMeta-RL algorithm. First, we present the following assumption, which quantifies the diversity of the reward and transition function among tasks.

Assumption 1

For any state-action pair , the reward function of each task can be bounded by , while the transition function is bounded by .

Theorem 2

Remark 1

Theorem 2(a) shows the convergence of the meta-policy. The first term is caused by the initial error , which decreases linearly as the number of training iterations increases. Moreover, the second and third terms are respectively due to the task drift and the initial error , which decrease as the number of training iterations increases. Finally, the last term shows that the gradient of the optimal policy model can be close to zero when is small enough. Theorem 2(b) describes an upper bound on the distance between the personalized policy and the meta-policy. The first term denotes the convergence rate consistent with the meta-policy. The in the second term indicates that the diversity of tasks can increase the upper bound, while a larger regularization factor can strengthen the connection to the meta-policy.

IV-C Deep Personalized Meta-Reinforcement Learning

In this subsection, we extend the meta-policy iteration algorithm to a deep learning version with neural network function approximators. Taking the actor model as an example, the deep pMeta-RL updates the personalized policy by solving the following two sub-problems:

| (10) | ||||

| (11) |

where is the auxiliary model, which is used to update the meta-model .

To solve (10), similarly to Theorem 1, we sample a mini-batch of data to obtain an approximated by calculating . Then, the personalized policy is updated by stochastic gradient descent as

| (12) |

where we use the -norm constraint such that and is the personalized policy learning rate.

For the subproblem (11), is updated by

| (13) |

where denotes the auxiliary model learning rate. Moreover, the critic and inference models are also updated similarly, which we omit here for brevity.

After iterations, the meta-policy can be updated as follows

| (14) |

where is a weight parameter and represents the current number of update rounds of the meta-policy. Finally, we obtain a well-trained meta-policy . We summarize the pMeta-RL and deep pMeta-RL algorithms in Algorithm 1.
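The three updates (12)-(14) can be sketched on flat parameter vectors. This is a toy illustration under assumptions, not the SAC-based implementation: the task objective is replaced by a quadratic loss, the constraint is a squared $\ell_2$ penalty, the meta update is written as a convex blend with weight `beta`, and all function and argument names are hypothetical.

```python
import numpy as np

def personalized_sgd_step(theta_i, theta_aux, grad_J, lam, eta):
    """SGD step on J_i(theta_i) + 0.5 * lam * ||theta_i - theta_aux||^2."""
    return theta_i - eta * (grad_J + lam * (theta_i - theta_aux))

def auxiliary_sgd_step(theta_aux, theta_i, lam, eta_aux):
    """SGD step on 0.5 * lam * ||theta_i - theta_aux||^2 w.r.t. theta_aux."""
    return theta_aux - eta_aux * lam * (theta_aux - theta_i)

def meta_update(theta_meta, theta_aux_list, beta):
    """Move the meta parameters toward the mean of the auxiliary parameters."""
    return (1.0 - beta) * theta_meta + beta * np.mean(theta_aux_list, axis=0)

def run_toy(centers, rounds=50, inner=20, lam=1.0, eta=0.1, eta_aux=0.1, beta=0.5):
    """Toy run with ASSUMED task losses J_i(theta) = 0.5 * ||theta - c_i||^2."""
    centers = [np.asarray(c, dtype=float) for c in centers]
    theta_meta = np.zeros_like(centers[0])
    personal = [theta_meta.copy() for _ in centers]
    for _ in range(rounds):
        # Each task restarts its auxiliary model from the current meta model.
        aux = [theta_meta.copy() for _ in centers]
        for i, c in enumerate(centers):
            for _ in range(inner):
                grad_J = personal[i] - c          # gradient of the toy loss
                personal[i] = personalized_sgd_step(
                    personal[i], aux[i], grad_J, lam, eta)
                aux[i] = auxiliary_sgd_step(aux[i], personal[i], lam, eta_aux)
        theta_meta = meta_update(theta_meta, aux, beta)
    return theta_meta, personal

if __name__ == "__main__":
    meta, personal = run_toy([[1.0], [-1.0]])
    print(meta, personal)
```

In this toy run the two tasks pull in opposite directions, so the meta parameters stay near zero while each personalized vector settles on its own side, which is the qualitative behaviour the personalization constraint is meant to allow.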

Input: and a set of training tasks from .

Remark 2

Algorithm 1 integrates two algorithms, pMeta-RL and deep pMeta-RL. For the tabular setting, our pMeta-RL algorithm updates without using lines 3-7 in Algorithm 1. Note that our personalization method is a plug-and-play module that can directly combine with many RL algorithms (e.g., DQN, SAC). For example, each task learns a SAC policy whose actor and critic models are updated according to lines 9-11. The vector used to distinguish different tasks can be replaced by a task embedding vector, such as a one-hot vector. Therefore, we can learn a policy (line 15) to solve multiple distinct tasks in a decentralized manner.

The deep pMeta-RL in Algorithm 1 proposes a general meta-training procedure, and the meta-testing phase is consistent with PEARL. By training an inference network, deep pMeta-RL can estimate the state-value function under different tasks and generalize to unseen tasks.

V Experiment Results

V-A Environments Setting

For the Gym suite [35], we consider two classical control environments, “CartPole” and “MountainCar” (the IDs of these environments in the OpenAI Gym library are CartPole-v0 and MountainCar-v0). For each environment, we modify its physical parameters to induce different transitions as different tasks.

| Environments | Parameters | task1 | task2 | task3 | task4 | task5 |
|---|---|---|---|---|---|---|
| CartPole | force | 20.0 | 1.0 | 10.0 | 10.0 | 10.0 |
| | masspole | 0.1 | 0.1 | 1.0 | 0.01 | 0.1 |
| | lengthpole | 0.5 | 0.5 | 0.5 | 0.5 | 0.05 |
| MountainCar | force | 0.001 | 0.001 | 0.001 | 0.001 | 0.001 |
| | gravity | 0.0025 | 0.0025 | 0.0025 | 0.0025 | 0.0025 |
| | inclination | 3.0 | 3.5 | 4.0 | 4.5 | 5.0 |

CartPole: The goal of CartPole is to balance a pole on top of the cart. The agent observes the position and velocity of the cart and the angle and angular velocity of the pole, which form a four-dimensional continuous space. The action space consists of moving the cart left or right. The task ends when the pole falls over or the cart moves out of bounds. The system parameters contain the force, the mass of the pole (masspole) and the length of the pole (lengthpole).

MountainCar: The goal of MountainCar is to reach a specific position on a one-dimensional track between two mountains. The agent learns to drive back and forth to obtain enough power to allow the car to reach its goal. The states are the position and velocity of the car, and the actions consist of pushing left, pushing right and no push. The system transition function can be written as

| (15) |

where represent the push left, push right and no push actions, respectively, is the magnitude of the force, is the gravity, and controls the inclination of the track. We modify to generate different tasks, as shown in Table I.
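The transition above can be sketched in code. The sketch follows the update rule of Gym's MountainCar-v0 (velocity update, speed limit of 0.07, track bounds of -1.2 and 0.6, inelastic left wall) and exposes the coefficient inside the cosine as an `inclination` argument. That this coefficient is the parameter varied across tasks is an assumption based on the default value 3.0 in Table I, and the action encoding (0 = push left, 1 = no push, 2 = push right) is Gym's rather than necessarily the paper's.

```python
import math

def mountain_car_step(position, velocity, action,
                      force=0.001, gravity=0.0025, inclination=3.0):
    """One MountainCar transition with a configurable track inclination.

    action: 0 (push left), 1 (no push) or 2 (push right), as in Gym.
    """
    velocity += (action - 1) * force - gravity * math.cos(inclination * position)
    velocity = min(max(velocity, -0.07), 0.07)    # Gym's speed limit
    position += velocity
    position = min(max(position, -1.2), 0.6)      # Gym's track bounds
    if position == -1.2 and velocity < 0:         # inelastic left wall
        velocity = 0.0
    return position, velocity

if __name__ == "__main__":
    # Same state and action under the five inclinations listed in Table I.
    for incl in (3.0, 3.5, 4.0, 4.5, 5.0):
        print(incl, mountain_car_step(-0.5, 0.0, 1, inclination=incl))
```

Running the loop shows how the same state and action lead to different next states as the inclination changes, which is what makes the five MountainCar tasks distinct.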

Ant-Fwd-Back: Move forward or backward (2 train tasks, 2 test tasks).

Half-Cheetah-Dir: Move forward or backward (2 train tasks, 2 test tasks).

Half-Cheetah-Vel: Reach a target velocity (10 train tasks, 3 test tasks).

Walker-2D-Params: The agent is randomly initialized with different system dynamics parameters and must keep walking (10 train tasks, 3 test tasks).

| Hyperparameters | CartPole | MountainCar |
|---|---|---|
| Learning rate | 1e-3 | 1e-3 |
| Hidden layer size | [512 512] | [512 512] |
| Batch size | 64 | 64 |
| Replay buffer size | 2e5 | 2e5 |
| Meta-policy update round | 30 | 30 |
| Personalized update round | 200 | 500 |
| Action selector | ε-greedy | ε-greedy |
| ε-start | 0.3 | 0.3 |
| ε-finish | 0.01 | 0.01 |
| Target update interval | 350 | 350 |
| Regularization coefficient | 20 | 20 |
| Discount factor | 0.99 | 0.99 |

| Hyperparameters | Ant-Fwd-Back | Half-Cheetah-Dir | Half-Cheetah-Vel | Walker-2D-Params |
|---|---|---|---|---|
| Actor Learning rate | 1e-3 | 1e-3 | 1e-3 | 1e-3 |
| Critic Learning rate | 1e-3 | 1e-3 | 1e-3 | 1e-3 |
| Actor Hidden layer size | [512 512] | [512 512] | [512 512] | [512 512] |
| Critic Hidden layer size | [512 512] | [512 512] | [512 512] | [512 512] |
| Batch size | 256 | 256 | 256 | 256 |
| Replay buffer size | 1e5 | 1e5 | 2e4 | 2e4 |
| Meta-policy update round | 200 | 300 | 100 | 100 |
| Personalized update round | 5000 | 4000 | 2000 | 2000 |
| Total timesteps | 2e6 | 2.4e6 | 2e6 | 4e6 |
| Regularization coefficient | 15 | 15 | 20 | 20 |
| Target smoothing coefficient | 0.005 | 0.005 | 0.005 | 0.005 |
| Discount factor | 0.99 | 0.99 | 0.99 | 0.99 |

V-B Experiments Details

We implement our personalized method based on DQN with a prioritized experience replay buffer; all the details can be found in Table II. Moreover, our code is based on the Tianshou [37] framework (https://github.com/thu-ml/tianshou), and all experiments were conducted on an NVIDIA Quadro RTX 6000.

We now present the hyperparameter settings for the Gym and MuJoCo tasks. Table II shows the hyperparameters used on CartPole and MountainCar, which largely follow [38]. Table III shows the hyperparameters used on the MuJoCo suite, which largely follow [10, 12]. For all the compared algorithms, we set the number of training steps to be the same as that used in our pMeta-RL. The remaining hyperparameters of the compared algorithms are consistent with the settings recommended in their papers [10, 12].
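Two of the tabulated hyperparameters have a direct operational meaning that a short sketch can make concrete: the ε-greedy action selector with start and finish values of 0.3 and 0.01, and the target smoothing coefficient of 0.005 used in the MuJoCo experiments. The linear annealing schedule below is an assumption (the decay shape is not stated in the text), and the names `epsilon_at`, `epsilon_greedy` and `soft_update` are hypothetical helpers rather than Tianshou functions.

```python
import random

def epsilon_at(step, total_steps, eps_start=0.3, eps_finish=0.01):
    """Linearly anneal epsilon from eps_start to eps_finish (assumed schedule)."""
    frac = min(max(step / total_steps, 0.0), 1.0)
    return eps_start + frac * (eps_finish - eps_start)

def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a uniformly random action with probability epsilon, else the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])

def soft_update(target, online, tau=0.005):
    """Polyak-average the online parameters into the target parameters."""
    return [(1.0 - tau) * t + tau * o for t, o in zip(target, online)]

if __name__ == "__main__":
    for step in (0, 500, 1000):
        print(step, epsilon_at(step, 1000))
    print(epsilon_greedy([0.1, 0.9, 0.3], epsilon=0.0))
    print(soft_update([0.0, 1.0], [1.0, 1.0]))
```

The Gym experiments in Table II instead list a target update interval of 350, which corresponds to copying the online parameters into the target network every 350 steps rather than averaging them.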

V-C Performance of pMeta-RL: A Warm-Up

To evaluate the performance of the proposed pMeta-RL, we construct a simple Gridworld environment with a finite state-action space based on [39]. In this Gridworld environment, the goal of each task is to reach the landmark in the grid, and the reward is set as the distance between the agent and the landmark. The number of tasks is , and the tasks vary in grid size. We use the model averaging method of the Q-values as the baseline, whose performance has been validated in [38]. In particular, this method only performs weighted average aggregation on the Q-tables of each task to obtain the multi-task Q-table. We set in the experiments. Figure 2 shows the convergence rate of pMeta-RL: the average returns obtained by the personalized policy and the meta-policy are better than those obtained by the model-averaging method. Note that the performance of the model-averaging method can gradually approach that of the personalized model as the number of training epochs increases. This is because the multi-task Q-table also retains all the state-action values of each task, which can adapt to different tasks. Furthermore, we directly extend the Q-learning-based approach to DQN and test the performance in the Gym environments, whose tasks vary in the transition function. Figure 2 shows that the personalized policy performs better than the averaged-DQN, which is not well adapted to distinct tasks.

Figure 2 shows the performance of pMeta-RL in the Gridworld environment with different values of , where we set . When , pMeta-RL achieves the best performance. However, a larger may degrade performance, which means that this parameter needs to be chosen carefully for each environment.

V-C1 Effects of regularization

Table IV shows the performance of pMeta-RL with different values of , where we set . In our experiments, we found that a proper can achieve better performance, while a very large may hurt performance. Therefore, should be carefully chosen for each environment. We hence choose for “CartPole” and “MountainCar” in the remaining experiments.

Average return of pMeta-RL (column headers give the value of the regularization coefficient):

| Environment | Policy | 5 | 20 | 30 | 50 |
|---|---|---|---|---|---|
| CartPole | MP | 185.11 ± 2.52 | 192.54 ± 1.56 | 191.51 ± 1.23 | 190.86 ± 2.09 |
| | PP | 196.47 ± 0.89 | 197.13 ± 0.45 | 196.53 ± 1.03 | 196.72 ± 0.68 |
| MountainCar | GM | -111.15 ± 4.31 | -107.08 ± 3.21 | -110.49 ± 3.89 | -108.41 ± 2.78 |
| | PM | -93.05 ± 1.07 | -92.13 ± 0.95 | -92.41 ± 1.25 | -98.16 ± 2.13 |

| Algorithms | CartPole | MountainCar |
|---|---|---|
| DQN* | 198.05 ± 1.03 | -92.96 ± 2.08 |
| DQN | 169.20 ± 2.89 | -133.96 ± 3.25 |
| Model-Averaging | 191.92 ± 1.36 | -111.34 ± 5.95 |
| pMeta-RL (MP) | 192.54 ± 2.54 | -107.15 ± 4.10 |
| pMeta-RL (PP) | 197.13 ± 0.45 | -93.05 ± 0.95 |

V-C2 Ablation study

We compare the performance of the proposed personalization method with DQN [3] and Averaged-DQN [38]. Table V shows the best average reward achieved by various algorithms. DQN* denotes the average reward obtained by the optimal DQN policy for each task, while DQN denotes the average reward achieved by a single DQN policy on multiple tasks. We find that our personalized policy is closer to the optimal policy, and that our meta-policy is better than the other algorithms. Figure 3 shows that the personalized policy is more suitable for its specific task than the meta-policy, and illustrates the generalization ability of the personalized policy under the constraints of the meta-policy: it can generalize to other tasks while completing its corresponding task.

V-D Performance of Deep pMeta-RL

To evaluate the proposed deep pMeta-RL algorithm, we conducted extensive experiments on the continuous control tasks in the MuJoCo suite [40], which are benchmarks commonly used by meta-RL algorithms. These tasks vary in either the reward function (target velocity for Half-Cheetah-Vel, and walking direction for Half-Cheetah-Fwd-Back, Ant-Fwd-Back) or transition function (Walker-Params). We compare deep pMeta-RL against several representative meta-RL algorithms, including PEARL [10], ProMP [16], MetaCURE [12] and MAML-TRPO [8]. We also compare against the recurrence-based policy gradient method, as in [10]. For a fair comparison, we set the maximum episode length to 200 for all the above tasks, which is similar to PEARL. In addition, we set the same number of training steps for each epoch for the above algorithms.

Figure 4 shows that the personalized policy of deep pMeta-RL based on SAC can outperform prior meta-RL methods across all domains in terms of the average returns. The meta-policy achieves comparable performance to other algorithms, albeit with a drop in performance in the Half-Cheetah-Vel environment. This may be mainly due to the increase in the number of tasks in this environment and the increase in the similarity between tasks, resulting in a weakened personalization ability and a slower meta-policy convergence rate. Furthermore, we also observe that MetaCURE is unable to obtain reasonable results in Walker-2D-Params under the same setting. MetaCURE is an efficient algorithm for solving the sparse rewards problem. However, in the context of dense rewards, the intrinsic rewards proposed in the original paper may conflict with dense rewards, resulting in performance degradation. The average returns obtained using the personalized policies are much better than those of other meta-RL algorithms (e.g., and than PEARL in Ant-Fwd-Back and Cheetah-Fwd-Back environments, respectively), but show only a slight gain in the Cheetah-Vel and Walker-2D-Params environments. We also found that PEARL obtains almost the same performance as the personalized policy in Walker-2D-Params. Summarizing the above, we can infer that our personalization method is better suited for more distinct tasks, e.g., navigating in exactly opposite directions.

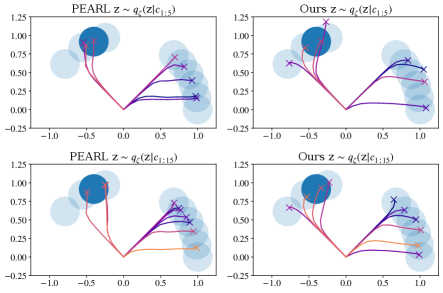

We visualized the trajectories to illustrate the superiority of the personalized policy on the Sparse-Point-Robot, which is a 2D navigation task in which a point robot must navigate to different goal locations on the edge of a semicircle. A reward is given only when the robot is within a certain radius of the goal, which is set as 0.2 in our experiments. By randomly sampling 10 goals, we compare PEARL with different context and , whose subscripts denote the number of trajectories contained in the context. Specifically, the first trajectory is collected with probabilistic context variable sampled from the prior and the subsequent trajectories are collected with where context is aggregated over all collected trajectories. As shown in Figure 5, we can observe that the agent with the personalized policy can navigate to more targets than PEARL and achieve higher returns. Moreover, the robot can navigate more accurately with more context information. For example, a robot making decisions with can navigate to the center of the goals, while using may go beyond the goal radius.
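The sparse reward structure of this task can be sketched as follows. Only the goal radius of 0.2 and the placement of goals on the edge of a semicircle come from the text. The unit semicircle radius, the reward magnitudes (1.0 inside the goal region, 0.0 outside) and the function names are assumptions made for illustration.

```python
import math
import random

def sample_goals(n, radius=1.0, rng=random):
    """Sample n goals on the edge of the upper semicircle (assumed radius 1.0)."""
    angles = [rng.uniform(0.0, math.pi) for _ in range(n)]
    return [(radius * math.cos(a), radius * math.sin(a)) for a in angles]

def sparse_reward(position, goal, goal_radius=0.2):
    """Return 1.0 inside the goal radius and 0.0 elsewhere (assumed magnitudes)."""
    dist = math.hypot(position[0] - goal[0], position[1] - goal[1])
    return 1.0 if dist <= goal_radius else 0.0

if __name__ == "__main__":
    goals = sample_goals(10, rng=random.Random(0))
    start = (0.0, 0.0)
    # From the origin every goal is 1.0 away, so no reward is observed at first.
    print([sparse_reward(start, g) for g in goals])
```

Because the agent receives no signal until it enters the goal region, identifying the task depends heavily on the collected context, which is why the number of context trajectories matters in the comparison above.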

We also validate the performance of the meta-policy on unseen tasks in Figure 6, which is largely consistent with the performance in Figure 4. We find that the meta-policy adapts well to multiple tasks in most environments, except for the slower convergence on Half-Cheetah-Vel. Combining Figure 4 and Figure 6, we conclude that the performance of the personalized policy is promising, and that our algorithm may be well suited to decentralized multi-task learning, in which each task maintains its personalized policy while a meta-policy is learned for multiple tasks without sharing trajectories.

VI Conclusions

In this paper, we propose a personalization approach in meta-RL to address the gradient conflict problem, which learns a meta-policy for all tasks and personalized policies for specific tasks. By adopting a personalization constraint in the objective function, our algorithm encourages each task to pursue its personalized policy around the meta-policy under the tabular and deep network settings. We introduce an auxiliary policy to decouple the personalized and meta-policy learning processes and propose an alternating minimization method for policy improvement. Moreover, theoretical analysis shows that our algorithm converges linearly with the iteration number and gives an upper bound on the difference between the personalized policies and the meta-policy. Experimental results demonstrate that pMeta-RL outperforms many advanced meta-RL algorithms on continuous control tasks.

Appendix A Proof of Main Theorems

A-A Some Useful Results

Proposition 1

[Jensen’s inequality] For any vector , we have

Proposition 2

[Jensen’s inequality] For any vector , we have

for any constant .

Lemma 1

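[41] The random process $\{\Delta_t\}$ taking values in $\mathbb{R}^n$ and defined as

$$\Delta_{t+1}(x) = \big(1 - \alpha_t(x)\big)\,\Delta_t(x) + \alpha_t(x)\,F_t(x)$$

converges to zero with probability 1 under the following assumptions:

- $0 \le \alpha_t(x) \le 1$, $\sum_t \alpha_t(x) = \infty$ and $\sum_t \alpha_t^2(x) < \infty$;

- $\big\|\mathbb{E}[F_t(x) \mid \mathcal{F}_t]\big\|_W \le \gamma \|\Delta_t\|_W$, with $\gamma < 1$;

- $\operatorname{var}[F_t(x) \mid \mathcal{F}_t] \le C\big(1 + \|\Delta_t\|_W^2\big)$, for $C > 0$.

Here $\mathcal{F}_t$ stands for the past at step $t$, and the notation $\|\cdot\|_W$ refers to some weighted maximum norm.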
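To make the role of the three assumptions concrete, the following sketch simulates a scalar process of the form delta <- (1 - alpha) * delta + alpha * F, which is the standard shape of the stochastic approximation lemma in [41]. The step-size schedule, the contraction factor, the Gaussian noise, and the function name `simulate` are illustrative assumptions, not quantities taken from the paper.

```python
import random


def simulate(num_steps=20000, gamma=0.5, noise_std=1.0, delta0=5.0, seed=0):
    """Iterate delta <- (1 - alpha_t) * delta + alpha_t * F_t and return the final delta."""
    rng = random.Random(seed)
    delta = delta0
    for t in range(1, num_steps + 1):
        # Assumed schedule: alpha_t lies in [0, 1], its sum diverges,
        # and the sum of its squares is finite.
        alpha = (t + 1) ** -0.75
        # Assumed noise model: E[F | past] = gamma * delta (a contraction in
        # expectation) and Var[F | past] = noise_std ** 2 (bounded variance).
        f = gamma * delta + rng.gauss(0.0, noise_std)
        delta = (1.0 - alpha) * delta + alpha * f
    return delta


final_delta = simulate()
```

Starting from `delta0 = 5.0`, the iterate ends close to zero, which is the behavior the lemma guarantees with probability 1. Replacing the schedule with a constant step size violates the square-summability condition and leaves a noise floor instead.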

A-B Some Important Lemmas

Lemma 2

For each state-action pair, the meta Q-values update as

where and

| (16) |

with .

Lemma 3

Let Assumption 1 hold. For any and , the variance of each task is bounded

Lemma 4

Lemma 5

The task drift error

A-C Proof of Theorem 1

We first prove that the update in (6) is a contraction mapping. By setting the derivative of to 0, we obtain the one-step optimal solution as follows

for each state-action pair .

To simplify notations, we omit the subscript , and from now on. We define an additional Bellman backup operator as

| (17) |

and a norm on values as .

Suppose that , then we have

Therefore

| (18) |

when is constant and . Hence is a contraction, and there exists a fixed point such that .
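The contraction argument can be checked numerically on a small example. The sketch below does not implement the operator in (17); as a stand-in it uses the standard Bellman optimality backup on a randomly generated tabular MDP (an assumption made purely for illustration) and verifies that one backup shrinks the max-norm distance between two Q-tables by at least the discount factor. All names are hypothetical.

```python
import random


def random_mdp(num_states, num_actions, rng):
    """Build random rewards and row-normalized transition probabilities."""
    rewards = [[rng.uniform(0.0, 1.0) for _ in range(num_actions)]
               for _ in range(num_states)]
    transitions = []
    for _ in range(num_states):
        per_action = []
        for _ in range(num_actions):
            weights = [rng.uniform(0.01, 1.0) for _ in range(num_states)]
            total = sum(weights)
            per_action.append([w / total for w in weights])
        transitions.append(per_action)
    return rewards, transitions


def bellman_backup(q, rewards, transitions, gamma):
    """Apply (TQ)(s, a) = r(s, a) + gamma * sum_s' P(s' | s, a) * max_a' Q(s', a')."""
    state_values = [max(row) for row in q]
    return [
        [rewards[s][a]
         + gamma * sum(p * v for p, v in zip(transitions[s][a], state_values))
         for a in range(len(q[s]))]
        for s in range(len(q))
    ]


def max_norm_gap(q1, q2):
    """Return the largest absolute entrywise difference between two Q-tables."""
    return max(abs(x - y) for r1, r2 in zip(q1, q2) for x, y in zip(r1, r2))


rng = random.Random(0)
gamma = 0.9
rewards, transitions = random_mdp(5, 3, rng)
q1 = [[rng.uniform(-10.0, 10.0) for _ in range(3)] for _ in range(5)]
q2 = [[rng.uniform(-10.0, 10.0) for _ in range(3)] for _ in range(5)]

gap_before = max_norm_gap(q1, q2)
gap_after = max_norm_gap(bellman_backup(q1, rewards, transitions, gamma),
                         bellman_backup(q2, rewards, transitions, gamma))
```

Because the gap shrinks by a factor of at most `gamma` at every application, repeated backups from any starting table approach the same fixed point, which is the property the proof relies on.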

We next prove that the update method of in (IV-A) converges to this fixed point. We rewrite (IV-A) ignoring the superscript as follows

where and is the iteration index.

By subtracting from both sides the fixed point and defining

we can obtain

| (19) |

where

with being a random sample state obtained from the Markov chain . Then we have

where uses the fact in (17) and leverages the property of the fixed point in a contraction, i.e., .

According to (18), we have

Moreover, the variance of can be written as

| (20) |

Since is bounded and is constant in each personalized iteration, there exists a constant such that

A-D Proof of Theorem 2

We first consider the smoothness property of . For the first term , when is a Boltzmann policy [42], i.e., , is a differentiable function. In addition, the regularization term is the -norm, which is a -smooth function. Thus can be a smooth function.
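For reference, a Boltzmann (softmax) policy over tabular action values can be sketched as below. This follows the textbook form in [42]; the temperature parameter and the function name `boltzmann_policy` are assumptions for illustration, and the exact parameterization used in the analysis may differ.

```python
import math


def boltzmann_policy(q_values, temperature=1.0):
    """Return action probabilities proportional to exp(q / temperature)."""
    if temperature <= 0.0:
        raise ValueError("temperature must be positive")
    scaled = [q / temperature for q in q_values]
    shift = max(scaled)  # subtract the maximum for numerical stability
    weights = [math.exp(x - shift) for x in scaled]
    total = sum(weights)
    return [w / total for w in weights]


probs = boltzmann_policy([1.0, 2.0, 3.0])
```

Every probability is a smooth function of the action values, which is the property the smoothness argument uses; a greedy argmax policy, by contrast, is not differentiable in the action values.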

We next prove the convergence of the meta-policy. Let be a -smooth function, then we have

| (21) |

By using Proposition 1, can be decomposed into

| (22) |

According to the Cauchy-Schwarz and AM-GM inequalities, substituting (22) into (21) yields

Then we have

| (23) |

where is based on Lemma 5 and the fact that for random variable , and is obtained by Lemma 4. When , we have and assume that

then we have .

By taking average over , we have

where , and .

Let , consider one case, when and , we have

A-E Proof of Important Lemmas

A-E1 Proof of Lemma 2

A-E2 Proof of Lemma 3

Note that is a bounded reward function such that is bounded by , then we have

where is based on Assumption 1. We finish the proof.

A-E3 Proof of Lemma 4

Since is a smooth function, we have . Then

where is due to the property of the fixed point of mentioned in (17), i.e.,

and is due to Proposition 1. Taking the average over the number of tasks, we have

| (27) |

where is due to Assumption 1 and Proposition 1, and is based on Jensen’s inequality. By re-arranging the terms in , we obtain

where . Then we finish the proof.

A-E4 Proof of Lemma 5

References

- [1] R. S. Sutton, “Learning to predict by the methods of temporal differences,” Machine learning, vol. 3, no. 1, pp. 9–44, 1988.

- [2] C. J. Watkins and P. Dayan, “Q-learning,” Machine learning, vol. 8, no. 3-4, pp. 279–292, 1992.

- [3] V. Mnih, K. Kavukcuoglu, D. Silver, A. A. Rusu, J. Veness, M. G. Bellemare, A. Graves, M. Riedmiller, A. K. Fidjeland, G. Ostrovski et al., “Human-level control through deep reinforcement learning,” Nature, vol. 518, no. 7540, pp. 529–533, 2015.

- [4] V. Mnih, A. P. Badia, M. Mirza, A. Graves, T. Lillicrap, T. Harley, D. Silver, and K. Kavukcuoglu, “Asynchronous methods for deep reinforcement learning,” in International conference on machine learning. PMLR, 2016, pp. 1928–1937.

- [5] S. Levine, C. Finn, T. Darrell, and P. Abbeel, “End-to-end training of deep visuomotor policies,” The Journal of Machine Learning Research, vol. 17, no. 1, pp. 1334–1373, 2016.

- [6] T. P. Lillicrap, J. J. Hunt, A. Pritzel, N. Heess, T. Erez, Y. Tassa, D. Silver, and D. Wierstra, “Continuous control with deep reinforcement learning,” arXiv preprint arXiv:1509.02971, 2015.

- [7] S. Thrun and L. Pratt, Learning to learn. Springer Science & Business Media, 2012.

- [8] C. Finn, P. Abbeel, and S. Levine, “Model-agnostic meta-learning for fast adaptation of deep networks,” in International Conference on Machine Learning. PMLR, 2017, pp. 1126–1135.

- [9] B. C. Stadie, G. Yang, R. Houthooft, X. Chen, Y. Duan, Y. Wu, P. Abbeel, and I. Sutskever, “Some considerations on learning to explore via meta-reinforcement learning,” arXiv preprint arXiv:1803.01118, 2018.

- [10] K. Rakelly, A. Zhou, C. Finn, S. Levine, and D. Quillen, “Efficient off-policy meta-reinforcement learning via probabilistic context variables,” in International conference on machine learning. PMLR, 2019, pp. 5331–5340.

- [11] L. Zintgraf, K. Shiarlis, M. Igl, S. Schulze, Y. Gal, K. Hofmann, and S. Whiteson, “Varibad: A very good method for bayes-adaptive deep rl via meta-learning,” arXiv preprint arXiv:1910.08348, 2019.

- [12] J. Zhang, J. Wang, H. Hu, T. Chen, Y. Chen, C. Fan, and C. Zhang, “Metacure: Meta reinforcement learning with empowerment-driven exploration,” in International Conference on Machine Learning. PMLR, 2021, pp. 12600–12610.

- [13] T. Yu, D. Quillen, Z. He, R. Julian, K. Hausman, C. Finn, and S. Levine, “Meta-world: A benchmark and evaluation for multi-task and meta reinforcement learning,” in Conference on Robot Learning. PMLR, 2020, pp. 1094–1100.

- [14] Y. Teh, V. Bapst, W. M. Czarnecki, J. Quan, J. Kirkpatrick, R. Hadsell, N. Heess, and R. Pascanu, “Distral: Robust multitask reinforcement learning,” Advances in Neural Information Processing Systems, vol. 30, 2017.

- [15] Y. Duan, J. Schulman, X. Chen, P. L. Bartlett, I. Sutskever, and P. Abbeel, “RL$^2$: Fast reinforcement learning via slow reinforcement learning,” arXiv preprint arXiv:1611.02779, 2016.

- [16] J. Rothfuss, D. Lee, I. Clavera, T. Asfour, and P. Abbeel, “Promp: Proximal meta-policy search,” arXiv preprint arXiv:1810.06784, 2018.

- [17] S. Gurumurthy, S. Kumar, and K. Sycara, “Mame: Model-agnostic meta-exploration,” in Conference on Robot Learning. PMLR, 2020, pp. 910–922.

- [18] L. Pinto, D. Gandhi, Y. Han, Y.-L. Park, and A. Gupta, “The curious robot: Learning visual representations via physical interactions,” in European Conference on Computer Vision. Springer, 2016, pp. 3–18.

- [19] L. Pinto and A. Gupta, “Learning to push by grasping: Using multiple tasks for effective learning,” in 2017 IEEE international conference on robotics and automation (ICRA). IEEE, 2017, pp. 2161–2168.

- [20] M. Riedmiller, R. Hafner, T. Lampe, M. Neunert, J. Degrave, T. Wiele, V. Mnih, N. Heess, and J. T. Springenberg, “Learning by playing solving sparse reward tasks from scratch,” in International Conference on Machine Learning. PMLR, 2018, pp. 4344–4353.

- [21] C. Rosenbaum, T. Klinger, and M. Riemer, “Routing networks: Adaptive selection of non-linear functions for multi-task learning,” arXiv preprint arXiv:1711.01239, 2017.

- [22] C. D’Eramo, D. Tateo, A. Bonarini, M. Restelli, and J. Peters, “Sharing knowledge in multi-task deep reinforcement learning,” in International Conference on Learning Representations, 2019.

- [23] T. L. Vuong, D. V. Nguyen, T. L. Nguyen, C. M. Bui, H. D. Kieu, V. C. Ta, Q. L. Tran, and T. H. Le, “Sharing experience in multitask reinforcement learning,” 2019.

- [24] R. Yang, H. Xu, Y. Wu, and X. Wang, “Multi-task reinforcement learning with soft modularization,” Advances in Neural Information Processing Systems, vol. 33, pp. 4767–4777, 2020.

- [25] T. Yu, S. Kumar, A. Gupta, S. Levine, K. Hausman, and C. Finn, “Gradient surgery for multi-task learning,” arXiv preprint arXiv:2001.06782, 2020.

- [26] Z. Chen, V. Badrinarayanan, C.-Y. Lee, and A. Rabinovich, “Gradnorm: Gradient normalization for adaptive loss balancing in deep multitask networks,” in International Conference on Machine Learning. PMLR, 2018, pp. 794–803.

- [27] E. Parisotto, J. L. Ba, and R. Salakhutdinov, “Actor-mimic: Deep multitask and transfer reinforcement learning,” arXiv preprint arXiv:1511.06342, 2015.

- [28] Z. Xu, K. Wu, Z. Che, J. Tang, and J. Ye, “Knowledge transfer in multi-task deep reinforcement learning for continuous control,” arXiv preprint arXiv:2010.07494, 2020.

- [29] T. Haarnoja, A. Zhou, P. Abbeel, and S. Levine, “Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor,” in International conference on machine learning. PMLR, 2018, pp. 1861–1870.

- [30] B. Liu, L. Wang, and M. Liu, “Lifelong federated reinforcement learning: a learning architecture for navigation in cloud robotic systems,” IEEE Robotics and Automation Letters, vol. 4, no. 4, pp. 4555–4562, 2019.

- [31] X. Wang, C. Wang, X. Li, V. C. Leung, and T. Taleb, “Federated deep reinforcement learning for internet of things with decentralized cooperative edge caching,” IEEE Internet of Things Journal, vol. 7, no. 10, pp. 9441–9455, 2020.

- [32] X. Fan, Y. Ma, Z. Dai, W. Jing, C. Tan, and B. K. H. Low, “Fault-tolerant federated reinforcement learning with theoretical guarantee,” Advances in Neural Information Processing Systems, vol. 34, 2021.

- [33] R. Vuorio, S.-H. Sun, H. Hu, and J. J. Lim, “Multimodal model-agnostic meta-learning via task-aware modulation,” arXiv preprint arXiv:1910.13616, 2019.

- [34] J. Chen and A. Zhang, “Hetmaml: Task-heterogeneous model-agnostic meta-learning for few-shot learning across modalities,” arXiv preprint arXiv:2105.07889, 2021.

- [35] G. Brockman, V. Cheung, L. Pettersson, J. Schneider, J. Schulman, J. Tang, and W. Zaremba, “Openai gym,” 2016.

- [36] M. Li, Z. Qin, Y. Jiao, Y. Yang, J. Wang, C. Wang, G. Wu, and J. Ye, “Efficient ridesharing order dispatching with mean field multi-agent reinforcement learning,” in The World Wide Web Conference, 2019, pp. 983–994.

- [37] J. Weng, H. Chen, D. Yan, K. You, A. Duburcq, M. Zhang, H. Su, and J. Zhu, “Tianshou: A highly modularized deep reinforcement learning library,” arXiv preprint arXiv:2107.14171, 2021.

- [38] O. Anschel, N. Baram, and N. Shimkin, “Averaged-dqn: Variance reduction and stabilization for deep reinforcement learning,” in International conference on machine learning. PMLR, 2017, pp. 176–185.

- [39] M. Chevalier-Boisvert, L. Willems, and S. Pal, “Minimalistic gridworld environment for openai gym,” https://github.com/maximecb/gym-minigrid, 2018.

- [40] E. Todorov, T. Erez, and Y. Tassa, “Mujoco: A physics engine for model-based control,” in 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems. IEEE, 2012, pp. 5026–5033.

- [41] T. Jaakkola, M. I. Jordan, and S. P. Singh, “On the convergence of stochastic iterative dynamic programming algorithms,” Neural computation, vol. 6, no. 6, pp. 1185–1201, 1994.

- [42] R. S. Sutton and A. G. Barto, Reinforcement learning: An introduction. MIT press, 2018.