On the Capacity of Generalized Quadrature Spatial Modulation

Abstract

In this letter, the average mutual information (AMI) of generalized quadrature spatial modulation (GQSM) is derived for the first time for continuous-input continuous-output channels. Our mathematical analysis shows that the calculation error induced by Monte Carlo integration increases exponentially with the signal-to-noise ratio. This problem is resolved by deriving a closed-form expression. The derived AMI is compared with those of other related spatial modulation (SM) schemes and evaluated for different antenna activation patterns. Our results show that an equiprobable antenna selection method slightly decreases the AMI of the symbols but significantly improves the total AMI.

Index Terms:

Capacity, mutual information, spatial modulation (SM), quadrature spatial modulation (QSM), generalized quadrature spatial modulation (GQSM).

Accepted for publication in IEEE Wireless Communications Letters. This is the author’s version which has not been fully edited and content may change prior to final publication. Citation information: DOI 10.1109/LWC.2023.3310586

I Introduction

Spatial modulation (SM) is a technique that conveys information through the index of the active transmit antenna in addition to the data symbols [1]. SM has been extensively studied as a potential solution for balancing the fundamental trade-off between performance and complexity in wireless communications [2].

The transmission rate of SM is given by $\log_2 L + \log_2 M$, where $L$ is the constellation size and $M$ is the number of transmit antennas. A number of extensions have been proposed to improve the spectral efficiency of SM. Among the representative schemes, generalized spatial modulation (GSM) [3] extends the number of data symbols from 1 to a general integer $K$, which improves the transmission rate to $K\log_2 L + \lfloor \log_2 \binom{M}{K} \rfloor$. In contrast, quadrature spatial modulation (QSM) [4] defines activation patterns (APs) independently for the real and imaginary parts of the codeword, which improves the transmission rate to $\log_2 L + 2\log_2 M$. Generalized quadrature spatial modulation (GQSM) [5], a hybrid of these two extensions, has also been proposed. GQSM is currently considered the most advanced SM scheme, offering the highest transmission rate, $K\log_2 L + 2\lfloor \log_2 \binom{M}{K} \rfloor$. Equivalent techniques, generally termed index modulation (IM), have also been proposed in the context of orthogonal frequency division multiplexing (OFDM), such as OFDM-IM [6, 7] and OFDM-I/Q-IM [8].
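The Python sketch below evaluates these rate expressions as a quick illustration. The notation is ours, so the assumptions are stated explicitly:

- L is a power-of-two constellation size.
- M is the number of transmit antennas, assumed a power of two for SM and QSM.
- K is the number of data symbols.
- Each AP set of GSM and GQSM carries ⌊log2 C(M, K)⌋ bits.

The helper names are hypothetical.

```python
import math

def log2_floor(n: int) -> int:
    """Exact floor(log2 n) for a positive integer (avoids floating-point rounding)."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    return n.bit_length() - 1

def sm_rate(L: int, M: int) -> int:
    # One L-ary symbol plus the index of one of M antennas (L, M powers of two).
    return log2_floor(L) + log2_floor(M)

def gsm_rate(L: int, M: int, K: int) -> int:
    # K symbols plus an AP index drawn from the C(M, K) candidates.
    return K * log2_floor(L) + log2_floor(math.comb(M, K))

def qsm_rate(L: int, M: int) -> int:
    # One symbol; its real and imaginary parts each select one of M antennas.
    return log2_floor(L) + 2 * log2_floor(M)

def gqsm_rate(L: int, M: int, K: int) -> int:
    # K symbols; independent APs for the real and imaginary parts.
    return K * log2_floor(L) + 2 * log2_floor(math.comb(M, K))

if __name__ == "__main__":
    L = 4  # QPSK, for illustration
    for M, K in [(4, 1), (4, 2), (8, 2)]:
        print(f"M={M}, K={K}: SM={sm_rate(L, M)}, GSM={gsm_rate(L, M, K)}, "
              f"QSM={qsm_rate(L, M)}, GQSM={gqsm_rate(L, M, K)} bits")
```

For L = 4, M = 4, and K = 2, the sketch gives 4, 6, 6, and 8 bits for SM, GSM, QSM, and GQSM, respectively. This is consistent with GQSM offering the highest rate.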

GQSM requires its antenna APs to be designed carefully, because the APs are selected from all possible candidate antenna combinations. This AP selection determines the achievable performance, which has motivated studies on the efficient design of APs [7, 9, 10]. One approach, known as combinatorial design, was proposed in [7]; it is equivalent to selecting APs from the candidates in lexicographic order. Another approach, known as equiprobable design, was proposed in [9]; APs are constructed such that each transmit antenna is activated with equal probability. In addition, an integer linear programming (ILP) design was proposed in [10]. There, equiprobable antenna selection is formulated as an ILP problem and compared with the other design methods [7, 9] for discrete-input channels.¹

¹ The open-source implementations of [7, 9, 10] are provided in [10].
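The following Python sketch contrasts the two design philosophies.

- The lexicographic function follows the description of the combinatorial design above.
- The balancing function is a hypothetical greedy heuristic of our own, included only to illustrate what "equiprobable" means. It is neither the method of [9] nor the ILP formulation of [10], whose implementations are available in [10].

```python
import itertools

def combinatorial_aps(M, K, Q):
    """First Q of the C(M, K) candidate APs in lexicographic order."""
    return list(itertools.islice(itertools.combinations(range(M), K), Q))

def activation_counts(aps, M):
    """How many of the given APs activate each transmit antenna."""
    counts = [0] * M
    for ap in aps:
        for m in ap:
            counts[m] += 1
    return counts

def greedy_balanced_aps(M, K, Q):
    """Hypothetical greedy heuristic (NOT the design of [9] or the ILP of [10]).

    At each step, add the remaining candidate that minimizes the sum of squared
    per-antenna activation counts; ties are broken by lexicographic order.
    """
    remaining = list(itertools.combinations(range(M), K))
    chosen, counts = [], [0] * M

    def cost(ap):
        return sum((c + (1 if m in ap else 0)) ** 2 for m, c in enumerate(counts))

    for _ in range(Q):
        best = min(remaining, key=cost)  # min() keeps the first minimizer
        remaining.remove(best)
        chosen.append(best)
        for m in best:
            counts[m] += 1
    return chosen

lex = combinatorial_aps(4, 2, 4)
bal = greedy_balanced_aps(4, 2, 4)
print(lex, activation_counts(lex, 4))
print(bal, activation_counts(bal, 4))
```

With M = 4, K = 2, and Q = 4, the two choices differ as follows:

- The lexicographic choice activates antenna 0 in three APs but antenna 3 in only one.
- The greedy choice activates every antenna exactly twice.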

The channel capacity is an essential metric for evaluating a communication system, and many studies have analyzed it for SM. The channel-input distribution that maximizes the average mutual information (AMI) of SM is unknown [11]. Nevertheless, the AMI of SM and GSM has been derived under the assumption of a Gaussian input distribution [12]. Under the same assumption, it was shown in [13] that, under a certain condition, the AMI of QSM is equal to the MIMO channel capacity [14]. To the best of our knowledge, the AMI of QSM or GQSM for a specific number of transmit antennas has yet to be derived, although it is an important metric that predicts an upper bound on the achievable rate in coded scenarios.

In this letter, we derive the AMI of GQSM for continuous-input channels and compare it with those of SM and GSM; a non-trivial problem of calculation errors is solved by our partially closed-form expression. In addition, using the derived AMI, we investigate the differences among three AP design methods [7, 9, 10] and clarify that these differences are maximized at medium signal-to-noise ratios (SNRs).

II System Model

Consider an multiple-input multiple-output (MIMO) system and assume independent and identically distributed (i.i.d.) frequency-flat Rayleigh fading channels in which each element of a channel matrix and a noise vector independently follow complex Gaussian distributions and , respectively. Given the noise variance and the transmission power , the SNR is defined by . Let be the -th element of a GQSM codeword . The real and imaginary parts of classic symbols are denoted by and , while the -th elements of and are denoted by and , respectively. Similarly, the element of a matrix is denoted by . Then, the APs corresponding to the real and imaginary parts of the codeword are defined by

| (1) |

and

| (2) |

where we have relationships and . Denoting the set of all APs by the Cartesian product of the real-part and imaginary-part AP sets, the number of APs satisfies a constraint for the additional bit allocation of GQSM. Note that the number of APs is not limited to its maximum value; it can be reduced to achieve additional gain at the expense of a lower transmission rate [10]. The received signal is represented as

| (3) |

and the codeword is constructed by

| (4) |

where j denotes the imaginary unit. This generalized system model represents QSM when the number of data symbols is restricted to one. It represents GSM when, for every AP, the real and imaginary parts use identical antenna indices. In addition, setting the off-diagonal elements of the channel matrix to zero makes it equivalent to an idealized system model of OFDM-I/Q-IM.
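The following is a minimal Python sketch of the codeword construction. It assumes the structure described above:

- The real part of the k-th symbol is placed on the k-th antenna of the real-part AP.
- Its imaginary part is placed on the k-th antenna of the imaginary-part AP.
- Any power normalization in (4) is omitted.

The function name and the example AP sets are hypothetical.

```python
import itertools

def gqsm_codeword(symbols, ap_real, ap_imag, M):
    """Build a length-M GQSM codeword (power normalization omitted, an assumption).

    The real part of symbols[k] is placed on antenna ap_real[k] and the
    imaginary part on antenna ap_imag[k]; all other entries are zero.
    """
    if not (len(symbols) == len(ap_real) == len(ap_imag)):
        raise ValueError("need one real-part and one imaginary-part index per symbol")
    x = [0j] * M
    for s, m_re, m_im in zip(symbols, ap_real, ap_imag):
        x[m_re] += s.real        # in-phase component on the real-part AP
        x[m_im] += 1j * s.imag   # quadrature component on the imaginary-part AP
    return x

# Hypothetical AP sets for M = 4, K = 2; the joint AP set is their Cartesian product.
real_aps = [(0, 1), (2, 3)]
imag_aps = [(0, 2), (1, 3)]
joint_aps = list(itertools.product(real_aps, imag_aps))  # 2 * 2 = 4 joint APs

symbols = [1 + 2j, 3 - 1j]
print(gqsm_codeword(symbols, (0, 1), (2, 3), 4))  # real/imag parts on disjoint antennas
print(gqsm_codeword(symbols, (0, 1), (0, 1), 4))  # identical APs: a GSM codeword
```

Passing the same indices for both parts yields an ordinary GSM codeword, which mirrors the reduction noted above.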

III Capacity Analysis

AMI is the mutual information between the channel input and output averaged over the channel realizations. It gives the maximum number of bits per channel use that can be conveyed with an arbitrarily small error probability for a given input distribution and SNR. The ergodic capacity is defined as the maximum of the AMI over the distribution of the codeword [14]. For the i.i.d. Rayleigh fading channel considered here, with the channel known only at the receiver, the ergodic capacity of MIMO is achieved when the elements of the codeword independently follow complex Gaussian distributions with the same variance [14]. However, a distribution that achieves the capacity of SM has not yet been found [11]. Therefore, as in previous studies [12], we derive the AMI of GQSM when the input symbols independently follow complex Gaussian distributions with the same variance. We then use this AMI as an evaluation metric instead of the actual capacity.
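For reference, the ergodic MIMO capacity mentioned above (Telatar [14]) is E[log2 det(I + (ρ/M) H H^H)]. Here H is an N × M channel matrix with i.i.d. CN(0, 1) entries, and the total power is split equally over the M transmit antennas. The standard-library Python sketch below estimates this quantity by Monte Carlo. The SNR convention ρ = transmit power / noise variance and all function names are our assumptions.

```python
import math
import random

def crandn(rng, var=1.0):
    """One sample of a circularly symmetric complex Gaussian CN(0, var)."""
    s = math.sqrt(var / 2.0)
    return complex(rng.gauss(0.0, s), rng.gauss(0.0, s))

def cdet(A):
    """Determinant of a small complex matrix by Gaussian elimination with partial pivoting."""
    A = [row[:] for row in A]
    n = len(A)
    det = 1 + 0j
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(A[r][c]))
        if A[p][c] == 0:
            return 0j
        if p != c:
            A[c], A[p] = A[p], A[c]
            det = -det
        det *= A[c][c]
        for r in range(c + 1, n):
            f = A[r][c] / A[c][c]
            for k in range(c, n):
                A[r][k] -= f * A[c][k]
    return det

def ergodic_capacity(M, N, snr_db, n_trials=20000, seed=0):
    """Monte Carlo estimate of E[log2 det(I_N + (rho / M) H H^H)] for i.i.d. CN(0, 1) H."""
    rng = random.Random(seed)
    rho = 10 ** (snr_db / 10)
    acc = 0.0
    for _ in range(n_trials):
        H = [[crandn(rng) for _ in range(M)] for _ in range(N)]
        # G = I_N + (rho / M) H H^H is Hermitian positive definite, so det(G) is real.
        G = [[(1.0 if i == j else 0.0)
              + rho / M * sum(H[i][m] * H[j][m].conjugate() for m in range(M))
              for j in range(N)] for i in range(N)]
        acc += math.log2(cdet(G).real)
    return acc / n_trials

print(ergodic_capacity(1, 1, 0.0))
```

For a single antenna at 0 dB, the estimate should approach e·E1(1)/ln 2 ≈ 0.86 bits, the known closed form for Rayleigh fading.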

III-A AMI of Discrete-Input Channel [15]

If the input symbols follow a discrete probability distribution, the AMI of a general MIMO scheme is expressed as [15]

| (5) |

where

| (6) |

is the -th element of a codebook , and is the transmission rate.
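The code below sketches how the discrete-input AMI is usually evaluated. It assumes that (5) and (6) take the widely used form of [15]:

I = R − 2^(−R) Σ_a E[log2 Σ_b exp(ψ_{a,b})], with ψ_{a,b} = (‖v‖² − ‖H(x_a − x_b) + v‖²)/σ²,

where σ² is the noise variance; these symbols are ours. The average over a is replaced by uniform sampling of the transmitted index, and the inner sum uses log-sum-exp for numerical stability.

```python
import math
import random

def crandn(rng, var=1.0):
    """One sample of a circularly symmetric complex Gaussian CN(0, var)."""
    s = math.sqrt(var / 2.0)
    return complex(rng.gauss(0.0, s), rng.gauss(0.0, s))

def logsumexp(vals):
    """Numerically stable log(sum(exp(v))) via the max-shift trick."""
    m = max(vals)
    return m + math.log(sum(math.exp(v - m) for v in vals))

def discrete_ami(codebook, n_rx, snr_db, n_trials=2000, seed=0):
    """Monte Carlo estimate of the discrete-input AMI [bits per channel use].

    codebook: list of equiprobable codewords, each a list of M complex entries.
    Assumes H with i.i.d. CN(0, 1) entries and noise CN(0, sigma2), sigma2 = 10^(-SNR/10).
    """
    rng = random.Random(seed)
    n_tx = len(codebook[0])
    rate = math.log2(len(codebook))
    sigma2 = 10 ** (-snr_db / 10)
    acc = 0.0
    for _ in range(n_trials):
        H = [[crandn(rng) for _ in range(n_tx)] for _ in range(n_rx)]
        v = [crandn(rng, sigma2) for _ in range(n_rx)]
        xa = codebook[rng.randrange(len(codebook))]  # transmitted codeword
        vv = sum(abs(z) ** 2 for z in v)
        psi = []
        for xb in codebook:
            # || H (x_a - x_b) + v ||^2 for this candidate x_b
            e = sum(abs(sum(H[n][m] * (xa[m] - xb[m]) for m in range(n_tx)) + v[n]) ** 2
                    for n in range(n_rx))
            psi.append((vv - e) / sigma2)
        acc += logsumexp(psi) / math.log(2)  # log2 of the inner sum
    return rate - acc / n_trials

bpsk = [[1 + 0j], [-1 + 0j]]
print(discrete_ami(bpsk, 1, 10.0))
```

For BPSK over a single-antenna Rayleigh channel, the estimate approaches 1 bit at high SNR and 0 bits at very low SNR.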

III-B AMI of Continuous-Input Channel

In the following, we derive the AMI of GQSM for continuous-input continuous-output channels. If the input symbols follow a continuous probability distribution, then, similarly to [12, Eq. (31)], the AMI of GQSM can be decomposed into the AMI of the symbols and that of the APs as

| (7) |

First, we derive the AMI of the symbols. From (7), we obtain

| (8) |

which is expressed in terms of the entropies of the random variables involved. By drawing samples of the input symbols from their distribution, the argument of the expectation can be approximated by Monte Carlo integration [1, 11] as

| (9) |

Substituting the Monte Carlo approximation (9) into (8) yields

| (10) |
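The difficulty hidden in (9) and (10) can be seen in a scalar analogue where everything is known in closed form. The Python sketch below assumes a single antenna, y = h s + v with s ~ CN(0, 1), so that log p(y) is exact. It compares this exact value with the Monte Carlo estimate log((1/N) Σ_i p(y | s_i)), computed via log-sum-exp. This illustrates the type of estimator only; it is not the GQSM expression itself, and all names are hypothetical.

```python
import math
import random

def crandn(rng, var=1.0):
    """One sample of a circularly symmetric complex Gaussian CN(0, var)."""
    s = math.sqrt(var / 2.0)
    return complex(rng.gauss(0.0, s), rng.gauss(0.0, s))

def logsumexp(vals):
    """Numerically stable log(sum(exp(v))) via the max-shift trick."""
    m = max(vals)
    return m + math.log(sum(math.exp(v - m) for v in vals))

def log_py_exact(y, h, sigma2):
    """log p(y) for y = h s + v, s ~ CN(0, 1), v ~ CN(0, sigma2): y ~ CN(0, |h|^2 + sigma2)."""
    c = abs(h) ** 2 + sigma2
    return -abs(y) ** 2 / c - math.log(math.pi * c)

def log_py_mc(y, h, sigma2, n_samples, rng):
    """Monte Carlo estimate log((1/N) sum_i p(y | s_i)) with s_i ~ CN(0, 1)."""
    terms = [-abs(y - h * crandn(rng)) ** 2 / sigma2 for _ in range(n_samples)]
    return logsumexp(terms) - math.log(n_samples) - math.log(math.pi * sigma2)

def mean_abs_error(snr_db, n_samples=10, n_trials=200, seed=1):
    """Average |MC estimate - exact value| of log p(y) over random (h, s, v)."""
    rng = random.Random(seed)
    sigma2 = 10 ** (-snr_db / 10)
    err = 0.0
    for _ in range(n_trials):
        h = crandn(rng)
        y = h * crandn(rng) + crandn(rng, sigma2)
        err += abs(log_py_mc(y, h, sigma2, n_samples, rng) - log_py_exact(y, h, sigma2))
    return err / n_trials

for snr_db in (0, 10, 20, 30):
    print(snr_db, mean_abs_error(snr_db))
```

With N = 10 samples, the mean absolute error at 30 dB is far larger than at 0 dB, which is the behavior analyzed in Section III-C.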

Second, we derive the AMI of the APs. From (7), we obtain

| (11) |

Using Bayes’ theorem, the conditional probability in (11) is expressed as

| (12) |

Assuming that the APs are chosen uniformly at random, i.e., that every AP is selected with equal probability, we obtain

| (13) |

Substituting (13) into (11) yields

| (14) |

Overall, from (10) and (14), the AMI of GQSM for continuous-input channels is derived as

| (15) |

where the expected values are calculated using the Monte Carlo method.
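To illustrate the AP term, the following sketch evaluates I(AP; y | H) = log2 Q + E[log2 P(AP | y, H)]. It considers the special case of SM with a circularly symmetric Gaussian symbol (K = 1, Q = M). In this case, Bayes' theorem with a uniform prior reduces to a softmax over the per-AP log-likelihoods. This is our simplified example: for GQSM with different real and imaginary APs, the conditional density of y is an improper Gaussian, which this sketch does not model. The function names are hypothetical.

```python
import math
import random

def crandn(rng, var=1.0):
    """One sample of a circularly symmetric complex Gaussian CN(0, var)."""
    s = math.sqrt(var / 2.0)
    return complex(rng.gauss(0.0, s), rng.gauss(0.0, s))

def loglik_sm(y, h, sigma2):
    """log p(y | AP) for y = h s + v, s ~ CN(0, 1), v ~ CN(0, sigma2 I).

    Covariance C = h h^H + sigma2 I; Sherman-Morrison and the matrix determinant
    lemma give y^H C^{-1} y and log det C without forming C explicitly.
    """
    n = len(y)
    hh = sum(abs(x) ** 2 for x in h)
    hy = sum(x.conjugate() * z for x, z in zip(h, y))  # h^H y
    quad = (sum(abs(z) ** 2 for z in y) - abs(hy) ** 2 / (sigma2 + hh)) / sigma2
    logdet = (n - 1) * math.log(sigma2) + math.log(sigma2 + hh)
    return -quad - logdet - n * math.log(math.pi)

def ap_ami_sm(M, N, snr_db, n_trials=4000, seed=0):
    """Monte Carlo estimate of log2 Q + E[log2 P(AP | y, H)] for SM (Q = M)."""
    rng = random.Random(seed)
    sigma2 = 10 ** (-snr_db / 10)
    acc = 0.0
    for _ in range(n_trials):
        cols = [[crandn(rng) for _ in range(N)] for _ in range(M)]  # columns of H
        q = rng.randrange(M)  # uniformly chosen AP (the active antenna)
        s = crandn(rng)
        y = [cols[q][n] * s + crandn(rng, sigma2) for n in range(N)]
        ll = [loglik_sm(y, h, sigma2) for h in cols]
        # Bayes' theorem with a uniform prior: log P(q | y) = ll[q] - logsumexp(ll)
        mx = max(ll)
        log_post = ll[q] - (mx + math.log(sum(math.exp(v - mx) for v in ll)))
        acc += log_post / math.log(2)
    return math.log2(M) + acc / n_trials

print(ap_ami_sm(4, 2, 10.0))
```

The estimate never exceeds log2 M = 2 bits for M = 4 and approaches it at high SNR. This is in line with the saturation of the AP term discussed in Section IV.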

III-C Error Analysis of Monte Carlo Integration

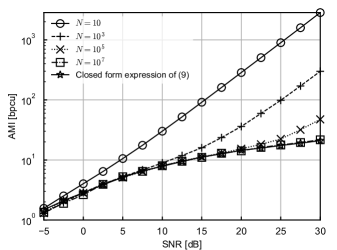

The expression in (III-B) appears straightforward; however, it is difficult to calculate with high accuracy. Fig. 1 shows the AMI of the symbols calculated from (10) using Monte Carlo integration for different sample sizes, where each element of the input symbols independently follows a complex Gaussian distribution. Fig. 1 also shows the AMI of the symbols calculated using the closed-form expression of (III-B), which is discussed in Section III-D. When the sample size is relatively small, the approximated AMI of the symbols increases exponentially beyond a certain SNR. This result indicates that the calculation error increases exponentially with respect to SNR, as analyzed below.

First, the expectation in (III-B) is rewritten in the following form:

| (16) |

Here, all variables in (III-B) other than the sampled input symbols can be regarded as given constants. The reproductive property of the Gaussian distribution states that a linear combination of independent Gaussian random variables is itself Gaussian. Therefore, if each element of the input symbols independently follows a complex Gaussian distribution, the vector inside the norm is a random vector whose elements follow complex Gaussian distributions with different variances. As a result, its squared norm is a sum of squared Gaussian random variables. To investigate the asymptotic behavior of (III-B) with respect to SNR, we consider the following random variable as a simplified model of (III-C):

| (17) |

where the random variable involved follows a Gaussian distribution. Now, we introduce a new random variable, whose expected value is given by

| (18) |

where the cumulative distribution function of a half-Gaussian distribution with unit variance is involved. From (18), this expected value increases linearly with SNR. Here, the following inequality holds:

| (19) |

Since , we have

| (20) |

If the sample size is constant, then asymptotically holds because of . Also, for the expected value of , the following approximation holds:

| (21) |

Thus, the expected value in (21) asymptotically increases linearly with SNR; in other words, it increases exponentially with SNR expressed in decibels.
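The key inequality behind this behavior, max_i a_i ≤ log Σ_i exp(a_i) ≤ max_i a_i + log N, can be checked numerically. The sketch below does not reproduce (17) to (21). Instead, it assumes the illustrative terms a_i = ρ z_i² with z_i ~ N(0, 1). It shows that the log-sum-exp divided by ρ settles to the sample maximum of z_i², so the log-sum-exp itself grows linearly in ρ. The function names are hypothetical.

```python
import math
import random

def logsumexp(vals):
    """Numerically stable log(sum(exp(v))) via the max-shift trick."""
    m = max(vals)
    return m + math.log(sum(math.exp(v - m) for v in vals))

def mean_lse_over_rho(snr_db, n_samples=16, n_trials=500, seed=0):
    """Average of LSE(rho * z_i^2) / rho over trials (illustrative model, not (17))."""
    rng = random.Random(seed)  # same seed -> same z_i draws for every SNR
    rho = 10 ** (snr_db / 10)
    total = 0.0
    for _ in range(n_trials):
        a = [rho * rng.gauss(0.0, 1.0) ** 2 for _ in range(n_samples)]
        lse = logsumexp(a)
        # Standard bound: max_i a_i <= LSE <= max_i a_i + log N
        assert max(a) <= lse <= max(a) + math.log(n_samples) + 1e-9
        total += lse
    return total / n_trials / rho

for snr_db in (0, 10, 20, 30, 40):
    print(f"{snr_db:>2} dB: E[LSE]/rho = {mean_lse_over_rho(snr_db):.4f}")
```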

The above analysis explains the phenomenon observed in Fig. 1. Calculating an expected value inside a log-sum-exp, as in (III-B), requires a sufficiently large sample size because the error increases with SNR. Consequently, calculating the AMI of GQSM accurately at high SNRs is computationally demanding.

III-D Closed-Form Expression of (III-B)

As shown above, the calculation error in (III-B) induced by Monte Carlo integration increases exponentially with SNR. However, a closed-form expression of (III-B) can be derived, which eliminates this error and provides a more accurate AMI value. Assuming that each element of the input symbols independently follows a complex Gaussian distribution, (III-B) can be expressed as

| (22) |

| (23) |

In general, (22) can be expressed in closed form by repeatedly applying the Gaussian integral, once for each real-valued integration variable. By substituting the closed-form expression of (22) into (III-B), the AMI of GQSM for continuous-input channels can be obtained with minimal error. Although the inner expected value in (III-B) is obtained in closed form, the remaining expectation must still be calculated by the Monte Carlo method; however, this step induces no significant calculation error. To carry out the repeated Gaussian integrals, we used symbolic computation with the computer algebra system GiNaC [16] and the linear algebra library Eigen [17].
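Each step of the repeated integration uses the one-dimensional identity ∫ exp(−a x² + b x) dx = √(π/a) exp(b²/(4a)) for a > 0. The Python sketch below applies this identity twice to a two-dimensional quadratic exponent, mirroring in miniature the repeated integration described above. It checks the result against the joint formula π/√det(A) · exp(bᵀA⁻¹b/4) and against a brute-force quadrature. It uses plain floating-point arithmetic rather than GiNaC, and the coefficients are arbitrary.

```python
import math

def gauss_1d(a, b):
    """int_{-inf}^{inf} exp(-a x^2 + b x) dx = sqrt(pi / a) * exp(b^2 / (4 a)), for a > 0."""
    if a <= 0:
        raise ValueError("a must be positive for the integral to converge")
    return math.sqrt(math.pi / a) * math.exp(b * b / (4.0 * a))

def gauss_2d_iterated(a11, a12, a22, b1, b2):
    """Integrate exp(-(a11 x1^2 + 2 a12 x1 x2 + a22 x2^2) + b1 x1 + b2 x2) over R^2
    by applying the 1-D identity twice: first over x1, then over x2."""
    # Over x1 (x2 fixed) the linear coefficient is b1 - 2 a12 x2. Expanding
    # (b1 - 2 a12 x2)^2 / (4 a11) leaves a Gaussian in x2 with these coefficients:
    a_x2 = a22 - a12 * a12 / a11
    b_x2 = b2 - b1 * a12 / a11
    const = b1 * b1 / (4.0 * a11)
    return math.sqrt(math.pi / a11) * math.exp(const) * gauss_1d(a_x2, b_x2)

def gauss_2d_joint(a11, a12, a22, b1, b2):
    """Joint formula pi / sqrt(det A) * exp(b^T A^{-1} b / 4) with A = [[a11, a12], [a12, a22]]."""
    det = a11 * a22 - a12 * a12
    quad = (a22 * b1 * b1 - 2.0 * a12 * b1 * b2 + a11 * b2 * b2) / det
    return math.pi / math.sqrt(det) * math.exp(quad / 4.0)

def trapezoid(f, lo, hi, n):
    """Composite trapezoidal rule with n sub-intervals (brute-force check)."""
    h = (hi - lo) / n
    return h * (0.5 * (f(lo) + f(hi)) + sum(f(lo + i * h) for i in range(1, n)))

coeffs = (2.0, 0.5, 1.5, 1.0, -0.3)  # arbitrary positive-definite example
print(gauss_2d_iterated(*coeffs), gauss_2d_joint(*coeffs))
print(trapezoid(lambda x: math.exp(-2.0 * x * x + x), -20.0, 20.0, 20000), gauss_1d(2.0, 1.0))
```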

As an example, in the case of , (22) is given by (III-D), where is the -th column vector of , and and are the real and imaginary parts of the element of . The real and imaginary parts of the input symbol are denoted by and , while the real and imaginary parts of the -th element of the received signal are denoted by and , respectively. The constant parameters in (III-D) are defined as

| (24) |

| (25) |

| (26) |

| (27) |

Integrating (III-D) with respect to gives

| (28) |

where

| (29) |

Finally, integrating (28) with respect to gives the closed-form expression of (22) as

| (30) |

where

| (31) |

IV Numerical Results

In this section, we compare the AMI of QSM and GQSM with those of related SM schemes.³ All the results were obtained through Monte Carlo simulations over independent channel realizations. Each element of the input symbols was assumed to independently follow a complex Gaussian distribution.

³ The AMI of QSM derived in [13] is omitted here since it is equal to the MIMO channel capacity [14] under a certain condition.

First, Fig. 2 shows the AMI of QSM and SM. The dashed and dotted lines in the figure correspond to the AMI of the symbols and that of the APs in (III-B), respectively. The figure shows that the difference in AMI between QSM and SM is mainly attributable to the AMI of the APs, with little difference in the AMI of the symbols. Since the AMI of the APs is bounded by the entropy of a finite number of APs, it converged to the base-2 logarithm of the number of APs at high SNRs.

Next, Fig. 3 shows the AMI of GSM and GQSM. Note that the AMI of the symbols of GSM is equal to the ergodic capacity of MIMO. The results were basically the same as those shown in Fig. 2, except that the AMI of the symbols of GQSM was slightly smaller than that of GSM. This is because GQSM is more susceptible to channel fading than GSM when the APs of the real and imaginary parts do not match. Specifically, GSM is affected only by the channel elements of its active antennas, whereas GQSM can be affected by up to twice as many channel elements. Interestingly, below a certain SNR, the decrease in the AMI of the symbols exceeded the increase in the AMI of the APs, so the AMI of GQSM was lower than that of GSM.⁴

⁴ In Figs. 2 and 3, the number of APs is equal to the cardinality of all candidates, so there is no room for designing APs.
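The difference in the number of channel columns involved can be made concrete with a small combinatorial sketch. It assumes that both AP sets contain all C(M, K) candidates and that the real- and imaginary-part APs are chosen independently and uniformly. The sketch averages the number of distinct antennas touched by one GQSM codeword, |A_R ∪ A_I|, which is always K for GSM. For independent uniform APs, this average equals 2K − K²/M. The function names are hypothetical.

```python
import itertools
from fractions import Fraction

def mean_distinct_active(M, K):
    """Average |A_R ∪ A_I| over all ordered pairs of K-subsets of M antennas.

    Models a GQSM codeword whose real- and imaginary-part APs are drawn
    independently and uniformly from all C(M, K) candidates (an assumption).
    """
    aps = [frozenset(c) for c in itertools.combinations(range(M), K)]
    total = sum(len(a | b) for a in aps for b in aps)
    return Fraction(total, len(aps) ** 2)

def mean_distinct_active_formula(M, K):
    """Closed form 2K - K^2 / M, since E|A_R ∩ A_I| = M * (K / M)^2."""
    return Fraction(2 * K) - Fraction(K * K, M)

for M, K in [(4, 1), (4, 2), (8, 2)]:
    print(M, K, mean_distinct_active(M, K), mean_distinct_active_formula(M, K))
```

For M = 4 and K = 2, GQSM touches 3 antennas on average, versus 2 for GSM.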

Finally, Fig. 4 shows the AMI of GQSM for the different AP designs [7, 9, 10]. For simplicity, the same AP set was used for both the real and imaginary parts of the codewords. As shown, the differences in AMI appeared to be small. To analyze these differences further, Fig. 5 focuses on the differences in AMI among the three AP designs. It shows that the differences depended on the SNR and were maximized at medium SNRs. The ILP [10] and equiprobable [9] designs equalized the activation probabilities of the transmit antennas. This also increased the probability of being affected by channel fading, leading to a slight decrease in the AMI of the symbols. However, this decrease was negligible compared with the increase in the AMI of the APs, resulting in an overall AMI improvement over the combinatorial design [7].

V Conclusions

In this letter, we derived the AMI of GQSM for continuous-input channels, which clarified a significant difference in AMI between GQSM and GSM at high SNRs. Additionally, the impact of AP designs on AMI was maximized at medium SNRs, and the maximum AMI was achieved by the ILP design. The analyses given in this letter are applicable to the schemes subsumed by GQSM, such as QSM and OFDM-I/Q-IM.

References

- [1] N. Ishikawa, S. Sugiura, and L. Hanzo, “50 years of permutation, spatial and index modulation: From classic RF to visible light communications and data storage,” IEEE Communications Surveys & Tutorials, vol. 20, no. 3, pp. 1905–1938, 2018.

- [2] M. Wen et al., “A survey on spatial modulation in emerging wireless systems: Research progresses and applications,” IEEE Journal on Selected Areas in Communications, vol. 37, no. 9, pp. 1949–1972, 2019.

- [3] J. Jeganathan, A. Ghrayeb, and L. Szczecinski, “Generalized space shift keying modulation for MIMO channels,” in IEEE International Symposium on Personal, Indoor and Mobile Radio Communications, Cannes, France, Sep. 2008, pp. 1–5.

- [4] R. Mesleh, S. S. Ikki, and H. M. Aggoune, “Quadrature spatial modulation,” IEEE Transactions on Vehicular Technology, vol. 64, no. 6, pp. 2738–2742, 2015.

- [5] F. R. Castillo-Soria et al., “Generalized quadrature spatial modulation scheme using antenna grouping,” ETRI Journal, vol. 39, no. 5, pp. 707–717, 2017.

- [6] R. Abu-alhiga and H. Haas, “Subcarrier-index modulation OFDM,” in 2009 IEEE 20th International Symposium on Personal, Indoor and Mobile Radio Communications, Sep. 2009, pp. 177–181.

- [7] E. Başar et al., “Orthogonal frequency division multiplexing with index modulation,” IEEE Transactions on Signal Processing, vol. 61, no. 22, pp. 5536–5549, 2013.

- [8] B. Zheng et al., “Low-complexity ML detector and performance analysis for OFDM with in-phase/quadrature index modulation,” IEEE Communications Letters, vol. 19, no. 11, pp. 1893–1896, 2015.

- [9] M. Wen et al., “Equiprobable subcarrier activation method for OFDM with index modulation,” IEEE Communications Letters, vol. 20, no. 12, pp. 2386–2389, 2016.

- [10] N. Ishikawa, “IMToolkit: An open-source index modulation toolkit for reproducible research based on massively parallel algorithms,” IEEE Access, vol. 7, pp. 93830–93846, 2019.

- [11] D. A. Basnayaka, M. Di Renzo, and H. Haas, “Massive but few active MIMO,” IEEE Transactions on Vehicular Technology, vol. 65, no. 9, pp. 6861–6877, 2016.

- [12] B. Shamasundar and A. Nosratinia, “On the capacity of index modulation,” IEEE Transactions on Wireless Communications, vol. 21, no. 11, pp. 9114–9126, 2022.

- [13] A. Younis et al., “Quadrature spatial modulation for 5G outdoor millimeter–wave communications: Capacity analysis,” IEEE Transactions on Wireless Communications, vol. 16, no. 5, pp. 2882–2890, 2017.

- [14] E. Telatar, “Capacity of multi-antenna Gaussian channels,” European Transactions on Telecommunications, vol. 10, no. 6, pp. 585–595, 1999.

- [15] S. X. Ng and L. Hanzo, “On the MIMO channel capacity of multidimensional signal sets,” IEEE Transactions on Vehicular Technology, vol. 55, no. 2, pp. 528–536, 2006.

- [16] J. Vollinga, “GiNaC—symbolic computation with C++,” Nuclear Instruments and Methods in Physics Research Section A: Accelerators, Spectrometers, Detectors and Associated Equipment, vol. 559, no. 1, pp. 282–284, 2006.

- [17] G. Guennebaud, B. Jacob et al., “Eigen v3,” 2010.