On Sub-optimality of Random Binning for Distributed Hypothesis Testing

Abstract

We investigate the quantize and binning scheme, known as the Shimokawa-Han-Amari (SHA) scheme, for the distributed hypothesis testing. We develop tools to evaluate the critical rate attainable by the SHA scheme. For a product of binary symmetric double sources, we present a sequential scheme that improves upon the SHA scheme.

I Introduction

In [1], Berger introduced a framework of statistical decision problems under communication constraint. Inspired by his work, many researchers studied various problems of this kind [2, 3, 4, 5, 6, 7, 8]; see [9] for a thorough review until 90s. More recently, the problem of communication constrained statistics has regained interest of researchers; see [10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24] and references therein.

In this paper, we are interested in the most basic setting of hypothesis testing in which the sender and the receiver observe correlated sources and , respectively, and the observation is distributed according to the product of the null hypothesis or the alternative hypothesis ; the sender transmit a message at rate , and the receiver decide the hypotheses based on the message and its observation.

In [2], Ahlswede and Csiszár introduced the so-called quantization scheme, and showed that the quantization scheme is optimal for the testing against independence, i.e., the alternative hypothesis is . In [3], Han introduced an improved version of the quantization scheme that fully exploit the knowledge of the marginal types. In [25], Shimokawa, Han, and Amari introduced the quantize and binning scheme, which we shall call the SHA scheme in the following. For a long time, it has not been known if the SHA is optimal or not. In [13], Rahman and Wagner showed that the SHA scheme is optimal for the testing against conditional independence.111In fact, for the testing against conditional independence, it suffices to consider a simpler version of quantize and binning scheme than the SHA scheme. More recently, Weinberger and Kochman [17] (see also [26]) derived the optimal exponential trade-off between the type I and type II error probabilities of the quantize and binning scheme. Since the decision is conducted based on the optimal likelihood decision rule in [17], their scheme may potentially provide a better performance than the SHA scheme; however, since the expression involves complicated optimization over multiple parameters, and a strict improvement has not been clarified so far.

A main objective of this paper is to investigate if the SHA scheme is optimal or not. In fact, it turns out that the answer is negative, and there exists a scheme that improves upon the SHA scheme. Since the general SHA scheme is involved, we focus on the critical rate, i.e., the minimum rate that is required to attain the same type II exponent as the Stein exponent of the centralized testing. Then, under a certain non-degeneracy condition, we will prove that the auxiliary random variable in the SHA scheme must coincide with the source itself, i.e., we must use the binning without quantization. By leveraging this simplification, for the binary symmetric double sources (BSDS), we derive a closed form expression of the critical rate attainable by the SHA scheme. Next, we will consider a product of the BSDS such that each component of the null hypothesis and the alternative hypothesis is “reversely aligned.” Perhaps surprisingly, it turns out that the SHA is sub-optimal for such hypotheses. Our improved scheme is simple, and it uses the SHA scheme for each component in a sequential manner; however, it should be emphasized that we need to conduct binning of each component separately, and our scheme is not a naive random binning.

II Problem Formulation

We consider the statistical problem of testing the null hypothesis on finite alphabets versus the alternative hypothesis on the same alphabet. The i.i.d. random variables distributed according to either or are observed by the sender and the receiver; the sender encodes the observation to a message by an encoding function

and the receiver decides whether to accept the null hypothesis or not by a decoding function

When the block length is obvious from the context, we omit the subscript . For a given testing scheme , the type I error probability is defined by

and the type II error probability is defined by

In the following, (or ) means that is distributed according to (or ).

In the problem of distributed hypothesis testing, we are interested in the trade-off among the communication rate and the type I and type II error probabilities. Particularly, we focus on the optimal trade-off between the communication rate and the exponent of the type II error probability when the type I error probability is vanishing, which is sometimes called “Stein regime”.

Definition 1

A pair of the communication rate and the exponent is defined to be achievable if there exists a sequence of testing schemes such that

and

The achievable region is the set of all achievable pair .

For a given communication rate , the maximum exponent is denoted by

When there is no communication constraint, i.e., , the sender can send the full observation without any encoding, and the receiver can use a scheme for the standard (centralized) hypothesis testing. In such a case, the Stein exponent is attainable. We define the critical rate as the minimum communication rate required to attain the Stein exponent:

III On Evaluation of SHA Scheme

When the communication rate is not sufficient to send , the standard approach is to quantize using a test channel for some finite auxiliary alphabet . For and with , let

In [3], a testing scheme based on quantization was proposed, and the following achievability bound was derived: for any test channel ,

In [25], a testing scheme based on quantization and binning was proposed, and the following achievability bound was derived: for any test channel such that ,

where , and

Particularly, by taking to be noiseless test channel, i.e., , we have

| (1) | ||||

| (2) |

where

In the following, we focus on the critical rate. Under some mild conditions, in order to attain the Stein exponent, we must take in the quantization-bining bound, i.e., no quantization. In the rest of this section, we prove this claim.

Suppose that and have full support. Let be a function on defined by222We suppose that alphabets are and ; taking as a special symbol in (3) is just notational convenience.

| (3) |

Proposition 2

Suppose that, for every , rows and are distinct. Then, only if there does not exist and such that .

Proof:

Since we assumed that and have full support and since , and have the same support, which we denote by . Let . Note that and have full support. Let be the linear family of distributions satisfying

for every and

for every . Then, we can write as

| (4) |

If

then must be the (unique) minimizer in (4). Then, the I-projection theorem (cf. [27, Theorem 3.2]) implies that the minimizer lies in the exponential family generated by and the constraints of , i.e., it has of the form

for some . Equivalently, this condition can be written as

for some functions on and on . If there exist and such that , then, for every , we have

which contradict the assumption that and are distinct. ∎

Since the quantization-binning bound is at most , when the assumption of Proposition 2 is satisfied, is the only choice of test channel to attain the Stein exponent.333Even though we can take a redundant test channel such that for any , such a test channel does not improve the attainable exponent.

In Proposition 2, the assumption that every raws of are distinct cannot be replaced by the assumption that every raws of the log-likelihood function

are distinct; for instance, when and are given by

for some , then every raws are distinct but . In fact, as is shown in the following Proposition 3, the symbols and in this example can be merged while maintaining . Even though Proposition 3 is not used in the rest of the paper, it may be of independent interest.

Proposition 3

Let be a function such that if and only if , and let . Then, for any satisfying and , we have

| (5) | ||||

Particularly, we have

| (6) |

Proof:

Remark 4

Introducing the function as in (3) is motivated by the problem of distributed function computing. Note that, in order to conduct the likelihood ratio test, the receiver need not compute the log-likelihood ratios for a given observation ; instead, it suffices to compute the summation

In the terminology of distributed computing [28, Definition 4], we can verify that the function is -informative.

IV Binary Symmetric Double Source (BSDS)

Let , and let and be given by

for and . Since and

the assumption of Proposition 2 is satisfied. Thus, we need to set in the quantization-binning bound to attain the Stein exponent. In the minimization of (1), since and are the uniform distribution, the only free parameter is the crossover probability . Then, we can easily find that the binning bound for BSDS is

| (9) | ||||

for . Furthermore, this bound implies that the critical rate attainable by the SHA scheme is

| (12) |

V Product of BSDS

Next, let us consider the case where and , and and for are DSBSs with parameters , respectively. Particularly, we consider the case where , , where . Note that, for the product source, the function defined by (3) can be decomposed as

where

Furthermore, we have , , and . Thus, by denoting , we have

and the assumption of Proposition 2 is satisfied. Thus, we need to set in the quantization-binning bound to attain the Stein exponent.

Since and , the conditional entropies and with respect to and satisfy . Thus, we find that the inner minimization of the binning bound is attained by , and

Furthermore, this bound implies that the critical rate attainable by the SHA scheme is

| (13) |

VI Sequential Scheme for Product of BSDS

Now, we describe a modified version of SHA scheme for the product of BSDS. For , let be the SHA scheme (without quantization) for versus . For a given rate , we split the rate as , and consider sequential scheme constructed from as follows. Upon observing , the sender transmit and .444Note that and include indices of random binning as well as marginal types of and . Then, upon receiving and observing , the receiver decides if and . The type I and type II error probabilities of this sequential scheme can be evaluated as

by the union bound, and

Thus, if the type I error probabilities of each scheme is vanishing, then the type I error probability is also vanishing. On the other hand, the type II exponent of the sequential scheme is the summation of the type II exponent of each scheme.

As in Section V, suppose that and . Furthermore, let . Then, from the above argument, and (9), we find that the following exponent is attainable by the sequential scheme:

| (14) |

for and . By setting and , we can derive the following bound on the critical rate:

| (15) |

Interestingly, the bound on the critical rate in (15) improves upon the bound in (13).

From an operational point of view, this improvement can be explained as follows. In the SHA scheme (without quantization), we use the minimum entropy decoder to compute an estimate of ; then, if the joint type is close to , we accept the null hypothesis. When the empirical conditional entropy is such that , even if there exists satisfying , the type II testing error does not occur though the receiver may erroneously compute .555Note that implies that is not close to . Consequently, the type II testing error may occur only if satisfies .

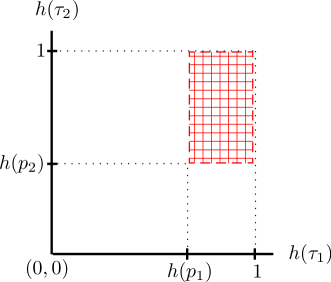

For the product of BSDSs, when the types of modulo sums and are and , the type II testing error may occur only if ; see Fig. 1a(a). In the modified SHA scheme, and are binned and estimated separately. Because of this, the constraint of type II testing error event become more stringent, i.e., and ; see Fig. Fig. 1a(b).

VII Discussion

In this paper, we have developed tools to evaluate the critical rate attainable by the SHA scheme, and exemplified that the SHA scheme is sub-optimal for a product of BSDSs. A future problem is to generalize the idea of sequential scheme in Section VI and develop a new achievability scheme for the distributed hypothesis testing. Another future problem is a potential utility of the scheme in [17]; as we mentioned in Section I, it has a potential to improve upon the SHA scheme, but strict improvement is not clear since the expression involves complicated optimization over multiple parameters.

Lastly, it should be pointed out that certain kinds of “structured” random coding is known to improve upon the naive random coding in the context of the mismatched decoding problem; eg. see [29, 30] and references therein. Even though there is no direct connection between the two problems, it might be interesting to pursue a mathematical connection between these problems.

Acknowledgment

The author appreciates Marat Burnashev for valuable discussions at an early stage of the work. The author also thanks Te Sun Han and Yasutada Oohama for fruitful discussions.

References

- [1] T. Berger, “Decentralized estimation and decision theory,” in Presented at the IEEE 7th Spring Workshop on Information Theory, Mt. Kisco, NY, September 1979.

- [2] R. Ahlswede and I. Csiszár, “Hypothesis testing with communication constraints,” IEEE Trans. Inform. Theory, vol. 32, no. 4, pp. 533–542, July 1986.

- [3] T. S. Han, “Hypothesis testing with multiterminal data compression,” IEEE Trans. Inform. Theory, vol. 33, no. 6, pp. 759–772, November 1987.

- [4] Z. Zhang and T. Berger, “Estimation via compressed information,” IEEE Trans. Inform. Theory, vol. 34, no. 2, pp. 198–211, March 1988.

- [5] S. Amari and T. S. Han, “Statistical inference under multiterminal rate restrictions: A differential geometric approach,” IEEE Trans. Inform. Theory, vol. 35, no. 2, pp. 217–227, March 1989.

- [6] S. Amari, “Fisher information under restriction of Shannon information in multi-terminal situations,” Annals of the Institute of Statistical Mathematics, vol. 41, no. 4, pp. 623–648, 1989.

- [7] T. S. Han and K. Kobayashi, “Exponential-type error probabilities for multiterminal hypothesis testing,” IEEE Trans. Inform. Theory, vol. 35, no. 1, pp. 2–14, January 1989.

- [8] H. M. H. Shalaby and A. Papamarcou, “Multiterminal detection with zero-rate data compression,” IEEE Trans. Inform. Theory, vol. 38, no. 2, pp. 254–267, March 1992.

- [9] T. S. Han and S. Amari, “Statistical inference under multiterminal data compression,” IEEE Trans. Inform. Theory, vol. 44, no. 6, pp. 2300–2324, October 1998.

- [10] S. Amari, “On optimal data compression in multiterminal statistical inference,” IEEE Trans. Inform. Theory, vol. 57, no. 9, pp. 5577–5587, September 2011.

- [11] M. Ueta and S. Kuzuoka, “The error exponent of zero-rate multiterminal hypothesis testing for sources with common information,” in Proc. ISITA 2014, Melbourne, Australia, 2014, pp. 559–563.

- [12] Y. Polyanskiy, “Hypothesis testing via a comparator,” in Proc. IEEE Int. Symp. Inf. Theory 2012, Cambridge, MA, 2012, pp. 2206–2210.

- [13] M. S. Rahman and A. B. Wagner, “On the optimality of binning for distributed hypothesis testing,” IEEE Trans. Inform. Theory, vol. 58, no. 10, pp. 6282–6303, October 2012.

- [14] Y. Xiang and Y.-H. Kim, “Interactive hypothesis testing against independence,” in IEEE International Symposium on Information Theory, 2013, pp. 2840–2844.

- [15] W. Zhao and L. Lai, “Distributed testing with cascaded encoders,” IEEE Trans. Inform. Theory, vol. 64, no. 11, pp. 7339–7348, November 2018.

- [16] S. Watanabe, “Neyman-Pearson test for zero-rate multiterminal hypothesis testing,” IEEE Trans. Inform. Theory, vol. 64, no. 7, pp. 4923–4939, July 2018.

- [17] N. Weinberger and Y. Kochman, “On the reliability function of distributed hypothesis testing under optimal detection,” IEEE Trans. Inform. Theory, vol. 65, no. 8, pp. 4940–4965, August 2019.

- [18] S. Salehkalaibar, M. Wigger, and L. Wang, “Hypothesis testing over the two-hop relay network,” IEEE Trans. Inform. Theory, vol. 65, no. 7, pp. 4411–4433, July 2019.

- [19] U. Hadar, J. Liu, Y. Polyanskiy, and O. Shayevitz, “Communication complexity of estimating correlations,” in Proceedings of the 51st Annual ACM Symposium on Theory of Computing (STOC ’19). ACM Press, 2019, pp. 792–803.

- [20] U. Hadar and O. Shayevitz, “Distributed estimation of Gaussian correlations,” IEEE Trans. Inform. Theory, vol. 65, no. 9, pp. 5323–5338, September 2019.

- [21] P. Escamilla, M. Wigger, and A. Zaidi, “Distributed hypothesis testing: Cooperation and concurrent detection,” IEEE Trans. Inform. Theory, vol. 66, no. 12, pp. 7550–7564, December 2020.

- [22] M. V. Burnashev, “New upper bounds in the hypothesis testing problem with information constraints,” Problems of Information Transmission, vol. 56, no. 2, pp. 64–81, 2020.

- [23] S. Sreekumar and D. Gündüz, “Distributed hypothesis testing over discrete memoryless channels,” IEEE Trans. Inform. Theory, vol. 66, no. 4, pp. 2044–2066, April 2020.

- [24] K. R. Sahasranand and H. Tyagi, “Communication complexity of distributed high dimensional correlation testing,” IEEE Trans. Inform. Theory, vol. 67, no. 9, pp. 6082–6095, September 2021.

- [25] H. Shimokawa, T. S. Han, and S. Amari, “Error bound of hypothesis testing with data compression,” in Proceedings of 1994 IEEE International Symposium on Information Theory, 1994, p. 114.

- [26] E. Haim and Y. Kochman, “Binary distributed hypothesis testing via Körner-Marton coding,” in 2016 IEEE Information Theory Workshop, 2016.

- [27] I. Csiszár and P. C. Shields, Information Theory and Statistics: A Tutorial. Now Publishers Inc., 2004.

- [28] S. Kuzuoka and S. Watanabe, “On distributed computing for functions with certain structures,” IEEE Trans. Inform. Theory, vol. 63, no. 11, pp. 7003–7017, November 2017.

- [29] A. Lapidoth, “Mismatched decoding and the multiple-access channel,” IEEE Trans. Inform. Theory, vol. 42, no. 5, pp. 1439–1452, September 1996.

- [30] J. Scarlett, A. Somekh-Baruch, A. Martinez, and A. G. i Fábregas, “A counter-example to the mismatched decoding converse for binary-input discrete memoryless channels,” IEEE Trans. Inform. Theory, vol. 10, no. 10, pp. 5387–5359, October 2015.