On Proximal Causal Learning with Many Hidden Confounders

Abstract

We generalize the proximal g-formula of Miao, Geng, and Tchetgen Tchetgen (2018) for causal inference under unobserved confounding using proxy variables. Specifically, we show that the formula holds true for all causal models in a certain equivalence class, and this class contains models in which the total number of levels for the set of unobserved confounders can be arbitrarily larger than the number of levels of each proxy variable. Although straightforward to obtain, the result can be significant for applications. Simulations corroborate our formal arguments.

1 Introduction

The gold standard in causal inference is randomized control trials. However, randomization can often be impractical, uneconomical, or even unethical, in which case the alternative is to infer the desired causal effects from observational data Hernán and Robins, (2020); Pearl, (2009); Rubin, (2005). In this paper we address the problem of causal inference from observational data when unobserved confounders are present. This is a hard problem in general, but a very relevant one for many applications. In a seminal paper, Miao, Geng, and Tchetgen Tchetgen Miao et al., (2018) demonstrated that the causal effect of a variable on some other variable can be identified nonparametrically even under unobserved confounding, as long as a set of sufficient conditions are satisfied. Their approach, dubbed Proximal Causal Learning Tchetgen Tchetgen et al., (2020), relies on the existence of two observed proxy variables that must be associated to the latent confounders in a certain way. Proximal Causal Learning can be particularly useful for industrial applications, where domain knowledge is often leveraged for reasoning about possible hidden confounders, and a large number of potential proxies are available (e.g., from detailed information on users or items).

As a motivating example from industry, consider users of an online service who perform a specific action, say interacting with a specific element in the service’s UI. Let be a (binary) random variable that models the event that a user undertook the specific action, and let denote the (binary) outcome that we are interested in studying, say if the user subsequently makes a purchase within the service. A causal quantity of interest is the average treatment effect

| (1) |

where the notation implies that we want to simulate the effect on of fixing to a specific value Pearl, (2009). The estimation of the effect in (1) can be difficult when unobserved confounders are present. In our industry example, those confounders could be unobserved external sources of variation such as social buzz or other competing services, as well as unobserved user characteristics such as demographics or price consciousness. Unless we account for such confounders, statistical inference of the treatment effect (1) may be prone to bias.

One way to account for an unobserved confounder is to employ two proxy variables, call them and , that are known to be coupled to in a certain way. In that case, and under certain (sufficient) conditions, the causal quantity is nonparametrically identifiable Miao et al., (2018); Shi et al., (2020); Tchetgen Tchetgen et al., (2020). An example of a causal graph for which identification is possible is shown in Fig. 1(d).

In the case where all variables are discrete, which we assume in this work, one of the conditions for identifiability in Miao et al., (2018) is that each of the two proxies and must have the same number of levels (i.e., cardinality of their range) as the unobserved . In this work we show that this condition can be significantly relaxed. As we elaborate in Section 4, the identification formula of Miao et al., (2018) holds true for all causal models in a certain equivalence class, and this class contains models in which the total number of levels for the set of unobserved confounders can be arbitrarily larger than the number of levels of or . This result, although easy to obtain, can be very important for applications: It opens the way to apply Proximal Causal Learning in a much wider class of problems than previously thought possible.

2 Causal graphs and -calculus

In this section we provide a short description of causal graphs and -calculus, mainly following Bareinboim and Pearl, (2016). A causal graph is defined by a set of unobserved (latent) variables and a set of observed variables, which are assumed to be coupled by local causal dependencies (deterministic functions) that give rise to a directed acyclic graph. For example, the causal graph of Fig. 1(a) involves two observed variables and a latent variable , which are coupled by local functions as indicated by the arrows.

A causal graph allows to predict the effect on a variable of intervening on some other variable by setting . In Pearl’s notation this is written as and it corresponds to the probability that takes the value in a modified causal graph in which has been fixed to value and all its incoming arrows in the original graph have been removed. The quantity can also be expressed as , where is the distribution of the latent variable , and is the potential outcome of unit had been assigned treatment Rubin, (2005).

A notable property of a causal graph is that, regardless of the form of the coupling functions among variables and regardless of the distribution of the latent variables, the distribution of the observed variables must obey certain conditional independence relations, which can be characterized by means of a graphical criterion known as d-separation: A set of nodes is said to block a path if either (i) contains at least one arrow-emitting node that is in or (ii) contains at least one collision node that is outside and has no descendant in . If a set blocks all paths from a set to a set , then we say that d-separates and , in which case it holds that and are independent given , which we write . For example, in Fig. 1(c) the variable d-separates the variables and in the induced graph in which the outgoing arrow from is removed.

Quantities such as can be estimated nonparametrically from the observational distribution using an algebra known as -calculus Pearl, (2009). The latter is a set of rules that stipulate d-separation conditions in certain induced subgraphs of the original graph. An example of -calculus is the ‘backdoor’ criterion for specifying admissible sets of variables: A set is admissible for estimating the causal effect of on if (i) no element of is a descendant of and (ii) the elements of block all backdoor paths from to (those are paths that end with an arrow pointing to ). Using counterfactuals, these two conditions imply (see Bareinboim and Pearl, (2016)), which is known as ‘conditional ignorability’ in the potential outcomes literature Rosenbaum and Rubin, (1983). An admissible set allows expressing the interventional distribution via observational quantities that are directly estimable from the data, using the g-formula Hernán and Robins, (2020):

| (2) |

As an example, in Fig. 1(c) the variable is admissible because (i) it is not a descendant of and (ii) it blocks the single backdoor path from to . Hence we can use (2) to estimate . Note that when all variables are discrete, the required quantities in (2) amount to simple histogram calculations.

The algebra of -calculus has been shown to be complete, meaning that if the rules of -calculus cannot establish a way to write as a functional of , then the effect is not identifiable Pearl, (2009).

3 Proximal Causal Learning

In practical applications, it may be hard to identify an admissible set of variables to use the backdoor g-formula (2), and reality may not be plausibly captured by a graph such as the one in Fig. 1(c) (for example, there might be omitted variables that cause to directly affect ; that would appear in Fig. 1(c) as an extra arrow from to ). In such cases, identification of may nonetheless still be possible if a pair of proxy variables (aka negative controls) are available, such as the variables and in the graph of Fig. 1(d). Assuming all variables are discrete, the corresponding identifiability result, due to Miao, Geng, and Tchetgen Tchetgen Miao et al., (2018), relies on the following assumptions:

- 1 Cardinalities -

-

(the variables have equal number of levels).

- 2 Backdoor -

-

The unobserved variable is admissible, blocking all backdoor paths from to .

- 3 Conditional independence -

-

The observed variables and the unobserved jointly satisfy the following two conditional independence relations:

(i) (ii) - 4 Matrix rank -

-

The matrix , whose th row and th column is , is invertible for each value .

Note that condition 4 is testable from the data, whereas conditions 1–3 involve the unobserved variable and hence their validity must rely on domain knowledge about the area of study. If all conditions 1–4 are met, then the quantity is identifiable by the proximal g-formula

| (3) |

where and are row and column vectors whose entries are and , respectively, for all values of and . For a proof sketch of (3), note that condition 2 allows us to write

| (4) |

and the latter inner product can be re-expressed as

| (5) |

which, by condition 3(ii), simplifies to the RHS of (3).

Note that the only difference of the proximal g-formula (3) with the g-formula (2) is that the terms of (2) have been replaced with . In analogy with (2), when all random variables are discrete, the required quantities in (3) can be computed by simple histogram calculations on the observed data. We refer to Miao et al., (2018); Shi et al., (2020); Tchetgen Tchetgen et al., (2020) for more details and extensions.

4 An equivalence class of models

The full proof of (3) in Miao et al., (2018) reveals that the condition 1 above (that , and have the same number of levels) is necessary for the proximal g-formula (3) to hold. This may at first seem too restrictive: The total number of levels for the unobserved may be hard to know in practice, and even if this number were somehow available, finding two proxy variables and that simultaneously satisfy conditions 1, 3, and 4, can be a daunting task. Condition 1 can be relaxed to , and similarly for , replacing the matrix inverse in (3) with a pseudoinverse Shi et al., (2020). However, by increasing and (e.g., by introducing more proxy variables) we may start violating conditions 3 and 4. Fortunately, as we show next, condition 1 is not as restrictive as it may first appear.

Our key observation is that the proximal g-formula (3) must be true for all causal models in an equivalence class for which conditions 1–4 are true. This class contains more expressive graphs than the graph shown in Fig. 1d. Critically, some of these graphs can involve additional latent nodes (see, e.g., Fig. 2b), without violating conditions 1–4. This opens the avenue for applications in which , the total number of levels of the set of all latent confounders, is arbitrarily higher than or . We do not attempt to provide a complete characterization of this equivalence class in this work; that would require different graphical tools Jaber et al., (2019). Here we only slightly modify the set of conditions 1–4, and show examples of causal graphs that are significantly more expressive than the graph in Fig. 1d, and for which the proximal g-formula (3) still holds. To maintain consistency with the notation we used in the previous sections, in the rest of the paper we will be using to denote a subset of all latent confounders such that , and we will be using different notation (e.g., ) for additional latent confounders.

Our modified set of conditions is obtained by re-expressing the backdoor criterion 2 by an equivalent set of conditional independence relations as follows (see Pearl, (2009, Section 11.3.3) for details). Let stand for the set of all direct parents of (observed and unobserved), excluding if is a direct parent of (in our generalization the node need not be a direct parent of ). The set may contain and/or additional nodes not appearing in Fig. 1(d); see Fig. 2 for examples. Then the backdoor criterion 2 can be replaced by the following:

- 2 Backdoor-surrogate

-

(i) (ii)

Note that the above two conditions are subsumed by the single conditional independence criterion 3(ii) when and is a direct parent of , as in Fig. 1(d).

Given the above, the equivalence class is defined by the set of models that satisfy conditions 1 and 4 from Section 3, together with the following set of graphical conditions (replacing conditions 2 and 3 from Section 3):

- Equivalence class:

-

(i) (ii) (iii) (iv)

In Fig. 2 we show a few examples of graphs in the equivalence class that can capture real-world dynamics and applications. The graph of Figure 2(a) uses a post-treatment variable to perform inference. Post treatment variables are known to bias regression estimators, but they can be used in the Proximal Causal Learning framework as long as the conditional independence relation holds Shi et al., (2020); Tchetgen Tchetgen et al., (2020).

The graph of Fig. 2(b) has the potential to capture arbitrary high-dimensional confounding in . Returning to our industry example, this graph is particularly well suited for applications that involve interactions with each of the many elements within the UI: Engagement with each element can be studied independently by grouping the engagement with all other elements in . That would be an example where we treat observed covariates as part of a latent in order to satisfy the sufficient conditions of the equivalence class for the proximal g-formula (3) to hold; alternatively we can condition on those covariates and use a modified estimator (Tchetgen Tchetgen et al.,, 2020).

The graph of Fig. 2(c) allows for high-dimensional and , as long as they do not simultaneously influence the low-dimensional (i.e., is not a collider). In our running industry example, can capture engagement with UI elements other than the one being studied, while can capture competition, other UI elements, marketing, or word-of-mouth effects that influence how a user interacts with the service. In this model, can be viewed as a low-dimensional ‘bottleneck’ from to .

5 Simulations

Real-world observational settings lack both ground truth estimates of the average treatment effect in (1) and the ability to verify the cardinality and conditional independence assumptions. We can evaluate how well Proximal Causal Learning can recover causal effects through simulated data with ground truth. Next we describe the structure of the simulations and how we leverage these simulations to understand properties of a simple histogram-based estimator of (3) when the assumptions in Section 3 are violated. Where relevant, we benchmark the results against a regression estimator.

5.1 Simulation setup and notation

For grounding, we first discuss the simulation of the graphical model of Fig. 1(d). We will leverage and extend this simulation in the following subsections to handle the graphs in Fig. 2, violations of conditional independence, and a high dimensional . All nodes are binary in all cases. We introduce difference parameters for the connection from node to node , e.g., . From there on, given a draw from a Bernoulli distribution with probability , the values of the nodes are populated according to:

where the average treatment effect is encoded by the difference parameter .

For additional details on the above structure, as well as extensions performed below, we refer to the repository333The repository for the code that produced the simulations and their results is located at https://github.com/hebda/proximal_causal_learning.

5.2 Bias in the model equivalence class

| Graph | Regression | Proximal Causal Learning |

|---|---|---|

| Fig. 1(d) | ||

| Fig. 2(a) | ||

| Fig. 2(b) | ||

| Fig. 2(c) |

Table 1 contains the results for the graphs of Fig. 2, including the base case of Fig. 1(d). We extend the simulation to include additional nodes , , and where applicable. We compare the Proximal Causal Learning method against a baseline regression approach that uses the same covariates. The comparison is between each estimator’s relative bias, which is defined as the relative difference between the estimated and true average treatment effect in (1). Proximal Causal Learning gives an effectively unbiased result for the three graphs in Fig. 2, with the small, non-zero values coming from sample variation. The regression benchmark is significantly biased in all cases; however we note that regression is typically able to leverage many more covariates, which may result in a reduced, though non-zero, bias, depending on the application.

5.3 Implications of the invertibility of

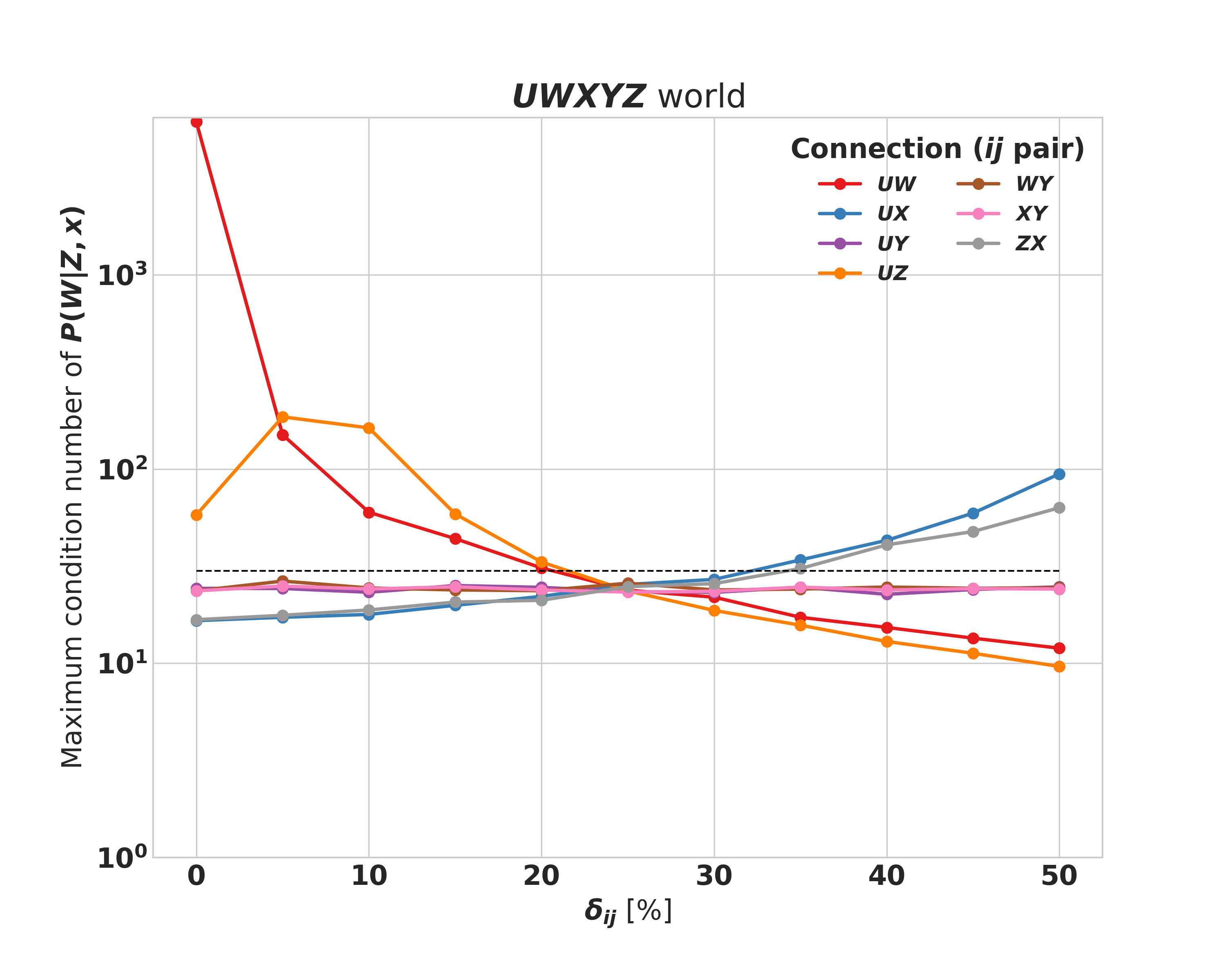

The invertibility of (criterion 4 from Section 3) can be directly tested from the data by looking at the condition number of each . For the 2-dimensional case, we have two matrices to consider, one for each value of , and we use the larger of the two condition numbers to describe the stability of the matrix inversion.

In Fig. 3 we can see that requiring a condition number of 30, which would lead to stable matrix inversion, corresponds to an implicit claim on the strength of certain connections, captured through the s in the structure of the simulation. These are that cannot be arbitrarily small, and that cannot be arbitrarily large. Furthermore, the connections can be of arbitrary strength. In short, the unobserved confounder must be sufficiently captured by the proxies, without exerting too much force on the treatment condition.

The requirement of having a maximum condition number of 30 is somewhat arbitrary and context dependent. Ultimately, this quantity is related to the variance of the estimator. Numerically stable matrix inversion provides low variance, while unstable inversion provides the opposite.

5.4 Violations of the independence assumptions

In practical applications, the selection of variables and is very important since the independence assumptions are not testable. In temporal data, one could use certain future observations as proxies, as the proxies do not need to be pre-treatment Shi et al., (2020). In industry settings, variables are generally easier to discover: Most interventions can only affect an outcome of interest through user interactions with the service. Variables are generally harder to find, as they should affect the outcome without affecting interactions with the service.

In this subsection, we examine the bias in the average treatment effect that is induced into an estimator of (3) when the independence assumption is violated, and we benchmark this bias against a regression estimator. We impose this violation into the simulation by modifying the -step in the simulation to include the term . In this way, is conditionally dependent on in addition to and .

Fig. 4 shows the bias of the two estimators for arbitrary . We see that the bias for regression is roughly independent, though non-zero, for all values of . For Proximal Causal Learning, we see that the bias is strongly dependent on the strength of the connection. Careful selection of the variable will lead to a variable for which is not large. Additionally, the invertibility of provides protection against arbitrarily high values of bias, since large values of cause this testable criterion to fail. (Such points are omitted from the plot.)

5.5 Bias when the dimensionality of is large

| Simulation Setup | Regression | Proximal Causal Learning |

|---|---|---|

| Fig. 2(b), binary | ||

| Fig. 2(b), 5-dimensional | ||

| Fig. 1(d), constant in | ||

| Fig. 1(d), decreases linearly in | ||

| Fig. 1(d), decreases linearly in , constant in | ||

| Fig. 1(d), constant in , decreases linearly in | ||

| Fig. 1(d), for , constant in | ||

| Fig. 1(d), constant in , for |

In most applications, it is hard to have a good estimate of the dimensionality of the unobserved confounder . In addition, the variables and need to have the same number of levels as the total number of levels of , for the matrix to be invertible. In this subsection, we examine the bias under a high dimensional that violates the (untestable) cardinality assumption 1 for the proximal g-formula (3). We focus on the graphs of Figures 1(d) and 2(b).

In this simulation we maintain binary and and allow to contain a total of 10 binary dimensions. This produces an unobserved confounder space with a total of 1024 levels. We encode difference parameters for each binary dimension by treating the relevant variables as 10-dimensional vectors rather than scalars. Table 2 presents the results under different types of confounding effects. When comparing with Table 1 we see that, when the dimensionality of increases to 10, our estimates increase in bias, and this bias does not depend on the dimensionality of . Nevertheless, in all types of confounding we simulated, this bias is relatively small for Proximal Causal Learning, and it is consistently smaller than when using regression.

6 Conclusions and discussion

We have shown that the identification result of Miao, Geng, and Tchetgen Tchetgen Miao et al., (2018) for Proximal Causal Learning under unobserved confounding holds true for all causal models in a certain equivalence class. This class contains models in which the total number of levels for the set of unobserved confounders can be arbitrarily higher than the number of levels of the two proxy variables, an important result for industry applications.

In the simulations we have also studied a number of different properties of a simple histogram-based estimator of (3), such as its sensitivity to misspecification or violation of some of the sufficient conditions. The simulations have additionally identified that, for low dimensional proxies, even the presence of a high dimensional will yield less biased estimates under the Proximal Causal Learning estimator than with standard regression methods. Overall, our results provide further evidence that Proximal Causal Learning can be a very promising causal inference method in an observational setting.

An interesting open question is to completely characterize the equivalence class of models for which identification holds (using tools such as ancestral graphs Jaber et al., (2019)), and to establish a necessary condition for identifiability. Another useful direction is to derive the population moments of an estimator of (3), both under a specified as well as under a misspecified causal graph model, and see how the risk of the estimator varies with the problem inputs (for instance, what is the precise dependence of the variance of the estimator on properties of , as we have alluded to above). Our simulations are already providing some answers to those questions. Nonetheless, an analytical treatment would be valuable as it would offer more intuition into the applicability of Proximal Causal Learning in practical causal inference problems, especially those often encountered in the industry.

References

- Bareinboim and Pearl, (2016) Bareinboim, E. and Pearl, J. (2016). Causal inference and the data-fusion problem. Proceedings of the National Academy of Sciences, 113(27):7345–7352.

- Hernán and Robins, (2020) Hernán, M. A. and Robins, J. M. (2020). Causal inference: What if. Boca Raton: Chapman & Hill/CRC.

- Jaber et al., (2019) Jaber, A., Zhang, J., and Bareinboim, E. (2019). Causal identification under Markov equivalence: Completeness results. In International Conference on Machine Learning, pages 2981–2989.

- Miao et al., (2018) Miao, W., Geng, Z., and Tchetgen Tchetgen, E. J. (2018). Identifying causal effects with proxy variables of an unmeasured confounder. Biometrika, 105(4):987–993.

- Pearl, (2009) Pearl, J. (2009). Causality. Cambridge University Press, 2nd edition.

- Rosenbaum and Rubin, (1983) Rosenbaum, P. R. and Rubin, D. B. (1983). The central role of the propensity score in observational studies for causal effects. Biometrika, 70(1):41–55.

- Rubin, (2005) Rubin, D. B. (2005). Causal inference using potential outcomes: Design, modeling, decisions. Journal of the American Statistical Association, 100(469):322–331.

- Shi et al., (2020) Shi, X., Miao, W., Nelson, J. C., and Tchetgen Tchetgen, E. J. (2020). Multiply robust causal inference with double-negative control adjustment for categorical unmeasured confounding. Journal of the Royal Statistical Society: Series B (Statistical Methodology).

- Tchetgen Tchetgen et al., (2020) Tchetgen Tchetgen, E. J., Ying, A., Cui, Y., Shi, X., and Miao, W. (2020). An introduction to proximal causal learning. arXiv:2009.10982.