On Non-Negative Quadratic Programming

in Geometric Optimization

[12]\fnmSiu Wing \surCheng \equalcontThese authors contributed equally to this work.

These authors contributed equally to this work.

1]\orgdivDepartment of Computer Science and Engineering,

\orgname

The Hong Kong University of Science and Technology, \orgaddress

\cityHong Kong, \countryChina

2]ORCID: 0000-0002-3557-9935

3]ORCID: 0000-0002-5682-0003

On Non-Negative Quadratic Programming in Geometric Optimization

Siu Wing Cheng111Corresponding author. E-mail: [email protected]. ORCID: 0000-0002-3557-993533footnotemark: 3 and Man Ting Wong222Contributing author. Email: [email protected]. ORCID: 0000-0002-5682-0003333These authors contributed equally to this work.

Department of Computer Science and Engineering,

The Hong Kong University of Science and Technology,

Hong Kong, China.

Abstract

We study a method to solve several non-negative quadratic programming problems in geometric optimization by applying a numerical solver iteratively. We implemented the method by using quadprog of MATLAB as a subroutine. In comparison with a single call of quadprog, we obtain a 10-fold speedup for two proximity graph problems in on some public data sets, and a 2-fold or more speedup for deblurring some gray-scale space and thermal images via non-negative least square. In the image deblurring experiments, the iterative method compares favorably with other software that can solve non-negative least square, including FISTA with backtracking, SBB, FNNLS, and lsqnonneg of MATLAB. We try to explain the observed efficiency by proving that, under a certain assumption, the number of iterations needed to reduce the gap between the current solution value and the optimum by a factor is roughly bounded by the square root of the number of variables. We checked the assumption in our experiments on proximity graphs and confirmed that the assumption holds.

Keywords: Convex quadratic programming, Geometric optimization, Active set methods, Non-negative solution.

1 Introduction

We study a method to solve several non-negative convex quadratic programming problems (NNQ problems): minimize subject to and . It applies a numerical solver on the constrained subproblems iteratively until the optimal solution is obtained. Given a vector , we use to denote its -th coordinate. We use and to denote -dimensional vectors that consist of 1s and 0s, respectively.

The first problem is fitting a proximity graph to data points in , which finds applications in classification, regression, and clustering [1, 2, 3, 4]. Daitch et al. [1] defined a proximity graph via solving an NNQ problem. The unknown is a vector , specifying the edge weights in the complete graph on the input points . Let denote the coordinate of that stores the weight of the edge . Edge weights must be non-negative; each point must have a total weight of at least 1 over its incident edges; these constraints can be modelled as and , where is the incidence matrix for the complete graph, and is the -dimensional vector with all coordinates equal to 1. The objective is to minimize , which can be written as for some matrix . We call this the DKSG problem. The one-dimensional case can be solved in time [5].

We also study another proximity graph in defined by Zhang et al. [4] via minimizing for some constants . The vector stores the unknown edge weights. For all distinct , the coordinate of is equal to . The matrix is the incidence matrix for the complete graph. The objective function can be written as , where and . The only constraints are . We call this the ZHLG problem.

The third problem is to deblur some mildly sparse gray-scale images [6, 7]. The pixels in an image form a vector of gray scale values in ; the blurring can be modelled by the action of a matrix that depends on the particular point spread function adopted [7]; and is the blurred image. Working backward, given the matrix and an observed blurred image , we recover the image by finding the unknown that minimizes , which is a non-negative least square problem (NNLS) [8, 9]. The problem is often ill-posed, i.e., is not invertible.

1.1 Related work

Random sampling has been applied to select a subset of violated constraints in an iterative scheme to solve linear programming and its generalizations (which includes convex quadratic programming) [10, 11, 12, 13, 14]. For linear programming in with constraints, Clarkson’s Las Vegas method [10] starts with a small working set of constraints and runs in iterations; it draws a random sample of the violated constraints at the beginning of each iteration, adds them to the working set of constraints, and recursively solves the linear programming problem on the expanded working set. This algorithm solves approximately subproblems in expectation, with constraints in each subproblem. In addition, Clarkson [10] provides an iterative reweighing algorithm for linear programming that solves subproblems in expectation with constraints in each subproblem. A mixed algorithm is proposed in [10] by following the first algorithm but solving every subproblem using the iterative reweighing algorithm. All algorithms run in the RAM model and the expected running time of the mixed algorithm is . Subsequent research by Sharir and Welzl [14] and Matoušek et al. [13] improved the expected running time to in the RAM model which is subexponential in . Brise and Gärtner [15] showed that Clarkson’s algorithm works for the larger class of violator spaces [16].

Quadratic programming problems are convex when the Hessian is positive (semi-)definite. When the Hessian is indefinite, the problem becomes non-convex and NP-hard. For non-convex quadratic programming, the goal is to find a local minimum instead of the global minimum.

When all input numbers are rational numbers, one can work outside the RAM model to obtain polynomial-time algorithms for convex quadratic programming. Kozlov et al. [17] described a polynomial-time time algorithm for convex quadratic programming that is based on the ellipsoid method [18, 19]. Kapoor and Vaidya [20] showed that convex quadratic programming with constraints and variables can be solved in arithmetic operations, where is bounded by the number of bits in the input. Monteiro and Adler [21] showed that the running time can be improved to , provided that .

A simplex-based exact convex quadratic programming solver was developed by Gärtner and Schönherr. It works well when the constraint matrix is dense and there are few variables or constraints [22]. However, it has been noted that this solver is inefficient in high dimensions due to the use of arbitrary-precision arithmetic [23]. Generally, iterative methods are preferred for large problems because they preserve the sparsity of the Hessian matrix [24].

Our method is related to solving large-scale quadratic programming by the active set method. The active set method can be viewed as turning the active inequality constraints into equality constraints, resulting in a simplified subproblem. Large-scale quadratic programming algorithms often assume box constraints, i.e., each variable has an upper bound and lower bound; however, these bounds may not necessarily be finite. In the subsequent discussion, we will provide a brief overview of the existing active-set algorithms for large-scale quadratic programming found in the literature.

Box constraints only

Coleman and Hulbert [25] proposed an active set algorithm that works for both convex and non-convex quadratic programming problems. The algorithm is designed for the case that there are only box constraints with finite bounds. Therefore, having a variable in the active set means requiring that variable to be at its lower or upper bound. As in [26, 27, 28], their method identifies several variables to be added to the active set. Given a search direction, the projected search direction is a projection of the search direction that sets all its coordinates that are in the active set to zero. Consequently, following the projected search direction would not alter the variables in the active set. This descent process begins by following the projected search direction until a coordinate hits its lower or upper bound. The algorithm updates the active set by adding the variable that just hits its lower or upper bound. This process is repeated until the projected search direction is no longer a descent direction or a local minimum is reached along the projected search direction. If the solution is not optimal, the algorithm uses the gradient to identify a set of variables in the active set such that, by allowing these variables to change their values, the objective function value will decrease. In each iteration, the algorithm releases this set of variables from the active set. Thus allows for the addition and removal of multiple variables from the active set in each iteration, guaranteeing that the active set does not experience monotonic growth. This is considered advantageous because active set algorithms often suffer from only being able to add or delete a single constraint from the active set per iteration.

Yang and Tolle [24] proposed an active set algorithm for convex quadratic programming with box constraints that can remove more than one box constraint from the active set in each iteration. The descent in each iteration is performed using the conjugate gradient method [29], which ignores the box constraints and generates a descent path that either stops at the optimum or at the intersection with the boundary of the feasible region. In the latter case, some variables reach their lower or upper bounds. The algorithm maintains a subset of such variables and the bounds reached by them that were recorded in previous iterations. In the main loop of the algorithm, the first step is to compute the set of variables that are at their lower or upper bounds such that the objective function value is not smaller at any feasible solution obtained by adjusting the variables in . Then, the algorithm removes one constraint at a time from until the shrunken induces a descent direction. If becomes empty and there is no feasible descent direction, then the solution is optimal. If there exists a descent direction, then forms the new active set. The algorithm solves the constrained problem induced by this new active set using the conjugate gradient method [29] which we already mentioned earlier. Empirically, the algorithm performs better for problems that have a small set of free variables at optimality, and for problems with only non-negativity constraints.

Moré and Toraldo [30] proposed an algorithm for strictly convex quadratic programs subject to box constraints. The box constraints induce the faces of a hyper-rectangle, which form the boundaries of the feasible region. Furthermore, an active set identifies a face of the hyper-rectangle. The main loop iterates two procedures. The first procedure performs gradient descent until the optimal solution is reached or the gradient descent path is intercepted by a facet of the feasible region. In the first case, the algorithm terminates. In the latter case, the affine subspace of is defined by an active set of variables at their upper or lower bounds, and the second procedure is invoked. It runs the conjugate gradient method on the original problem constrained by the active set ; it either finds the optimum of this constrained problem in the interior of , or descends to a boundary face of . In either case, the algorithm proceeds to the next iteration of the main loop.

Friedlander et al. [31] proposed an algorithm for convex quadratic programs subject to box constraints. The structure of the algorithm is similar to [30]. The aglorithm performs gradient descent until the descent path is intercepted by a facet of the feasible region. To solve the original problem constrained on the facet , instead of using the conjugate gradient method, it employs the Barzilai-Borwein method to find the optimum of this constrained problem. The advantage of the Barzilai-Borwein method is that it requires less memory storage and fewer computations than the conjugate gradient method.

Box constraints and general linear constraints

Gould [32] proposed an active set algorithm for non-convex quadratic programming problems subject to box constraints and linear constraints. The algorithm adds or removes at most one constraint from the active set per iteration. In each iteration, the algorithm constructs a system of linear equations induced by the active set, solves it, and adds this solution to current feasible solution of the quadratic program. The algorithm maintains an LU factorization of the coefficient matrix to exploit the sparsity of the system of equations. This approach allows for efficient updates to the factorization as the active set changes.

Bartlett and Biegler [33] proposed an algorithm for convex quadratic programming subject to box constraints and linear inequality constraints. The algorithm maintains a dual feasible solution and a primal infeasible solution in the optimization process. In each iteration, the algorithm adds a violated primal constraint to the active set. Then, the Schur complement method [34] is used to solve the optimisation problem constrained by the active set. If a dual variable reaches zero in the update, the corresponding constraint is removed from the active set.

Our contributions

The method that we study greedily selects a subset of variables as free variables—the non-free variables are fixed at zero—and calls the solver to solve a constrained NNQ problem in each iteration. At the end of each iteration, the set of free variables is updated in two ways. First, all free variables that are equal to zero are made non-free in the next iteration. Second, a subset of the non-free variables that violate the dual feasibility constraint the most are set free in the next iteration. However, if there are too few variables that violate the dual feasibility constraint or the number of iterations exceeds a predefined threshold, we simply set free all variables that violate the dual feasibility constraint.

A version of the above greedy selection of free variables in an iterative scheme was already used in [1] for solving the DKSG problem because it took too long for a single call of a quadratic programming solver to finish. However, it is only briefly mentioned in [1] that a small number of the most negative gradient coordinates are selected to be freed. No further details are given. We found that the selection of the number of variables to be freed is crucial. The right answer is not some constant number of variables or a constant fraction of them. Also, one cannot always turn free variables that happen to be zero in an iteration into non-free variables in the next iteration as suggested in [1]; otherwise, the algorithm may not terminate in some cases.

Our method also uses the gradient to screen for descent directions, allowing us to release more than one variable at each iteration. However, instead of setting free all variables that have a non-zero gradient from the active set as in [15, 25, 31, 30], we choose a parameter that enables us to remove just the right amount of variables from the active set so that our subproblem does not become too large. Heuristically, this approach is faster than freeing all variables with a non-zero gradient or freeing only one variable, as in [33, 32]. Similar to the method presented in [24], we remove variables from the free variable set, enabling us to keep our subproblem small. This is in contrast to the non-decreasing size of the free variable set, as in [15, 10]. Thus, our algorithm performs well when solution sparsity is observed.

Clarkson’s linear programming algorithm [10] is designed for cases when the number of variables is much less than the number of given constraints. However, this is not the case for some other problems. For example, consider the DSKG problem with input points. Although the ambient space is , there are variables and constraints. By design, the number of constraints in a subproblem of Clarkson’s algorithm is proportional to the product of the number of variables and the square root of the total number of constraints. In our context, the subproblem has constraints. Consequently, solving such a subproblem is not easier than solving the whole problem. Therefore, Clarkson’s algorithm cannot be directly applied to our proximity graph and NNLS problems, as in these problems, the number of constraints and variables are proportional to each other.

We implemented the iterative method by using quadprog of MATLAB as a subroutine. In comparison with a single call of quadprog, we obtain a 10-fold speedup for DKSG and ZHLG on some public data sets; we also obtain a 2-fold or more speedup for deblurring some gray-scale space and thermal images via NNLS. In the image deblurring experiments, the iterative method compares favorably with other software that can solve NNLS, including FISTA with backtracking [35, 36], SBB [8], FNNLS [9], and lsqnonneg of MATLAB. We emphasize that we do not claim a solution for image deblurring as there are many issues that we do not address; we only seek to demonstrate the potential of the iterative scheme.

The iterative method gains efficiency when the solutions for the intermediate constrained subproblems are sparse and the numerical solver runs faster in such cases. Due to the overhead in setting up the problem to be solved in each iteration, a substantial fraction of the variables in an intermediate solution should be zero in order that the saving outweighs the overhead.

We try to offer a theoretical explanation of the observed efficiency. Let denote the objective function of the NNQ problem at hand. Let denote the inner product of two vectors and . A unit direction is a descent direction from a feasible solution if and lies in the feasible region for some . Let be the solution produced in the -th iteration. Let be the optimal solution. Using elementary vector calculus, one can show that , where is minimum in direction from , and is the unit vector . We prove that there exists a descent direction from for which only a few variables need to be freed and is roughly bounded from below by the reciprocal of the square root of the number of variables. Therefore, if we assume that the distance between and the minimum in direction is at least for all , where is some fixed value, then for some that is roughly bounded by times the square root of the number of variables. That is, the gap from will be reduced by a factor in at most iterations. It follows that SolveNNQ will converge quickly to the optimum under our assumption. The geometric-like convergence still holds even if the inequality is satisfied in only a constant fraction of any sequence of consecutive iterations (the iterations in that constant fraction need not be consecutive).

We ran experiments on the DKSG and ZHLG problems to check the assumption of our convergence analysis. We checked the ratios using the descent direction taken by our algorithm as . Therefore, is . Our experiments show that in every iteration, with the exception of the outliers from the DKSG problem. However, the convergence still holds despite the outliers. Most of the runs achieve better results, as shown in Figures 3(a) and (b) in Section 3. For the ZHLG problem, we observe that holds in every iteration. For the DKSG problem, the majority of the ratios are greater than or equal to , with only a few exceptions that are less than .

2 Algorithm

Notation

We use uppercase and lowercase letters in typewriter font to denote matrices and vectors, respectively. The inner product of and is written as or . We use to denote the -dimensional zero vector and the -dimensional vector with all coordinates equal to 1.

The span of a set of vectors is the linear subspace spanned by them; we denote it by . In , for , define the vector to have a value 1 in the -th coordinate and zero at other coordinates. Therefore, form an orthonormal basis of .

A conical combination of a set of vectors is for some non-negative real values . For example, the set of all conical combinations of form the positive quadrant of .

Given a vector , we denote the -th coordinate of by putting a pair of brackets around and attaching a subscript to the right bracket, i.e., . This notation should not be confused with a vector that is annotated with a subscript. For example, and for all . As another example, means the intermediate solution vector that we obtain in the -th iteration, but refers to the -th coordinate of . The vector inside the brackets can be the result of some operations; for example, refers to the -th coordinate of the vector formed by multiplying the matrix with the vector . We use to denote .

| s.t. | , , | s.t. | , | |||

| , | . | |||||

| . | ||||||

| (a) Constrained NNQ | (b) Lagrange dual | |||||

Lagrange dual

Let the objective function be denoted by . Assume that the constraints of the original NNQ problem are , , and for some matrices and . Let be the number of rows of . The number of rows of will not matter in our discussion.

For , the -th iteration of the method solves a constrained version of the NNQ problem in which a subset of variables are fixed at zero. Figure 1(a) shows this constrained NNQ problem. We use to denote the set of indices of these variables that are fixed at zero, and we call an active set. For convenience, we say that the variables whose indices belong to are the variables in the active set . We call the variables outside the free variables.

We are interested in the Lagrange dual problem as it will tell us how to update the active set when proceeding to the next iteration [37]. Let and be the vectors of Lagrange multipliers for the constraints and , respectively. The dimensions of and are equal to number of rows of and , respectively. As a result, . In the dual problem, the coordinates of are required to be non-negative as the inequality signs in are greater than or equal to, whereas the coordinates of are unrestricted due to the equality signs in . Similarly, let be the vector of Lagrange multipliers that correspond to the constraints for and for . So is required to be non-negative for whereas is unrestricted for . The Lagrange dual function is . The Lagrange dual problem is shown in Figure 1(b). Note that is unrestricted in the dual problem.

KKT conditions and candidate free variable

By duality, the optimal value of the Lagrange dual problem is always a lower bound of the optimal value of the primal problem. Since all constraints in Figure 1(a) are affine, strong duality holds in this case which implies that the optimal value of the Lagrange dual problem is equal to the optimal value of the primal problem [37, Section 5.2.3].

Let denote the optimal solution of the constrained NNQ problem in Figure 1(a). That is, is the optimal solution in the -th iteration. Let be the corresponding solution of the Lagrange dual problem. For convenience, we say that is the optimal solution of constrained by , and that is the optimal solution of constrained by .

The Karush-Kuhn-Tucker conditions, or KKT conditions for short, are the sufficient and necessary conditions for to be an optimal solution for the Lagrange dual problem [37]. There are four of them:

-

•

Critical point: .

-

•

Primal feasibility: , , , and , .

-

•

Dual feasibility: and , .

-

•

Complementary slackness: for all , , and for all , .

If is not an optimal solution for the original NNQ problem, there must exist some such that if is removed from , the KKT conditions are violated and hence is not an optimal solution for constrained by . Among the KKT conditions, only the dual feasibility can be violated by removing from ; in case of violation, we must have . Therefore, for all , if , then is a candidate to be removed from the active set. In other words, is a candidate variable to be set free in the next iteration.

Algorithm specification

We use a trick that is important for efficiency. For , before calling the solver to compute in the -th iteration, we eliminate the variables in the active set because they are set to zeros anyway. Since there can be many variables in especially when is small, this step allows the solver to potentially run a lot faster. Therefore, the solver cannot return any Lagrange multiplier that corresponds to the variable in the active set because is eliminated before the solver is called. This seems to be a problem because our key task at the end of the -th iteration is to determine an appropriate subset of such that . Fortunately, the first KKT condition tells us that , so we can compute all entries in . In other words, the optimal solution of the Lagrange dual problem constrained by is fully characterized by .

SolveNNQ in Algorithm 1 describes the iterative method. In line 14 of SolveNNQ, we kick out all free variables that are zero in the current solution , i.e., if and , move to . This step keeps the number of free variables small so that the next call of the solver will run fast. When the computation is near its end, moving a free variable wrongly to the active set can be costly; it is preferable to shrink the active set to stay on course for the optimal solution . Therefore, we have a threshold on and set in line 11 if . The threshold gives us control on the number of free variables. In some rare cases when we are near , the algorithm may alternate between excluding a primal variable from the active set in one iteration and moving the same variable into the active set in the next iteration. The algorithm may not terminate. Therefore, we have another threshold on the number of iterations beyond which the active set will be shrunk monotonically in line 11, thereby guaranteeing convergence. The threshold is rarely exceeded in our experiments; setting when also helps to keep the the algorithm from exceeding the threshold . In our experiments, we set , , and .

Using elementary vector calculus, one can prove that as stated in Lemma 1 below, where is any unit descent direction from , is the minimum in direction from , and is the unit vector .

In Sections 4 and 5, for the DKSG, ZHLG, and NNLS problems, we prove that there exists a descent direction from that involves only free variables in and is roughly bounded from below by the square root of the number of variables. Therefore, if we assume that the distance between and the minimum in direction is at least for all , where is some fixed value, then for some that is roughly bounded by times the square root of the number of variables. It follows that if is not exceeded, SolveNNQ will converge efficiently to the optimum under our assumption. The geometric-like convergence still holds even if the inequality is satisfied in only a constant fraction of any sequence of consecutive iterations (the iterations in that constant fraction need not be consecutive). If is exceeded, Lemma 2 below shows that the number of remaining iterations is at most ; however, we have no control on the number of free variables.

In the following, we derive Lemma 1 for convex quadratic functions. Property (i) of Lemma 1 is closely related to the first-order condition [37] for convex functions. For completeness, we include an adaptation of it to the convex quadratic functions. For simplicity in representation, we assume that the optimal solution is unique without loss of generality. However, the correctness of our proofs does not require the optimal solution to be unique.

Lemma 1.

Let be any unit descent direction from . Let for some be the feasible point that minimizes on the ray from in direction . Let be an active set that is disjoint from . Let be the optimal solution for constrained by . Then, , where is the unit vector .

Proof.

We prove two properties: (i) , and (ii) .

Consider (i). For all , define . By the chain rule, we have . We integrate along a linear movement from to . Using the fact that , we obtain

It follows immediately that

This completes the proof of (i).

Consider (ii). Using and the fact that , we carry out a symmetric derivation:

| (2) | |||||

Combining (2) and (2) gives . As is the feasible point that minimizes the value of on the ray from in direction , we have . Therefore, , completing the proof of (ii).

By applying (i) to the descent direction , we get . Since is disjoint from , the feasible points on the ray from in direction are also feasible with respect to . Therefore, . By applying (ii) to the descent direction , we get . Dividing the lower bound for by the upper bound for proves that . Hence, . ∎

Lemma 2.

Proof.

By the KKT conditions, , so . By the complementary slackness, we have and . The former equation implies that . By primal feasibility, which implies that . As a result,

| (3) |

By primal and dual feasibilities, and . So .

We claim that for some . Assume to the contrary that for all . Then, for all , which implies that . Substituting and into (3) gives . However, since is not optimal, by the convexity of and the feasible region of the NNQ problem, is a descent direction from , contradicting the relation . This proves our claim.

By our claim, there must exist some index such that . This index must be included in , which means that because . As a result, each iteration of lines 10 and 11 sets free at least one more element of . After at most more iterations, all elements of are free variables, which means that the solver will find in at most more iterations. ∎

3 Experimental results

In this section, we present our experimental results on the DKSG, ZHLG, and image deblurring problems. Our machine configuration is: Intel Core 7-9700K 3.6Hz cpu, 3600 Mhz ram, 8 cores, 8 logical processors. We use MATLAB version R2020b. We use the option of quadprog that runs the interior-point-convex algorithm.

As explained in Section 2, we eliminate the variables in the active set in each iteration in order to speed up the solver. One implementation is to extract the remaining rows and columns of the constraint matrix; however, doing so incurs a large amount of data movements. Instead, we zero out the rows and columns corresponding to the variables in the active set.

We determine that is a good setting for the DKSG and ZHLG data sets. If we increase to , the number of iterations is substantially reduced, but the running time of each iteration is larger; there is little difference in the overall efficiency. If we decrease to , the number of iterations increases so much that the overall efficiency decreases. We also set in the experiments with image deblurring without checking whether this is the best for them. It helps to verify the robustness of this choice of .

We set to be and to be 15. Since we often observe a geometric reduction in the gap between the current objective function value and the optimum from one iteration to the next, we rarely encounter a case in our experiments that the threshold is exceeded.

3.1 DKSG and ZHLG

For both the DKSG and ZHLG problems, we ran experiments to compare the performance of SolveNNQ with a single call of quadprog on the UCI Machine Learning Repository data sets Iris, HCV, Ionosphere, and AAL [38]. The data sets IRIS and Ionosphere were also used in the experiments in the paper of Daitch et al. [1] that proposed the DKSG problem. For each data set, we sampled uniformly at random a number of rows that represent the number of points and a number of attributes that represent the number of dimensions . There are two parameters and in the objective function of the ZHLG problem. According to the results in [4], we set and .

| Ionosphere | |||||||

|---|---|---|---|---|---|---|---|

| DKSG | ZHLG | ||||||

| quad | ours | nnz | quad | ours | nnz | ||

| 70 | 2 | 4.57s | 1.84s | 44% | 2.51s | 1.06s | 47% |

| 4 | 5.78s | 2.25s | 47% | 2.20s | 0.97s | 49% | |

| 10 | 4.65s | 1.55s | 47% | 1.86s | 1.67s | 59% | |

| 100 | 2 | 26.67s | 5.41s | 33% | 16.31s | 3.35s | 36% |

| 4 | 43.60s | 7.06s | 35% | 14.87s | 2.77s | 36% | |

| 10 | 28.53s | 5.46s | 35% | 13.34s | 5.42s | 44% | |

| 130 | 2 | 192.41s | 11.56s | 27% | 144.27s | 5.53s | 28% |

| 4 | 261.54s | 13.13s | 27% | 141.70s | 6.23s | 29% | |

| 10 | 208.24s | 12.02s | 27% | 134.95s | 12.43s | 36% | |

| 160 | 2 | 660.25s | 25.39s | 23% | 614.71s | 9.14s | 24% |

| 4 | 888.06s | 27.20s | 23% | 622.58s | 10.74s | 24% | |

| 10 | 979.92s | 22.03s | 22% | 598.55s | 29.97s | 31% | |

| AAL | |||||||

|---|---|---|---|---|---|---|---|

| DKSG | ZHLG | ||||||

| quad | ours | nnz | quad | ours | nnz | ||

| 70 | 2 | 3.99s | 1.72s | 44% | 2.36s | 1.19s | 52% |

| 4 | 4.64s | 1.94s | 46% | 2.32s | 1.27s | 50% | |

| 10 | 4.89s | 1.80s | 48% | 1.93s | 1.69s | 58% | |

| 100 | 2 | 22.91s | 5.30s | 34% | 15.25s | 3.24s | 37% |

| 4 | 38.63s | 7.81s | 37% | 13.38s | 2.68s | 35% | |

| 10 | 36.58s | 6.00s | 35% | 13.34s | 4.59s | 43% | |

| 130 | 2 | 175.43s | 15.81s | 27% | 145.83s | 6.58s | 28% |

| 4 | 221.80s | 15.89s | 29% | 142.01s | 5.88s | 30% | |

| 10 | 257.41s | 14.60s | 28% | 135.08s | 10.03s | 35% | |

| 160 | 2 | 550.44s | 26.06s | 24% | 613.45s | 15.01s | 26% |

| 4 | 864.51s | 31.71s | 24% | 610.71s | 14.62s | 26% | |

| 10 | 984.85s | 20.86s | 22% | 601.50s | 22.42s | 30% | |

| IRIS | |||||||

|---|---|---|---|---|---|---|---|

| DKSG | ZHLG | ||||||

| quad | ours | nnz | quad | ours | nnz | ||

| 70 | 2 | 5.61s | 2.00s | 43% | 2.76s | 1.21s | 47% |

| 3 | 5.66s | 2.09s | 44% | 2.61s | 1.15s | 48% | |

| 4 | 4.88s | 2.10s | 45% | 2.23s | 1.18s | 50% | |

| 100 | 2 | 43.87s | 6.55s | 33% | 17.63s | 2.13s | 34% |

| 3 | 37.56s | 5.92s | 33% | 15.92s | 2.64s | 36% | |

| 4 | 42.69s | 5.95s | 33% | 15.24s | 2.95s | 37% | |

| 130 | 2 | 241.59s | 13.29s | 26% | 148.34s | 5.62s | 29% |

| 3 | 199.22s | 12.20s | 27% | 150.54s | 5.34s | 28% | |

| 4 | 256.28s | 13.96s | 27% | 151.87s | 6.20s | 30% | |

| 150 | 2 | 504.21s | 20.15s | 23% | 414.79s | 8.38s | 25% |

| 3 | 510.55s | 19.81s | 24% | 398.06s | 7.80s | 26% | |

| 4 | 565.89s | 21.04s | 24% | 390.47s | 7.36s | 26% | |

| HCV | |||||||

|---|---|---|---|---|---|---|---|

| DKSG | ZHLG | ||||||

| quad | ours | nnz | quad | ours | nnz | ||

| 70 | 2 | 3.68s | 1.16s | 43% | 2.65s | 0.84s | 45% |

| 4 | 5.22s | 1.71s | 44% | 2.13s | 0.88s | 45% | |

| 10 | 4.55s | 1.35s | 43% | 1.88s | 2.06s | 62% | |

| 100 | 2 | 13.02s | 3.50s | 32% | 15.20s | 2.00s | 32% |

| 4 | 39.50s | 4.84s | 33% | 13.03s | 2.35s | 32% | |

| 10 | 29.79s | 3.65s | 31% | 12.78s | 8.32s | 48% | |

| 130 | 2 | 108.10s | 6.90s | 28% | 141.20s | 3.07s | 24% |

| 4 | 180.42s | 12.05s | 27% | 138.05s | 3.78s | 24% | |

| 10 | 184.51s | 8.16s | 24% | 133.91s | 21.17s | 38% | |

| 160 | 2 | 551.43s | 17.32s | 24% | 613.29s | 5.22s | 20% |

| 4 | 868.60s | 23.93s | 22% | 613.04s | 8.10s | 21% | |

| 10 | 633.97s | 14.48s | 20% | 588.64s | 45.33s | 32% | |

Tables 1, 2, 3, and 4 show some average running times of SolveNNQ and quadprog. When and , SolveNNQ is often 10 times or more faster. For the same dimension , the column nnz shows that the percentage of non-zero solution coordinates decreases as increases, that is, the sparsity increases as increases. Correspondingly, the speedup of SolveNNQ over a single call of quadprog also increases as reflected by the running times.

|

|

|

|

|

Figure 2 shows four plots, one for each of the four data sets, that show how the natural logarithm of the average speedup of SolveNNQ over a single call of quadprog increases as the value of increases. In each plot, data points for distinct dimensions are shown in distinct colors, and the legends give the color coding of the dimensions. For both DKSG and ZHLG and for every fixed , the average speedup as a function of hovers around . The average speedup data points for HCV shows a greater diversity, but the smallest speedup is still roughly proportional to . As a result, SolveNNQ gives a much superior performance than a single call of quadprog even for moderate values of .

To verify our assumption that the distance between and the minimum in direction is at least , we used box-and-whisker plots for visualizations. A box and whisker plot displays the distribution of a dataset. It shows the middle 50% of the data as a box, with the median shown using a horizontal segment inside the box; whiskers are used to show the spread of the remaining data. We exclude two outliers of the ratios for the DKSG problem. These outliers occur when , so the ratios describe the behavior between the first and second iterations of the algorithm. These outliers can be ignored, as the initialization does not induce any part of the active set from the gradient. The active set in the first iteration is arbitrary and distinct from the rest of the algorithm. As a result, any abnormality involving the first iteration does not impact the convergence of the algorithm, since it is just one of many iterations. As shown in Figures 3(a) and (b), the ratio is greater than . Thus, our algorithm takes a descent direction that meets our assumption that is larger than or equal to a not so small constant of . The observed convergence rate is even better. As illustrated in Figure 4, the gap is reduced by a factor in the range in every iteration on average; in fact, most runs converge more rapidly than a geometric convergence.

|

|

|

| (a) | (b) |

|

|

|

|

| nph | sts | hst |

3.2 Image deblurring

We experimented with four space images nph, sts, and hst from [39, 40]. We down-sample and sparsify by setting nearly black pixels (with intensities such as 10 or below, or 20 or below) to black. Figure 5 shows the four resulting space images: nph is , and both sts and hst are . These images are sparse (i.e., many black pixels); therefore, they are good for the working of SolveNNQ.

For space images, it is most relevant to use blurring due to atmospheric turbulence. In the following, we describe a point spread function in the literature that produces such blurring effects [41]. Let denote a two-dimensional pixel array (an image). We call the pixel at the -th row and -th column the -pixel. Let denote the intensity of the -pixel in the original image. Let denote its intensity after blurring.

The atmospheric turbulence point spread function determines how to distribute the intensity to other pixels. At the same time, the -pixel receives contributions from other pixels. The sum of these contributions and the remaining intensity at the -pixel gives . The weight factor with respect to an -pixel and a -pixel is for some positive constant . It means that the contribution of the -pixel to is . We assume the point spread function is spatially invariant which makes the relation symmetric; therefore, the contribution of the -pixel to is .

The atmospheric turbulence point spread function needs to be truncated as its support is infinite. Let be a square centered at the -pixel. The truncation is done so that the -pixel has no contribution outside , and no pixel outside contributes to the -pixel. For any , the weight of the entry of at the -th row and the -th column is . We divide every entry of by the total weight of all entries in because the contributions of the -pixel to other pixels should sum to 1. The coefficient no longer appears in the normalized weights in , so we do not need to worry about how to set .

How do we produce the blurring effects as a multiplication of a matrix and a vector? We first transpose the rows of to columns. Then, we stack these columns in the row order in to form a long vector . That is, the first row of becomes the top entries of and so on. Let be an array with the same row and column dimensions as . Assume for now that the square centered at the -entry of is fully contained in . The entries of are zero if they are outside ; otherwise, the entries of are equal to the normalized weights at the corresponding entries in . Then, we concatenate the rows of in the row order to form a long row vector; this long row vector is the -th row of a matrix . Repeating this for all defines the matrix . The product of the -th row of and is a scalar, which is exactly the sum of the contributions of all pixels at the -pixel, i.e., the intensity . Consider the case that some entries of lie outside . If an entry of is outside , we find the vertically/horizontally nearest boundary entry of and add to it the normalized weight at that “outside” entry of . This ensures that the total weight within still sums to 1, thus making sure that no pixel intensity is lost. Afterwards, we linearize to form a row of as before. In all, is the blurred image by the atmospheric turbulence point spread function.

Working backward, we solve an NNLS problem to deblur the image: find the non-negative that minimizes , where is the vector obtained by transposing the rows of the blurred image into columns, followed by stacking these columns in the row order in the blurred image.

In running SolveNNQ on the space images, we compute the gradient vector at the zero vector and select the most negative coordinates. (Recall that and is the total number of pixels in an image in this case). We call quadprog with these most negative coordinates as the only free variables and obtain the initial solution . Afterwards, SolveNNQ iterates as before.

|

|

|

|

| hst, original | hst, | SolveNNQ, 0.002, 21.6s | FISTABT, 0.1, 11.5s |

|

|

|

|

| SBB, 0.08, 600s | FNNLS, , 694s | lsqnonneg, , 1610s | quadprog, 0.002, 72.1s |

| SolveNNQ | FISTABT | SBB | |||||

|---|---|---|---|---|---|---|---|

| Data | rel. err | time | rel. err | time | rel. err | time | |

| nph | 1 | 3s | 0.009 | 2s | 171s | ||

| 1.5 | 5.6s | 0.04 | 4.3s | 0.009 | 600s | ||

| 2 | 8.2s | 0.06 | 6.8s | 0.02 | 600s | ||

| sts | 1 | 2.1s | 0.01 | 2.3s | 206s | ||

| 1.5 | 4.9s | 0.06 | 4.7s | 0.01 | 600s | ||

| 2 | 9.7s | 0.1 | 7.1s | 0.04 | 600s | ||

| hst | 1 | 2.5s | 0.02 | 2.3s | 275s | ||

| 1.5 | 0.001 | 6.5s | 0.06 | 4.7s | 0.02 | 600s | |

| 2 | 0.001 | 11.1s | 0.1 | 7.4s | 0.05 | 600s | |

| FNNLS | lsqnonneg | quadprog | |||||

|---|---|---|---|---|---|---|---|

| Data | rel. err | time | rel. err | time | rel. err | time | |

| nph | 1 | 15.4s | 25s | 8.8s | |||

| 1.5 | 60s | 105s | 17.9s | ||||

| 2 | 94s | 181s | 33.3s | ||||

| sts | 1 | 14s | 22.7s | 9.4s | |||

| 1.5 | 48.9s | 87s | 20.4s | ||||

| 2 | 80s | 156s | 0.001 | 38.6s | |||

| hst | 1 | 79.4s | 182s | 9.4s | |||

| 1.5 | 272s | 651s | 0.001 | 20.9s | |||

| 2 | 459s | 1060s | 0.003 | 47.2s | |||

|

|

|

|

|

|

|

|

| (a) | (b) | (c) | (d) |

|

|

|

|

|

|

|

|

| (a) | (b) | (c) | (d) |

|

|

|

|

|

|

|

|

| (a) | (b) | (c) | (d) |

We compared SolveNNQ with several software that can solve NNLS, including FISTA with backtracking (FISTABT) [35, 36], SBB [8], FNNLS [9], lsqnonneg of MATLAB, and a single call of quadprog. Table 5 shows the statistics of the relative mean square errors and the running times. Figure 6 shows the deblurred images of hst produced by different software. When the relative mean square error of an image is well below 0.01, it is visually non-distinguishable from the original. In our experiments, SolveNNQ is very efficient and it produces a very good output. Figures 7–9 show some vertical pairs of blurred images and deblurred images recovered by SolveNNQ. We emphasize that we do not claim a solution for image deblurring as there are many issues that we do not address; we only seek to demonstrate the potential of the iterative scheme.

| SolveNNQ | FISTABT | SBB | |||||

|---|---|---|---|---|---|---|---|

| rel. err | time | rel. err | time | rel. err | time | ||

| walk | 2 | 9.6s | 350s | 5.4s | |||

| 3 | 19.1s | 1340s | 16.4s | ||||

| 4 | 33.1s | 2630s | 43.5s | ||||

| heli | 2 | 1.9s | 800s | 4.9s | |||

| 3 | 4.6s | 800s | 8.1s | ||||

| 4 | 8.2s | 800s | 36.8s | ||||

| lion | 2 | 0.9s | 104s | 0.9s | |||

| 3 | 1.6s | 185s | 1s | ||||

| 4 | 3.2s | 800s | 3.7s | ||||

| FNNLS | lsqnonneg | quadprog | |||||

|---|---|---|---|---|---|---|---|

| rel. err | time | rel. err | time | rel. err | time | ||

| walk | 2 | 144s | 220s | 26.9s | |||

| 3 | 270s | 447s | 76.3s | ||||

| 4 | 414s | 748s | 163s | ||||

| heli | 2 | 57.2s | 93.8s | 12.3s | |||

| 3 | 103s | 187s | 37.2s | ||||

| 4 | 157s | 289s | 54.7s | ||||

| lion | 2 | 4.8s | 6.8s | 5.4s | |||

| 3 | 8.9s | 13s | 11.7s | ||||

| 4 | 14.1s | 21.2s | 20.5 | ||||

|

|

|

|

| walk, thermal | walk, extracted | walk, | SolveNNQ, rel. err = |

|

|

|

|

| heli, thermal | heli, extracted | heli, | SolveNNQ, rel. err = |

|

|||

| lion, thermal | lion, extracted | lion, | SolveNNQ, rel. err = |

|

|

|

| (a) Thermal, original. | (b) Red, thresholded. | (c) Green, thresholded. |

|

|

|

| (d) Blue, thresholded. | (e) Recombined. |

| SolveNNQ | FISTABT | SBB | ||||||||||

| R | red | green | blue | total | red | green | blue | total | red | green | blue | total |

| 2 | 0.5s | 0.6s | 2.6s | 3.7s | 362s | 48.1s | 160s | 570.1s | 2.1s | 0.7s | 1.5s | 4.3s |

| 3 | 1s | 1.3s | 5.1s | 7.4s | 663s | 176s | 301s | 1140s | 2.4s | 1.3s | 2.8s | 6.5s |

| 4 | 2s | 1.9s | 7.7s | 11.6s | 800s | 583s | 800s | 2183s | 11.5s | 5.5s | 9.8s | 26.8s |

| FNNLS | lsqnonneg | quadprog | ||||||||||

| R | red | green | blue | total | red | green | blue | total | red | green | blue | total |

| 2 | 4.7s | 4.8s | 30.6s | 40.1s | 6.5s | 6.6s | 42.5s | 55.6s | 8.7s | 9.3s | 10.6s | 28.6s |

| 3 | 8.4s | 8.1s | 54.1s | 70.6s | 12.2s | 11.3s | 79.2s | 102.7s | 23.1s | 26.6s | 30.6s | 80.3s |

| 4 | 14s | 13.2s | 78.1s | 105.3s | 19.4s | 18.6s | 130s | 168s | 38.6s | 41.7s | 42.3s | 122.6s |

|

|

|

|

|

| Red, | Green, | Blue, | Recombined, | SolveNNQ |

|

|

|

|

|

| Red, | Green, | Blue, | Recombined, | SolveNNQ |

|

|

|

|

|

| Red, | Green, | Blue, | Recombined, | SolveNNQ |

We also experimented with some thermal images walk, heli, lion, and dog [42, 43, 44]; such thermal images are typically encountered in surveillance. In each case, we extract the dominant color, down-sample, and apply thresholding to obtain a gray-scale image that omits most of the irrelevant parts. Afterwards, we apply the uniform out-of-focus point spread function for blurring [7]—the atmospheric turbulence point spread function is not relevant in these cases. There is a positive parameter . Given an -pixel and a -pixel, if , the weight factor for them is ; otherwise, the weight factor is zero. The larger is, the blurrier is the image. The construction of the matrix works as in the atmospheric turbulence point spread function.

We use a certain number of the most negative coordinates of the gradient at the zero vector, and then we call quadprog with these coordinates as the only free variables to obtain the initial solution . Afterwards, SolveNNQ iterates as described previously. Figure 10 shows the thermal images, the extracted images, the blurred images, and the deblurred images produced by SolveNNQ.

We did another experiment on deblurring the red, green, and blue color panes of a dog thermal image separately, followed by recombining the deblurred color panes. Figure 11(a) shows the original thermal image of a dog. Figures 11(b)–(d) show the gray-scale versions of the red, green, and blue pixels which have been thresholded for sparsification. Figure 11(e) shows the image obtained by combining Figures 11(b)–(d); the result is in essence an extraction of the dog in the foreground. We blur the different color panes; Figure 12 shows these blurred color panes and their recombinations. We deblur the blurred versions of the different color panes and then recombine the deblurred color panes. The rightmost column in Figure 12 shows the recombinations of the deblurred output of SolveNNQ. As in the case of the images walk, heli, and lion, the outputs of SolveNNQ, FISTABT, SBB, FNNLS, lsqnonneg, and a single call quadprog are visually non-distinguishable from each other. Table 7 shows that the total running times of SolveNNQ over the three color panes are very competitive when compared with the other software.

4 Analysis for NNLS and ZHLG

In this section, we prove that there exists a unit descent direction in the -th iteration of SolveNNQ such that for NNLS and ZHLG, where , and for NNLS and for ZHLG. Combined with the assumption that the distance between and the minimum in direction is at least , we will show that the gap is decreased by a factor after every iterations.

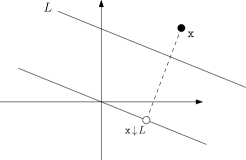

Let be an affine subspace in . For every , define to be the projection of to the linear subspace parallel to —the translate of that contains the origin. Figure 13 gives an illustration. Note that may not belong to ; it does if is a linear subspace. The projection of to can be implemented by multiplying with an appropriate matrix , i.e., . Thus, , so we will write without any bracket.

Since there are only the non-negativity constraints for NNLS and ZHLG, we do not have the Lagrange multipliers and . So is the optimal solution of that SolveNNQ solves in the -th iteration under the constraints that for all .

Recall that, in the pseudocode of SolveNNQ, is a sequence of indices such that , and is sorted in non-decreasing order of . Define . The following result follows from the definitions of and .

Lemma 3.

For all , if , then ; otherwise, . Moreover, is a conical combination of .

We show that there exists a subset of indices such that is bounded away from zero.

Lemma 4.

For any , there exists in with rank at most such that for every , if or precedes in , then .

Proof.

Consider a histogram of against . The total length of the vertical bars in is equal to . Consider another histogram of against . The total length of the vertical bars in is equal to , which is equal to 1 because . Recall that the indices of are sorted in non-decreasing order of . As for all , the ordering in is the same as the non-increasing order of . Therefore, the total length of the vertical bars in for the first indices is at least . It implies that when we scan the indices in from left to right, we must encounter an index among the first indices such that the vertical bar in at is not shorter than the vertical bar in at , i.e., for every that precedes in . This index satisfies the lemma. ∎

Next, we boost the angle bound implied by Lemma 4 by showing that makes a much smaller angle with some conical combination of .

Lemma 5.

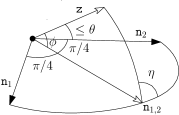

Take any . Let be a vector in for some . Suppose that there is a set of unit vectors in such that the vectors in are mutually orthogonal, and for every , . There exists a conical combination of the vectors in such that .

Proof.

Let . If , we can pick any vector as because for . Suppose not. Let be a maximal subset of whose size is a power of 2. Arbitrarily label the vectors in as . Consider the unit vector . Let . Refer to Figure 14. By assumption, . Let be the non-acute angle between the plane and the plane . By the spherical law of cosines, . Note that as . It implies that . The same analysis holds between and the unit vector , and so on. In the end, we obtain vectors for such that . Call this the first stage. Repeat the above with the unit vectors in the second stage and so on. We end up with only one vector in stages. If we ever produce a vector that makes an angle at most with before going through all stages, the lemma is true. Otherwise, we produce a vector in the end such that . ∎

Lemma 6.

The vectors in have a unit conical combination such that is a descent direction from and .

Proof.

Since is a conical combination of , makes a non-obtuse angle with any vector in . Then, Lemmas 4 and 5 imply that the vectors in have a unit conical combination such that , where . As , we have which implies that . For any feasible solution and any non-negative values , is also a feasible solution. It follows that is a feasible solution for all . For NNLS and ZHLG, the primal problem has no constraint other than the non-negativity constraints . By the KKT conditions, we have , which implies that . Moreover, because and so the component of orthogonal to vanishes in the inner product. Then, the acuteness of implies that . In all, is a descent direction. ∎

We use Lemma 6 to establish a convergence rate under an assumption.

Lemma 7.

Let be a unit descent direction from that satisfies Lemma 6. Let be the feasible point that minimizes on the ray from in direction .

-

(i)

, where .

-

(ii)

If there exists such that , then .

Proof.

Irrespective of whether is computed in line 11 or 14 of SolveNNQ, is disjoint from , which makes Lemma 1 applicable.

Since for an NNLS or ZHLG problem, we have . The last equality comes from the fact that , so the component of orthogonal to vanishes in the inner product. By Lemma 6, .

The inequality above takes care of the nominator in the bound in Lemma 1 multiplied by . The denominator in the bound in Lemma 1 multiplied by is equal to .

Recall that is for and zero otherwise. Therefore, is zero for and otherwise. If , then , which implies that . By the complementary slackness, if , then , which implies that as is non-negative. Altogether, we conclude that for , if or (, then ; otherwise, . Therefore, . As a result, .

Hence, , where . This completes the proof of (i).

Substituting (i) into Lemma 1 gives . It then follows from the assumption of in the lemma that . ∎

Let denote the time complexity of solving a convex quadratic program with variables and constraints. It is known that , where is bounded by the number of bits in the input [21]. Theorem 1 below gives the performance of SolveNNQ on NNLS and ZHLG.

Theorem 1.

Consider the application of SolveNNQ on an NNLS problem with constraints in or a ZHLG problem for points in . Let be for NNLS or for ZHLG. The initialization of SolveNNQ can be done in time for NNLS or time for ZHLG. Suppose that the following assumptions hold.

-

•

Assume that the threshold on the total number of iterations is not exceeded.

-

•

For all , let be a unit descent direction from that satisfies Lemma 6, assume that , where is some fixed value and is the feasible point that minimizes on the ray from in the direction .

Then, for all , for some , and each iteration in SolveNNQ takes time, where .

Proof.

Since the threshold is not exceeded by assumption, the set contains at least indices for all . Therefore, Lemma 7 implies that the gap decreases by a factor in iterations. It remains to analyze the running time.

For NNLS, is and we compute in time. For ZHLG, is and is a vector of dimension . Recall that , where is the incidence matrix for the complete graph. Therefore, each row of has at most three non-zero entries, meaning that can be computed in time.

The initial solution is obtained as follows. Sample indices from . The initial active set contains all indices in the range except for these sampled indices. We extract the rows and columns of and coordinates of that correspond to these sampled indices. Then, we call the solver in time to obtain the initial solution .

At the beginning of every iteration, we compute and update the active set. This step can be done in time because has at most non-zero entries. At most indices are absent from the updated active set because the threshold is not exceeded. We select the rows and columns of and coordinates of that correspond to the indices absent from the active set in time. The subsequent call of the solver takes time. So each iteration runs in time.

∎

We assume in Theorem 1 that for all for ease of presentation. The geometric-like convergence still holds even if the inequality is satisfied in only a constant fraction of any sequence of consecutive iterations (the iterations in that constant fraction need not be consecutive).

5 Analysis for DKSG

Let be the input points. So there are edges connecting them, and the problem is to determine the weights of these edges. Suppose that we have used SolveNNQ to obtain the current solution with respect to the active set . We are to analyze the convergence rate of SolveNNQ when computing the next solution with respect to the next active set .

In our analysis, we assume that the next active set is computed as , which is the same assumption that we made in the case of NNLS and ZHLG. Recall that is the subset of that SolveNNQ extracted so that variables in will be freed in the next iteration.

To analyze the convergence rate achieved by , we transform the original system to an extended system and analyze an imaginary run of SolveNNQ on this extended system. First, we convert the inequality constraints to equality constraints by introducing slack variables. Second, we need another large entry in each row of the contraint matrix for a technical reason. So we introduce another dummy variables. We give the details of the transformation in the next section.

5.1 Extended system

The original variables are for . We add one slack variable per constraint in . This gives a vector of slack variables. We also add a vector of dummy variables, one for each constraint in . As a result, the ambient space expands from to . We use to denote a point in . The idea is to map to a point in by appending slack and dummy variables:

Let be an arbitrarily large number. Define the following matrix :

The constraints for the extended system in are:

That is, for each point , the sum of the weights of edges incident to , minus , and plus must be equal to . If we can force to be , we can recover the original requirement of the sum of the weights of edges incident to being at least 1 because the slack variable can cancel any excess weight.

We can make nearly by changing the objective function from to . Although may not be exactly at an optimum, we will argue that it suffices for our purpose. Let denote the new objective function . Note that is quadratic. We have

Let denote the -dimensional vector of multipliers corresponding to the constraints . Let denote the -dimensional vector of multipliers corresponding to the constraints .

Lemma 8.

Let be an optimal solution for the original system with respect to the active set . There is a feasible solution for the extended system with respect to the active set such that the following properties hold:

-

(i)

.

-

(ii)

.

-

(iii)

, where and .

-

(iv)

.

-

(v)

and approaches as increases.

Proof.

With respect to the active , we claim that a feasible solution of the extended system can be obtained by solving the following linear system. We use to denote the first coordinates of and the next coordinates of .

| (4) |

| (5) |

Equation (4) models the requirement of for some particular settings of , , and . Equation (5) models one of the primal feasibility constraints: .

First, we argue that (4) and (5) can be satisfied simultaneously. Observe that is a KKT condition of the original system, so it can be satisfied by setting , and . We set and . These settings of , , , , and satisfy (4). To satisfy (5), we use and the setting of to set . The satisfiability of (4) and (5) is thus verified. These settings yield the following candidate solution for the extended system and establishes the correctness of (i)–(iv):

We do not know whether is a feasible solution for the extended system yet. We argue that satisfy the primal and dual feasibilities of the extended system. Equation 5 explicitly models one of the primal feasibility conditions: . The other two feasibility conditions that need to be checked are and for . By the dual feasibility of the original system, we have and for , which implies that for . By the primal feasibility of the original system, we have . Since , we have . As by the primal feasibility of the original system, we have . Hence, .

Finally, , which approaches as increases. This proves (v). ∎

We remark that may not be an optimal solution for the extended system. Indeed, one can check that may be positive for some , implying that may not satisfy complementary slackness.

We will analyze an imaginary run of SolveNNQ on this extended system starting from . Since no index in the range is put into an active set by SolveNNQ, the run on the extended system mimics what SolveNNQ does on the original system.

5.2 A descent direction for the extended system

Let be a feasible solution of the extended solution corresponding to as promised by Lemma 8. Recall that, in the pseudocode of SolveNNQ, is a sequence of indices such that . Without loss of generality, we assume that and that the edge with index 1 is .

In an imaginary run of SolveNNQ on the extended system starting from , we will free the variable corresponding to the first coordinate of . So we will increase the variable , which means that the descent direction is some appropriate component of the vector in . We introduce some definitions in order to characterize the descent direction.

Definition 1.

Define the following set of indices

The role of for the extended system is analogous to the role of for the original system. All dummy variables belong to . A slack variable is in if and only if the correponding constraint in is not tight.

Definition 2.

For all , let . So and it tells us the entries in the -th constraint in that are affected if is increased. Note that always contains .

Definition 3.

For all , define the following quantities:

In the definition of , since we set all variables not in to zero, the slack variables that are excluded must be subtracted from the vector on the right hand side of the constraints . By Lemma 8, for any , if , the value of the corresponding slack variable is . This consideration justifies the definition of .

Definition 4.

Let . It is a non-empty subspace of because it contains the projection of to .

Definition 5.

For all , define a vector in as follows:

Observe that . Also, if , then ; otherwise, . It is because the column index is the index of edge by assumption.

Note that every is orthogonal to . The vectors and the vectors that are parallel to span the linear subspace .

We want to analyze the effect of using as a descent direction. The direction is equal to . However, the vectors are not orthonormal, so we first orthonormalize using the Gram-Schmidt method [45] in order to express . The method first generates mutually orthogonal vectors so that we can normalize each individually later.

First, define . For in this order, define

This process generates by subtracting the component of that lies in . The set of vectors are mutually orthogonal. Then, we define the following set of orthonormal vectors:

Lemma 9.

The following properties are satisfied.

-

(i)

For all , .

-

(ii)

, and for , .

-

(iii)

For all and , for .

Proof.

Observe that for , for all . This implies that for all and hence . This proves (i).

Similarly, for , we have , which imples that . Then, coupled with the fact that , we get . This proves (ii).

We show (iii) inductively. The base case is trivial as . Consider the computation of . We can argue as in proving (i) that for all . Also, for all . Therefore, the inner product for any does not receive any contributions from the entries with indices in the range . By a similar reasoning, there are no contributions from the entries with indices in the range . It follows that, for any , as the largest magnitude of the first entries of is 1, and the largest magnitude of the first entries of is at most by induction assumption. By (i), . As a result, the largest magnitude of the first entries of is at most by induction assumption and the assumption that . Therefore, among the first entries of , the smallest entry is at least and the largest entry is at most . This proves (iii). ∎

Let . We analyze the value of and show that is a descent direction.

Lemma 10.

For a large enough , .

Proof.

We first obtain a simple expression for . Since the vectors are orthonormal, we have . The only non-zero entry of is a 1 at position 1. By Lemma 9, for . Note that and by Lemma 9(ii), . Therefore,

| (6) |

Let . Therefore, for all , if , then . We claim that for a sufficiently large ,

| (7) |

The proof goes as follows. By (6),

Clearly, which is equal to by Lemma 8. Recall that is the most negative entry of by assumption. For , if , then by the defintion of ; otherwise, which is zero by Lemma 8. Take any . By Lemma 9(i) and (iii), and are at most . Therefore, and have negligible magnitude for a large enough . It follows that is no more than plus a non-negative number of negligible magnitude in the worst case. The result is thus less than . This completes the proof of (7).

For all , the -th constraint in the extended system is a hyperplane in for which the -th row of is a normal vector. As a result, for any direction from the feasible solution that is parallel to the hyperplanes encoded by , the vector is orthogonal to , which gives

| (8) |

The last step uses the relation of from Lemma 8(iv).

As we move from in direction , our projection in moves in the positive direction, and so increases. During the movement, for any , the coordinate remains unchanged by the definition of . Specifically, if , then remains at zero; if , is fixed at , where . Some coordinates among , which are positive, may decrease. The feasibility constraints are thus preserved for some extent of the movement. By (8), , which is equal to as . By (7), . Clearly, . So . ∎

5.3 Convergence

Our analysis takes a detour via the extended system. Since the extended system is also a non-negative quadratic programming problem, Lemma 1 is applicable. The natural goal is thus to use the descent direction from and prove lower bounds for and , where is the feasible point that minimizes in direction from , is the optimal solution of the extended sytsem, and .

We prove a lower bound for . As in the case of NNLS and ZHLG, we do not know a lower bound for . Therefore, we derive a convergence result based the assumption that is bounded from below.

Let be an active set that is a subset of disjoint from . So is a descent direction from with respect to . Let be any feasible solution of the extended system with respect to such that . Let be the unit vector from towards . We bound the ratio from below. Note that is a descent direction from with respect to . Therefore, both and are negative, and the ratio is thus positive.

Lemma 11.

Let be any feasible solution of the extended system with respect to an active set that is a subset of disjoint from . Assume that . Let . For a sufficiently large ,

Proof.

As in the proof of Lemma 10, let . Therefore, for all , if , then . We claim that there exists a non-negative that approaches zero as increases such that

| (9) |

Since , it suffices to prove that .

If , then ; otherwise, . Therefore, if , then ; otherwise, by Lemma 8, .

Take any . If , then and by Lemma 8, which implies that because by the primal feasibility constraint. If , then , so , where , which implies that .

We conclude that either is negative, or it is at most some negligible postive value for a large enough . As a result, . This completes the proof of (9).

By (8), Lemma 10, and (9), we have

Observe that is positive because, by (8) and (9), which is positive for a large enough . As and is a unit vector, we have . We conclude that for a large enough , we have

| (10) |

We analyze . Take any . There are three cases.

-

•

. Then and by Lemma 8, which means that contributes to if and only if .

-

•

. Then, must belong to , which implies that , where . By the complementary slackness of the original system, which implies that by Lemma 8.

-

•

. Then, by Lemma 8.

We conclude that . Substituting into (10) gives the lemma. ∎

Let be the active set used by SolveNNQ for the next iteration on the original system. Note that is a subset of disjoint from . It follows that is disjoint from as well. Let be the point that minimizes in the direction from . Let be the optimal solution of the extended system (with an empty active set). Recall is the solution of the original system with respect to computed by SolveNNQ. Let be the optimal DKSG solution. We prove that if , then is closer to than by a factor of .

Lemma 12.

If where is a fixed value independent of , then for a large enough ,

Proof.

Let be the optimal solution of the extended system with respect to the active set . By Lemmas 1 and 11 and the assumption that , we have

We obtain a feasible solution of the original system from as follows. Let be the projection of to the first coordinates. First, we set . Second, for each , if , then let , we pick an arbitrary such that , and we increase by . This completes the determination of . Since we move the excess of the dummy variables over 1 to the positive coordinates of , one can verify that . So is a feasible solution of the original system with respect to . Note that for any , must approach zero as increases. Otherwise, as consists of the term , would tend to as increases, contradicting the fact that which is bounded as increases. Therefore, as increases, tends to which is the first term in . The second term of is non-negative, which means that . We conclude that tends to as increases.

By a similar reasoning, we can also show that tends to as increases. By Lemma 8(v), tends to as increases. Hence, for a large enough ,

Hence, . ∎

As set in the definition of and as argued in the proof of Lemma 12, the contributions of to the coordinates of , , and become negligible as increases. Therefore, whether there exists a fixed in Lemma 12 such that is unaffected by when is large enough. Still, we have to leave the condition as an assumption to obtain the convergence result.

Theorem 2.

Consider the application of SolveNNQ on the DKSG problem with points in . The initialization of SolveNNQ can be done in time. Suppose that the following assumptions hold.

-

•

The threshold on the total number of iterations is not exceeded.

- •

Then, for all , for some , and each iteration in SolveNNQ takes time, where .

Proof.

By Lemma 12, the gap decreases by a factor in iterations. Thus, for some . It remains to analyze the running times.

We first compute . Recall that is , and resembles the incidence matrix for the complete graph in the sense that every non-zero entry of the incidence matrix gives rise to non-zero entries in the same column in . There are at most two non-zero entries in each column of the incidence matrix. Therefore, computing takes time. We form an initial graph as follows. Randomly include edges by sampling indices from . Also, pick a node and connect it to all other nodes in order to guarantee that the initial graph admits a feasible solution for the DKSG problem. There are at most edges. The initial active set contains all indices in the range except for the indices of the edges of the initial graph. We extract in time the rows and columns of that correspond to the edges of the initial graph. Then, we call the solver in time to obtain the initial solution . Therefore, the initialization time is .

At the beginning of every iteration, we compute and update the active set. This can be done in time because has at most non-zero entries. Since the threshold is not exceeded, at most indices are absent from the updated active set. We extract the corresponding rows and columns of in time. The subsequent call of the solver takes time, which dominates the other processing steps that take time. So each iteration runs in time. ∎

6 Conclusion

We presented a method that solves non-negative convex quadratic programming problems by calling a numerical solver iteratively. This method is very efficient when the intermediate and final solutions have a lot of zero coordinates. We experimented with two proximity graph problems and the non-negative least square problem in image deblurring. The method is often several times faster than a single call of quadprog of MATLAB. From our computational experience, we obtain a speedup when the percentage of positive coordinates in the final solution drops below 50%, and the speedup increases significantly as this percentage decreases. We proved that the iterative method converges efficiently under the assumption. We checked the assumption in our experiments on proximity graph and confirmed that the assumption hold. A possible research problem is proving a better convergence result under some weaker assumptions. The runtime of our method is sensitive to solution sparsity. As far as we know, we are the first to introduce a parameter explicitly to control the size of the intermediate problems. Another possible line of research is to explore the choice of given some prior knowledge of the solution sparsity.

References

- \bibcommenthead

- Daitch et al. [2009] Daitch, S.I., Kelner, J.A., Spielman, D.A.: Fitting a graph to vector data. In: Proceedings of the 26th Annual International Conference on Machine Learning, pp. 201–209 (2009). https://doi.org/10.1145/1553374.1553400

- Jebara et al. [2009] Jebara, T., Wang, J., Chang, S.-F.: Graph construction and -matching for semi-supervised learning. In: Proceedings of the 26th Annual International Conference on Machine Learning, pp. 441–448 (2009). https://doi.org/10.1145/1553374.1553432

- Kalofolias [2016] Kalofolias, V.: How to learn a graph from smooth signals. In: Proceedings of the 19th International Conference on Artificial Intelligence and Statistics, pp. 920–929 (2016)

- Zhang et al. [2014] Zhang, Y.-M., Huang, K., Hou, X., Liu, C.-L.: Learning locality preserving graph from data. IEEE Tranactions on Cybernetics 44(11), 2088–2098 (2014) https://doi.org/%****␣paper.bbl␣Line␣100␣****10.1109/TCYB.2014.2300489

- Cheng et al. [2021] Cheng, S.-W., Cheong, O., Lee, T., Ren, Z.: Fitting a graph to one-dimensional data. Theoretical Computer Science 867, 40–49 (2021) https://doi.org/10.1016/j.tcs.2021.03.020

- Hanke et al. [2000] Hanke, M., Nagy, J.G., Vogel, C.: Quasi-Newton approach to nonnegative image restorations. Linear Algebra and Its Applications 316, 223–236 (2000) https://doi.org/10.1016/S0024-3795(00)00116-6

- Lagendijk and Biemond [1991] Lagendijk, R.L., Biemond, J.: Iterative Identification and Restoration of Images. Springer, New York (1991). https://doi.org/10.1007/978-1-4615-3980-3

- Kim et al. [2013] Kim, D., Sra, S., Dhillon, I.S.: A non-monotonic method for large-scale non-negative least squares. Optimization Methods and Software 28(5), 1012–1039 (2013) https://doi.org/10.1080/10556788.2012.656368

- Lawson and Hanson [1995] Lawson, C.L., Hanson, R.J.: Solving Least Squares Problems. Society for Industrial and Applied Mathematics, Philadelphia (1995). https://doi.org/10.1137/1.9781611971217

- Clarkson [1995] Clarkson, K.L.: Las Vegas algorithms for linear and integer programming when the dimension is small. Discrete and Computational Geometry 42, 488–499 (1995) https://doi.org/10.1145/201019.201036

- Dyer and Frieze [1989] Dyer, M.E., Frieze, A.M.: A randomized algorithm for fixed-dimensional linear programming. Mathematical Programming 44, 203–212 (1989) https://doi.org/%****␣paper.bbl␣Line␣200␣****10.1007/BF01587088

- Gärtner [1995] Gärtner, B.: A subexponential algorithm for abstract optimization problems. SIAM Journal on Computing 24, 1018–1035 (1995) https://doi.org/10.1137/S0097539793250287

- Matoušek et al. [1996] Matoušek, J., Sharir, M., Welzl, E.: A subexponential bound for linear programming. Algorithmica 16, 498–516 (1996) https://doi.org/10.1007/BF01940877

- Sharir and Welzl [1992] Sharir, M., Welzl, E.: A combinatorial bound for linear programming and related problems. In: Proceeeings of the 9th Annual Symposium on Theoretical Analysis of Computer Science, pp. 569–579 (1992). https://doi.org/10.1007/3-540-55210-3_213

- Brise and Gärtner [2011] Brise, Y., Gärtner, B.: Clarkson’s algorithm for violator spaces. Computational Geometry: Theory and Applications 44, 70–81 (2011) https://doi.org/10.1016/j.comgeo.2010.09.003

- Gärtner et al. [2008] Gärtner, B., Matoušek, J., Rüst, L., Škovroň, P.: Violator spaces: structure and algorithms. Discrete Applied Mathematics 156, 2124–2141 (2008) https://doi.org/10.1016/j.dam.2007.08.048

- Kozlov et al. [1979] Kozlov, M.K., Tarasov, S.P., Khachiyan, L.G.: Polynomial solvability of convex quadratic programming. Soviet Mathematics Doklady 20, 1108–1111 (1979) https://doi.org/10.1016/0041-5553(80)90098-1

- Bland et al. [1980] Bland, R.G., Goldfarb, D., Todd, M.J.: The ellipsoid method: A survey. (1980). https://doi.org/10.1287/opre.29.6.1039

- Khachiyan [1980] Khachiyan, L.G.: Polynomial algorithms in linear programming. USSR Computational Mathematics and Mathematical Physics 20(1), 53–72 (1980) https://doi.org/10.1016/0041-5553(80)90061-0

- Kapoor and Vaidya [1986] Kapoor, S., Vaidya, P.M.: Fast algorithms for convex quadratic programming and multicommodity flows. In: Proceedings of the 18th Annual ACM Symposium on Theory of Computing, pp. 147–159 (1986). https://doi.org/10.1145/12130.12145

- Monteiro and Adler [1989] Monteiro, R.D.C., Adler, I.: Interior path following primal-dual algorithms. Part II: convex quadratic programming. Mathematical Programming 44, 43–66 (1989) https://doi.org/10.1007/BF01587076