On new data sources for the production of official statistics

Abstract

In recent years we have witnessed the rise of new data sources for the potential production of official statistics, which, by and large, can be classified as survey, administrative, and digital data. Apart from the differences in their generation and collection, we claim that their lack of statistical metadata, their economic value, and their lack of ownership by data holders pose several entangled challenges lurking behind the incorporation of new data into the routine production of official statistics. We argue that every challenge must be duly overcome by the international community before new statistical products based on these sources can be delivered. These challenges can be naturally classified into entangled issues regarding access to data, statistical methodology, quality, information technologies, and management. We identify the most relevant ones, which must necessarily be tackled before new data sources can be considered fully incorporated into the production of official statistics.

1 Introduction

In October 2018, the 104th DGINS conference (DGINS, 2018), gathering all directors general of the European Statistical System (ESS), “[a]gree[d] that the variety of new data sources, computational paradigms and tools will require amendments to the statistical business architecture, processes, production models, IT infrastructures, methodological and quality frameworks, and the corresponding governance structures, and therefore invite[d] the ESS to formally outline and assess such amendments”. This statement certainly holds for the production of official statistics in any statistical office.

More often than not, this need to modernise the production of official statistics is associated with the rise of Big Data (e.g. DGINS, 2013). In our view, however, this need is also naturally linked to the use of administrative data (e.g. ESS, 2013) and, even earlier, to the efforts to consolidate an international industry for the production of official statistics through shared tools, common methods, approved standards, compatible metadata, joint production models and congruent architectures (HLGMOS, 2011; UNECE, 2019).

Diverse analyses in the literature provide insights into the challenges that Big Data, and new digital data sources in general, pose for the production of official statistics (Struijs et al., 2014; Landefeld, 2014; Japec et al., 2015; Reimsbach-Kounatze, 2015; Kitchin, 2015b; Hammer et al., 2017; Giczi and Szőke, 2018; Braaksma and Zeelenberg, 2020). These analyses are mostly strategic, high-level, and top-down. In this work we take a bottom-up approach, aiming mainly to identify the factors explaining why statistical offices are not yet producing outputs based on all these new data sources. Simply put: why are statistical offices not yet routinely producing official statistics based on these new digital data sources?

Our main thesis is that, for statistical products based on new data sources to be routinely disseminated under updated national and international legal regulations, all the issues identified below must, at least, be given a widely acceptable solution. Should we fail to meet the challenges behind any one of these issues, the new products cannot be achieved. Thus, we are facing an intrinsically multifaceted problem. Furthermore, we shall argue that new data sources are compelling statistical offices to take on a new role, derived from the social, statistical, and technical complexity of the new challenges.

These challenging issues are discussed separately in each section. In section 2 we review relevant aspects of the concept of data and its implications for the production of official statistics. In section 3 we tackle the issue of access to these new data sources. In section 4 we briefly identify issues regarding the new statistical methodology required for production with both traditional and new data. In section 5 we deal with the implications for the quality assurance framework. In section 6 we briefly address questions about information technologies. In section 7 we offer some reflections on skills, human resources, and management in statistical offices. We close with some conclusions in section 8.

2 Data: survey, administrative, digital

2.1 Some definitions

The production of official statistics is a multifaceted concept, and many of its facets are affected by the nature of the data. We reflect on some of them here. Statistical offices nowadays identify three basic data sources: survey, administrative, and digital. This distinction runs parallel to the historical development of data sources.

A survey is a “scientific study of an existing population of units typified by persons, institutions, or physical objects” (Lessler and Kalsbeek, 1992). This is not to be confused with sampling itself, which was introduced into official statistics by A. Kiær in 1895 as the representative method (Kiær, 1897), given a solid mathematical basis and developed into probability sampling first by Bowley (1906) and definitively by Neyman (1934), further developed at the US Census Bureau (Deming, 1950; Hansen et al., 1966; Hansen, 1987) (see also Smith, 1976), and still in common practice in statistical offices worldwide (Bethlehem, 2009a; Brewer, 2013). Survey sampling has been the preferred and traditional tool for producing official information about any finite population. The advent of different technologies in the 20th century led to a proliferation of so-called data collection modes (CAPI, CATI, CAWI, EDI, etc.) (cf. e.g. Biemer and Lyberg, 2003), but the essence of a survey remains unchanged.

Administrative data is “the set of units and data derived from an administrative source”, i.e. from an “organisational unit responsible for implementing an administrative regulation (or group of regulations), for which the corresponding register of units and the transactions are viewed as a source of statistical data” (OECD, 2008). Some experts (see Deliverable 1.3 of ESS, 2013) drop the notion of units to avoid potential confusion and refer simply to data. All in all, these definitions refer to registers developed and maintained for administrative rather than statistical purposes. Beyond the diverse national traditions in using these data for the production of official statistics, in the European context Regulation No. 223/2009 provides explicit legal support for national statistical offices to access this data source for the development, production and dissemination of European statistics (see Art. 24 of European Parliament and Council Regulation 223/2009, 2009). Curiously enough, the Sumerian Kish tablet (ca. 3500 BC), one of the earliest examples of human writing, seems to be an administrative record for statistical purposes.

More recently, the proliferation of digital data in a growing number of human activities has posed the natural challenge for statistical offices of using this information to produce official statistics. The term Big Data has polarised this debate, with the apparent overuse of the “Vs” definitions (volume, velocity, variety, …) (Laney, 2001; Normandeau, 2013). However, the phenomenon goes beyond this characterization, extending the potential for statistical use to any sort of digital data. By analogy with administrative data, we propose to define digital data as the set of units and data derived from a digital source, i.e. from a digital information system, for which the associated databases are viewed as a source of statistical data.

Notice that the OECD (2008) does not include this among the types of data sources, probably because this definition of digital data may be read as falling within the more general definition of administrative data above, since administrative registers are nowadays also digitalised. We shall therefore restrict administrative data to the public domain, in line with current practice in statistical offices and with EU Regulation 223/2009.

This distinction among the three data sources runs parallel to their collection modalities: surveys are essentially collected through structured interviews administered directly to the statistical units of interest, administrative registers are collected from public administrative units, and digital data come from an open-ended variety of potential private data providers (individuals or organisations). However, we want to emphasise that the differences among these data sources go deeper than their collection modalities. Furthermore, these differences lie at the core of many of the challenges described in the next sections.

2.2 Statistical metadata

The first determining factor for the differences among these data sources is the presence or absence of statistical metadata, i.e. metadata for statistical purposes. It is important not only to understand what this means but, especially, to identify why it introduces differences. Data generated with statistical structural metadata, such as survey data, comprise variables following strict definitions directly related to the target indicators and aggregates under analysis (unemployment rates, price indices, tourism statistics, …). These definitions are operationalised through carefully designed questionnaire items. Data are processed using survey methodology, which provides a rigorous inferential framework connecting data sets with the target populations at stake.

In contrast, data generated without statistical structural metadata, such as administrative and digital data, comprise variables only loosely connected with target indicators and aggregates. This affects their subsequent processing in many respects, especially data quality and inference with respect to target populations. The ultimate reason for this absence of statistical metadata is that these data are generated to provide a non-statistical transactional service (taxes, medical care benefits, financial transactions, telecommunication, …). This has already been identified in the literature (Hand, 2018). Unlike survey data, administrative and digital data are generated before their corresponding statistical metadata. They do have metadata, but not for statistical purposes.

In our view, the key distinguishing factor derived from this absence of statistical metadata arises from the explicit or (mostly) implicit conception of information behind data. This plays a critical role in the statistical production process. The concept of information gathers three complementary aspects, namely (i) syntactic aspects concerning the quantification of information, (ii) semantic problems related to meaning, and (iii) utility issues regarding the value of information (see e.g. Floridi, 2019). In the traditional production of official statistics, we are all aware of the substantial investment in the metadata system providing rigorous and unambiguous definitions for each of the variables collected in a survey, work conducted prior to data collection. This provides survey data with a purposive semantic layer and noticeably increases its value (all three aspects of the concept of information meet in survey data). In contrast, administrative data are not generated under this umbrella of statistical metadata, but their semantic content is often still close enough to the statistical definitions used in a statistical office (think e.g. of the notions of employment, taxes, education grades, etc.). Nonetheless, the quality of administrative data for statistical purposes is still an issue (see e.g. Agafiţei et al., 2015; Foley et al., 2018; Keller et al., 2018). The situation with digital data is extreme. These data are generated to provide some kind of service completely extraneous to statistical production. Thus, meaning and value must be carefully worked out before the new data can be used in the production of official statistics (only the first layer of the concept of information is present in digital data). Some proposed architectures for the incorporation of new data sources (Ricciato, 2018b; Eurostat, 2019b) reflect this situation: a non-negligible amount of preprocessing is required before digital data can be incorporated into the statistical production process.

This different informational content of data for producing official statistics will prove to have far-reaching consequences for the production methodology. A well-known episode in the history of science illustrates this difference and its consequences: the Copernican scientific revolution, which replaced the Ptolemaic system with the Newtonian law of universal gravitation (Kuhn, 1957). The Ptolemaic system enables us to compute and predict the behaviour of any celestial body by introducing more and more computational elements such as epicycles and deferents. Newton’s law of universal gravitation also enables us to compute this behaviour, but from a completely different perspective. We can liken the former to a purely syntactic use of data, whereas the latter incorporates meaning (theory) to some extent. This is not a black-or-white comparison, since there is some theory behind the Ptolemaic system (Aristotelian physics), but the difference in the understanding of natural phenomena provided by the two systems is striking, even with the same set of data. In other words, in the former case we just feed our observed astronomical data into a more or less intricate computational system, whereas in the latter case we make use of underlying assumptions providing context, meaning, and explanations for all the observed data. Analogously, consider the difference between a regression model and a random forest fitted to the same set of (big) data. In the former, some meaning is incorporated, or at least postulated, through the choice of a functional form between regressand and regressors (linear, logistic, multinomial, etc.). In the latter, only weaker computational assumptions are made. The situation is similar to the cosmological picture above and indeed underlies the dichotomy between the so-called “theory-driven” and “data-driven” approaches to data analysis (see e.g. Hand, 2019). This also parallels the debate between model-based and design-based inference (Smith, 1994), eventually settled in favour of the latter, as summarised by the following statement by Hansen et al. (1983, p. 785): “[…] it seems desirable, to the extent feasible, to avoid estimates or inferences that need to be defended as judgments of the analysts conducting the survey”. Avoiding prior hypotheses about data generation is possible with probability sampling (survey data), but it is no longer possible with new data sources. This duality has already been identified in the use of Big Data as the historical debate between rationalism and empiricism (Starmans, 2016).
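As a minimal illustration of why probability sampling needs no model of how the data were generated, the following Python sketch (our own, not taken from the cited works) uses the Horvitz-Thompson estimator of a population total, i.e. the sum over sampled units of y_k / pi_k, where pi_k is the known inclusion probability of unit k. Under simple random sampling without replacement, averaging the estimate over every possible sample recovers the true total exactly, whatever the values y_k are. The population values and function names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations

def horvitz_thompson_total(sample, y, pi):
    """Horvitz-Thompson estimate of the population total: sum of y_k / pi_k over sampled units."""
    return sum(Fraction(y[k]) / pi[k] for k in sample)

def expected_ht_under_srswor(y, n):
    """Design expectation of the HT estimator under simple random sampling without
    replacement of size n: every sample of size n is equally likely, so we average
    the estimate over all of them (feasible only for tiny populations)."""
    N = len(y)
    pi = [Fraction(n, N)] * N  # inclusion probability of each unit under SRSWOR
    samples = list(combinations(range(N), n))
    return sum(horvitz_thompson_total(s, y, pi) for s in samples) / len(samples)

# Hypothetical population values; no model for how they were generated is assumed.
y = [3, 7, 1, 12, 5, 9]
print(expected_ht_under_srswor(y, n=2), sum(y))  # 37 37
```

Exact fractions are used so that the equality between the design expectation and the true total is shown without rounding noise; the guarantee comes from the known inclusion probabilities, which is precisely what new data sources usually lack.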

Thus, as a challenging issue, we may ask whether statistical offices should still adopt a merely computational (empiricist) point of view à la Ptolemy or pursue theoretical (rationalist) findings à la Newton, perhaps in search of a better system of computation and estimation. Our community has not yet reached a clear position, and the answer will affect not only the statistical methodology for new data sources but the whole role of statistical offices in society. This change will indeed be profound.

2.3 Economic value

The second main difference among the three data sources arises from their economic value. Traditional survey data have little economic value for a data holder/provider compared with digital data. For example, when a company owning a database for an online job vacancy advertisement service is asked to provide data about its turnover, number of employees, R&D investment, etc., sharing this information hardly seems as critical as sharing the whole database for official statistical production. In the case of administrative data, which we restricted above to the public domain, the economic value for the public administration is secondary (statistical offices are indeed part of the public administration).

This economic value has diverse consequences for the incorporation of digital data sources into official statistics production. Data collection is clearly more demanding. On the one hand, technological challenges lie ahead in retrieving, preprocessing, storing, and/or transmitting these new databases. On the other hand, and more importantly, since it accesses the core business of data holders, official statistical production certainly disrupts their business processes to a greater degree. Moreover, technical staff are usually required to access these data sources and even to preprocess and interpret them for statistical purposes (e.g. telco data). This also bears directly on the skill profiles of official statisticians. Thus, collecting (paper or electronic) questionnaires is markedly different from accessing huge business databases.

The economic value of digital data is a key feature that demands careful attention from national and international statistical systems. The perceived risk of, e.g., setting up public-private partnerships (Robin et al., 2015) indeed runs parallel to this economic value. High economic value usually results from high investment, so sharing core business data with statistical offices may easily be perceived as too high a risk. However, if these public-private partnerships are perceived as an opportunity to increase this economic value (increasing e.g. data quality, the quality of commercial statistical products, and the social dimension of private economic activities), statistical production, and the information and knowledge generated from it, can be strengthened in society.

As we shall discuss in a later section, this suggests broadening the scope of official statistical outputs from traditionally closed outputs embedded in statistical domains (usually under strict legal regulation) to enriched, high-quality intermediate datasets for further customised production by other economic and social actors (researchers, companies, NGOs, …) in a variety of socioeconomic domains. This is also a deep change for National Statistical Systems.

2.4 Third people

The third main difference stems from the fact that these digital data refer to third people, not to the data holders themselves. These third people are clients, subscribers, etc. who share their private information in return for a business service. Implications immediately arise. Issues about the legal support for access are obvious (see section 3), but this factor is not entirely new. In survey methodology we already have the notion of a proxy respondent (see e.g. Cobb, 2018), and in administrative data, information about citizens, not about the data-holding public institutions, is the core of this data source.

Confidentiality and privacy issues naturally arise. Already in the traditional official statistical process, a whole production step is dedicated to statistical disclosure control (Hundepool et al., 2012), reducing the re-identification risk of any sampling unit while preserving the utility of the disseminated statistics. The data deluge has now increased this risk, since it has become more feasible to identify individual population units (Rocher et al., 2019), even though the data are no longer personally identified (in contrast to survey and administrative data). Apart from the spread of privacy-by-design statistical processes, more advanced cryptographic techniques such as Secure Multiparty Computation (see Zhao et al., 2019, and multiple references therein) must now be taken into consideration, especially regarding data integration.
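The re-identification risk discussed above can be illustrated with k-anonymity, one standard statistical disclosure control check (our choice for illustration; the cited works cover risk measures more broadly). The following Python sketch counts how many records share each combination of quasi-identifiers: a record that is unique on these attributes may be re-identifiable by linkage with external data even though it carries no name. The field names and values are hypothetical.

```python
from collections import Counter

def equivalence_class_sizes(records, quasi_identifiers):
    """For each record, count how many records share its quasi-identifier values."""
    keys = [tuple(r[q] for q in quasi_identifiers) for r in records]
    counts = Counter(keys)
    return [counts[key] for key in keys]

def sample_uniques(records, quasi_identifiers):
    """Records that are unique on the quasi-identifiers (highest re-identification risk)."""
    sizes = equivalence_class_sizes(records, quasi_identifiers)
    return [r for r, size in zip(records, sizes) if size == 1]

def is_k_anonymous(records, quasi_identifiers, k):
    """True if every combination of quasi-identifier values occurs at least k times."""
    sizes = equivalence_class_sizes(records, quasi_identifiers)
    return all(size >= k for size in sizes)

# Hypothetical pseudonymised records: no names, but attributes that could be linked externally.
records = [
    {"pseudo_id": "a1", "postcode": "28001", "birth_year": 1980, "sex": "F"},
    {"pseudo_id": "b2", "postcode": "28001", "birth_year": 1980, "sex": "F"},
    {"pseudo_id": "c3", "postcode": "28001", "birth_year": 1975, "sex": "M"},
    {"pseudo_id": "d4", "postcode": "28002", "birth_year": 1990, "sex": "F"},
]
qi = ["postcode", "birth_year", "sex"]
print([r["pseudo_id"] for r in sample_uniques(records, qi)])  # ['c3', 'd4']
print(is_k_anonymous(records, qi, k=2))  # False
```

The more attributes a digital source records about each unit, the more combinations become unique, which is one way to see why richer data raise the risk even without direct identifiers.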

Ethical issues should also be considered. Much can be written about the ethics of requesting private information from people or enterprises in general. Regarding new data sources, the debate about accessing data for the production of official statistics has received attention, in particular regarding privacy and confidentiality. Since we do not have a clear position on this issue, we simply wish to share two deliberately provocative reflections. Firstly, with both survey and administrative sources, data for official statistics are personal data in which people or business units are uniquely identified in internal sets of microdata at statistical offices. Take, for example, the European Health Survey (Eurostat, 2019a). Its questionnaires include items such as the following:

- HC08: When was the last time you visited a dentist or orthodontist on your own behalf (that is, not while only accompanying a child, spouse, etc.)?
- HC09: During the past four weeks ending yesterday, that is since (date), how many times did you visit a dentist or orthodontist on your own behalf?

This sensitive information is collected together with a full identification of each respondent. Another example is a long-standing and fundamental statistic for society: causes of death (Eurostat, 2020a). People who died by suicide or from alcohol abuse, for example, are clearly identified in internal sets of microdata at statistical offices. By contrast, most digital sources provide anonymous (or pseudonymous) data. Notice that for the European Health Survey even duly anonymised microdata are publicly shared. Have statistical offices not been careful enough so far in applying IT security and statistical disclosure control to scrupulously protect both the privacy and the confidentiality of statistical units in their traditional statistical products? Statistical offices have not used this information for anything other than strictly statistical purposes. Even despite the risk of identifiability, should the production of official statistics revise the ethics of its activity? Even for traditional sources?

Secondly, the fast generation of digital data nowadays clearly poses an immediate question: should society produce accurate and timely information on matters of public interest (CPI, GDP, unemployment rates, …, and even potentially novel insights) by taking advantage of this data deluge, or not? In all cases, this is posed in full compatibility with the growing economic sectors around digital data, for both statistical and non-statistical purposes.

Obviously, this debate is part of the social challenges raised by the generation of such an amount of digital information. Statistical offices cannot stand aside and should assume their role.

3 Access

Needless to say, if statistical offices have no access to new digital data sources, no official statistical product can be derived from them. Let us consider the increasingly common situation in which a new data source is identified to improve or refurbish an official statistical product. What prevents a statistical office from accessing the data? We have empirically identified four sets of issues: legal issues, data characteristics, access conditions, and business decisions.

3.1 Legal issues

Legal issues are apparently the most evident obstacle to a statistical office’s access to new digital data. It is worth underlining that access to administrative data has been explicitly included in the main European regulation governing European statistics (European Parliament and Council Regulation 223/2009, 2009). Along these lines, some countries have already amended their national regulations to include these new data sources explicitly in their Statistical Acts (see e.g. LoiiNum16a).

Certainly, very deep legal discussions can be started around the interpretation and scope of the different entangled regulations in the international and national legal systems but, in our opinion, they all boil down to three factors: (i) Statistical Acts, (ii) specific data source regulations, and (iii) general personal data and privacy protection regulations. Regarding Statistical Acts, two main considerations must be taken into account. On the one hand, by and large these regulations provide legal support for statistical offices to request data from different social agents. On the other hand, more rarely, these regulations also oblige these agents to provide the requested data, backed by sanctions in case of nonresponse (LFEP, 1989). Regarding data sources such as mobile network data, financial transaction data, online databases, etc., there commonly exist specific regulations protecting these data and restricting their use to their specific purposes (telecommunication, finance, online transactions, etc.). These regulations may pose unresolved conflicts with the preceding Statistical Acts. Besides, personal data and privacy protection regulations, usually enforced by Data Protection Agencies, increase the degree of complexity, since their exceptions for statistical purposes do not explicitly clarify which types of data source may be used for the production of official statistics.

When requesting access that is sustainable over time, all these issues must be surmounted, bearing in mind the perspectives of statistical offices, data holders, and statistical units (citizens and business units). Simultaneously, (i) legal support for statistical offices must be clearly stated, (ii) data holders must also be legally supported in providing data, especially about third people (statistical units), and (iii) the privacy and confidentiality of all social agents’ data must be guaranteed by law and in practice. Needless to say, law must be an instrument to preserve rights and provide legal support for all members of society.

3.2 Data characteristics

Data ecosystems for new data sources are highly complex and of very different natures. For example, telco data are generated in a complex cellular telecommunication network for many different internal technical and business purposes. Accessing data for statistical purposes implicitly requires identifying the subsets of data needed for statistical production. Not every piece of data is useful for statistical purposes. Moreover, raw data are not directly useful for these purposes and need some preprocessing. Even worse, raw digital data have a volume beyond the usual production standards of statistical offices and require technical assistance from telco engineers. Thus, some form of preprocessed or even intermediate data may be required instead, but then the details of this data processing or intermediate aggregation step need to be shared for later official statistical processing.
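As a minimal sketch of the kind of intermediate aggregation step described above (the record layout, the cell/hour grouping and the suppression threshold are hypothetical assumptions, not an actual telco pipeline), the following Python function turns pseudonymised event records into counts of distinct devices per cell and hour, suppressing small counts. Writing the step down as code is also one way of documenting it for later official statistical processing.

```python
from collections import defaultdict

def aggregate_events(events, min_count):
    """Turn raw (device, cell, hour) events into distinct-device counts per
    (cell, hour); counts below min_count are suppressed (returned as None)."""
    devices = defaultdict(set)
    for device, cell, hour in events:
        devices[(cell, hour)].add(device)  # a device seen twice counts once
    return {
        key: (len(ids) if len(ids) >= min_count else None)
        for key, ids in devices.items()
    }

# Hypothetical pseudonymised events; real network data are far richer.
events = [
    ("d1", "A", 8), ("d2", "A", 8), ("d3", "A", 8), ("d1", "A", 8),
    ("d4", "B", 8),
]
print(aggregate_events(events, min_count=2))  # {('A', 8): 3, ('B', 8): None}
```

Only the aggregated table would leave the data holder in this setting; the choices hidden in it (deduplication, grouping, threshold) are exactly the details that must be shared with the statistical office.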

All in all, the characteristics of new data for the production of official statistics make collaboration with data holders essential. This is completely novel for statistical offices.

3.3 Access conditions

As a result of the complexity behind new data sources, one option considered for using these data for statistical purposes is in-situ access, which avoids the risk of data leaving the data holders’ information systems. This option alleviates privacy and confidentiality issues, but the operational aspect must then be tackled, since the statistical office will somehow have to access these private information systems. A second option is to transmit the data from the data holders’ premises to the statistical offices’ information systems. No access to the private information systems is then needed, but privacy and confidentiality issues must be solved in advance, from both the legal and the operational points of view. Finally, a trusted third party may enter the scene, receiving the data from the data holders and then, possibly after some preprocessing, transmitting them to the statistical office. The confidentiality and privacy issue remains open, and part of the official statistical production process is further delegated.

A second condition concerns whether statistical offices have exclusive access to and use of these data. If more social agents were to request access to and use of these data sources (e.g. other public agencies, ministries, international organisations, etc.), the access conditions from the data holders’ point of view would become extremely complex. This raises a natural question about the potential leading social role of statistical offices in making these data available for the public good.

A third condition revolves around intellectual property rights and/or industrial secrecy requirements. Accessing these data sources usually involves core industrial processes of the data holders, who rightfully want to protect their know-how from competitors. Statistical offices must not distort market competition by leaking this information from one agent to another. Guarantees to this effect must be offered and formalised.

Fourthly, new data sources will be more valuable when combined with one another and with administrative and survey data. Furthermore, in a collaborative environment with data holders, it seems natural to consider sharing the result of this data integration (e.g. treating this intermediate output as a new statistical product). Operational aspects of this data integration step (especially regarding statistical disclosure control) must be tackled (e.g. with secure multiparty computation techniques (Zhao et al., 2019); see also section 6.5).
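To give a flavour of how data integration can proceed without pooling raw values, the following Python sketch simulates additive secret sharing, one basic building block of secure multiparty computation (we do not claim it is the protocol discussed in Zhao et al., 2019). Each data holder splits a private count into random shares so that only the total is revealed. The modulus, the number of computing parties and the values are illustrative assumptions; the sketch assumes honest-but-curious parties and runs everything in a single process.

```python
import secrets

# Assumed public modulus; it must exceed any total we want to recover.
MODULUS = 2**61 - 1

def share(value, n_parties):
    """Split a non-negative integer into n_parties additive shares modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares

def secure_sum(private_values, n_parties=3):
    """Simulate a secure sum: each data holder shares its value, computing
    party j adds up the j-th shares it receives, and only these partial sums
    are combined. With n_parties >= 2, each share on its own is uniformly
    random and says nothing about the value it came from."""
    all_shares = [share(v, n_parties) for v in private_values]
    partial_sums = [sum(s[j] for s in all_shares) % MODULUS for j in range(n_parties)]
    return sum(partial_sums) % MODULUS

# Hypothetical counts held by three data holders.
print(secure_sum([120, 45, 300]))  # 465
```

In a real deployment each share would travel to a different party over a separate channel; here they are kept in one list for brevity.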

Finally, as partially mentioned above, the costs of data retrieval, access, and/or processing brought about by the complexity of these data sources must also be taken into account. This issue may not arise when collaborating on research and one-off studies, but it does arise for long-term data provision for standard production. Recall principle 1 of the UN Principles for Access to Data for Official Statistics (UNGWG, 2016), which calls for data provision free of charge and on a voluntary basis. However, principle 6 explicitly states that “[t]he cost and effort of providing data access, including possible pre-processing, must be reasonable compared to the expected public benefit of the official statistics envisaged”. Moreover, this is complemented by principle 3, stating that “[w]hen data is collected from private organizations for the purpose of producing official statistics, the fairness of the distribution of the burden across the organizations has to be considered, in order to guarantee a level playing field”. These principles are therefore pertinent. However, the issue of cost is extremely intricate. Firstly, the essential principle of Official Statistics by which data provision for these purposes must be completely free of charge must be respected. Yet the costs of data extraction and data handling for statistical purposes need careful assessment, and this depends very sensitively on the concrete situation of the data holders. Several details need consideration: staff time in data processing, hardware computing time, hardware purchase and deployment (if necessary), software development or licences (if necessary), etc. In addition, the compensation for these costs may take different forms, from a direct payment to an implicit contribution within a long-term collaborative partnership.
In any case, notice that this compensation should cover data extraction and data handling, not the data themselves. Access to the data must be granted free of charge. Furthermore, if several data holders are at stake for the same data source, equal treatment must be ensured for each of them. This is a wholly new social scenario for the production of official statistics.

3.4 Business decisions

Apart from the preceding factors, apparent conflicts of interest and risk assessments may also advise decision-makers in private organisations against establishing partnerships with statistical offices. Conflicts of interest may arise from the perception of a potential collision in the target markets between statistical offices and private data holders/statistical producers. In our view this collision is only apparent: statistical products for the public good considered in National Statistical Plans are of limited profit for private producers, and where insights potentially overlap, collaboration will increase the value of all products. Furthermore, corporate social responsibility and activities for social good naturally invite private organisations to set up this public-private collaboration, broadening the scope of their activities to increase the economic and social value of their data, to contribute to the development of national data strategies, and to support policy making better aligned with their information needs.

All in all, access to and use of new data sources depend on a highly entangled set of challenging factors for many public and private organizations, but they offer an extraordinary opportunity to contribute to the production and dissemination of information in the present digital society. Statistical offices should strive to reshape their role to become active players in this new scenario.

4 Methodology

As stated in section 2, the lack of statistical metadata of new data sources and the fact that data are generated before any planning and design necessarily impinge directly on the core of traditional survey methodology, especially (but not only) by limiting the use of sampling designs for these new data sources. This means that an official statistician accessing a new data source cannot resort to the tools of the traditional (indeed, current) production framework to produce a new statistical output. This by no means implies that there are no statistical techniques to process and analyse these new data. Indeed, there exists a great deal of statistical methods (see e.g. Hall/CRC, 2020). What we lack is a new, extended production framework covering the methodological needs in every statistical domain for each new data source.

We shall focus in this section on key methodological aspects in the production of official statistics and share some reflections on the new methods.

4.1 Key methodological aspects: representativeness, bias, and inference

There exist key concepts in traditional survey methodology, such as sample representativeness, bias, and inference, which should be assessed in the light of the new types of data. Certainly, survey methodology is limited with new data sources, but it offers a template for a new, refurbished production framework: it provides modular statistical solutions for a diversity of methodological needs along the statistical process in all statistical domains (sample selection, record linkage, editing, imputation, weight calibration, variance estimation, statistical disclosure control, …). Furthermore, the connection between collected samples and target populations is firmly rooted in scientific grounds through design-based inference.

When considering an inference method other than sampling strategies (sampling designs together with asymptotically unbiased linear estimators), many official statisticians immediately react by alluding to sample representativeness. This combination of sampling designs and linear estimators is indeed in the DNA of official statisticians, and some first explorations of statistical methods to face this inferential challenge still resemble these sampling strategies (Beresewicz et al., 2018). In our view, the introduction of new methods should come with an explicit treatment of these key concepts (sample representativeness, bias, etc.).

To grasp the differences in these concepts between the statistical methods for survey data and those for new data sources, we shall briefly give our view on the origin of the strength official statisticians attach to these concepts in the traditional production framework. As T.M.F. Smith (1976) already pointed out, the design-based inference seminally introduced by J. Neyman (1934) allows the statistician to make inferences about the population regardless of its structure. In our view too, this is the essential trait that makes design-based methodology preferred in Official Statistics over other alternatives, in particular over model-based inference. As M. Hansen (1987) remarked, statistical models may provide more accurate estimates if the model is correct, which clearly shows the dependence of the final results on our a priori hypotheses about the population in model-based settings. Sampling designs free the official statistician from making hypotheses that are sometimes difficult to justify and to communicate.

This essential trait appears in the statistical methodology through the use of (asymptotically) design-unbiased linear estimators of the form $\hat{Y} = \sum_{k\in s}\omega_{ks}y_k$, where $s$ denotes the sample, $\omega_{ks}$ are the so-called sampling weights (possibly dependent on the sample $s$) and $y$ stands for the target variable, in order to estimate the population total $Y = \sum_{k\in U}y_k$. A number of techniques exist to deal with diverse circumstances regarding both imperfect data collection and data processing procedures, so that non-sampling errors are duly dealt with (Lessler and Kalsbeek, 1992; Särndal and Lundström, 2005). These techniques lead us to the appropriate sampling weights, usually dependent on auxiliary variables $\mathbf{x}$. Sampling weights are also present in the construction of variance estimates and thus of confidence intervals for the estimates.
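As an illustration only, the following Python sketch computes a linear estimator $\hat{Y} = \sum_{k\in s}\omega_{ks}y_k$ with Horvitz-Thompson weights $\omega_{ks} = 1/\pi_k$. It assumes Poisson sampling (independent inclusions with probabilities $\pi_k$), for which the unbiased variance estimator reduces to $\sum_{k\in s}(1-\pi_k)y_k^2/\pi_k^2$. The function names, the hypothetical population and the normal-approximation interval are our assumptions, and no adjustment for non-sampling errors is included.

```python
import math
import random


def poisson_sample(pi):
    """Poisson sampling: unit k is included independently with probability pi[k]."""
    return [k for k, p in enumerate(pi) if random.random() < p]


def ht_total(y, pi, sample):
    """Linear estimator with Horvitz-Thompson weights w_k = 1 / pi_k."""
    return sum(y[k] / pi[k] for k in sample)


def ht_variance_poisson(y, pi, sample):
    """Unbiased estimator of the variance of the HT total under Poisson sampling."""
    return sum((1 - pi[k]) * y[k] ** 2 / pi[k] ** 2 for k in sample)


def normal_ci(estimate, variance, z=1.96):
    """Approximate 95% confidence interval based on the normal approximation."""
    half_width = z * math.sqrt(variance)
    return estimate - half_width, estimate + half_width


if __name__ == "__main__":
    random.seed(1)
    # Hypothetical population: values y_k and inclusion probabilities increasing with y_k.
    y = [random.uniform(10, 100) for _ in range(1000)]
    pi = [min(1.0, 0.05 + yk / 1000) for yk in y]
    s = poisson_sample(pi)
    est = ht_total(y, pi, s)
    var = ht_variance_poisson(y, pi, s)
    print(f"true total {sum(y):.1f}, estimate {est:.1f}, 95% CI {normal_ci(est, var)}")
```

The sampling weights enter both the point estimate and the variance estimate, which is why the interval inherits the design-based interpretation.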

The interpretation of a sampling weight $\omega_{ks}$ as the number of statistical units in the population represented by unit $k$ in the sample $s$ is widely accepted, thus settling the notion of representativeness on apparently firm grounds. This combination of sampling designs and linear estimators, complemented with this interpretation of sampling weights, stands as a robust defensive argument against any attempt to use new statistical methodology with digital sources. Indeed, one of the first rightful questions when facing the use of digital data is how the data represent the target population. With many new digital sources (mobile network data, web-scraped data, financial transaction data, …) the question is clearly meaningful.

However, before trying to respond with new methodology, we believe it is of utmost relevance to be aware of the limitations of sampling design methodology in the inferential exercise linking sampled data and target populations. This will help producers and stakeholders be aware of the changes brought by new methodological proposals and view the challenges in the appropriate perspective.

Firstly, the notion of representativeness is slippery business. This concept was already analysed along these lines by Kruskal and Mosteller (1979a, b, c, 1980). Surprisingly enough, no mathematical definition is found in classical and modern textbooks, Bethlehem (2009a) being an exception, who defines it in terms of a distance between the empirical distributions of a target variable in the sample and in the target population. Obviously, this definition is very difficult to implement in practice (we would need to know the population distribution). Nonetheless, this has not been an obstacle to the extended, even dangerous, use of the concept of representativeness. From time to time, one hears that linear estimators are constructed on the basis of $\omega_{ks}$ being the number of population units represented by the sampled unit $k$, so that $\omega_{ks}y_k$ amounts to the part of the population aggregate accounted for by unit $k$ in the sample $s$, and $\sum_{k\in s}\omega_{ks}y_k$ is finally the total population aggregate to estimate. A strong resistance is perceived in part of Official Statistics against any other technique not providing a similarly clear-cut reasoning accounting for the representativeness of the sample. This argument indeed lies behind the restriction upon sampling weights not to be less than 1 (interpreted as a unit not representing itself) or to be positive in sampling weight calibration procedures (see e.g. Särndal (2007)). In our view, the interpretation of a unit in a sample as representing $\omega_{ks}$ units in the population can be impossible to justify even in such a simple example as a Bernoulli sampling design with probability $\pi$ in a finite population of size $N$: if, e.g., the realized sample size is $n_s = 2$, how should we understand that these two units, each carrying a weight $1/\pi$, jointly represent $2/\pi$ units of a population of $N$ units?
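The Bernoulli example can be made concrete with a small simulation, a sketch under the hypothetical values $N = 1000$ and $\pi = 0.1$. Under Bernoulli sampling the Horvitz-Thompson weight of every sampled unit is $1/\pi$ regardless of the realized sample size $n_s$, so the weights add up to $n_s/\pi$, which in general differs from $N$.

```python
import random


def bernoulli_sample(N, pi, rng):
    """Bernoulli sampling: each of the N units is included independently with probability pi."""
    return [k for k in range(N) if rng.random() < pi]


def sum_of_weights(sample, pi):
    """Sum of the Horvitz-Thompson weights 1/pi over the realized sample."""
    return len(sample) / pi


if __name__ == "__main__":
    rng = random.Random(2020)
    N, pi = 1000, 0.1  # hypothetical population size and inclusion probability
    for _ in range(5):
        s = bernoulli_sample(N, pi, rng)
        print(f"n_s = {len(s):3d}, sum of weights = {sum_of_weights(s, pi):7.1f}, N = {N}")
    # In the extreme case n_s = 2, each unit still carries weight 1/pi = 10,
    # so the two units "represent" 20 units, not the N = 1000 in the population.
    print(sum_of_weights([0, 1], pi))
```

The weights are fixed by the design, not by the realized sample, which is precisely why reading them as "numbers of represented units" breaks down.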

Ultimately, the goal of an estimation procedure is to provide an estimate as close as possible to the real unknown target quantity, together with a measure of its accuracy. The concept of mean square error, and its decomposition into bias and variance components (Groves, 1989), is essential here. Estimators with a lower mean square error provide more accurate estimates. No mention of representativeness is needed. Furthermore, not even the requirement of exact unbiasedness is rigorously justified: compare the estimation of a population mean using an expansion (Horvitz-Thompson) estimator and using the Hájek estimator (Hájek, 1981).
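The comparison can be explored with a Monte Carlo sketch. We assume Bernoulli sampling with a common inclusion probability $\pi$ and a hypothetical population whose mean is large relative to its spread. The Hájek estimator is a ratio of two Horvitz-Thompson estimators and is, in general, only approximately unbiased (in this equal-probability setting it reduces to the sample mean); yet its mean square error here is far below that of the exactly design-unbiased expansion estimator.

```python
import random
import statistics


def ht_mean(sample_y, pi, N):
    """Expansion (Horvitz-Thompson) estimator of the mean: sum of y_k / pi, divided by N."""
    return sum(sample_y) / (pi * N)


def hajek_mean(sample_y):
    """Hajek estimator: HT total over HT estimate of N; with equal pi it is the sample mean."""
    return sum(sample_y) / len(sample_y)


def mse(errors):
    """Monte Carlo mean square error from a list of estimation errors."""
    return statistics.fmean(e * e for e in errors)


def simulate(N=1000, pi=0.1, reps=1000, seed=7):
    """Repeated Bernoulli sampling from one hypothetical population; returns bias and MSE."""
    rng = random.Random(seed)
    # Hypothetical population whose mean is large relative to its spread.
    y = [rng.gauss(100, 10) for _ in range(N)]
    true_mean = statistics.fmean(y)
    err_ht, err_hajek = [], []
    for _ in range(reps):
        s = [yk for yk in y if rng.random() < pi]
        if not s:
            continue  # the Hajek estimator is undefined for an empty sample
        err_ht.append(ht_mean(s, pi, N) - true_mean)
        err_hajek.append(hajek_mean(s) - true_mean)
    return {
        "bias_ht": statistics.fmean(err_ht), "mse_ht": mse(err_ht),
        "bias_hajek": statistics.fmean(err_hajek), "mse_hajek": mse(err_hajek),
    }


if __name__ == "__main__":
    print(simulate())
```

The expansion estimator carries the random sample size $n_s$ into its numerator, while the Hájek estimator divides it out; this is the source of the difference in mean square error.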

The randomization approach does allow the statistician to conduct inferences without making prior hypotheses on the structure of the population, i.e. the point estimates and confidence intervals are valid for any structure of the population. But this does not entail that the estimator must be linear. Given a sample $s$ randomly selected according to a sampling design and the values $\mathbf{y}_s = (y_k)_{k\in s}$ of the target variable, a general estimator is any function $\hat{Y} = \hat{Y}(s, \mathbf{y}_s)$, linear estimators being a specific family thereof (Hedayat and Sinha, 1991). Thus, what prevents us from using more complex functions, provided we search for a low mean square error? Apparently nothing. A linear estimator $\sum_{k\in s}\omega_{ks}y_k$ may be viewed as a homogeneous first-order approximation to an estimator such as $\hat{Y}(s, \mathbf{y}_s)$, but why not a second-order approximation $\sum_{k\in s}\omega_{ks}y_k + \sum_{k,l\in s}\omega_{kls}y_ky_l$? Or even a complete series expansion (see e.g. Lehtonen and Veijanen (1998))?
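The Hájek estimator mentioned above offers a standard example of the link between nonlinear estimators and their linear approximations. Writing $\pi_k$ for the inclusion probabilities, $\hat{Y}_{HT} = \sum_{k\in s} y_k/\pi_k$, $\hat{N}_{HT} = \sum_{k\in s} 1/\pi_k$ and $\bar{Y} = Y/N$, a first-order Taylor linearization around $(Y, N)$ gives the following (the notation is ours).

```latex
\hat{\bar{y}}_{H} = \frac{\hat{Y}_{HT}}{\hat{N}_{HT}}
  = \frac{\sum_{k\in s} y_k/\pi_k}{\sum_{k\in s} 1/\pi_k},
\qquad
\hat{\bar{y}}_{H} \approx \bar{Y}
  + \frac{1}{N}\left(\hat{Y}_{HT} - Y\right)
  - \frac{\bar{Y}}{N}\left(\hat{N}_{HT} - N\right)
  = \bar{Y} + \frac{1}{N}\sum_{k\in s}\frac{y_k - \bar{Y}}{\pi_k}.
```

The linearized form is linear in the $y_k$, though not homogeneous, and depends on the residuals $y_k - \bar{Y}$ rather than on the $y_k$ themselves, which is why the Hájek estimator can have a much smaller variance than the expansion estimator $\frac{1}{N}\sum_{k\in s} y_k/\pi_k$ when $\bar{Y}$ is large relative to the dispersion of $y$.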

However, the multivariate character of the estimation exercise at statistical offices provides a new ingredient shoring up the idea of representativeness, especially through the concept of sampling weight. Given the public dimension of Official Statistics, usually disseminated in numerous tables, numerical consistency (not just statistical consistency) is strongly requested across all disseminated tables, even among different statistical programs. For example, if a table on smoking habits is disseminated broken down by gender and another table on eating habits is also disseminated broken down by gender, the total numbers of women and men inferred from both tables must be exactly equal. This numerical consistency is demanded not only among all statistics disseminated from a survey but also among statistics from different surveys, especially for core variables such as gender, age, or nationality. Linear estimators can easily be made to fulfil this restriction by enforcing the so-called multipurpose property of sampling weights (Särndal, 2007), i.e. by using the same sampling weight for any population quantity to estimate in a given survey. For inter-survey consistency, the calibration of sampling weights is sometimes (dangerously) used. This trivially guarantees the numerical consistency of all marginal quantities in disseminated tables.
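A minimal sketch with hypothetical records and weights shows why multipurpose weights deliver numerical consistency: two weighted tables (smoking by gender and eating by gender) built from the same weights necessarily share identical gender margins, whereas variable-specific weights generally do not.

```python
from collections import defaultdict


def weighted_table(records, weights, row_var, col_var):
    """Weighted cross-tabulation: sum of weights by (row, column) category."""
    table = defaultdict(float)
    for rec, w in zip(records, weights):
        table[(rec[row_var], rec[col_var])] += w
    return dict(table)


def margin(table):
    """Row margins (e.g. totals by gender) of a weighted two-way table."""
    totals = defaultdict(float)
    for (row, _col), value in table.items():
        totals[row] += value
    return dict(totals)


# Hypothetical survey records and a single set of multipurpose weights.
records = [
    {"gender": "F", "smoker": "yes", "diet": "balanced"},
    {"gender": "F", "smoker": "no", "diet": "unbalanced"},
    {"gender": "M", "smoker": "no", "diet": "balanced"},
    {"gender": "M", "smoker": "yes", "diet": "balanced"},
]
weights = [120.0, 80.0, 150.0, 50.0]

smoking = weighted_table(records, weights, "gender", "smoker")
eating = weighted_table(records, weights, "gender", "diet")
print(margin(smoking), margin(eating))

# Variable-specific weights (e.g. a separate non-response adjustment per target variable)
# generally break the exact equality of the gender margins across the two tables.
eating_alt = weighted_table(records, [125.0, 80.0, 140.0, 50.0], "gender", "diet")
print(margin(eating_alt))
```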

Notice, however, that this property has to be forced. Indeed, the different techniques to deal with non-sampling errors (e.g. non-response or measurement errors) rely on auxiliary information, so that sampling weights are functions of the auxiliary covariates $\mathbf{x}$. Forcing the multipurpose property amounts to forcing the same behaviour in terms of non-response, measurement errors, etc. (thus in terms of social desirability or satisficing response mechanisms) for all target variables in the survey. It would arguably be more rigorous to adjust the estimators for non-sampling errors separately for each target variable, seeking only statistical consistency among marginal quantities. However, this is much harder to explain in the dissemination phase, and traditionally the former option is prioritized, paving the way for the representativeness discourse (every sampled unit $k$ is now thought to “truly” represent $\omega_{ks}$ population units).

Secondly, sampling designs are thought of as a life jacket against model misspecification. For example, even when the relationship between the target variable $y$ and the covariates $\mathbf{x}$ is not truly linear, the GREG estimator is still asymptotically design-unbiased (Särndal et al., 1992). But (asymptotic) design-unbiasedness does not guarantee a high-quality estimate. A well-known example can be found in Basu’s elephants story (Basu, 1971). Apart from its implications for the inferential paradigm, this story clearly shows how a poor sampling design leads to a poor estimate, even with exactly design-unbiased estimators. A design-based estimate is good only if the sampling design is appropriate.
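Basu’s story can be replayed with exact arithmetic. As the story is usually retold, the circus statistician’s design draws one elephant, Sambo with probability 99/100 and each of the other 49 elephants with probability 1/4900; the elephants’ weights below are hypothetical. The sketch checks that the Horvitz-Thompson estimator is exactly design-unbiased while the estimate actually obtained is absurd.

```python
from fractions import Fraction

# Hypothetical weights (in kg) of the 50 circus elephants; Sambo is an average-sized elephant.
weights = [4000 + 40 * k for k in range(50)]
SAMBO = 25

# The circus statistician's design: one elephant is drawn, Sambo with probability 99/100,
# each of the other 49 elephants with probability 1/4900.
probs = [Fraction(1, 4900)] * 50
probs[SAMBO] = Fraction(99, 100)
assert sum(probs) == 1


def ht_estimate(k):
    """Horvitz-Thompson estimate of the herd's total weight when elephant k is drawn."""
    return weights[k] / probs[k]


true_total = sum(weights)
expected = sum(p * ht_estimate(k) for k, p in enumerate(probs))
mse = sum(p * (ht_estimate(k) - true_total) ** 2 for k, p in enumerate(probs))

print("true total:", true_total)
print("design expectation:", expected)  # equal to the true total: exact design-unbiasedness
print("estimate if Sambo is drawn:", float(ht_estimate(SAMBO)))
print("estimate if the largest elephant is drawn:", float(ht_estimate(49)))
print("root MSE:", float(mse) ** 0.5)
```

Unbiasedness holds exactly over the design, yet the realized estimate is either about one elephant's weight or many times the whole herd's, because the design is poor.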

Finally, as is well known in small area estimation (Rao and Molina, 2015), and as reflected in what R. Little (2012) called inferential schizophrenia, sampling designs cannot provide a full-fledged inferential solution for all possible sample sizes out of a finite population. Traditional estimates based on sampling designs show their limitations when the sample sizes in population domains decrease dramatically. With new digital data one expects to avoid this problem by having plenty of data, but, along the same lines, one of the expected benefits of the new data sources is to provide information at an unprecedented spatial and temporal scale. So the problem may persist in sparsely populated cells.
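A short calculation illustrates how quickly design-based precision deteriorates in small domains. Assuming Bernoulli sampling with probability $\pi$, the Horvitz-Thompson estimator of a domain count $N_d$ has variance $N_d(1-\pi)/\pi$, so its coefficient of variation is $\sqrt{(1-\pi)/(\pi N_d)}$; the 1% sampling fraction below is hypothetical.

```python
import math


def cv_domain_count(N_d, pi):
    """Coefficient of variation of the HT estimator of a domain count N_d under Bernoulli
    sampling with inclusion probability pi: Var = N_d (1 - pi) / pi, so CV = sqrt((1 - pi) / (pi N_d))."""
    return math.sqrt((1 - pi) / (pi * N_d))


if __name__ == "__main__":
    pi = 0.01  # hypothetical 1% sampling fraction
    for N_d in (1_000_000, 100_000, 10_000, 1_000, 100):
        expected_n = pi * N_d
        print(f"N_d = {N_d:>9,}  expected n_d = {expected_n:>8.0f}  CV = {cv_domain_count(N_d, pi):.3f}")
```

The coefficient of variation grows as $1/\sqrt{N_d}$, so a finer spatial or temporal breakdown quickly exhausts what a fixed sampling fraction can deliver.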

In our view, thus, we must keep the spirit of representativeness (in an abstract or diffuse way), of lack of bias, and of low variance, as in traditional survey methodology. But we should avoid some restrictive misconceptions and open the door to finding solutions in the quest for accurate statistics with new data sources. There exist multiple statistical methods which should be identified to form a more general statistical production framework. Probability theory can still provide a firm connection between collected data sets and target populations of interest.

4.2 New production framework: probability theory, learning, and artificial intelligence

We do not venture to enumerate the statistical methods forming the new production framework. Much further empirical exploration and analysis of the new data sources are needed to furnish a solid production framework, and this will take time. However, some ideas can already be envisaged. The impossibility of using sampling designs necessarily makes us resort to statistical models, which essentially amounts to conceiving data as realizations of random variables (Lehmann and Casella, 1998). As stated above, notice that this was not the case for the inferential step in survey methodology (although it was supplementarily done for other production steps, e.g. imputation).

The consideration of random variables as a central element immediately brings into play the distinction between the enumerative and analytical aims of official statistical production (Deming, 1950). Let us use an adapted version of exercise 1 on page 254 of the book by Deming (1950). Consider an industrial machine producing bolts according to a given set of technical specifications (geometrical form, temperature resistance, weight, etc.). These bolts are packed into boxes of a fixed capacity (say, $N$ bolts) which are then distributed for retail trade. We distinguish two statistically different (though related) questions about this situation. On the one hand, we may be interested in knowing the number of defective bolts in each box. On the other hand, we may be interested in knowing the rate at which the machine produces defective bolts. Both questions are meaningful. The retailer will naturally be interested in the former question, whereas the machine owner will also be interested in the latter. Statistically, the former question amounts to a problem of estimation in a finite population (Cassel et al., 1977), while the latter is a classical inference problem (Casella and Berger, 2002). Indeed, the concept of sample differs between the two situations (see the definition of sample by Cassel et al. (1977) for a finite population setting and that by Casella and Berger (2002) for an inference problem). Notice that the use of inferential samples is not extraneous to the estimation problem in finite populations: the prediction-based approach to finite-population estimation (Valliant et al., 2000; Chambers and Clark, 2012) already treats target variables as random variables. In traditional official statistical production, questions of the former sort are addressed (number of unemployed people, of domestic tourists, of hectares of wheat crop, etc.). With new data sources and the need to consider data values as realizations of random variables, should Official Statistics also begin to consider questions of the latter sort?
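The bolt example can be sketched in a few lines. We assume a hypothetical machine producing defective bolts independently with rate $\theta = 0.02$ and a box of $N = 1000$ bolts. The enumerative target (defects in this box) carries a finite population correction that vanishes under full inspection, whereas the analytic target $\theta$ remains uncertain even when the whole box is inspected.

```python
import math
import random


def produce_box(N, theta, rng):
    """Hypothetical machine: each of the N bolts in a box is defective independently with probability theta."""
    return [rng.random() < theta for _ in range(N)]


rng = random.Random(1950)
N, theta = 1000, 0.02  # hypothetical box capacity and (unknown in practice) defect rate
box = produce_box(N, theta, rng)
D = sum(box)  # enumerative target: the number of defective bolts in this particular box

# Enumerative question: estimate D from a simple random sample of n bolts drawn from the box.
n = 200
sample = rng.sample(box, n)
p_hat = sum(sample) / n
D_hat = N * p_hat
# Finite population correction (1 - n/N): this variance vanishes when the whole box is inspected.
var_D_hat = N**2 * (1 - n / N) * p_hat * (1 - p_hat) / (n - 1)

# Analytic question: estimate the machine's defect rate theta from the fully inspected box.
theta_hat = D / N
se_theta_hat = math.sqrt(theta_hat * (1 - theta_hat) / N)  # no finite population correction

print(f"D = {D}, D_hat = {D_hat:.1f} (SE {math.sqrt(var_D_hat):.1f})")
print(f"theta_hat = {theta_hat:.4f} (SE {se_theta_hat:.4f}) even with the whole box inspected")
```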

In this line, there already exists an important avenue of Statistics and Computer Science research which, in our view, Official Statistics should incorporate into the statistical outputs included in National Statistical Plans. Traditionally, the focus of the estimation problem in finite populations has been on totals of variables providing aggregate information for a given population of units broken down into different dissemination population cells. The wealth of new digital data opens up the possibility of investigating the interactions between those population units, i.e. of investigating networks. Indeed, a recent discipline has emerged focusing on this feature of reality (see Barabási (2008) and multiple references therein). Aspects of society of public interest regarding the interaction of population units should be a focus of production activities in statistical offices. New questions, such as the representativeness of the interactions in a given data set with respect to a target population, arise as a new methodological challenge in Official Statistics.
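The representativeness of interactions differs from that of units, as a simple sketch of induced-subgraph sampling shows. We assume a hypothetical Erdős-Rényi population network and Bernoulli node sampling with probability $\pi$: an edge is observed only if both endpoints are sampled, i.e. with probability $\pi^2$, so the Horvitz-Thompson estimator of the number of edges weights each observed edge by $1/\pi^2$ rather than $1/\pi$.

```python
import itertools
import random


def random_graph(n_nodes, p_edge, rng):
    """Hypothetical population network: Erdos-Renyi graph given as a set of undirected edges."""
    return {(u, v) for u, v in itertools.combinations(range(n_nodes), 2) if rng.random() < p_edge}


def ht_edge_count(edges, pi, rng, n_nodes):
    """Bernoulli node sampling with probability pi; an edge is observed only if both of its
    endpoints are sampled (induced subgraph), i.e. with probability pi**2."""
    sampled = {k for k in range(n_nodes) if rng.random() < pi}
    observed = sum(1 for u, v in edges if u in sampled and v in sampled)
    return observed / pi**2


rng = random.Random(42)
n_nodes, pi = 200, 0.3
edges = random_graph(n_nodes, 0.05, rng)
estimates = [ht_edge_count(edges, pi, rng, n_nodes) for _ in range(1000)]
print(len(edges), sum(estimates) / len(estimates))
```

With a 30% node sample only about 9% of the interactions are observed, and observed edges sharing a node are dependent, which inflates the variance relative to unit-level estimation.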

A closer look at the mathematical elements behind this network science reveals the versatile use of graph theory (Bollobas, 2002; van Steen, 2010) to cope with complexity. As a matter of fact, the combination of probability theory and graph theory is a powerful choice to process and analyse large amounts of data. Probabilistic graphical models (Koller and Friedman, 2009), in our view, should be part of the methodological toolkit to produce official statistics with new data sources. They provide an adaptable framework for many situations such as speech and pattern recognition, information extraction, medical diagnosis, genetics and genomics, computer vision, and robotics in general, among others. This is already bringing a new set of statistical and learning techniques into production.
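As a toy illustration of a probabilistic graphical model, consider a three-node Bayesian network $P \rightarrow E \leftarrow S$ where $P$ indicates that an individual is present in an area, $S$ that they subscribe to a mobile operator, and $E$ that a network event is recorded in the area. All probability tables are hypothetical; the sketch factorises the joint distribution along the graph and computes $P(P = 1 \mid E)$ by exact enumeration.

```python
from fractions import Fraction as F
from itertools import product

# Hypothetical conditional probability tables for the network P -> E <- S.
p_present = {1: F(3, 10), 0: F(7, 10)}   # P: individual present in the area
p_subscriber = {1: F(4, 5), 0: F(1, 5)}  # S: subscriber of the mobile operator
p_event = {                               # P(E = 1 | P, S): a network event is recorded in the area
    (1, 1): F(3, 5), (0, 1): F(1, 20), (1, 0): F(0), (0, 0): F(0),
}


def joint(p, s, e):
    """Joint probability factorised along the graph: P(p) P(s) P(e | p, s)."""
    pe1 = p_event[(p, s)]
    return p_present[p] * p_subscriber[s] * (pe1 if e == 1 else 1 - pe1)


def posterior_present(e):
    """P(P = 1 | E = e) by exact enumeration over the hidden variable S."""
    num = sum(joint(1, s, e) for s in (0, 1))
    den = sum(joint(p, s, e) for p, s in product((0, 1), repeat=2))
    return num / den


print(posterior_present(1), posterior_present(0))  # 36/43 and 13/69
```

Exact enumeration is only feasible for tiny networks; realistic models would rely on the inference algorithms described by Koller and Friedman (2009).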

This immediately takes us to machine learning and artificial intelligence techniques. In this regard, we should distinguish between the inferential step connecting data and target populations and the rest of the production steps. Many tasks, old and new, can be envisaged as incorporating these recent techniques to gain efficiency. Traditional activities such as data collection, coding, editing, imputation, etc. can presumably be improved with random forests, support vector machines, neural networks, natural language processing, etc. New activities such as pattern and image recognition, record deduplication, […] will also be conducted with these new techniques. Further research and innovation must be carried out in this line.
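Record deduplication, one of the activities listed above, can be sketched with the standard library. In this sketch `difflib.SequenceMatcher` string similarity stands in for a trained classifier, and the records, the 0.85 threshold and the postal-code blocking rule are hypothetical; production systems would typically learn the match decision from labelled pairs.

```python
from difflib import SequenceMatcher
from itertools import combinations


def normalise(name):
    """Simple standardisation before comparison: lower case and collapse whitespace."""
    return " ".join(name.lower().split())


def candidate_duplicates(records, threshold=0.85):
    """Flag pairs of records whose normalised names are similar (ratio >= threshold)
    and whose postal codes coincide (a crude blocking rule)."""
    pairs = []
    for (i, a), (j, b) in combinations(enumerate(records), 2):
        if a["postcode"] != b["postcode"]:
            continue  # blocking: only compare records within the same postal code
        score = SequenceMatcher(None, normalise(a["name"]), normalise(b["name"])).ratio()
        if score >= threshold:
            pairs.append((i, j, round(score, 3)))
    return pairs


# Hypothetical business register extract with a near-duplicate entry.
records = [
    {"name": "Acme Trading S.L.", "postcode": "28001"},
    {"name": "ACME  Trading SL", "postcode": "28001"},
    {"name": "Beta Logistics", "postcode": "28001"},
    {"name": "Acme Trading S.L.", "postcode": "08001"},
]
print(candidate_duplicates(records))
```

Blocking keeps the number of comparisons manageable but misses duplicates whose blocking key is itself erroneous (here, the last record).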

For the inferential step, however, we do not see these new techniques as a definitive improvement. Our reasoning goes as follows. An essential ingredient in machine learning and artificial intelligence is experience (Goodfellow et al., 2016), i.e. the accumulation of past data from which the machine or the intelligent agent learns. Learning to make inferences for a target population entails that we know and accumulate the ground truth so that algorithms can be trained and tested. The ground truth for a target population is never known. Thus, the inferential step must receive the same attention as in traditional production. There may be situations in which the wealth and nature of digital data lead to the whole target population being covered (e.g. a whole national territory can be covered by satellite images to measure the extent of crops), but even in those cases non-sampling errors must be taken into account (as already envisaged by Yates (1965)).

This incorporation of new techniques from fields like machine learning and artificial intelligence entails the need to set up a common vocabulary and understanding of many related concepts in these disciplines and in traditional statistical production. Let us focus, e.g., on the notion of bias, which arises again and again both in machine learning and in estimation theory. In traditional finite population estimation, the bias of an estimator $\hat{Y}$ of a population total $Y$ is defined with respect to the sampling design $p$ as $B_p(\hat{Y}) = E_p(\hat{Y}) - Y$, which basically amounts to an expectation over all possible samples. In survey methodology, estimators are (asymptotically) unbiased by construction. This notion of bias is not to be confused with the difference $\hat{Y}(s) - Y$ between an estimate from the selected sample $s$ and the true population total. This estimation error is never known and can be non-zero even for exactly unbiased estimators. When the prediction approach is assumed and the population total is also considered a random variable, the concept of (prediction) bias is slightly different: $B_m(\hat{Y}) = E_m(\hat{Y} - Y)$, where $m$ stands for the data model. These notions of population bias are not to be confused with the measurement error $y_k^{\mathrm{obs}} - y_k^{0}$, where $y_k^{\mathrm{obs}}$ stands for the raw value observed in the questionnaire and $y_k^{0}$ stands for the true value of variable $y$ for unit $k$. Indeed, in statistical learning this is very often what is referred to as bias, since it is the observed variable $y^{\mathrm{obs}}$ that we model. A precise terminology is needed when new techniques are used, in order to ensure a common understanding of the mathematical concepts at stake. Another example is the reference to linear regression as a “machine learning algorithm” (Goodfellow et al., 2016). New techniques bring useful new perspectives even to the traditional process, but the community of official statistics producers must make sure that communication barriers do not arise.
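A small exact enumeration helps keep three of these notions apart: design bias, the unknown estimation error of one particular sample, and the effect of measurement error. We assume a hypothetical population of $N = 5$ units, simple random sampling without replacement of $n = 2$ units, and respondents who under-report by one unit each; model-based prediction bias is not covered by this sketch.

```python
from fractions import Fraction
from itertools import combinations

# Hypothetical finite population of N = 5 units: true values y0 and reported values y_obs
# (respondents under-report by 1 unit each: a systematic measurement error).
y_true = [3, 7, 8, 12, 20]
y_obs = [v - 1 for v in y_true]
N, n = len(y_true), 2


def ht_total(values, sample):
    """Expansion estimator under simple random sampling without replacement: (N / n) * sum."""
    return Fraction(N, n) * sum(values[k] for k in sample)


samples = list(combinations(range(N), n))  # all C(5, 2) = 10 equiprobable samples
Y_true, Y_obs = sum(y_true), sum(y_obs)

# Design bias w.r.t. the observed values: E_p(Y_hat) - Y_obs, averaging over all samples.
design_bias = sum(ht_total(y_obs, s) for s in samples) / len(samples) - Y_obs
# Estimation error for one particular sample: never known in practice, non-zero here.
error_one_sample = ht_total(y_obs, samples[0]) - Y_obs
# Bias w.r.t. the true values, driven entirely by the measurement error.
bias_vs_truth = sum(ht_total(y_obs, s) for s in samples) / len(samples) - Y_true

print(design_bias, error_one_sample, bias_vs_truth)  # 0 -25 -5
```

The estimator is exactly design-unbiased for the observed total, yet a single sample can miss it badly, and no design property protects against the systematic gap between observed and true values.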

Finally, apart from machine learning and artificial intelligence, and in connection with the different aspects of data access and data use already mentioned in section 3, we must make a special mention of data collection and data integration. New digital data per se will individually provide high value to official statistical products, but arguably it is their integration and combination with survey and administrative sources which will boost the scope of future statistical products. At this moment, such integration and combination are thought to be feasible only without disclosing each integrated database. This necessarily leads us to cryptography and the incorporation of cryptosystems in the production of official statistics. Notice, however, that this does not replace statistical disclosure control upon final outputs, which must still be conducted. Data values must now also remain undisclosed at the input of the statistical process. The cryptosystem must be able to carry out complex statistical processing in an undisclosed way. A lot of research in this line is needed.
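To make the idea of processing undisclosed inputs concrete, the following sketch uses additive secret sharing, one technique from secure multi-party computation; homomorphic encryption is another option, and neither is prescribed here. Each hypothetical data holder splits its confidential count into random shares, and only the aggregate total is ever reconstructed. The modulus and holdings are assumptions, and a real deployment would also need secure channels and protection against dishonest parties.

```python
import secrets

MODULUS = 2**61 - 1  # all arithmetic is done modulo a large prime (hypothetical choice)


def share(value, n_parties):
    """Split a non-negative integer into n additive shares that sum to value mod MODULUS.
    Any n - 1 shares are uniformly random and reveal nothing about the value."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def secure_total(private_values):
    """Each data holder shares its value among all computing parties; every party adds the
    shares it receives, and only the sum of these partial results is reconstructed."""
    n = len(private_values)
    all_shares = [share(v, n) for v in private_values]  # row i: shares of holder i
    partial_sums = [sum(row[j] for row in all_shares) % MODULUS for j in range(n)]
    return sum(partial_sums) % MODULUS


# Hypothetical confidential counts held by three data holders (e.g. mobile network operators).
holdings = [120_345, 98_760, 45_210]
print(secure_total(holdings))
```

Totals are the easy case; the complex statistical processing called for above (record linkage, model fitting) requires far more elaborate protocols.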

All in all, new methods, many of them already existing in other disciplines, are to be incorporated together with the new data sources. The challenge is to furnish a new production framework. New data and new methods necessarily bring considerations for quality, for the technological environment, and for staff capabilities and management within statistical offices.

5 Quality

Quality has been a distinguishing feature of official statistical production for many decades, and much effort has traditionally been devoted to reaching high-quality standards in publicly disseminated statistical products based on survey data. With new data sources these high-quality standards must also be pursued.

We identify key notions in current quality systems in Official Statistics and try to understand how they will be affected by the nature of the new data sources and the new needs in statistical methodology. We underline three important notions. Firstly, the concept of quality in Official Statistics evolved from an exclusive focus on accuracy to the present multidimensional conception in terms of (i) relevance, (ii) accuracy and reliability, (iii) timeliness and punctuality, (iv) coherence and comparability, and (v) accessibility and clarity (ESS, 2014). Current quality assurance frameworks in national and international statistical systems implement this multidimensional concept of quality (or slight variants thereof). Will new quality dimensions be needed? Will existing quality dimensions become unnecessary? Secondly, a statistical product is understood to have a high-quality standard if it has been produced by a high-quality statistical process. How will the changes in the statistical process affect quality? Thirdly, quality is mainly conceived of as “fit for purposes” (Eurostat, 2020b). How will statistical products based on new data sources be fit for purposes? Certainly, these notions are not independent of one another, but they can jointly offer a wide overview of the main quality issues.

5.1 Quality dimensions, briefly revisited

Regarding the quality dimensions, we do not foresee a need to reconsider the current five-dimensional conception mentioned above. Even with traditional data, alternative, more complex multidimensional views of data quality can be found in the literature (see e.g. Wand and Wang, 1996, and multiple references therein). In our view, the nature of new data sources will certainly require a revision of the existing dimensions, especially of the conceptualization and computation of some quality indicators, but neither the suppression of existing dimensions nor the introduction of new ones. Let us consider, as an immediately relevant example, the consequences of using model-based inference (possibly deeply integrated in complex machine learning or artificial intelligence algorithms). Parameter setting, model choice, and any form of prior hypothesis regarding the model construction must be clearly assessed and communicated. This ingredient, impinging on accuracy, comparability, accessibility, and clarity, gains in relevance with new data sources.

We comment very briefly on the aforementioned quality dimensions:

• Relevance essentially addresses the current and potential statistical needs of users. This dimension is deeply entangled with our third question regarding fitness for purpose. We will deal with this dimension more extensively below.

• Accuracy is directly affected by the new methodological scenario. Inference cannot be design-based with new data sources, so model-based estimates will gain more presence. Furthermore, since these new data sources come mostly from event-register systems, the usual reasoning on target units and target variables is not directly applicable, which reduces the validity of the usual classification of errors (sampling, coverage, non-response, measurement, processing). These error categories are strongly survey-oriented and, despite the possibility of more generic readings of the current definitions, we find it necessary to undertake a detailed revision. Let us consider a hypothetical situation in which a statistical office has access to all call detail records (CDRs) in a country for a given time period of analysis to estimate present population counts. These network events are generated by the active usage of a mobile device. Discard children, very elderly people, imprisoned people, severely deprived homeless people, and any rather evident non-subscriber of these mobile telecommunication services. Can all CDRs be considered a sample with respect to our (remaining) target population? There are no further CDR data; however, we cannot be sure that all target individuals are included in the dataset. Indeed, there is no enumeration of the target population, and the “error[s] […] which cannot be attributed to sampling fluctuations” (ESS, 2014) cannot be clearly identified. The line distinguishing coverage and sampling errors becomes thinner (as a matter of fact, the concept of frame population loses its meaning in this new setting).

Reliability and the corresponding plan of revisions can still be considered under the same approach as for traditional data sources, affected only by the higher degree of breakdown and the higher availability of data. When dissemination cells are very small and estimates are publicly released more frequently, their variability is expected to be much higher. Thus, an assessment is needed to discern between random fluctuations due to small samples and fluctuations due to real effects (e.g. population counts of people attending music festivals or sports events). The plan of revisions should be accommodated to the chosen degree of breakdown in the dissemination stage.

• Timeliness arises as one of the most clearly improved quality dimensions when incorporating new data sources. Indeed, with digital sources even (quasi) real-time estimates may be an important novelty. However, this is intimately connected to the design and implementation of the new statistical production process and to the relationship with data holders. Real-time estimates entail real-time access and processing, which is usually highly disruptive and requires a higher investment in the data retrieval and data preprocessing stages, presumably on data holders’ premises. Therefore, guarantees (both legal and technical) of access sustained in the long term must be provided. Once timeliness is improved, new output release calendars can be established in legal regulations for each statistical domain, thus binding statistical offices to disseminate final products with the same punctuality standards.

• The role of coherence and comparability is to be reinforced with new data sources. Reconciliation with other sources, other statistical domains, and statistics at other time frequencies is now more critical. It is not only that the data deluge will allow statistical offices to reuse the same source to produce different statistics for different statistical domains (e.g. financial transaction data for retail trade statistics, for tourism statistics, …), but also that different sources will possibly lead to estimates of the same phenomenon (e.g. unmanned aerial images, satellite images, administrative data, and survey data for agriculture). This is naturally also connected to comparability, since statistical products must still be comparable across geographical areas and over time. The criticality is intensified because the wealth of statistical methods and algorithms potentially applicable to the same data can lead to multiple different results that are not immediately comparable. This demands closer collaboration on statistical methodology in the international community.

• Accessibility and clarity in relation to users are essential (e.g. recall the point made above about how firmly the non-mathematical notion of representativeness is nailed into the world of Official Statistics). The challenge raised by the wealth of statistical methods and machine-learning algorithms to solve a given estimation problem now stands as an extraordinary exercise in communication strategy and policy. Furthermore, this communication strategy and policy should not only embrace but also become deeply entangled with the access to and use of the new data sources. The promotion of statistical literacy will need to be strengthened.
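Regarding the reliability of small dissemination cells discussed in the list above, a minimal sketch of how random fluctuations might be separated from real effects is a z-score against a baseline. It assumes Poisson fluctuations (variance equal to the baseline count), a baseline known from historical data, and a hypothetical threshold of three standard deviations; overdispersion and multiple testing across many cells are ignored.

```python
import math


def is_real_effect(observed, baseline, z_crit=3.0):
    """Flag a cell count as a likely real effect if it deviates from its baseline by more than
    z_crit standard deviations, assuming Poisson fluctuations (variance = baseline)."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    z = (observed - baseline) / math.sqrt(baseline)
    return abs(z) > z_crit


# Hypothetical hourly population counts in a small grid cell with a baseline of 50 people.
print(is_real_effect(55, 50))   # small deviation, consistent with random fluctuation
print(is_real_effect(120, 50))  # large deviation, e.g. a music festival
```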

5.2 Process quality

Changes in the process will certainly be needed according to the new methodological ingredients mentioned in section 4. As a matter of fact, the use of new data sources in the production of some official statistics is already bringing the need for new business functions such as trust management, communication management, visual analyses, … (Bogdanovits et al., 2019; Kuonen and Loison, 2020). However, in our view the farthest-reaching element will come from the need to include data holders as active actors in the early (and not so early) stages of the production process. This will especially affect those deeply technology-dependent data sources with a clear need for data preprocessing for statistical purposes. In other words, data holders have changed their role from mere input data providers, either through electronic or paper questionnaires, to also being data wranglers for further statistical processing.

Since official statistics are a public good, it seems natural to require that this participation of data holders be reflected in quality assurance frameworks so that its impact on final products can be assessed. In our view, this has far-reaching consequences and imposes strong conditions on the partnerships between statistical offices and data holders. These conditions are two-fold, since restrictions must be observed for both the public and the private sector. For example, the statistical methodology leading from the raw data to the final product must be openly disseminated, communicated and made available to all stakeholders as an integral element of the statistical production metadata system. Furthermore, to guarantee coherence and comparability it seems logical to share this statistical methodology among different data holders, i.e. in any preprocessing stage. However, guarantees must also be provided to avoid leakage of sensitive information among different agents in the private sector, especially in highly competitive markets. Statistical offices must not become vectors for leaking industrial secrets and know-how, thereby endangering a growing economic sector based on data generation and data analytics. Another example comes from data sources providing geolocated information (e.g. to estimate population counts of diverse nature). Current data technologies allow unprecedented degrees of breakdown (e.g. providing data every second minute at postal code level). Freely disseminating population counts at this level of breakdown on a statistical office website would certainly ruin any business initiative to commercialise such products and/or to foster private agreements to produce statistical products. In our opinion, partnerships must include forms of collaboration in which private and public interests not only coexist but also reinforce each other.

In this line of thought, synthetic data can play a strategic role, even beyond traditional quality dimensions and traditional metadata reporting. In our view, an important aspect of the public-private partnerships with data holders is a deep knowledge of the metadata of the new data sources. This would enable statistical offices to generate synthetic data with properties similar to those of real data. Such synthetic data can play a two-fold role. On the one hand, for all data sources, providing synthetic data together with process metadata will enable users and stakeholders to become acquainted with the underlying statistical methodology, thus increasing the overall quality of the process. For example, a synthetic frame population of business units can be created so that the whole process, from sample selection to the final dissemination phase and monitoring, can be reproduced. On the other hand, for new data sources facing the access and use challenges reported above, methodological and quality developments as well as software tools can be investigated without running into those obstacles with real data. Notice that the utility of these synthetic data will depend strongly on their similarity to real data, thus demanding a good knowledge of their metadata, i.e. calling for close collaboration with data holders.
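As a minimal sketch of the business-frame example, the code below builds a purely synthetic frame of business units, draws a stratified simple random sample, and compares the Horvitz-Thompson estimate of total turnover with the known synthetic total. The size classes, the lognormal turnover model and its parameters, and the sample sizes are all illustrative assumptions, not properties of any real business register.

```python
import random

def make_synthetic_frame(n_units, seed=0):
    """Hypothetical synthetic frame: each unit gets a size class (stratum)
    and a lognormal turnover; parameters are illustrative assumptions."""
    rng = random.Random(seed)
    log_means = {"small": 3.0, "medium": 5.0, "large": 7.0}
    frame = []
    for k in range(n_units):
        stratum = rng.choice(list(log_means))
        frame.append({"id": k, "stratum": stratum,
                      "turnover": rng.lognormvariate(log_means[stratum], 0.5)})
    return frame

def stratified_srs(frame, n_per_stratum, seed=1):
    """Simple random sampling without replacement within each stratum;
    each sampled unit carries the design weight N_h / n_h."""
    rng = random.Random(seed)
    strata = {}
    for unit in frame:
        strata.setdefault(unit["stratum"], []).append(unit)
    sample = []
    for units in strata.values():
        n_h = min(n_per_stratum, len(units))
        weight = len(units) / n_h  # inverse of the inclusion probability
        for unit in rng.sample(units, n_h):
            sample.append({**unit, "weight": weight})
    return sample

def horvitz_thompson_total(sample, variable):
    # Each sampled unit represents `weight` units of the frame.
    return sum(u["weight"] * u[variable] for u in sample)

frame = make_synthetic_frame(10_000)
true_total = sum(u["turnover"] for u in frame)
sample = stratified_srs(frame, n_per_stratum=200)
estimate = horvitz_thompson_total(sample, "turnover")
print(f"true: {true_total:,.0f}  estimate: {estimate:,.0f}  "
      f"relative error: {(estimate - true_total) / true_total:.2%}")
```

Because the full synthetic population is known, every later stage (editing, estimation, dissemination) can be checked against the truth, which is exactly what real data never allow.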

5.3 Relevance

Relevance is a quality attribute measuring the degree to which statistical information meets the needs of users and stakeholders. Thus, it is intimately related to outputs being fit for purpose. Moreover, relevance is one of the key issues in the Bucharest Memorandum (DGINS, 2018), which clearly points out the risk for public statistical systems of not incorporating new data sources into the production process (among other things).

In more mathematical terms, let us view relevance in terms of the nature of statistical outputs and aggregates. To date, most (if not all) statistical outputs are estimates of population totals $Y$ or functions of population totals $f(Y_1, \ldots, Y_m)$. They may be the total number of unemployed resident citizens, the number of domestic tourists, the number of employees in an economic sector, etc., but also volume and price indices, rates, and so on. These outputs are basically built from estimates of quantities such as $Y_d = \sum_{k \in U_d} y_k$, where $U_d$ denotes a population domain and $y_k$ stands for the fixed value of a target variable for unit $k$. In our view, the wealth of data now provides the opportunity to investigate a wider class of indicators. Network science (Barabási, 2008) provides a generic framework to investigate new kinds of target information, in particular information derived from the interaction between population units. Graph theory stands out as a versatile tool to pursue these ideas: if nodes represent the target population units, edges express the relationships among them. An illustrative example can be found in mobile network data, where edges between mobile devices can represent communication between people and/or with telecommunication services. If the geolocation of these data is also taken into account and they are combined with other data sources (e.g. financial transaction data, also potentially geolocated), many new possibilities arise to investigate, e.g., segregation, income inequality, and access to information and other services. New statistical needs naturally arise. Should statistical offices act reactively, waiting for users to express these new needs, or should they act proactively, searching for new forms of information, new indicators, and new aggregates? In our view, innovation activities and collaboration with research centres and universities should be strengthened to promote proactive initiatives.
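To make the contrast with domain totals concrete, here is a small sketch of two graph-based aggregates computed from hypothetical mobile network data: the mean degree (average number of communication partners per device) and the share of edges linking devices with different home regions, which could serve as a crude segregation-type indicator. The toy devices, regions and edges are invented for illustration, and the cross-region share is our own illustrative choice, not an indicator proposed in the text.

```python
from collections import defaultdict

# Hypothetical toy data: device -> home region, and undirected
# communication edges between devices (e.g. derived from call records).
home_region = {"a": "north", "b": "north", "c": "north",
               "d": "south", "e": "south", "f": "south"}
edges = [("a", "b"), ("a", "c"), ("b", "c"), ("c", "d"),
         ("d", "e"), ("e", "f"), ("d", "f")]

def degree_per_node(edges):
    """Number of distinct edges incident to each node."""
    degree = defaultdict(int)
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    return dict(degree)

def cross_region_share(edges, region):
    """Fraction of edges linking units in different regions; low values
    suggest communication concentrated within regions."""
    cross = sum(1 for u, v in edges if region[u] != region[v])
    return cross / len(edges)

deg = degree_per_node(edges)
# Averaging over all units (not only those with edges): sum of degrees = 2|E|.
mean_degree = sum(deg.values()) / len(home_region)
print(mean_degree, cross_region_share(edges, home_region))
```

Unlike $Y_d$, neither quantity is a sum of unit-level values $y_k$: both depend on pairs of units, which is what makes such indicators a genuinely different class of statistical output.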

6 Information technologies

6.1 IT for survey and administrative data

The rapid evolution of information technologies has changed our lives. Nowadays, almost every human activity leaves a digital footprint: from searching for information on the Internet with a search engine to making a simple call on a mobile phone or paying for a product with a credit card, the traces of these activities are stored somewhere in a digital database. These enormous quantities of data have drawn the attention of statisticians, who have started to consider their potential for computing new indicators. The distinct characteristics of these new data sources emphasized in the previous sections have also changed the IT tools needed to handle them. While using classical survey data to produce statistical outputs does not raise special computational problems, collecting and processing new types of data (which are very large in volume most of the time) requires an entirely new computing environment as well as new skills for the people who work with them. In this section we briefly review the computing technologies used in official statistics for dealing with survey data and describe the new technologies needed to handle new big data sources. We emphasize that computing technologies are evolving at an unprecedented speed, and what seems to be the best solution now could be totally outdated in a few years. We also provide some examples of concrete computing environments used for experimental studies in the official statistics area.

The computing technology needed for a specific type of data source is intrinsically related to the nature of that source. Survey data are structured data of a reasonable size, properties that make them easy to store in traditional relational databases. The IT tools used for surveys can be classified according to the specific stage of the production pipeline, and for this purpose we consider the GSBPM as the general framework describing the official statistics production process.
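As a small illustration of why structured survey data fit a relational database well, the sketch below stores hypothetical household responses in an in-memory SQLite table (Python's standard `sqlite3` module) and computes weighted totals per stratum directly in SQL. The table layout, column names, weights and values are assumptions made for the example.

```python
import sqlite3

# In-memory relational database with hypothetical survey microdata:
# one row per responding household, with stratum, design weight and a
# target variable (monthly expenditure; values invented for illustration).
conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE responses (
        household_id INTEGER PRIMARY KEY,
        stratum      TEXT NOT NULL,
        weight       REAL NOT NULL CHECK (weight > 0),
        expenditure  REAL
    )""")
conn.executemany(
    "INSERT INTO responses VALUES (?, ?, ?, ?)",
    [(1, "urban", 120.0, 850.0), (2, "urban", 120.0, 920.0),
     (3, "rural", 300.0, 610.0), (4, "rural", 300.0, 580.0)])

# Weighted (expansion) estimate of total expenditure per stratum.
rows = conn.execute("""
    SELECT stratum, SUM(weight * expenditure) AS est_total
    FROM responses
    GROUP BY stratum
    ORDER BY stratum""").fetchall()
for stratum, total in rows:
    print(stratum, total)
conn.close()
```

The schema itself (types, `NOT NULL`, `CHECK`) already enforces some basic data quality rules, which is part of what makes relational storage convenient for survey data.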

Different phases of the statistical production process, such as drawing samples, data editing and imputation, calculation of aggregates, calibration of sampling weights, seasonal adjustment of time series, and statistical matching or record linkage, use specialized software routines. Most of the time these routines were developed in-house by individual statistical agencies and then shared with the rest of the statistical community, and they are implemented using commercial products such as SAS, SPSS or Stata, or open source software such as R or Python.
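To illustrate one of these routines, the sketch below implements post-stratification, the simplest form of weight calibration: within each post-stratum, design weights are rescaled so that their sum matches a known population count (for instance from a register). The respondents, groups and population counts are hypothetical; production tools typically implement more general calibration estimators (e.g. raking or GREG), which this sketch does not cover.

```python
def poststratify(respondents, population_counts):
    """Rescale design weights so that weighted counts in each group
    equal the known population count for that group."""
    weighted = {}
    for unit in respondents:
        g = unit["group"]
        weighted[g] = weighted.get(g, 0.0) + unit["weight"]
    return [
        {**unit,
         "weight": unit["weight"] * population_counts[unit["group"]]
                   / weighted[unit["group"]]}
        for unit in respondents
    ]

# Hypothetical respondents with equal design weights and known group totals.
respondents = [
    {"group": "female", "weight": 100.0, "employed": 1},
    {"group": "female", "weight": 100.0, "employed": 0},
    {"group": "male",   "weight": 100.0, "employed": 1},
    {"group": "male",   "weight": 100.0, "employed": 1},
    {"group": "male",   "weight": 100.0, "employed": 0},
]
population_counts = {"female": 260.0, "male": 240.0}

calibrated = poststratify(respondents, population_counts)
employed_total = sum(u["weight"] * u["employed"] for u in calibrated)
print(employed_total)
```

Here female weights rise from 100 to 130 and male weights fall from 100 to 80, so the estimated number of employed persons changes from 300 (design weights) to 290 (calibrated weights).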

While in the past most official statistics bureaus were strongly dependent on commercial software packages such as SAS or Stata, nowadays we are witnessing a major change in this field. Official statistics organizations have reconsidered the benefits of open source software, and more and more software packages are now being ported to the R or Python ecosystems (van der Loo, 2017).

The data collection stage of the production pipeline requires specialized software. Even though paper questionnaires are still in use in several countries around the world, the main trend today is to collect survey data with electronic questionnaires (Bethlehem, 2009b; Salemink et al., 2019) using either the CAPI or the CAWI method. In both cases, specific software tools are required to design the questionnaires and to effectively collect the data. We mention here some examples of software tools in this category:

• BLAISE (CBS, 2019) is a computer-assisted interviewing (CAI) system developed by CBS that is currently used worldwide in several fields, from household surveys to business, economic or labour force surveys. According to the official web page of the software (https://www.cbs.nl/en-gb/our-services/blaise-software), more than 130 countries use this system. It allows statisticians to create multilingual questionnaires that can be deployed on a variety of devices (both desktops and mobile devices); it is supported by all major browsers and operating systems (Windows, Android, iOS) and has a large community of users. Moreover, BLAISE is not only a questionnaire designer and data collection tool: it can also be used in all stages of data processing.

• CSPro (Census and Survey Processing System) is a freely available software framework for designing applications for both data collection and data processing. It is developed by the U.S. Census Bureau and ICF International. The design software runs only on Windows, but the data collection applications it produces can be deployed on devices running Android or Windows. It is used by official statistics institutes, international organizations, academic institutions and even private companies in more than 160 countries (https://census.gov/data/software/cspro.html).

• Survey Solutions (The World Bank, 2018b) is a free CAPI, CAWI and CATI software developed by the World Bank for conducting surveys. It has capabilities for designing questionnaires, deploying them on mobile devices or Web servers, collecting the data and performing various survey management tasks, and it is used in more than 140 countries (The World Bank, 2018a).

There are also other software tools for data collection, but they are used on a smaller scale since they were built by statistical offices for their own specific needs.

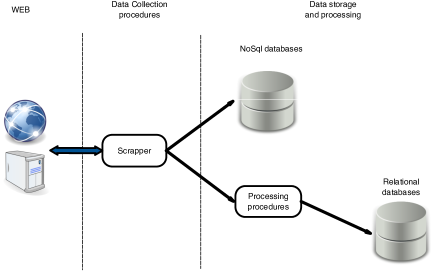

All these tools used in official statistics for data collection are built around a well-known technology: the client-server model. Although this model dates back to the 1960s and 1970s, when the foundations of the ARPANET were laid (Shapiro, 1969; Rulifson, 1969), it became very popular with the appearance and development of the Web, which turned the client into the ubiquitous Web browser and made the entire system easier to deploy and maintain. Nowadays there is a plethora of computing technologies supporting this model: the Java and .NET platforms, PHP together with a relational database, etc. Figure 1 describes the architecture of a typical client-server application in which the client is a Web browser.

The client, usually a browser running on a mobile device or a desktop, loads a questionnaire used to collect data from households or business units. These data undergo preliminary validation and are then sent to the server side, where a Web server manages the communication via the HTTP/HTTPS protocol and an application server implements the logic of the information system. Usually, more advanced data validation procedures are performed before the data are stored in a relational database. From this database, the datasets are retrieved by the production units, which then start the processing stage.
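The two validation layers described above can be sketched as plain functions: simple field-level checks of the kind a browser client might run before submission, and cross-field consistency checks of the kind an application server might run before writing to the database. The field names, the age range and the edit rules are hypothetical examples, not rules taken from any of the tools mentioned.

```python
def preliminary_checks(record):
    """Field-level checks (presence, type, range), as a client might run."""
    errors = []
    for field in ("household_size", "age_head"):
        if not isinstance(record.get(field), int):
            errors.append(f"{field}: missing or not an integer")
    age = record.get("age_head")
    if isinstance(age, int) and not 15 <= age <= 110:
        errors.append("age_head: out of range [15, 110]")
    return errors

def consistency_checks(record):
    """Cross-field edit rules, as an application server might run."""
    errors = []
    if record.get("employed_members", 0) > record["household_size"]:
        errors.append("employed_members exceeds household_size")
    return errors

def validate(record):
    errors = preliminary_checks(record)
    # Cross-field rules only make sense once the basic fields are usable.
    if not errors:
        errors += consistency_checks(record)
    return errors

ok = {"household_size": 3, "age_head": 42, "employed_members": 2}
bad = {"household_size": 2, "age_head": 42, "employed_members": 3}
print(validate(ok), validate(bad))
```

Keeping the cheap checks on the client gives respondents immediate feedback, while the server-side rules guard the database even if a client is bypassed or outdated.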

The last stages of the production pipeline, i.e. the dissemination of the final aggregates, also require specialized technologies.

Statistical disclosure control (SDC) methods are special techniques aimed at preserving the confidentiality of the disseminated data by guaranteeing that no statistical unit can be identified. These methods are implemented in software packages, most of them in the open source domain. Examples include the sdcMicro (Templ et al., 2015) and sdcTable (Meindl, 2019) R packages and the tauArgus (de Wolf et al., 2014) and muArgus (Hundepool et al., 2014) Java programs.
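As a minimal sketch of one classic SDC technique for tables, the code below applies primary cell suppression with a minimum-frequency (threshold) rule: any cell with fewer contributors than the threshold is withheld before publication. The table and the threshold of 3 are illustrative assumptions. Real tools such as tauArgus also perform secondary suppression so that withheld cells cannot be recovered from row and column totals; this sketch does not.

```python
def suppress_small_cells(table, threshold=3):
    """Primary suppression: replace counts below `threshold` with None."""
    return {
        cell: (count if count >= threshold else None)
        for cell, count in table.items()
    }

# Hypothetical frequency table: (region, sector) -> number of enterprises.
counts = {("region A", "sector 1"): 12, ("region A", "sector 2"): 2,
          ("region B", "sector 1"): 7,  ("region B", "sector 2"): 1}
published = suppress_small_cells(counts)
for cell, value in published.items():
    print(cell, "x" if value is None else value)
```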

Even for disseminating results on paper, software tools are needed: all paper documents are produced with IT tools, ranging from classical office packages, which are easy for statisticians to use, to more complex tools such as LaTeX, which require specific skills. In the digital era, the dissemination of statistical results has moved to Web pages, where technologies based on JavaScript libraries such as D3 (Bostock et al., 2011) or R packages such as ggplot2 (Wickham, 2016) are widespread.

In general, administrative sources are processed with the same software technologies as survey data, except for the data collection step, which is not needed in this case.

6.2 IT for digital data: overview