On Negative Transfer and Structure of Latent Functions

in Multi-output Gaussian Processes

Supplementary materials:

On Negative Transfer and Structure of Latent Functions

in Multi-output Gaussian Processes

Abstract

The multi-output Gaussian process () is based on the assumption that outputs share commonalities, however, if this assumption does not hold negative transfer will lead to decreased performance relative to learning outputs independently or in subsets. In this article, we first define negative transfer in the context of an and then derive necessary conditions for an model to avoid negative transfer. Specifically, under the convolution construction, we show that avoiding negative transfer is mainly dependent on having a sufficient number of latent functions regardless of the flexibility of the kernel or inference procedure used. However, a slight increase in leads to a large increase in the number of parameters to be estimated. To this end, we propose two latent structures that scale to arbitrarily large datasets, can avoid negative transfer and allow any kernel or sparse approximations to be used within. These structures also allow regularization which can provide consistent and automatic selection of related outputs.

1 Introduction

The multi-output, also referred to as multivariate/vector-valued/multitask, Gaussian process () draws it root from transfer learning, specifically multitask learning. The goal is to integratively analyze multiple outputs in order to leverage their commonalities and hence improve predictive and learning accuracy. Indeed, the multi-output () has seen many success stories in the last decade. This success can be largely attributed to the convolution construction which provided the capability to account for heterogeneity and non-trivial commonalities in the outputs.

The convolution process () is based on the idea that a , can be constructed by convolving a latent Gaussian process with a smoothing kernel . This construction, first proposed by Ver Hoef & Barry (1998) and Higdon (2002), is equivalent to stimulating a linear filter characterized by the impulse response . The only restriction is that the filter is stable, i.e., . Given the construction, if we share multiple latent functions across the outputs , then all outpouts can be expressed as jointly distributed , i.e., an (Álvarez & Lawrence, 2011). This is shown in (1).

| (1) |

where denotes a convolution. The key feature in (1) is that it allows information to be shared through different kernels (with different parameters) which enables great flexibility in describing the data. Further, many models used to build cross correlations across outputs including the large class of separable covariances and the linear model of corregonialization are special cases of the convolution construction (Alvarez et al., 2012; Fricker et al., 2013).

Since then, work on has mainly focused on two trends: (1) Efficient inference procedures that address the computational complexity (a challenge inherited from the ) (Wang et al., 2019; Nychka et al., 2015; Gramacy & Apley, 2015; Damianou et al., 2016) . This literature has mainly focused on variational inference which laid the theoretical foundation for the commonly used class of inducing point/kernel approximation (Burt et al., 2019). Interestingly, variational inference also reduced overfitting and helped generalization due to its regularizing impact. Some work in this area include: Zhao & Sun (2016); Nguyen et al. (2014); Moreno-Muñoz et al. (2018), to name a few. (2) Building expressive kernels (Parra & Tobar, 2017; Chen et al., 2019; Ulrich et al., 2015) that often can represent certain unique features of the data studied. Most of which are based on spectral kernels, despite the fact that convolved covariance based on the exponential, Gaussian or Matérn kernels are still most common in applications. Relating to this, recent work has also studied a non-linear version of the convolution operator (Álvarez et al., 2019).

However, a key question is yet to be answered. The is based on the assumption that outputs share commonalities, but what happens if this assumption does not hold? would negative transfer occur? which in turn leads to forced correlation and decreased performance relative to learning each output independently (Caruana, 1997). This question is especially relevant when using the , which implicitly implies that outputs have heterogeneous, possibly unique, features. For instance, following recent literature, would an expressive kernel and an efficient inference procedure for finding good kernel parameter estimates automatically avoid spurious correlations? or say in an extreme case where all outputs share no commonalities, would the automatically collapse into independent s?.

In this article we shed light on the aforementioned questions. Specifically, we first define negative transfer in the context of an . We then show that addressing the challenge above is mainly dependent on having a sufficient number of latent functions, i.e., in (1). However, even when is relatively small, a small increase in would cause the number of parameters to be estimated to skyrocket. This renders estimation in such a non-convex and highly nonlinear setting impractical, which explains why current literature including the above cited papers only use . To this end, we propose easy-to-implement relaxation models on the structure that scale to arbitrarily large datasets, can avoid negative transfer and allow any kernel or sparse approximations to be used within. A key feature of our models, is that they allow regularization penalties on the hyper-parameters which can provide consistent selection of related/unrelated outputs.

We organize the remaining paper as follows. Sec. 2 provides some preliminaries. Sec. 3 defines negative transfer and provide necessary conditions to avoid it in the . In Sec. 4 we provide scalable relaxation models on the structure, we then explore regularization schemes that help generalization and automatic selection in the relaxation models. In Sec. 5 we provide a statistical guarantee on the performance of the penalized model. A proof of concept and illustration on real-data is given in Sec. 6. Finally, we conclude our paper in Sec. 7. Technical details and a detailed code are given in the supplementary materials.

2 Preliminaries

Consider the set of noisy output functions and let be the corresponding index set.

where is zero mean multivariate process with covariance for and represents additive noise. For the th output the observed data is denoted as , where , , and represents the number of observations for output . Now let and , then the predictive distribution for output at a new input point is given as

| (2) |

where corresponding to the latent function values , such that , is the covariance matrix from the operator and is a block diagonal matrix with as the identity matrix. Finally, , where and ; .

As shown in (2), information transfer is facilitated via . Under the in (1) and assuming independent latent function with ( is the Dirac delta function) we have

| (3) |

where denotes a convolution. Here we note that a more general case can be used where is a generated from a , i.e., . In the appendix we show that the following results also hold under such a case.

3 Negative Transfer: Definition and Conditions

In this section, we first give a strict definition of negative transfer in , and then explore necessary conditions needed to avoid negative transfer.

3.1 Definition of negative transfer

Similar to multi-task learning, negative transfer draws its roots from transfer learning (Pan & Yang, 2009). A widely accepted description of negative transfer is stated as “transferring knowledge from the source can have a negative impact on the target learner”. In an , negative transfer could be defined similarly: the integrative analysis of all outputs can have negative impact on the performance of the model compared with separate modeling of each output or a subset of them.

Definition 1

Consider an with possible outputs, and assume represents the target output. Let the index set of all outputs comprise of non-empty disjoint subsets . Then, we can define the information transfer metric () of the output , as follows:

where is a using data , defines the expected risk using some loss function and denotes the predicted random variable in (2). We say negative transfer occurs if is positive.

Definition 1 implies that negative transfer happens when using leads to worse accuracy compared to using a subset of the data or just an individual . Therefore, one can provide a model flexible enough to avoid negative transfer if there exists an such that

| (4) |

One can think of as the index for the subset of outputs that share commonalities with ( here includes ). For instance, if shares no commonalities with any other output () then the should be able to have for all , i.e., the conditional predictive distribution in (2) for output is independent of all other outputs. In other words, we need an that is able to collapse to independent or an with only related outputs.

Building a model that can achieve (4) is a challenging task since, following the conditional predictive distribution in (2), (4) occurs if and only if (Whittaker, 2009)

| (5) |

In the following section we study the necessary condition for the to achieve (5). Specifically we show that if is known (i.e., we know how many distinct/unrelated subgroups of outputs exist) then to achieve (5) the necessary condition is that . However the fact that is not known before hand implies that we need .

3.2 Conditions to avoid negative transfer

We first provide a lemma based on the covariance in (3) needed to establish our result.

Lemma 1

Given two outputs, and , modeled using one latent function . Assume, the kernels , , satisfy one of the following conditions:

-

•

, and . Typical cases include squared exponential, Matern, quadratic kernel, periodic and local periodic.

- •

Then for , if and only if at least one of and is identically equal to zero.

The technical details for Lemma 1 are given in the Appendix A. Clearly when using one latent function if one of the kernels is identically zero then the is invalid (). On the other hand, if we use latent functions, then the model has enough flexibility to construct from different latent functions, i.e. and . In this case, . Hence, Lemma 1 implies that only if we can achieve .

In Lemma 1 the assumption that kernels belong to the space is also needed for a stable construction in (1). Here we note that despite the fact that the conditions presented satisfy most (if not all) of the kernels currently used in the , in the appendix we also provide some simple means to check the conditions. For instance, for any even kernel , then . Hence, since the Fourier operator is injective, it suffices to show that if and only if or is identically zero.

We now give the main theorem for the necessary condition to avoid negative transfer.

Theorem 2

Given that satisfies the conditions in Lemma 1 then there exists an , constructed using a , that can achieve if and only if we have latent functions.

The technical details for Theorem 2 are given in the Appendix B. It is crucial to note here that in reality we do not know , i.e., we do not know how many distinct subgroups that are uncorrelated exist. Thus, in order to guarantee that negative transfer can be avoided we need . This also implies that the model is flexible enough to collapse to independent s and hence predict each output independently. An illustrative example is also given in Appendix C.

3.3 Induced Challenges

Despite the many works in the previous decade on reducing the computational complexity of both the and , the results in Sec.3.2 induce another key challenge for : the high dimensional parameter space. This challenge is inherited from the construction which provides different covariance parameters (via the kernels) to different outputs levels. For instance, assume any kernel has parameters to be estimated then using the , this implies estimating parameters, where the added parameters are for . Following our results, a model that can avoid negative transfer thus needs at least . Note here that also increases with , i.e., the dimension of . Obtaining good estimates in such a high dimensional space is an impractical task specifically under a non-convex and highly nonlinear objective, be it the exact Gaussian likelihood or its variational bound. Indeed, it is crucial to note that computational complexity and parameter space are two separate challenges and the many papers that tackle the former still suffer from the latter challenge. We conjecture that for this reason, most (if not all) literature (including all aforementioned cited papers) have used .

To address this challenge, in Sec. 4 we provide relaxation models that can significantly reduce the parameters space and scale to arbitrarily large datasets by parallelization. Further, our proposed relaxations allow any sparse approximation to be directly plugged in. This in turns allows utilization of the many advances in reducing the computational complexity (inducing point, state space approximation, etc..).

4 Relaxation models

In this section we propose two relaxation models: The arrowhead and pairwise model. Without loss of generality, we focus on predicting output using the other outputs. We use to index all outputs except .

4.1 Arrowhead Model

The idea of an arrowhead model is originated from the arrowhead matrix. While still using latent functions, we can assume that all outputs , , are independent and only share information with , the output of interest. This implies that . The structure and covariance matrix are highlighted in Fig. 1(a) and (6) respectively. As shown in the figure, possesses unique features encoded in and shared features with other outputs encoded in .

| (6) |

The arrowhead structure in fact poses many unique advantages: (1) Linear increase of parameter space dimension with : The number of parameters to be estimated is reduced to . (2) provides enough flexibility to achieve (5) and hence avoid negative transfer. For instance, if and then outputs and are predicted independently. (3) can be parallelized, where independent arrowhead models are build to predict each output. (4) One nice interpretation of the arrowhead structure is through a Gaussian directed acyclic graphical model (DAG) with vertices . Unlike typical DAGs, each vertex in this graph is in itself a fully connected undirected Gaussian graphical model, i.e. a functional response. This is shown in Fig.1(b). Based on this, the full likelihood factorizes over parent nodes. To see this, let denote the likelihood of the dataset, where , such that and are kernel and noise parameters. Then, . This reduces the complexity of exact inference to assuming , i.e, complexity of independent s. This complexity is similar to the well known inducing point sparse approximation in Alvarez & Lawrence (2009), however without the assumption of conditional independence given discrete observations from the latent functions.

Despite reduced complexity, the main advantge is the reduction in the parameter space. Here it is crucial to note that any sparse approximation, be it an inducing point/variational approximation, a state space approximation, a matrix tapering approach or just a faster matrix inversion/determinant calculation scheme, can be plugged in into this structure.

4.1.1 Encouraging sparsity via regularization

Besides the fact that the arrowhead model can avoid negative transfer, an interesting feature is that we can add regularization that helps reduce negative transfer and is capable of automatic variable selection. Here variable selection implies selection of which functions should be predicted independently or not. To see this, let where and . A penalized version of is defined as

| (7) |

where is a penalty function and . Possible choices of and statistical guarantees on (7) are provided later in Sec. 5. One can directly observe that for , then . Therefore when

and hence outputs and will be predicted independently. Thus any shrinkage penalty will encourage the arrowhead model to limit information sharing across unrelated output. Another advantage besides automatic shrinkage is functional variable selection where the sparse elements in would identify which outputs are related to .

4.2 Pairwise Model

Despite the linear increase in parameter space in the arrowhead model, when is extremely large model estimation can still be prohibitive. To this end, and inspired by the work of Fieuws & Verbeke (2006), we propose distributing the into a group of bivariate s which are independently built. Predictions are then obtained through combining predictions from each bivariate . As previously mentioned we focus on predicting output 1 through borrowing strengths from the other outputs.

Fig. 2(a) illustrates the pairwise submodel between and , where two latent functions are used to avoid negative transfer. Note here that the structure in Fig. 2(b) is proposed for efficient regularization and is discussed later in Sec. 4.2.1. The key advantage of the pairwise structure is that: (1) it can scale to an arbitrarily large by parallelization where each submodel is estimated with a limited number of parameters () and with complexity of (assuming exact inference with no approximations and that ) (2) It can avoid negative transfer without the need for latent functions.

After building the sub-models, combining predictions boils down to combining predictive distributions in (2). This can be readily done using the rich literature on product of experts and Bayesian committee machines (see Deisenroth & Ng (2015), Moore & Russell (2015) and Tresp (2000) for an overview). The key idea across such approaches, in our context, is that sub-models that are uncertain about their predictions of (i.e., have larger predictive variance) will get less weight.

4.2.1 Encouraging sparsity in pairwise model

Similar to the arrowhead model, the pairwise approach also facilitates regularization and automatic variable selection. For the structure illustrated in Fig. 2(a), unlike (7), we have that . Therefore to encourage sparsity, a group penalty on and is needed.

| (8) |

One well-known option for is the group Lasso .

Alternatively, one can utilize the structure in Fig. 2(b) and instead of penalizing the kernels, one can regularize the shared latent function . For instance, one can augment the covaraince of with a parameter such that . Then,

| (9) |

It can be directly verified that as outputs and are predicted independently.

5 Guarantee on Penalization

In this section we establish a statistical guarantee on the estimates , under the penalized setting in (7). Our result extends the well known consistency for independent and identically normal data to the case with correlated data. Prior to that, we first briefly discuss different forms of . Possible well-known choices include the ridge penalty , penalty , bridge penalty , and SCAD penalty which includes two tuning parameters ( and ) if , if , if (Fan & Li, 2001). The tuning parameters can be estimated using cross validation (Friedman et al., 2001). Next we provide the main theorem.

Assume that corresponds to the true parameters, “" denotes a derivative and is a sequence such that as , then we have that

Theorem 3

Given that , and if . If , then in , such that , where .

Theorem 3 shows that for the penalized likelihood, the true parameter estimates would still be asymptotically retained. This provide theoretical justification for our regularization approach in both the arrowhead and pairwise models. Technical details and further discussions are deferred to Appendix D.

6 Proof of Concept and Case Studies

Since negative transfer is a subject yet to be explored in , we dedicate most of this section towards a proof of concept for: (1) the impact of negative transfer, (2) the need for sufficient latent functions as shown in theorem 2, (3) the advantageous properties of the proposed latent structures, (4) the role of regularization in selection of related outputs as shown in theorem 3. Two case studies are then presented, while additional studies and numerical examples are differed to Appendix G.

6.1 Illustration of Negative Transfer

6.1.1 Convolved Squared exponential Kernel

In this setting, we aim to illustrate theorem 2 using the well-known convolved squared exponential kernel in Álvarez & Lawrence (2011). We generate outputs , and from

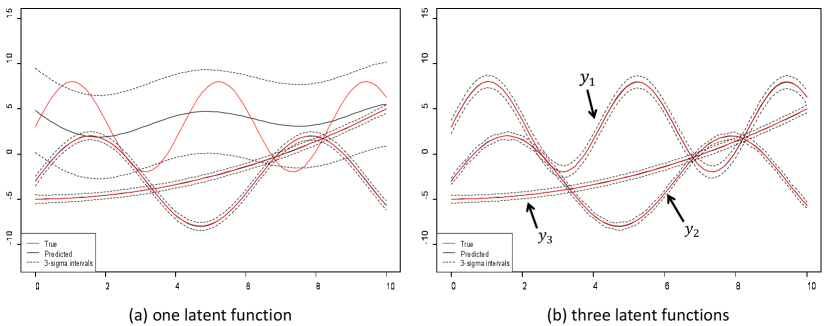

where is evenly spaced in , and . In Table 1 we report the means squared error (MSE), averaged over the 3 outputs, on uniformly spaced points in when and . Table 1 provides many interesting insights. Indeed from the function specifications, it is clear that they have very different shape and length scales (i.e., frequency and amplitude). As a result, when using one or two latent functions negative transfer leads to large predictive errors. It is also noticeable that the result of using does not have much difference with that of . This confirms our theorem which implies that with at least latent functions an is capable of avoiding negative transfer.

| Q | 1 | 2 | 3 | 4 |

|---|---|---|---|---|

| MSE | 25.183 | 11.464 | 0.00159 | 0.00157 |

6.1.2 Spectral Kernel

The immediate follow up question is what if we use the recently proposed, more flexible class of spectral kernels. The aim is to illustrate that as shown in Lemma 1, avoiding negative transfer is mainly independent of what kind of kernel we use, i.e. even if we use a more flexible kernel. We use the same data with that in setting 6.1.1. The covariance function is given as:

where . Formulation of and , are given in Appendix E. This covariance is the result of a convolution across spectral kernels.

The predictive results for the three outputs are illustrated in Fig. 3. The results confirm that even with a flexible kernel negative transfer will detrimentally effect model performance without enough latent functions. Indeed in Fig. 3(a) one can observe that and have larger length scales and hence are smoother. As a results when , output is forced to have a larger length scale in lieu of the two other outputs. This however, can be avoided with a sufficient number of latent functions as shown in Fig. 3(b).

6.2 Role of Regularization

Still under the setting of Sec 6.1.1, we try to verify theorem 3 and the impact of on automatic selection of related output. We use the pairwise model described in Fig. 2(b) where two bivariate submodels are used to predict : and . The covariance function of and () constructed using Fig. 2(b) are given as

We applied the pairwise model with a regularization term respectively to the data. For the penalty we use where . Table 2 shows the estimated parameters.

| pair | ||||

|---|---|---|---|---|

| () | 8.27 e-7 | 1.91 | 6.61 | -1.21 |

| () | -3.27 e-6 | 0.93 | 2.37 | 1.77 |

One can directly observe from Table 2 that when adding regularization, is shrunk to nearly zero in both submodels. This implies that and as is almost identically zero and hence is predicted independently (for exact sparse solutions one can use SCAD or the norm). This not only confirms that regularization can limit information sharing but also illustrates that in the proposed models, one can automatically perform variable selection (cluster the outputs that ought to be predicted independently). A user might then choose to perform a separate on the selected subsets. To the best of our knowledge this is the first model to achieve simultaneous estimation and functional selection using dependent s.

6.3 Illustration with Subsets of Correlated Outputs

6.3.1 Low Dimensional Setting

For this setting we study the case when subsets of outputs are correlated. We first perform inference in a low dimensional regime to compare with the full that does not face the challenge of large complexity and extremely high dimensional parameter space. We generate outputs from

Here we focus on predicting outputs , using the following models: (1) where and respectively, (2) Pairwise model where predictions are combined using the robust product of experts in Deisenroth & Ng (2015) (see Appendix F), (3) Arrowhead model, (4) a univariate on , (5) A bivariate with outputs and (i.e. outputs in ) denoted as . We use , for and for . The squared exponential kernel is used. Results for the MSE over uniformly spaced points in are given in Fig. 4 where the experiment is replicated 30 times. Also Tukey’s multiple comparison test is done and only significant results are reported in the discussion below.

The first result to observe is that outperformed which confirms that negative transfer occurred since when outputs from are analyzed separately they produce better predictive results. However the key observation is that the pairwise and arrowhead models outperform . This is because, when learning an output from , both pairwise and arrowhead models can leverage the correlation with other outputs and still avoid negative transfer evidenced through . Also both proposed latent structures had comparable performance with , which confirms their capability to provide competitive predictive results with lower number of parameters and computational complexity. Another interesting result is that and have similar performance. Indeed, this is expected based on theorem 3, where if we have distinct subsets we only need to avoid negative transfer. However in reality is not given in in advance.

6.3.2 Moderate and Large Dimensional Setting

In this setting we aim to compare our proposed structures when the number of parameters is significantly increased. Specifically and outputs are used. For the settings, outputs are generated from a with zero mean and under the following settings: (We slightly abuse notation with being an indicator here)

For the , the settings can be found in Appendix G. We generate points evenly spaced in for each output where 8 points are used for training 7 for testing. Similar to the setting in Sec. 6.3.1 we test on under 30 replications. The results for are shown in Fig. 5. From the result we can see that , and the arrowhead model yield similar results (also confirmed via Tukey’s test). This once again confirms that the arrowhead model has competitive performance and that with enough latent functions one can avoid negative transfer. Yet the interesting result is the fact that the pairwise model showed much better performance. The reason is due to the fact that in each pair the number of estimated parameters is very small and thus one can except better estimators compared with competing models as parameter dimension increases. This fact is further illustrated through the results of shown in Fig. 6.

In Fig. 6 for the we use thus we have parameters to estimate. The results show that with there is a huge decrease in predictive performance. This result is expected as it is extremely challenging to obtain good parameter estimates specifically for a likelihood function which is known to be highly non-linear with many local critical point with bad generalization power. Indeed, similar decrease in performance in a high dimensional parameter space has been reported in Li & Zhou (2016) and Li et al. (2018). Here both the arrowhead and pairwise models offer a solution that, not only scales with any , but also can lead to better performance.

We note that on average with took hours to estimate despite the computational complexity being relatively small with . While the arrowhead model took minutes and seconds for the pairwise model. In practice its very common to have , this indeed exacerbates the challenge above and further highlights the needs for the proposed relaxation models.

6.4 Case Studies

In this setting, we use the real data set from the pacific exchange rate service (http://fx.sauder.ubc.ca/data.html). Our goal is to predict the foreign exchange rate compared to the United States dollar currency. We utilized the exchange rates of the top ten international currencies during the 157 weeks of from 2017 to 2020. Each output is adjusted to have zero mean and unit variance. We randomly choose 100 points as training points and the remaining 57 points as testing points. Table 3 shows the predictive arrow using different models while Fig. 7 provides illustartions. Once again the results confirm the need for the proposed relaxation models as parameter estimates tend to deteriorate as the parameter dimension increases.

| model | pairwise | arrowhead | |

|---|---|---|---|

| MSE(MXN/USD) | 0.040 | 0.031 | 0.217 |

| MSE(KRW/USD) | 0.015 | 0.035 | 0.322 |

Note that analysis on a Parkinson dataset to predict a disease symptom score is provided in Appendix G. The dataset is available on http://archive.ics.uci.edu/ml/datasets/Parkinsons+Telemonitoring.

7 Conclusion

This article addresses the key challenge of constructing an that can borrow strength across outputs without forcing correlation which can lead to negative transfer. We show that this is achieved by having a sufficient number of latent functions regardless of the kernel used. This however comes with the challenge of greatly augmenting the parameter space. To this end, we propose two latent structures that can avoid negative transfer and maintain estimation in a low-dimensional parameter space. A key feature of our structures is that they allow functional variable selection via regularization. Further analysis into the use of such latent structures and other dependent models for selection in functional data settings or probabilistic graphical models can be an interesting topic to explore.

References

- Alvarez & Lawrence (2009) Alvarez, M. and Lawrence, N. D. Sparse convolved gaussian processes for multi-output regression. In Advances in neural information processing systems, pp. 57–64, 2009.

- Álvarez & Lawrence (2011) Álvarez, M. A. and Lawrence, N. D. Computationally efficient convolved multiple output gaussian processes. Journal of Machine Learning Research, 12(May):1459–1500, 2011.

- Alvarez et al. (2012) Alvarez, M. A., Rosasco, L., Lawrence, N. D., et al. Kernels for vector-valued functions: A review. Foundations and Trends® in Machine Learning, 4(3):195–266, 2012.

- Álvarez et al. (2019) Álvarez, M. A., Ward, W. O., and Guarnizo, C. Non-linear process convolutions for multi-output gaussian processes. Proceedings of the 22nd International Conference on Artificial Intelligence and Statistics (AISTATS), 2019.

- Basawa et al. (1976) Basawa, I., Feigin, P., and Heyde, C. Asymptotic properties of maximum likelihood estimators for stochastic processes. Sankhyā: The Indian Journal of Statistics, Series A, pp. 259–270, 1976.

- Basawa (1980) Basawa, I. V. Statistical Inferences for Stochasic Processes: Theory and Methods. Elsevier, 1980.

- Burt et al. (2019) Burt, D. R., Rasmussen, C. E., and Van Der Wilk, M. Rates of convergence for sparse variational gaussian process regression. arXiv preprint arXiv:1903.03571, 2019.

- Caruana (1997) Caruana, R. Multitask learning. Machine learning, 28(1):41–75, 1997.

- Chen et al. (2018) Chen, K., Groot, P., Chen, J., and Marchiori, E. Spectral mixture kernels with time and phase delay dependencies. arXiv preprint arXiv:1808.00560, 2018.

- Chen et al. (2019) Chen, K., van Laarhoven, T., Groot, P., Chen, J., and Marchiori, E. Multioutput convolution spectral mixture for gaussian processes. IEEE Transactions on Neural Networks and Learning Systems, 2019.

- Damianou et al. (2016) Damianou, A. C., Titsias, M. K., and Lawrence, N. D. Variational inference for latent variables and uncertain inputs in gaussian processes. The Journal of Machine Learning Research, 17(1):1425–1486, 2016.

- Deisenroth & Ng (2015) Deisenroth, M. P. and Ng, J. W. Distributed gaussian processes. Proceedings of the 32 nd International Conference on Machine Learning, 2015.

- Fan & Li (2001) Fan, J. and Li, R. Variable selection via nonconcave penalized likelihood and its oracle properties. Journal of the American statistical Association, 96(456):1348–1360, 2001.

- Fieuws & Verbeke (2006) Fieuws, S. and Verbeke, G. Pairwise fitting of mixed models for the joint modeling of multivariate longitudinal profiles. Biometrics, 62(2):424–431, 2006.

- Fricker et al. (2013) Fricker, T. E., Oakley, J. E., and Urban, N. M. Multivariate gaussian process emulators with nonseparable covariance structures. Technometrics, 55(1):47–56, 2013.

- Friedman et al. (2001) Friedman, J., Hastie, T., and Tibshirani, R. The elements of statistical learning, volume 1. Springer series in statistics New York, 2001.

- Gramacy & Apley (2015) Gramacy, R. B. and Apley, D. W. Local gaussian process approximation for large computer experiments. Journal of Computational and Graphical Statistics, 24(2):561–578, 2015.

- Higdon (2002) Higdon, D. Space and space-time modeling using process convolutions. In Quantitative methods for current environmental issues, pp. 37–56. Springer, 2002.

- Langley (2000) Langley, P. Crafting papers on machine learning. In Langley, P. (ed.), Proceedings of the 17th International Conference on Machine Learning (ICML 2000), pp. 1207–1216, Stanford, CA, 2000. Morgan Kaufmann.

- Lehmann & Casella (2006) Lehmann, E. L. and Casella, G. Theory of point estimation. Springer Science & Business Media, 2006.

- Li & Zhou (2016) Li, Y. and Zhou, Q. Pairwise meta-modeling of multivariate output computer models using nonseparable covariance function. Technometrics, 58(4):483–494, 2016.

- Li et al. (2018) Li, Y., Zhou, Q., Huang, X., and Zeng, L. Pairwise estimation of multivariate gaussian process models with replicated observations: Application to multivariate profile monitoring. Technometrics, 60(1):70–78, 2018.

- Moore & Russell (2015) Moore, D. and Russell, S. J. Gaussian process random fields. In Advances in Neural Information Processing Systems, pp. 3357–3365, 2015.

- Moreno-Muñoz et al. (2018) Moreno-Muñoz, P., Artés, A., and Álvarez, M. Heterogeneous multi-output gaussian process prediction. In Advances in neural information processing systems, pp. 6711–6720, 2018.

- Nguyen et al. (2014) Nguyen, T. V., Bonilla, E. V., et al. Collaborative multi-output gaussian processes. In UAI, pp. 643–652, 2014.

- Nychka et al. (2015) Nychka, D., Bandyopadhyay, S., Hammerling, D., Lindgren, F., and Sain, S. A multiresolution gaussian process model for the analysis of large spatial datasets. Journal of Computational and Graphical Statistics, 24(2):579–599, 2015.

- Pan & Yang (2009) Pan, S. J. and Yang, Q. A survey on transfer learning. IEEE Transactions on knowledge and data engineering, 22(10):1345–1359, 2009.

- Parra & Tobar (2017) Parra, G. and Tobar, F. Spectral mixture kernels for multi-output gaussian processes. In Advances in Neural Information Processing Systems, pp. 6681–6690, 2017.

- Tresp (2000) Tresp, V. A bayesian committee machine. Neural computation, 12(11):2719–2741, 2000.

- Ulrich et al. (2015) Ulrich, K. R., Carlson, D. E., Dzirasa, K., and Carin, L. Gp kernels for cross-spectrum analysis. In Advances in neural information processing systems, pp. 1999–2007, 2015.

- Ver Hoef & Barry (1998) Ver Hoef, J. M. and Barry, R. P. Constructing and fitting models for cokriging and multivariable spatial prediction. Journal of Statistical Planning and Inference, 69(2):275–294, 1998.

- Wang et al. (2019) Wang, K., Pleiss, G., Gardner, J., Tyree, S., Weinberger, K. Q., and Wilson, A. G. Exact gaussian processes on a million data points. In Advances in Neural Information Processing Systems, pp. 14622–14632, 2019.

- Whittaker (2009) Whittaker, J. Graphical models in applied multivariate statistics. Wiley Publishing, 2009.

- Zhao & Sun (2016) Zhao, J. and Sun, S. Variational dependent multi-output gaussian process dynamical systems. The Journal of Machine Learning Research, 17(1):4134–4169, 2016.

Appendix A Proof of Lemma 1

Consider the model with 2 outputs and , modeled with one latent function , where and is Dirac Delta function. We will show that for any , = 0 if and only if at least one of , is identically equal to zero. The sufficiency is obvious, then we prove necessity.

First assume and satisfy the first condition, i.e. such that and , where and . Gaussian, Matern, rational quadratic, periodic and locally periodic kernels are typical examples for this case. For any two inputs points and , denote . Then

Since and , for . Thus, if and only if at least one of , is equal to zero, i.e. at least one of , is identically equal to 0.

Then consider the second case when has the form with parameters . Here for simplicity, we only prove for one dimension case when and the proof for general case is similar. We rewrite , as . Since now ,

where is the Fourier operator and denote the convolution operator. The last equality is derived using the conclusion of Convolution Theorem. Hence if and only if

where

Since , we have for any and . Therefore, we reach the conclusion either or , i.e. at least one of , is identically equal to 0.

For general case when is a constructed from , i.e.

where . Consider the first case when there such that , and , where , and .

Similar as the argument when is Dirac Delta function, we have if and only if one of and is identically equal to 0.

Now consider the case when , and satisfy the second condition, then

Hence if and only if . Similar as the proof when is Dirac Delta function, we reach the conclusion that if and only if one of and is identically equal to 0.

Appendix B Proof of Theorem 2

We use an induction argument to establish the proof.

In Lemma 1, we have shown that if we model two outputs using one latent function, then for any . On the other hand, if we use latent functions, then the model has enough flexibility to construct , from different latent functions, i.e. and , where . In this case, for any and .

i.e. we have proved that when , the model could achieve

if and only if the number of latent function .

Then we use induction: Assume the conclusion holds for : Consider with outputs . Let the index set of all outputs comprise of non-empty disjoint subsets . If for any and , , then we at least need latent functions, where and . Now consider . We could separate this problem into two steps: first, we want disjoint subsets of to be uncorrelated. Denote the index of these subsets as . Follow the assumption in the induction, we at least need latent functions , i.e. any output in , is constructed from the convolution of and a smooth kernel: , . Then, we want the outputs with index to be uncorrelated with the outputs in the previous subsets. If we still use latent functions, then the outputs , has to be constructed using the latent functions in . Then, similar to the case when we have 2 outputs, there must exist a subset , , such that , has non-zero covariance function with the outputs in , i.e. these two subsets are correlated. On the other hand, if we use latent functions, then the model has capability to construct the outputs in , from different latent functions, i.e. any output , can be constructed as , . In this case,

for any and , where and are respectively arbitrary outputs with index in and .

Therefore, we have proved that when , where are non-empty disjoint subset of , for any and if and only if the number of latent functions , where and . That is to say, the model could achieve

if and only if .

Appendix C Illustrative example of Theorem 2

Here we present a simple example when and to illustrate Theorem 2. We have proved that in this case, the model could achieve

For any new input point , the integrative analysis of and leads to the prediction:

where and , ; , The prediction result is exactly the same with that when we model and independently, which means that the model has capability to make the model collapse into two 2 independent , hence we achieve our goal of avoiding negative transfer between and .

Appendix D Proof of Theorem 3

To establish the proof we start with the fact that the unpenalized likelihood is consistent such that (Basawa, 1980; Basawa et al., 1976), under the usual regularity conditions similar to those of independent normal data (refer to chapter 7 of Basawa (1980) and Lehmann & Casella (2006)). Our aim is to study .

The results here provide similar results to that of Fan & Li (2001) yet under the correlated setting. For consistent notation we maximize the log-likelihood with form where . To prove theorem 2, we need to show that given there exists a a constant such that

where . This implies that there exists a such that . We now have that

where denotes the element in corresponding to . Here we recall that the penalty is non-negative; , and if . Therefore, when .

However, we have that where is the gradient vector and is the hessian at . Also, . Therefore,

The remainder of the proof is identical to theorem 1 in Fan & Li (2001).

Appendix E Formula of covariance functions using spectral kernels

Consider the model with two outputs and one latent function

where

From the proof of Lemma 1, we know that

K Compute the Fourier Transform of and respectively:

Thus

We hence get the covariance function between and :

where

Note that in our simulation, we use the kernel with and the number of latent functions is . Thus the covariance function between and becomes

Appendix F Case Study

F.1 Setting

For setting, we generate 50 outputs from the Gaussian Process with mean zero and under the following setting

We have 15 points evenly spaced in and we randomly choose 8 points from them as training data and the left 7 points are testing points. For the full MGP model in this setting, we use 20 latent functions to construct the model.

F.2 Parkinson Data

We use the Parkinson data set to predict the disease symptom score(motor UPDRS and total UPDRS) of Parkinson patients at different times. The data set is available on http://archive.ics.uci.edu/ml/datasets/Parkinsons+Telemonitoring. At each time, we randomly choose 10 patients from the data set to model a model with 10 outputs and randomly split 60% data of each patient as training sets and 40% as testing sets. Our goal is to predict the motor UPDRS and total UPDRS of the 10th patient in each round. We run our model for 70 times. Figure 8 shows the predictive error using different models.