On length measures of planar closed curves and the comparison of convex shapes

Abstract.

In this paper, we revisit the notion of length measures associated to planar closed curves. These are a special case of area measures of hypersurfaces, which were introduced early on in the field of convex geometry. The length measure of a curve is a measure on the circle that intuitively represents the length of the portion of the curve whose tangent vector points in a given direction. While a planar closed curve is not characterized by its length measure, the fundamental Minkowski-Fenchel-Jessen theorem states that length measures fully characterize convex curves modulo translations, making them a particularly useful tool in the study of geometric properties of convex objects. The present work, which was initially motivated by problems in shape analysis, introduces length measures for the general class of Lipschitz immersed and oriented planar closed curves, and derives some of the basic properties of the length measure map on this class of curves. We then focus specifically on the case of convex shapes and present several new results. First, we prove an isoperimetric characterization of the unique convex curve associated to a given length measure by the Minkowski-Fenchel-Jessen theorem, namely that it maximizes the signed area among all curves sharing the same length measure. Second, we address the problem of constructing a distance with associated geodesic paths between convex planar curves. For that purpose, we introduce and study a new distance on the space of length measures that corresponds to a constrained variant of the Wasserstein metric of optimal transport, from which we can induce a distance between convex curves. We also propose a primal-dual algorithm to numerically compute those distances and geodesics, and show a few simple simulations to illustrate the approach.

Keywords: planar curves, length measures, convex domains, isoperimetric inequality, metrics on shape spaces, optimal transport, primal-dual scheme.

1. Introduction

There is a long history of interactions between geometric analysis and measure theory that goes back to the early 20th century alongside the development of convex geometry [2, 23] and later on, in the 1960s, with the emergence of a whole new field known as geometric measure theory [22] from the works of Federer, Fleming and their students. Since then, ideas from geometric measure theory have found their way into applied mathematics most notably in areas such as computational geometry [16, 1, 10, 31] and shape analysis [29, 26, 14, 41] with applications in physics, image analysis and reconstruction or computer vision among many others.

A key element explaining the success of measure representations in geometric analysis and processing is their ability to encompass geometric structures of various regularities, be they smooth immersed or embedded manifolds or discrete objects such as polytopes, polyhedra or simplicial complexes. They usually provide a comprehensive and efficient framework to capture the most essential geometric features of those objects. One of the earliest and most illuminating examples is the use of the concept of area measures [2] in convex geometry, which has proved instrumental to the theory of mixed volumes and the derivation of a multitude of geometric inequalities, in particular of isoperimetric or Brunn-Minkowski types [24, 42]. Area measures are typically defined for convex domains in $\mathbb{R}^n$ through the classical notion of support function of a convex set and can then be interpreted as a measure on the sphere that represents the distribution of area of the domain’s boundary along its different normal directions. A fundamental property of the area measure, which resulted from a series of works by Minkowski, Alexandrov, Fenchel and Jessen, is that there is a one-to-one correspondence between convex sets (modulo translations) and area measures: in particular, the area measure characterizes a convex set up to translation and therefore fully encodes its geometry.

In this paper, we are interested in area measures in the special and simplest case of planar curves. These are more commonly referred to as length measures and are finite measures on the unit circle that represent the distribution of length of the curve along its different tangent directions in the plane. Yet, unlike most previous works such as [34], which are mainly focused on convex objects, one of our purposes is to first introduce and investigate length measures of general rectifiable closed curves in the plane. Our objective is also to provide as elementary and self-contained an introduction as possible to these notions from the point of view and framework of shape analysis, without requiring preliminary concepts from convex geometry. Although, in sharp contrast to the convex case, there are infinitely many rectifiable curves that share the same length measure, we will emphasize some simple geometric properties of the underlying curve that can be recovered from or connected to those of its length measure. Furthermore, this approach will allow us to formulate and prove a new characterization, in the form of an isoperimetric inequality, of the unique convex curve associated to a fixed measure on the circle by the Minkowski-Fenchel-Jessen theorem. Specifically, we show that this convex curve is the unique maximizer of the signed area among all the rectifiable curves with the same length measure. This extends some previously known results about polygons [8] from the field of discrete geometry.

One of the fundamental problems of shape analysis is the construction of relevant metrics on spaces of shapes such as curves, surfaces or images, which is often the first and crucial step to subsequently extend statistical methods to those highly nonlinear and infinite dimensional spaces. Considering the shape space of convex curves, the one-to-one representation provided by the length measure can be particularly advantageous in that regard. Indeed, various types of generalized measure representations of shapes such as currents [27, 19], varifolds [14, 32] or normal cycles [41] have been used in the recent past to define notions of distances between geometric shapes from corresponding metrics on those measure spaces. A common downside of all these frameworks however is that the mapping that associates a shape to its measure is injective but not surjective. This typically prevents those metrics from being directly associated to geodesics on the shape space without adding more constraints and/or exterior regularization, for example through deformation models. In contrast, the bijectivity of the length measure map from the space of convex curves modulo translations to the space of all measures on the circle satisfying only a simple linear closure constraint hints at the possibility of constructing a geodesic distance based on the length measure. In this paper, we propose an approach that relies on a constrained variant of the Wasserstein metric of optimal transport for probability measures on the circle which, as we show, turns the set of convex curves of length 1 modulo translations into a geodesic space. We also adapt and implement a primal-dual algorithm to numerically estimate the distance and geodesics.

The paper is organized as follows. In Section 2, we define the length measure of a Lipschitz regular closed oriented curve of the plane and provide a few general geometric and approximation properties. In Section 3, we discuss specifically the case of convex curves and recap some of the fundamental connections between convex geometry and length measures, in particular the Minkowski-Fenchel-Jessen theorem. Section 4 is dedicated to the statement and proof of an isoperimetric inequality for curves of prescribed length measure, from which we also recover the classical isoperimetric inequality for planar curves. Section 5 focuses on the construction of geodesic distances on the space of convex curves and in particular on our proposed constrained Wasserstein distance and its numerical computation. We also present and compare a few examples of estimated geodesics. Finally, Section 6 concludes the paper by discussing some current limitations of this work and potential avenues for future improvements and extensions.

2. Length measures of Lipschitz closed curves

2.1. Definitions

Throughout the paper, we will identify the 2D plane with the space of complex numbers $\mathbb{C}$. We start by introducing the space of closed, immersed oriented Lipschitz parametrized curves in the plane which we define as:

Depending on the context, we will identify either with the interval or the circle . We recall that Lipschitz continuous curves are differentiable almost everywhere and that the derivative is integrable on , which implies that the length is always finite. Note that we do not assume a priori that curves are simple. In anticipation of what follows, we also introduce the space of unparametrized oriented curves up to translations which we define as the quotient space

the equivalence relation being that for , if and only if there exists such that and the orientations of and coincide. This will constitute the actual space of shapes, since objects in are essentially planar curves modulo reparametrizations and translations or, in other words, oriented rectifiable closed curves modulo translations in the plane. Furthermore, for any parametrization of a curve in or , we let be the Gauss map, i.e. for all , which is well-defined for almost all . Lastly, we will write the arc-length measure of , which is a positive measure on . Throughout this paper, we will denote by the set of all positive finite Radon measures on and the set of signed finite Radon measures on . Also, we recall that the pushforward of a measure by a mapping is the measure denoted such that for every Borel subset . Finally, we will denote by the Lebesgue measure on . We can now introduce the notion of length measure which is the focus of this work.

Definition 2.1 (Length measure of a curve).

For , we define the length measure of , which we write , as the positive Radon measure on obtained as the pushforward of by the Gauss map i.e. . In other words, for every Borel set of :

| (1) |

For any such that in , one has leading to the well-defined mapping from to the space of positive measures on .

The invariance of to the equivalence relation in Definition 2.1 is immediate from the fact that the arclength measure and Gauss map both only depend on the geometric image of the curve and are invariant under translations in the plane. In fact, the length measure of can also be interpreted as the pushforward of the Hausdorff measure on the plane by the mapping such that for any , is the direction of the tangent vector of the curve at . Note however that does depend on the orientation of : more specifically, if the orientation is reversed, the associated length measure of the resulting is the reflection of , that is for every Borel set of (or equivalently if is identified with , ). Thus is a geometric quantity associated to unparametrized oriented curves modulo translations of the plane. For simplicity, we will still write to denote the length measure associated to the whole equivalence class .

Remark 2.2.

The measure can be intuitively understood as the distribution of length along the different directions of tangents to the curve , as we will further illustrate below. We point out that our definition of length measure departs slightly from the traditional concept of length measure (or perimetric measure as it is sometimes called) introduced initially for convex objects by Alexandrov, Fenchel and Jessen [2, 23], in that we consider here the direction of unit tangent vectors rather than unit normal vectors to the planar curve. Note that these two definitions only differ by a global rotation of the measure by an angle of $\pi/2$ and so have a straightforward relation to one another. The reason for choosing this alternative convention is that this work focuses on length measures of planar curves and not area measures for general hypersurfaces, and our presentation becomes a little simpler in this setting.

Lastly, thanks to the classical Riesz-Markov-Kakutani representation theorem, we also recall that signed measures on can be alternatively interpreted as elements of the dual to the space of continuous functions on . In the case of a length measure, the measure acts on any as follows:

| (2) |

By straightforward extension, we can also consider the action of on continuous complex-valued functions given by the same expression as in (2). Before looking into the properties of length measures, we add a final remark on the definition itself.

Remark 2.3.

Length measures can also be connected to another concept from geometric measure theory, namely the 1-varifolds introduced in [3], and more precisely to the oriented varifold representation of curves which is discussed and analyzed for instance in [32]. Indeed, the oriented varifold associated to a curve is by definition the positive Radon measure on the product space given for every continuous compactly supported function on by:

and is once again independent of the choice of in the equivalence class . By comparison to (2), the 1-varifold can be thought of as a spatially localized version of the length measure , that is a distribution of unit directions at different positions in the plane. Equivalently, the length measure is obtained by marginalizing with respect to its spatial component. This loss in spatial localization explains the lack of injectivity of the length measure representation (even after quotienting out translations) that is discussed below.

2.2. Basic geometric properties

Let us now examine more closely the most immediate properties of the length measure, in particular how it relates to various geometric quantities and transformations of the underlying curve. For a general smooth mapping , we will write the curve .

Proposition 2.4.

Let and its length measure. Then

(1) For all :

In particular, the total length of is .

(2) For any rotation of angle , namely for all , .

(3) For any , , where denotes the rescaling of by a factor .

Note that property (1) above implies in particular that the length measures of curves of length one are probability measures on . Combined with property (3) on the action of scalings, one could further define a mapping from , the space of curves modulo translation and scaling, into the space of probability measures on by essentially renormalizing curves to have length one. In all cases, by identifying with , a convenient way to represent and visualize is through its cumulative distribution function (cdf):

which is always a non-decreasing and right-continuous function.


There are some further constraints that length measures must satisfy. Most notably, since the curve is closed, we obtain from (2) that:

In other words, all length measures are such that the expectation of on vanishes. Together with the above, this leads us to introduce the following subset of :

Definition 2.5.

We denote by (resp. ) the space of all positive (resp. signed) Radon measures on which are such that:

We then define the mapping by which does not depend on the choice of in the equivalence class .

Again, we will often replace an element of the quotient by one of its representatives and then write instead of .

Remark 2.6.

Note that any positive measure on such that for all Borel subsets of belongs to . Indeed the assumption implies that:

where the third equality follows from the change of variable and the fact that by assumption. As a consequence, given any positive measure on , one can always symmetrize it by defining and obtain a measure of . As a side note, convex curves whose length measure satisfies are called centrally symmetric and are an important class of objects in convex geometry.
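The symmetrization argument of this remark can be checked numerically on discrete measures. The following is a minimal sketch, not taken from the paper: a discrete measure is represented as a list of `(angle, mass)` pairs, the helper names `first_moment` and `symmetrize` are hypothetical, and the factor 1/2 is an assumed normalization chosen so that the total mass is preserved (the closure property holds for any positive factor).

```python
import cmath
import math

TWO_PI = 2 * math.pi

def first_moment(atoms):
    """Integral of e^{i*theta} against the discrete measure sum_k m_k * delta_{theta_k}."""
    return sum(m * cmath.exp(1j * a) for a, m in atoms)

def symmetrize(atoms):
    """Average a discrete measure with its reflection theta -> theta + pi.

    The factor 1/2 is an assumed normalization that keeps the total mass unchanged.
    """
    out = []
    for angle, mass in atoms:
        out.append((angle % TWO_PI, 0.5 * mass))
        out.append(((angle + math.pi) % TWO_PI, 0.5 * mass))
    return sorted(out)

# Hypothetical example: three atoms that violate the closure constraint.
mu = [(0.3, 2.0), (1.1, 0.5), (4.0, 1.5)]
mu_sym = symmetrize(mu)

print(abs(first_moment(mu)))      # clearly non-zero
print(abs(first_moment(mu_sym)))  # zero up to rounding
```

Each atom is paired with an atom of equal mass in the opposite direction, so the two contributions to the first moment cancel exactly, which is the discrete counterpart of the change of variable used above.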

It is obvious that the mapping in Definition 2.5 cannot be injective on . In fact, given , there are infinitely many other curves that share the same length measure as . In Figure 1, we show several examples of curves having the same length measure, whose c.d.f. is plotted underneath. On the other hand, is surjective as we shall see in the next section. For now, we will just illustrate the different types of length measures depending on the nature of the underlying curve with a few examples.


Example 2.7.

Assume that for is the unit circle in . Then and thus is the uniform measure on .

Example 2.8.

Consider a closed polygon with vertices and the faces . For all , let , (by convention ) and . Then, one can construct a parametrization of the polygon as follows. We define for . Then, on , , take . It follows that for and one can easily check that it leads to . The length measure of a polygon is thus always a sum of Dirac masses, cf. Figure 2 for an illustration.
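As a minimal illustrative sketch (not the authors' code), the discrete length measure of a polygon can be computed directly from its vertices. The snippet assumes that vertices are given as complex numbers in traversal order, consistent with the identification of the plane with the complex numbers. The names `polygon_length_measure` and `first_moment` are hypothetical helpers, and merging edge directions by rounding the angle is a simplification made for this illustration only.

```python
import cmath
import math

TWO_PI = 2 * math.pi

def polygon_length_measure(vertices):
    """Length measure of a closed polygon as a sorted list of (angle, mass) pairs.

    Each edge contributes a Dirac mass equal to its length, located at the
    angle in [0, 2*pi) of its direction.
    """
    n = len(vertices)
    atoms = {}
    for k in range(n):
        edge = vertices[(k + 1) % n] - vertices[k]
        if edge == 0:
            continue  # repeated vertex: no contribution
        # Rounding merges edges that share a direction (approximate grouping).
        key = round(cmath.phase(edge) % TWO_PI, 12)
        atoms[key] = atoms.get(key, 0.0) + abs(edge)
    return sorted(atoms.items())

def first_moment(atoms):
    """Integral of e^{i*theta} against the measure; vanishes for closed curves."""
    return sum(m * cmath.exp(1j * a) for a, m in atoms)

# Unit square traversed counterclockwise.
square = [0j, 1 + 0j, 1 + 1j, 1j]
mu = polygon_length_measure(square)
print(mu)                     # four atoms of mass 1 at angles 0, pi/2, pi, 3*pi/2
print(abs(first_moment(mu)))  # closure constraint, zero up to rounding
```

The total mass of the returned measure is the perimeter of the polygon, in agreement with property (1) of Proposition 2.4.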

Example 2.9.

Lastly, we consider an example of a singular continuous length measure. To construct it, let us introduce the standard Cantor distribution that we rescale to the interval and denote it by . Its support is the Cantor set and its cumulative distribution function is the well-known devil’s staircase function on . The measure is not in but following Remark 2.6, we can consider instead its symmetrization whose c.d.f. is given by . Now, letting be the pseudo-inverse of , defined for all by , we define the curve :

which is and satisfies and . As we will show in more detail and in full generality in the proof of Theorem 3.2, it follows that we have and therefore is the above symmetrized Cantor distribution . We simulated such a curve using an approximation at level 12 of the Cantor measure, which is shown in Figure 2.

These examples show that length measures of curves in may have a density with respect to the Lebesgue measure on , may be singular discrete measures, or may even be singular continuous measures as in the last example. Furthermore, we have the following connection between the length measure density of a sufficiently regular curve and its curvature:

Proposition 2.10.

If is in addition twice differentiable with bounded and a.e. non-vanishing curvature then where the density is given for a.e. by:

| (3) |

where is the curvature of .

Proof.

First, we notice that . Now, let be a continuous test function . By definition, we have:

Since is a Lipschitz function, by the coarea formula ([4] Theorem 2.93), the above integral can be rewritten as:

and furthermore for almost all , is a finite set so we obtain:

∎

A consequence is that the c.d.f. of the length measure of a curve satisfying the assumptions of Proposition 2.10 is given by and thus does not have any jumps. Such jumps can only occur in the presence of flat faces in the curve, in particular for polygons as in Example 2.8. Note also that if the curve is smooth and strictly convex, the curvature is non-vanishing everywhere and the mapping is a bijection, which implies in this case that .

2.3. Convergence of length measures

It will be useful for the rest of the paper to examine the topological properties of the mapping . Specifically, we want to determine under what notion of convergence in the space of curves we can recover convergence of the corresponding length measures. We recall that a sequence of measures is said to converge weakly to , which will be written , when for all , as .

First, it is clear that uniform convergence in (or equivalently convergence in Hausdorff distance of the unparametrized curves in ) does not imply that converges to even weakly. Indeed it suffices to consider a sequence of staircase curves such as the one displayed in Figure 3 which converges in Hausdorff distance to the diamond curve in blue; however, for all , the length measure is identical and equal to whereas .
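This counterexample is easy to reproduce numerically. The sketch below is an illustration under stated assumptions rather than the construction used for Figure 3: the limit curve is taken to be the diamond with corners 1, i, -1, -i, the staircases use axis-parallel steps, and `length_measure`, `staircase` and `gauge_deviation` are hypothetical helper names.

```python
import cmath
import math

TWO_PI = 2 * math.pi

def length_measure(vertices):
    """Discrete length measure of a closed polygon as sorted (angle, mass) pairs."""
    atoms = {}
    n = len(vertices)
    for k in range(n):
        edge = vertices[(k + 1) % n] - vertices[k]
        if edge == 0:
            continue
        key = round(cmath.phase(edge) % TWO_PI, 9)  # group equal directions
        atoms[key] = atoms.get(key, 0.0) + abs(edge)
    return sorted(atoms.items())

def staircase(n):
    """Axis-parallel staircase with n steps per side of the diamond |x| + |y| = 1."""
    corners = [1 + 0j, 1j, -1 + 0j, -1j]
    vertices = []
    for s in range(4):
        a, b = corners[s], corners[(s + 1) % 4]
        d = b - a
        for k in range(n):
            p = a + d * k / n                 # point lying on the diamond
            vertices.append(p)
            vertices.append(p + d.real / n)   # horizontal move, vertical move follows
    return vertices

def gauge_deviation(vertices):
    """Largest value of | |x| + |y| - 1 | over the vertices (zero on the diamond)."""
    return max(abs(abs(v.real) + abs(v.imag) - 1.0) for v in vertices)

diamond = length_measure([1 + 0j, 1j, -1 + 0j, -1j])
coarse = length_measure(staircase(4))
fine = length_measure(staircase(64))
print(coarse)
print(fine)
print(diamond)
print(gauge_deviation(staircase(64)))
```

In this sketch the staircases approach the diamond as the number of steps grows, while their length measures stay fixed at four atoms of mass 2 in the axis directions, with total length 8 against a perimeter of about 5.66 for the diamond.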

However, assuming in addition some convergence of the derivatives, we have the following:

Proposition 2.11.

Let be a sequence of with uniformly bounded Lipschitz constant such that there exists for which for almost every . Then converges weakly to and for every Borel set with one has .

Proof.

Let be a continuous real-valued function on .

By the assumptions above, outside a set of measure zero in , we have with and for all . Thus for almost all in , and thus

since is continuous. Furthermore, as is bounded, there exists , such that for all and by assumption, there also exists such that . It follows from Lebesgue's dominated convergence theorem that:

and so . The second statement is a classical consequence of weak convergence of Radon measures (see [20] Theorem 1.40). ∎

Another important question is whether polygonal approximation of a curve leads to consistent length measures which we answer in the particular case of piecewise smooth curves:

Proposition 2.12.

Let be a curve of which is assumed in addition to be piecewise twice differentiable with bounded second derivative. If is a sequence of polygonal curves with vertices given by with and as , then converges weakly to .

Proof.

Using Proposition 2.11, we simply need to show that converges pointwise to a.e. and bound the Lipschitz constant of uniformly. As is piecewise smooth, we can treat each of the intervals separately and fix with being twice differentiable at . For , let us denote . For large enough, let with twice differentiable on . Then we have by definition for some by Taylor’s theorem which, using the boundedness of the second derivative of , gives

Thus converges to pointwise except maybe on the finite set of points where is not twice differentiable. Furthermore, the above equation also implies that which is uniformly bounded in and therefore Proposition 2.11 leads to the conclusion. ∎

Note that the above approximation property requires more regularity on . We will see in the next section that the result holds for all convex curves as well.

3. Convex curves and length measures

As already pointed out, the length measure does not characterize the curve itself since there are in fact infinitely many curves of in the fiber for any given . Yet, remarkably, it does if one restricts to convex curves in . This follows from the fundamental Minkowski-Fenchel-Jessen theorem (also in part due to Alexandrov) established in [23, 2], which shows in general dimension the uniqueness of a convex domain associated to any given area measure. For the specific case of planar curves which is the focus of this paper, we provide a more direct and constructive proof of this result in the following section before highlighting a few other well-known connections with convex geometry.

3.1. Characterization of convex curves by their length measure

In what follows, we will denote by the set of curves in which are simple, convex and positively oriented. For technical reasons that will appear later, we will adopt the convention that degenerate convex curves made of two opposite segments (whose length measures are of the form ) belong to . For simplicity, we shall still write for the restriction of the previous length measure mapping to . Before stating the main connection between curves in and length measures, let us start with the following lemma.

Lemma 3.1.

Let such that there exists with . Then there exists such that , with strict inequality unless is a segment.

Proof.

By contradiction, let us assume that given as above, we have for all where is defined. Up to a rotation and translation of the curve, we may assume that and that for . Assuming that a.e. on would lead to , which is impossible. On the other hand, if on a subset of of non-zero measure, then we would have:

| (4) |

strictly positive, which is again a contradiction. It follows that we can find such that . Furthermore, if , it can be easily seen from a similar reasoning as above and (4) that in this case, one must have or a.e. on and therefore the curve is a subset of the horizontal line. ∎

The following result is a reformulation of the Minkowski-Fenchel-Jessen theorem in the special setting of this paper. The usual proof of this theorem in general dimensions is quite involved and requires many preliminary results and notions from convex geometry. We propose here an alternative proof specific to the case of curves which is more elementary and constructive.

Theorem 3.2.

The length measure mapping is a bijection from to .

Proof.

1. We first show the surjectivity of . Thus, taking , we want to construct , a parametrization of a curve in such that . To do so, let us first assume, without loss of generality thanks to Proposition 2.4 on the action of rescaling on length measures, that . Then let be the c.d.f. of as we defined previously and let us introduce its pseudo-inverse :

Note that, as , the pseudo-inverse is a non-decreasing function with . We now define as follows:

| (5) |

We first see that . Indeed, by construction, is differentiable almost everywhere with giving thus and is a Lipschitz immersion. Next, we obtain that by checking the equality . This is simply a consequence of the fact that and the easy verification that so that

Incidentally, this also shows that the curve is indeed closed as:

One still needs to verify that the image of belongs to . As and is non-decreasing, is locally convex and we only need to show that is simple or is supported by a straight segment. By contradiction, let’s assume that there exists such that . We have to consider the two following cases:

- If is not a segment then, since is non-decreasing on , there is a limit and we have that is strictly above the line passing through and directed by . Furthermore, using Lemma 3.1, we deduce that for all , we have which implies that for all , is below the line passing through directed by , which contradicts the fact that .

- If is a segment, then by Lemma 3.1 and the fact that is non-decreasing, we have that for all with even strict inequality if is not a segment of the same direction as . The latter case is not possible since we would then find that for , is strictly below the line containing the segment and thus . Finally, by a similar argument, we find that the image of on is again a segment of the same direction, which shows that is ultimately a segment.

Thus the curve is either simple, convex and positively oriented or is a segment, and in all cases belongs to .

2. Let us now prove the injectivity of . Specifically, we show that if is the arclength parametrization of a curve in , which we assume once again to have length , and then for almost all , . By assumption, we have and since the curve is convex and positively oriented, up to a shift of the parameter, we may assume that is non-decreasing from to . Let us denote . From we get that . We need to show that . On the one hand, we have since for any we have . This leads to . On the other hand, for any we have that by definition of and consequently for all leading to . ∎

Remark 3.3.

Note that from the above proof, one can technically reconstruct a convex curve up to translation from its length measure directly based on (5). When the measure is discrete i.e. where and for all , the reconstruction becomes particularly simple. In this case, one can see that the corresponding convex polygon is obtained by selecting an initial vertex (e.g. at the origin) and ordering the edges such that the angles are in ascending order, which is a well-known algorithm for convex planar objects. However, this reconstruction is a significantly more difficult problem for area measures in higher dimensions, c.f. the discussion in Section 6.
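The discrete reconstruction described in this remark can be sketched in a few lines. This is only an illustration under assumptions: the measure is passed as a list of `(angle, mass)` pairs with angles in [0, 2π), the first vertex is placed at the origin, and the function name `convex_polygon_from_measure` is hypothetical.

```python
import cmath
import math

def convex_polygon_from_measure(atoms):
    """Rebuild a convex polygon from a discrete length measure.

    Edges are chained in ascending order of angle, starting from the origin.
    Returns the list of vertices and the closure gap, i.e. the distance between
    the end point of the last edge and the first vertex (zero when the measure
    satisfies the closure constraint).
    """
    vertices = [0j]
    for angle, mass in sorted(atoms):
        vertices.append(vertices[-1] + mass * cmath.exp(1j * angle))
    closure_gap = abs(vertices.pop() - vertices[0])
    return vertices, closure_gap

# Length measure of a 3-4-5 right triangle, deliberately given out of order.
hypotenuse_angle = cmath.phase(-4 - 3j) % (2 * math.pi)
atoms = [(hypotenuse_angle, 5.0), (0.0, 4.0), (math.pi / 2, 3.0)]
vertices, gap = convex_polygon_from_measure(atoms)
print(vertices)  # approximately [0, 4, 4+3j]
print(gap)       # zero up to rounding
```

Sorting by angle is what enforces convexity and positive orientation here, since it makes the edge direction turn monotonically counterclockwise along the polygon.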

3.2. Length measures and Minkowski sum of convex sets

The above correspondence between convex shapes and length measures has many interesting consequences and applications, in particular for the study of mixed areas and Brunn-Minkowski theory, as developed for example in [34, 30, 42]. We will not go over all of these in detail but only recap in this section a few results which shall be relevant for the rest of the paper.

First, in the category of planar convex curves, one has the following stronger version of the approximation result of Proposition 2.12:

Proposition 3.4.

Let be a planar convex domain with boundary and a sequence of convex polygons of boundary that converges in Hausdorff distance to . Then as . Furthermore, the area of converges to the area of i.e. .

This is a classical property of convex sets and length measures whose proof can be found in [42] (Theorem 1.8.16 and Theorem 4.1.1).

We now recall the definition of the Minkowski sum. If and are two convex planar domains, their Minkowski sum (also known as dilation in mathematical morphology) is defined by , which is also a convex planar domain. More generally, one can define the Minkowski combination for as . This allows one to view the set of all convex domains as a convex cone for this Minkowski addition. The length measure mapping has the following interesting property ([34] Theorem 3.2):

Proposition 3.5.

Let and be two convex domains and their boundary curves. Then, denoting a parametrization of the boundary of , it holds that .

Proof.

This is easily shown by approximating the convex domains and by sequences of convex polygons and using Proposition 3.4. The fact that the result holds for two convex polygons is well-known and is actually used algorithmically for the computation of the Minkowski sum of polygons in the plane in linear time, c.f. for example [44] (Chap. 13). ∎

By combining the above with Theorem 3.2 and Proposition 2.4 (3), we can summarize the properties of the length measure mapping as follows:

Corollary 3.6.

The map is an isomorphism of convex cones between and .


This implies that if and are two convex domains with length measures and , their Minkowski sum is such that which could be directly computed using the inversion formula (5) or, in the case of discrete measures and polygons, by adequately sorting the Diracs appearing in with angles in ascending order as explained earlier in Remark 3.3. We show an example of Minkowski sum computed with this approach in Figure 4.
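For polygons, the procedure just described amounts to merging the two lists of Dirac masses and chaining the resulting edges by ascending angle. The following sketch illustrates this on a unit square and a diamond; it is not the code used for Figure 4, the helper names `add_measures`, `convex_polygon_from_measure` and `signed_area` are hypothetical, and grouping equal directions by rounding the angle is an assumption made for simplicity.

```python
import cmath
import math

TWO_PI = 2 * math.pi

def add_measures(mu1, mu2, digits=9):
    """Sum of two discrete measures given as (angle, mass) pairs."""
    atoms = {}
    for angle, mass in list(mu1) + list(mu2):
        key = round(angle % TWO_PI, digits)  # merge atoms sharing a direction
        atoms[key] = atoms.get(key, 0.0) + mass
    return sorted(atoms.items())

def convex_polygon_from_measure(atoms):
    """Vertices of the convex polygon obtained by chaining edges by ascending angle."""
    vertices = [0j]
    for angle, mass in sorted(atoms):
        vertices.append(vertices[-1] + mass * cmath.exp(1j * angle))
    return vertices[:-1]  # the last point closes the polygon

def signed_area(vertices):
    """Shoelace formula for a closed polygon with complex vertices."""
    n = len(vertices)
    return 0.5 * sum(
        (vertices[k].conjugate() * vertices[(k + 1) % n]).imag for k in range(n)
    )

# Length measures of the unit square and of the diamond |x| + |y| <= 1.
square = [(k * math.pi / 2, 1.0) for k in range(4)]
diamond = [(math.pi / 4 + k * math.pi / 2, math.sqrt(2)) for k in range(4)]

mu_sum = add_measures(square, diamond)
octagon = convex_polygon_from_measure(mu_sum)
print(len(mu_sum))           # 8 edge directions
print(signed_area(octagon))  # area of the Minkowski sum, close to 7
```

The perimeter of the sum is the sum of the perimeters since total masses add up, whereas the area is not additive: here the two domains have areas 1 and 2 while the octagon has area 7.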

4. An isoperimetric characterization

The previous section showed that there is a unique convex curve of positive orientation in the preimage for any measure . Several previous works on length and area measures have investigated variational characterizations of these convex objects in the context of shape optimization and geometric inequalities, see for instance the survey [24] and [12]. These typically express some variational property within the set of convex shapes only. We prove here a distinct characterization which can rather be interpreted as an isoperimetric inequality in each of the fibers , namely the convex curve of is also the one of maximal signed area among all the curves in of length measure . Our result extends to the whole class of Lipschitz regular curves some related results on polytopes in discrete geometry that were stated in [8]. We will also see how the classical isoperimetric inequality on curves can be recovered as a consequence.
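For polygons with a fixed set of edges, this statement can be checked by brute force. The sketch below is a numerical illustration only, with a hypothetical set of five edges and hypothetical helper names: it enumerates all orderings of the edges, which all share the same length measure, and compares their signed areas (computed with the shoelace formula) to that of the ordering by ascending angle, which gives the convex positively oriented polygon.

```python
import cmath
import math
from itertools import permutations

def signed_area_from_edges(edges):
    """Signed area of the closed polygon obtained by chaining edges from the origin."""
    area, vertex = 0.0, 0j
    for e in edges:
        area += 0.5 * (vertex.conjugate() * e).imag  # 0.5 * det(vertex, edge)
        vertex += e
    return area

# Hypothetical example: edges of a non-convex pentagon (they sum to zero).
edges = [4 + 0j, 3j, -2 - 2j, -2 + 2j, -3j]
assert abs(sum(edges)) == 0

convex_order = sorted(edges, key=lambda e: cmath.phase(e) % (2 * math.pi))
convex_area = signed_area_from_edges(convex_order)
all_areas = [signed_area_from_edges(p) for p in permutations(edges)]

print(signed_area_from_edges(edges))  # area of the original pentagon
print(convex_area)                    # area of the convex rearrangement
print(max(all_areas))                 # largest area over all orderings
```

In this example the original ordering encloses a signed area of 8, whereas the angle-sorted ordering reaches 16, which is also the maximum over all 120 orderings.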

Let us first recall that the signed area of a curve in with Lipschitz parametrization is given by (cf. [46], Chap. 1.10):

| (6) |

where denotes the unit normal vector to the curve. Note that for a simple and positively oriented curve, (6) is the usual area enclosed by this curve. We begin by recalling a few preliminary properties of the signed area. The first one is that the signed area is additive with respect to the “gluing” of two cycles, namely:

Lemma 4.1.

Let and . Consider any given Lipschitz open curve with and and denote the same curve but with opposite orientation. Define , the two closed curves in obtained by respectively concatenating with and with . Then:

Proof.

This is just a direct verification from the definition (6). Indeed, we have for and:

which leads to the result. ∎
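The additivity property can be verified on a polygonal example. The sketch below reflects our reading of the lemma and rests on assumptions: a closed polygon is cut at two of its vertices into two arcs, an arbitrary open polyline `gamma` joins the two cut points, and each arc is closed with `gamma` or with its reversal. All names and the chosen vertices are hypothetical.

```python
def signed_area(vertices):
    """Shoelace formula for a closed polygon with complex vertices."""
    n = len(vertices)
    return 0.5 * sum(
        (vertices[k].conjugate() * vertices[(k + 1) % n]).imag for k in range(n)
    )

# Closed (non-convex) pentagon, cut at the vertices of index i and j.
c = [0j, 4 + 0j, 4 + 3j, 2 + 1j, 3j]
i, j = 1, 3
arc1 = c[i:j + 1]            # from c[i] to c[j]
arc2 = c[j:] + c[:i + 1]     # from c[j] back to c[i]

# Arbitrary open polyline going from c[j] to c[i] through an extra point.
gamma = [c[j], 3 - 1j, c[i]]

closed1 = arc1 + gamma[1:-1]        # arc1 followed by gamma
closed2 = arc2 + gamma[::-1][1:-1]  # arc2 followed by gamma reversed

print(signed_area(c))                               # 8.0
print(signed_area(closed1), signed_area(closed2))   # 4.5 3.5
```

The contributions of `gamma` and of its reversal cancel in the sum, so the two areas add up to the area of the original polygon whatever intermediate points are chosen for `gamma`.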

We also have the following well-known approximation property of the signed area:

Lemma 4.2.

Let . There exists a sequence of polytopes (i.e. piecewise linear curves) in such that and converges weakly to .

Proof.

Let and, by invariance to translation, let us also assume that . Then since is Lipschitz regular, we have . Using the density of step functions in , we can construct a sequence of step functions such that as . By the converse of Lebesgue’s dominated convergence theorem ([9] Theorem 4.9), up to extraction of a subsequence, we can assume that converges to almost everywhere in and that there exists such that for almost all . Let us then define which is a piecewise linear curve in such that:

showing that as and as a consequence there exists such that for all . Now, by Proposition 2.11, we deduce that weakly converges to . Furthermore, for any , we have:

and for almost all , , , as . In addition,

As , Lebesgue dominated convergence theorem leads to:

∎

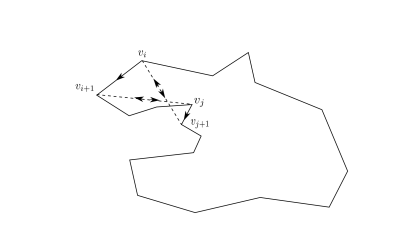

We will also need the following lemma, which is a particular case of our main result for polytopes with a fixed number of edges and whose proof is adapted from the one outlined in [21, 8]. We call a polytope non-degenerate if it does not lie entirely along a single line (in other words if its length measure is not a sum of two oppositely oriented Diracs).


Lemma 4.3.

Let be a non-degenerate polytope. Then the convex polygon such that satisfies .

Proof.

Let be a polytope that we represent by its ordered list of vertices . Its corresponding list of edges are denoted by where we assume the directions of the to be consecutively distinct. Recall that, from Theorem 3.2, there is a unique convex and positively oriented polygon such that . Let us define as the set of polygonal curves obtained by all the different permutations of the edges in . Note that for any polygonal curve , we have . Let us show that:

| (7) |

which will imply in particular that . Since is finite, the maximum is attained: let be a curve in of maximal signed area. By contradiction, assume that is not the convex positively oriented polygon (modulo translations).

Let us first consider the trivial cases. When , there are only two triangles up to translation in and thus is the negatively oriented one, whose signed area is clearly strictly smaller than that of . For , the polytope being distinct from , one can see that, up to a cyclic permutation of its edges, we can assume without loss of generality that , i.e. the angle is decreasing between the first and second edge. Now the signed area (6) of the piecewise linear curve can be written more simply as (cf [46] Chap 1.10):

where are the consecutive vertices of and is any reference point in the plane. The above expression is independent of the choice of this reference point, thus choosing , we obtain after simplifications:

Then we can define the quadrilateral in which the ordering of the edges and is switched. This is still a polytope of and we have:

which contradicts the fact that is maximal.

Let us now assume . Then, by the assumption on , we can find four vertices () such that the corresponding quadrilateral with edges is not both convex and positively oriented. Let us write this quadrilateral and introduce in addition the polytope with successive vertices and the polytope with vertices . This amounts to decomposing the original polygon into three distinct cycles as depicted in Figure 5 (left). By the additivity property of the signed area from Lemma 4.1, this implies that . Now, from the case treated above, we can find a permutation of the edges of giving the convex positively oriented quadrilateral which satisfies . We then obtain the three polytopes , and shown respectively in green, blue and red in the example of Figure 5. By translation of , we can superimpose the edge in with the edge in . Similarly, by translation of , we match the edge of on the edge of . Then, removing the trivial back-and-forth edges resulting from this superposition, one obtains a new polytope as shown in the right image in Figure 5. By construction, this polytope has the same list of edge vectors as and thus belongs to . Moreover, its signed area is:

which contradicts the maximality of among polytopes of . ∎
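The statement of Lemma 4.3 can be checked numerically on a small example by brute force. The sketch below is an illustration only, not part of the proof: it enumerates every ordering of a fixed list of edge vectors summing to zero, computes the signed area with the shoelace formula, and compares the maximum with the area of the ordering sorted by ascending edge angle. The edge list and the function names `signed_area` and `convexify` are chosen here for illustration.

```python
import math
from itertools import permutations

def signed_area(edges):
    """Signed area of the closed polygon traced by consecutive edge vectors.

    The polygon starts at the origin; since the edges sum to zero it closes up,
    and the shoelace sum reduces to sum_k (x_k * dy_k - y_k * dx_k) / 2.
    """
    x, y, twice_area = 0.0, 0.0, 0.0
    for dx, dy in edges:
        twice_area += x * dy - y * dx
        x, y = x + dx, y + dy
    return 0.5 * twice_area

def convexify(edges):
    """Reorder the edges by ascending direction angle (convex, positively oriented)."""
    return sorted(edges, key=lambda e: math.atan2(e[1], e[0]))

# Six edge vectors summing to zero, listed in a non-convex order.
edges = [(2, 0), (-1, 1), (1, 1), (-2, 0), (1, -1), (-1, -1)]

original_area = signed_area(edges)
convex_area = signed_area(convexify(edges))
best_area = max(signed_area(order) for order in permutations(edges))
```

For this example the sorted ordering attains the maximum over all 720 orderings, in agreement with (7). The exhaustive search is factorial in the number of edges and is only meant as a sanity check on tiny inputs.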

The convex polygon is sometimes referred to as the convexification of (which is distinct from the convex hull of ). We now state and prove the main result of this section.

Theorem 4.4.

Let such that the support of is not of the form for some . Among all curves in , the convex positively oriented curve is the unique maximum of the signed area and this maximum is given by:

| (8) |

Proof.

Let be a measure in satisfying the above assumption on its support. This means that for any curve in , the image of does not lie within a single straight line. Furthermore, we have . By the standard isoperimetric inequality, this implies that the area of any simple closed curve in is bounded by and thus the supremum of these areas is indeed finite. Let us first show that this maximal area is achieved, namely by the convex positively oriented curve of . We let be a maximizing sequence i.e. and as .

1. As a first step, we want to replace this maximizing sequence by a sequence of piecewise linear curves. For a fixed , by Lemma 4.2, we can construct a sequence of polytopes such that and weakly converges to as . From this, let us construct a sequence of polytopes such that and as . Indeed, recall that the weak convergence of finite measures of is metrizable, for instance by the bounded Lipschitz distance ([45] Chap. 6). Thus, we have for all , as . Since , for any , we can find an increasing function such that . In addition, we also obtain an increasing function such that for any , we have and . We then set which gives on the one hand:

and thus . And on the other hand:

and therefore .

2. Now, using Lemma 4.3, we obtain a sequence of convex, positively oriented polygons such that and for all , . Using once again the invariance to translation, we can further assume that each passes through the origin. We then point out that the length converges to as since . This implies that is bounded uniformly in by some constant . If we denote by the polygonal domain delimited by i.e. , it is then easy to see that for all , is included in the fixed ball . In other words, the sequence is bounded in the space of compact subsets of the plane equipped with the Hausdorff metric.

3. We can therefore apply the Blaschke selection theorem [7], which allows us to assume, up to extraction of a subsequence, that the sequence of convex polygonal domains converges in Hausdorff distance to a convex domain whose oriented boundary curve we write . Then by Proposition 3.4, we deduce that weakly converges to . As a consequence, and is thus the (unique) convex curve associated to given by Theorem 3.2. In addition, still from Proposition 3.4, we get that as and since the boundary curves and are simple and positively oriented it follows that as . Now, since for all , , we conclude that and therefore achieves the maximal area among all curves in .


4. The uniqueness of the maximum can now be shown by essentially adapting the argument used in the proof of Lemma 4.3 for polytopes. Assume by contradiction that is non-convex and that . Then we can find such that the quadrilateral with successive vertices is not convex positively oriented and is not degenerate. Let us further introduce defined as the concatenation of the portion of curve , the oriented segment and . In addition, let be the concatenation of and the segment , the curve obtained by concatenating and the segment and the concatenation of and . See Figure 6 for an illustration. Now from Lemma 4.1, we deduce that:

By reordering of the edges in , we obtain the convexified quadrilateral which, with the same argument used in the proof of Lemma 4.3, is such that . We can then rearrange the different cycles introduced above accordingly, as shown in Figure 6 (right). This is done by translating to match the corresponding edges in . As a result, we obtain a new closed curve which by construction still belongs to since it is just obtained by interchanging sections of . Then applying again Lemma 4.1:

This contradicts the fact that .

5. Finally, the expression of the area of the convex curve with respect to its length measure is well-known and can be recovered for example from [34], although the definition and presentation of length measures is slightly different from the one in the present paper. For completeness, we provide a direct proof. It suffices to show (8) for a convex polygon and the general case will directly follow from the above approximation argument. Let be a convex polygon with ordered list of edges and vertices . Its length measure is then where and . Since the polygon is convex and positively oriented, the successive angles of the edges are non-decreasing so we may assume without loss of generality that . Therefore the right hand side of (8) is:

By splitting the inner sum into two sums from and , after an easy calculation, we obtain:

since for all . This in turn leads to:

| (9) |

since . Now the right hand side of (9) is exactly the signed area of . ∎

Another useful expression of the maximal area is through the Fourier coefficients of the measure . Let us define:

Proposition 4.5.

For all , the following holds:

| (10) |

Proof.

Let be for now fixed and . After some calculations that we skip for the sake of concision, one can show that the Fourier coefficients of are given by:

Now, writing which converges everywhere on and recalling that , we get that:

and since the series of functions in the above equation is uniformly converging on :

∎

Note that as a direct corollary of Theorem 4.4 and Proposition 4.5, we recover the standard isoperimetric inequality in its general form, that is:

Corollary 4.6.

For any curve in , we have:

with equality if and only if is a circle.

Proof.

By adequate rescaling, we can first restrict the proof to the case where . Then, from Theorem 4.4, we have that . Now, for any such that , we have and:

Therefore, with equality if and only if which implies that in the above we have for all . Therefore, is the uniform measure on and as , by the uniqueness of the maximizer in Theorem 4.4, we obtain that is the unit circle. ∎
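As a quick numerical illustration of Corollary 4.6, the following sketch evaluates the isoperimetric ratio 4π·Area / Length² for regular polygons inscribed in the unit circle. The ratio stays below 1 and approaches 1 as the number of edges grows, which is consistent with the circle being the equality case. The function names are hypothetical and the regular polygons are just one convenient family of test curves.

```python
import math

def regular_polygon(n):
    """Vertices of the positively oriented regular n-gon inscribed in the unit circle."""
    return [(math.cos(2.0 * math.pi * k / n), math.sin(2.0 * math.pi * k / n))
            for k in range(n)]

def area_and_length(vertices):
    """Signed area (shoelace formula) and total length of a closed polygon."""
    n = len(vertices)
    twice_area, length = 0.0, 0.0
    for k in range(n):
        (x0, y0), (x1, y1) = vertices[k], vertices[(k + 1) % n]
        twice_area += x0 * y1 - x1 * y0
        length += math.hypot(x1 - x0, y1 - y0)
    return 0.5 * twice_area, length

def isoperimetric_ratio(vertices):
    """Ratio 4*pi*Area / Length^2, at most 1 by the isoperimetric inequality."""
    area, length = area_and_length(vertices)
    return 4.0 * math.pi * area / length ** 2

ratios = [isoperimetric_ratio(regular_polygon(n)) for n in (3, 4, 6, 12, 1000)]
```

Reversing the orientation of a polygon flips the sign of its signed area, so the inequality is then trivially satisfied.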

5. A geodesic space structure on convex sets of the plane

The correspondence between convex curves and length measures that is given by Theorem 3.2 also suggests the idea of comparing convex sets through their associated length measures. In other words, one can transpose the construction of metrics on the set of convex domains to that of building metrics on the measure space . What makes this advantageous is that there are already many existing and well-known distances between measures that can be introduced to that end, and several past works [47, 1] have exploited this idea for various purposes. Yet most of these works are focused on the computation and/or mathematical properties of the distance only. In the field of interest of the authors, namely shape analysis, an often equally important aspect is to define distances (typically Riemannian or sub-Riemannian) which also lead to relevant geodesics on the shape space: such geodesics indeed provide a way of interpolating between two shapes and are crucial to extend many statistical or machine learning tools to analyze shape datasets. In this section, we will therefore be interested in building numerically computable geodesic distances on which will in turn induce metrics and corresponding geodesics on . Note that although we discuss this problem for planar curves as it is the focus of this paper, most of what follows can be adapted to larger dimensions by considering the general area measures of convex domains in . We first review several standard metrics on the space of measures of and analyze their potential shortcomings when it comes to comparing planar convex curves. We then propose a new constrained optimal transport distance.

5.1. Kernel metrics

As a dual space, the space of measures on can be endowed with dual metrics based on a choice of norm on a set of test functions. These include classical measure metrics such as the Lévy-Prokhorov or the bounded Lipschitz distance. These distances metrize the weak convergence of measures and have many appealing mathematical properties. However, they are typically challenging to compute or even approximate in practice. An alternative is the class of metrics derived from reproducing kernel Hilbert spaces (RKHS) which have been widely used in shape analysis [26, 19, 32] and in statistics [28, 35]. We briefly recap and discuss such metrics in our context. The starting point is a continuous and positive definite kernel to which, by Aronszajn's theorem [5], corresponds a unique RKHS of functions on . Let us denote this space by and by its dual. Now taking as our space of test functions, one can introduce the norm on defined by:

| (11) |

and the corresponding distance . In general, (11) only gives a pseudo-distance on but it is shown in [43] Theorem 6 that a necessary and sufficient condition to recover a true distance is for the kernel to satisfy a property known as -universality, which includes several families of well-known kernels such as the Gaussian and Cauchy kernels. An important advantage of this RKHS framework is that, thanks to the reproducing kernel property, the distance can be directly expressed based on as follows:

For discrete measures and , the above integrals become double sums and can be all evaluated in closed form once the kernel is specified which makes these distances easy to compute in practice. However, since all are essentially dual metrics to some Hilbert space, it is easy to see that the resulting metric space is flat, namely the constant speed geodesic between two positive measures and on is given by for . Furthermore, if both and belong to then so does for all since:

Therefore is a totally geodesic space for the metric .
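For discrete measures, the double sums mentioned above are straightforward to evaluate. The following sketch is an illustration under explicit assumptions: a discrete measure is a list of (angle, weight) pairs, and the kernel is a Gaussian in the chordal distance between points of the unit circle, with a bandwidth `sigma` picked arbitrarily. Neither the kernel choice nor the function names come from the paper. The sketch also checks that the linear interpolation between two measures with vanishing first moment keeps a vanishing first moment.

```python
import math

def gaussian_kernel(theta1, theta2, sigma=1.0):
    """Gaussian kernel in the chordal distance |e^{i theta1} - e^{i theta2}|."""
    squared_chord = 2.0 - 2.0 * math.cos(theta1 - theta2)
    return math.exp(-squared_chord / sigma ** 2)

def kernel_inner(mu, nu, sigma=1.0):
    """Inner product <mu, nu> = sum_i sum_j a_i b_j k(theta_i, phi_j)."""
    return sum(a * b * gaussian_kernel(t, p, sigma) for t, a in mu for p, b in nu)

def kernel_distance(mu, nu, sigma=1.0):
    """Kernel distance between two discrete measures on the circle."""
    squared = (kernel_inner(mu, mu, sigma) - 2.0 * kernel_inner(mu, nu, sigma)
               + kernel_inner(nu, nu, sigma))
    return math.sqrt(max(squared, 0.0))

def interpolate(mu, nu, t):
    """Linear interpolation (1 - t) * mu + t * nu, stored as a list of atoms."""
    return [(th, (1.0 - t) * a) for th, a in mu] + [(ph, t * b) for ph, b in nu]

def first_moment(mu):
    """First moment (sum_i a_i cos(theta_i), sum_i a_i sin(theta_i))."""
    return (sum(a * math.cos(t) for t, a in mu),
            sum(a * math.sin(t) for t, a in mu))

# Length measures of a unit-perimeter square and an equilateral triangle.
square = [(k * math.pi / 2.0, 0.25) for k in range(4)]
triangle = [(k * 2.0 * math.pi / 3.0, 1.0 / 3.0) for k in range(3)]
midpoint = interpolate(square, triangle, 0.5)
```

Because the distance derives from a Hilbert norm, the distance from `square` to `midpoint` is exactly half the distance from `square` to `triangle`, which reflects the flatness discussed above.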


Now if we look at the metric on that results from the identification of convex curves with their length measure in , we see that, by the isomorphism property of the mapping given by Corollary 3.6, the geodesic is simply the Minkowski combination of the two convex curves associated to and . Note that although the distance will depend on the choice of kernel , the geodesics are independent of . While the Minkowski sum may seem like a natural way to interpolate between two convex sets, it may not be the best choice from the point of view of shape comparison. Figure 7 shows an example of what a geodesic looks like both in the space of convex sets and in the space of measures. It is for instance clear from this example that the measure geodesic does not involve actual transportation of mass, which in this case would be a more natural behavior. This is the shortcoming that the metrics of the following sections will attempt to address.

5.2. Wasserstein metrics

A natural class of distances between probability measures is given by optimal transport and the Wasserstein metrics [45]. Let us consider the usual geodesic distance on defined by . Given two probability measures , the 2-Wasserstein distance between them is defined by:

where is the set of all transport plans between and i.e.

An optimal transport plan can be shown to exist and it is known that the Wasserstein metric makes into a length space with the corresponding geodesic being given by where for all , is a unit constant speed geodesic between and , that is specifically:

Alternatively, this means that for all continuous function :

Unlike in the previous kernel framework, the Wasserstein distance and geodesics cannot be expressed in closed form, but there are many well-established and efficient approaches to numerically estimate them, such as combinatorial methods [18] or the Sinkhorn algorithm [17] in the situation of discrete measures, as well as methods based on the dynamical formulation of optimal transport [6, 37] for densities.


When it comes to the comparison of convex shapes, the use of Wasserstein metrics on the length or area measures was proposed for instance in [47]. Note that since is defined between probability measures, this amounts to restricting to convex curves of length i.e. comparing curves modulo rescaling which is often natural in shape analysis. However, an important downside of the Wasserstein metric for this problem is that the subspace of probability measures in is not totally geodesic for that metric, namely the above path of measures connecting to does not generally stay in when . This means that the intermediate measures cannot be canonically associated to a convex curve (or even to a closed curve as a matter of fact). We illustrate this in Figure 8 that shows the Wasserstein geodesic between the same two discrete measures as in Figure 7 and the curves obtained from the reconstruction procedure of Remark 3.3. This issue could be addressed a posteriori: for example the authors in [47] propose to consider a slightly modified path defined from the optimal transport plan. Specifically, they introduce defined by:

for which it is straightforward to check that for all . A clear remaining downside however is that, despite relying on the optimal transport between and , the paths of measures and corresponding convex curves are not actual geodesics associated to a metric.

5.3. A constrained Wasserstein distance on

In this section we shall instead modify the formulation of the Wasserstein metric so as to directly enforce the constraint defining . To do so, we will need to shift our focus to the so-called dynamical formulation of optimal transport, which was first introduced by Benamou and Brenier in [6], and derive a primal-dual scheme adapted from the work of [37] to tackle the corresponding optimization problem.

5.3.1. Mathematical formulation

Formally, the above Wasserstein metric can be obtained by minimizing over all vector fields and path of measures that satisfy the boundary constraints , and the continuity equation on . To make this formulation more rigorous, one has to introduce the following definitions.

Definition 5.1.

Let be a couple of measures on which we will write . We say that satisfy the continuity equation with boundary conditions and in the distribution sense if:

| (12) |

for all .

We recall the following usual property of the continuity equation:

Property 5.2.

If satisfies the above continuity equation with boundary conditions and , then the time marginal of is the Lebesgue measure on . In other words the measure disintegrates as .

Proof.

Next we define the set and we will denote its convex indicator function: for and otherwise. We also define by:

Note that is a 1-homogeneous function and is the convex conjugate of . Then it has been shown that given two measures and , their Wasserstein distance is equal to:

| (13) |

where is any measure on such that and denotes the Radon-Nikodym derivative (the choice of such does not affect the value in (13) due to the homogeneity of ). Furthermore, the existence of optimal measures and can be proved and the path of measures given by Property 5.2 is a geodesic between and .

We now modify the above expression so as to enforce that the path remains in the subspace .

Definition 5.3.

Let and be two probability measures in . With the same conventions as above, we define the constrained Wasserstein distance on by:

| (14) |

As we show next, defines a distance and we also recover the existence of geodesics.

Theorem 5.4.

For any two probability measures and in such that , there exist achieving the minimum in (14). Moreover, defines a distance on the space .

Proof.

The proof mainly requires adapting similar arguments developed for other variations of the optimal transport problem [11, 15]. For any and , let us define

where

One can easily check that and are proper convex functions and are lower semi-continuous. Furthermore, taking for instance with and , we see that is continuous at since is in the interior of . We can thus apply the Fenchel-Rockafellar duality theorem, which leads to

| (15) |

We now compute for :

and we find

from the definition of the weak continuity equation (12). It follows that the supremum on the right hand side of (15) can be restricted to measures and satisfying the continuity equation. Therefore, by Property 5.2, we obtain that

and similarly

from which we deduce that

| (16) |

Finally the convex conjugate of is computed for instance in [15] based on Theorem 5 of [40] and can be shown to be:

This allows us to conclude that (16) corresponds to and, since we assume that , we obtain by the Fenchel-Rockafellar theorem the existence of a minimizer to (14).

In addition, we have by construction that and therefore implies that . The symmetry of is also immediate from the fact that for any measure path satisfying the conditions in (14), one can consider the time-reversed paths which satisfy , , and while:

Finally, the triangle inequality can be shown in a similar way as for the standard Wasserstein metric i.e. by concatenation. Let and assume that and (otherwise the triangle inequality is trivially satisfied). From the above, we know that there exist and that achieve the minimum in the distances and respectively. Letting and , we define the following concatenated path :

for which one can easily verify that , , and for almost all . It follows that:

∎

In general, one cannot guarantee that the distance is finite between any two measures. Nevertheless, finiteness holds in particular in the following case:

Proposition 5.5.

Let and be two measures in with densities with respect to the Lebesgue measure on with , and assume that there exists such that , for almost all . Then .

Proof.

With the above assumptions, let us consider the linear interpolation path and define where . Then one easily checks that we have in the sense of distributions. Furthermore,

Then, taking the Lebesgue measure on and since for almost all , we find:

and thus is also finite. ∎

Note that as a special case of this proposition, we deduce that the distance is finite between any two continuous strictly positive densities on .
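The argument of Proposition 5.5 can be mimicked numerically. The sketch below is a rough illustration under assumptions that go beyond the text: densities are sampled on a uniform angular grid, the momentum is taken to be a time-independent primitive of the difference of the two densities (so that the continuity equation holds along the linear interpolation), its additive constant is set to zero, and the cost is evaluated as the integral of m²/ρ without any normalization constant that may appear in (14). The function name `linear_path_cost` is hypothetical. The value returned is therefore only an upper bound, up to normalization, on the squared constrained distance.

```python
import math

def linear_path_cost(rho0, rho1, n_time=100):
    """Riemann-sum estimate of int_0^1 int_{S^1} m^2 / rho_t dtheta dt
    along the path rho_t = (1 - t) * rho0 + t * rho1.

    rho0, rho1: positive densities sampled on a uniform grid of [0, 2*pi).
    """
    n = len(rho0)
    h = 2.0 * math.pi / n
    # Momentum: d/dtheta m = rho0 - rho1, so that d/dt rho_t + d/dtheta m = 0.
    # It is periodic because both densities have the same total mass.
    momentum, acc = [], 0.0
    for i in range(n):
        acc += (rho0[i] - rho1[i]) * h
        momentum.append(acc)
    dt = 1.0 / n_time
    cost = 0.0
    for j in range(n_time):
        t = (j + 0.5) * dt  # midpoint rule in time
        for i in range(n):
            rho_t = (1.0 - t) * rho0[i] + t * rho1[i]
            cost += momentum[i] ** 2 / rho_t * h * dt
    return cost

n = 256
thetas = [2.0 * math.pi * i / n for i in range(n)]
uniform = [1.0 / (2.0 * math.pi)] * n
# Positive density with unit mass and vanishing first Fourier mode.
perturbed = [(1.0 + 0.5 * math.cos(2.0 * th)) / (2.0 * math.pi) for th in thetas]
cost = linear_path_cost(uniform, perturbed)
```

Both densities are bounded below by a positive constant, so every term of the sum is finite. The linear interpolation also preserves the closure constraint since that constraint is linear in the measure.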

Now, thanks to the bijection induced by between the set of convex curves of length one modulo translation which we will denote and the set of probability measures of , we can equip with the structure of a geodesic space by setting:

| (17) |

for any . A geodesic between the two curves is then given by where is a geodesic between the measures and for the metric .

Remark 5.6.

The above distance on is in addition equivariant to the action of rotations of the plane in the sense that for any , we have . This follows from the equivariance of the constrained Wasserstein distance with respect to rotations. Indeed, we see that for any probability measures and in and any , we have and . Furthermore, if is a pair of measures satisfying the continuity equation together with the boundary conditions , and the closure condition for almost all then one easily verifies that defined by and still satisfy the continuity equation, the closure condition and that:

Then taking the infimum leads to . This equivariance property allows us to further quotient out rotations and obtain a distance between unit length convex curves modulo rigid motions, given by:

5.3.2. Numerical approach

We now derive a numerical method to estimate the constrained Wasserstein distance between two discretized densities of and thereby the distance between convex curves given by (17). Our proposed approach mainly follows and adapts the first-order proximal methods introduced in [37] for the computation of Wasserstein metrics, specifically the primal-dual algorithm which itself is a special instantiation of [13]. We briefly present the main elements of the algorithm in the following paragraphs.

We shall consider measures on discretized over two types of regular time/angle grids: centered and staggered grids. We define the centered grid with time samples and angular samples as:

Discrete measure pairs sampled on the centered grid will be then represented as an element of the space . The staggered grids consist of time and angular samples shifted to the middle of the samples of . Specifically, we introduce a time and a space staggered grid defined as follows:

We shall then denote an element of which represents a pair of discretized measures of on the staggered grids and we introduce the interpolation operator defined by with

Note that the slight difference between the time and angular components and with the approach of [37] is due to the periodic boundary condition on the samples . We also define a discrete divergence operator as for all . This allows us to write a discrete version of (14) as a convex minimization problem:

| (18) |

where

and if , if .

To solve the convex problem (18), we will use a primal-dual method adapted from the work of [13]. We first recall the definition of the proximal operator of a convex function :

as well as the convex dual of :

The function to minimize in (18) is of the form . The primal-dual algorithm we implement consists of the following iterative updates on the sequences starting from an initialization , and :

| (19) |

where , are positive step sizes and an inertia parameter. It follows from the general result of [13] that the algorithm converges to a solution of (18) if where denotes the operator norm of . We typically have as is here an interpolation operator.
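To make the structure of such primal-dual updates concrete, here is a minimal sketch of the generic iteration of [13] on a toy problem, not on (18) itself. The assumptions are entirely ours: we minimize F(Kx) + G(x) with F(u) = ½‖u − b‖², G the convex indicator of the nonnegative orthant, and a small 2×2 matrix K, with the proximal operator of the convex conjugate obtained through Moreau's identity. All names and numerical values are hypothetical. The step sizes are chosen so that their product times the squared operator norm of K is below 1.

```python
def matvec(K, x):
    """Compute K x for a matrix stored as a list of rows."""
    return [sum(kij * xj for kij, xj in zip(row, x)) for row in K]

def rmatvec(K, y):
    """Compute K^T y."""
    return [sum(K[i][j] * y[i] for i in range(len(K))) for j in range(len(K[0]))]

def prox_F(w, step, b):
    """Proximal operator of step * F with F(u) = 0.5 * ||u - b||^2."""
    return [(wi + step * bi) / (1.0 + step) for wi, bi in zip(w, b)]

def prox_F_conj(v, sigma, b):
    """Moreau's identity: prox_{sigma F*}(v) = v - sigma * prox_{F / sigma}(v / sigma)."""
    p = prox_F([vi / sigma for vi in v], 1.0 / sigma, b)
    return [vi - sigma * pi for vi, pi in zip(v, p)]

def prox_G(x):
    """Projection onto the nonnegative orthant (prox of its convex indicator)."""
    return [max(0.0, xi) for xi in x]

def primal_dual(K, b, tau, sigma, theta=1.0, n_iter=5000):
    """Primal-dual iterations for min_x F(K x) + G(x)."""
    x = [0.0] * len(K[0])
    x_bar = x[:]
    y = [0.0] * len(K)
    for _ in range(n_iter):
        # Dual update: proximal step on the conjugate of F.
        k_x_bar = matvec(K, x_bar)
        y = prox_F_conj([yi + sigma * ki for yi, ki in zip(y, k_x_bar)], sigma, b)
        # Primal update: proximal step on G.
        kt_y = rmatvec(K, y)
        x_new = prox_G([xi - tau * ki for xi, ki in zip(x, kt_y)])
        # Inertial extrapolation with parameter theta.
        x_bar = [xn + theta * (xn - xo) for xn, xo in zip(x_new, x)]
        x = x_new
    return x

K = [[1.0, 1.0], [0.0, 1.0]]   # squared operator norm is about 2.618
b = [1.0, -2.0]
solution = primal_dual(K, b, tau=0.5, sigma=0.5)
```

For this toy problem the constrained minimizer can be found by hand from the optimality conditions and equals (1, 0), which the iterates approach. In the setting of the paper the roles of the two proximal steps are played by the operators specified in the following paragraphs.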

It only remains to specify the exact expressions of the proximal operators appearing in the first two equations of (19). First, by Moreau’s identity, one has that for all :

Now the proximal of is computed in [37] Proposition 1 from which we obtain for all :

where

with being the largest real root of the polynomial equation .
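A pointwise version of this proximal operator can be sketched as follows. The sketch assumes the normalization J(ρ, m) = m²/(2ρ) for ρ > 0 with a scalar momentum m, for which the optimality conditions give the cubic (X − ρ)(X + γ)² − γ m²/2 = 0. If the paper's functional carries a different constant, the polynomial changes accordingly. The function name `prox_J` and the bisection strategy are ours. The cubic is increasing to the right of max(ρ, −γ) and nonpositive at that point, so its largest real root can be bracketed there.

```python
def prox_J(rho, m, gamma):
    """Proximal operator of gamma * J at the point (rho, m),
    assuming J(rho, m) = m^2 / (2 * rho) for rho > 0.

    Returns the pair (rho_star, m_star).
    """
    c = 0.5 * gamma * m * m

    def cubic(x):
        return (x - rho) * (x + gamma) ** 2 - c

    # The largest real root lies in [lo, +inf): bracket it, then bisect.
    lo = max(rho, -gamma)
    hi = lo + 1.0
    while cubic(hi) <= 0.0:
        hi = lo + 2.0 * (hi - lo)
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if cubic(mid) <= 0.0:
            lo = mid
        else:
            hi = mid
    root = 0.5 * (lo + hi)
    if root <= 0.0:
        # Nonpositive root: the proximal point is the origin.
        return 0.0, 0.0
    return root, root * m / (root + gamma)

def prox_objective(r, q, rho, m, gamma):
    """Objective minimized by the proximal point, for r > 0."""
    return 0.5 * ((r - rho) ** 2 + (q - m) ** 2) + gamma * q * q / (2.0 * r)

rho_star, m_star = prox_J(1.0, 2.0, 1.0)
```

In a grid-based implementation this function would be applied independently at every time/angle sample. A closed-form cubic solver could replace the bisection if speed matters.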

To express the second update in (19), we first point out that is given by with:

Moreover since is here the convex indicator function of the constraint set , we have for any and the orthogonal projector on the convex set . In addition to the usual boundary and divergence constraints, we also have to deal with the two extra closure conditions. We introduce the operator defined for any by:

which allows us to write . Thus, we can express the orthogonal projection on as:

where can be interpreted as a modified Laplace operator on the time-angular domain, which in practice we can precompute together with its inverse before iterating (19) or alternatively implement in the Fourier domain.
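The general principle behind this projection is the standard formula for projecting onto an affine set {U : AU = y}, namely U − A*(AA*)⁻¹(AU − y). As a hedged illustration, the sketch below applies it to the two closure conditions alone, for a single discretized density on an angular grid. It deliberately ignores the boundary and divergence constraints, which the full operator of the paper handles jointly, and does not enforce positivity. The function name `project_onto_closure` is hypothetical.

```python
import math

def project_onto_closure(rho, thetas):
    """Orthogonal projection of rho onto the linear subspace
    {r : sum_i r_i cos(theta_i) = 0 and sum_i r_i sin(theta_i) = 0}.
    """
    cosines = [math.cos(t) for t in thetas]
    sines = [math.sin(t) for t in thetas]
    # Residual A rho of the two closure conditions.
    res_c = sum(r * c for r, c in zip(rho, cosines))
    res_s = sum(r * s for r, s in zip(rho, sines))
    # 2x2 Gram matrix A A^T.
    g_cc = sum(c * c for c in cosines)
    g_cs = sum(c * s for c, s in zip(cosines, sines))
    g_ss = sum(s * s for s in sines)
    det = g_cc * g_ss - g_cs * g_cs
    # Solve (A A^T) lam = A rho by Cramer's rule.
    lam_c = (g_ss * res_c - g_cs * res_s) / det
    lam_s = (g_cc * res_s - g_cs * res_c) / det
    # Subtract A^T lam.
    return [r - lam_c * c - lam_s * s for r, c, s in zip(rho, cosines, sines)]

n = 8
thetas = [2.0 * math.pi * k / n for k in range(n)]
rho = [1.0 + 0.3 * k for k in range(n)]
projected = project_onto_closure(rho, thetas)
```

On a uniform grid the correction terms have zero sum, so the total mass is unchanged by this projection. The Gram matrix is singular when all angles lie on a single line through the origin, a case this sketch does not handle.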

5.3.3. Numerical results


We present a few numerical results obtained with the above approach which we implemented in Python. In all experiments, we take angular samples on and time samples in . As for the step parameters of the primal-dual algorithm, we picked , and . We run the algorithm (19) for 1000 iterations although we observe in practice that convergence to a minimum is typically reached after around 250 iterations (cf the energy plot in Figure 10).

First, Figure 9 shows the geodesic between the same polygons as in Figures 7 and 8 for the new constrained Wasserstein metric. Observe that, unlike with kernel metrics, the geodesic involves mass transportation rather than pure interpolation between the measures and that, unlike with the standard Wasserstein distance, the closure constraint is satisfied along the path and the corresponding shapes all stay within the set of closed convex curves. We further illustrate those effects with another example of a geodesic between two density measures and the corresponding geodesic between the convex curves in Figure 10.


6. Some open problems and future directions

As a conclusion to this paper, we discuss a few possible topics and tracks for future investigation that arise as natural follow-ups to this work and the results we presented.

Unbalanced optimal transport for convex shape analysis

In Section 5.3, we introduced a distance based on a constrained version of the Wasserstein metric between length measures which turns the set of convex closed curves of length one into a length space. There are still several questions which are left partly unaddressed when it comes to this distance and its properties. In particular, it remains to be studied whether is finite between any pair of probability measures of or at least if the result of Proposition 5.5 could be extended to other families of measures. Besides, is only defined for probability measures which implies that the resulting distance in (17) requires the convex curves to be normalized to have unit length. This makes such a distance ill-suited to capturing and quantifying e.g. global or local changes in scale.

We believe that both of the previous issues could be addressed by considering an unbalanced extension of the metric to all measures of , along similar lines as the unbalanced frameworks for standard optimal transport proposed in works such as [38, 36, 15]. In the context of this paper, this could be done by adding a source term to the continuity equation, namely consider with being a signed measure of that models an additional transformation of the measure in conjunction with mass transportation. Then, as in [36, 15], one could penalize this extra source term based on the Fisher-Rao metric of , which would lead to defining the following distance between any :

where is such that and is given by:

with a weighting parameter between the two components of the cost. In this case, it becomes easy to show that is finite for any measures by simply considering the special path , and for all . Although we leave it for future work, we expect the existence of geodesics and distance properties to hold for by extending the proof of Theorem 5.4. Moreover, the precise analysis of its topological properties and how the resulting metric between convex curves compares to other geometric distances remain to be examined.

Generalization to higher dimension

Although this work focused on the case of planar curves, the concept of length measure extends to closed hypersurfaces in any dimension, where it is known as the area measure, as mentioned in the introduction. Area measures, particularly of surfaces in , are a central concept in the Brunn-Minkowski theory of mixed volumes [42] but also appear in some applications such as object recognition [29] or surface reconstruction from computerized tomography [39]. The area measure of a -dimensional closed oriented submanifold (more generally rectifiable subset) of can be defined, similarly to Definition 2.1, as the positive Radon measure on given by for every Borel subset where denotes the unit normal vector to at and is the volume measure i.e. the Hausdorff measure of dimension . Importantly, the Minkowski-Fenchel-Jessen theorem still holds for general area measures, namely the area measure again characterizes a convex set up to translation. However, there are two significant differences when compared to the situation of planar curves. First, the Minkowski sum of two convex sets does not generally correspond to the sum of their area measures. Second, reconstructing the convex shape from its area measure, even for discrete measures and polyhedra, is no longer straightforward: it is in fact an active research topic and several different approaches and algorithms have been proposed, see e.g. [47, 33, 25].

In connection to the results presented in this paper, we expect for instance that an isoperimetric characterization of convex sets similar to Theorem 4.4 should hold for the positive volume enclosed by -dimensional non self-intersecting rectifiable sets that share the same area measure under the adequate regularity assumptions. Such a property has been shown in particular for polyhedra in [8]. As for the construction of metrics and geodesics between convex shapes, the mathematical construction of the constrained Wasserstein metric presented in Section 5.3.1 can be a priori adapted to area measures in any dimension, by replacing the closure constraint with for almost all . However, the numerical implementation of such metrics along the lines of the approach of Section 5.3.2 would induce additional difficulties. Indeed, the computation of the operators on grids over higher-dimensional spheres becomes more involved and numerically intensive. Moreover, recovering the convex curves associated to a geodesic in the space of area measures would further require, as explained above, applying some reconstruction algorithm a posteriori. Finally, an important issue for future investigation is to derive an efficient implementation of the variation of the distance with respect to the discrete distributions, which could be then used for instance to estimate Karcher means in the space of convex sets.

Acknowledgements

The authors would like to thank F.-X. Vialard for useful insights related to the optimal transport model presented in this paper. This work was supported by the National Science Foundation (NSF) under grant 1945224.

References

- [1] H. Abdallah and Q. Mérigot, On the reconstruction of convex sets from random normal measurements, Proceedings of the thirtieth annual symposium on Computational geometry, 2014, pp. 300–307.

- [2] A. Alexandrov, Zur Theorie der gemischten Volumina von konvexen Körpern. I. Verallgemeinerung einiger Begriffe der Theorie von konvexen Körpern, Matematicheskii Sbornik 44 (1937), no. 5, 947–972.

- [3] W.K. Allard, On the first variation of a varifold, Annals of mathematics (1972), 417–491.

- [4] L. Ambrosio, N. Fusco, and D. Pallara, Functions of bounded variation and free discontinuity problems, Oxford: Clarendon Press, 2000.

- [5] N. Aronszajn, Theory of reproducing kernels, Trans. Amer. Math. Soc. 68 (1950), 337–404.

- [6] J.-D. Benamou and Y. Brenier, A computational fluid mechanics solution to the Monge-Kantorovich mass transfer problem, Numerische Mathematik 84 (2000), no. 3, 375–393.

- [7] W. Blaschke, Kreis und Kugel, Verlag von Veit & Comp., 1916.

- [8] K. Böröczky, I. Bárány, E. Makai, and J. Pach, Maximal volume enclosed by plates and proof of the chessboard conjecture, Discrete Mathematics 60 (1986), 101–120.

- [9] H. Brezis, Functional analysis, Sobolev spaces and partial differential equations, Universitext, Springer New York, 2010.

- [10] B. Buet, G.P. Leonardi, and S. Masnou, Discretization and approximation of surfaces using varifolds, Geometric Flows 3 (2018), no. 1, 28–56.

- [11] P. Cardaliaguet, G. Carlier, and B. Nazaret, Geodesics for a class of distances in the space of probability measures, Calculus of Variations and Partial Differential Equations 48 (2013), no. 3-4, 395–420.

- [12] G. Carlier, On a theorem of Alexandrov, Journal of nonlinear and convex analysis 5 (2004), no. 1, 49–58.

- [13] A. Chambolle and T. Pock, A first-order primal-dual algorithm for convex problems with applications to imaging, Journal of mathematical imaging and vision 40 (2011), no. 1, 120–145.

- [14] N. Charon and A. Trouvé, The varifold representation of nonoriented shapes for diffeomorphic registration, SIAM Journal on Imaging Sciences 6 (2013), no. 4, 2547–2580.

- [15] L. Chizat, G. Peyré, B. Schmitzer, and F.-X. Vialard, An Interpolating Distance Between Optimal Transport and Fisher-Rao Metrics, Foundations of Computational Mathematics 18 (2018), no. 1, 1–44.

- [16] D. Cohen-Steiner and J.-M. Morvan, Restricted Delaunay triangulation and normal cycle, Comput. Geom (2003), 312–321.

- [17] M. Cuturi, Sinkhorn Distances: Lightspeed Computation of Optimal Transportation Distances, Advances in Neural Information Processing Systems 26 (2013).

- [18] J. Delon, J. Salomon, and A. Sobolevski, Fast transport optimization for Monge costs on the circle, SIAM Journal on Applied Mathematics 70 (2010), no. 7, 2239–2258.

- [19] S. Durrleman, X. Pennec, A. Trouvé, and N. Ayache, Statistical models of sets of curves and surfaces based on currents, Medical image analysis 13 (2009), no. 5, 793–808.

- [20] L. Evans and R. Gariepy, Measure theory and fine properties of functions, CRC press, 2015.

- [21] I. Fáry and E. Makai, Isoperimetry in variable metric, Studia Scientiarum Mathematicarum Hungarica 17 (1982), 143–158.

- [22] H. Federer, Geometric measure theory, Springer, 1969.

- [23] W. Fenchel and B. Jessen, Mengenfunktionen und konvexe Körper, Matematisk-fysiske meddelelser, Levin & Munksgaard, 1938.

- [24] R. Gardner, The Brunn-Minkowski inequality, Bulletin of the American Mathematical Society 39 (2002), no. 3, 355–405.

- [25] R. Gardner, M. Kiderlen, and P. Milanfar, Convergence of Algorithms for Reconstructing Convex Bodies and Directional Measures, The Annals of Statistics 34 (2006), no. 3, 1331–1374.

- [26] J. Glaunès, A. Trouvé, and L. Younes, Diffeomorphic matching of distributions: A new approach for unlabelled point-sets and sub-manifolds matching, Computer Vision and Pattern Recognition (CVPR) 2 (2004), 712–718.

- [27] J. Glaunès and M. Vaillant, Surface matching via currents, Proceedings of Information Processing in Medical Imaging (IPMI), Lecture Notes in Computer Science 3565 (2006), 381–392.

- [28] A. Gretton, K. Borgwardt, M. Rasch, B. Schölkopf, and A.J. Smola, A kernel method for the two-sample-problem, Advances in neural information processing systems, 2007, pp. 513–520.

- [29] M. Hebert, K. Ikeuchi, and H. Delingette, A spherical representation for recognition of free-form surfaces, IEEE transactions on pattern analysis and machine intelligence 17 (1995), no. 7, 681–690.

- [30] H. Heijmans and A. Tuzikov, Similarity and symmetry measures for convex shapes using Minkowski addition, IEEE Transactions on Pattern Analysis and Machine Intelligence 20 (1998), no. 9, 980–993.

- [31] Y. Hu, M. Hudelson, B. Krishnamoorthy, A. Tumurbaatar, and K.R. Vixie, Median Shapes, Journal of Computational Geometry 10 (2019), 322–388.

- [32] I. Kaltenmark, B. Charlier, and N. Charon, A general framework for curve and surface comparison and registration with oriented varifolds, Computer Vision and Pattern Recognition (CVPR) (2017).

- [33] T. Lachand-Robert and E. Oudet, Minimizing within convex bodies using a convex hull method, SIAM Journal on Optimization 16 (2005), no. 2, 368–379.

- [34] G. Letac, Mesures sur le cercle et convexes du plan, Annales scientifiques de l’Université de Clermont-Ferrand 2. Série Probabilités et applications 76 (1983), no. 1, 35–65.

- [35] Y. Li, K. Swersky, and R. Zemel, Generative moment matching networks, International Conference on Machine Learning, 2015, pp. 1718–1727.

- [36] M. Liero, A. Mielke, and G. Savaré, Optimal transport in competition with reaction: The Hellinger–Kantorovich distance and geodesic curves, SIAM Journal on Mathematical Analysis 48 (2016), no. 4, 2869–2911.

- [37] N. Papadakis, G. Peyré, and E. Oudet, Optimal transport with proximal splitting, SIAM Journal on Imaging Sciences 7 (2014), no. 1, 212–238.

- [38] B. Piccoli and F. Rossi, Generalized Wasserstein Distance and its Application to Transport Equations with Source, Archive for Rational Mechanics and Analysis 211 (2014), no. 1, 335–358.

- [39] J.L. Prince and A. S. Willsky, Reconstructing convex sets from support line measurements, IEEE Transactions on Pattern Analysis and Machine Intelligence 12 (1990), no. 4, 377–389.

- [40] R. Rockafellar, Integrals which are convex functionals. II, Pacific Journal of Mathematics 39 (1971), no. 2, 439–469.

- [41] P. Roussillon and J. Glaunès, Kernel Metrics on Normal Cycles and Application to Curve Matching, SIAM Journal on Imaging Sciences 9 (2016), no. 4, 1991–2038.

- [42] R. Schneider, Convex Bodies: The Brunn-Minkowski Theory, 2 ed., Encyclopedia of Mathematics and its Applications, Cambridge University Press, 2013.

- [43] B. Sriperumbudur, K. Fukumizu, and G. Lanckriet, Universality, characteristic kernels and RKHS embedding of measures, Journal of Machine Learning Research 12 (2011), 2389–2410.

- [44] M. Van Kreveld, O. Schwarzkopf, M. de Berg, and M. Overmars, Computational geometry: algorithms and applications, Springer, 2000.

- [45] C. Villani, Optimal transport: old and new, vol. 338, Springer Science & Business Media, 2008.

- [46] L. Younes, Shapes and diffeomorphisms, Springer, 2019.

- [47] H. Zouaki, Representation and geometric computation using the extended Gaussian image, Pattern recognition letters 24 (2003), no. 9-10, 1489–1501.