On Learning Domain-Invariant Representations for Transfer Learning with Multiple Sources

Abstract

Domain adaptation (DA) benefits from rigorous theoretical work that studies its characteristics and various aspects, e.g., learning domain-invariant representations and the associated trade-off. However, this is not the case for the multiple-source DA and domain generalization (DG) settings, which are considerably more complicated due to the involvement of multiple source domains and the potential unavailability of the target domain during training. In this paper, we develop novel upper bounds for the target general loss, which motivate us to define two kinds of domain-invariant representations. We further study the pros and cons as well as the trade-offs of enforcing the learning of each domain-invariant representation. Finally, we conduct experiments to inspect the trade-off of these representations, offering practical hints on how to use them and exploring other interesting properties of our developed theory.

1 Introduction

Although annotated data has been shown to be valuable to deep learning (DL), in many real-world applications, annotating a sufficient amount of data for training qualified DL models is prohibitively labour-intensive, time-consuming, and error-prone. Transfer learning is a vital solution for the lack of labeled data. Additionally, in many situations, we are only able to collect a limited amount of annotated data from multiple source domains. Therefore, it is desirable to train a qualified DL model primarily based on multiple source domains and possibly together with a target domain. Depending on the availability of the target domain during training, we encounter either the multiple source domain adaptation (MSDA) or the domain generalization (DG) problem.

Domain adaptation (DA) is a specific case of MSDA in which we need to transfer from a single source domain to another target domain available during training. For the DA setting, the pioneering work [3] and the works that followed [45, 55, 56, 22, 19, 57, 23] study extensively its characterizations and aspects, notably what domain-invariant (DI) representations are, how to learn this kind of representation, and the trade-off of enforcing learning DI representations. Those well-grounded studies lay the foundation for developing impressive practical DA methods [14, 50, 46, 55, 7, 54, 38].

Due to the presence of multiple source domains and the possible unavailability of the target domain, establishing a theoretical foundation and characterizing DI representations for the MSDA and especially DG settings are significantly more challenging. A number of state-of-the-art works in MSDA and DG [59, 41, 20, 11, 43, 28, 29, 35, 15, 58, 52, 37, 39] have implicitly exploited DI representations in some sense to achieve impressive performance, e.g., [29, 11] minimize representation divergence during training, [43] decomposes representations to obtain invariant features, and [58] maximizes domain prediction entropy to learn invariance. Despite these successes, the notion of DI representations in MSDA and DG is not fully understood, and rigorous studies that theoretically characterize DI representations for these settings are still needed. In this paper, we provide theoretical answers to the following questions: (1) what are DI representations in MSDA and DG, and (2) what one should expect when learning them. Overall, our contributions in this work can be summarized as:

- In Section 2.2, we first develop two upper bounds for the general target loss in the MSDA and DG settings, whose proofs can be found in Appendix A. Based on these bounds, we characterize and define two kinds of DI representations: general DI representations and compressed DI representations.

- We further develop theory to inspect the pros and cons of the two kinds of DI representations in Section 2.3, aiming to shed light on how to use those representations in practice. In particular, the two types of DI representations optimize different types of divergence on the feature space, hence serving different purposes. Appendix B contains proofs regarding these characteristics.

- Finally, we study in Section 2.4 the trade-off of the two kinds of DI representations, which theoretically answers the question of whether and how the target performance is hurt when enforcing learning each kind of representation. We refer the reader to Appendix C for the proof.

- We conduct experiments to investigate the trade-off of the two kinds of representations, giving practical hints on how to use them and exploring other interesting properties of our developed theory.

It is worth noting that although MSDA has been investigated in some works [19, 57], ours is the first work that rigorously formulates and provides theoretical analysis for representation learning in MSDA and DG. Specifically, the bounds developed in Theorems 1 and 3, which interweave the input and latent spaces, are novel and benefit theoretical analyses in deep learning. Our theory is developed in a general setting of multi-class classification with a sufficiently general loss, which can be viewed as a non-trivial generalization of existing works in DA [31, 3, 56, 32, 57], each of which considers binary classification with specific loss functions. Moreover, the bounds developed in Theorem 8 are the first theoretical analysis of the trade-off of learning DI representations in MSDA and DG. In particular, by considering the MSDA setting with a single source domain and a target domain, we recover the same trade-off discovered in [56], but again our setting is more general than the binary classification setting in that work.

2 Our Main Theory

2.1 Notations

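Let $\mathcal{X}$ be a data space on which we endow a data distribution $\mathbb{P}$ with a corresponding density function $p(x)$. We consider the multi-class classification problem with the label set $\mathcal{Y} = [C]$, where $C$ is the number of classes and $[C] := \{1, \dots, C\}$. Denote by $\mathcal{Y}_\Delta := \{\alpha \in \mathbb{R}^{C} : \|\alpha\|_1 = 1, \alpha \geq 0\}$ the simplex label space, and let $f: \mathcal{X} \to \mathcal{Y}_\Delta$ be a probabilistic labeling function returning a $C$-tuple $f(x) = [f(x, i)]_{i=1}^{C}$, whose element $f(x, i) = p(y = i \mid x)$ is the probability of assigning a data sample $x \sim \mathbb{P}$ to the class $i$ (i.e., $i \in \{1, \dots, C\}$). Moreover, a domain is denoted compactly as a pair of data distribution and labeling function, $\mathbb{D} := (\mathbb{P}, f)$. We note that given a data sample $x \sim \mathbb{P}$, its categorical label $y \in \mathcal{Y}$ is sampled as $y \sim \mathrm{Cat}(f(x))$, which is a categorical distribution over $\mathcal{Y}$.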

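Let $l: \mathcal{Y}_\Delta \times \mathcal{Y} \to \mathbb{R}$ be a loss function, where $l(\hat{f}(x), y)$ with $\hat{f}(x) \in \mathcal{Y}_\Delta$ and $y \in \mathcal{Y}$ specifies the loss (e.g., cross-entropy, hinge, L1, or L2 loss) of assigning a data sample $x$ to the class $y$ by the hypothesis $\hat{f}$. Moreover, given a prediction probability $\hat{f}(x)$ w.r.t. the ground-truth prediction $f(x)$, we define the loss $\ell(\hat{f}(x), f(x)) := \mathbb{E}_{y \sim f(x)}[l(\hat{f}(x), y)] = \sum_{y=1}^{C} l(\hat{f}(x), y) f(x, y)$. This means $\ell(\cdot, \cdot)$ is linear, and hence convex, w.r.t. the second argument. We further define the general loss caused by using a classifier $\hat{f}$ to predict $\mathbb{D} \equiv (\mathbb{P}, f)$ as

$$\mathcal{L}(\hat{f}, f, \mathbb{P}) = \mathcal{L}(\hat{f}, \mathbb{D}) := \mathbb{E}_{x \sim \mathbb{P}}\left[\ell(\hat{f}(x), f(x))\right].$$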

We inspect the multiple source setting in which we are given multiple source domains $\{\mathbb{D}^{S,i}\}_{i=1}^{K}$ over the common data space $\mathcal{X}$, each of which consists of a data distribution and its own labeling function, $\mathbb{D}^{S,i} := (\mathbb{P}^{S,i}, f^{S,i})$. Based on these source domains, we need to work out a learner or classifier that is evaluated on a target domain $\mathbb{D}^{T} := (\mathbb{P}^{T}, f^{T})$. Depending on whether the target domain is known during training, we face either the multiple source DA (MSDA) [32, 30, 44, 19] or the domain generalization (DG) [25, 29, 28, 35] setting.

One typical approach in MSDA and DG is to combine the source domains together [14, 29, 28, 35, 1, 11, 48] to learn DI representations in the hope of generalizing well to a target domain. When combining the source domains, we obtain a mixture of multiple source distributions denoted as $\mathbb{D}^{\pi} = \sum_{i=1}^{K} \pi_i \mathbb{D}^{S,i}$, where the mixing coefficients $\pi = [\pi_i]_{i=1}^{K}$ can be conveniently set to $\pi_i = N_i / \sum_{j=1}^{K} N_j$ with $N_i$ being the training size of the $i$-th source domain.

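In terms of representation learning, an input $x$ is mapped into a latent space $\mathcal{Z}$ by a feature map $g: \mathcal{X} \to \mathcal{Z}$ and then a classifier $\hat{h}: \mathcal{Z} \to \mathcal{Y}_\Delta$ is trained based on the representations $g(x)$. Let $f: \mathcal{X} \to \mathcal{Y}_\Delta$ be the original labeling function. To facilitate the theory developed for the latent space, we introduce the representation distribution $\mathbb{P}_g$, which is the pushed-forward distribution $\mathbb{P}_g := g_{\#}\mathbb{P}$, and the labeling function $h: \mathcal{Z} \to \mathcal{Y}_\Delta$ induced by $g$ as $h(z) = \frac{\int_{g^{-1}(z)} f(x) p(x) dx}{\int_{g^{-1}(z)} p(x) dx}$ [22]. Going back to our multiple source setting, the source mixture becomes $\mathbb{D}_g^{\pi} = \sum_{i=1}^{K} \pi_i \mathbb{D}_g^{S,i}$, where each source domain is $\mathbb{D}_g^{S,i} = (\mathbb{P}_g^{S,i}, h^{S,i})$, and the target domain is $\mathbb{D}_g^{T} = (\mathbb{P}_g^{T}, h^{T})$.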

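Finally, in our theory development, we use the Hellinger divergence between two distributions, defined as $D_{1/2}(\mathbb{P}^{1}, \mathbb{P}^{2}) = 2 \int \left(\sqrt{p^{1}(x)} - \sqrt{p^{2}(x)}\right)^{2} dx$, whose square root $d_{1/2} = \sqrt{D_{1/2}}$ is a proper metric.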
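As a small numerical illustration of the Hellinger distance used throughout, the following Python sketch evaluates it for discrete distributions given as probability lists. The normalisation (a factor of 2 in front of the sum, so that the distance ranges over [0, 2]), the function names, and the toy distributions are assumptions made for this example; other conventions differ only by a constant factor.

```python
import math


def hellinger_divergence(p, q):
    """Return 2 * sum_x (sqrt(p[x]) - sqrt(q[x]))**2 for two discrete distributions."""
    if len(p) != len(q):
        raise ValueError("p and q must be defined over the same support")
    return 2.0 * sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(p, q))


def hellinger_distance(p, q):
    """Square root of the divergence above; this is the quantity that acts as a metric."""
    return math.sqrt(hellinger_divergence(p, q))


# Hypothetical representation distributions over three latent bins.
p_target = [0.1, 0.2, 0.7]
p_source_a = [0.6, 0.3, 0.1]
p_source_b = [0.2, 0.3, 0.5]

d_ta = hellinger_distance(p_target, p_source_a)
d_tb = hellinger_distance(p_target, p_source_b)
d_ab = hellinger_distance(p_source_a, p_source_b)
```

In this toy setup the second source is closer to the target than the first, and the three distances satisfy the triangle inequality, which is the property the later bounds rely on.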

2.2 Two Types of Domain-Invariant Representations

Motivated by the theoretical bounds developed in [3], DI representation learning, in which a feature extractor maps the source and target data distributions to a common distribution on the latent space, is well-grounded for the DA setting. However, this task becomes significantly more challenging for the MSDA and DG settings due to the multiple source domains and the potentially unknown target domain. Similar to the case of DA, it is desirable to develop theoretical bounds that directly motivate definitions of DI representations for the MSDA and DG settings, as is done in the next theorem.

Theorem 1.

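Consider a mixture of source domains $\mathbb{D}^{\pi} = \sum_{i=1}^{K} \pi_i \mathbb{D}^{S,i}$ and the target domain $\mathbb{D}^{T}$. Let $\ell$ be any loss function upper-bounded by a positive constant $L$. For any hypothesis $\hat{f}: \mathcal{X} \to \mathcal{Y}_\Delta$ where $\hat{f} = \hat{h} \circ g$ with $g: \mathcal{X} \to \mathcal{Z}$ and $\hat{h}: \mathcal{Z} \to \mathcal{Y}_\Delta$, the target loss on input space is upper bounded as

$$\mathcal{L}(\hat{f}, \mathbb{D}^{T}) \leq \sum_{i=1}^{K} \pi_i \mathcal{L}(\hat{f}, \mathbb{D}^{S,i}) + L \max_{i \in [K]} \mathbb{E}_{\mathbb{P}^{S,i}}\left[\|\Delta p^{i}(y \mid x)\|_1\right] + L \sqrt{2}\, d_{1/2}(\mathbb{P}_g^{T}, \mathbb{P}_g^{\pi}), \tag{1}$$

where $\Delta p^{i}(y \mid x) := \left[\,|f^{T}(x, y) - f^{S,i}(x, y)|\,\right]_{y=1}^{C}$ is the absolute single-point label shift on input space between the source domain $\mathbb{D}^{S,i}$ and the target domain $\mathbb{D}^{T}$, and $[K] := \{1, \dots, K\}$.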

The bound in Equation 1 implies that the target loss in the input or latent space depends on three terms: (i) the representation discrepancy $d_{1/2}(\mathbb{P}_g^{T}, \mathbb{P}_g^{\pi})$, (ii) the label shift $\max_{i \in [K]} \mathbb{E}_{\mathbb{P}^{S,i}}[\|\Delta p^{i}(y \mid x)\|_1]$, and (iii) the general source loss $\sum_{i=1}^{K} \pi_i \mathcal{L}(\hat{f}, \mathbb{D}^{S,i})$. To minimize the target loss on the left-hand side, we need to minimize the three aforementioned terms. First, the label shift term is an intrinsic characteristic of the domains, hence almost impossible to tackle. Second, the representation discrepancy term can be explicitly tackled in the MSDA setting, while it is almost impossible to tackle in the DG setting. Finally, the general source loss term is convenient to tackle, and its minimization results in a feature extractor $g$ and a classifier $\hat{h}$.
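The three-term structure can be checked numerically on a toy problem. The sketch below is only an illustration under stated assumptions: it takes the bound to be the weighted source loss plus L times the largest expected L1 label shift plus L times sqrt(2) times the Hellinger distance (with the divergence normalised by a factor of 2), it uses the identity feature map so that input and latent spaces coincide, and it uses the bounded loss l(p, y) = 1 - p[y] with L = 1. All domain values and function names are hypothetical.

```python
import math


def hellinger_distance(p, q):
    # Assumed normalisation: D = 2 * sum (sqrt(p) - sqrt(q))^2, distance = sqrt(D).
    return math.sqrt(2.0 * sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(p, q)))


def general_loss(pred, label_fn, px):
    # E_{x ~ P}[ sum_y l(pred(x), y) * f(x, y) ] with l(p, y) = 1 - p[y], bounded by L = 1.
    return sum(
        px[x] * sum((1.0 - pred[x][y]) * label_fn[x][y] for y in range(len(pred[x])))
        for x in range(len(px))
    )


def bound_rhs(pred, sources, target, pi, L=1.0):
    # sources: list of (px, label_fn) pairs; target: a single (px, label_fn) pair.
    p_t, f_t = target
    source_loss = sum(w * general_loss(pred, f, p) for w, (p, f) in zip(pi, sources))
    label_shift = max(
        sum(p[x] * sum(abs(f_t[x][y] - f[x][y]) for y in range(len(f[x])))
            for x in range(len(p)))
        for p, f in sources
    )
    p_mix = [sum(w * p[x] for w, (p, _) in zip(pi, sources)) for x in range(len(p_t))]
    discrepancy = hellinger_distance(p_t, p_mix)
    return source_loss + L * label_shift + L * math.sqrt(2.0) * discrepancy


# Hypothetical domains over three input points and two classes.
sources = [
    ([0.6, 0.3, 0.1], [[0.9, 0.1], [0.5, 0.5], [0.2, 0.8]]),
    ([0.2, 0.3, 0.5], [[0.8, 0.2], [0.4, 0.6], [0.1, 0.9]]),
]
target = ([0.1, 0.2, 0.7], [[0.7, 0.3], [0.5, 0.5], [0.3, 0.7]])
pi = [0.5, 0.5]
pred = [[0.8, 0.2], [0.5, 0.5], [0.2, 0.8]]  # classifier output at each input point

lhs = general_loss(pred, target[1], target[0])
rhs = bound_rhs(pred, sources, target, pi)
```

In this example the right-hand side is dominated by the discrepancy and label-shift terms, which mirrors the discussion above: the source loss is the only term the learner fully controls.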

Contrary to previous works in DA and MSDA [3, 32, 57, 7] that consider both the losses and the data discrepancy on the data space, our bound connects losses on the data space to the discrepancy on the representation space. Therefore, our theory provides a natural way to analyse representation learning, especially feature alignment in deep learning practice. Note that although DANN [14] explains its feature alignment method using the theory developed by Ben-David et al. [3], the argument is not rigorous. In particular, while applying that theory to the representation space yields a representation discrepancy term, the loss terms are then also defined on the feature space, and hence minimizing these losses is not the learning goal. Finally, our setting is much more general: it extends to the multi-class, stochastic labeling setting and to any bounded loss function.

From the upper bound, we turn to the first type of DI representations for the MSDA and DG settings. Here, the objective of $g$ is to map samples onto the representation space in a way that the common classifier $\hat{h}$ can effectively and correctly classify them, regardless of which domain the data comes from.

Definition 2.

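Consider a class $\mathcal{G}$ of feature maps and a class $\mathcal{H}$ of hypotheses. Let $\{\mathbb{D}^{S,i}\}_{i=1}^{K}$ be a set of source domains.

i) (DG with unknown target data) A feature map $g^{*} \in \mathcal{G}$ is said to be a DG general domain-invariant (DI) feature map if $g^{*}$ is the solution of the optimization problem (OP): $\min_{g \in \mathcal{G}} \min_{\hat{h} \in \mathcal{H}} \sum_{i=1}^{K} \pi_i \mathcal{L}(\hat{h}, h^{S,i}, \mathbb{P}_g^{S,i})$. Moreover, the latent representations $z = g^{*}(x)$ induced by $g^{*}$ are called general DI representations for the DG setting.

ii) (MSDA with known target data) A feature map $g^{*} \in \mathcal{G}$ is said to be an MSDA general DI feature map if $g^{*}$ is the solution of the optimization problem (OP): $\min_{g \in \mathcal{G}} \min_{\hat{h} \in \mathcal{H}} \sum_{i=1}^{K} \pi_i \mathcal{L}(\hat{h}, h^{S,i}, \mathbb{P}_g^{S,i})$ which satisfies $\mathbb{P}_{g^{*}}^{T} = \mathbb{P}_{g^{*}}^{\pi}$ (i.e., $d_{1/2}(\mathbb{P}_{g^{*}}^{T}, \mathbb{P}_{g^{*}}^{\pi}) = 0$). Moreover, the latent representations $z = g^{*}(x)$ induced by $g^{*}$ are called general DI representations for the MSDA setting.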

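The definition of general DI representations for the DG setting in Definition 2 is transparent in light of Theorem 1: because $\mathbb{P}^{T}$ is unknown, we aim to find $g$ and $\hat{h}$ that minimize the general source loss $\sum_{i=1}^{K} \pi_i \mathcal{L}(\hat{h}, h^{S,i}, \mathbb{P}_g^{S,i})$. Meanwhile, regarding the general DI representations for the MSDA setting, we aim to find $g$ satisfying $d_{1/2}(\mathbb{P}_g^{T}, \mathbb{P}_g^{\pi}) = 0$ and $\hat{h}$ that together minimize the same general source loss. Practically, to find general representations for MSDA, we solve

$$\min_{g \in \mathcal{G}} \min_{\hat{h} \in \mathcal{H}} \left\{ \sum_{i=1}^{K} \pi_i \mathcal{L}(\hat{h}, h^{S,i}, \mathbb{P}_g^{S,i}) + \lambda D(\mathbb{P}_g^{T}, \mathbb{P}_g^{\pi}) \right\},$$

where $\lambda > 0$ is a trade-off parameter and $D$ can be any divergence (e.g., Jensen-Shannon (JS) divergence [16], $f$-divergence [40], MMD distance [28], or WS distance [44]).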
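To make the shape of this penalised objective concrete, here is a minimal Python sketch that evaluates it for fixed features, choosing MMD as the divergence. Everything specific is an assumption for illustration: the RBF kernel and its bandwidth `gamma`, the biased MMD estimator, the names `mmd2` and `msda_objective`, and the practice of pooling source samples to stand in for the source mixture (which matches the mixture only when sample counts are proportional to the mixing coefficients).

```python
import math


def rbf(a, b, gamma=1.0):
    """RBF kernel between two feature vectors given as tuples."""
    return math.exp(-gamma * sum((u - v) ** 2 for u, v in zip(a, b)))


def mmd2(xs, ys, gamma=1.0):
    """Biased estimate of the squared MMD between two samples of features."""
    k_xx = sum(rbf(a, b, gamma) for a in xs for b in xs) / (len(xs) * len(xs))
    k_yy = sum(rbf(a, b, gamma) for a in ys for b in ys) / (len(ys) * len(ys))
    k_xy = sum(rbf(a, b, gamma) for a in xs for b in ys) / (len(xs) * len(ys))
    return k_xx + k_yy - 2.0 * k_xy


def msda_objective(source_losses, pi, source_feats, target_feats, lam=1.0):
    """Weighted source loss plus lam times a source-mixture/target divergence."""
    weighted_loss = sum(w * l for w, l in zip(pi, source_losses))
    # Pooling approximates the mixture when sample counts follow pi (assumption).
    pooled = [z for feats in source_feats for z in feats]
    return weighted_loss + lam * mmd2(target_feats, pooled, gamma=1.0)


# Hypothetical one-dimensional latent features for two source domains.
source_feats = [[(0.0,), (0.2,)], [(0.1,), (0.3,)]]
pooled_sources = [z for feats in source_feats for z in feats]
target_far = [(2.0,), (2.2,), (2.1,), (2.3,)]

obj_aligned = msda_objective([0.3, 0.5], [0.5, 0.5], source_feats, pooled_sources, lam=1.0)
obj_far = msda_objective([0.3, 0.5], [0.5, 0.5], source_feats, target_far, lam=1.0)
```

When the target features coincide with the pooled source features, the penalty vanishes and the objective reduces to the weighted source loss; a shifted target raises the objective, which is what pushes the feature map toward alignment.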

In addition, the general DI representations have been exploited in some works [60, 27] from the practical perspective and previously discussed in [2] from the theoretical perspective. Despite the similar definition to our work, general DI representation in [2] is not motivated from minimization of a target loss bound.

As pointed out in the next section, learning general DI representations increases the span of latent representations on the latent space, which might help to reduce $d_{1/2}(\mathbb{P}_g^{T}, \mathbb{P}_g^{\pi})$ for a general target domain. On the other hand, many other works [28, 29, 15, 52, 11] have also explored the possibility of enhancing generalization by finding a common representation among the source distributions. The following theorem motivates the latter, which is the second kind of DI representations.

Theorem 3.

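Consider a mixture of source domains $\mathbb{D}^{\pi} = \sum_{i=1}^{K} \pi_i \mathbb{D}^{S,i}$ and the target domain $\mathbb{D}^{T}$. Let $\ell$ be any loss function upper-bounded by a positive constant $L$. For any hypothesis $\hat{f}: \mathcal{X} \to \mathcal{Y}_\Delta$ where $\hat{f} = \hat{h} \circ g$ with $g: \mathcal{X} \to \mathcal{Z}$ and $\hat{h}: \mathcal{Z} \to \mathcal{Y}_\Delta$, the target loss on input space is upper bounded as

$$\mathcal{L}(\hat{f}, \mathbb{D}^{T}) \leq \sum_{i=1}^{K} \pi_i \mathcal{L}(\hat{f}, \mathbb{D}^{S,i}) + L \max_{i \in [K]} \mathbb{E}_{\mathbb{P}^{S,i}}\left[\|\Delta p^{i}(y \mid x)\|_1\right] + \sum_{i=1}^{K} \sum_{j=1}^{K} \frac{L \sqrt{2 \pi_j}}{K} d_{1/2}(\mathbb{P}_g^{S,i}, \mathbb{P}_g^{S,j}) + \sum_{i=1}^{K} \frac{L \sqrt{2}}{K} d_{1/2}(\mathbb{P}_g^{T}, \mathbb{P}_g^{S,i}). \tag{2}$$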

Evidently, Theorem 3 suggests another kind of representation learning, where the source representation distributions are aligned in order to lower the bound on the target loss, as concretely defined below.

Definition 4.

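Consider a class $\mathcal{G}$ of feature maps and a class $\mathcal{H}$ of hypotheses. Let $\{\mathbb{D}^{S,i}\}_{i=1}^{K}$ be a set of source domains.

i) (DG with unknown target data) A feature map $g^{*} \in \mathcal{G}$ is a DG compressed DI feature map for the source domains $\{\mathbb{D}^{S,i}\}_{i=1}^{K}$ if $g^{*}$ is the solution of the optimization problem (OP): $\min_{g \in \mathcal{G}} \min_{\hat{h} \in \mathcal{H}} \sum_{i=1}^{K} \pi_i \mathcal{L}(\hat{h}, h^{S,i}, \mathbb{P}_g^{S,i})$ which satisfies $\mathbb{P}_{g^{*}}^{S,1} = \dots = \mathbb{P}_{g^{*}}^{S,K}$ (i.e., the pushed-forward distributions of all source domains are identical). The latent representations $z = g^{*}(x)$ are then called compressed DI representations for the DG setting.

ii) (MSDA with known target data) A feature map $g^{*} \in \mathcal{G}$ is an MSDA compressed DI feature map for the source domains $\{\mathbb{D}^{S,i}\}_{i=1}^{K}$ if $g^{*}$ is the solution of the optimization problem (OP): $\min_{g \in \mathcal{G}} \min_{\hat{h} \in \mathcal{H}} \sum_{i=1}^{K} \pi_i \mathcal{L}(\hat{h}, h^{S,i}, \mathbb{P}_g^{S,i})$ which satisfies $\mathbb{P}_{g^{*}}^{S,1} = \dots = \mathbb{P}_{g^{*}}^{S,K} = \mathbb{P}_{g^{*}}^{T}$ (i.e., the pushed-forward distributions of all source and target domains are identical). The latent representations $z = g^{*}(x)$ are then called compressed DI representations for the MSDA setting.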

2.3 Characteristics of Domain-Invariant Representations

In the previous section, two kinds of DI representations were introduced, each of which originates from minimizing different terms in the upper bound of the target loss. In what follows, we examine and discuss their benefits and drawbacks. We start with the novel development of a hypothesis-aware divergence for multiple distributions, which is necessary for the theory developed later.

2.3.1 Hypothesis-Aware Divergence for Multiple Distributions

Let us consider multiple distributions $\mathbb{Q}_1, \dots, \mathbb{Q}_C$ on the same space, whose density functions are $q_1, \dots, q_C$. We are given a mixture of these distributions $\mathbb{Q}^{\alpha} := \sum_{i=1}^{C} \alpha_i \mathbb{Q}_i$ with a mixing coefficient $\alpha$ and desire to measure a divergence between $\mathbb{Q}_1, \dots, \mathbb{Q}_C$. One possible solution is employing a hypothesis $\hat{h}$ from an infinite-capacity hypothesis class $\mathcal{H}$ to identify which distribution the data come from. In particular, given $z \sim \mathbb{Q}^{\alpha}$, the function $\hat{h}(z, i)$ outputs the probability that $z \sim \mathbb{Q}_i$. Note that we use the notation $\hat{h}$ in this particular subsection to denote a domain classifier, while everywhere else in the paper $\hat{h}$ denotes a label classifier. Let $l(\hat{h}(z), i)$ be the loss when classifying $z$ using $\hat{h}$ provided the ground-truth label $i$. Our motivation is that if the distributions $\mathbb{Q}_1, \dots, \mathbb{Q}_C$ are distant, it is easier to distinguish samples from them, hence the minimum classification loss is much lower than when the distributions lie close together. Therefore, we develop the following theorem to connect the loss optimization problem with an $f$-divergence among $\mathbb{Q}_1, \dots, \mathbb{Q}_C$. More discussion about the hypothesis-aware divergence can be found in Appendix B of this paper and in [13].

Theorem 5.

Assuming the hypothesis class has infinite capacity, we define the hypothesis-aware divergence for multiple distributions as

| (3) |

where depends only on the form of loss function and value of . This divergence is a proper f-divergence among in the sense that and , and if .

2.3.2 General Domain-Invariant Representation

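As previously defined, the general DI feature map $g^{*}$ (cf. Definition 2) is the one that minimizes the total source loss

$$g^{*} = \operatorname*{argmin}_{g \in \mathcal{G}} \min_{\hat{h} \in \mathcal{H}} \sum_{i=1}^{K} \pi_i \mathcal{L}(\hat{h}, h^{S,i}, \mathbb{P}_g^{S,i}). \tag{4}$$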

From the result of Theorem 5, we expect that the minimal loss should be inversely proportional to the divergence between the class-conditionals . To generalize this result to the multi-source setting, we further define as the mixture of the class conditional distributions of the source domains on the latent space, where are the mixing coefficients, and are label marginals. Then, the inner loop of the objective function in Eq. 4 can be viewed as training the optimal hypothesis to distinguish the samples from for a given feature map . Therefore, by linking to the multi-divergence concept developed in Section 2.3.1, we achieve the following theorem.

Theorem 6.

Assume that has infinite capacity, we have the following statements.

1. , where is defined as above.

2. Finding the general DI feature map via the OP in Eq. 4 is equivalent to solving

| (5) |

Theorem 6, especially its second claim, discloses that learning general DI representations maximally expands the coverage of the latent representations of the source domains by maximizing the cross-domain class divergence in Eq. 5. Hence, the span of the source mixture is also increased. We believe this demonstrates one of the benefits of general DI representations, because it implicitly enhances the chance of matching a general unseen target domain in the DG setting by possibly reducing the source-target representation discrepancy term (cf. Theorem 1). Additionally, in MSDA, where we wish to minimize $d_{1/2}(\mathbb{P}_g^{T}, \mathbb{P}_g^{\pi})$ explicitly, expanding the coverage of the source representations is also useful.

2.3.3 Compressed Domain-Invariant Representations

It is well known that the generalization gap between the true loss and its empirical estimate affects the generalization capability of a model [51, 47]. One way to close this gap is to increase the sampling density. However, as hinted by our analysis, enforcing general DI representation learning tends to maximize the cross-domain class divergence, hence implicitly increasing the diversity of latent representations from different source domains. This makes learning a classifier on top of those source representations harder, because samples are scattered over the representation space, leading to a higher generalization gap between the empirical and general losses. In contrast, compressed DI representations make the learning task easier, with a lower generalization gap, by decreasing the diversity of latent representations from the different source domains via enforcing $\mathbb{P}_g^{S,1} = \dots = \mathbb{P}_g^{S,K}$. We now develop rigorous theory to examine this observation.

Let the training set be , consisting of independent pairs drawn from the source mixture, i.e., the sampling process starts with sampling domain index , then sampling (i.e., and ), and finally assigning a label with (i.e., is a distribution over ). The empirical loss is defined as

| (6) |

where is the number of samples drawn from the -th source domain and .
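The sampling process described above can be sketched in a few lines of Python. This is an illustrative simulation only: the Gaussian representation samplers, the sigmoid labeling rule, the loss l(p, y) = 1 - p[y], and all function names are hypothetical choices, and the empirical loss is taken here to be the plain average over all drawn samples.

```python
import math
import random


def sample_training_set(n, pi, samplers, labelers, rng):
    """Draw n (domain, representation, label) triples from the source mixture."""
    data = []
    for _ in range(n):
        k = rng.choices(range(len(pi)), weights=pi)[0]        # domain index ~ Cat(pi)
        z = samplers[k](rng)                                  # representation from domain k
        probs = labelers[k](z)                                # label distribution at z
        y = rng.choices(range(len(probs)), weights=probs)[0]  # label ~ Cat(probs)
        data.append((k, z, y))
    return data


def empirical_loss(data, classifier, loss):
    """Average loss of the classifier over the drawn samples."""
    return sum(loss(classifier(z), y) for _, z, y in data) / len(data)


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


# Hypothetical two-domain, two-class setup on a one-dimensional latent space.
pi = [0.7, 0.3]
samplers = [lambda rng: rng.gauss(-1.0, 1.0), lambda rng: rng.gauss(1.0, 1.0)]
labelers = [lambda z: [1.0 - sigmoid(2.0 * z), sigmoid(2.0 * z)]] * 2
classifier = lambda z: [1.0 - sigmoid(z), sigmoid(z)]
loss = lambda probs, y: 1.0 - probs[y]

rng = random.Random(0)
data = sample_training_set(1000, pi, samplers, labelers, rng)
estimate = empirical_loss(data, classifier, loss)
frac_first = sum(1 for k, _, _ in data if k == 0) / len(data)
```

With enough samples, the fraction of draws from each domain approaches its mixing coefficient, which is why the per-domain counts appear in the empirical loss.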

Here, is an unbiased estimation of the general loss . To quantify the quality of the estimation, we investigate the upper bound of the generalization gap with the confidence level .

Theorem 7.

For any confident level over the choice of , the estimation of loss is in the -range of the true loss

where is a function of for which is proportional to

Theorem 7 reveals one benefit of learning compressed DI representations, that is, when enforcing compressed DI representation learning, we minimize , which tends to reduce the generalization gap for a given confidence level . Therefore, compressed DI representations allow us to minimize population loss more efficiently via minimizing empirical loss.

2.4 Trade-off of Learning Domain Invariant Representation

Similar to the theoretical finding in Zhao et al. [56] developed for DA, we theoretically find that compression does come with a cost for MSDA and DG. We investigate the representation trade-off, typically how compressed DI representation affects classification loss. Specifically, we consider a data processing chain , where is the common data space, is the latent space induced by a feature extractor , and is a hypothesis on top of the latent space. We define and as two distribution over in which to draw , we sample , , and , while similar to draw . Our theoretical bounds developed regarding the trade-off of learning DI representations are relevant to .

Theorem 8.

Consider a feature extractor and a hypothesis , the Hellinger distance between two label marginal distributions and can be upper-bounded as:

1.

2.

Here we note that the general loss is defined based on the Hellinger loss, which is defined as

(more discussion can be found in Appendix C).

Remark. Compared to the trade-off bound in the work of Zhao et al. [56], our context is more general, concerning MSDA and DG problems with multiple source domains and multi-class probabilistic labeling functions, rather than single-source DA with a binary-class and deterministic setting. Moreover, the Hellinger distance is more universal, in the sense that it does not depend on the choice of classifier family and loss function, as is the case for the $\mathcal{H}$-divergence in [56].

We use the first inequality of Theorem 8 to analyze the trade-off of learning general DI representations. The first term of the bound is the source mixture's loss, which is controllable and tends to be small when enforcing learning general DI representations. With that in mind, if the source and target label marginal distributions are distant (i.e., their Hellinger distance is high), the sum of the source-target representation discrepancy and the target loss term tends to be high. This leads to two possibilities. The first scenario is when the representation discrepancy has a small value, e.g., it is minimized in the MSDA setting, or it happens to be small by pure chance in the DG setting. In this case, the lower bound of the target loss is high, possibly hurting the model's generalization ability. On the other hand, if the discrepancy is large for some reason, the lower bound of the target loss will be small, but its upper bound is higher, as indicated by Theorem 1.

Based on the second inequality of Theorem 8, we observe that if the two label marginal distributions are distant while enforcing learning compressed DI representations (i.e., both the source loss and the source-source feature discrepancy are low), the sum of the source-target representation discrepancy and the target loss term is high. For the MSDA setting, the source-target discrepancy is trained to get smaller, meaning that the lower bound of the target loss is high, hurting the target performance. Similarly, for the DG setting, if the trained feature extractor happens to reduce the source-target discrepancy for some unseen target domain, it certainly increases the target loss. In contrast, if for some target domains the discrepancy is high for some reason, then by the upper bound in Theorem 3 the target general loss has a high upper bound, hence is possibly high.

This trade-off between representation discrepancy and target loss suggests a sweet spot for just-right feature alignment. In that case, the target loss is most likely to be small.

3 Experiment

To investigate the trade-off of learning DI representations, we conduct domain generalization experiments on the colored MNIST dataset (CC BY-SA 3.0) [2, 24]. In particular, the task is to predict binary label of colored input images generated from binary digit feature and color feature . We refer readers to Appendix D for more information of this dataset.

We conduct source domains by setting color-label correlation where for , while two target domains are created with for . Here we note that colored images in the target domain with are more similar to those in the source domains, while colored images in the target domain with are less similar.

We wish to study the characteristics and trade-off between two kinds of DI representations when predicting on various target domains. Specifically, we apply adversarial learning [16] similar to [14], in which a min-max game is played between domain discriminator trying to distinguish the source domain given representation, while the feature extractor (generator) tries to fool the domain discriminator. Simultaneously, a classifier is used to classify label based on the representation. Let and be the label classification and domain discrimination losses, the training objective becomes:

where the source compression strength $\lambda$ controls the extent to which the learned representation is compressed. More specifically, the general DI representation is obtained with $\lambda = 0$, while larger $\lambda$ leads to a more compressed DI representation. Finally, our implementation is based on the DomainBed [17] repository, and all training details as well as a further MSDA experiment and a DG experiment on real datasets are included in Appendix D. Our code can be found at: https://github.com/VinAIResearch/DIRep
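The role of the compression strength can be illustrated with a small Python sketch of the feature extractor's side of the min-max game. The sign convention here is an assumption made for the example, not taken from the released code: both losses are cross-entropies, the discriminator minimises its own loss, and the feature extractor and classifier minimise the classification loss minus the compression strength times the discrimination loss. The names `cross_entropy` and `extractor_objective` are hypothetical.

```python
import math


def cross_entropy(probs, labels):
    """Mean negative log-likelihood of the true labels under predicted probabilities."""
    return -sum(math.log(p[y]) for p, y in zip(probs, labels)) / len(labels)


def extractor_objective(class_probs, labels, domain_probs, domains, lam):
    """Loss seen by the feature extractor: classify well while confusing the discriminator."""
    l_gen = cross_entropy(class_probs, labels)      # label classification loss
    l_disc = cross_entropy(domain_probs, domains)   # domain discrimination loss
    return l_gen - lam * l_disc


# Hypothetical mini-batch: two samples, two classes, two source domains.
class_probs = [[0.9, 0.1], [0.2, 0.8]]
labels = [0, 1]
domains = [0, 1]
confident_disc = [[0.9, 0.1], [0.1, 0.9]]   # discriminator tells the domains apart
confused_disc = [[0.5, 0.5], [0.5, 0.5]]    # representations look domain-free

obj_general = extractor_objective(class_probs, labels, confident_disc, domains, lam=0.0)
obj_separable = extractor_objective(class_probs, labels, confident_disc, domains, lam=1.0)
obj_compressed = extractor_objective(class_probs, labels, confused_disc, domains, lam=1.0)
```

With a zero compression strength the objective is just the classification loss; with a positive strength, representations that confuse the discriminator score lower than separable ones, so the extractor is pushed toward compressing the source domains together.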

3.1 Trade-off of Two Kinds of Domain-Invariant Representations

We consider the target domain that is similar to the source domains in this experiment. To govern the trade-off between the two kinds of DI representations, we gradually increase the source compression strength $\lambda$, noting that $\lambda = 0$ means focusing only on learning general DI representations. According to our theory and as shown in Figure 1, learning only general DI representations ($\lambda = 0$) maximizes the cross-domain class divergence by encouraging the classes of the source domains to separate arbitrarily, while increasing $\lambda$ enforces compressing the latent representations of the source domains together. Therefore, for an appropriate $\lambda$, the class separation from general DI representations and the source compression from compressed DI representations are balanced (i.e., just-right compressed DI representations), as in case (b) of Figure 1, while for overly high $\lambda$, the source compression from compressed DI representations dominates and compromises the class separation from general DI representations (i.e., overly-compressed DI representations).

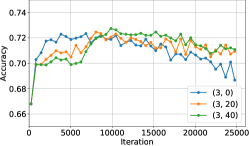

Figure 2a shows the source validation and target accuracies when increasing $\lambda$ (i.e., encouraging the source compression). It can be observed that the source validation accuracy and the target accuracy follow the same pattern: they increase when $\lambda$ is set appropriately for just-right compressed DI representations and degrade when $\lambda$ is set to overly high values for overly-compressed DI representations. Figure 2b shows in detail the variation of the source validation accuracy for each specific $\lambda$. In practice, we should encourage learning the two kinds of DI representations simultaneously by finding an appropriate trade-off that balances them, yielding just-right compressed DI representations.

3.2 Generalization Capacity on Various Target Domains

In this experiment, we inspect the generalization capacity of the models trained on the source domains with different compression strengths $\lambda$ to different target domains (i.e., close and far ones). As shown in Figure 3, the target accuracy is lower for the far target domain than for the close one regardless of the source compression strength $\lambda$. This observation aligns with the upper bounds developed in Theorems 1 and 3, in which a larger representation discrepancy increases the upper bound of the general target loss, hence is more likely to hurt the target performance.

Another interesting observation is that for the far target domain, the target accuracy peaks at $\lambda = 0$. This can be partly explained by the fact that general DI representations help to increase the span of the source latent representations, giving more chance to match a far target domain (cf. Section 2.3.2). Specifically, the source-target discrepancy could be small, so the upper bound in Theorem 1 is lower. In contrast, for the close target domain, the compressed DI representations help to improve the target performance, while over-compression degrades it, similar to the result on the source domains. This is because the target domain is naturally close to the source domains, so the source and target latent representations are already mixed; by encouraging compressed DI representations, we aim to learn more compact representations that improve the target performance.

4 Related Works

Domain adaptation (DA) has been intensively studied from both theoretical [3, 9, 6, 56, 34, 45] and empirical [46, 55, 14, 7, 54] perspectives. Notably, the pioneering work [3] and other theoretical works [45, 55, 56, 22, 31, 6] have investigated DA in various aspects, which lay the foundation for the success of practical works [14, 50, 46, 55, 7, 54]. Multiple-source domain adaptation (MSDA) extends DA by gathering the training set from multiple source domains [8, 30, 21, 44, 45, 12, 57, 53, 42]. Unlike DA, for which theoretical works are abundant, theoretical study of MSDA is significantly limited. Notably, the works in [32, 33] rely on the assumptions that the same labeling function can be used for all source domains and that the target domain is an unknown mixture of the source domains. They then show that, given a set of source expert hypotheses (each with loss at most $\epsilon$ on its respective domain), there exists a distribution-weighted combination of these experts performing well on the target domain with loss at most $\epsilon$. Hoffman et al. [19] further develop this idea for a more general case in which different labeling functions correspond to different source domains and the target domain is arbitrary. Under this setting, there exists a distribution-weighted combination of source experts such that the loss on the target domain is bounded by a source loss term and a discrepancy term between the target domain and the source mixture. On the other hand, Zhao et al. [57] directly extend the work of Ben-David et al. with an improvement on the sample complexity term.

Domain generalization (DG) [17, 26, 4, 20] is the most challenging setting among the three due to the unavailability of target data. The studies in [4, 10] use kernel method to find feature map which minimizes expected target loss. Moreover, those in [28, 29, 35, 15, 52, 11] learn compressed DI representations by minimizing different types of domain dissimilarity. Other notions of invariance are also proposed, for example, [2] learns a latent space on which representation distributions do not need to be aligned, but share a common optimal hypothesis. Another work in [43] uncovers domain-invariant hypothesis from low-rank decomposition of domain-specific hypotheses. Moreover, there are other works which try to strike a balance between two types of representation spaces [5].

5 Conclusion

In this paper, we derive theoretical bounds for the target loss in the MSDA and DG settings, which characterize two types of representation: the general DI representation, for learning an invariant classifier that works on all source domains, and the compressed DI representation, motivated by reducing the inter-domain representation discrepancy. We further characterize the properties of these two representations and develop a lower bound on the target loss that governs the trade-off between learning them. Finally, we conduct experiments on the Colored MNIST dataset and real datasets to illustrate our theoretical claims.

References

- [1] Albuquerque, I., Monteiro, J., Darvishi, M., Falk, T. H., and Mitliagkas, I. Generalizing to unseen domains via distribution matching, 2020.

- [2] Arjovsky, M., Bottou, L., Gulrajani, I., and Lopez-Paz, D. Invariant risk minimization, 2020.

- [3] Ben-David, S., Blitzer, J., Crammer, K., Kulesza, A., Pereira, F., and Vaughan, J. W. A theory of learning from different domains. Mach. Learn. 79, 1–2 (May 2010), 151–175.

- [4] Blanchard, G., Lee, G., and Scott, C. Generalizing from several related classification tasks to a new unlabeled sample. In Advances in Neural Information Processing Systems (2011), vol. 24.

- [5] Chattopadhyay, P., Balaji, Y., and Hoffman, J. Learning to balance specificity and invariance for in and out of domain generalization. arXiv preprint arXiv:2008.12839 (2020).

- [6] Cortes, C., and Mohri, M. Domain adaptation in regression. In Algorithmic Learning Theory - 22nd International Conference, ALT 2011, Proceedings (2011), Lecture Notes in Computer Science, pp. 308–323.

- [7] Cortes, C., Mohri, M., and Medina, A. M. Adaptation based on generalized discrepancy. Journal of Machine Learning Research 20, 1 (2019), 1–30.

- [8] Crammer, K., Kearns, M., and Wortman, J. Learning from multiple sources. Journal of Machine Learning Research 9, 57 (2008), 1757–1774.

- [9] Ben-David, S., Lu, T., Luu, T., and Pal, D. Impossibility theorems for domain adaptation. In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics (13–15 May 2010), vol. 9 of Proceedings of Machine Learning Research, PMLR, pp. 129–136.

- [10] Deshmukh, A. A., Lei, Y., Sharma, S., Dogan, U., Cutler, J. W., and Scott, C. A generalization error bound for multi-class domain generalization, 2019.

- [11] Dou, Q., de Castro, D. C., Kamnitsas, K., and Glocker, B. Domain generalization via model-agnostic learning of semantic features. In Advances in Neural Information Processing Systems (2019), pp. 6450–6461.

- [12] Duan, L., Xu, D., and Chang, S.-F. Exploiting web images for event recognition in consumer videos: A multiple source domain adaptation approach. In 2012 IEEE Conference on Computer Vision and Pattern Recognition (2012), pp. 1338–1345.

- [13] Duchi, J. C., Khosravi, K., and Ruan, F. Multiclass classification, information, divergence, and surrogate risk, 2017.

- [14] Ganin, Y., Ustinova, E., Ajakan, H., Germain, P., Larochelle, H., Laviolette, F., Marchand, M., and Lempitsky, V. Domain-adversarial training of neural networks. Journal of Machine Learning Research 17, 59 (2016), 1–35.

- [15] Ghifary, M., Bastiaan Kleijn, W., Zhang, M., and Balduzzi, D. Domain generalization for object recognition with multi-task autoencoders. In Proceedings of the IEEE international conference on computer vision (2015), pp. 2551–2559.

- [16] Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., and Bengio, Y. Generative adversarial nets. In Advances in Neural Information Processing Systems (2014), vol. 27, Curran Associates, Inc.

- [17] Gulrajani, I., and Lopez-Paz, D. In search of lost domain generalization. CoRR abs/2007.01434 (2020).

- [18] He, K., Zhang, X., Ren, S., and Sun, J. Deep residual learning for image recognition, 2015.

- [19] Hoffman, J., Mohri, M., and Zhang, N. Algorithms and theory for multiple-source adaptation. In Advances in Neural Information Processing Systems (2018), S. Bengio, H. Wallach, H. Larochelle, K. Grauman, N. Cesa-Bianchi, and R. Garnett, Eds., vol. 31, Curran Associates, Inc.

- [20] Huang, Z., Wang, H., Xing, E. P., and Huang, D. Self-challenging improves cross-domain generalization. Proceedings of the European Conference on Computer Vision (ECCV) (2020).

- [21] Redko, I., Habrard, A., and Sebban, M. On the analysis of adaptability in multi-source domain adaptation. Mach. Learn. 108 (June 2019), 1635–1652.

- [22] Johansson, F. D., Sontag, D., and Ranganath, R. Support and invertibility in domain-invariant representations. In Proceedings of Machine Learning Research (2019), vol. 89, pp. 527–536.

- [23] Le, T., Nguyen, T., Ho, N., Bui, H., and Phung, D. Lamda: Label matching deep domain adaptation. In ICML (2021).

- [24] LeCun, Y., Cortes, C., and Burges, C. Mnist handwritten digit database. ATT Labs [Online]. Available: http://yann.lecun.com/exdb/mnist 2 (2010).

- [25] Li, D., Yang, Y., Song, Y.-Z., and Hospedales, T. Learning to generalize: Meta-learning for domain generalization.

- [26] Li, D., Yang, Y., Song, Y.-Z., and Hospedales, T. M. Deeper, broader and artier domain generalization. In Proceedings of the IEEE international conference on computer vision (2017), pp. 5542–5550.

- [27] Li, D., Zhang, J., Yang, Y., Liu, C., Song, Y.-Z., and Hospedales, T. M. Episodic training for domain generalization. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) (October 2019).

- [28] Li, H., Pan, S. J., Wang, S., and Kot, A. C. Domain generalization with adversarial feature learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (June 2018).

- [29] Li, Y., Tian, X., Gong, M., Liu, Y., Liu, T., Zhang, K., and Tao, D. Deep domain generalization via conditional invariant adversarial networks. In Proceedings of the European Conference on Computer Vision (ECCV) (September 2018).

- [30] Mansour, Y., Mohri, M., Ro, J., Theertha Suresh, A., and Wu, K. A theory of multiple-source adaptation with limited target labeled data. In Proceedings of The 24th International Conference on Artificial Intelligence and Statistics (13–15 Apr 2021), A. Banerjee and K. Fukumizu, Eds., vol. 130 of Proceedings of Machine Learning Research, PMLR, pp. 2332–2340.

- [31] Mansour, Y., Mohri, M., and Rostamizadeh, A. Domain adaptation: Learning bounds and algorithms. vol. 22.

- [32] Mansour, Y., Mohri, M., and Rostamizadeh, A. Domain adaptation with multiple sources. In Advances in Neural Information Processing Systems (2009), D. Koller, D. Schuurmans, Y. Bengio, and L. Bottou, Eds., vol. 21, Curran Associates, Inc.

- [33] Mansour, Y., Mohri, M., and Rostamizadeh, A. Multiple source adaptation and the renyi divergence. In Proceedings of the Twenty-Fifth Conference on Uncertainty in Artificial Intelligence (Arlington, Virginia, USA, 2009), UAI ’09, AUAI Press, p. 367–374.

- [34] Mansour, Y., and Schain, M. Robust domain adaptation. Annals of Mathematics and Artificial Intelligence 71 Issue 4 (2014), 365–380.

- [35] Muandet, K., Balduzzi, D., and Schölkopf, B. Domain generalization via invariant feature representation. In Proceedings of the 30th International Conference on Machine Learning (Atlanta, Georgia, USA, 17–19 Jun 2013), S. Dasgupta and D. McAllester, Eds., vol. 28 of Proceedings of Machine Learning Research, PMLR, pp. 10–18.

- [36] Muller, R., Kornblith, S., and Hinton, G. E. When does label smoothing help? In Advances in Neural Information Processing Systems (2019), vol. 32, Curran Associates, Inc.

- [37] Nguyen, T., Le, T., Zhao, H., Tran, H. Q., Nguyen, T., and Phung, D. Most: Multi-source domain adaptation via optimal transport for student-teacher learning. In UAI (2021).

- [38] Nguyen, T., Le, T., Zhao, H., Tran, H. Q., Nguyen, T., and Phung, D. Tidot: A teacher imitation learning approach for domain adaptation with optimal transport. In IJCAI (2021).

- [39] Nguyen, V. A., Nguyen, T., Le, T., Tran, Q. H., and Phung, D. Stem: An approach to multi-source domain adaptation with guarantees. In Proceedings of the IEEE/CVF International Conference on Computer Vision (2021), pp. 9352–9363.

- [40] Nguyen, X., Wainwright, M. J., and Jordan, M. Estimating divergence functionals and the likelihood ratio by penalized convex risk minimization. In Advances in Neural Information Processing Systems (2008), J. Platt, D. Koller, Y. Singer, and S. Roweis, Eds., vol. 20, Curran Associates, Inc.

- [41] Peng, X., Bai, Q., Xia, X., Huang, Z., Saenko, K., and Wang, B. Moment matching for multi-source domain adaptation. In 2019 IEEE/CVF International Conference on Computer Vision (ICCV) (2019), IEEE Computer Society, pp. 1406–1415.

- [42] Peng, X., Bai, Q., Xia, X., Huang, Z., Saenko, K., and Wang, B. Moment matching for multi-source domain adaptation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) (October 2019).

- [43] Piratla, V., Netrapalli, P., and Sarawagi, S. Efficient domain generalization via common-specific low-rank decomposition. In Proceedings of the 37th International Conference on Machine Learning (13–18 Jul 2020), vol. 119 of Proceedings of Machine Learning Research, PMLR, pp. 7728–7738.

- [44] Redko, I., Courty, N., Flamary, R., and Tuia, D. Optimal transport for multi-source domain adaptation under target shift. In Proceedings of the Twenty-Second International Conference on Artificial Intelligence and Statistics (16–18 Apr 2019), K. Chaudhuri and M. Sugiyama, Eds., vol. 89 of Proceedings of Machine Learning Research, PMLR, pp. 849–858.

- [45] Redko, I., Habrard, A., and Sebban, M. Theoretical analysis of domain adaptation with optimal transport, 2017.

- [46] Saito, K., Kim, D., Sclaroff, S., and Saenko, K. Universal domain adaptation through self supervision. In Advances in Neural Information Processing Systems (2020), H. Larochelle, M. Ranzato, R. Hadsell, M. F. Balcan, and H. Lin, Eds., vol. 33, Curran Associates, Inc., pp. 16282–16292.

- [47] Shalev-Shwartz, S., and Ben-David, S. Understanding machine learning: From theory to algorithms. Cambridge university press, 2014.

- [48] Sicilia, A., Zhao, X., and Hwang, S. J. Domain adversarial neural networks for domain generalization: When it works and how to improve, 2021.

- [49] Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J., and Wojna, Z. Rethinking the inception architecture for computer vision. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2016), pp. 2818–2826.

- [50] Tzeng, E., Hoffman, J., Saenko, K., and Darrell, T. Adversarial discriminative domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (July 2017).

- [51] Vapnik, V. Statistical Learning Theory. Wiley, 1998.

- [52] Wang, H., He, Z., Lipton, Z. C., and Xing, E. P. Learning robust representations by projecting superficial statistics out. In International Conference on Learning Representations (2019).

- [53] Xu, R., Chen, Z., Zuo, W., Yan, J., and Lin, L. Deep cocktail network: Multi-source unsupervised domain adaptation with category shift. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (June 2018).

- [54] Yan, S., Song, H., Li, N., Zou, L., and Ren, L. Improve unsupervised domain adaptation with mixup training, 2020.

- [55] Zhang, Y., Liu, T., Long, M., and Jordan, M. Bridging theory and algorithm for domain adaptation. In Proceedings of the 36th International Conference on Machine Learning (09–15 Jun 2019), K. Chaudhuri and R. Salakhutdinov, Eds., vol. 97 of Proceedings of Machine Learning Research, PMLR, pp. 7404–7413.

- [56] Zhao, H., Combes, R. T. D., Zhang, K., and Gordon, G. On learning invariant representations for domain adaptation. In Proceedings of the 36th International Conference on Machine Learning (09–15 Jun 2019), K. Chaudhuri and R. Salakhutdinov, Eds., vol. 97 of Proceedings of Machine Learning Research, PMLR, pp. 7523–7532.

- [57] Zhao, H., Zhang, S., Wu, G., Moura, J. M. F., Costeira, J. P., and Gordon, G. J. Adversarial multiple source domain adaptation. In Advances in Neural Information Processing Systems (2018), S. Bengio, H. Wallach, H. Larochelle, K. Grauman, N. Cesa-Bianchi, and R. Garnett, Eds., vol. 31, Curran Associates, Inc.

- [58] Zhao, S., Gong, M., Liu, T., Fu, H., and Tao, D. Domain generalization via entropy regularization. In Advances in Neural Information Processing Systems (2020), H. Larochelle, M. Ranzato, R. Hadsell, M. F. Balcan, and H. Lin, Eds., vol. 33, Curran Associates, Inc., pp. 16096–16107.

- [59] Zhao, S., Wang, G., Zhang, S., Gu, Y., Li, Y., Song, Z., Xu, P., Hu, R., Chai, H., and Keutzer, K. Multi-source distilling domain adaptation. Proceedings of the AAAI Conference on Artificial Intelligence 34, 07 (Apr. 2020), 12975–12983.

- [60] Zhou, K., Yang, Y., Hospedales, T., and Xiang, T. Learning to generate novel domains for domain generalization. In European Conference on Computer Vision (2020), Springer, pp. 561–578.

This supplementary material provides proofs for theoretical results stated in the main paper, as well as detailed experiment settings and further experimental results. It is organized as follows:

- Appendix A contains the proofs for the upper bounds of target loss introduced in Section 2.2 of our main paper.

- Appendix B contains the proofs for characteristics of two representations as mentioned in Section 2.3 of our main paper.

- Appendix C contains the proof for trade-off theorem discussed in Section 2.4 of our main paper.

- Finally, in Appendix D, we present the generative detail of the Colored MNIST dataset, the experimental setting together with additional results for MSDA on Colored MNIST and DG on the real-world PACS dataset.

Appendix A: Target Loss’s Upper Bounds

We begin with a crucial proposition for our theory development, which further allows us to connect loss on feature space to loss on data space.

Proposition 9.

Let where with and . Let be a positive function.

i) For any , we have

ii) For any , we have

where is the density of a distribution on , is the density of the distribution , and are labeling functions, and and are labeling functions on the latent space induced from , i.e., and .

Proof.

i) Using the definition of , we manipulate the integral on feature space as

In , we use the definition of push-forward distribution . In , can be put inside the integral because for any . In , the integral over restricted region is expanded to all space with the help of , whose value is if and otherwise. In , Fubini theorem is invoked to swap the integral signs.

ii) Using the same technique, we have

∎

Proposition 9 allows us to connect expected loss on feature space and input space as in the following corollary.

Corollary 10.

Consider a domain with data distribution and ground-truth labeling function . A hypothesis is , where with and . Then, the labeling function on input space induces a ground-truth labeling function on feature space . Let be the loss function, then expected loss can be calculated either w.r.t. input space or w.r.t. feature space . If we assume the loss has the form mentioned in the main paper, that is,

for any two simplexes , where and . Then the losses w.r.t. input space and feature space are the same, i.e.,

Normally, target loss is bounded by source loss , a label shift term , and a data shift term [3]. Here, this kind of bound is developed using the data distribution on input space and the labeling function from input to label space, which are not convenient for understanding representation learning, since are determined by the nature of the data and are therefore fixed. In order to relate target loss to properties of learned representations, another bound in which is the loss w.r.t. feature space and is the data shift on feature space is more favorable. However, this naive approach presents a pitfall, since the loss is not identical to the loss w.r.t. input space , which is of ultimate interest, e.g., the to-be-bounded target loss , or the to-be-minimized source loss . Using the previous proposition and corollary, we can bridge this gap and develop a target bound connecting both data space and feature space.

Theorem 11.

(Theorem 1 in the main paper) Consider a mixture of source domains and the target domain . Let be any loss function upper-bounded by a positive constant . For any hypothesis where with and , the target loss on input space is upper bounded

| (7) |

where is the absolute single-point label shift on input space between source domain , the target domain , , and the feature distribution of the source mixture .

Proof.

First, consider the hybrid domain , with being the feature distribution of the source mixture, and being the induced ground-truth labeling function of the target domain. The loss on the hybrid domain is then upper bounded by the loss on the source mixture and a label shift term. We derive as follows:

Firstly, using Corollary 10, the loss terms on feature space and input space are equal

Secondly, the difference term, can be transformed into label shift on input space using Proposition 9

Here we note that results from (ii) in Proposition 9 with for any and . Furthermore, is the absolute single point label shift on input space between the source domain and the target domain .

With these two terms, we have the upper bound for hybrid domain as

Next, we relate the loss on target to hybrid domain , which differs only at the feature marginals.

In the above proof, we repeatedly invoke the Cauchy-Schwarz inequality . Moreover, for the sake of completeness, we reintroduce the definition of the square root Hellinger distance

To this end, we obtain the upper bound for the target loss in terms of the loss on the source mixture, a label shift term on input space, and a data shift term between the target domain and the source mixture on feature space.

| (8) |

Finally, using the fact that the loss on feature space equals the loss on input space (Corollary 10), we have

That concludes our proof. ∎
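The data shift term in the bound above is measured with a Hellinger-type distance between feature distributions. As a minimal sketch, assuming discrete (histogram) feature distributions and the common normalization with a factor 1/2 (the constant in the paper's definition may differ), such a distance can be computed as follows. The example distributions are hypothetical.

```python
import math


def hellinger(p, q):
    """Hellinger distance between two discrete distributions.

    Assumption: p and q are lists of probabilities over the same bins, and the
    factor 1/2 is used so that the distance lies in [0, 1].
    """
    if len(p) != len(q):
        raise ValueError("distributions must have the same support size")
    total = sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(p, q))
    return math.sqrt(0.5 * total)


# Hypothetical feature marginals of a target domain and a source mixture
# over three latent bins.
target = [0.5, 0.5, 0.0]
source_mix = [0.2, 0.3, 0.5]
print(round(hellinger(target, source_mix), 4))
```

The distance vanishes only when the two feature distributions coincide, which is the situation a compressed representation tries to approach.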

This bound is novel since it relates loss on input space and data shift on feature space. This allows us to further investigate how source-source compression and source-target compression affect learning. First, we prove a lemma showing decomposition of data shift between target domain and source mixture to a sum of data shifts between target domain and source domains.

Lemma 12.

Given a source mixture and a target domain, we have the following

Proof.

Firstly, we observe that

Secondly, we use the Cauchy-Schwarz inequality to obtain

Therefore, we arrive at

∎
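A decomposition of this kind can be checked numerically. The sketch below assumes discrete distributions and the Hellinger distance with a factor 1/2, and verifies two generic facts: the squared distance to a mixture is at most the weighted average of squared distances to the components, and the resulting square root is at most the sum of the per-source distances weighted by the square roots of the mixing weights. The exact constants of Lemma 12 are not reproduced here, and all helper names are illustrative.

```python
import math
import random


def hellinger(p, q):
    """Hellinger distance between two discrete distributions (factor 1/2 assumed)."""
    return math.sqrt(0.5 * sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(p, q)))


def random_dist(rng, n):
    """Draw a random probability vector with n strictly positive entries."""
    weights = [rng.random() + 1e-9 for _ in range(n)]
    total = sum(weights)
    return [w / total for w in weights]


def mixture(weights, dists):
    """Mix several discrete distributions with the given mixing weights."""
    n_bins = len(dists[0])
    return [sum(w * d[j] for w, d in zip(weights, dists)) for j in range(n_bins)]


def check_decomposition(seed, n_sources=3, n_bins=6):
    """Return (distance to mixture, root of weighted squares, weighted sum of distances)."""
    rng = random.Random(seed)
    target = random_dist(rng, n_bins)
    sources = [random_dist(rng, n_bins) for _ in range(n_sources)]
    pi = random_dist(rng, n_sources)
    to_mixture = hellinger(target, mixture(pi, sources))
    per_source = [hellinger(target, s) for s in sources]
    root_mean_square = math.sqrt(sum(w * h * h for w, h in zip(pi, per_source)))
    weighted_sum = sum(math.sqrt(w) * h for w, h in zip(pi, per_source))
    return to_mixture, root_mean_square, weighted_sum


print(check_decomposition(seed=0))
```

The three returned values are non-decreasing from left to right for every seed, which is the pattern the lemma exploits.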

Now we are ready to prove the bound which motivates the compressed DI representation.

Theorem 13.

(Theorem 3 in the main paper) Consider mixture of source domains and target domain . Let be any loss function upper-bounded by a positive constant . For any hypothesis where with and , the target loss on input space is upper bounded

| (9) |

Appendix B: DI Representation’s Characteristics

B.1 General Domain-Invariant Representations

In the main paper, we defined general DI representation via minimization of source loss . We then proposed to view the optimization problem as calculating a type of divergence, i.e., hypothesis-aware divergence. To understand the connection between the two, we first consider the classification problem where samples are drawn from a mixture , with defined on and density being , and the task is to predict which distributions the samples originate from, i.e., labels being . Here, the hypothesis class is assumed to have infinite capacity, and the objective is to minimize .

B.1.1 Hypothesis-Aware Divergence

Theorem 14.

(Theorem 5 in the main paper) Assuming the hypothesis class has infinite capacity, we define the hypothesis-aware divergence for multiple distributions as

| (10) |

This divergence is a proper divergence among in the sense that for all and , and if .

Proof.

Data is sampled from the mixture by firstly sampling domain index , then sampling data and label with . We examine the total expected loss for any hypothesis , which is

We would like to minimize this loss, which leads to

| (11) |

For , is introduced as the density of the mixture, whereas for , we use the fact that has infinite capacity, leading to the equality . Moreover, for , the property of concave function with is invoked, i.e., .

This suggests defining a non-negative divergence between multiple distributions as

which is a proper f-divergence, since is a convex function, and is just a constant. Moreover, for all and due to the previous inequality (11). Equality holds if there is some such that, for all

This means , where is a constant dependent on index . However, this leads to

i.e., . In other words, the equality happens when all distributions are the same . ∎
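To make the link between an optimal classification loss and a divergence concrete, the following sketch works out one instance under explicit assumptions: the loss is taken to be cross-entropy (the theorem covers a more general loss family), and the distributions are discrete. With an unrestricted hypothesis class the optimal prediction at each point is the posterior over distribution indices, and the gap between the entropy of the mixing weights and the optimal loss behaves like a divergence. The function names are illustrative.

```python
import math


def optimal_domain_ce(dists, weights):
    """Smallest achievable cross-entropy when predicting which distribution a sample came from.

    Assumption: with infinite capacity, the best prediction at bin z is the
    posterior weights[i] * dists[i][z] / mixture(z).
    """
    loss = 0.0
    for z in range(len(dists[0])):
        mix = sum(w * d[z] for w, d in zip(weights, dists))
        for w, d in zip(weights, dists):
            joint = w * d[z]
            if joint > 0.0:
                loss -= joint * math.log(joint / mix)
    return loss


def entropy(weights):
    """Shannon entropy of the mixing weights (natural logarithm)."""
    return -sum(w * math.log(w) for w in weights if w > 0.0)


def ce_divergence(dists, weights):
    """Entropy of the weights minus the optimal loss; zero when all distributions agree."""
    return entropy(weights) - optimal_domain_ce(dists, weights)


identical = [[0.2, 0.8], [0.2, 0.8]]
different = [[0.9, 0.1], [0.3, 0.7]]
disjoint = [[1.0, 0.0], [0.0, 1.0]]
pi = [0.5, 0.5]
for name, dists in [("identical", identical), ("different", different), ("disjoint", disjoint)]:
    print(name, ce_divergence(dists, pi))
```

In this cross-entropy instance the quantity is a weighted Jensen-Shannon divergence: it is zero for identical distributions and reaches the entropy of the weights when the supports are disjoint.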

B.1.2 General Domain-Invariant Representations

For a fixed feature map , the induced representation distributions of source domains are . We then find the optimal hypothesis on the induced representation distributions by minimizing the loss

| (12) |

The general domain-invariant feature map is defined as the one that offers the minimal optimal loss as

| (13) |

We denote as the class conditional distribution of the source domain on the latent space and as its density function. The induced representation distribution of source domain is a mixture of as where .

We further define where . Obviously, we can interpret as the mixture of the class conditional distributions of the source domains on the latent space. The objective function in Eq. (12) can be viewed as training the optimal hypothesis to distinguish the samples from for a given feature map . Therefore, by linking to the multi-divergence concept developed in Theorem 14, we achieve the following theorem.

Theorem 15.

(Theorem 6 in the main paper) Assuming that has infinite capacity, we have the following statements.

1. , where is defined as above.

2. Finding the general domain-invariant feature map via the OP in (13) is equivalent to solving

| (14) |

Proof.

We investigate the loss on mixture

Therefore, the loss on mixture is actually a loss on joint class-conditional distributions . Using result from Theorem 14, we can define a divergence between these class-conditionals

∎

B.2 Compressed Domain-Invariant Representations

Theorem 16.

(Theorem 7 in the main paper) For any confidence level over the choice of , the estimation of loss is in the -range of the true loss

where is a function of for which is proportional to

Proof.

Let be a sample of data points sampled from the mixture domain, i.e., , and labeling with corresponding . The loss of a hypothesis on a sample for some domain index is . To avoid crowded notation, we denote this loss as .

Let , where each is the number of samples drawn from domain . The estimate of the loss on a particular domain is

This estimate is unbiased, i.e., . Furthermore, the loss estimate on the source mixture is

This estimate is also unbiased

Therefore, we can bound the concentration of around its mean value using Chebyshev’s inequality

which is equivalent to

The variance of is

| (15) |

is true since is the sum of i.i.d. random variables with sampled from the same distribution . In , the variance of w.r.t. a distribution mixture is related to the means and variances of the constituent distributions, i.e., .

Therefore, the right-hand side of (15) is upper-bounded by , where is

This concludes our proof, where the concentration inequality is

∎
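The two ingredients of this proof, the variance of a mixture and Chebyshev's inequality, can be illustrated with a small exact computation. The sketch below assumes discrete per-domain loss distributions and uses the standard decomposition of a mixture's variance into a within-component part and a between-component part; it does not reproduce the exact constant of the theorem. The helper `chebyshev_radius` and the example numbers are hypothetical.

```python
import math


def mean_var(values, probs):
    """Mean and variance of a discrete random variable."""
    mean = sum(v * p for v, p in zip(values, probs))
    var = sum(p * (v - mean) ** 2 for v, p in zip(values, probs))
    return mean, var


def mixture_var_direct(components, weights):
    """Variance of the mixture computed on the pooled distribution."""
    values, probs = [], []
    for w, (vals, ps) in zip(weights, components):
        values.extend(vals)
        probs.extend(w * p for p in ps)
    return mean_var(values, probs)[1]


def mixture_var_decomposed(components, weights):
    """Within-component variance plus spread of the component means."""
    stats = [mean_var(vals, ps) for vals, ps in components]
    overall = sum(w * m for w, (m, _) in zip(weights, stats))
    within = sum(w * v for w, (_, v) in zip(weights, stats))
    between = sum(w * (m - overall) ** 2 for w, (m, _) in zip(weights, stats))
    return within + between


def chebyshev_radius(variance, n_samples, delta):
    """Radius eps with P(|sample mean - mean| >= eps) <= delta by Chebyshev."""
    return math.sqrt(variance / (n_samples * delta))


# Hypothetical 0/1 losses on two source domains with different error rates.
components = [([0.0, 1.0], [0.9, 0.1]), ([0.0, 1.0], [0.4, 0.6])]
weights = [0.5, 0.5]
var = mixture_var_decomposed(components, weights)
print(var, chebyshev_radius(var, n_samples=1000, delta=0.05))
```

The between-component part grows when the per-domain mean losses differ, which is why domains that behave differently make the estimate less concentrated.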

Appendix C: Trade-Off in Learning DI Representations

Lemma 17.

Given a labeling function and a hypothesis , let denote and as two label marginal distributions induced by and on the data distribution . In particular, to sample , we first sample (i.e., is the data distribution with the density function ) and then sample , and similarly to sample . We then have

where the loss is defined based on the Hellinger loss .

Proof.

We have

where we note that in the derivation in , we use Cauchy-Schwarz inequality: .

Therefore, we reach the conclusion as

∎

Lemma 18.

Consider the hypothesis . We have the following inequalities w.r.t. the source and target domains:

(i) where is the label marginal distribution induced by on , while is the label marginal distribution induced by on .

(ii) , where with to be induced by on and with to be induced by on (i.e., equivalently, the label marginal distribution induced by on ).

Proof.

(i) The proof of this part is obvious from Lemma 17 by considering as and as .

(ii) By the convexity of , which is a member of -divergence family, we have

where the derivation in is from Lemma 17. Therefore, we reach the conclusion as

∎

Theorem 19.

(Theorem 8 in the main paper) Consider a feature extractor and a hypothesis , the Hellinger distance between two label marginal distributions and can be upper-bounded as:

(i)

(ii)

Here we note that the general loss is based on the Hellinger loss, defined as .

Proof.

(i) We define and as in Lemma 18. Recall that to sample , we sample (i.e., ), compute (i.e., ), and , and similarly to draw .

Using triangle inequality for Hellinger distance, we have

Referring to Lemma 18, we achieve

From the monotonicity of Hellinger distance, when applying to and with the same transition probability for obtaining , we have

Finally, we reach the conclusion as

(ii) From Lemma 12, we can decompose the data shift term and use triangle inequality again, hence arriving at

This concludes our proof. ∎
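The monotonicity step used in part (i) says that passing two distributions through the same transition probability cannot increase their Hellinger distance. As a minimal numerical sketch, assuming discrete distributions, a row-stochastic matrix as the transition probability, and the Hellinger distance with a factor 1/2, this can be checked on random instances. All names are illustrative.

```python
import math
import random


def hellinger(p, q):
    """Hellinger distance between two discrete distributions (factor 1/2 assumed)."""
    return math.sqrt(0.5 * sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(p, q)))


def random_dist(rng, n):
    """Draw a random probability vector with n strictly positive entries."""
    weights = [rng.random() + 1e-9 for _ in range(n)]
    total = sum(weights)
    return [w / total for w in weights]


def push_through(p, kernel):
    """Output distribution when the input follows p and kernel[i] is the conditional law given i."""
    n_out = len(kernel[0])
    return [sum(p[i] * kernel[i][j] for i in range(len(p))) for j in range(n_out)]


def check_monotonicity(seed, n_in=5, n_out=3):
    """Return the Hellinger distance before and after applying a shared random kernel."""
    rng = random.Random(seed)
    p = random_dist(rng, n_in)
    q = random_dist(rng, n_in)
    kernel = [random_dist(rng, n_out) for _ in range(n_in)]
    before = hellinger(p, q)
    after = hellinger(push_through(p, kernel), push_through(q, kernel))
    return before, after


print(check_monotonicity(seed=0))
```

Here the input distributions play the role of feature distributions and the kernel plays the role of the shared classifier, so the distance between induced label marginals never exceeds the distance between the feature distributions.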

The loss in Theorem 19 is based on the Hellinger loss, defined as , while the theory developed in the previous sections is based on a loss with the specific form

| (16) |

To make this more consistent, we discuss under which condition the Hellinger loss is in the family defined in (16). It is evident that if the labeling function satisfies and , for example, when we apply label smoothing [49, 36] to the ground-truth labels, the Hellinger loss is in the family of interest. This follows from the derivation below:

where we consider .
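As an illustration of the label smoothing mentioned above, the sketch below builds a smoothed label whose entries are all strictly positive and evaluates a Hellinger-type loss against a prediction. The smoothing rule (spreading a mass eps uniformly over the classes) is the usual one from the label smoothing literature; the form of `hellinger_loss`, a sum of squared differences of square roots without any leading constant, is an assumption and may differ from the paper's definition by a constant factor.

```python
import math


def smooth_label(class_index, num_classes, eps=0.1):
    """One-hot label mixed with the uniform distribution; every entry is positive."""
    if not 0.0 < eps < 1.0:
        raise ValueError("eps must lie strictly between 0 and 1")
    base = eps / num_classes
    return [base + (1.0 - eps if k == class_index else 0.0) for k in range(num_classes)]


def hellinger_loss(pred, label):
    """Assumed Hellinger-type loss between a prediction and a label on the simplex."""
    return sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(pred, label))


label = smooth_label(class_index=2, num_classes=4, eps=0.1)
prediction = [0.1, 0.1, 0.7, 0.1]
print(label, hellinger_loss(prediction, label))
```

With smoothing, no class receives exactly zero probability, which is the kind of positivity condition on the labeling function referred to above.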

Appendix D: Additional Experiments

D.1 Experiment on Colored MNIST

D.1.1 Dataset

We conduct experiments on the Colored MNIST dataset [2], whose data is generated as follows. Firstly, for any original image in the MNIST dataset [24], the value of digit feature is if the image’s digit is from , while is assigned to an image with a digit from . Next, the ground-truth label for the image is also binary and sampled from either or , depending on the value of digit feature . These binomial distributions are such that . Next, the color feature binary random variable is assigned to each image conditioning on its label, i.e., or with , depending on the domain. Finally, we color the image red if or green if .

For both DG and MSDA experiments, there are 7 source domains generated by setting where for . In our actual implementation, we take . The two target domains are created with for . After domain creation, data from each domain is split into a training set and a validation set. For the DG experiment, no data from the target domain is used in training. On the other hand, the same train-validation split is applied to target domains in MSDA and the unlabeled training splits are used for training, while the validation splits are used for testing.
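The generative process described above can be sketched in a few lines. Every concrete value here is an assumption made for illustration: digits 0 to 4 map to digit feature 0 and digits 5 to 9 to feature 1, the label noise rate defaults to 0.25 as in the original Colored MNIST construction of [2], color 1 is rendered as red, and `color_flip_prob` stands in for the domain-dependent probability that the color disagrees with the label. The function names are hypothetical and not taken from the released code.

```python
import random


def sample_attributes(digit, color_flip_prob, label_noise=0.25, rng=random):
    """Sample (digit feature, label, color) for one image of a given domain.

    Assumptions: digits 0-4 give feature 0 and digits 5-9 give feature 1; the
    label is the feature flipped with probability label_noise; the color is
    the label flipped with the domain-specific probability color_flip_prob.
    """
    digit_feature = 0 if digit < 5 else 1
    label = digit_feature
    if rng.random() < label_noise:
        label = 1 - label
    color = label
    if rng.random() < color_flip_prob:
        color = 1 - color
    return digit_feature, label, color


def colorize(gray_image, color):
    """Return a two-channel image [red, green] with the unused channel zeroed.

    Assumption: color 1 means red and color 0 means green.
    """
    pixels = [list(row) for row in gray_image]
    zeros = [[0 for _ in row] for row in gray_image]
    return [pixels, zeros] if color == 1 else [zeros, pixels]


rng = random.Random(0)
# A hypothetical domain in which the color disagrees with the label 10% of the time.
feature, label, color = sample_attributes(digit=7, color_flip_prob=0.1, rng=rng)
image = colorize([[0, 255], [255, 0]], color)
print(feature, label, color, image)
```

Varying `color_flip_prob` across calls produces domains in which color is a more or less reliable predictor of the label, which is what distinguishes source domains from the close and distant target domains.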

D.1.2 Model

We train a hypothesis and minimize the classification loss w.r.t. entire source data

where CE is the cross-entropy loss, is the number of samples from domain , and is the total number of source samples.

To align source-source representation distribution, we apply adversarial learning [16] as in [14], in which a min-max game is played, where the domain discriminator tries to predict domain labels from input representations, while the feature extractor (generator) tries to fool the domain discriminator, i.e., . The source-source compression loss is defined as

where is the domain label. It is well-known [16] that if we search in a family with infinite capacity then

.

Similarly, alignment between source and target feature distributions is enforced by employing adversarial learning between another discriminator and the encoder , with the objective . The loss function for source-target compression is

where is the source mixture and is the chosen target domain among the two.

Finally, for DG we optimize the objective

| (17) |

where is the trade-off hyperparameter: corresponds to DG’s general DI representation, while larger corresponds to a more compressed DI representation. On the other hand, the objective for the MSDA setting is

| (18) |

where controls the source-source compression and controls the source-target compression.

Our implementation is based largely on the DomainBed repository [17]. Specifically, the encoder is a convolutional neural network with 4 convolutional layers, each accompanied by ReLU activation and batch normalization, while the classifier and discriminators are densely connected multi-layer perceptrons with 3 layers. Our code can be found in the zip file accompanying this appendix. Moreover, our experiments were run on one Tesla V100 GPU, and one training run on Colored MNIST took around 30 minutes.

D.1.3 Multiple-source Domain Adaptation

In addition to the DG experiment provided in Section 3 of the main paper, a further experiment is conducted on MSDA, in which source-target compression is applied in addition to source-source compression. Specifically, unlabeled data from a target domain is supplied for training, whose label is while all labeled source data has label , and the source-target discriminator is tasked with classifying them. We experiment with 2 target domains separately and report accuracy on the target domain for different compression strengths. The result is presented in Figure 5.

It is evident from the figure that the stronger the compression on both source-source and source-target representations, the lower the accuracy. This result aligns with our previous bound (Theorem 8 in the main paper), i.e.,

When source-source and source-target compression is applied, the term is minimized, raising the lower bound of the loss terms. Subsequently, as source loss is minimized, the target loss is high and hence performance is hindered.

However, the drop in accuracy for large compression on the close target domain is not as significant as on the distant target domain, as indicated in Figures 5b and 5c. In fact, the accuracy for some compressed representations is higher than for the uncompressed representation at larger iterations. It is possible that the negative effect of raising the lower bound, as in the trade-off theorem, is counteracted by the benefit of the compressed DI representation (Section 2.3.3 of the main paper), i.e., the learned classifier for the compressed DI representation better approximates the ground-truth labeling function. On the other hand, the model with the general DI representation cannot approximate this ground-truth labeling function as accurately and instead overfits to the training dataset, resulting in a drop in target accuracy at larger iterations.

D.2 Experiment on PACS dataset

In order to verify our theoretical findings on a real dataset, we conduct a further experiment on the PACS dataset [26], which has 4 domains: Photo, Art Painting, Cartoon, and Sketch. Among the 4 domains, Photo and Cartoon are chosen as training domains, while Art Painting is chosen as the target domain close to the training ones, and Sketch is the target domain distant from the training ones. We use ResNet-18 [18] as the feature map, while the label classifier and domain discriminator are multi-layer perceptrons. We only investigate the DG setting on this dataset, in which the training objective function is Eq. (17). The result in Figure 6 illustrates a pattern similar to that of the DG experiment on Colored MNIST. Specifically, the accuracies for both target domains rise until a peak is reached and then decrease, which confirms our developed theory for the benefit and trade-off of the compressed DI representation.