On Finite-Time Mutual Information

Abstract

The Shannon-Hartley theorem accurately gives the channel capacity when the signal observation time is infinite. However, the calculation of the finite-time mutual information, which remains unknown, is essential for guiding the design of practical communication systems. In this paper, we investigate the mutual information between two correlated Gaussian processes within a finite-time observation window. We first derive the finite-time mutual information by providing a limit expression. Then we numerically compute the mutual information within a single finite-time window. We reveal that the number of bits transmitted per second within the finite-time window can exceed the mutual information averaged over the entire time axis, which we call the exceed-average phenomenon. Furthermore, we derive a finite-time mutual information formula for a typical signal autocorrelation by utilizing the Mercer expansion of trace class operators, and reveal the connection between the finite-time mutual information problem and operator theory. Finally, we analytically prove the existence of the exceed-average phenomenon in this typical case, and demonstrate its compatibility with the Shannon capacity.

Index Terms:

Finite-time mutual information, exceed-average, Mercer expansion, trace class, operator theory.

I Introduction

The Shannon-Hartley theorem [1] reveals the fundamental theoretical limit $C$ of the information transmission rate, also called the Shannon capacity, over a Gaussian waveform channel of limited bandwidth $W$. The expression for the Shannon capacity is $C = W\log(1 + S/N)$, where $S$ and $N$ denote the signal power and the noise power, respectively. The derivation of the Shannon-Hartley theorem depends heavily on the Nyquist sampling principle [2]. The Nyquist sampling principle, also known as the $2WT$ theorem [3], claims that one can obtain only approximately $2WT$ independent samples within an observation time window $T$ in a channel band-limited to $W$ [4].

Based on the Nyquist sampling principle, the Shannon capacity is derived by first multiplying the capacity of a Gaussian symbol channel [5, p.249] by $2WT$, then dividing the result by $T$, and finally letting $T \to \infty$. Note that this approximation holds only when $T \to \infty$. Therefore, the Shannon capacity holds only asymptotically, as $T$ becomes sufficiently large. When $T$ is finite, the approximation fails. Thus, when the observation time is finite, the Shannon-Hartley theorem cannot be directly applied to calculate the capacity in a finite-time window. To the best of our knowledge, the evaluation of the finite-time mutual information has not yet been investigated in the literature. Real-world communication systems transmit signals in a finite-time window, so evaluating the finite-time mutual information is of practical significance.
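The limiting argument described above can be written out explicitly. The following is a sketch, assuming that each of the roughly $2WT$ independent samples carries the Gaussian symbol-channel capacity $\frac{1}{2}\log(1+S/N)$, with $W$ the bandwidth, $T$ the observation time, $S$ the signal power, and $N$ the noise power; the $o(T)$ term stands for the unspecified correction to the sample count.

```latex
\begin{align}
C &= \lim_{T\to\infty}\frac{1}{T}\Bigl[\bigl(2WT + o(T)\bigr)\cdot\frac{1}{2}\log\Bigl(1+\frac{S}{N}\Bigr)\Bigr] \\
  &= W\log\Bigl(1+\frac{S}{N}\Bigr)
     + \frac{1}{2}\log\Bigl(1+\frac{S}{N}\Bigr)\lim_{T\to\infty}\frac{o(T)}{T} \\
  &= W\log\Bigl(1+\frac{S}{N}\Bigr).
\end{align}
```

The correction term vanishes only in the limit, which is why the formula by itself gives no precise statement for a finite $T$.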

In this paper, to fill this gap, we analyze the finite-time mutual information instead of the traditional infinite-time counterpart, and prove the existence of the exceed-average phenomenon within a finite-time observation window. (Footnote 1: Simulation codes will be provided to reproduce the results presented in this paper: http://oa.ee.tsinghua.edu.cn/dailinglong/publications/publications.html.) Specifically, our contributions are summarized as follows:

- We derive the mutual information expressions within a finite-time observation window by using dense sampling and limiting methods. In this way, we overcome the difficulties that arise when analyzing the information contained in a continuous time interval. These finite-time mutual information expressions make the analysis of finite-time problems possible.

- We conduct numerical experiments based on the discretized formulas. In the numerical results under a special setting, we reveal the exceed-average phenomenon, i.e., the mutual information within a finite-time observation window exceeds the mutual information averaged over the whole time axis.

- In order to analytically prove the exceed-average phenomenon, we first derive an analytical finite-time mutual information formula based on the Mercer expansion [6], which reveals the connection between the mutual information problem and operator theory [7]. To make the problem tractable, we construct a typical case in which the transmitted signal has certain statistical properties. Utilizing this construction, we obtain a closed-form mutual information solution in this typical case, which leads to a rigorous proof of the exceed-average phenomenon.

Organization: In the rest of this paper, the finite-time mutual information is formulated and evaluated numerically in Section II, where the exceed-average phenomenon is first discovered. Then, in Section III, we derive a closed-form finite-time mutual information formula under a typical case. Based on this formula, in Section IV, the exceed-average phenomenon is rigorously proved. Finally, conclusions are drawn in Section V.

Notations: $X(t)$ denotes a Gaussian process; $R_X(t_1, t_2)$ denotes the autocorrelation function of $X(t)$; $S_X(f)$ is the power spectral density (PSD) of the corresponding process $X(t)$; boldface italic symbols such as $\boldsymbol{X}$ denote the column vector generated by taking samples of $X(t)$ at the instants $t_1, \dots, t_n$; upper-case boldface letters such as $\mathbf{K}$ denote matrices; $\mathbb{E}[\cdot]$ denotes the expectation; $\mathbb{1}_A(\cdot)$ denotes the indicator function of the set $A$; $\mathcal{L}^2([0, T])$ denotes the collection of all square-integrable functions on the window $[0, T]$; $\mathrm{i}$ denotes the imaginary unit.

II Numerical Analysis of the Finite-Time Mutual Information

In this section, we numerically evaluate the finite-time mutual information. In Subsection II-A, we model the transmission problem by Gaussian processes, and derive the mutual information expressions within a finite-time observation window; In Subsection II-B, we approximate the finite-time mutual information by discretized matrix-based formulas; In Subsection II-C, we reveal the exceed-average phenomenon by numerically evaluating the finite-time mutual information.

II-A The Expressions for Finite-Time Mutual Information

Inspired by [8], we model the transmitted signal by a zero-mean stationary Gaussian stochastic process, denoted as $X(t)$, and the received signal by $Y(t) := X(t) + N(t)$. The noise process $N(t)$ is also a stationary Gaussian process, independent of $X(t)$. The receiver can only observe the signal within a finite-time window $[0, T]$, where $T > 0$ is the observation window span. We aim to find the maximum mutual information that can be acquired within this time window.

To analytically express the amount of acquired information, we first introduce $n$ sampling points inside the time window, denoted by $(t_1, t_2, \dots, t_n)$, and then let $n \to \infty$ to approximate the finite-time mutual information. By defining $\boldsymbol{X} = (X(t_1), \dots, X(t_n))^{\mathsf{T}}$ and $\boldsymbol{Y} = (Y(t_1), \dots, Y(t_n))^{\mathsf{T}}$, the mutual information on these samples can be expressed as

$$I(t_1, \dots, t_n; T) = I(\boldsymbol{X}; \boldsymbol{Y}), \tag{1}$$

and the finite-time mutual information is defined as

$$I(T) := \lim_{n \to \infty} \sup_{\{t_i\}_{i=1}^{n} \subset [0, T]} I(t_1, \dots, t_n; T). \tag{2}$$

Then, the transmission rate can be defined as $C(T) := I(T)/T$. With these definitions, we can define the limit mutual information as $C(\infty) := \lim_{T \to \infty} C(T)$ by letting $T \to \infty$.

II-B Discretization

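Without loss of generality, we fix the sampling instants uniformly onto fractions of $T$: $t_i = (i-1)T/n$, $1 \le i \le n$. Since the random vectors $\boldsymbol{X}$ and $\boldsymbol{Y}$ are samples of Gaussian processes, they are both Gaussian random vectors with mean zero and covariance matrices $\mathbf{K}_X$ and $\mathbf{K}_Y$, where $\mathbf{K}_X, \mathbf{K}_Y \in \mathbb{R}^{n \times n}$ are symmetric positive-definite matrices defined as

$$(\mathbf{K}_X)_{i,j} = R_X(t_i, t_j), \qquad (\mathbf{K}_Y)_{i,j} = R_Y(t_i, t_j). \tag{3}$$

Note that $Y(t)$ is the independent sum of $X(t)$ and $N(t)$, thus the autocorrelation functions satisfy $R_Y = R_X + R_N$, and similarly the covariance matrices satisfy $\mathbf{K}_Y = \mathbf{K}_X + \mathbf{K}_N$.

The mutual information is defined as $I(\boldsymbol{X}; \boldsymbol{Y}) = h(\boldsymbol{Y}) - h(\boldsymbol{Y} \mid \boldsymbol{X})$, where $h(\cdot)$ denotes the differential entropy. Utilizing the entropy formula for $n$-dimensional Gaussian vectors [5], we obtain

$$I(t_1, \dots, t_n; T) = \frac{1}{2}\log\frac{\det(\mathbf{K}_X + \mathbf{K}_N)}{\det(\mathbf{K}_N)}. \tag{4}$$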
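The discretized log-determinant formula for Gaussian vectors can be evaluated in a few lines. The following is a minimal sketch, not the authors' simulation code: it assumes the uniform grid $t_i = (i-1)T/n$, uses natural logarithms (nats), and the two autocorrelation functions `r_signal` and `r_noise` are hypothetical choices made only for illustration.

```python
import numpy as np

def sampled_mutual_information(r_x, r_n, T, n):
    """Mutual information (nats) between n uniform samples of X and Y = X + N."""
    # Assumed sampling grid: t_i = (i - 1) * T / n for i = 1..n.
    t = np.arange(n) * T / n
    tau = t[:, None] - t[None, :]      # matrix of lags t_i - t_j
    K_x = r_x(tau)                     # signal covariance matrix
    K_n = r_n(tau)                     # noise covariance matrix
    # slogdet avoids overflow or underflow of the determinant for large n.
    sign_y, logdet_y = np.linalg.slogdet(K_x + K_n)
    sign_n, logdet_n = np.linalg.slogdet(K_n)
    if sign_y <= 0 or sign_n <= 0:
        raise ValueError("covariance matrices must be positive definite")
    return 0.5 * (logdet_y - logdet_n)

# Hypothetical stationary autocorrelations, chosen only for illustration.
def r_signal(tau):
    return np.exp(-np.abs(tau))

def r_noise(tau):
    return 0.5 * np.exp(-5.0 * np.abs(tau))

T = 1.0
for n in (4, 8, 16, 32):
    i_n = sampled_mutual_information(r_signal, r_noise, T, n)
    print(f"n = {n:3d}: I = {i_n:.4f} nats, rate = {i_n / T:.4f} nats/s")
```

Doubling `n` on this grid keeps all previous sampling instants, so the computed mutual information cannot decrease from one printed row to the next.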

II-C Numerical Analysis

In order to study the properties of mutual information as a function of , we set the autocorrelation function of the signal process and noise process to the following special case

| (5) | ||||

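where , and the corresponding PSDs are and . In order to compare the finite-time mutual information with the averaged mutual information, the Shannon limit with a colored noise spectrum is utilized, which is a generalized version of the well-known formula $C = W\log(1 + S/N)$. The averaged mutual information $C_{\mathrm{av}}$ under a colored noise PSD [5] is expressed as

$$C_{\mathrm{av}} := \frac{1}{2}\int_{-\infty}^{+\infty}\log\left(1 + \frac{S_X(f)}{S_N(f)}\right)\mathrm{d}f, \tag{6}$$

where $S_X(f)$ and $S_N(f)$ denote the PSDs of the signal process and the noise process, respectively.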

Then, plugging (5) into (6) yields the numerical result for .

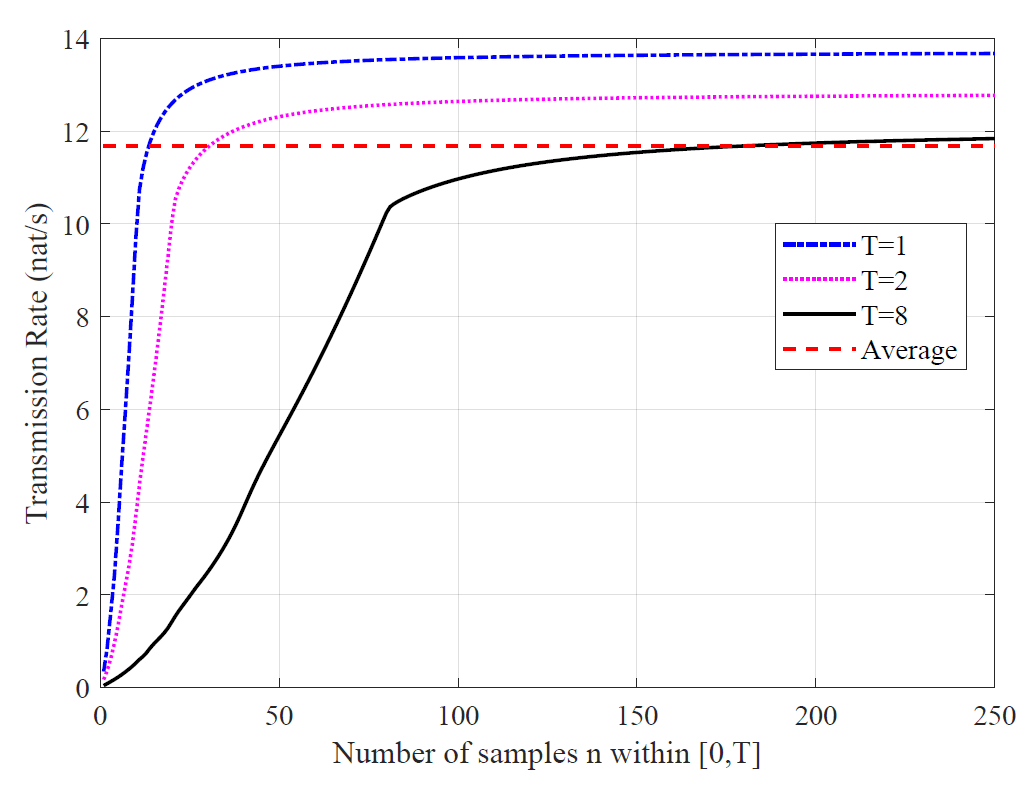

We calculate the finite-time transmission rate and the average mutual information against the number of samples $n$ within the observation window. The numerical results are collected in Fig. 1. They show that the approximated rate is an increasing function of $n$ and that, for fixed values of $T$, the approximated finite-time mutual information tends to a finite limit under the correlation assumptions given by (5). The most striking observation is that it is possible to obtain more information within a finite-time window than predicted by averaging the mutual information along the entire time axis, as in (6). We call this phenomenon the exceed-average phenomenon.

III A Closed-Form Finite-Time Mutual Information Formula

In this section, we first introduce the Mercer expansion in Subsection III-A as a basic tool for our analysis. Then we derive the series representation of the finite-time mutual information, and the corresponding power constraint in Subsection III-B, under the assumption of AWGN noise.

III-A The Mercer Expansion

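By analyzing the structure of the autocorrelation functions, it is possible to obtain the finite-time mutual information $I(T)$ and the rate $C(T) = I(T)/T$ directly. In fact, if we know the Mercer expansion [6] of the autocorrelation function $R_X(t_1, t_2)$ on the interval $[0, T]$, then we can calculate $I(T)$ more easily [9]. In the following discussion, we assume the Mercer expansion of the source autocorrelation function $R_X(t_1, t_2)$ to be in the following form

$$\lambda_k \phi_k(t_1) = \int_0^T R_X(t_1, t_2)\,\phi_k(t_2)\,\mathrm{d}t_2, \quad k = 1, 2, \dots, \tag{7}$$

where the eigenvalues are positive: $\lambda_k > 0$, and the eigenfunctions form an orthonormal set:

$$\int_0^T \phi_i(t)\,\phi_j(t)\,\mathrm{d}t = \delta_{ij}. \tag{8}$$

Mercer's theorem [6] ensures the existence and uniqueness of the eigenpairs $(\lambda_k, \phi_k)_{k=1}^{\infty}$, and furthermore, the kernel itself can be expanded in terms of the eigenfunctions:

$$R_X(t_1, t_2) = \sum_{k=1}^{\infty} \lambda_k\,\phi_k(t_1)\,\phi_k(t_2). \tag{9}$$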
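When no analytical Mercer expansion is available, the eigenpairs can be approximated numerically by discretizing the integral operator (the Nyström method). The sketch below is an illustration only: the midpoint quadrature, the function name `nystrom_eigenpairs`, and the exponential kernel with unit power and unit decay rate are all assumptions, not part of the paper.

```python
import numpy as np

def nystrom_eigenpairs(kernel, T, n):
    """Approximate Mercer eigenpairs of kernel(t1, t2) on [0, T] with n midpoint nodes."""
    h = T / n
    t = (np.arange(n) + 0.5) * h            # midpoint quadrature nodes
    K = kernel(t[:, None], t[None, :])      # n x n kernel matrix
    # Discretizing the integral operator gives the symmetric matrix h * K.
    evals, evecs = np.linalg.eigh(h * K)
    order = np.argsort(evals)[::-1]         # largest eigenvalue first
    evals = evals[order]
    # Rescale so that sum(phi_k(t_i)^2) * h = 1, i.e. unit L2 norm on [0, T].
    phis = evecs[:, order] / np.sqrt(h)
    return t, evals, phis

# Hypothetical kernel for illustration: exponential autocorrelation, power 1, decay 1.
def exp_kernel(t1, t2):
    return np.exp(-np.abs(t1 - t2))

T, n = 1.0, 400
t, lam, phi = nystrom_eigenpairs(exp_kernel, T, n)
h = T / n
print("largest eigenvalues:", lam[:4])
print("sum of eigenvalues :", lam.sum())    # equals h * trace(K), i.e. power * T here
gram = phi[:, :4].T @ phi[:, :4] * h        # discrete version of the orthonormality integral
print("orthonormality err :", np.abs(gram - np.eye(4)).max())
```

The sum of the discrete eigenvalues reproduces the trace of the operator exactly for this quadrature, because the kernel equals the signal power on the diagonal.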

III-B Finite-Time Mutual Information Formula

Based on Mercer expansion, we obtain a closed-form formula in the following Theorem 1.

Theorem 1 (Source expansion, AWGN noise)

Suppose the information source, modeled by the transmitted process $X(t)$, has autocorrelation function $R_X(t_1, t_2)$. AWGN with PSD $n_0/2$ is imposed onto $X(t)$, resulting in the received process $Y(t)$. The Mercer expansion of $R_X(t_1, t_2)$ on $[0, T]$ is given by (7), satisfying (8). Then the finite-time mutual information $I(T)$ within the observation window $[0, T]$ between the processes $X(t)$ and $Y(t)$ can be expressed as

$$I(T) = \frac{1}{2}\sum_{k=1}^{\infty}\log\left(1 + \frac{\lambda_k}{n_0/2}\right). \tag{10}$$

Proof:

The Mercer expansion decomposes the AWGN channel into an infinite number of independent parallel subchannels, each with signal power $\lambda_k$ and noise variance $n_0/2$. Thus, accumulating the mutual information values of all these subchannels yields the finite-time mutual information $I(T)$. ∎

From Theorem 1 we can conclude that the finite-time mutual information of the AWGN channel is uniquely determined by the Mercer spectrum $\{\lambda_k\}$ of $R_X(t_1, t_2)$ within $[0, T]$. However, it remains unknown whether the series representation (10) converges. In fact, the convergence is closely related to the signal power, which is calculated in the following Lemma 1.

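Lemma 1 (Operator Trace Coincides with Power Constraint)

Let $X(t)$ be a zero-mean stationary Gaussian process with autocorrelation function $R_X(t_1, t_2)$ and power $P = R_X(t, t)$, and let $\{\lambda_k\}$ be the Mercer eigenvalues of $R_X$ on $[0, T]$. Then the trace of the corresponding integral operator on $\mathcal{L}^2([0, T])$ satisfies

$$\sum_{k=1}^{\infty}\lambda_k = \int_0^T R_X(t, t)\,\mathrm{d}t = PT. \tag{11}$$

Proof:

Setting $t_1 = t_2 = t$ in (9) and integrating both sides over the interval $[0, T]$ gives the conclusion immediately, by the orthonormality (8). ∎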

Remark 1

From Lemma 1 above, we can conclude that the sum of the eigenvalues $\lambda_k$ is finite when the power $P$ is finite. It can be immediately derived that $I(T) < \infty$, since $\log(1 + x) \le x$.
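The finiteness argument can be made quantitative. The following worked bound is a sketch under stated assumptions: natural logarithms, Mercer eigenvalues $\lambda_k$ with orthonormal eigenfunctions $\phi_k$ on $[0, T]$, a stationary signal of power $P$ so that $R_X(t, t) = P$, and white noise of PSD $n_0/2$ so that the series formula of Theorem 1 reads as in the last line. The interchange of sum and integral relies on the uniform convergence of the Mercer series for a continuous kernel.

```latex
\begin{align}
\sum_{k=1}^{\infty}\lambda_k
  &= \sum_{k=1}^{\infty}\lambda_k\int_0^T \phi_k^2(t)\,\mathrm{d}t
   = \int_0^T R_X(t,t)\,\mathrm{d}t = PT, \\
I(T) &= \frac{1}{2}\sum_{k=1}^{\infty}\log\Bigl(1+\frac{2\lambda_k}{n_0}\Bigr)
   \le \frac{1}{2}\sum_{k=1}^{\infty}\frac{2\lambda_k}{n_0}
   = \frac{PT}{n_0}.
\end{align}
```

In particular, the rate $I(T)/T$ is at most $P/n_0$ nats per second for every window length $T$.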

Remark 2

The finite-time mutual information formula (10) is closely related to operator theory [7] in functional analysis. The sum of all the eigenvalues is called the operator trace in linear operator theory. As mentioned in Lemma 1, the autocorrelation function $R_X(t_1, t_2)$ can be treated as the kernel of a linear operator on $\mathcal{L}^2([0, T])$.

IV Proof of the Existence of Exceed-Average Phenomenon

In this section, we first give a proof of the existence of the exceed-average phenomenon in a typical case, then we discuss the compatibility with the Shannon-Hartley theorem.

IV-A Closed-Form Mutual Information in a Typical Case

To prove the exceed-average phenomenon, we only need to show that the finite-time mutual information is greater than the averaged mutual information in a typical case. Let us consider a finite-time communication scheme with a finite-power stationary transmitted signal whose autocorrelation (footnote 2: this signal autocorrelation is observed in many scenarios, such as passing a signal with a white spectrum through an RC lowpass filter) is specified as

$$R_X(t_1, t_2) = P e^{-\alpha|t_1 - t_2|}, \tag{12}$$

where $\alpha > 0$, under an AWGN channel with noise PSD $n_0/2$. The power of the signal is $P$. According to Lemma 1, the trace of the corresponding Mercer operator is finite. Then the finite-time mutual information $I(T)$ given by Theorem 1 is also finite, as shown in Remark 1. Finding the Mercer expansion is equivalent to finding the eigenpairs $(\lambda_k, \phi_k)_{k=1}^{\infty}$. The eigenpairs are determined by the following characteristic integral equation [10]:

$$\lambda_k\phi_k(t) = \int_0^T P e^{-\alpha|t - s|}\,\phi_k(s)\,\mathrm{d}s. \tag{13}$$

Differentiating both sides of (13) twice with respect to $t$ yields the boundary conditions and the differential equation that $\phi_k$ must satisfy:

$$\lambda_k\phi_k''(t) = (\alpha^2\lambda_k - 2\alpha P)\,\phi_k(t), \qquad \phi_k'(0) = \alpha\phi_k(0), \qquad \phi_k'(T) = -\alpha\phi_k(T). \tag{14}$$
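The step from the integral equation to a differential equation can be sketched as follows. This is a worked computation under the assumption that the autocorrelation of (12) is the exponential kernel $P e^{-\alpha|t - s|}$ on $[0, T]$; $\lambda$ and $\phi$ denote a generic eigenpair, and the symbol $\omega$ is introduced here for the oscillation frequency.

```latex
\begin{align}
\lambda\phi(t) &= P\int_0^{t} e^{-\alpha(t-s)}\phi(s)\,\mathrm{d}s
               + P\int_t^{T} e^{-\alpha(s-t)}\phi(s)\,\mathrm{d}s, \\
\lambda\phi'(t) &= -\alpha P\int_0^{t} e^{-\alpha(t-s)}\phi(s)\,\mathrm{d}s
               + \alpha P\int_t^{T} e^{-\alpha(s-t)}\phi(s)\,\mathrm{d}s, \\
\lambda\phi''(t) &= \alpha^2\lambda\phi(t) - 2\alpha P\phi(t), \\
\lambda\phi'(0) &= \alpha\lambda\phi(0), \qquad
\lambda\phi'(T) = -\alpha\lambda\phi(T), \\
\omega^2 &:= \frac{2\alpha P}{\lambda} - \alpha^2
\;\Longrightarrow\;
\phi''(t) + \omega^2\phi(t) = 0, \qquad
\lambda = \frac{2\alpha P}{\alpha^2 + \omega^2}.
\end{align}
```

In the second line the boundary terms $P\phi(t)$ and $-P\phi(t)$ from the two integrals cancel; in the third line they add up to $-2\alpha P\phi(t)$. The boundary conditions follow by evaluating the first two lines at $t = 0$ and $t = T$, where one of the two integrals vanishes.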

Let denote the resonant frequency of the above harmonic oscillator equation, and let be the sinusoidal form of the eigenfunction. Using the boundary conditions we obtain

| (15) | ||||

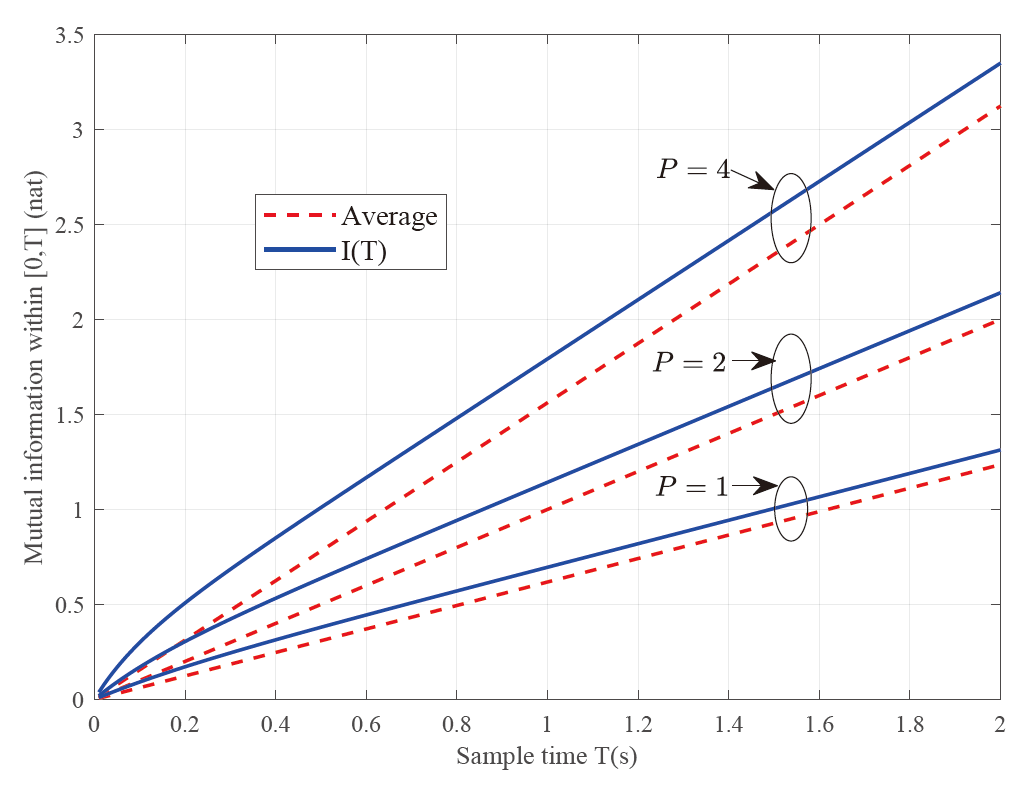

To ensure the existence of a nontrivial solution to the homogeneous linear equations (15) with unknowns , the determinant must be zero. Exploiting this condition, we find that naturally satisfy the transcendental equation . By introducing an auxiliary variable , this transcendental equation can be simplified as , i.e., there exists a positive integer such that . The integer can be chosen to be equal to . From the function images of and (Fig. 2), we can determine , and then . To sum up, the solutions to the characteristic equation (13) are collected into (16) as follows.

| (16) | ||||

where denotes the normalization constants of on to ensure orthonormality.

Equation (16) gives all the eigenpairs , from which we can calculate by applying Theorem 1. As for the Shannon limit , by applying (6) and evaluating the integral with [11] we can obtain

| (17) |
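The averaged mutual information for this typical case can be cross-checked numerically. The sketch below rests on assumptions that are not taken verbatim from the paper: the signal autocorrelation is $P e^{-\alpha|\tau|}$ with PSD $2\alpha P/(\alpha^2 + 4\pi^2 f^2)$, the noise PSD is $n_0/2$, logarithms are natural, and the closed form $\frac{\alpha}{2}\bigl(\sqrt{1 + 4P/(\alpha n_0)} - 1\bigr)$ is our own evaluation of the integral, which may differ in presentation from (17). The parameter values are hypothetical.

```python
import numpy as np

def c_av_closed_form(P, alpha, n0):
    """Closed-form averaged mutual information (nats/s) under the stated assumptions."""
    return 0.5 * alpha * (np.sqrt(1.0 + 4.0 * P / (alpha * n0)) - 1.0)

def c_av_numeric(P, alpha, n0, f_max=2000.0, num=400_001):
    """Trapezoidal evaluation of 0.5 * integral of log(1 + S_X(f) / (n0 / 2)) over all f."""
    f = np.linspace(0.0, f_max, num)
    # PSD of the exponential autocorrelation P * exp(-alpha * |tau|).
    s_x = 2.0 * alpha * P / (alpha**2 + (2.0 * np.pi * f) ** 2)
    g = np.log1p(s_x / (n0 / 2.0))
    df = f[1] - f[0]
    half_line = df * (g.sum() - 0.5 * (g[0] + g[-1]))   # trapezoidal rule on [0, f_max]
    # The integrand is even in f, so the two-sided integral is twice the half-line
    # value; the prefactor 1/2 of the formula cancels that factor of two.
    return half_line

P, alpha, n0 = 1.0, 1.0, 1.0    # hypothetical parameter values
print("numeric    :", c_av_numeric(P, alpha, n0))
print("closed form:", c_av_closed_form(P, alpha, n0))
```

The integrand decays like $1/f^2$, so truncating at `f_max` leaves an error of order $1/f_{\max}$; the two printed values should agree to about four decimal places for these parameters.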

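After the preparations above, we can rigorously prove that the finite-time rate $I(T)/T$ exceeds the averaged mutual information $C_{\mathrm{av}}$ of (6) under the typical case of (12), as long as the transmission power $P$ is smaller than a constant $\delta$. The following Theorem 2 proves this result.

Theorem 2 (Existence of exceed-average phenomenon in a typical case)

Suppose that the transmitted signal has the autocorrelation (12) and that the channel is AWGN. Then there exists a constant $\delta > 0$ such that, for all $0 < P < \delta$,

$$\frac{I(T)}{T} > C_{\mathrm{av}}. \tag{18}$$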

Proof:

See Appendix A. ∎

To support the above theoretical analysis, numerical experiments on the finite-time rate are conducted based on evaluations of (16) and (17). As shown in Fig. 3, it seems that we can always harness more mutual information in a finite-time observation window than the averaged mutual information. This fact is somewhat unsurprising, because the observations inside the window can always eliminate some extra uncertainty outside the window due to the autocorrelation of the transmitted process.
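A comparison of this kind can be sketched numerically. The block below is an illustration under explicit assumptions, not the authors' experiment: the signal autocorrelation is $P e^{-\alpha|\tau|}$, the noise PSD is $n_0/2$, logarithms are natural, the eigenvalues are $\lambda_k = 2\alpha P/(\alpha^2 + \omega_k^2)$ with $\omega_k$ the root of $\omega T + 2\arctan(\omega/\alpha) = k\pi$ (the standard Karhunen-Loève solution for an exponential kernel, which may be parametrized differently from (16)), and the averaged rate uses our own closed-form evaluation. All parameter values are hypothetical.

```python
import math

def kl_frequencies(alpha, T, num_terms):
    """Solve omega*T + 2*atan(omega/alpha) = k*pi for k = 1..num_terms by bisection."""
    omegas = []
    for k in range(1, num_terms + 1):
        # The left-hand side is increasing in omega, and the k-th root lies in this bracket.
        lo, hi = (k - 1) * math.pi / T, k * math.pi / T
        for _ in range(60):
            mid = 0.5 * (lo + hi)
            if mid * T + 2.0 * math.atan(mid / alpha) < k * math.pi:
                lo = mid
            else:
                hi = mid
        omegas.append(0.5 * (lo + hi))
    return omegas

def finite_time_rate(P, alpha, n0, T, num_terms=5000):
    """Truncated series (1/T) * 0.5 * sum log(1 + 2*lambda_k/n0) and the eigenvalue sum."""
    total, trace = 0.0, 0.0
    for omega in kl_frequencies(alpha, T, num_terms):
        lam = 2.0 * alpha * P / (alpha**2 + omega**2)
        trace += lam
        total += 0.5 * math.log1p(2.0 * lam / n0)
    return total / T, trace

def averaged_rate_closed_form(P, alpha, n0):
    """Assumed closed form of the averaged mutual information, in nats/s."""
    return 0.5 * alpha * (math.sqrt(1.0 + 4.0 * P / (alpha * n0)) - 1.0)

P, alpha, n0, T = 1.0, 1.0, 1.0, 1.0    # hypothetical parameter values
rate, trace = finite_time_rate(P, alpha, n0, T)
c_av = averaged_rate_closed_form(P, alpha, n0)
print(f"sum of eigenvalues = {trace:.5f} (compare with P*T = {P * T})")
print(f"finite-time rate   = {rate:.4f} nats/s")
print(f"averaged rate      = {c_av:.4f} nats/s")
```

Truncating the series can only underestimate the finite-time rate, since every term is positive, so a truncated value above the averaged rate is a conservative indication of the exceed-average effect for these parameters.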

IV-B Compatibility with the Shannon-Hartley Theorem

Though the exceed-average effect does imply mathematically that the mutual information within a finite-time window is higher than the average mutual information (which coincides with the Shannon limit (6) in this case), it is still impossible to construct a long-time stable data transmission scheme above the Shannon capacity by leveraging this effect. So the exceed-average phenomenon does not contradict the Shannon-Hartley theorem. Placing additional observation windows cannot increase the average information rate, because the posterior process does not carry as much information as the original one, causing a rate reduction in the later windows. It is expected that the finite-time mutual information rate would ultimately decrease to the average mutual information as the total observation time tends to infinity (i.e., $T \to \infty$); the analytical proof, as well as the achievability of this finite-time mutual information, is still worth investigating in future works.

V Conclusions

In this paper, we provided rigorous proofs of the existence of the exceed-average phenomenon under typical autocorrelation settings of the transmitted signal process and the noise process. Our discovery of the exceed-average phenomenon provides a generalization of Shannon's renowned formula to practical finite-time communications. Since the finite-time mutual information is a more precise estimate of the capacity limit, the optimization target may shift from the average mutual information to the finite-time mutual information in the design of practical communication systems. Thus, it may have guiding significance for the performance improvement of modern communication systems. In future works, general proofs of the exceed-average inequality, independent of the concrete autocorrelation settings, still require further investigation. Moreover, we need to answer the question of how to exploit this exceed-average phenomenon to improve the communication rate, and whether this finite-time mutual information is achievable by a certain coding scheme. In addition, although we have discovered numerically that the finite-time mutual information agrees with the Shannon capacity when $T \to \infty$, an analytical proof of this result is required in the future.

Appendix A

Proof of Theorem 2

Plug (17) into the right-hand side of (18) and differentiate both sides with respect to the power $P$. Notice that if $P = 0$, then both sides of (18) are equal to 0. Thus, we only need to prove that the derivative of the left-hand side is strictly larger than that of the right-hand side within a small interval $P \in (0, \delta)$:

| (19) |

Multiply both sides of (19) by and define , and then from Lemma 1 we obtain . In this way, (19) is equivalent to

| (20) |

Since is convex on , by applying Jensen’s inequality to the left-hand side of (20), we only need to prove that

| (21) |

From the definition of we can derive that . So we go on to calculate . That is equivalent to calculate , where corresponds to the integral kernel:

| (22) |

Evaluating the kernel on the diagonal , and integrating this kernel on the diagonal of gives , i.e., :

| (23) | ||||

By substituting (23) into (21), we just need to prove that

| (24) |

Define the dimensionless number . Since the function is strictly positive and less than 1 at , we can conclude that, there exists a small positive such that (24) holds for . The number can be chosen as

| (25) |

which implies that (19) holds for any . Thus, integrating (19) on both sides from to gives rise to the conclusion (18), which completes the proof of Theorem 2.

Acknowledgment

This work was supported in part by the National Key Research and Development Program of China (Grant No.2020YFB1807201), in part by the National Natural Science Foundation of China (Grant No. 62031019), and in part by the European Commission through the H2020-MSCA-ITN META WIRELESS Research Project under Grant 956256.

References

- [1] C. E. Shannon, “A mathematical theory of communication,” Bell Syst. Tech. J., vol. 27, no. 3, pp. 379–423, Jul. 1948.

- [2] H. Landau, “Sampling, data transmission, and the Nyquist rate,” Proc. IEEE, vol. 55, no. 10, pp. 1701–1706, Oct. 1967.

- [3] H. Nyquist, “Certain topics in telegraph transmission theory,” Trans. American Institute of Electrical Engineers, vol. 47, no. 2, pp. 617–644, Apr. 1928.

- [4] D. Slepian, “On bandwidth,” Proc. IEEE, vol. 64, no. 3, pp. 292–300, Mar. 1976.

- [5] T. M. Cover, Elements of information theory. John Wiley & Sons, 1999.

- [6] J. Mercer, “Functions of positive and negative type and their connection with the theory of integral equations,” Philos. Trans. Royal Soc., vol. 209, pp. 4–415, 1909.

- [7] K. Zhu, Operator theory in function spaces. American Mathematical Soc., 2007, no. 138.

- [8] A. Balakrishnan, “A note on the sampling principle for continuous signals,” IRE Trans. Inf. Theory, vol. 3, no. 2, pp. 143–146, Jun. 1957.

- [9] J. Barrett and D. Lampard, “An expansion for some second-order probability distributions and its application to noise problems,” IRE Trans. Inf. Theory, vol. 1, no. 1, pp. 10–15, Mar. 1955.

- [10] D. Cai and P. S. Vassilevski, “Eigenvalue problems for exponential-type kernels,” Comput. Methods in Applied Math., vol. 20, no. 1, pp. 61–78, Jan. 2020.

- [11] I. S. Gradshteyn and I. M. Ryzhik, Table of integrals, series, and products. Academic press, 2014.