On Decidability of Existence of Nonblocking Supervisors Resilient to Smart Sensor Attacks

Abstract

Cybersecurity of discrete event systems (DES) has been gaining more and more attention recently, due to its high relevance to the so-called 4th industrial revolution that heavily relies on data communication among networked systems. One key challenge is how to ensure system resilience to sensor and/or actuator attacks, which may tamper data integrity and service availability. In this paper we focus on some key decidability issues related to smart sensor attacks. We first present a sufficient and necessary condition that ensures the existence of a smart sensor attack, which reveals a novel demand-supply relationship between an attacker and a controlled plant, represented as a set of risky pairs. Each risky pair consists of a damage string desired by the attacker and an observable sequence feasible in the supervisor such that the latter induces a sequence of control patterns, which allows the damage string to happen. It turns out that each risky pair can induce a smart weak sensor attack. Next, we show that, when the plant, supervisor and damage language are regular, it is possible to remove all such risky pairs from the plant behaviour, via a genuine encoding scheme, upon which we are able to establish our key result that the existence of a nonblocking supervisor resilient to smart sensor attacks is decidable. To the best of our knowledge, this is the first result of its kind in the DES literature on cyber attacks. The proposed decision process renders a specific synthesis procedure that guarantees to compute a resilient supervisor whenever it exists, which so far has not been achieved in the literature.

keywords:

discrete-event systems, smart sensor attacks, decidability of existence of resilient supervisory control1 Introduction

Cyber-attack resilience refers to properties of service availability and data integrity. With the continuous advancement of information and communications technology (ICT), in particular, the recent 5G-based IoT technologies, we are enjoying unprecedented connectivity around the world. Nevertheless, the threat of cyber attacks that may potentially cause significant damage to human lives and properties has more frequently become the center of attention, and has been attracting lots of research from different communities. Basically, an attacker aims to inflict damage on a target system by disrupting its control loop. This could be achieved either by intercepting and changing the controller’s input signals (in terms of sensor attacks), or by intercepting and changing the controller’s output signals (in terms of actuator attacks), or by completely blocking the data transmission between the controller and the plant (in terms of denial-of-service attacks). An attack can be either brute-force, e.g., via hardware destruction or signal jamming, or covert (or stealthy), i.e., to inflict damage without being detected by relevant monitoring mechanisms.

A good survey of cyber attacks and cyber defence with a systems-and-control perspective can be found in [1]. Typically, linear systems are considered in existing works that rely on system identification and control techniques. Within the DES community, most works rely on the control system setup introduced in the Ramadge-Wonham supervisory control paradigm [23], where the plant generates observable outputs, received by the supervisor via an observation channel, and each control command specified as a set of allowed (or disallowed) events is generated by the supervisor and fed to the plant via a command channel. The plant nondeterministically picks one event from a received control command and executes it. The event execution process is assumed to be asynchronous, i.e., up to one event execution at each time instant, and instantaneous. Unlike attacks in time-driven systems described in [1], attacks under consideration in a DES aim to change the sequence of events in specific system runs.

There are two different streams of research on cyber attacks and resilient control. The first stream refers to a set of black-box methods that treat attacks as undesirable (either intentional or unintentional) uncontrollable and mostly unobservable disturbances to a given closed-loop system. Existing works include, e.g., a game theoretical approach [29], fault-tolerance based approaches such as [5] and [19] on sensor attacks, [2] on actuator attacks, and [3] [6] [4] on sensor+actuator attacks, and transducer-based modelling and synthesis approaches such as [7] [8]. In the black-box methods, system vulnerability is typically modelled by concepts similar to diagnosability described in, e.g., [34], and system resilience bears similarity to fault tolerant control described in, e.g., [28] [32], that concerns whether there is a supervisor that can perform satisfactorily under the worst case attack scenarios. The second stream refers to a set of white-box methods, aiming to develop a specific “smart” attack model that ensures certain intuitive properties such as covertness and guaranteed (strong or weak) damage infliction. Existing works include, e.g., [9] [10] [11] [13] [12] [12] [20] on smart sensor attacks, [14] [18] and [17] on smart actuator attacks, and [16] [21] and [15] on smart sensor+actuator attacks. With such smart attack models, existing research works address the impact of a specific attack on the closed-loop behaviour, the vulnerability of a system to such an attack, and finally the resilience of a supervisor to a concerned attack.

After examining those existing works on smart cyber attacks, it is clear that most works focus on how to derive a proper smart attack model. Various synthesis algorithms have been proposed under relevant assumptions. Nevertheless, the existence of a supervisor that is resilient to all possible smart cyber attacks is still open for research. In [35] [36] the authors present synthesis methods for resilient control against a specific sensor attack model described by a finite-state automaton in different scenarios. Thus, the synthesized supervisor is not designed to be resilient to all possible smart sensor attacks. In case of a worst-case sensor attack scenario, no smartness in terms of, e.g., covertness, is considered by the authors. There are a few heuristic synthesis approaches proposed in the literature, e.g., [10] proposes one algorithm against smart sensor attacks, [16] proposes one algorithm that generates a resilient supervisor whose state set is bounded by a known value, and [18] presents an algorithm to synthesize a supervisor, which is control equivalent to an original supervisor and resilient to smart actuator attacks. But none of those existing algorithms can guarantee to find one resilient supervisor, if it exists. That is, when those algorithms terminate and return an empty solution, it does not necessarily mean that there is no solution.

Before any attempt of overcoming a complexity challenge in order to derive a resilient solution, it is critical to answer a computability question first, that is, how to decide whether a solution exists. To address this important decidability issue, in this paper we focus only on sensor attacks, but hoping that our derived result may shed light on research of other types of attacks. We follow a sensor attack model proposed in [10], which associates each observed sequence from the plant with an altered observable sequence that becomes the input of a given supervisor. After slightly improving the concept of attackability originally introduced in [10] and the corresponding definition of smart sensor attacks, our first contribution is to identify conditions that ensure the existence of a smart sensor attack. It turns out that the existence of a smart weak sensor attack, which is not necessarily regular (i.e., representable by a finite-state automaton), is solely determined by the existence of at least one risky pair that consists of a damage string desired by the attacker and an observable sequence feasible in the supervisor such that the latter induces a sequence of control patterns, which allows the concerned damage string to happen. Because any strong sensor attack is also a weak attack, the existence of such a risky pair becomes the sufficient and necessary condition for the existence of a smart sensor attack. In [9] and its journal version [10], by imposing language normality to the closed-loop behaviour, it is shown that the supremal smart sensor attack language can be synthesized, whenever it exists, upon which a specific smart sensor attack model can be derived. In [11] and its journal version [12], the language normality is dropped, and it is shown that a smart sensor attack model (not necessarily supremal) can be synthesized via a special insertion-deletion attack structure, whenever it exists. However, none of these works reveals the aforementioned demand-supply relationship reflected in risky pairs that capture the nature of sensor attacks. Due to this insightful concept of risky pairs, our second contribution is to show that the existence of a nonblocking controllable and observable supervisor that is resilient to all regular smart sensor attacks is decidable. To this end, we develop a genuine encoding mechanism that reveals all possible sequences of control patterns required by a regular sensor attack and all sequences of control patterns feasible in the plant, allowing us to remove the set of all risky pairs from the plant behaviour. After that, we introduce a language-based concept of nonblocking resilient supervisor candidate and its automaton-counterpart control feasible sub-automaton that does not contain any risky pair, upon which we are able to decide the existence of a resilient supervisor. As our third contribution, the proposed decision process renders a concrete synthesis procedure that guarantees to compute a nonblocking supervisor resilient to smart sensor attacks, whenever it exists.

The remainder of the paper is organized as follows. In Section 2 we present a motivation example. Then in Section 3 we review the basic concepts and operations of discrete event systems introduced in [26], followed by a specific smart sensor attack model, where the concept of attackability is introduced. Then we present a sufficient and necessary condition to ensure a smart sensor attack in Section 4, where the key concept of risky pairs is introduced. After that, we present a sufficient and necessary condition for the existence of a nonblocking supervisor that is resilient to smart sensor attacks in Section 5, and show that this sufficient and necessary condition is verifiable in Section 6, which finally establishes the decidability result for the existence of a resilient supervisor. Conclusions are drawn in Section 7. Long proofs for Theorems 1-4 are shown in the Appendix.

2 A motivation example - attack of navigator

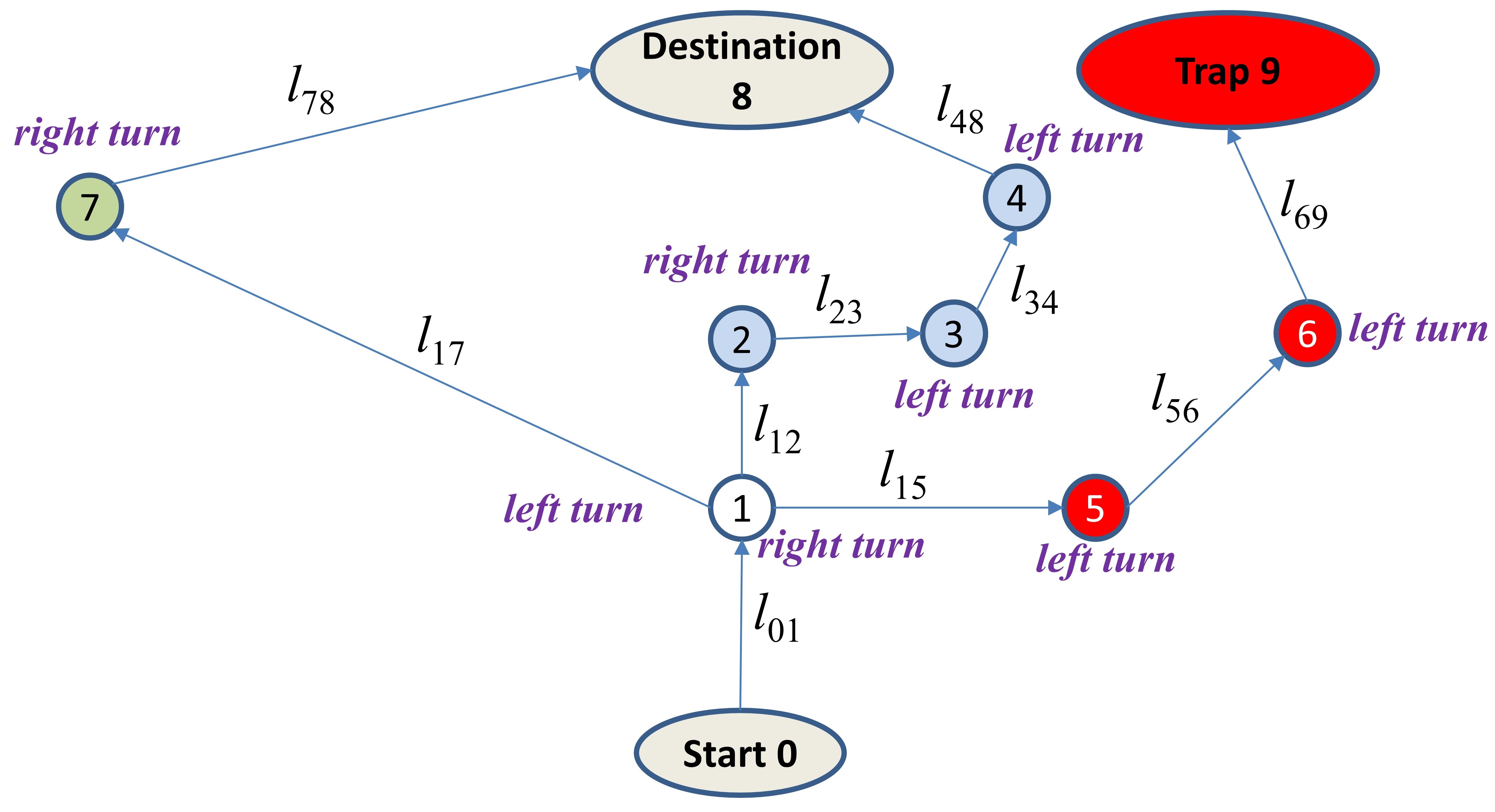

Image that Bob would like to ride his autonomous car from his home to his office. There is a GPS navigator installed inside his car. The navigator first generates a shortest path based on traffic conditions, then guides the vehicle to make required turns at planned junctions. However, Bob’s friend Peter plans to play a prank on Bob by tricking the navigator to lead Bob’s car to another location via GPS spoofing, shown in Figure 1.

Peter has the city road map and also knows Bob’s home address and office address. In addition, he has a navigator of the same model as the one installed in Bob’s car. Thus, by running his own navigator over the same origin-destination pair, Peter will know Bob’s route plan. Figure 2 depicts the system setup,

where Bob’s home is at “start 0” node, his office is at “Destination 8” node, and the prank location is at “Trap 9” node. Each symbol “” denotes the length of the road segment between node and node . In addition, the position of each node in the map is publicly known. By simply running the navigator of the same model, Peter knows that Bob’s car will choose the shortest path “”, which leads to the following navigation commands: (1) right turn (at node 2), followed by (2) left turn (at node 3), followed by (3) left turn (at node 4). To trick the navigator to issue the same sequence of commands but at incorrect junctions, say, right turn at node 1, followed by left turn at node 5, and followed by left turn at node 6, Peter only needs to buy a GPS spoofing device available in the market that can send fake GPS position signals to Bob’s navigator. Peter can easily determine the spoofed GPS position signal as follows. Assume that denotes the position of “start 0” node, and and denote the actual and spoofed GPS positions in a 2D map. The travel distance made by Bob’s car between time and time () can be calculated by a simple line integral shown below:

where is the parametric function of the path from node “start 0” to node 1, , denotes the magnitude of and ‘’ is the dot product of vectors. For each , Peter uniquely determines the value of , which is the parametric function of the path from node “start 0” to node 2 with , such that

which ensures that, when the navigator receives the spoofed signal, indicating that node 2 is reached, the actual node reached is node 1. With a similar GPS position spoofing scheme, Peter can misguide Bob’s car to reach node 9, instead of node 8, without being detected. Such GPS spoofing is one specific example of a smart sensor attack, whose formal definition will be given in the next section. Intuitively, it contains the following basic characteristics: by knowing sufficient information in advance, an attacker can trick a victim to issue the correct order of commands but at incorrect states (or locations), which leads to an unwanted consequence.

If Bob somehow knows that Peter will use GPS spoofing to play a trick on his car, he can simply choose the path “”. In this case, even Peter knows this new path, he cannot spoof the GPS signals to trick Bob’s car to node 9 without being detected, as the new path generates a new sequence of navigation commands: (1) left turn (at node 1), then (2) right turn (at node 7), which, no matter how Peter changes GPS position signals, cannot bring Bob to node 9. Such a path plan is a specific example of a resilient supervisor against smart sensor attacks, whose definition will be given later in this paper. Intuitively, a resilient supervisor will ensure that, for any sensor attack, either it can be detected before inflicting damage, or it will not lead to any damage.

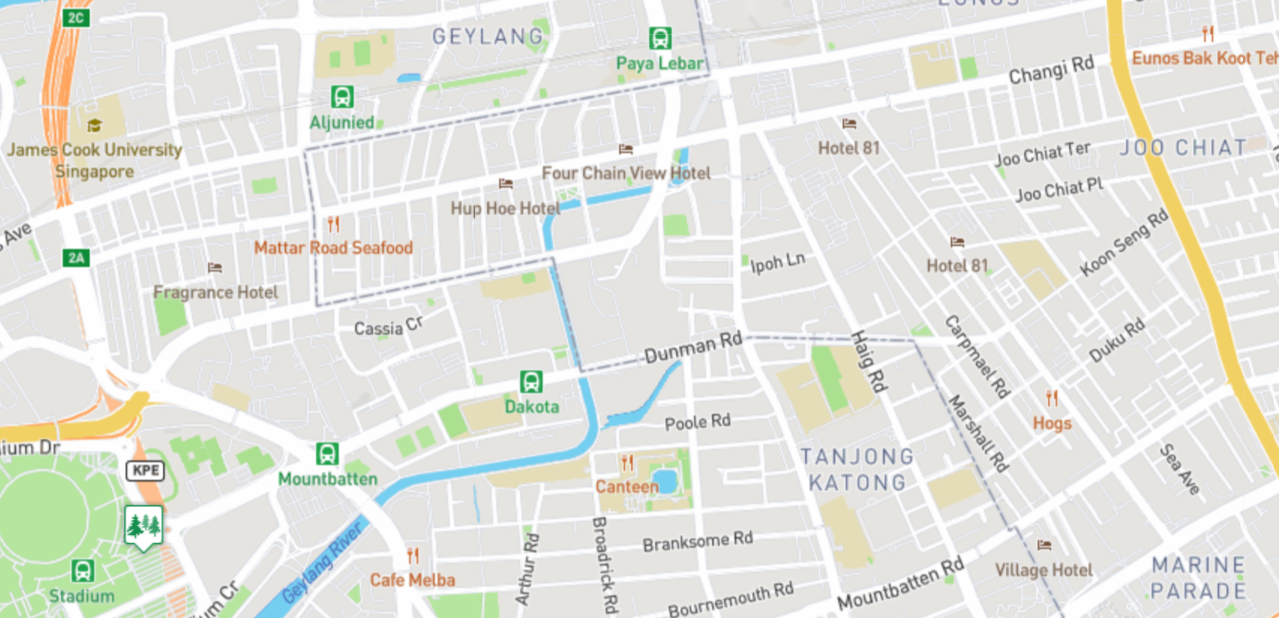

One big question is, for an arbitrary network, see, e.g., a road map of a small region in Singapore shown in Figure 3,

how to decide whether a resilient path plan (or navigation supervisor) exists. In this paper, we will investigate this decidability problem against smart sensor attacks. The computational efficiency, i.e., the complexity issue, however, will not be addressed here.

3 A smart sensor attack model

In this section we first recall basic concepts used in the Ramadge-Wonham supervisory control paradigm [23]. Then we recall a smart sensor attack model introduced in [10]. Most notations in this paper follow [26].

3.1 Preliminaries on supervisory control

Given a finite alphabet , let be the free monoid over with the empty string being the unit element and the string concatenation being the monoid binary operation. We use to denote non-empty strings in , i.e., . Given two strings , we say is a prefix substring of , written as , if there exists such that , where denotes the concatenation of and . For any string with , we use to denote the post substring such that . We use to denote the length of , and by convention, . Any subset is called a language. The prefix closure of is defined as . For each string , let be the set of events that can extend in . Given two languages , let denote the concatenation of two sets. Let . A mapping is called the natural projection with respect to , if

-

1.

,

-

2.

-

3.

.

Given a language , . The inverse image mapping of is

A given target plant is modelled as a deterministic finite-state automaton, , where stands for the state set, for the alphabet, for the (partial) transition function, for the initial state and for the marker state set. We follow the notation in [26], and use to denote that the transition is defined. For each state , let be the set of events enabled at in . The domain of can be extended to , where for all , and . The closed behaviour of is defined as , and the marked behaviour of is . is nonblocking if . We will use to denote natural numbers, (or ) for the size of the state set , and for the size of . Given two finite-state automata (), the meet of and , denoted as , is a (reachable) finite-state automaton whose alphabet is such that and . A sub-automaton of is an automaton such that

that is, each transition of must be a transition in , but the opposite may not be true. When the transition map is , where denotes the power set of , we call a nondeterministic finite-state automaton. If for each , there exists such that , then is co-reachable. For the remainder of this paper, unless explicitly mentioned, all automata are assumed to be deterministic.

We now recall the concept of supervisors. Let , where () and () are disjoint, denoting respectively the sets of controllable (observable) and uncontrollable (unobservable) events. For notational simplicity, let . Let , where each is one control pattern (or control command). A supervisory control map of under partial observation is defined as . Clearly,

namely the supervisory control map never tries to disable an uncontrollable transition. In addition,

namely any two strings in that are observably identical, their induced control patterns are equal.

Let denote the closed-loop system of under the supervision of , i.e.,

-

•

,

-

•

For all and

-

•

.

The control map is finitely representable if can be described by a finite-state automaton, say , such that

-

•

and ,

-

•

,

-

•

.

The last condition indicates that if . Such a supervisor can be computed by existing synthesis tools such as TCT [30] or SuSyNA [31]. It has been shown that, as long as a closed-loop language is controllable [23], observable [22] and -closed, i.e., , there always exists a finitely-representable supervisory control map such that and . From now on we make the following assumption.

Assumption 1.

is nonblocking, i.e., , and finitely representable by .

We will use or interchangeably, depending on the context. They will be called a (nonblocking) supervisor.

3.2 A smart sensor attack model

We assume that an attacker can observe each observable event generated by the plant , and replace the observable event with a sequence of observable events from , including the empty string , in order to “fool” the given supervisor , known to the attacker. Considering that in practice any event occurrence takes a non-negligible amount of time, it is impossible for an attacker to insert an arbitrarily long observable sequence to replace a received observable event. Thus, we assume that there exists a known number such that the length of any “reasonable” observable sequence that the attacker can insert is no more than . Let be the set of all -bounded observable sequences possibly inserted by an attacker. A sensor attack is a total map , where

-

•

,

-

•

,

-

•

.

The first condition states that, before any observation is obtained, the attack cannot generate any non-empty output, because, otherwise, such a fake observation sequence may reveal the existence of an attack, if the plant has not started yet, whose starting time is unknown to the attacker. The second and third conditions state that each received observation will trigger a fake string in . This model captures moves of insertion, deletion and replacement introduced in, e.g., [11] [6] [12].

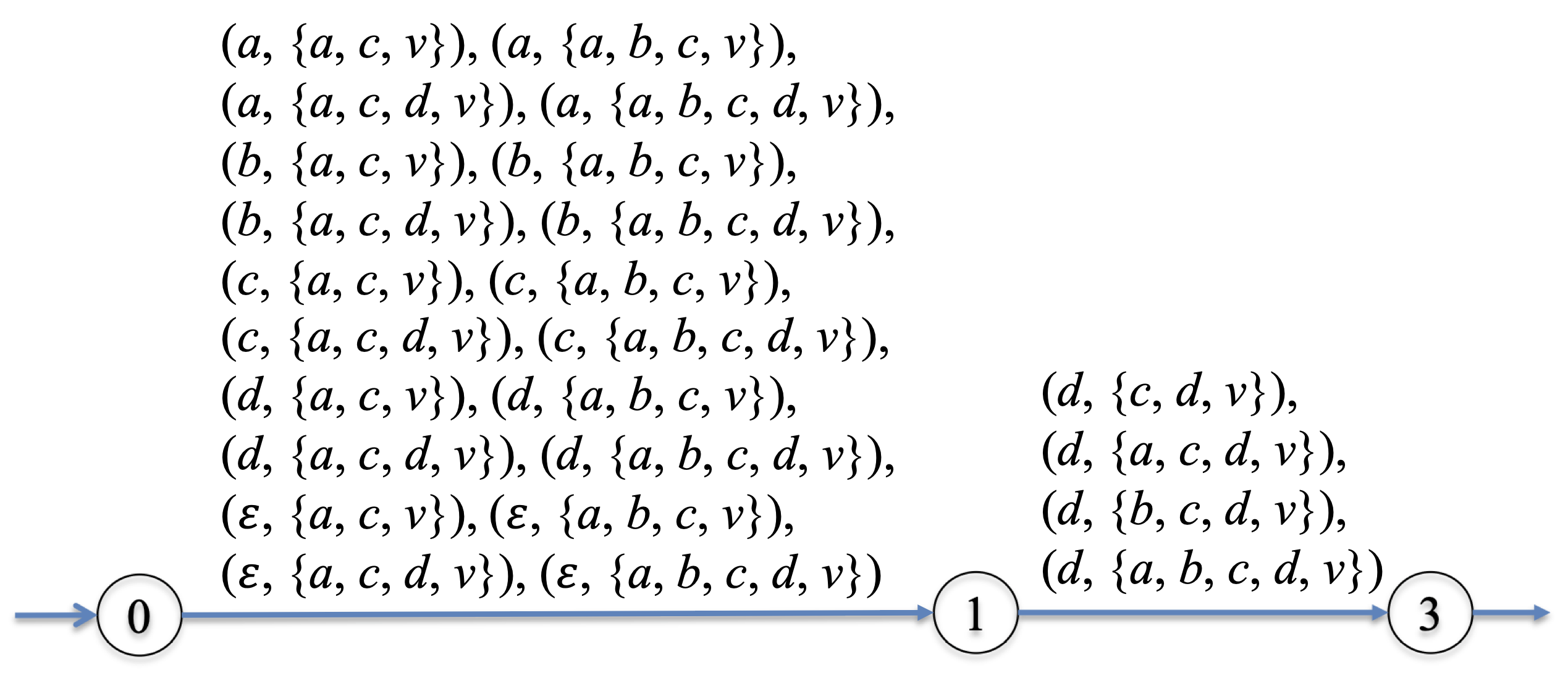

An attack model is regular if there exists a finite-state transducer , where , is the (partial) transition mapping such that if and then , i.e., if there is no observation input, then there should be no observation output. The functions and are the input and output mappings, respectively, such that for each , and . We require that, for each , we have and . Since is a function, we know that for all , if , then , that is, the same input should result in the same output. Notice that, in [9] [10], an attack model is directly introduced as a finite-state transducer, which may not necessarily be representable by an attack map , because a finite-transducer model allows nondeterminism, i.e., for the same observation input, an attacker may choose different attack moves, as long as they are allowed by the transducer model. In this sense, the attack model concerned in this paper is a special case of the one introduced in [9] [10], and bears more resemblance to the model introduced in [12], as both treat an attack as a function. But since there exists a nondeterministic attack model if and only if there exists a deterministic one, the decidability results derived in this paper shall be applicable to nondeterministic attack models introduced in [9] [10]. To see this fact, it is clear that each deterministic model is a nondeterministic model. Thus, we only need to show that from each nondeterministic model we can derive at least one deterministic model. We will use a simple example to illustrate the construction procedure. Assume that the nondeterministic attack model adopted from [10] is shown in Figure 4, which is a transducer. We first start from damage states (i.e., marker states), and perform co-reachability search to find all states in the nondeterministic model that satisfy the following two conditions: (1) each state is reachable from the initial state, (2) at each state, each observable event is associated with only one transition (denoting an attack move). After that, we perform reachability search upon those states derived from the first step and add new necessary states in so that the following condition holds: at each state, each observable event is associated with only one transition (denoting an attack move) if and only if it is associated with at least one transition in the original nondeterministic model. This construction will result in a deterministic smart sensor attack model, shown in Figure 4.

In this example, we can see that there can be several deterministic attack models derivable from the nondeterministic model.

Assumption 2.

Only regular attacks are considered.

The combination of the attack and the supervisor forms a new supervisor , where

We call an attacked supervisor under . This definition consumes the standard style of one command per each received (fake) observation used in, e.g., [6] [35], as a special case, when is set to 1. The closed and marked behaviours, and , of the closed-loop system are defined accordingly. We call the attacked language of under . The closed-loop system is depicted in Figure 5.

Definition 1.

Given a plant and a supervisor realized by , let be a damage language. The closed-loop system is attackable with respect to , if there exists an attack , called a smart sensor attack of , such that the following hold:

-

1.

Covertness: Event changes made by are covert to the supervisor , i.e.,

(1) -

2.

Damage infliction: causes “damage” to , i.e.,

-

•

strong attack: Any string may lead to damage:

(2) -

•

weak attack: Some string may lead to damage:

(3)

-

•

If is not attackable with respect to , then is resilient to smart attacks with respect to .

The concept of attackability introduced in Def. 1 simplifies the concept of attackability introduced in [10] by, firstly, dropping the requirement of control existence, as automatically allows all uncontrollable transitions, thus, ensuring controllability, and secondly, dropping the normality requirement, as we directly model an attack as a function over the plant’s observable behaviours, instead of a language used in [10] (which is equivalent to a nondeterministic attack), making the closed-loop language observable automatically.

Remark: In [10], a special subset of observable events called protected events is introduced, which is denoted by , representing observable events in the plant that cannot be changed by any sensor attack. This feature makes the modeling framework more general. However, it diminishes the chance of having a smart sensor attack, due to the challenge of ensuring the covertness property, when the system trajectory is outside the legal language and there are a few protected system output events that will inevitably reveal the attack. Due to this complication, we lack a simple sufficient and necessary condition to characterize the existence of a smart sensor attack, making the subsequent study of the existence of a supervisor resilient to such smart sensor attacks infeasible. To overcome this challenge, we could restrict the damage language to be , i.e., any string outside is a damage string. This will allow us to relax the covertness property to be

that is, an attacker does not need to make any event change, after the system trajectory is outside , as damage has been inflicted. Then all results presented in this paper will still be valid. So a user of this theory has two options for the system setup, that is, either and , as adopted in Def. 1 of this paper, or and .

Let be the collection of all attacked languages caused by smart sensor attacks. Clearly, is a partially ordered set. When is required to be normal [22], i.e., only observable events may be disabled, and the attack model is nondeterministic, i.e., for the same observable input, may have more than one output choice, it has been shown in [10] that over all smart strong attacks becomes an upper semilattice, and the supremal strong attacked language exists such that for any smart strong sensor attack , we have . In this case, the supremal strong attacked language is computable, as shown in [10]. With a similar spirit, the supremal weak attacked language exists and is also computable, as briefly mentioned in the conclusion of [10]. When only deterministic attack models are adopted, it turns out that the supremal deterministic attack model may not always exist. However, by computing the supremal nondeterministic smart weak attacked language first, which induces a finite-state transducer, as shown in [10], we can show that a deterministic attack model derivable from the finite-state transducer by applying the transformation procedure shown in Figure 4 results in a maximal attacked language in . Detailed arguments are omitted here, due to limited space and the focus of this paper that is not about supremality or maximality of attacked languages. In the example depicted in Figure 4, the illustrated deterministic smart attack model results in a maximal attacked language. However, there is no supremal attacked language induced by a deterministic smart weak attack.

4 A sufficient and necessary condition for the existence of a smart sensor attack

Let us start with a small example, which is depicted in Figure 6.

Assume that the attacker wants to achieve a string , which leads to a damage state. Assume that event is contained in control pattern , event is in control pattern , and event in control pattern . After event fires, the attacker wants the control pattern to be issued. Since event does not lead to control pattern , but event does, the attacker will replace event with to trick the supervisor to generate . Assume that is fired afterwards. The attacker wants to be issued. Since event does not lead to , instead, event does, the attacker replaces event with event to trick the supervisor to issue , if event happens afterwards, the attacker achieves his/her goal without being detected by the supervisor. The attacker could continue this trick as long as it is possible. So essentially, by faking some observable string, the attacker hopes to trick the supervisor to issue a sequence of control patterns, which contain some damaging strings, without being detected by the supervisor. We now generalize this idea. For notational simplicity, given a string with , for each , we use to denote the prefix substring . By convention, .

Theorem 1.

Given a plant , a supervisor and a damage language , there is a smart weak sensor attack , if and only if the following condition holds: there exists , with , and , and with such that (1) ; (2) for each , ; (3) .

As an illustration, in Example 1 depicted in Figure 6, we can see that , , , , , , .

The strings and in Theorem 1 form a risky pair such that, by mapping to , the attacker can rely on the existing supervisor to inflict a weak attack on the plant , without being detected by the supervisor. Since the existence of a risky pair is sufficient and necessary for the existence of a smart weak sensor attack, we will use this fact to determine the existence of a resilient supervisor. But before that, we would like to state the following result about the decidability of the existence of a regular smart weak sensor attack.

Theorem 2.

Given a plant , a regular supervisor , and a regular damage language , the existence of a regular smart weak sensor attack is decidable.

By the proof of Theorem 2 shown in the Appendix, the computational complexity of deciding the existence of a regular smart weak sensor attack is , where is an automaton realization of and is an automaton recognizing .

In [12] the authors have shown that a deterministic attack function that ensures the covertness and weak damage infliction can always be synthesized, when it exists. But since the attack model adopted in this paper is different from the one used in [12], e.g., the latter does not requires (thus, non-existence of an attack model in our definition does not necessarily means the non-existence of an attack model in [12]), and encodes attack moves differently, Theorem 2 has its own value by providing another way of synthesizing a regular deterministic smart weak sensor attack, whenever it exists.

5 Supervisor resilient to smart sensor attacks

In this section we explore whether there exists a sufficient and necessary condition to ensure the existence of a supervisor that is resilient to all regular smart sensor attacks, i.e., the closed-loop system is not attackable by any regular smart sensor attack. In Section 3 we have shown that there is a sufficient and necessary condition for the existence of a smart weak sensor attack shown in Theorem 1. Since each strong attack is also a weak attack, if we can effectively eliminate those risky pairs described in Theorem 1, we shall be able to prevent the existence of any smart sensor attack. Since, given a plant and a requirement , we can always synthesize a controllable and observable sublanguage of , without loss of generality, we assume that the plant satisfies all given requirements. Thus, we will only focus on the following problem.

Problem 1.

Given a plant and a damage language , synthesize a supervisor such that is not attackable by any regular smart sensor attack with respect to .

To solve this problem, we first intend to find a proper way of encoding all risky pairs. Given a string , we use to denote the last event of . If , by convention, . In addition, we use to denote the longest prefix substring of , whose last event is observable, i.e., . Thus, if , then we can derive that .

Let be a partial mapping, where

-

•

;

-

•

.

In Example 1, we have . What the map does is to map each string to a set of sequences of control patterns such that each derived control pattern sequence, say , contains the string in the sense that . By applying the map to the damage language , the result presents all possible sequences of control patterns, each of which contains at least one string in - in other words, each string in may potentially result in damage.

To further illustrate how this function works, we introduce another simple example depicted in Figure 7,

where , and . To simplify our illustration, in this example we assume that , i.e., . The damage language , which is shown by a dashed line leading to state 3. Figure 8 depicts the outcome of .

A smart sensor attack replaces each intercepted observable event with a string in , unless is silent, i.e., . To capture the impact of such changes on the control pattern sequences, we introduce a mapping , where

-

•

;

-

•

For each and , we have

We extend the domain of to languages in the usual way, i.e., for all , .

To explicitly describe how a smart attack may utilize possible sequences of control patterns, we introduce one more mapping

where

-

•

;

-

•

For all ,

-

•

For all , , and ,

-

•

.

As an illustration, we apply the map to the damage language in Figure 8, where . To simplify illustration, we assume that an attacker can, but prefers not to, change events and . The outcome is depicted in Figure 9. Notice that when event is intercepted by the attacker, it can be replaced by any other strings in . Because , we have - in this case, the outcome of equals .

Next, we determine all control pattern sequences in the plant that may be used by a smart attack. Let be a Boolean map, where for each ,

For each , let

Let be a projection map, where

-

•

;

-

•

.

And let be a projection map, where

-

•

;

-

•

.

Let be a total mapping, where

-

•

;

-

•

For all and with ,

where

We call the augmented closed behaviour of . The augmented marked behaviour of induced by is defined as .

This definition of indicates that, except for control patterns generated initially, i.e., when , each control pattern will be changed only after an observable event is received, i.e., when . This matches the definition of a supervisor that changes its output only when a new observation is received. In addition, if a control pattern contains unobservable events, it will be contained in a self-loop of the augmented event , i.e., , denoting that the control pattern may be used more than once by the plant, as long as no new observable event has been received. Again, this matches the Ramadge-Wonham supervisory control paradigm, where execution of any unobservable transition allowed by the current control pattern will not change the current control pattern - recall that in a finite-state automaton realization of , unobservable events are self-looped at relevant states.

As an illustration, we apply to the plant model depicted in Figure 7. Part of the outcome is depicted in Figure 10.

Because the total state set is , which is too big to be shown entirely in the picture, we only include states that have at least one future extension, unless they are marker states (i.e., states , and ), except for one blocking state , which is left there for an illustration purpose that will be explained shortly. The marker states in Figure 10 denote the augmented marked behaviour of in Example 2.

Till now, we have provide sufficient means to describe all risky pairs, which are captured by at the attacker’s demand side, and at the plant’s supply side. To avoid such risky pairs, we only need to remove from . The reason why we concatenate at the end of is to denote all possible augmented strings that may contain some strings in as prefix substrings. Thus, all safe supervisory control pattern sequences shall be contained in in order to prevent any sequence of control patterns from being used by an attacker.

Figure 11 depicts

the outcome of subtracting risky control pattern sequences from (part of) shown in Figure 10. It is clear that there cannot be any sequence of control patterns such that and .

To extract a proper supervisor from , we need a few more technical preparations. Let be the prefix closure of . All tuples are considered to be controllable, except for tuples . We introduce the concept of conditional controllability inspired by the standard notion of controllability in [27].

Definition 2.

A sublanguage is conditionally controllable with respect to and , if

In other words, as long as is not defined at the beginning, i.e., , it should not be disabled, if it follows a non-empty string . We can briefly explain the motivation as follows. If an event does not appear at the beginning, by the definition of and subsequently that of , it must be incurred by another string such that – clearly, we can stop , if , by not choosing ; but after is chosen and some unobservable event allowed by occurs, the same control pattern will continuously remain active, i.e., will still be allowed, until a new observation is generated, leading to a new control pattern. But the situation is different initially, as we can directly disable the control pattern , thus stop the event . It is clear that conditional controllability is also closed under set union.

Let be the set of all prefix-closed sublanguages of , which is conditionally controllable with respect to and . It is clear that the supremal conditionally controllable sublanguage in under the partial order of set inclusion exists. We denote this unique sublanguage as . Notice that contains no sequence of control patterns that may be used by a smart attack to inflict damage. Later, we will show that contains all feasible supervisors that are resilient to smart sensor attacks, as long as such a supervisor exists. We now introduce techniques to extract a feasible resilient supervisor out of , if it exists. To this end, we introduce a few more concepts.

Let be a mapping, where

-

•

For all ,

-

•

For all and with , if , then ; otherwise,

The map essentially associates each string with the corresponding state estimate of . Let be the marking coreachability map associated with the plant , where for each with ,

-

•

;

-

•

If , then let , where for all and ,

and , where

-

–

;

-

–

.

-

–

Definition 3.

A resilient supervisor candidate is nonblocking with respect to , if for all ,

Definition 4.

A sublanguage is a nonblocking resilient supervisor candidate if for all ,

-

1.

;

-

2.

;

-

3.

is nonblocking with respect to .

Notice that means that , and means that the incurred control patterns by and are the same. Thus, the first condition in Def. 4 essentially states that, all observably identical strings must lead to the same control pattern – consequently, any silent transition cannot generate any new control pattern other than the current one. The second condition states that an “observable” event is allowed by , i.e., , if and only if it is allowed by the control pattern incurred by , i.e., , and also allowed by , i.e., . The last condition refers to nonblockingness of .

As an illustration, we calculate and remove all states that violate either one of the conditions of Def. 4. Figure 12 depicts

the outcome. We can see that the state in Figure 11 needs to be removed because it is blocking, violating the third condition in Def. 4. In addition, states and and in Figure 11 also need to be removed because they clearly violate the second condition of Def. 4, as the event is defined in control patterns and of states and , respectively, but no outgoing transitions containing are allowed at these two states in , even though these transitions are allowed in , and event is defined in the control pattern of state , but no outgoing transition containing is allowed in , even though such a transition is allowed in .

We now state the following theorem, which is the first step towards solving the decidability problem of the existence of a supervisor resilient to smart sensor attacks.

Theorem 3.

Given a plant and a damage language , let be defined above. Then there exists a supervisor such that is not attackable w.r.t. , iff there exists a nonblocking resilient supervisor candidate .

As an illustration, we can check that any marked sequence in Figure 12 is a nonblocking resilient supervisor candidate. For example, take a look at the sublanguage . We can check that is conditional controllable with respect to and . Thus, . In addition, is nonblocking and satisfies conditions in Def. 4. Thus, is a nonblocking resilient supervisor candidate of . By Theorem 3, we know that there must exist a resilient supervisor that does not allow any smart sensor attack. Based on the construction shown in the proof of Theorem 3, the corresponding supervisor is , and . For any other observable string , we simply set .

Theorem 3 indicates that, to decide whether there exists a nonblocking supervisor that disallows smart sensor attacks, we only need to decide whether there exists a nonblocking resilient supervisor candidate . Next, we shall discuss how to determine the existence of such a language .

6 Decidability of the existence of a supervisor resilient to smart sensor attacks

In the previous section we present a sufficient and necessary condition for the existence of a resilient supervisor. However, the computability issue is not addressed. In this section, we discuss how to compute all those sets and languages introduced in the previous section, and eventually show how to decide the existence of a resilient supervisor, i.e., to decide when that sufficient and necessary condition mentioned in Theorem 3 holds for a given plant and a regular damage language .

We first discuss how to compute . As shown in Section 4, let recognize , i.e., . We construct another finite-state automaton , where is the (partial) transition map such that for each and ,

Proposition 1.

.

Proof: It is clear from the construction of .

Next, we describe how to calculate . Let , where is the (partial) transition map such that for each and , we have if one of the following holds:

-

•

;

-

•

.

Proposition 2.

.

Proof: By the construction of and the definition of , the proposition follows. ,

Next, we describe how to calculate by modifying . For each transition , if and , we make the following changes to . Assume that with and (). We create new states such that for each sequence with (), we define , () and . Add newly created states to the state set of and new transitions to . Continue this process until all transitions are processed. Let the final finite-state automaton be .

Proposition 3.

.

Proof: By the construction of and the definition of , the proposition follows.

Next, we will show how to compute . We construct a nondeterministic finite-state automaton , where

is the nondeterministic transition map such that

-

•

For all , ;

-

•

For all , and , we have that

-

–

if and and , then

-

–

if , then

-

–

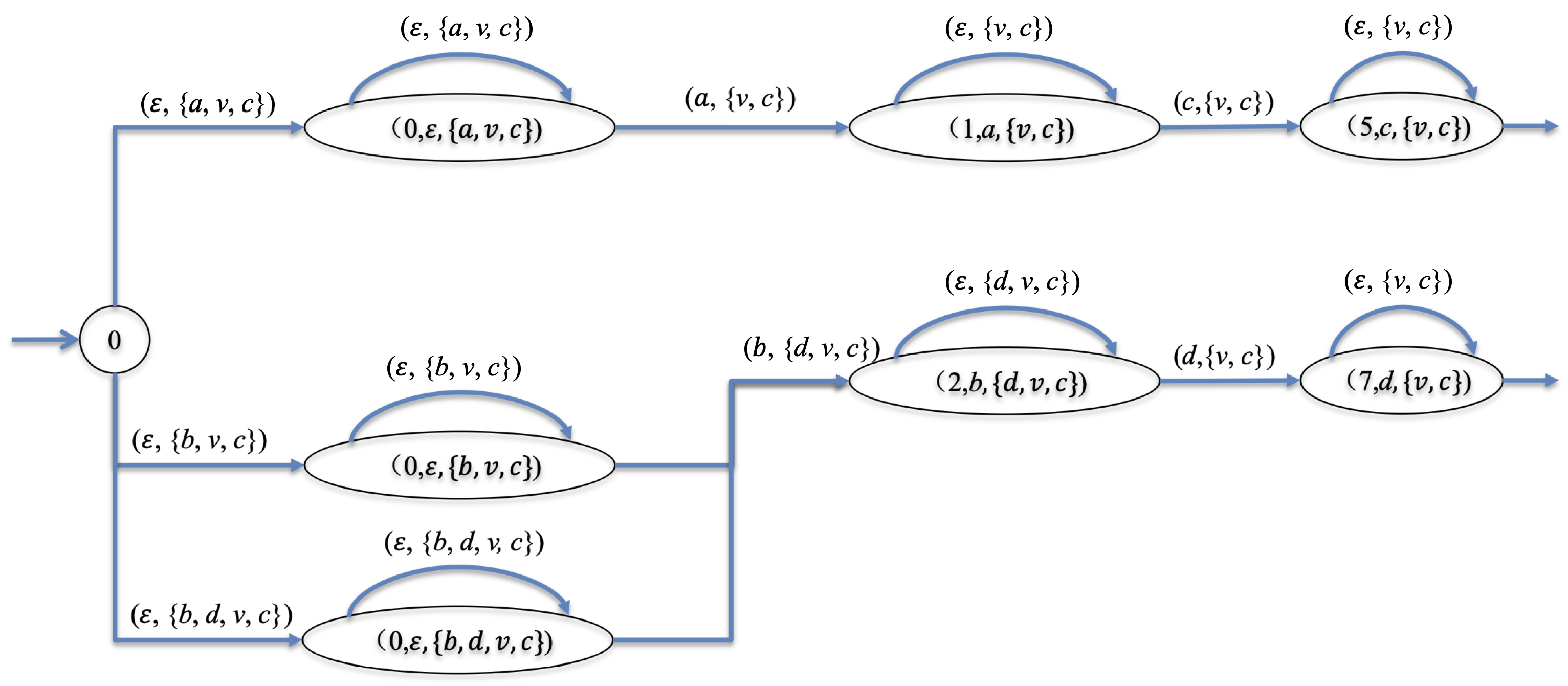

To illustrate the construction procedure for , a small example is depicted in Figure 13,

where , and . Thus, there are only two control patterns and . The outcome of is shown in Figure 13, where nondeterministic transitions occur at both (augmented) states and .

Proposition 4.

.

Proof: By the definition of and the construction of , it is clear that . So we only need to show that . We use induction. At the initial state , for each , if , we have and , namely . By the definition of , we know that . If , then we have , namely . By the definition of , we know that . Thus, the base case holds. Assume that , and , we need to show that . If , then since , we know that and . Since and and , we know that . If , then clearly there exists such that and . Clearly, and . Thus, by the definition of , we know that . Thus, the induction holds, which completes the proof.

Notice that in , except for being at the initial state , no transition between two different states can be unobservable.

Since the map introduced before is a projection, it is not difficult to check that is regular, as both and are shown to be regular. Thus, its prefix closure is also regular. Let the alphabet be and the uncontrollable alphabet be . Since is nondeterministic, can be recognized by a nondeterministic automaton, without masking out necessary marking information inherited from , which will be used later. By using a synthesis algorithm similar to the one proposed in [24] [25], which is realized in [31], we can show that is also regular, and generated by a nondeterministic finite-state automaton , where and with being the state set of the recognizer of . That is . Next, we will develop a computational method to determine whether a nonblocking resilient supervisor candidate in exists.

To handle partial observation induced by , we undertake the following subset-construction style operation on . Let , where

-

•

, ;

-

•

;

-

•

The transition map is defined as follows: for each and , if , then

otherwise, we have

where

Remarks: It is clear that . In addition, since all unobservable transitions in are selflooped at relevant states, by the construction of , we can check that the recognizer also selfloops all unobservable transitions. Due to this property, we have in the definition of , where either equals or in .

To illustrate the construction procedure for , assume that in Example 3 depicted in Figure 13, .

After applying the construction procedure for , the outcome is depicted in Figure 14, where

Definition 5.

Given , a reachable sub-automaton of is control feasible if the following conditions hold:

-

1.

For all with ,

-

2.

For all , we have ;

-

3.

For each ,

-

4.

For all and , if , then for all ,

-

5.

is co-reachable.

The first condition in Def. 5 essentially states that in no uncontrollable transitions allowed by shall be disabled, which is similar to the concept of state controllability in [24] that handles nondeterministic transitions. Based on the construction of , if is allowed at state in , then and . The second condition states that all strings observably identical in must result in the same control pattern. The third condition states that, for any state in , an observable event is allowed at state if and only if it is allowed both by the plant and the corresponding control pattern associated with . The fourth condition is similar to the concept of state observability in [24] to handle nondeterminism, which requires that all states in reachable by strings observably identical to some string in , must be included in . The last condition is self-explained.

As an illustration, Figure 15 depicts one choice of derived from in Example 3.

We can see that clearly no self-looped uncontrollable events are disabled. So the first condition in Def. 5 holds. Due to the second condition in Def. 5, in we choose to keep , and thus, only states and will be kept in . Similarly, in the control pattern is chosen; in the control pattern is chosen; in the pattern is chosen; and in the pattern is chosen. Due to the third condition in Def. 5, we can see that in both outgoing transitions and of state must be chosen in , as both events and are allowed by the control pattern and the augmented plant . In , due to the fourth condition in Def. 5, both nondeterministic outgoing transitions towards and must be allowed in . Clearly, all reachable states in is co-reachable. Thus, after removing all unreachable states in Figure 15, the remaining structure is a control feasible sub-automaton of in Example 3. The corresponding supervisory control map can be derived as follows: , , , and . Similarly, we can check that in Example 2, each marked trajectory in Figure 12 leads to one control feasible sub-automaton , which satisfies all conditions in Def. 5.

Theorem 4.

Let be constructed as shown above. Then there exists a nonblocking resilient supervisor candidate of if and only if there exists a control feasible reachable sub-automaton of .

The complexity of computing is . To determine the existence of a control feasible sub-automaton of , in the worst case we need to check each sub-automaton. There are sub-automata. For each sub-automaton , whose state set is , we need to check all four conditions defined in Def. 5, whose complexity is . Typically, we have and . The final complexity of finding a control feasible sub-automaton is . Notice that , where with . The final complexity is .

Theorem 5.

Given a plant and a damage language , it is decidable whether there exists a nonblocking supervisor such that the closed-loop system is not attackable with respect to .

Proof: By Theorem 3, there exists a nonblocking supervisor which disallows any regular smart sensor attack with respect to if and only if there exists a nonblocking resilient supervisor candidate . By Theorem 4, we know that there exists a nonblocking resilient supervisor candidate if and only if there exists a control feasible sub-automaton of , which recognizes . Since there exists a finite number of sub-automata in , the existence of a control feasible sub-automaton of is decidable. Thus, the existence of a nonblocking supervisor which disallows any regular smart sensor attack with respect to is decidable.

It is interesting to point out that, in general, there are typically many choices of a control feasible sub-automaton , leading to possibly many resilient supervisors. It is unfortunate that the most permissive resilient supervisor in terms of set inclusion of closed-loop behaviours typically does not exist. For example, in Example 2 there are up to three different supervisory control maps depicted in Figure 12, leading to two non-compatible maximally permissive supervisors: one generates the closed-loop behaviour of and the other one generates . It is an interesting question whether the structure could be used to directly synthesize a maximally permissive nonblocking resilient supervisor, as it conceptually contains all resilient supervisors.

7 Conclusions

Although in our early work [9] [10], the concept of smart sensor attacks was introduced, and syntheses of a smart sensor attack and a supervisor resilient to smart sensor attacks were presented, it has not been shown whether the existence of a nonblocking supervisor resilient to all smart sensor attacks is decidable, as the synthesis algorithm presented in [10] does not guarantee to find a resilient supervisor, even though it may exist. In this paper we have first shown that the existence of a regular smart weak sensor attack is decidable, and in case it exists, it can be synthesized. Our first contribution is to identify risky pairs that describe how a legal sequence of control patterns may be used by a sensor attack to inflict weak damage, which is stated in Theorem 1 that there exists a smart weak sensor attack if and only if there exists at least one risky pair. Notice that this result is valid, regardless of whether the attack model is regular, i.e., representable by a finite-state automaton. With this key idea, to ensure the existence of a supervisor resilient to smart sensor attacks, we only need to make sure that there should be no risky pairs. Our second contribution is to show that all risky pairs can be identified and removed from the plant behaviours, via a genuine encoding scheme, upon which a verifiable sufficient and necessary condition is presented to ensure the existence of a nonblocking supervisor resilient to smart sensor attacks. This establishes the result that the existence of a supervisor resilient to all smart sensor attacks is decidable. Finally, as our third contribution, the decision process renders a synthesis algorithm for a resilient supervisor, whenever it exists, which has never been addressed in any existing works.

The decidability result established in this paper may shed light on future research on cyber attack related resilient synthesis, e.g., to decide existence of a resilient supervisor for smart actuator attacks or smart attacks with observations different from those of the supervisor, which are gaining more and more attention recently. This decidability result allows us to focus more on computational efficiency related to smart sensor attacks.

Appendix

1. Proof of Theorem 1: (1) We first show the IF part. Assume that there exist , with , and , and with such that (1) ; (2) for each , ; (3) . We now explicitly design an attack model as follows.

-

1.

;

-

2.

for each , where has been defined, for each with ,

Clearly, is well defined. We now show that , i.e., is covert, by using induction on the length of strings in . Clearly, , and . Assume that for all strings with , where , we have . We need to show that for each with , we have . If , where , then we have . If , then we have two cases to consider. Case 1: . Then . Case 2: . We will show that, . Since , we have . Because , there must exist such that . For all , if , we know that . By the definition of , we know that must also be in . Thus, . But this contradicts our assumption that . Thus, the only possibility is that . Since , we know that and . If , then clearly . Thus, by Definition of , we have . With a similar argument, we know that for all , implies that , and . If , then we can always find and with such that but . Then with the same argument, we know that , namely . Thus, the induction part holds, which means is covert.

Since results in weak damage due to the existence of , by Def. 1, is a smart weak sensor attack.

(2) Next, we show the ONLY IF part. Assume that there exists a smart weak sensor attack . By Def. 1, we know that and . Thus, there exists with , and , such that , ; and with for all ; and finally,

Let . Since , by the definition of , we know that (1) ; (2) for each , ; (3) . Thus, the theorem follows.

2. Proof of theorem 2: Since is regular, there is a finite-state automaton that realizes . We follow an idea adopted from [10], and start with a single-state transducer , where and for all , we have . contains all possible attack moves. Since is regular, there exists a finite-state automaton such that and . We now form a combination of all relevant finite-state transition structures. Let

where

-

•

, ;

-

•

;

-

•

;

-

•

is the partial transition map: for each and ,

if and only if the following conditions hold:

-

1.

;

-

2.

;

-

3.

, , , and .

-

1.

Let the controllable alphabet of be

Let be a projection, where

-

•

;

-

•

;

-

•

.

Similarly, let be a projection, where

-

•

;

-

•

;

-

•

.

We calculate a controllable [23] prefix-closed sublanguage w.r.t. and , i.e.,

which satisfies the following properties:

-

•

;

-

•

;

-

•

.

We can check that Condition 1 of states a weak nonblocking property. Condition 2 is an “extended” controllability property, which states that, after a string , each outgoing transition is allowed, as long as the plant allows it, i.e., , and the supervisor also allows it, i.e., . Because the supervisor allows all uncontrollable transitions, thus, no uncontrollable events in shall be disabled here, which is the reason why we call it a special controllability property. Condition 3 states that any two strings and , “observably” identical in the sense that , must lead to the same (fake) observable strings in , i.e., , which means implies the same attack move - thus, may result in a deterministic attack function. Based on this interpretation, by adopting either a power set construction over via the projection and a state pruning algorithm similar to the one proposed in [24] that originally aims to compute a supremal nonblocking supervisor with respect to , that is state-controllable and state-normal, or one algorithm proposed in [33] that computes a maximally controllable and observable nonblocking supervisor, we can show that the existence of such a is decidable.

To complete the proof, we only need to show that there exists a regular smart weak sensor attack if and only if there exists such a language .

To show the IF part, we define an attack model , where

-

•

;

-

•

for all and ,

Since for all , if , then , we know that is well defined.

Next, we show that conditions (1) and (3) stated in Def. 1 hold for , meaning that is a smart weak sensor attack.

We first show that Condition (1) in Def. 1 holds, i.e., . We first consider all . Since , by the definition of and Condition 2 of , we write string as , where with , , and such that (, ) and with . Thus, , as all unobservable transitions are self-looped in . Thus, . For each string , there exists and, by the definition of , .

Next, we show that Condition (3) in Def. 1 holds, that is, . To see this, notice that and since , we have . Thus, by Def. 1, is a smart weak sensor attack.

To show the ONLY IF part, assume that there exists a regular smart weak sensor attack represented by a finite-transducer . We construct as shown above and let . Since is a smart weak sensor attack, conditions (1) and (3) in Def. 1 hold. Clearly, is controllable and prefix closed. We know that , due to Condition (3) in Def. 1. Due to the covertness condition in Def. 1, we know that, for all , we have . By the definition of , we know that

Finally, because the attack model is a map, which maps all strings observably identical to the same observable string acceptable by , by the definition of , we have

Thus, all required conditions for hold. This completes the proof.

3. Proof of Theorem 3: (1) We first show the IF part. Assume that there exists a nonblocking resilient supervisor candidate . For each , if then let ; otherwise, for any , let . For the latter case, we first show that is well defined. Assume that it is not true, then there exist such that and . But this violates Condition 1 of Def. 4, thus, contradicts our assumption that is a nonblocking resilient supervisor candidate. So must be well defined, that is, for each , is uniquely defined.

Secondly, since is a control pattern for , it is clear that . Since maps all strings observably identical to a same control pattern, we know that is observable. Finally, by the third condition of Def. 4, it is clear that is nonblocking. By the construction of , and the second condition of Def. 4, where for all ,

we can show that . Thus, by the third condition of Def. 4, we have that is a nonblocking supervisory control map. Clearly, does not allow any weak sensor attack damage. Thus, it is resilient to any smart sensor attack, regardless of whether the attack is a strong or weak one.

(2) We now show the ONLY IF part. Assume that there exists a supervisor , which does not allow any smart sensor attack. Since each strong attack is also a weak attack, we will only need to consider weak sensor attacks. We define the following language induced from :

-

i)

;

-

ii)

;

-

iii)

For all , and and ,

-

iv)

All strings in are generated in Steps (1)-(3).

Clearly, . Because is a resilient supervisor, by Theorem 1 we know that - otherwise, there must exist a smart weak attack. By the construction of , we know that is conditionally controllable with respect to and . Thus, , namely, . Since is a nonblocking supervisor, we can check that the first and last conditions in Def. 4 hold. Since , we know that . By Steps (iii)-(iv), we know that . Thus, we have . On the other hand, by Step (iii), we know that . Thus, we finally have

which means the second condition of Def. 4 holds. Thus, is a nonblocking resilient supervisor candidate, which completes the proof.

4. Proof of Theorem 4: (1) To show the IF part, assume that is a control feasible reachable sub-automaton of . Let . By condition (1) of Def. 5, we know that is conditionally controllable with respect to and . Thus, . For all and , let be induced by and with . Then by condition (2) of Def. 5, we have . Thus, the first condition in Def. 4 holds. In addition, we have

By condition (3) of Def. 5, we have , by condition (4) of Def. 5, we have

where . Thus, we conclude that , namely the second condition of Def. 4 holds. Finally, since is co-reachable, and together with condition (4) of Def. 5, we know that is nonblocking. Thus, by Def. 4, is a nonblocking resilient supervisor candidate of .

(2) To show the ONLY IF part, assume that there exists a nonblocking resilient supervisor candidate . We need to show that there exists a control feasible sub-automaton of . We construct a sub-automaton , where

and . The transition map is the restriction of over .

Let be the sub-automaton . Since is a supervisor candidate, by the first condition of Def. 4, we have the following property:

By the construction of , we know that for each state reachable by , say , and each state reachable by , say , we have . Thus, if and , by the property , we have , which means the second condition of Def. 5 holds. Based on the construction of , it is also clear that the condition (1) of Def. 5 holds because is conditionally controllable due to the conditional controllability of . Because is derived from a language , the fourth condition of Def. 5 holds for . In addition, since is a resilient supervisor candidate, by the second condition of Def. 4, we know that the third condition of Def. 5 holds. Finally, since is nonblocking, based on Def. 3, we know that each state in must be co-reachable. This completes the proof that is indeed control feasible.

References

- [1] Dibaji, S. M., Pirani, M., Flamholz, D. B., Annaswamy, A. M., Johansson, K. H., & Chakrabortty, A. (2019). A systems and control perspective of CPS security. Annual Reviews in Control, 47: 394–411.

- [2] Carvalho, L. K., Wu, Y.-C., Kwong, R., & Lafortune, S. (2016). Detection and prevention of actuator enablement attacks in supervisory control systems. In Proceedings of the 13th International Workshop on Discrete Event Systems (WODES), pp. 298–305.

- [3] Lima, P., Alves, M. V., Carvalho, L., & Moreira, M. (2017). Security against network attacks in supervisory control systems. IFACPapersOnLine, 50(1):12333–12338.

- [4] Lima, P., Carvalho, L., & Moreira, M. (2018). Detectable and undetectable network attack security of cyber-physical systems. IFACPapersOnLine, 51(7):179–185.

- [5] Gao, C., Seatzu, C., Li, Z., & Giua, A. (2019). Multiple attacks detection on discrete event systems. In Proceedings of 2019 IEEE International Conference on Systems, Man and Cybernetics (SMC), pp. 2352–2357.

- [6] Carvalho, L., Wu, Y., Kwong, R., & Lafortune, S. (2018). Detection and mitigation of classes of attacks in supervisory control systems. Automatica, 97:121–133.

- [7] Wang, Y., & Pajic, M. (2019a). Attack-resilient supervisory control with intermittently secure communication. In Proceedings of the 58th IEEE Conference on Decision and Control (CDC), pp. 2015–2022.

- [8] Wang, Y., & Pajic, M. (2019b). Supervisory control of discrete event systems in the presence of sensor and actuator attacks. In Proceedings of the 58th IEEE Conference on Decision and Control (CDC), pp. 5350–5355.

- [9] Su, R. (2017). A cyber attack model with bounded sensor reading alterations. In Proceedings of 2017 American Control Conference (ACC), pp. 3200–3205.

- [10] Su, R. (2018). Supervisor synthesis to thwart cyber attack with bounded sensor reading alterations. Automatica, 94:35–44.

- [11] Meira-Góes, R., Kang, E., Kwong, R., & Lafortune, S. (2017). Stealthy deception attacks for cyber-physical systems. In Proceedings of the 56th IEEE Conference on Decision and Control (CDC), pp. 4224–4230.

- [12] Meira-Góes, R., Kang, E., Kwong, R., & Lafortune, S. (2020). Synthesis of sensor deception attacks at the supervisory layer of cyber-physical systems. Automatica, 121:109172 (online access).

- [13] Meira-Góes, R., Kwong, R., & Lafortune, S. (2019). Synthesis of sensor deception attacks for systems modeled as probabilistic automata. In Proceedings of 2019 American Control Conference (ACC), pp. 5620–5626.

- [14] Lin, L., Thuijsman, S., Zhu, Y., Ware, S., Su, R., & Reniers, M. (2019a). Synthesis of supremal successful normal actuator attackers on normal supervisors. In Proceedings of 2019 American Control Conference (ACC), pp. 5614–5619.

- [15] Lin, L., & Su, R. (2021). Synthesis of covert actuator and sensor attackers. Automatica , 130:109714 (early access).

- [16] Lin, L., Zhu, Y., & Su, R. (2019b). Towards bounded synthesis of resilient supervisors. In Proceedings of the 58th IEEE Conference on Decision and Control (CDC), pp. 7659–7664.

- [17] Lin, L., Zhu, Y., & Su, R. (2020). Synthesis of covert actuator attackers for free. Journal of Discrete Event Dynamic Systems, 30:561-577.

- [18] Zhu, Y., Lin, L., & Su, R. (2019). Supervisor obfuscation against actuator enablement attack. In Proceedings of 2019 European Control Conference (ECC), pp. 1760–1765.

- [19] Wakaiki, M., Tabuada, P., & Hespanha, J. P. (2019). Supervisory control of discrete-event systems under attacks. Dynamic Games and Applications, 9(4):965-983.

- [20] Fritz, R., & Zhang, P. (2018). Modeling and detection of cyber attacks on discrete event systems. In Proceedings of the 14th International Workshop on Discrete Event Systems (WODES), pp. 285-290.

- [21] Khoumsi, A. (2019). Sensor and actuator attacks of cyber-physical systems: a study based on supervisory control of discrete event systems. In Proceedings of the 8th International Conference on Systems and Control (ICSC), pp. 176-182.

- [22] Lin, F., & Wonham, W. M. (1988). On observability of discrete-event systems. Information Sciences, 44(3), 173-198.

- [23] Ramadge, P. J., & Wonham, W. M. (1987). Supervisory control of a class of discrete event systems. SIAM J. Control and Optimization, 25(1), 206–230.

- [24] Su, R., Van Schuppen, J. H., & Rooda, J. E. (2010). Aggregative synthesis of distributed supervisors based on automaton abstraction. IEEE Trans. Automatic Control, 55(7), 1627-1640.

- [25] Su, R., Van Schuppen, J. H., & Rooda, J. E. (2012). Maximally permissive coordinated distributed supervisory control of nondeterministic discrete-event systems. Automatica, 48(7), 1237-1247.

- [26] Wonham, W. M. (2014). Supervisory Control of Discrete-Event Systems. Systems Control Group, Dept. of ECE, University of Toronto. URL: www.control.utoronto.ca/DES.

- [27] Wonham, W. M., & Ramadge, P. J. (1987). On the supremal controllable sublanguage of a given language. SIAM J. Control and Optimization, 25(3), 637-659.

- [28] Paoli, A., Sartini, M., & Lafortune, S. (2011). Active fault tolerant control of discrete event systems using online diagnostics. Automatica, 47(4), 639-649.

- [29] Rasouli, M., Miehling, E., & Teneketzis, D. (2014). A supervisory control approach to dynamic cyber-security. Proceedings of 2014 International Conference on Decision and Game Theory for Security, (pp. 99-117).

- [30] TCT: A Computation Tool for Supervisory Control Synthesis. http://www.control.utoronto.ca/DES/Research.html.

- [31] SuSyNA: Supervisor Synthesis for Nondeterministic Automata. http://www.ntu.edu.sg/home/rsu/Downloads.htm.

- [32] Alves, M., Basilio, J. C., da Cunha, A., Carvalho, L. K., & Moreira, M. V. (2014). Robust supervisory control against intermittent loss of observations. Proceedings of the 12th Int. Workshop on Discrete Event Syst., (pp. 294-299).

- [33] Yin, X., & Lafortune, S. (2016). Synthesis of maximally permissive supervisors for partially observed discrete event systems. IEEE Trans. Automatic Control, 61(5), 1239-1254.

- [34] Sampath, M., Sengupta, R., Lafortune, S., Sinnamohideen, K., & Teneketzis, D. (1995). Diagnosability of discrete-event systems. IEEE Trans. on Automatic Control, 40(9), 1555-1575.

- [35] Meira-Goes, R., Marchand, H., & Lafortune, S. (2019). Towards resilient supervisors against sensor deception attacks. Proceedings of the IEEE 58th Conference on Decision and Control, (pp. 5144-5149).

- [36] Meira-Goes, R., Lafortune, S., & Marchand, H. (2021). Synthesis of supervisors robust against sensor deception attacks. IEEE Transactions on Automatic Control, 66(10), 4990-4997.

- [37] Meira-Góes, R., Kwong, R., & Lafortune, S. (2021). Synthesis of optimal multi-objective attack strategies for controlled systems modeled by probabilistic automata. IEEE Transactions on Automatic Control, 10.1109/TAC.2021.3094737 (early access).