[1]\fnmM. A. \surBotchev

[1]\orgnameKeldysh Institute of Applied Mathematics of Russian Academy of Sciences, \orgaddress\streetMiusskaya Sq., 4, \cityMoscow, \postcode125047, \countryRussia

On convergence of waveform relaxation for nonlinear systems of ordinary differential equations

Abstract

To integrate large systems of nonlinear differential equations in time, we consider a variant of nonlinear waveform relaxation (also known as dynamic iteration or Picard–Lindelöf iteration), where at each iteration a linear inhomogeneous system of differential equations has to be solved. This is done by the exponential block Krylov subspace (EBK) method. Thus, we have an inner-outer iterative method, where iterative approximations are determined over a certain time interval, with no time stepping involved. This approach has recently been shown to be efficient as a time-parallel integrator within the PARAEXP framework. In this paper, convergence behavior of this method is assessed theoretically and practically. We examine efficiency of the method by testing it on nonlinear Burgers, three-dimensional Liouville–Bratu–Gelfand, and three-dimensional nonlinear heat conduction equations and comparing its performance with that of conventional time-stepping integrators.

keywords:

waveform relaxation, Krylov subspaces, exponential time integrators, time-parallel methods, Burgers equation, Liouville–Bratu–Gelfand equation1 Introduction

Large systems of time-dependent ordinary differential equations arise in various applications and in many cases have to be integrated in time by implicit methods, see e.g. [1, 2]. Last decennia the niche of implicit methods has been gradually taken up by exponential time integrators [3]. For implicit and exponential methods the key issue is how to solve arising nonlinear systems and (or) to evaluate related matrix functions efficiently. To achieve efficiency, different approaches exist and widely applied, such as inexact Newton methods combined with powerful linear preconditioned solvers [4, 5, 6], splitting and Rosenbrock methods [7, 8, 9, 10, 1] and approximate iterative implicit schemes (which can be seen as stabilized explicit schemes) [11, 12, 13, 14, 15, 16, 17, 18, 19].

Another important approach to achieve efficiency in implicit and exponential time integrators is based on waveform relaxation methods [20, 21, 22], where iterative approximations are time dependent functions rather than time step values of a numerical solution. These methods have also been known as dynamic iteration or Picard–Lindelöf iteration [23]. They have been developed since the 80s [21, 24, 25, 26, 27] and have received attention recently in connection to the time-parallel exponential method PARAEXP [28] and its extension to nonlinear problems [29, 30].

Typically matrix function evaluations within exponential integrators are carried out by special linear algebra iterative procedures (often these are Krylov subspace or Chebyshev polynomial methods) [31, 32, 33, 14, 34, 35, 36]. The key attractive feature of the waveform relaxation methods is that they employ this linear algebra machinery across a certain time interval rather than within a time step, so that computational costs are distributed across time. This leads to a higher computational efficiency as well as to a parallelism across time [22].

One promising time-integration exponential method of this class is the nonlinear EBK (exponential block Krylov) method [29, 30]. It is based on block Krylov subspace projections [37] combined with waveform relaxation iteration employed to handle nonlinearities. The nonlinear EBK method possesses an across-time parallelism, and, in its parallel implementation, can be seen as an efficient version of the time-parallel PARAEXP method [29, 30].

Convergence of the waveform relaxation methods has been studied in different settings, in particular, for linear initial-value problems, for nonlinear Gauss–Seidel and Jacobi iterations (the working horses of classical waveform relaxation) and for time-discretized settings, see [23, 22] for a survey. Convergence results for waveform relaxation in general nonlinear settings are scarce, and it is often assumed that “studying the linear case carefully … might be what users really need” [23]. Except book [22], containing some general introductory convergence results, one of the papers where nonlinear waveform relaxation is studied is [38]. Below, in Remark 2, we comment more specifically on the results presented there. The aim of this paper is to extend convergence results for waveform relaxation to a nonlinear setting employed in the EBK method. This also yields an insight on its convergence behavior in practice.

The paper is organized as follows. In Section 2.1 a problem setting and the assumptions made are formulated. Main convergence results are presented in Section 2.2. Since the results are formulated for the error function which is in general unknown, in Section 2.3 an error estimate in terms of a computable nonlinear residual is formulated. At each waveform relaxation iteration a linear initial-value problem (IVP) has to be solved which, in current setting, is done by an iterative block Krylov subspace method (the linear EBK method). Therefore, in Section 2.4 we show how the nonlinear iteration convergence is affected if the linear IVP is solved inexactly. In Section 2.5 implementation issues are briefly discussed. Numerical experiments are described in Section 3.1 (for a 1D Burgers equation), Section 3.2 (for a 3D Liouville–Bratu–Gelfand equation), and Section 3.3 (for a 3D nonlinear heat conduction problem). We finish the paper with conclusions drawn in Section 4.

2 Nonlinear waveform relaxation and its convergence

2.1 Problem setting, assumptions and notation

We consider an IVP for nonlinear ODE system

| (1) |

where , and are given. Without loss of generality, we assume that the time dependence in the right hand side function is of the form

| (2) |

Otherwise, we can transform (1) to an equivalent autonomous form (usually this is done by extending the unknown vector function with an additional -th coordinate and adding an ODE to the system).

Iterative methods considered in this note are based on a family of splittings

| (3) |

with being the iteration number, so that matrices and mappings may vary from iteration to iteration. The mappings in (3) are all assumed to be Lipschitz continuous with a common Lipschitz constant , i.e.,

| (4) |

Here and throughout the paper denotes a vector norm in or a corresponding induced matrix norm. Furthermore, we assume that for all the matrices in (3) there exist constants and such that

| (5) |

For a particular matrix , condition (5) holds, for instance, in the 2-norm if the numerical range of lies in the complex halfplane . In this case and is the smallest eigenvalue of the symmetric part of . Condition (5) is closely related to the concept of the matrix logarithmic norm, see, e.g., [39, 1]. In particular, if is the logarithmic norm of then condition (5) holds, with and for all , if and only if [1, Theorem I.2.4]

In the analysis below, we also use functions [3, relation (2.10)], defined for as

| (6) |

where we set , which makes entire functions. In this paper, the following variation-of-constants formula (see, e.g., [1, Section I.2.3], [3, relation (1.5)]) is instrumental:

| (7) |

2.2 Nonlinear waveform relaxation

Let be an approximation to the unknown function for , with . Usually is taken to be for all . To solve (1), in this paper we consider nonlinear waveform relaxation iteration where we solve successfully, for , a linear inhomogeneous IVP

| (8) |

Here the matrices and the mappings form a splitting of , see (3), and satisfy, for all , the assumptions (4),(5). We emphasize that at each iteration an approximation is computed and stored for the whole time range . In Section 2.5 below we briefly discuss how to do this efficiently.

The following proposition provides a sufficient condition for iteration (8) to converge.

Proposition 1.

Proof.

Subtracting the iteration formula (8) from the ODE and taking into account that , we see that the error satisfies IVP

| (11) |

Then, applying the variation-of-constants formula (7) to IVP (11), we obtain

Therefore, using (4) and (5), we can bound

Thus, (9) is proved. Taking into account that is a monotonically increasing function of , we obtain (10). ∎

Remark 1.

Proposition 1 shows that we can choose a length of the time interval such that the nonlinear waveform relaxation converges. The larger the Lipschitz constant , the smaller should be taken.

Remark 2.

To solve an initial-value problem for an autonomous ODE system

in [38] a nonlinear waveform iteration

| (12) |

is considered, where is a matrix and . A convergence analysis of (12) is given in assumption that is Lipschitz-continuous with a Lipschitz constant less than one. In [38], a particular case of (12) is also considered with , i.e.,

Hence, our results here do not overlap with the results in [38].

In case is linear, waveform relaxation (8) is known to have an attractive property to converge superlinearly for final , see, e.g., [40, Theorem 5.1] and, for inexact waveform relaxation, [41, Theorem 2]. The following result shows that the superlinear convergence property is shared by nonlinear waveform relaxation (8).

Proposition 2.

Proof.

The proof is very similar to the proof of [40, Theorem 5.1], where a linear equivalent of (1),(8) is considered. The estimate (13) will be proven by induction. Note that . Therefore, the estimate (13) for holds, as it coincides with (9) for . Assume that (13) holds for a certain . From the proof of Proposition 1, we see that

| and, by the induction assumption, | ||||

where the relation is employed. Thus, the induction step is done. Finally, using the definition of and the fact that , it is not difficult to check that

which proves the second inequality in (13). ∎

2.3 Residual of the nonlinear problem

To control convergence of waveform relaxation (8) in practice, it is natural to consider a residual of an iterative approximation in (8). Since is defined by (8),(3) written for , it is natural to define the residual with respect to IVP (1) combined with splitting (3) for , i.e., for IVP

| (14) |

Hence, we define

| (15) |

and write

| (16) | ||||

Note that the residual possesses the backward error property, i.e., iterative approximation can be viewed as the exact solution of a perturbed IVP (14)

| (17) |

Subtracting the ODE of this problem from the ODE in (14), we obtain an IVP for the error

| (18) |

which is equivalent to the IVP (11). The following proposition shows that the residual can be seen as an upper bound bound for the error.

Proposition 3.

Proof.

As stated by proposition below, an upper bound for the nonlinear residual can be obtained in terms of the error at the previous iteration.

Proposition 4.

2.4 Linear inner iteration and its residual

In practice, the linear ODE system (8) at each waveform relaxation iteration can be solved by any suitable integrator inexactly, with a certain accuracy tolerance. In the context of the time-parallel PARAEXP scheme, the nonlinear waveform relaxation appeared to be efficient for fluid dynamics problems [29, 30], with linear IVP (8) solved by the exponential block Krylov subspace (EBK) method [37]. Thus, in this setting we have an inner-outer iterative process where each nonlinear waveform relaxation iteration (8) involves an inner Krylov subspace iterative process , with being the inner iteration number. For notation simplicity we omit the dependence on the inner iteration number in and write . These inner EBK iterations are controled by checking the norm of a residual defined, for , as

| (23) |

Here we assume that the previous iterative approximation is also computed inexactly and therefore we replace by . The inner iterations stop after a certain number of inner iterations as soon as the linear residual , defined by (23), is small enough in norm. The residual turns out to be easily computable as a by-product of the block Krylov subspace method [37]. Moreover, the residual in (23) can be used as a controller for the error in the linear IVP (8), see [37, relation (16)].

As Proposition 5 below shows, convergence in the nonlinear waveform relaxation is retained if the IVP (8) at each nonlinear iteration is solved approximately, such that residual (23) is small enough in norm. To formulate the proposition, it is convenient to assume that the linear residual is bounded in norm by the error achieved at a previous nonlinear iteration, cf. (24).

Proposition 5.

Let IVP (1) be solved iteratively by (8) and let assumptions (4),(5) and convergence condition (19) hold. Furthermore, let the IVP (8) at each nonlinear iteration be solved approximately with inner iterations such that for the inner iteration residual (23) one of the following two conditions holds:

| (24) | ||||

with a constant . Then for the error of the iterative solution in inexact inner-outer iteration (8),(23) holds, for ,

| (25) |

Moreover, the inexact iteration (8),(23) converges for to solution of IVP (1),(3) provided that

| (26) |

Proof.

Note that, due to (4),

and, hence, condition (24),(b) follows from condition (24),(a) Therefore, without loss of generality, we assume that (24),(a) holds. It follows from (23) that solves a perturbed ODE system

| (27) |

Subtracting this equation from the original nonlinear ODE and taking into account that , we obtain an IVP for the error (cf. (11)):

Application of variation-of-constants formula (7) leads to

Then, using (4),(5),(24) we get, for ,

Furthermore,

so that, for ,

Taking into account that and , we then obtain

Remark 3.

Note that for the obtained convergence condition (26) transforms into (19). In general, condition (24),(b) is probably easier to check in practice than (24),(a). However, IVPs where (24),(a) is easy to compute may occur as well. Since and are solutions of IVPs with the same initial vector , we may expect that .

2.5 Implementation of nonlinear waveform relaxation

We take initial guess function identically (i.e., for all ) equal to the initial vector . Then, at each iteration (8) we set and solve a linear IVP

| (28) |

Since this IVP is solved by the EBK method [37], the solution at every iteration is obtained as a linear combination of Krylov subspace vectors, stored columnwise in a matrix , with being the number of inner iterations,

where of is solution of a small-sized projected IVP (see [37] for details). This allows to store in a compact way for all . At each nonlinear iteration the EBK method stops as soon as the residual (23) is small enough in norm. The residual norm is easily computable and is checked for several values of .

Starting with the second iteration , before the IVP (28) is solved, the vector function is sampled at points , …, covering the time interval . Usually, it is sensible to take , and the other to be the Chebyshev polynomial roots:

The number of sample points should be taken such that is sufficiently well approximated by its linear interpolation at , …, . The computed samples , , are then stored as the columns of a matrix and the thin singular value decomposition of is employed to obtain a low rank representation

| (29) |

where typically . For more details of this procedure we refer to [37] or to [29]. Usually the computational work needed to obtain representation is negligible with respect to the other work in the EBK method. The nonlinear iteration (8) is stopped as soon as the nonlinear residual (16) is small enough in norm (in our limited experience, in practice it suffice to check the residual norm at final time only). The resulting algorithm is outlined below.

Algorithm 1

Given: IVP (1), initial value vector , tolerance

tol, block size , sample number .

Result: numerical solution of IVP (1).

Initialize nonlinear iteration:

(constant in time)

for (main iteration loop)

if () then

else

endif

if resnorm is small enough (cf. (32),(39)) then

stop iteration

endif

define , ,

set inner iteration tolerance

(cf. (33),(40))

compute the low rank approximation (29),

solve linear IVP (28) by inner block Krylov subspace iteration

(linear EBK)

obtain ,

endfor (main iteration loop)

3 Numerical experiments

3.1 1D Burgers equation

This IVP is taken from [42, Ch. IV.10]. To find unknown function , we solve a 1D Burgers equation

with viscosity and . Initial and boundary conditions are

and the final time is set to , , and . We discretize the Burgers equation by finite differences on a uniform mesh with internal nodes , , . To avoid numerical energy dissipation and to guarantee that a discretization of the advection term results in a skew-symmetric matrix [43], we apply the standard second-order central differences in the following way

The diffusion term is discretized by second-order central differences in the usual way. Then this finite difference discretization leads to an IVP of the form

| (30) |

where

is positive definite and for all . It is important to make sure that discretization of the convective term results in a skew-symmetric matrix [43, 44]. Otherwise, there are contributions of the convective term to the symmetric part of the Jacobian matrix which distort the proper energy dissipation in the ODE system and may prevent the the symmetric part from being positive semidefinite. In the context of this paper, this would mean that is not guaranteed anymore.

A straightforward way to apply the nonlinear iteration (8) to (30) would be setting in (3) , . However, to decrease the Lipschitz constant of (cf. condition (10)), we also include a linear part of to the term in (3). More specifically, we apply the nonlinear iteration (8) to semidiscrete Burgers equation (30) in the form

| (31) | |||

where . The nonlinear residual (16) in this case reads, for ,

Since the symmetric part of the matrix is , the value is the smallest eigenvalue of . The lowest eigenmodes should be well approximated even on the coarsest grid. Indeed, computing numerically (which is easy for this one-dimensional problem) yields approximately the same result on all grids: we get for and for . We stop our nonlinear iterative process (8) as soon as

| (32) |

where tol is a tolerance value. As we primarily aim at solving IVPs stemming from space discretization of PDEs, where space discretization error is significant, we settle for a moderate tolerance value in this test. To obtain the low rank representation (29) at each iteration we use sample points , and set the number of singular vectors to . The largest truncated singular value , serving as an indicator of the relative low rank representation error in (29), is then of order for and of order for .

For the inner iterative solver EBK employed to solve (8) we set the residual tolerance to , which means that inner iterations are stopped as soon as the inner linear residual satisfies

| (33) |

Krylov subspace dimension is set to . As the block size in the Krylov subspace process is , the EBK solver requires vectors to store. Since the problem becomes stiffer as the spatial grid gets finer, we use the EBK solver in the shift-and-invert (SAI) mode [45, 46], i.e., the block Krylov subspace is computed for the SAI matrix , , with defined in (31). A sparse (banded in this test) LU factorization of is computed once at each nonlinear iteration and used every time an action of is required.

To compare our nonlinear iterative EBK solver to another solver, we also solve this test with a MATLAB stiff ODE solver ode15s. This is a variable step size and variable order implicit multistep method [47]. The ode15s solver is run with absolute and relative tolerances set, respectively, to and , with . For these tolerance values both ode15s and our nonlinear EBK deliver a comparable accuracy. The relative error values reported below are computed as

| (34) |

where is a numerical solution at final time and is a reference solution computed at final time by the ode15s solver run with strict tolerance values and . As is computed on the same spatial grid as , the error value can be seen as a reliable measure of time accuracy [13].

Actual delivered accuracies and corresponding required computational work for both solvers are reported in Table 1 (for viscosity ) and Table 2 (for ). For our nonlinear EBK solver the work is reported as the number of nonlinear iterations, with one LU factorization and one matrix-vector product (matvec) per iteration, and the total number of linear systems solutions (which equals the total number of Krylov steps times the block size ). The reported total number of LU applications is not necessarily a multiple of the block size because at the first nonlinear iteration the approximate solution is constant in time and, hence, we set in (29) at the first iteration. The computational work for the ode15s solver is reported as the number of time steps, computed LU factorizations and the ODE right hand side function evaluations (fevals). In Tables 1 and 2 we also report the norm of which can be seen as a measure of the ODE system stiffness.

| method, | iter./ | LUs (LUs applic.), | relative | |

| tolerance | steps | matvecs/fevals | error | |

| grid , | ||||

| nonlin.EBK(), 1e-03 | 5 | 5 (141), 5 | 5.17e-06 | |

| nonlin.EBK(), 1e-03 | 7 | 7 (220), 7 | 2.03e-05 | |

| nonlin.EBK(), 1e-03 | 10 | 10 (340), 10 | 5.31e-05 | |

| ode15s, 1e-09 | 59 | 14 (73), 575 | 1.93e-06 | |

| ode15s, 1e-09 | 70 | 16 (86), 588 | 8.38e-06 | |

| ode15s, 1e-09 | 83 | 19 (118), 620 | 2.21e-05 | |

| grid , | ||||

| nonlin.EBK(), 1e-03 | 5 | 5 (170), 5 | 5.06e-06 | |

| nonlin.EBK(), 1e-03 | 7 | 7 (256), 7 | 2.00e-05 | |

| nonlin.EBK(), 1e-03 | 10 | 10 (389), 10 | 5.30e-05 | |

| ode15s, 1e-09 | 67 | 15 (83), 1085 | 1.76e-06 | |

| ode15s, 1e-09 | 78 | 17 (104), 1106 | 1.13e-05 | |

| ode15s, 1e-09 | 91 | 19 (134), 1136 | 2.48e-05 | |

| grid , | ||||

| nonlin.EBK(), 1e-03 | 5 | 5 (177), 5 | 5.07e-06 | |

| nonlin.EBK(), 1e-03 | 7 | 7 (277), 7 | 2.00e-05 | |

| nonlin.EBK(), 1e-03 | 11 | 11 (452), 11 | 4.38e-05 | |

| ode15s, 1e-09 | 73 | 16 (91), 2093 | 2.29e-06 | |

| ode15s, 1e-09 | 85 | 19 (107), 2109 | 7.96e-06 | |

| ode15s, 1e-09 | 98 | 22 (139), 2141 | 2.22e-05 | |

| grid , | ||||

| nonlin.EBK(), 1e-03 | 5 | 5 (193), 5 | 5.06e-06 | |

| nonlin.EBK(), 1e-03 | 8 | 8 (347), 8 | 4.82e-06 | |

| nonlin.EBK(), 1e-03 | 11 | 11 (501), 11 | 4.38e-05 | |

| ode15s, 1e-09 | 79 | 18 (99), 4101 | 1.93e-06 | |

| ode15s, 1e-09 | 90 | 20 (112), 4114 | 8.25e-06 | |

| ode15s, 1e-09 | 103 | 23 (144), 4146 | 2.21e-05 | |

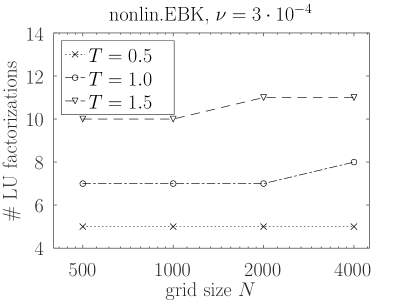

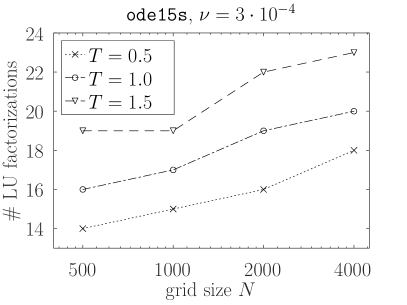

As we can see in Tables 1 and 2, our solver requires less LU factorizations than the ode15s solver. It does require more LU factorization actions but these are relatively cheap. Moreover, in EBK they are carried out simultaneously for blocks of right hand sides. For both solvers, in Figure 1 we plot the numbers of LU factorizations (presented in the tables) versus the grid size. The number of nonlinear iterations in the EBK solver (with one LU factorization per iteration) remains practically constant as the grid gets finer, whereas the number of LU factorizations in ode15s increases with the grid size. Furthermore, we see that the ode15s solver requires more time steps and significantly more fevals on the finer grids. is approximately the largest possible time for which our nonlinear EBK solver converges in this test. From Figure 1 we see that a higher efficiency is reached for larger values. Indeed, on the finest grid LU factorizations per unit time are required for , LU factorizations for and factorizations for .

Comparing Tables 1 and 2, we see that performance of the ode15s solver improves for the smaller viscosity value . This is expected as the stiffness of the space-discretized Burgers equation decreases with . Indeed, on finer grids, where contributions of (proportional to ) dominate those of (proportional to ), we have , see the values reported in the tables.

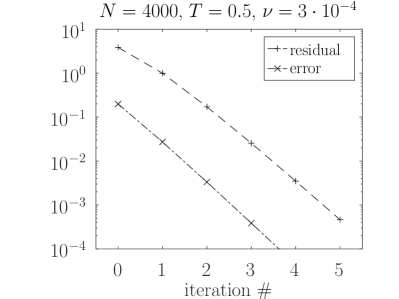

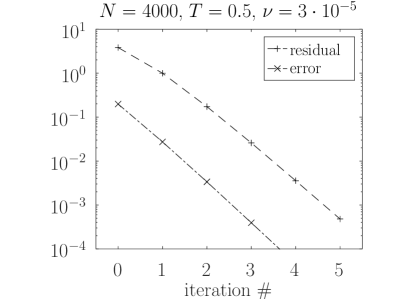

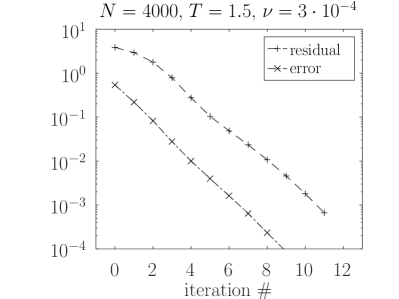

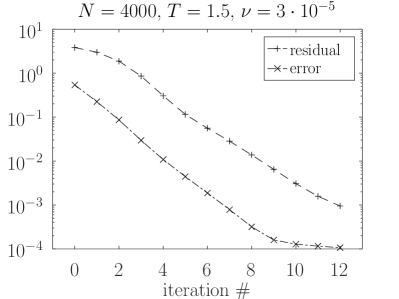

In Figure 2 we plot the reference solution and the first three iterative approximations , and . Convergence plots of the residual and error norms are shown in Figure 3. There, the shown residual norm is computed at according to (16) and the error norm is the relative norm defined in (34). As we see, the residual turns out to be a reliable measure of the error. Furthermore, convergence behavior is not influenced by viscosity , which is in accordance with theory: does not change the nonlinear term in (8) and its Lipschitz constant.

| method, | iter./ | LUs (LUs applic.), | relative | |

| tolerance | steps | matvecs/fevals | error | |

| grid , | ||||

| nonlin.EBK(), 1e-03 | 5 | 5 (69), 5 | 1.82e-05 | |

| nonlin.EBK(), 1e-03 | 7 | 7 (139), 7 | 2.26e-05 | |

| nonlin.EBK(), 1e-03 | 13 | 13 (414), 13 | 1.10e-04 | |

| ode15s, 1e-09 | 28 | 7 (38), 540 | 3.71e-06 | |

| ode15s, 1e-09 | 38 | 9 (53), 555 | 1.23e-05 | |

| ode15s, 1e-09 | 52 | 13 (89), 591 | 3.38e-05 | |

| grid , | ||||

| nonlin.EBK(), 1e-03 | 5 | 5 (90), 5 | 6.20e-06 | |

| nonlin.EBK(), 1e-03 | 7 | 7 (176), 7 | 2.25e-05 | |

| nonlin.EBK(), 1e-03 | 12 | 12 (430), 12 | 1.07e-04 | |

| ode15s, 1e-09 | 35 | 9 (44), 1046 | 2.44e-06 | |

| ode15s, 1e-09 | 45 | 10 (60), 1062 | 1.64e-05 | |

| ode15s, 1e-09 | 59 | 15 (99), 2102 | 3.83e-05 | |

| grid , | ||||

| nonlin.EBK(), 1e-03 | 5 | 5 (120), 5 | 5.29e-06 | |

| nonlin.EBK(), 1e-03 | 7 | 7 (190), 7 | 2.22e-05 | |

| nonlin.EBK(), 1e-03 | 12 | 12 (494), 12 | 1.06e-04 | |

| ode15s, 1e-09 | 42 | 10 (55), 2057 | 3.91e-06 | |

| ode15s, 1e-09 | 52 | 12 (70), 2072 | 1.32e-05 | |

| ode15s, 1e-09 | 66 | 17 (108), 4111 | 3.32e-05 | |

| grid , | ||||

| nonlin.EBK(), 1e-03 | 5 | 5 (149), 5 | 5.24e-06 | |

| nonlin.EBK(), 1e-03 | 8 | 8 (276), 8 | 5.52e-06 | |

| nonlin.EBK(), 1e-03 | 12 | 12 (578), 12 | 1.07e-04 | |

| ode15s, 1e-09 | 47 | 11 (61), 4063 | 3.86e-06 | |

| ode15s, 1e-09 | 58 | 15 (80), 4082 | 1.22e-05 | |

| ode15s, 1e-09 | 71 | 18 (112), 8115 | 3.36e-05 | |

3.2 3D Bratu test problem

We now consider a nonstationary IVP for Liouville–Bratu–Gelfand equation [48]: find such that

| (35) | ||||

Here the source function is defined as

and the final time is either or . Solution to (35) is shown in Figure 4.

The regular second order finite-difference discretization of the spatial derivatives in (35) on a uniform grid yields an IVP

| (36) |

where the entries of the vector functions and contain the values of the numerical solution and function at the grid nodes, respectively, and

| (37) |

Furthermore, the components of the vector function are defined as

To apply nonlinear waveform relaxation (8) for solving (36), one could have chosen to set and . However, to have a smaller Lipschitz constant in , we supplement with a linear part of , setting

| (38) | |||

where is the Jacobian matrix of evaluated at and an approximation

is used. The nonlinear residual (16) takes in this case the form, for ,

Since this IVP is stiff and anisotropic, the nonlinear residual norm can be very large in norm, . Therefore we switch from the absolute stopping criterion used in the previous test to a relative stopping criterion

| (39) |

with tolerances tol varying in this test from to (the specific values are reported in Table 3 discussed below). Note that , as discussed in Section 2.5.

The low rank representation (29) at each iteration is computed at sample points , for either or low rank terms. The EBK solver applied to solve (8) is stopped as soon as

| (40) |

which means that the linear residual should be small with respect to the other terms in the perturbed ODE (27). We switch from the absolute inner stopping criterion (33) to (40) because in this stiff anisotropic problem the term can be very large in norm. Krylov subspace dimension is set to . Hence, for the block size , the EBK solver has to store vectors. Since the problem is stiff, just as in the previous test, the EBK solver is employed in the SAI mode, with a sparse LU factorization of computed and applied throughout each nonlinear iteration. The relative error values we report below are computed according to (34).

We compare our nonlinear EBK solver to the two-stage Rosenbrock method ROS2, see [10] or [1, Chapter IV.5]. For IVP (1), it can be written as

| (41) | ||||

Here is numerical solution at time step

()

obtained with a time step size .

This scheme is second order consistent for any matrix .

Here we take to be the Jacobian matrix of computed at

and . At each time step a sparse LU factorization of

can be computed and used to solve the two linear systems in .

The numerical tests presented here are carried in Matlab and, as it turns out,

for the grid sizes used,

it is much faster to compute the action of the matrix

by the Matlab backslash operator \ than to compute a sparse LU factorization

first and to apply it twice111To solve a linear system

Ax=b with a square nonsingular matrix A,

one can use the Matlab backslash operator as

x=Ab,

in which case the operator computes and applies

an LU factorization..

This is apparently because Matlab creates

an overhead to call sparse LU factorization routines

(of the UMFPACK software [49, 50]) and then to store the computed

LU factors as Matlab variables.

Note that both the backslash operator as well as the LU factorization for large

sparse matrices in Matlab are based on UMFPACK.

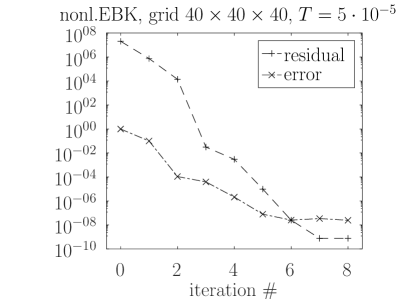

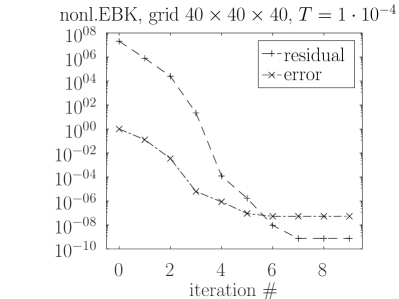

Comparison results of our nonlinear EBK solver and ROS2 method are presented in Table 3. The results clearly show that, for this test problem, the nonlinear EBK solver is much more efficient than the ROS2 method both in terms of required LU factorizations (and their actions) and the CPU time. We also see that the number of nonlinear waveform relaxation iterations hardly changes with grid size and with final time (see also Table 4). While the convergence independence on the grid size is expected (as the grid size does not affect the nonlinear part in the ODE), the weak convergence dependence on is probably a property of the specific test problem. From plots in Figure 5 we see that convergence behavior does depend on .

| method, time steps (t.s.) | iter./ | LUs (LUs applic.), | relative | CPU |

| tolerance / | steps | matvecs/fevals | error | time, s |

| grid , , | ||||

| nonlin.EBK(), 1 t.s., 1e-02 | 2 | 2 (37), 2 | 1.17e-04 | 6.3 |

| nonlin.EBK(), 1 t.s., 1e-04 | 3 | 3 (111), 3 | 4.04e-05 | 12.9 |

| ROS2, | 320 | 320 (640), 640 | 2.36e-04 | 225 |

| ROS2, | 640 | 640 (1280), 1280 | 5.73e-07 | 442 |

| grid , , | ||||

| nonlin.EBK(), 1 t.s., 1e-03 | 3 | 3 (70), 3 | 2.09e-05 | 10.4 |

| nonlin.EBK(), 1 t.s., 1e-03 | 3 | 3 (80), 3 | 1.38e-05 | 11.1 |

| ROS2, | 320 | 320 (640), 640 | 1.51e-05 | 201 |

| ROS2, | 320 | 640 (1280), 1280 | 7.51e-06 | 401 |

| grid , , | ||||

| nonlin.EBK(), 20 t.s., 1e-03 | 46 | 46 (908), 46 | 1.34e-05 | 148 |

| nonlin.EBK(), 10 t.s., 1e-03 | 30 | 30 (646), 30 | 1.19e-05 | 102 |

| ROS2, 3200 t.s., | 3200 | 3200 (6400), 3200 | 2.19e-06 | 2007 |

| grid , , | ||||

| nonlin.EBK(), 1 t.s., 1e-02 | 2 | 2 (41), 2 | 1.18e-04 | 51.5 |

| nonlin.EBK(), 1 t.s., 1e-04 | 3 | 3 (91), 3 | 6.91e-05 | 90 |

| ROS2, | 320 | 320 (640), 640 | 2.35e-04 | 2177 |

| ROS2, | 640 | 640 (1280), 1280 | 1.66e-05 | 4790 |

| grid , , | ||||

| nonlin.EBK(), 1 t.s., 1e-02 | 2 | 2 (38), 2 | 3.48e-03 | 51 |

| nonlin.EBK(), 1 t.s., 1e-03 | 3 | 3 (74), 3 | 2.05e-05 | 83 |

| ROS2, | 320 | 320 (640), 640 | 2.11e-05 | 2013 |

| ROS2, | 640 | 640 (1280), 1280 | 1.66e-05 | 4368 |

| grid , , | ||||

| nonlin.EBK(), 10 t.s., 1e-04 | 30 | 30 (671), 30 | 1.18e-05 | 800 |

| ROS2, | 3200 | 3200 (6400), 3200 | 2.18e-06 | 20017 |

Results presented in Table 3 for the nonlinear EBK solver are obtained with the block size and . The value is marginally acceptable for the accuracy point of view but does yield an acceptable error values. Therefore, the plots in Figure 5, made to get an insight in the error convergence, are produced with a larger block size and samples. A convergence stagnation is clearly visible in both plots. Although we do not have a precise explanation for the stagnation, we note that it disappears if we switch off the strong anisotropy in (37), taking to be a Laplacian. The accuracy level at which stagnation occurs, appears to be related to the condition number of the Jacobian of the ODE system being solved. The nonlinear EBK solver relies on repeatedly made low-rank approximations which are instrumental for the inner block Krylov subspace solver. Another source of possible inaccuracies is linear system solution within the block Krylov subspace solver where linear systems with matrices are solved for orders of magnitude larger than typical time step sizes in implicit time integrators. Nevertheless, the convergence stagnation is observed at an accuracy level which seems to be by far acceptable for PDE solvers.

Finally, we briefly comment on convergence of the ROS2 method. Since its convergence order can not be seen from error values shown in Table 3, we give an error convergence plot in Figure 6, where relative error values, computed as given in (34), are plotted versus the total number of time steps. The second order convergence is clearly seen.

| 2 | 2 | 2 | |

| 2 | 2 | 3 | |

| 3 | 3 | 3 |

3.3 3D nonlinear heat conduction

In the previous tests the ODE right hand side function has a linear part (namely, in the first test and in the second one, see (30) and (36), respectively). To examine whether our nonlinear EBK solver is able to handle nonlinear problems with no linear part, we now consider the following nonlinear heat conduction problem, described in [51]: find unknown which satisfies

| (42) |

We discretize this initial boundary value problem on a regular grid of nodes , , , , , , , with grid widths and . The shifted grid nodes are taken for the direction to accommodate the Neumann boundary conditions. The standard second order finite difference approximation is employed, which reads, for the derivative,

where . The same finite difference approximations are applied in and directions. This space discretization of the initial boundary value problem (42) yields an IVP

| (43) |

where the entries of the vector function contain the numerical solution values and the constant in time vector contains the inhomogeneous boundary condition contributions. The waveform relaxation iteration is applied with the splitting (3) where we set, for ,

In this relation, it is not difficult to recognize the same splitting as is used for the Burgers equation test (with and ).

The EBK inner-outer iterations are applied exactly in the same setting as in the previous test, with the same stopping criteria, see (39), (40) and the same number of samples . As in the previous test, we compare the EBK solver with the ROS2 integrator. The results of the test runs are presented in Table 5. As we see, on the grid the two time integration schemes perform equally well in terms of the CPU time. Note that the EBK solver requires much fewer LU factorizations. On the finer the EBK solver clearly outperforms ROS2, both in terms of the CPU time and required LU factorizations. When switching to the finer grid, to keep convergence in the EBK scheme, we have to decrease the time interval from to . Hence, we observe a deterioration of the EBK convergence in this test. There is also a moderate increase in the required number of nonlinear iterations: with EBK needs in total 73 and 94 iterations on the two grids, respectively.

| method, time steps (t.s.) | iter./ | LUs (LUs applic.), | relative | CPU |

| tolerance / | steps | matvecs/fevals | error | time, s |

| grid , , | ||||

| nonlin.EBK(), 10 t.s., 1e-02 | 52 | 52 (1223), 52 | 1.30e-04 | 387 |

| nonlin.EBK(), 10 t.s., 1e-02 | 52 | 52 (1936), 52 | 5.91e-05 | 448 |

| ROS2, | 250 | 250 (500) | 7.26e-05 | 347 |

| ROS2, | 500 | 500 (1000) | 2.02e-05 | 821 |

| grid , , | ||||

| nonlin.EBK(), 20 t.s., 1e-02 | 94 | 94 (2068), 94 | 4.41e-05 | 8229 |

| ROS2, | 500 | 500 (1000) | 3.99e-05 | 19578 |

4 Conclusions

In this paper nonlinear waveform relaxation iterations and their block Krylov subspace implementation (the nonlinear EBK method) are examined theoretically and practically. Theoretically we have shown that convergence of the considered nonlinear waveform relaxation iteration is determined by the Lipschitz constant of the nonlinear term in (3), the logarithmic norm bound of the linear term matrix and the time interval length , see relation (10). We have also established a superlinear convergence of our nonlinear iteration, which is known to hold in the linear case, see Proposition 2. Furthermore, we have linked the residual convergence in the nonlinear iteration to the error convergence, see relation (20). Finally, it is shown that inexact solution of the linear initial-value problem arising at each waveform relaxation iteration leads to a process whose convergence is equivalent to convergence of the exact waveform relaxation iteration with an increased Lipschitz constant , with being the inexactness tolerance.

Practical performance of nonlinear waveform relaxation heavily depends on the ability to solve the arising linear initial-value problem at each relaxation iteration quickly and to handle the iterative across-time solutions efficiently. As we show here, both these goals can be successfully reached by employing the exponential block Krylov subspace method EBK [37]. In the presented experiments the EBK method is used in the shift-and-invert (SAI) mode, i.e., it has been assumed that the SAI linear systems (similar to those arising in implicit time stepping) can be solved efficiently.

Comparisons with implicit time integration methods demonstrated superiority and potential of our EBK-SAI based nonlinear waveform relaxation iterations. The presented nonlinear residual stopping criterion has been proven to be a reliable way to control convergence and to estimate accuracy. Moreover, as the first two numerical tests demonstrate, the grid independent convergence of linear SAI Krylov subspace exponential time integrators (see [45, 46, 52]) can be inherited by the nonlinear waveform relaxation iterations.

As numerical tests and our experience suggest, the presented method has its limitations. First, it is not intended for strongly nonlinear problems with rapidly changing solutions. The method clearly profits if the right hand side function of the ODE system contains a linear part. Second, the linear systems arising in the presented nonlinear EBK method have a structure similar to linear systems occuring in implicit time integrators with significantly increased time step sizes. Therefore, the overall efficiency of the method for a particular application heavily depends on availability of efficient linear solvers. Third, the nonlinear EBK method is aimed at solving initial-value problems with a moderate accuracy (of order or ), which is usually enough for solving PDE problems. The accuracy of the presented method can be limited by the built-in low rank approximations and solving large ill-conditioned linear systems. Finally, some tuning (such as a proper choice of the block size and the time interval length) is required to apply the proposed approach successfully. Some of these issues can be addressed in more details in a future.

Nevertheless, as results in this paper show, the presented framework seems to be promising, has a potential and is of interest for the numerical community. Recently, an alternative approach based on exponential Krylov subspace integrators has been presented in [53, 54] for nonlinear heat equations. In this problem a rapid change in the solution seems to exclude using waveform relaxation with block Krylov subspaces as presented here. A further research should be done to get an insight on when to switch between the block Krylov subspace approach discussed here and the approach of [53]. Finally, it seems to be useful to gain more insight how these two approaches compare to other exponential time integrators for nonlinear initial-value problems [3, 55, 56].

Acknowledgments

The author thanks the anonymous reviewer for careful reading and suggesting several valuable improvements. We acknowledge the use of the hybrid supercomputer K-100 facilities installed in the Supercomputer center of collective usage at Keldysh Institute of Applied Mathematics of Russian Academy of Sciences.

References

- \bibcommenthead

- [1] W. Hundsdorfer, J.G. Verwer, Numerical Solution of Time-Dependent Advection-Diffusion-Reaction Equations (Springer Verlag, 2003)

- [2] H.C. Elman, D.J. Silvester, A.J. Wathen, Finite Elements and Fast Iterative Solvers with Applications in Incompressible Fluid Dynamics (Oxford University Press, Oxford, 2005)

- [3] M. Hochbruck, A. Ostermann, Exponential integrators. Acta Numer. 19, 209–286 (2010). 10.1017/S0962492910000048

- [4] P.N. Brown, Y. Saad, Convergence theory of nonlinear Newton–Krylov algorithms. SIAM J. Optimization 4(2), 297–330 (1994)

- [5] R. Choquet, J. Erhel, Newton–GMRES algorithm applied to compressible flows. Int. J. Numer. Meth. Fluids 23, 177–190 (1996)

- [6] D. Tromeur-Dervout, Y. Vassilevski, Choice of initial guess in iterative solution of series of systems arising in fluid flow simulations. Journal of Computational Physics 219(1), 210–227 (2006). https://doi.org/10.1016/j.jcp.2006.03.014. URL https://www.sciencedirect.com/science/article/pii/S0021999106001483

- [7] N.N. Yanenko, The method of fractional steps. The solution of problems of mathematical physics in several variables (Springer-Verlag, New York, 1971), pp. viii+160

- [8] E.G. D’yakonov, Difference systems of second order accuracy with a divided operator for parabolic equations without mixed derivatives. USSR Comput. Math. Math. Phys. 4(5), 206–216 (1964)

- [9] P. Csomós, I. Faragó, A. Havasi, Weighted sequential splittings and their analysis. Comput. Math. Appl. pp. 1017–1031 (2005)

- [10] J.G. Verwer, E.J. Spee, J.G. Blom, W. Hundsdorfer, A second order Rosenbrock method applied to photochemical dispersion problems. SIAM J. Sci. Comput. 20, 456–480 (1999)

- [11] P.J. van der Houwen, B.P. Sommeijer, On the internal stability of explicit -stage Runge–Kutta methods for large values of . Z. Angew. Math. Mech. 60, 479–485 (1980)

- [12] V.O. Lokutsievskii, O.V. Lokutsievskii, On numerical solution of boundary value problems for equations of parabolic type. Soviet Math. Dokl. 34(3), 512–516 (1987)

- [13] B.P. Sommeijer, L.F. Shampine, J.G. Verwer, RKC: An explicit solver for parabolic PDEs. J. Comput. Appl. Math. 88, 315–326 (1998)

- [14] H. Tal-Ezer, Spectral methods in time for parabolic problems. SIAM J. Numer. Anal. 26(1), 1–11 (1989)

- [15] V.I. Lebedev, Explicit difference schemes for solving stiff systems of ODEs and PDEs with complex spectrum. Russian J. Numer. Anal. Math. Modelling 13(2), 107–116 (1998). 10.1515/rnam.1998.13.2.107

- [16] J.G. Verwer, B.P. Sommeijer, W. Hundsdorfer, RKC time-stepping for advection–diffusion–reaction problems. Journal of Computational Physics 201(1), 61–79 (2004). https://doi.org/10.1016/j.jcp.2004.05.002. URL https://www.sciencedirect.com/science/article/pii/S0021999104001925

- [17] M.A. Botchev, G.L.G. Sleijpen, H.A. van der Vorst, Stability control for approximate implicit time stepping schemes with minimum residual iterations. Appl. Numer. Math. 31(3), 239–253 (1999). https://doi.org/10.1016/S0168-9274(98)00138-X

- [18] M.A. Botchev, H.A. van der Vorst, A parallel nearly implicit scheme. Journal of Computational and Applied Mathematics 137, 229–243 (2001). https://doi.org/10.1016/S0377-0427(01)00358-2

- [19] V.T. Zhukov, Explicit methods of numerical integration for parabolic equations. Mathematical Models and Computer Simulations 3(3), 311–332 (2011). https://doi.org/10.1134/S2070048211030136

- [20] E. Lelarasmee, A.E. Ruehli, A.L. Sangiovanni-Vincentelli, The waveform relaxation method for time-domain analysis of large scale integrated circuits. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems 1(3), 131–145 (1982). 10.1109/TCAD.1982.1270004

- [21] A.R. Newton, A.L. Sangiovanni-Vincentelli, Relaxation-based electrical simulation. IEEE Transactions on Electron Devices 30(9), 1184–1207 (1983). 10.1109/T-ED.1983.21275

- [22] S. Vandewalle, Parallel Multigrid Waveform Relaxation for Parabolic Problems (Teubner, Stuttgart, 1993)

- [23] U. Miekkala, O. Nevanlinna, Iterative solution of systems of linear differential equations. Acta Numerica 5, 259–307 (1996). https://doi.org/10.1017/S096249290000266X

- [24] J. White, F. Odeh, A.L. Sangiovanni-Vincentelli, A. Ruehli, Waveform relaxation: Theory and practice. Tech. Rep. UCB/ERL M85/65, EECS Department, University of California, Berkeley (1985). www.eecs.berkeley.edu/Pubs/TechRpts/1985/543.html

- [25] U. Miekkala, O. Nevanlinna, Convergence of dynamic iteration methods for initial value problems. SIAM Journal on Scientific and Statistical Computing 8(4), 459–482 (1987). https://doi.org/10.1137/0908046

- [26] C. Lubich, A. Ostermann, Multi-grid dynamic iteration for parabolic equations. BIT Numerical Mathematics 27, 216–234 (1987). URL http://doi.org/10.1007/BF01934186. 10.1007/BF01934186

- [27] J. Janssen, S. Vandewalle, Multigrid waveform relaxation of spatial finite element meshes: The continuous-time case. SIAM J. Numer. Anal. 33(2), 456–474 (1996). 10.1137/0733024

- [28] M.J. Gander, S. Güttel, PARAEXP: A parallel integrator for linear initial-value problems. SIAM Journal on Scientific Computing 35(2), C123–C142 (2013)

- [29] G.L. Kooij, M.A. Botchev, B.J. Geurts, A block Krylov subspace implementation of the time-parallel Paraexp method and its extension for nonlinear partial differential equations. Journal of Computational and Applied Mathematics 316(Supplement C), 229–246 (2017). https://doi.org/10.1016/j.cam.2016.09.036

- [30] G. Kooij, M.A. Botchev, B.J. Geurts, An exponential time integrator for the incompressible Navier–Stokes equation. SIAM J. Sci. Comput. 40(3), B684–B705 (2018). https://doi.org/10.1137/17M1121950

- [31] T.J. Park, J.C. Light, Unitary quantum time evolution by iterative Lanczos reduction. J. Chem. Phys. 85, 5870–5876 (1986)

- [32] H.A. van der Vorst, An iterative solution method for solving , using Krylov subspace information obtained for the symmetric positive definite matrix . J. Comput. Appl. Math. 18, 249–263 (1987)

- [33] V.L. Druskin, L.A. Knizhnerman, Two polynomial methods of calculating functions of symmetric matrices. U.S.S.R. Comput. Maths. Math. Phys. 29(6), 112–121 (1989)

- [34] E. Gallopoulos, Y. Saad, Efficient solution of parabolic equations by Krylov approximation methods. SIAM J. Sci. Statist. Comput. 13(5), 1236–1264 (1992). http://doi.org/10.1137/0913071

- [35] V.L. Druskin, L.A. Knizhnerman, Krylov subspace approximations of eigenpairs and matrix functions in exact and computer arithmetic. Numer. Lin. Alg. Appl. 2, 205–217 (1995)

- [36] M. Hochbruck, C. Lubich, On Krylov subspace approximations to the matrix exponential operator. SIAM J. Numer. Anal. 34(5), 1911–1925 (1997)

- [37] M.A. Botchev, A block Krylov subspace time-exact solution method for linear ordinary differential equation systems. Numer. Linear Algebra Appl. 20(4), 557–574 (2013). http://doi.org/10.1002/nla.1865

- [38] O. Nevanlinna, F. Odeh, Remarks on the convergence of waveform relaxation method. Numerical functional analysis and optimization 9(3-4), 435–445 (1987). https://doi.org/10.1080/01630568708816241

- [39] K. Dekker, J.G. Verwer, Stability of Runge–Kutta methods for stiff non-linear differential equations (North-Holland Elsevier Science Publishers, 1984)

- [40] M.A. Botchev, V. Grimm, M. Hochbruck, Residual, restarting and Richardson iteration for the matrix exponential. SIAM J. Sci. Comput. 35(3), A1376–A1397 (2013). http://doi.org/10.1137/110820191

- [41] M.A. Botchev, I.V. Oseledets, E.E. Tyrtyshnikov, Iterative across-time solution of linear differential equations: Krylov subspace versus waveform relaxation. Computers & Mathematics with Applications 67(12), 2088–2098 (2014). http://doi.org/10.1016/j.camwa.2014.03.002

- [42] E. Hairer, G. Wanner, Solving Ordinary Differential Equations II. Stiff and Differential–Algebraic Problems, 2nd edn. Springer Series in Computational Mathematics 14 (Springer–Verlag, 1996)

- [43] L.A. Krukier, Implicit difference schemes and an iterative method for solving them for a certain class of systems of quasi-linear equations. Sov. Math. 23(7), 43–55 (1979). Translation from Izv. Vyssh. Uchebn. Zaved., Mat. 1979, No. 7(206), 41–52 (1979)

- [44] R.W.C.P. Verstappen, A.E.P. Veldman, Symmetry-preserving discretization of turbulent flow. J. Comput. Phys. 187(1), 343–368 (2003). http://doi.org/10.1016/S0021-9991(03)00126-8

- [45] I. Moret, P. Novati, RD rational approximations of the matrix exponential. BIT 44, 595–615 (2004)

- [46] J. van den Eshof, M. Hochbruck, Preconditioning Lanczos approximations to the matrix exponential. SIAM J. Sci. Comput. 27(4), 1438–1457 (2006)

- [47] L.F. Shampine, M.W. Reichelt, The MATLAB ODE suite. SIAM J. Sci. Comput. 18(1), 1–22 (1997). Available at www.mathworks.com

- [48] J. Jon, S. Klaus, The Liouville–Bratu–Gelfand problem for radial operators. Journal of Differential Equations 184, 283–298 (2002). https://doi.org/10.1006/jdeq.2001.4151

- [49] T.A. Davis, A column pre-ordering strategy for the unsymmetric-pattern multifrontal method. ACM Trans. Math. Software 30(2), 167–195 (2004). 10.1145/992200.992205

- [50] T.A. Davis, Algorithm 832: UMFPACK V4.3—an unsymmetric-pattern multifrontal method. ACM Trans. Math. Software 30(2), 196–199 (2004). 10.1145/992200.992206

- [51] V.T. Zhukov, N. Novikova, O.B. Feodoritova, On direct solving conjugate heat transfer of gas mixture and solid body. KIAM Preprint 2023(12) (2023). https://doi.org/10.20948/prepr-2023-12

- [52] V. Grimm, Resolvent Krylov subspace approximation to operator functions. BIT 52(3), 639–659 (2012). 10.1007/s10543-011-0367-8

- [53] M.A. Botchev, V.T. Zhukov, Exponential Euler and backward Euler methods for nonlinear heat conduction problems. Lobachevskii Journal of Mathematics 44(1), 10–19 (2023). https://doi.org/10.1134/S1995080223010067

- [54] M.A. Botchev, V.T. Zhukov, Adaptive iterative explicit time integration for nonlinear heat conduction problems. Lobachevskii Journal of Mathematics 45(1), 12–20 (2024). https://doi.org/10.1134/S1995080224010086

- [55] D. Hipp, M. Hochbruck, A. Ostermann, An exponential integrator for non-autonomous parabolic problems. ETNA 41, 497–511 (2014). http://etna.mcs.kent.edu/volumes/2011-2020/vol41/

- [56] E. Hansen, A. Ostermann, High-order splitting schemes for semilinear evolution equations. BIT Numerical Mathematics 56(4), 1303–1316 (2016)