On a random model of forgetting

Abstract

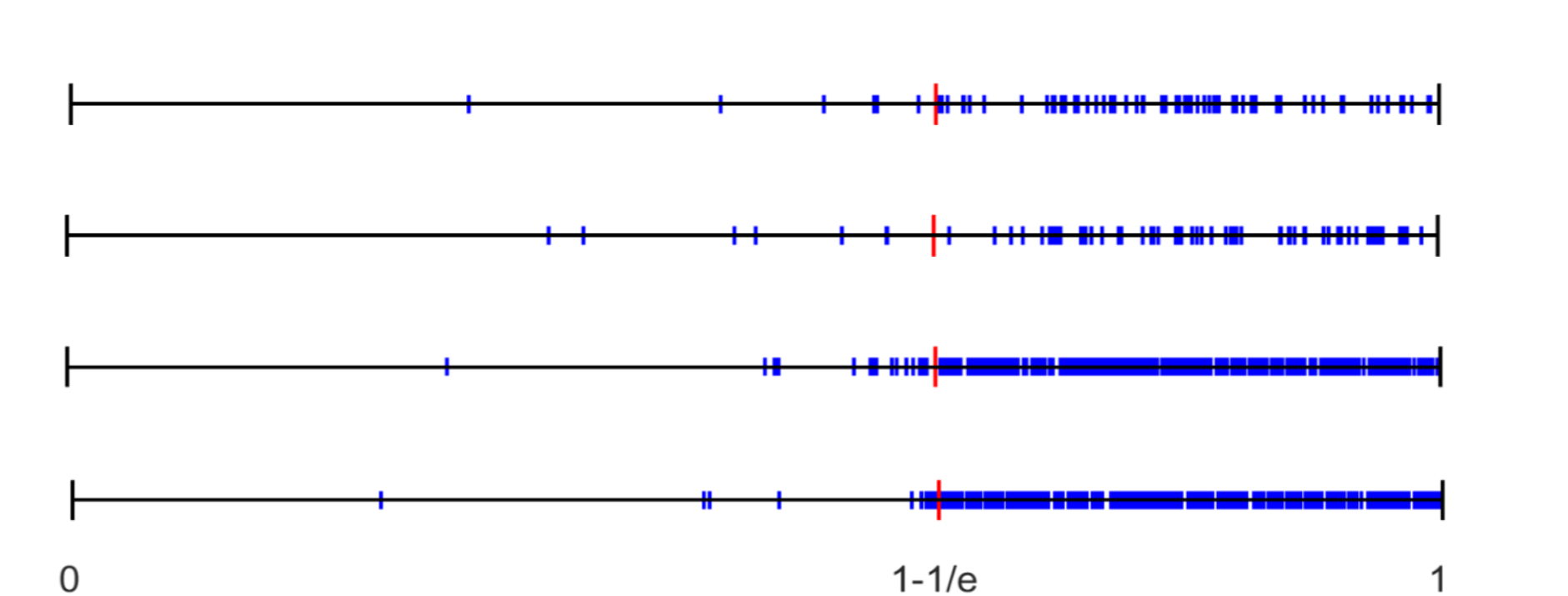

Georgiou, Katkov and Tsodyks considered the following random process. Let be an infinite sequence of independent, identically distributed, uniform random points in . Starting with , the elements join one by one, in order. When an entering element is larger than the current minimum element of , this minimum leaves . Let denote the content of after the first elements join. Simulations suggest that the size of at time is typically close to . Here we first give a rigorous proof that this is indeed the case, and that in fact the symmetric difference of and the set is of size at most with high probability. Our main result is a more accurate description of the process implying, in particular, that as tends to infinity converges to a normal random variable with variance . We further show that the dynamics of the symmetric difference of and the set converges with proper scaling to a three dimensional Bessel process.

1 Introduction

The following random process, which we denote by , is considered by Georgiou, Katkov and Tsodyks in [8] motivated by the study of a possible simplified model for the process of forgetting. Let be independent, identically distributed, uniform random points in . Starting with the set the elements enter the set one by one, in order, and if when enters it is larger than the minimum member of , this minimum leaves it. Let us note that in this process, the fact that are uniformly distributed is not crucial. Indeed, it only matters that the ordering of the arriving elements is a uniform random ordering and therefore the uniform distribution can be replaced with any other non-atomic distribution.

The set will be called the memory. We let be the finite process that stops at time . Simulations indicate that the expected size of the memory at time is where is the basis of the natural logarithm, and that in fact with high probability (that is, with probability approaching as tends to infinity) the size at the end is very close to the expectation. Tsodyks [12] suggested the problem of proving this behavior rigorously. Our first result here is a proof that this is indeed the case, as stated in the following theorem. Recall that for two function the notation means that for all large one has .

Theorem 1.

In the process the size of the memory at time is, with high probability, . Moreover, with high probability, the symmetric difference between the final content of the memory and the set is of size at most .

A result similar to Theorem 1 (without any quantitative estimates) has been proved independently by Friedgut and Kozma [7] using different ideas.

Our main result provides a more accurate description of the process as stated in the following theorems. To state the next theorem we let be the content of the list at time and let be the size of the list.

Theorem 2.

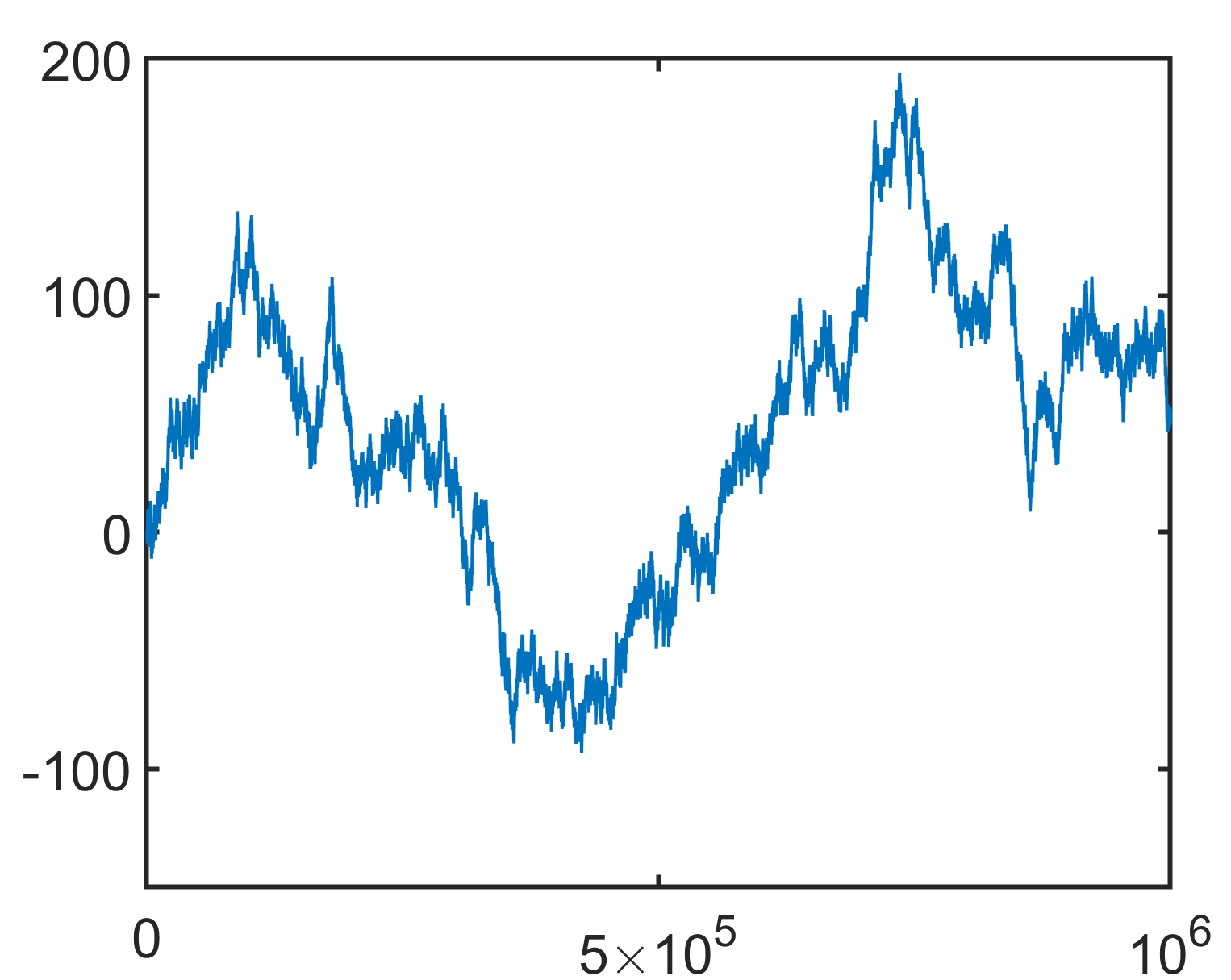

Let be a standard Brownian motion. We have that

Our next theorem describes the final set of points obtained in this process. In particular, it strengthens the estimate in Theorem 1 by removing the poly-logarithmic factor in the bound on the size of the symmetric difference between and the set where . The theorem also provides the limiting distribution of this difference. To state the theorem we define

Theorem 3.

As tends to infinity we have

where is a standard Brownian motion and is the maximum process. In particular, the evolution of the size of the symmetric difference is given by

| (1) |

where is a three dimensional Bessel process. It follows that the differences and the symmetric difference satisfy the following limit laws

| (2) |

where is a standard normal random variable and has the density

| (3) |

In fact, in the following theorem, we give a complete description of the evolution of the process in a neighbourhood around the critical point . For and we let be the set of elements in the list that are smaller than at time and let .

Theorem 4.

As tends to infinity we have

Remark 1.1.

The convergence in Theorems 2, 3 and 4 is a weak convergence in the relevant Skorohod spaces. It is equivalent to the following statements. In Theorem 2, we define the random function . Then, for all there exists a coupling of the sequence and a Brownian motion such that almost surely

In Theorem 4, we define . Then, for all , there exists a coupling such that almost surely

The convergence in Theorem 3 is similar.

The rest of the paper is organized as follows. In the next section we describe the proof of Theorem 1. It is based on subadditivity and martingale concentration applied to the natural Doob martingale associated with the process. The concentration part resembles the basic approach in [1]. In Section 3 we prove Theorem 2, Theorem 3 and Theorem 4. The main idea in the proofs is to consider the process

| (4) |

which we call “the branching martingale.” When is not empty, this process has a zero drift when , a negative drift when and a positive drift when . When is empty, can only increase in all cases. The intuition behind this magical formula comes from a certain multi-type branching process. In a multi-type branching process, there are individuals of different types. For each type , an offspring distribution on is given. In each step, every individual of type gives birth to a random number of individuals of each type according to the distribution . Recall that a single-type branching process is critical when the expected number of offspring is and in this case the size of the generation is a martingale. Similarly, for a multi-type branching process one defines the expectation matrix where is the expected number of children of type of an individual of type . The process is then critical when the maximal eigenvalue of is one and in this case the process

| (5) |

is a martingale where is the eigenvector of with eigenvalue and is the number of individuals of each type at time . For more background on multi-type branching processes see chapters V and VI in [3].

We think of our process as a multi-type branching process in which the type space is continuous. Given , we define a branching process with the type space . In this branching, the offspring distribution of an individual of type is given as follows. With probability , the individual has offspring, with probability it has one offspring of type that is uniformly distributed in and with probability it has two offspring, one of type and one of type that is uniformly distributed in .

We now explain the connection between this branching and our process. Suppose we start the process with a list such that the minimum of is , and we want to study the time until is removed from the list. We think of each point that is added to the list as an individual of type in the branching process and ignore points that fall to the right of . We claim that the number of individuals that were born before the extinction of the branching described above is exactly the time until is removed in the minimum process. Indeed, instead of exposing the branching in the usual way according to the generations, we expose the offspring of an individual when it becomes the current minimum of the list. When the element becomes the minimum, with probability it is removed and decreases by , with probability we remove from and replace it with a uniform element in and with probability we keep and add another element to that is uniformly distributed in .

The branching described above can be shown to be subcritical when , critical when and supercritical when , where . Now, in the critical case we look for a martingale of the form (5) which translates in the continuous case to the process

| (6) |

where is an eigenfunction of the expectation operator with eigenvalue . In order to find we let be the minimum of the list at time and write

| (7) |

The last expression is zero only when which leads to (4).

2 Proof of Theorem 1

For any integer and any let denote the set of numbers that are in and lie in after the first steps, that is, after have been inserted. Put . Therefore is the size of at the end of the process. Let denote the value just before enters it. Thus and .

We start with the following lemma showing that the expected value of the size of at the end is linear in .

Lemma 2.1.

For all we have

Proof: For each let be the indicator random variable whose value is if (and otherwise). Let be the indicator random variable with value if and . Note that is at most the number of , whose values are in . Indeed, whenever an element with leaves the memory set. The expected value of this number is , as and any other lies in with probability . Therefore the expectation of is at most . We claim that for every , the expected value of is at least . Indeed, if the expected value of is (and the expectation of is ). If , the expected value of is , and that of is . By linearity of expectation in this case the expected value of is . This proves the claim. Using again linearity of expectation we conclude that the expectation of is at least . Since the expectation of is at most it follows that the expectation of is at least . Note that the size of at the end is exactly , as it has size at the beginning, its size never decreases, and it increases by in step if and only if . The assertion of the lemma follows.

We also need the following simple deterministic statement.

Lemma 2.2.

Let be arbitrary finite disjoint subsets of . Let be the process above with the sequence starting with . Let denote the process with the same sequence , starting with . For and , let denote the set of numbers in that lie in after the first steps in the process . Similarly, let denote the set of numbers in that lie in after the first steps in the process . Then contains and moreover

Proof: We first describe the proof for . Apply induction on . The result is trivial for . Assuming it holds for consider step number in the two processes, when enters the memory . If is smaller than the minimum of both sets and then it joins the memory in both processes and the desired properties clearly hold. If it is larger than the minimum in then it is also larger than the minimum in , it joins the memory in both cases, and both minima leave. It is easy to check that the desired properties hold in this case too. Finally, if is smaller than the minimum in but larger than the minimum in then joins both memories and the minimum of leaves. In this case still contains , but the difference between their sizes decreases by . This also satisfies the desired properties, completing the proof of the induction step for . The statement for general follows from the statement for

The above lemma has two useful consequences which are stated and proved in what follows.

Corollary 2.3.

For each fixed the function is subadditive.

Proof: We have to show that for every , . Consider the process with the points . The process starts with the first steps, let be the content of the memory after these steps. We can now compare the process with the points starting with the empty set (which is identical to starting with ), and the process with the same points starting with . By Lemma 2.2, throughout the two processes, the number of elements in the process that lie in is always at most the number of elements in that lie in , plus . Taking expectations in both sides of this inequality implies the desired subadditivity.

Corollary 2.4.

For each and any integer , the random variable is -Lipschitz, that is, its value changes by at most if we change the value of a single . Therefore for any the probability that it deviates from its expectation by more than is at most

Proof: The effect that changing the value of to has on the content of after step is a change of to in , and possibly a removal of the minimum element of before step in one scenario and not in the other. In any case this means that one process can be converted to the other by adding an element to the memory and then by removing one or two elements from it. By Lemma 2.2 for each fixed choice of all other the first modification can only increase the value of , and can increase it by at most . The second modification can then only decrease this value, decreasing it by at most . This implies that the value changes by at most , that is, the function is -Lipschitz. The concentration inequality thus follows from Azuma’s Martingale Inequality, c.f. [4, Theorem 1.1].

Proof of Theorem 1: The symmetric difference of the sets and is of size at most two and therefore we may assume that is even throughout the proof of the theorem. We may also assume that is sufficiently large. Without any attempt to optimize the absolute constants, let be a real number so that the expected value of the number of elements in the memory that lie in at the end of the process with the first elements is . Since , by Lemma 2.1, and the function is continuous, there is such a value (provided .) We claim that the expected number of indices so that and the minimum in the memory satisfies , is smaller than . Indeed, it follows from Lemma 2.2 that the function is monotone increasing in . Thus, for every , By Corollary 2.4 (and using that ) this implies that for every fixed in this range the probability that is larger than is at most . Therefore, by linearity of expectation, the expected number of steps in which is smaller than , proving the claim.

By this claim, the expected number of steps so that and is, of course, also at most .

On the other hand, by the subadditivity established in Corollary 2.3, the expected value of is at most .

We next show that deviates from by To this end we compute the expectation of the random variable counting the number of indices for which and is being removed at a step in which the arriving element exceeds . By definition where denotes the indicator random variable whose value is if and is being removed at a step in which the arriving element is larger than .

For all and , let be the event that is removed by . Conditioning on and on the event , the random variable is uniformly distributed on the interval and therefore on the event we have

| (8) |

Thus, we obtain

The expected number of for which is not removed is at most , as all these elements belong to . Thus,

where in the third equality we used that is uniform in .

On the other hand, the expected number of steps in which the element arrived and caused the removal of an element for some is . Indeed, the expected number of with is , and almost each such removes an element , where the expected number of such that exceed is . In some of these steps the removed element may be for some , but the expected number of such indices is at most the expectation of which is only .

It follows that

Dividing by (noting that is bounded away from ) we conclude that

Since the expected number of elements for that fall in the interval

is we conclude that the expected number of steps satisfying that leave the memory during the process is . In addition, since it follows that the expected number of steps so that and stays in the memory at the end of the process is also at most .

By splitting the set of all steps into dyadic intervals we conclude that the expected size of the symmetric difference between the final content of the memory and the set of all elements larger than is . This clearly also implies that the expected value of deviates from by . Finally note that by Corollary 2.4, for any positive the probability that either or the above mentioned symmetric difference deviate from their expectations by more than is at most .

3 The branching martingale

In this section we prove Theorem 2, Theorem 3 and Theorem 4. The main idea in the proof is the following martingale which we call the branching martingale.

Let and let be the critical point. Recall that is the set of elements in the list at time that are smaller than . Define the processes

and let . The following claim is the fundamental reason to consider these processes.

Proposition 3.1.

On the event we have that

and therefore is a martingale. Moreover, is roughly minus the minimum process of . More precisely, we have almost surely

| (9) |

Remark 3.2.

Proposition 3.1 essentially explains all the results in this paper. See Theorem 4 for example. Since is a martingale it is a Brownian motion in the limit and is minus the minimum process of this Brownian motion. Thus, , which is closely related to the number of elements in the list that are smaller than is the difference between the Brownian motion and its minimum.

Proof of Proposition 3.1.

On the event we have

| (10) |

where is the minimum of the list at time and is the uniform variable that arrives at time in the process. Thus, on the event we have

It follows that is a martingale. Indeed on the event we have

Whereas on the event we have that .

We next turn to prove the second statement of the proposition. For all we have that

and therefore . Next, if then

If , we let be the last time before for which (assuming it exists). We have that

Finally suppose there is no such for which . Then for all , and hence for all , and . This finishes the proof of the proposition. ∎

In the following section we give some rough bounds on the process that hold with very high probability. We will later use these a priori bounds in order to obtain more precise estimates and in order to prove the main theorems.

3.1 First control of the process

Definition 3.1.

We say that an event holds with very high probability (WVHP) if there are absolute constants such that .

Let and recall that . Define the events

and

Lemma 3.3.

The event holds WVHP.

Proof.

Let and note that . Also note that if and , then for some . Thus, we have that

| (11) |

where the events are defined by

Next, we show that each of these events has a negligible probability. To this end, let and define

By Proposition 3.1, the process is a martingale. Moreover, since , we have that

Thus, using that has bounded increments and that , we get from Azuma’s inequality that

where the last inequality clearly holds both when and when . It follows from (11) that

In order to obtain this inequality simultaneously for all we first use a union bound to get it simultaneously for all and then argue that does not change by much WVHP when changes by . The details are omitted. It follows that .

We turn to bound the probability of . To this end, let and . The event

holds WVHP by the first part of the proof and by Chernoff’s inequality for the Binomial random variable . On this event we have that and therefore, using the discretization argument as above we obtain . ∎

The following lemma implies in particular that, with very high probability, the minimum is rarely above for any . For the statement of the lemma, define the random variable

and the events

and

Lemma 3.4.

The event holds WVHP.

Proof.

We claim that for any the process

| (12) |

is a martingale. Indeed, if then and therefore by Proposition 3.1 we have . Otherwise, using that we have

Moreover, when the martingale has bounded increments and therefore by Azuma’s inequality we have WVHP

| (13) |

Now, when , on the event we have

Substituting this back into (13) we obtain that WVHP

| (14) |

This shows that holds WVHP using the discretization trick. Indeed, WVHP each is the minimum at most times and therefore WVHP won’t change by more than when changes by .

We turn to bound the probability of . To this end, let and let . Using (13) and the fact that we obtain that WVHP for all

| (15) |

where the last inequality follows from the definition of . Thus, WVHP, for all we have that and therefore

where the second to last inequality holds WVHP by (14) with . Of course the same bound holds when (by slightly changing ) and therefore, using the discretization trick we get that holds WVHP. ∎

3.2 Proof of Theorem 4

The main step toward proving the theorem is the following proposition that determines the scaling limit of when is in a small neighborhood around .

Proposition 3.5.

We have that

Proof of Theorem 4.

By Proposition 3.5 and (9) we have that the joint distribution

converges to . Thus, using that we obtain

| (16) |

For the proof of Theorem 4 it suffices to show that in the equation* above can be replaced by . Intuitively, it follows as most of the terms in the sum in the definition of are close to . Formally, let and . By Lemma 3.3 we have WVHP that for all and

| (17) |

This shows that can be replaced by in equation (16) and therefore finishes the proof of the theorem. ∎

We turn to prove Proposition 3.5. The proposition follows immediately from the following two propositions.

Proposition 3.6.

We have that

Proposition 3.7.

We have WVHP for all with that

We start by proving Proposition 3.6. The main tool we use is the following martingale functional central limit theorem [5, Theorem 8.2.8] .

Theorem 3.8.

Let be a martingale with bounded increments. Recall that the predictable quadratic variation of is given by

Suppose that for all we have that

where the convergence is in probability. Then

where is a standard Brownian motion.

In order to use Theorem 3.8 we need to estimate the predictable quadratic variation of the martingale . To this end, we need the following lemma. Let us note that the exponent in the lemma is not tight. However, it suffices for the proof of the main theorems.

Lemma 3.9.

For any bounded function that is differentiable on we have

where the constants may depend on the function .

Proof.

Throughout the proof we let the constants and depend on the function . For the proof of the lemma we split the interval into small intervals where . For all we let

| (18) |

We have that

| (19) |

Next, we estimate the size of . Using Lemma 3.4 we have WVHP

where in the last equality we used the Taylor expansion of the function around . Thus, using that and for all we obtain

Substituting the last estimate back into (19) we obtain

where in the last equality we used Lemma 3.4 once again. ∎

We can now prove Proposition 3.6.

Proof of Proposition 3.6.

In order to use Theorem 3.8 we compute the predictable quadratic variation of . Clearly, on the event we have that . On the event we have

Thus, the predictable quadratic variation of is given by

| (20) |

where

Next, we have that

and therefore, by Lemma 3.9 we have WVHP that . It follows that for all we have in probability and therefore by Theorem 3.8 we have

as needed. ∎

We turn to prove Proposition 3.7. The main tool we use is the following martingale concentration result due to Freedman [6]. See also [4, Theorem 1.2].

Theorem 3.10 (Freedman’s inequality).

Let be a martingale with increments bounded by and let

be the predictable quadratic variation. Then,

We can now prove Proposition 3.7.

Proof of Proposition 3.7.

Let such that . It follows from Proposition 3.1 that the process

is a martingale. Next, consider the difference martingale and note that

Thus, by Lemma 3.4 we have WVHP

We obtain using Theorem 3.10 that

Finally, using the expansion we get that WVHP

This finishes the proof of the proposition using the discretization trick. ∎

3.3 Proof of Theorem 3

The main statement in Theorem 3 is the scaling limit result

| (21) |

We start by proving (21) and then briefly explain how the other statements in Theorem 3 follow.

Next, recall that and therefore, by (17), WVHP

| (24) |

The convergence in (21) clearly follows from (22), (23), (24) and the following lemma

Lemma 3.11.

We have WVHP

Proof.

We start with the first term. Note that and therefore

It follows that is a martingale. The predictable quadratic variation of is given by

where the last inequality holds WVHP by Lemma 3.4. Thus, using the same arguments as in the proof of Proposition 3.7 and by Theorem 3.10 we have that WVHP.

We turn to bound the second term in the right hand side of (25). We have

where in the second inequality we separated the sum to and and where the last inequality holds WVHP by Lemma 3.4.

The last term in the right hand side of (25) is bounded by WVHP using the same arguments as in the first term. Indeed, is clearly a martingale and its quadratic variation is bounded by WVHP. ∎

We turn to prove the other results in Theorem 3. By (21) we have that

| (26) |

Recall that a three dimensional Bessel process, , is the distance of a three dimensional Brownian motion from the origin. In [11] Pitman proved that

and therefore the convergence in (1) follows from (26). Next, using (21) once again we get that

| (27) |

It is well known (see [9, Theorem 2.34 and Theorem 2.21]) that . Substituting these identities into (27) finishes the proof of the first two limit laws in (2).

It remains to prove the last limit law in (2). By (1) with , it suffices to compute the density of . This is a straightforward computation as is the norm of a three dimensional normal random variable. We omit the details of the computation which lead to the density given in (3).

We note that one can compute the density of without using the result of Pitman [11]. The following elementary argument was given to us by Iosif Pinelis in a Mathoverflow answer [10]. By the reflection principle for all

| (28) |

Equation (28) gives the joint distribution of and . From here it is straightforward to compute the joint density of and the density of . Once again, the details of the computation are omitted.

3.4 Proof of Theorem 2

Lemma 3.12.

We have that

Lemma 3.13.

We have WVHP

We start by proving Lemma 3.12.

Proof of Lemma 3.12.

On the event we have that

Thus, expanding the terms in we get that on this event

Thus, we can write where is given by the right hand side of the last equation when . Moreover, we have that

Thus, by Lemma 3.9 we have with very high probability that

This finishes the proof of the lemma using Theorem 3.8. ∎

We turn to prove Lemma 3.13.

Proof of Lemma 3.13.

Recall that and let . By Proposition 3.1 the process

is a martingale. It is straightforward to check that

where

Next, we bound each of the WVHP. Any uniform point that arrived before time such that can be mapped to the time in which it was removed. Thus, using also Lemma 3.4 we obtain that WVHP

This shows that WVHP .

Using the same arguments as in (17) we get that .

By Lemma 3.4 we have WVHP .

In order to bound we use the same arguments as in the proof of Proposition 3.7. Define the martingale . We have that

Thus, using Theorem 3.10 we obtain that WVHP .

It follows from a second order Taylor expansion of around that .

Lastly, by Lemma 3.4 we have WVHP .

∎

Acknowledgment: We thank Ehud Friedgut and Misha Tsodyks for helpful comments, we thank Ron Peled, Sahar Diskin and Jonathan Zung for fruitful discussions and we thank Iosif Pinelis for proving in [10] that the density function of is given by .

References

- [1] N. Alon, C. Defant and N. Kravitz, The runsort permuton, arXiv:2106.14762, 2021.

- [2] N. Alon and J. H. Spencer, The Probabilistic Method, Fourth Edition, Wiley, 2016, xiv+375 pp.

- [3] K. B. Athreya and P. E. Ney, Branching processes, Courier Corporation, 2004.

- [4] B. Bercu and A. Touati, Exponential inequalities for self-normalized martingales with applications, The Annals of Applied Probability, 18(5), 1848–1869, 2008.

- [5] R. Durrett, Probability: theory and examples, volume 49, Cambridge university press, 2019.

- [6] D. A. Freedman, On tail probabilities for martingales, The Annals of Probability, 3(1), 100–118, 1975.

- [7] E. Friedgut and G. Kozma, Private communication.

- [8] A. Georgiou, M. Katkov and M. Tsodyks, Retroactive interference model of forgetting, Journal of Mathematical Neuroscience (2021) 11:4.

- [9] P. Mörters and Y. Peres, Brownian motion, volume 30, Cambridge University Press, 2010.

- [10] I. Pinelis, What is the distribution of where is the maximum process of the Brownian motion . MathOverflow question 409729.

- [11] J. W. Pitman, One-dimensional Brownian motion and the three-dimensional Bessel process, Advances in Applied Probability, 7(3), 511–526, 1975.

- [12] M. Tsodyks, Private Communication.