On a Bernoulli Autoregression Framework for Link Discovery and Prediction

Abstract

We present a dynamic prediction framework for binary sequences that is based on a Bernoulli generalization of the auto-regressive process. Our approach lends itself easily to variants of the standard link prediction problem for a sequence of time dependent networks. Focusing on this dynamic network link prediction/recommendation task, we propose a novel problem that exploits additional information via a much larger sequence of auxiliary networks and has important real-world relevance. To allow discovery of links that do not exist in the available data, our model estimation framework introduces a regularization term that presents a trade-off between the conventional link prediction and this discovery task. In contrast to existing work our stochastic gradient based estimation approach is highly efficient and can scale to networks with millions of nodes. We show extensive empirical results on both actual product-usage based time dependent networks and also present results on a Reddit based data set of time dependent sentiment sequences.

1 Introduction

Predicting and recommending links in a future network is a problem of significant interest in many communities, with applications ranging from recommending friends Aiello et al., (2012) and patent partners Wu et al., (2013) to modeling disease propagation Myers and Leskovec, (2010) and detecting abnormal communication Huang and Lin, (2009). From a higher perspective, link prediction is not markedly different from the problem of matrix completion when the network is represented as an adjacency matrix. However, in many cases past link information is predictive of the future. In one such application, Richard et al., (2010) proposed an approach for time dependent graphs where past adjacency matrices and a forecast of a network feature sequence are used to estimate links at a future time. This is a dynamic extension of the static link prediction problem Liben-Nowell and Kleinberg, (2007), which has its origins in using network properties of a static snapshot to predict links. As a simple example, consider the problem of predicting communication between two users over a network, where past information is clearly predictive.

In this paper we discuss one variant of the dynamic link prediction problem and, in contrast to Richard et al., (2010), present a highly scalable model with an efficient estimation approach. The underlying idea behind our approach is to exploit a sequence of auxiliary networks with feature data to inform links in the network sequence of interest. This is a fairly common scenario in a large corporation with multiple products and services, where it can be immensely useful to use network information gathered from a product A to inform usage (via link prediction) on a product B. In an online retail setting such as Amazon (example dataset: https://www.kaggle.com/retailrocket/ecommerce-dataset), a variety of user-item interactions are possible, including “view”, “add-to-cart” and “purchase”. One could generate networks at a user-item level for each type of event, and leverage the “view” network or the “add-to-cart” network to infer connections on the “purchase” network, i.e., when a user eventually buys an item. In such scenarios we have two network sequences: a main sequence that is expected to be sparse, and a relatively dense auxiliary sequence with additional feature information that is predictive of a link in the main network. The introduction of the auxiliary sequence serves two purposes: it provides candidate links to predict from, and it provides a feature set that is possibly predictive of these links. However, the model we propose is general and holds even for a single network sequence with feature information. In the standard matrix completion framework, the observed entries are directly modeled with low rank constraints on the matrix. Instead, we model link probabilities using a Bernoulli parametrization, incorporate an auto-regressive structure to allow for dependence on past data, and also include an additional dependence on logit transformed feature data. The equivalent of a low rank constraint in this framework is a relatively under-parametrized model, as we shall see in Section 3.

2 Related Work

Existing work on link prediction has primarily focused on static networks, with methods ranging from matrix factorization Koren, (2008) and node neighborhood measures Sarkar et al., (2011); Liben-Nowell and Kleinberg, (2007) to network diffusion Myers and Leskovec, (2010). For an extensive survey see Wang et al., (2015). In contrast, (dynamic) link prediction in the presence of a sequence of networks is relatively unexplored. Vu et al., (2011) propose a longitudinal network evolution approach that models edges over time as a multivariate counting process, where the intensity function incorporates edge level features (network derived) using either the multiplicative Cox or additive Aalen form. Our setting is different in that we have two sequences of networks that we can exploit, and the design of our estimation method enables us to discover new links, which is not possible in their approach. Moreover, their estimation methods require computation of large weight matrices and it is not clear if this can scale to networks with millions of nodes. Additionally, many real world networks tend to have low rank structures due to similar local node characteristics, and explicit introduction of these constraints is a desirable feature. Closer in structure to our model is the nonparametric approach described in Sarkar et al., (2012), in which the edge probabilities are assumed to be Bernoulli distributed with a link function connecting the edge/node probabilities to the node/edge level features. Besides the difference in the problem setting, Sarkar et al., (2012) estimate the link function nonparametrically with a kernel weighted local neighborhood approach (in time and feature space). This requires a search over edge features that are similar to the edge being modeled and can be quite expensive; even with the use of fast search techniques it is unlikely to scale to extremely large graphs.

In the matrix completion line of work, Richard et al., (2010) propose a graph feature tracking (GFT) framework for dynamic networks where the entire network at a future step is estimated by solving an appropriately regularized optimization problem. The optimization reflects a trade-off between minimizing the error between the estimated and the last adjacency matrix, a low rank constraint, and a term involving the features. This term attempts to minimize the gap between the mapped features for the estimated matrix and the unknown adjacency matrix at the future time. The main intuition is that the evolving features are indicative of links in the sequence of networks. This combination of optimization terms allows the method to uncover links that are not previously observed. See Section 4 for more details of GFT as considered in the simulation study. In this work we also introduce a feature regularization term to allow for link discovery, but unlike Richard et al., (2010) our approach is highly scalable. While their method involves an expensive SVD operation at every iteration, we propose a stochastic gradient based algorithm in which each iteration involves only simple operations. A later work, Richard et al., (2014), extended the GFT framework to allow for features that evolve as a vector auto-regressive process, and as such is not computationally feasible for very large networks.

Perhaps most importantly, our problem setup is more general and applies to arbitrary binary sequences with mapped features. In Section 5.2 we apply our approach to a hyperlink network from Reddit and show its improved performance over a baseline approach. The Reddit hyperlink network represents a single network sequence, and the applicability of our approach demonstrates its potential for wide use in modeling time-dependent binary sequences. The rest of the work is organized as follows. In Section 3 we propose the BAR model, with its estimation problem in Section 3.1. We compare the BAR model with baselines (GFT, logistic) in a simulation study in Section 4. In Section 5 we apply the proposed model to two real datasets, one from actual products A and B (to maintain anonymity we do not reveal the actual product names) and the other from Reddit, to show its superior performance in link prediction and discovery. Finally, we conclude the work with a discussion in Section 6.

3 The BAR Model

Let the adjacency matrices and be the sequence of main and auxiliary networks for . Corresponding to the edges in the auxiliary sequence we also have a sequence of feature tensors such that, for all we have . We assume that an edge in a main network sequence exists at least once in the auxiliary sequence and that the number of nodes ( and ) is fixed over time. This is not an issue in practice since we can always set to be the largest values over and assume all rows at other times.

There are two important aspects of our model definition. First, we believe that the feature sequence and the history of connections are predictive of a link in the future. As an example, in the context of an email network this could imply that an increased exchange between two nodes is possibly indicative of a future calendar event. Second, the probability of link formation evolves with time and in that sense depends on the entire sequence of edge level features. For any link we have

| (1) |

where controls the trade-off between the dependence on the past and the current feature vector for a link . The model expresses our belief that given the features and link history, a connection at time is independent (but non-homogeneous) of all other links and that this probability evolves as an auto-regressive model with weights on probability of connection at time and a logit transformed probability induced by the features. We refer to the proposed model as Bernoulli Auto-Regressive (BAR). Note that the recursion of in Model (1) allows us to express the probability of connection at a given time as a weighted sum of past probabilities. This weighted sum along with a non-linear dependence on the features provides flexibility in modeling arbitrary sequences with observation level features. This is more general than modeling the probabilities as a logit transform of lagged feature vectors. In the choice of we can also use different link functions to extend our model to sequences of discrete outcomes of categories where (e.g., by using the softmax function), providing that is valued between 0 and 1 so that is a valid Bernoulli parameter.
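As an illustration of the recursion described above, the following minimal sketch computes the link probability of a single link over time. The names `theta` (weight on the previous probability), `beta` (coefficient vector) and `p0` (initial probability) are hypothetical, and the logistic form of the feature term is an assumption made for this sketch; it is one reading of the text, not necessarily the exact form of Model (1).

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function; maps a real-valued score into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def bar_probabilities(features, beta, theta, p0):
    """Run the assumed BAR recursion for one link.

    features: list of per-period feature vectors (lists of floats)
    beta:     coefficient vector (hypothetical name)
    theta:    weight in [0, 1] on the previous probability (hypothetical name)
    p0:       initial link probability in [0, 1]
    """
    probs = []
    p_prev = p0
    for x in features:
        score = sum(b * v for b, v in zip(beta, x))
        # Convex combination of the past probability and the
        # feature-induced probability, so the result stays in [0, 1].
        p_t = theta * p_prev + (1.0 - theta) * sigmoid(score)
        probs.append(p_t)
        p_prev = p_t
    return probs


example_features = [[1.0, 0.0], [0.5, 0.5], [0.0, 2.0]]
probs = bar_probabilities(example_features, beta=[0.3, -0.2], theta=0.6, p0=0.1)
```

Because each step is a convex combination of two values in the unit interval, every probability produced by this sketch is a valid Bernoulli parameter.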

For certain applications BAR may be under-parametrized. We can extend the model by having different coefficient vectors in the link probability for different sets of connections. These sets can be determined by using clustering on the features as a pre-processing step. More precisely we can have for

| (4) |

where is the cluster membership for link at time . We assume only one cluster for edge membership for the rest of the work.
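The clustered extension can be sketched as a lookup of a cluster-specific coefficient vector before the feature term is evaluated. The dictionary `betas`, the integer cluster labels and the logistic link are assumptions for illustration only; how the clusters are obtained (e.g., by clustering the features in a pre-processing step) is left outside the sketch.

```python
import math


def clustered_feature_probability(x, cluster, betas):
    """Feature-induced probability using the coefficient vector of the link's cluster.

    x:       feature vector of the link at the current time
    cluster: cluster label of the link at the current time (hypothetical encoding)
    betas:   mapping from cluster label to coefficient vector (hypothetical name)
    """
    beta = betas[cluster]
    score = sum(b * v for b, v in zip(beta, x))
    return 1.0 / (1.0 + math.exp(-score))


# Two clusters with opposite-signed coefficients on the first feature.
betas = {0: [1.0, 0.0], 1: [-1.0, 0.0]}
p_cluster0 = clustered_feature_probability([2.0, 0.5], 0, betas)
p_cluster1 = clustered_feature_probability([2.0, 0.5], 1, betas)
```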

The decaying contribution of historical features is more obvious once we express the recursion of in Model (1) equivalently as

| (5) |

The longer the time has elapsed since time , the smaller the weight () is applied on the probability and its underlying features from time . The diminishing contribution from features over elapsed time is a valid assumption in many applications (e.g., attribution model). The choice of governs the decaying rate: larger makes more dependent on historical probabilities, whereas smaller magnifies the dependence on current features. To illustrate the relation between and , we consider a simplified scenario: we let be 0.2 and be 0.8 for all . In Figure 1 we show how evolves with at various values. At extremes, takes constant value of (when ) or (when ). For , the larger is, the more slowly the contribution of historical features to the decays and the more slowly deviates from its initial assignment (0.2) towards the probability derived from current features (0.8).
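The simplified scenario above (initial probability 0.2, feature-induced probability 0.8 at every step) can be reproduced numerically. The sketch below assumes the recursion has the convex-combination form with a hypothetical weight `theta` on the past; under that assumption the unrolled expression reduces to a geometric interpolation between the two values.

```python
def bar_recursion(p0, g, theta, steps):
    """Iterate p_t = theta * p_{t-1} + (1 - theta) * g for a constant g."""
    p = p0
    path = []
    for _ in range(steps):
        p = theta * p + (1.0 - theta) * g
        path.append(p)
    return path


def bar_unrolled(p0, g, theta, t):
    """Unrolled form for constant g: the weight on p0 decays as theta ** t."""
    return theta ** t * p0 + (1.0 - theta ** t) * g


# One trajectory per value of the (hypothetical) weight theta.
paths = {theta: bar_recursion(0.2, 0.8, theta, steps=10)
         for theta in (0.0, 0.3, 0.6, 0.9, 1.0)}
```

With `theta = 0.0` the path sits at the feature-induced value from the first step, with `theta = 1.0` it never leaves the initial value, and intermediate values move from 0.2 towards 0.8 more slowly as `theta` grows.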

3.1 Estimation

Model estimation is performed by maximizing the log-likelihood function for Model (1) since for each we can obtain a closed form expression for the probability of connection at time using the recursive formula (5). However, as we mentioned before, the main network can be very sparse and so we cannot hope to learn much from a small number of observations. To cast our net over a wider set of connections, we add a regularization term to the negative log-likelihood and propose to solve the following minimization problem

| (6) |

where are aggregated features at sender-level, i.e., encodes the th feature aggregated over recipients of sender .

The regularization term provides us a way to minimize the deviation of first order statistics (for the features) between the network probabilities of interest and the observed auxiliary network. In other words, we want network aggregated features to be similar for the main and auxiliary sequences. The regularization and the probabilities are precisely what allow us to learn from auxiliary data, and Problem (6) represents a trade-off between the tasks of link prediction and link discovery. If we have a lot more observed data in the main network sequence and it is of interest to predict the prevalence of existing connections in the future, we should give more weight to the log-likelihood term (choose a small regularization weight); if instead our goal is to discover newer connections, we should focus on minimizing the regularizer term (with a larger regularization weight). If there is only one network sequence with feature information, we simply take to accommodate missing and adjust ’s dependence onto (see Section 5.2 for an example). These adjustments make our model generalizable to a single network sequence with relevant features.
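To make the trade-off concrete, the sketch below evaluates an objective of the kind described above on small dense arrays: a Bernoulli negative log-likelihood plus a weighted penalty on the gap between probability-weighted features and sender-level aggregated features. The squared-error form of the penalty, the dense array layout and the names `lam`, `P`, `X` and `Z` are all assumptions made for illustration; they are not taken from Problem (6).

```python
import numpy as np


def bar_objective(A, P, X, Z, lam, eps=1e-12):
    """Negative log-likelihood plus an assumed squared-error feature regularizer.

    A:   (T, n, m) binary adjacency of the main network
    P:   (T, n, m) link probabilities from the model
    X:   (T, n, m, d) edge-level features
    Z:   (T, n, d) features aggregated over recipients for each sender
    lam: non-negative regularization weight (hypothetical name)
    """
    P = np.clip(P, eps, 1.0 - eps)  # guard the logarithms
    nll = -np.sum(A * np.log(P) + (1.0 - A) * np.log(1.0 - P))
    # First-order statistics implied by the model: sum_j p_ij * x_ijk.
    model_stats = np.einsum("tij,tijk->tik", P, X)
    reg = np.sum((model_stats - Z) ** 2)
    return nll + lam * reg


rng = np.random.default_rng(0)
T, n, m, d = 2, 3, 4, 2
X = rng.normal(size=(T, n, m, d))
B = (rng.random((T, n, m)) < 0.5).astype(float)   # toy auxiliary network
A = B * (rng.random((T, n, m)) < 0.5)             # main links are a subset of B
Z = np.einsum("tij,tijk->tik", B, X)              # sender-level aggregates from B
P = np.full((T, n, m), 0.3)
value_prediction = bar_objective(A, P, X, Z, lam=0.0)
value_discovery = bar_objective(A, P, X, Z, lam=1.0)
```

Setting `lam` to zero recovers plain likelihood-based link prediction, while larger values pull the probabilities towards the auxiliary network's aggregated features.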

4 Simulation Study

We simulate data from the generative model (1) and perform several experiments to gain more insight into its behavior and performance compared to competing models. Moreover, since the network sequences and edge features are highly likely to be statistically dependent, we employ settings that are indicative of real-world applications. To generate the first network in the auxiliary sequence we sample Erdős-Rényi graphs with different combinations of and edge generation probabilities . For subsequent time steps () we vary

| (9) |

For every edge we sample the features such that each element is distributed, where is a simple random walk. The coefficient vector is normalized to have unit Euclidean norm. Finally, the main network sequence is generated by Bernoulli trials with probability computed using the recursion in (1), where is initialized as the maximum likelihood estimate of edge probability.
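A rough sketch of how such an auxiliary sequence could be generated is given below. The first network is an Erdős-Rényi style random bipartite adjacency; later networks delete existing edges and add new ones at random. The parameter names `p_edge`, `q_delete` and `q_add`, and the exact perturbation rule, are assumptions for illustration and should not be read as the rule in (9).

```python
import numpy as np


def simulate_aux_sequence(n, m, T, p_edge, q_delete, q_add, seed=0):
    """Generate T binary (n, m) adjacency matrices with temporal dependence.

    p_edge:   edge probability of the first network
    q_delete: probability that an existing edge is removed at the next step
    q_add:    probability that a missing edge appears at the next step
    """
    rng = np.random.default_rng(seed)
    nets = [(rng.random((n, m)) < p_edge).astype(int)]
    for _ in range(1, T):
        prev = nets[-1]
        keep = rng.random((n, m)) >= q_delete  # survival of existing edges
        add = rng.random((n, m)) < q_add       # appearance of new edges
        nets.append(np.where(prev == 1, keep, add).astype(int))
    return np.stack(nets)


aux = simulate_aux_sequence(n=50, m=50, T=15, p_edge=0.05, q_delete=0.1, q_add=0.01)
```

In this sketch a larger `q_delete` makes consecutive networks less similar, which mirrors the varying network dependence task in spirit.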

We compare the BAR model with two competing models. The first model is graph feature tracking (GFT) from Richard et al., (2010), which incorporates a feature prediction and regularization procedure for estimating . Specifically, Richard et al., (2010) define a matrix of features that can capture the dynamics of , and project with a linear feature map . With historical feature maps, Richard et al., (2010) propose using ridge regression to estimate to substitute . In the directed graph setting, we let the features where and are leading singular vectors and singular values from the SVD of , respectively. We solve Problem (10) to get a GFT estimator for :

| (10) |

where is a nuclear norm for inducing low rankness in . Since the GFT estimator is not guaranteed to have its values between 0 and 1, we threshold any value of outside to its closer boundary. In addition to GFT, we compare the BAR model to a logistic model with features being averaged historical features up to the current time.

| (11) |

where averaged features across historical time is defined as follows:

| (12) |

All three models under the comparison have access to historical data though they leverage the data differently.

| Task | Parameter | Node Degree | BAR (AUC ROC) | GFT (AUC ROC) | Logistic (AUC ROC) |
|---|---|---|---|---|---|
| Standard | | 1.8 | 0.972 | 0.936 | 0.505 |
| | | 17.6 | 0.971 | 0.930 | 0.506 |
| Varying Network Dependence | | 17.6 | 0.970 | 0.929 | 0.508 |
| | | 17.1 | 0.967 | 0.926 | 0.527 |
| | | 13.3 | 0.941 | 0.893 | 0.648 |
| | | 6.7 | 0.901 | 0.799 | 0.725 |
| | | 3.0 | 0.855 | 0.700 | 0.670 |

The comparisons are made over two tasks: a standard setting where networks of different densities are considered, and a setting with varying network dependence for which adjacent network variation is gradually increased. For each scenario, we fix and to 10 thousand and to , but vary and over a range of values. We include all simulation settings in Table 1. For the task with varying network dependence, we gradually increase but fix all other parameters. Larger increases the probability of deleting an existing edge, injecting more variability into networks over time. By varying these parameters we highlight the advantages of our approach over a more traditional matrix completion framework (e.g., GFT from Richard et al., (2010)) and also gain insight into the workings of our method. Under each parameter setting, we generate 10 data samples and fit each model to the samples. We tune each model with training data from the first 14 periods and test on the last period. We report the AUC ROC averaged over 10 repetitions for each model in Table 1. On all tasks BAR outperforms GFT and the logistic model by achieving higher AUC ROC. When the network becomes more varied and more sparse with increasing , the challenge increases for all models; BAR still consistently does better than the other two methods under this scenario.
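For reference, the evaluation metric can be sketched with the rank-based definition of AUC ROC: the probability that a randomly chosen positive link is scored above a randomly chosen negative one, with ties counted as one half. This quadratic-time version is only meant to make the metric explicit; the helper names are hypothetical and a library routine would be used at scale.

```python
def auc_roc(labels, scores):
    """AUC ROC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > q) + 0.5 * (p == q) for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


def mean_auc(repetitions):
    """Average AUC ROC over repeated (labels, scores) samples."""
    values = [auc_roc(labels, scores) for labels, scores in repetitions]
    return sum(values) / len(values)


perfect = auc_roc([1, 1, 0, 0], [0.9, 0.8, 0.2, 0.1])
reversed_order = auc_roc([1, 1, 0, 0], [0.1, 0.2, 0.8, 0.9])
uninformative = auc_roc([1, 0, 1, 0], [0.5, 0.5, 0.5, 0.5])
```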

5 Experiments

We evaluate the performance of the BAR model in two real-world experiments. The first experiment uses product B as an auxiliary network to predict and discover connections on product A (the main network). The superior performance of the BAR model demonstrates the connections between the two underlying products. Moreover, the BAR model can fully leverage such connections to infer links on the product of interest (A). Our second experiment focuses on sentiment classification with a Reddit hyperlink network. We construct temporal network sequences for hyperlinks and use the BAR model (along with the baselines) to classify sentiment for the Reddit posts. We show that the BAR model performs at least as well as the logistic baselines even when minimal time dependence is present in the network sequence.

5.1 Link Prediction and Discovery with Real Products

Products from large corporations often provide a connected ecosystem for users where the modality of connection is defined by a specific product or feature. For example, there could be a messaging network and a social network. The different networks within such companies can have varying levels of connectivity. In our case product B’s network is much bigger and denser than A’s. Such an application is well aligned with the framework we present here.

In the experiment we let product A’s graph correspond to the main network and product B’s graph to the auxiliary network. The underlying intuition is that a network defining activity on product B can be indicative of users who might be interested in the feature provided by A. To prepare data, we extract directed links among users from A and B at the weekly level. The network data covers a period of 32 weeks, where the last week is reserved as a test set. We downsample B’s network for computational reasons and use 15 weekly edge-level features corresponding only to activity. Note that we are unable to detail the exact data and size descriptions due to privacy concerns. Finally, we tune the BAR model over a grid of values between 0 and 1. For comparison, we use the same logistic model described in the simulation study in Section 4, which accounts for time dependence on historical features. Since GFT does not scale to large networks, we are unable to make a comparison to it. We evaluate the model performance on the hold-out test set.

We have two tasks at hand: link prediction, which aims at accurately predicting recurrent links, and link discovery, which targets new links that never existed before. We split the test set to properly evaluate the performance on the two tasks. The test set is made up of A’s links from the last week (i.e., the set of ones) and pairs of users from A or B that did not connect on A in that week (i.e., the set of zeros). The test set is highly imbalanced: the set of zeros is larger than the set of ones by orders of magnitude. From a practical standpoint, a predicted link on A is more likely to hold if one of the two users already uses A. For link discovery, such an edge prediction can be directly translated into a recommendation setting. Hence, restricting to zero entries with exactly one user being an existing A user would help improve prediction accuracy for any model, and alleviate the imbalance in the test set. As is shown in the left panel of Figure 2, we partition the set of ones into links that existed in training (indexed as ) and those that never existed before (indexed as ). The set is suitable for gauging model performance on link prediction, whereas the set allows us to assess the link discovery task. In the right panel of Figure 2, we partition the set of zeros into four segments according to the memberships of the sender and receiver: and . We refer readers to Figure 2 for details of the partition.
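The partition of the test set can be sketched with plain set operations. Links are represented as hypothetical `(sender, receiver)` tuples; the helper names are illustrative only, and the four-way split of the zeros by sender and receiver membership is reduced here to the single "exactly one existing user" filter mentioned above.

```python
def split_ones(test_links, training_links):
    """Split test links into recurrent ones (seen in training) and new ones."""
    seen = set(training_links)
    recurrent = [e for e in test_links if e in seen]
    new = [e for e in test_links if e not in seen]
    return recurrent, new


def exactly_one_existing_user(pair, existing_users):
    """True when exactly one endpoint of the pair already uses the main product."""
    sender, receiver = pair
    return (sender in existing_users) != (receiver in existing_users)


training_links = [("u1", "u2"), ("u2", "u3")]
test_links = [("u1", "u2"), ("u3", "u4")]
recurrent, new = split_ones(test_links, training_links)

existing_users = {"u1", "u2", "u3"}
candidate_zeros = [("u1", "u9"), ("u8", "u9"), ("u1", "u3")]
kept_zeros = [p for p in candidate_zeros if exactly_one_existing_user(p, existing_users)]
```

The recurrent links feed the link prediction evaluation, the new links feed the link discovery evaluation, and the filtered zeros serve as negatives.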

| Task | Set of Ones | Set of Zeros | BAR (AUC ROC) | Logistic (AUC ROC) |
|---|---|---|---|---|
| Link Prediction | | | 0.98 | 0.60 |
| Link Discovery | | | 0.67 | 0.63 |

Figure 3: Recall among top N predictions for link prediction (left panel) and for link discovery (right panel).

In general, link discovery is more challenging than link prediction, since performance on the link discovery task is difficult to measure in a real setting, specifically on offline data. This is because the generative mechanism of new links is not known and our model assumption bases them on B usage. A more reliable testing mechanism is perhaps a randomized controlled trial. The contrast in the difficulty of the two tasks is reflected in Table 2: the BAR model achieves almost perfect AUC ROC on link prediction while its corresponding metric for link discovery is much lower. Moreover, on both tasks the BAR model outperforms the logistic baseline. We also evaluate model performance with recall, which is particularly suitable for evaluating link discovery. For each task, we compute recall from the top N predictions among the corresponding set of ones together with . As N increases, we include more candidates, which results in an improvement of recall for any model. In both panels of Figure 3, the BAR model achieves significantly better recall than the baseline at every N. However, link discovery is more challenging, which is reflected in the upper bound of the metric (0.4) at large enough N (see the right panel of Figure 3). For link prediction (see the left panel of Figure 3), the BAR model with quickly converges to perfect recall. The large gap between the BAR curves and the logistic baseline curve in the left panel of Figure 3 points to the superiority of the BAR model.
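Recall among the top N predictions can be sketched as follows: candidate pairs are ranked by predicted probability, the N highest are kept, and the share of true links recovered is reported. The data layout (a list of `(pair, score)` tuples and a set of positive pairs) is an assumption for illustration.

```python
def recall_at_top_n(scored_pairs, positives, n):
    """Fraction of positive pairs that appear among the n highest-scored candidates.

    scored_pairs: list of (pair, score) tuples over ones and zeros together
    positives:    set of pairs that are true links in the test period
    """
    ranked = sorted(scored_pairs, key=lambda item: item[1], reverse=True)[:n]
    hits = sum(1 for pair, _ in ranked if pair in positives)
    return hits / len(positives)


scored = [(("a", "b"), 0.9), (("a", "c"), 0.8), (("b", "c"), 0.1), (("c", "d"), 0.7)]
positives = {("a", "b"), ("c", "d")}
recalls = [recall_at_top_n(scored, positives, n) for n in (1, 2, 3)]
```

Recall is non-decreasing in N because enlarging the cutoff can only add candidates, which is why the curves in Figure 3 rise with N for every model.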

5.2 Sentiment Classification on Reddit Hyperlink Network

In the previous experiment we demonstrated significant improvement of the BAR model over a more standard approach in predicting and discovering links on networks with millions of nodes. Here we show that the proposed model generalizes easily to any dynamic binary classification task that spans over time. The Reddit hyperlink network (https://snap.stanford.edu/data/soc-RedditHyperlinks.html) Kumar et al., (2018) encodes the directed connections between two subreddit communities on Reddit from Jan 2014 to April 2017. The subreddit-to-subreddit hyperlink network is extracted from Reddit posts containing hyperlinks from one subreddit to another. The hyperlinks are time-stamped, directed and have attributes (a.k.a. features). Using crowd-sourcing and a text-based classifier, Kumar et al., (2018) assigned a binary label to each hyperlink, where label 1 indicates that the source expresses a positive sentiment towards the target and label -1 indicates that the source sentiment towards the target is negative.

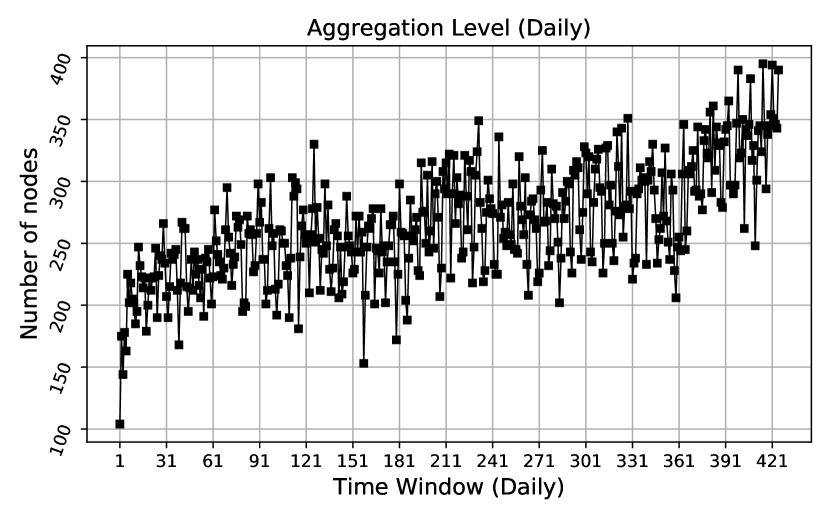

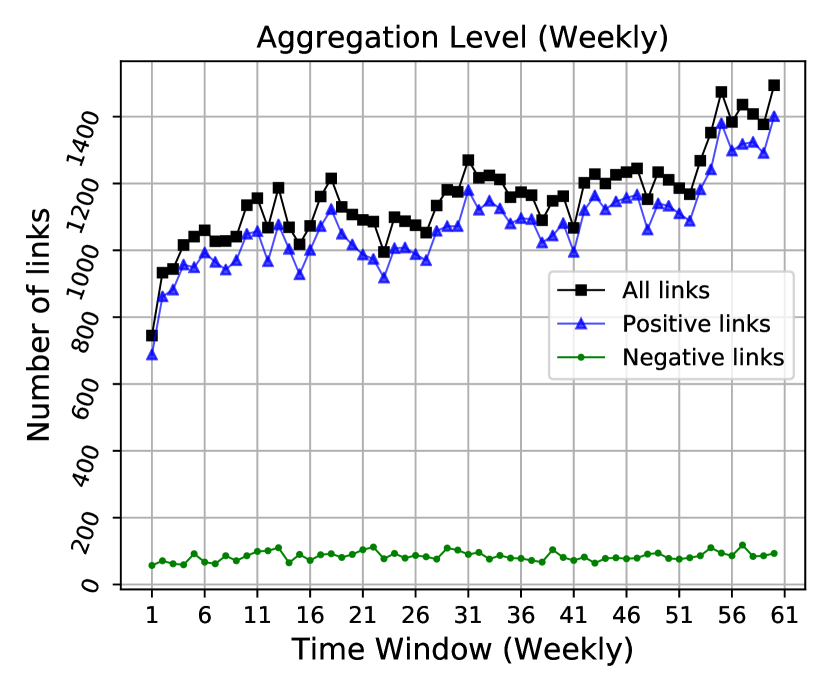

Our goal for the experiment is to classify sentiment for the hyperlinks with the BAR model and competing ones. The BAR model is designed for discrete times; however, the time stamps in the hyperlink network are continuous. To discretize the time stamps, we aggregate the hyperlinks at various granularities: daily, weekly and monthly. At each granularity we average the feature vectors corresponding to the same pair of (source subreddit, target subreddit) within each time window. Among the 86 features (see Kumar et al., (2018) for details) we omit the 11 count features because they are likely to be dominated by longer posts after the averaging. For sentiment labels within each time window, we take the majority vote as the label for (source subreddit, target subreddit). We experiment on the first 14 months of data (or roughly equivalently, the first 60 weeks or 425 days) from Jan 2014 to Feb 2015. Figure 4 shows how the number of nodes and the number of links vary over time at different time granularities. At all three levels (daily, weekly and monthly) the numbers of nodes and links have an overall increasing trend over time. From the bottom row of Figure 4 we observe the high imbalance of the sentiment labels: there are many more positive hyperlinks than negative ones at every time granularity. As we value positive and negative hyperlinks equally, we use AUC ROC to evaluate the model performance over the test period, which is the last two months (or roughly equivalently, the last 8 weeks or 60 days).
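The discretization step can be sketched with the standard library alone. Each record is assumed to be a hypothetical tuple `(source, target, timestamp, label, features)`; windows are indexed by whole days elapsed since a start date, so calendar months would need a different window rule. Breaking ties in the majority vote towards the positive label is also an assumption of this sketch.

```python
from collections import defaultdict
from datetime import datetime


def aggregate_hyperlinks(records, start, window_days):
    """Average features and majority-vote labels per (source, target, window)."""
    groups = defaultdict(list)
    for source, target, timestamp, label, features in records:
        window = (timestamp - start).days // window_days
        groups[(source, target, window)].append((label, features))

    aggregated = {}
    for key, items in groups.items():
        dim = len(items[0][1])
        mean = [sum(f[k] for _, f in items) / len(items) for k in range(dim)]
        # Labels are +1 / -1, so the sign of their sum is the majority vote.
        vote = 1 if sum(label for label, _ in items) >= 0 else -1
        aggregated[key] = (vote, mean)
    return aggregated


start = datetime(2014, 1, 1)
records = [
    ("a", "b", datetime(2014, 1, 1, 10), 1, [1.0, 3.0]),
    ("a", "b", datetime(2014, 1, 3), -1, [3.0, 5.0]),
    ("a", "b", datetime(2014, 1, 4), -1, [2.0, 1.0]),
    ("a", "b", datetime(2014, 1, 9), 1, [0.0, 0.0]),
]
weekly = aggregate_hyperlinks(records, start, window_days=7)
daily = aggregate_hyperlinks(records, start, window_days=1)
```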

| Aggregation Level | Node Degree | % Recurrent Links | Train Periods | Test Periods | BAR (AUC ROC) | Logistic (Raw) (AUC ROC) | Logistic (AUC ROC) |
|---|---|---|---|---|---|---|---|
| Daily | 5.29 | 21.60% | 365 | 60 | 0.761 | 0.756 | 0.744 |
| Weekly | 4.96 | 20.99% | 52 | 8 | 0.760 | 0.755 | 0.743 |
| Monthly | 4.34 | 19.29% | 12 | 2 | 0.756 | 0.748 | 0.745 |

We modify the BAR model to adapt to the single network scenario. Let represent the sentiment from the th subreddit to the th subreddit at time window . We require the edge-level features to be from the network and update the dependency of onto

| (13) |

With a single network we turn off the link discovery option by setting the regularization weight to zero in Problem (6). For comparison we consider two variations of the logistic model. The first variation keeps using averaged historical features for classifying sentiment at the current time window, as used in Section 4 and Section 5.1; we label it Logistic in Table 3. The second variation, which we label Logistic (Raw) in Table 3, simply uses the features from the current time window for classifying sentiment. Table 3 compares the performance on the corresponding test period across aggregation levels. As we aggregate over longer time windows (from daily to monthly), the network becomes sparser, with node degree continuously decreasing. Comparing the two variations of the logistic model, it performs better without averaging historical features, which indicates that there is limited time dependence in the sentiment classification task. Indeed, our approach performs best with daily aggregation, when there is more time dependence, as is evidenced by the highest percentage of recurrent links. At all aggregation levels, the BAR model outperforms the logistic ones, showing its strength on binary classification tasks even with limited time dependence. As we mentioned before, the competing GFT approach does not scale to large datasets. Moreover, the sentiment classification problem is not directly solvable with GFT’s matrix completion framework in (10). Thus, we leave the GFT approach out of this comparison.

6 Conclusions

We proposed a generalized auto-regressive model for link prediction and discovery with network sequences. The proposed BAR model can exploit signals from the auxiliary network to infer connections on the main network. We also developed an optimization framework for estimating the model, and a stochastic gradient descent algorithm that is scalable to networks with millions of nodes. To demonstrate the performance of our model, we applied the BAR model along with baselines to both simulated and real data, and showed significant improvement in BAR’s performance over the baselines. Our experiment on product A’s network shows practical use of the BAR model at scale, while the Reddit hyperlink experiment validates the generalizability of BAR. As a future line of research, an extension of the BAR model to continuous time would be useful for many real-world applications. We also plan to investigate the theoretical properties of our model.

Acknowledgements

The authors thank Sushama Murthy and Richard Johnston for supporting the work.

Appendix

A Algorithm for BAR Model Optimization

We derive the log-likelihood part and the regularizer part of Problem (6) as follows.

| Log-Likelihood | (14) | |||

| Regularizer | (15) |

For the log-likelihood part (14), we represent the summands using and defined as follows:

- when ;

- when .

The gradients of and can be derived by the Chain Rule:

-

•

;

-

•

;

where if and 0 otherwise. The gradient of the log-likelihood is

For the regularizer part (15), we represent the summand using of which the gradient can be derived by the Chain Rule as

The gradient of the regularizer is

A gradient descent update at the th iteration with step size has the form

We propose a computationally feasible substitute to the gradient descent. At the th iteration, we randomly sample a time stamp , a sender node for the log-likelihood gradient, and a sender node for the regularizer gradient. The Stochastic Gradient Descent (SGD) algorithm is detailed in Algorithm 1. To efficiently compute and from Lines 8-9 of the algorithm, we leverage the sparsity in the auxiliary sequence : for a given there are limited s with . Hence, only a small number of non-zero needs to be accounted for in computing the gradients of and .
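The sampling scheme described above can be sketched on small dense arrays as follows. This is not Algorithm 1: the logistic feature term, the squared-error regularizer, the fixed weight `theta`, the constant step size `lr`, and all function and variable names are assumptions for illustration. The sketch also loops over every receiver of the sampled sender, whereas a scalable implementation would restrict attention to receivers with a non-zero entry in the auxiliary sequence.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def prob_and_grad(X_i, beta, theta, p0, t):
    """Probabilities at period t for all receivers of one sender, and their
    gradients with respect to beta, via the recursion run from period 0.

    X_i: (T, m, d) features of the sender's candidate links.
    """
    m, d = X_i.shape[1], X_i.shape[2]
    p = np.full(m, p0, dtype=float)
    dp = np.zeros((m, d))
    for s in range(t + 1):
        g = sigmoid(X_i[s] @ beta)              # feature-induced probability
        dg = (g * (1.0 - g))[:, None] * X_i[s]  # chain rule through the logistic
        p = theta * p + (1.0 - theta) * g
        dp = theta * dp + (1.0 - theta) * dg
    return p, dp


def sampled_loss_grad(A, X, Z, beta, theta, p0, lam, t, i_ll, i_reg, eps=1e-9):
    """Stochastic gradient from one sampled period and two sampled senders."""
    p, dp = prob_and_grad(X[:, i_ll], beta, theta, p0, t)
    p = np.clip(p, eps, 1.0 - eps)
    a = A[t, i_ll]
    grad_nll = -((a / p - (1.0 - a) / (1.0 - p))[:, None] * dp).sum(axis=0)

    q, dq = prob_and_grad(X[:, i_reg], beta, theta, p0, t)
    resid = q @ X[t, i_reg] - Z[t, i_reg]       # gap in aggregated features
    jac = X[t, i_reg].T @ dq                    # d resid / d beta
    grad_reg = 2.0 * jac.T @ resid
    return grad_nll + lam * grad_reg


def sgd(A, X, Z, theta, p0, lam, steps=200, lr=0.05, seed=0):
    """Plain SGD on beta with uniformly sampled period and senders."""
    rng = np.random.default_rng(seed)
    T, n, _, d = X.shape
    beta = np.zeros(d)
    for _ in range(steps):
        t = rng.integers(T)
        i_ll, i_reg = rng.integers(n), rng.integers(n)
        beta -= lr * sampled_loss_grad(A, X, Z, beta, theta, p0, lam, t, i_ll, i_reg)
    return beta
```

Each iteration touches only the links of two sampled senders up to the sampled period, which is what keeps the per-iteration cost low relative to full gradient descent.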

References

- Aiello et al., (2012) Aiello, L. M., Barrat, A., Schifanella, R., Cattuto, C., Markines, B., and Menczer, F. (2012). Friendship prediction and homophily in social media. ACM Transactions on the Web (TWEB), 6(2):1–33.

- Huang and Lin, (2009) Huang, Z. and Lin, D. K. (2009). The time-series link prediction problem with applications in communication surveillance. INFORMS Journal on Computing, 21(2):286–303.

- Koren, (2008) Koren, Y. (2008). Factorization meets the neighborhood: a multifaceted collaborative filtering model. In Proceedings of the 14th ACM SIGKDD international conference on Knowledge discovery and data mining, pages 426–434.

- Kumar et al., (2018) Kumar, S., Hamilton, W. L., Leskovec, J., and Jurafsky, D. (2018). Community interaction and conflict on the web. In Proceedings of the 2018 World Wide Web Conference on World Wide Web, pages 933–943. International World Wide Web Conferences Steering Committee.

- Liben-Nowell and Kleinberg, (2007) Liben-Nowell, D. and Kleinberg, J. (2007). The link-prediction problem for social networks. Journal of the American society for information science and technology, 58(7):1019–1031.

- Myers and Leskovec, (2010) Myers, S. and Leskovec, J. (2010). On the convexity of latent social network inference. In Advances in neural information processing systems, pages 1741–1749.

- Richard et al., (2010) Richard, E., Baskiotis, N., Evgeniou, T., and Vayatis, N. (2010). Link discovery using graph feature tracking. In Lafferty, J. D., Williams, C. K. I., Shawe-Taylor, J., Zemel, R. S., and Culotta, A., editors, Advances in Neural Information Processing Systems 23, pages 1966–1974. Curran Associates, Inc.

- Richard et al., (2014) Richard, E., Gaïffas, S., and Vayatis, N. (2014). Link prediction in graphs with autoregressive features. Journal of Machine Learning Research, 15(1):565–593.

- Sarkar et al., (2012) Sarkar, P., Chakrabarti, D., and Jordan, M. (2012). Nonparametric link prediction in dynamic networks. arXiv preprint arXiv:1206.6394.

- Sarkar et al., (2011) Sarkar, P., Chakrabarti, D., and Moore, A. W. (2011). Theoretical justification of popular link prediction heuristics. In Twenty-Second International Joint Conference on Artificial Intelligence.

- Vu et al., (2011) Vu, D. Q., Hunter, D., Smyth, P., and Asuncion, A. U. (2011). Continuous-time regression models for longitudinal networks. In Advances in neural information processing systems, pages 2492–2500.

- Wang et al., (2015) Wang, P., Xu, B., Wu, Y., and Zhou, X. (2015). Link prediction in social networks: the state-of-the-art. Science China Information Sciences, 58(1):1–38.

- Wu et al., (2013) Wu, S., Sun, J., and Tang, J. (2013). Patent partner recommendation in enterprise social networks. In Proceedings of the sixth ACM international conference on Web search and data mining, pages 43–52.