Off-Policy Evaluation with Irregularly-Spaced, Outcome-Dependent Observation Times

Abstract

While the classic off-policy evaluation (OPE) literature commonly assumes decision time points to be evenly spaced for simplicity, in many real-world scenarios, such as those involving user-initiated visits, decisions are made at irregularly-spaced and potentially outcome-dependent time points. For a more principled evaluation of the dynamic policies, this paper constructs a novel OPE framework, which concerns not only the state-action process but also an observation process dictating the time points at which decisions are made. The framework is closely connected to the Markov decision process in computer science and with the renewal process in the statistical literature. Within the framework, two distinct value functions, derived from cumulative reward and integrated reward respectively, are considered, and statistical inference for each value function is developed under revised Markov and time-homogeneous assumptions. The validity of the proposed method is further supported by theoretical results, simulation studies, and a real-world application from electronic health records (EHR) evaluating periodontal disease treatments.

Keywords: Irregular decision time points; Markov decision process; Reinforcement learning; Statistical inference.

1 Introduction

A dynamic policy is a sequence of decision rules guiding an agent to select time-varying actions based on updated information at each decision stage throughout its interaction with the environment. The frameworks for analyzing dynamic policies can be broadly categorized into two settings: (a) finite-horizon and (b) long/infinite-horizon, based on how they handle the number of decision stages. Traditional research in dynamic policies predominantly focused on the finite-horizon settings with a limited number of decision stages (Murphy et al., 2001; Murphy, 2003; Robins, 2004; Zhang et al., 2013; Zhao et al., 2015), where the potential outcome framework is commonly employed to describe the target of interest, and the classical causal inference assumptions, such as unconfoundedness and consistency, are sufficient to guarantee target estimability. Applications of these methods appear in multi-stage treatment settings for cancer, HIV infection, depression, and other chronic conditions. By contrast, infinite-horizon dynamic policies involve a relatively large number of decision stages. For example, consider our motivating periodontal disease (PD) treatment data extracted from the HealthPartners (HP) electronic health records (EHR), where patients are generally recommended for a regular check-up every 6 months (Clarkson et al., 2020). In this dataset, half of the patients visit their dentists for dental treatments more than 8 times, with the maximum value of treatment stages reaching 31 during the 8-year follow-up period. Similar decision policies also arise in the long-haul treatments of chronic diseases, intensively interventions provided during the stay in the ICU, and other sequential decision-making applications, such as in mobile health, gaming, robotics, and ride-sharing. In such cases, the backward induction algorithms used in the finite horizon settings may suffer from the curse of the horizon (Uehara et al., 2022). To mitigate this, off-policy evaluation methods developed within the reinforcement learning (RL) framework (Sutton and Barto, 2018) are preferred. Specifically, instead of directly estimating the target of interest, such as the value of a dynamic policy, researchers reformulate and identify the value function through an action-value function. Under time-homogeneous and Markov assumptions, the action-value function can be estimated by solving the Bellman Equation, followed by the estimation of the quantity of interest. Recently, interest has shifted to dynamic policies in long- or infinite-horizon settings, and statistical inferences for off-policy evaluations have also been developed (Ertefaie and Strawderman, 2018; Luckett et al., 2019; Kallus and Uehara, 2020; Shi et al., 2021, 2022).

This paper considers the evaluation of dynamic policies in an infinite horizon setting. As a variation to the aforementioned literature, our framework includes not only the sequential decision-making process but also an observation process which dictates the time points at which decisions are to be made. In the existing literature, it is common to assume that decisions are made at regular, pre-assigned time points for simplicity. However, in many real-world scenarios, such as those involving user-initiated visits, the observation process is often irregularly spaced and may depend on both the decision-making process and the outcomes (Yang et al., 2019; Yang, 2021). For example, in the PD treatment study, the gap times between the dental revisits, even for the same patient, can range from 3 to 24 months. Additionally, the length of recall intervals is correlated with not only the actions made at the previous visit (such as the clinic visit recommendations made by dentists) but also with the outcomes (such as the patient’s oral health status after treatment). The necessity of including such an irregularly spaced and outcome-dependent observation process in the analysis of dynamic policies arises for at least two reasons: (a) the gap times between decision stages might be useful for the decision-maker in choosing actions and, therefore, could be included as the inputs of dynamic policies along with the state; and more importantly, (b) replacing the regular decision stage number with its specific decision time point allows for more sensible and principled evaluations of dynamic policies (as will be discussed throughout the paper), regardless of whether the dynamic policy involves gap times.

Within the realm of developing dynamic policies with irregularly spaced and outcome-dependent observation times, this work advances the field in multiple ways. Our first contribution is offering two options for evaluating dynamic policies: one based on cumulative reward and the other on integrated reward. Following the OPE approaches with regular decision points, a simple way to define the value of a dynamic policy is to use the expected discounted cumulative reward, assuming all actions are taken according to the policy. However, because the value of a cumulative sum is typically highly correlated with the number of accumulators (especially when rewards are consistently non-negative or non-positive), the policy value tends to increase or decrease with the number of decision stages. This observation motivates us to consider a more principled evaluation, where the value of dynamic policies could be independent of the number of observed decision stages. Therefore, by treating the rewards observed at as discrete observations from a continuous reward process potentially available throughout the entire follow-up period, we define an alternative value function for the dynamic policies based on the integration of the reward function over the full follow-up time. The value function, when derived from integrated reward, differs in magnitude, significance, and application contexts compared to one based on cumulative reward. This difference necessitates additional effort in the policy evaluation process. Our next key contribution is developing a novel RL framework that addresses both the state-action and observation processes. The complex and often unknown relationship between these two processes implies that the value of a dynamic policy, whether based on cumulative or integrated reward, may vary with the time at which the policy is evaluated. To address this complexity, we introduce a revised assumption of time-homogeneity and Markov properties for states, actions, and gap times. Under these assumptions, we demonstrate that the value function at can be independent of both and , provided the current state and gap time are known. Furthermore, we validate the broad applicability of our assumptions using two data-generating schemes. These schemes show that our framework is closely related to Markov decision process models widely used in computer science and renewal process models developed in statistics. Finally, under the proposed RL framework and for each of the value functions, we develop two policy evaluation methods: one based on the classic Bellman equation and the other on a Bellman equation modulated by an observation process model. We demonstrate that the proposed observation process model is consistent with the revised time-homogeneity and Markov assumptions for states and gap times. The model also allows us to formalize the connection between the value function based on cumulative reward and that based on integrated reward, thus playing a crucial role in the policy evaluation procedure based on integrated reward.

The rest of the paper is organized as follows. In Section 2, we introduce the notations, value functions, assumptions about the data structure, and two data-generating schemes. Sections 3 and 4 present the off-policy evaluation methods for the value function based on cumulative and integrated reward, respectively. Section 5 conducts simulation studies to evaluate the empirical performance of the proposed methods. An application to the PD treatment dataset is given in Section 6. Section 7 concludes the paper with a discussion.

2 Markovian decision process with irregularly-spaced and outcome-dependent observation times

2.1 Notations and value functions

Consider a study where treatment decisions are made at irregularly-spaced time points . For each , let denote the gap times, and let be a vector of state variables that summarizes the subject’s information collected up to and including time . At each , investigators can observe the status of subject and the gap time , and then take an action based on and . The reward of action is observed at , and thus denoted as . For simplicity, we assume that the state space is a subspace of where is the number of state vectors, and the action space is a discrete space with denoting the number of actions. We also assume that , , and exist and are observable at . An illustration of the data structure is given in Figure 1.

Let denote a policy that maps the state and gap time to a probability mass function on . Under such a policy , a decision maker will take action with probability for and . Aiming at off-policy evaluation for any given dynamic policy , we first define the corresponding value functions. Unlike the existing literature (Shi et al., 2021), we treat as jump points of a counting process and propose the following two value functions

| (1) | ||||

| (2) |

Here, is a discounted factor that balances the immediate and long-term effects of treatments; , an extension of the aforementioned notation , is redefined as a reward process only observed at , but could be potentially available at time ; and denotes the expectation when all the actions follow policy . By the definition of , defined in (1) can be rewritten as an expectation of discounted cumulative reward, given as

| (3) |

Therefore, we refer to as the value function based on cumulative reward and as the value function based on integrated reward. Similarly, we define as the action-value function (or Q-function) based on cumulative reward, while the one based on integrated reward is defined as

Remark 1. Both value functions, whether based on cumulative or integrated reward, may depend not only on the current value of state , but also on the time at which the policy is evaluated. This differs from the value functions developed under regularly-spaced observations and introduces challenges to the policy evaluation process. To address this issue, we incorporate into the value functions and will demonstrate in subsequent sections that, under modified Markov and time-homogeneous assumptions, the value function at can be independent of and , given the current state and gap time .

Remark 2. The two value functions differ in terms of quantity, interpretation, application scenarios, and estimation procedures. First, the value function based on cumulative reward, , can be highly correlated with the rate of observation process . For example, assuming is a non-negative function, a higher rate of would yield more decision stages , leading to a higher policy value. In contrast, , which integrates the reward function over the follow-up period, provides a more principled evaluation of the policy as it remains independent of the number of observed decision stages. As a result, the two value functions are suited to different application scenarios, and the choice of value function should depend on how the reward function is defined. Taking our motivating PD treatment study as an example, when the outcome of interest is whether a patient’s probing pocket depths (PPD) are reduced after treatment, the reward is defined as an indicator of PPD reduction, making the value function based on cumulative reward preferable. Conversely, when the reward function is defined as the PPD measurement at time for , policy evaluation should be independent of the measurement times, and the value function based on integrated reward provides a comprehensive assessment of the patient’s oral health status. Finally, since is unobservable when , additional assumptions about and are required to ensure that the expectation of is estimable. This makes the evaluation process for different from that for ; further details are provided in Section 4.

2.2 Assumptions

Now, we posit assumptions on the states, actions, rewards, and gap times.

-

(A1)

(Markov and time-homogeneous) For all , and for any measurable set with and as the current state and gap times, respectively, the next state variable and gap time satisfy

Here, denotes the joint distribution of the next state and next gap time conditional on the current state, action, and gap time.

-

(A2)

(Conditional mean independence) For all and for some bounded function , the reward satisfies

Assumption A1 implies that, given the current state, action, and gap time, the joint distribution of does not depend on the historical observations , or the current observation time . Instead of assuming the marginal distributions of the states and the gap times separately, we present assumption A1 based on the joint distribution of the next state and the next gap time. This indicates that, not only the marginal distributions of and , but also the dependence between and , should be independent of the historical observations and the current observation time , conditionally on . For instance, in the PD treatment study, the recall interval often correlates strongly with the PD status at the subsequent visit . This correlation, along with the values of and , may be influenced by the current PD status of patients and the treatments provided by the dentists. However, it’s reasonable to assume conditional independence of these factors from historical states, treatments, and recall intervals, given the latest observations .

By denoting as an extended vector of state variables, it is not hard to see that A1 takes a similar form as the Markov and time-homogeneous assumption commonly adopted in the RL literature. However, in the following sections, we will demonstrate that the gap times and the state variables play different roles in the estimation procedure and theoretical properties. This distinction explains why we do not simply include in the state vector.

2.3 Example schemes

For illustration, we present two data-generating schemes that satisfy assumption A1, while A1 itself does not specify any structure on the relationship between and . The two schemes bridge the proposed framework with modulated renewal process models and Markov decision process models, as well as providing guidelines for constructing specific models on the states, actions, and gap times.

Scheme 1. Consider a stochastic process where, given , and , the next state is generated as

for any measurable set , while given the value of next state , the next gap time is generated as

for any . Here, denotes the transition function of the next state conditional on the current state, action, and gap time, and denotes the cumulative distribution function of next gap time conditional on the current state, gap time, action, as well as the next state.

Remark 3. As a special case, when consists of finite or countable number of states and is independent of the value of current gap time , (S1-s) and (S1-x) together form a time-homogenous semi-Markov decision process (Bradtke and Duff, 1994; Parr, 1998; Du et al., 2020), where is the Markov chain and is the sojourn time in the th state .

Remark 4. Considering that are the gap times of a counting process, we can restate the generation procedure in the model (S1-x) using the notations of the counting process. Let denote a right-continuous counting process with jumps at . Then, we have , , , , and for all . According to (S1-s), the distribution/generation of next state is fully determined by , and therefore could be assumed available at time . Let denote all information available at . It is not hard to observe that if satisfies

or some intensity function , then for each , the gap time with the hazard function satisfies model (S1-x) too. On the other hand, if the distribution function in the model (S1-x) is absolutely continuous wrt. the Lebesgue measure, then the counting process defined by also satisfies (S1-x′). As a matter of fact, by viewing as the covariates available at , (S1-x′) takes a similar form to the modulated renewal process model. The assumption is natural for user-initiated visits and has been popularly adopted in the statistical literature (Cox, 1972; Lin et al., 2013; Chen et al., 2018).

Scheme 2. As an alternative, scheme 2 generates the gap time first and then determines the value of the next state. Specifically, assume that the next gap time satisfies

for . Then, given the value , the next state satisfies

for any measurable set . Here, denotes the cumulative distribution function of the next gap time conditional on the current state, gap time, and action, while denotes the transition function of the next state conditional on the current state, action, gap time, as well as the next gap time. Let . With arguments similar to Remark 4, it can be shown that (S2-x) is equivalent to

for , and takes the form of a modulated renewal process.

3 Policy evaluation based on cumulative reward

In the following subsections, we initially propose a policy evaluation procedure, requiring only assumptions A1 and A2. Then, under a counting process model for the observation process, we develop an alternative policy evaluation method. The asymptotic properties of all the proposed estimates are given in the last subsection.

3.1 Utilizing the classic Bellman equation

To estimate and , we first present the following two lemmas, whose proof is available in Section A.1 of the Supplementary Material.

Lemma 1. Under assumptions A1 and A2, the action-value function based on cumulative reward exists and satisfies for all , , , and .

By Lemma 1, the value of is time-homogeneous, meaning the expected value of a policy does not depend on the number of past observations or the current observation time . Therefore, we can omit both and , and denote the action-value function simply as for brevity. Similarly, the state-value function of policy can also be presented as and the value function of policy under some reference distribution can be presented as both of which are independent of and . Furthermore, we derive a Bellman equation as formulated in Lemma 2.

Lemma 2. Under assumptions A1 and A2, the action-value function based on cumulative reward satisfies a Bellman equation

for all .

To solve the Bellman equation, let denote a model for indexed by , and satisfies for some . Define

According to Lemma 2, we have for all . Assume the map is differentiable everywhere for each fixed , and , and let denote the gradient of with respect to . Then, we construct an estimating function for as

| (4) |

where are independent and identically distributed trajectories of . denotes the total number of decisions observed on the th sequence, and

Generally, (4) can be solved by numerical methods, but in certain cases, it may have a closed form. For example, consider a B-spline model where is a vector consisting of B-spline basis functions, and is a -dimensional parameter vector for each . Denote and Then, the estimating equation takes the form and can be solved as

Therefore, we propose to estimate by estimate state-value function by , and estimate by where .

3.2 Utilizing the modulated Bellman equation via an observation process model

In this subsection, we further propose an alternative policy evaluation procedure that relies on a specified model for the observation process. Here, the motivation for modeling the observation process arises from two aspects. First, as demonstrated in Subsection 2.3, under the revised time homogeneity and Markov assumptions for states and gap times, the irregularly spaced and outcome-dependent observation times can be represented as the occurrence times of a renewal process modulated by the state and action sequences (Cox, 1972). Therefore, positing a modulated renewal process model on the observation process is consistent with the structure of the data and helps us make more accurate inferences. Second, by employing an intensity model for the observation process, the integration of rewards can be transformed into a cumulative form. This enables us to leverage policy evaluation techniques based on cumulative reward for estimating the value function based on integrated reward. More details are given in Section 4.

For brevity, we focus on Scheme 1 given in Section 2.3; similar derivations can also be applied to Scheme 2. The modification begins with the Bellman equation outlined in Lemma 2. Under assumption (S1-x), we can express the equation as

Let denote an estimate of the distribution function . Similar to (4), we construct with Further approximating action-value functions with B-splines , then the solution to takes the form of

| (5) |

with

Then, the estimator for is , the estimator for state-value function is and the estimator for the value function under given reference distribution is . Here, extra effort is required in estimating , the distribution of the next gap time conditional on and . As an illustration, we propose a Cox-type counting process model that satisfies (S1-), and takes the form

| (6) |

Here, , for some pre-specified -dimensional function , is a vector of unknown parameters and is an unspecified baseline intensity function. Let denote independent and identically distributed trajectories of . For and , define , . Then, we propose to estimate by solving the equation

| (7) |

where and is a positive constant satisfying for each . Let denote the obtained estimator. Then, we can further estimate by , and can estimate by for .

3.3 Asymptotic properties

We first introduce the notations and impose necessary assumptions. Denote . Let be the conditional density function satisfying for the joint distribution defined in (A1). Then, (A3) is a regularity condition ensuring the action function to be continuous (Shi et al., 2021, Lemma 1).

-

(A3)

There exists some , such that and are Hölder continuous functions with exponent and with respect to .

As discussed in Section 2.2, the transition trajectories of states and gap times form a time-homogeneous Markov chain under assumption (A1). Suppose has a unique invariant joint distribution with density function under some behavior policy . Similarly, when the reference distribution is absolutely continuous with respect to the Lebesgue measure, there exists a density function satisfying , which is also a joint distribution for the states and gap time. We posit the following assumptions on and .

-

(A4)

and are uniformly bounded away from 0 and .

Recall that and are obtained by solving and . Define , , and

The following assumption ensures the existence of and .

-

(A5)

There exist constants , such that and where denotes the minimum eigenvalue of a matrix.

The following assumption is required in constructing asymptotic approximation when . Specifically, the geometric ergodicity in A6(i) is part of the sufficient conditions for the Markov chain central limit theorem to hold, and A6(ii) guarantees the validity of estimating equation (7) under renewal process model (6).

-

(A6)

When , (i) the Markov chain is geometrically ergodic, and (ii) there exists a probability measure such that for any measurable set on , satisfying

Now, we provide bidirectional asymptotic properties for the estimated values and . The property of the estimated state-value functions follows directly by setting as a Dirac delta function at fixed . For any , , , define .

Theorem 1.

Assume assumptions - hold. Suppose , for , and there exists some constant , such that , and for any . Then, as , we have: where is given in Subsection A.2 of the Supplementary Material.

Theorem 2.

Here, conditions - are regularity conditions for constructing asymptotic properties of under model (6). They are commonly adopted in the modulated renewal process studies (Pons and de Turckheim, 1988; Lin et al., 2013), and we relegate them, together with the proofs, in Subsection A.2 of the Supplementary Material for brevity.

4 Policy evaluation based on integrated reward

As remarked in Section 2.1, additional efforts are required for policy evaluation based on integrated reward. Here, the key insight is to transform the integration in into a cumulative reward, thereby enabling the application of the method developed in Section 4 to . Assuming satisfies (S1-x’) and , we have

where is an inverse-intensity-weighted reward.

It is not hard to verify that satisfies assumption and thus, the policy evaluation methods proposed in Section 3 are applicable. For example, by Lemma 1, is also free of and , and hence, we can rewrite the Q-function as , the value function as and taking the expression under some reference distribution , free of and . Furthermore, by assuming for some B-spline basis , we can also obtain two estimates for as

and

respectively. Here, and denotes some estimator of for and . For example, under the Cox-type model (6), the estimated intensity function is with and where and is given in SubSection 3.2, is a kernel function, and is the kernel bandwidth.

Furthermore, we derive two estimates for action-value function as and . The estimates for value function are and , and the estimates for are and The following theorem presents the asymptotic properties of and , whose proof is given in Subsection A.2 of the Supplementary Material.

5 Simulation

In this section, we conduct simulation studies to examine the finite sample performance of the proposed methods. We begin by describing our setup. First, for the initial values, we generate from a Uniform distribution on , from an Exponential distribution with intensity 1/2, and from a Bernoulli distribution with expectation 1/2. Then, given the current state , gap time , and action , the next state is generated from one of the three models: (S1) ; (S2) ; (S3) , where, . The next gap time is generated from of the following intensity models: (X1) ; (X2) ; (X3) . All the actions are independently generated from a Bernoulli distribution with mean 0.5, and the reward of each action , which is observed at , is defined as with .

Although there could be numerous combinations of the generative models, here we only choose four representative scenarios for a brief illustration. The settings of the four scenarios are listed in Table 1 and corresponding data structures are plotted in Figure 2. In general, all four datasets satisfy the the time-homogeneous and Markov assumptions given in (A1), but they vary in the dependence structure among the states, actions, and gap times. In Scenario 1, the generation of does not depend on the values of or , and the value of does not affect the generation of either. This implies that the observation process is independent of the state-action transition process. In Scenario 2, we allow the generation of gap times to be correlated with the actions and states, but and are conditionally independent with each other, given . More general scenarios are given in scenarios 3 and 4. As noted in section 2.2, the Markov assumption in (A1) allows and to be correlated with each other, only with the restriction that their dependence structure satisfies the Markov property. Two example schemes are also developed in Section 2.3 illustrating two different dependence structures satisfying (A1). Here, Scenarios 3 and 4 represent Schemes 1 and 2, respectively. Specifically, in Scenario 3, we first generate , and then generate based on ; while in Scenario 4, we generate first, and then based on . A comparison of the data structures in Scenarios 1-4 is provided in Table 1.

| Settings | Properties | |||||

|---|---|---|---|---|---|---|

| State | Gaptime | A1& A2 | model (6) | |||

| Scenario 1 | ||||||

| Scenario 2 |

|

|||||

| Scenario 3 |

|

|

||||

| Scenario 4 |

|

|

|

|||

| Scenario 1 | Scenario 2 |

|

|

| Scenario 3 | Scenario 4 |

|

|

In the policy evaluation procedure, the discount factor is set as for all settings. For the reference distribution, we consider , with and for policy evaluation under cumulative rewards, and , with and for policy evaluation under integrated rewards. For the target policy, we considered a class of linear deterministic policies where is set as in Scenario 1, and set as in Scenarios 2-4. To calculate the true values, we simulate independent trajectories with distributed according to , and chosen according to target policy . Then, is approximated by and is approximated by .

Since both state and gap time might not have bounded supports in our settings, we transform the data by and , where is the cumulative distribution function of a standard normal random variable. Then, the basis functions are constructed from the tensor product of two cubic B-spline sets, whose knots are placed at equally spaced sample quantiles of the transformed states and gap times. When estimating the B-spline coefficients , three methods were considered, namely, the naive method, the standard method, and the modulated method. When the value of the policy is defined by cumulative reward, the standard method leads to the estimate . The modulated method, which depends on model (6) for the observation process, leads to the estimate . Finally, the naive estimate is which is directly obtained from Shi et al. (2021) by treating as the state variable. Similarly, when the value of the policy is defined by integrated reward, we adopt the standard estimate as , the modulated estimate as , and the naive estimate as

The performances of the three estimation methods are summarized in Tables 2 and 3. Within each scenario, we further consider 6 cases by setting sample sizes with the length of trajectory , as well as setting when the number of trajectory . In each case, we generate 1000 simulation replicates to approximate the bias and standard deviation of the naive estimators (BiasN, SDN), and the bias, standard deviation, estimated standard error, and empirical coverage probability of confidence intervals for standardized estimators (BiasS, SDS, SES, CPS) and modulated estimators (BiasM, SDM, SEM, CPM). From the results, we observe that both the standard method and the modulated method perform well in Scenarios 1-3: the estimators are asymptotically unbiased, the estimated standard errors are close to the standard deviation of the estimators, and the coverage probabilities of confidence interval match the nominal level when either or is large enough. As a comparison, the naive estimate is biased across all scenarios, including Scenario 1, where the observations follow a Poisson process of rate one. This implies the observation process is informative and should be included in the policy evaluation, even if it is independent of the state-action process. Moreover, by comparing and in Scenarios 2-3, we observe that the modulated estimates are more efficient than the standard ones when is relatively small. This suggests that the value function estimated using the modulated Bellman equation may lead to reduced variance in policy evaluation under small sample sizes, especially when the distribution of gap times depends on the state-action process.

| Naive | Standard | Modulated | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| K | |||||||||||

| Scenario 1 True value = -0.641 | |||||||||||

| 100 | 10 | -0.099 | 0.079 | -0.002 | 0.063 | 0.059 | 0.958 | 0.002 | 0.062 | 0.063 | 0.954 |

| 200 | 10 | -0.096 | 0.053 | 0.000 | 0.039 | 0.041 | 0.960 | 0.002 | 0.041 | 0.045 | 0.968 |

| 400 | 10 | -0.095 | 0.036 | -0.002 | 0.028 | 0.029 | 0.952 | -0.001 | 0.028 | 0.032 | 0.958 |

| 10 | 100 | -0.075 | 0.100 | -0.030 | 0.073 | 0.065 | 0.950 | 0.001 | 0.080 | 0.071 | 0.938 |

| 10 | 200 | -0.091 | 0.065 | 0.001 | 0.049 | 0.044 | 0.934 | 0.003 | 0.047 | 0.049 | 0.952 |

| 10 | 400 | -0.094 | 0.043 | -0.002 | 0.030 | 0.031 | 0.944 | -0.001 | 0.030 | 0.034 | 0.968 |

| Scenario 2 True value = 0.637 | |||||||||||

| 100 | 10 | -0.388 | 0.105 | 0.002 | 0.094 | 0.082 | 0.930 | -0.010 | 0.092 | 0.078 | 0.934 |

| 200 | 10 | -0.397 | 0.064 | -0.003 | 0.058 | 0.055 | 0.936 | -0.009 | 0.053 | 0.054 | 0.940 |

| 400 | 10 | -0.397 | 0.042 | -0.002 | 0.038 | 0.039 | 0.952 | -0.005 | 0.034 | 0.038 | 0.956 |

| 10 | 100 | -0.387 | 0.147 | -0.002 | 0.151 | 0.117 | 0.896 | -0.016 | 0.135 | 0.106 | 0.906 |

| 10 | 200 | -0.385 | 0.091 | -0.002 | 0.077 | 0.072 | 0.924 | -0.008 | 0.074 | 0.066 | 0.932 |

| 10 | 400 | -0.389 | 0.068 | -0.004 | 0.056 | 0.049 | 0.928 | -0.006 | 0.051 | 0.046 | 0.936 |

| Scenario 3 True value = 0.510 | |||||||||||

| 100 | 10 | -0.441 | 0.151 | 0.004 | 0.110 | 0.085 | 0.894 | -0.006 | 0.093 | 0.079 | 0.898 |

| 200 | 10 | -0.442 | 0.093 | -0.002 | 0.064 | 0.055 | 0.932 | -0.006 | 0.055 | 0.053 | 0.930 |

| 400 | 10 | -0.437 | 0.063 | 0.000 | 0.042 | 0.039 | 0.936 | -0.002 | 0.039 | 0.038 | 0.956 |

| 10 | 100 | -0.410 | 0.234 | -0.011 | 0.200 | 0.141 | 0.884 | 0.002 | 0.159 | 0.124 | 0.888 |

| 10 | 200 | -0.423 | 0.126 | -0.001 | 0.092 | 0.079 | 0.902 | -0.005 | 0.084 | 0.071 | 0.920 |

| 10 | 400 | -0.431 | 0.095 | -0.004 | 0.062 | 0.053 | 0.924 | -0.005 | 0.058 | 0.048 | 0.938 |

| Naive | Standard | Modulated | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| K | |||||||||||

| Scenario 1 True value = -0.413 | |||||||||||

| 100 | 10 | -0.019 | 0.105 | 0.001 | 0.080 | 0.071 | 0.934 | 0.005 | 0.083 | 0.068 | 0.910 |

| 200 | 10 | -0.023 | 0.072 | 0.000 | 0.056 | 0.049 | 0.929 | 0.003 | 0.053 | 0.048 | 0.908 |

| 400 | 10 | -0.028 | 0.053 | -0.003 | 0.040 | 0.034 | 0.929 | -0.001 | 0.041 | 0.034 | 0.912 |

| 10 | 100 | -0.020 | 0.099 | -0.001 | 0.079 | 0.070 | 0.935 | -0.012 | 0.078 | 0.066 | 0.904 |

| 10 | 200 | -0.023 | 0.072 | 0.002 | 0.054 | 0.048 | 0.929 | 0.004 | 0.054 | 0.046 | 0.898 |

| 10 | 400 | -0.027 | 0.049 | 0.000 | 0.038 | 0.034 | 0.925 | 0.001 | 0.038 | 0.033 | 0.924 |

| Scenario 2 True value = 0.383 | |||||||||||

| 100 | 10 | -0.045 | 0.098 | -0.002 | 0.122 | 0.104 | 0.943 | -0.003 | 0.111 | 0.096 | 0.938 |

| 200 | 10 | -0.044 | 0.066 | 0.001 | 0.091 | 0.078 | 0.948 | 0.000 | 0.085 | 0.074 | 0.960 |

| 400 | 10 | -0.048 | 0.051 | -0.004 | 0.069 | 0.061 | 0.947 | -0.004 | 0.066 | 0.059 | 0.944 |

| 10 | 100 | -0.047 | 0.094 | 0.000 | 0.120 | 0.107 | 0.949 | -0.006 | 0.110 | 0.098 | 0.934 |

| 10 | 200 | -0.044 | 0.066 | -0.001 | 0.086 | 0.076 | 0.934 | -0.003 | 0.084 | 0.071 | 0.934 |

| 10 | 400 | -0.046 | 0.048 | -0.002 | 0.064 | 0.058 | 0.949 | -0.004 | 0.063 | 0.056 | 0.957 |

| Scenario 3 True value = 0.460 | |||||||||||

| 100 | 10 | -0.138 | 0.069 | 0.004 | 0.076 | 0.073 | 0.950 | -0.001 | 0.076 | 0.069 | 0.940 |

| 200 | 10 | -0.150 | 0.051 | 0.002 | 0.050 | 0.051 | 0.945 | 0.000 | 0.049 | 0.049 | 0.949 |

| 400 | 10 | -0.160 | 0.042 | -0.001 | 0.037 | 0.036 | 0.944 | -0.002 | 0.035 | 0.035 | 0.945 |

| 10 | 100 | -0.134 | 0.066 | 0.007 | 0.077 | 0.071 | 0.956 | 0.001 | 0.073 | 0.067 | 0.945 |

| 10 | 200 | -0.140 | 0.047 | 0.003 | 0.053 | 0.049 | 0.946 | 0.001 | 0.054 | 0.048 | 0.939 |

| 10 | 400 | -0.142 | 0.034 | 0.003 | 0.037 | 0.035 | 0.936 | 0.002 | 0.037 | 0.034 | 0.931 |

Finally, since the modulated estimates require modeling the observation process , we also investigate the robustness of the proposed estimates when model (6) is misspecified under Scenario 4. Specifically, we generated data following Scheme 2, calculated the estimates using methods developed under Scheme 1, and report the results in Table 4 of the Supplementary Material. The results show that the standard estimator under cumulative reward , which only requires A1 and A2, still performs well in all settings, and, what is beyond the expectation is that, the estimates , and that are based on model (6), also demonstrate negligible bias and provide satisfactory variance estimation. Hence, the proposed methods are fairly robust to mild misspecification of the observation process model.

6 Application: HealthPartners Data

In this section, we illustrate our proposed methodology by applying it to the periodontal recall interval selection problem from a HealthPartners database (henceforth, HP data) of longitudinal electronic health records tracking PD status and progression, along with other covariates, among subjects around the Minneapolis, Minnesota area (Guan et al., 2020). The length of recall intervals continues to be a topic of debate and research while the current, insurance-mandated, standard of care is a recall interval of 6 months for all patients. However, recall frequencies are intimately related to patient outcomes, provider workload, and dental healthcare costs. Recent NICE guidelines recommend (Clarkson et al., 2018) that recall intervals vary with time and be determined based on disease levels and oral disease risk. Overall, the selection of an appropriate personalized recall interval is a multifaceted clinical decision that is challenging to evaluate mechanistically. Our HP dataset consists of 7654 dental visit records from 1056 patients enrolled within the HP EHR from January 1, 2007, to December 31, 2014. For each visit record, we have the recall visit date, probed pocket depth (PPD, measured in mm) from all available tooth-sites as the PD assessment endpoint, and the clinician-recommended recall interval for the follow-up visit. The total follow-up time for these subjects has a median of 5.95 years, with a maximum of 7.97 years (resembling about 8 years of data). The frequency of recall visits has a median of 8, with a range from 3 to 29. The gap time (actual recall intervals) has a median of 181 days (approximately 6 months), with a range of 1 to 2598 days. Due to the unavailability of full-mouth, site-level measures of clinical attachment level (another important biomarker for PD) in the HP database, we refrain from using the ACES framework (Holtfreter et al., 2024), the modified 2018 periodontal status classification scheme to epidemiological survey data, and instead considered the mean PPD (mean across all tooth-sites at a specific visit) as a plausible subject-level PD endpoint, with a median of 2.51mm (range: 0.0–6.2mm).

To apply our methods, let denote the record time (in days), denote the gap time (in days) between and , denote (mean) PPD measurements at , and be an indicator of the recommended recall interval at , where implies the patient is advised to revisit within 6 months, and otherwise. Within the HP database, the mean PPD measurements () are significantly correlated (-value 0.001) with the (true) recommended recall intervals, suggesting that the mean PPD measurements play a crucial role in the dentists’ decisions regarding recall intervals. On the other hand, although other covariates were available in the dataset, only PPD and clinical attachment loss (CAL) had a significant correlation with the treatment decisions made by dentists. Given the high correlation between PPD and CAL, we selected PPD as the only state variable. To measure the effects of the action , we considered two definitions for the reward . First, considering that the PPD around teeth would deepen in the presence of gum disease, and a reduction of PPD is one of the desired outcomes of periodontal therapy, we define the reward as an indicator of PPD reduction, i.e. . Based on this definition, the performance of any given policy could be evaluated in terms of the value function defined in (1), which is based on the cumulated sum of rewards. Moreover, since PPD 3 mm is usually considered a threshold to distinguish periodontally healthy sites from diseased ones, we also considered another definition of reward which takes the form: . In this case, could be viewed as a continuous function where denotes the potential PPD measurement at time , and therefore, a more reasonable evaluation of policies should be the value function defined in (2), which is based on integrated reward.

Under each value function, we considered three policies for comparison, namely, the observed stochastic policy , the observed fixed policy , and the optimal policy. Since the estimation of optimal policy depends on the choice of the value function, we considered two optimal policies, and , which correspond to and respectively. The plots of the obtained policies are shown in Figure 5 of the Supplementary Material. Typically, under the observed policies and , patients with deeper pocket depth and more frequent recalls are more likely to be recommended a shorter recall interval, while under the estimated optimal policies, there is no such linear relationship among actions, states, and gap times.

|

|

|

|

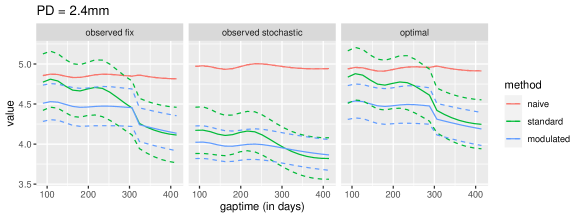

The data analysis results are summarized in Figures 3-4. In general, the estimated values change with not only the initial state but also the initial gap time, which indicates that the frequency of recall visits does have effects on the performance of the policies, and should be taken into account in the evaluation process. Moreover, by comparing the estimated results obtained from employing the standard method, modulated method, and naive method, we observe that the estimates from the standard method and modulated method are similar, while the results from the naive method exhibit significant differences from the former two in both the magnitude of values and the patterns of the function. For example, in Figure 3 and when PPD is fixed at 2.4 mm, the value functions estimated by the naive method remain around 5 as the gap time varies, even though both the standard and modulated methods reveal a significant decrease in the value as the gap time values increase. Furthermore, it can also be seen that the confidence intervals provided by the modulated method are generally narrower than those given by the standard method (for example, when gap time = 360 days). This result is consistent with the findings in simulation studies.

We also compare the estimated value functions of the observed stochastic policy, observed fix policy, and the optimal policy, under the modulated method and based on cumulative reward and integrated reward respectively. The plots of the confidence intervals for the value of three policies are given in Figure 6 of the Supplementary Material. From the results, it is evident that the alterations in the action assignment of the optimal policy, in comparison to the other two observed policies, effectively enhance the policy’s value. A more detailed discussion of the results is also given in section C of the Supplementary Material.

7 Discussion

In this paper, we construct a framework for dynamic policies with irregularly spaced and outcome-dependent observation process and develop an off-policy evaluation procedure under revised time-homogeneous and Markov assumptions. Similar Markov assumptions are commonly posited in the off-policy evaluation literature and, in real-world data applications, can be tested from the observed data using a forward-backwards learning algorithm (Shi et al., 2020). We also provide example schemes to demonstrate the connection between the proposed assumptions and existing ones. In particular, we show that the proposed time-homogeneous and Markov assumption requires the observation process to satisfy some modulated renewal process assumption (see S1-x’), and introduce an intensity-based modulated renewal process model (6) in the estimation procedure. It would be an interesting but challenging task to generalize the intensity model to a rate model, under which the existing Markov assumption no longer holds, or generalize the modulated renewal process to a counting process, under which the time-homogenous assumption may not hold. Finally, although our paper focuses on the off-policy evaluation of dynamic policies, it is not hard to adopt the proposed method to search for an optimal dynamic policy, as well as to make inferences on the value of the optimal dynamic policy.

Supplementary Material

This supplementary material is organized as follows. In Section A, we present proofs for Lemmas 1-2, Lemmas A.1-A.5 and Theorems 1-4. In Section B, we present additional simulation results. In Section C, we present additional figures from the application, together with some discussion on the results.

Appendix A Proofs for lemmas and theorems

For notational brevity, we assume to be a deterministic policy, with . Denote as the selected action under policy given state and gap time . for any , and . All derivations and results can be generalized to the evaluation of stochastic policies, straightforwardly.

A.1 Proof of Lemmas 1-2

Proof of Lemma 1 : Under assumptions A1 and A2, the existence of follows directly from the boundedness of and . Then, we can rewrite as

Note, denotes the joint distribution of the next gap time and next state. Under assumption , for all ,

Thus,

By similar arguments, we have

which does not depend on or for all . For all , define

Then, we have

Therefore, for all , , , and .

Proof of Lemma 2 (Bellman Equation)

Under assumptions A1-A2, can be rewritten as

Therefore, the Bellman Equation in Lemma 2 holds for all .

A.2 Proofs of Theorems 1-4.

For notational brevity, let denote , let denote , let denote , and let denote . For a set of -dimensional vectors, , let denote a dimension array, whose th row is , and taking the form

Moreover, the following notations and conditions are required in the proofs of Theorems 2-4. For , define with . The convergency of holds under assumption . So we denote the limit by for each and , and denote as for notational briefness. By Slutsky’s theorem, we can further denote as the limit of . Conditions - are regular conditions commonly adopted in the modulated renewal process studies (Pons and de Turckheim, 1988; Lin and Fine, 2009; Lin et al., 2013), and here used to construct asymptotic properties of under model 6 in SubSection 3.2. Conditions ensures the existence of .

-

C1

There exists a compact closure surrounding , such that is bounded away from zero on .

-

C2

is positive definite.

-

C3

is finite for and for each in a dense subset of including 0 and .

-

C4

For any ,

-

C5

is tight on for and .

- C6

Proof of Theorem 1:

The proof of Theorem 1 is similar to Appendix E.1 in Shi et al. (2021), so we omit the technical details and give an outline of the proof.

Firstly, by Lemma 1 of Shi et al. (2021) and Section 2.2 of Huang (1998), there exists that satisfies

| (8) |

for some constant . Then by the definition of , we can rewrite as

where and and . Lemma A.1 gives the properties of , , , and .

Lemma A.1 Under the conditions given in Theorem 1, we have

-

(i)

For any and , for some constant .

-

(ii)

. As , , , , wpa 1.

-

(iii)

, .

-

(iv)

As , , , , , wpa 1.

Together with inequality (8), Markov’s inequality and Cauchy-schwarz inequality, we can obtain , , , and therefore

| (9) |

Next, we study the asymptotic properties of . Recall that , define with . Lemma A.2 gives the properties of and .

Lemma A.2 Under the conditions given in Theorem 1, we have: (i) , (ii) for some constant .

By (8), (9) and Lemma A.2, it can be obtained that

when and . Using the martingale central limit theorem (McLeish, 1974, corrollary 2.8) and following arguments in section E.5 of Shi et al. (2021), we have .

Proof of Theorem 2:

Theorem 2 will be proved by three steps. In the first step, we give the asymptotic properties of under the modulated renewal process model (6) in SubSection 3.2. In the second step, we use the properties of to obtain Lastly, we give the asymptotic properties of and finishes the proof.

Step1. Asymptotic properties of .

Define and Then Lemma A.3 gives the asymptotic properties for and .

Lemma A.3.Under the conditions given in Theorem 2, we have: (i) converges weakly to a normal distribution , and can be asymptotically written as

(ii) converges weakly to a mean zero Gaussian process, and can be asymptotically written as

for each .

Furthermore, by Taylor’s expansion, we can rewrite as

which is also for each fixed and . Recall that . Therefore is asymptotically equivalent with

| (10) |

Step 2. Asymptotic properties of

Denote and define

By (8) and the definition of given in section 3.3, can be rewritten as

where Lemma A.4 gives the properties of , and .

Lemma A.4 Under the conditions given in Theorem 2, we have: (i) , wpa 1. (ii) , , .

Similar to the proof of Theorem 1, the first term in the above equation can be written as with For the second term, by the asymptotic expression given in (10), we have

Here, for , and the last equation follows from the convergence of , which could be obtained by Assumption A6. Lastly, denote and , then it is not hard to obtain

| (11) |

Step 3. Asymptotic properties of

Define with

| (12) |

Then similar with Lemma A.2, we can obtain and for some constant . Together with (11), can be written as

when and . Similar with the proof of Theorem 1, we can use martingale central limit theorem (McLeish, 1974, corrollary 2.8) to obtain that

Proof of Theorem 3(i):

We first give the following lemma which is required for the Kernel-based estimates. Lemma A.5. For any function , define . Then if has a bounded second-order derivative in a neighborhood of .

Theorem 3 will be proved by two steps.

In the first step, we obtain the asymptotic properties of

and .

Then, using the properties of ,

to derive the asymptotic properties of

and

.

Step 1. Asymptotic properties of

Rewrite

with .

For the first term, we first rewrite

Following Theorem 4.2.2 of Ramlau-Hansen (1983), we can show that converges weakly to a normal distribution for each , and therefore . Moreover, by Lemma A.5, , if has a bounded second derivative in a neighborhood of . Therefore, together with Lemma A.3(ii) and Lemma A.5, is asymptotically equivalent with

| (13) |

On the other hand, by Taylor expansion and Lemma A.3(i), we have

| (14) |

Step 2. Asymptotic properties of

Similar with (8), there exists that satisfies for some constant . Recall that and , we have

As shown in the proof of Theorem 1,

where

For the second term , since

when has a bounded second-order derivative in a neighborhood of . Then is can be asymptotically written as

For the third term , denote , then . Define , then is asymptotically equivalent with . Together with Lemma A.1(iv), we have

where and . Define with

| (16) |

Then is asymptotically equivalent with

when , and . Lastly, by the martingale central limit theorem, converges weakly to a normal variable with mean 0 and variance 1.

Proof of Theorem 3(ii): For , let denote the quantities obtained by replacing in with , and let denotes the expectations of . Then following the proofs of Theorems 1-3, can be rewritten as

where and Here, takes similar form with except that are replaced by .

Furthermore, define , , and with

| (17) |

Then, when , and ,

converges weakly to a normal distribution with mean 0 and variance 1.

A.3 Proof of Lemmas A.1-A.5

Proof of Lemma A.1

By assumption A3 and Lemma 1 in Shi et al. (2021),

is a

Hlder continuous function with exponent

with respect to ,

and thus (i) holds.

For (ii),

by Lemma E.1 in Shi et al. (2021),

there exists some constant such that

.

Therefore,

is .

The other results are proved in Lemma E.3 in Shi et al. (2021).

For (iii),

the boundedness of

follows from

given in Lemma A.1(i)

and given in the conditions of Theorem 1,

while the boundedness of follows from (8).

Lastly, the properties of

and

given in (iv) are consistent with Lemma E.2 in Shi et al. (2021)

and can be obtained with similar arguments.

Proof of Lemma A.2

By Lemma A.1(iii), . Then follows from Lemma A.1(iii).

Now we prove Lemma A.2(ii). Recall that Then by , it can be shown that

By Lemma A.1(ii),

we have

.

Moreover,

by given in Lemma A.1(iii),

there exists some constant

such that

.

Therefore,

with .

Proof of Lemma A.3

We first introduce some notations. For , , denote as for . Then for , can be rewritten as , and is the solution to . Under assumption A6, conditions C1-C5, and by Theorem 3.2 of Pons and de Turckheim (1988), converges weakly to a Gaussian variable with mean 0 and variance , where is defined in condition C2. Moreover, can be asymptotically written as

with . Rewrite as , and define , then Lemma A.3(i) follows directly.

Now we derive the asymptotic properties of . The convergence of follows from the Theorem 3.3 of Pons and de Turckheim (1988). To obtain the asymptotic expression in Lemma A.3(ii), we first rewrite as

where Then together with Lemma A.3(i), we have

Proof of Lemma A.4

We first prove Lemma A.4(i). The boundedness property of is similar with , and therefore can be similarly obtained by the arguments i the proof of Lemma A.1(iii). For the boundedness of , rewrite it as , where and . By (8), is bounded by . For , since converges to a Gaussian process with zero mean and continuous sample paths (see Theorem 3.3 in Pons and de Turckheim (1988)), then, together with the convergence of , it is not hard to obtain that also converges weakly to a stochastic process with zero mean and continuous sample paths for each fixed . This implies that for each fixed . Then is also bounded by when is large enough, and thus we finish the proof.

To prove Lemma A.4(ii), rewrite

Note that , then similar with Lemma A.1(iv), we have and .

On the other hand, rewrite as . Then following (10) and with arguments similar to the proof of Theorem 2 (Step 2), we can obtain and therefore, .

Furthermore, since , we have wpa 1. Together with given in Lemma A.1(iv), we have

for any . This implies wpa 1. Therefore,

is also

.

Proof of Lemma A.5

Similarly with Ramlau-Hansen (1983), we assume the kernel has support within and to simplify mathematics. Then by Taylor expansion,

for . Therefore, is when has a bounded second-order derivative in the neighborhood of .

Appendix B Additional Simulation Results

In this section, we present the simulation studies under scenario 4 to investigate the robustness of the proposed estimates. Specifically, the dataset is generated following scheme 2, the estimates are calculated by the methods developed under scheme 1, and therefore model (6) in Section 3.2 is mis-specified. The results are reported in Table 4. From the results, it is not hard to see that the naive method remains biased, the standard estimator under cumulative reward still performances well in all settings, and the estimates , and , which are based on model (6), demonstrate negligible bias and provide satisfactory variance estimation. Hence, the proposed methods are fairly robust to mild misspecification of the observation process model.

| Naive | Standard | Modulated | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| K | |||||||||||

| Scenario 4 Cumulative Rewards True value = 0.594 | |||||||||||

| 100 | 10 | -0.426 | 0.103 | 0.006 | 0.082 | 0.081 | 0.924 | -0.017 | 0.080 | 0.078 | 0.922 |

| 200 | 10 | -0.424 | 0.064 | 0.001 | 0.055 | 0.057 | 0.940 | -0.013 | 0.053 | 0.055 | 0.930 |

| 400 | 10 | -0.428 | 0.042 | 0.001 | 0.041 | 0.040 | 0.940 | -0.009 | 0.041 | 0.039 | 0.938 |

| 10 | 100 | -0.413 | 0.142 | 0.012 | 0.123 | 0.099 | 0.910 | -0.008 | 0.109 | 0.093 | 0.922 |

| 10 | 200 | -0.430 | 0.087 | 0.001 | 0.073 | 0.065 | 0.920 | -0.014 | 0.071 | 0.062 | 0.924 |

| 10 | 400 | -0.425 | 0.067 | 0.000 | 0.051 | 0.046 | 0.930 | -0.011 | 0.045 | 0.044 | 0.926 |

| Scenario 4 Integrated Rewards True value = 0.569 | |||||||||||

| 100 | 10 | -0.172 | 0.077 | 0.001 | 0.084 | 0.074 | 0.944 | -0.015 | 0.077 | 0.069 | 0.930 |

| 200 | 10 | -0.176 | 0.056 | 0.000 | 0.056 | 0.051 | 0.935 | -0.013 | 0.057 | 0.049 | 0.919 |

| 400 | 10 | -0.181 | 0.042 | -0.003 | 0.041 | 0.036 | 0.924 | -0.014 | 0.039 | 0.035 | 0.910 |

| 10 | 100 | -0.170 | 0.068 | 0.000 | 0.073 | 0.072 | 0.938 | -0.015 | 0.071 | 0.068 | 0.938 |

| 10 | 200 | -0.165 | 0.051 | 0.002 | 0.054 | 0.050 | 0.942 | -0.010 | 0.052 | 0.048 | 0.938 |

| 10 | 400 | -0.168 | 0.034 | 0.001 | 0.037 | 0.035 | 0.929 | -0.009 | 0.035 | 0.034 | 0.927 |

Appendix C Additional Application Results

In this section, we present additional application results to compare the observed stochastic policy, observed fix policy, and the optimal policy. The policies are estimated by 2488 visit records from 352 patients (approximately of the total number of study subjects) who are randomly selected from the full dataset, while the policy evaluation procedure used the remaining 5166 records from 704 patients. To obtain the observed stochastic policy, we first fitted a logistic regression model with the (binary) response and the explanatory variables and . Then, let the estimated probability of given be , and denote as the observed stochastic policy. Moreover, by setting the probability threshold as 0.48, we further obtain a deterministic function mapping from to , and denote it as the observed fixed policy . Finally, the optimal policy is estimated by double-fitted Q-learning (Shi et al., 2021), where the Bellman equation for the optimal policy can be obtained by a trivial extension of Lemma 2. Plots of the obtained policies are shown in Figure 5. From the plots, it is not hard to see that, under the observed policies and , patients with deeper pocket depth and more frequent recalls are more likely to be recommended a shorter recall interval, while under the estimated optimal policies, there is no such linear relationship among actions, states, and gap times.

| (a) | (b) |

|

|

| (c) | (d) |

|

|

Furthermore, under the modulated method, we compared the confidence intervals of the value functions for these policies in cases where the value function is defined by cumulative reward and integrated reward, respectively. The estimated results are presented in Figure 6. Together with Figures 5, we found that changes in the action assignment of the optimal policy, compared with observed policies, indeed improve the value of the policy. For example, when the reward is defined as PD measurement, the optimal policy suggests that patients with a gap time of three months and PD values less than 2.7 mm may schedule their subsequent visit at six months or later, and correspondingly, the value of the optimal policy at a gap time 90 days and PD2.2 mm is significantly higher than that of the two observed policies.

|

|

|

|

References

- (1)

- Bradtke and Duff (1994) Bradtke, S., and Duff, M. (1994), “Reinforcement Learning Methods for Continuous-Time Markov Decision Problems,” Advances in Neural Information Processing Systems, 7, 393–400.

- Chen et al. (2018) Chen, X., Ding, J., and Sun, L. (2018), “A Semiparametric Additive Rate Model for a Modulated Renewal Process,” Lifetime Data Analysis, 24, 675–698.

- Clarkson et al. (2018) Clarkson, J. E., Pitts, N. B., Bonetti, D., Boyers, D., Braid, H., Elford, R., Fee, P. A., Floate, R., Goulão, B., Humphris, G. et al. (2018), “INTERVAL (investigation of NICE technologies for enabling risk-variable-adjusted-length) dental recalls trial: a multicentre randomised controlled trial investigating the best dental recall interval for optimum, cost-effective maintenance of oral health in dentate adults attending dental primary care,” BMC Oral Health, 18, 1–10.

- Clarkson et al. (2020) Clarkson, J. E., Pitts, N. B., Goulao, B., Boyers, D., Ramsay, C. R., Floate, R., Braid, H. J., Fee, P. A., Ord, F. S., Worthington, H. V. et al. (2020), “Risk-based, 6-monthly and 24-monthly dental check-ups for adults: the INTERVAL three-arm RCT.,” Health Technology Assessment (Winchester, England), 24(60), 1–138.

- Cox (1972) Cox, D. R. (1972), “The Statistical Analysis of Dependencies in Point Processes,” in Stochastic Point Processes., New York: Wiley, pp. 55–66.

- Du et al. (2020) Du, J., Futoma, J., and Doshi-Velez, F. (2020), “Model-based Reinforcement Learning for Semi-Markov Decision Processes with Neural ODEs,” Advances in Neural Information Processing Systems, 33, 19805–19816.

- Ertefaie and Strawderman (2018) Ertefaie, A., and Strawderman, R. L. (2018), “Constructing Dynamic Treatment Regimes over Indefinite Time Horizons,” Biometrika, 105, 963–977.

- Guan et al. (2020) Guan, Q., Reich, B. J., Laber, E. B., and Bandyopadhyay, D. (2020), “Bayesian nonparametric policy search with application to periodontal recall intervals,” Journal of the American Statistical Association, 115(531), 1066–1078.

- Holtfreter et al. (2024) Holtfreter, B., Kuhr, K., Borof, K., Tonetti, M. S., Sanz, M., Kornman, K., Jepsen, S., Aarabi, G., Völzke, H., Kocher, T. et al. (2024), “ACES: A new framework for the application of the 2018 periodontal status classification scheme to epidemiological survey data,” Journal of Clinical Periodontology, 51(5), 512–521.

- Huang (1998) Huang, J. Z. (1998), “Projection Estimation in Multiple Regression with Application to Functional Anova Models,” The Annals of Statistics, 26, 242–272.

- Kallus and Uehara (2020) Kallus, N., and Uehara, M. (2020), “Double Reinforcement Learning for Efficient Off-Policy Evaluation in Markov Decision Processes,” Journal of Machine Learning Research, 21, 1–63.

- Lin et al. (2013) Lin, F.-C., Truong, Y. K., and Fine, J. P. (2013), “Robust Analysis of Semiparametric Renewal Process Models,” Biometrika, 100, 709–726.

- Lin and Fine (2009) Lin, F., and Fine, J. P. (2009), “Pseudomartingale Estimating Equations for Modulated Renewal Process Models,” Journal of the Royal Statistical Society. Series B (Statistical Methodology), 71, 3–23.

- Luckett et al. (2019) Luckett, D. J., Laber, E. B., Kahkoska, A. R., Maahs, D. M., Mayer-Davis, E., and Kosorok, M. R. (2019), “Estimating Dynamic Treatment Regimes in Mobile Health Using V-Learning,” Journal of the American Statistical Association, 115, 692–706.

- McLeish (1974) McLeish, D. L. (1974), “Dependent Central Limit Theorems and Invariance Principles,” The Annals of Probability, 2.

- Murphy (2003) Murphy, S. A. (2003), “Optimal Dynamic Treatment Regimes,” Journal of the Royal Statistical Society Series B: Statistical Methodology, 65, 331–355.

- Murphy et al. (2001) Murphy, S. A., van der Laan, M. J., and Robins, J. M. (2001), “Marginal Mean Models for Dynamic Regimes,” Journal of the American Statistical Association, 96, 1410–1423.

- Parr (1998) Parr, R. E. (1998), Hierarchical Control and Learning for Markov Decision Processes, PhD thesis, University of California,Berkeley.

- Pons and de Turckheim (1988) Pons, O., and de Turckheim, E. (1988), “Cox’s Periodic Regression Model,” The Annals of Statistics, 16, 678–693.

- Ramlau-Hansen (1983) Ramlau-Hansen, H. (1983), “Smoothing Counting Process Intensities by Means of Kernel Functions,” The Annals of Statistics, 11, 453–466.

- Robins (2004) Robins, J. M. (2004), Optimal Structural Nested Models for Optimal Sequential Decisions,, in Proceedings of the Second Seattle Symposium in Biostatistics: Analysis of Correlated Data, eds. D. Y. Lin, and P. J. Heagerty, Springer, New York, NY, pp. 189–326.

- Shi et al. (2022) Shi, C., Luo, S., Le, Y., Zhu, H., and Song, R. (2022), “Statistically Efficient Advantage Learning for Offline Reinforcement Learning in Infinite Horizons,” Journal of the American Statistical Association, pp. 1–14.

- Shi et al. (2020) Shi, C., Wan, R., Song, R., Lu, W., and Leng, L. (2020), Does the Markov Decision Process Fit the Data: Testing for the Markov Property in Sequential Decision Making,, in Proceedings of the 37th International Conference on Machine Learning, eds. H. D. III, and A. Singh, Vol. 119 of Proceedings of Machine Learning Research, PMLR, pp. 8807–8817.

- Shi et al. (2021) Shi, C., Zhang, S., Lu, W., and Song, R. (2021), “Statistical Inference of the Value Function for Reinforcement Learning in Infinite-Horizon Settings,” Journal of the Royal Statistical Society Series B: Statistical Methodology, 84, 765–793.

- Sutton and Barto (2018) Sutton, R. S., and Barto, A. G. (2018), Reinforcement learning: An introduction, Cambridge: MIT press.

- Uehara et al. (2022) Uehara, M., Shi, C., and Kallus, N. (2022), “A Review of Off-Policy Evaluation in Reinforcement Learning,” arXiv preprint arXiv:2212.06355, .

- Yang (2021) Yang, S. (2021), “Semiparametric Estimation of Structural Nested Mean Models with Irregularly Spaced Longitudinal Observations,” Biometrics, 78, 937–949.

- Yang et al. (2019) Yang, S., Pieper, K., and Cools, F. (2019), “Semiparametric Estimation of Structural Failure Time Models in Continuous-Time Processes,” Biometrika, 107, 123–136.

- Zhang et al. (2013) Zhang, B., Tsiatis, A. A., Laber, E. B., and Davidian, M. (2013), “Robust Estimation of Optimal Dynamic Treatment Regimes for Sequential Treatment Decisions,” Biometrika, 100, 681–694.

- Zhao et al. (2015) Zhao, Y.-Q., Zeng, D., Laber, E. B., and Kosorok, M. R. (2015), “New Statistical Learning Methods for Estimating Optimal Dynamic Treatment Regimes,” Journal of the American Statistical Association, 110, 583–598.