Off-Policy Evaluation Using Information Borrowing and Context-Based Switching

Abstract

We consider the off-policy evaluation (OPE) problem in contextual bandits, where the goal is to estimate the value of a target policy using the data collected by a logging policy. Most popular approaches to the OPE are variants of the doubly robust (DR) estimator obtained by combining a direct method (DM) estimator and a correction term involving the inverse propensity score (IPS). Existing algorithms primarily focus on strategies to reduce the variance of the DR estimator arising from large IPS. We propose a new approach called the Doubly Robust with Information borrowing and Context-based switching (DR-IC) estimator that focuses on reducing both bias and variance. The DR-IC estimator replaces the standard DM estimator with a parametric reward model that borrows information from the ‘closer’ contexts through a correlation structure that depends on the IPS. The DR-IC estimator also adaptively interpolates between this modified DM estimator and a modified DR estimator based on a context-specific switching rule. We give provable guarantees on the performance of the DR-IC estimator. We also demonstrate the superior performance of the DR-IC estimator compared to the state-of-the-art OPE algorithms on a number of benchmark problems.

1 Introduction

Contextual bandits framework finds a wide range of applications such as recommendations systems (Li et al.,, 2011), personalized healthcare (Zhou et al.,, 2017), advertising (Bottou et al.,, 2013), and education (Mandel et al.,, 2014). In contextual bandits, a decision maker (often an algorithm) observes a context and takes an action according to a policy and observes a reward. For a recommendation algorithm, the context can be the information about the user and the history is his past online clicks/purchases, and a reward is obtained when he clicks/buys the item. Importantly, the algorithm can only observe the reward for the selected actions, and not for the others. The goal is to maximize the expected reward by choosing the best policy out of a set of candidate policies. A natural approach towards that end is to evaluate the candidate policies by calculating their expected reward, called policy evaluation, and then choose the policy with the highest expected reward. We consider the fundamental problem of off-policy evaluation (OPE) in contextual bandits, where one uses the data gathered by a past policy, known as the logging policy, to estimate the expected reward of a new policy, known as the target policy. OPE eliminates the cost of performing policy evaluation via online experiments, and avoids the risks of exposing subjects to untested policies.

There are three main approaches to address the OPE problem: Direct Method (DM) first gets an estimate of the reward model and uses it to estimate the value of the target policy. DM often has low variance. However, its bias can be significant, especially when the true reward model does not belong to the function class used for the reward function estimation. Inverse Propensity Scoring (IPS) estimator (Horvitz and Thompson,, 1952) uses importance weighting to correct the mismatch between proportions of actions due to the difference between the logging and target polices. While the IPS estimator is unbiased, its variance can be really large due to large importance weights. Doubly Robust (DR) estimator (Robins and Rotnitzky,, 1995; Bang and Robins,, 2005; Dudík et al.,, 2011, 2014) is a combination of DM and IPS to achieve the low variance of DM and no bias of IPS. While DR is known to be asymptotically optimal under some assumption (Su et al.,, 2020), its finite-sample variance can still be quite high when importance weights are large.

Many recent works focus on reducing the variance of the DR estimator. Wang et al., (2017) introduced a switching approach that switches between using a DM and DR, depending on the importance weights. Su et al., (2020) takes a shrinkage approach where they replace the importance weights by smaller weights which optimizes the mean squared error. The variance of such estimators is usually caused by mismatches in supports of data-collecting policy and target policy. Mou et al., (2023) assume that the reward function lies within a reproducing kernel Hilbert space to address such mismatches. Khan et al., (2024) instead use partial identification methods to address these mismatches. There are however few works addressing the high bias of the DM method. Su et al., (2020) has revealed the importance of choosing an appropriate reward model. Through an extensive study, they have shown that different reward models result in the best estimates in different scenarios. Lichtenberg et al., (2023) use reward clipping approaches for achieving practical less-bias results.

In this work, we propose an innovative approach for the OPE problem, which we call the Doubly Robust with Information borrowing and Context-based switching (DR-IC) estimator. The approach is based on two key proposals for improving the performance of the standard DR estimator.

First, we propose a modified DM that uses an intuitive and widely applicable parametric reward model that can significantly reduce the bias. The fundamental idea of the proposed reward model is borrowing information from ‘similar’ and ‘important’ contexts through a correlation structure. In particular, this reward model assigns more weight to data points that are ‘similar’ to the test data point through the choice of a kernel. The bandwidth of the kernel is chosen adaptively, depending on the importance weight associated with the test data point. We call this new DM as DM with Information Borrowing (DM-IB). We theoretically show that the bias of the DM-IB goes to zero as the number of samples increases. We also empirically demonstrate the advantages of DM-IB.

Second, we propose a context-based switching scheme for adpatively choosing between the DM-IB and the DR estimator (which also uses a reward model with information borrowing). Unlike the switching scheme proposed in Wang et al., (2017) which does switching based on the importance weights, our approach uses the Kullback-Leibler (KL) divergence between the logging policy and target policy, given the context, to do the switching. We call this the DR-IC estimator. Through extensive experiments, we demonstrate the superior performance of our DR-IC estimator compared to the state-of-the-art methods (Wang et al.,, 2017; Farajtabar et al.,, 2018; Su et al.,, 2020) on a number of benchmark problems.

2 Preliminaries

In the contextual bandits problem, the algorithm observes a context , takes an action , and gets a scalar reward . We assume that the context space is continuous and the action space is finite. The contexts are sampled i.i.d. according to a distribution . Reward has a distribution conditioned on denoted by . We assume that is a Lipschitz function with respect to for any given , formalizing the intuition that similar context should give similar rewards for the same action. The algorithm selects actions according a decision rule called policy, which maps the observed context to a distribution over the action space. A policy is denoted as a conditional distribution which specifies the probability of selecting action when the context is observed. The value of a policy , denoted as , is defined as

| (1) |

In the following, we will also write to denote the joint distribution over context-action-reward tuples when actions are selected by the policy . i.e., .

In the off-policy evaluation problem, we are given a data set that consists of i.i.d. samples of context-action-reward tuple generated by a logging policy , i.e., . The goal is to estimate the value of a target policy using this offline dataset .

There are three standard and well known approaches for off-policy evaluation.

Direct Method: In DM, we train a reward model as

| (2) |

where is a suitable function class. The DM estimator is then given as

| (3) |

Though the DM estimator typically has low variance, it often suffers from large bias (Dudík et al.,, 2011).

Inverse Propensity Scoring Estimator : IPS estimator makes use of the importance weights, defined as , to get an unbiased estimate (Horvitz and Thompson,, 1952). The IPS estimator is given as

| (4) |

We make the standard assumption that whenever , ensuring that . Though the IPS estimator is unbiased, when there is a substantial mismatch between the policies and , the importance weights will be large and hence the variance will also be large.

Doubly Robust Estimator: DR approach combines DM and IPS estimator as

| (5) |

where is as given in (2). DR estimator preserves the unbiased nature of the IPS and hence is robust to a poor reward estimator . At the same time, because the IPS part in the DR estimator is using a shifted reward, the variance of the DR estimator is smaller than that of the IPS estimator. It is known that DR estimator is asymptotically optimal as long the reward estimator is consistent (Su et al.,, 2020). However, its finite-sample variance can be quite high due to large importance weights when the logging policy and the evaluation policy are different.

Recently, different approaches to further reduce the variance of the DR approaches have been studied. The closest to our work is (Wang et al.,, 2017), which proposed a switching approach based on the importance weights. The proposed switch estimator uses the IPS approach when the importance weights are less than a threshold and switches to the DM approach when the importance weights exceeds that threshold. Formally, switch estimator is given as

| (6) |

where is the threshold. The IPS part in the above estimator is can also be replaced by a DR estimator.

3 Our Approach

In this section, we introduce our new approach, which we call the Doubly Robust with Information borrowing and Context-based switching (DR-IC) estimator. The approach is based on two key proposals for improving the performance of the standard DR estimator. First, we propose a modified DM that uses a innovative reward model which borrows information from similar and important contexts in order to reduce the bias. We call this as DM with Information Borrowing (DM-IB). Second, we propose a context-based switching scheme for adpatively choosing between the DM-IB and the DR (which also uses the information borrowing reward model) for reducing the variance. We call this combined approach the DR-IC estimator.

3.1 Direct Method with Information Borrowing Reward Model

A major drawback of traditional DM estimators is that they do not exploit the information about the target policy while training a reward model as given in (3). So, each data sample is used uniformly in (3). However, depending on the importance weight, some of the samples are more relevant than others with respect to the off-policy evaluation objective. For example, if the importance weight is large for sample , this sample is more important in estimating than another sample with smaller importance weight. While performing a standard weighted regression with importance weights may address this problem partially, it does not exploit another important fact that “similar” contexts should produce “similar” rewards under the same action. So, a good reward model should be able to borrow more information from contexts that are “similar” than contexts that are different, in order to reduce the bias in estimation.

A standard reward model estimate used in the literature is least squares regression with regularization and Gaussian errors (Dudík et al.,, 2011; Wang et al.,, 2017; Su et al.,, 2020). Let be the context-action pairs, , be the matrix of context-action pairs, and be the vector of rewards obtained from the data . The reward estimate for any context-action pair according least squares regression with regularization is given by

| (7) |

We now consider a derived data set . Note that the cardinality of is and of is . Let be the context-action pair corresponding to the sample from the set and let . Our goal is to get a reward model that gives a reward estimate for each sample using correlation between and , where is the predicted rewards given a context-action pair . Note that the derived dataset comprises of the original datapoints paired with all possible actions , as we have explained in Section 3. We emphasize that we do not need additional data to implement our algorithm.

Since we don’t have an explicit correlation structure available a priori, we model the covariance matrix between and , , as

| (8) |

where , , , , and is the bandwidth and is an appropriate kernel.

The main motivation for defining such a covariance matrix is to exploit the fact that similar contexts should give similar rewards under the same action. Thus, the proposed covariance structure borrows more information from similar contexts due to the term . Also, if or are large, these samples are more important for the off-policy evaluation. So, our covariance structure borrows more information from such samples. In contrast, a standard least squares regression approach as given in (7) does not consider the similarity of contexts and borrows information uniformly from all samples, resulting in a higher bias. For our experiments, we take a specific covariance matrix of the form (8), namely a Gaussian kernel truncated at the same actions, as given by

| (9) |

Let be the covariance matrix for the original dataset. Assume that the true underlying covariance structure between and is given by (8). Then, the conditional mean of , denoted by , given the original rewards , the context-action pairs and and the regression parameter is our reward model (estimate) for the proposed approach:

| (10) | |||

where is an -dimensional vector which all of its entries are 1. The first term in the equation is simply the linear regression estimate if the correlation structure was assumed to be non-existent, as in usual linear regression. The second term is the information borrowing term which captures the borrowing of information based on the similarity among the contexts in the original dataset and the ‘derived’ dataset. The conditional expectation of given as a projection of is a natural estimator for and is the best estimator among all functions of in the sense that it minimizes the mean squared error (MSE), . We refer the reader to Brunk, (1961, 1963) and Šidák, (1957) for more details. In practice, we do not know and hence we replace it with an estimate. The scaling term ensures that the underlying joint covariance structure of and is positive definite.

Using the as the reward estimator, we now propose a new direct method for off-policy evaluation called DM-IB, given by

| (11) |

In the Section 4, we will show the superior properties of the proposed estimator compared to the standard direct method using the reward estimator as given in (7). In particular, we will show that the bias of converges to zero in probability as increases.

3.2 Doubly Robust Method with Context-Based Switching

While borrowing information through a correlation structure to get a reward estimator as proposed in (10) can reduce the bias compared to the standard DM method, the variance can still be significant compared to the standard doubly robust approach. On the other hand, simply using the proposed reward estimator in a doubly robust structure alone will not reduce the high variance of the DR estimator arising due to the difference between the logging policy and evaluation policy (and reflected in the large importance weights). To overcome this challenge, we propose a switching approach that adaptively selects between DM-IB and DR estimator. In particular, we propose to perform the switching based on the context-specific KL divergence between the logging policy and evaluation policy.

The KL divergence between two probability mass functions and is given by We define the context specific KL divergence for a given context , as Our DR-IC estimator is now given as

| (12) |

where is the threshold parameter that determines the switching. The third term in (12) is exactly our new direct method estimate given (11). The first two terms in (12) constitute a DR estimator which uses the information borrowing reward estimate as proposed in (10). The DR estimator is used only for contexts with KL divergence less than the threshold , and we switch to the DM method if the KL divergence exceeds the threshold.

We note that our approach for switching is different from that of Wang et al., (2017), which makes the switch for a specific context-action pair if the corresponding importance weight is too large. The proposed KL divergence based approach has an averaging effect on the individual weights since it is a weighted sum of the importance weights on a logarithmic scale and the thresholding is context-specific, rather than context-action pair specific. So, the chosen model is either the DM model or the DR model for the specific context and all possible actions. From a practical standpoint, the thresholding for the KL divergence based approach can be performed on a more compact grid than an importance weight based switching since the KL divergence uses the importance weights on a logarithmic scale, enabling easier optimization. We elaborate on the qualitative differences between the two techniques using a toy example in Section 4.2.

3.3 Optimizing the Threshold Parameter

One important part of implementing the DR-IC estimator is to find the optimal threshold parameter. We follow an approach similar to the one used in Wang et al., (2017) to select a that minimizes the MSE of the resulting estimator.

Let denotes the estimated reward given the context for a switching threshold . That is,

Since , we have the estimate of the variance, as

Next, we utilize the fact that as defined in (4) is an unbiased estimator of to obtain an estimate of squared bias as a function of the threshold , given by . To see this, note that

Here, to get , we have used the fact that goes to zero as we get more samples, since is an unbiased estimator of . To get , we replace the expectation by sample average.

However, due to possibly large importance weights, can be a poor estimate of the true expected reward . We overcome this issue as follows. Let . We then propose the following upperbound of the bias, :

Finally, we will use the following as our estimate for the squared bias:

With these estimates, we optimize the threshold paramater as

| (13) |

4 Analysis

4.1 Main Results

In this section, we investigate the theoretical properties of the information borrowing estimator for the reward function and show that it is asymptotically unbiased. More precisely, in Theorem 1, we show that the bias of the modified DM estimator as defined in (11) goes to zero when increases to infinity. In addition to this bias analysis, we also provide a bound on the MSE of the DR-IC estimator in Theorem 2.

In this section, we assume that action is continuous for the purpose of deriving general results. We also make the following assumptions about the problem.

Assumption 1.

(i). The reward function is continuous in and .

(ii) The importance weight is continuous in and . Also, there exists a positive constant such that for all .

(iii) The context distribution and the reward distribution are continuous.

We make the following assumption about the kernel we are using in (8).

Assumption 2.

Assume the kernel function is isotropic and satisfies , , .

We also make the following assumption about the least squares regression based estimate used in (7) and (10).

Assumption 3.

Let . and are bounded from above by some positive constant.

The following assumption is common among the problem of regression estimation, see Nadaraya, (1964) for more details.

Assumption 4.

The value of is for every , is a diagonal matrix and its diagonal entries are positive constants.

We now present our theorem about the bias of .

Theorem 1 (Asymptotic unbiasedness of ).

The DM-IB estimator inherits its asymptotically unbiased property from . In the following, we provide a brief intuition on the proof technique. The bias of can be written as three terms involving convolutions with the non-stationary kernel (8). As the bandwidth converges to zero with the sample size, the non-stationary kernel becomes degenerate at each sample in the derived data set such that the convolutions converge to the same sample in . Hence the convolution approximates the target and results in the elimination of the bias caused by least squares estimate with the information borrowing term in (10).

Remark 1.

Since the underlying reward function is non-linear, it is well-known that the bias of its least squares estimate is lower bounded by a constant. Hence, the bias of the traditional direct method estimator is lower bounded by a constant as well.

We now give an upper bound on the MSE for our DR-IC estimator given in (12).

Theorem 2 (Bound on the MSE of ).

Theorem 2 shows that the upper bound of the MSE of our DR-IC estimator is at most . A similar result of the switch-DR estimator can be found in Wang et al., (2017) where the upper bound is as well. So, in terms of the MSE, our proposed DR-IC estimator is at least as good as the switching method proposed in Wang et al., (2017). At the same time, our approach is better in terms of the bias, as shown in Theorem 1.

4.2 Comparing Different Switch Estimators

The idea of combining two different estimators in off-policy evaluation has been explored in Wang et al., (2017), where the authors proposed a switch estimator (switch-DR) which can switch between DM and DR (or IPS) depending on the magnitude of importance weights. The DR-IC estimator introduced in this paper is different from the switch-DR estimator, though it is also designed to switch between DM and DR. The switching criterion of the DR-IC estimator is the KL divergence between the logging policy and the target policy, given any context. This measures the overall closeness between the two policies, while the switch-DR estimator uses the importance weights for each individual samples.

To better demonstrate the differences between these two switching criteria, we consider a toy example designed as follows. Consider a setting with only two actions, denoted as . Assume that the logging policy has the form and . Also, consider the evaluation policy of the form and . The contexts are uniformly distributed between and . We generate 50 training samples and 50 test samples. The threshold of importance weights for switch-DR is 12.65 and the threshold of KL divergences for DR-IC is 2.20. We plot the KL divergences as a function of contexts in Fig. 1 and all 50 samples are marked with red circles or blue triangles based on which method (DM or DR) is employed in the switch-DR estimator. The plot are split into two parts by the threshold of KL divergences in the DR-IC estimator. It is obvious that two switching criteria do not agree with each other always. Some contexts with smaller importance weights where DR is employed in the switch-DR estimator have larger KL divergences, such that DM is employed in the DR-IC estimator.

5 Experiments

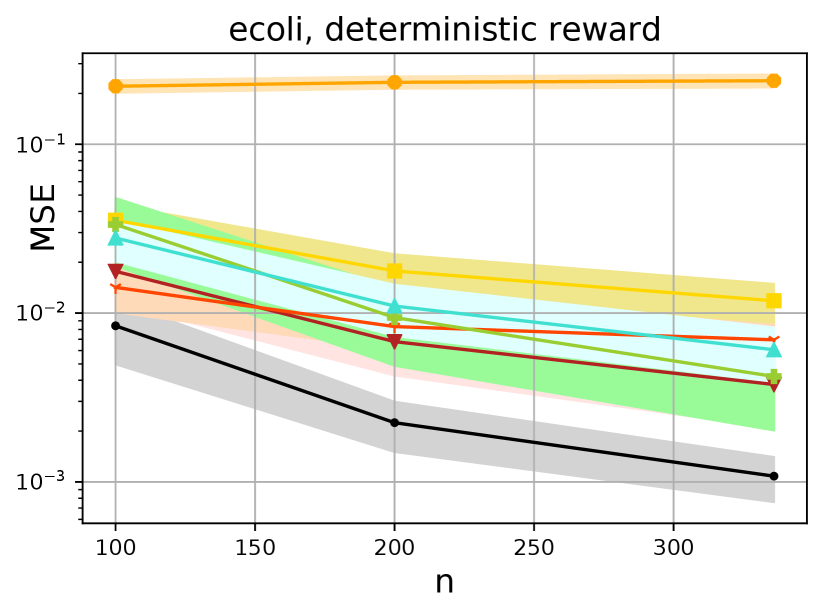

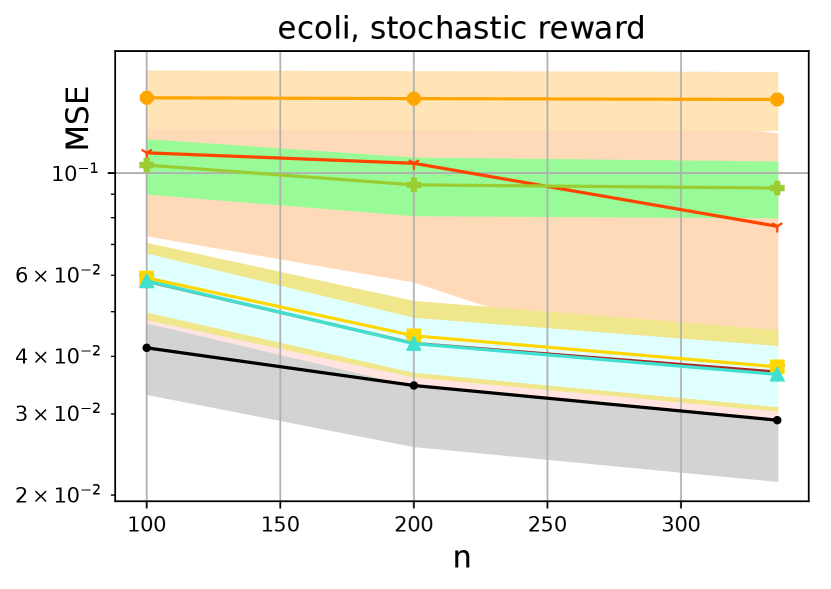

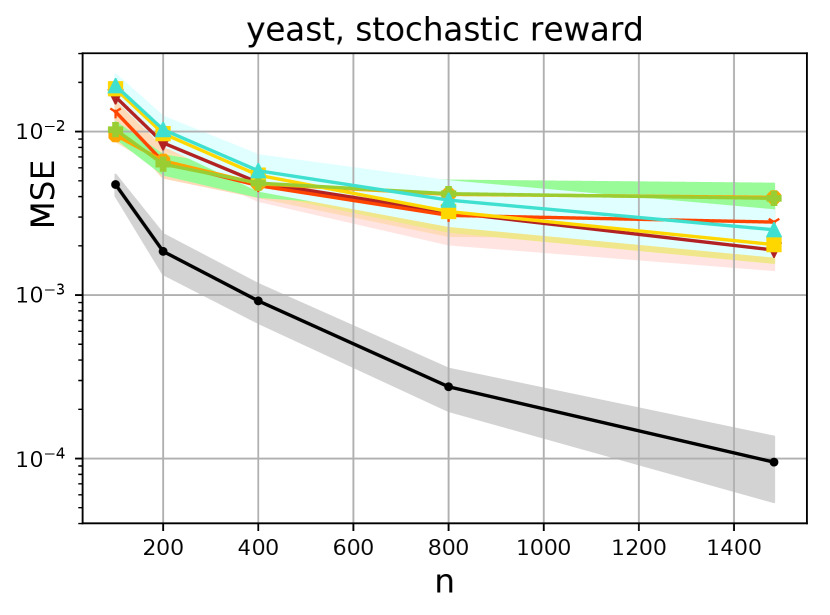

In this section, we outline our experimental results built on the Open Bandit Pipeline (Saito et al.,, 2020) library. For the choice of datasets, we follow (Dudík et al.,, 2011, 2014; Wang et al.,, 2017; Farajtabar et al.,, 2018; Su et al.,, 2020) to transform the UC Irvine (UCI) multi-class classification datasets (Dua and Graff,, 2017) into off-policy evaluation datasets. The notion of classes in a multi-class dataset becomes the action space in a contextual bandit problem, thus the predicted label becomes the action taken. The classification error becomes the observed loss . Note that this is equivalent to observing a reward , under the transformation . Specifically, we consider the indicator loss. That is, for a multi-class example , whenever the rewards are deterministic, then taking an action yields the reward , or whenever the rewards are stochastic, then taking an action yields the reward with probability and otherwise.

We split every dataset into two parts - a training set (70%) and a test set (30%). We generate a context-dependent logging policy based on the training set, and use this logging policy to generate the actions. Towards this end, we generate actions uniformly at random, and train a logistic regression model on this training set with randomized actions. We use this model on the test set to get the predicted action distribution that is used as a logging policy. This logging policy then is used to generate sized bandit data by sampling a context from the entire dataset, sampling an action , and then observing a deterministic or stochastic reward . The value of varies across different datasets.

We also use this training set to generate an evaluation policy by repeating the procedure of getting a logging policy, with the only difference is that the logistic regression model is trained with the true actions present in the training set. We use this evaluation policy to predict the actions in the testing set, and calculate the expected reward, which forms the ground truth value for the given UCI dataset.

We measure the performance of various estimators with clipped mean squared error (MSE), i.e. where is the value of the estimator and is the ground truth. We use replicates of bandit data generation to estimate the MSE and repeat this process for different seeds to generate the bands (which is the standard deviation) around the estimated MSE.

We compare the MSE of the following estimators. DR-IC: our proposed estimator given in (12) with the tuning parameters optimized according to (13), DR-IC (oracle): our proposed estimator with the tuning parameter optimized using the actual MSE with respect to the ground truth, DR-IC(): this is our proposed as given in (11), DM: as given (3) using traditional ridge regression reward estimate (7), DR: standard DR estimator according to (5) using the reward estimate (7), MR-DR: More robust Doubly robust estimator based on Farajtabar et al., (2018), DR-OS (oracle): the oracle tuned version of the shrinkage based estimator proposed in Su et al., (2020) , DR-OS: optimized version of the shrinkage estimator proposed in Su et al., (2020), switch-DR (oracle): the oracle version of the switch estimator proposed in Wang et al., (2017), and switch-DR : the switch estimator proposed in Wang et al., (2017) with tuning parameters optimized using an estimated MSE. In Appendix D, we include a separate simulation study comparing DR-IC() with a standard Nadaraya Watson estimator, to highlight the benefits of the specific kernel introduced in our model, in effectively borrowing information.

In Fig 2, we show several attractive properties of the proposed method with respect to the state of the art algorithms. First, in seven out of the eight cases considered, the borrowing of information displays a significant practical improvement as shown in the performance of DR-IC() (DM-IB) over the standard DM across all sample sizes. In fact, in five out of the eight cases, DR-IC () either outperforms or is as good as a traditional DR approach across the different sample sizes.

Second, the proposed context-based switching algorithm improves upon the performance of DR-IC() and clearly dominates the state-of-the-art methods in seven out of the eight cases considered. The oracle version of the proposed method DR-IC (oracle) significantly outperforms the oracle versions of other methods, while the tuned version DR-IC is either as good as or better than the tuned versions of the competitors. This also highlights the advantages of the KL based switching approach over the importance weight based switching approach of Wang et al., (2017). In five out of the eight cases, DR-IC shows significant improvement over switch-DR, and is as good as switch-DR in the remaining three cases.

Third, the results indicate that for the deterministic reward model, the DR-IC and DR-IC(oracle) are at least as good as, or significantly better than all their counterparts. For the stochastic reward model, the variability increases for all estimators. Nonetheless, DR-IC is still atleast as good as, or better than all the other estimators in three out of four cases.

Additional experiments: We note that we have included more experiment results and details of the implementation in the appendix. In Appendix D, we have provided a comparison of the proposed approach with a kernel based non-parametric reward estimator, and illustrate the advantages of the proposed formulation. We have also included the code in this github page.

6 Conclusions

In this work, we have introduced the idea of information borrowing which utilizes the similarity structure among context-action pairs and the importance weights associated with the logging and evaluation policies to significantly improve the practical properties of a traditional direct method for OPE. Under assumptions discussed in Section 4, the proposed reward estimator is asymptoticallly unbiased, which is usually not the case for traditional DM reward models. We further introduce a KL divergence based switching algorithm that employs the strengths of information borrowing, resulting in a context-based switch estimator that dominates the state of the art algorithms in most cases. These advantages are relevant not just for the fundamental problem of off-policy evaluation as considered in this article, but for more general problems in off-policy optimization and reinforcement learning as well. The notion of effective information borrowing becomes particularly crucial while assimilating information from multiple context distributions or logging policies with varying similarities to the evaluation policy. These problems indicate exciting possibilities for future research using the idea of effective policy dependent information borrowing introduced in this paper. Another exciting direction of research would be to identify better optimization schemes for the tuning parameter and get the tuned estimate closer to the oracle version.

References

- Bang and Robins, (2005) Bang, H. and Robins, J. M. (2005). Doubly robust estimation in missing data and causal inference models. Biometrics, 61(4):962–973.

- Bottou et al., (2013) Bottou, L., Peters, J., Quiñonero-Candela, J., Charles, D. X., Chickering, D. M., Portugaly, E., Ray, D., Simard, P., and Snelson, E. (2013). Counterfactual reasoning and learning systems: The example of computational advertising. Journal of Machine Learning Research, 14(11).

- Brunk, (1961) Brunk, H. (1961). Best fit to a random variable by a random variable measurable with respect to a -lattice. Pacific Journal of Mathematics, 11(3):785–802.

- Brunk, (1963) Brunk, H. (1963). On an extension of the concept conditional expectation. Proceedings of the American Mathematical Society, 14(2):298–304.

- Dua and Graff, (2017) Dua, D. and Graff, C. (2017). UCI machine learning repository.

- Dudík et al., (2014) Dudík, M., Erhan, D., Langford, J., and Li, L. (2014). Doubly robust policy evaluation and optimization. Statistical Science, 29(4):485–511.

- Dudík et al., (2011) Dudík, M., Langford, J., and Li, L. (2011). Doubly robust policy evaluation and learning. arXiv preprint arXiv:1103.4601.

- Farajtabar et al., (2018) Farajtabar, M., Chow, Y., and Ghavamzadeh, M. (2018). More robust doubly robust off-policy evaluation. In Proceedings of the 35th International Conference on Machine Learning, ICML 2018, Stockholmsmässan, Stockholm, Sweden, July 10-15, 2018, volume 80 of Proceedings of Machine Learning Research, pages 1446–1455.

- Horvitz and Thompson, (1952) Horvitz, D. G. and Thompson, D. J. (1952). A generalization of sampling without replacement from a finite universe. Journal of the American statistical Association, 47(260):663–685.

- Khan et al., (2024) Khan, S., Saveski, M., and Ugander, J. (2024). Off-policy evaluation beyond overlap: Sharp partial identification under smoothness. In Forty-first International Conference on Machine Learning.

- Li et al., (2011) Li, L., Chu, W., Langford, J., and Wang, X. (2011). Unbiased offline evaluation of contextual-bandit-based news article recommendation algorithms. In Proceedings of the fourth ACM international conference on Web search and data mining, pages 297–306.

- Lichtenberg et al., (2023) Lichtenberg, J. M., Buchholz, A., Di Benedetto, G., Ruffini, M., and London, B. (2023). Double clipping: Less-biased variance reduction in off-policy evaluation. arXiv preprint arXiv:2309.01120.

- Mandel et al., (2014) Mandel, T., Liu, Y.-E., Levine, S., Brunskill, E., and Popovic, Z. (2014). Offline policy evaluation across representations with applications to educational games. In AAMAS, volume 1077.

- Mou et al., (2023) Mou, W., Ding, P., Wainwright, M. J., and Bartlett, P. L. (2023). Kernel-based off-policy estimation without overlap: Instance optimality beyond semiparametric efficiency. arXiv preprint arXiv:2301.06240.

- Nadaraya, (1964) Nadaraya, E. A. (1964). On estimating regression. Theory of Probability & Its Applications, 9(1):141–142.

- Robins and Rotnitzky, (1995) Robins, J. M. and Rotnitzky, A. (1995). Semiparametric efficiency in multivariate regression models with missing data. Journal of the American Statistical Association, 90(429):122–129.

- Saito et al., (2020) Saito, Y., Shunsuke, A., Megumi, M., and Yusuke, N. (2020). Open bandit dataset and pipeline: Towards realistic and reproducible off-policy evaluation. arXiv preprint arXiv:2008.07146.

- Šidák, (1957) Šidák, Z. (1957). On relations between strict-sense and wide-sense conditional expectations. Theory of Probability & Its Applications, 2(2):267–272.

- Su et al., (2020) Su, Y., Dimakopoulou, M., Krishnamurthy, A., and Dudík, M. (2020). Doubly robust off-policy evaluation with shrinkage. In International Conference on Machine Learning, pages 9167–9176.

- Wang et al., (2017) Wang, Y.-X., Agarwal, A., and Dudık, M. (2017). Optimal and adaptive off-policy evaluation in contextual bandits. In International Conference on Machine Learning, pages 3589–3597.

- Zhou et al., (2017) Zhou, X., Mayer-Hamblett, N., Khan, U., and Kosorok, M. R. (2017). Residual weighted learning for estimating individualized treatment rules. Journal of the American Statistical Association, 112(517):169–187.

Appendix A Proof of Theorem 1

For simplicity, we prove Theorem 1 when and are scalars and we assume and , where is the uniform distribution between 0 and 1 with density function . In addition, we assume there is no intercept term in the lest square estimate . For more general scenarios, some modifications of the assumptions may be necessary. The kernel function is

Main idea of the proof: We first show that the information borrowing estimator evaluated at any point is asymptotically unbiased. This essentially leads to the asymptotic unbiasedness of the direct method estimator with , . The proof follows three main steps:

(i). We decompose into two parts: a weighted sum of the least squared estimate , i.e., the first two terms in (14), and a weighted sum of , i.e., the third term in (14). Hence, its expectation with respect to follows the same construction where only and are replaced by their expectations, see (15).

(ii). We show and for any . They can be seen as three convolutions with the non-stationary kernel (8). Due to the asymptotic degeneracy of the non-stationary kernel when the bandwidth goes to zero with the sample size, all the three convolutions converge to its corresponding degenerate point , , , respectively.

(iii). As is norm-bounded, the first two terms in (15) converge to zero and the last term converges to the reward function at at . These observations lead to . and subsequently the asymptotic unbiasedness of .

The information borrowing estimator for the rewards function can be decomposed into three terms defined as follows.

| (14) |

where and Now,

| (15) |

Next we show and , where represents convergence in probability.

where , , and as and

Similarly, we can show as , . Thus,

Therefore, as . Similarly, we can show as . Finally, we show .

Similarly, we can show as , . Thus,

Therefore, as . By Assumption 3, .

We conclude that the bias of the direct method estimator with the information borrowing estimator goes to zero in probability as .

Appendix B Proof of Theorem 2

Our proof follows the same steps as of (Wang et al.,, 2017, Theorem 2). Without loss of generosity, we assume the context is a scalar. Let . The mean squared error can be decomposed into squared bias and variance,

| (16) |

For the bias part, notice we only need to consider for , so

| (17) |

Next, we derive the upper bound of the variance term in the MSE. For any random variable and ,

Thus,

| (18) |

Appendix C Additional Experiments and Implementation Details

We use multi-class classification datasets from the UCI Machine Learning Repository (available at https://archive.ics.uci.edu/ml/index.php) (Dua and Graff,, 2017). We use these datasets in the comma-separated values format provided by the Datahub Machine Learning Repository (available at https://datahub.io/machine-learning). Information of the datasets are given in Table 1.

| Dataset | Glass | Ecoli | Wdbc | Vehicle | Yeast | Page-Blocks | OptDigits | SatImage | PenDigits | Letter |

|---|---|---|---|---|---|---|---|---|---|---|

| Contexts dimension | 9 | 7 | 30 | 18 | 8 | 10 | 64 | 36 | 16 | 16 |

| No. of actions | 6 | 8 | 2 | 4 | 10 | 5 | 10 | 6 | 10 | 26 |

| Max sample size | 214 | 336 | 569 | 846 | 1484 | 5473 | 5620 | 6435 | 10992 | 20000 |

We now describe the hyperparameter grids we use in our experiments. We adapt from (Dudík et al.,, 2014; Wang et al.,, 2017; Su et al.,, 2020) for these grids.

(1) For our DR-IC estimator, we have two hyperparameters, namely, bandwidth ’s and the switching . With , we first find the best from a grid of 30 geometrically spaced values between the and . With these best bandwidths for different ’s, we choose the switching parameter from a grid of 30 geometrically spaced values between the quantile and quantile of the KL divergence of the contexts observed from the data. We also include the quantile in this grid for the switching parameter.

(2) For the DR-OS estimator, we choose the shrinkage coefficients from a grid of 30 geometrically spaced values between and where and are the 0.05 and 0.95 quantile of the importance weights () observed in the data.

(3) For the Switch-DR estimator, we choose the switching parameter from a grid of 25 exponentially spaced values between the quantile and quantile of the importance weights observed in the data.

We note that we use 15 grid values for the datasets with maximum sample size larger than 5000 due to the hardware limitations.

We use the Open Bandit Pipeline (available at https://github.com/st-tech/zr-obp) library to implement our DR-IC estimator and benchmark it with the other estimators. We worked with this commit-version (https://github.com/st-tech/zr-obp/commit/a4b61e9e14d1954aa2953d2b21b4e710a8a725d8) of the Open Bandit Pipeline library and provide the same in our supplementary material. We now describe practical implementation details of our DR-IC estimator, focusing on the discrete action setting.

Recall from (10) that

We use the Gaussian kernel for as in (9). We use the traditional logistic regression as the estimate with three-fold cross fitting. Finally, we set where is the variance of vector and is an appropriate sized identity matrix. Since is unknown in practice, we use the above estimate under an independent homoscedastic assumption.

We now summarize our results with the plots for all UCI datasets presented in Fig. 3. At foremost, we present the superior performance statistics of our estimator compared to seven different estimators from the off-policy evaluation literature in Tables 2 and 3. The uniformly superior performance of the information-borrowed DM (DR-IC ) over the traditional DM corroborates the recent findings of Su et al., (2020) that the choice of the DM is indeed important. We also demonstrate the uniformly dominating performance of the proposed DR-IC method over its counterparts across all the datasets, especially for the deterministic reward model. Even for the stochastic reward model, DR-IC beats its counterparts in most cases. Note that, for the current experiments, the stochasticity in the reward model causes the rewards to have a discrete jump (between and ) due to the model choice. We particularly expect a stronger performance for the proposed approach when the considered reward model is continuous in context and action space because the DR-IC approach can borrow information effectively from similar contexts. This suggests that the proposed approach is appropriate for a wide range of datasets and reward models. Furthermore, the KL approach to switching dominates the importance weights based switching in cases, and is equivalent in cases out of the cases considered. This clearly suggests that the KL approach is a preferable and robust choice of switching across a variety of datasets.

| Alg. | DM | DR | MRDR | DR-OS (oracle) | DR-OS | Switch-DR (oracle) | Switch-DR |

|---|---|---|---|---|---|---|---|

| DR-IC (oracle) | 10/0/0 | 10/0/0 | 10/0/0 | 10/0/0 | 10/0/0 | 10/0/0 | 10/0/0 |

| DR-IC | 10/0/0 | 8/2/0 | 7/0/3 | 5/1/4 | 8/1/1 | 3/1/6 | 8/2/0 |

| DR-IC () | 10/0/0 | - | - | - | - | - | - |

| Alg. | DM | DR | MRDR | DR-OS (oracle) | DR-OS | Switch-DR (oracle) | Switch-DR |

|---|---|---|---|---|---|---|---|

| DR-IC (oracle) | 10/0/0 | 10/0/0 | 10/0/0 | 7/1/2 | 10/0/0 | 6/1/3 | 10/0/0 |

| DR-IC | 10/0/0 | 3/6/1 | 3/6/1 | 2/5/3 | 5/3/2 | 4/2/4 | 4/5/1 |

| DR-IC () | 9/1/0 | - | - | - | - | - | - |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x11.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x12.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x13.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x14.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x15.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x16.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x17.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x18.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x19.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x20.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x21.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x22.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x23.png)

Appendix D Experiments with Nadaraya-Watson regression (non-parametric method)

Our proposed estimator is a semiparametric estimator, with a kernel function to model the correlation structure. In this section, we compare the performance of our proposed estimators with a standard Nadaraya-Watson (NW) estimator. An NW estimator is a very well known non-parametric technique for kernel regression. Given the rewards as responses, the context action pairs as predictors and a kernel function K, the NW estimate at a point is given by

Here, is a bandwidth parameter, which is often optimized (using cross validation, for example) given the data and acts as a hyperparameter. In this section we compare our estimators with the DM and DR estimators that use NW kernel regression models for estimating the rewards. We use Sklearn’s (https://scikit-learn.org/stable/related_projects.html) implementation of NW kernel regression for our experiments and this is made available in our code. In these experiments, we use radial basis functions as the kernel, that is, . For the hyperparameter , we choose the best among the grid of 20 logarithmically spaced values between 0.01 and 100 via the one-leave-out cross validation technique.

The experiments show the superior performance of our approach in most cases, as given in Fig.4. Interestingly, both our approach and NW approach have a single bandwidth parameter. However, our DM-IB (dr-ic( )) method has the importance weight terms in the kernel (Eq. ) which implicitly allows an adaptive bandwidth for different actions. This allows more flexible borrowing, which is not possible in a traditional implementation of NW estimator. This is crucial because the information shared across and should be different from information shared across and in general, when

Our formulation allows borrowing of information across and only when the corresponding actions are same, through an indicator function. A traditional nonparametric estimator will borrow information even if the actions are different, leading to higher bias, especially when the actions are nominal. Traditional nonparametric methods also suffer because their non-asymptotic terms become very large, especially in higher dimensional problems, as pointed out in Wang et al., (2017) and the references therein.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x32.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x33.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x34.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x35.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x36.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x37.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x38.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x39.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x40.png)

For a more fair comparison, we also emulate the indicator function for action covariates by choosing appropriate fixed bandwidth. These experiments, whose results are given in Fig.5, still show superior performance of our approach in most cases. The reason is that the role of the kernel function is fundamentally different in the two approaches. In the proposed estimator, the parametric estimate through the ridge regression performs the overall borrowing of information, while the bandwidth parameter controls the differential borrowing of information across context-action pairs when the actions are same (in an adaptive manner depending on the importance weights), which leads to a significant improvement in practical performance. As an example, if the bandwidth parameter is very small, resulting in no borrowing of information, the estimate for that context action pair reduces to the ridge regression estimate. In contrast, the NW estimator has a bandwidth parameter which completely controls the borrowing information across the observations. Thus, if the bandwidth parameter is very small, the final estimate reduces to Dirac delta spikes with no borrowing among observations whatsoever.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x43.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x44.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x45.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x46.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x47.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x48.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x49.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x50.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/f8a12eed-08a4-4fe0-90a3-e42ad9632761/x51.png)

We also repeat the experiments in Fig.5 with an adaptive version of NW estimator (labelled as dm-anw and dr-anw). That is, we use the adaptive radial basis function kernel where the weights are as in Eq.8. The experiments still show superior performance of our approach in most cases, as illustrated in Fig.6. However, we also note the improvement in NW estimate from the previous experiment. Thus, including the weights in the kernel leading to an adaptive nature improves the overall performance. The adaptive nature enables the algorithm to control the borrowing of information relative to the importance weights, which attributes to the improvement. Thus, irrespective of the kind of estimator we use, the effect of appropriately borrowing of information is the key to a better reward model, which can bring substantial improvement to practical performance of the off-policy evaluation.